FedStaleWeight: Buffered Asynchronous Federated Learning with Fair Aggregation via Staleness Reweighting

Abstract

Federated Learning (FL) endeavors to harness decentralized data while preserving privacy, facing challenges of performance, scalability, and collaboration. Asynchronous Federated Learning (AFL) methods have emerged as promising alternatives to their synchronous counterparts bounded by the slowest agent, yet they add additional challenges in convergence guarantees, fairness with respect to compute heterogeneity, and incorporation of staleness in aggregated updates. Specifically, AFL biases model training heavily towards agents who can produce updates faster, leaving slower agents behind, who often also have differently distributed data which is not learned by the global model. Naively upweighting introduces incentive issues, where true fast updating agents may falsely report updates at a slower speed to increase their contribution to model training. We introduce FedStaleWeight, an algorithm addressing fairness in aggregating asynchronous client updates by employing average staleness to compute fair re-weightings. FedStaleWeight reframes asynchronous federated learning aggregation as a mechanism design problem, devising a weighting strategy that incentivizes truthful compute speed reporting without favoring faster update-producing agents by upweighting agent updates based on staleness. Leveraging only observed agent update staleness, FedStaleWeight results in more equitable aggregation on a per-agent basis. We both provide theoretical convergence guarantees in the smooth, non-convex setting and empirically compare FedStaleWeight against the commonly used asynchronous FedBuff with gradient averaging, demonstrating how it achieves stronger fairness, expediting convergence to a higher global model accuracy. Finally, we provide an open-source test bench to facilitate exploration of buffered AFL aggregation strategies, fostering further research in asynchronous federated learning paradigms.111Code for experiments can be found at https://github.com/18jeffreyma/afl-bench

1 Introduction

1.1 Motivation and Background

In real-world scenarios, the task of training a model may be distributed among up to millions of agents, called edge devices, each of whom contribute their own limited (and perhaps private) set of data, a paradigm known as federated learning (FL). Our paper focuses on cross-device FL, in which there must be a large number of agents in order to extract meaningful results. The other type of FL, cross-silo, utilizes fewer agents because each one has more data and participates actively in the model training process, like a consortium of hospitals sharing information about a new disease [1].

Cross-device FL faces two major challenges when put into practice: scalability and privacy. In a massive distributed network, it’s impossible for every agent to be active at every time step, or for all agents to train their private models at the same speed. Naive aggregation techniques rely on concurrent reports from agents because, for instance, a simple average may be taken over reports in order to update the global model at regular intervals. By relaxing the concurrency constraint, we move away from synchronous FL, and the problem becomes how to best aggregate updates arriving at different points in time. On the topic of privacy, agents want their personal data to remain fully confidential; the global model must therefore evolve in a way that does not reveal individual contributions through gradient updates or the like [2]. Recent work in secure aggregation (SecAgg) and differential privacy provide frameworks for exploring the tradeoffs between privacy and utility [3].

Unlike its synchronous conterpart, Asynchronous federated learning (AFL) does not rely on clients to all finish and communicate their results in the same round and differs from its synchronous counterpart in the following ways:

-

•

Synchronicity: Clients are assumed to be heterogeneous and may return updates at different times or fail to return updates in time, necessitating mechanisms that do not wait for all participants before updating the global model through aggregation.

-

•

Staleness: As models may take longer to update, AFL mechanisms often are able to merge updates to models (i.e. model version is deployed to a client, the client takes a long time to fit, and it replies with its updated model while the global server has already aggregated updates and stepped to model version ). Emerging research in AFL hypothesizes that these stale updates are likely still useful and should be incorporated into learning.

-

•

Convergence challenges: AFL often experiences higher training instability based on the strategy chosen for aggregating stale updates, similar to issues encountered in off-policy reinforcement learning (RL).

1.2 Our Contribution

We observe that the asynchronicity in AFL naturally leaves slow updating agents behind: since updates are no longer incorporated synchronously and are incorporated on demand, agents who are not able to update as quickly will contribute less to global model learning. However, naively up-weighting slow agent updates creates incentive issues: if our upweighting is too extreme, we incentivize fast updating agents to throttle and falsely report at slower speeds to increase their contribution to learning.

Thus, we explore the problem of fairly aggregating updates from clients in AFL to offset the unfairness introduced by compute heterogeneity in asynchronous federated learning. Several known synchronous approaches such as FedAvg and FedProx exist and more nuanced asynchronous approaches have been explored such as a fixed buffer length with averaging [2] or simply updating the central model in an asynchronous immediate manner [4]. To our knowledge, more nuanced weighting of stale updates or an adaptive approach has not yet been explored. Our contribution is the following:

-

1.

We reframe the problem of asynchronous federated learning aggregation as a welfare maximization problem and derive a gradient aggregation method which is strategy-proof to fast agents who may throttle their update rate to have their updates weighted higher while increasing the contribution of slower update producing agents to global model training.

-

2.

We show how our derived fair weighting can be computed via observing agent update staleness, a quantity naturally visible in buffered asynchronous federated learning, and present an algorithm for fair federated learning, FedStaleWeight.

-

3.

We provide a convergence guaruntee for FedStaleWeight in the smooth, non-convex setting, showing that the upweighting of stale model updates can still yield convergence.

-

4.

We empirically show in a series of realistic non-IID federated learning settings how current AFL aggregation techniques are biased towards clients with higher throughput and how FedStaleWeight maintains fairness and subsequently converges to higher global model accuracy faster. We release an open-source test bench for other researchers to explore further buffered AFL aggregation strategies.

2 Related Work

Synchronous FL. Synchronous FL excels in the privacy dimension: when the principal must wait for all agents before aggregating their data, it becomes nearly impossible to recover an individual contribution from an average over so many points. The slowest agent, however, determines the training pace of the global model, resulting in a bottleneck. If the principal were to perform aggregation with only, say, the fastest half of agents, then an element of selection bias is introduced. These concerns aside, numerous synchronous techniques have found experimental success, and their primary features can be summarized as follows:

-

•

FedAvg: McMahan et al. (2016) coined the term “federated learning” and suggest a simple weighted average of gradients to update the global model, where the weights correspond to the terms in the overall objective described in the next section [5].

-

•

FedProx: Li et al. (2020) improve upon FedAvg by adding a regularization term to each local objective , where is the current global model. This allows each local model to more resemble the global model but converges under a more restrictive set of assumptions [6].

-

•

FedAvgM: Hsu et al. (2019) also employ a form of regularization through server momentum, which accumulates past gradients in order to stabilize and accelerate training [7].

-

•

FedNova: Wang et al. (2020) note that heterogeneous update speeds can cause objective inconsistency, where the server model approaches a local optimum that does not match the global optimum of the original objective function [8]. The authors provide a formal analysis of this phenomenon and propose FedNova, which normalizes each client’s gradient by the number of updates that the client has sent in the current round before taking the overall average for a round. This technique encompasses both FedAvg and FedProx and has been shown to outperform both of them.

Asynchronous FL. AFL is a natural fit in the cross-device setting due to heterogeneity among agents’ availability as well as training and data transfer speeds. Here, we provide an overview of the most prominent work in AFL to date:

-

•

FedAsync: Xie et al. (2019) gave the first convergence guarantees for the AFL problem; their fully asynchronous method forces the server to update its model after every client update [9]. As later works observe, this is not only computationally taxing but insecure.

-

•

ASO-Fed: Chen et al. (2020) deal with the special case of online learning, where each agent’s local dataset is continuously growing as new observations arrive [4]. Newer data need not stem from the same distribution as older data, which means that a robust AFL framework must be able to handle non-IID data.

-

•

FedAdaGrad, FedAdam, FedYogi: Reddi et al. (2020) tailor the well-known adaptive optimizers AdaGrad, Adam, and Yogi to the FL problem [10].

-

•

SAFA: Wu et al. (2020) propose Semi-Asynchronous Federated Averaging (SAFA) to deal with the issues of stragglers, dropouts, and staleness [11].

-

•

Pisces: Jiang et al. (2022) use a custom scoring mechanism to choose which agents will participate in the global model training at a given time step [12]. Their utility function attempts to distinguish between slow clients with high-quality data and those which are just slow.

-

•

AsyncFedED: Wang et al. (2022) aggregate client data by calculating a “Euclidean distance” between stale models and the global model and then weighting each client’s update appropriately [13].

-

•

FedBuff: In order to avoid full asynchronicity, Nguyen et al. (2022) hold agent updates in a buffer. Only when the buffer is full does the data get aggregated and pushed to the server [2]. This work directly inspired and most closely resembles our work. SecAgg [14] [15] can also be integrated to maintain privacy guarantees.

-

•

FedFix: Fraboni et al. (2023) try to unify a large number of the above methods through their FedFix framework: it uses stochastic weights during aggregation [16].

3 Preliminaries

3.1 Asynchronous FL Setting

The federated optimization problem with agents can be formulated as:

| (1) |

where is the importance of the th agent (often assumed to be in proportion to the size of each client’s dataset, i.e. or equal for all agents ). represents agent ’s local objective function and is only available to agent .

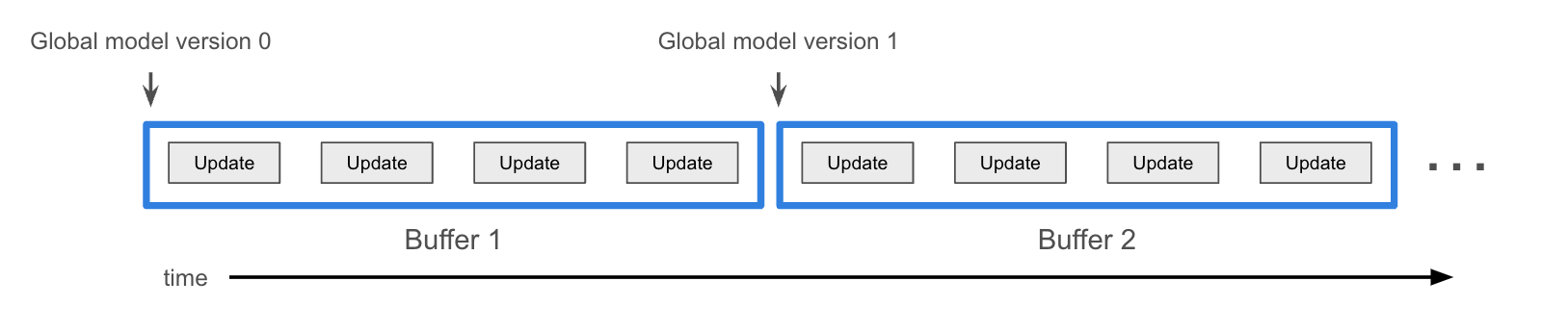

Specifically, we study the buffered asynchronous federated learning setting, consisting of a singular central server with an update aggregation buffer and clients. The server only updates once updates arrive at the buffer, where is a tune-able parameter. Each client has their own private data and pulls the latest version of the global model, trains it on its private dataset and communicates the update back to the server, which is appended to the buffer to later be aggregated (Figure 1). Specifically, in the asynchronous setting, we introduce the challenge of staleness, where updates to update model at version may have been trained on models of version smaller than .

In the buffered AFL setting, we denote staleness for an agent ’s update as the positive difference in version number between the current global model and the global model that the update was computed from. Formally, we denote a global model with version and with 0 client training steps as . For a client , we denote the global model with version before and after training on local steps as and , respectively; the client’s update is denoted as . Given the current global model and update , the staleness is thus .

3.2 Defining Fairness in Update Aggregation

Each FL client represents its true update reporting speed as a random variable where we denote the mean reporting speed as . Each agent chooses to report a random variable with mean as their observed update reporting speed. We assume for simplicity that , following intuition of fixed hardware specs: in other words, clients cannot manipulate to be faster on average than their fastest (true) compute speed on average. Given reported update speeds, we naturally see that the expected proportion that each agent contributes to learning without re-weighting is:

| (2) |

A fair re-weighting takes and returns a weighting close to equal for every participating learning agent. In other words, we seek an that solves the following constrained welfare maximization problem:

| (3) |

Clients seek to maximize a utility representing their effective influence (and thus their accuracy), modelled as their re-weighting times the overall proportional influence of their reported mean update rate. We use this utility function to justify strategy-proofness in Section 4.2.1.

| (4) |

3.3 Step Size as Uncertainty in Approximation

Recall that computing the update step for a client with loss at point can be framed as the solution of the following minimization game, where step size represents the uncertainty in using the approximation for stochastic gradient descent:

| (5) |

Similarly, in federated learning, we wish to restrict our aggregated gradient to be no larger than the step sizes of any of the individual aggregated gradients for convergence reasons, necessitating that any solution must have as noted above.

4 Algorithm

4.1 Solving the Welfare Maximization Problem

Naively, if is chosen (all agents are weighted equally), we see that is strictly increasing with respect to and all agents will report . However, we see that welfare is poor, since an agent’s influence is exactly increasing with respect to its rate, meaning that slow agents are left behind as they cannot manipulate any higher than their true .

We observe that should be monotonically decreasing with respect to its input, but not at a rate that decreases slower than increases. If the latter as true, faster agents would be incentivized to throttle their rates to improve their own weighted influence. The optimal becomes , where . Thus, with this choice, each agent’s weighted influence remains constant regardless of which reported they choose, and thus there is no incentive to not report truthfully. We note that this weighting achieves weak truthfulness where agents are indifferent between reporting at any speed, since their utility stays the same regardless.

4.2 Deriving Expected Influence From Staleness

In buffered AFL, we assume the global aggregator has access only to the aggregation buffer and not the arrivals of individual agent updates, disallowing use of a weighting scheme that relies on estimating individual agent reporting schemes based on unique identifiers. We proceed to show how a fair weighting can be computed from staleness, an available local quantity, in asynchronous federated learning.

Given an initially empty buffer, we can compute the expected index of the first update from a given agent with mean rate by summing the expected number of updates from all agents in the expected time period for one update from agent . For example, we note that an agent twice as fast as would have two updates expected in the same time as ’s first expected update. Recall that staleness corresponds to the difference between the version number of the current global model and the version number of the original global model that an agent’s update was reported from. We derive the following expression for expected staleness of agent .

| (6) |

Reconciling this with our mechanism definitions above, we get the unweighted influence of agent given its expected staleness.

| (7) |

Recalling our derived weighting from the mechanism, we get a formula for our weighting as a function of expected staleness. We note that under a synchronous setting of zero expected staleness, we recover the exactly equal weighting used in synchronous FedAvg.

| (8) |

4.2.1 Extending To Online Learning

We reconcile the derived weighting above into the online setting of arriving client updates. As noted in Section 3.3, at any given aggregation, we should avoid updating the global model with a step size larger than any individual clients; otherwise, global model training can quickly diverge. As such, given a buffer of updates to be aggregated, we compute the desired weightings per the above and normalize such that they sum to 1.

However, this introduces complexity into our derivation from above. Intuitively, if an agent chooses to increase their expected staleness by slowing down their reporting speed, the best they can do is enjoy a single aggregation step to themselves of full step size, but consequently, they participate in fewer aggregations later due to their slower reporting speed. As a result, they contribute less to learning. We show this analytically: we can compute the normalized re-weighting for agent ’s update in a buffer as the following:

Isolating , we get the following:

We further note that, as an agent, by manipulating downwards, we increase expected staleness but also decrease the frequency of an agent’s update. Recall from Section 3.2 that the utility of an agent is the normalized re-weighting times the proportional frequency of an agent’s update based on the agent’s reported update speed.

| (9) |

Denoting , and , we take the derivative of the utility with respect to an agent’s reporting speed to get:

Since our algorithm reweights based on expected staleness, we can rescale reported update rates linearly by any positive constant without changing the result of expected staleness (or our reweighting) to make as small as possible and as large as possible. Furthermore, since increases with larger , we observe with large enough (a large enough client pool) that , indicating that agents want to truthfully report as high of an update speed as possible and our aggregation mechanism is strictly strategy-proof with respect to agent manipulation of .

4.3 Algorithm

We present the following algorithm, FedStaleWeight, applying the above weighting to each aggregation. In practice, to compute the expected staleness for each agent , , we maintain a moving average of previous staleness for that agent. Our method computes a weighting to apply over the aggregation buffer, meaning it can be combined with methods like weighted secure aggregation [15] to protect client gradients.

5 Theoretical Results

In this section, we provide a convergence guarantee for FedStaleWeight in the non-convex, smooth setting. Since FedStaleWeight effectively upweights stale updates in addition to operating in the asynchronous setting, it is essential to understand the relationship between convergence and our methods of reweighting to create asynchronous fairness. The full proof can be found in Appendix A.

Notation. We use the following notation and assumptions throughout as used in FedBuff [2]: represents the set of all clients, is the gradient with respect to the loss on client ’s data. is the minimum of , denotes the stochastic gradient on client , is the buffer size for aggregation as noted above, and is the number of local steps taken by each client. Similar to other seminal works in federated learning, [6] [10] [17] [18], we take the following assumptions:

Assumption 1.

(Unbiasedness of client stochastic gradient)

Assumption 2.

(Bounded local and global variance) for all clients ,

Assumption 3.

(Bounded gradient) for all

Assumption 4.

(Lipschitz gradient) for all client the gradient is L-smooth ,

Assumption 5.

(Bounded Staleness) For all clients and for each server step , the staleness between the model version a FedStaleWeight client uses to start local training and the model version in which the aggregated update is used to modify the global version is not larger than when . Moreover, any buffered asynchronous aggregation with has the maximum delay at most [2].

6 Empirical Results

6.1 Simulation Details

We implement a generic testbench to empirically verify our algorithm above. The simulation environment consists of concurrently running clients with private data and and a centralized server and buffer, where an aggregation strategy can be specified.

-

1.

Clients run each on their own process thread concurrently, continuously pulling the latest available model from the global server and training it on their local private data before communicating the update to the server and repeating. Clients are initialized with both an individual slice of the overall dataset being evaluated (split into training and evaluation) and as a private runtime model representing a training delay distribution, from which the client process samples and sleeps for that duration to simulate local training delays.

-

2.

Server aggregates updates from the global buffer and updates the global model continuously. The server is initialized with a specified aggregation strategy and an aggregation buffer to pull updates from: once the buffer fills to a specific size, aggregation is performed and the global model is updated, after which clients will begin to pull that new model once they finish training. Clients broadcast their updates once training and their simulated delays complete, and their update is appended to the global buffer.

The testbench allows users to specify custom aggregation strategies by implementing a simple callback function, from which evaluation against other baselines can be computed, logged and compared. We also implement the FedAvg baseline in this testbench.

6.2 Dataset Distribution

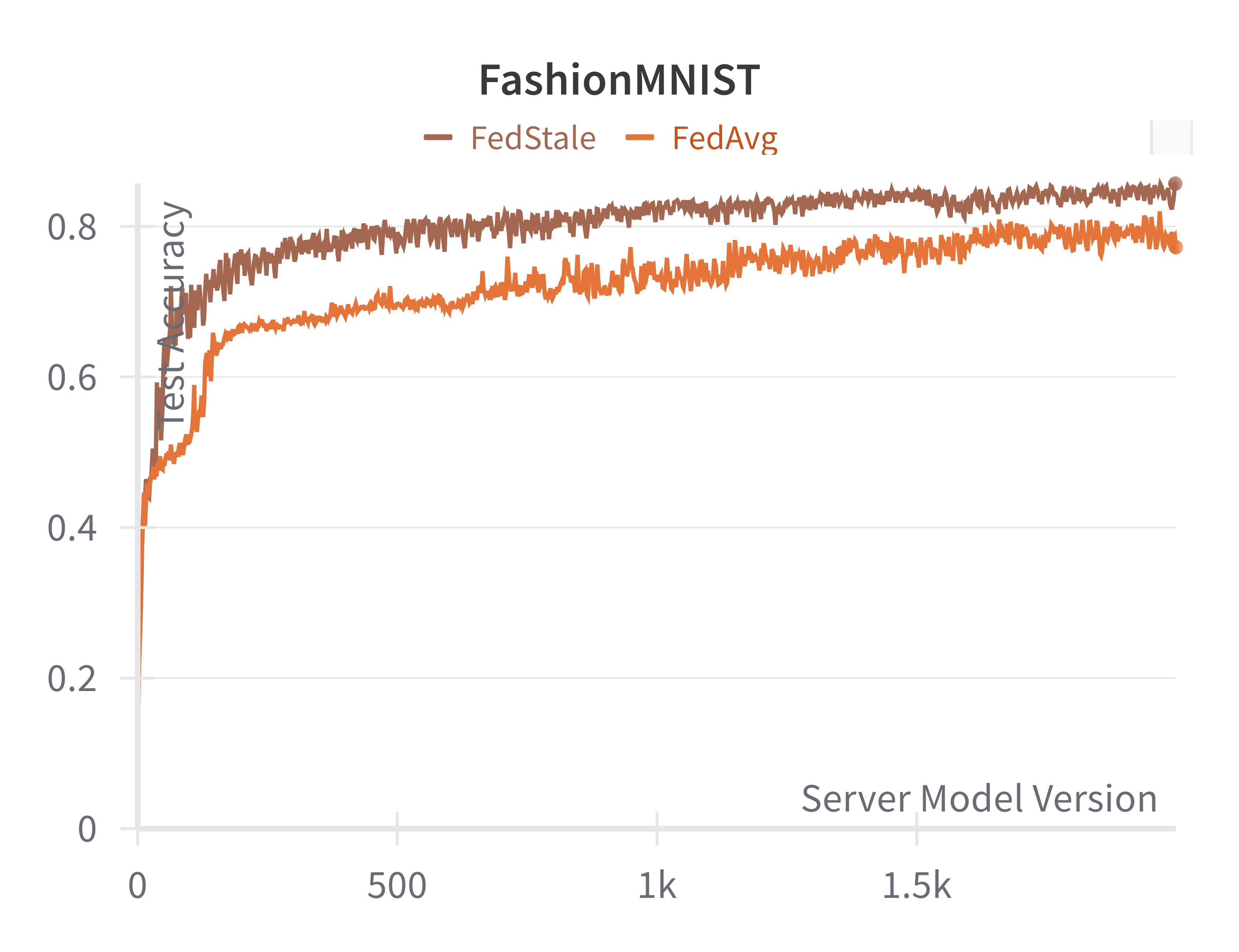

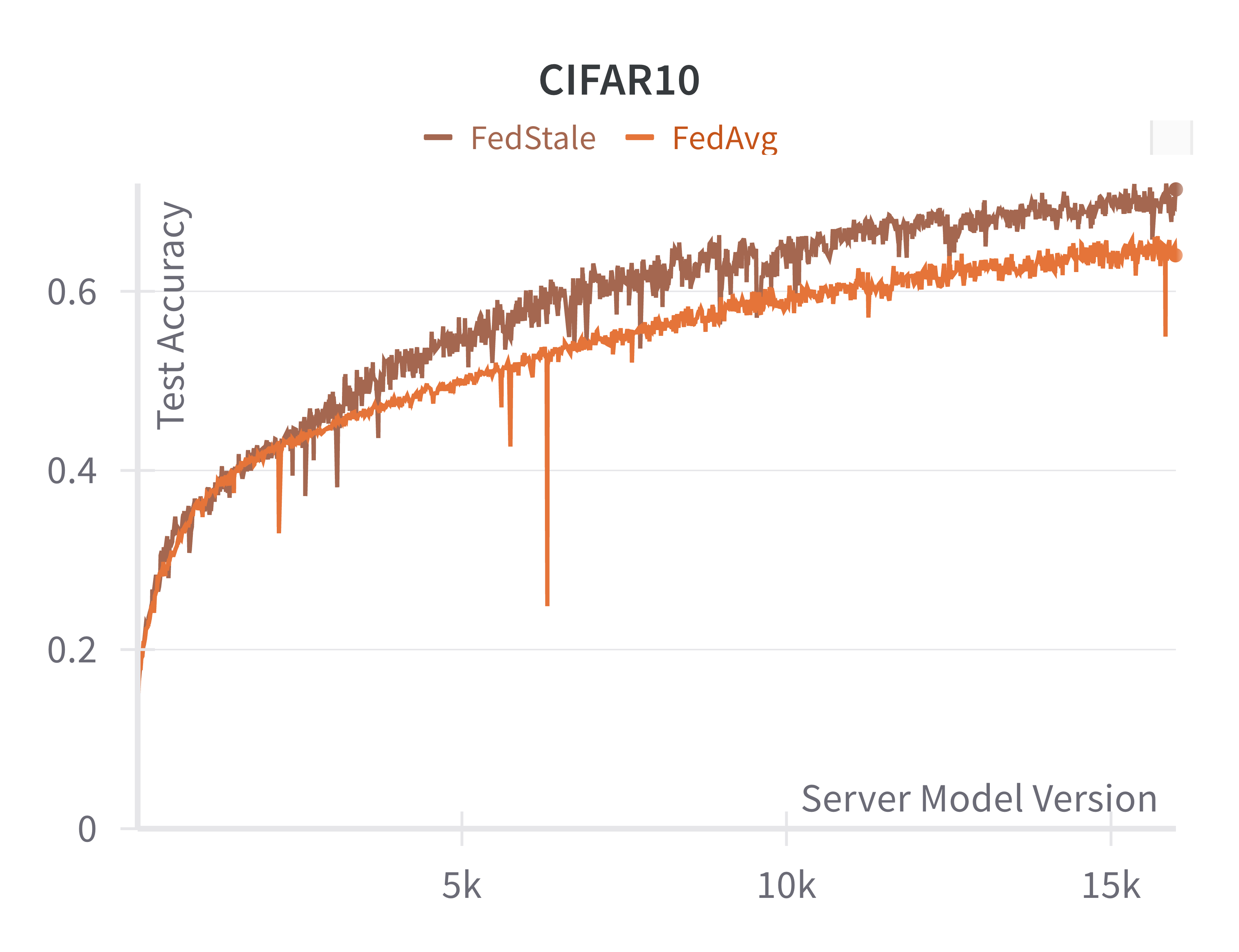

We test our algorithm empirically on two datasets, CIFAR10 and FashionMNIST. For both datasets, we model a system of 15 agents, with 10 “fast" agents with training delay randomly sampled from uniform distributions and 5 “slow" agents with training delays . Data is distributed in a non-IID fashion and we reserve 20% of data across all labels for testing global model accuracy to measure overall generalization For the remainder, the fast agents are given data points with labels 4 through 9, IID-distributed across fast agents where no two agents have the same data point. The slow agents are given labels 0 through 3, similarly IID-distributed. The results are shown in Figure 2.

Specifically, we model a scenario where low-compute agents have exclusive access to a non-trivial subset of all data: in this setting, higher fairness equates to a model with higher global accuracy, demonstrated in our empirical results. We run FashionMNIST and CIFAR10 for 4,000 and 16,000 aggregations respectively. We evaluate FedAvg and our FedStaleWeight with a buffer size of and a local learning rate of with local step. We clearly observe that our algorithm FedStaleWeight converges more quickly to a higher server test accuracy, indicating better generalization and less bias towards fast update producing agents.

7 Discussion

In this paper, we tackle the FL problem of training a model through updates received from a large number of edge devices. Our novel asynchronous technique for aggregating updates, FedStaleWeight, takes into account the staleness of client updates in order to re-weight them fairly. We argue that a slower client that sends fewer updates is undervalued in the aggregate under traditional averaging schemes, resulting in worse global models if the client’s data set is unique; similarly, a faster client should not dominate the aggregate off of speed of compute alone. The algorithm utilizes a buffer that holds updates until full, at which time it performs the weighted aggregation computed by approximating expected staleness via a moving average and updates the global model accordingly.

By sending updates to the server, each client implicitly reports an “update speed” to a mechanism which is interpreted only via the update’s staleness. This idea allows us to derive a re-weighting that increases fairness while still incentivizes truthfulness, ensuring that no agent wishes to intentionally slow down. This is critical to include when aggregation criteria uses the expected staleness as weighting, a inverse proxy for update speed. We show that the optimal weights are related to each client’s expected staleness, a quantity easily estimable after multiple rounds have elapsed. We provide work towards verifying that truthfulness guarantees hold when extending to online learning and per buffer normalization by calibrating the mechanism parameters.

Finally, we provide an ergodic convergence guarantee for FedStaleWeight and verify its efficacy through simulation. Our testbench simulates concurrent client threads and a server that executes our buffered aggregation algorithm while supporting the implementation of other aggregation techniques. This environment further serves as a useful resource for future work in the AFL space. In comparison to classic aggregation methods like FedAvg, we observe that FedStaleWeight converges faster and to a more accurate result, avoiding the pitfall of heavily weighting fast clients who may not be representative of the whole population.

There are several open questions, which we leave as future work to build upon. First, our framework assumes continuous client participation, when many real-world settings observe clients participating and dropping out frequently, necessitating more exploration into a scheme robust to probabilistic agent participation while maintaining fairness guarantees. We also assume that manipulation is zero-cost, when throttling could bring benefits of lower power consumption, affecting client utility. However, our work represents a strong step in narrowing the compute fairness gap between synchronous and asynchronous federated learning.

8 Acknowledgements

We are hugely grateful also to Safwan Hossain and the CS236R course for their support and mentorship during this project. This work was also supported by the FASRC computing cluster at Harvard University.

References

- [1] Chao Huang, Jianwei Huang, and Xin Liu. Cross-silo federated learning: Challenges and opportunities, 2022.

- [2] John Nguyen, Kshitiz Malik, Hongyuan Zhan, Ashkan Yousefpour, Mike Rabbat, Mani Malek, and Dzmitry Huba. Federated learning with buffered asynchronous aggregation. In International Conference on Artificial Intelligence and Statistics, pages 3581–3607. PMLR, 2022.

- [3] Swanand Kadhe, Nived Rajaraman, O. Ozan Koyluoglu, and Kannan Ramchandran. Fastsecagg: Scalable secure aggregation for privacy-preserving federated learning, 2020.

- [4] Yujing Chen, Yue Ning, Martin Slawski, and Huzefa Rangwala. Asynchronous online federated learning for edge devices with non-iid data, 2020.

- [5] H. Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Agüera y Arcas. Communication-efficient learning of deep networks from decentralized data, 2023.

- [6] Tian Li, Anit Kumar Sahu, Manzil Zaheer, Maziar Sanjabi, Ameet Talwalkar, and Virginia Smith. Federated optimization in heterogeneous networks. Proceedings of Machine learning and systems, 2:429–450, 2020.

- [7] Tzu-Ming Harry Hsu, Hang Qi, and Matthew Brown. Measuring the effects of non-identical data distribution for federated visual classification. arXiv preprint arXiv:1909.06335, 2019.

- [8] Jianyu Wang, Qinghua Liu, Hao Liang, Gauri Joshi, and H Vincent Poor. Tackling the objective inconsistency problem in heterogeneous federated optimization. Advances in neural information processing systems, 33:7611–7623, 2020.

- [9] Cong Xie, Sanmi Koyejo, and Indranil Gupta. Asynchronous federated optimization. arXiv preprint arXiv:1903.03934, 2019.

- [10] Sashank Reddi, Zachary Charles, Manzil Zaheer, Zachary Garrett, Keith Rush, Jakub Konečnỳ, Sanjiv Kumar, and H Brendan McMahan. Adaptive federated optimization. arXiv preprint arXiv:2003.00295, 2020.

- [11] Wentai Wu, Ligang He, Weiwei Lin, Rui Mao, Carsten Maple, and Stephen Jarvis. Safa: A semi-asynchronous protocol for fast federated learning with low overhead. IEEE Transactions on Computers, 70(5):655–668, 2020.

- [12] Zhifeng Jiang, Wei Wang, Baochun Li, and Bo Li. Pisces: efficient federated learning via guided asynchronous training. In Proceedings of the 13th Symposium on Cloud Computing, pages 370–385, 2022.

- [13] Qiyuan Wang, Qianqian Yang, Shibo He, Zhiguo Shi, and Jiming Chen. Asyncfeded: Asynchronous federated learning with euclidean distance based adaptive weight aggregation. arXiv preprint arXiv:2205.13797, 2022.

- [14] Keith Bonawitz, Vladimir Ivanov, Ben Kreuter, Antonio Marcedone, H Brendan McMahan, Sarvar Patel, Daniel Ramage, Aaron Segal, and Karn Seth. Practical secure aggregation for federated learning on user-held data. arXiv preprint arXiv:1611.04482, 2016.

- [15] Jiale Guo, Ziyao Liu, Kwok-Yan Lam, Jun Zhao, Yiqiang Chen, and Chaoping Xing. Secure weighted aggregation for federated learning, 2021.

- [16] Yann Fraboni, Richard Vidal, Laetitia Kameni, and Marco Lorenzi. A general theory for federated optimization with asynchronous and heterogeneous clients updates. Journal of Machine Learning Research, 24(110):1–43, 2023.

- [17] Sai Praneeth Karimireddy, Satyen Kale, Mehryar Mohri, Sashank J. Reddi, Sebastian U. Stich, and Ananda Theertha Suresh. Scaffold: Stochastic controlled averaging for federated learning, 2021.

- [18] Hao Yu, Sen Yang, and Shenghuo Zhu. Parallel restarted sgd with faster convergence and less communication: Demystifying why model averaging works for deep learning, 2018.

- [19] Andreea B. Alexandru and George J. Pappas. Private weighted sum aggregation. IEEE Transactions on Control of Network Systems, 9(1):219–230, 2022.

- [20] Dzmitry Huba, John Nguyen, Kshitiz Malik, Ruiyu Zhu, Mike Rabbat, Ashkan Yousefpour, Carole-Jean Wu, Hongyuan Zhan, Pavel Ustinov, Harish Srinivas, et al. Papaya: Practical, private, and scalable federated learning. Proceedings of Machine Learning and Systems, 4:814–832, 2022.

- [21] Donald Shenaj, Marco Toldo, Alberto Rigon, and Pietro Zanuttigh. Asynchronous federated continual learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, pages 5055–5063, June 2023.

- [22] Zachary Charles, Zachary Garrett, Zhouyuan Huo, Sergei Shmulyian, and Virginia Smith. On large-cohort training for federated learning. Advances in neural information processing systems, 34:20461–20475, 2021.

- [23] Marten van Dijk, Nhuong V Nguyen, Toan N Nguyen, Lam M Nguyen, Quoc Tran-Dinh, and Phuong Ha Nguyen. Asynchronous federated learning with reduced number of rounds and with differential privacy from less aggregated gaussian noise. arXiv preprint arXiv:2007.09208, 2020.

- [24] Jinhyun So, Ramy E Ali, Başak Güler, and A Salman Avestimehr. Secure aggregation for buffered asynchronous federated learning. arXiv preprint arXiv:2110.02177, 2021.

- [25] Yifan Shi, Yingqi Liu, Yan Sun, Zihao Lin, Li Shen, Xueqian Wang, and Dacheng Tao. Towards more suitable personalization in federated learning via decentralized partial model training, 2023.

- [26] Yuchen Zeng, Hongxu Chen, and Kangwook Lee. Improving fairness via federated learning, 2022.

- [27] Xuezhen Tu, Kun Zhu, Nguyen Cong Luong, Dusit Niyato, Yang Zhang, and Juan Li. Incentive mechanisms for federated learning: From economic and game theoretic perspective, 2021.

Appendix

Appendix A Proof of Convergence

In this section, we prove the main convergence result for FedStaleWeight. Recall that FedStaleWeight updates can be described as the following update rule:

| Description | Symbol |

|---|---|

| Number of server updates, server update index | |

| Set of clients contributing to update index | |

| Number of clients, client index | , or |

| Number of local steps per round, local step index | |

| Server model after steps | |

| Stochastic gradient at client | |

| Local learning rate at local step | |

| Global learning rate | |

| Number of clients per update | b |

| Local and global gradient variance | |

| Delay or staleness of the client ’s model update for the -th server update | |

| Maximum staleness for buffer size of | |

| Normalized FedStaleWeight re-weighting with respect to other elements | |

| for buffer for agent such that |

As in [2], in addition to our assumptions above, we assume that is a uniform subset of : in other words, any client is equally likely to contribute in any given round. We can ensure this is the case in practice by, if a client contributes to a current update round, only allowing the server to sample that client once the current update is complete.

Theorem 2.

We first restate a useful lemma from [2] which we use below.

Lemma 1.

, where the total expectation is evaluated over the randomness with respect to client participation and the stochastic gradient taken by a client.

Proof. By -smoothness assumption,

We then derive the upper bounds on and . We expand as follows:

Using conditional expectation, we can expand the expectation as

where takes the expectation of the history of time-steps, takes the expectation of the distribution of clients contributing at time-step over all clients and over the stochastic gradient of one step on a client.

Using the identity

we further expand as follows:

We expand as follows:

We can choose step sizes such that , meaning that we can choose for all . We then get the following inequality:

We can telescope and expand as follows. Note that this variance term after telescoping breaks down into four key portions:

Using our assumptions on -smoothness, we can further reduce this as follows. We note that due to unbiasedness of the sample mean, on average, the mean over all agent’s gradients is equal to the mean of the gradients of agents sampled at each stage.

We can produce an upper-bound on the staleness error (first term above) as follows:

Taking the expectation of this with respect to , we get:

Recalling our definition of and our assumption on bounded staleness, we can see that given some max-staleness , we see that the largest that can be is an aggregation buffer with one agent with maximum expected staleness with all other agents having zero staleness (and un-normalized weighting).

The expectation for the staleness error becomes bounded as follows, using the above result and Lemma 1:

Likewise for the local drift error, we can bound it similarly using Lemma 1:

Finally, we can bound the re-weighting error as follows:

We note from before that the largest that can be is . Consequently, the smallest that can be is an agent with zero expected staleness (minimizing the numerator), with all other buffer agents having maximum expected staleness (maximizing the denominator):

We can then upper-bound as follows, denoting this as quantity :

| (11) |

We arrive at the following expectation of , given the above result and Assumption 3:

Thus, combining everything, denoting and , we have

Rearranging and summing from to , we get the following:

Thus, we conclude

∎