Feedback Communication over Individual Channels

Abstract

We consider the problem of communicating over a channel for which no mathematical model is specified. We present achievable rates as a function of the channel input and output sequences known a-posteriori for discrete and continuous channels. Furthermore we present a rate-adaptive scheme employing feedback which achieves these rates asymptotically without prior knowledge of the channel behavior.

I Introduction

The problem of communicating over a channel with an individual, predetermined noise sequence which is not known to the sender and receiver was addressed by Shayevitz and Feder [1][2] and Eswaran et. al. [3][4]. The simple example discussed in [1] is of a binary channel where the error sequence can be any unknown sequence. Using perfect feedback and common randomness, communication is shown to be possible in a rate approaching the capacity of the binary symmetric channel (BSC) whose the error probability equals the empirical error probability of the sequence (the relative number of ’1’-s in ). Subsequently both authors extended this model to general discrete channels and modulu-additive channels ([3],[2] resp.) with an individual state sequence, and showed that the empirical mutual information can be attained.

In this work we take this model one step further. We consider a channel where no specific probabilistic or mathematical relation between the input and the output is assumed. We term this channel an individual channel and we would like to characterize the achievable rate using only the input and output sequences. The decoder may have a feedback link in which the channel output or other information from the decoder can be sent back. Without this feedback it would not be possible to match the rate of transmission to the quality of the channel so outage would be inevitable. This model has various advantages and disadvantages compared to the classical one, however there is no question about the reality of the model: this is the only channel model that we know for sure exists in nature. This point of view is similar to the approach used in universal source coding of individual sequences where the goal is to asymptotically attain for each sequence the same coding rate achieved by the best encoder from a model class, tuned to the sequence.

Just to inspire thought, let us ask the following question: suppose the sequence with power encodes a message and is transmitted over a continuous real-valued input channel. The output sequence is . One can think of as a noise sequence and measure its power . The rate is the capacity of a Gaussian additive channel with the same noise variance. Is the rate also achievable in the individual case, under appropriate definitions ?

The way it was posed, the answer to this question would be ”no”, since this model predicts rate of bit/use for the channel whose output which cannot convey any information. However with the slight restatement done in the next section (see Eq.(2) below) the answer would be ”yes”.

We consider two classes of individual channels: discrete input and output channels and continuous real valued input and output channels. In both cases we assume that feedback and common randomness exist (perfect feedback is not required). In [5] we address also the case where feedback does not exist, which yields interesting results, but to keep the presentation concise we focus here on the more important case of feedback communication. The main result is that with small amount of feedback, a communication at a rate close to the empirical mutual information (or its Gaussian equivalent for continuous channels) can be achieved, without any prior knowledge, or assumptions, about the channel structure. Here we present the main result and the communication scheme obtaining it and give an outline of the proof. The full proof is omitted and appears in [5]. We also give several examples and highlight areas for further study.

II Overview of the main results obtained so far

We start with a high level overview of the definitions and results. The discussion below is conceptual rather than accurate, while the detailed definitions follow in the next section.

We say a given rate function is achieved by a communication scheme with feedback if for large block size , data at rate close to or exceeding is decoded successfully with arbitrarily large probability for every output sequence and almost every input sequence. Roughly speaking, this means that in any instance of the system operation, where a specific was the input and a specific was the output, the communication rate had been at least . Note that the only statistical assumptions are related to the common randomness, and we consider the rate (message size) and error probability conditioned on a specific input and output, where the error probability is averaged over common randomness.

The definition of achievability is not complete without stating the input distribution, since it affects the empirical rate. For example, by setting one can attain every rate function where in a void way, since other sequences will never appear. In contrast with classical results of information theory, we do not use the input distribution only as a means to show the existence of good codes: taking advantage of the common randomness we require the encoder to emit input symbols that are random and distributed according to a defined prior (currently we assume i.i.d. distribution).

In this paper we focus on rate functions that depend on the instantaneous (zero order) empirical statistics. Extension to higher order models seems technical. For the discrete channel we show that a rate

| (1) |

is achievable with any input distribution where denotes the empirical mutual information [6]. For the continuous (real valued) channel we show that a rate

| (2) |

is achievable with Gaussian input distribution , where is the empirical correlation factor between the input and output sequences (at this stage for simplicity is defined in a slightly non standard way without subtracting the mean). Although the result regarding the continuous case is less tight, we show in [5] that this is the best rate function that can be defined by second order moments, and it is tight for the Gaussian additive channel (for this channel therefore ). The same rates apply also to the case of communication without feedback where achievability is defined by the ability to decode a fixed rate whenever .

We may now rephrase our example question from the introduction so that it will have an affirmative answer: given the input and output sequences, describe the output by the virtual additive channel with a gain , so the effective noise sequence is . Chose so that , i.e. . An equivalent condition is that minimizes . The resulting is the LMMSE coefficient in estimation of from (assuming zero mean), i.e. . Define the effective noise power as , and the effective . It is easy to check that . Then according to Eq.(2) the rate is achievable, in the sense defined above. Reexamining the counter example we gave above, in this model if we set we obtain and therefore , or equivalently the effective channel has and , therefore (instead of , and ).

As will be seen, we achieve these rates by random coding and universal decoders, and use iterated instances of rateless coding. The scheme is able to operate asymptotically with ”zero rate” feedback (meaning any positive capacity of the feedback channel suffices). A similar although more complicated scheme was used in [3]. The main differences are the use of training to evaluate the stopping condition as well as a different code construction and are summarized in [5].

The classical point of view first assumes a channel model and then devises a communication system optimized for it. Here we take the inverse direction: we devise a communication system without assumptions on the channel which guarantees rates depending on channel behavior. The channel model we assume is more stringent than the probabilistic and semi-probabilistic models since we make less assumptions about the channel, and the error probability and rate are required to be met for (almost) every input and output sequence (rather than on average). This change of viewpoint does not make probabilistic or semi probabilistic channel models redundant but merely suggests an alternative. By using a channel model we can formalize questions relating to optimality such as capacity (single user, networks) and error exponent as well as guarantee a communication rate a-priori. Another aspect is that we pay a price for universality. Even if one considers an individual channel scheme that guarantees asymptotically optimum rates over a large class of channels, it can never consider all possible channels (block-wise), and for a finite block size it will have larger overhead (a reduction in the amount of information communicated with same error probability) compared to a scheme optimized for the specific channel.

Several concepts used in this work such as common randomness and rateless coding, are borrowed from prior work on arbitrarily varying channels (AVC, see for example [7][8]) compound channels with feedback [9][10] and individual noise sequence channels with feedback [2][3]. It is worth noting [11] where a somewhat similar concept was used in defining an achievable communication rate by properties of the channel input and output. An important observation is that a strict definition of capacity exists only for fixed rate systems (where the capacity is the supremum of achievable rates) while in rate adaptive communication there is some freedom in determining the rate function.

Following our results, the individual channel approach becomes a very natural starting point for determining achievable rates for various probabilistic and semi-probabilistic models (AVC, individual noise sequences, probabilistic models, compound channels) under the realm of randomized encoders, since the achievable rates for these models follow easily from the achievable rates for specific sequences, and the law of large numbers. We will give some examples later on.

III Definition of variable rate communication system with feedback

A randomized block encoder and decoder pair for the channel (defined by the two alphabets ) with block length adaptive rate and feedback communicates a message expressed by the infinite sequence . The system is defined using a random variable distributed over the set (the common randomness) and a feedback alphabet . The encoder is defined by a series of mappings and the decoder is defined by a feedback function , a decoding function and a rate function . The error probability for message is defined as , i.e. recovery of the first bits by the decoder is considered a successful reception. This system is illustrated in figure 1.

IV Statement of the main result

We consider two cases:

-

1.

discrete: The input and output alphabets are discrete and finite, and the prior can be arbitrarily chosen

-

2.

continuous: The input and output alphabets are real valued and the prior is Gaussian

The scheme proposed below satisfies the following theorem with respect to these two cases:

Theorem 1 (Theorems 3,4 of [5]).

For every there is large enough and random encoder and decoder with feedback and variable rate over block size with a subset , such that:

-

•

The distribution of the input sequence is independently of the feedback and message

-

•

The probability of error is smaller than for any

-

•

For any input sequence and output sequence the rate is , where

(3) -

•

The probability of the subset is bounded by

The limit , which can be arbitrarily large, reflects the fact the communication rate is finite, even when ( = 1 in the continuous case). In the discrete case can be omitted (by selecting ).

Regarding the subset as we shall see in the proof outline there are some sequences for which poor rate is obtained, and since we committed to an input distribution we cannot avoid them. However there is an important distinction between claiming for example that ”for each the probability of is at most ” and the claim made in the theorem that ” only when belongs to a subset with probability at most ”. The first claim is weaker since choosing as a function of may potentially increase the probability of beyond , by attempting to select for every a sequence for which is a bad input sequence. This weakness is avoided in the second claim. A consequence of this definition is that the probability of is bounded by for any conditional probability over the sequences. The probability can be absorbed into with the implication that the error probability becomes limited to the set (see [5]).

V The proposed rate adaptive scheme

The following communication scheme sends indices from over channel uses (or equivalently sends the number in resolution ), where is fixed, and varies according to empirical channel behavior. The building block is a rateless transmission of one of codewords ( information units), which is iterated until the -th symbol is reached. The codebook consists of codewords of length , where all symbols are drawn i.i.d. and known to the sender and receiver.

In each rateless block , a new index is sent to the receiver. denotes the absolute time index . Block starts from index , where , and is incremented following the decoder’s decision to terminate a block. After symbol is reached the transmission stops and the number of blocks sent is . The transmission of each block follows the procedure described below:

-

1.

The encoder sends index by sending the symbols of codeword : , and incrementing until the decoder announces the end of the block. Note that different blocks use different symbols from the codebook.

- 2.

-

3.

When the end of block is announced one of the fulfilling Eq.(4) is determined as the index of the decoded codeword (breaking ties arbitrarily).

-

4.

If symbol is reached without fulfilling Eq.(4), then the last block is terminated without decoding.

The threshold is defined as:

| (5) |

The scheme achieves the claims of Theorem 1 when is chosen to increase as . The scheme uses one bit of feedback per channel use, however the same asymptotical rates are obtained if (a possibly delayed)) feedback is sent only once every symbols (for any ), therefore we can claim the theorem holds with ”zero rate” feedback.

VI Outline of the proof of the main result

The error analysis is based on two lemmas (Lemma 1 and Lemma 4 of [5]) which state that the probability of the metric used in section V to exceed a given threshold when the -length is drawn i.i.d. independently of (and from a Gaussian distribution in the continuous case), is approximately , or more accurately:

| (6) |

where . This bound determines the pairwise error probability. Using this bound with and the union bound (over competing codewords and over decoding attempts), we show that the error probability is bounded below .

The analysis of the rate is more intricate. Basically it relies on the fact that when a block is decoded, the metric exceeds the threshold at the last symbol, but it lies below the threshold at the previous symbol, therefore roughly speaking, at the end of the block where is the instantaneous transmission rate over the block. Therefore the empirical rate is attained per rateless block and a convexity argument is used in order to show that the total rate (average of over blocks) is at least the empirical rate measured over the complete sequences.

There are several difficulties, however. Considering for example the discrete case, since the rate achieved instantaneously over a rateless block is approximately the empirical mutual information over the block, we would like to claim that the averaged rate over rateless blocks is greater or equal to the empirical mutual information over the entire transmission, which implies convexity of the empirical mutual information. However the mutual information is concave with respect to the input distribution. Here, the input distribution is the empirical distribution over rateless blocks, whose limits are determined during transmission by the decoding rule and depend on the channel output . Another difficulty is that the last symbol of each block is not fully utilized: the empirical mutual information crosses the threshold at the last symbol. But whether it crosses it just barely, or crosses it by a significant extent, the rates our scheme attains remain the same. However a large increase in empirical mutual information at the last symbol increases the target rate thus increasing the gap between the target rate and the rate attained. Here, a ”good” channel is bad for our purpose. Since we operate under an arbitrary channel regime, this increase is not bounded by the average information contents of a single symbol. This is especially evident in the continuous case where is unbounded. A similar difficulty arises when bounding the loss from the potentially unfinished last block in the transmission: since is arbitrary it can be determined so that this block has the best mutual information.

We resolve the aforementioned difficulties by proving a property we term ”likely convexity” (Lemmas 5,6 in [5]): given a partitioning of the symbols into subsets, we show that if the number of subsets does not grow too fast, and independently of their size, there is a group of sequences with vanishing probability, such that if , the mutual information (in the discrete case) and the squared correlation factor (in the continuous case) are convex up to an arbitrarily small offset , i.e. the convex combination of mutual information (resp. ) over the subsets, weighted by their size, exceeds the mutual information (resp. ) measured over the entire symbols minus . The likely convexity is used to bound the loss from unused symbols (by bounding their number, and the mutual information or correlation factor, resp.), as well as show that the mean rate over rateless blocks meets or exceeds the overall empirical rate (mutual information or its Gaussian counterpart). Convexity of follows from convexity of by Jensen’s inequality.

The likely convexity property results in the existence of the subset of bad sequences. An example for such a sequence is the sequence of zeros followed by ones (for the binary channel), in which at most one block will be sent, and thus the asymptotic rate tends to , although the empirical distribution is () and the empirical mutual information may be .

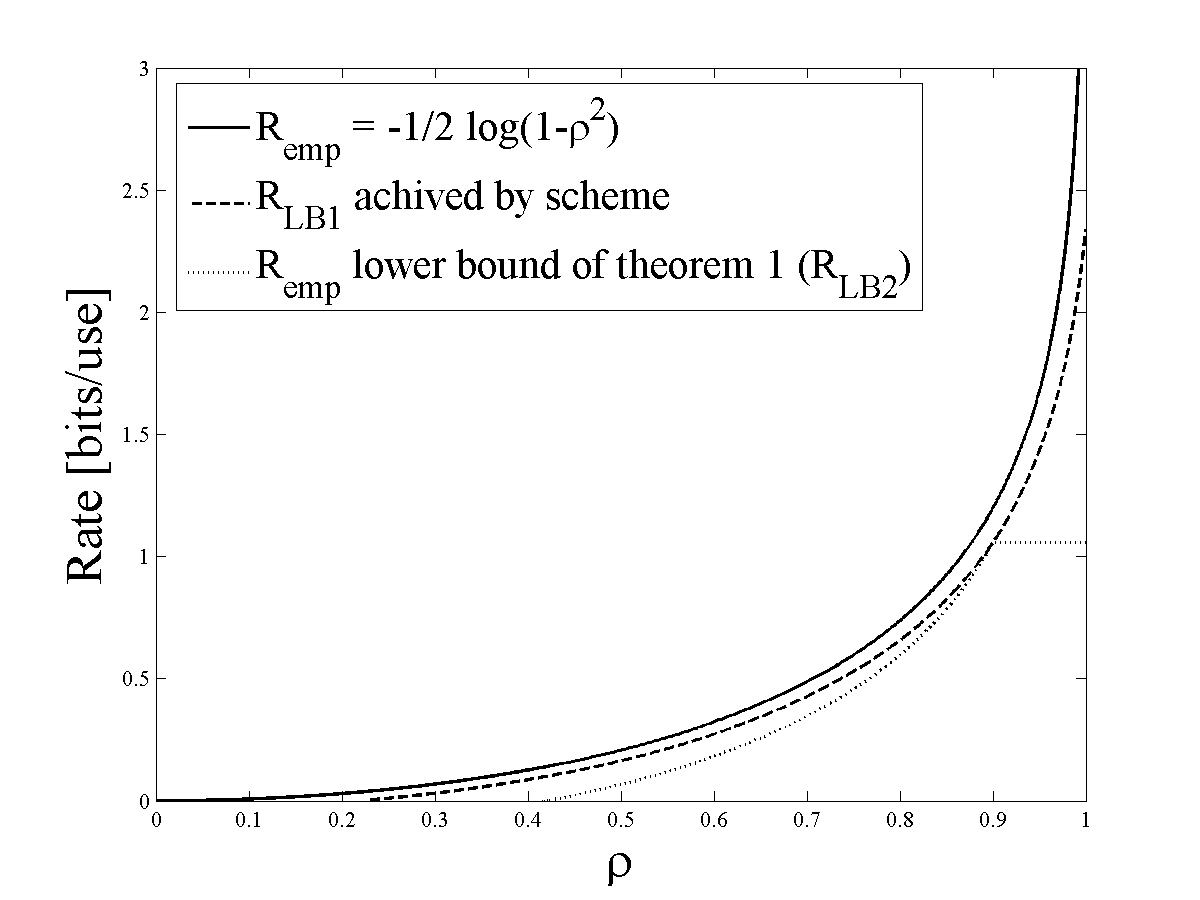

Finally, in order to make sure the error probability, the probability of , and the various rate offsets inserted by the communication system and by the proof technique all tend to zero as , the information contents of each block is required to increase at a rate . As part of the proof in [5] we introduce several lemmas which seem to constitute fundamental and useful tools in analyzing individual sequences. Figure (2) illustrates a lower bound for the rate achieved by the proposed scheme for finite (termed ) which is calculated in [5], as well as a bound () satisfying the form defined in Theorem 1.

VII Examples

In this section we give some examples to illustrate the model developed in this paper. Further details appear in [5].

VII-A Non linear channels

The expression determines a rate which is always achievable using a Gaussian prior, and is useful for analyzing non linear channels. As an example, transmitter noise generated by power amplifier distortions is usually modeled as an additive noise, although it is correlated with the transmitted signal, resulting in an overly optimistic model. Using the procedure described in the overview of finding the coefficient such that this noise is orthogonal to the transmitted signal we can model the non linearity as an effective gain plus an additive noise. The rates computed using this model are always achievable, and thus are a practical alternative to calculating the channel capacity, and enable simplified modeling of the distortion as an additive noise.

VII-B Channels that fail the zero order and the correlation model

The fact we used the zero-order empirical distribution makes the scheme less effective for channels with memory. For example for the error free channel the achieved rate would be 0 (with high probability). Similarly for the correlation model if then . The remedy should be sought in employing higher order empirical distributions and in the continuous case in using tighter approximations of the empirical statistics (e.g. by higher order statistics).

VII-C Application to other channel models

As we noted in the overview, the results obtained for the arbitrary channel model constitute a convenient starting point for analyzing channel models which have a full or partial probabilistic behavior. It is clear that results regarding achievable rates in fully probabilistic, compound, arbitrarily varying and individual noise sequence models can be obtained from applying the weak law of large numbers to Theorem 1 (limited, in general, to the randomized encoders regime). For example the result of [1] for the binary channel can be easily reconstructed by applying the scheme with , asymptotically approaching (or exceeding) the rate:

| (7) |

Since is i.i.d. so is , and the limit follows from the law of large numbers and the continuity of . In [5] we consider the discrete channel with state sequence presented by [3] where the sequence is potentially determined by an adversary knowing the past channel inputs and outputs (as opposed to a fixed sequence assumed in [3]), and show by similar arguments that the same communication rates can be attained. This result is a superset of the results of [3] and [2], and is new, to our knowledge. Applying Theorem 1 the proof is simple: it only remains to show through a probabilistic calculation that the difference between the empirical mutual information and the target rate (the state averaged mutual information defined in [3]) converges to 0 in probability.

A more anecdotic particular case is the additive Gaussian channel where by Theorem 2 and Lemma 4 of [5] we obtained a very simple proof for the achievability part of this channel’s capacity, using simple and geometrical considerations without the heavy machinery of AEPs or error exponents, and by employing a maximum correlation factor decoder rather than the maximum likelihood (minimum Euclidian distance) decoder.

VIII Further study

This work lays the foundations and introduces the new concept of individual channels together with basic achievability results. Following that, there are many open questions. To name the most outstanding ones:

-

•

Extensions of the model to include time dependency

-

•

Definition of the empirical mutual information for continuous alphabets, and extension of the scheme to approach this empirical mutual information. Unification of the discrete and continuous cases, and extension to multiple input/output channels.

-

•

Analysis of the overheads and their dependence on the model complexity (asymptotical rate - overhead tradeoff)

-

•

Best asymptotical error rates

-

•

Determining and adjusting the channel input to channel behavior (e.g. by adjusting the prior), and considering alternatives to the strict constraint imposed here on the input prior

-

•

Outer bounds on achievable rates

-

•

The minimal amount of randomization required to attain the empirical mutual information

In [5] we give additional details and make some initial comments about these directions.

References

- [1] O. Shayevitz and M. Feder, ”Communicating using Feedback over a Binary Channel with Arbitrary Noise Sequence”, International Symposium on Information Theory (ISIT), Adelaide, Australia, September 2005.

- [2] Ofer Shayevitz and Meir Feder, ”Achieving the Empirical Capacity Using Feedback Part I: Memoryless Additive Models”, Dept. of Electrical Engineering Systems, Tel Aviv University, Tel Aviv 69978, Israel

- [3] Krishnan Eswaran, Anand D. Sarwate, Anant Sahai, and Michael Gastpar, ”Limited feedback achieves the empirical capacity,” Department of Electrical Engineering and Computer Sciences, University of California, arXiv:0711.0237v1 [cs.IT] 2 Nov 2007.

- [4] K. Eswaran and A.D. Sarwate and A. Sahai and M. Gastpar, ”Using zero-rate feedback on binary additive channels with individual noise sequences,” Proceedings of the 2007 Infernational Symposium on Information Theory (ISIT 2007), Nice, France, June 2007

- [5] Yuval Lomnitz and Meir Feder, ”Communication over Individual Channels,” arXiv:0901.1473v1 [cs.IT], 11 Jan 2009, http://arxiv.org/abs/0901.1473v1

- [6] V. D. Goppa, Nonprobabilistic mutual information without memory, Probl. Contr. Inform. Theory, vol. 4, pp. 97-102, 1975

- [7] A. Lapidoth and P. Narayan, ”Reliable communication under channel uncertainty,” Information Theory, IEEE Transactions on , vol.44, no.6, pp.2148-2177, Oct 1998

- [8] I. Csiszár and P. Narayan, ”The Capacity of the Arbitrarily Varying Channel Revisited : Positivity, Constraints”, IEEE Transactions On Information Theory, Vol. 34, No. 2, March 1988

- [9] Nadav Shulman, ”Communication over an Unknown Channel via Common Broadcasting,” Ph.D. dissertation, Tel Aviv University, 2003

- [10] Aslan Tchamkerten and I. Emre Telatar, ”Variable Length Coding Over an Unknown Channel”, IEEE Transactions On Information Theory, Vol. 52, No. 5, May 2006

- [11] Mukul Agarwal, Anant Sahai, Sanjoy Mitter, ”Coding into a source: a direct inverse Rate-Distortion theorem,” arXiv:cs/0610142v1 [cs.IT] (presented at Allerton 06)