Feedback Strategies for Hypersonic Pursuit of a Ground Evader

Abstract

In this paper, we present a game-theoretic feedback terminal guidance law for an autonomous, unpowered hypersonic pursuit vehicle that seeks to intercept an evading ground target whose motion is constrained in a one-dimensional space. We formulate this problem as a pursuit-evasion game whose saddle point solution is in general difficult to compute onboard the hypersonic vehicle due to its highly nonlinear dynamics. To overcome this computational complexity, we linearize the nonlinear hypersonic dynamics around a reference trajectory and subsequently utilize feedback control design techniques from Linear Quadratic Differential Games (LQDGs). In our proposed guidance algorithm, the hypersonic vehicle computes its open-loop optimal state and input trajectories off-line and prior to the commencement of the game. These trajectories are then used to linearize the nonlinear equations of hypersonic motion. Subsequently, using this linearized system model, we formulate an auxiliary two-player zero-sum LQDG which is effective in the neighborhood of the given reference trajectory and derive its feedback saddle point strategy that allows the hypersonic vehicle to modify its trajectory online in response to the target’s evasive maneuvers. We provide numerical simulations to showcase the performance of our proposed guidance law.

1 Introduction

Trajectory optimization for hypersonic re-entry vehicles is in general a challenging problem that does not admit analytic solutions due to the vehicle’s highly nonlinear dynamics. For this reason, most research efforts in this area have focused on developing or enhancing numerical optimal control techniques, including direct [1] and indirect [2] methods, for efficient trajectory optimization. In the presence of parametric uncertainty or external disturbances, however, trajectories pre-computed offline may no longer be optimal during flight, which raises the need for a hypersonic vehicle to have an inner-loop feedback guidance system for stabilization or tracking. Traditionally, neighboring optimal (or extremal) control [3] has played an important role in aerospace applications and in the control of nonlinear systems that are subject to perturbations, for example in the initial states or terminal constraints. For applications of neighboring optimal control in hypersonic trajectory design, one can refer to, for instance, [4, 5].

Robust control and guidance problems for hypersonic vehicles have been addressed by several different methods in the literature, including LQR-based feedback guidance [6], desensitized optimal control [7], and deep learning methods [8], to name but a few. Most of the recent work in hypersonic guidance is, however, focused on steering a hypersonic vehicle to a target that is either static or moves in a known, deterministic fashion and is not capable of maneuvering or evading. If the target can evade, the hypersonic trajectory optimization problem evolves into a differential game [9], in which we are interested in finding a feedback strategy for the hypersonic vehicle to reshape its trajectory on the fly in response to the evading actions of the target.

Differential game theory, first proposed by Isaacs in his pioneering work [9], has been widely used in aerospace, defense, and robotics applications as a framework to model adversarial interactions between two or more players. Pursuit-evasion games, a special class of differential games in which a group of players attempts to capture another group of players, provide a powerful tool to obtain the worst-case strategies for intercepting a maneuvering target [10, 11]. To the best of our knowledge, however, differential game theory has not yet been applied in the relevant literature to the problem of intercepting an evader with a hypersonic pursuer. This is due to the analytical and computational complexities of the problem, which can be attributed to the nonlinear dynamics of the hypersonic vehicle and the dependence of the atmospheric density upon the altitude. Although numerical methods for computing an open-loop representation of the feedback saddle point solution in two-player differential games have been well studied [12], such open-loop solutions can be ineffective against the target’s unexpected evasion.

The main contribution of this work is a novel formulation of the problem of intercepting a maneuvering ground target by a hypersonic pursuit vehicle as a tractable pursuit-evasion game. After solving for the target’s optimal evasion strategy based on a simplified version of the original nonlinear differential game, we reduce the latter game to an optimal control problem which can readily be solved with existing numerical techniques (either direct or indirect). Thereafter, we linearize the nonlinear hypersonic dynamics around the reference game trajectory and in turn formulate an auxiliary differential game whose feedback saddle point solution can be obtained analytically and computed in real time, far more efficiently than that of the original nonlinear pursuit-evasion game.

The rest of this paper is structured as follows. In Section 2, the dynamical system models of an unpowered hypersonic pursuit vehicle and a maneuvering ground target are introduced to formulate a pursuit-evasion game. In Section 3, an approximation of the saddle point strategy of the target is computed and subsequently, the pursuit-evasion game is reduced to an optimal control problem whose solution will serve as a reference pursuit trajectory. In Section 4, the game dynamics are linearized with respect to the reference trajectory and an auxiliary two-player zero-sum LQDG is constructed. In Section 5, numerical simulations are presented. Lastly, in Section 6, some concluding remarks are provided.

2 Two-Player Pursuit-Evasion Game

In this section, the state space models that describe the motion of a hypersonic pursuit vehicle (the pursuer, $P$) and a maneuvering ground target (the evader, $E$) are introduced. Subsequently, the corresponding interception problem is formulated as a pursuit-evasion game.

2.1 Problem setup and system models

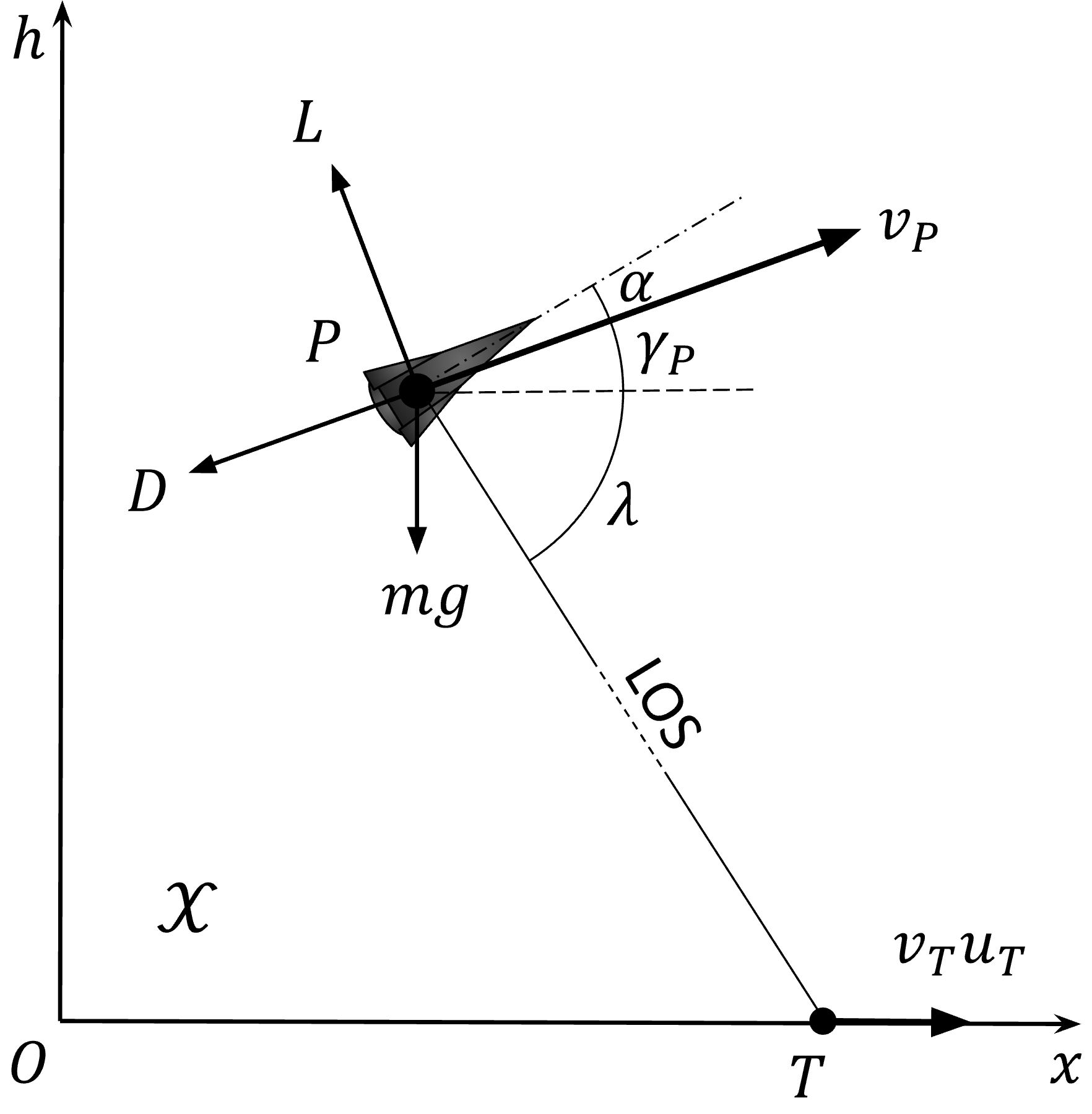

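The game space, denoted by $\mathcal{G}$, is defined as a vertical plane with the horizontal axis being the downrange and the vertical axis being the altitude, where $\mathcal{G} \subset \mathbb{R}^2$. Let $\mathcal{X}_P$ be a state space and let $\mathcal{U}_P$ denote a compact set of admissible inputs; then the non-affine, nonlinear equations of planar hypersonic motion of $P$ in $\mathcal{G}$ are given by

$$\dot{\mathbf{x}}_P = f_P(\mathbf{x}_P, u_P), \qquad \mathbf{x}_P(0) = \mathbf{x}_P^0, \tag{1}$$

where $\mathbf{x}_P \in \mathcal{X}_P$ (resp., $\mathbf{x}_P^0$) is the state (resp., initial state), $u_P \in \mathcal{U}_P$ is the control input, and $f_P$ is the vector field of $P$. In particular, $f_P$ includes

$$\dot{x}_P = v_P \cos\gamma_P, \tag{2}$$

$$\dot{y}_P = v_P \sin\gamma_P, \tag{3}$$

$$\dot{v}_P = -\frac{D}{m} - g \sin\gamma_P, \tag{4}$$

$$\dot{\gamma}_P = \frac{L}{m v_P} - \frac{g \cos\gamma_P}{v_P}, \tag{5}$$

where $x_P$, $y_P$, $v_P$, and $\gamma_P$ denote the horizontal and vertical position, velocity, and flight path angle of $P$, respectively. Furthermore, $m$ is the vehicle mass, $g$ is gravitational acceleration, and $L$ and $D$ are the lift and drag forces which satisfy

$$L = \tfrac{1}{2}\,\rho(y_P)\, v_P^2\, S\, C_L, \tag{6}$$

$$D = \tfrac{1}{2}\,\rho(y_P)\, v_P^2\, S\, C_D. \tag{7}$$

Here, $S$ is the vehicle reference area and $\rho$ is an exponential function that determines an approximation of the atmosphere density in terms of $P$’s vertical position, that is,

$$\rho(y_P) = \rho_0 \exp\!\left(-\frac{y_P}{H}\right), \tag{8}$$

where $\rho_0$ is the surface air density and $H$ is the scale height. The lift and drag coefficients, $C_L$ and $C_D$, are known functions of the angle of attack $\alpha$:

$$C_L = C_{L_1}\,\alpha, \tag{9}$$

$$C_D = C_{D_0} + C_{D_2}\,\alpha^2, \tag{10}$$

where $\alpha$ (angle of attack) is the control input of $P$, i.e., $u_P = \alpha$. Additionally, we denote the position of $P$ as $\mathbf{p}_P = [x_P, y_P]^\top$ (projection of $\mathbf{x}_P$ on $\mathcal{G}$).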
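As a rough illustration of the planar equations of motion described above, the following Python sketch evaluates the right-hand side of the pursuer's dynamics. It assumes a lift coefficient that is linear in the angle of attack and a drag polar that is quadratic in it (the form suggested by the single lift coefficient and the two drag coefficients of Table 1), SI units, and illustrative values for the gravitational acceleration, surface air density, and scale height. The function and constant names are hypothetical and are not taken from the paper.

```python
import math

# Vehicle parameters from Table 1 (units are not preserved in the text; SI assumed).
S_REF = 0.2919              # reference area
MASS = 340.1943             # vehicle mass
CL1 = 1.5658                # assumed model: C_L = CL1 * alpha
CD0, CD2 = 0.0612, 1.6537   # assumed model: C_D = CD0 + CD2 * alpha**2

# Hypothetical environment constants, chosen only for illustration.
G0 = 9.81                   # gravitational acceleration
RHO0 = 1.225                # surface air density
H_SCALE = 7200.0            # scale height


def pursuer_dynamics(state, alpha):
    """Return the time derivative of (x, y, v, gamma) for angle of attack alpha."""
    x, y, v, gamma = state
    rho = RHO0 * math.exp(-y / H_SCALE)      # exponential atmosphere
    q_s = 0.5 * rho * v**2 * S_REF           # dynamic pressure times area
    lift = q_s * CL1 * alpha
    drag = q_s * (CD0 + CD2 * alpha**2)
    return (
        v * math.cos(gamma),                           # downrange rate
        v * math.sin(gamma),                           # altitude rate
        -drag / MASS - G0 * math.sin(gamma),           # speed rate
        lift / (MASS * v) - G0 * math.cos(gamma) / v,  # flight path angle rate
    )
```

Such a function can be handed to any standard ODE integrator or collocation scheme; the angle of attack enters non-affinely through the quadratic drag term.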

The target $E$ is a ground vehicle whose motion is constrained along the horizontal axis. In this paper, we assume that the speed of $E$ is much less than that of $P$, yet $E$ has better maneuverability. In particular, its motion is modeled by the so-called simple motion kinematics [9] such that $E$ can directly control its velocity vector, that is,

$$\dot{\mathbf{x}}_E = \mathbf{u}_E, \qquad \mathbf{x}_E(0) = \mathbf{x}_E^0, \tag{11}$$

where $\mathbf{x}_E = [x_E, y_E]^\top$ (resp., $\mathbf{x}_E^0$) is the state (resp., initial state) and $\mathbf{u}_E$ is the control input of $E$, with $x_E$ and $y_E$ denoting her horizontal and vertical position, respectively. Also, $\mathbf{u}_E \in \mathcal{U}_E = \{\mathbf{u} : \|\mathbf{u}\| \le \bar{v}_E\}$, where $\bar{v}_E$ denotes $E$’s maximum speed. Note that, since we assume the motion of $E$ is constrained along the horizontal axis, it holds that $y_E(t) = 0$ for all $t \ge 0$.

2.2 The pursuit-evasion game

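In the target interception problem we will discuss, $P$’s goal is to intercept $E$ as soon as possible while $E$’s goal is to delay the interception. Hence, this interception problem can naturally be formulated as a pursuit-evasion game [9]:

Problem 1 (Two-player pursuit-evasion game)

$$
\begin{aligned}
\min_{\mu_P}\ \max_{\mu_E}\quad & t_f \\
\text{s.t.}\quad & \dot{\mathbf{x}}_P = f_P(\mathbf{x}_P, u_P), \quad \mathbf{x}_P(0) = \mathbf{x}_P^0, \\
& \dot{\mathbf{x}}_E = \mathbf{u}_E, \quad \mathbf{x}_E(0) = \mathbf{x}_E^0, \\
& (\mathbf{x}_P(t_f), \mathbf{x}_E(t_f)) \in \mathcal{T},
\end{aligned}
$$

where $\mathcal{T} = \{(\mathbf{x}_P, \mathbf{x}_E) : \|\mathbf{p}_P - \mathbf{x}_E\| \le \epsilon\}$ with $\epsilon$ denoting the capture radius. In words, given the system models (1) and (11) and the initial positions of $P$ and $E$, we are interested in finding the feedback strategies $\mu_P^*$ and $\mu_E^*$ such that, if these strategies are applied to (1) and (11), $E$ will be intercepted by $P$ at time $t_f$ (time of capture). The planar geometry of pursuit-evasion between $P$ and $E$ is illustrated in Figure 1, where all the variables therein have been defined except for the line-of-sight angle of depression.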

To ensure the feasibility of the game in Problem 1, we make a few assumptions:

Assumption 1

(Perfect information game) Both the pursuer and the target can observe their opponent’s state at every instant of time.

Assumption 2

(Game of kind) The initial states of both players are given such that capture is guaranteed under optimal play, i.e., the time of capture is finite.

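The feedback strategies $\mu_P^*$ and $\mu_E^*$ that constitute a (feedback) saddle point of the game must satisfy

$$t_f(\mu_P^*, \mu_E) \le t_f(\mu_P^*, \mu_E^*) \le t_f(\mu_P, \mu_E^*), \tag{12}$$

which implies that if $P$ plays optimally while $E$ plays non-optimally, $P$ can take advantage of $E$’s non-optimal play and capture $E$ earlier than the saddle point time of capture. Conversely, if $P$ plays non-optimally while $E$ plays optimally, $E$ can delay (or even avoid) the termination of the game. The game in Problem 1 always possesses a saddle point that satisfies (12) because the Hamiltonian of the game

$$\mathcal{H} = 1 + \boldsymbol{\lambda}_P^\top f_P(\mathbf{x}_P, u_P) + \boldsymbol{\lambda}_E^\top \mathbf{u}_E, \tag{13}$$

where $\boldsymbol{\lambda}_P$ and $\boldsymbol{\lambda}_E$ denote co-states, satisfies the Isaacs’ condition [9]:

$$\min_{u_P \in \mathcal{U}_P}\ \max_{\mathbf{u}_E \in \mathcal{U}_E} \mathcal{H} = \max_{\mathbf{u}_E \in \mathcal{U}_E}\ \min_{u_P \in \mathcal{U}_P} \mathcal{H}, \tag{14}$$

since (13) is separable in the control inputs $u_P$ and $\mathbf{u}_E$. The Value of the game, which then is assured to exist, is given by

$$V(\mathbf{x}_P^0, \mathbf{x}_E^0) = t_f(\mu_P^*, \mu_E^*). \tag{15}$$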

3 Open-Loop Pursuit-Evasion Trajectories

In this section, we first derive the optimal strategy for the target in Problem 1 by imposing a few additional assumptions on the dynamical behaviors of the pursuer and the target in order to obtain an approximate value function. With this strategy, we reduce the game in Problem 1 to the pursuer’s one-sided optimal control problem and compute his optimal trajectory.

3.1 Approximated saddle point strategy for optimal evasion

First, we assume that ’s flight trajectory during the end game is a straight line, i.e., the flight path angle of aligns with its line of sight () and that the speed of linearly decreases with time. In consideration of these assumptions, the motion of can also be described by the simple motion kinematics (with decreasing maximum speed), and therefore the value function can be approximated as a linear function of the initial distance between the two players as follows:

| (16) |

with where denotes the average velocity of during the flight. The partial derivative of (16) with respect to is given by

| (17) |

Substituting (17) into the Hamilton-Jacobi-Isaacs (HJI) equation [13] and using the fact that the value function is time-invariant, one can derive

from which we can conclude that the saddle point strategy for is given by

| (18) |

In other words, the initial relative position of the target with respect to the pursuer in the horizontal coordinate determines the optimal heading direction of the target. We can further assume that, excluding the case when the two players share the same horizontal position, no switching will occur during the game because, due to the assumption that the pursuit trajectory is a straight line, the sign of the relative position will never change. Thus, strategy (18) can also be considered an open-loop strategy for the target.
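The open-loop character of this strategy can be sketched in a few lines of Python. The sketch assumes that the target flees horizontally away from the pursuer at maximum speed, which is the natural reading of the discussion above but is stated here as an assumption; the function and argument names are hypothetical.

```python
def open_loop_evasion(x_target0, x_pursuer0, v_max):
    """Constant horizontal target velocity chosen once from the initial geometry.

    Assumption: the target runs away from the pursuer at its maximum speed.
    """
    if x_target0 == x_pursuer0:
        # The heading is not determined by the sign rule in this degenerate case.
        raise ValueError("direction undefined when horizontal positions coincide")
    return v_max if x_target0 > x_pursuer0 else -v_max
```

Because the returned input depends only on the initial positions, it can be evaluated before the game starts and held fixed afterwards.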

3.2 Optimal pursuit trajectory

Despite the pursuer’s highly nonlinear equations of motion, given the fact that the optimal evasion trajectory of the target is a straight line whose direction is determined by the initial relative horizontal distance, the open-loop representation of the saddle point strategy of the pursuer is no longer difficult to derive. Provided the initial positions of the two players, we first determine the target’s (open-loop) strategy by (18), which we call the Open-Loop Evasion Strategy (OLES). Since the evasion direction of the target is time-invariant and known a priori to the pursuer, Problem 1 can be reduced to a one-sided optimal control problem for the pursuer as follows:

Problem 2 (The pursuer’s optimal control problem)

| s.t. | |||

Problem 2 can be readily solved using any existing numerical optimal control technique (either direct or indirect). Details on the implementation of such techniques are omitted herein due to space limitations; one can refer instead to standard references in the field (for instance, see [14] and references therein). Such numerical techniques will yield the (nominal) minimum time of capture $t_f^*$ as well as the optimal state and input trajectories of the pursuer, denoted by $\mathbf{x}_P^*$ and $u_P^*$, respectively. We will also refer to $u_P^*$ as the Open-Loop Pursuit Strategy (OLPS). In addition, the state trajectories of the pursuer and the target generated with the application of the OLPS and OLES are together referred to as the Open-Loop Saddle Point State Trajectory (OLSPT).

4 Auxiliary Zero-Sum LQDG

The OLPS, albeit optimal in an open-loop sense, is in fact not effective against the target when the latter makes sub-optimal decisions and/or decisions that are inconsistent with the OLES. This is because the OLPS cannot take into account the deviation in the target’s state. In other words, contrary to our discussion of (12), the pursuer cannot take advantage of the target’s sub-optimal play with the OLPS alone. To cope with possible unexpected maneuvers of the target, some auxiliary feedback input is needed such that the pursuit trajectory can be continuously reshaped in response to the state deviation of the target.

Fortunately, in light of the fact that the pursuer and the target have a large speed difference, we may further assume that any resulting pursuit-evasion trajectory caused by the target’s evading maneuvers (whether optimal or not) will not significantly differ from the OLSPT. This particular assumption allows us to linearize the dynamics of the players around the OLSPT and construct an auxiliary differential game in the neighborhood of this trajectory. Thereafter, we can derive an auxiliary feedback strategy for the pursuer and combine it with the OLPS.

4.1 Linear approximation of game dynamics

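To obtain an approximate linear state space model for the equations of motion of $P$ given in (1) which is valid in the neighborhood of the OLSPT, we can take the first-order Taylor series expansion of the vector field $f_P$ and substitute the solution of Problem 2 (namely, $\mathbf{x}_P^*$, $u_P^*$, and $t_f^*$) into the resulting linearized equations. By doing so, we obtain the following linear time-varying (LTV) system model for $P$:

$$\delta\dot{\mathbf{x}}_P = A_P(t)\,\delta\mathbf{x}_P + B_P(t)\,\delta u_P, \tag{19}$$

where $\delta\mathbf{x}_P = \mathbf{x}_P - \mathbf{x}_P^*$ and $\delta u_P = u_P - u_P^*$ are the deviations of the current state and input of $P$ from the open-loop ones, respectively. Moreover,

$$A_P(t) = \left.\frac{\partial f_P}{\partial \mathbf{x}_P}\right|_{(\mathbf{x}_P^*(t),\, u_P^*(t))}, \tag{20}$$

$$B_P(t) = \left.\frac{\partial f_P}{\partial u_P}\right|_{(\mathbf{x}_P^*(t),\, u_P^*(t))}, \tag{21}$$

where $t \in [0, t_f^*]$. Note that the matrices $A_P$ and $B_P$ are only defined over the finite time interval $[0, t_f^*]$.

Similarly, $E$’s motion near her optimal evasion trajectory can be derived (in an almost trivial way) as

$$\delta\dot{\mathbf{x}}_E = \delta\mathbf{u}_E, \tag{22}$$

which is in the same form as (11).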
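When analytic Jacobians are inconvenient, the linearization about a reference trajectory can be approximated numerically. The sketch below uses central finite differences and is only one possible way to obtain the time-varying matrices; the helper name `linearize`, the step-size rule, and the toy system are assumptions made for illustration and do not come from the paper.

```python
import numpy as np


def linearize(f, x_ref, u_ref, eps=1e-6):
    """Central-difference Jacobians A = df/dx and B = df/du at (x_ref, u_ref)."""
    x_ref = np.asarray(x_ref, dtype=float)
    u_ref = np.atleast_1d(np.asarray(u_ref, dtype=float))
    n, m = x_ref.size, u_ref.size
    A = np.zeros((n, n))
    B = np.zeros((n, m))
    for i in range(n):
        dx = np.zeros(n)
        dx[i] = eps * max(1.0, abs(x_ref[i]))   # scale step with state magnitude
        A[:, i] = (f(x_ref + dx, u_ref) - f(x_ref - dx, u_ref)) / (2.0 * dx[i])
    for j in range(m):
        du = np.zeros(m)
        du[j] = eps * max(1.0, abs(u_ref[j]))
        B[:, j] = (f(x_ref, u_ref + du) - f(x_ref, u_ref - du)) / (2.0 * du[j])
    return A, B


def toy_dynamics(x, u):
    """Hypothetical 2-state pendulum-like system used only to exercise `linearize`."""
    return np.array([x[1], -np.sin(x[0]) + u[0]])


A, B = linearize(toy_dynamics, [0.0, 0.0], [0.0])
```

Evaluating `linearize` at each sample of the reference state and input trajectories yields sampled versions of the time-varying system matrices, which can then be interpolated over the finite game horizon.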

4.2 Auxiliary differential game

Now, given the linear system models (19) and (22), an auxiliary differential game can be constructed around the OLSPT. The corresponding game dynamics are written as

| (23) |

where is the joint state deviation, is the initial joint state deviation, and , and

| (26) | ||||

| (27) | ||||

| (28) |

Note again that these matrices are also defined over the finite interval only. Furthermore, strategies and are not bounded in this formulation. With this LTV game dynamics, we construct a two-player zero-sum LQDG:

Problem 3 (Auxiliary two-player zero-sum LQDG)

| s.t. |

where the auxiliary payoff functional is the weighted combination of the soft terminal constraint on miss distance and the accumulated control inputs. The weight coefficients , , and are positive constants which can be tuned via trial and error. The fixed final time of the game corresponds to the nominal time of capture which we have already found in Problem 2. Let us additionally define a matrix

| (31) |

then the auxiliary payoff functional can equivalently be written as

| (32) |

The feedback saddle point solution of Problem 3, namely and which satisfy a similar inequality as (12):

can readily be derived as [15]

| (33) | ||||

| (34) |

where corresponds to the solution of the following Matrix Riccati Differential Equation (MRDE):

| (35) |

with

| (36) |

By solving the MRDE backward in time, one can compute the feedback gains in (33) and (34). Finally, to ensure that the aggregate feedback strategies meet the input constraints defined in Section 2, we define a saturation function for each player such that

| (37) |

where . Hence, the (bounded) aggregate feedback strategies are given by

| (38) | ||||

| (39) |
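The backward integration of the Riccati equation and the saturation of the aggregate input can be sketched as follows. This is a minimal illustration under stated assumptions rather than the paper's implementation: it assumes a payoff of the standard zero-sum form $\tfrac{1}{2}\delta x(t_f)^\top Z_f\,\delta x(t_f) + \tfrac{1}{2}\int (r_p\,\delta u_P^2 - r_e\,\delta u_E^2)\,dt$, for which the Riccati equation reads $-\dot{Z} = A^\top Z + Z A - Z\,(B_p B_p^\top / r_p - B_e B_e^\top / r_e)\,Z$, and all function names and weight symbols (`r_p`, `r_e`, `Z_f`) are hypothetical.

```python
import numpy as np


def solve_game_riccati(A, B_p, B_e, r_p, r_e, Z_f, t_f, steps=200):
    """Integrate the game Riccati equation backward from Z(t_f) = Z_f with RK4.

    A, B_p, B_e are callables of time returning 2-D arrays.
    Returns the increasing time grid and the matching list of Z matrices.
    """
    def rhs(t, Z):
        S = B_p(t) @ B_p(t).T / r_p - B_e(t) @ B_e(t).T / r_e
        return -(A(t).T @ Z + Z @ A(t) - Z @ S @ Z)

    ts = np.linspace(t_f, 0.0, steps + 1)   # runs from t_f down to 0
    h = ts[1] - ts[0]                        # negative step size
    Zs = [np.asarray(Z_f, dtype=float)]
    for t in ts[:-1]:
        Z = Zs[-1]
        k1 = rhs(t, Z)
        k2 = rhs(t + h / 2, Z + h / 2 * k1)
        k3 = rhs(t + h / 2, Z + h / 2 * k2)
        k4 = rhs(t + h, Z + h * k3)
        Zs.append(Z + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4))
    return ts[::-1], Zs[::-1]


def feedback_inputs(Z, B_p, B_e, r_p, r_e, dx):
    """Saddle point inputs of the assumed LQ game: minimizer first, maximizer second."""
    du_p = -(B_p.T @ Z @ dx) / r_p
    du_e = (B_e.T @ Z @ dx) / r_e
    return du_p, du_e


def saturate(u, u_min, u_max):
    """Clip an aggregate input to its admissible interval."""
    return float(np.clip(u, u_min, u_max))
```

Since the system matrices and the nominal final time are known before the game starts, the whole gain schedule can be computed offline and only the matrix-vector products in `feedback_inputs` need to be evaluated in flight. Note that the maximizer's weight must be large enough for the Riccati solution to remain bounded over the horizon.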

Table 1: Vehicle parameters of the pursuer.

| Parameter | Symbol | Value (units) |
|---|---|---|
| Reference area | | 0.2919 |
| Mass | | 340.1943 |
| Lift coefficient | | 1.5658 |
| Drag coefficients | | 0.0612, 1.6537 |

Table 2: Initial conditions of the pursuer and the target.

| Variable | Initial value (units) |
|---|---|
| | 50,000 |
| | 20,000 |
| | 4,000 |
| | 0.4 |
| | 0 |
| | 0 |

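Table 3: Miss distances for the three evasion strategies.

| Evasion strategy | Miss distance (units) |
|---|---|
| First (optimal evasion) | 13.1660 |
| Second (opposite-direction evasion) | 16.8271 |
| Third (random evasion) | 3.1349 |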

5 Numerical Simulations

In this section, we present numerical simulation results of the proposed game-theoretic terminal guidance law. The vehicle parameters of are specified in Table 1, whereas the initial conditions of and are listed in Table 2. Other parameters that are necessary for the simulations are selected as: surface air density , scale height , and maximum target speed . The angle of attack, , takes values in the compact set . The capture radius is assumed to be zero, which means that the game terminates if the positions of and coincide.

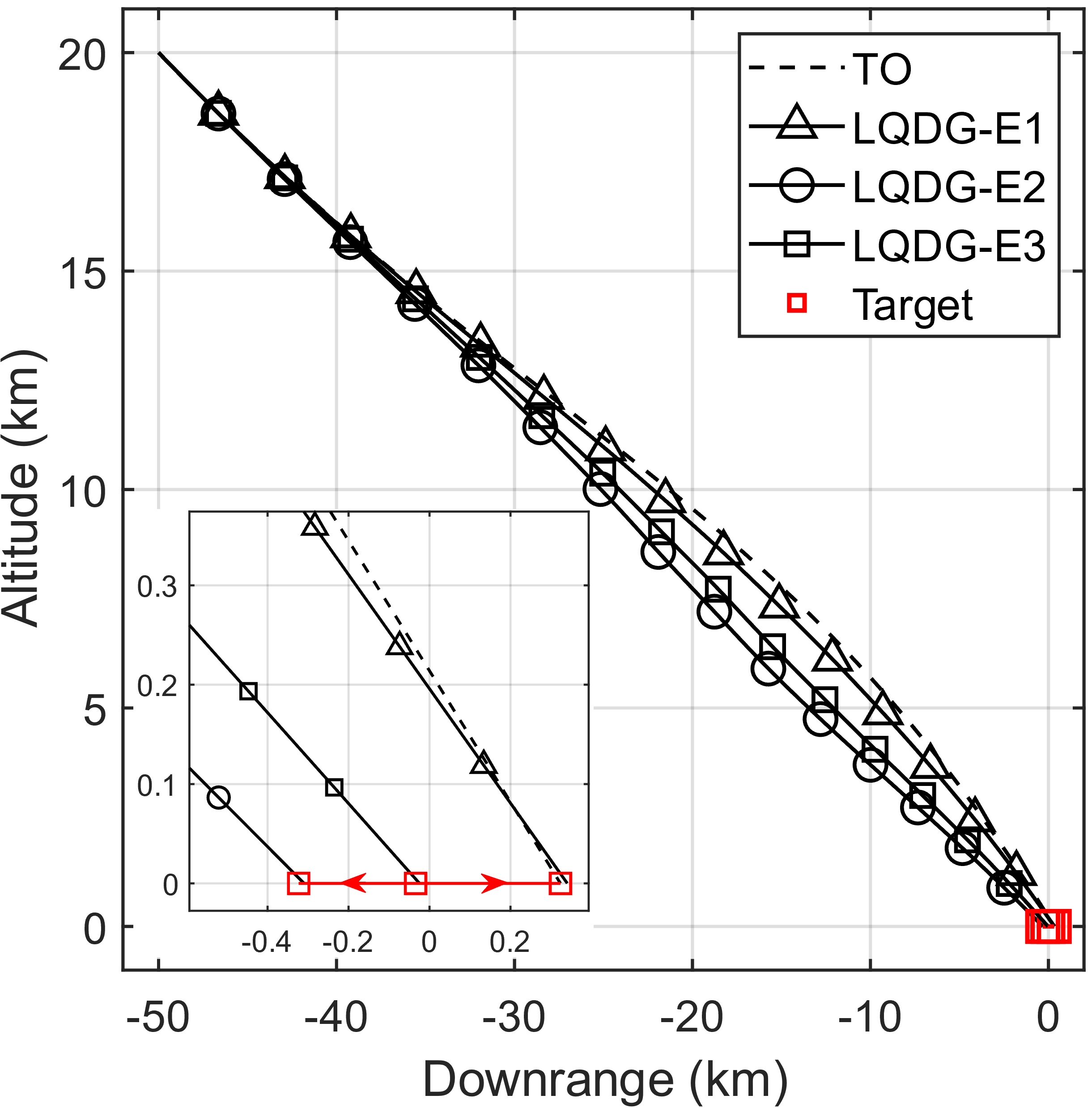

We first solve Problem 2 by utilizing a numerical optimal control technique (for instance, direct collocation [14]) to obtain the OLPS. Note that one can use any numerical method here (either direct or indirect), and in fact the OLPS and the corresponding reference trajectory do not have to be optimal (i.e., they may be sub-optimal) in order to apply the aggregate guidance laws proposed in Section 4; however, the closer this reference trajectory is to the optimal one, the smaller one can obtain. From Table 2 we see that is initially located to the right of (i.e., ), which implies that the OLES, according to (3.1), is given by . In the following simulation results, we refer to ’s optimal trajectory, , as the Time Optimal (TO) trajectory, which is illustrated as a dash line in Figure 2. In addition, the nominal time of capture is obtained from the numerical solver as .

Given the TO trajectory and the nominal time of capture, we can compute the time-varying matrices and as well as and prior to the commencement of game, all of which are defined over the finite time horizon . Given these matrices, the MRDE (35) can be solved numerically (backward as said) to compute the feedback gains in (33) and (34), also prior to the commencement of game. Proper selection of the weighted coefficients, , , and , is the most critical process of this guidance algorithm, which must be done via trial and error. The tuning procedures we have adopted are as follows; first, we find a sufficiently smaller value of from its current value such that the auxiliary control input does not override the OLPS , in which case can easily stall. Once this is done, the final position of may be located at the desired position but not at the proper position (non-zero altitude). For this reason, we next increase the value of such that . Our final choice for the weighted coefficients are as follows: , , and .

In order to investigate the performance of our guidance law, namely (38), against unpredictable evasion of , we apply it against who employs one of the following three evasion strategies: The first strategy, named , is the optimal evasion, i.e., for all . In this case, we expect that will simply follow (or track) its reference trajectory since the deviation of ’s horizontal position () will always be zero. The second strategy, , is evasion in the opposite direction of , i.e., for all . From the game-theoretic point of view, this strategy would be considered the worst strategy for since it would yield the smallest time of capture, only if knew its feedback saddle point strategy. Since employs, however, the proposed guidance law which is not necessarily optimal in Problem 1, can be used to test the robustness of the latter guidance law. Lastly, the third strategy, named , is random evasion which is meant to confuse by changing the evasion direction randomly; in particular, periodically chooses her control input to be a random sample from the (discrete) uniform distribution over the set .
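The random evasion strategy can be mimicked with a piecewise-constant input that is resampled at a fixed period. The sketch below is illustrative only: the sampled set is assumed to consist of the two extreme horizontal velocities (the actual set is not recoverable from the text), and the function names and the switching period are hypothetical.

```python
import math
import random


def random_evasion_schedule(t_final, period, v_max, seed=None):
    """Return (switch_times, inputs) for a piecewise-constant random evasion.

    Assumption: at each switch the target picks -v_max or +v_max with equal probability.
    """
    rng = random.Random(seed)
    n_intervals = math.ceil(t_final / period)
    times = [k * period for k in range(n_intervals)]
    inputs = [rng.choice((-v_max, v_max)) for _ in times]
    return times, inputs


def input_at(t, times, inputs):
    """Look up the input that is active at time t (zero-order hold)."""
    active = inputs[0]
    for t_switch, u in zip(times, inputs):
        if t_switch <= t:
            active = u
    return active
```

Fixing the seed makes a simulation run repeatable, which is convenient when comparing guidance laws against the same realization of the target's maneuvers.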

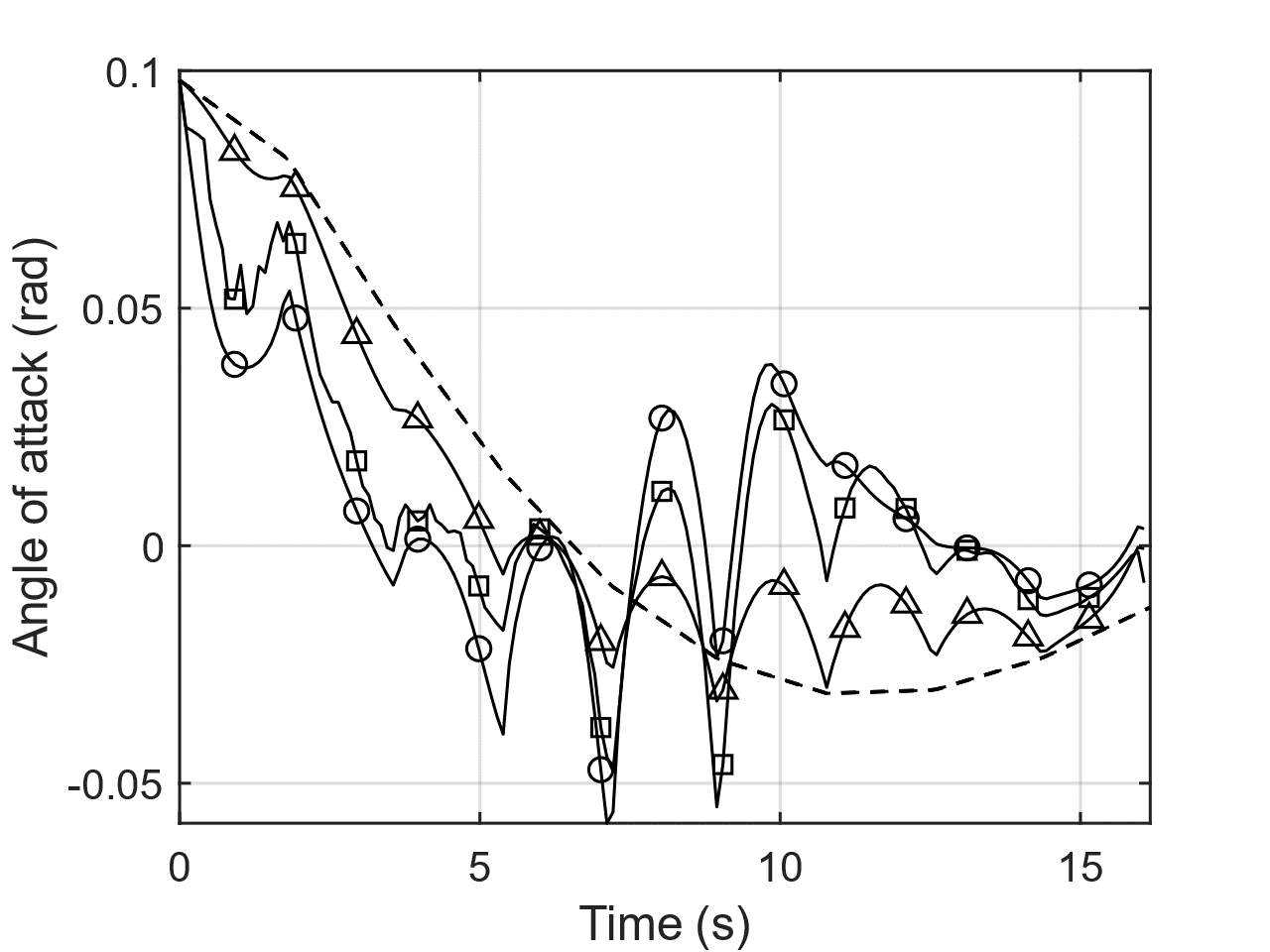

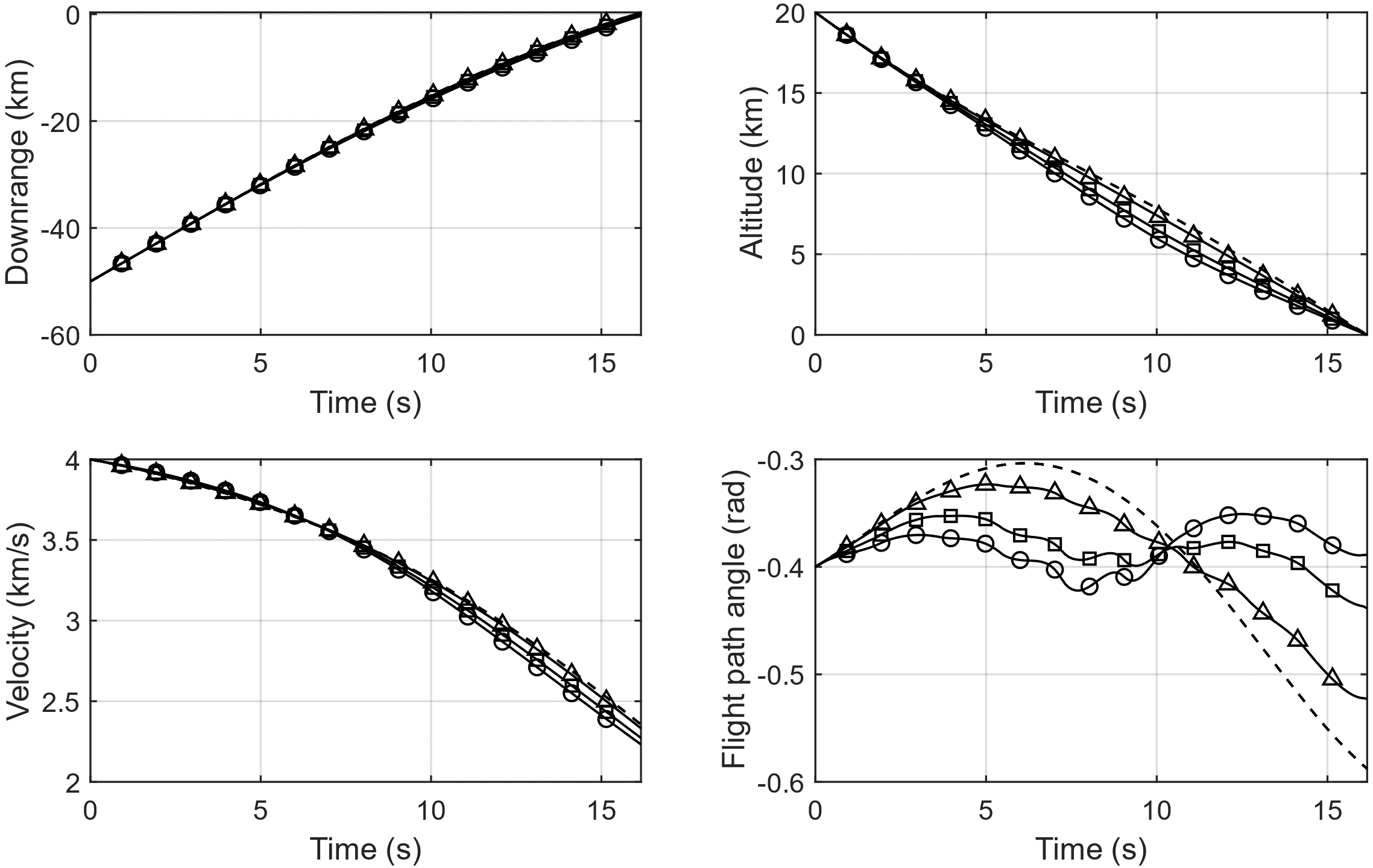

In Figure 2, the trajectories of the target corresponding to the three evasion strategies are shown as red curves, whereas the trajectories of the pursuer in response to these strategies are shown as black curves with different marker shapes. The miss distances are listed in Table 3, all of which turn out to be reasonably small. Since our terminal guidance law is prescribed with a fixed final time, in all three cases the pursuer reaches his final position at the nominal time of capture. It is interesting to observe that the pursuer’s trajectory against the optimal evasion strategy is, contrary to our earlier expectation, notably different from the TO trajectory. This is presumably because the pursuer employs the OLPS, originally a smooth function obtained from the numerical solver, as a piecewise continuous function in the numerical simulations. Despite the numerical error that comes from such discretization, however, the pursuer is still able to intercept the target with a reasonably small miss distance. Finally, the input and state trajectories of the pursuer are shown in Figures 3 and 4, respectively.

6 Conclusions

In this paper, we have proposed a terminal feedback guidance law that is based on a linearized approximation of the nonlinear hypersonic dynamics and the saddle point solution to a two-player zero-sum linear quadratic differential game. In the proposed guidance algorithm, given that the target’s optimal evasion is confined to one axis and under a few simplifying assumptions, an open-loop reference pursuit trajectory has been computed by solving a hypersonic trajectory optimization problem. The latter trajectory has then been used to linearize the nonlinear game dynamics and construct an auxiliary linear quadratic differential game whose saddle point solution is combined with the open-loop input. We have also presented numerical simulation results which indicate that, as long as the weight coefficients are properly selected via trial and error, our proposed guidance law performs well and allows the hypersonic pursuit vehicle to intercept the maneuvering (evading) target in finite time with reasonably small miss distances. One of the limitations of the proposed scheme is that it is only applicable to scenarios in which the target’s motion is constrained along a line. It is also required that the target’s position be known to the hypersonic pursuit vehicle at every time instant in order to compute the feedback control input derived from the auxiliary differential game along a reference pursuit trajectory. Possible future directions of this work include the consideration of scenarios in which the target may evade on a plane and in which the information given to the hypersonic pursuit vehicle on the target’s position is stochastic rather than deterministic.

References

- [1] D. A. Benson, G. T. Huntington, T. P. Thorvaldsen, and A. V. Rao, “Direct trajectory optimization and costate estimation via an orthogonal collocation method,” Journal of Guidance, Control, and Dynamics, vol. 29, no. 6, pp. 1435–1440, 2006.

- [2] M. Vedantam, M. R. Akella, and M. J. Grant, “Multi-stage stabilized continuation for indirect optimal control of hypersonic trajectories,” in AIAA Scitech 2020 Forum, 2020, p. 0472.

- [3] A. E. Bryson and Y.-C. Ho, Applied optimal control: optimization, estimation, and control. Routledge, 2018.

- [4] G. R. Eisler and D. G. Hull, “Guidance law for hypersonic descent to a point,” Journal of Guidance, Control, and Dynamics, vol. 17, no. 4, pp. 649–654, 1994.

- [5] J. Bain and J. Speyer, “Robust neighboring extremal guidance for the advanced launch system,” in Guidance, Navigation and Control Conference, 1993, p. 3750.

- [6] J. Carson, M. S. Epstein, D. G. MacMynowski, and R. M. Murray, “Optimal nonlinear guidance with inner-loop feedback for hypersonic re-entry,” in 2006 American Control Conference, 2006, pp. 6–pp.

- [7] V. R. Makkapati, J. Ridderhof, P. Tsiotras, J. Hart, and B. van Bloemen Waanders, “Desensitized trajectory optimization for hypersonic vehicles,” in 2021 IEEE Aerospace Conference (50100), 2021, pp. 1–10.

- [8] Y. Shi and Z. Wang, “Onboard generation of optimal trajectories for hypersonic vehicles using deep learning,” Journal of Spacecraft and Rockets, vol. 58, no. 2, pp. 400–414, 2021.

- [9] R. Isaacs, Differential Games: A Mathematical Theory with Applications to Warfare and Pursuit, Control and Optimization. New York, NY: Wiley, 1965.

- [10] V. Turetsky and J. Shinar, “Missile guidance laws based on pursuit–evasion game formulations,” Automatica, vol. 39, no. 4, pp. 607–618, 2003.

- [11] S. Battistini and T. Shima, “Differential games missile guidance with bearings-only measurements,” IEEE Transactions on Aerospace and Electronic Systems, vol. 50, no. 4, pp. 2906–2915, 2014.

- [12] K. Horie and B. A. Conway, “Optimal fighter pursuit-evasion maneuvers found via two-sided optimization,” Journal of Guidance, Control, and Dynamics, vol. 29, no. 1, pp. 105–112, 2006.

- [13] T. Başar and G. J. Olsder, Dynamic noncooperative game theory. SIAM, 1998.

- [14] M. Kelly, “An introduction to trajectory optimization: How to do your own direct collocation,” SIAM Review, vol. 59, no. 4, pp. 849–904, 2017.

- [15] D. Li and J. B. Cruz, “Defending an asset: a linear quadratic game approach,” IEEE Transactions on Aerospace and Electronic Systems, vol. 47, no. 2, pp. 1026–1044, 2011.

Yoonjae Lee received the B.S. degree in aerospace engineering from the University of California, San Diego, CA, USA in 2020. He is currently enrolled as a graduate student studying aerospace engineering in the Department of Aerospace Engineering and Engineering Mechanics at the University of Texas at Austin. His research is mainly focused on game theory, multi-agent systems, and pursuit-evasion games.

Efstathios Bakolas (Member, IEEE) received the Diploma degree in mechanical engineering with highest honors from the National Technical University of Athens, Athens, Greece, in 2004 and the M.S. and Ph.D. degrees in aerospace engineering from the Georgia Institute of Technology, Atlanta, GA, USA, in 2007 and 2011, respectively. He is currently an Associate Professor with the Department of Aerospace Engineering and Engineering Mechanics, The University of Texas at Austin, Austin, TX, USA. His research is mainly focused on stochastic optimal control theory, optimal decision-making, differential and dynamic games, control of uncertain systems, data-driven modeling and control of nonlinear systems, and control of aerospace systems.

Maruthi R. Akella is a tenured faculty member with the Department of Aerospace Engineering and Engineering Mechanics at The University of Texas at Austin (UT Austin) where he holds the Ashley H. Priddy Centennial Professorship in Engineering. He is the founding director for the Center for Autonomous Air Mobility and the faculty lead for the Control, Autonomy, and Robotics area at UT Austin. His research program encompasses control theoretic investigations of nonlinear and coordinated systems, vision-based sensing for state estimation, and development of integrated human and autonomous multivehicle systems. He is Editor-in-Chief for the Journal of the Astronautical Sciences and previously served as the Technical Editor (Space Systems) for the IEEE Transactions on Aerospace and Electronic Systems. He is a Fellow of the IEEE and AAS.