Fever Basketball: A Complex, Flexible, and Asynchronized Sports Game Environment for Multi-agent Reinforcement Learning

Fever Basketball: A Complex, Flexible, and Asynchronized Sports Game Environment for Multi-agent Reinforcement Learning

Abstract

The development of deep reinforcement learning (DRL) has benefited from the emergency of a variety type of game environments where new challenging problems are proposed and new algorithms can be tested safely and quickly, such as Board games, RTS, FPS, and MOBA games. However, many existing environments lack complexity and flexibility, and assume the actions are synchronously executed in multi-agent settings, which become less valuable. We introduce the Fever Basketball game, a novel reinforcement learning environment where agents are trained to play basketball game. It is a complex and challenging environment that supports multiple characters, multiple positions, and both the single-agent and multi-agent player control modes. In addition, to better simulate real-world basketball games, the execution time of actions differs among players, which makes Fever Basketball a novel asynchronized environment. We evaluate commonly used multi-agent algorithms of both independent learners and joint-action learners in three game scenarios with varying difficulties, and heuristically propose two baseline methods to diminish the extra non-stationarity brought by asynchronism in Fever Basketball Benchmarks. Besides, we propose an integrated curricula training (ICT) framework to better handle Fever Basketball problems, which includes several game-rule based cascading curricula learners and a coordination curricula switcher focusing on enhancing coordination within the team. The results show that the game remains challenging and can be used as a benchmark environment for studies like long-time horizon, sparse rewards, credit assignment, and non-stationarity, etc. in multi-agent settings.

Introduction

Deep reinforcement learning (DRL) has achieved great success in many domains, including games (Mnih et al. 2013; Silver et al. 2017; Lample and Chaplot 2017), recommendation systems (Munemasa et al. 2018), robot control (Haarnoja et al. 2018) and autonomous driving (Pan et al. 2017). Among all these domains, games are one of the most active and popular settings. Because they are simulations of reality and have relatively lower cost of trial and error. Besides, games can be run in parallel to collect experience for training, which is another advantage of facilitating the success of DRL. There have been a variety kinds of game environments nowadays, for example, Atari games (Mnih et al. 2013, 2015), board games (Silver et al. 2016, 2017), card games (Heinrich and Silver 2016), first-person shooting (FPS) games (Lample and Chaplot 2017), multiplayer online battle arena (MOBA) games (OpenAI 2018; Jiang, Ekwedike, and Liu 2018), real-time strategy (RTS) games (Vinyals et al. 2019; Liu et al. 2019). However, the lack of complexity and flexibility, and the assumption of synchronized actions in many existing environments that support multi-agent training remain potential barriers for better development of RL.

As a typical sports game (SPG), Fever Basketball simulates basic elements of basketball games (Figure ), which is challenging for modern RL algorithms. First of all, the long-time horizon and sparse rewards remain issues for most DRL methods. In basketball, it is normally not until scoring shall the agents get a reward (goal in or not), which may require a long sequence of consecutive events such as dribbling and passing the ball to teammates to break through the defense of opponents. Second, the whole basketball game is a combination of many challenging sub-tasks based on game rules, for example, the offense sub-task, the defense sub-task, and the sub-task of fighting for ball possession when the ball is free. Third, it is a multi-agent system that requires teammates to cooperate well to win the game. Fourth, players in reality have different reaction time, which makes the decision making asynchronized within the same team. Moreover, the different characters and positions classified according to players’ capabilities or tactical strategies such as center (C), power forward (PF), small forward (SF), point guard (PG), and shoot guard (SG) add extra stochasticity to the game. All of these reasons described above make basketball a challenging SPG.

In this paper, we propose the Fever Basketball Environment, a novel open-source asynchronized reinforcement learning environment where agents can learn to play one of the world’s most popular sports basketball. Building upon a commercial basketball game engine, our main contributions are as follows:

-

1)

We provide the Fever Basketball Environment, an advanced and challenging basketball simulator that supports all the major basketball rules.

-

2)

We provide the asynchronized Fever Basketball game clients to better simulate reality in multi-agent settings.

-

3)

We provide different training curricula (such as offense, defense, freeball, ballclear) for handling the whole basketball task.

-

4)

We provide various training scenarios (such as 1v1, 2v2, 3v3), multiple characters, and tasks of varying difficulties that can be used to compare different algorithms.

-

5)

We evaluate common algorithms for multi-agent scenarios and propose two heuristic methods for handling the asynchronism for joint-action learners.

-

6)

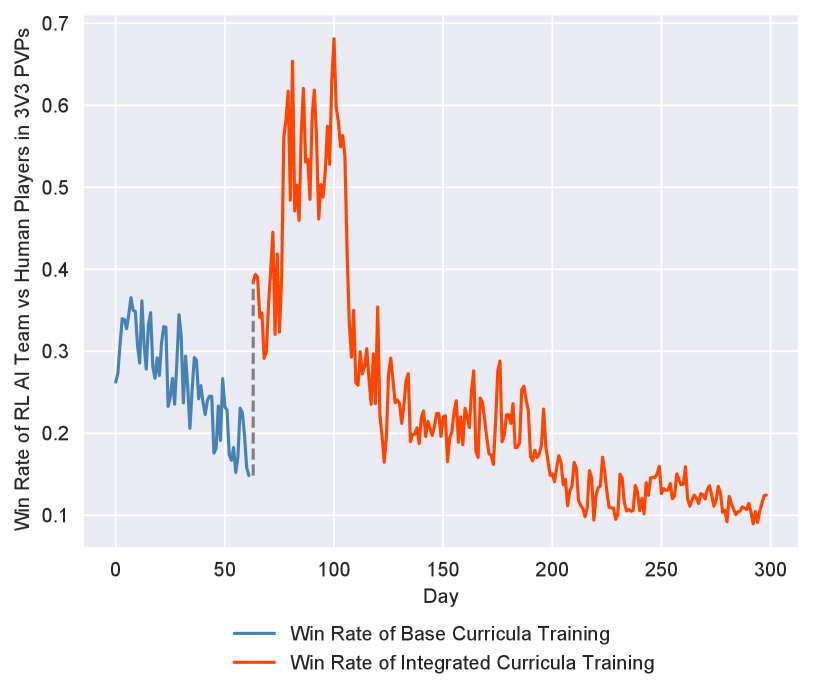

We propose an integrated curricula training (ICT) framework that reaches up to 70% win-rate during a 300-day online evaluation with human players.

Motivation and Related Works

The development of algorithms benefits from the emergence of new challenging problems, and game environments have been serving as the fundamental place where the reinforcement learning community tests its ideas nowadays. However, most of the existing environments have certain deficiencies which can be made up by Fever Basketball game:

Low task complexity.

As deep reinforcement learning algorithms become more sophisticated, existing environments with low task complexity and randomness become less challenging, and the benchmarks based on them become less informative (Juliani et al. 2018). For example, the canonical CartPole and MountainCar tasks (Sutton and Barto 2018) are too simple to distinguish the performance of different algorithms. Meanwhile, most of the agents of Atari games in the commonly used Arcade Learning Environment (Bellemare et al. 2013) have been trained to super-human level (Badia et al. 2020). The same applies to the DeepMind Lab (Beattie et al. 2016) and Procgen (Cobbe et al. 2019), the former of which consists of several relatively simple first-person navigation maze environments and the latter is a suite of several game-like environments mainly designed to benchmark generalization in reinforcement learning. Besides, games in OpenAI Retro (Nichol et al. 2018) such as Sonic The Hedgehog can be easily solved by existing algorithms (Schulman et al. 2017; Hessel et al. 2018).

Fixed number of agents.

Many existing environments only support the controlling of a fixed number of agents, and most of them are single-agent reinforcement learning (SARL) problems. For example, the Hard Eight (Paine et al. 2019) environment focuses on a single agent’s training to solve hard exploration problems. The Obstacle Tower Environment (Juliani et al. 2019) also only supports the training of a single agent to solve puzzles and make plans on multiple floors. The Atari games and OpenAI Retro games are also environments that support single-agent training. However, most of the real-world scenarios involve more than one agents, such as basketball matches and autonomous driving cars (Shalev-Shwartz, Shammah, and Shashua 2016), which can be naturally modeled as multi-agent systems (MAS) in a centralized or distributed manner. In addition to the challenges in SARL such as long time horizon and sparse rewards, MARL brings extra challenges such as non-stationarity (Papoudakis et al. 2019), credit assignment (Nguyen, Kumar, and Lau 2018) as well as scalability with the number of agents increasing (Hernandez-Leal, Kartal, and Taylor 2019; Zhang, Yang, and Başar 2019). Thus, platforms with flexible settings on the number of controlled agents become both important and valuable for relevant studies.

Synchronized actions.

Common SARL paradigm assumes that the environment will not change between when the environment state is observed and when the action is executed. The system is treated sequentially: the agent observes a state, freezes time while computing the action and applies the action, and then unfreezes time. The same settings normally apply to MARL, where multiple agents calculate actions together and then execute them at the same time step. However, it is usually not the case in the real world that the whole system’s decision making and executing processes are synchronized. This results from agents’ different reaction time and diverse action execution time under various situations, and it makes the whole MAS works asynchronously. For example, in multi-robot control areas, robots could behave asynchronously when executing different actions due to hardware limitations (Xiao et al. 2020).

Other related works.

There are also many other open-source environments focusing on certain game types and specific research fields. For example, the SMAC (Vinyals et al. 2017), which is a representative of the challenging RTS games, has been used as a test-bed for MARL algorithms despite that the additive and dense rewards settings could make it less challenging (Foerster et al. 2017; Rashid et al. 2018; Vinyals et al. 2019). The DeepMind Control Suite (Tassa et al. 2018), AI2Thor (Kolve et al. 2017), Habitat (Savva et al. 2019), and PyBullet (Coumans and Bai 2016) environments are all related to continuous control tasks. The most similar platform to ours is the Google Research Football (Kurach et al. 2019), which offers another kind of SPG: football. However, it is a synchronized game platform and there are many differences between football and basketball in terms of game settings like game rules, number of players as well as tactics.

Fever Basketball Games

Fever Basketball is an online basketball game, which simulates a half-court (length=11.4 meters, width=15 meters) basketball match between two teams**footnotemark: *11footnotetext: https://github.com/FuxiRL/FeverBasketball. The game includes the most common basketball aspects, such as jump ball, dribble, three-pointer, dunk, rebound, etc (see Figure 1LABEL:basketball_rule for a few examples). The objective of each team is to score as much as possible to win the match within a limited time.

Supported basketball elements.

The game offers more than 30 characters (Charles, Alex, Steven, etc.) of different positions (C, PF, SF, PG, SG) with various attributes and skills to choose from before a match, which largely enriches both the randomness and challenges of the game. At the beginning of each match, one player from each team will do the jump ball. The team which gets the ball will be the offense team and the other team will be the defense team. The player holding the ball in the offense team is in the state of attack and the other two players are in the state of assist. Players in the offense team can use offense actions (such as screen, fast break, jockey for position, etc.) and shooting actions (such as jump shot, layup, dunk, etc.) to score. Meanwhile, players in the defense team should try their best to prevent the offense team from scoring by applying defense strategy like one-on-one checks, steals, rejections, and so on. Once the ball is out of the hands of the possessed player such as after shoot or rebound, all of the players will be in the freeball states. At such a moment, if players of the defense team manage to fetch the ball, they need to go through an attack-defense switch process named ballclear to prepare for offense by dribbling out of the three-point-line. Once the offense team scores, the ball possession will be handed to the opposite team. A typical match lasts for three minutes (with an average FPS of 60) except for the overtime. The shoot clock violation (20 seconds) will be punished by handling the ball possession to the opposite team.

Supported player control modes.

Fever Basketball offers a convenient way to control game players by modifying corresponding keywords when launching the game clients. The number of players within each team can be chosen from , which covers both the single-agent training and the multi-agent training scenarios with increasing complexity and difficulty in a curriculum manner. Meanwhile, the position of each player can be chosen from {C, PF, SF, PG, SG} and the game characters can be switched freely within the players we provide. Furthermore, the game also provides three control modes. The first one is the Bot mode, where the agents can be trained with the built-in rule-based bots whose difficulty levels can be chosen from {easy, medium, hard} with different reaction time and shooting rate. The second one is the SelfPlay mode, which allows the training of both teams through self-play. The third mode is the Human mode, where the human player can control the specific position of the home team and fight against the built-in bots or pre-trained agents.

Game states and representations.

Raw game states in Fever Basketball are data packages received from game clients, which includes information like current scene name (attack, assist, defense, freeball, ballclear), general game information (such as attack remain time and scores), both teams’ information (such as the player type, player position, facing angle and shoot rate), ball information (such as ball position, ball velocity, owned player and owned team) and the results of the last action. Please see the detailed description in the Appendix. Besides, we also provide a vector-based representation wrapper class corresponding to each game scene as well as some useful functions like distance calculation of two coordinates, based on which researchers can easily define their state representations for training. We collect transition experience from 20 parallelled

Asynchronized game actions.

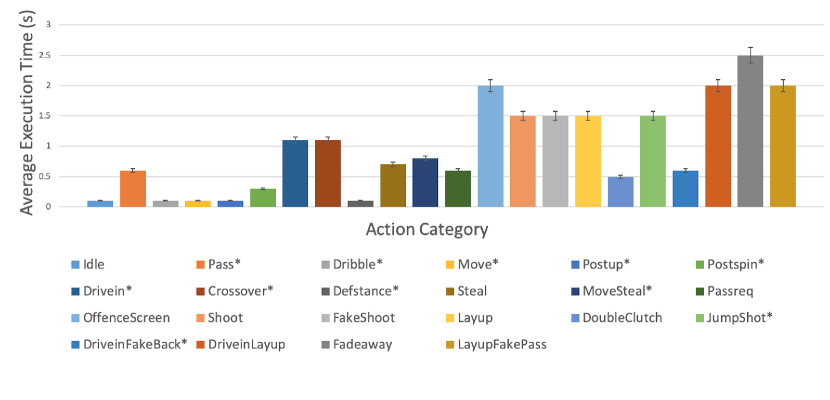

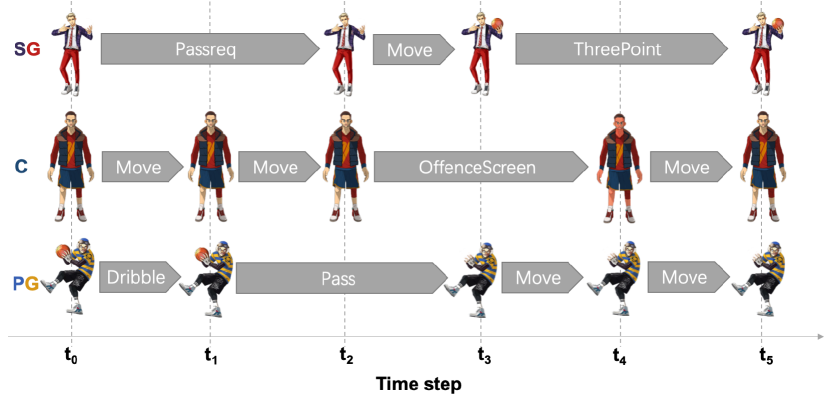

To better simulate the real-world basketball game, one of the key features of Fever Basketball is that a player’s primitive actions have different execution time (see Figure 2(a)), which makes the actions of the players within the same team asynchronized. For example, consider the offense scenario depicted in Figure 2(b), where the PG is dribbling the ball with the SG requesting the ball and the C keep moving at . After getting the ball at time step , which is passed from PG at time , the SG makes a three-point shot that costs two time steps of execution (i.e., finishing shooting at ), with C keeps doing the OffenceScreen action (i.e. pick-and-roll) from to . Unlike common MARL environments which assume the agents’ actions are synchronized, the asynchronism in Fever Basketball brings extra challenges for the current MARL algorithms, especially for the ones with centralized training (Sunehag et al. 2018; Rashid et al. 2018). Each agent faces a much more non-stationary environment since the other agents’ ongoing actions may have a large impact on the state transitions observed by the agent. Besides, the number of actions for different positions and different game scenes are listed in Table 1 (3v3 mode). A detailed description of these actions can be found in Appendix.

| C | PF | SF | PG | SG | |

| Attack | 29 | 30 | 43 | 35 | 42 |

| Defense | 19 | 19 | 19 | 27 | 27 |

| Freeball | 10 | 10 | 9 | 9 | 9 |

| Ballclear | 22 | 22 | 25 | 25 | 25 |

| Assist | 11 | 11 | 11 | 11 | 11 |

Game rewards settings.

Game rewards settings in Fever Basketball are also highly flexible and can be easily customized by researchers in terms of both the shaping rewards and the game rewards. We currently offer a set of game rewards related to corresponding game scenes. To be specific, in the offense scene (attack & assist), the agent will be rewarded with 2 or 3 if the team goals while being punished with -1 if the ball is blocked, stolen, lost, or time is up. Rewards settings in the defense scene are the opposite of the offense scene. For the freeball scene, the agent will be rewarded with 1 if it possesses the ball and will be punished with -1 if it loses (such as that the opponent gets the ball or time is up). In the ballclear scene, the agent will be rewarded with 1 if it gets the ball out of the three-point line successfully and will be punished similarly as those in the offense scene.

Fever Basketball Benchmarks

Fever Basketball is a complex, flexible, and highly customizable game environment which allows researchers to try new ideas and solve problems in basketball games. Compared with the single-agent mode, the multi-agent mode is more challenging and includes both competition and collaboration scenarios. In addition, it brings new challenges such as asynchronized actions. To evaluate the performance of existing algorithms and handle the asynchronism problems in Fever Basketball, we propose some heuristic methods and provide a set of benchmarks regarding the 3v3 tasks. In all of these tasks, the goal of the trained agents is to score as many points as possible in a limited amount of time (3 minutes per round) against the built-in bots, whose difficulty levels range from easy, medium to hard. In addition, to facilitate fair comparisons, we use the SG position for both teams.

Methods

Generally speaking, there are two major learning paradigms in MARL, namely the joint action learner (JAL) and the independent learner (IL) (Claus and Boutilier 1998). In cooperative settings, JAL, which also includes the centralised training with decentralised execution paradigm, assumes all the agents’ actions can be observed, such as VDN (Sunehag et al. 2018) and QMIX (Rashid et al. 2018). In contrast, IL only relies on its action and the coordination can be achieved through heuristics of optimistic and average rewards, such as HYQ (Matignon, Laurent, and Le Fort-Piat 2007), EXCEL(Hu et al. 2019).

The modeling of asynchronized actions in Fever Basketball differs from that of MacDec-POMDPs (Amato et al. 2019; Xiao, Hoffman, and Amato 2020) where options are proposed, and applied to dynamic programming problems and model-free robot-control areas, respectively. Since the asynchronized actions in Fever Basketball are still primitive actions and the decision-making still focuses on a low level of granularity, and proper methods of collecting transitions remain critical. In terms of experience collection, IL algorithms have advantages over JAL algorithms because their learning processes can be handled independently and do not rely on collecting other agents’ on-going actions. However, it will be a problem to find an appropriate time to collect the joint-action transitions for JAL.

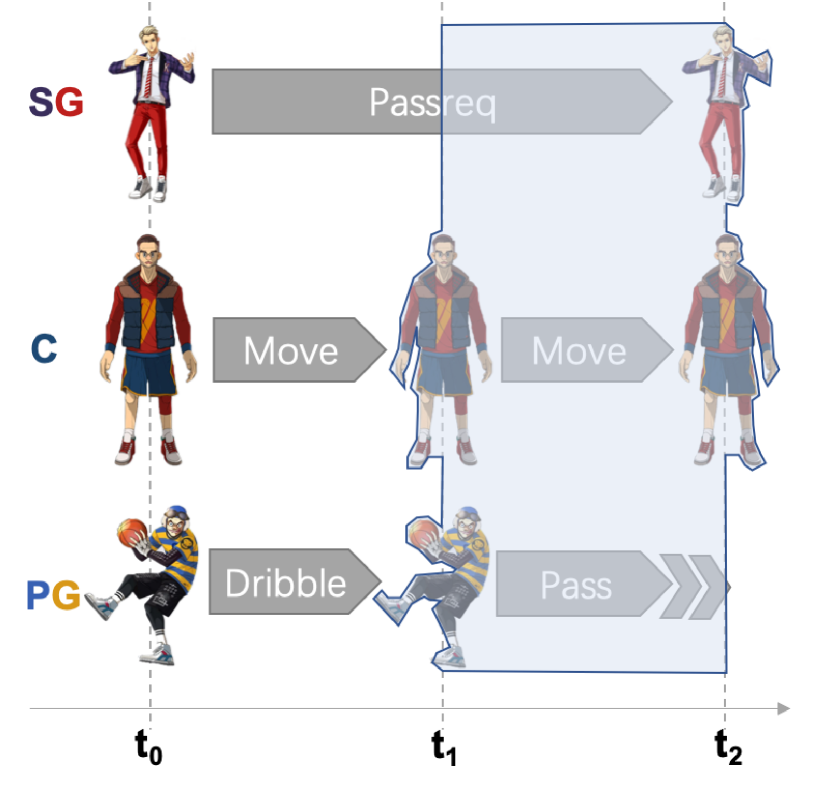

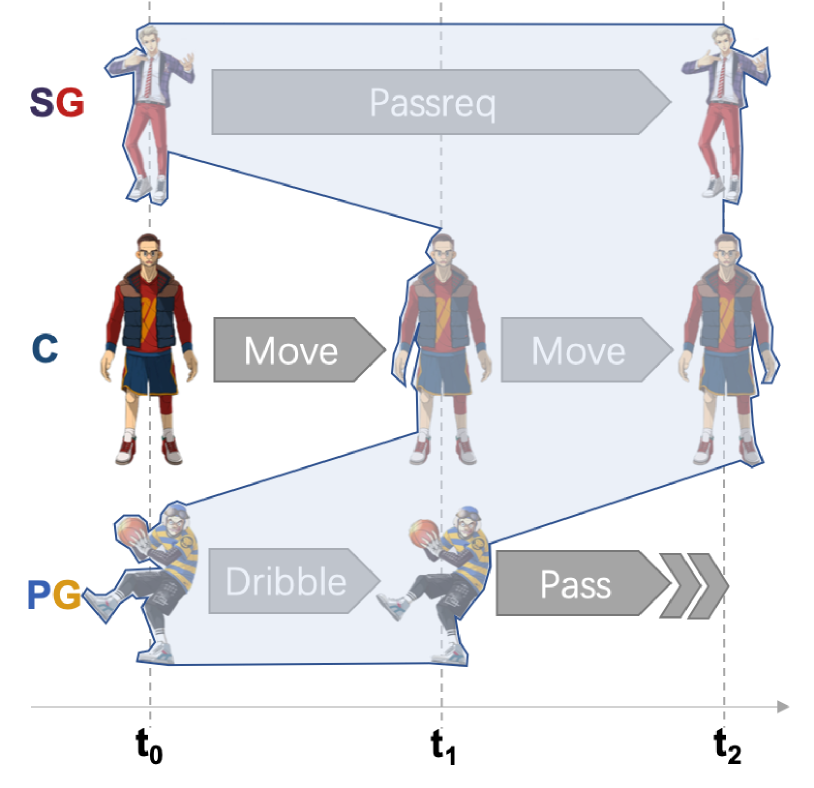

As illustrated in Figure 3, we propose two methods to collect the joint-action experience. The first method is experience-mask (EXP-Ms), which masks the on-going actions out and regards them as Idle when collecting joint transitions at a certain time-step. For example, if we denote , , , , as the global observation, local observation, action, global reward, and done information of player at time step , respectively. The global experience in the shaded area of Figure 2(a) would be:

The second method is experience splice (EXP-Sp), which means that we collect the joint transition when all the players have finished the recent on-going actions, and then splice the experience to form final transitions based on the global states observed at the time step when the agents start to execute actions. As illustrated by Figure 2(b), the joint transition experience in the shaded area would be:

By learning from the reconstructed experience, we expect these two heuristic methods can help the joint-action learners acquire the perception of the execution time of corresponding actions to facilitate better coordination.

Experimental results

In this section, we provide benchmark results for both IL algorithms IQL (Mnih et al. 2015), HYQ (Matignon, Laurent, and Le Fort-Piat 2007), EXCEL (Hu et al. 2019)) and JAL algorithms VDN (Sunehag et al. 2018), QMIX (Rashid et al. 2018) with the EXP-Ms and EXP-Sp methods. We evaluate these algorithms in both the Full Game setting and Divide and Conquer setting. In the Full Game setting, the learners need to handle all the sub-tasks (i.e. offense (attack & assist), defense, freeball, ballclear) through one model with unavailable actions masked out. In the Divide and Conquer setting, each of these sub-tasks is allocated with a corresponding learner, which decreases the difficulties of training. The technical details of the training architectures and hyperparameters can be found in Appendix.

The experimental results of the Fever Basketball Benchmarks, which are averaged over 10 game clients after trained for 100 rounds, are shown in Figure 4. It can be found that the Full Game setting is much more challenging than the Divide and Conquer setting, where all of the algorithms fail to defeat the built-in bots. Besides, the hard bots are more difficult to be defeated than the medium and easy ones. The performances of the independent learners generally outperform the joint-action learners even though we try to eliminate the action asynchronism within the team by using EXP-Ms and EXP-Sp methods. In addition, it seems the EXP-Ms method performs relatively better than the EXP-Sp method, which might result from the neglect of some agent’s short-time transitions when generating the global experience, such as the transition from to of player C in Figure 3(b). The results indicate that the asynchronism problem is not well solved and worth further studying.

The Integrated Curricula Training (ICT)

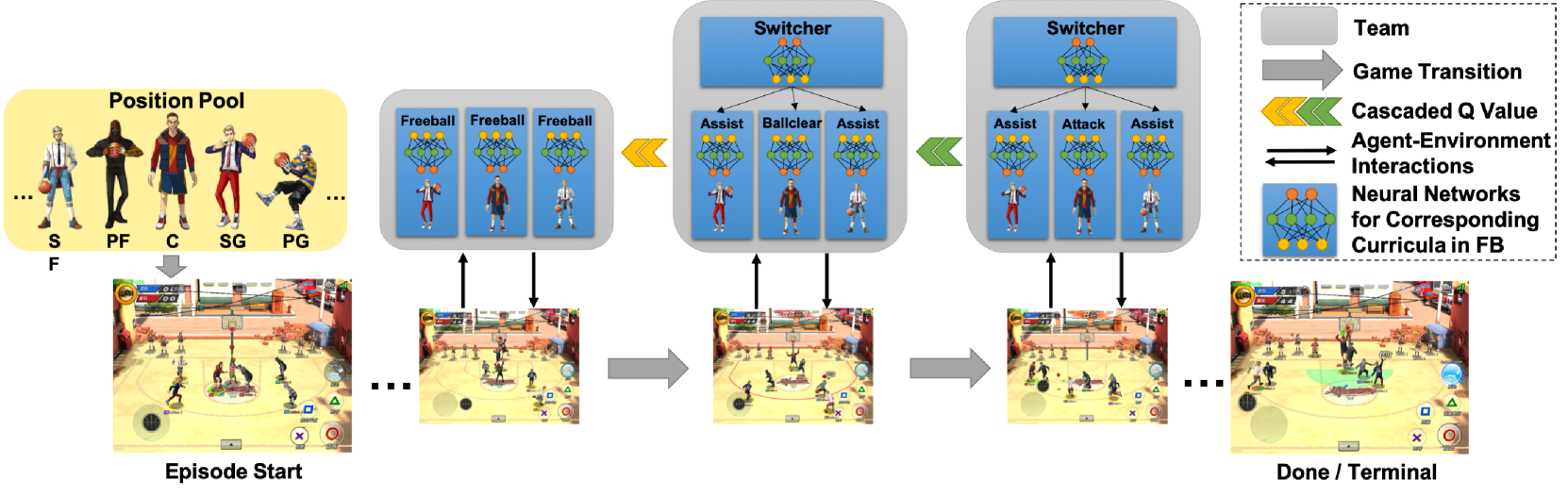

Although the complex basketball problem can be partially solved through existing MARL algorithms under the Divide and Conquer settings, the correlations between corresponding sub-tasks are neglected and there could be miscoordinations induced by the asynchronism. Besides, it also seems that the proposed Exp-Ms and Exp-Sp methods struggle to facilitate the learning of action execution time, and the asynchronism in Fever Basketball remains a critical problem, especially for joint-action learners. To make further progress, we take advantage of the independent learners and propose a curriculum learning based framework named ICT (Figure 5), which mainly includes a set of weighted cascading curricula learners and a coordination curricula switcher. These weighted cascading curricula learners are responsible for corresponding sub-tasks generated by basketball game rules. And the coordination curricula switcher, which has a relatively higher priority in making decisions, focuses on learning cooperative policy on primitive actions that will result in curriculum switch, such as the pass action that triggers switch between the attack and the assist curricula.

Methods

The weighted cascading curricula learners.

Curriculum learning is used to solve complex and difficult problems (Wu and Tian 2016; Wu, Zhang, and Song 2018). As mentioned before, Fever Basketball offers a set of base training scenarios according to game rules, namely attack, defense, freeball, ballclear and assist from the perspective of a single player. All of these five base curricula are the fundamental aspects of an integrated basketball match. And only by mastering these basic curricula can one be ready for generating appropriate policies throughout the entire basketball match. Thus we intend to firstly train a corresponding DRL agent () to learn each of these base curricula (), the interaction process of which can be formulated as a finite Markov Decision Process (MDP). During each episode, agent perceives the state of the corresponding base curriculum at each time step t, and outputs an action according to policy . A scalar reward is then yielded from the environment and the agent will transit to a new state with a probability distribution . The transition is stored in replay buffer . Agent ’s goal is to find an optimal policy to maximize the expected accumulative (discounted) rewards from each state s in corresponding curriculum , namely the value function which can be formulated as:

The update of the network parameters are carried out by randomly sampling mini-batches from corresponding replay buffer and performing a gradient descent step on . The Q value labels can be calculated as follows:

In this way, the complicated and challenging basketball problem is decomposed into several easier curricula which can be preliminarily solved by applying co-training of corresponding DRL agents similar to the divide-and-conquer strategy. Although these base curricula training has enabled the agents to acquire some primary skills towards corresponding sub-tasks, the whole basketball match remains a challenge. This is because a round of basketball match could include many inter-transitions between corresponding sub-tasks, and these five base curricula are actually highly correlated. For example, the attack and assist curricula are normally followed by freeball after the shot of the offense team, thus the policy used in former curricula will contribute to the outcomes of the latter curricula.

To deal with the correlations between corresponding base curricula, we propose the cascading curricula training approach. It is implemented by adding the max value of the following base curriculum to the reward that agent received from environment to form the new label when former base curriculum reaches a terminal. Meanwhile, we use a weight parameter to adjust the ratio of the cascading Q values heuristically during training. The adjustment procedure for is crucial for both the stabilization and performance of the whole training process. When equals to 0, the cascading curricula training becomes the base curricula training. When increases to 1 by following certain heuristic procedures during training, the correlations between corresponding base curricula will be gradually established through the backup of the learned Q values and contribute to the final integrated policy throughout the whole basketball match. The new Q value labels can be formulated as:

The coordination curricula switcher.

Although the weighted cascading curricula training can degrade the complex basketball problem into relatively easier base curricula and take their correlations into account, it is only from the perspective of a single player. However, as a typical team sport, coordination within the same team plays a crucial role in all basketball matches. Based on the cascading curricula training, we propose a high-level coordination curricula switcher to facilitate the training of coordination by focusing on learning cooperative actions that could induce curricula switching within the same team. For example, the pass action, which is the core primary action that transfers ball possession and creates basketball tactics in the offense team. By taking over such action, the coordination curricula switcher will focus on learning how to pass the ball to the right player in an appropriate time, which, in the meantime, will also result in the curriculum switch between attack / ballclear and assist within the same team. The coordination curricula switcher will have a relatively higher priority over those weighted cascading base curricula learners on action selection to ensure the performance of coordination when it is necessary. Meanwhile, by taking over coordination related actions from original action spaces, it also enables a reduction of original action spaces for attack, ballclear and assist scene, which helps the agents to learn policies more effectively in corresponding curricula. The pseudo-code of the ICT framework for Fever Basketball can be found in the Appendix (see Algorithm ).

Experimental results

In this subsection, an ablation study is firstly carried out to assess the effects of different parts in our ICT framework by playing with the hard built-in bots, which includes the one-model training, the base curricula training, the cascading curricula training, and ICT framework. The performance of the whole ICT framework is then further evaluated with online players in Fever Basketball. The APEX-Rainbow (Horgan et al. 2018; Hessel et al. 2018) algorithm is used for all learners and we put the detailed training setups and architectures in Appendix.

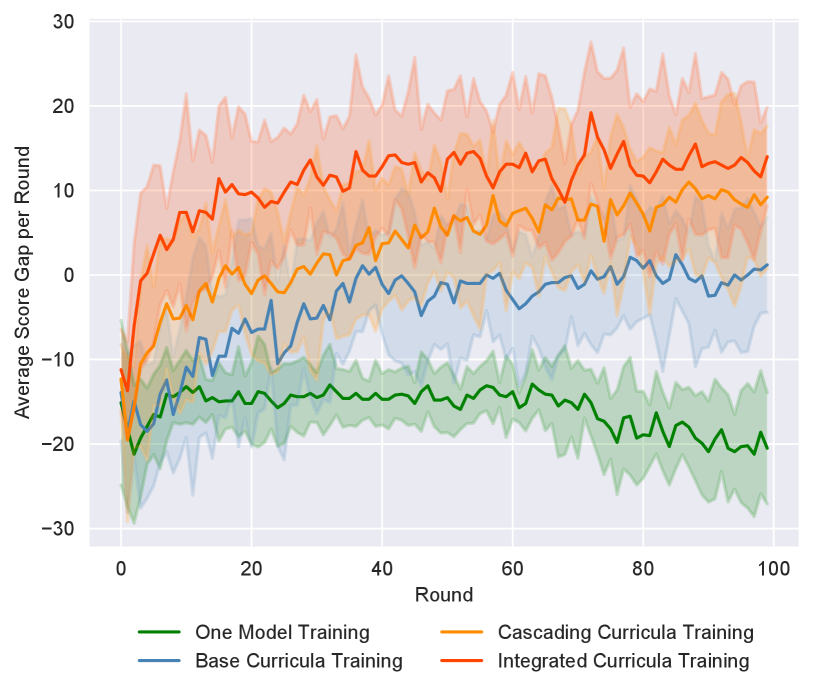

The results of the ablation experiments are demonstrated in Figure 6(a). The horizontal axis is the number of evaluated matches along with the training process (3 minutes per round). The vertical axis is the average score gap between proposed training approaches and the built-in hard bots in one match over 10 game clients. We can find that the one-model training approach performs the worst and the players trained by this approach struggle to master these five distinct sub-tasks together. The base curriculum training approach performs much better than the one-model training method since it can focus only on the corresponding sub-task and generate some fundamental policies towards basic game rules. Players trained in this way tend to play solo while lacking tactical movements since correlations between related sub-tasks are ignored. The weighted cascading curriculum training can make further improvements compared with the base curriculum training because the correlation between related sub-tasks is retained and the policy can be optimized over the whole task despite that the coordination within one team remains a weakness. The ICT framework significantly outperforms other training approaches since the coordination performance can be essentially improved by using the coordination curricula switcher.

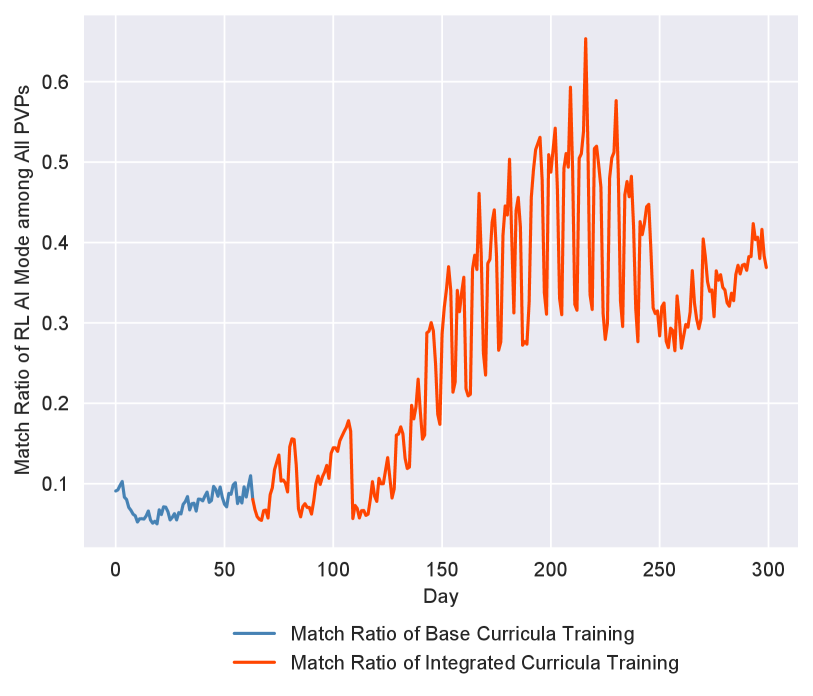

The results of a 300-day online evaluation with human players are illustrated in Figure 6(b and c). During this evaluation, we first test the model learned by the base curriculum learning and change the online model to the one trained through the ICT framework on day 63. As is shown in Figure 6(b), the win rate of the updated model (almost up to 70%, red broken lines) increases more than twice of the former model (around 30%, blue broken lines) at the beginning of each online evaluations with human players in 3v3 PVP (i.e. player vs player) matches. The team trained with our method can generate many professional coordination tactics like give-and-go, and it is more likely to create wide-open areas to score by passing smoothly. In addition, the match ratio that human players participated in playing against the challenging AI teams keeps increasing (see Figure 6 (c)) among all the PVP matches, which indicates that we bring extra revenues for the game.

Discussion and Conclusion

In this paper, we present the Fever Basketball Environment, a novel open-source reinforcement learning environment of the basketball game. It is a complex and challenging environment which supports both single-agent training and multi-agent training. Besides, the actions with different execution time in this environment make it a good platform for studying the challenging problem of asynchronized multi-agent decision-making. We implement and evaluate the state-of-the-art MARL algorithms (such as VDN, Qmix, and EXCEL) together with two heuristic methods (i.e. EXP-Ms and EXP-Sp) to alleviate the effect of asynchronism in both the Full Game setting and Divide and Conquer setting of Fever Basketball. The results show that the game is challenging and existing algorithms fail to solve the asynchronism problems. To shed light on this complex task, we take advantage of the curriculum learning and propose an integrated curricula training framework to solve this problem step by step. Though progress has been made, the win-rate against on-line human players is not high (up to 70%) and keeps decreasing as the evaluation process goes, which may result from our model’s lacking generation to unseen opponents, and meanwhile demonstrates the difficulties of mastering the basketball game. We expect the components involved in Fever Basketball such as the complexity, the flexible settings , and the asynchronism will be useful for investigating current scientific challenges like long-time horizon, spare rewards, credit assignment, non-stationarity.

Ethical Impact

Considering that the game platforms have substantially boosted the development of reinforcement learning (RL), the open-source of our Fever Basketball platform is expected to further enrich the types of existing virtual environments for RL communities. What’s more, the new challenges brought by our platform are also of great potential to incubate new algorithms, which is another aspect for contributing to the development of RL.

References

- Amato et al. (2019) Amato, C.; Konidaris, G.; Kaelbling, L. P.; and How, J. P. 2019. Modeling and planning with macro-actions in decentralized POMDPs. Journal of Artificial Intelligence Research 64: 817–859.

- Badia et al. (2020) Badia, A. P.; Piot, B.; Kapturowski, S.; Sprechmann, P.; Vitvitskyi, A.; Guo, D.; and Blundell, C. 2020. Agent57: Outperforming the atari human benchmark. arXiv preprint arXiv:2003.13350 .

- Beattie et al. (2016) Beattie, C.; Leibo, J. Z.; Teplyashin, D.; Ward, T.; Wainwright, M.; Küttler, H.; Lefrancq, A.; Green, S.; Valdés, V.; Sadik, A.; et al. 2016. Deepmind lab. arXiv preprint arXiv:1612.03801 .

- Bellemare et al. (2013) Bellemare, M. G.; Naddaf, Y.; Veness, J.; and Bowling, M. 2013. The arcade learning environment: An evaluation platform for general agents. Journal of Artificial Intelligence Research 47: 253–279.

- Claus and Boutilier (1998) Claus, C.; and Boutilier, C. 1998. The dynamics of reinforcement learning in cooperative multiagent systems. AAAI/IAAI 1998(746-752): 2.

- Cobbe et al. (2019) Cobbe, K.; Hesse, C.; Hilton, J.; and Schulman, J. 2019. Leveraging procedural generation to benchmark reinforcement learning. arXiv preprint arXiv:1912.01588 .

- Coumans and Bai (2016) Coumans, E.; and Bai, Y. 2016. Pybullet, a python module for physics simulation for games, robotics and machine learning .

- Foerster et al. (2017) Foerster, J.; Farquhar, G.; Afouras, T.; Nardelli, N.; and Whiteson, S. 2017. Counterfactual multi-agent policy gradients. arXiv preprint arXiv:1705.08926 .

- Haarnoja et al. (2018) Haarnoja, T.; Zhou, A.; Abbeel, P.; and Levine, S. 2018. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. arXiv preprint arXiv:1801.01290 .

- Heinrich and Silver (2016) Heinrich, J.; and Silver, D. 2016. Deep reinforcement learning from self-play in imperfect-information games. arXiv preprint arXiv:1603.01121 .

- Hernandez-Leal, Kartal, and Taylor (2019) Hernandez-Leal, P.; Kartal, B.; and Taylor, M. E. 2019. A survey and critique of multiagent deep reinforcement learning. Autonomous Agents and Multi-Agent Systems 33(6): 750–797.

- Hessel et al. (2018) Hessel, M.; Modayil, J.; Van Hasselt, H.; Schaul, T.; Ostrovski, G.; Dabney, W.; Horgan, D.; Piot, B.; Azar, M.; and Silver, D. 2018. Rainbow: Combining improvements in deep reinforcement learning. In Thirty-Second AAAI Conference on Artificial Intelligence.

- Horgan et al. (2018) Horgan, D.; Quan, J.; Budden, D.; Barth-Maron, G.; Hessel, M.; Van Hasselt, H.; and Silver, D. 2018. Distributed prioritized experience replay. arXiv preprint arXiv:1803.00933 .

- Hu et al. (2019) Hu, Y.; Chen, Y.; Fan, C.; and Hao, J. 2019. Explicitly Coordinated Policy Iteration. In IJCAI, 357–363.

- Jiang, Ekwedike, and Liu (2018) Jiang, D. R.; Ekwedike, E.; and Liu, H. 2018. Feedback-based tree search for reinforcement learning. arXiv preprint arXiv:1805.05935 .

- Juliani et al. (2018) Juliani, A.; Berges, V.-P.; Vckay, E.; Gao, Y.; Henry, H.; Mattar, M.; and Lange, D. 2018. Unity: A general platform for intelligent agents. arXiv preprint arXiv:1809.02627 .

- Juliani et al. (2019) Juliani, A.; Khalifa, A.; Berges, V.-P.; Harper, J.; Teng, E.; Henry, H.; Crespi, A.; Togelius, J.; and Lange, D. 2019. Obstacle tower: A generalization challenge in vision, control, and planning. arXiv preprint arXiv:1902.01378 .

- Kolve et al. (2017) Kolve, E.; Mottaghi, R.; Han, W.; VanderBilt, E.; Weihs, L.; Herrasti, A.; Gordon, D.; Zhu, Y.; Gupta, A.; and Farhadi, A. 2017. Ai2-thor: An interactive 3d environment for visual ai. arXiv preprint arXiv:1712.05474 .

- Kurach et al. (2019) Kurach, K.; Raichuk, A.; Stańczyk, P.; Zając, M.; Bachem, O.; Espeholt, L.; Riquelme, C.; Vincent, D.; Michalski, M.; Bousquet, O.; et al. 2019. Google research football: A novel reinforcement learning environment. arXiv preprint arXiv:1907.11180 .

- Lample and Chaplot (2017) Lample, G.; and Chaplot, D. S. 2017. Playing FPS games with deep reinforcement learning. In Thirty-First AAAI Conference on Artificial Intelligence.

- Liu et al. (2019) Liu, T.; Zheng, Z.; Li, H.; Bian, K.; and Song, L. 2019. Playing Card-Based RTS Games with Deep Reinforcement Learning. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI-19, 4540–4546. International Joint Conferences on Artificial Intelligence Organization. doi:10.24963/ijcai.2019/631. URL https://doi.org/10.24963/ijcai.2019/631.

- Matignon, Laurent, and Le Fort-Piat (2007) Matignon, L.; Laurent, G. J.; and Le Fort-Piat, N. 2007. Hysteretic q-learning: an algorithm for decentralized reinforcement learning in cooperative multi-agent teams. In 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, 64–69. IEEE.

- Mnih et al. (2013) Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; and Riedmiller, M. 2013. Playing atari with deep reinforcement learning. arXiv preprint arXiv:1312.5602 .

- Mnih et al. (2015) Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A. A.; Veness, J.; Bellemare, M. G.; Graves, A.; Riedmiller, M.; Fidjeland, A. K.; Ostrovski, G.; et al. 2015. Human-level control through deep reinforcement learning. Nature 518(7540): 529.

- Munemasa et al. (2018) Munemasa, I.; Tomomatsu, Y.; Hayashi, K.; and Takagi, T. 2018. Deep reinforcement learning for recommender systems. In 2018 International Conference on Information and Communications Technology (ICOIACT), 226–233. IEEE.

- Nguyen, Kumar, and Lau (2018) Nguyen, D. T.; Kumar, A.; and Lau, H. C. 2018. Credit assignment for collective multiagent RL with global rewards. In Advances in Neural Information Processing Systems, 8102–8113.

- Nichol et al. (2018) Nichol, A.; Pfau, V.; Hesse, C.; Klimov, O.; and Schulman, J. 2018. Gotta learn fast: A new benchmark for generalization in rl. arXiv preprint arXiv:1804.03720 .

- OpenAI (2018) OpenAI. 2018. OpenAI Five. https://blog.openai.com/openai-five/.

- Paine et al. (2019) Paine, T. L.; Gulcehre, C.; Shahriari, B.; Denil, M.; Hoffman, M.; Soyer, H.; Tanburn, R.; Kapturowski, S.; Rabinowitz, N.; Williams, D.; et al. 2019. Making Efficient Use of Demonstrations to Solve Hard Exploration Problems. arXiv preprint arXiv:1909.01387 .

- Pan et al. (2017) Pan, X.; You, Y.; Wang, Z.; and Lu, C. 2017. Virtual to real reinforcement learning for autonomous driving. arXiv preprint arXiv:1704.03952 .

- Papoudakis et al. (2019) Papoudakis, G.; Christianos, F.; Rahman, A.; and Albrecht, S. V. 2019. Dealing with non-stationarity in multi-agent deep reinforcement learning. arXiv preprint arXiv:1906.04737 .

- Rashid et al. (2018) Rashid, T.; Samvelyan, M.; De Witt, C. S.; Farquhar, G.; Foerster, J.; and Whiteson, S. 2018. QMIX: Monotonic value function factorisation for deep multi-agent reinforcement learning. arXiv preprint arXiv:1803.11485 .

- Savva et al. (2019) Savva, M.; Kadian, A.; Maksymets, O.; Zhao, Y.; Wijmans, E.; Jain, B.; Straub, J.; Liu, J.; Koltun, V.; Malik, J.; et al. 2019. Habitat: A platform for embodied ai research. In Proceedings of the IEEE International Conference on Computer Vision, 9339–9347.

- Schulman et al. (2017) Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; and Klimov, O. 2017. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347 .

- Shalev-Shwartz, Shammah, and Shashua (2016) Shalev-Shwartz, S.; Shammah, S.; and Shashua, A. 2016. Safe, multi-agent, reinforcement learning for autonomous driving. arXiv preprint arXiv:1610.03295 .

- Silver et al. (2016) Silver, D.; Huang, A.; Maddison, C. J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. 2016. Mastering the game of Go with deep neural networks and tree search. nature 529(7587): 484.

- Silver et al. (2017) Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. 2017. Mastering the game of go without human knowledge. Nature 550(7676): 354.

- Sunehag et al. (2018) Sunehag, P.; Lever, G.; Gruslys, A.; Czarnecki, W. M.; Zambaldi, V. F.; Jaderberg, M.; Lanctot, M.; Sonnerat, N.; Leibo, J. Z.; Tuyls, K.; et al. 2018. Value-Decomposition Networks For Cooperative Multi-Agent Learning Based On Team Reward. In AAMAS, 2085–2087.

- Sutton and Barto (2018) Sutton, R. S.; and Barto, A. G. 2018. Reinforcement learning: An introduction. MIT press.

- Tassa et al. (2018) Tassa, Y.; Doron, Y.; Muldal, A.; Erez, T.; Li, Y.; Casas, D. d. L.; Budden, D.; Abdolmaleki, A.; Merel, J.; Lefrancq, A.; et al. 2018. Deepmind control suite. arXiv preprint arXiv:1801.00690 .

- Vinyals et al. (2019) Vinyals, O.; Babuschkin, I.; Chung, J.; Mathieu, M.; Jaderberg, M.; Czarnecki, W. M.; Dudzik, A.; Huang, A.; Georgiev, P.; Powell, R.; et al. 2019. AlphaStar: Mastering the real-time strategy game StarCraft II. DeepMind Blog .

- Vinyals et al. (2017) Vinyals, O.; Ewalds, T.; Bartunov, S.; Georgiev, P.; Vezhnevets, A. S.; Yeo, M.; Makhzani, A.; Küttler, H.; Agapiou, J.; Schrittwieser, J.; et al. 2017. Starcraft ii: A new challenge for reinforcement learning. arXiv preprint arXiv:1708.04782 .

- Wu and Tian (2016) Wu, Y.; and Tian, Y. 2016. Training agent for first-person shooter game with actor-critic curriculum learning .

- Wu, Zhang, and Song (2018) Wu, Y.; Zhang, W.; and Song, K. 2018. Master-Slave Curriculum Design for Reinforcement Learning. In IJCAI, 1523–1529.

- Xiao et al. (2020) Xiao, T.; Jang, E.; Kalashnikov, D.; Levine, S.; Ibarz, J.; Hausman, K.; and Herzog, A. 2020. Thinking While Moving: Deep Reinforcement Learning with Concurrent Control. arXiv preprint arXiv:2004.06089 .

- Xiao, Hoffman, and Amato (2020) Xiao, Y.; Hoffman, J.; and Amato, C. 2020. Macro-Action-Based Deep Multi-Agent Reinforcement Learning. arXiv preprint arXiv:2004.08646 .

- Zhang, Yang, and Başar (2019) Zhang, K.; Yang, Z.; and Başar, T. 2019. Multi-agent reinforcement learning: A selective overview of theories and algorithms. arXiv preprint arXiv:1911.10635 .