language=Python, frame=tb, basicstyle=, upquote=true, numbers = none, escapechar=‘, float, moredelim=[il][]--latexlabel, otherkeywords=self, commentstyle=, keywordstyle=, emph=MyClass,__init__,Config,HighThroughputExecutor,SlurmProvider,LocalChannel, emphstyle=, showstringspaces=false language=Python, basicstyle=, upquote=true, numbers = none, numberstyle=, escapechar=‘, moredelim=[il][]--latexlabel, otherkeywords=self, commentstyle=, keywordstyle=, emph=MyClass,__init__,@python_app,@bash_app, emphstyle=, showstringspaces=false, frame=bt

uncX: A Federated Function Serving Fabric for Science

Abstract.

Exploding data volumes and velocities, new computational methods and platforms, and ubiquitous connectivity demand new approaches to computation in the sciences. These new approaches must enable computation to be mobile, so that, for example, it can occur near data, be triggered by events (e.g., arrival of new data), be offloaded to specialized accelerators, or run remotely where resources are available. They also require new design approaches in which monolithic applications can be decomposed into smaller components, that may in turn be executed separately and on the most suitable resources. To address these needs we present uncX—a distributed function as a service (FaaS) platform that enables flexible, scalable, and high performance remote function execution. uncX’s endpoint software can transform existing clouds, clusters, and supercomputers into function serving systems, while uncX’s cloud-hosted service provides transparent, secure, and reliable function execution across a federated ecosystem of endpoints. We motivate the need for uncX with several scientific case studies, present our prototype design and implementation, show optimizations that deliver throughput in excess of 1 million functions per second, and demonstrate, via experiments on two supercomputers, that uncX can scale to more than more than concurrent workers.

1. Introduction

The idea that one should be able to compute wherever makes the most sense—wherever a suitable computer is available, software is installed, or data are located, for example—is far from new: indeed, it predates the Internet (Fano, 1965; Parkhill, 1966), and motivated initiatives such as grid (Foster et al., 2001) and peer-to-peer computing (Milojicic et al., 2002). But in practice remote computing has long been complex and expensive, due to, for example, slow and unreliable network communications, security challenges, and heterogeneous computer architectures.

Now, however, with ubiquitous high-speed communications, universal trust fabrics, and containerization, computation can occur essentially anywhere. Commercial cloud services have embraced this new reality (Varghese et al., 2019), in particular via their function as a service (FaaS) (Baldini et al., 2017; Fox et al., 2017) offerings that make invoking remote functions trivial. Thus one simply writes client.invoke(FunctionName="F", Payload=D) to invoke a remote function F(D). The FaaS model allows monolithic applications to be transformed into ones that use event-based triggers to dispatch tasks to remote cloud providers.

There is growing awareness of the benefits of decomposing monolithic science applications into functions that can be more efficiently executed on remote computers (Foster and Gannon, 2017; Spillner et al., 2017; Malawski, 2016; Fox and Jha, 2017; Kiar et al., 2019). As we move towards this reality, it becomes easy for scientists to place computations wherever it makes the most sense, and to then move those computations between resources. For example, physicists at FermiLab report that a data analysis task that takes two seconds on a CPU can be dispatched to an FPGA device on the Amazon Web Services (AWS) cloud, where it takes 30 ms to execute, for a total of 50 ms once a round-trip latency of 20 ms to Virginia is included: a speedup of 40 (Duarte et al., 2018). Such examples can be found in many scientific domains; however, until now, there has been no universal and easy-to-use way to remotely execute and move functions between resources.

Unfortunately, existing FaaS systems are not designed to be deployed on heterogeneous research cyberinfrastructure (CI) nor are they designed to federate resources. Further, existing CI is not designed to support granular and sporadic function execution. For example, existing CI typically expose batch scheduling interfaces, use inflexible authentication and authorization models, and have unpredictable scheduling delays for provisioning resources, to name just a few challenges. We are therefore motivated to overcome these challenges by adapting the FaaS model to research CI with the aim to enable reliable computation of granular tasks (i.e., at the level of programming functions) at scale across a diverse range of existing CI, including clouds, clusters, and supercomputers.

To explore this approach we have developed a distributed, scalable, and high-performance function execution platform, uncX, that adapts the powerful and flexible FaaS model to support science workloads across federated research CI, a model that is not achievable with existing FaaS platforms. uncX is a cloud-hosted software as a service (SaaS) system that allows researchers to register Python functions and then invoke those functions on supplied inputs on remote CI. uncX manages the reliable and secure execution of functions on remote CI, provisioning resources, staging function code and inputs, managing safe and secure execution sandboxes using containers, monitoring execution, and returning outputs to users. Functions can execute on any compute resource where uncX’s endpoint software, a uncX agent, is installed and that a requesting user is authorized to access. uncX agents can turn any existing resource (e.g., laptop, cloud, cluster, supercomputer, or container orchestration cluster) into a FaaS endpoint.

The primary novelty of uncX lies in how it combines the extreme convenience of FaaS with support for the specialized and distributed research ecosystem. On-demand remote computing, of which FaaS is an implementation, has motivated initiatives such as grid (Foster et al., 2001), Condor (Thain et al., 2005), peer-to-peer computing (Milojicic et al., 2002), and Remote Procedure Call (RPC) implementations. The cloud-based FaaS that dominates in industry innovates by enabling on-demand function execution on cloud datacenters. Open source FaaS systems enable function execution on a single computer or container orchestration system (Baldini et al., 2016; Fn, [n. d.]; Kub, [n. d.]; Stubbs et al., 2017).

uncX is the first federated FaaS system that enables execution of functions across heterogeneous, distributed resources. Building on a hybrid cloud model that combines user-managed endpoints and a reliable cloud management service, it implements a multi-layered and reliable communication model to overcome the unreliability of distributed endpoints; supports heterogeneous resources with various cloud and batch scheduler interfaces, container technologies, and unpredictable provisioning delays; integrates with the research identity and data management ecosystem providing access via standard web authorization protocols; and includes performance optimizations focused on addressing the unique challenges associated with this federated FaaS model. uncX thus serves as a foundational research platform on which a range of new applications can be developed and research opportunities explored, from multi-level function scheduling and hybrid cloud-edge computing, to scalable data management and integration of accelerators.

The contributions of our work are as follows:

-

•

The distributed and federated uncX platform that can: be deployed on research CI, dynamically provision and manage resources, use various container technologies, and facilitate secure, scalable, and distributed function execution.

-

•

Design and evaluation of performance enhancements for function serving on research CI, including memoization, function warming, batching, and prefetching.

-

•

Experimental studies showing that uncX delivers execution latencies comparable to those of commercial FaaS platforms and scales to 1M+ functions across 130K active workers on supercomputers.

-

•

Discussion of our experiences applying uncX to real-world use cases and exploration of the advantages and disadvantages of FaaS in science.

The rest of this paper is as follows. §2 describes requirements of FaaS in science. §3 presents a conceptual model of uncX. §4 describes the uncX system architecture. §5 evaluates uncX performance. §6 reviews uncX’s use in scientific case studies. §7 discusses related work. Finally, §8 summarizes our contributions.

2. Requirements

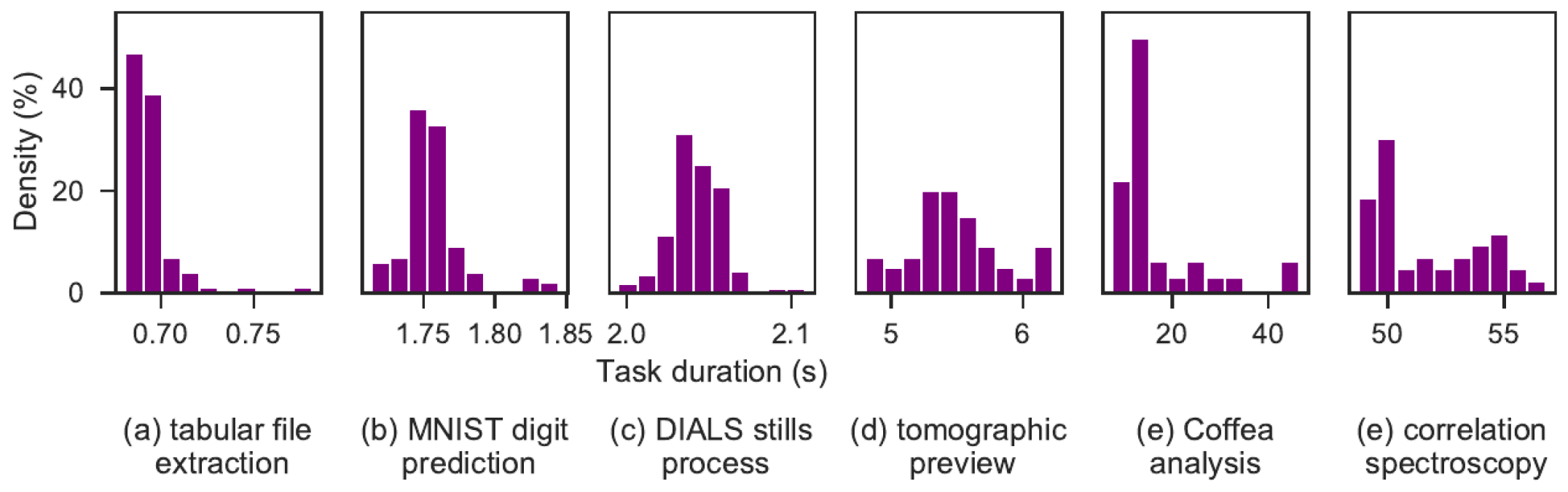

Our work is guided by the unique requirements of FaaS in science. To illustrate these requirements we present six representative case studies: scalable metadata extraction, machine learning inference as a service, synchrotron serial crystallography, neuroscience, correlation spectroscopy, and high energy physics. Figure 1 shows function execution time distributions for each case study. These short duration tasks exemplify opportunities for FaaS in science. We summarize by highlighting requirements for FaaS in science.

Metadata extraction: The effects of high-velocity data expansion are making it increasingly difficult to organize, discover, and manage data. Edge file systems and data repositories now store petabytes of data which are created and modified at an alarming rate (Paul et al., 2017). Xtract (Skluzacek et al., 2019) is a distributed metadata extraction system that applies a set of general and specialized metadata extractors, such as those for identifying topics in text, computing aggregate values from tables, and recognizing locations in maps. To reduce data transfer costs, Xtract executes extractors “near” to data, by pushing extraction tasks to the edge. Extractors are implemented as Python functions, with various dependencies, and each extractor typically executes for between 3 milliseconds and 15 seconds.

Machine learning inference: DLHub (Chard et al., 2019) is a service that supports ML model publication and on-demand inference. Users deposit ML models, implemented as functions with a set of dependencies, in the DLHub catalog by uploading the raw model (e.g., PyTorch, TensorFlow) and model state (e.g., training data, hyperparameters). DLHub uses this information to dynamically create a container for the model using repo2docker (Forde et al., 2018) that contains all model dependencies and necessary model state. Other users may then invoke the model through DLHub on arbitrary input data. DLHub currently includes more than one hundred published models, many of which have requirements for specific ML toolkits and execute more efficiently on GPUs and accelerators. Figure 1 shows the execution time when invoking the MNIST digit identification model. Other DLHub models execute for between seconds and several minutes.

Synchrotron Serial Crystallography (SSX) is an emerging method for imaging small crystal samples 1–2 orders of magnitude faster than other methods. To keep pace with the increased data production, SSX researchers require automated approaches that can process the resulting data with great rapidity: for example, to count the bright spots in an image (“stills processing”) within seconds, both for quality control and as a first step in structure determination. The DIALS (Waterman et al., 2013) crystallography processing tools are implemented as Python functions that execute for 1–2 seconds per sample. Analyzing large datasets requires HPC resources to derive crystal structures in a timely manner.

Quantitative Neurocartography and connectomics map the neurological connections in the brain—a compute- and data-intensive process that requires processing ~20GB every minute during experiments. Researchers apply automated workflows to perform quality control on raw images (to validate that the instrument and sample are correctly configured), apply ML models to detect image centers for subsequent reconstruction, and generate preview images to guide positioning. Each of these steps is implemented as a function that can be executed sequentially with some data exchange between steps. However, given the significant data sizes, researchers typically rely on HPC resources and are subject to scheduling delays.

Real-time data analysis in High Energy Physics (HEP): The traditional HEP analysis model uses successive processing steps to reduce the initial dataset (typically, 100s of PB) to a size that permits real-time analysis. This iterative approach requires significant computation time and storage of large intermediate datasets, and may take weeks or months to complete. Low-latency, query-based analysis strategies (Pivarski et al., 2017) are being developed to enable real-time analysis using native operations on hierarchically nested, columnar data. Such queries are well-suited to FaaS. To enable interactive analysis, for example as a physicist engages in real-time analysis of several billion particle collision events, successive compiled functions, each running for seconds, need to be dispatched to the data. Analysis needs require sporadic (and primarily remote) invocation, and compute needs increase as new data are collected.

X-ray Photon Correlation Spectroscopy (XPCS) is an experimental technique used to study the dynamics in materials at nanoscale by identifying correlations in time series of area detector images. This process involves analyzing the pixel-by-pixel correlations for different time intervals. The detector acquires megapixel frames at 60 Hz (~120 MB/sec). Computing correlations at these data rates is a challenge that requires HPC resources but also rapid response time. Image processing functions, such as XPCS-eigen’s corr function, execute for approximately 50 seconds, and images can be processed in parallel.

Requirements for FaaS in science: The case studies illuminate benefits of FaaS approaches (e.g., decomposition, abstraction, flexibility, scalability, reliability), but also elucidate requirements unmet by existing FaaS solutions:

-

•

Specialized compute: functions may require HPC-scale and/or specialized and heterogeneous resources (e.g., GPUs).

-

•

Distribution: functions may need to execute near to data and/or on a specialized computer.

-

•

Dependencies: functions often require specific libraries and user-specified dependencies.

-

•

Data: functions analyze both small and large data, stored in various locations and formats, and accessible via different methods (e.g., Globus (Chard et al., 2014)).

-

•

Authentication: institutional identities and specialized security models are used to access data and compute resources.

-

•

State: functions may be connected and share state (e.g., files or database connections) to decrease overheads.

-

•

Latency: functions may be used in online (e.g., experiment steering) and interactive environments (e.g., Jupyter notebooks) that require rapid response.

-

•

Research CI: resources offer batch scheduler interfaces (with long delays, periodic downtimes, proprietary interfaces) and specialized container technology (e.g., Singularity, Shifter) that make it challenging to provide common execution interfaces, elasticity, and fault tolerance.

-

•

Billing: research CI use allocation-based usage models.

3. Conceptual Model

We first describe the conceptual model behind uncX to provide context to the implementation architecture. uncX allows users to register and then execute functions on arbitrary endpoints. All user interactions with uncX are performed via a REST API implemented by a cloud-hosted uncX service.

Functions: uncX is designed to execute functions—snippets of Python code that perform some activity. A uncX function explicitly defines a Python function and input signature. The function body must specify all imported modules. Listing 1 shows the registration of a function for creating a tomographic preview image from raw tomographic data contained in an HDF5 input file. The function’s input specifies the file and parameters to identify and read a projection. It uses the Automo (De Carlo, [n. d.]) Python package to read the data, normalize the projection, and then save the preview image. The function returns the name of the saved preview image.

Function registration: A function must be registered with the uncX service before it can be executed. Registration is performed via a JSON POST request to the REST API. The request includes: a name and the serialized function body. Users may also specify users, or groups of users, who may invoke the function. Optionally, the user may specify a container image to be used. Containers allow users to construct environments with appropriate dependencies (system packages and Python libraries) required to execute the function. uncX assigns a universally unique identifier (UUID) for management and invocation. Users may update functions they own.

Endpoints: A uncX endpoint is a logical entity that represents a compute resource. The corresponding uncX agent allows the uncX service to dispatch functions to that resource for execution. The agent handles authentication and authorization, provisioning of nodes on the compute resource, and monitoring and management. Administrators or users can deploy a uncX agent and register an endpoint for themselves and/or others, providing descriptive (e.g., name, description) metadata. Each endpoint is assigned a unique identifier for subsequent use.

Function execution: Authorized users may invoke a registered function on a selected endpoint. To do so, they issue a request via the uncX service which identifies the function and endpoint to be used as well as inputs to be passed to the function. Functions are executed asynchronously: each invocation returns an identifier via which progress may be monitored and results retrieved. We refer to an invocation of a function as a “task.”

uncX service: Users interact with uncX via a cloud-hosted service which exposes a REST API for registering functions and endpoints, and for executing functions, monitoring their execution, and retrieving results. The service is paired with accessible endpoints via the endpoint registration process.

User interface: uncX provides a Python SDK that wraps the REST API. Listing 1 shows an example of how the SDK can be used to register and invoke a function on a specific endpoint. The example first constructs a client and registers the preview function. It then invokes the registered function using the run command and passes the unique function identifier, the endpoint id on which to execute the function, and inputs (in this case fname, start, end, and step). Finally, the example shows that the asynchronous results can be retrieved using get_result.

4. Architecture and Implementation

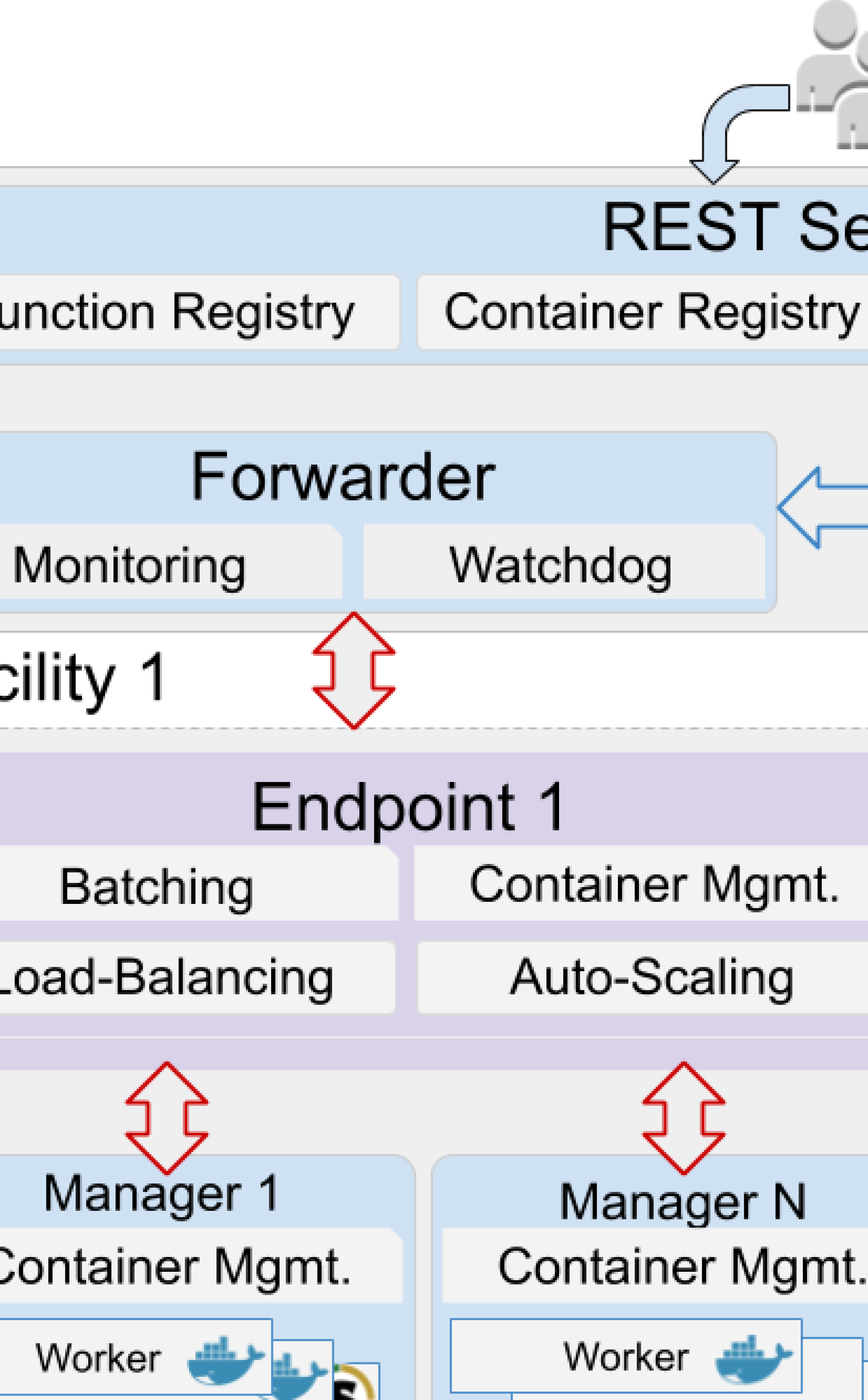

The uncX system combines a cloud-hosted management service with software agents deployed on remote resources: see Figure 2.

4.1. The uncX Service

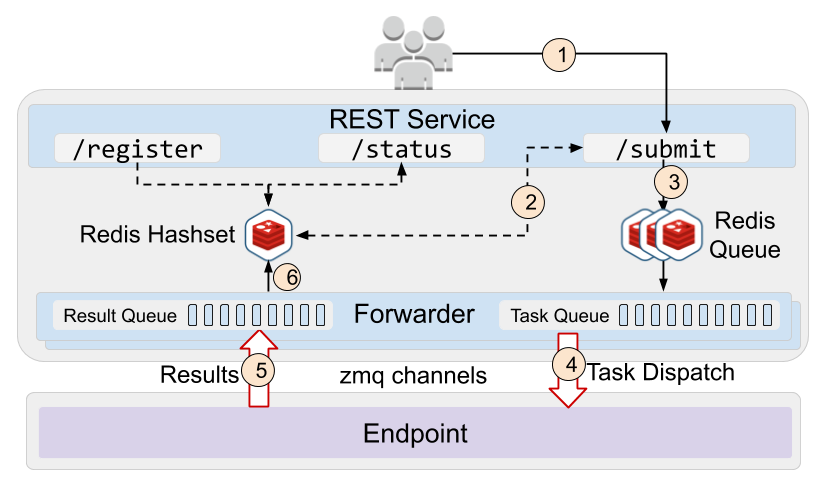

The uncX service maintains a registry of uncX endpoints, functions, and users in a persistent AWS Relational Database Service (RDS) database. To facilitate rapid function dispatch, uncX stores serialized function codes and tasks (including inputs and task metadata) in an AWS ElastiCache Redis hashset. The service also manages a Redis queue for each endpoint that stores task ids for tasks to be dispatched to that endpoint. The service provides a REST API to register and manage endpoints, register functions, execute and monitor functions, and retrieve the output from tasks. The uncX service is secured using Globus Auth (Tuecke et al., 2016) which allows users, programs and applications, and uncX endpoints to securely make API calls. When an endpoint registers with the uncX service a unique forwarder process is created for each endpoint. Endpoints establish ZeroMQ connections with their forwarder to receive tasks, return results, and perform heartbeats.

uncX implements a hierarchical task queuing architecture consisting of queues at the uncX service, endpoint, and worker. These queues support reliable fire-and-forget function execution that is resilient to failure and intermittent endpoint connectivity. At the first level, each registered endpoint is allocated a unique Redis task queue and result queue that reliably stores and tracks tasks.

Figure 3 shows the uncX task lifecycle. At function submission the uncX service routes the task to the specified endpoint’s task queue. The forwarder dispatches tasks to the agent only when an agent is connected. The forwarder uses heartbeats to detect if an agent is disconnected and then returns outstanding tasks back into the task queue. When the agent reconnects the tasks are forward to that agent. This architecture ensures that uncX agents receive tasks with at least once semantics. uncX agents internally queue tasks at both the manager and worker. These queues ensure that tasks are not lost once they have been delivered to the endpoint. Similarly, results are returned to the uncX service and stored in the endpoint’s result queue until they are retrieved by the user.

uncX relies on AWS hosted databases, caches, and Web serving infrastructure to reduce operational overhead, elastically scale resources, and provide high availability. While these services provide significant benefits to uncX, they have associated costs. To minimize these costs we apply several techniques, such as using small cloud instances with responsive scaling to minimize the steady state cost and restricting the size of input and output data passed through the uncX service to reduce storage costs (e.g., in Redis store). For larger data sizes we use out-of-band transfer mechanisms such as Globus (Chard et al., 2014). Further, we periodically purge results from the Redis store once they have been retrieved by the client.

4.2. Function Containers

uncX uses containers to package function code and dependencies that are to be deployed on a compute resource. Our review of container technologies, including Docker (Merkel, 2014), LXC (LXC, [n. d.]), Singularity (Kurtzer et al., 2017), Shifter (Jacobsen and Canon, 2015), and CharlieCloud (Priedhorsky and Randles, 2017), leads us to adopt Docker, Singularity, and Shifter in the first instance. Docker works well for local and cloud deployments, whereas Singularity and Shifter are designed for use in HPC environments and are supported at large-scale computing facilities (e.g., Singularity at ALCF and Shifter at NERSC). Singularity and Shifter implement similar models and thus it is easy to convert from a common representation (e.g., a Dockerfile) to both formats.

uncX requires that each container includes a base set of software, including Python 3 and uncX worker software. Other system libraries or Python modules needed for function execution must also be included. When registering a function, users may optionally specify a container to be used for execution; if no container is specified, uncX executes functions using the worker’s Python environment. In future work, we intend to make this process dynamic, using repo2docker (Forde et al., 2018) to build Docker images and convert them to site-specific container formats as needed.

4.3. The uncX Endpoint

The uncX endpoint represents a remote resource and delivers high-performance remote execution of functions in a secure, scalable, and reliable manner.

The endpoint architecture, depicted in the lower portion of Figure 2, is comprised of three components, which are discussed below:

-

•

uncX agent: persistent process that queues and forwards tasks and results, interacts with resource schedulers, and batches and load balances requests.

-

•

Manager: manages the resources for a single node by deploying and managing a set of workers.

-

•

Worker: executes tasks within a container.

The uncX agent is a software agent that is deployed by a user on a compute resource (e.g., an HPC login node, cloud instance, or a laptop). It registers with the uncX service and acts as a conduit for routing tasks and results between the service and workers. The uncX agent manages resources on its system by working with the local scheduler or cloud API to deploy managers on compute nodes. The uncX agent uses a pilot job model (Turilli et al., 2018) to provision and communicate with resources in a uniform manner, irrespective of the resource type (cloud or cluster) or local resource manager (e.g., Slurm, PBS, Cobalt). As each manager is launched on a compute node, it connects to and registers with the uncX agent. The uncX agent then uses ZeroMQ sockets to communicate with its managers. To minimize blocking, all communication is performed by threads using asynchronous communication patterns. The uncX agent uses a randomized scheduling algorithm to allocate tasks to suitable managers with available capacity. The uncX agent can be configured to provide access to specialized hardware or accelerators. When deploying the agent users can specify how worker containers should be launched, enabling them to mount specialized hardware and execute functions on that hardware. In future work we will extend the agent configuration to specify custom hardware and software capabilities and report this information to the uncX agent and service for scheduling.

To provide fault tolerance and robustness, for example with respect to node failures, the uncX agent relies on periodic heartbeat messages and a watchdog process to detect lost managers. The uncX agent tracks tasks that have been distributed to managers so that when failures do occur, lost tasks can be re-executed (if permitted). uncX agents communicate with the uncX service’s forwarder via a ZeroMQ channel. Loss of a uncX agent is detected by the forwarder and when the uncX agent recovers, it repeats the registration process to acquire a new forwarder and continue receiving tasks. To reduce overheads, the uncX agent can shut down managers to release resources when they are not needed; suspend managers to prevent further tasks being scheduled to them; and monitor resource capacity to aid scaling decisions.

Managers represent, and communicate on behalf of, the collective capacity of the workers on a single node, thereby limiting the number of sockets used to just two per node. Managers determine the available CPU and memory resources on a node, and partition the node among the workers. Once all workers connect to the manager it registers with the endpoint. Managers advertise deployed container types and available capacity to the endpoint.

Workers persist within containers and each executes one task at a time. Since workers have a single responsibility, they use blocking communication to wait for functions from the manager. Once a task is received it is deserialized, executed, and the serialized results are returned via the manager.

4.4. Managing Compute Infrastructure

uncX is designed to support a range of computational resources, from embedded computers to clusters, clouds, and supercomputers, each with distinct access modes. As uncX workloads are often sporadic, resources must be provisioned as needed to reduce costs due to idle resources. uncX uses Parsl’s provider interface (Babuji et al., 2019) to interact with various resources, specify resource-specific requirements (e.g., allocations, queues, limits, cloud instance types), and define rules for automatic scaling (i.e., limits and scaling aggressiveness). This interface allows uncX to be deployed on batch schedulers such as Slurm, Torque, Cobalt, SGE, and Condor; the major cloud vendors such as AWS, Azure, and Google Cloud; and Kubernetes.

4.5. Container Management

uncX agents are able to execute functions on workers deployed in specific containers. Thus, managers must dynamically deploy, manage, and scale containers based on function requirements. Each manager advertises its deployed container types to the uncX agent. The uncX agent implements a greedy, randomized scheduling algorithm to route tasks to managers and an on-demand container deployment algorithm on the manager. When routing functions to a manager, the agent attempts to send tasks to managers with suitable deployed containers. If there is availability on several managers, the agent allocates pending tasks in a randomized manner. Upon receiving the task, the manager either deploys a new worker in a suitable container or sends the task to an existing worker deployed in a suitable container. Both the function routing and container deployment components are implemented with modular interfaces via which users can integrate their own algorithms.

When an endpoint is deployed on Kubernetes, both the manager and the worker are deployed within a pod and thus the manager cannot change worker containers. In this case, a set of managers are deployed with specific container images and the agent simply routes tasks to suitable managers.

4.6. Serialization and Data Management

uncX supports registration of arbitrary Python functions and the passing of data (e.g., primitive types and complex objects) to/from functions. uncX uses a Facade interface that leverages several serialization libraries, including cpickle, dill, tblib, and JSON. The uncX serializer sorts the serialization libraries by speed and applies them in order successively until the object is serialized. This approach exploits the strengths of various libraries, including support for complex objects (e.g., machine learning models) and traceback objects in a fast and transparent fashion. Once objects are serialized, they are packed into buffers with headers that include routing tags and the serialization method, such that only the buffers need be unpacked and deserialized at the destination.

While the serializer can act on arbitrary Python objects and input/output data, for performance and cost reasons we limit the size of data that can be passed through the uncX service. Instead, we rely on out-of-band data transfer mechanisms, such as Globus, when passing large datasets to/from uncX functions. Data can be staged prior to the invocation of a function (or after the completion of a function) and a reference to the data’s location can be passed to/from the function as input/output arguments. Many early users use this method to move large files to/from functions (see §6).

4.7. Optimizations

We apply several optimizations to enable high-performance function serving in a wide range of research environments. We briefly describe four optimization methods employed in uncX.

Container warming is used by FaaS platforms to improve performance (Wang et al., 2018). Function containers are kept warm by leaving them running for a short period of time (5-10 minutes) following the execution of a function. Warm containers remove the need to instantiate a new container to execute a function, significantly reducing latency. This need is especially evident in HPC environments for several reasons: first, loading many concurrent Python environments and containers puts a strain on large, shared file systems; second, many HPC centers have their own methods for instantiating containers that may place limitations on the number of concurrent requests; and third, individual cores are often slower in many core architectures like Xeon Phis. As a result the start time for containers can be much larger than what would be seen locally.

Batching requests enables uncX to amortize costs across many function requests. uncX implements two batching models: internal batching to enable managers to request many tasks on behalf of their workers, minimizing network communication costs; and, a programmatic map command that enables user-driven batching of function inputs, allowing users to tradeoff efficient execution and increased per-function latency by creating fewer, larger requests. The map command can be expressed via the SDK as:

f = fmap(func_id, iterator, ep_id, batch_size, batch_count),

where iterator can support any Python object that implements Python’s iterator interface, batch_size is the number of tasks included in each batch, and batch_count is the total number of batches. (Note: batch_count takes precedence over batch_size). The map function partitions the computation’s iterator into memory-efficient batches of tasks. It exploits two key features of Python iterators: 1) iterators are evaluated in a lazy fashion and use minimal memory before being called; and 2) islice operators can partition iterators without evaluating them. Both batching techniques can increase overall throughput.

Advertising with opportunistic prefetching is a technique in which managers continuously advertise the anticipated capacity in the near future. uncX managers asynchronously advertise and receive tasks, thus interleaving network communication with computation. This can improve performance for high-throughput, short-duration, workloads.

Memoization involves returning a cached result when the input document and function body have been processed previously. uncX supports memoization by hashing the function body and input document and storing a mapping from hash to computed results. Memoization is only used if explicitly set by the user.

4.8. Security Model

We implement a comprehensive security model to ensure that functions are executed by authenticated and authorized users and that one function cannot interfere with another. We rely on two security-focused technologies: Globus Auth (Tuecke et al., 2016) and containers.

uncX uses Globus Auth for authentication, authorization, and protection of all APIs. The uncX service is registered as a resource server, allowing users to authenticate using a supported Globus Auth identity (e.g., institution, Google, ORCID) and enabling various OAuth-based authentication flows (e.g., native client) for different scenarios. uncX has associated Globus Auth scopes (e.g., “urn:globus:auth:scope:funcx:register_function”) via which other clients (e.g., applications and services) may obtain authorizations for programmatic access. uncX endpoints are themselves Globus Auth native clients, each dependent on the uncX scopes, which are used to securely connect to the uncX service. Endpoints require the administrator to authenticate prior to registration in order to acquire access tokens used for constructing API requests. The connection between the uncX service and endpoints is established using ZeroMQ. Communication addresses are communicated as part of the registration process. Inbound traffic from endpoints to the cloud-hosted service is limited to known IP addresses.

uncX function execution can be isolated in containers to ensure they cannot access data or devices outside their context. To enable fine grained tracking of execution, we store execution request histories in the uncX service and in logs on uncX endpoints.

5. Evaluation

We evaluate the performance of uncX in terms of latency, scalability, throughput, and fault tolerance. We also explore the effects of batching, memoization, and prefetching.

5.1. Latency

We first compare uncX with commercial FaaS platforms by measuring the time required for single function invocations. We have created and deployed the same Python function on Amazon Lambda, Google Cloud Functions, Microsoft Azure Functions, and uncX. To minimize unnecessary overhead we use the same payload when invoking each function: the string “hello-world.” Each function simply returns the string.

Although each provider operates its own data centers, we attempt to standardize network latencies by placing functions in an available US East region (between South Carolina and Virginia). We deploy the uncX service and endpoint on separate AWS EC2 instances in the US East region. We then measure latency as the round-trip time to submit, execute, and return a result from the function. We submit all requests from the login node of Argonne National Laboratory’s (ANL) Cooley cluster, in Lemont, IL (18.2 ms latency to the uncX service).

We compare the cold and warm start times for each FaaS service. The cold start time aims to capture the scenario where a function is first executed and the function code and execution environment must be configured. To ensure cold start for uncX functions, we restart the endpoint and measure the time taken to launch the first function. For the other services, we invoke functions every 15 minutes (providers report maximum cache times of 10 minutes, 5 minutes, and 5 minutes, for Google, Amazon, and Azure, respectively) in order to ensure that each function starts cold. We execute the cold start functions times, and the warmed functions times. Table 1 shows the total time for warm and cold functions as well as the computed overhead and function execution time. For the closed-source, commercial FaaS systems we obtain function execution time from execution logs and compute overhead as any additional time spent invoking the function.

| Overhead | Function | Total | Std. Dev. | ||

|---|---|---|---|---|---|

| Azure | warm | 118.0 | 12.0 | 130.0 | 14.4 |

| cold | 1,327.7 | 32.0 | 1,359.7 | 1,233.1 | |

| warm | 80.6 | 5.0 | 85.6 | 12.3 | |

| cold | 203.8 | 19.0 | 222.8 | 141.8 | |

| Amazon | warm | 100.0 | 0.3 | 100.3 | 6.9 |

| cold | 468.2 | 0.6 | 468.8 | 70.8 | |

| uncX | warm | 109.1 | 2.2 | 111.3 | 11.2 |

| cold | 1,491.1 | 6.1 | 1,497.2 | 10.2 | |

Amazon Lambda, Google Functions, and Azure Functions exhibit warmed round trip times of 100ms, 86ms, and 130ms, respectively. uncX offers comparable performance, with 111ms round trip time.

Amazon Lambda, Google Functions, Azure Functions, and uncX exhibit cold round trip times of 469ms, 223ms, ms, and ms, respectively. In the case of uncX, the overhead is primarily due to the startup time of the container (see Table 2). Google and Amazon exhibit significantly better cold start performance than uncX, perhaps as a result of the simplicity of our function (which requires only standard Python libraries and therefore could be served on a standard container) or perhaps due to the low overhead of proprietary container technologies (Wang et al., 2018).

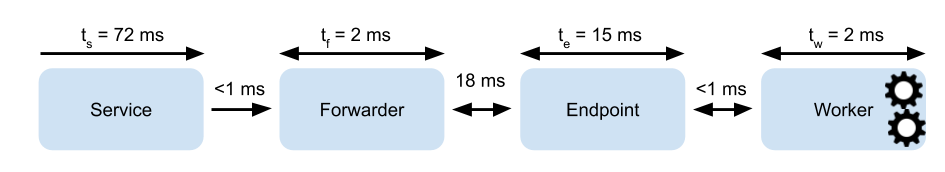

We further explore latency for uncX by instrumenting the system. The results in Figure 4 for a warm container report times as follows: : Web service latency to authenticate, store the task in Redis, and append the task to an endpoint’s queue; : forwarder latency to read task from Redis store, forward the task to an endpoint, and write the result to the Redis store; : endpoint latency to receive tasks and send results to the Forwarder, and to send tasks and receive results from the worker; and : function execution time. We observe that is fast relative to the overall system latency. The network latency between and only includes minimal communication time due to internal AWS networks (measured at ¡1ms). Most uncX overhead is captured in as a result of authentication, and in due to internal queuing and dispatching.

5.2. Scalability and Throughput

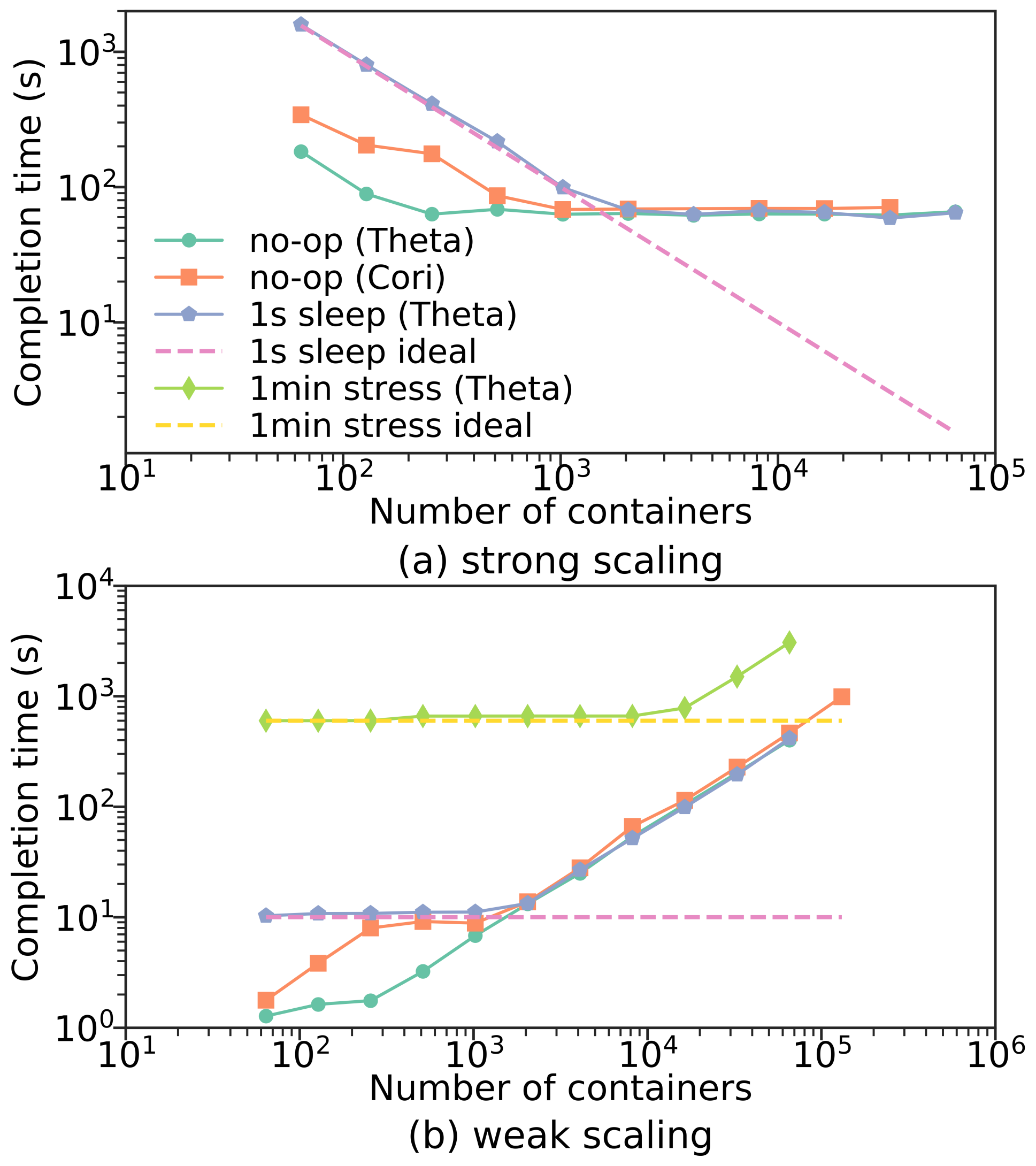

We study the strong and weak scaling of the uncX agent on ANL’s Theta and NERSC’s Cori supercomputers. Theta is a 11.69-petaflop system based on the second-generation Intel Xeon Phi “Knights Landing” (KNL) processor. Its nodes each have a 64-core processor with 16 GB MCDRAM, 192 GB of DDR4 RAM, and are interconnected with high speed InfiniBand. Cori is a 30-petaflop system with an Intel Xeon “Haswell” partition and an Intel Xeon Phi KNL partition. We ran our tests on the KNL partition, which has nodes, each with a 68-core processor (with 272 hardware threads) with six 16GB DIMMs, 96 GB DDR4 RAM, and interconnected with Dragonfly topology. We perform experiments using 64 Singularity containers on each Theta node and 256 Shifter containers on each Cori node. Due to a limited allocation on Cori we use the four hardware threads per core to deploy more containers than cores.

Strong scaling evaluates performance when the total number of function invocations is fixed; weak scaling evaluates performance when the average number of functions executed on each container is fixed. To measure scalability we created functions of various durations: a 0-second “no-op” function that exits immediately, a 1-second “sleep” function, and a 1-minute CPU “stress” function that keeps a CPU core at 100% utilization. For each case, we measured completion time of a batch of functions as we increased the total number of containers. Notice that the completion time of running “no-op” functions on workers indicates the overhead of uncX to distribute the functions to containers. Due to limited allocation we did not execute sleep or stress functions on Cori, nor did we execute stress functions for strong scaling on Theta.

5.2.1. Strong scaling

Figure 5(a) shows the completion time of concurrent function requests with an increasing number of containers. On both Theta and Cori the completion time decreases as the number of containers increases, until we reach 256 containers for “no-op” and 2048 containers for “sleep” on Theta. As reported by Wang et al. (Wang et al., 2018) and Microsoft (azu, [n. d.]b), Amazon Lambda achieves good scalability for a single function to more than 200 containers, Microsoft Azure Functions can scale up to 200 containers, and Google Cloud Functions does not scale well beyond 100 containers. While these results may not indicate the maximum number of containers that can be used for a single function, and likely include per-user limits imposed by the platform, our results show that uncX scales similarly to commercial platforms.

5.2.2. Weak scaling

To conduct the weak scaling tests we performed concurrent function requests such that each container receives, on average, 10 requests. Figure 5(b) shows weak scaling for “no-op,” “sleep,” and “stress.” For “no-op,” the completion time increases with more containers on both Theta and Cori. This reflects the time required to distribute requests to all of the containers. On Cori, uncX scales to concurrent containers and executes more than 1.3 million “no-op” functions. Again, we see that the completion time for “sleep” remains close to constant up to 2048 containers, and the completion time for “stress” remains close to constant up to containers. Thus, we expect a function with several minute duration would scale well to many more containers.

5.2.3. Throughput

We observe a maximum throughput for a uncX agent (computed as number of function requests divided by completion time) of and requests per second on Theta and Cori, respectively.

5.2.4. Summary

Our results show that uncX agents i) scale to + containers for a single function; ii) exhibit good scaling performance up to approximately containers for a 1-second function and containers for a 1-minute function; and iii) provide similar scalability and throughput using both Singularity and Shifter containers on Theta and Cori. It is important to note that these experiments study the uncX agent, and not the end-to-end throughput of uncX. While the uncX Web service can elastically scale to meet demand, the communication overhead may limit throughput. To address this challenge and amortize communication overheads we enable batch submission of tasks. These optimizations are discussed in §5.5

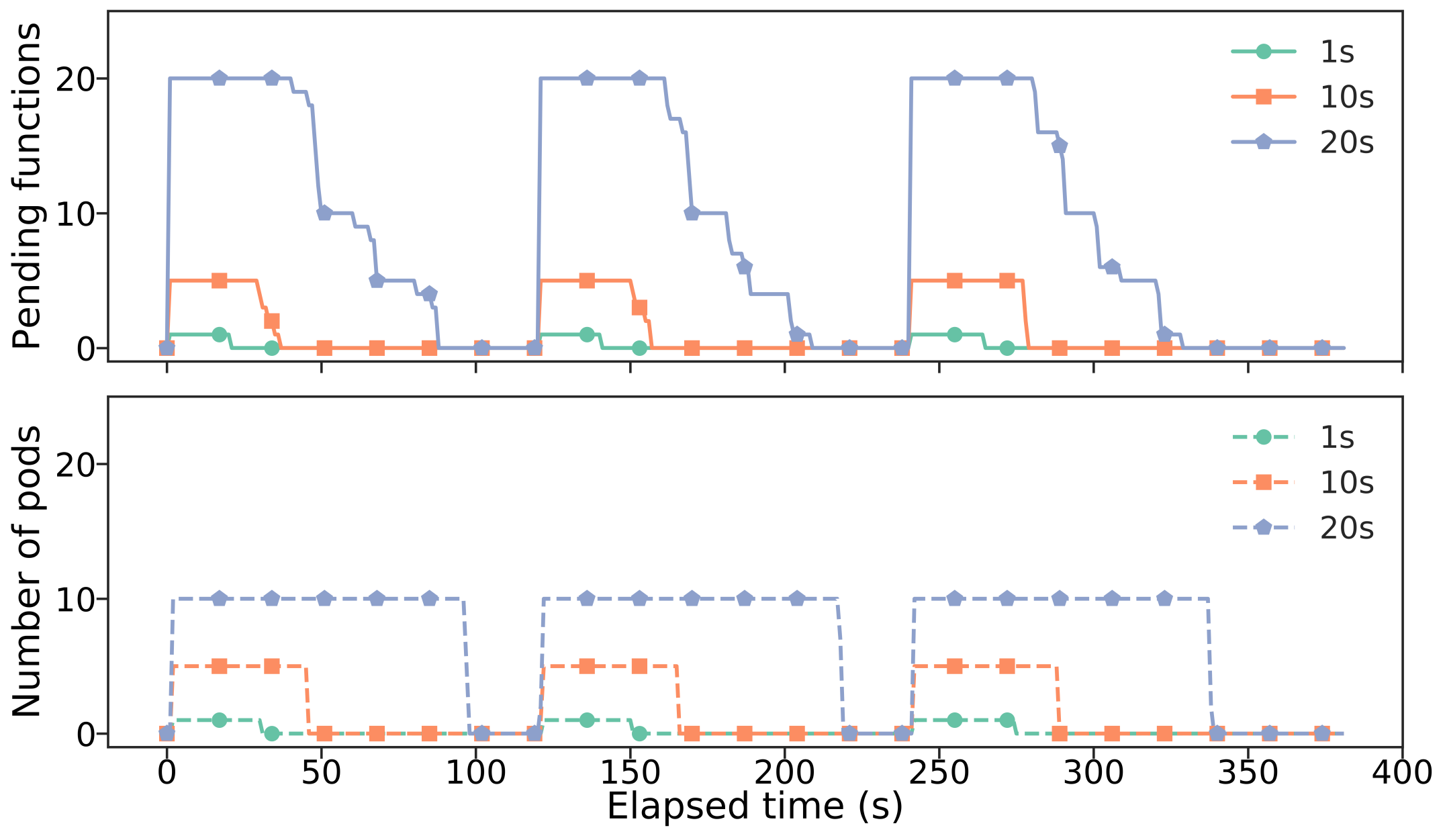

5.3. Elasticity

uncX endpoints dynamically scale and provision compute resources in response to function load. To demonstrate this feature, we deployed a uncX endpoint on a Kubernetes cluster, and used uncX to scale the number of active pods. We deployed three sleep functions (running for 1s, 10s, and 20s), each in its own container. We limit each function to use between 0 to 10 pods. Every 120 seconds, we submitted one 1s, five 10s, and twenty 20s functions to the endpoint. Figure 6 illustrates the concurrent functions submitted to the endpoint (solid lines) and the number of active pods as time elapsed (dashed lines). We see that upon task arrivals at time 0, 120, and 240, the number of active pods is increased to accommodate the load. For example, at time 0, uncX provisioned one, five, and ten (ten is the maximum) pods to process one 1s, five 10s, and twenty 20s functions, respectively. When the functions completed, uncX terminated unused pods.

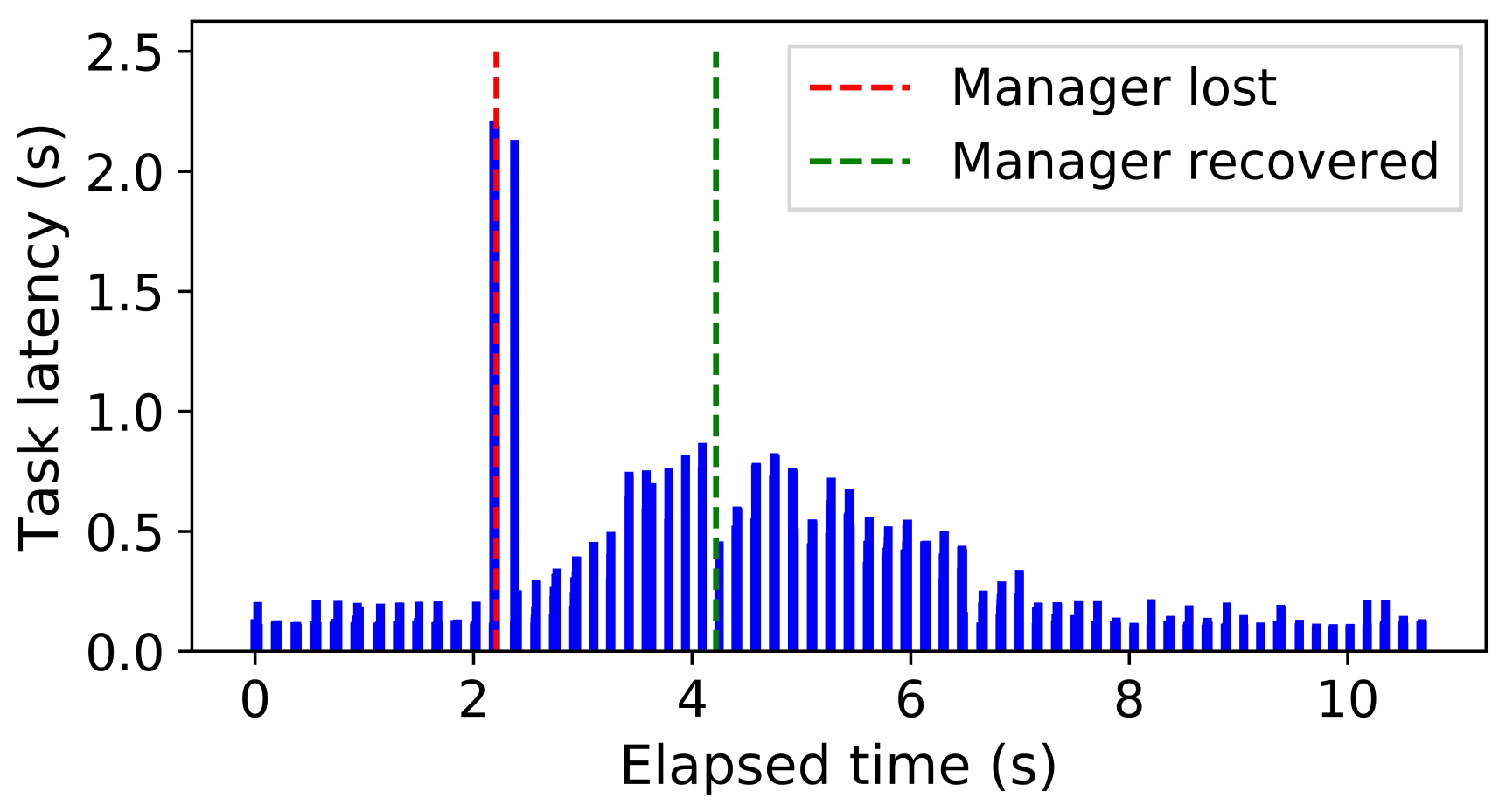

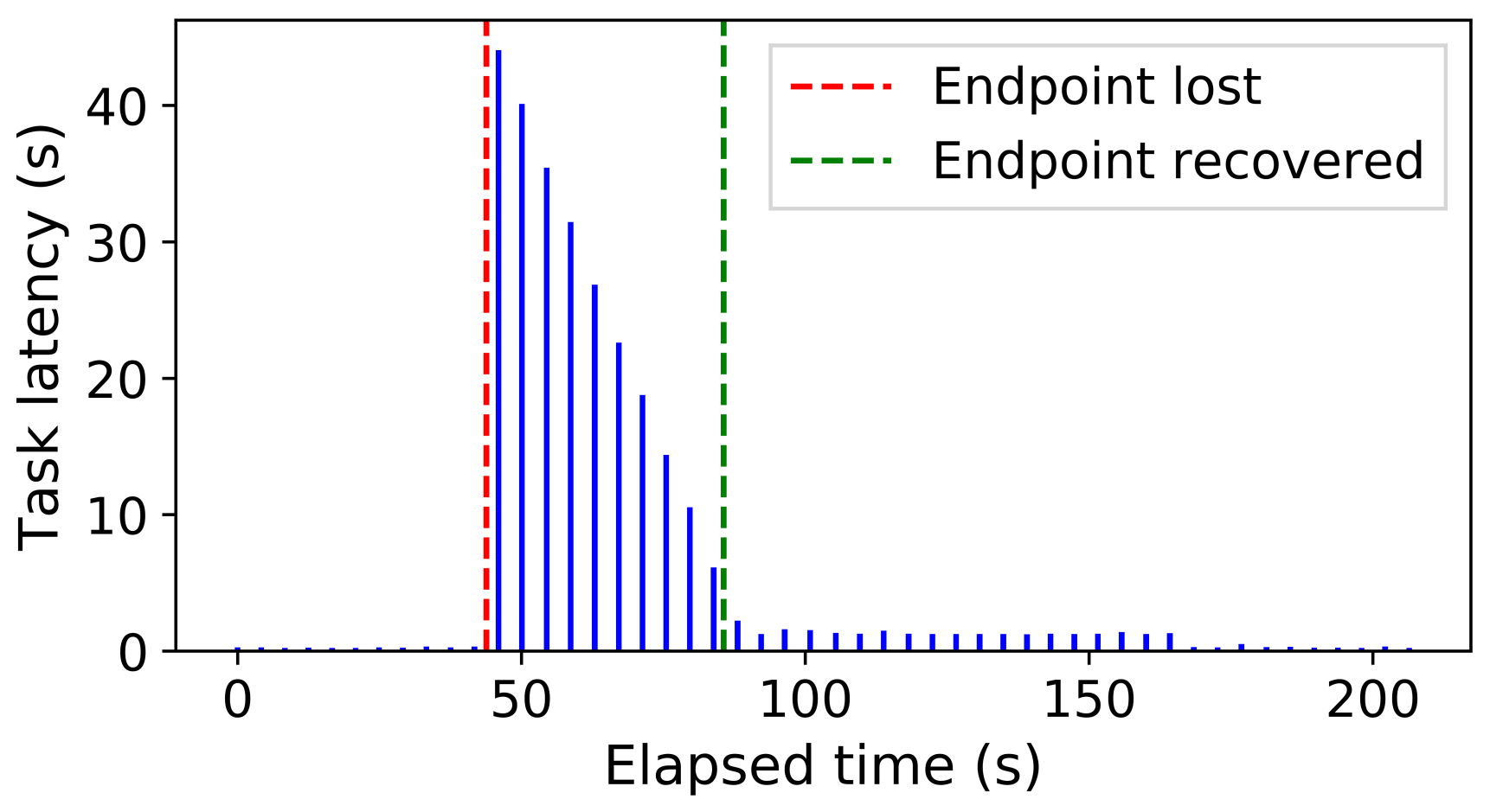

5.4. Fault Tolerance

uncX uses heartbeat messages to detect and respond to component failures. We evaluate this feature by forcing endpoint and manager failures while processing a workload of 100ms sleep functions launched at a uniform rate.

The first experiment uses two managers processing a stream of tasks launched at uniform intervals, ensuring that the system is kept at capacity. One manager is terminated after 2 seconds and restarted after 4 seconds. Figure 7 illustrates the task latencies measured as the experiment progresses. It shows that task latency increases immediately following the failure, as tasks are queued, and then quickly reduce after the manager recovers.

To explore the impact of an endpoint failing (or going offline), we launch a stream of tasks at a uniform rate, and trigger the failure and recovery of the endpoint after 43s and 85s, respectively. Figure 8 illustrates the task latencies measured as the experiment progresses. We see that task latency increases immediately following the failure and returns to previous levels after recovery.

5.5. Optimizations

In this section we evaluate the effect of our optimization mechanisms. In particular, we investigate how container initialization, batching, prefetching, and memoization impact performance.

5.5.1. Container instantiation

To understand the time to instantiate various container technologies on different execution resources we measure the time it takes to start a container and execute a Python command that imports uncX’s worker modules—the baseline steps that would be taken by every cold uncX function. We deploy the containers on an AWS EC2 m5.large instance and on compute nodes on Theta and Cori following best practices laid out in facility documentation. Table 2 shows the results. We speculate that the significant performance deterioration of container instantiation on HPC systems can be attributed to a combination of slower clock speed on KNL nodes and shared file system contention when fetching images. These results highlight the need to apply function warming approaches to reduce overheads.

| System | Container | Min (s) | Max (s) | Mean (s) |

|---|---|---|---|---|

| Theta | Singularity | 9.83 | 14.06 | 10.40 |

| Cori | Shifter | 7.25 | 31.26 | 8.49 |

| EC2 | Docker | 1.74 | 1.88 | 1.79 |

| EC2 | Singularity | 1.19 | 1.26 | 1.22 |

5.5.2. Executor-side batching

To evaluate the effect of executor-side batching we submit concurrent “no-op” function requests and measure the completion time when executors can request one function at a time (batching disabled) vs when they can request many functions at a time based on the number of idle containers (batching enabled). We use 4 nodes (64 containers each) on Theta. We observe that the completion time with batching enabled is 6.7s (compared to 118s when disabled).

5.5.3. User-driven batching

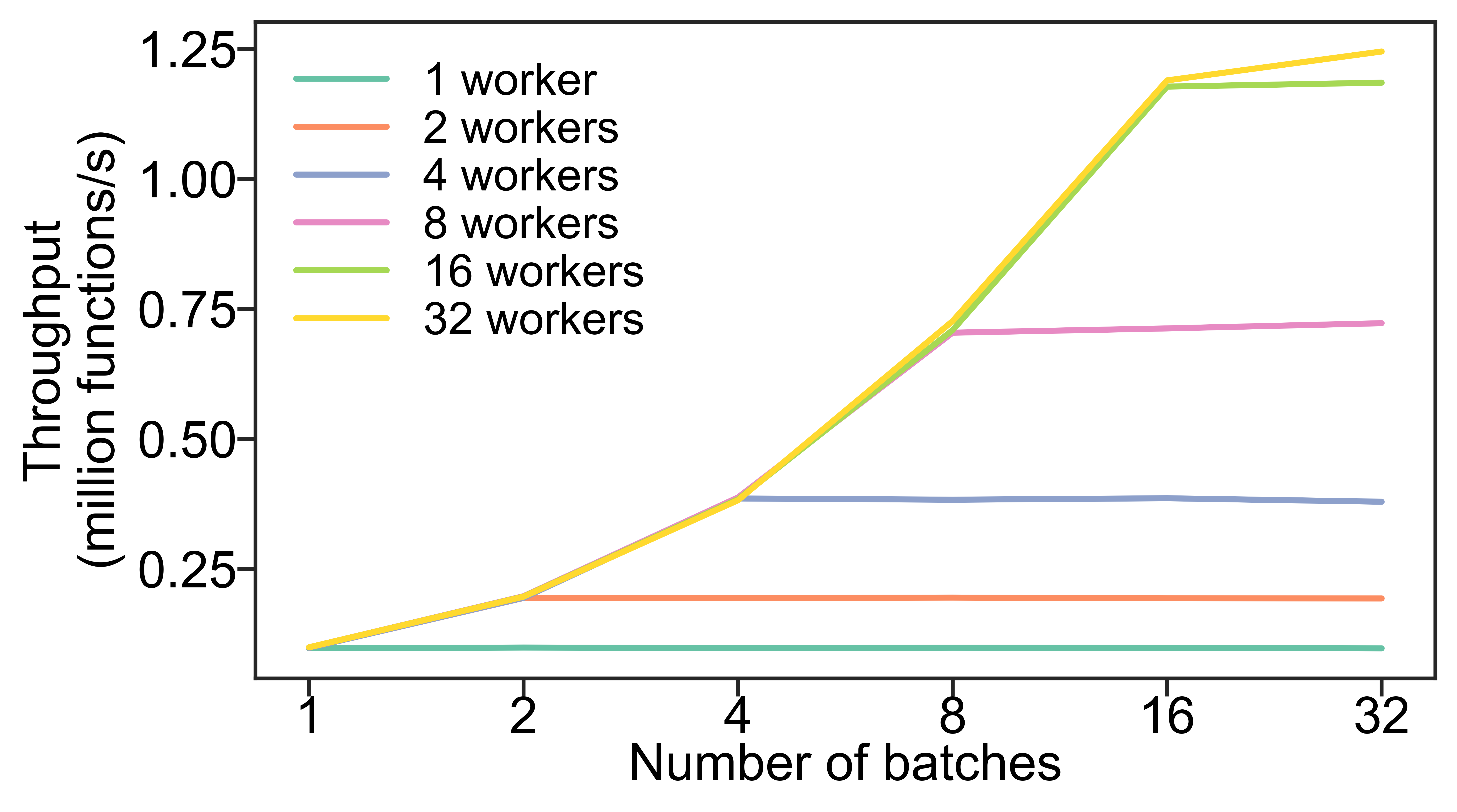

Figure 9 shows the strong-scaling performance of uncX’s map command as we vary batch size and number of workers. In this experiment we launch 10 million functions each executing for 10s, with the client and endpoint both running on one AWS EC2 c5n.9xlarge instance. We see that uncX can achieve a peak throughput of 1.2 million functions-per-second on a single machine, well beyond what is possible without batching.

5.5.4. Batching case studies

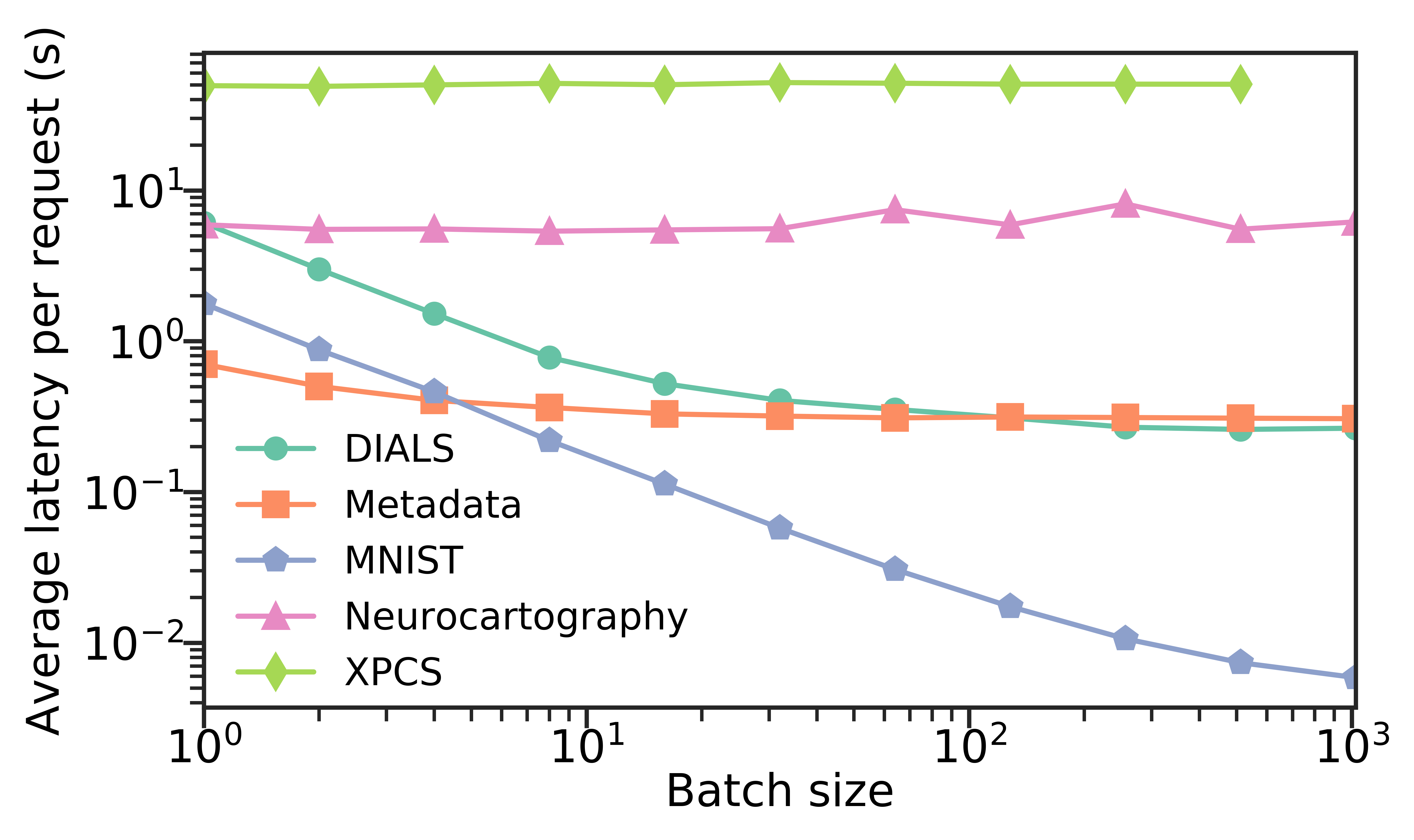

To evaluate the effect of user-driven batching we explore a subset of the scientific case studies discussed in §2. These case studies represent various scientific functions, ranging in execution time from half a second through to almost one minute, and provide perspective to the real-world effects of batching on different types of functions. The batch size is defined as the number of requests transmitted to the container for execution. Figure 10 shows the average latency per request (total completion time of the batch divided by the batch size), as the batch size increases. We observe that batching provides enormous benefit for the shortest running functions and reduces the average latency dramatically when combining tens or hundreds of requests. However, larger batches provide little benefit, indicating that it would be better to distribute the requests to additional workers. Similarly, long-running functions do not benefit, as the communication and startup costs are small relative to computation time.

5.5.5. Prefetching

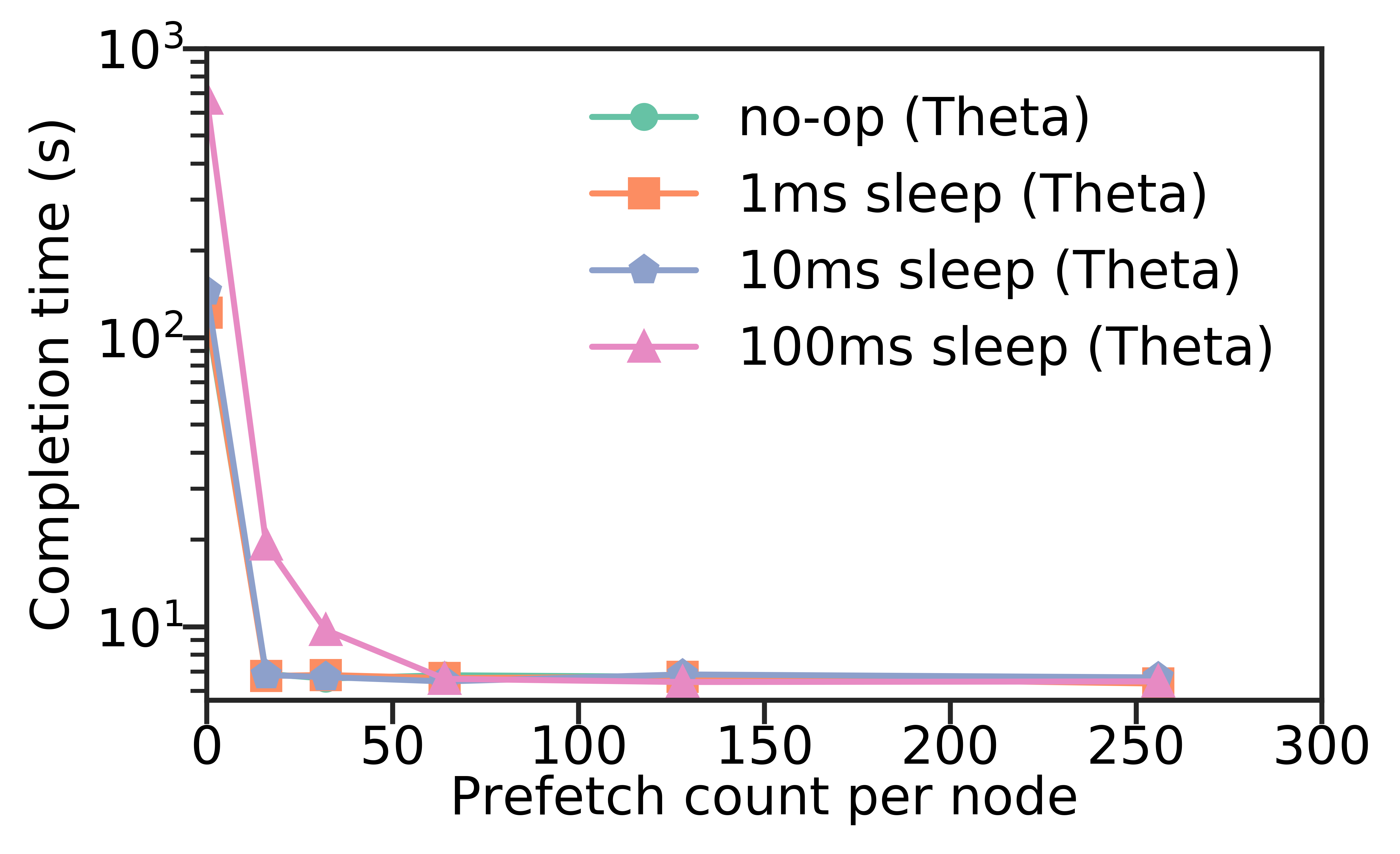

We evaluate the effect of prefetching by creating a no-op and 1ms, 10ms, and 100ms sleep functions, and measuring the time for concurrent function requests as the prefetch count per node is increased. Figure 11 shows the results of each function with 4 nodes (64 containers each) on Theta. We observe that completion time decreases dramatically as prefetch count increases. This benefit starts diminishing when prefetch count is greater than 64, suggesting that a good setting of prefetch count would be close to the number of containers per node.

5.5.6. Memoization

To measure the effect of memoization, we create a function that sleeps for one second and returns the input multiplied by two. We submit concurrent function requests to uncX. Table 3 shows the completion time of the requests when the percentage of repeated requests is increased. We see that as the percentage of repeated requests increases, the completion time decreases dramatically. This highlights the significant performance benefits of memoization for workloads with repeated deterministic function invocations.

|

0 | 25 | 50 | 75 | 100 | |

|---|---|---|---|---|---|---|

| Completion time (s) | 403.8 | 318.5 | 233.6 | 147.9 | 63.2 |

6. Experiences with uncX

We conclude by describing our experiences applying uncX to the six scientific case studies presented in §2.

Metadata extraction: Xtract uses uncX to execute its pre-registered metadata extraction functions centrally (by transferring data to the service) and on remote uncX endpoints where data reside without moving them to the cloud.

Machine learning inference: DLHub uses uncX to perform model inference on arbitrary compute resources. Each model is registered as a uncX function, mapped to the DLHub registered containers. uncX provides several advantages to DLHub, most notably, that it allows DLHub to use various remote compute resources via a simple interface, and includes performance optimizations (e.g., batching and caching) that improve overall inference performance.

Synchrotron Serial Crystallography (SSX): We deployed the DIALS (Waterman et al., 2013) crystallography processing tools as uncX functions. uncX allows SSX researchers to submit the same stills process function to either a local endpoint to perform data validation or HPC resources to process entire datasets and derive crystal structures.

Quantitative Neurocartography: Previous practice depended on batch computing jobs that required frequent manual intervention for authentication, configuration, and failure resolution. With uncX, researchers can use a range of computing resources without the overheads previously associated with manual management. In addition, they can now integrate computing into their automated visualization and analysis workflows via programmatic APIs.

X-ray Photon Correlation Spectroscopy (XPCS): We incorporated the XPCS-eigen corr function, deployed as a uncX function, into an on-demand analysis pipeline triggered as data are collected at the beamline. This work allows scientists to offload analysis tasks to HPC resources, simplify large-scale parallel processing for large data rates. uncX’s scalability meets the demands of the XPCS data rates by acquiring multiple nodes to serve functions.

Real-time data analysis in High Energy Physics (HEP): We developed a uncX backend to Coffea (CMS collaboration, 2019), an analysis framework that can be used to parallelize real-world HEP analyses operating on columnar data to aggregate histograms of analysis products of interest in real time. Subtasks representing partial histograms are dispatched as uncX requests. We completed a typical HEP analysis of 300 million events in nine minutes (1.9 s/event), simultaneously using two uncX endpoints provisioning heterogeneous resources.

Summary: Based on discussion with these researchers we have identified several benefits of the uncX approach in these scenarios. 1) uncX abstracts the complexity of using diverse compute resources. Researchers are able to incorporate scalable analyses without having to know anything about the computing environment (batch queues, container technology, etc.). 2) Researchers appreciated the ability to simplify application code, automatically scale resources to workload needs, and avoid the complexity of mapping applications to batch jobs. Several highlighted the benefits for elastically scaling resources to long-tail task durations. 3) Researchers found that the flexible web-based authentication model significantly simplified remote computing when compared to the previous models that relied on SSH keys and two-factor authentication. 4) Several case studies use uncX to enable event-based processing. We found that the uncX model lends itself well to such use cases, as it allows for the execution of sporadic workloads. The neurocartography, XPCS, and SSX use cases all exhibit such characteristics, requiring compute resources only when experiments are running. 5) Researchers highlighted portability as a benefit of uncX, not only for using multiple resources but also to overcome scheduled and unscheduled facility downtime. 6) uncX allowed resources to be used efficiently and opportunistically, for example using backfill queues to quickly execute tasks. 7) uncX allowed users to securely share their functions, enabling collaborators to easily (without needing to setup environments) apply functions on their own datasets. This was particularly useful in the XPCS use case as researchers share access to the same instrument.

While initial feedback has been encouraging, our experiences also highlight important challenges that need to be addressed. 1) FaaS is not suitable for some applications, for example applications with tightly integrated computations, that share large amounts of data, and are implemented with large and complex code bases. 2) Containerization does not always provide entirely portable codes that can be run on arbitrary resources, due to the need to compile and link resource-specific modules. For example, in the XPCS use case we needed to compile codes specifically for a target resource. 3) The coarse allocation models employed by research infrastructure does not map well to fine grain and short duration function usage, work is needed to support accounting and billing models to track usage on a per-user and per-function basis. 4) There are other barriers that make it difficult to decompose applications into functions. For example, the neurocartography tools are designed to perform many interlaced tasks and thus we chose to package the entire toolkit as a function rather than to decompose these tools into many functions. We also found that it can be difficult to modify applications for stateless execution, as state is not easily shared between functions, and poorly designed solutions may lead to significant communication overhead.

7. Related Work

FaaS platforms have proved extremely successful in industry as a way to reduce costs and remove the need to manage infrastructure.

Hosted FaaS platforms: Amazon Lambda (ama, [n. d.]), Google Cloud Functions (goo, [n. d.]), and Azure Functions (azu, [n. d.]a) are the most well-known FaaS platforms. Each service supports various function languages and trigger sources, connects directly to other cloud services, and is billed in granular increments. Lambda uses Firecracker, a custom virtualization technology built on KVM, to create lightweight micro-virtual machines. To meet the needs of IoT use cases, some cloud-hosted platforms also support local deployment (e.g., AWS Greengrass (gre, [n. d.])); however, they support only single machines and require that functions be exported from the cloud platform.

Open source platforms: Open FaaS platforms resolve two of the key challenges to using FaaS for scientific workloads: they can be deployed on-premise and can be customized to meet the requirements of data-intensive workloads without set pricing models.

Apache OpenWhisk (ope, [n. d.]), the basis of IBM Cloud Functions (IBM, [n. d.]), defines an event-based programming model, consisting of Actions which are stateless, runnable functions, Triggers which are the types of events OpenWhisk may track, and Rules which associate one trigger with one action. OpenWhisk can be deployed locally as a service using a Kubernetes cluster.

Fn (Fn, [n. d.]) is an event-driven FaaS system that executes functions in Docker containers. Fn allows users to logically group functions into applications. Fn can be deployed locally (on Windows, MacOS, or Linux) or on Kubernetes.

The Kubeless (Kub, [n. d.]) FaaS platform builds upon Kubernetes. It uses Apache Kafka for messaging, provides a CLI that mirrors Amazon Lambda, and supports comprehensive monitoring. Like Fn, Kubeless allows users to define function groups that share resources.

SAND (Akkus et al., 2018) is a lightweight, low-latency FaaS platform from Nokia Labs that provides application-level sandboxing and a hierarchical message bus. SAND provides support for function chaining via user-submitted workflows. SAND is closed source and as far as we know cannot be downloaded and installed locally.

Abaco (Stubbs et al., 2017) implements the Actor model, where an actor is an Abaco runtime mapped to a specific Docker image. Each actor executes in response to messages posted to its inbox. It supports functions written in several programming languages and automatic scaling. Abaco also provides fine-grained monitoring of container, state, and execution events and statistics. Abaco is deployable via Docker Compose.

Comparison with uncX: Hosted cloud providers implement high performance and reliable FaaS models that are used by an enormous number of users. However, they are not designed to support heterogeneous resources or research CI (e.g., schedulers, containers), do not integrate with the science ecosystem (e.g., in terms of data and authentication models), and can be costly.

Open source and academic frameworks support on-premise deployments and can be configured to address a range of use cases. However, each system we surveyed is Docker-based and relies on Kubernetes (or other container orchestration platforms) for deployment. These systems therefore cannot be easily adapted to existing HPC environments. We are not aware of any systems that support remote execution of functions over a distributed or federated ecosystem of endpoints.

Other Related Approaches FaaS has many predecessors, notably grid and cloud computing, container orchestration, and analysis systems. Grid computing (Foster et al., 2001) laid the foundation for remote, federated computations, most often through federated batch submission (Krauter et al., 2002). GridRPC (Seymour et al., 2002) defines an API for executing functions on remote servers requiring that developers implement the client and the server code. uncX extends these ideas to allow interpreted functions to be registered and subsequently dynamically executed within sandboxed containers via a standard endpoint API.

Container orchestration systems (Rodriguez and Buyya, 2019; Hightower et al., 2017; Hindman et al., 2011) allow users to scale deployment of containers while managing scheduling, fault tolerance, resource provisioning, and addressing other user requirements. These systems primarily rely on dedicated, cloud-like infrastructure and cannot be directly used with most HPC resources. However, these systems provide a basis for other serverless platforms, such as Kubeless. uncX focuses at the level of scheduling and managing functions, that are deployed across a pool of containers. We apply approaches from container orchestration systems (e.g., warming) to improve performance.

Data-parallel systems such as Hadoop (had, 2019) and Spark (spa, 2019) enable map-reduce style analyses. Unlike uncX, these systems dictate a particular programming model on dedicated clusters. Python parallel computing libraries such as Parsl and Dask (das, [n. d.]) support development of parallel programs, and parallel execution of selected functions within those scripts, on clusters and clouds. These systems could be extended to use uncX for remote execution of tasks.

8. Conclusion

uncX is a distributed FaaS platform that is designed to support the unique needs of scientific computing. It combines a reliable and easy-to-use cloud-hosted interface with the ability to securely execute functions on distributed endpoints deployed on various computing resources. uncX supports many HPC systems and cloud platforms, can use three container technologies, and can expose access to heterogeneous and specialized computing resources. We demonstrated that uncX provides comparable latency to that of cloud-hosted FaaS platforms and showed that uncX agents can scale to execute 1M tasks over concurrent workers when deployed on the Cori supercomputer. We also showed that uncX can elastically scale in response to load, automatically respond to failures, and that user-driven batching can execute more than one million functions per second on a single machine.

uncX demonstrates the advantages of adapting the FaaS model to create a federated computing ecosystem. Based on early experiences using uncX in six scientific case studies, we have found that the approach provides several advantages, including abstraction, code simplification, portability, scalability, and sharing; however, we also identified several limitations including suitability for some applications, conflict with current allocation models, and challenges decomposing applications into functions. We hope that uncX will serve as a flexible platform for scientific computing while also enabling new research related to function scheduling, dynamic container management, and data management.

In future work we will extend uncX’s container management capabilities to create containers dynamically based on function requirements, and to stage containers to endpoints on-demand. We will also explore techniques for optimizing performance, for example by sharing containers among functions with similar dependencies and developing resource-aware scheduling algorithms. uncX is open source and available at https://github.com/funcx-faas.

Acknowledgment

This work was supported in part by Laboratory Directed Research and Development funding from Argonne National Laboratory under U.S. Department of Energy under Contract DE-AC02-06CH11357 and used resources of the Argonne Leadership Computing Facility.

References

- (1)

- ama ([n. d.]) Amazon Lambda. https://aws.amazon.com/lambda. Accessed April 20, 2020.

- ope ([n. d.]) Apache OpenWhisk. http://openwhisk.apache.org/. Accessed April 20, 2020.

- gre ([n. d.]) AWS Greengrass. https://aws.amazon.com/greengrass/. Accessed April 20, 2020.

- azu ([n. d.]a) Azure Functions. https://azure.microsoft.com/en-us/services/functions/. Accessed April 20, 2020.

- das ([n. d.]) Dask. http://docs.dask.org/en/latest/. Accessed April 20, 2020.

- Fn ([n. d.]) Fn project. https://fnproject.io. Accessed April 20, 2020.

- goo ([n. d.]) Google Cloud Functions. https://cloud.google.com/functions/. Accessed April 20, 2020.

- IBM ([n. d.]) IBM Cloud Functions. https://www.ibm.com/cloud/functions. Accessed April 20, 2020.

- Kub ([n. d.]) Kubeless. https://kubeless.io. Accessed April 20, 2020.

- LXC ([n. d.]) Linux containers. https://linuxcontainers.org. Accessed April 20, 2020.

- azu ([n. d.]b) Microsoft Azure Functions Documentation. https://docs.microsoft.com/en-us/azure/azure-functions/functions-scale. Accessed April 20, 2020.

- had (2019) Apache Hadoop. https://hadoop.apache.org/. Accessed April 20, 2020.

- spa (2019) Apache Spark. https://spark.apache.org/. Accessed April 20, 2020.

- Akkus et al. (2018) I. E. Akkus, et al. 2018. SAND: Towards high-performance serverless computing. In USENIX Annual Technical Conference. 923–935.

- Babuji et al. (2019) Y. Babuji, et al. 2019. Parsl: Pervasive Parallel Programming in Python. In 28th International Symposium on High-Performance Parallel and Distributed Computing (HPDC’19). ACM, 25–36.

- Baldini et al. (2017) I. Baldini, et al. 2017. Serverless computing: Current trends and open problems. In Research Advances in Cloud Computing. Springer, 1–20.

- Baldini et al. (2016) I. Baldini, et al. 2016. Cloud-native, event-based programming for mobile applications. In Intl Conf. on Mobile Software Engineering and Systems. ACM, 287–288.

- Chard et al. (2014) K. Chard, et al. 2014. Efficient and Secure Transfer, Synchronization, and Sharing of Big Data. IEEE Cloud Computing 1, 3 (2014), 46–55.

- Chard et al. (2019) R. Chard, et al. 2019. DLHub: Model and data serving for science. In 33rd IEEE International Parallel and Distributed Processing Symposium.

- CMS collaboration (2019) CMS collaboration. 2019. COFFEA - Columnar Object Framework For Effective Analysis. In 24th Intl Conf. on Computing in High Energy and Nuclear Physics.

- De Carlo ([n. d.]) F. De Carlo. Automo. https://automo.readthedocs.io. Accessed April 20, 2020.

- Duarte et al. (2018) J. Duarte, et al. 2018. Fast inference of deep neural networks in FPGAs for particle physics. Journal of Instrumentation 13, 07 (2018), P07027.

- Fano (1965) R. M. Fano. 1965. The MAC system: The computer utility approach. IEEE Spectrum 2, 1 (1965), 56–64.

- Forde et al. (2018) J. Forde, et al. 2018. Reproducible research environments with repo2docker. In Workshop on Reproducibility in Machine Learning.

- Foster and Gannon (2017) I. Foster et al. 2017. Cloud Computing for Science and Engineering. MIT Press.

- Foster et al. (2001) I. Foster, et al. 2001. The anatomy of the grid: Enabling scalable virtual organizations. Intl Journal of Supercomputer Applications 15, 3 (2001), 200–222.

- Fox et al. (2017) G. Fox, et al. 2017. Status of serverless computing and function-as-a-service (FaaS) in industry and research. arXiv preprint arXiv:1708.08028 (2017).

- Fox and Jha (2017) G. Fox et al. 2017. Conceptualizing a computing platform for science beyond 2020. In IEEE 10th International Conference on Cloud Computing. 808–810.

- Hightower et al. (2017) K. Hightower, et al. 2017. Kubernetes: Up and running dive into the future of infrastructure (1st ed.). O’Reilly Media, Inc.

- Hindman et al. (2011) B. Hindman, et al. 2011. Mesos: A platform for fine-grained resource sharing in the data center. In 8th USENIX Conf. on Networked Sys. Design and Impl. 295–308.

- Jacobsen and Canon (2015) D. M. Jacobsen et al. 2015. Contain this, unleashing Docker for HPC. Cray User Group (2015).

- Kiar et al. (2019) G. Kiar, et al. 2019. A serverless tool for platform agnostic computational experiment management. Frontiers in Neuroinformatics 13 (2019), 12.

- Krauter et al. (2002) K. Krauter, et al. 2002. A taxonomy and survey of grid resource management systems for distributed computing. Software: Practice and Experience 32, 2 (2002), 135–164.

- Kurtzer et al. (2017) G. M. Kurtzer, et al. 2017. Singularity: Scientific containers for mobility of compute. PloS one 12, 5 (2017), e0177459.

- Malawski (2016) M. Malawski. 2016. Towards serverless execution of scientific workflows–HyperFlow case Ssudy. In Workshop on Workflows in Support of Large-Scale Science. 25–33.

- Merkel (2014) D. Merkel. 2014. Docker: Lightweight Linux containers for consistent development and deployment. Linux Journal 239 (2014), 2.

- Milojicic et al. (2002) D. S. Milojicic, et al. 2002. Peer-to-Peer Computing. Technical Report.

- Parkhill (1966) D. Parkhill. 1966. The Challenge of the Computer Utility. Addison-Wesley.

- Paul et al. (2017) A. K. Paul, et al. 2017. Toward scalable monitoring on large-scale storage for software defined cyberinfrastructure. In 2nd Joint Intl. Workshop on Parallel Data Storage & Data Intensive Scalable Computing Systems (PDSW-DISCS ’17). 49–54.

- Pivarski et al. (2017) J. Pivarski, et al. 2017. Fast access to columnar, hierarchically nested data via code transformation. In IEEE International Conference on Big Data. 253–262.

- Priedhorsky and Randles (2017) R. Priedhorsky et al. 2017. CharlieCloud: Unprivileged containers for user-defined software stacks in HPC. In International Conference for High Performance Computing, Networking, Storage and Analysis. ACM, 36.

- Rodriguez and Buyya (2019) M. A. Rodriguez et al. 2019. Container-based cluster orchestration systems: A taxonomy and future directions. Software: Practice and Experience 49, 5 (2019), 698–719.

- Seymour et al. (2002) K. Seymour, et al. 2002. Overview of GridRPC: A remote procedure call API for grid computing. In Grid Computing. 274–278.

- Skluzacek et al. (2019) T. J. Skluzacek, et al. 2019. Serverless workflows for indexing large scientific data. In 5th International Workshop on Serverless Computing (WOSC’19). ACM, 43–48.

- Spillner et al. (2017) J. Spillner, et al. 2017. Faaster, better, cheaper: The prospect of serverless scientific computing and HPC. In Latin American High Performance Computing Conference. 154–168.

- Stubbs et al. (2017) J. Stubbs, et al. 2017. Containers-as-a-service via the Actor Model. In 11th Gateway Computing Environments Conference.

- Thain et al. (2005) D. Thain, et al. 2005. Distributed computing in practice: the Condor experience. Concurrency - Practice and Experience 17, 2-4 (2005), 323–356.

- Tuecke et al. (2016) S. Tuecke, et al. 2016. Globus Auth: A research identity and access management platform. In 12th IEEE International Conference on e-Science. 203–212.

- Turilli et al. (2018) M. Turilli, et al. 2018. A comprehensive perspective on pilot-job systems. Comput. Surveys 51, 2 (2018), 43.

- Varghese et al. (2019) B. Varghese, et al. 2019. Cloud futurology. Computer 52, 9 (2019), 68–77.

- Wang et al. (2018) L. Wang, et al. 2018. Peeking behind the curtains of serverless platforms. In USENIX Annual Technical Conference. 133–146.

- Waterman et al. (2013) D. G. Waterman, et al. 2013. The DIALS framework for integration software. CCP4 Newslett. Protein Crystallogr 49 (2013), 13–15.