FICGAN: Facial Identity Controllable GAN for De-identification

Abstract

In this work, we present Facial Identity Controllable GAN (FICGAN), which not only generates high-quality de-identified face images with ensured privacy protection but also offers detailed controllability over attribute preservation for enhanced data utility. We tackle this less-explored yet desired functionality in face de-identification based on two factors. First, we focus on the challenging issue of obtaining a high level of privacy protection in the de-identification task without compromising image quality. Second, we analyze the facial attributes related to identity and non-identity and explore the trade-off between the degree of face de-identification and the preservation of the source attributes for enhanced data utility. Based on the analysis, we develop FICGAN, an autoencoder-based conditional generative model that learns to disentangle the identity attributes from the non-identity attributes of a face image. By applying the manifold k-same algorithm to satisfy k-anonymity for strengthened security, our method achieves enhanced privacy protection in de-identified face images. Numerous experiments demonstrate that our model outperforms others in various scenarios of face de-identification.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c3be54cd-ca88-46ae-92ad-4dc976640317/x1.png)

Introduction

Face de-identification refers to obscuring the identity of a person’s face by manipulating identity-dominant attributes such as the nose, eyes, eyebrows, and mouth, while preserving identity-invariant attributes such as pose, expression, shadow, and illumination. Compared to generic objects, the face is one of the most sensitive elements because it carries personal identity information that is directly related to privacy. Therefore, face de-identification is an important research topic in the social aspects of security and privacy, and has been utilized to anonymize faces appearing in media interviews, street views in video surveillance content, and medical research data (Chi and Hu 2015; Senior 2009). Conventional methods, such as black-bar masking, blurring, and pixelization, are simple but remove facial information aggressively, resulting in reduced data utility and unsuitable image quality (Ribaric and Pavesic 2015; Zhu et al. 2020a).

Recently, deep learning methods have been employed for the task of face de-identification. Thanks to the advances in Generative Adversarial Networks (GAN) (Goodfellow et al. 2014), simple methods such as face swap (Bitouk et al. 2008) can generate realistic outputs (Li et al. 2019; Zhu et al. 2020b). However, since face swap places the target person’s face onto the source image, the result can still be identifiable, and the risk of privacy leakage remains. Also, since the facial attributes are simply inherited from the target, the attributes of the source cannot be preserved to suit various needs. To tackle this issue, most previous studies have taken one of two approaches: simply tweaking parts of the identity attributes, or applying k-anonymity (a definition of the privacy security level; see Newton, Sweeney, and Malin (2005) for details) to face images to ensure privacy at the cost of quality degradation. Although these methods have paved the way for de-identification using deep learning, there is still room for improvement. For the first approach, the risk of privacy leakage remains, along with the challenge of controlling which facial attributes to preserve for data utility. For the second approach, the blurry results obtained by averaging the faces of k images reduce the image quality in both aesthetic and utility aspects.

To tackle these issues, we study the under-explored challenge of securing a high level of privacy protection by achieving k-anonymity based on the k-same algorithm, while maintaining quality and controllability over the preservation of facial attributes. In this work, we propose Facial Identity Controllable GAN (FICGAN), a highly secure and controllable face de-identification GAN model that learns to disentangle identity and non-identity attributes. Our method differentiates itself in two aspects. First, our method achieves k-anonymity for robust security by using the simple manifold k-same algorithm (Yan, Pei, and Nie 2019), which mixes k different face images in the learned latent identity space to form a target identity embedding. Second, the identity disentanglement and the layer-wise generator enable detailed controllability over the degree of de-identification and attribute preservation according to users’ preferences. Numerous experiments demonstrate that our method can generate privacy-ensured de-identified face images with carefully controlled attributes from the source image to enhance data utility. Furthermore, we provide ample discussion and analysis of the trade-off and the relationship between the identity attributes and non-identity attributes of the human face.

Related Work

The traditional methods for face de-identification include black-bar masking, blurring, and pixelization (Ribaric and Pavesic 2015; Zhu et al. 2020a), which can aggressively destroy the facial information. Thus, recent studies focus on preserving the facial attributes while effectively obfuscating the identity (Gafni, Wolf, and Taigman 2019; Nitzan et al. 2020), by fusing (Zhu et al. 2020b; Nirkin, Keller, and Hassner 2019; Li et al. 2019; Zhu et al. 2020a; Thies, Zollhöfer, and Nießner 2019; Thies et al. 2016) and averaging (Newton, Sweeney, and Malin 2005; Gross et al. 2006, 2005; Jourabloo, Yin, and Liu 2015; Yan, Pei, and Nie 2019; Chi and Hu 2015) the faces of the examples to remove the source identity and fill in the target identity. Another method is to mask the entire facial area and synthesize a new face using inpainting (Sun et al. 2018a, b). The face swap based methods (Nirkin, Keller, and Hassner 2019; Li et al. 2019; Mahajan, Chen, and Tsai 2017; Bitouk et al. 2008; Lin et al. 2012) inevitably inherit the attributes of the swapped face, which may lead to further privacy leakage or malicious usage. Conditioning on the landmarks (Sun et al. 2018a, b) of the face images for de-identification can be useful for maintaining the facial expressions and head poses, but it remains limited in conveying other physical traits; thus, those methods share the same limitation as the face swap based methods.

To avoid identity leakage, it is more effective to utilize multiple images for de-identification. The k-same algorithms (Newton, Sweeney, and Malin 2005; Gross et al. 2005, 2006; Jourabloo, Yin, and Liu 2015) for de-identification are known to guarantee k-anonymity (Yan, Pei, and Nie 2019); however, models employing k-same algorithms have not been able to generate high-quality outputs. In contrast, our method achieves high-quality face de-identification with k-anonymity by using a manifold k-same algorithm, where k indicates the number of different face images averaged in the learned latent space. Our model can generate highly realistic de-identified face images for enhanced data utility.

The recent methods of advanced face de-identification have focused on disentangling the identity and non-identity attributes (Nitzan et al. 2020; Gafni, Wolf, and Taigman 2019; Bao et al. 2018). However, it is difficult to separate those attributes since they are rather mixed (Abudarham, Shkiller, and Yovel 2019; Sendik, Lischinski, and Cohen-Or 2019; Sinha and Poggio 1996; Toseeb, Keeble, and Bryant 2012). This manifests as a trade-off between the degree of de-identification and preservation of attributes (Xiao et al. 2020), and any restriction on the de-identification tasks may yield undesirable face manipulation quality. Instead of restricting the region or attributes for de-identification, we design our model to control various properties for detailed control of the trade-off based on the user’s preferences.

Facial Identity Controllable GAN

In this section, we provide detailed explanations on our face de-identification model, FICGAN. We first describe the overall framework, then explain the loss functions used to train our model. Then we introduce four ways to utilize our model for various purposes.

Overall Framework

Our goal is to disentangle the identity (ID) and non-identity (non-ID) features to obtain detailed controllability over the trade-off between the degree of de-identification and the preservation of facial attributes for enhanced data utility. The encoder is designed to disentangle the ID and non-ID attributes from the face images. For robust de-identification, our model mixes the ID attributes from the target image with the non-ID attributes from the source image. The generator de-identifies face images by mixing the features extracted from the source and target images. By incorporating the verification network of SphereFace (Liu et al. 2017) into the loss function, our model can effectively disentangle the ID and non-ID attributes for robust face de-identification. The overall framework is shown in Figure 2.

Encoder

Embedding an image into spatial and non-spatial codes, together with our careful loss design, induces the disentanglement of the identity (ID) attributes from the non-identity (non-ID) attributes, which contain spatial characteristics such as the head pose, background, and local color scheme. The encoder takes the input images and extracts two types of features. The first is the ID latent code, a vector of size 512 that encodes the ID attributes. The second is the spatial latent code, also called the non-ID latent code, a 3-dimensional tensor that encodes the non-ID attributes, including the spatial information. Because the ID latent code carries only non-spatial information, whereas the non-ID latent code carries the spatial information, it is easier to disentangle the ID and non-ID attributes. This design is similar to Park et al. (2020), which uses latent codes of different dimensionality to obtain the structural information.

Generator

The ID and non-ID latent codes, which have different dimensions, are passed from the encoder to the generator as inputs. The non-ID latent code is fed into the generator in a feedforward manner to help generate structural attributes, such as the head pose and expression, in the lower dimensions of the image. In contrast, the ID latent code is processed by adaptive instance normalization (AdaIN) (Huang and Belongie 2017) and injected into the generator in a layer-wise manner (Karras, Laine, and Aila 2019). Before being inserted into the generator, the ID latent code is embedded by a mapping network into an intermediate latent vector, which controls the ID of the generated faces. This helps to locate the appropriate ID space when applying the k-same algorithm in the domain of the intermediate latent vector to generate high-quality de-identified images.

The mapping network consists of a series of multi-layer perceptrons (MLP). If the ID and non-ID latent codes originate from the same source image, the generator produces a reconstructed image. In contrast, if they originate from different images, the generator produces a de-identified image.
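The layer-wise injection of the ID code through AdaIN described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `adain`, the tensor shapes, and the per-channel `scale` and `bias` (which in a real model would typically come from a learned affine map of the intermediate latent vector) are assumptions made for illustration.

```python
import numpy as np

def adain(features, scale, bias, eps=1e-5):
    """Adaptive instance normalization for one feature map of shape (C, H, W).

    Each channel is normalized to zero mean and unit variance, then
    re-scaled and shifted by style parameters derived from the ID code.
    """
    mean = features.mean(axis=(1, 2), keepdims=True)
    std = features.std(axis=(1, 2), keepdims=True)
    normalized = (features - mean) / (std + eps)
    return scale[:, None, None] * normalized + bias[:, None, None]

# Hypothetical stand-ins: a generator feature map and style parameters.
rng = np.random.default_rng(0)
feat = rng.normal(size=(8, 4, 4))
scale = rng.uniform(0.5, 1.5, size=8)   # assumed output of an affine map of the ID code
bias = rng.normal(size=8)               # assumed output of an affine map of the ID code
out = adain(feat, scale, bias)
```

After the call, the per-channel statistics of `out` follow `bias` and `scale`, which is how a style-based generator lets a latent vector steer each layer.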

Face Verification Network

We utilize a pre-trained verification network to effectively disentangle the ID attributes. The face verification network takes an image and extracts a feature vector of size 512. Because verification networks extract discriminative features of facial identities, they are used in contrastive learning to disentangle identities.

FICGAN Losses

Contrastive Verification Loss

From the input image, the encoder separates two latent codes, the ID latent code and the non-ID latent code, which allows independent modification of the facial attributes. To disentangle the identity attributes from the input image, contrastive learning (Dai and Lin 2017; Kang and Park 2020; Zhang et al. 2020) is applied. The two types of contrastive verification loss are defined on the ID features of the source image, the target image, and the mixed image. The embedding features are extracted by the face verification network, and the cosine similarity is used to measure the similarity between the embedding features.

The first type of loss, which dissociates the mixed image from the source image in identity attributes, can be defined as:

| (1) |

where the mixed image is generated from the ID latent code disentangled from the target image and the spatial latent code of the non-identity attributes of the source image. The second type of loss, which brings the mixed image close to the target image in identity attributes, can be defined as:

| (2) |
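To make the two contrastive verification terms concrete, the following is a minimal sketch under stated assumptions. The exact formulas are not recoverable from this text, so the forms used here (cosine similarity to the source as the negative term, one minus cosine similarity to the target as the positive term) are one plausible reading of the description, and the function and variable names are hypothetical.

```python
import numpy as np

def cosine_similarity(a, b, eps=1e-8):
    """Cosine similarity between two embedding vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b) + eps))

def negative_loss(v_source, v_mixed):
    # Assumed form: lower when the mixed image's identity differs from the source.
    return cosine_similarity(v_source, v_mixed)

def positive_loss(v_target, v_mixed):
    # Assumed form: lower when the mixed image's identity matches the target.
    return 1.0 - cosine_similarity(v_target, v_mixed)

# Toy embeddings standing in for the size-512 verification features.
v_source = np.array([1.0, 0.0])
v_target = np.array([0.0, 1.0])
v_mixed = np.array([0.0, 1.0])   # identity fully moved to the target

loss_neg = negative_loss(v_source, v_mixed)
loss_pos = positive_loss(v_target, v_mixed)
```

In this toy case both terms are near zero, since the mixed embedding is orthogonal to the source and aligned with the target.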

Reconstruction Loss

The reconstruction loss is designed so that the network preserves all of the input information of the source image. Enabling the generator to reconstruct the source image from its own latent codes, the reconstruction loss can be defined as below:

| (3) |

The reconstruction loss helps maintain the information of the source image even after disentangling the ID and non-ID attributes.

Adversarial Loss

The adversarial loss (Goodfellow et al. 2014) is designed to ensure the visual quality of reconstructed images and mixed images as below:

It mitigates the degradation of image quality due to the blurriness caused by applying the k-same algorithm and the unnatural imagery caused by combining ID and non-ID codes from different images.

Total Loss

During each iteration of the training phase, the model performs both reconstruction and mixing. The reconstruction loss applies when the ID and non-ID codes come from the same image, and the contrastive verification loss applies when they come from different images. Thus, the total loss can be defined as below:
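As a hedged illustration only, one common way to combine the terms described in this section is a weighted sum of the adversarial, reconstruction, and two contrastive verification losses. The weights and the symbol names below are assumptions introduced here; the actual weighting is not stated in this text.

```latex
\mathcal{L}_{\text{total}}
  = \mathcal{L}_{\text{adv}}
  + \lambda_{\text{rec}}\,\mathcal{L}_{\text{rec}}
  + \lambda_{\text{neg}}\,\mathcal{L}_{\text{neg}}
  + \lambda_{\text{pos}}\,\mathcal{L}_{\text{pos}}
```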

Manipulation Methods at Inference Time

Manifold -Same Algorithm

To obtain a high level of privacy protection, we apply the k-same algorithm (Newton, Sweeney, and Malin 2005) in the latent space of our model, which we call the manifold k-same algorithm. From k randomly selected facial images, the centroid of their ID codes is calculated by the encoder and the mapping network, as shown in Figure 2-(b). This centroid vector (the k-anonymized code) satisfies the k-same algorithm because the properties of the k identities are combined. Based on the k-anonymized code, our single model can perform de-identification in four ways while securing k-anonymity, depending on the purpose of use, as follows.
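The centroid computation at the heart of the manifold k-same step can be sketched as follows. This is a minimal sketch, assuming the k ID codes have already been produced by the encoder and mapping network; the function name `k_anonymized_code`, the dimension 512 for the intermediate vector, and the random stand-in data are hypothetical.

```python
import numpy as np

def k_anonymized_code(id_codes):
    """Return the centroid of k intermediate ID vectors, given as a (k, d) array."""
    id_codes = np.asarray(id_codes, dtype=float)
    if id_codes.ndim != 2 or id_codes.shape[0] < 1:
        raise ValueError("expected a non-empty (k, d) array of ID codes")
    return id_codes.mean(axis=0)

# Random stand-ins for the mapped ID codes of k randomly selected faces.
rng = np.random.default_rng(0)
k, d = 5, 512
codes = rng.normal(size=(k, d))
w_k = k_anonymized_code(codes)
```

Because averaging happens in the learned latent space rather than in pixel space, the blur typical of pixel-level k-same averaging is left to the generator to avoid.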

Controlling Degree of De-identification

We leverage the layer-wise controllability offered by the StyleGAN (Karras, Laine, and Aila 2019) architecture. Unlike StyleGAN, which simply mixes the styles of images, we mix the ID attributes of the source and target images in a layer-wise manner for fine-grained control of the trade-off between the degree of de-identification and the preservation of attributes for enhanced data utility. In this way, we can observe the transition that results from adjusting the identity-related characteristics of the facial attributes. First, we can control the degree of de-identification by selecting the layer index i, which is the reference point for adjusting the amounts of the k-anonymized code and the ID code obtained from the source image. Specifically, we insert the source ID code into the layers up to the i-th layer and the k-anonymized code into the remaining layers up to the L-th layer. When the mean identity is inserted into all layers, the source image is strongly de-identified (strong de-ID). In contrast, when the k-anonymized code is applied only to the highest layer, the result is a weak de-identification (weak de-ID). An example (i=1, when L=4) of controlling the degree of de-identification is shown in Figure 2-(c). The greater i is, the lower the degree of de-identification, and the more attributes are preserved. We provide detailed explanations of the trade-off between the identity attributes and non-identity attributes according to the adjustment of i in the paragraph ‘Trade-off between De-identification and Attribute Preservation’ under Experiments.
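The layer-wise selection rule can be sketched in a few lines. This is a minimal sketch of our reading of the description, assuming the source ID code feeds the first i layers and the k-anonymized code feeds the remaining layers; the function name `layerwise_id_codes` and the placeholder vectors are hypothetical.

```python
import numpy as np

def layerwise_id_codes(w_source, w_anon, i, num_layers):
    """Pick the ID code for each generator layer.

    Layers 1..i receive the source ID code and layers i+1..num_layers
    receive the k-anonymized code (assumed convention).
    """
    if not 0 <= i < num_layers:
        raise ValueError("i must satisfy 0 <= i < num_layers")
    return [w_source if layer < i else w_anon for layer in range(num_layers)]

w_source = np.zeros(512)   # placeholder for the source ID code
w_anon = np.ones(512)      # placeholder for the k-anonymized code

# The example from the text: i = 1 with L = 4 layers.
codes_weak_to_strong = layerwise_id_codes(w_source, w_anon, i=1, num_layers=4)
# i = 0 applies the k-anonymized code everywhere (strong de-ID).
codes_strong = layerwise_id_codes(w_source, w_anon, i=0, num_layers=4)
```

Under this convention, raising i hands more layers to the source code, which weakens de-identification and preserves more source attributes.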

Attribute Control

We can control specific attributes of the image according to the user’s preference. Loss of specific attributes is inevitable due to the trade-off between the identity and non-identity attributes (Gross et al. 2005). However, we control this loss by employing a mean attribute vector obtained from a group of images sharing the same attributes. Using this vector, we can either preserve or insert specific attributes for fine-grained control of de-identification. We provide detailed explanations of how our model controls the preservation of attributes as desired in the paragraph ‘Controllability on Facial Attributes’ under Experiments.

Example-based Control

Our model can control the direction of de-identification using exemplar face images, thereby achieving data diversity. Increasing k in k-anonymity has a twofold effect: it guarantees a greater degree of anonymity for privacy protection, but it can also lead to excessive generalization of the mean facial identity, resulting in identical faces. However, data diversity can be required in some cases. For example, if multiple people are present in the same scene, their de-identified faces should differ from each other. It is also important to consider diversity in race, ethnicity, gender, and age to fully represent a broad range of faces.

For such cases, we can generate diverse de-identified faces by controlling the direction and the degree of de-identification through interpolation between the k-anonymized code and a target ID code. The k-anonymized code for direction can be calculated by employing a scaling factor as below:

| (5) |

It utilizes linear interpolation between the k-anonymized code and the latent code of a specific target image. Diverse de-identified images are provided in the paragraph ‘Diversity of De-identified Images’ under Experiments.
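The linear interpolation described here can be sketched as follows. This is a minimal sketch under an assumed convention: a scaling factor `alpha` of 0 returns the k-anonymized code and a factor of 1 returns the target code. The direction of the scaling factor, the function name `directed_code`, and the placeholder vectors are assumptions, since the original equation is not recoverable from this text.

```python
import numpy as np

def directed_code(w_anon, w_target, alpha):
    """Linearly interpolate from the k-anonymized code toward a target ID code."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1.0 - alpha) * w_anon + alpha * w_target

w_anon = np.zeros(512)        # placeholder for the k-anonymized code
w_target = np.full(512, 2.0)  # placeholder for a target ID code
w_dir = directed_code(w_anon, w_target, alpha=0.25)
```

Sweeping `alpha` over a few values yields a family of de-identified faces that drift toward the chosen exemplar, which is the source of the diversity discussed above.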

Identity Swapping

Lastly, the target ID code is employed instead of the k-anonymized code for identity swapping, which switches the identity to that of the target. The results are illustrated in Figure 9.

Experiments

Dataset

For training, we use 60,000 images out of 70,000 images in FFHQ (Karras, Laine, and Aila 2019). For testing, we use the remaining 10,000 images of FFHQ and the unseen CelebA-HQ dataset. To compare with the existing methods, we use celebrity face images from ALAE (Pidhorskyi, Adjeroh, and Doretto 2020), IDInvert (Zhu et al. 2020b), and Liveface (Gafni, Wolf, and Taigman 2019).

Evaluation Metrics

For quantitative analysis, we use three types of metrics. First, we measure the Fréchet Inception Distance (FID) (Heusel et al. 2017) to evaluate the quality of de-identified images. Second, to test the effectiveness of de-identification, we measure the cosine similarity between the identity features of the source image and those of the de-identified image. For a fair comparison of the identity similarity, we use the pre-trained ArcFace (Deng et al. 2019), a face verification network that is not used in our training. Third, to evaluate whether the attributes are well preserved, we train a multi-class classifier on CelebA-HQ (Lee et al. 2020), which contains images labeled with 40 attributes. We use 27,000 images from CelebA-HQ for training and the remaining 3,000 images for testing. The average accuracy of the classifier over the 40 attributes is 90% on the test set.
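The ID-similarity and attribute-preservation measurements can be sketched as follows. This is a minimal sketch, not the authors' evaluation code: it assumes identity embeddings are already extracted by a verification network, and it assumes attribute preservation is scored as the fraction of binary attributes on which classifier predictions for the source and de-identified images agree. Both function names are hypothetical.

```python
import numpy as np

def id_similarity(emb_source, emb_deid, eps=1e-8):
    """Cosine similarity between two identity embeddings (lower means stronger de-ID)."""
    emb_source = np.asarray(emb_source, dtype=float)
    emb_deid = np.asarray(emb_deid, dtype=float)
    denom = np.linalg.norm(emb_source) * np.linalg.norm(emb_deid) + eps
    return float(np.dot(emb_source, emb_deid) / denom)

def attribute_agreement(pred_source, pred_deid):
    """Fraction of binary attribute predictions that match (assumed scoring rule)."""
    pred_source = np.asarray(pred_source)
    pred_deid = np.asarray(pred_deid)
    if pred_source.shape != pred_deid.shape:
        raise ValueError("prediction arrays must have the same shape")
    return float(np.mean(pred_source == pred_deid))

# Toy example with 4 attributes instead of the 40 used in the text.
agreement = attribute_agreement([1, 0, 1, 1], [1, 0, 0, 1])
similarity = id_similarity([1.0, 0.0], [0.0, 1.0])
```

A good de-identification result under these two measures combines a low `similarity` with a high `agreement`.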

Trade-off between De-identification and Attribute Preservation

In this paragraph, we measure the degree of de-identification and the preservation of the attributes according to the layer where the k-anonymized code is applied. Consecutively inserting the k-anonymized code from the lowest to the highest layers leads to greater application of the k-anonymized identity, resulting in strong de-ID of the source face. In contrast, inserting it only into the highest layers leads to greater preservation of the facial features of the source image, resulting in weak de-ID, as shown in Figure 3. Figure 4-(a,b) shows the ID similarity and attribute preservation when the k-same algorithm or an arbitrary ID is applied. Figure 4-(c) shows the per-attribute accuracy, which evaluates the preservation of each attribute. This quantitatively explains the relationship between the degree of de-identification and the preservation of the attributes for each layer.

As shown in Figure 4-(a), when strong de-ID is applied, ID similarity decreases, which is favorable in terms of de-identification, but the accuracy of the attributes also decreases, which may not be favorable depending on the user’s preference. As shown in Figure 3, the glasses are removed (first row) while the bangs (second row) are preserved when strong de-ID is applied. This can also be observed in Figure 4-(c). This is because glasses play an important role in recognizing the identity of an individual. As illustrated, the stronger the de-identification is, the less the attributes are preserved. A deeper analysis of the ID and non-ID attributes per layer of the network is provided in the supplementary materials.

Controllability on Facial Attributes

Figure 6 illustrates how the facial attributes can be controlled using the averaged attribute code. In the images of the last column in Figure 6, applying the attributes of (a) male to the source image effectively preserves the male attributes but removes the glasses of the source image, due to their high correlation with identity. This can be improved by applying the attributes of (b) male wearing glasses. In addition, attributes such as male, smiling, and pale-skinned can be added to the source image to generate controlled de-identified images.

Our model not only controls the attributes of face images using the averaged attributes with high complexity, but also performs de-identification suitable for k-anonymity. More diverse results of the k-same algorithm applied to other models are provided in supplementary materials.

Diversity of De-identified Images

Figure 5 shows that example-based control with Equation 5 can manipulate the degree of de-identification as well as the direction of manipulation toward a target identity, starting from a k-anonymized code. Although the same mean attribute is used in Figure 5, the de-identified results gradually come to look more like the corresponding target image as the interpolation proceeds, as seen in the eyebrows and skin color. Moreover, anonymity is guaranteed even for the interpolation between the k-anonymized code and the target ID code, since it uses an arbitrary target ID code other than the one disentangled from the source image.

| Model | FID | ID Similarity | Attribute |
|---|---|---|---|
| FSGAN (Nirkin, Keller, and Hassner 2019) | 45.62 | 0.21 | 79.92 |
| IDDis (Nitzan et al. 2020) | 46.48 | 0.01 | 74.31 |
| FICGAN | 20.45 | 0.09 | 81.35 |
| FSGAN (Nirkin, Keller, and Hassner 2019) + k-same | 63.24 | 0.33 | 83.56 |
| IDDis (Nitzan et al. 2020) + k-same | 131.23 | 0.01 | 78.06 |
| FICGAN + Manifold k-same (Ours) | 22.25 | 0.14 | 85.44 |

Comparison to Previous Literature

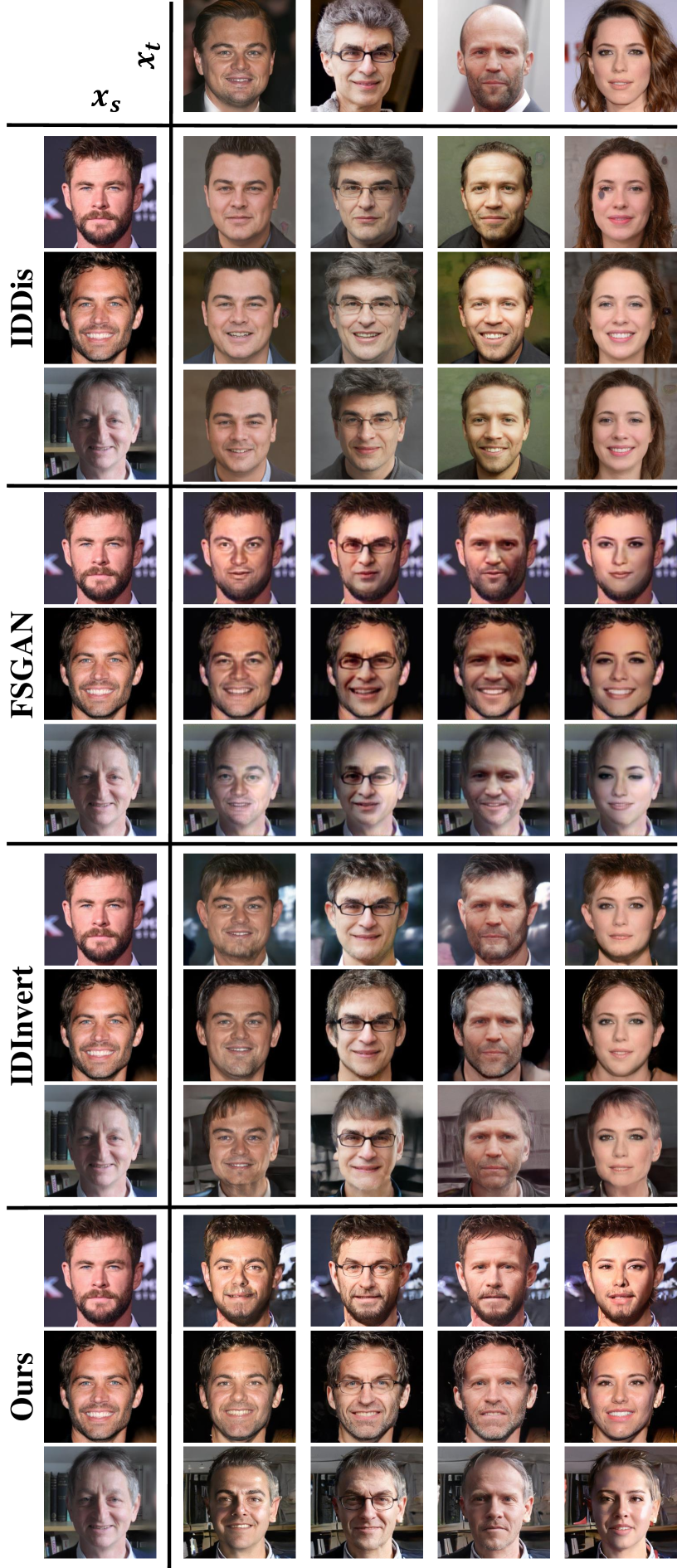

In this paragraph, we conduct quantitative and qualitative analyses of our model compared to the previous literature. Figure 7 illustrates the de-identified images based on the images used in Live Face (Gafni, Wolf, and Taigman 2019). The results of our model preserve the head pose, expression, skin tone, and hairstyle of the source face, while successfully obfuscating the identity. Also, Figure 8 illustrates the results of our model compared to APFD (Yan, Pei, and Nie 2019), which applies the k-same algorithm. The results of APFD are blurry and unnatural and lose the head pose, while our model successfully performs de-identification even with the k-same algorithm applied. Figure 9 illustrates the de-identified images of our model compared to those of other face-swapping models. IDDis (Nitzan et al. 2020) only preserves the head pose of the source image and disregards the rest of the non-identity attributes.

By effectively managing the trade-off between the ID and non-ID attributes, our model achieves exceptional performance in preserving the non-ID attributes, up to a level where they are almost identical when evaluated with the CelebA-HQ test set. The results in Table 1 show that our method produces the most realistic face images, as indicated by the FID score. For the de-identification performance measured by ID similarity, our method ranks second after IDDis. However, as shown by the attribute measure in Table 1 and in Figure 9, our method preserves the source attributes with high quality, while IDDis does not. Besides, the large changes in FID for “FSGAN + k-same” and “IDDis + k-same” imply that it is difficult to extend those methods to k-anonymity. More experiments on the comparative analysis are provided in the supplementary materials.

Ablation Study

| Model | Similarity | Attribute |
|---|---|---|
| FICGAN | 0.091 | 81.35 |
| w/o Spatial code | 0.008 | 74.49 |
| w/o Positive loss | 0.192 | 85.76 |
| w/o Negative loss | 0.312 | 89.02 |
| FICGAN + k-same | 0.142 | 85.44 |
| w/o Spatial code + k-same | 0.001 | 78.09 |
| w/o Positive loss + k-same | 0.189 | 87.01 |
| w/o Negative loss + k-same | 0.313 | 89.05 |

Figure 10 shows the effect of the proposed spatial code and the two types of contrastive verification loss (the negative loss and the positive loss). If the negative loss is absent, the model tends to maintain the identity of the source image, which indicates that the negative loss improves the quality of attribute disentanglement. Though less noticeable, the positive loss makes the swapped identity appear closer to the target identity. If the spatial code is replaced with a constant vector, the overall facial features become distorted and the non-identity attributes of the source image vanish. Table 2 reports further ablation cases. Without the spatial code, the model yields the lowest similarity but retains the fewest attributes, which indicates the importance of the spatial code in preserving the attributes of the source image. It also shows that employing both the negative and positive losses results in a higher quality of de-identification.

Conclusion

We propose FICGAN, which generates high-quality de-identified face images with robust privacy protection satisfying k-anonymity. By enabling detailed controllability over the trade-off between the degree of de-identification and the preservation of facial attributes, our method can generate diverse de-identified images without sacrificing image quality. We empirically demonstrate that our method outperforms other methods in various scenarios. Based on the analysis and discussion of the relationship between the ID and non-ID attributes, our method opens a new door for future work and for promising new applications; for example, a media director can protect the identity of interviewees while controlling the facial attributes in detail for various needs.

References

- Abudarham, Shkiller, and Yovel (2019) Abudarham, N.; Shkiller, L.; and Yovel, G. 2019. Critical features for face recognition. Cognition.

- Bao et al. (2018) Bao, J.; Chen, D.; Wen, F.; Li, H.; and Hua, G. 2018. Towards open-set identity preserving face synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition.

- Bitouk et al. (2008) Bitouk, D.; Kumar, N.; Dhillon, S.; Belhumeur, P.; and Nayar, S. K. 2008. Face swapping: automatically replacing faces in photographs. In ACM SIGGRAPH 2008 papers.

- Chi and Hu (2015) Chi, H.; and Hu, Y. H. 2015. Face de-identification using facial identity preserving features. In GlobalSIP.

- Dai and Lin (2017) Dai, B.; and Lin, D. 2017. Contrastive learning for image captioning. In Neural Information Processing Systems.

- Deng et al. (2019) Deng, J.; Guo, J.; Xue, N.; and Zafeiriou, S. 2019. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition.

- Gafni, Wolf, and Taigman (2019) Gafni, O.; Wolf, L.; and Taigman, Y. 2019. Live face de-identification in video. In Proceedings of the IEEE International Conference on Computer Vision.

- Goodfellow et al. (2014) Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; and Bengio, Y. 2014. Generative adversarial nets. Advances in neural information processing systems, 27.

- Gross et al. (2005) Gross, R.; Airoldi, E.; Malin, B.; and Sweeney, L. 2005. Integrating utility into face de-identification. In International Workshop on Privacy Enhancing Technologies.

- Gross et al. (2006) Gross, R.; Sweeney, L.; De la Torre, F.; and Baker, S. 2006. Model-based face de-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshop.

- Heusel et al. (2017) Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; and Hochreiter, S. 2017. Gans trained by a two time-scale update rule converge to a local nash equilibrium. In Neural Information Processing Systems.

- Huang and Belongie (2017) Huang, X.; and Belongie, S. 2017. Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision.

- Jourabloo, Yin, and Liu (2015) Jourabloo, A.; Yin, X.; and Liu, X. 2015. Attribute preserved face de-identification. In ICB.

- Kang and Park (2020) Kang, M.; and Park, J. 2020. ContraGAN: Contrastive Learning for Conditional Image Generation. In Neural Information Processing Systems.

- Karras, Laine, and Aila (2019) Karras, T.; Laine, S.; and Aila, T. 2019. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition.

- Kingma and Ba (2014) Kingma, D.; and Ba, J. 2014. Adam: A Method for Stochastic Optimization. International Conference on Learning Representations.

- Lee et al. (2020) Lee, C.-H.; Liu, Z.; Wu, L.; and Luo, P. 2020. MaskGAN: Towards Diverse and Interactive Facial Image Manipulation. In Proceedings of the IEEE conference on computer vision and pattern recognition.

- Li et al. (2019) Li, L.; Bao, J.; Yang, H.; Chen, D.; and Wen, F. 2019. Faceshifter: Towards high fidelity and occlusion aware face swapping. arXiv preprint arXiv:1912.13457.

- Lin et al. (2012) Lin, Y.; Lin, Q.; Tang, F.; and Wang, S. 2012. Face replacement with large-pose differences. In Proceedings of the 20th ACM international conference on Multimedia.

- Liu et al. (2017) Liu, W.; Wen, Y.; Yu, Z.; Li, M.; Raj, B.; and Song, L. 2017. Sphereface: Deep hypersphere embedding for face recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition.

- Mahajan, Chen, and Tsai (2017) Mahajan, S.; Chen, L.; and Tsai, T. 2017. SwapItUp: A Face Swap Application for Privacy Protection. In 2017 IEEE 31st International Conference on Advanced Information Networking and Applications (AINA).

- Newton, Sweeney, and Malin (2005) Newton, E. M.; Sweeney, L.; and Malin, B. 2005. Preserving privacy by de-identifying face images. IEEE transactions on Knowledge and Data Engineering, 17(2): 232–243.

- Nirkin, Keller, and Hassner (2019) Nirkin, Y.; Keller, Y.; and Hassner, T. 2019. Fsgan: Subject agnostic face swapping and reenactment. In Proceedings of the IEEE International Conference on Computer Vision, 7184–7193.

- Nitzan et al. (2020) Nitzan, Y.; Bermano, A.; Li, Y.; and Cohen-Or, D. 2020. Face identity disentanglement via latent space mapping. ACM Transactions on Graphics (TOG), 39(6): 1–14.

- Park et al. (2020) Park, T.; Zhu, J.-Y.; Wang, O.; Lu, J.; Shechtman, E.; Efros, A. A.; and Zhang, R. 2020. Swapping autoencoder for deep image manipulation. In Neural Information Processing Systems.

- Pidhorskyi, Adjeroh, and Doretto (2020) Pidhorskyi, S.; Adjeroh, D. A.; and Doretto, G. 2020. Adversarial latent autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition.

- Ribaric and Pavesic (2015) Ribaric, S.; and Pavesic, N. 2015. An overview of face de-identification in still images and videos. In 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG). IEEE.

- Richardson et al. (2020) Richardson, E.; Alaluf, Y.; Patashnik, O.; Nitzan, Y.; Azar, Y.; Shapiro, S.; and Cohen-Or, D. 2020. Encoding in style: a stylegan encoder for image-to-image translation. arXiv preprint arXiv:2008.00951.

- Sendik, Lischinski, and Cohen-Or (2019) Sendik, O.; Lischinski, D.; and Cohen-Or, D. 2019. What’s in a Face? Metric Learning for Face Characterization. In Computer Graphics Forum, volume 38, 405–416. Wiley Online Library.

- Senior (2009) Senior, A. 2009. Privacy protection in a video surveillance system. In Protecting Privacy in Video Surveillance.

- Sinha and Poggio (1996) Sinha, P.; and Poggio, T. 1996. I think I know that face… Nature.

- Sun et al. (2018a) Sun, Q.; Ma, L.; Oh, S. J.; Van Gool, L.; Schiele, B.; and Fritz, M. 2018a. Natural and effective obfuscation by head inpainting. In Proceedings of the IEEE conference on computer vision and pattern recognition.

- Sun et al. (2018b) Sun, Q.; Tewari, A.; Xu, W.; Fritz, M.; Theobalt, C.; and Schiele, B. 2018b. A hybrid model for identity obfuscation by face replacement. In European conference on computer vision.

- Thies, Zollhöfer, and Nießner (2019) Thies, J.; Zollhöfer, M.; and Nießner, M. 2019. Deferred neural rendering: Image synthesis using neural textures. ACM Transactions on Graphics (TOG), 38(4): 1–12.

- Thies et al. (2016) Thies, J.; Zollhöfer, M.; Stamminger, M.; Theobalt, C.; and Nießner, M. 2016. Face2face: Real-time face capture and reenactment of rgb videos. In Proceedings of the IEEE conference on computer vision and pattern recognition.

- Toseeb, Keeble, and Bryant (2012) Toseeb, U.; Keeble, D. R.; and Bryant, E. J. 2012. The significance of hair for face recognition. PloS one.

- Xiao et al. (2020) Xiao, T.; Tsai, Y.-H.; Sohn, K.; Chandraker, M.; and Yang, M.-H. 2020. Adversarial learning of privacy-preserving and task-oriented representations. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, 12434–12441.

- Yan, Pei, and Nie (2019) Yan, B.; Pei, M.; and Nie, Z. 2019. Attributes Preserving Face De-Identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops.

- Zhang et al. (2020) Zhang, H.; Zhang, Z.; Odena, A.; and Lee, H. 2020. Consistency regularization for generative adversarial networks. International Conference on Learning Representations.

- Zhang et al. (2018) Zhang, R.; Isola, P.; Efros, A. A.; Shechtman, E.; and Wang, O. 2018. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE conference on computer vision and pattern recognition, 586–595.

- Zhu et al. (2020a) Zhu, B.; Fang, H.; Sui, Y.; and Li, L. 2020a. Deepfakes for Medical Video De-Identification: Privacy Protection and Diagnostic Information Preservation. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, 414–420.

- Zhu et al. (2020b) Zhu, J.; Shen, Y.; Zhao, D.; and Zhou, B. 2020b. In-domain gan inversion for real image editing. In European conference on computer vision, 592–608. Springer.

FICGAN: Facial Identity Controllable GAN for De-identification

– Supplementary Material –

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c3be54cd-ca88-46ae-92ad-4dc976640317/x11.png)

Due to the limited space of the main manuscript, we provide additional details on the implementation, experimental results, and discussion in this supplementary material. First, Section A describes the training settings, such as the tools and hyperparameters. Then, Section B analyzes the experiments conducted to observe the role of each layer in the layer-wise control of attributes for de-identification. Next, Section C provides additional examples of the controllable and uncontrollable attributes during the de-identification process. Section D, Section E, and Section F then provide comparative analyses of our model and the existing methods on reconstruction, identity swapping, and the manifold k-same algorithm, respectively. Lastly, Section G discusses failure cases.

Appendix A Implementation Details

We resize the input images into , then the generated output images have the same size. For training, we use a single NVIDIA RTX 8000 with the batch size of and iterations. The encoder, generator, and discriminator networks are trained with the Adam optimizer (Kingma and Ba 2014) with the learning rate of . Also, the weights of loss are set to , , and , and for the manifold k-same algorithm, we set .

Appendix B Layer-wise Control of Attributes

In de-identification, the middle layers play a significant role in obscuring the identity, as shown in Figure A. Of the three groups of layers, the middle layers effectively control the glasses, mustache, and age attributes, while the low layers control only smiling. Hair color and style are mostly preserved unless all layers are modified. The faces are effectively de-identified in every layer group, even when the attributes are controlled layer-wise. For the highest degree of attribute preservation, it is most effective to modify the identity attributes in the low layers.
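The layer-wise control described above can be sketched as a selective swap of per-layer identity codes. This is only an illustrative sketch: the function `mix_layerwise`, the 12-layer layout, and the index ranges in `LAYER_GROUPS` are assumptions made for the example and are not taken from our implementation.

```python
from typing import Dict, List, Sequence

# Hypothetical grouping of generator layers into low / middle / high.
# The layer count and index ranges are assumptions for illustration only.
LAYER_GROUPS: Dict[str, range] = {
    "low": range(0, 4),
    "middle": range(4, 8),
    "high": range(8, 12),
}
NUM_LAYERS = 12


def mix_layerwise(
    source_codes: Sequence[Sequence[float]],
    target_codes: Sequence[Sequence[float]],
    groups: Sequence[str],
) -> List[List[float]]:
    """Use the target's identity code in the selected layer groups
    and keep the source's code in all other layers."""
    if len(source_codes) != NUM_LAYERS or len(target_codes) != NUM_LAYERS:
        raise ValueError(f"expected {NUM_LAYERS} per-layer codes for each input")

    # Collect the layer indices whose codes should come from the target.
    swap = set()
    for name in groups:
        swap.update(LAYER_GROUPS[name])

    return [
        list(target_codes[i]) if i in swap else list(source_codes[i])
        for i in range(NUM_LAYERS)
    ]


if __name__ == "__main__":
    source = [[0.0] for _ in range(NUM_LAYERS)]
    target = [[1.0] for _ in range(NUM_LAYERS)]
    mixed = mix_layerwise(source, target, ["middle"])
    print([code[0] for code in mixed])
```

Under these assumptions, passing `["low"]` corresponds to the setting that preserves the most source attributes, while passing all three group names modifies every layer.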

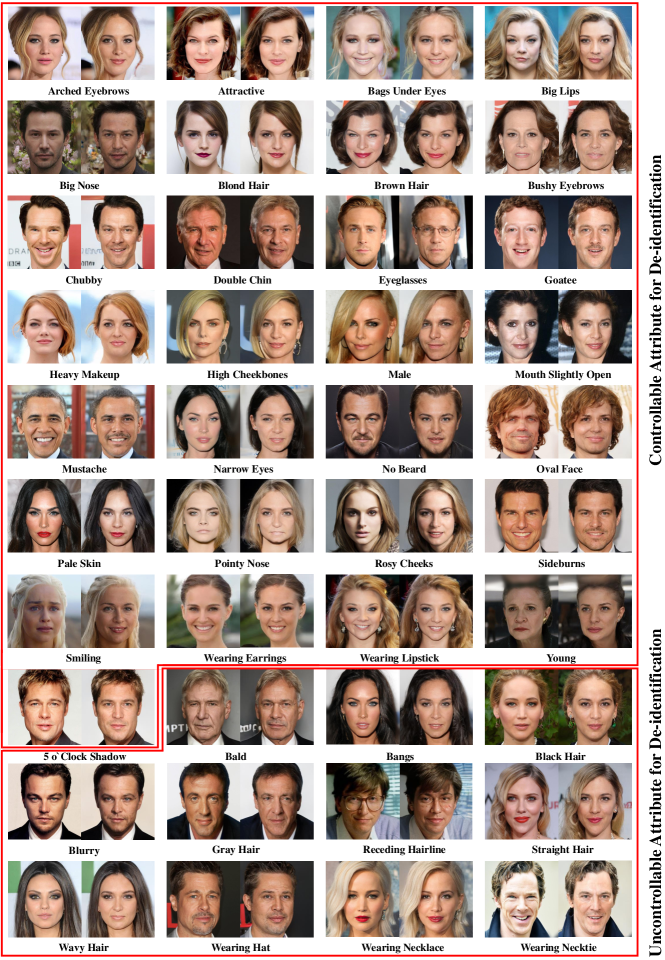

Appendix C Controllable Attributes

Figure B illustrates the controllable and uncontrollable attributes among the 40 attributes of CelebA-HQ (Lee et al. 2020). By disentangling the identity and non-identity attributes, FICGAN reveals which attributes the face recognition model (Liu et al. 2017) treats as related to identity. If an attribute to mix is treated as identity-related by the face recognition model, it is reflected in the face after the mixing process; if it is treated as unrelated to identity, it is excluded from the mixing process and the final result does not reflect it. As shown in the figure, most attributes are controllable, but those regarding hair and accessories, such as age-related hairstyle, hats, and necklaces, are unrelated to identity and thus uncontrollable by the model. However, certain attributes, such as smiling, wearing earrings, and lipstick, seem unrelated to identity but are controllable by the model, and vice versa. This indicates room for improvement in the face recognition model.

Appendix D Reconstruction

| Model | MSE | LPIPS | Similarity |
|---|---|---|---|
| ALAE (Pidhorskyi, Adjeroh, and Doretto 2020) | 0.15 | 0.32 | 0.06 |
| IDInvert (Zhu et al. 2020b) | 0.06 | 0.22 | 0.18 |
| pSp (Richardson et al. 2020) | 0.04 | 0.19 | 0.58 |
| FICGAN | 0.02 | 0.15 | 0.30 |

Table A compares the reconstruction ability of ALAE (Pidhorskyi, Adjeroh, and Doretto 2020), IDInvert (Zhu et al. 2020b), pSp (Richardson et al. 2020), and our model. Our model achieves the best (lowest) scores in Mean Squared Error (MSE) and Learned Perceptual Image Patch Similarity (LPIPS) (Zhang et al. 2018). Since the negative loss() used for training our model obscures the identity of the source image, our model yields a lower similarity score than pSp (Richardson et al. 2020) despite its better MSE and LPIPS. Figure C shows the images reconstructed by each model from the examples used in pSp (Richardson et al. 2020), illustrating our model’s reconstruction ability relative to the others.
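For reference, two of the metrics in Table A can be sketched in a few lines. The sketch below assumes that images are flattened into equal-length sequences of pixel values and that the similarity score is the cosine similarity between face recognition embeddings; the latter is an assumption made for illustration, since the exact definition is not restated here. LPIPS is omitted because it requires a pretrained network.

```python
import math
from typing import Sequence


def mse(image_a: Sequence[float], image_b: Sequence[float]) -> float:
    """Mean squared error between two flattened images (lower is better)."""
    if len(image_a) != len(image_b) or len(image_a) == 0:
        raise ValueError("images must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(image_a, image_b)) / len(image_a)


def cosine_similarity(emb_a: Sequence[float], emb_b: Sequence[float]) -> float:
    """Cosine similarity between two identity embeddings.
    Assumed here to be how an identity 'Similarity' score could be computed."""
    if len(emb_a) != len(emb_b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(emb_a, emb_b))
    norm_a = math.sqrt(sum(a * a for a in emb_a))
    norm_b = math.sqrt(sum(b * b for b in emb_b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    original = [0.0, 0.5, 1.0, 0.5]
    reconstructed = [0.1, 0.5, 0.9, 0.5]
    print("MSE:", mse(original, reconstructed))
    print("Similarity:", cosine_similarity([1.0, 0.0], [1.0, 1.0]))
```

Read this way, a lower MSE indicates a closer pixel-level reconstruction, whereas a lower similarity indicates that the reconstructed face is further from the source identity.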

Appendix E Identity Swapping

We provide more examples of identity swapping using various models and inputs. Figure D compares the identity swapping results of IDDis (Nitzan et al. 2020), FSGAN (Nirkin, Keller, and Hassner 2019), IDInvert (Zhu et al. 2020b), and our model, using the examples from IDInvert (Zhu et al. 2020b). Figure E shows the results of identity swapping using examples from FFHQ (Karras, Laine, and Aila 2019) that were unseen during the training phase. Our model produces the highest-quality results among the compared models, as shown by the illumination, shadows, and attributes retained from the source images. Our model precisely reflects the direction and size of shadows, while the others fail to do so. While IDDis (Nitzan et al. 2020) disentangles identity and modifies the hat, our model considers the hat unrelated to identity and thus excludes it from the result.

Since FSGAN (Nirkin, Keller, and Hassner 2019) fuses the face area of the target image with that of the source image for de-identification, it leaves the other areas of the image untouched; however, as shown in the first sample on the left, the eye area is distorted because the frame of the glasses extends outside the face area. IDInvert (Zhu et al. 2020b) diffuses the face of the source image into the target image for effective de-identification, but other attributes, such as hair, skin tone, and the background, are not preserved well. Compared to the other models, our model achieves high-quality face de-identification by effectively removing the source identity while preserving the non-identity attributes.

Appendix F Manifold k-same Algorithm

To compare the models’ performance with the manifold k-same algorithm as experimented in Figure 5, we conduct additional experiments by employing the average faces of males, males wearing glasses, and smiling people with pale skin as . The results of IDDis (Nitzan et al. 2020), FSGAN (Nirkin, Keller, and Hassner 2019), IDInvert (Zhu et al. 2020b), and our model with k-anonymity are shown in Figure F. The results of FSGAN (Nirkin, Keller, and Hassner 2019) and IDInvert (Zhu et al. 2020b) are blurry, while those of IDDis (Nitzan et al. 2020) and our model are sharp and clearly reflect the attributes . Compared to IDInvert (Zhu et al. 2020b), which modifies even the hair style, jaw line, and background, our model preserves the non-identity attributes of while effectively reflecting the identity of , which indicates superior controllability of our model.
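The k-anonymity guarantee behind the k-same family (Newton, Sweeney, and Malin 2005) can be illustrated with a short sketch that operates on identity codes instead of pixels. This is a generic k-same grouping under stated assumptions, not our exact manifold procedure: the function `k_same_codes`, the use of plain vectors as identity codes, and the Euclidean distance are all choices made for the example.

```python
import math
from typing import List, Sequence


def k_same_codes(codes: Sequence[Sequence[float]], k: int) -> List[List[float]]:
    """Replace each identity code with the average of a group of at least k
    codes, so that every surrogate code is shared by at least k inputs."""
    if k < 1 or len(codes) < k:
        raise ValueError("need at least k codes and k >= 1")

    dim = len(codes[0])
    remaining = list(range(len(codes)))
    surrogates: List[List[float]] = [[] for _ in codes]

    while remaining:
        if len(remaining) < 2 * k:
            # Too few codes left to form two groups of size k:
            # put all of them into one final group.
            group = list(remaining)
        else:
            # Take the first remaining code and its k - 1 nearest neighbours.
            anchor = codes[remaining[0]]
            ranked = sorted(remaining, key=lambda i: math.dist(anchor, codes[i]))
            group = ranked[:k]

        # Average the group in code space and assign it to every member.
        average = [sum(codes[i][d] for i in group) / len(group) for d in range(dim)]
        for i in group:
            surrogates[i] = list(average)
        remaining = [i for i in remaining if i not in group]

    return surrogates


if __name__ == "__main__":
    identity_codes = [[0.0, 0.0], [2.0, 0.0], [10.0, 10.0], [12.0, 10.0]]
    for code in k_same_codes(identity_codes, k=2):
        print(code)
```

Because each averaged code is assigned to at least k inputs in this sketch, a recognizer that matches a surrogate back to the input set cannot single out one source among the members of its group.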

Appendix G Failure Cases

Our method still faces challenges in certain cases. We observe that our model has difficulty with extreme poses or occlusions (masks, sunglasses, and unseen graphic effects such as text) that are not present in the training data. A more fundamental source of these failures is likely the encoder, which may not be able to accurately localize the facial features in such cases. Compared to previous methods that rely on separate landmark detection, our method is already more robust because it does not accumulate errors from a separate detection stage. Our method may be further improved if such extreme data become available. We leave this for future research.