fRegGAN with K-space Loss Regularization for Medical Image Translation

Abstract

Generative adversarial networks (GANs) have shown remarkable success in generating realistic images and are increasingly used in medical imaging for image-to-image translation tasks. However, GANs tend to suffer from a frequency bias towards low frequencies, which can lead to the removal of important structures in the generated images. To address this issue, we propose a novel frequency-aware image-to-image translation framework based on the supervised RegGAN approach, which we call fRegGAN. The framework employs a K-space loss to regularize the frequency content of the generated images. It incorporates well-known properties of MRI K-space geometry to guide the network training process. By combining our method with the RegGAN approach, we can mitigate the effect of training with misaligned data and frequency bias at the same time. We evaluate our method on the public BraTS dataset and outperform the baseline methods in quantitative and qualitative metrics when synthesizing T2-weighted from T1-weighted MR images. Detailed ablation studies are provided to understand the effect of each modification on the final performance. The proposed method is a step toward improving the performance of image-to-image translation and synthesis in the medical domain.

1 Introduction

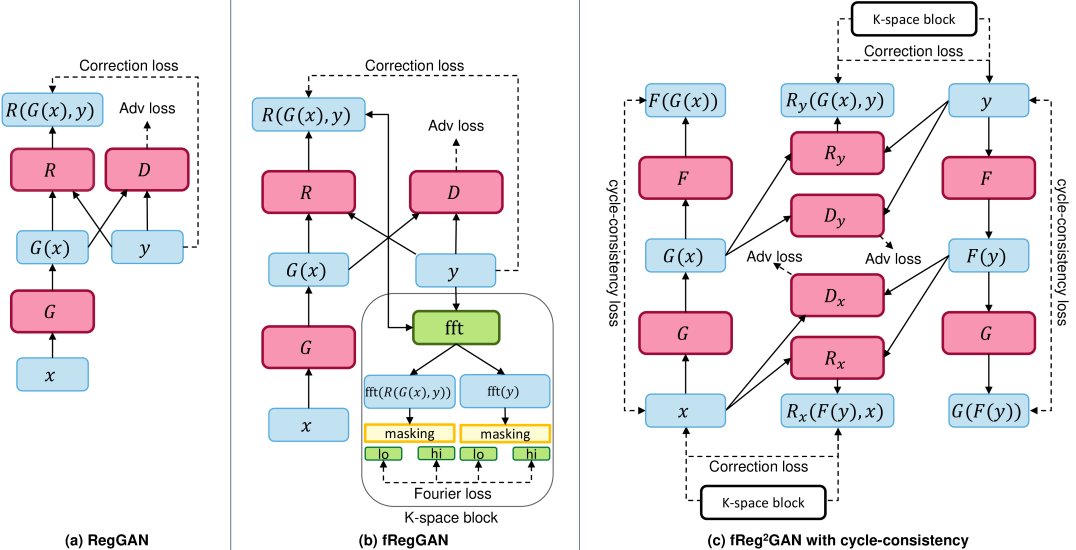

Generative adversarial networks (GANs)[1] have gained much attention in the last few years due to their ability to generate realistic images. In the medical imaging field, they are often used for image-to-image translation problems, such as mapping magnetic resonance imaging (MRI) to computed tomography (CT) images[2, 3], T1-weighted to T2-weighted MRI[4, 5], or low- to high-dose contrast-enhanced MRI[6, 7]. Such approaches could reduce healthcare costs and patient burden while maintaining or even improving the diagnostic value of a modality. While GANs have shown promising results in these tasks, they tend to suffer from a frequency bias towards low frequencies[8]. This is especially problematic for medical image-to-image translation, where preserving the high-frequency content of the images (e.g., edges) is crucial. Local errors in the generated images can remove essential structures, such as lesions, which can significantly impact downstream tasks. To address this problem, we propose a novel frequency-aware image-to-image translation framework based on the supervised RegGAN framework[9]. We employ a K-space loss that regularizes the frequency content of the generated images (see Figure 1).

Obtaining well-aligned data is often challenging in medical image-to-image translation. Common misalignments, such as poor registration of source and target domain samples, can significantly degrade the performance of supervised approaches like Pix2Pix[10], which rely heavily on pixel-wise reconstruction losses. While unsupervised training methods like CycleGAN[11] can alleviate this issue, they generally perform worse than supervised methods. Kong et al.[9] proposed the supervised RegGAN approach for medical image-to-image translation. In the RegGAN framework, the misaligned target images are treated as noisy labels, and the generator is trained together with an additional registration network that estimates a displacement vector field to adaptively fit the misalignment noise distribution. We are not the first to use the frequency domain to improve the performance of GANs, but to the best of our knowledge, our work is the first to apply it to medical image-to-image translation and to combine it with RegGAN. In image processing and generation, several recent studies[12, 13, 14] have explored the frequency domain to improve the performance of various models. Closest to our idea is the work of Cai et al.[15], who proposed a frequency domain image translation framework. It exploits frequency information by adding multiple losses on frequency-transformed images to the optimization problem of the generator. On natural images, they showed that their method improves the performance of image-to-image translation and synthesis. An open question is whether such frequency losses can be combined with cycle consistency and applied to medical images. We summarize our contributions as follows:

1. We further investigate the RegGAN approach by Kong et al.[9] through generally applicable modifications to the architecture and training procedure. We extend their CycleGAN-based variant by adding a second registration network, which we call Reg2CycleGAN.

2. We incorporate constraints in the frequency domain (K-space) to regularize and guide the network training process. This regularization is motivated by MRI acquisition and reconstruction methods, which leverage the distribution of image feature information in K-space to reduce noise and/or accelerate image acquisition.

3. We evaluate our proposed method on the publicly available BraTS dataset. The results show that our method outperforms the baseline methods (i.e., RegGAN and CycleGAN) in quantitative and qualitative metrics. We also provide detailed ablation studies to understand the effect of each modification on the final performance. Our proposed method is a step towards improving image-to-image translation and synthesis in the medical domain and bringing GAN-based methods closer to clinical application.

2 Methods

This section begins with a brief overview of how vanilla GANs[1] are formulated and then summarizes later improvements such as LSGAN[16] and RegGAN[9]. Afterward, we describe our new K-space loss regularization and its integration into RegGAN and CycleGAN. GANs are generative models composed of two networks, a generator $G$ and a discriminator $D$. The generator learns a mapping $G: X \to Y$ from a source domain $X$ to a target domain $Y$ such that $G(x)$ is close to $y$ w.r.t. a specific metric. The discriminator $D$ is a binary classifier trained to distinguish between real images $y$ and generated images $G(x)$. Here, $\{x_i\}_{i=1}^{N}$ and $\{y_i\}_{i=1}^{N}$ denote sets of paired images, where $x_i$ is an image in the source domain $X$ and $y_i$ is the corresponding image in the target domain $Y$. Following the notation of Kong et al.[9], the vanilla GAN optimization problem is:

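$$\min_{G}\max_{D}\ \mathcal{L}_{\mathrm{Adv}}(G, D) = \mathbb{E}_{y}\left[\log D(y)\right] + \mathbb{E}_{x}\left[\log\left(1 - D(G(x))\right)\right] \tag{1}$$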

Since $G$ and $D$ cannot be optimized simultaneously, the optimization problem is solved by alternating updates of $D$ and $G$[1]. The vanilla GAN training procedure suffers from stability issues, which motivated several modifications. The most relevant for our experiments is the Least Squares GAN (LSGAN)[16], which replaces the binary cross-entropy loss with a mean squared error loss, resulting in the optimization task:

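$$\begin{aligned}
\min_{D}\ \mathcal{L}_{\mathrm{LSGAN}}(D) &= \tfrac{1}{2}\,\mathbb{E}_{y}\left[(D(y) - 1)^2\right] + \tfrac{1}{2}\,\mathbb{E}_{x}\left[D(G(x))^2\right],\\
\min_{G}\ \mathcal{L}_{\mathrm{LSGAN}}(G) &= \tfrac{1}{2}\,\mathbb{E}_{x}\left[(D(G(x)) - 1)^2\right]
\end{aligned} \tag{2}$$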
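To connect Eqs. (1) and (2) to code, the following NumPy sketch evaluates both adversarial objectives on a batch of discriminator outputs. It assumes that `d_real` and `d_fake` hold $D(y)$ and $D(G(x))$ (probabilities for the vanilla GAN, unbounded scores for the LSGAN); the function names are hypothetical, and this is not the authors' PyTorch implementation.

```python
import numpy as np

EPS = 1e-12  # guards log(0) in the cross-entropy terms


def vanilla_d_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Negated discriminator objective of Eq. (1); d_* are probabilities in (0, 1)."""
    return float(-np.mean(np.log(d_real + EPS)) - np.mean(np.log(1.0 - d_fake + EPS)))


def vanilla_g_loss(d_fake: np.ndarray) -> float:
    """Generator term of Eq. (1): G minimises E[log(1 - D(G(x)))]."""
    return float(np.mean(np.log(1.0 - d_fake + EPS)))


def lsgan_d_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """LSGAN discriminator loss of Eq. (2): real scores -> 1, fake scores -> 0."""
    return float(0.5 * np.mean((d_real - 1.0) ** 2) + 0.5 * np.mean(d_fake ** 2))


def lsgan_g_loss(d_fake: np.ndarray) -> float:
    """LSGAN generator loss of Eq. (2): push D(G(x)) towards 1."""
    return float(0.5 * np.mean((d_fake - 1.0) ** 2))
```

With a perfect discriminator ($D(y) = 1$, $D(G(x)) = 0$), `lsgan_d_loss` vanishes while `lsgan_g_loss` is 0.5. The quadratic penalty keeps a non-zero gradient for the generator even when the discriminator is confident, which is one commonly cited reason for the more stable training of LSGAN.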

Kong et al.[9] analyzed the robustness of Pix2Pix[10], which uses an L1 loss for supervision, in the presence of misaligned source and target domain images. Based on their experiments on the publicly available BraTS benchmark, they concluded that the L1 reconstruction term only works well with well-aligned images, which are often unavailable in medical imaging. Therefore, RegGAN replaces the L1 loss with the following correction loss:

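$$\min_{G,R}\ \mathcal{L}_{\mathrm{Corr}}(G, R) = \mathbb{E}_{x,\tilde{y}}\left[\left\lVert \tilde{y} - G(x) \circ R(G(x), \tilde{y}) \right\rVert_1\right] \tag{3}$$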

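Here, $\tilde{y}$ denotes the (possibly misaligned) target image, $R(G(x), \tilde{y})$ denotes a deformation vector field (DVF) estimated from $G(x)$ and $\tilde{y}$ by a registration network $R$ based on VoxelMorph[17], and $\circ$ is the resampling operator. Adding a smoothness constraint on the DVF, the final RegGAN optimization problem is formulated as follows: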

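$$\begin{aligned}
\mathcal{L}_{\mathrm{Smooth}}(R) &= \mathbb{E}_{x,\tilde{y}}\left[\left\lVert \nabla R(G(x), \tilde{y}) \right\rVert_2^2\right],\\
\min_{G,R}\max_{D}\ & \lambda_{\mathrm{Corr}}\,\mathcal{L}_{\mathrm{Corr}}(G,R) + \lambda_{\mathrm{Smooth}}\,\mathcal{L}_{\mathrm{Smooth}}(R) + \lambda_{\mathrm{Adv}}\,\mathcal{L}_{\mathrm{Adv}}(G,D)
\end{aligned} \tag{4}$$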
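To make Eqs. (3) and (4) concrete, here is a NumPy sketch of the correction and smoothness losses for a single 2D slice. It assumes the DVF is already given as an array of shape (2, H, W); in RegGAN it would be predicted by the registration network $R$, which is not modeled here. The helper names, the bilinear resampler with border clamping, and the forward-difference smoothness term are illustrative choices, not the reference implementation.

```python
import numpy as np


def warp_bilinear(img: np.ndarray, dvf: np.ndarray) -> np.ndarray:
    """Resample a 2D image with a dense displacement field.

    img: (H, W); dvf: (2, H, W) holding (dy, dx) per pixel.
    Output pixel (i, j) samples img at (i + dy, j + dx) with bilinear
    interpolation; coordinates are clamped to the image border.
    """
    h, w = img.shape
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    y = np.clip(yy + dvf[0], 0, h - 1)
    x = np.clip(xx + dvf[1], 0, w - 1)
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = y - y0
    wx = x - x0
    top = (1 - wx) * img[y0, x0] + wx * img[y0, x1]
    bottom = (1 - wx) * img[y1, x0] + wx * img[y1, x1]
    return (1 - wy) * top + wy * bottom


def correction_loss(fake: np.ndarray, target_noisy: np.ndarray, dvf: np.ndarray) -> float:
    """Mean L1 distance between the noisy target and the registered prediction (Eq. 3)."""
    return float(np.mean(np.abs(target_noisy - warp_bilinear(fake, dvf))))


def smoothness_loss(dvf: np.ndarray) -> float:
    """Mean squared forward-difference gradient of the DVF (smoothness term of Eq. 4)."""
    dy = np.diff(dvf, axis=1)
    dx = np.diff(dvf, axis=2)
    return float(np.mean(dy ** 2) + np.mean(dx ** 2))
```

For example, if the noisy target is the prediction shifted by one pixel along x, a DVF with $dx = 1$ everywhere drives the correction loss to zero (up to border handling), whereas the zero field leaves a residual error. Because the registration network absorbs the shift, the generator is no longer penalized for the misalignment itself, and the smoothness term keeps the estimated field from becoming arbitrarily irregular.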

For unsupervised image-to-image translation, Zhu et al.[11] proposed using two generators $G: X \to Y$ and $F: Y \to X$ and two discriminators $D_X$ and $D_Y$, together with a cycle consistency loss $\mathcal{L}_{\mathrm{cyc}}$. In additional experiments, they showed that an identity loss $\mathcal{L}_{\mathrm{id}}$ helps to preserve content information. All four models are trained jointly, and the optimization problem for the generators is formulated as follows:

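$$\min_{G,F}\ \mathcal{L}_{\mathrm{Adv}}(G, D_Y) + \mathcal{L}_{\mathrm{Adv}}(F, D_X) + \lambda_{\mathrm{cyc}}\,\mathcal{L}_{\mathrm{cyc}}(G,F) + \lambda_{\mathrm{id}}\,\mathcal{L}_{\mathrm{id}}(G,F), \tag{5}$$

with

$$\mathcal{L}_{\mathrm{cyc}}(G,F) = \mathbb{E}_{x}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y}\left[\lVert G(F(y)) - y \rVert_1\right], \qquad \mathcal{L}_{\mathrm{id}}(G,F) = \mathbb{E}_{y}\left[\lVert G(y) - y \rVert_1\right] + \mathbb{E}_{x}\left[\lVert F(x) - x \rVert_1\right].$$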
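The cycle consistency and identity terms of Eq. (5) can be sketched in a few lines of NumPy. The generators are passed in as plain callables (placeholders for the networks $G$ and $F$), and all names are hypothetical.

```python
import numpy as np
from typing import Callable

Image = np.ndarray
Generator = Callable[[Image], Image]


def l1(a: Image, b: Image) -> float:
    """Mean absolute difference between two images."""
    return float(np.mean(np.abs(a - b)))


def cycle_loss(G: Generator, F: Generator, x: Image, y: Image) -> float:
    """Forward (x -> G -> F) plus backward (y -> F -> G) reconstruction error."""
    return l1(F(G(x)), x) + l1(G(F(y)), y)


def identity_loss(G: Generator, F: Generator, x: Image, y: Image) -> float:
    """A generator fed an image already in its output domain should leave it unchanged."""
    return l1(G(y), y) + l1(F(x), x)
```

For instance, with the toy intensity mappings `G = lambda im: 2.0 * im` and `F = lambda im: 0.5 * im`, the two mappings invert each other, so `cycle_loss` is zero, yet `identity_loss` is positive because each generator still rescales images that are already in its output domain. This is why the two terms complement each other.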

Kong et al.[9] also proposed combining cycle consistency with their registration loss

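$$\min_{G,F,R}\ \mathcal{L}_{\mathrm{Adv}}(G, D_Y) + \mathcal{L}_{\mathrm{Adv}}(F, D_X) + \lambda_{\mathrm{cyc}}\,\mathcal{L}_{\mathrm{cyc}}(G,F) + \lambda_{\mathrm{id}}\,\mathcal{L}_{\mathrm{id}}(G,F) + \lambda_{\mathrm{Corr}}\,\mathcal{L}_{\mathrm{Corr}}(G,R) + \lambda_{\mathrm{Smooth}}\,\mathcal{L}_{\mathrm{Smooth}}(R), \tag{6}$$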

which turns the unsupervised training task into a supervised one. This restricts the set of admissible solutions for the generators and improves the overall image quality.

2.1 Reg2CycleGAN

To further improve the registration and the supervision of the generators in Equation (6), we propose an extension of the existing method. As shown in Figure 1, we extend the registration loss to the generator $F$ by introducing a second registration network $R_2$. Both $G$ and $F$ suffer from the same data misalignment, which can degrade their performance when they are trained with a supervised loss. With the modified optimization problem, both generators are subjected to the same level of registration and supervision, which we expect to improve the stability and reliability of the generated images. Moreover, cycle consistency couples the optimization of both generators, so improvements to one are likely to carry over to the other: not only should $F$ benefit directly from the additional registration loss, but $G$ may also benefit indirectly. We formulate the optimization problem as:

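$$\begin{aligned}
\min_{G,F,R_1,R_2}\ & \mathcal{L}_{\mathrm{Adv}}(G, D_Y) + \mathcal{L}_{\mathrm{Adv}}(F, D_X) + \lambda_{\mathrm{cyc}}\,\mathcal{L}_{\mathrm{cyc}}(G,F) + \lambda_{\mathrm{id}}\,\mathcal{L}_{\mathrm{id}}(G,F)\\
& + \lambda_{\mathrm{Corr}}\left[\mathcal{L}_{\mathrm{Corr}}(G,R_1) + \mathcal{L}_{\mathrm{Corr}}(F,R_2)\right] + \lambda_{\mathrm{Smooth}}\left[\mathcal{L}_{\mathrm{Smooth}}(R_1) + \mathcal{L}_{\mathrm{Smooth}}(R_2)\right],
\end{aligned} \tag{7}$$

where $R_1$ is the registration network of Equation (6), $\mathcal{L}_{\mathrm{Corr}}(F,R_2) = \mathbb{E}_{x,\tilde{y}}\left[\lVert x - F(\tilde{y}) \circ R_2(F(\tilde{y}), x) \rVert_1\right]$ is the correction loss of Equation (3) applied in the reverse direction, and $\mathcal{L}_{\mathrm{Smooth}}(R_2)$ is defined analogously to Equation (4).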
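The following sketch lists the non-adversarial terms of Eq. (7) to show which generator each registration network supervises. It is a simplification under stated assumptions: the registration networks $R_1$ and $R_2$ are placeholder callables returning a (2, H, W) DVF, resampling uses nearest-neighbour interpolation instead of the bilinear resampling typically used with VoxelMorph, and the adversarial terms are omitted. All names are hypothetical.

```python
import numpy as np


def warp_nearest(img, dvf):
    """Resample img at (i + dy, j + dx) using nearest neighbours and border clamping."""
    h, w = img.shape
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    iy = np.clip(np.rint(yy + dvf[0]).astype(int), 0, h - 1)
    ix = np.clip(np.rint(xx + dvf[1]).astype(int), 0, w - 1)
    return img[iy, ix]


def l1(a, b):
    """Mean absolute difference between two images."""
    return float(np.mean(np.abs(a - b)))


def smooth(dvf):
    """Mean squared forward differences of a (2, H, W) displacement field."""
    return float(np.mean(np.diff(dvf, axis=1) ** 2) + np.mean(np.diff(dvf, axis=2) ** 2))


def reg2cycle_supervised_terms(G, F, R1, R2, x, y_noisy):
    """Non-adversarial terms of the Reg2CycleGAN objective for one (x, y_noisy) pair."""
    fake_y = G(x)               # source -> target (e.g. T1w -> T2w)
    fake_x = F(y_noisy)         # target -> source
    dvf1 = R1(fake_y, y_noisy)  # aligns G(x) with the misaligned target
    dvf2 = R2(fake_x, x)        # second registration network: aligns F(y) with x
    return {
        "cyc": l1(F(fake_y), x) + l1(G(fake_x), y_noisy),
        "id": l1(G(y_noisy), y_noisy) + l1(F(x), x),
        "corr_G": l1(y_noisy, warp_nearest(fake_y, dvf1)),
        "corr_F": l1(x, warp_nearest(fake_x, dvf2)),
        "smooth_R1": smooth(dvf1),
        "smooth_R2": smooth(dvf2),
    }


def weighted_total(terms, weights):
    """Weighted sum of the loss terms (the weights are hypothetical hyperparameters)."""
    return sum(weights[name] * value for name, value in terms.items())
```

The design point is the symmetry: `corr_G` registers $G(x)$ to the misaligned target $\tilde{y}$, as in RegCycleGAN, while the additional `corr_F` registers $F(\tilde{y})$ to $x$. Without $R_2$, the generator $F$ receives no registration-corrected paired supervision.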

2.2 Frequency regularization

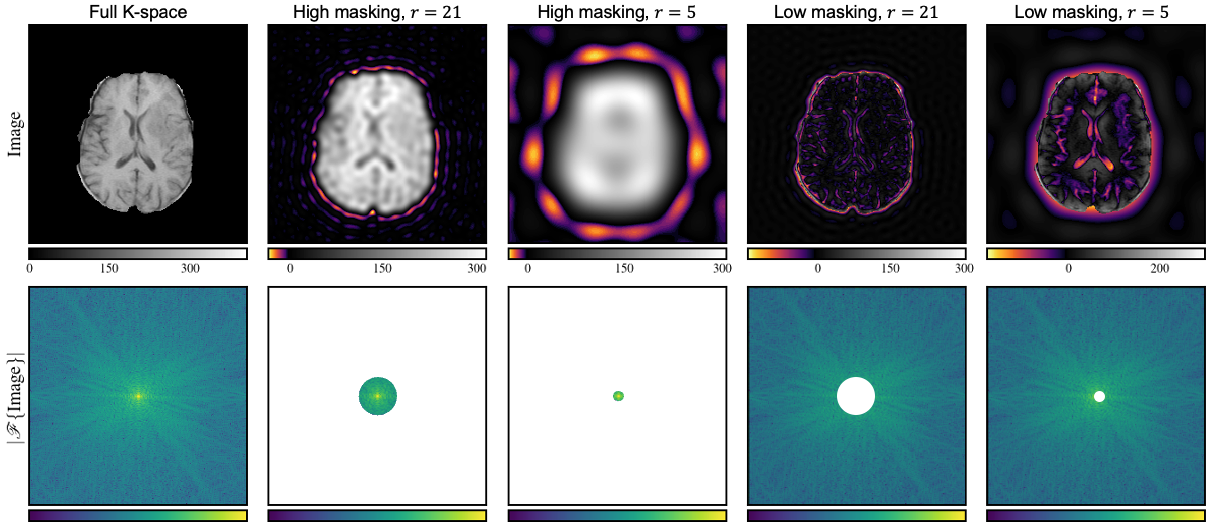

The proposed frequency regularization is motivated by MRI, where the raw signal is acquired in the frequency domain and organized in K-space. An inverse Fourier transform of the K-space yields the final image. The information in K-space is represented by complex-valued coefficients of the basis functions (waveforms) into which the reconstructed image is decomposed. Coefficients near the center of K-space correspond to low-frequency components that capture a large proportion of the image's contrast and texture information. In contrast, coefficients near the outer boundary of K-space correspond to high-frequency features of the image, such as edges. In MRI pulse sequence development, this knowledge about the information content of different K-space regions is crucial; for example, it leads to a preference for acquiring the central K-space region when acquisition time is limited. Another essential property of the waveform basis functions that further motivates the proposed regularization is their non-locality: local changes of image voxel values affect all K-space coefficients, and local changes of K-space coefficients affect all voxel values. We define the frequency loss as the mean L1 distance between the magnitude of the discrete Fourier transform $\mathcal{F}(G(x))$ of the generated image $G(x)$ and the magnitude of the discrete Fourier transform $\mathcal{F}(y)$ of the target image $y$. In addition, we use a binary mask $M_r$, in which the circular region of radius $r$ around the K-space center (the origin of the frequency domain) is set to 1, its inverse $\bar{M}_r = 1 - M_r$, and a weighting factor $\lambda_w \in [0, 1]$. In our experiments below, we consider three scenarios for $\lambda_w$, which penalize only the low frequencies, only the high frequencies, or all frequencies (see Section 3). The frequency loss is then defined as

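$$\mathcal{L}_{\mathrm{freq}}(G) = \mathbb{E}_{x,y}\left[\operatorname{mean}\left\lvert W \odot \left(\lvert\mathcal{F}(G(x))\rvert - \lvert\mathcal{F}(y)\rvert\right)\right\rvert\right], \qquad W = \lambda_w M_r + (1 - \lambda_w)\,\bar{M}_r \tag{8}$$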

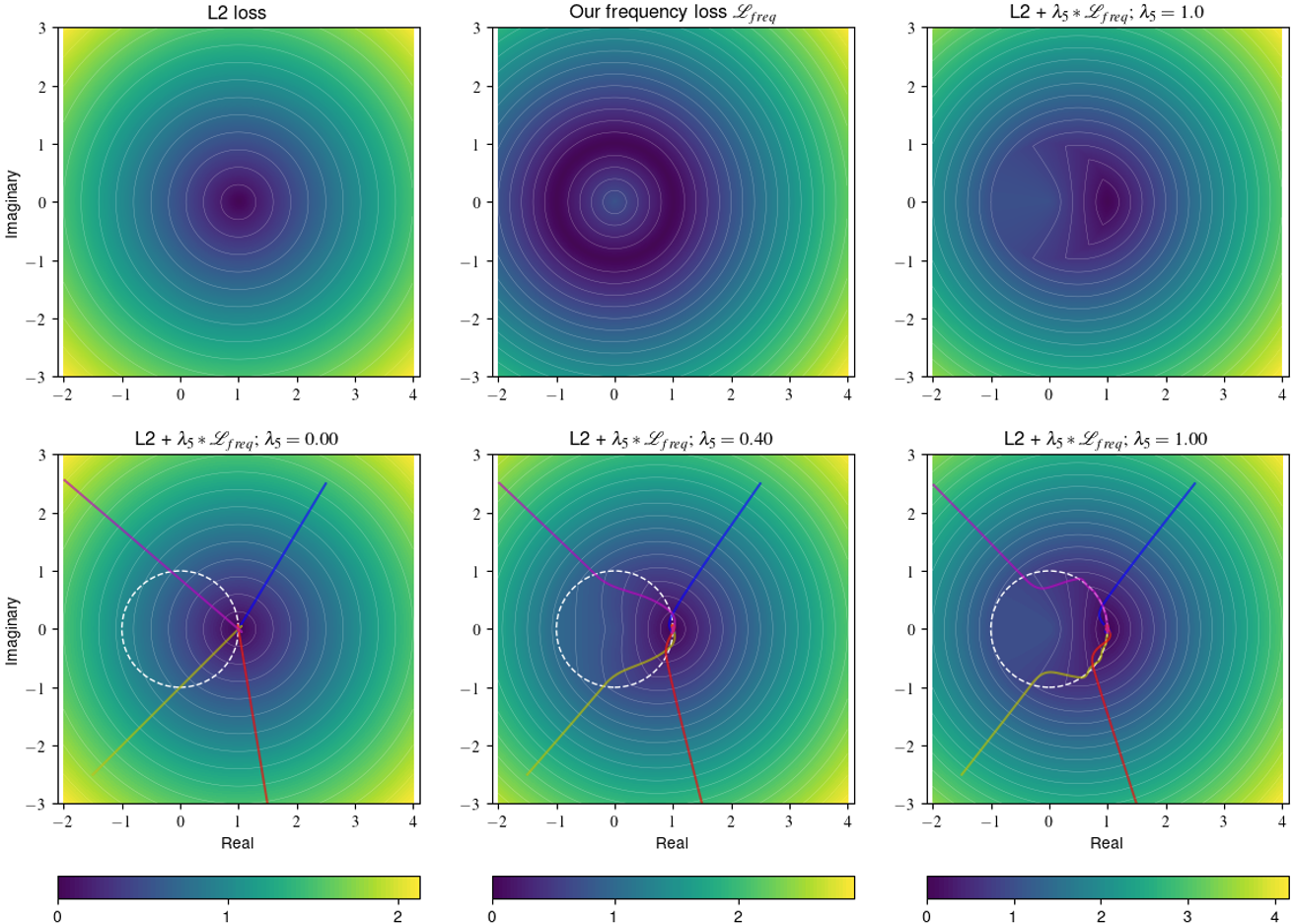

where $\odot$ denotes the Hadamard product, $\lvert\cdot\rvert$ the element-wise absolute value of the complex tensor, and $W$ our selected window function. This choice of $W$ penalizes deviations of the low- and high-frequency components separately: the radius $r$ controls the boundary between the two regions, whereas the weighting factor $\lambda_w$ balances their impact. Other choices of $W$ are possible, e.g., a Gaussian kernel or a Hann window. To provide more intuition about our choice of window function, Figure 2 shows the effect of K-space masking with a variable radius. Masking the high-frequency components leads to a blurry image, whereas masking the low-frequency components leads to a loss of contrast and texture information.

Our frequency loss (8) has some beneficial properties compared to losses in the image domain. First, $\mathcal{L}_{\mathrm{freq}}$ is invariant to (circular) translations, because we only consider the magnitude and a translation only changes the phase. Second, it is more sensitive to blur than to mild noise, because blur strongly attenuates the high-frequency components. To further study the effect of the proposed frequency regularization, we compare a simple L2 loss with our frequency loss and with the combination of both. Note that, by Plancherel's theorem, the L2 loss is equivalent in the image and frequency domains (up to a normalization constant). In the top row of Figure 3, we compute the loss value for a complex target point $z^*$ and various predictions $z$; the x-axis shows the real part, the y-axis the imaginary part, and the color the loss value. The L2 loss has circular level sets and increases as we move away from its unique minimum $z^*$. In contrast, the frequency loss has infinitely many minimizers: all points with the same magnitude as the target point, i.e., all points on the circle $\lvert z\rvert = \lvert z^*\rvert$. In the bottom row of Figure 3, we show gradient descent with momentum for four different starting points.
The white circle corresponds to $\lvert z\rvert = \lvert z^*\rvert$, i.e., the optimal solutions of our frequency loss, and the star marks the minimum of the L2 loss. We combine the L2 and the frequency loss using different weighting factors $\alpha$. For $\alpha = 0$, the frequency loss is not considered, and the optimization converges to the L2 minimum. When the frequency loss is added ($\alpha > 0$), it acts as a regularizer: the optimization is first drawn towards the minimizers of the frequency loss and then follows this manifold to the optimal solution $z^*$. The higher $\alpha$, the more strongly the optimization is drawn to the circle $\lvert z\rvert = \lvert z^*\rvert$ and takes gradient steps along it. Like the other supervised losses, the frequency loss cannot be used directly if the data pair is not well aligned (e.g., under rotation). In this case, we therefore replace the generated image $G(x)$ with its registered version $G(x) \circ R(G(x), \tilde{y})$. In our experiments, we add the frequency loss terms to the total loss of the GAN and CycleGAN training, which yields the final fRegGAN and fReg2CycleGAN architectures depicted in Figure 1.
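A NumPy sketch of the frequency loss in Eq. (8) for a single 2D slice, assuming centered spectra (via `np.fft.fftshift`) so that the mask $M_r$ is a disc around the array center. The argument `lam` plays the role of $\lambda_w$; the function names, the unnormalized FFT, and the absence of batching are our assumptions, and the authors' PyTorch implementation may differ. When training with misaligned pairs, `fake` would be the registered prediction $G(x) \circ R(G(x), \tilde{y})$.

```python
import numpy as np


def circular_mask(shape, radius):
    """Binary mask M_r: 1 inside a disc of the given radius around the K-space centre."""
    h, w = shape
    yy, xx = np.meshgrid(np.arange(h) - h // 2, np.arange(w) - w // 2, indexing="ij")
    return (yy ** 2 + xx ** 2 <= radius ** 2).astype(float)


def centered_kspace(img):
    """2D DFT with the zero frequency shifted to the array centre."""
    return np.fft.fftshift(np.fft.fft2(img))


def frequency_loss(fake, target, radius, lam):
    """Mean L1 distance of K-space magnitudes, weighted by W = lam*M_r + (1-lam)*(1-M_r)."""
    m = circular_mask(fake.shape, radius)
    window = lam * m + (1.0 - lam) * (1.0 - m)
    diff = np.abs(centered_kspace(fake)) - np.abs(centered_kspace(target))
    return float(np.mean(np.abs(window * diff)))


def lowpass(img, radius):
    """Keep only the central K-space disc (cf. Figure 2) and transform back to image space."""
    k = centered_kspace(img) * circular_mask(img.shape, radius)
    return np.real(np.fft.ifft2(np.fft.ifftshift(k)))
```

Two properties discussed above can be checked directly: a circularly shifted copy of an image (`np.roll`) has (numerically) zero frequency loss, because the shift only changes the phase, whereas a low-pass version produced by `lowpass` is penalized as soon as the high-frequency region enters the window (`lam < 1`).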

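The toy experiment of Figure 3 can be reproduced in spirit with a few lines of NumPy, treating a complex number as a point in $\mathbb{R}^2$. To keep the gradient smooth, this sketch uses the squared magnitude difference $(\lvert z\rvert - \lvert z^*\rvert)^2$ instead of the absolute difference of Eq. (8), and combines it with the L2 loss as $(1-\alpha)\lvert z - z^*\rvert^2 + \alpha(\lvert z\rvert - \lvert z^*\rvert)^2$. This weighting scheme, the learning rate, the momentum, and the starting points are our assumptions, not the settings behind Figure 3.

```python
import numpy as np


def combined_grad(z, z_star, alpha):
    """Gradient of (1 - alpha)*|z - z*|^2 + alpha*(|z| - |z*|)^2 w.r.t. z in R^2.

    The magnitude term is not differentiable at z = 0, so z must stay away from the origin.
    """
    r = np.linalg.norm(z)
    grad_l2 = 2.0 * (z - z_star)
    grad_mag = 2.0 * (r - np.linalg.norm(z_star)) * z / r
    return (1.0 - alpha) * grad_l2 + alpha * grad_mag


def momentum_descent(z0, z_star, alpha, lr=0.05, beta=0.9, steps=3000):
    """Heavy-ball gradient descent; returns the trajectory with shape (steps + 1, 2)."""
    z = np.asarray(z0, dtype=float)
    v = np.zeros(2)
    path = [z.copy()]
    for _ in range(steps):
        v = beta * v - lr * combined_grad(z, z_star, alpha)
        z = z + v
        path.append(z.copy())
    return np.array(path)
```

Starting from $z_0 = (-2, 0.5)$ with $z^* = (1, 1)$, the runs with $\alpha = 0$ and $\alpha = 0.5$ both end at $z^*$, whereas $\alpha = 1$ (magnitude term only) stops on the circle $\lvert z\rvert = \lvert z^*\rvert$ along the ray through $z_0$, far from $z^*$. This mirrors the observation that the frequency term alone has a whole circle of minimizers and acts as a regularizer only in combination with the L2 loss.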
3 Experiments and Results

We perform two sets of experiments. First, we establish a baseline and investigate only our changes leading to the Reg2CycleGAN. Second, we explore the combination of RegGAN and Reg2CycleGAN with our K-space loss (see Equation (8)). All models are trained on the public BraTS 2021 dataset[18, 19, 20], which contains 1251 MRI scans of patients with brain tumors. The dataset contains four different modalities, of which we only use T1w and T2w to train our models. All MRI scans are provided as NIfTI files and were acquired with different clinical protocols and various scanners at multiple data-contributing institutions. In addition, all volumes are pre-processed, i.e., co-registered to the same anatomical template, interpolated to the same resolution, and skull-stripped. Hence, the dataset is well aligned, and all volumes have the same size. To train and evaluate our models, we randomly split the dataset at patient level into training, validation, and test sets with 1000, 51, and 200 volumes, respectively. We further pre-process the dataset by normalizing the intensities of each volume to a fixed range based on a lower and an upper percentile of the volume. Afterward, we slice the 3D volumes along the axial direction into 2D images and remove all slices without information (i.e., empty slices). This results in 139221, 6891, and 27956 2D slices for training, validation, and testing, respectively. Based on the survey papers[21, 22, 23], we selected the most commonly used metrics for evaluating our models: peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), and multi-scale structural similarity index (MS-SSIM). The SSIM measures the similarity between two images by comparing their local luminance (means), contrast (variances), and structure (covariance). The MS-SSIM is a multi-scale extension of the SSIM that combines these comparisons across several image scales in a weighted product. For both SSIM and MS-SSIM, higher values indicate better synthesis, and identical images yield a value of 1.
Both metrics are implemented using the TorchMetrics 0.10.3 library[24].
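The pre-processing and the PSNR metric described above can be sketched in NumPy as follows. The output range [0, 1], the percentile arguments, the assumption that the axial direction is the last array axis, and the definition of an empty slice as one without positive voxels are placeholders of ours; the evaluation itself used TorchMetrics, whose implementation is not reproduced here.

```python
import numpy as np


def normalize_volume(vol, p_low, p_high):
    """Rescale intensities so the given percentiles map to 0 and 1, clipping outliers."""
    lo, hi = np.percentile(vol, [p_low, p_high])
    return np.clip((vol - lo) / (hi - lo), 0.0, 1.0)


def axial_slices(vol):
    """Split an (H, W, D) volume into axial 2D slices and drop empty ones."""
    slices = [vol[:, :, k] for k in range(vol.shape[2])]
    return [s for s in slices if np.any(s > 0)]


def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB for images with the given intensity range."""
    mse = np.mean((pred - target) ** 2)
    return float(10.0 * np.log10(data_range ** 2 / mse))
```

PSNR is computed as $10\log_{10}(\mathrm{range}^2/\mathrm{MSE})$, so a uniform error of 0.1 on images in [0, 1] gives 20 dB.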

Implementation:

We used a fixed training setup for all experiments to ensure a fair comparison. All models are trained with online data augmentation consisting of random rotations, random translations in x and y direction, and random scaling. In a second step, the input and target images are artificially misaligned by applying an additional random transformation of the same kind, but only to the target images. Similar to Kong et al.[9], we refer to this misalignment as noise in the following. As optimizer, we use Adam[25] with a fixed learning rate, fixed momentum parameters $\beta_1$ and $\beta_2$, and no weight decay. All models are trained for the same number of iterations with the same batch size, and the weights of the individual loss terms are kept fixed across experiments. The hyperparameters of our K-space loss were chosen empirically. To implement our models, we use the PyTorch 1.12.1[26] and PyTorch Lightning 1.8.1[27] libraries. All experiments are performed on a single NVIDIA Tesla A100 GPU with 40 GB of memory. Our code is publicly available at https://github.com/Bayer-Group/fRegGAN.
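The augmentation and artificial misalignment described above could look like the following NumPy sketch. The parameter ranges (`max_angle`, `max_shift`, `scale_range`) are placeholders, not the values used in the experiments, and nearest-neighbour resampling with zero padding is a simplification; all names are hypothetical.

```python
import numpy as np


def apply_affine(img, angle_deg, shift, scale):
    """Rotate and scale about the image centre, then translate (nearest neighbour, zero padding)."""
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Inverse mapping: for every output pixel, undo the translation and scaling,
    # then the rotation, to find its source location in the input image.
    y = (yy - cy - shift[0]) / scale
    x = (xx - cx - shift[1]) / scale
    a = np.deg2rad(angle_deg)
    src_y = np.cos(a) * y - np.sin(a) * x + cy
    src_x = np.sin(a) * y + np.cos(a) * x + cx
    iy = np.rint(src_y).astype(int)
    ix = np.rint(src_x).astype(int)
    valid = (iy >= 0) & (iy < h) & (ix >= 0) & (ix < w)
    out = np.zeros_like(img)
    out[valid] = img[iy[valid], ix[valid]]
    return out


def sample_params(rng, max_angle, max_shift, scale_range):
    """Draw (angle in degrees, (dy, dx) shift in pixels, scale) uniformly from the ranges."""
    return (rng.uniform(-max_angle, max_angle),
            rng.uniform(-max_shift, max_shift, size=2),
            rng.uniform(*scale_range))


def augment_pair(x, y, rng, max_angle=5.0, max_shift=5.0, scale_range=(0.95, 1.05), noise=True):
    """Shared augmentation for (x, y); optionally misalign the target with a second transform."""
    params = sample_params(rng, max_angle, max_shift, scale_range)
    x_aug, y_aug = apply_affine(x, *params), apply_affine(y, *params)
    if noise:
        y_aug = apply_affine(y_aug, *sample_params(rng, max_angle, max_shift, scale_range))
    return x_aug, y_aug
```

The key design point is the order of operations: the shared transform keeps the pair aligned (pure data augmentation), and only the second, independent transform applied to `y` creates the misalignment that the registration network has to absorb.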

Registration with GAN and CycleGAN

Table 1 shows the results of our first set of experiments (columns GAN and CycleGAN) together with the results reported by Kong et al.[9] (columns Pix2Pix[9] and CycleGAN[9]). Our baseline results (i.e., without artificial misalignment) show for all three metrics that the supervised GAN performs better than the unsupervised CycleGAN (PSNR 26.0 vs. 24.1, SSIM 0.91 vs. 0.89, and MS-SSIM 0.94 vs. 0.91). This is expected, since CycleGAN is trained without any supervision. However, when noise (i.e., artificial misalignment) is added during training, the situation is reversed (21.9 vs. 23.8, 0.83 vs. 0.88, and 0.84 vs. 0.90). Both observations are in line with results in the literature[9]. As described in Section 2, adding a registration loss to the CycleGAN (i.e., RegCycleGAN) also makes it supervised. Now, the supervised RegCycleGAN performs better than the supervised GAN (27.4 vs. 26.0, 0.92 vs. 0.91, and 0.95 vs. 0.94). Cycle consistency combined with supervised learning acts as a strong regularizer that helps to learn the mapping between the input and the target image. Adding a second registration loss (i.e., Reg2CycleGAN) to the RegCycleGAN improves the results of the generator $F$ (e.g., PSNR 27.3 vs. 23.2) but does not further improve our main generator $G$. The second registration loss is connected to the first generator only indirectly through the cycle consistency, which is apparently not enough to improve its results.

| Metric | Setting | GAN | CycleGAN ($G$) | CycleGAN ($F$) | Pix2Pix[9] | CycleGAN[9] |
|---|---|---|---|---|---|---|
| PSNR | baseline | 26.0 | 24.1 | 23.9 | 25.6 | 23.9 |
| | w/ n | 21.9 | 23.8 | 22.9 | 15.0 | 23.7 |
| | w/ n, r | 26.8 | 27.4 | 23.2 | 25.2† | 23.8* |
| | w/ n, r2 | - | 27.3 | 27.3 | - | - |
| SSIM | baseline | 0.91 | 0.89 | 0.89 | 0.85 | 0.83 |
| | w/ n | 0.83 | 0.88 | 0.87 | 0.74 | 0.83 |
| | w/ n, r | 0.91 | 0.92 | 0.89 | 0.85† | 0.85* |
| | w/ n, r2 | - | 0.92 | 0.90 | - | - |
| MS-SSIM | baseline | 0.94 | 0.91 | 0.93 | - | - |
| | w/ n | 0.84 | 0.90 | 0.92 | - | - |
| | w/ n, r | 0.94 | 0.95 | 0.92 | - | - |
| | w/ n, r2 | - | 0.95 | 0.96 | - | - |

w/ n: trained with noise (artificial misalignment); w/ n, r: with noise and registration loss; w/ n, r2: with noise and a second registration network (Reg2CycleGAN).

K-space loss

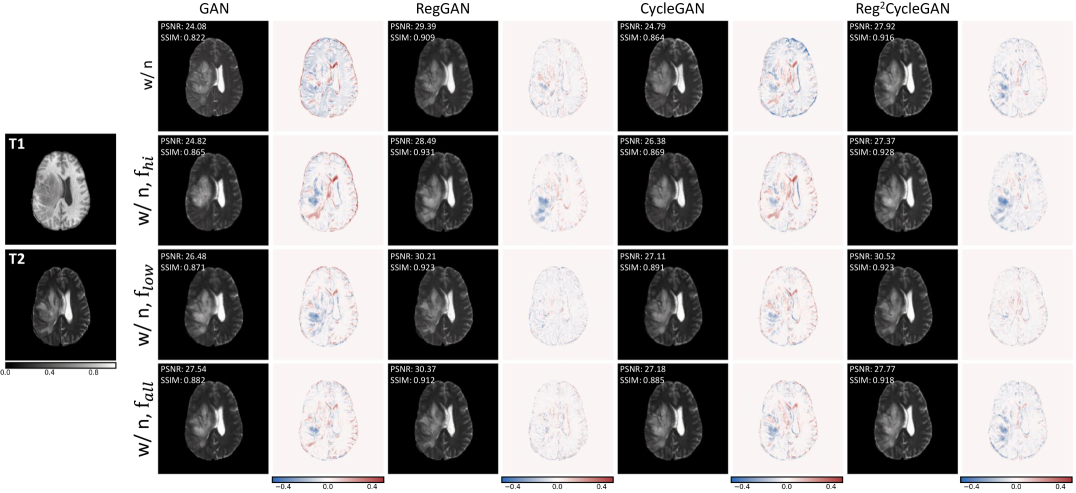

We defined three different settings of the K-space loss for our experiments. First, only the low frequencies are selected. Second, only the high frequencies are selected. Finally, we also test the effect of using all frequencies. All three settings are tested for both GAN and CycleGAN models, with and without registration loss. The results are shown in Table 2. For all four training modes (i.e., GAN with noise, GAN with noise and registration, CycleGAN with noise, and CycleGAN with noise and two registration networks), adding a frequency loss improves or at least maintains the quantitative metrics. The only exception is one setting for the CycleGAN model trained with noise, where the PSNR is slightly worse than the baseline (23.6 vs. 23.8), while the SSIM and MS-SSIM are the same as the baseline (0.88 and 0.90, respectively). The best PSNR and MS-SSIM are achieved by the GAN model with registration loss and frequency loss (PSNR 28.8, SSIM 0.92, MS-SSIM 0.97). These results show that the frequency loss is useful for improving the quantitative metrics. Figure 4 shows qualitative examples of the generated images: the models trained with the frequency loss and registration synthesize the highlighted region better.

| Metric | K-space loss setting | GAN w/ n | GAN w/ n, r | CycleGAN w/ n | CycleGAN w/ n, r2 |
|---|---|---|---|---|---|
| PSNR | baseline | 21.9 | 26.8 | 23.8 | 27.3 |
| | | 22.7 | 26.9 | 23.6 | 27.4 |
| | | 24.4 | 28.8 | 25.2 | 28.7 |
| | | 24.6 | 28.4 | 25.2 | 28.7 |
| SSIM | baseline | 0.83 | 0.91 | 0.88 | 0.92 |
| | | 0.86 | 0.93 | 0.88 | 0.93 |
| | | 0.88 | 0.92 | 0.89 | 0.92 |
| | | 0.88 | 0.91 | 0.89 | 0.92 |
| MS-SSIM | baseline | 0.84 | 0.94 | 0.90 | 0.95 |
| | | 0.87 | 0.94 | 0.90 | 0.95 |
| | | 0.91 | 0.97 | 0.93 | 0.96 |
| | | 0.92 | 0.96 | 0.93 | 0.96 |

4 Conclusion

We have proposed fRegGAN, a novel image-to-image translation approach for medical imaging. Our method extends the RegGAN approach by incorporating a Fourier-space (K-space) loss to regularize and guide the network training process. Our extensive experiments on the BraTS 2021 dataset show that the proposed method outperforms the baseline methods in terms of both quantitative metrics and qualitative visual inspection. The frequency regularization loss helps the optimization process find solutions that preserve more details and are closer to the ground truth. However, in our evaluation, we found that comparisons based on quantitative metrics against reference images have some limitations: skull stripping (performed to anonymize patients) introduced artifacts that are inconsistent between input and output volumes (i.e., T1w and T2w). Our results indicate that incorporating Fourier-space information can improve the performance and visual quality of image-to-image translation in the medical domain. Our findings suggest that fRegGAN could have broader implications for the medical imaging field. For example, frequency regularization, an essential aspect of our proposed method, might also benefit image synthesis with diffusion models. The improvements observed in image-to-image translation could translate into higher quality and accuracy of synthesized images, particularly in complex tasks such as those involving multiple modalities. Moreover, K-space to K-space synthesis, although not addressed in our study, is a promising direction for future research. Such approaches might further aid clinical decision-making, diagnostics, and treatment planning by providing more accurate and realistic synthetic medical images.

References

- [1] Goodfellow, I. J., Pouget-Abadie, J., Mirza, M. et al. Generative Adversarial Networks. Communications of the ACM 63, 139–144, DOI: 10.1145/3422622 (2020).

- [2] Maspero, M., Savenije, M. H. F., Dinkla, A. M. et al. Dose evaluation of fast synthetic-CT generation using a generative adversarial network for general pelvis MR-only radiotherapy. Physics in Medicine and Biology 63, 185001, DOI: 10.1088/1361-6560/aada6d (2018).

- [3] Yang, H., Sun, J., Carass, A. et al. Unsupervised MR-to-CT Synthesis Using Structure-Constrained CycleGAN. IEEE Transactions on Medical Imaging 39, 4249–4261, DOI: 10.1109/TMI.2020.3015379 (2020).

- [4] Liu, X., Xing, F., Fakhri, G. E. et al. A Unified Conditional Disentanglement Framework for Multimodal Brain MR Image Translation. In IEEE 18th International Symposium on Biomedical Imaging, 10–14, DOI: 10.1109/ISBI48211.2021.9433897 (2021).

- [5] Shen, L., Zhu, W., Wang, X. et al. Multi-Domain Image Completion for Random Missing Input Data. IEEE Transactions on Medical Imaging 40, 1113–1122, DOI: 10.1109/TMI.2020.3046444 (2021).

- [6] Haubold, J., Jost, G., Theysohn, J. M. et al. Contrast Agent Dose Reduction in MRI Utilizing a Generative Adversarial Network in an Exploratory Animal Study. Investigative Radiology, DOI: 10.1097/RLI.0000000000000947 (2023).

- [7] Pasumarthi, S., Tamir, J. I., Christensen, S. et al. A generic deep learning model for reduced gadolinium dose in contrast-enhanced brain MRI. Magnetic Resonance in Medicine 86, 1687–1700, DOI: 10.1002/mrm.28808 (2021).

- [8] Schwarz, K., Liao, Y. & Geiger, A. On the Frequency Bias of Generative Models. In Advances in Neural Information Processing Systems 34 (NeurIPS 2021) (2021).

- [9] Kong, L., Lian, C., Huang, D. et al. Breaking the Dilemma of Medical Image-to-image Translation. In Advances in Neural Information Processing Systems 34 (NeurIPS 2021), vol. 34, 1964–1978 (2021).

- [10] Isola, P., Zhu, J.-Y., Zhou, T. et al. Image-to-Image Translation with Conditional Adversarial Networks. In IEEE Conference on Computer Vision and Pattern Recognition, DOI: 10.1109/CVPR.2017.632 (2017).

- [11] Zhu, J.-Y., Park, T., Isola, P. et al. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. In IEEE International Conference on Computer Vision, DOI: 10.1109/ICCV.2017.244 (2017).

- [12] Yang, Y. & Soatto, S. FDA: Fourier Domain Adaptation for Semantic Segmentation. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition, DOI: 10.1109/CVPR42600.2020.00414 (2020).

- [13] Jiang, L., Dai, B., Wu, W. et al. Focal Frequency Loss for Image Reconstruction and Synthesis. In IEEE International Conference on Computer Vision, DOI: 10.1109/ICCV48922.2021.01366 (2021).

- [14] Yang, M., Wang, Z., Chi, Z. et al. FreGAN: Exploiting Frequency Components for Training GANs under Limited Data. arXiv, DOI: 10.48550/arXiv.2210.05461 (2022).

- [15] Cai, M., Zhang, H., Huang, H. et al. Frequency Domain Image Translation: More Photo-realistic, Better Identity-preserving. In IEEE International Conference on Computer Vision, 13930–13940, DOI: 10.1109/ICCV48922.2021.01367 (2021).

- [16] Mao, X., Li, Q., Xie, H. et al. Least Squares Generative Adversarial Networks. In IEEE International Conference on Computer Vision, 2794–2802, DOI: 10.1109/ICCV.2017.304 (2017).

- [17] Balakrishnan, G., Zhao, A., Sabuncu, M. R. et al. VoxelMorph: A Learning Framework for Deformable Medical Image Registration. IEEE Transactions on Medical Imaging 38, 1788–1800, DOI: 10.1109/TMI.2019.2897538 (2019).

- [18] Baid, U., Ghodasara, S., Mohan, S. et al. The RSNA-ASNR-MICCAI BraTS 2021 Benchmark on Brain Tumor Segmentation and Radiogenomic Classification. arXiv, DOI: 10.48550/arXiv.2107.02314 (2021).

- [19] Menze, B. H., Jakab, A., Bauer, S. et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Transactions on Medical Imaging 34, 1993–2024, DOI: 10.1109/TMI.2014.2377694 (2015).

- [20] Bakas, S., Akbari, H., Sotiras, A. et al. Advancing The Cancer Genome Atlas glioma MRI collections with expert segmentation labels and radiomic features. Scientific Data 4, 170117, DOI: 10.1038/sdata.2017.117 (2017).

- [21] Liu, Y., Dwivedi, G., Boussaid, F. et al. 3D Brain and Heart Volume Generative Models: A Survey. arXiv, DOI: 10.48550/arXiv.2210.05952 (2022).

- [22] Xie, G., Wang, J., Huang, Y. et al. Cross-Modality Neuroimage Synthesis: A Survey. arXiv, DOI: 10.48550/arXiv.2202.06997 (2022).

- [23] Ali, H., Biswas, M. R., Mohsen, F. et al. The role of generative adversarial networks in brain MRI: A scoping review. Insights into Imaging 13, 98, DOI: 10.1186/s13244-022-01237-0 (2022).

- [24] Detlefsen, N. S., Borovec, J., Schock, J. et al. TorchMetrics - Measuring Reproducibility in PyTorch. Journal of Open Source Software 7, 4101, DOI: 10.21105/joss.04101 (2022).

- [25] Kingma, D. P. & Ba, J. Adam: A Method for Stochastic Optimization. arXiv, DOI: 10.48550/arXiv.1412.6980 (2017).

- [26] Paszke, A., Gross, S., Massa, F. et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32 (NeurIPS 2019) (2019).

- [27] Falcon, W. & the PyTorch Lightning team. PyTorch Lightning, DOI: 10.5281/zenodo.7469930 (2019).

Author contributions statement

I.M.B., M.L., F.K., and A.H. conceived the method and experiments; I.M.B. and A.H. conducted the experiments; I.M.B., M.L., M.D., and A.H. analyzed the results. All authors reviewed the manuscript.

Additional information

Competing interests

All authors are employees of Bayer AG, Berlin, Germany.

Data Availability

The dataset analyzed during the current study (i.e., BraTS 2021) is available from http://braintumorsegmentation.org/.