From Time Series to Networks in \proglangR with

the \pkgts2net Package

Leonardo N. Ferreira

\PlaintitleFrom Time Series to Networks in R with ts2net

\ShorttitleFrom Time Series to Networks in \proglangR

\Abstract

Network science established itself as a prominent tool for modeling time series and complex systems. This modeling process consists of transforming a set or a single time series into a network. Nodes may represent complete time series, segments, or single values, while links define associations or similarities between the represented parts. \proglangR is one of the main programming languages used in data science, statistics, and machine learning, with many packages available. However, no single package provides the necessary methods to transform time series into networks. This paper presents \pkgts2net, an \proglangR package for modeling one or multiple time series into networks. The package provides the time series distance functions that can be easily computed in parallel and in supercomputers to process larger data sets and methods to transform distance matrices into networks. \pkgTs2net also provides methods to transform a single time series into a network, such as recurrence networks, visibility graphs, and transition networks. Together with other packages, \pkgts2net permits using network science and graph mining tools to extract information from time series.

\Keywordstime series, networks, graphs, network science, complex networks, \proglangR

\Plainkeywordstime series, networks, graphs, network science, complex networks, R

\Address

Leonardo N. Ferreira

Center for Humans & Machines

Max Planck Institute for Human Development

Lentzeallee 94, 14195 Berlin, Germany

E-mail:

URL: https://www.leonardoferreira.com/

1 Introduction

Networks are one of the most prominent tools for modeling complex systems (Mitchell, 2006). Complex systems are commonly represented by a set of time series with interdependencies or similarities (Silva and Zhao, 2018). This set can be modeled as a network where nodes represent time series, and links connect pairs of associated ones. Associations are usually measured by time series distance functions, carefully chosen by the user to capture the desired similarities. Thus, the network construction process consists of two steps. First, the distance between all pairs of time series is calculated and stored in a distance matrix. Then, this distance matrix is transformed into an adjacency matrix. The network topology permits not just the analysis of the small parts (nodes) in the system but also their relationship (edges). This powerful model allows network science and graph mining tools to extract information from different domains such as climate science (Boers et al., 2019; Ferreira et al., 2021a), social science (Ferreira et al., 2021b), neuroscience (Bullmore and Sporns, 2009), and finance (Tumminello et al., 2010).

In recent years, networks have also been applied to model a single time series. The goal is to transform one time series into a network and apply network science techniques to perform time series analysis (Silva et al., 2021). Different modeling approaches have been proposed, and the general idea is to map single values (Lacasa et al., 2008), time windows, transitions Campanharo et al. (2011), or recurrence Donner et al. (2010) as nodes and their relationship by links. Then, the general goal is to use network science and graph mining tools to extract information from the modeled time series.

R is one of the most used programming languages in statistics, data science, machine learning, complex networks, and complex systems. Different packages provide distance functions and network analysis, but no single package provides the necessary tools to model one or multiple time series as networks. This paper presents \pkgts2net, a package to construct networks from time series in R. \pkgTs2net permits the use of network science to study time series. Together with other \proglangR packages, \pkgts2net permits distance calculations, network construction, visualization, statistical measures extraction, and pattern recognition using graph mining tools. Most of the key functions are implemented using multi-core programming, and some useful methods make \pkgts2net easy to run in supercomputers and computer clusters. The package is open-access and released under the MIT license.

2 Background Concepts

In summary, the whole network construction process involves the calculations of time series distance functions, represented by a distance matrix, and the transformation of this matrix into a network. This section presents the distances and the network construction methods available in the \pkgts2net package.

2.1 Distance functions

Time series comparison is a fundamental task in data analysis. This comparison can estimated using similarity or distance functions (Deza and Deza, 2009; Esling and Agon, 2012; Wang et al., 2013). Some research domains use the concept of similarity, while others prefer distance functions. Similarity and distance are inverse concepts that have the same usefulness. Here in this paper, for simplicity, I use the distance concept. However, it is important to remember that a similarity function can be generally transformed into distance functions and vice-versa. The distance or metric is a function that for all time series , the following properties hold:

-

•

non-negativity: ,

-

•

symmetry: ,

-

•

reflexity: ,

-

•

triangular difference: .

Some of the time series comparison measures used in \pkgts2net are not true metrics because they do not follow one or more required properties above (Deza and Deza, 2009). However, for simplicity reasons, this paper uses the term “distance” to generally refer to any of these functions. The rest of Sec. 2.1 reviews the main distance functions implemented in the \pkgts2net package.

2.1.1 Correlation-based distances

The Pearson Correlation Coefficient (PCC) is one of the most used association measures (Asuero et al., 2006). Given two time series and (both with length ), and their respective mean values and , the PCC is defined as

| (1) |

The PCC can be transformed into a distance function by considering the absolute value of as follows:

| (2) |

In this case (Eq. 2), both strong correlations () and strong anti-correlation () lead to . This distance is particularly useful if both correlated and anti-correlated time series should be linked in the network. Conversely, if only strong positive correlations or strong negative correlation should be mapped into links in the network, then Eq. 3 or Eq. 4 can be used instead.

| (3) |

| (4) |

Cross-correlation is also a widely used measure to find lagged associations between time series. Instead of directly calculating the PCC, the cross-correlation function (CCF) applies different lags (displacement) to the series and then returns the correlation to each lag. Instead of returning a single correlation value, as in the PCC, the CCF returns an array of size , where is the maximum lag defined by the user. Each element of this array corresponds to the between the time series and , which is the lagged by units.

The CCF can be transformed into a distance function in the same way as the PCC (Eqs. 2, 3, and 4). The maximum absolute (Eq. 5), positive (Eq. 6), or negative (Eq. 7) correlations .

| (5) |

| (6) |

| (7) |

One crucial question is the correlation statistical significance. The significance test avoids spurious correlations and false links in the network. One way to test consists of using the Fisher z-transformation defined as (Asuero et al., 2006):

| (8) |

This transformation stabilizes the variance and can be used to construct a confidence interval (CI) using standard normal theory, defined (in z scale) as

| (9) |

where is the desired normal quantile and is the length of the time series. The CI can be transformed back into the original scale using the inverse transformation:

| (10) |

leading to

| (11) |

The correlation (Eqs. 2, 3, and 4) and cross-correlation distances (Eqs. 5, 6, and 7) can be adapted to consider the statistical test given a significance level . These adaptations consist of substituting the correlation function in the equations with a binary

| (12) |

2.1.2 Distances based on information theory

Mutual information (MI) is one of the most common measures used to detect non-linear relations between time series. The MI measures the average reduction in uncertainty about X that results from learning the value of Y or vice versa (MacKay, 2003). The concept of information is directly linked to Shannon’s entropy, which captures the “amount of information” in a time series. Given a discrete time series and its probability mass function , the marginal entropy is defined as

| (13) |

The conditional entropy measures how much uncertainty has when is known.

| (14) |

A histogram can be used to estimate the probabilities. The number of bins in the histogram directly influences the entropy results and should be chosen accordingly. Some common options to estimate the number of bins are the Sturges’s, Scott’s, and Freedman-Diaconis criteria (Wand, 1997).

The mutual information (MI) can be estimated using Eq. 15.

| (15) |

The mutual information has only a lower bound (zero), which makes it sometimes difficult to interpret the results. Some normalization methods can limit the MI to the interval to make the comparison easier. The normalized mutual information (NMI) can be defined as

| (16) |

where is the normalization factor. Common normalization approaches are either the half of the sum of the entropies , minimum entropy , maximum entropy , or the squared root of the entropies product (Vinh et al., 2010). The NMI distance is

| (17) |

Another common distance function based on MI is the Variation of Information (VoI) (Eq. 18) (Vinh et al., 2010). VoI, different from the MI, is a true metric.

| (18) |

The maximal information coefficient (MIC) is an algorithm that searches for the largest MI between two series (Reshef et al., 2011). MIC considers that if there exists a relationship between two time series, then it is possible to draw a grid on the scatter plot of these two series that encapsulates that relationship. MIC automatically finds the best grid and returns the largest possible MI.

2.1.3 Dynamic Time Warping

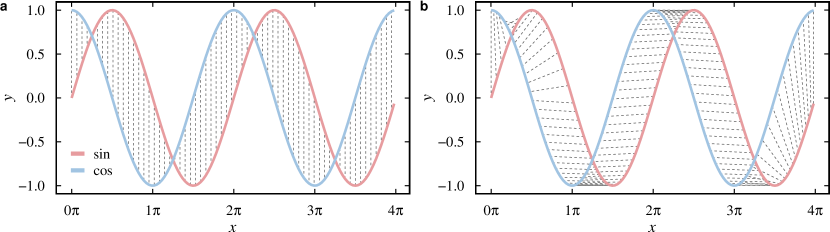

The Dynamic Time Warping (DTW) (Berndt and Clifford, 1994; Giorgino, 2009) is an elastic distance function that uses a warping path to align two time series and then compare them. Different from lock-step measures (such as the euclidean distance), elastic measures use a warping path defining a mapping between the two series (Fig. 1). The optimal path minimizes the global warping cost. The DTW distance between two time series and (both of length ) can be defined using dynamic programming. The total distance is the cumulative distance calculated from the recurrence relation , defined as:

| (19) |

2.1.4 Event-based distances

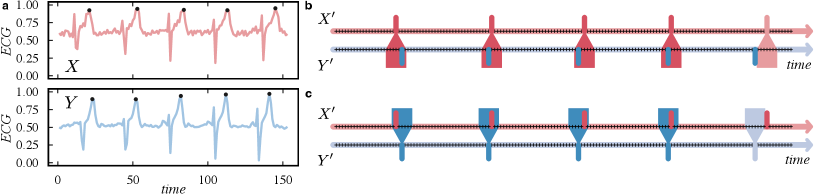

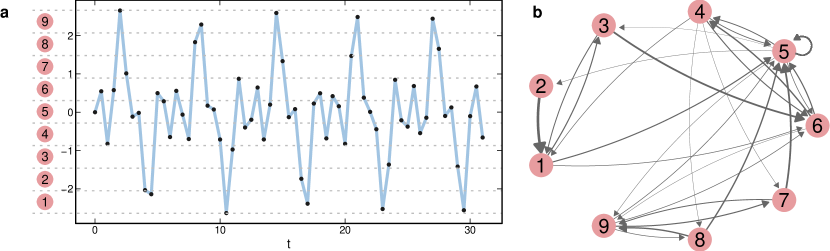

The idea behind event-based distance functions is to compare marked time points where some events occur in the time series. The definition of an event is specific to the domain where the time series comes from. For example, Fig. 2-a illustrates two ECG time series and where events (black dots) can be defined as some local maximum values as the T wave representing the ventricular repolarization during the heartbeat. Another example is the concept of extreme events of precipitation that can be defined as the days with rainfall sums above 99th percentiles of wet days during rain season (Boers et al., 2019). Common approaches used to define events are local maxima and minima, values higher or lower than a threshold, values higher or lower percentiles, or outliers in the series. An event time series is here defined as a binary time series composed of ones and zeros representing the presence or absence of an event respectively in a time series . Fig. 2-b and c illustrate these events time series for the ECG time series in Fig. 2-a. Another common representation is the events sequence that defines the time indices where each event occur.

Event-based distance functions try to find co-occurrences or synchronization between two event time series. The general aim is to count pairs of events in both time series that occur at the same time window of length. For example, considering the five events (black dots) in Fig. 2-a, Fig 2-b shows the co-occurrence of events from to considering a time window. For the five events, only the last one does not co-occur. Fig 2-c shows the inverse process that can or not be equal depending on the specific distance function. Thus, functions can be symmetrical or not (directed). Symmetrical distances consider co-occurrences from to but also from to . Directed measures only consider the events in a precursor time series compared to another one , but not the other way around.

Quian Quiroga et al. (2002) proposed a method to measure the synchronization between two event time series. Considering two event sequences and , and a time lag , this method counts the number of times an event appears in shortly after it appears in considering using the following equation

| (20) |

where is defined as

| (21) |

where and are the time indices and from and respectively. Analogously, can also be calculated using Eq. 20. The symmetrical event-based distance between and , can be calculated using Eq. 22, where indicates full synchronization.

| (22) |

The asymmetrical version counts only the number of events in that precede those in (or the opposite in an analogous way), and it is originally defined in the interval [-1,1]. To standardize the interval with the other distances in this paper, Eq. 23 measures the asymmetrical distance where zero means that all events in precede those in , and one no synchronization.

| (23) |

The authors also propose a local time window when event rates changes in the time series. The counting function (Eq. 20) can be adapted to a local time window by replacing by

| (24) |

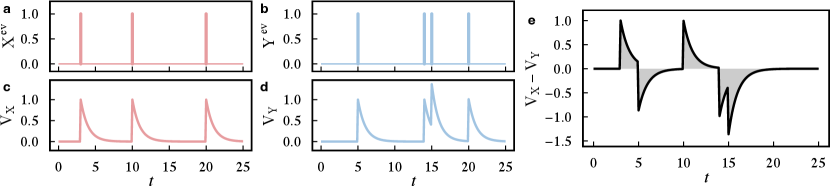

The van Rossum distance (v. Rossum, 2001) is another method used to find synchronizations between events. The main idea is to transform each event into a real function and compare them, as illustrated in Fig. 3. The first step consists on mapping an event sequence into a function , where is a filtering function. Examples of filtering functions include the Gaussian and the laplacian kernels. The parameter defines the co-occurrence time scale. The van Rossum distance can be calculated as

| (25) |

One common approach to test the significance of event-based distance functions considers surrogate data (Boers et al., 2019; Ferreira et al., 2021b). The idea is to generate artificial event sequences by shuffling the events in the two original time series and then measuring their distance. After repeating this process a considerable high number of times, it is possible to construct a distribution of distances and use it as a confidence level for the comparison.

2.2 Transforming a set of time series into a network

A network or a graph (Bondy and Murty, 2008; Barabási and Pósfai, 2016), is composed by a set of nodes (also called vertices) and a set of links (also called edges) connecting two nodes. The most common representation for a network is the adjacency matrix whose entry if the nodes and are connected or otherwise. A weighted network can also be represented by . In this case, each entry corresponds to the weight between nodes and , or = 0 if there is no link.

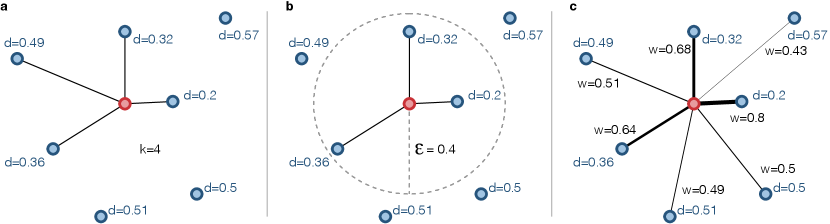

A set of time series can be transformed into a network by calculating the distance between all pairs of time series. These distances are represented by the distance matrix whose position stores the distance between the time series and . The network construction process consists of transform the distance matrix into an adjacency matrix where each node represents a time series. The most common building approaches are (Silva and Zhao, 2018; Qiao et al., 2018):

-

•

-NN network: In a nearest neighbors network (-NN), each node (representing a time series) is connected to the other ones with the shortest distances. Thus, the construction process requires finding for each row of the other elements with shortest distances, where is a parameter defined by the user. Approximation algorithms speed up the construction process (Arya et al., 1998; Chen et al., 2009).

-

•

-NN network: In a nearest neighbors network (-NN), (Fig. 4-b), each node is connected to all the other ones if their distances are shorter than , defined by the user. An advantage of the - over -NN networks is that the permits a finer in the threshold choice. Another advantage is the fact that can restrict the connections to only strong ones, while the -NN will always connect nodes regardless if the shortest distances are small or high. Similar to -NN networks, approximations can speed up the construction process (Arya et al., 1998; Chen et al., 2009).

-

•

Weighted network: Instead of limiting the number of links, a weighted network (Fig. 4-c) can be constructed from by simply connecting all pairs of nodes and using their distances to set weights. In general, link weights have the opposite definition of a distance, which means that the shorter the distance between two time series, the stronger the link connecting the respective nodes. Considering that is in the interval [0,1], the weighted adjacency matrix can be defined as = 1 - . Conversely, if , can be be first normalized between [0,1] () and then consider = 1 - .

-

•

Networks with significant links: In many applications, it is important to minimize the number of spurious links in the network. One way of minimizing these spurious links is by considering statistical tests for the distance functions. In other words, this network construction process connects all pairs of nodes only if their distance is statistically significant. For example, the significance of the Pearson correlation coefficient can be tested using the z-transformation (Eq. 9) described in Sec. 2.1. This network construction process can be seen as a special case of -NN network where is a threshold for significant links. It is important to remember that this building approach minimizes the number of spurious links but does not guarantee that they will not appear.

Networks can be static or temporal (Holme and Saramäki, 2012; Kivelä et al., 2014). A static network uses the whole historical data in a time series to construct a single network. Temporal networks divide the historical period into time windows that are used to construct layers in a temporal (multilayered) network. A temporal network is a sequence of static networks ordered in time. Each layer can be seen as a snapshot of the time window. The temporal network construction process requires the computation of a set of distance matrices , one for each of the windows. A time window is defined by the user and has a length and a step that defines the number of values that are included and excluded in each window. The temporal network construction consists of transforming each distance matrix into a network layer using the same process used for a static network previously described in this section. One of the main advantages of this approach is to make possible the temporal analysis (evolution) of the modeled system.

The network construction process has a combinatorial nature. Considering a set of time series, the whole construction process requires distance calculations in a static network or in a temporal network, where is the number of layers. The transformation from the distance matrix to the adjacency matrix requires searches for pairs of shortest nodes, a task that is much faster than the distance calculations. For this reason, the conversion step () can be neglected in the total time complexity is . This means that the number of distance computations can increase very fast for very large time series data sets, making the network modeling approach infeasible.

2.3 Transforming a single time series into a network

This section presents methods to transform a single time series into a network (Zou et al., 2019; Silva et al., 2021).

2.3.1 Proximity networks

The most straightforward method to perform this transformation consists of breaking the time series into time windows and using the same approach described for multiple time series (Sec. 2.2). Each time window is considered a time series and the network construction process requires calculating a distance matrix between all time windows using a distance function. Then, can be transformed into an adjacency matrix using methods such as -NN and -NN networks (Silva and Zhao, 2018). The time window size should be carefully chosen. For example, time windows too small can be problematic when calculating correlation distances. For this reason, this simple construction procedure may not be suitable for short time series because it could generate small time windows.

2.3.2 Transition networks

Transition networks map time series into symbols, represented by nodes and edges to link transitions between symbols. Campanharo et al. (2011) proposed a straightforward way of defining the symbols, which consists of first dividing a time series into equally-spaced bins. Each bin corresponds to a symbol that is mapped to a node in the network. Each time series value is mapped to a sequence of symbols according to the bins they are inserted. Then, for every pair of consecutive symbols, a directed link is established between the corresponding nodes. Two equal consecutive symbols lead to self-loops in the network. Edge weights count the number of times that a transition between the two symbols occurs. After the construction process, the weights of the outgoing links from each node can be normalized and interpreted as a transition probability between symbols. In this case, the network can be seen as a Markov Chain. Fig. 5 presents an example of a transition network.

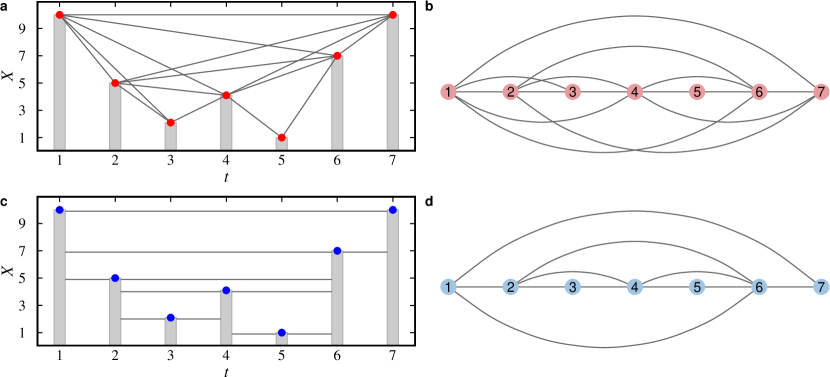

2.3.3 Visibility graphs

Lacasa et al. (2008) proposed a method called visibility graph that transforms a time series into a network. The idea is to represent each value in a time series by a bar in a barplot, as illustrated in Fig. 6. Then, each value becomes a node and links connect them if they can “see” each other. In the original definition, called natural visibility graph (NVG), the concept of visibility is defined as a line in any direction that does not cross any other bar. Considering a time series with values and its natural visibility graph , two nodes and are connected if

| (26) |

Other works adapted or restricted the idea of visibility. The horizontal visibility graph (HVG) (Luque et al., 2009) defines visibility as horizontal lines between bars (Fig. 6 c and d). In this case, two nodes and from are connected if

| (27) |

Threshold values can limit the maximum temporal distance between values (Ting-Ting et al., 2012). Visibility graphs can also be directed, representing the temporal order. Another important concern is the time complexity of the construction process. The original algorithms to construct NVGs and HVGs have quadratic time complexity O(), where is the number of elements in the series. Further improvements can reduce this time complexity to O() (Lan et al., 2015).

2.3.4 Recurrence networks

The transformation approach proposed by Donner et al. (2010) relies on the concept of recurrences in the phase space. The general idea consists of interpreting the recurrence matrix of a time series as an adjacency matrix. Nodes represent states in the phase-space and links connect recurrent ones. A recurrence matrix connects different points in time if the states are closely neighbored in phase space. Considering a time series and its -dimensional time delay embedding where is the delay, a recurrence matrix can be defined as

| (28) |

where is the Heaviside function, is a distance function (e.g., Euclidean or Manhattan), and is a threshold distance that defines close states. A recurrence matrix can then be transformed into an adjacency matrix of a network as:

| (29) |

where is the Kronecker delta introduced to remove self-loops. The resulting network is unweighted, undirected, and without self-loops

3 The ts2net Package

The goal of the \pkgts2net package is to provide methods to model a single or a set of time series into a network in \proglangR. It implements time series distance functions, parallel distance calculations, network construction methods, and some useful functions. Some functions are fully implemented, while others are adapted from other packages. Thus, another goal of the \pkgts2net package is to put together useful methods from other packages.

The \pkgts2net package is available on the Comprehensive \proglangR Archive Network (CRAN) and can be installed with the following command: {CodeChunk} {CodeInput} R> install.packages("ts2net") Another option is to install \pkgts2net from GitHub using the \codeinstall_github() function from either \pkgremotes or \pkgdevtools packages: {CodeChunk} {CodeInput} R> install.packages("remotes") # if not installed R> remotes::install_github("lnferreira/ts2net")

3.1 Implementation aspects

The \pkgts2net package relies on some other important packages. One of the most important ones is \pkgigraph, which is used to model networks (Csardi and Nepusz, 2006). The \pkgigraph package provides many different graph and network analysis tools, which can be used to extract information from the modeled time series. The \pkgigraph objects can be easily transformed or exported to other formats such as graphml, gml and edge list. Besides, other network analysis packages commonly accept these objects. Examples are the \pkgsna (Butts, 2020), \pkgtsna (Bender-deMoll and Morris, 2021), and \pkgmuxViz (De Domenico et al., 2014).

The network construction process normally requires a high number of calculations. For this reason, the most important functions in \pkgts2net are implemented in parallel using multi-core programming. The parallelization was implemented using the \codemclapply() function from the \pkgparallel, which comes with \proglangR. This implementation uses forks to create multiple processes, an operation that is not available in Windows systems. It means that, on Windows, all functions in \pkgts2net with num_cores can only run with one core.

Besides the multicore parallelization, the \pkgts2net was also constructed to be run in supercomputers and computer clusters. The idea is to divide the distance calculations into jobs that could be calculated in parallel and then merged together to construct the distance matrix. The \codets_dist_dir() and \codets_dist_dir() functions make this process easier.

3.2 Distance calculation

Functions to calculate the distance matrix:

-

•

\code

ts_dist(): Calculates all pairs of distances and returns a distance matrix . This function receives a list of time series which can either have or not have the same length. It runs serial or in parallel (except in Windows) using \codemclapply() from \pkgparallel package. This function receives a distance function as a parameter. \pkgTs2net provides some distance functions (functions starting with \codetsdist_…()). Other distances can be easily implemented or adapted to be used within this function.

-

•

\code

ts_dist_part(): Calculate distances between pairs of time series (similarly to \codets_dist()) in part of a list. This function is particularly useful to run in parallel as jobs in supercomputers or computer clusters (HPC). Instead of having a single job calculating all distances (with \codets_dist()), this function permits the user to divide the calculation into different jobs. The result is a data frame with the distances and indexes and . To construct the distance matrix , the user can merge all the results using the functions \codedist_parts_merge() or \codedist_parts_file_merge().

-

•

\code

ts_dist_part_file(): This function works similar as \codets_dist_part(). The difference is that it reads the time series from RDS files in a directory. The advantage of this approach is that it does not load all the time series in memory but reads them only when necessary. This means that this function requires much less memory and should be preferred when memory consumption is a concern, e.g., huge data set or very long time series. The disadvantage of this approach is that it requires a high number of file read operations which considerably takes more time during the calculations. The user can merge all the results using the functions \codedist_parts_merge() or \codedist_parts_file_merge().

List of distance functions:

- •

- •

- •

- •

- •

-

•

\code

tsdist_mic(): Maximal information coefficient (MIC) distance. This function transforms the \codemine() function from the \pkgminerva package (Albanese et al., 2012) into a distance.

-

•

\code

tsdist_es(): The events synchronization distance (Eq. 22) proposed by Quian Quiroga et al. (2002). This function can also use the modification proposed by Boers et al. (2019) (). This function optionally test the significance for the events synchronization by shuffling the events in the time series.

-

•

\code

tsdist_vr(): Van Rossum distance. This function uses the \codefmetric() function from the \pkgmmpp package (Hino et al., 2015). It optionally test the significance for the events synchronization by shuffling the events in the time series.

Other distances can be easily applied in the \pkgts2net. The only two requirements are that the distance function should accept two arrays and it must return a distance. The packages \pkgTSclust (Montero and Vilar, 2014) and \pkgTSdist (Mori et al., 2016) provide many other distance functions that can be used to create network with \pkgts2net. For example, the euclidean distance (\codediss.EUCL() from \pkgTSclust) can be used to construct the distance matrix: {CodeChunk} {CodeInput} R> install.packages("TSclust") R> library(TSclust) R> D <- ts_dist(ts_list, dist_func=diss.EUCL) It is important to remember that the parameter \codedist_func receives a function, so no parenthesis is necessary (\codedist_func=diss.EUCL() is an error).

Another possibility is to implement specific functions and apply them. For example, the following code snippet implements a distance function that compares the mean values of each time series and applies it to calculate the distance matrix : {CodeChunk} {CodeInput} R> ts_dist_mean <- function(ts1, ts2) abs(mean(ts1) - mean(ts2)) R> D <- ts_dist(ts_list, dist_func=ts_dist_mean)

3.3 Network construction methods

Multiple time series into a network:

-

•

\code

net_knn(): Constructs a -NN network from a distance matrix .

-

•

\code

net_knn_approx(): Creates an approximated -NN network from a distance matrix . This implementation may omit some actual nearest neighbors, but it is considerably faster than \codenet_knn(). It uses the \codekNN() function from the \pkgdbscan package.

-

•

\code

net_enn(): Constructs a -NN network from a distance matrix .

-

•

\code

net_enn_approx(): Creates an approximated -NN network from a distance matrix . This implementation may omit some actual nearest neighbors, but it is considerably faster than \codenet_enn(). It uses the \codefrNN() function from the \pkgdbscan package.

-

•

\code

net_weighted(): Creates a full weighted network from a distance matrix . In this case, should be in the interval (see \codedist_matrix_normalize()). The link weights in the resulting network is .

-

•

\code

net_significant_links(): Construct a network with significant links.

A single time series into a network:

-

•

\code

ts_to_windows(): Extract time windows from a time series. This function can be used to construct a network from time windows. It returns a list of windows that can be used as input for \codets_dist() to construct a distance matrix .

-

•

\code

tsnet_qn(): Creates transition (quantile) networks.

-

•

\code

tsnet_vg(): Creates natural and horizontal visibility graphs. This function can be run in parallel.

-

•

\code

tsnet_rn(): Creates a recurrence network from a time series. This function uses the \coderqa() function from the \pkgnonlinearTseries package to obtain the recurrence matrix.

3.4 Useful functions

-

•

\code

dist_matrix_normalize(): normalizes (min-max) a distance matrix between zero and one.

-

•

\code

dist_percentile(): Returns the distance value that corresponds to the desired percentile. This function is useful when the user wants to generate networks with different distance functions but with the same link density.

-

•

\code

ts_dist_dirs_merge(): \codets_dist_dir() and \codets_dist_dir() calculate parts of the distance matrix . This function merges the results and constructs a distance matrix .

-

•

\code

tsdist_file_parts_merge(): Merges the distance parts as \codets_dist_dirs_merge(), but reading them from files.

-

•

\code

events_from_ts(): Function to extract events from a time series. For example, it can return the desired percentile of highest or lowest values. This function is particularly useful for event-based distance functions.

3.5 Data

-

•

\code

dataset_sincos_generate(): Generates a data set with sine and cosine time series with arbitrary noise.

-

•

\code

random_ets(): Creates random event time series with uniform probability.

-

•

us_cities_temperature_df: A data set containing the temperature in 27 US cities between 2012 and 2017. This data set was adapted from the original (Beniaguev, 2017) for simplicity reasons. Different from the original, this data set comprehends only cities in the US, grouped by month (mean value) temperature and without days with missing data. The data set is stored in a data frame with 61 rows and 28 variables where each column (except date), corresponds to the mean temperature values (°C) for each city.

-

•

us_cities_temperature_list: Similar to us_cities_temperature_df, but in a list, which is the required format for distance calculations (e.g. \codets_dist()).

3.6 Source code

The \pkgts2net is open-source and free to use according to the MIT License (https://github.com/lnferreira/ts2net/blob/main/LICENSE). All the code is available on Github at https://github.com/lnferreira/ts2net.

4 Applications

This section presents how the \pkgts2net package can be used to transform a set or a single time series into networks. Two real data sets were used as examples. The goal here is not to present complicated applications and deep analyses but straightforward and didactic examples of how to use networks to model time series.

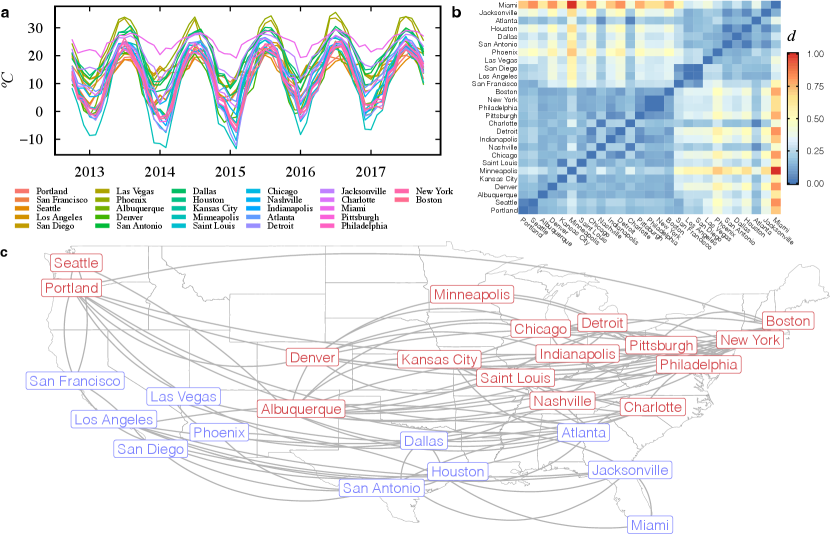

4.1 A set of time series into a network

This section presents a network modeling example using real data. The data set is composed of monthly temperature data from 27 cities in the US between 2012 and 2017, illustrated in Fig. 7-a. The version presented here was extracted and adapted from Kaggle (Beniaguev, 2017) for simplicity reasons by taking the monthly averages and keeping only US cities.

The following code calculates the distance matrix (Fig. 7-b) using DTW, finds the that corresponds to the 30% of the shortest distances, constructs a -NN network (Fig. 7-c), and find communities using the algorithm proposed by Girvan and Newman (2002):

R> D <- ts_dist(us_cities_temperature_list, dist_func = tsdist_dtw) R> eps <- dist_percentile(D, percentile = 0.3) R> net <- net_enn(D, eps) R> cluster_edge_betweenness(net) {CodeOutput} IGRAPH clustering edge betweenness, groups: 2, mod: 0.33 + groups: ‘2‘ [1] "San Francisco" "Los Angeles" "San Diego" [4] "Las Vegas" "Phoenix" "San Antonio" [7] "Dallas" "Houston" "Atlanta" + … omitted several groups/vertices

Fig. 7-c illustrates the resulting network where nodes are conveniently placed according to their geographical position. Node colors represent the two communities that cluster together colder (blue nodes) and hotter (red nodes) cities.

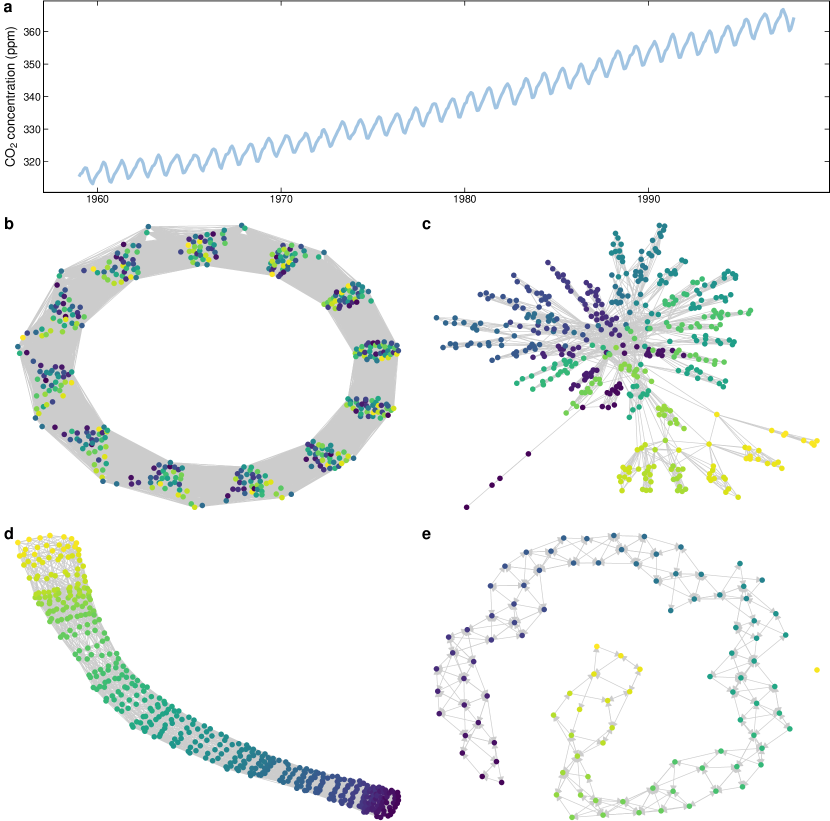

4.2 Transforming a set of time series into a network

This section presents how a time series extracted from real data can be modeled as networks. The time series with the monthly atmospheric concentration of carbon dioxide (ppm) measured in the Mauna Loa Observatory between 1959 and 1997 (Keeling et al., 2005), illustrated in Fig. 8a. This data set was mainly chosen by its simplicity and accessibility (available with the base installation of R).

The following code generates a time-window network (net_w) with width 12 and one-value step, a visibility graph (net_vg), a recurrence network (net_rn) with , and a transition network (net_qn) using 100 equally spaced bins using the \pkgts2net package.

R> net_w = as.vector(co2) |> ts_to_windows(width = 12, by = 1) |> ts_dist(cor_type = "+") |> net_enn(eps = 0.25) R> net_vg = as.vector(co2) |> tsnet_vg() R> net_rn = as.vector(co2) |> tsnet_rn(radius = 5) R> net_qn = as.vector(co2) |> tsnet_qn(breaks = 100)

Figures 8b-e illustrate the networks resulting from the transformation of the CO2 time series. From the topology of the resulting networks, it is possible to extract useful information. For example, the time-window network (Fig. 8b) shows 12 groups representing each month. The visibility graph (Fig. 8c) presents many small-degree nodes that represent the values on valleys while a few hubs connect peaks values of CO2 after 1990 that connect to many other nodes. These hubs appear due to the increasing trend of CO2 contraction and some slightly higher peaks during the seasons after 1980. The recurrence (Fig. 8d) and transition (Fig. 8e) networks present some local connections caused by the seasons, and a line shape as a result the increasing trend in the CO2 concentration over the years.

5 Final considerations

This paper presents \pkgts2net, a package to transform time series into networks. One important drawback when transforming a set of time series into a network is the high computational cost required to construct a distance matrix (combinations of time series), making it unfeasible for huge data sets. To minimize this problem, \pkgts2net provides tools to run distance functions in parallel and in high-performance computers via multiple jobs.

The \pkgts2net’s goal is not to provide all transformation methods in the literature but the most used ones. It does not mean that this package cannot be extended or customized. For example, as explained in Sec. 3.2, this package accepts other time series distance functions implemented by other packages or the user. Additional modeling approaches can also be incorporated to the package in the future.

Computational details

The \pkgts2net package requires \proglangR version 4.1.0 or higher. \proglangR itself and all packages used are available from the Comprehensive \proglangR Archive Network (CRAN) at https://CRAN.R-project.org/.

References

- Albanese et al. (2012) Albanese D, Filosi M, Visintainer R, Riccadonna S, Jurman G, Furlanello C (2012). “Minerva and minepy: a C engine for the MINE suite and its R, Python and MATLAB wrappers.” Bioinformatics, p. bts707.

- Arya et al. (1998) Arya S, Mount DM, Netanyahu NS, Silverman R, Wu AY (1998). “An Optimal Algorithm for Approximate Nearest Neighbor Searching Fixed Dimensions.” J. ACM, 45(6), 891–923. ISSN 0004-5411. 10.1145/293347.293348.

- Asuero et al. (2006) Asuero AG, Sayago A, González AG (2006). “The Correlation Coefficient: An Overview.” Critical Reviews in Analytical Chemistry, 36(1), 41–59. 10.1080/10408340500526766.

- Barabási and Pósfai (2016) Barabási AL, Pósfai M (2016). Network science. Cambridge University Press, Cambridge. ISBN 9781107076266 1107076269.

- Bender-deMoll and Morris (2021) Bender-deMoll S, Morris M (2021). tsna: Tools for Temporal Social Network Analysis.

- Beniaguev (2017) Beniaguev D (2017). “Historical Hourly Weather Data 2012-2017.” https://www.kaggle.com/datasets/selfishgene/historical-hourly-weather-data. [Online; accessed 01-June-2022].

- Berndt and Clifford (1994) Berndt DJ, Clifford J (1994). “Using Dynamic Time Warping to Find Patterns in Time Series.” In Proceedings of the 3rd International Conference on Knowledge Discovery and Data Mining, AAAIWS’94, pp. 359–370. AAAI Press.

- Boers et al. (2019) Boers N, Goswami B, Rheinwalt A, Bookhagen B, Hoskins B, Kurths J (2019). “Complex networks reveal global pattern of extreme-rainfall teleconnections.” Nature, 566(7744), 373–377. 10.1038/s41586-018-0872-x.

- Bondy and Murty (2008) Bondy JA, Murty USR (2008). Graph theory. Springer.

- Bullmore and Sporns (2009) Bullmore E, Sporns O (2009). “Complex brain networks: graph theoretical analysis of structural and functional systems.” Nature Reviews Neuroscience, 10(3), 186–198. 10.1038/nrn2575.

- Butts (2020) Butts CT (2020). sna: Tools for Social Network Analysis. R package version 2.6.

- Campanharo et al. (2011) Campanharo ASLO, Sirer MI, Malmgren RD, Ramos FM, Amaral LAN (2011). “Duality between Time Series and Networks.” PLOS ONE, 6(8), 1–13. 10.1371/journal.pone.0023378.

- Chen et al. (2009) Chen J, Fang Hr, Saad Y (2009). “Fast Approximate kNN Graph Construction for High Dimensional Data via Recursive Lanczos Bisection.” J. Mach. Learn. Res., 10, 1989–2012. ISSN 1532-4435.

- Csardi and Nepusz (2006) Csardi G, Nepusz T (2006). “The igraph software package for complex network research.” InterJournal, Complex Systems, 1695.

- De Domenico et al. (2014) De Domenico M, Porter MA, Arenas A (2014). “MuxViz: a tool for multilayer analysis and visualization of networks.” Journal of Complex Networks, 3(2), 159–176. ISSN 2051-1310. 10.1093/comnet/cnu038.

- Deza and Deza (2009) Deza MM, Deza E (2009). “Encyclopedia of distances.” In Encyclopedia of distances, pp. 1–583. Springer.

- Donner et al. (2010) Donner RV, Zou Y, Donges JF, Marwan N, Kurths J (2010). “Recurrence networks—a novel paradigm for nonlinear time series analysis.” New Journal of Physics, 12(3), 033025. 10.1088/1367-2630/12/3/033025.

- Esling and Agon (2012) Esling P, Agon C (2012). “Time-Series Data Mining.” ACM Comput. Surv., 45(1). ISSN 0360-0300. 10.1145/2379776.2379788.

- Ferreira et al. (2021a) Ferreira LN, Ferreira NCR, Macau EEN, Donner RV (2021a). “The effect of time series distance functions on functional climate networks.” The European Physical Journal Special Topics, 230(14), 2973–2998. 10.1140/epjs/s11734-021-00274-y.

- Ferreira et al. (2021b) Ferreira LN, Hong I, Rutherford A, Cebrian M (2021b). “The small-world network of global protests.” Scientific Reports, 11(1), 19215. 10.1038/s41598-021-98628-y.

- Giorgino (2009) Giorgino T (2009). “Computing and Visualizing Dynamic Time Warping Alignments in R: The dtw Package.” Journal of Statistical Software, 31(7), 1–24. 10.18637/jss.v031.i07.

- Girvan and Newman (2002) Girvan M, Newman MEJ (2002). “Community structure in social and biological networks.” Proceedings of the National Academy of Sciences, 99(12), 7821–7826. 10.1073/pnas.122653799.

- Goldberger et al. (2000) Goldberger AL, Amaral LAN, Glass L, Hausdorff JM, Ivanov PC, Mark RG, Mietus JE, Moody GB, Peng CK, Stanley HE (2000). “PhysioBank, PhysioToolkit, and PhysioNet.” Circulation, 101(23), e215–e220. 10.1161/01.CIR.101.23.e215.

- Hino et al. (2015) Hino H, Takano K, Murata N (2015). “mmpp: A Package for Calculating Similarity and Distance Metrics for Simple and Marked Temporal Point Processes.” The R Journal, 7(2), 237–248. 10.32614/RJ-2015-033.

- Holme and Saramäki (2012) Holme P, Saramäki J (2012). “Temporal networks.” Physics Reports, 519(3), 97–125. ISSN 0370-1573. 10.1016/j.physrep.2012.03.001. Temporal Networks.

- Keeling et al. (2005) Keeling CD, Piper SC, Bacastow RB, Wahlen M, Whorf TP, Heimann M, Meijer HA (2005). Atmospheric CO2 and 13CO2 Exchange with the Terrestrial Biosphere and Oceans from 1978 to 2000: Observations and Carbon Cycle Implications, pp. 83–113. Springer New York, New York, NY. ISBN 978-0-387-27048-7. 10.1007/0-387-27048-5_5.

- Kivelä et al. (2014) Kivelä M, Arenas A, Barthelemy M, Gleeson JP, Moreno Y, Porter MA (2014). “Multilayer networks.” Journal of Complex Networks, 2(3), 203–271. ISSN 2051-1310. 10.1093/comnet/cnu016.

- Lacasa et al. (2008) Lacasa L, Luque B, Ballesteros F, Luque J, Nuño JC (2008). “From time series to complex networks: The visibility graph.” Proceedings of the National Academy of Sciences, 105(13), 4972–4975. ISSN 0027-8424. 10.1073/pnas.0709247105.

- Lan et al. (2015) Lan X, Mo H, Chen S, Liu Q, Deng Y (2015). “Fast transformation from time series to visibility graphs.” Chaos: An Interdisciplinary Journal of Nonlinear Science, 25(8), 083105. 10.1063/1.4927835.

- Liu et al. (2016) Liu C, Springer D, Li Q, Moody B, Juan RA, Chorro FJ, Castells F, Roig JM, Silva I, Johnson AEW, Syed Z, Schmidt SE, Papadaniil CD, Hadjileontiadis L, Naseri H, Moukadem A, Dieterlen A, Brandt C, Tang H, Samieinasab M, Samieinasab MR, Sameni R, Mark RG, Clifford GD (2016). “An open access database for the evaluation of heart sound algorithms.” Physiological Measurement, 37(12), 2181–2213. 10.1088/0967-3334/37/12/2181.

- Luque et al. (2009) Luque B, Lacasa L, Ballesteros F, Luque J (2009). “Horizontal visibility graphs: Exact results for random time series.” Phys. Rev. E, 80, 046103. 10.1103/PhysRevE.80.046103.

- MacKay (2003) MacKay D (2003). Information Theory, Inference and Learning Algorithms. Cambridge University Press. ISBN 9780521642989.

- Meyer (2014) Meyer PE (2014). infotheo: Information-Theoretic Measures. R package version 1.2.0.

- Mitchell (2006) Mitchell M (2006). “Complex systems: Network thinking.” Artificial Intelligence, 170(18), 1194–1212. ISSN 0004-3702. 10.1016/j.artint.2006.10.002. Special Review Issue.

- Montero and Vilar (2014) Montero P, Vilar JA (2014). “TSclust: An R Package for Time Series Clustering.” Journal of Statistical Software, 62(1), 1–43. 10.18637/jss.v062.i01.

- Mori et al. (2016) Mori U, Mendiburu A, Lozano JA (2016). “Distance Measures for Time Series in R: The TSdist Package.” The R Journal, 8(2), 451–459. 10.32614/RJ-2016-058.

- Qiao et al. (2018) Qiao L, Zhang L, Chen S, Shen D (2018). “Data-driven graph construction and graph learning: A review.” Neurocomputing, 312, 336–351. ISSN 0925-2312. 10.1016/j.neucom.2018.05.084.

- Quian Quiroga et al. (2002) Quian Quiroga R, Kreuz T, Grassberger P (2002). “Event synchronization: A simple and fast method to measure synchronicity and time delay patterns.” Phys. Rev. E, 66, 041904. 10.1103/PhysRevE.66.041904.

- Reshef et al. (2011) Reshef DN, Reshef YA, Finucane HK, Grossman SR, McVean G, Turnbaugh PJ, Lander ES, Mitzenmacher M, Sabeti PC (2011). “Detecting Novel Associations in Large Data Sets.” Science, 334(6062), 1518–1524. 10.1126/science.1205438.

- Silva and Zhao (2018) Silva T, Zhao L (2018). Machine Learning in Complex Networks. Springer International Publishing. ISBN 9783319792347.

- Silva et al. (2021) Silva VF, Silva ME, Ribeiro P, Silva F (2021). “Time series analysis via network science: Concepts and algorithms.” WIREs Data Mining and Knowledge Discovery, 11(3), e1404. 10.1002/widm.1404.

- Ting-Ting et al. (2012) Ting-Ting Z, Ning-De J, Zhong-Ke G, Yue-Bin L (2012). “Limited penetrable visibility graph for establishing complex network from time series.” Acta Physica Sinica, 61(3), 030506. 10.7498/aps.61.030506.

- Tumminello et al. (2010) Tumminello M, Lillo F, Mantegna RN (2010). “Correlation, hierarchies, and networks in financial markets.” Journal of Economic Behavior & Organization, 75(1), 40–58. ISSN 0167-2681. 10.1016/j.jebo.2010.01.004. Transdisciplinary Perspectives on Economic Complexity.

- v. Rossum (2001) v Rossum MCW (2001). “A Novel Spike Distance.” Neural Computation, 13(4), 751–763. 10.1162/089976601300014321.

- Vinh et al. (2010) Vinh NX, Epps J, Bailey J (2010). “Information Theoretic Measures for Clusterings Comparison: Variants, Properties, Normalization and Correction for Chance.” Journal of Machine Learning Research, 11(95), 2837–2854.

- Wand (1997) Wand MP (1997). “Data-Based Choice of Histogram Bin Width.” The American Statistician, 51(1), 59–64. 10.1080/00031305.1997.10473591.

- Wang et al. (2013) Wang X, Mueen A, Ding H, Trajcevski G, Scheuermann P, Keogh E (2013). “Experimental comparison of representation methods and distance measures for time series data.” Data Mining and Knowledge Discovery, 26(2), 275–309. 10.1007/s10618-012-0250-5.

- Zou et al. (2019) Zou Y, Donner RV, Marwan N, Donges JF, Kurths J (2019). “Complex network approaches to nonlinear time series analysis.” Physics Reports, 787, 1–97. ISSN 0370-1573. 10.1016/j.physrep.2018.10.005. Complex network approaches to nonlinear time series analysis.