Email: {jganad,golin}@cse.ust.hk

Fully Dynamic $k$-Center in Low Dimensions via Approximate Furthest Neighbors

Abstract

Let $P$ be a set of points in some metric space. The approximate furthest neighbor problem is, given a second point set $C$, to find a point $p \in P$ that is a $(1+\epsilon)$-approximate furthest neighbor from $C$.

The dynamic version is to maintain $P$ over insertions and deletions of points, in a way that permits efficiently solving the approximate furthest neighbor problem for the current $P$.

We provide the first algorithm for solving this problem in metric spaces with finite doubling dimension. Our algorithm is built on top of the navigating net data structure.

An immediate application is two new algorithms for solving the dynamic $k$-center problem. The first dynamically maintains $(2+\epsilon)$-approximate $k$-centers in general metric spaces with bounded doubling dimension and the second maintains $(1+\epsilon)$-approximate Euclidean $k$-centers. Both these dynamic algorithms work by starting with a known corresponding static algorithm for solving approximate $k$-center, and replacing the static exact furthest neighbor subroutine used by that algorithm with our new dynamic approximate furthest neighbor one.

Unlike previous algorithms for dynamic $k$-center with those same approximation ratios, our new ones do not require knowing $k$ or $\epsilon$ in advance. In the Euclidean case, our algorithm also seems to be the first deterministic solution.

Keywords:

PTAS, Dynamic Algorithms, $k$-center, Furthest Neighbor.

1 Introduction

The main technical result of this paper is an efficient procedure for calculating approximate furthest neighbors from a dynamically changing point set $P$. This procedure, in turn, will lead to the development of two new simple algorithms for maintaining approximate $k$-centers in dynamically changing point sets.

Let $B(c,r)$ denote the ball centered at $c$ with radius $r$. The $k$-center problem is to find a minimum radius $r^*$ and associated centers $C = \{c_1, \ldots, c_k\}$ such that the union of balls $\bigcup_{i=1}^{k} B(c_i, r^*)$ contains all of the points in $P$.

In the arbitrary metric space version of the problem, the centers are restricted to be points in $P$. In the Euclidean $k$-center problem, $P \subset \mathbb{R}^D$ with $D \ge 1$ and $C$ may be any set of $k$ points in $\mathbb{R}^D$. The Euclidean $1$-center problem is also known as the minimum enclosing ball (MEB) problem.

A $\rho$-approximation algorithm would find a set of centers $C'$ and radius $r'$ in polynomial time such that $\bigcup_{c \in C'} B(c, r')$ contains all of the points in $P$ and $r' \le \rho\, r^*$. The $k$-center problem is known to be NP-hard to approximate with a factor smaller than 2 for arbitrary metric spaces [HN79], and with a factor smaller than 1.822 for Euclidean spaces [FG88].

Static algorithms

There exist two 2-approximation algorithms [Gon85, HS85] for the $k$-center problem on an arbitrary metric space; the best-known approximation factor for Euclidean $k$-center remains 2 even for two-dimensional space when $k$ is part of the input (see [FG88]). There are better results for the special case of the Euclidean $k$-center for fixed $k$, $k = 1$ or $2$ (e.g., see [BHPI02, BC03, AS10, KA15, KS20]). There are also PTASs [BHPI02, BC03, KS20] for the Euclidean $k$-center when $k$ and $D$ are constants.

Dynamic algorithms

In many practical applications, the data set $P$ is not static but changes dynamically over time, e.g., a new point may be inserted into or deleted from $P$ at each step. The centers and radius then need to be recomputed at selected query times. If only insertions are permitted, the problem is incremental; if both insertions and deletions are permitted, the problem is fully dynamic.

The running time of such dynamic algorithms is often split into the time required for an update (to register a change in the storing data structure) and the time required for a query (to solve the problem on the current dataset). In dynamic algorithms, we require both update and query time to be nearly logarithmic or constant. The static versions take linear time.

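Some known results on these problems are listed in Table 1. As is standard, many of them are stated in terms of the aspect ratio of point set $P$. Let $d_{\max} = \max\{d(x,y) : x, y \in P\}$ and $d_{\min} = \min\{d(x,y) : x, y \in P,\ x \neq y\}$. The aspect ratio of $P$ is $\Delta = d_{\max}/d_{\min}$.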
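As a small illustration of the aspect ratio, the following sketch computes it by brute force for a Euclidean point set. The function name `aspect_ratio` and the sample points are hypothetical, and the points are assumed to be pairwise distinct so that the minimum distance is positive.

```python
import itertools
import math

def aspect_ratio(points):
    """Largest pairwise distance divided by smallest pairwise distance.

    Assumes at least two points, all pairwise distinct (illustrative helper).
    """
    dists = [math.dist(p, q) for p, q in itertools.combinations(points, 2)]
    return max(dists) / min(dists)

# Hypothetical sample: pairwise distances are 1, 8 and sqrt(65).
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 8.0)]
ratio = aspect_ratio(points)
```

This brute-force computation takes quadratic time; it is only meant to make the definition concrete, not to suggest how a dynamic structure would track the quantity.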

The algorithms listed in the table work under slightly different models. More explicitly:

1. For arbitrary metric spaces, both [GHL+21] and the current paper assume that the metric space has a bounded doubling dimension (see Definition 2).

2. In “Low dimension”, update time may be exponential in the dimension; in “High dimension” it may not.

3. The “fixed” column denotes parameter(s) that must be fixed in advance when initializing the corresponding data structure, e.g., $k$ and/or $\epsilon$. In addition, in both [SS19, BEJM21] for high dimensional space, a constant selected in advance appears in both the approximation factor and running time.

   The data structure used in the current paper is the navigating net from [KL04]. It does not require knowing $k$ or $\epsilon$ in advance but instead supports them as parameters to the query.

4. In [Cha09], (avg) denotes that the update time is in expectation (it is a randomized algorithm).

5. Schmidt and Sohler [SS19] answer the slightly different membership query: given a point, it returns the cluster containing that point. In low dimension, the running time of their algorithm is expected and amortized.

Our contributions and techniques

Our main results are two algorithms for solving the dynamic approximate $k$-center problem in, respectively, arbitrary metric spaces with a finite doubling dimension and in Euclidean space.

1. Our first new algorithm is for any metric space with finite doubling dimension:

Theorem 1.1

Let be a metric space with a finite doubling dimension . Let be a dynamically changing set of points. We can maintain in time per point insertion and deletion so as to support approximate -center queries in time.

Compared with previous results (see Table 1), our data structure does not require knowing $k$ or $\epsilon$ in advance, whereas constructing the previous data structures requires $k$ or $\epsilon$ as input.

2. Our second new algorithm is for the Euclidean $k$-center problem:

Theorem 1.2

Let be a dynamically changing set of points. We can maintain in time per point insertion and deletion so as to support approximate -center queries in time.

This algorithm seems to be the first deterministic dynamic solution for the Euclidean $k$-center problem. Chan [Cha09] presents a randomized dynamic algorithm but does not provide a way to derandomize it.

The motivation for our new approach was the observation that many previous results on static $k$-center, e.g., [BC03, BHPI02, Cha09, Gon85, KS20], work by iteratively searching for the furthest neighbor in $P$ from a changing set of points $C$.

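Consider a set of points $P$ in some metric space $(\mathcal{X}, d)$. A nearest neighbor in $P$ to a query point $q$ is a point $p \in P$ satisfying $d(q,p) = \min_{p' \in P} d(q,p')$. A $(1+\epsilon)$-approximate nearest neighbor to $q$ is a point $p \in P$ satisfying $d(q,p) \le (1+\epsilon) \min_{p' \in P} d(q,p')$.

Similarly, a furthest neighbor to a query point $q$ is a $p \in P$ satisfying $d(q,p) = \max_{p' \in P} d(q,p')$. A $(1+\epsilon)$-approximate furthest neighbor to $q$ is a point $p \in P$ satisfying $\max_{p' \in P} d(q,p') \le (1+\epsilon)\, d(q,p)$.

There exist efficient algorithms for maintaining a dynamic point set $P$ (under insertions and deletions) that, given query point $q$, quickly permit calculating approximate nearest [KL04] and furthest [Bes96, PSSS15, Cha16] neighbors to $q$.

A $(1+\epsilon)$-approximate nearest neighbor to a query set $C$ is a point $p \in P$ satisfying $d(C,p) \le (1+\epsilon) \min_{p' \in P} d(C,p')$, where $d(C,p) = \min_{c \in C} d(c,p)$. Because “nearest neighbor” is decomposable, i.e., $\min_{p' \in P} d(C,p') = \min_{c \in C} \min_{p' \in P} d(c,p')$, [KL04] also permits efficiently calculating an approximate nearest neighbor to set $C$ from a dynamically changing $P$.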

An approximate furthest neighbor to a query set $C$ is similarly defined as a point $p \in P$ satisfying $\max_{p' \in P} d(C,p') \le (1+\epsilon)\, d(C,p)$. Our main new technical result is Theorem 2.1, which permits efficiently calculating an approximate furthest neighbor to query set $C$ from a dynamically changing $P$. We note that, unlike nearest neighbor, furthest neighbor is not a decomposable problem, and such a procedure does not seem to have been previously known.
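The contrast between the decomposable and the non-decomposable case can be checked on a tiny instance. The sketch below is a brute-force illustration only: the helper names are hypothetical, the distance from a point to a set is taken to be the distance to its closest member, and the approximation test assumes the convention that the true maximum is at most $(1+\epsilon)$ times the distance of the returned point.

```python
import math

def dist_to_set(p, C):
    """d(C, p): distance from p to its closest point of C."""
    return min(math.dist(p, c) for c in C)

def furthest_from_set(P, C):
    """Exact furthest neighbor in P to the set C, by linear scan."""
    return max(P, key=lambda p: dist_to_set(p, C))

def is_approx_furthest(p, P, C, eps):
    """Assumed convention: max_q d(C, q) <= (1 + eps) * d(C, p)."""
    best = max(dist_to_set(q, C) for q in P)
    return best <= (1 + eps) * dist_to_set(p, C)

# Three points on a line; the query set holds the two endpoints.
P = [(0.0,), (5.0,), (10.0,)]
C = [(0.0,), (10.0,)]

# Nearest neighbor decomposes: answering each c separately and taking
# the minimum gives the same value as the set query.
per_point_nearest = min(min(math.dist(p, c) for p in P) for c in C)
set_nearest = min(dist_to_set(p, C) for p in P)

# Furthest neighbor does not: the per-point answers are the endpoints,
# which are at distance 0 from C, while the set answer is the midpoint.
per_point_furthest = [max(P, key=lambda p: math.dist(p, c)) for c in C]
set_furthest = furthest_from_set(P, C)
```

Here no per-point furthest neighbor is even an approximate furthest neighbor to the set, which is why the set query needs its own procedure.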

This technical result permits the creation of new algorithms for solving the dynamic $k$-center problem in low dimensions.

2 Searching for a $(1+\epsilon)$-Approximate Furthest Point in a Dynamically Changing Point Set

Let denote a fixed metric space.

Definition 1

Let be finite sets of points and . Set

is a furthest neighbor in to if

is a furthest neighbor in to set if

is a -approximate furthest neighbor in to if

is a -approximate furthest neighbor in to if

and will, respectively, denote procedures returning a furthest neighbor and a -approximate furthest neighbor to in

and will, respectively, denote procedures returning a furthest neighbor and -approximate furthest neighbor to in

Our algorithm assumes that has finite doubling dimension.

Definition 2 (Doubling Dimensions)

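The doubling dimension of a metric space $(\mathcal{X}, d)$ is the minimum value $\dim(\mathcal{X})$ such that any ball $B(x,r)$ in $\mathcal{X}$ can be covered by $2^{\dim(\mathcal{X})}$ balls of radius $r/2$.

It is known that the doubling dimension of the Euclidean space $\mathbb{R}^D$ is $\Theta(D)$ [H+01].

Now let $(\mathcal{X}, d)$ be a metric space with a finite doubling dimension and $P \subseteq \mathcal{X}$ be a finite set of points. Recall that $d_{\max} = \max\{d(x,y) : x, y \in P\}$ and $d_{\min} = \min\{d(x,y) : x, y \in P,\ x \neq y\}$. The aspect ratio of $P$ is $\Delta = d_{\max}/d_{\min}$.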

Our main technical theorem (proven below in Section 2.2) is:

Theorem 2.1

Let be a metric space with finite doubling dimension and be a point set stored by a navigating net data structure [KL04]. Let be another point set. Then, we can find a -approximate furthest point among to in time, where is the aspect ratio of set .

The navigating net data structure [KL04] is described in more detail below.

2.1 Navigating Nets [KL04]

Navigating nets are very well-known structures for dynamically maintaining points in a metric space with finite doubling dimension, in a way that permits approximate nearness queries. To the best of our knowledge they have not been previously used for approximate “furthest point from set” queries.

To describe the algorithm, we first need to quickly review some basic known facts about navigating nets. The following lemma is critical to our analysis.

Lemma 1

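[KL04] Let $(\mathcal{X}, d)$ be a metric space and $Y \subseteq \mathcal{X}$. If the aspect ratio of the metric induced on $Y$ is at most $\Delta$ and $\Delta \ge 2$, then $|Y| \le \Delta^{O(\dim(\mathcal{X}))}$.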

We next introduce some notation from [KL04]:

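Definition 3 ($r$-net)

[KL04] Let $(\mathcal{X}, d)$ be a metric space. For a given parameter $r > 0$, a subset $Y \subseteq \mathcal{X}$ is an $r$-net of $\mathcal{X}$ if it satisfies:

(1) For every $x, y \in Y$ with $x \neq y$, $d(x,y) \ge r$;

(2) $\forall x \in \mathcal{X}$, there exists at least one $y \in Y$ such that $x \in B(y, r)$.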
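The two net conditions can be made concrete with a greedy construction over a finite point set. This is a minimal sketch and not the navigating net update procedure of [KL04]: the function names are hypothetical, distances are Euclidean, and the covering check assumes closed balls (distance at most $r$).

```python
import math

def greedy_r_net(points, r):
    """Scan the points once, keeping a point only if it is at distance
    at least r from every point kept so far."""
    net = []
    for p in points:
        if all(math.dist(p, y) >= r for y in net):
            net.append(p)
    return net

def is_r_net(net, points, r):
    """Check condition (1), packing, and condition (2), covering."""
    packing = all(
        math.dist(x, y) >= r
        for i, x in enumerate(net)
        for y in net[i + 1:]
    )
    covering = all(any(math.dist(p, y) <= r for y in net) for p in points)
    return packing and covering

# Hypothetical sample points on a line.
points = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (3.0, 0.0), (3.2, 0.0)]
net = greedy_r_net(points, 1.0)
```

Every skipped point was rejected because some kept point lies within distance $r$ of it, so the greedy output always satisfies both conditions; the result depends on the scan order.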

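We now start the description of the navigating net data structure. Set $\Gamma = \{2^i : i \in \mathbb{Z}\}$. Each $r \in \Gamma$ is called a scale. For every $r \in \Gamma$, $Y_r$ will denote an $r$-net of $Y_{r/2}$. The base case is that for every scale $r \le d_{\min}$, $Y_r = P$.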

Let be some fixed constant. For each scale and each , the data structure stores the set of points

| (1) |

is called the scale navigation list of .

Let denote the smallest satisfying and denote the largest satisfying for every . Scales are called non-trivial scales; all other scales are called trivial. Since and , the number of non-trivial scales is
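To see why only logarithmically many scales (in the aspect ratio) carry information, the following sketch builds a nested sequence of nets bottom-up, doubling the scale until a single point remains. It is a static illustration under stated assumptions, not the dynamic structure itself: scales are taken to be powers of two, each net is built greedily from the net one scale below, and the names `greedy_net` and `nested_nets` are hypothetical.

```python
import itertools
import math

def greedy_net(points, r):
    """Greedy r-net: keep a point only if it is >= r from all kept points."""
    net = []
    for p in points:
        if all(math.dist(p, y) >= r for y in net):
            net.append(p)
    return net

def nested_nets(P):
    """Return {i: net at scale 2^i} and the aspect ratio of P.

    Assumes at least two pairwise distinct points.
    """
    dists = [math.dist(p, q) for p, q in itertools.combinations(P, 2)]
    d_min, d_max = min(dists), max(dists)
    i = math.floor(math.log2(d_min))   # largest i with 2^i <= d_min
    nets = {i: list(P)}                # at this scale every point is kept
    while len(nets[i]) > 1:
        i += 1
        nets[i] = greedy_net(nets[i - 1], 2.0 ** i)
    return nets, d_max / d_min

P = [(0.0,), (1.0,), (2.0,), (9.0,)]
nets, delta = nested_nets(P)
# Scales 2^0 .. 2^4 are produced; the nets shrink from 4 points to 1.
```

Below the smallest pairwise distance every net equals the whole set, and above the largest pairwise distance every net is a single point, so the loop runs for at most about $\log_2$ of the aspect ratio plus a constant number of scales.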

Finally, we need a few more basic properties of navigating nets:

Lemma 2

[KL04](Lemma 2.1 and 2.2) For each scale , we have:

-

(1)

,

-

(2)

, ;

-

(3)

, .

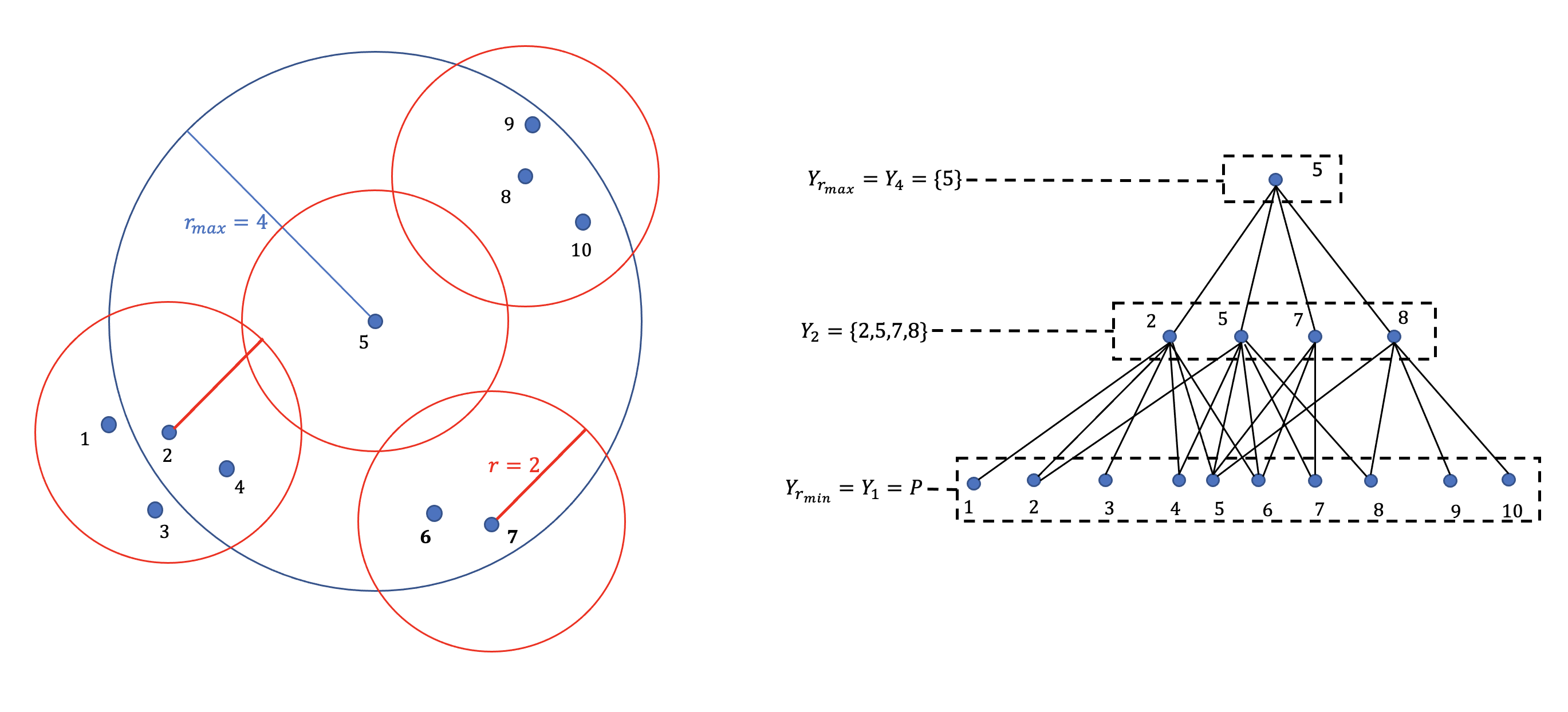

We provide an example (Figure 3) of navigating nets in the Appendix. Navigating nets were originally designed to solve dynamic approximate nearest neighbor queries and are useful because they can be quickly updated.

Theorem 2.2

([KL04]) Navigating nets use words. The data structure can be updated with an insertion of a point to or a deletion of a point in in time. This includes distance computations.

Note: Although the update time of the navigating net depends on the aspect ratio, it does not explicitly maintain the value of the aspect ratio. Instead it dynamically maintains the values and . The update time depends on the number of non-trivial scales, but without actually knowing the aspect ratio.

2.2 The Approximate Furthest Neighbor Algorithm

Input:

A navigating net for set , set and a constant .

Output: A -approximate furthest neighbor among to

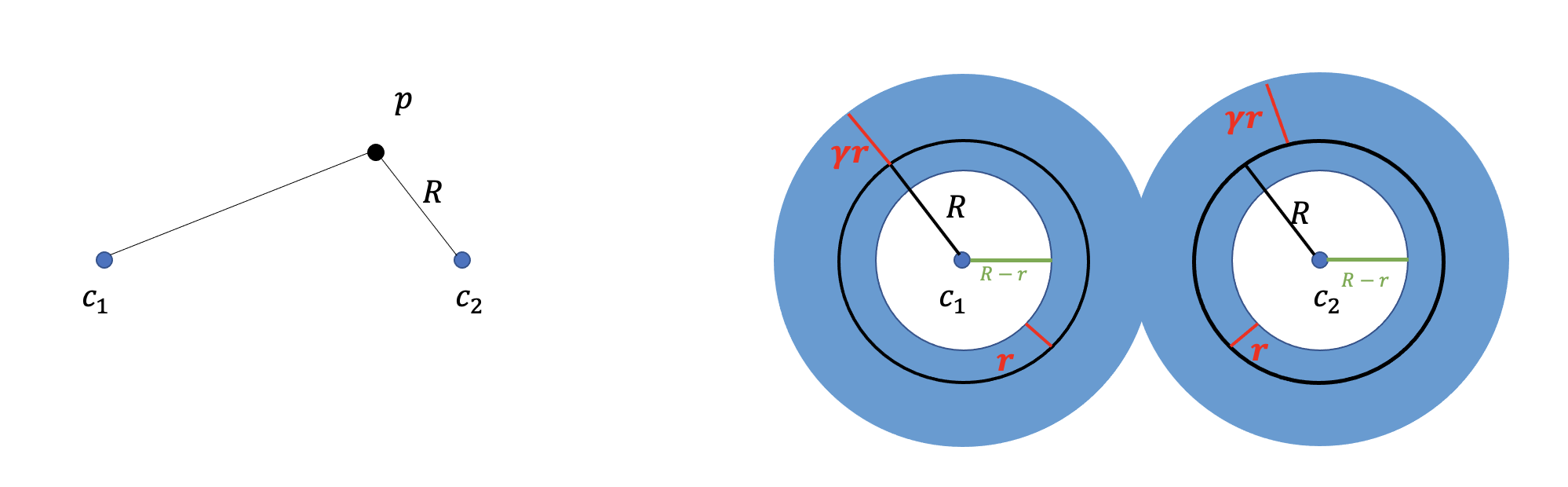

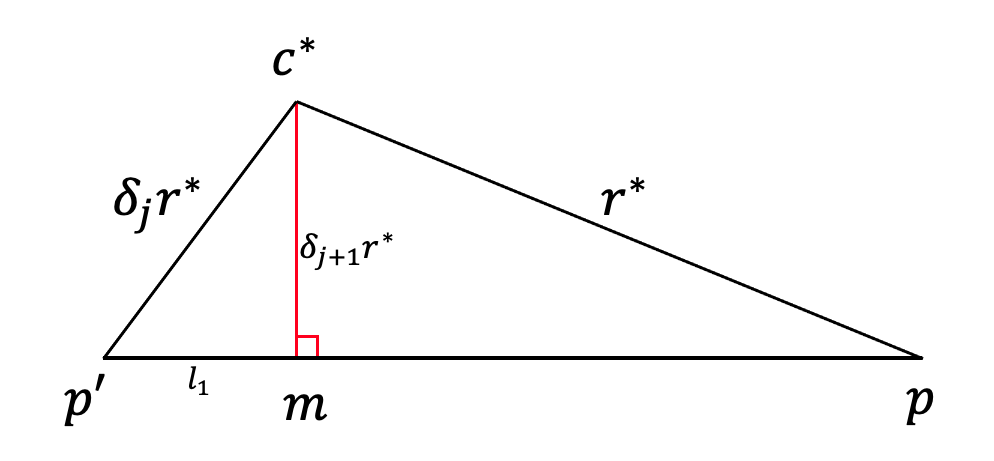

is given in Algorithm 1. Figure 1 provides some geometric intuition. requires that be stored in a navigating net and the following definitions:

Definition 4 (The sets )

-

•

, where ;

-

•

If is defined, .

Note that, by induction,

We now prove that returns a -approximate furthest point among to . We start by showing that, for every scale the furthest point to is close to

Lemma 3

Let be the furthest point to in . Then, every set as defined in Definition 4, contains a point satisfying

Proof

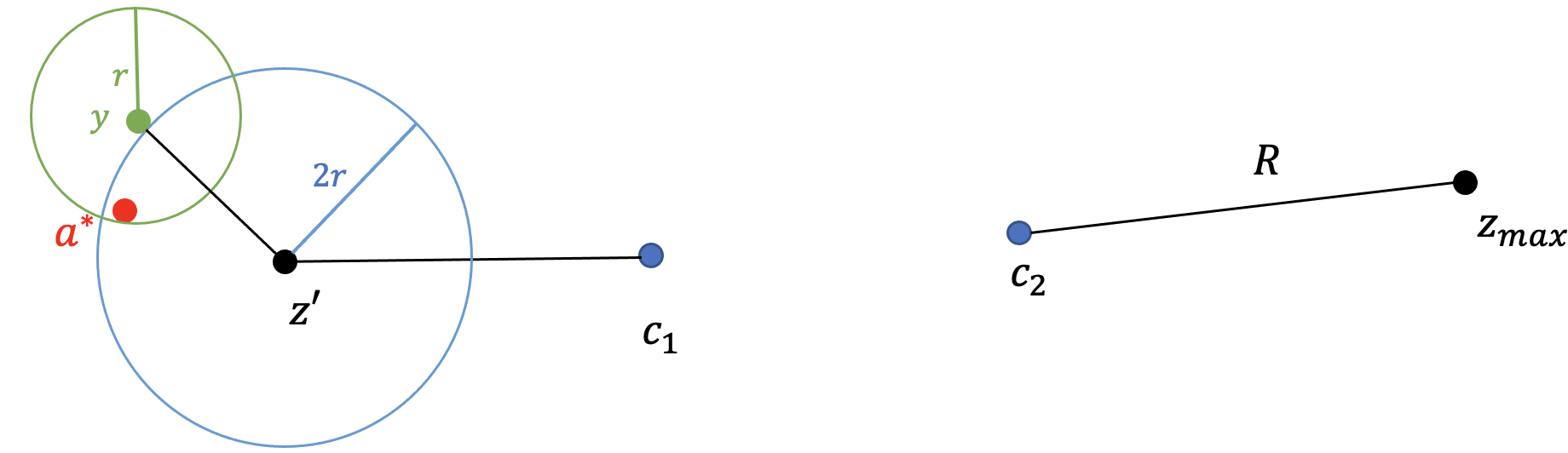

The proof is illustrated in Figure 2. It works by downward induction on . In the base case and , thus .

For the inductive step, we assume that satisfies the induction hypothesis, i.e, contains a point satisfying . We will show that contains a point satisfying .

Since is a -net of , there exists a point satisfying (Lemma 2(2)). Then,

and thus, because Finally, let . Then

Thus .

Lemma 3 permits bounding the approximation ratio of algorithm .

Lemma 4

Algorithm returns a point whose distance to satisfies .

Proof

Let denote the value of at the end of the algorithm. Let be the furthest point to among . Consider the two following conditions on

- 1.

-

2.

. In this case, recall that and that for every scale and , . Then

Now let be the largest scale for which and the scale at which AFN() terminates.

From point 1, Equation (2) holds with

If , then and the lemma is correct.

If then , so from point 2, and

Since satisfies condition 1, the second inequality holds. Hence, the lemma is again correct.

We now analyze the running time of .

Lemma 5

In each iteration of , .

Proof

We actually prove the equivalent statement that .

For all , there exists a point satisfying , i.e, . Let . Thus,

An iteration of will construct only when . Therefore, This implies

Next notice that, since is a -net, .

Finally, for fixed , , we have . Thus, the aspect ratio of the set is at most . Therefore, by Lemma 1,

Thus,

Lemma 6

runs at most iterations.

Proof

The algorithm starts with and concludes when Thus, the total number of iterations is at most

A more careful analysis leads to the proof of Theorem 2.1. Due to space limitations, the full proof is deferred to Appendix 0.B.

3 Modified -Center Algorithms

will now be used to design two new dynamic -center algorithms.

Lemma 2 hints that elements in can be approximate centers. This observation motivated Goranci et al. [GHL+21] to search for the smallest such that and return the elements in as centers. Unfortunately, used this way, the original navigating nets data structure only returns an -approximation solution. [GHL+21] improve this by simultaneously maintaining multiple nets.

Although we also apply navigating nets to construct approximate -centers, our approach is very different from that of [GHL+21]. We do not use the elements in as centers themselves. We only use the navigating net to support . Our algorithms result from substituting for deterministic furthest neighbor procedures in static algorithms.

The next two subsections introduce the two modified algorithms.

3.1 A Modified Version of Gonzalez’s [Gon85] Greedy Algorithm

Gonzalez [Gon85] described a simple and now well-known $O(kn)$ time $2$-approximation algorithm that works for any metric space. It operates by performing exact “furthest neighbor from a set” queries. We just directly replace those exact queries with our new approximate furthest neighbor query procedure.

It is then straightforward to modify Gonzalez’s proof from [Gon85] that his original algorithm is a $2$-approximation one, to prove that our new algorithm is a $(2+\epsilon)$-approximation one. The details of the algorithm (Algorithm 3) and the modified proof are provided in Appendix 0.C. This yields:

Theorem 3.1

Let be a finite set of points in a metric space . Suppose can be implemented in time. Algorithm 3 constructs a -approximate solution for the -center problem in time.

Plugging Theorem 2.1 into this proves Theorem 1.1.
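The substitution described in this subsection can be sketched with the furthest neighbor query passed in as a parameter. This is a hedged illustration and not a transcription of Algorithm 3: all names are hypothetical, both oracles below are brute-force stand-ins for the navigating-net procedure, and the returned radius is inflated by $(1+\epsilon)$ on the assumption that the oracle's answer is within that factor of the true maximum.

```python
import math

def dist_to_set(p, C):
    """Distance from p to its closest point of C."""
    return min(math.dist(p, c) for c in C)

def exact_furthest(P, C):
    """Exact furthest neighbor in P to the set C (linear scan)."""
    return max(P, key=lambda p: dist_to_set(p, C))

def sloppy_furthest(eps):
    """Stand-in (1 + eps)-approximate oracle: returns the first point
    whose distance is within a (1 + eps) factor of the maximum."""
    def oracle(P, C):
        best = max(dist_to_set(p, C) for p in P)
        return next(p for p in P if (1 + eps) * dist_to_set(p, C) >= best)
    return oracle

def greedy_k_center(P, k, furthest=exact_furthest, eps=0.0):
    """Greedy center selection with a pluggable furthest-neighbor oracle."""
    centers = [P[0]]                       # arbitrary first center
    while len(centers) < min(k, len(P)):
        centers.append(furthest(P, centers))
    q = furthest(P, centers)
    radius = (1 + eps) * dist_to_set(q, centers)  # covers all of P
    return centers, radius

P = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
exact_centers, exact_radius = greedy_k_center(P, 2)
approx_centers, approx_radius = greedy_k_center(
    P, 2, sloppy_furthest(0.5), eps=0.5
)
```

With the exact oracle this is the classical greedy algorithm; with an approximate oracle the only change to the caller is the inflated radius, which is what keeps the output feasible.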

3.2 A Modified Version of the Kim and Schwarzwald [KS20] Algorithm

In what follows, is some arbitrary dimension.

In 2020, Kim and Schwarzwald [KS20] gave an time $(1+\epsilon)$-approximation algorithm for the Euclidean $1$-center (MEB) problem. They further showed how to extend this to obtain a $(1+\epsilon)$-approximation to the Euclidean $k$-center in time.

Their algorithms use, as a subroutine, a (or ) time brute-force procedure for finding (or

This subsection shows how replacing (or ) by (or ) along with some other minor small changes, maintains the correctness of the algorithm. Our modified version of Kim and Schwarzwald [KS20]’s MEB algorithm is presented as Algorithm 2.

Let be a constant. Their algorithm runs in iterations. The ’th iteration starts from some point and uses time to search for the point furthest from The iteration then selects a “good” point on the line segment as the starting point for the next iteration, where “good” means that the distance from to the optimal center is somehow bounded. The time to select such a "good" point is . The total running time of their algorithm is . They also prove that the performance ratio of their algorithm is at most .

The running time of their algorithm is dominated by the time required to find the point . As we will see in Theorem 3.2 below, finding the exact furthest point was not necessary. This could be replaced by
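The general shape of such an iteration, with the furthest-point query isolated as a replaceable subroutine, can be sketched as follows. Note that this sketch does not use the point-selection rule of [KS20], whose details are not reproduced here; it substitutes the simpler update of Bădoiu and Clarkson [BC03], which moves the current center a $1/(i+1)$ fraction of the way toward the furthest point in step $i$ and runs for $\lceil 1/\epsilon^2 \rceil$ steps. All function names are hypothetical, and the final radius is computed by an exact scan for clarity.

```python
import math

def furthest_point(P, c):
    """Exact furthest point of P from the location c (linear scan)."""
    return max(P, key=lambda p: math.dist(p, c))

def approx_meb(P, eps, furthest=furthest_point):
    """Iterative MEB sketch using the [BC03] step rule (not the [KS20] rule).

    `furthest` is the only place where P is searched, so it is the
    subroutine that a dynamic structure would replace.
    """
    c = list(P[0])                          # start at an arbitrary point
    steps = math.ceil(1.0 / eps ** 2)
    for i in range(1, steps + 1):
        p = furthest(P, c)
        # Move a 1/(i+1) fraction of the way from c toward p.
        c = [ci + (pi - ci) / (i + 1) for ci, pi in zip(c, p)]
    radius = max(math.dist(p, c) for p in P)
    return tuple(c), radius

# Four points on the unit circle around (1, 0): optimal radius is 1.
P = [(0.0, 0.0), (2.0, 0.0), (1.0, 1.0), (1.0, -1.0)]
center, radius = approx_meb(P, eps=0.1)
```

The per-iteration cost is dominated by the call to `furthest`, which matches the observation above that the furthest-point search is the bottleneck.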

The first result is that this minor modification of Kim and Schwarzwald’s [KS20] algorithm still produces a $(1+\epsilon)$-approximation.

Theorem 3.2

Let be a set of points whose minimum enclosing ball has (unknown) radius Suppose can be implemented in time.

Let be the values returned by Algorithm 2. Then and . Thus Algorithm 2 constructs a -approximate solution and it runs in time.

Plugging Theorem 2.1 into Theorem 3.2 proves Theorem 1.2 for $k = 1$.

Input:

A set of points and a constant .

Output: A -approximate minimum enclosing ball containing all points in .

The algorithm presented is just a slight modification of that of [KS20]. The differences are that in

[KS20],

line 4 was originally

and the four terms on lines 8 and 9, were all originally

Proof

Every ball generated by Algorithm 2 encloses all of the points in i.e.,

| (3) |

To prove the correctness of the algorithm it suffices to show that Without loss of generality, we assume that

Each iteration of lines 4-9 of must end in one of the two following cases:

-

(1)

,

-

(2)

.

Note that if Case (1) holds for some then, directly from Equation 3 (using ),

This implies that if Case 1 ever holds, Algorithm 2 is correct.

The main lemma is

Lemma 7

If, , case (2) holds, i.e., , then

The proof of Lemma 7 is a straightforward modification of the proof given in Kim and Schwarzwald [KS20] for their original algorithm and is therefore deferred; for completeness, the full modified proof is provided in Section 0.D.1.

Lemma 7 implies that, by the end of the algorithm, Case 1 must have occurred at least once, so and the algorithm outputs a correct solution. Derivation of the running time of the algorithm is straightforward, completing the proof of Theorem 3.2.

[KS20] discuss (without providing details) how to use the “guessing” technique of [BHPI02, BC03] to extend their MEB algorithm to yield a $(1+\epsilon)$-approximation solution to the $k$-center problem for $k \ge 2$.

For MEB, the Euclidean -center, in each iteration, they maintained the location of a candidate center and computed a furthest point to among . For the Euclidean -centers, in each step, they maintain locations of a set of candidate centers, and compute a furthest point to among using a procedure.

Again we can modify their algorithm by replacing the procedure by a one, computing an approximate furthest point to among . This will prove Theorem 1.2.

The full details of a modified version of their algorithm have been provided in section 0.D.2, which uses in place of , as well as an analysis of correctness and run time.

4 Conclusion

Our main new technical contribution is an algorithm, AFN, that finds a $(1+\epsilon)$-approximate furthest point in $P$ to $C$. This works on top of a navigating net data structure [KL04] storing $P$.

The proofs of Theorems 1.1 and 1.2 follow immediately by maintaining a navigating net and plugging into Theorems 3.1 and 0.D.1, respectively.

These provide a fully dynamic and deterministic $(2+\epsilon)$-approximation algorithm for the $k$-center problem in a metric space with finite doubling dimension and a $(1+\epsilon)$-approximation algorithm for the Euclidean $k$-center problem, where $k$ and $\epsilon$ are parameters given at query time.

One limitation of our algorithm is that, because the approximate furthest neighbor procedure is built on top of navigating nets, it depends upon the aspect ratio $\Delta$. This is the only dependence of the $k$-center algorithm on $\Delta$. An interesting future direction would be to develop algorithms for approximate furthest neighbor queries in special metric spaces built on top of other structures that are independent of $\Delta$. This would automatically lead to algorithms for approximate $k$-center that, in those spaces, would also be independent of $\Delta$.

References

- [AS10] Pankaj K Agarwal and R Sharathkumar. Streaming algorithms for extent problems in high dimensions. In Proceedings of the twenty-first annual ACM-SIAM symposium on Discrete algorithms, pages 1481–1489. SIAM, 2010.

- [BC03] Mihai Bădoiu and Kenneth L Clarkson. Smaller core-sets for balls. In Proceedings of the fourteenth annual ACM-SIAM symposium on Discrete algorithms (SODA), volume 3, pages 801–802, 2003.

- [BEJM21] Mohammad Hossein Bateni, Hossein Esfandiari, Rajesh Jayaram, and Vahab Mirrokni. Optimal fully dynamic $k$-centers clustering. arXiv preprint arXiv:2112.07050, 2021.

- [Bes96] Sergei Bespamyatnikh. Dynamic algorithms for approximate neighbor searching. In 8th Canadian Conference on Computational Geometry (CCCG’96), pages 252–257, 1996.

- [BHPI02] Mihai Bădoiu, Sariel Har-Peled, and Piotr Indyk. Approximate clustering via core-sets. In Proceedings of the thirty-fourth annual ACM symposium on Theory of computing (STOC), pages 250–257, 2002.

- [CGS18] TH Hubert Chan, Arnaud Guerqin, and Mauro Sozio. Fully dynamic k-center clustering. In Proceedings of the 2018 World Wide Web Conference (WWW), pages 579–587, 2018.

- [Cha09] Timothy M Chan. Dynamic coresets. Discrete & Computational Geometry, 42(3):469–488, 2009.

- [Cha16] Timothy M Chan. Dynamic streaming algorithms for epsilon-kernels. In 32nd International Symposium on Computational Geometry (SoCG 2016). Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik, 2016.

- [FG88] Tomás Feder and Daniel Greene. Optimal algorithms for approximate clustering. In Proceedings of the twentieth annual ACM symposium on Theory of computing, pages 434–444, 1988.

- [GHL+21] Gramoz Goranci, Monika Henzinger, Dariusz Leniowski, Christian Schulz, and Alexander Svozil. Fully dynamic k-center clustering in low dimensional metrics. In 2021 Proceedings of the Workshop on Algorithm Engineering and Experiments (ALENEX), pages 143–153. SIAM, 2021.

- [Gon85] Teofilo F Gonzalez. Clustering to minimize the maximum intercluster distance. Theoretical Computer Science, 38:293–306, 1985.

- [H+01] Juha Heinonen et al. Lectures on analysis on metric spaces. Springer Science & Business Media, 2001.

- [HN79] Wen-Lian Hsu and George L Nemhauser. Easy and hard bottleneck location problems. Discrete Applied Mathematics, 1(3):209–215, 1979.

- [HS85] Dorit S Hochbaum and David B Shmoys. A best possible heuristic for the k-center problem. Mathematics of operations research, 10(2):180–184, 1985.

- [KA15] Sang-Sub Kim and Hee-Kap Ahn. An improved data stream algorithm for clustering. Computational Geometry, 48(9):635–645, 2015.

- [KL04] Robert Krauthgamer and James R Lee. Navigating nets: Simple algorithms for proximity search. In Proceedings of the fifteenth annual ACM-SIAM symposium on Discrete algorithms (SODA), pages 798–807, 2004.

- [KS20] Sang-Sub Kim and Barbara Schwarzwald. A $(1+\epsilon)$-approximation for the minimum enclosing ball problem in $\mathbb{R}^d$. In the 36th European Workshop on Computational Geometry (EuroCG), 2020.

- [PSSS15] Rasmus Pagh, Francesco Silvestri, Johan Sivertsen, and Matthew Skala. Approximate furthest neighbor in high dimensions. In International Conference on Similarity Search and Applications, pages 3–14. Springer, 2015.

- [SS19] Melanie Schmidt and Christian Sohler. Fully dynamic hierarchical diameter k-clustering and k-center. arXiv preprint arXiv:1908.02645, 2019.

Appendix 0.A A Navigating Nets Example

Appendix 0.B The Proof of Theorem 2.1

Proof

It only remains to show that the running time of is bounded by . We do this by splitting the set of scales processed by line 2 of Algorithm 1 into two ranges, (1) and (2)

We then study the two cases separately : For (1) we will show a better bound on than Lemma 5; for (2) we will show that the number of processed scales is small.

-

(1)

When , the size of is small. To see this note that

Thus, for each , there exists a such that , i.e., . Additionally, , so, , . Since the diameter of the ball is smaller than , for every . Therefore, .

By Lemma 6, the number of iterations is at most . Hence, the total running time for Case (1) is

-

(2)

When , although the size of can be larger, the number of possible iterations will be small.

Thus, the total number of Case (2) iterations is at most and the total running time in Case (2) is

Combining (1) and (2), the total running time of the algorithm is

Appendix 0.C The Modified Version of Gonzalez’s Greedy Algorithm

As noted in the main text, Algorithm 3 is essentially Gonzalez’s [Gon85] original algorithm with replaced by

0.C.1 The Algorithm

Input:

A set of points , positive integer and a constant .

Output:

A set () and radius such that

and

Gonzalez’s original algorithm only returned since in the deterministic case could be calculated in time.

0.C.2 Proof of Theorem 3.1

As noted this proof is just a modification of the proof of correctness of Gonzalez’s [Gon85] original algorithm (which used rather than ).

Proof

(of Theorem 3.1)

Let and denote the solution returned by .

Let be the -approximate furthest neighbor from to returned. Thus

i.e., . The output of the algorithm is thus a feasible solution.

Let denote an optimal center solution for the point set with being the optimal radius value. Recall that is the number of points in set . We consider two cases:

-

Case 1

,

Fix Let be such that Now let satisfy .

Then, by the triangle inequality, .

We have just shown that . In particular,

Therefore,

-

Case 2

There exists such that .

Let be the th point added into and . Thus . In Case 2, contains at least two points and (). From line 3 in , we have . Furthermore,Then, consider the radius returned by :

where the last inequality assumes, without loss of generality, that

Thus, , and, in both cases . Thus, always computes a -approximation solution.

Appendix 0.D Missing Details Associated with the Modified Kim and Schwarzwald [KS20] Algorithm

0.D.1 Proof of Lemma 7

As noted previously, this proof is a modification of the proof of correctness given by Kim and Schwarzwald [KS20] for their algorithm (which used rather than ).

The proof needs an important geometric observation due to Kim and Schwarzwald [KS20] (slightly rephrased here). This was an extension of an earlier observation by Kim and Ahn [KA15] that was used to design a streaming algorithm for the Euclidean 2-center problem.

Lemma 8

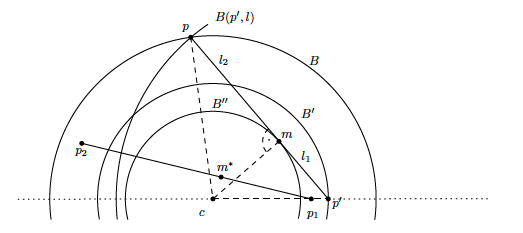

[KS20] (See Figure 4.) Fix Let and be two -dimensional balls with radii and around the same center point . Let and with .

Define to be the -dimensional ball centered at that is tangential to . Denote that tangent point as and define the distances and . Note that .

Consider any line segment satisfying , and . Then any point on with and lies inside .

Lemma 8 will imply that if case (2) always occurs, then the distance from to is bounded.

Corollary 1

If, , case (2) holds, i.e., , then and

Proof

The proof will be by induction. In the base case, and is arbitrarily selected in , so .

Now suppose that, , holds. From the induction hypothesis, we assume that and

Set , and .

Next, arbitrarily select a point on the boundary of Now construct ball . From the induction hypothesis,

If this would imply

contradicting that this is Case 2. Thus .

Since, this implies that must intersect . Arbitrarily select one of the two intersection points as . Next set

Finally, define to be the ball centered at that is tangent to line segment . Denote that tangent point as and define the distances and . We will show that , i.e., that , where is as defined on line 9 of Algorithm 2.

Note that . Thus, can be computed by the equation (illustrated in figure 5).

This solves to Thus

as required and, by construction, Furthermore,

Plugging into line 8 of Algorithm 2 yields

Since , we have

and .

From the induction hypothesis, , so . The definition of further implies .

We can now prove Lemma 7. We again note that this is just a slight modification of the proof given by Kim and Schwarzwald [KS20] for their original algorithm.

Proof

(of Lemma 7.)

Recall that the goal is to prove that if, , case (2) holds, i.e., , then

Let denote in step of the algorithm.

Recall that in the construction, is on the boundary of and is on the boundary of . Additionally, and line segment is vertical to line segment Recall that

Now define so that

Note that

Since is a right triangle, so, .

We have therefore just proven that if, , case (2) holds, i.e., , then for all such and, in particular,

By construction, and . Plugging the first (twice) into the second yields

or

Combining this with yields . Set . Then

Thus

Recall that , , i.e., is an isosceles triangle. Therefore, and Iterating the equation above yields . Thus, . Thus, if , then which we previously saw was not possible.

0.D.2 The Actual Modified Algorithm for Euclidean $k$-Center

Input:

A set of points , positive integer and a constant .

Output: A set () and radius such that

and

In the algorithm, each , is either undefined or a point in

denotes the set of defined is the set of all functions from

to

Before starting, we provide a brief intuition. If, for each , the algorithm knew in advance in which of the clusters is located, it could solve the problem by running Algorithm 2 separately for each cluster and returning the largest radius it found. Since it doesn’t know that information in advance, it “guesses” the location. This guess is encoded by the function introduced in Algorithm 4. It runs this procedure for every possible guess. Since one of the guesses must be correct, the algorithm returns a correct answer.
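The guessing idea can be illustrated by brute-force enumeration of all assignment sequences. The sketch below is a toy version and not Algorithm 4: the names are hypothetical, the furthest-point search is an exact scan, and each cluster's tentative center is simply the centroid of the points guessed into it, which is a stand-in for the MEB update rule.

```python
import itertools
import math

def dist_to_set(p, C):
    """Distance from p to its closest point of C."""
    return min(math.dist(p, c) for c in C)

def run_with_guess(P, k, guess):
    """Follow one guess: the point examined at step i is assigned to
    cluster guess[i], and that cluster's tentative center is updated."""
    clusters = [[] for _ in range(k)]
    centers = [None] * k                   # None means "undefined"
    q = P[0]                               # arbitrary starting point
    for j in guess:
        clusters[j].append(q)
        # Toy center rule (assumption): centroid of the guessed points.
        centers[j] = tuple(sum(x) / len(clusters[j]) for x in zip(*clusters[j]))
        defined = [c for c in centers if c is not None]
        q = max(P, key=lambda p: dist_to_set(p, defined))
    defined = [c for c in centers if c is not None]
    return defined, max(dist_to_set(p, defined) for p in P)

def best_over_guesses(P, k, steps):
    """Try every function from step indices to cluster indices."""
    guesses = itertools.product(range(k), repeat=steps)
    return min((run_with_guess(P, k, g) for g in guesses), key=lambda res: res[1])

P = [(0.0, 0.0), (2.0, 0.0), (10.0, 0.0), (12.0, 0.0)]
centers, radius = best_over_guesses(P, k=2, steps=4)
num_guesses = 2 ** 4                       # k ** steps sequences are tried
```

Every guess yields a feasible cover because the radius is measured after the fact, so taking the minimum over all guesses is safe; the cost is the exponential number of sequences, which is why the number of steps must stay small.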

Again, we emphasize that Algorithm 4 is essentially the algorithm alluded to in Kim and Schwarzwald [KS20], with calls to the exact furthest neighbor procedure replaced by calls to the approximate one. (We write “alluded to” because [KS20] do not actually provide details. They only say that they are utilizing the guessing technique from [BC03]. In our algorithm, we have provided full details of how this can be done.)

Theorem 0.D.1

Let be a finite set of points. Suppose can be implemented in time. Then an -approximate -center solution for can be constructed in time.

Proof

Let be a set of optimal centers and Partition the points in into so that Let i.e., is a minimum enclosing ball for Note that

Fix to be some arbitrary function. Lines 3-12 of Algorithm 4 maintains a list of tentative centers; denotes the list at the start of iteration Note that some of the might be undefined, i.e., do not exist. will denote the set of defined items in the list at the start of iteration During iteration , the list updates (only the) tentative center and also constructs a radius

The algorithm starts with all of the being undefined, chooses some arbitrary point of calls it and then sets

At step it sets and

Note that the definitions of and immediately imply

| (4) |

Thus, lines 3-12 return and that cover all points in in time.

So far, the analysis has not considered lines 11-12 of the algorithm.

The algorithm arbitrarily chooses Now consider the unique function that always returns the index of the that contains i.e., For this lines 11 and 12 of the algorithm work as if they are running the original modified MEB algorithm on each of the separately.

By the generalized pigeonhole principle, there must exist at least one index such that at least times. For such a consider the value of for which exactly times. Then, from the analysis of Algorithm 2, for this particular

so

Thus

In particular, lines 3-12 using returns a -approximate solution.

Algorithm 4 runs lines 3-12 on all different possible functions Since this includes the full algorithm also returns a -approximate solution.