Fundamental limits to learning closed-form mathematical models from data

Abstract

Given a finite and noisy dataset generated with a closed-form mathematical model, when is it possible to learn the true generating model from the data alone? This is the question we investigate here. We show that this model-learning problem displays a transition from a low-noise phase in which the true model can be learned, to a phase in which the observation noise is too high for the true model to be learned by any method. Both in the low-noise phase and in the high-noise phase, probabilistic model selection leads to optimal generalization to unseen data. This is in contrast to standard machine learning approaches, including artificial neural networks, which in this particular problem are limited, in the low-noise phase, by their ability to interpolate. In the transition region between the learnable and unlearnable phases, generalization is hard for all approaches including probabilistic model selection.

Introduction

For a few centuries, scientists have described natural phenomena by means of relatively simple mathematical models such as Newton’s law of gravitation or Snell’s law of refraction. Sometimes, they arrived at these models deductively, starting from fundamental considerations; more frequently, however, they derived the models inductively from data. With increasing amounts of data available for all sorts of (natural and social) systems, one may argue that we are now in a position to inductively uncover new interpretable models for these systems. To this end, machine learning approaches that can automatically uncover closed-form models from data have recently been developed Džeroski and Todorovski (2007); Schmidt and Lipson (2009); Evans and Rzhetsky (2010); Brunton et al. (2016); Guimerà et al. (2020); Udrescu and Tegmark (2020); see Note (1). In physics alone, such approaches have been applied successfully to quantum systems Gentile et al. (2021), non-linear and chaotic systems Schmidt and Lipson (2009); Brunton et al. (2016), fluid mechanics Reichardt et al. (2020), and astrophysics Cranmer et al. (2020), among others Udrescu and Tegmark (2020).

A central assumption implicit in these approaches is that, given data, it is always possible to identify the correct underlying model. Here, we investigate the validity of this assumption. In particular, consider a dataset $D = \{(y_i, \mathbf{x}_i)\}$, with $i = 1, \dots, N$, generated using the closed-form model $m^*$, so that $y_i = m^*(\mathbf{x}_i, \theta^*) + \epsilon_i$, with $\theta^*$ being the parameters of the model and $\epsilon_i$ a random unbiased observation noise drawn from the normal distribution with variance $\sigma_\epsilon^2$; see Note (2). The question we are interested in is: assuming that $m^*$ can be expressed in closed form, when is it possible to identify it as the true generating model among all possible closed-form mathematical models, for someone who does not know the true model beforehand? Note that our focus is on learning the structure of the model $m^*$ and not the values of the parameters $\theta^*$, a problem that has received much more attention from the theoretical point of view Zdeborová and Krzakala (2016). Additionally, we are interested in situations in which the dimension of the feature space is relatively small (compared to typical feature spaces in machine learning settings), which is the relevant regime for symbolic regression and model discovery.

To address the model-learning question above, we formulate the problem of identifying the true generating model probabilistically, and show that probabilistic model selection is quasi-optimal at generalization, that is, at making predictions about unobserved data. This is in contrast to standard machine learning approaches, which, in this case, are suboptimal in the region of low observation noise. We then investigate the transition occurring between: (i) a learnable phase at low observation noise, in which the true model can in principle be learned from the data; and (ii) an unlearnable phase, in which the observation noise is too large for the true model to be learned from the data by any method. Finally, we provide an upper bound for the noise at which the learnability transition takes place, that is, the noise beyond which the true generating model cannot be learned by any method. This bound corresponds to the noise at which most plausible a priori models, which we call trivial, become more parsimonious descriptions of the data than the true generating model. Despite the simplicity of the approach, the bound provides a good approximation to the actual transition point.

Results

Probabilistic formulation of the problem

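The complete probabilistic solution to the problem of identifying a model from observations is encapsulated in the posterior distribution over models $p(m|D)$. The posterior gives the probability that each model $m = m(\mathbf{x}, \theta)$, with parameters $\theta$, is the true generating model given the data $D$. Again, notice that we are interested in the posterior over model structures $m$ rather than model parameters $\theta$; thus, we obtain $p(m|D)$ by marginalizing over possible parameter values

$$p(m|D) = \frac{p(D|m)\,p(m)}{p(D)} = \frac{1}{p(D)}\int d\theta\, p(D|m,\theta)\, p(\theta|m)\, p(m), \qquad (1)$$

where $p(D|m,\theta)$ is the model likelihood, and $p(\theta|m)$ and $p(m)$ are the prior distributions over the parameters of a given model and over the models themselves, respectively. The posterior over models can always be rewritten as

$$p(m|D) = \frac{\exp\left[-\mathcal{L}(m)\right]}{Z}, \qquad (2)$$

with $Z = p(D) = \sum_{m} \exp\left[-\mathcal{L}(m)\right]$ and

$$\mathcal{L}(m) = -\log\left[\int d\theta\, p(D|m,\theta)\, p(\theta|m)\, p(m)\right]. \qquad (3)$$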

Although the integral over parameters in Eq. (3) cannot, in general, be calculated exactly, it can be approximated as Schwarz (1978); Guimerà et al. (2020)

$$\mathcal{L}(m) \approx \frac{B(m)}{2} - \log p(m), \qquad (4)$$

where $B(m)$ is the Bayesian information criterion (BIC) of the model Schwarz (1978). This approximation results from using Laplace’s method to integrate the distribution $p(D|m,\theta)\,p(\theta|m)$ over the parameters $\theta$. Thus, the calculation assumes that: (i) the likelihood $p(D|m,\theta)$ is peaked around $\hat\theta = \arg\max_\theta p(D|m,\theta)$, so that it can be approximated by a Gaussian around $\hat\theta$; and (ii) the prior $p(\theta|m)$ is smooth around $\hat\theta$, so that it can be assumed to be approximately constant within the Gaussian. Unlike in other contexts, in regression-like problems these assumptions are typically mild.
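The origin of the $\frac{k}{2}\log N$ penalty in Eq. (4) can be sketched with a textbook Laplace expansion. This is a standard argument added here for illustration, assuming $k$ parameters, a Hessian of the negative log-likelihood that grows linearly with $N$ (as for independent observations), and a prior density at $\hat\theta$ that does not depend on $N$:

```latex
\begin{aligned}
\int d\theta\, p(D|m,\theta)\,p(\theta|m)
&\approx p(D|m,\hat\theta)\,p(\hat\theta|m)\,(2\pi)^{k/2}\,\bigl|H(\hat\theta)\bigr|^{-1/2},
\qquad H = -\nabla_\theta^2 \log p(D|m,\theta)\big|_{\hat\theta},\\
\bigl|H(\hat\theta)\bigr| &\approx N^{k}\,\bigl|I(\hat\theta)\bigr|
\qquad (I:\ \text{Fisher information per observation}),\\
-\log\int d\theta\, p(D|m,\theta)\,p(\theta|m)
&= -\log p(D|m,\hat\theta) + \frac{k}{2}\log N + O(1)
= \frac{B(m)}{2} + O(1).
\end{aligned}
```

Adding $-\log p(m)$ to both sides gives Eq. (4); the neglected $O(1)$ terms are $-\log p(\hat\theta|m)$, $-\frac{k}{2}\log 2\pi$, and $\frac{1}{2}\log|I(\hat\theta)|$.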

Within an information-theoretic interpretation of model selection, $\mathcal{L}(m)$ is the description length, that is, the number of nats (or bits, if one uses base-2 logarithms instead of natural logarithms) necessary to jointly encode the data and the model with an optimal encoding Grünwald (2007). Therefore, the most plausible model, that is, the model with maximum $p(m|D)$, has the minimum description length; in other words, it compresses the data optimally.

The posterior in Eq. (2) can also be interpreted as the Boltzmann distribution of the canonical ensemble in statistical mechanics, with $\mathcal{L}(m)$ playing the role of the energy of a physical system, models playing the role of configurations of the system, and the most plausible model corresponding to the ground state. Here, we sample the posterior distribution over models $p(m|D)$ by generating a Markov chain with the Metropolis algorithm, as one would do for physical systems. To do this, we use the “Bayesian machine scientist” introduced in Ref. Guimerà et al. (2020) (Methods). We then select, among the sampled models, the most plausible one (that is, the maximum a posteriori model, the minimum description length model, or the ground state, in each interpretation).
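A minimal sketch, assuming the description lengths $\mathcal{L}(m)$ of a handful of sampled models are already known, of how Eq. (2) turns them into posterior probabilities and how the MDL (ground-state) model is picked. Here $Z$ is only a partial sum over the models supplied, not over all possible models, and the model labels and values are hypothetical:

```python
import math


def posterior_from_description_lengths(desc_lengths):
    """Return p(m|D) = exp(-L(m)) / Z restricted to a finite set of models.

    desc_lengths maps a model label to its description length L(m) in nats.
    Z is a partial sum over the models provided (e.g. those sampled), not over
    all possible models. Shifting by the minimum L avoids underflow.
    """
    l_min = min(desc_lengths.values())
    weights = {m: math.exp(-(l - l_min)) for m, l in desc_lengths.items()}
    z = sum(weights.values())
    return {m: w / z for m, w in weights.items()}


def mdl_model(desc_lengths):
    """MDL model = maximum a posteriori model = 'ground state' (smallest L)."""
    return min(desc_lengths, key=desc_lengths.get)


def nats_to_bits(length_in_nats):
    """Description lengths in bits use base-2 logarithms: divide by ln 2."""
    return length_in_nats / math.log(2.0)


# Hypothetical description lengths (nats) for three sampled models.
lengths = {"m_a": 120.0, "m_b": 121.0, "m_c": 125.0}
post = posterior_from_description_lengths(lengths)
print(mdl_model(lengths), {m: round(p, 4) for m, p in post.items()})
```

Because only differences in $\mathcal{L}$ enter, a one-nat gap corresponds to a posterior ratio of $e$ between two models.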

Probabilistic model selection yields quasi-optimal predictions for unobserved data

Probabilistic model selection as described above follows directly (and exactly, except for the explicit approximation in Eq. (4)) from the postulates of Cox’s theorem Cox (1946); Jaynes (2003) and is therefore (Bayes) optimal when models are truly drawn from the prior $p(m)$. In particular, the minimum description length (MDL) model is the most compressive and the most plausible one, and any approach selecting, from $D$, a model that is not the MDL model violates those basic postulates.

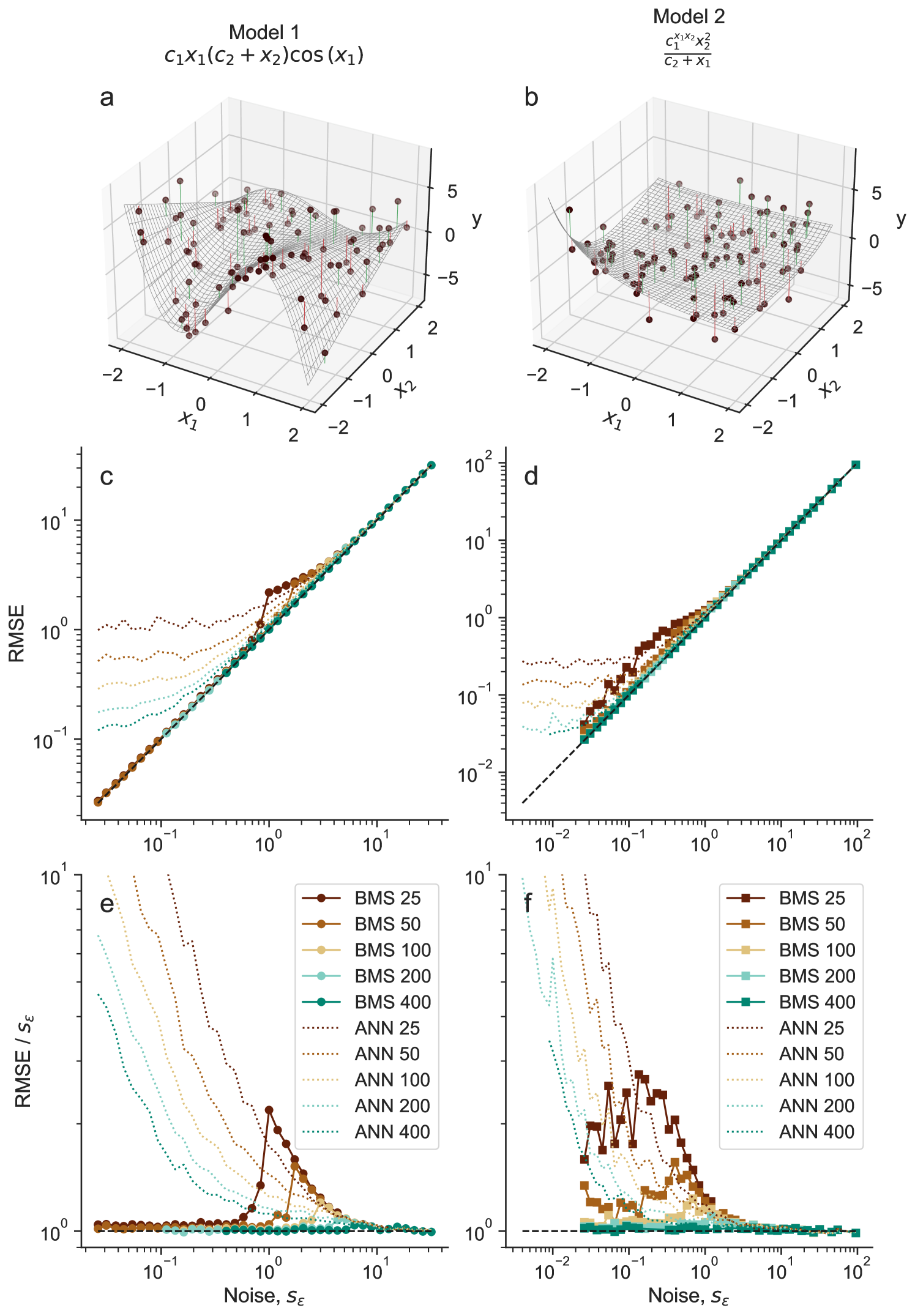

We start by investigating whether this optimality in model selection also leads to the ability of the MDL model to generalize well, that is, to make accurate predictions about unobserved data. We show that the MDL model yields quasi-optimal generalization despite the fact that the best possible generalization is achieved by averaging over models Guimerà et al. (2020), and despite the BIC approximation in the calculation of the description length (Fig. 1). Specifically, we sample a model $m^*$ from the prior $p(m)$ described in Ref. Guimerà et al. (2020) and in the Methods; for a model not drawn from the prior, see Supplementary Text and Fig. S1. From this model, we generate synthetic datasets $D = \{(y_i, \mathbf{x}_i)\}$, where $y_i = m^*(\mathbf{x}_i, \theta^*) + \epsilon_i$ and $\epsilon_i \sim \mathcal{N}(0, \sigma_\epsilon^2)$, with different numbers of points $N$ and different levels of noise $\sigma_\epsilon$. Then, for each dataset $D$, we sample models from $p(m|D)$ using the Bayesian machine scientist Guimerà et al. (2020), select the MDL model among those sampled, and use it to make predictions on a test dataset $D'$.

Because $D'$ is, like $D$, subject to observation noise, the irreducible error is $\sigma_\epsilon$, that is, the root mean squared error (RMSE) of predictions cannot be, on average, smaller than $\sigma_\epsilon$. As we show in Fig. 1, the predictions of the MDL model achieve this optimal prediction limit except for small $N$ and some intermediate values of the observation noise. This is in contrast to standard machine learning algorithms, such as random forests and artificial neural networks. These algorithms achieve the optimal prediction error at large values of the noise, but below certain values of $\sigma_\epsilon$ the prediction error stops decreasing and predictions become distinctly suboptimal (Fig. 1; random forests perform significantly worse than artificial neural networks, so their data are not shown).
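A quick numerical illustration of the irreducible-error floor, assuming a hypothetical generating model $m^*(x) = 1.5\sin x + 0.5x$ on $x \in [-2, 2]$ (not one of the models in Fig. 1): even the exact generating model has an RMSE close to $\sigma_\epsilon$ on a noisy test set, whereas a constant predictor also pays its own reducible error.

```python
import numpy as np


def true_model(x, theta=(1.5, 0.5)):
    # Hypothetical closed-form generating model m*(x, theta*) = a*sin(x) + b*x,
    # chosen only for illustration.
    a, b = theta
    return a * np.sin(x) + b * x


def rmse(y_true, y_pred):
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


rng = np.random.default_rng(0)
sigma_eps = 0.3
x_test = rng.uniform(-2.0, 2.0, size=20_000)
# Test observations y' = m*(x') + eps', with eps' ~ N(0, sigma_eps^2).
y_test = true_model(x_test) + rng.normal(0.0, sigma_eps, size=x_test.size)

# Even the exact generating model cannot beat the noise floor sigma_eps.
rmse_true = rmse(y_test, true_model(x_test))
# A constant predictor (the test mean) pays the noise plus its reducible error.
rmse_const = rmse(y_test, np.full_like(y_test, y_test.mean()))
print(f"RMSE of true model:     {rmse_true:.3f} (sigma_eps = {sigma_eps})")
print(f"RMSE of constant model: {rmse_const:.3f}")
```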

In the limit of high noise, all models make predictions whose reducible errors are small compared to $\sigma_\epsilon$. Thus, the prediction error is similar to $\sigma_\epsilon$ regardless of the chosen model, which also means that it is impossible to correctly identify the model that generated the data. Conversely, in the $\sigma_\epsilon \to 0$ limit, the limiting factor for standard machine learning methods is their ability to interpolate between points in $D$, and thus the prediction error becomes independent of the observation error. By contrast, because Eqs. (2)-(4) provide consistent model selection, in this limit the MDL model should coincide with the true generating model $m^*$ and interpolate perfectly. This is exactly what we observe: the only error in the predictions of the MDL model is, again, the irreducible error $\sigma_\epsilon$. Therefore, our observations show that probabilistic model selection leads to quasi-optimal generalization in the limits of high and low observation noise.

Phenomenology of the learnability transition

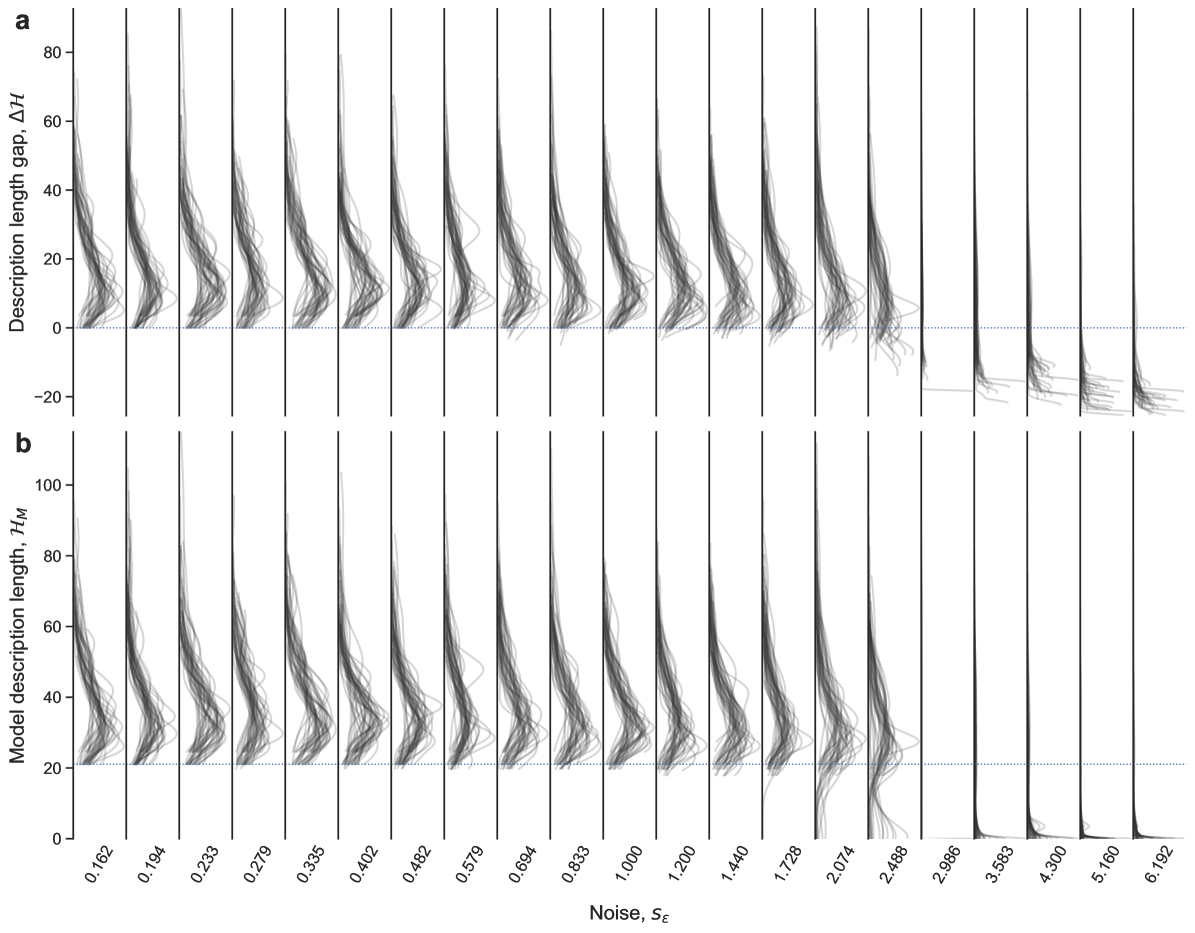

Next, we establish the existence of the learnable and unlearnable regimes, and clarify how the transition between them happens. Again, we generate synthetic data $D$ using a known model $m^*$ as in the previous section, and sample models from the posterior $p(m|D)$. To investigate whether a model is learnable, we consider the ensemble of sampled models (Fig. 2); if no model in the ensemble is more plausible than the true generating model (that is, if the true model is also the MDL model), then we conclude that the model is learnable. Otherwise, the model is unlearnable because, lacking any additional information about the generating model, it is impossible to identify it, as other models provide more parsimonious descriptions of the data $D$.

To this end, we first consider the gap $\Delta\mathcal{L}(m) = \mathcal{L}(m) - \mathcal{L}(m^*)$ between the description length of each sampled model $m$ and that of the true generating model $m^*$ (Fig. 2a). For low observation error $\sigma_\epsilon$, all sampled models have non-negative gaps, that is, description lengths that are longer than or equal to that of the true model. This indicates that the most plausible model is the true model; therefore, the true model is learnable. By contrast, when the observation error grows (Fig. 2a), some of the models sampled for some training datasets $D$ start to have negative gaps, which means that, for those datasets, these models become more plausible than the true model. When this happens, the true model becomes unlearnable. For even larger values of $\sigma_\epsilon$, the true model is unlearnable for virtually all training sets $D$.

To better characterize the sampled models, we next compute the description length of the model itself, $\mathcal{L}_M(m) = -\log p(m)$ (up to an additive constant), that is, the term in the description length that measures the complexity of the model regardless of the data (Fig. 2b). For convenience, we set an arbitrary origin for $\mathcal{L}_M$ so that $\mathcal{L}_M(m_0) = 0$ for the models $m_0$ that are most plausible a priori, which we refer to as trivial models; all other models have $\mathcal{L}_M(m) > 0$. We observe that, for low observation error, most of the sampled models are more complex than the true model. Above a certain level of noise, however, most sampled models are trivial or very simple models with $\mathcal{L}_M(m)$ close to zero. These trivial models appear suddenly: just below that level of noise, almost no trivial models are sampled at all.

Altogether, our analysis shows that for low enough observation error the true model is always recovered. Conversely, in the opposite limit only trivial models (those that are most plausible in the prior distribution) are considered reasonable descriptions of the data. Importantly, our analysis shows that trivial models appear suddenly as one increases the level of noise, which suggests that the transition to the unlearnable regime may be akin to a phase transition driven by changes in the model plausibility (or description length) landscape, similarly to what happens in other problems in inference, constraint satisfaction, and optimization Mézard and Montanari (2009); Zdeborová and Krzakala (2016).

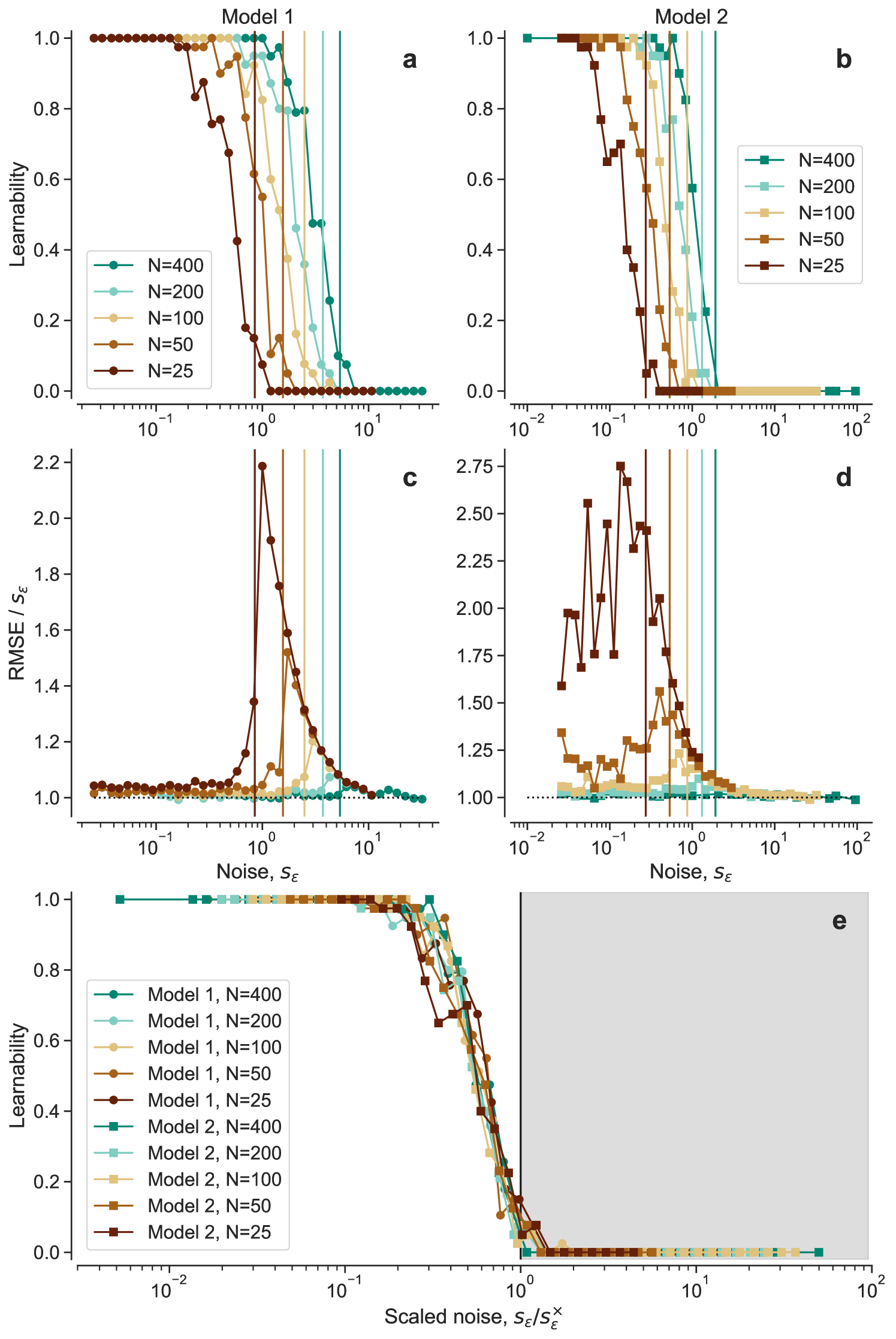

To further probe whether that is the case, we analyze the transition in more detail. Our previous analysis shows that learnability is a property of the training dataset $D$, so that, for the same level of observation noise, some instances of $D$ enable learning of the true model whereas others do not. Thus, by analogy with satisfiability transitions Mézard and Montanari (2009), we define the learnability as the fraction of training datasets $D$ at a given noise $\sigma_\epsilon$ for which the true generating model is learnable. In Fig. 3, we show the behavior of the learnability for different dataset sizes $N$, for the two models in Fig. 1 (see Supplementary Fig. S1 for another model). Consistent with the qualitative description above, we observe that the learnability transition occurs abruptly at a certain value of the observation noise; the transition shifts towards higher values of $\sigma_\epsilon$ with increasing size $N$ of $D$.
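A minimal sketch of how the learnability could be estimated from sampled description-length gaps, assuming the gaps $\mathcal{L}(m) - \mathcal{L}(m^*)$ of the sampled models have already been computed for each training dataset (the input lists below are made up). Following the criterion stated above, a dataset counts as learnable when no sampled model has a negative gap; the true model itself contributes a gap of 0.

```python
from typing import Sequence


def is_learnable(gaps: Sequence[float]) -> bool:
    """True if no sampled model is more plausible than the true model.

    gaps: L(m) - L(m*) for the models m sampled from p(m|D) for one dataset D.
    """
    return all(g >= 0.0 for g in gaps)


def learnability(gaps_per_dataset: Sequence[Sequence[float]]) -> float:
    """Fraction of training datasets (fixed noise and N) where m* is learnable."""
    if not gaps_per_dataset:
        raise ValueError("need at least one dataset")
    n_learnable = sum(is_learnable(gaps) for gaps in gaps_per_dataset)
    return n_learnable / len(gaps_per_dataset)


# Three hypothetical datasets at the same noise level; the second one is not learnable.
example = [[0.0, 3.2, 1.1], [0.0, -0.5, 2.0], [0.0, 0.1]]
print(learnability(example))
```

Repeating this at each $\sigma_\epsilon$ and $N$ would trace learnability curves of the kind shown in Fig. 3.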

Remarkably, the difficult region identified in the previous section, in which prediction error deviates from the theoretical irreducible error $\sigma_\epsilon$, coincides with the transition region. Therefore, our results paint a picture with three qualitatively distinct regimes: a learnable regime in which the true model can always be learned and predictions about unobserved data are optimal; an unlearnable regime in which the true model can never be learned but predictions are still optimal because error is driven by observation noise and not by the model itself; and a transition regime in which the true model is learnable only sometimes, and in which predictions are, on average, suboptimal. This, again, is reminiscent of the hard phase in satisfiability transitions and of other statistical learning problems Mézard and Montanari (2009); Ricci-Tersenghi et al. (2019); Maillard et al. (2020).

Learnability conditions

Finally, we obtain an upper bound to the learnability transition point by assuming that all the phenomenology of the transition can be explained by the competition between just two minima of the description length landscape $\mathcal{L}(m)$: the minimum corresponding to the true model $m^*$, and the minimum corresponding to the trivial model $m_0$ that is most plausible a priori

$$m_0 = \arg\max_{m} p(m). \qquad (5)$$

As noted earlier, $m_0$ is such that $\mathcal{L}_M(m_0) = 0$ by our choice of origin (see Note (3)), and for our choice of priors it corresponds to trivial models without any operation, that is, models consisting of a single constant (such as $m_0 = a$) or of a single variable. Below, we focus on the constant model $m_0 = a$, but our arguments (not the precise calculations) remain valid for any other choice of prior and the corresponding $m_0$.

As we have also noted above, the true model is learnable when the description length gap $\Delta\mathcal{L}(m) = \mathcal{L}(m) - \mathcal{L}(m^*)$ is strictly positive for all models $m \neq m^*$. Conversely, the true model becomes unlearnable when $\Delta\mathcal{L}(m) \le 0$ for some model $m \neq m^*$. As per our assumption that the only relevant minima are those corresponding to $m^*$ and $m_0$, we therefore postulate that the transition occurs when the description length gap of the trivial model becomes, on average (over datasets), $\Delta\mathcal{L}(m_0) = 0$. In fact, when $\Delta\mathcal{L}(m_0) \le 0$ the true model is certainly unlearnable; but other models could fulfill the condition $\Delta\mathcal{L}(m) \le 0$ at lower observation noise, thus making the true model unlearnable earlier. Therefore, the condition $\Delta\mathcal{L}(m_0) = 0$ yields an upper bound to the value of the noise at which the learnability transition happens. We come back to this issue later, but for now we ignore all potential intermediate models.

Within the BIC approximation, the description length of each of these two models is (Methods)

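$$\mathcal{L}(m^*) \approx \frac{N}{2}\log\left(2\pi\sigma_\epsilon^2\right) + \frac{N}{2} + \frac{k^*}{2}\log N - \log p(m^*), \qquad (6)$$

$$\mathcal{L}(m_0) \approx \frac{N}{2}\log\left[2\pi\left(\sigma_\epsilon^2 + \sigma_y^2\right)\right] + \frac{N}{2} + \frac{1}{2}\log N - \log p(m_0), \qquad (7)$$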

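where $k^*$ is the number of parameters of the true model, $\sigma_y^2 = \langle m^*(\mathbf{x}_i,\theta^*)^2\rangle - \langle m^*(\mathbf{x}_i,\theta^*)\rangle^2$ is the reducible error of the trivial model (here a single fitted constant, so that $m_0$ has one parameter), and the averages are over the observations in $D$. Then, writing $\mathcal{L}_M(m^*) = \log p(m_0) - \log p(m^*)$ for the model description length of the true model measured from the trivial one, over many realizations of $D$ the transition occurs on average at

$$\sigma_\epsilon^{\times} = \frac{\sigma_y}{\sqrt{N^{(k^*-1)/N}\exp\left[2\mathcal{L}_M(m^*)/N\right] - 1}}, \qquad (8)$$

where $\sigma_y^2$ is now the variance of $m^*(\mathbf{x},\theta^*)$ over the observation interval rather than in any particular dataset.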
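The algebra leading to Eq. (8) can be sketched as follows. This assumes that both description lengths take the BIC form of Eq. (13), with residual variances $\sigma_\epsilon^2$ for $m^*$ and $\sigma_\epsilon^2 + \sigma_y^2$ for $m_0$, with $k^*$ and one fitted parameter, respectively, and with $\mathcal{L}_M(m^*) = \log p(m_0) - \log p(m^*)$:

```latex
\begin{aligned}
\Delta\mathcal{L}(m_0) &= \mathcal{L}(m_0) - \mathcal{L}(m^*)
= \frac{N}{2}\log\left(1 + \frac{\sigma_y^2}{\sigma_\epsilon^2}\right)
- \frac{k^*-1}{2}\log N - \mathcal{L}_M(m^*),\\
\Delta\mathcal{L}(m_0) = 0
&\;\Longleftrightarrow\;
1 + \frac{\sigma_y^2}{\sigma_\epsilon^2} = N^{(k^*-1)/N}\, e^{2\mathcal{L}_M(m^*)/N}
\;\Longleftrightarrow\;
\sigma_\epsilon^2 = \frac{\sigma_y^2}{N^{(k^*-1)/N}\, e^{2\mathcal{L}_M(m^*)/N} - 1}.
\end{aligned}
```

For $\sigma_\epsilon$ below this value the logarithmic term dominates and $\Delta\mathcal{L}(m_0) > 0$, so the trivial model cannot displace the true one.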

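For large $N$ and model description lengths of the true model such that $\mathcal{L}_M(m^*) \ll N$, the transition noise is well approximated by (Methods)

$$\sigma_\epsilon^{\times} \approx \sigma_y\sqrt{\frac{N}{(k^*-1)\log N + 2\mathcal{L}_M(m^*)}}. \qquad (9)$$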

Therefore, since $\sigma_\epsilon^{\times}$ diverges for $N \to \infty$, the true model is learnable for any finite observation noise, provided that $N$ is large enough (which is just another way of seeing that probabilistic model selection with the BIC approximation is consistent Schwarz (1978)).
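To see the divergence numerically, here is a small sketch that evaluates the transition noise and its large-$N$ approximation. It assumes $\sigma_\epsilon^{\times 2} = \sigma_y^2/\{N^{(k^*-1)/N} e^{2\mathcal{L}_M(m^*)/N} - 1\}$ for a one-parameter trivial model; the values $k^* = 3$, $\mathcal{L}_M(m^*) = 10$ nats, and $\sigma_y = 1$ are purely illustrative.

```python
import math


def transition_noise(n, k_star, lm_star, sigma_y=1.0):
    """Eq. (8): noise at which L(m0) - L(m*) vanishes on average.

    n: number of points N; k_star: number of parameters of m*;
    lm_star: L_M(m*) in nats, measured from the trivial model (L_M(m0) = 0);
    sigma_y: standard deviation of m*(x) over the observation interval.
    Assumes the trivial model m0 is a one-parameter constant model.
    """
    denom = n ** ((k_star - 1) / n) * math.exp(2.0 * lm_star / n) - 1.0
    if denom <= 0.0:
        raise ValueError("m* must be penalized relative to m0 for a finite transition")
    return sigma_y / math.sqrt(denom)


def transition_noise_large_n(n, k_star, lm_star, sigma_y=1.0):
    """Eq. (9): large-N approximation, valid when (k*-1) log N and L_M(m*) are << N."""
    return sigma_y * math.sqrt(n / ((k_star - 1) * math.log(n) + 2.0 * lm_star))


# Hypothetical true model: k* = 3 parameters, L_M(m*) = 10 nats, sigma_y = 1.
for n in (10, 100, 1000, 10000):
    exact = transition_noise(n, k_star=3, lm_star=10.0)
    approx = transition_noise_large_n(n, k_star=3, lm_star=10.0)
    print(f"N={n:>6}: exact={exact:.3f}  large-N={approx:.3f}")
```

Both values grow roughly like $\sqrt{N/\log N}$. The approximation always slightly overestimates the exact value because $e^x - 1 \ge x$, and the two converge as $N$ grows.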

In Fig. 3, we show that the upper bound $\sigma_\epsilon^{\times}$ in Eq. (8) is close to the exact transition point from the learnable to the unlearnable phase, as well as to the peak of the prediction error relative to the irreducible error. Moreover, once represented as a function of the scaled noise $\sigma_\epsilon/\sigma_\epsilon^{\times}$, the learnability curves of both models collapse into a single curve (Fig. 3e), suggesting that the behavior at the transition may be universal. Additionally, the transition from learnability 1 to learnability 0 becomes more abrupt with increasing $N$, as the fluctuations across training datasets become smaller. If this upper bound became tighter with increasing $N$, this would be indicative of a true, discontinuous phase transition between the learnable and the unlearnable phases.

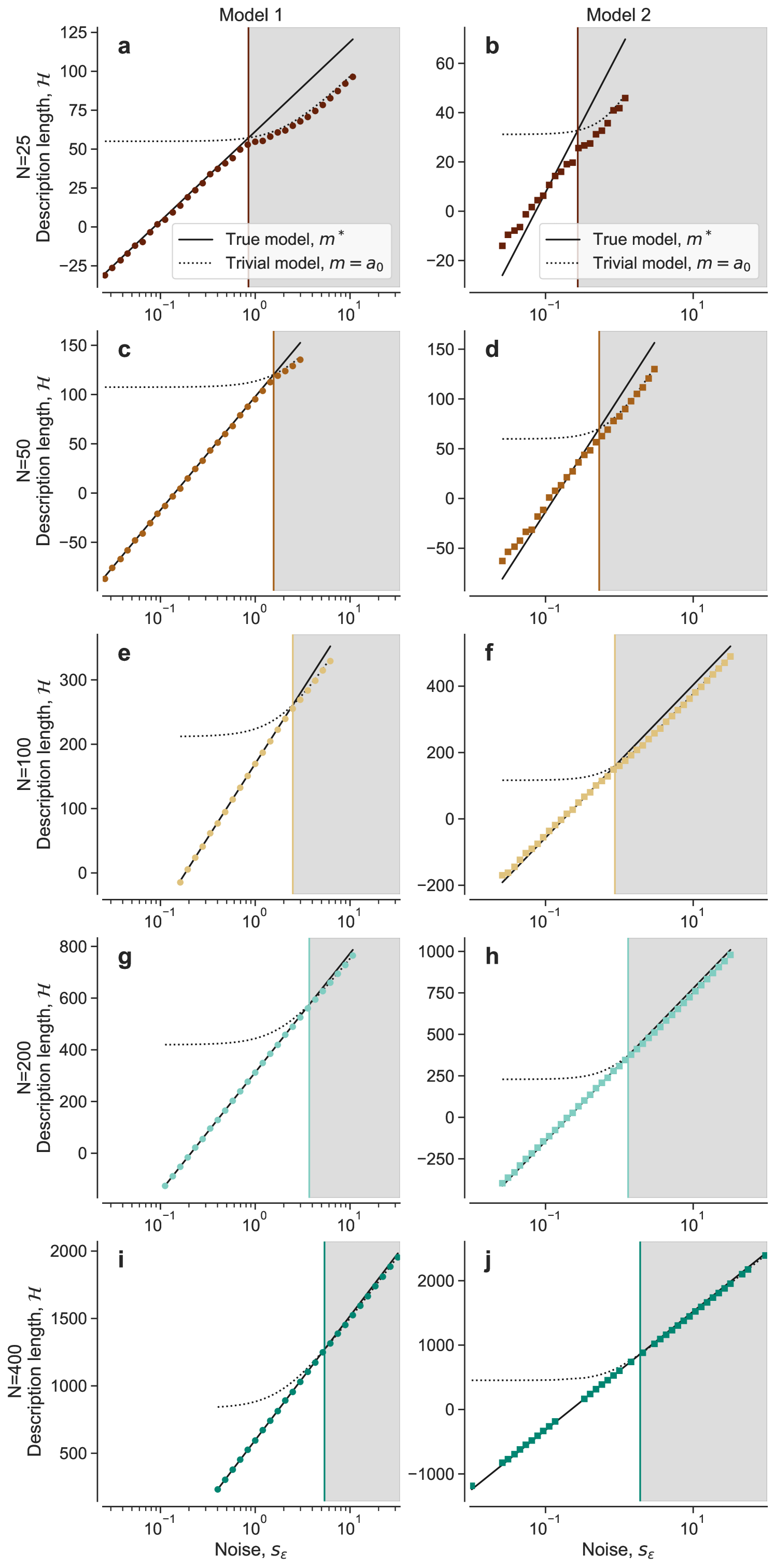

To further understand the transition and test the validity of the assumption leading to the upper bound $\sigma_\epsilon^{\times}$, we plot the average description length (over datasets $D$) of the MDL model at each level of observation noise $\sigma_\epsilon$ (Fig. 4). We observe that the description length of the MDL model coincides with the description length $\mathcal{L}(m^*)$ of the true model below the transition observation noise, and with the description length $\mathcal{L}(m_0)$ of the trivial model above it. Around $\sigma_\epsilon^{\times}$, and especially for smaller $N$, the observed minimum description length is lower than both $\mathcal{L}(m^*)$ and $\mathcal{L}(m_0)$, suggesting that, in the transition region, a multiplicity of models (or Rashomon set Rudin (2019)) other than $m^*$ and $m_0$ become relevant.

Discussion

The bidirectional cross-fertilization between statistical learning theory and statistical physics goes back to the 1980s, when the paradigm in artificial intelligence shifted from rule-based approaches to statistical learning approaches Carleo et al. (2019). Nonetheless, the application of statistical physics ideas and tools to learning problems has so far focused on learning parameter values Engel and den Broeck (2004); Maillard et al. (2020); Aubin et al. (2020); Mignacco et al. (2022), or on specific problems such as learning probabilistic graphical models and, more recently, network models Guimerà and Sales-Pardo (2009); Decelle et al. (2011); Krzakala et al. (2016); Vallès-Català et al. (2018); Peixoto (2020). Thus, despite the existence of rigorous probabilistic model selection approaches Ando (2010), the issue of learning the structure of models, and especially closed-form mathematical models, has received much less attention from the statistical physics point of view Zdeborová and Krzakala (2016).

Our approach shows that, once the model-selection problem is formalized in such a way that models can be represented and enumerated, and their posteriors can be estimated and sampled in the same way we do for discrete configurations in a physical system Guimerà et al. (2020), there is no fundamental difference between learning models and learning parameters (except, perhaps, for the discreteness of model structures). Therefore, the same richness that has been described at length in parameter learning can be expected in model learning, including the learnability transition that we have described here, but perhaps also other transitions related, for example, to the precise characterization of the description length landscape in the hard phase in which generalization is difficult. We may also expect model-learning and parameter-learning transitions to interact. For example, there are limits to our ability to learn model parameters, even for noiseless data Mondelli and Montanari (2019); Barbier et al. (2019); Maillard et al. (2020); in this unlearnable phase of the parameter-learning problem, it seems that the true model should also be unlearnable, even in this noiseless limit, something we have not considered here. Our findings are thus a first step in the characterization of the rich phenomenology arising from the interplay between data size, noise, and parameter and model identification from data.

Our results on closed-form mathematical models may also open the door to addressing some problems of learning that have so far remained difficult to tackle. In deep learning, for example, the question of how many training examples are necessary to learn a certain task with precision is still open Carleo et al. (2019), whereas, as we have shown, in our context of (closed-form) model selection the answer to this question arises naturally.

Finally, we have shown that, when data are generated with a (relatively simple) closed-form expression, probabilistic model selection generalizes quasi-optimally, especially in the ideal regime of low observation noise. This is in contrast, as we have seen, to what happens in this case with some machine learning approaches such as random forests or artificial neural networks. Conversely, it would be interesting to understand how expressive closed-form mathematical models are, that is, to what extent they are able to describe and generalize when data are not generated using a closed-form mathematical model (for example, for the solution of a differential equation that cannot be expressed in closed form). We hope that our work encourages more research on machine learning approaches geared towards learning such interpretable models Rudin (2019), and on the statistical physics of such approaches.

Methods

Prior over models

The probabilistic formulation of the model selection problem outlined in Eqs. (1)–(4) requires the choice of a prior distribution $p(m)$ over models, although the general, qualitative results described in the body of the text do not depend on a particular choice of prior. Indeed, no matter how priors are chosen, some model (or, at most, a finite subset of models, since uniform priors cannot be used Guimerà et al. (2020)) different from the true generating model will be most plausible a priori, so the key arguments in the main text hold.

However, the specific, quantitative results must be obtained for one specification of $p(m)$. Here, as in the previous literature Guimerà et al. (2020); Reichardt et al. (2020), we choose $p(m)$ to be the maximum entropy distribution that is compatible with an empirical corpus of mathematical equations Guimerà et al. (2020). In particular, we impose that the mean number of occurrences of each operation per equation is the same as in the empirical corpus, and that the fluctuations of these numbers are also as in the corpus. Therefore, the prior is

$$p(m) = \frac{1}{Z_p}\exp\left[\sum_{o \in \mathcal{O}}\left(-\alpha_o\, n_o(m) - \beta_o\, n_o^2(m)\right)\right], \qquad (10)$$

where $n_o(m)$ is the number of occurrences of operation $o$ in model $m$, $\mathcal{O}$ is the set of allowed operations, $Z_p$ is a normalization constant, and the hyperparameters $\alpha_o$ and $\beta_o$ are chosen so as to reproduce the statistical features of the empirical corpus Guimerà et al. (2020). For this particular choice of prior, the models $m_0$ are those that contain no operations at all, for example a single constant $m_0 = a$; hence the use of the term trivial to denote these models in the text.
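A minimal sketch of the model description length implied by a prior of the form of Eq. (10), measured from the trivial model so that $\mathcal{L}_M(m_0) = 0$. The hyperparameter values and operation names below are hypothetical, not the values fitted to the empirical corpus; they are assumed non-negative, so that every model with operations has $\mathcal{L}_M(m) > 0$.

```python
def model_description_length(op_counts, alpha, beta):
    """L_M(m) = log p(m0) - log p(m) under the maximum-entropy prior of Eq. (10).

    op_counts: dict operation -> n_o(m), its number of occurrences in model m
               (operations absent from the dict have n_o = 0).
    alpha, beta: per-operation hyperparameters. The normalization Z_p cancels,
    and the trivial model (n_o = 0 for every o) gets L_M = 0.
    """
    return sum(alpha[o] * n + beta[o] * n * n for o, n in op_counts.items())


# Hypothetical hyperparameters, NOT the values fitted to the empirical corpus.
alpha = {"+": 1.0, "*": 1.2, "sin": 3.0}
beta = {"+": 0.1, "*": 0.1, "sin": 0.5}

# a*sin(x) + b*x uses "+" once, "*" twice, and "sin" once.
print(model_description_length({"+": 1, "*": 2, "sin": 1}, alpha, beta))
print(model_description_length({}, alpha, beta))  # trivial model
```

The quadratic terms $\beta_o n_o^2$ penalize repeated use of the same operation faster than linearly, which is how the prior reproduces the corpus fluctuations.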

Sampling models with the Bayesian machine scientist

For a given dataset $D$, each model $m$ has a description length $\mathcal{L}(m)$ given by Eq. (4). The Bayesian machine scientist Guimerà et al. (2020) generates a Markov chain of models using the Metropolis algorithm as described next.

Each model is represented as an expression tree, and each new model in the Markov chain is proposed from the previous one by changing the corresponding expression tree: changing an operation or a variable in the expression tree; adding a new term to the tree; or replacing one block of the tree (see Guimerà et al. (2020) for details). Once a new model $m'$ is proposed from $m$, the new model is accepted using the Metropolis rule.
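A generic Metropolis sampler over discrete states, assuming symmetric proposals so that a proposed model $m'$ is accepted with probability $\min\{1, \exp[-(\mathcal{L}(m') - \mathcal{L}(m))]\}$. This is a toy sketch, not the Bayesian machine scientist's code, which proposes the expression-tree moves described above. The four "models" and their description lengths are made up so that the sampled frequencies can be checked against $\exp[-\mathcal{L}(m)]/Z$.

```python
import math
import random
from collections import Counter


def metropolis(energy, propose, start, n_steps, rng):
    """Sample states with probability proportional to exp(-energy(state)).

    energy: returns the description length L(m) of a state, in nats.
    propose: returns a candidate from the current state; assumed symmetric,
    q(m'|m) = q(m|m'), so no proposal correction is applied.
    """
    current, e_current = start, energy(start)
    samples = []
    for _ in range(n_steps):
        candidate = propose(current, rng)
        e_candidate = energy(candidate)
        # Metropolis rule: accept downhill moves; accept uphill ones with prob. exp(-dL).
        if e_candidate <= e_current or rng.random() < math.exp(e_current - e_candidate):
            current, e_current = candidate, e_candidate
        samples.append(current)
    return samples


# Toy check: four hypothetical "models" with made-up description lengths.
desc_len = {"m0": 0.0, "m1": 1.0, "m2": 2.0, "m3": 0.5}
states = list(desc_len)


def propose_uniform(state, rng):
    # Pick one of the other states uniformly at random (a symmetric proposal).
    return rng.choice([s for s in states if s != state])


samples = metropolis(desc_len.get, propose_uniform, "m2", 200_000, random.Random(1))
counts = Counter(samples)
z = sum(math.exp(-l) for l in desc_len.values())
for s in states:
    print(s, round(counts[s] / len(samples), 3), round(math.exp(-desc_len[s]) / z, 3))
```

With asymmetric moves, such as adding versus removing a term, a Metropolis–Hastings correction for the proposal probabilities would be needed; this sketch leaves it out.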

Note that the only input to the Bayesian machine scientist is the observed data $D$. In particular, the observational noise $\sigma_\epsilon$ is unknown and must be estimated, via maximum likelihood, to calculate the Bayesian information criterion $B(m)$ of each model $m$.

Artificial neural network benchmarks

For the analysis of predictions on unobserved data, we use as benchmarks the following artificial neural network architectures and training procedures. The networks consist of: (i) an input layer with two inputs corresponding to the two input variables; (ii) four hidden fully connected feed-forward layers, with 10 units each and ReLU activation functions; and (iii) a linear output layer with a single output $y$.

Each network was trained with a dataset $D$ containing $N$ points, just as in the probabilistic model selection experiments. Training errors and validation errors (computed on an independent set) were calculated, and the training process was stopped when the validation error increased, on average, for 100 epochs; this typically entailed training for 1,000-2,000 epochs. This procedure was repeated three times, and the model with the overall lowest validation error was kept for making predictions on a final test set $D'$. The predictions on $D'$ are those reported in Fig. 1.
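A minimal NumPy sketch of the forward pass of the benchmark architecture: 2 inputs, four hidden layers of 10 ReLU units, and one linear output. The He-style initialization and the function names are assumptions made for illustration. Training (optimizer, the early-stopping rule, the three restarts) is not shown.

```python
import numpy as np


def init_mlp(rng, sizes=(2, 10, 10, 10, 10, 1)):
    """Random weights for the benchmark architecture (He-style init is an assumption)."""
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        w = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        params.append((w, np.zeros(n_out)))
    return params


def forward(params, x):
    """x has shape (batch, 2); returns predictions of shape (batch,)."""
    h = x
    for w, b in params[:-1]:
        h = np.maximum(h @ w + b, 0.0)  # fully connected layer + ReLU
    w, b = params[-1]
    return (h @ w + b)[:, 0]  # linear output layer


params = init_mlp(np.random.default_rng(0))
n_params = sum(w.size + b.size for w, b in params)
y_hat = forward(params, np.zeros((5, 2)))
print(n_params, y_hat.shape)
```

The network has $2\cdot10+10 + 3\,(10\cdot10+10) + 10+1 = 371$ trainable parameters, which is useful context when comparing against closed-form models with only a few parameters.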

Model description lengths

The description length of a model is given by Eq. (4), and the BIC is

$$B(m) = -2\log p(D|m,\hat\theta) + k\log N, \qquad (11)$$

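where $p(D|m,\hat\theta)$ is the likelihood of the model calculated at the maximum likelihood estimator of the parameters, $\hat\theta = \arg\max_\theta p(D|m,\theta)$, and $k$ is the number of parameters in $m$. In a model-selection setting, one would typically assume that the deviations of the observed data from the model predictions are normally distributed independent variables, so that

$$\begin{aligned} p(D|m,\theta) &= \prod_{i=1}^{N} \frac{1}{\sqrt{2\pi s_\epsilon^2}} \exp\left[-\frac{\left(y_i - m(\mathbf{x}_i,\theta)\right)^2}{2 s_\epsilon^2}\right] \\ &= \left(2\pi s_\epsilon^2\right)^{-N/2} \exp\left[-\frac{1}{2 s_\epsilon^2}\sum_{i=1}^{N}\left(y_i - m(\mathbf{x}_i,\theta)\right)^2\right], \qquad (12) \end{aligned}$$

where $m(\mathbf{x}_i,\theta)$ is the prediction of model $m$ for the $i$-th observation, and $s_\epsilon$ is the observed error, which in general differs from $\sigma_\epsilon$ when $m \neq m^*$. We can obtain the maximum likelihood estimator for $s_\epsilon$, which gives $\hat{s}_\epsilon^2 = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - m(\mathbf{x}_i,\hat\theta)\right)^2$, and replacing into Eqs. (4), (11), and (12) gives

$$\mathcal{L}(m) \approx \frac{N}{2}\log\left(2\pi\hat{s}_\epsilon^2\right) + \frac{N}{2} + \frac{k}{2}\log N - \log p(m). \qquad (13)$$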
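A sketch of Eq. (13) applied to a hypothetical setting: data generated from $m^*(x) = 1.5\sin x + 0.5x$ (two parameters) and compared against the one-parameter constant model $m_0 = a$. The prior difference $\log p(m^*) - \log p(m_0) = -8$ nats is an arbitrary illustrative choice, not a value from the corpus-based prior, and $\log p(m_0) = 0$ is used only as an origin.

```python
import numpy as np


def description_length(residuals, k, log_prior):
    """Eq. (13): L(m) ~ N/2 log(2 pi s^2) + N/2 + (k/2) log N - log p(m), in nats.

    residuals: y_i - m(x_i, theta_hat) at the fitted parameters;
    k: number of fitted parameters of m;
    log_prior: log p(m), with any fixed origin shared by the models compared.
    The noise variance is replaced by its ML estimate s^2 = mean(residuals**2).
    """
    r = np.asarray(residuals, dtype=float)
    n = r.size
    s2 = np.mean(r ** 2)
    return 0.5 * n * np.log(2.0 * np.pi * s2) + 0.5 * n + 0.5 * k * np.log(n) - log_prior


def gap_trivial_minus_true(sigma_eps, n=50, seed=0, log_prior_true=-8.0):
    """L(m0) - L(m*) for one synthetic dataset (illustrative models and prior)."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-2.0, 2.0, size=n)
    y = 1.5 * np.sin(x) + 0.5 * x + rng.normal(0.0, sigma_eps, size=n)
    # True structure theta1*sin(x) + theta2*x is linear in its 2 parameters,
    # so ordinary least squares gives the ML fit.
    design = np.column_stack([np.sin(x), x])
    theta, *_ = np.linalg.lstsq(design, y, rcond=None)
    l_true = description_length(y - design @ theta, k=2, log_prior=log_prior_true)
    # Trivial constant model m0 = a: one parameter (ML fit: the sample mean),
    # placed at the origin of the prior, log p(m0) = 0.
    l_triv = description_length(y - y.mean(), k=1, log_prior=0.0)
    return l_triv - l_true


for sigma in (0.1, 1.0, 20.0):
    print(sigma, round(float(gap_trivial_minus_true(sigma)), 2))
```

At low noise the gap is large and positive, so the true model is the MDL model. At high noise it turns negative. This is the two-model competition behind the upper bound of Eq. (8).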

Approximation of $\sigma_\epsilon^{\times}$ for large $N$
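A short sketch of the large-$N$ expansion. It is written here for illustration and assumes the transition noise takes the form $\sigma_\epsilon^{\times 2} = \sigma_y^2/\{N^{(k^*-1)/N}\exp[2\mathcal{L}_M(m^*)/N] - 1\}$ obtained for a one-parameter trivial model. Because both $(k^*-1)\log N$ and $\mathcal{L}_M(m^*)$ grow more slowly than $N$, the exponent is small and $e^x - 1 \approx x$:

```latex
\begin{aligned}
N^{(k^*-1)/N}\,e^{2\mathcal{L}_M(m^*)/N} - 1
&= e^{x} - 1 = x + O(x^2),
\qquad x \equiv \frac{(k^*-1)\log N + 2\mathcal{L}_M(m^*)}{N} \ll 1,\\
\sigma_\epsilon^{\times} &\approx \frac{\sigma_y}{\sqrt{x}}
= \sigma_y\sqrt{\frac{N}{(k^*-1)\log N + 2\mathcal{L}_M(m^*)}}.
\end{aligned}
```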

Data availability

We did not use any data beyond the synthetically generated data described in the manuscript.

Code availability

The code for the Bayesian machine scientist is publicly available as a repository from the following URL: https://bitbucket.org/rguimera/machine-scientist/.

References

- Džeroski and Todorovski (2007) S. Džeroski and L. Todorovski, eds., Computational Discovery of Scientific Knowledge, Lecture Notes in Artificial Intelligence (Springer, 2007).

- Schmidt and Lipson (2009) M. Schmidt and H. Lipson, Science 324, 81 (2009).

- Evans and Rzhetsky (2010) J. Evans and A. Rzhetsky, Science 329, 399 (2010).

- Brunton et al. (2016) S. L. Brunton, J. L. Proctor, and J. N. Kutz, Proc. Natl. Acad. Sci. U.S.A. 113, 3932 (2016).

- Guimerà et al. (2020) R. Guimerà, I. Reichardt, A. Aguilar-Mogas, F. A. Massucci, M. Miranda, J. Pallarès, and M. Sales-Pardo, Sci. Adv. 6, eaav6971 (2020).

- Udrescu and Tegmark (2020) S.-M. Udrescu and M. Tegmark, Sci. Adv. 6, eaay2631 (2020).

- Note (1) Here and throughout this article, we refer to closed-form models as those that are expressed in terms of a relatively small number of basic functions, such as addition, multiplication, trigonometric functions, etc.

- Gentile et al. (2021) A. A. Gentile, B. Flynn, S. Knauer, N. Wiebe, S. Paesani, C. E. Granade, J. G. Rarity, R. Santagati, and A. Laing, Nat. Phys. 17, 837 (2021).

- Reichardt et al. (2020) I. Reichardt, J. Pallarès, M. Sales-Pardo, and R. Guimerà, Phys. Rev. Lett. 124, 084503 (2020).

- Cranmer et al. (2020) M. Cranmer, A. Sanchez Gonzalez, P. Battaglia, R. Xu, K. Cranmer, D. Spergel, and S. Ho, in Advances in Neural Information Processing Systems, Vol. 33, edited by H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin (Curran Associates, Inc., 2020) pp. 17429–17442.

- Note (2) The assumption of Gaussian noise is standard in regression and symbolic regression problems, and it allows us to write the likelihood as in Eq. (12). In principle, one could assume other noise structures (for example, multiplicative noise) or even more general likelihoods, but these would be hard to justify in the context of regression and symbolic regression/model discovery. In any case, we do not expect that the qualitative behavior described in the manuscript should change in any way.

- Zdeborová and Krzakala (2016) L. Zdeborová and F. Krzakala, Adv. Phys. 65, 453 (2016).

- Schwarz (1978) G. Schwarz, Ann. Stat. 6, 461 (1978).

- Grünwald (2007) P. D. Grünwald, The Minimum Description Length Principle (The MIT Press, Cambridge, Massachusetts, 2007).

- Cox (1946) R. T. Cox, Am. J. Phys. 14, 1 (1946).

- Jaynes (2003) E. T. Jaynes, Probability Theory: The Logic of Science (Cambridge University Press, 2003).

- Mézard and Montanari (2009) M. Mézard and A. Montanari, Information, Physics, and Computation (Oxford University Press, 2009).

- Ricci-Tersenghi et al. (2019) F. Ricci-Tersenghi, G. Semerjian, and L. Zdeborová, Phys. Rev. E 99, 042109 (2019).

- Maillard et al. (2020) A. Maillard, B. Loureiro, F. Krzakala, and L. Zdeborová, in Advances in Neural Information Processing Systems, Vol. 33, edited by H. Larochelle, M. Ranzato, R. Hadsell, M. Balcan, and H. Lin (Curran Associates, Inc., 2020) pp. 11071–11082.

- Note (3) Note that, although the origin of description lengths is not arbitrary, this choice is innocuous as long as we are only concerned with comparisons between models.

- Rudin (2019) C. Rudin, Nat. Mach. Intell. 1, 206 (2019).

- Carleo et al. (2019) G. Carleo, I. Cirac, K. Cranmer, L. Daudet, M. Schuld, N. Tishby, L. Vogt-Maranto, and L. Zdeborová, Rev. Mod. Phys. 91, 045002 (2019).

- Engel and den Broeck (2004) A. Engel and C. Van den Broeck, Statistical Mechanics of Learning (Cambridge University Press, Cambridge, UK, 2004).

- Aubin et al. (2020) B. Aubin, F. Krzakala, Y. Lu, and L. Zdeborová, in Advances in Neural Information Processing Systems, Vol. 33, edited by H. Larochelle, M. Ranzato, R. Hadsell, M. Balcan, and H. Lin (Curran Associates, Inc., 2020) pp. 12199–12210.

- Mignacco et al. (2022) F. Mignacco, F. Krzakala, Y. M. Lu, P. Urbani, and L. Zdeborová, in Proceedings of the 37th International Conference on Machine Learning, ICML’20 (JMLR.org, 2022).

- Guimerà and Sales-Pardo (2009) R. Guimerà and M. Sales-Pardo, Proc. Natl. Acad. Sci. U. S. A. 106, 22073 (2009).

- Decelle et al. (2011) A. Decelle, F. Krzakala, C. Moore, and L. Zdeborová, Phys. Rev. Lett. 107, 065701 (2011).

- Krzakala et al. (2016) F. Krzakala, F. Ricci-Tersenghi, L. Zdeborová, R. Zecchina, E. W. Tramel, and L. F. Cugliandolo, eds., Statistical Physics, Optimization, Inference, and Message-Passing Algorithms, 1st ed. (Oxford, UK, 2016).

- Vallès-Català et al. (2018) T. Vallès-Català, T. P. Peixoto, M. Sales-Pardo, and R. Guimerà, Phys. Rev. E 97, 062316 (2018).

- Peixoto (2020) T. P. Peixoto, “Advances in network clustering and blockmodeling,” (John Wiley & Sons Ltd, 2020) Chap. Bayesian stochastic blockmodeling.

- Ando (2010) T. Ando, Bayesian model selection and statistical modeling (CRC Press, 2010).

- Mondelli and Montanari (2019) M. Mondelli and A. Montanari, Foundations of Computational Mathematics 19, 703 (2019).

- Barbier et al. (2019) J. Barbier, F. Krzakala, N. Macris, L. Miolane, and L. Zdeborová, Proc. Natl. Acad. Sci. U.S.A. 116, 5451 (2019).

Acknowledgements.

This research was funded by project PID2019-106811GB-C31 from the Spanish MCIN/AEI/10.13039/501100011033 and by the Government of Catalonia (2017SGR-896).

Author contributions

MS-P and RG designed the research. OF-F, IR, HDLR, and RG wrote code and ran computational experiments. All authors analyzed data. OF-F, IR, MS-P, and RG wrote the manuscript.

Competing interests

The authors declare no competing interests.