¹ Email: huayang@hust.edu.cn
² College of Urban Transportation and Logistics, Shenzhen Technology University, Shenzhen 518118, China
³ School of Science and Engineering, Tsinghua University, Beijing 100084, China

GelSplitter: Tactile Reconstruction from Near Infrared and Visible Images

Abstract

The GelSight-like visual tactile (VT) sensor has gained popularity as a high-resolution tactile sensing technology for robots, capable of measuring touch geometry using a single RGB camera. However, developing multi-modal perception for VT sensors remains a challenge because of this single camera. In this paper, we propose GelSplitter, a new framework for multi-modal VT sensing that uses synchronized multi-modal cameras and more closely resembles human tactile receptors. Furthermore, we focus on 3D tactile reconstruction and implement a compact sensor structure whose size remains comparable to state-of-the-art VT sensors, even with the addition of a prism and a near infrared (NIR) camera. We also design a photometric fusion stereo neural network (PFSNN), which estimates the surface normals of objects and reconstructs touch geometry from both infrared and visible images. Our results demonstrate that fusing RGB and NIR images yields higher accuracy than using RGB images alone. Additionally, the GelSplitter framework allows flexible configuration of different camera combinations, such as RGB and thermal imaging.

Keywords:

Visual tactile · Photometric stereo · Multi-modal fusion

1 Introduction

Tactile perception is an essential aspect of robotic interaction with the natural environment [1]. As a direct means for robots to perceive and respond to physical stimuli, it enables them to perform a wide range of tasks, such as touch detection [5], force estimation [4], robotic grasping [12] and gait planning [25], and to interact with humans and other objects. While human skin easily translates the geometry of a contacted object into nerve impulses through tactile receptors, robots struggle to achieve the same level of tactile sensitivity, especially in multi-modal tactile perception.

Visual tactile (VT) sensors such as the GelSight family [28, 22, 6] are a haptic sensing technology that has become popular for its dense, accurate, and high-resolution measurements, using a single RGB camera to measure touch geometry. However, relying on an RGB-only image sensor restricts the multi-modal perception of VT sensors. RGB and near infrared (NIR) image fusion is an active topic in image enhancement, in which IR images are used to enhance RGB image detail and improve measurement accuracy [8, 32]. Motivated by this, we propose a multi-modal image fusion VT sensor to enrich the tactile senses of robots.

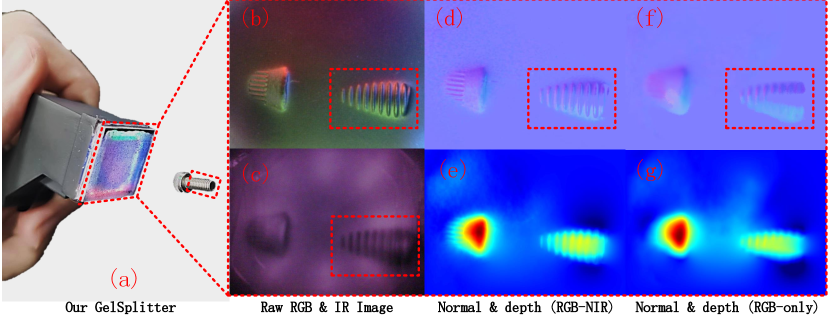

In this paper, we present GelSplitter, which uses RGB-NIR fusion to address the above challenge. This novel design integrates a splitting prism to reconstruct tactile geometry from both NIR and RGB cameras. GelSplitter offers a framework for implementing multi-modal VT sensors, which can further enhance the tactile capabilities of robots, as shown in Fig. 1. Additionally, the framework allows flexible configuration of different camera combinations, such as RGB and thermal imaging. In summary, our contributions are threefold:

- We propose GelSplitter, a new framework for designing multi-modal VT sensors.
- Based on this framework, we focus on the task of 3D tactile reconstruction and fabricate a compact sensor structure whose size remains comparable to state-of-the-art VT sensors, even with the addition of a prism and a camera.
- We implement a photometric fusion stereo neural network (PFSNN) that estimates the surface normals of objects and reconstructs touch geometry from both infrared and visible images. Splitting imaging addresses the common issue of data alignment between the RGB and IR cameras. Our results demonstrate that our method outperforms RGB-only VT sensing.

The rest of the paper is organized as follows. Sect. 2 briefly reviews related work on VT sensors and multi-modal image fusion. Sect. 3 describes the design and fabrication of the GelSplitter. Sect. 4 presents the PFSNN together with the experimental results that verify the performance of the proposed method, and Sect. 5 concludes the paper.

2 Related Work

2.1 VT Sensor

GelSight [28] is a well-established VT sensor that operates like a pliable mirror, transforming physical contact or pressure distribution on its reflective surface into tactile images captured by a single camera. Building on the GelSight framework, various VT sensors [9, 22, 23] have been designed to meet diverse application requirements [18, 16, 17, 10]. Inspired by GelSight, DIGIT [13] improves on previous VT sensors by miniaturizing the form factor so that it can be mounted on multi-fingered hands.

Additionally, some studies explore the materials and patterns of the reflective surface to gather other modalities of touch information. For example, FingerVision [27] provides multi-modal sensation with a completely transparent skin. HaptiTemp [2] applies blue, orange, and black thermochromic pigments, with thresholds of 31 °C, 43 °C, and 50 °C respectively, to the gel material to enable high-resolution temperature measurement. DelTact [31] adopts an improved dense random color pattern to track contact deformation with high accuracy.

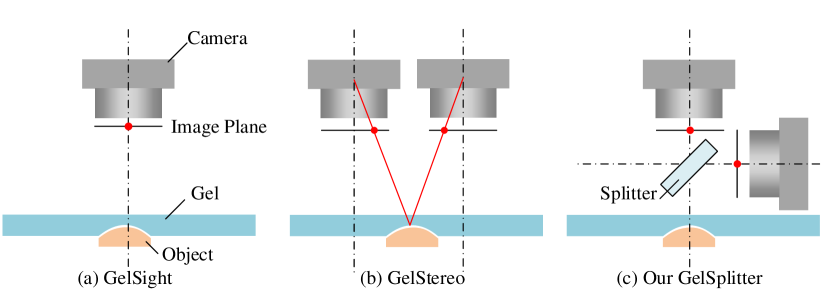

The VT sensors mentioned above are based on a single RGB camera. Inspired by binocular stereo vision, GelStereo [6] uses two RGB cameras to measure tactile geometry. GelStereo has since been extended to six-axis force/torque estimation [30] and geometry measurement [7, 11].

Current VT sensors commonly use a single type of camera, which keeps the sensor small and the optical path simple. Binocular stereo with two RGB cameras effectively estimates depth from disparity by triangulation. However, data alignment is a common issue for images from different kinds of cameras [3], because multi-modal sensors naturally have different extrinsic parameters between modalities, such as lens parameters and relative position. In this paper, a splitting prism creates two identical imaging windows, which are filtered into an RGB component (650 nm bandpass filter) and an IR component (940 nm narrowband filter).

2.2 Multi-modal Image Fusion

Multi-modal image fusion is a fundamental task for robot perception, healthcare and autonomous driving [26, 21]. However, high raw-data noise, low information utilisation and unaligned multi-modal sensors make good performance difficult to achieve. In these applications, different types of data are captured by different sensors, such as infrared (IR) and RGB images [32], computed tomography (CT) and positron emission tomography (PET) scans [19], and LIDAR point clouds and RGB images [15].

Fusing NIR and RGB images typically improves image quality and supplies information missing from the RGB image. DenseFuse [14] proposes an encoder network composed of convolutional layers, a fusion layer, and a dense block to extract more useful features from the source images. DarkVisionNet [20] uses a deep structure to extract clear structural details in a deep multi-scale feature space rather than in the raw input space. MIRNet [29] adopts a multi-scale residual block to learn an enriched set of features that combines contextual information from multiple scales while preserving high-resolution spatial details.

Data alignment is another important aspect of multi-modal image fusion. Generally, the targets observed by multiple sensors lie in different coordinate systems, and the sensors have different data rates. To use heterogeneous information effectively and obtain simultaneous target information, the data must be mapped onto a unified coordinate system with proper spatio-temporal alignment [24, 21].

Following the above literature, PFSNN is designed for the GelSplitter to complete the information missing from the RGB image with the NIR image. It generates refined normal maps and depth maps. In addition, a splitting prism is embedded to unify the image planes and optical centres of the different cameras and thereby align the multi-modal data.

3 Design and Fabrication

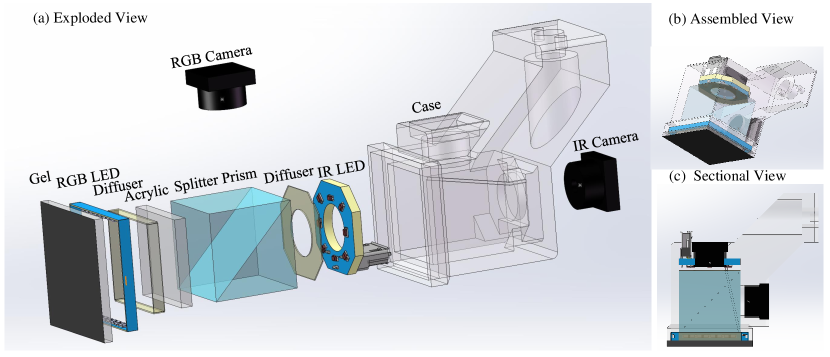

Our design aims to achieve high-resolution 3D tactile reconstruction while maintaining a compact shape. Fig. 3 shows the components and schematic diagram of the sensor. Below, we describe the design principles and lessons learned for each sensor component.

3.1 Prism and Filters

To capture the NIR and RGB images, we prepared a splitting prism, a bandpass filter, a narrowband filter and a diffuser. These components ensure that the images are globally and homogeneously illuminated.

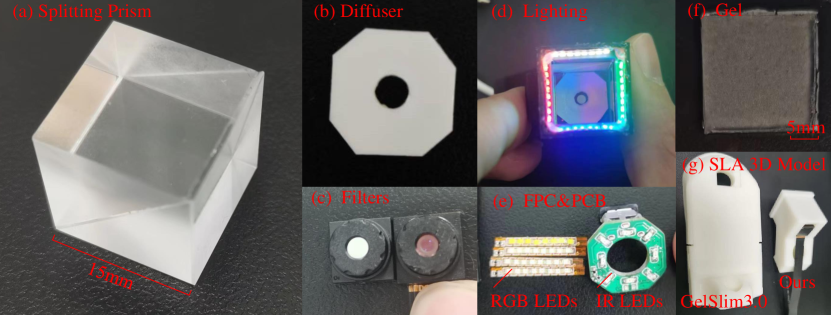

The splitting prism is a cube with a side length of 15 mm, as shown in Fig. 4 (a). It has a splitting ratio of 1:1 and a refractive index of 1.5168, creating two identical viewports. Of the six faces of the cube, the two facing the cameras and the one facing the gel are left clear; the remaining three are painted black to reduce secondary reflections.

The diffuser produces more even global illumination by spreading the light throughout the scene. The octagonal diffuser must be optically coupled to the splitting prism to avoid reflections at the air interface, as shown in Fig. 4 (b). The sensor uses “3M Diffuser 3635-70”.

A 650 nm bandpass filter and a 940 nm narrowband filter separate the RGB component from the NIR component. Each filter is integrated with its lens, as shown in Fig. 4 (c).

3.2 Lighting

In this paper, LEDs of five colours illuminate the gel from different directions: red (Type NCD0603R1, wavelength 615–630 nm), green (Type NCD0603W1, wavelength 515–530 nm), blue (Type NCD0603B1, wavelength 463–475 nm), white (Type NCD0603W1) and infrared (Type XL-1608IRC940, wavelength 940 nm), as shown in Fig. 4 (d). All of these LEDs use the 0603 package (1.6 × 0.8 mm).

Moreover, to encode surface gradient information, the LEDs are arranged in two ways. First, red, green, blue and white LEDs are arranged in rows that illuminate the gel from its four sides, so that surface gradients are represented from different angles. Second, infrared LEDs are arranged in a ring above the gel, surrounding the infrared camera. This lets the IR light reach areas shaded from the side lights, supplementing the missing gradient information.
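To see why lights from several directions encode surface gradients, it helps to look at classical Lambertian photometric stereo, which PFSNN replaces with a learned mapping. The sketch below is an illustration under simplifying assumptions, not the authors' method: it assumes a Lambertian gel surface, no shadows, and known light directions. The four directions in `LIGHTS` are hypothetical (three side lights plus one overhead light). Under these assumptions each pixel satisfies I = ρ L n, and a least-squares solve recovers the albedo ρ and the unit normal n.

```python
import numpy as np

def lambertian_normals(intensities, light_dirs):
    """Classical Lambertian photometric stereo (illustration only, not PFSNN).

    intensities: (..., K) per-pixel readings under K lights, assumed shadow-free.
    light_dirs:  (K, 3) light directions (normalised here); K >= 3, non-coplanar.
    Returns unit normals (..., 3) and albedo (...).
    """
    L = light_dirs / np.linalg.norm(light_dirs, axis=1, keepdims=True)
    k = intensities.shape[-1]
    flat = intensities.reshape(-1, k).T              # (K, N): one column per pixel
    g, *_ = np.linalg.lstsq(L, flat, rcond=None)     # solve L @ g = I, g = albedo * normal
    g = g.T.reshape(intensities.shape[:-1] + (3,))
    albedo = np.linalg.norm(g, axis=-1)
    normals = g / np.maximum(albedo, 1e-12)[..., None]
    return normals, albedo

# Hypothetical light directions: three side lights and one overhead light.
LIGHTS = np.array([[1.0, 0.0, 1.0], [-1.0, 0.0, 1.0], [0.0, 1.0, 1.0], [0.0, 0.0, 1.0]])
```

With only three side lights, a pixel shadowed from one of them loses an equation and the 3-unknown system becomes underdetermined; an additional overhead term is one simple way to see how the NIR ring can supplement missing gradient information.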

Finally, we designed the FPC and PCB that drive the LEDs, as shown in Fig. 4 (e). The LED brightness is set with a resistor, and its consistency is verified with a luminance meter.

3.3 Camera

Both the NIR and RGB cameras use the common CMOS sensor OV5640, manufactured by OmniVision Technologies, Inc. The OV5640 supports resolutions from 320×240 to 2592×1944, as well as auto exposure control (AEC) and auto white balance (AWB). Following the experimental setting of GelSight sensors [1], we configure the resolution to 640×480. AEC and AWB are disabled so that the images have a linear response.

To capture sharp images, the focus is adjusted by rotating the lens so that the gel surface lies within the depth of field. Ideally, the optical centres of the two cameras coincide; in practice, however, a small assembly error remains, which requires fine-grained data alignment.

Both the RGB and NIR cameras are calibrated and corrected for lens distortion using Zhang's calibration method as implemented in OpenCV. Random sample consensus (RANSAC) regression is then used to align the checkerboard corners detected in the two cameras' images, achieving data alignment.
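A minimal sketch of RANSAC-based corner alignment between the NIR and RGB images follows. The paper does not state which transform model is fitted, so a 2D affine map is assumed here; corner coordinates are assumed to be already detected and undistorted. The names `ransac_affine`, `n_iters` and `thresh` (in pixels) are hypothetical.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares 2D affine map with dst ≈ [src, 1] @ A, A of shape (3, 2)."""
    X = np.hstack([src, np.ones((len(src), 1))])
    A, *_ = np.linalg.lstsq(X, dst, rcond=None)
    return A

def apply_affine(A, pts):
    """Map (N, 2) points through the affine matrix A."""
    return np.hstack([pts, np.ones((len(pts), 1))]) @ A

def ransac_affine(src, dst, n_iters=500, thresh=1.0, seed=0):
    """Robustly fit an affine map from src corners (e.g. NIR) to dst corners (e.g. RGB)."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        idx = rng.choice(len(src), size=3, replace=False)  # 3 points fix an affine map
        A = fit_affine(src[idx], dst[idx])
        err = np.linalg.norm(apply_affine(A, src) - dst, axis=1)
        inliers = err < thresh
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    # Refit on all inliers of the best hypothesis for the final estimate.
    return fit_affine(src[best_inliers], dst[best_inliers]), best_inliers
```

The minimal sample of three points keeps each hypothesis cheap, and the final refit on all inliers averages out corner-detection noise; an affine model is adequate only if the residual misalignment after the prism is close to a small rotation, scale and shift.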

3.4 Elastomer

Our transparent silicone is coated with diffuse reflective paint. We choose a low-cost food-grade platinum silicone that is mixed at a 1:1 ratio. The silicone is poured into a mould to produce a soft gel mat with a thickness of 1.5 mm. In our experiments, we found that a gel with a Shore hardness of 10A is pliable enough to avoid breakage while still producing observable deformations. For defoaming, an ambient temperature of approximately 10 °C and a vacuum pressure of -0.08 MPa must be maintained.

3.5 SLA 3D Model

We use stereolithography (SLA) 3D printing to create the case. Compared with fused deposition modelling (FDM) printing of PLA, SLA offers the higher precision needed to assemble the splitting prism. Despite the addition of a prism and an NIR camera, our case still maintains a size comparable to the state-of-the-art VT sensor GelSlim 3.0 [22], as shown in Fig. 4 (g).

Table 1. Network architecture of the PFSNN.

| Layer | Operator | Kernel Size | Input Channels | Output Channels |
|---|---|---|---|---|
| 1 | Concat | - | 4 (RGB-NIR) + 4 (Background) | 8 |
| 2 | Conv2d | 1 | 8 | 128 |
| 3 | ReLU | - | 128 | 128 |
| 4 | Conv2d | 1 | 128 | 64 |
| 5 | ReLU | - | 64 | 64 |
| 6 | Conv2d | 1 | 64 | 3 |
| 7 | ReLU | - | 3 | 3 |
| 8 | Tanh | - | 3 | 3 |
| 9 | Normalize | - | 3 | 3 |
| 10 | | - | 3 | 3 |

4 Measuring 3D Geometry

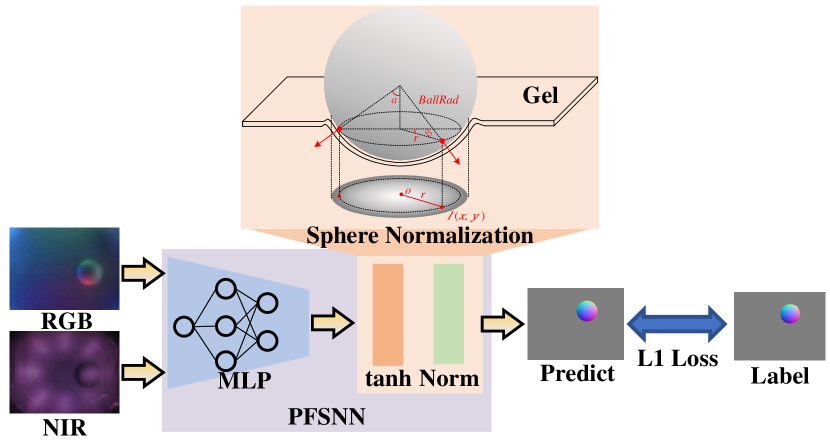

In this section, we introduce PFSNN, which fuses the RGB and NIR images from the GelSplitter and estimates dense normal maps. We then describe the components and implementation details of PFSNN.

4.1 PFSNN

4.1.1 Network Architecture

The architecture of the PFSNN is shown in Table 1. It consists of a multi-layer perceptron (MLP) followed by sphere normalization. Unlike the look-up table (LUT) method [28], the MLP is trainable, and its non-linear fitting capability allows it to combine information from both the RGB and NIR sources.
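Because every convolution in Table 1 has a 1×1 kernel, PFSNN acts as a per-pixel MLP. The NumPy sketch below traces the forward pass layer by layer with random, untrained weights; it only illustrates the data flow and is not the authors' PyTorch implementation. The sphere normalization is written as x / max(‖x‖₂, ε), an assumed form that matches the behaviour of `torch.nn.functional.normalize`; the value of ε and the weight initialisation are placeholders.

```python
import numpy as np

def init_pfsnn_params(rng, sizes=(8, 128, 64, 3)):
    """Random (untrained) weights for the three 1x1 convolutions of Table 1."""
    return [(rng.normal(0.0, np.sqrt(2.0 / c_in), size=(c_in, c_out)), np.zeros(c_out))
            for c_in, c_out in zip(sizes[:-1], sizes[1:])]

def relu(x):
    return np.maximum(x, 0.0)

def pfsnn_forward(rgbn, background, params, eps=1e-12):
    """rgbn, background: (H, W, 4) RGB-NIR frames; returns an (H, W, 3) normal map."""
    x = np.concatenate([rgbn, background], axis=-1)  # layer 1: Concat -> 8 channels
    (w1, b1), (w2, b2), (w3, b3) = params
    x = relu(x @ w1 + b1)  # layers 2-3: 1x1 Conv2d (a per-pixel linear map) + ReLU, 8 -> 128
    x = relu(x @ w2 + b2)  # layers 4-5: 128 -> 64
    x = relu(x @ w3 + b3)  # layers 6-7: 64 -> 3, ReLU kept as listed in Table 1
    x = np.tanh(x)         # layer 8
    # layer 9: sphere normalization, assumed form x / max(||x||_2, eps)
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norm, eps)
```

Treating the network as a per-pixel function is also what makes training on only a handful of images feasible: every pixel of every calibration press is a separate training sample.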

4.1.2 Sphere Normalization

Sphere normalization is derived from a physical model of the spherical press distribution and outputs a unit normal vector map, as shown in Fig. 5. It is defined as:

| (1) |

where is the output of the MLP, and is a small value ( in this paper) to avoid division by zero. Furthermore, the fast Poisson solver [28] can be used to recover depth maps from the normal maps. It is defined as:

| (2) |

4.1.3 Implementation Details.

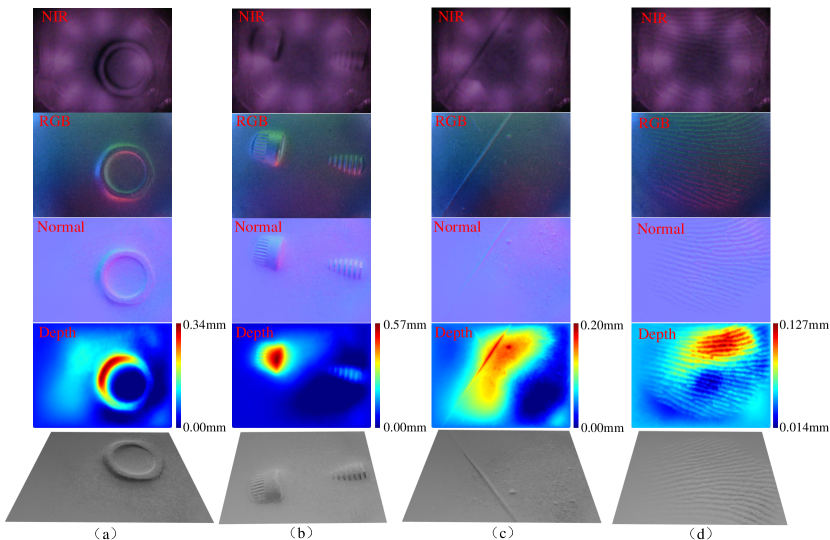

PFSNN requires only a small amount of training data. In this paper, only five images of spherical presses were collected, four for training and one for validation. We test the model on screw caps, screws, hair and fingerprints that PFSNN has never seen before, as shown in Fig. 6.
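Spherical presses are convenient training data because the normals of a sphere are known analytically. The sketch below generates a ground-truth normal map for one press, assuming the contact centre, contact radius and ball radius are already known in pixels (the paper does not state how they are measured) and assuming the sign convention shown in the docstring. The function name is hypothetical.

```python
import numpy as np

def sphere_press_normals(h, w, center, contact_radius_px, ball_radius_px):
    """Analytic normal map for a ball of radius R pressed into flat gel.

    center: (cx, cy) pixel coordinates of the contact centre; requires contact radius <= R.
    Inside the contact circle the gel follows the sphere, n = (dx, dy, sqrt(R^2 - d^2)) / R;
    outside it the gel stays flat, n = (0, 0, 1). The sign convention is an assumption.
    """
    yy, xx = np.mgrid[0:h, 0:w].astype(float)
    dx, dy = xx - center[0], yy - center[1]
    d2 = dx ** 2 + dy ** 2
    inside = d2 < contact_radius_px ** 2
    normals = np.zeros((h, w, 3))
    normals[..., 2] = 1.0
    dz = np.sqrt(np.maximum(ball_radius_px ** 2 - d2, 0.0))
    normals[inside] = np.stack([dx[inside], dy[inside], dz[inside]], axis=-1) / ball_radius_px
    return normals, inside
```

The returned `inside` mask can also restrict the training loss or the evaluation error to the contact region, where the labels carry gradient information.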

All of our experiments are run on an NVIDIA RTX 3070 laptop GPU. Our method is implemented in PyTorch 2.0 and trained with the Adam optimizer. The batch size is set to 64. The learning rate is set to for 20 epochs. No data augmentation is used.

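Table 2. Normal estimation error of LUT and PFSNN with and without NIR.

| Metric | LUT w/o NIR | LUT w. NIR | PFSNN w/o NIR | PFSNN w. NIR |
|---|---|---|---|---|
| MAE (°) | 9.292 | 8.731 | 6.057 | 5.682 |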

4.2 Results of 3D Tactile Reconstruction

3D tactile reconstruction has two main steps. The first step computes the normal map from the RGB-NIR images. The second step applies the fast Poisson solver [28] to integrate the gradients and obtain the depth map, yielding a detailed 3D tactile reconstruction.
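The second step can be sketched with a Poisson solver built on the discrete sine transform (DST), in the spirit of the fast Poisson solver of [28] but not taken from it. Assumptions: normals are converted to gradients with p = -n_x/n_z and q = -n_y/n_z (a common convention), depth is zero just outside the image border, and the DST is written with dense sine matrices for clarity, so this version costs O(HW(H+W)) rather than the O(HW log HW) of a true fast transform. All function names are hypothetical.

```python
import numpy as np

def normals_to_gradients(normals, eps=1e-6):
    """Unit normals (H, W, 3) -> depth gradients p = dz/dx, q = dz/dy (assumed sign convention)."""
    nz = np.maximum(normals[..., 2], eps)  # guard against grazing normals with n_z ~ 0
    return -normals[..., 0] / nz, -normals[..., 1] / nz

def _dst_basis(n):
    """Dense DST-I matrix S[j, k] = sin(pi (j+1)(k+1) / (n+1)); it satisfies S @ S = (n+1)/2 * I."""
    idx = np.arange(1, n + 1)
    return np.sin(np.pi * np.outer(idx, idx) / (n + 1))

def poisson_reconstruct(p, q):
    """Recover depth z (H, W) whose forward differences match p (along columns) and
    q (along rows), assuming z = 0 just outside the image (Dirichlet boundary)."""
    h, w = p.shape
    # Divergence via backward differences, which pairs with forward-difference gradients.
    f = np.diff(p, axis=1, prepend=0) + np.diff(q, axis=0, prepend=0)
    s_h, s_w = _dst_basis(h), _dst_basis(w)
    # Eigenvalues of the 1D second-difference operator with zero boundaries (all negative).
    lam_h = 2.0 * np.cos(np.pi * np.arange(1, h + 1) / (h + 1)) - 2.0
    lam_w = 2.0 * np.cos(np.pi * np.arange(1, w + 1) / (w + 1)) - 2.0
    f_hat = s_h @ f @ s_w                               # forward DST along both axes
    z_hat = f_hat / (lam_h[:, None] + lam_w[None, :])   # invert the Laplacian in the DST domain
    return (2.0 / (h + 1)) * (2.0 / (w + 1)) * (s_h @ z_hat @ s_w)  # inverse DST
```

For a normal map `n` of shape (H, W, 3), `poisson_reconstruct(*normals_to_gradients(n))` returns a depth map in pixel units. The zero-depth boundary suits tactile images, where the gel far from the contact stays flat.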

A screw cap, a screw, hair and a fingerprint are used for qualitative tests of resolution and precision, as shown in Fig. 6. The NIR images behave opposite to the RGB images: regions with larger depth gradients appear darker. Our design therefore lets the NIR image carry information complementary to the RGB image and illuminate the shaded parts of the RGB image, as shown in Fig. 6 (a). In addition, the split imaging design gives both the normal map and the reconstructed depth map a clear texture, as shown in Fig. 6 (b), where the threads of the screw are clearly reconstructed. Hair strands (diameter approx. 0.05–0.1 mm) and fingerprints (diameter approx. 0.01–0.02 mm) are used to test the minimum resolution of the GelSplitter, as shown in Fig. 6 (c) and (d).

In addition, the GelSplitter can easily be switched between RGB and RGB-NIR modes, which allows a fair comparison, as shown in Tab. 2. The LUT method [28] is employed as a baseline to verify the validity of our method. Adding NIR reduces the normal error by 0.561° for LUT and by 0.375° for PFSNN. PFSNN also outperforms LUT, reducing the error by about 35% in both modes (from 9.292° to 6.057° without NIR, and from 8.731° to 5.682° with NIR).
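Table 2 reports errors in degrees. Assuming MAE denotes the mean angle between predicted and ground-truth unit normals (optionally restricted to the contact region), it can be computed as sketched below; the helper names are hypothetical. The same snippet reproduces the relative reductions implied by Table 2.

```python
import numpy as np

def mean_angular_error_deg(pred, gt, mask=None):
    """Mean angle in degrees between unit normal maps pred and gt of shape (..., 3)."""
    cos = np.clip(np.sum(pred * gt, axis=-1), -1.0, 1.0)  # clip guards arccos against rounding
    angles = np.degrees(np.arccos(cos))
    if mask is not None:
        angles = angles[mask]  # e.g. only pixels inside the contact region
    return float(angles.mean())

def relative_reduction(before, after):
    """Percentage drop from error `before` to error `after`."""
    return 100.0 * (before - after) / before

# MAE values from Table 2, in degrees
TABLE2 = {"LUT w/o NIR": 9.292, "LUT w. NIR": 8.731,
          "PFSNN w/o NIR": 6.057, "PFSNN w. NIR": 5.682}
```

For example, `relative_reduction(TABLE2["LUT w/o NIR"], TABLE2["PFSNN w/o NIR"])` gives about 34.8, and the with-NIR pair gives about 34.9.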

5 Conclusion

In this paper, we proposed GelSplitter, a framework for implementing multi-modal VT sensors. Focusing on 3D tactile reconstruction, we designed a compact sensor structure whose size remains comparable to state-of-the-art VT sensors, even with the addition of a prism and a camera. We also implemented PFSNN to estimate the surface normals of objects and reconstruct touch geometry from both NIR and RGB images. Our experimental results show that fusing NIR with RGB images reduces the normal estimation error compared with RGB images alone, for both the LUT baseline and PFSNN.

Acknowledgments

This work was supported by the Guangdong University Engineering Technology Research Center for Precision Components of Intelligent Terminal of Transportation Tools (Project No.2021GCZX002), and Guangdong HUST Industrial Technology Research Institute, Guangdong Provincial Key Laboratory of Digital Manufacturing Equipment.

References

- [1] Abad, A.C., Ranasinghe, A.: Visuotactile sensors with emphasis on gelsight sensor: A review. IEEE Sensors Journal 20(14), 7628–7638 (2020). https://doi.org/10.1109/JSEN.2020.2979662

- [2] Abad, A.C., Reid, D., Ranasinghe, A.: Haptitemp: A next-generation thermosensitive gelsight-like visuotactile sensor. IEEE Sensors Journal 22(3), 2722–2734 (2022). https://doi.org/10.1109/JSEN.2021.3135941

- [3] Arar, M., Ginger, Y., Danon, D., Bermano, A.H., Cohen-Or, D.: Unsupervised multi-modal image registration via geometry preserving image-to-image translation. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 13407–13416 (2020). https://doi.org/10.1109/CVPR42600.2020.01342

- [4] Bao, L., Han, C., Li, G., Chen, J., Wang, W., Yang, H., Huang, X., Guo, J., Wu, H.: Flexible electronic skin for monitoring of grasping state during robotic manipulation. Soft Robotics 10(2), 336–344 (2023). https://doi.org/10.1089/soro.2022.0014

- [5] Castaño-Amoros, J., Gil, P., Puente, S.: Touch detection with low-cost visual-based sensor. In: International Conference on Robotics, Computer Vision and Intelligent Systems (2021)

- [6] Cui, S., Wang, R., Hu, J., Wei, J., Wang, S., Lou, Z.: In-hand object localization using a novel high-resolution visuotactile sensor. IEEE Transactions on Industrial Electronics 69(6), 6015–6025 (2022). https://doi.org/10.1109/TIE.2021.3090697

- [7] Cui, S., Wang, R., Hu, J., Zhang, C., Chen, L., Wang, S.: Self-supervised contact geometry learning by gelstereo visuotactile sensing. IEEE Transactions on Instrumentation and Measurement 71, 1–9 (2022). https://doi.org/10.1109/TIM.2021.3136181

- [8] Deng, X., Dragotti, P.L.: Deep convolutional neural network for multi-modal image restoration and fusion. IEEE Transactions on Pattern Analysis and Machine Intelligence 43(10), 3333–3348 (2021). https://doi.org/10.1109/TPAMI.2020.2984244

- [9] Donlon, E., Dong, S., Liu, M., Li, J., Adelson, E., Rodriguez, A.: Gelslim: A high-resolution, compact, robust, and calibrated tactile-sensing finger. In: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). pp. 1927–1934 (2018). https://doi.org/10.1109/IROS.2018.8593661

- [10] Fang, B., Long, X., Sun, F., Liu, H., Zhang, S., Fang, C.: Tactile-based fabric defect detection using convolutional neural network with attention mechanism. IEEE Transactions on Instrumentation and Measurement 71, 1–9 (2022). https://doi.org/10.1109/TIM.2022.3165254

- [11] Hu, J., Cui, S., Wang, S., Zhang, C., Wang, R., Chen, L., Li, Y.: Gelstereo palm: a novel curved visuotactile sensor for 3d geometry sensing. IEEE Transactions on Industrial Informatics pp. 1–10 (2023). https://doi.org/10.1109/TII.2023.3241685

- [12] James, J.W., Lepora, N.F.: Slip detection for grasp stabilization with a multifingered tactile robot hand. IEEE Transactions on Robotics 37(2), 506–519 (2021). https://doi.org/10.1109/TRO.2020.3031245

- [13] Lambeta, M., Chou, P.W., Tian, S., Yang, B., Maloon, B., Most, V.R., Stroud, D., Santos, R., Byagowi, A., Kammerer, G., Jayaraman, D., Calandra, R.: Digit: A novel design for a low-cost compact high-resolution tactile sensor with application to in-hand manipulation. IEEE Robotics and Automation Letters 5(3), 3838–3845 (2020). https://doi.org/10.1109/LRA.2020.2977257

- [14] Li, H., Wu, X.J.: Densefuse: A fusion approach to infrared and visible images. IEEE Transactions on Image Processing 28(5), 2614–2623 (2019). https://doi.org/10.1109/TIP.2018.2887342

- [15] Lin, Y., Cheng, T., Zhong, Q., Zhou, W., Yang, H.: Dynamic spatial propagation network for depth completion. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 36, pp. 1638–1646 (2022)

- [16] Liu, S.Q., Adelson, E.H.: Gelsight fin ray: Incorporating tactile sensing into a soft compliant robotic gripper. In: 2022 IEEE 5th International Conference on Soft Robotics (RoboSoft). pp. 925–931 (2022). https://doi.org/10.1109/RoboSoft54090.2022.9762175

- [17] Liu, S.Q., Yañez, L.Z., Adelson, E.H.: Gelsight endoflex: A soft endoskeleton hand with continuous high-resolution tactile sensing. In: 2023 IEEE International Conference on Soft Robotics (RoboSoft). pp. 1–6 (2023). https://doi.org/10.1109/RoboSoft55895.2023.10122053

- [18] Ma, D., Donlon, E., Dong, S., Rodriguez, A.: Dense tactile force estimation using gelslim and inverse fem. In: 2019 International Conference on Robotics and Automation (ICRA). pp. 5418–5424 (2019). https://doi.org/10.1109/ICRA.2019.8794113

- [19] Ma, J., Xu, H., Jiang, J., Mei, X., Zhang, X.P.: Ddcgan: A dual-discriminator conditional generative adversarial network for multi-resolution image fusion. IEEE Transactions on Image Processing 29, 4980–4995 (2020). https://doi.org/10.1109/TIP.2020.2977573

- [20] Shuangping, J., Bingbing, Y., Minhao, J., Yi, Z., Jiajun, L., Renhe, J.: Darkvisionnet: Low-light imaging via rgb-nir fusion with deep inconsistency prior. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 36, pp. 1104–1112 (2022)

- [21] Singh, S., Singh, H., Bueno, G., Deniz, O., Singh, S., Monga, H., Hrisheekesha, P., Pedraza, A.: A review of image fusion: Methods, applications and performance metrics. Digital Signal Processing 137, 104020 (2023). https://doi.org/10.1016/j.dsp.2023.104020

- [22] Taylor, I.H., Dong, S., Rodriguez, A.: Gelslim 3.0: High-resolution measurement of shape, force and slip in a compact tactile-sensing finger. In: 2022 International Conference on Robotics and Automation (ICRA). pp. 10781–10787 (2022). https://doi.org/10.1109/ICRA46639.2022.9811832

- [23] Wang, S., She, Y., Romero, B., Adelson, E.: Gelsight wedge: Measuring high-resolution 3d contact geometry with a compact robot finger. In: 2021 IEEE International Conference on Robotics and Automation (ICRA). pp. 6468–6475 (2021). https://doi.org/10.1109/ICRA48506.2021.9560783

- [24] Wang, Z., Wu, Y., Niu, Q.: Multi-sensor fusion in automated driving: A survey. IEEE Access 8, 2847–2868 (2020). https://doi.org/10.1109/ACCESS.2019.2962554

- [25] Wu, X.A., Huh, T.M., Sabin, A., Suresh, S.A., Cutkosky, M.R.: Tactile sensing and terrain-based gait control for small legged robots. IEEE Transactions on Robotics 36(1), 15–27 (2020). https://doi.org/10.1109/TRO.2019.2935336

- [26] Xue, T., Wang, W., Ma, J., Liu, W., Pan, Z., Han, M.: Progress and prospects of multimodal fusion methods in physical human–robot interaction: A review. IEEE Sensors Journal 20(18), 10355–10370 (2020). https://doi.org/10.1109/JSEN.2020.2995271

- [27] Yamaguchi, A., Atkeson, C.G.: Tactile behaviors with the vision-based tactile sensor fingervision. International Journal of Humanoid Robotics 16(03), 1940002 (2019). https://doi.org/10.1142/S0219843619400024

- [28] Yuan, W., Dong, S., Adelson, E.H.: Gelsight: High-resolution robot tactile sensors for estimating geometry and force. Sensors 17(12), 2762 (2017). https://www.mdpi.com/1424-8220/17/12/2762

- [29] Zamir, S.W., Arora, A., Khan, S., Hayat, M., Khan, F.S., Yang, M.H., Shao, L.: Learning enriched features for fast image restoration and enhancement. IEEE Transactions on Pattern Analysis and Machine Intelligence 45(2), 1934–1948 (2023). https://doi.org/10.1109/TPAMI.2022.3167175

- [30] Zhang, C., Cui, S., Cai, Y., Hu, J., Wang, R., Wang, S.: Learning-based six-axis force/torque estimation using gelstereo fingertip visuotactile sensing. In: 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). pp. 3651–3658 (2022). https://doi.org/10.1109/IROS47612.2022.9981100

- [31] Zhang, G., Du, Y., Yu, H., Wang, M.Y.: Deltact: A vision-based tactile sensor using a dense color pattern. IEEE Robotics and Automation Letters 7(4), 10778–10785 (2022). https://doi.org/10.1109/LRA.2022.3196141

- [32] Zhao, Z., Xu, S., Zhang, C., Liu, J., Zhang, J.: Bayesian fusion for infrared and visible images. Signal Processing 177, 107734 (2020). https://doi.org/10.1016/j.sigpro.2020.107734