Generalised Linear Mixed Model Analysis, Maximum Likelihood Fitting, Prediction, Simulation, and Optimal Design in \proglangR

Samuel Watson \PlaintitleGeneralised Linear Mixed Model Analysis, Maximum Likelihood Fitting, Prediction, Simulation, and Optimal Design in R \Shorttitleglmmr packages for R \Abstract

We describe the \proglangR package \pkgglmmrBase and an extension \pkgglmmrOptim. \pkgglmmrBase provides a flexible approach to specifying, fitting, and analysing generalised linear mixed models. We use an object-orientated class system within \proglangR to provide methods for a wide range of covariance and mean functions relevant to multiple applications including cluster randomised trials, cohort studies, spatial and spatio-temporal modelling, and split-plot designs. The class generates relevant matrices and statistics and a wide range of methods including full maximum likelihood estimation of generalised linear mixed models using stochastic Maximum Likelihood, Laplace approximation, power calculation, standard error corrections, random effect and broader data simulation, and access to relevant calculations. The package includes Markov Chain Monte Carlo simulation of random effects, sparse matrix methods, and other functionality to support efficient estimation. The \pkgglmmrOptim package implements a set of algorithms to identify c-optimal experimental designs where observations are correlated and can be specified using the generalised linear mixed model classes. Several examples and comparisons to existing packages are provided to illustrate use of the packages.

\KeywordsMarkov Chain Monte Carlo Maximum Likelihood, Stochastic Approximation Expectation Maximisation, Generalised Linear Mixed Model, Non-linear model, \proglangR, \proglangC++, c-Optimal Experimental design

\PlainkeywordsMarkov Chain Monte Carlo Maximum Likelihood, Stochastic Approximation Expectation Maximisation, Generalised Linear Mixed Model, R, C++, c-Optimal Experimental design \Address

Samuel Watson

Institute for Applied Health Research

University of Birmingham

Birmingham, UK

E-mail:

1 Introduction

Generalised linear mixed models (GLMM) are a highly flexible class of statistical models that incorporate both ‘fixed’ and ‘random’ effects. GLMMs permit the incorporation of latent effects and parameters and allow for complex covariance structures. For example, they are widely used in the analysis of: clustered data, such as from cluster randomised trials, to capture latent cluster means; cohort studies to incorporate temporal correlation between observations on individuals; or, in geospatial statistical models as the realisation of a Gaussian process used to model a latent spatial or spatio-temporal surface. Their use in such a wide variety of statistical applications means there exist several packages and libraries for software including \proglangR, Stata, and SAS to provide relevant calculations for study design, model fitting, and other analyses.

For many types of analysis, such as a power calculation for a cluster trial, there exist multiple different packages each implementing a set of specific models. Users may therefore be required to use multiple packages with different interfaces for a single analysis. A more general and flexible system that provides a wide range of functionality for this model type and that permits users to add and extend functionality may therefore simplify statistical workflows and facilitate more complex analyses.

In this article, we describe the \proglangR package \pkgglmmrBase and an extension \pkgglmmrOptim for the \proglangR programming language. These packages provide a general framework for GLMM specification, including non-linear functions of parameters and data, with calculation of relevant matrices, statistics, and other functions designed to provide useful analyses for a large range of model specifications and to support implementation of other GLMM related software in \proglangR. The aim of this \proglangR package (and its underlying \proglangC++ library) was to provide several features altogether not available in other software packages: MCMC Maximum likelihood model fitting, run-time specification of non-linear fixed effect forms for GLMM models, GLMM model fitting with flexible, easy-to-specify covariance functions, data simulation and model summary features, easy access to relevant matrices and calculations, and support for extensions and algorithms including optimal design algorithms. We summarise and compare existing software that provides functionality in these areas where relevant in each section.

1.1 Generalised linear mixed models

A generalised linear mixed model (GLMM) has the linear predictor for observation

where is the th row of matrix , which is a matrix of covariates, is a vector of parameters, is the th row of matrix , which is the “design matrix” for the random effects, and , where is the covariance matrix of the random effects terms that depends on parameters . In this article we use bold lower case characters, e.g. to represent vectors, normal script lower case, e.g. , to represent scalars, and upper case letters, e.g. , to represent matrices.

The model is then

is a -length vector of outcomes with elements , is a distribution, is the link function such that where is the mean value, and is an additional scale parameter to complete the specification.

When is a distribution in the exponential family, we have:

| (1) | ||||

The likelihood of this model is given by:

| (2) |

The likelihood (2) generally has no closed form solution, and so different algorithms and approximations have been proposed to estimate the model parameters. We discuss model fitting in Section 3. Where relevant we represent the set of all parameters as .

2 A GLMM Model in glmmrBase

The \pkgglmmrBase package defines a \codeModel class using the \pkgR6 class system (Chang, 2022), which provides an encapsulated object orientated programming system for \pkgR. Most of the functionality of the package revolves around the \codeModel class, which interfaces with underlying \proglangC++ classes. A non-exhaustive list of the functions and objects calculated by or a member of the \codeModel class is shown in Table 1. The class also contains two subclasses, the \codecovariance and \codemean classes, which handle the random effects and linear predictor, respectively. \pkgR6 classes allow encapsulating classes to ‘share’ class objects, so that a single \codecovariance object could be shared by multiple \codeModel objects. \pkgR6 also provides familiar object-orientated functionality, including class inheritance, so that new classes can be created that inherit from the \codeModel class to make use of the range of functions. Within \proglangR, we use the \pkgMatrix package for matrix storage and linear algebra for operations, and the Eigen \proglangC++ library for linear algenra functionality in \proglangC++. Linkage between \proglangR and \proglangC++ is provided through the \proglangRcpp and \proglangRcppEigen packages (Eddelbuettel and François, 2011). We describe the base functionality and model specification in this section, and then describe higher-level functionality including model fitting in subsequent sections. The underlying \proglangC++ library is header-only and so can be imported into other projects.

| Method | Description | Sec. | |

| \codecovariance | \codeD | The matrix | 2.4 |

| \codeZ | The matrix | 2.7 | |

| \codechol_D() | Cholesky decomposition of | 2.4 | |

| \codelog_likelihood() | Multivariate Gaussian log-likelihood with zero mean and covariance | 3.5 | |

| \codesimulate_re() | Simulates a vector | 2.13 | |

| \codesparse() | Choose whether to use sparse matrix methods | 2.4 | |

| \codeparameters | The parameters | ||

| \codeformula | Random effects formula | 1 | |

| \codeupdate_parameters() | Updates and related matrices | ||

| \codehsgp() | HSGP approximation parameters | 1 | |

| \codenngp() | NNGP approximation parameters | 1 | |

| \codemean | \codeX | The matrix X | |

| \codeparameters | The parameters | ||

| \codeoffset | The optional model offset | ||

| \codeformula | The fixed effects formula used to create | 2.2 | |

| \codelinear_predictor() | Generates plus offset | ||

| \codeupdate_parameters() | Updates and related matrices | ||

| \codefamily | A \proglangR family object | ||

| \codevar_par | An optional scale parameter | ||

| \codefitted() | Full linear predictor | ||

| \codepredict() | Predictions from the model at new data values | 3.14 | |

| \codesim_data() | Simulates data from the model | 2.13 | |

| \codeSigma() | Generates (or an approximation) | 2.9 | |

| \codeinformation_matrix() | The information matrix | 2.9, 3.9 | |

| \codesandwich() | Robust sandwich matrix | 3.10 | |

| \codesmall_sample_correction() | Bias-corrected variance-covariance matrix of | 3.10 | |

| \codebox() | Inferential statistics for the modified Box correction | 3.10 | |

| \codemarginal() | Marginal effects of covariates | 3.10 | |

| \codepartial_sigma() | Matrices and | 3.10 | |

| \codeuse_attenutation() | Option for improving approximation of | 2.9 | |

| \codepower() | Estimates the power | 2.12 | |

| \codeMCML() | Markov Chain Monte Carlo Maximum Likelihood model fitting | 3 | |

| \codeLA() | Maximum Likelihood model fitting with Laplace approximation | 3.9 | |

| \codemcmc_sample() | Sample using MCMC | 3.3 | |

| \codew_matrix() | Returns | 2.9 | |

| \codedh_deta() | Returns | 2.9 | |

| \codecalculator_instructions() | Prints the calculation instructions for the linear predictor | 2.2 | |

| \codelog_gradient() | Returns either or | 3.3 | |

An example call to generate a new \codeModel is: {CodeChunk} {CodeInput} model <- Model

2.1 Data Generation Tools

We introduce methods to generate data for hierarchical models and blocked designs. As we show in subsequent examples, ‘fake’ data generation is typically needed to specify a particular data structure at the design stage of a study, but can be useful in other circumstances. Nelder (1965) suggested a simple notation that could express a large variety of different blocked designs. The notation was proposed in the context of split-plot experiments for agricultural research, where researchers often split areas of land into blocks, sub-blocks, and other smaller divisions, and apply different combinations of treatments. However, the notation is useful for expressing a large variety of experimental designs with correlation and clustering, including cluster trials, cohort studies, and spatial and temporal prevalence surveys. We have included the function \codenelder() in the package that generates a data frame of a design using Nelder’s notation.

There are two operations:

-

1.

\code

> (or in Nelder’s notation) indicates “clustered in”.

-

2.

\code

* (or in Nelder’s notation) indicates a crossing that generates all combinations of two factors.

The function takes a formula input indicating the name of the variable and a number for the number of levels, such as \codeabc(12). So for example \code cl(4) > ind(5) means in each of five levels of \codecl there are five levels of \codeind, and the individuals are different between clusters. Brackets are used to indicate the order of evaluation. Some specific examples are illustrated in Table 2.

| Formula | Meaning |

|---|---|

| \code person(5) * time(10) | A cohort study with five people, all observed in each of ten periods \codetime |

| \code (cl(4) * t(3)) > ind(5) | A repeated-measures cluster study with four clusters (labelled \codecl), each observed in each time period \codet with cross-sectional sampling and five individuals (labelled \codeind) in each cluster-period. |

| \code (cl(4) > ind(5)) * t(3) | A repeated-measures cluster cohort study with four clusters (labelled \codecl) with five individuals per cluster, and each cluster-individual combination is observed in each time period \codet. |

| \code ((x(100) * y(100)) > hh(4)) * t(2) | A spatial-temporal grid of 100x100 and two time points, with 4 households per spatial grid cell. |

Use of this function produces a data frame: {CodeChunk} {CodeInput} data <- nelder( (j(4) * t(5)) > i(5)) head(data) {CodeOutput} > j t i > 1 1 1 1 > 2 1 1 2 > 3 1 1 3 > 4 1 1 4 > 5 1 1 5 > 6 1 2 6

The data frame shown above may represent, for example, a cluster randomised study with cross-sectional sampling. Such an approach to study design assumes the same number of each factor for each other factor, which is not likely adequate for certain study designs. We may expect unequal cluster sizes, staggered inclusion/drop-out, and so forth, and so a user-generated data set would instead be required. Certain treatment conditions may be specified with this approach including parallel trial designs, stepped-wedge implementation, or factorial approaches by specifying a treatment arm as part of the block structure.

2.2 Linear Predictor

The package allows for flexible specification of . Standard model formulae in \proglangR can be used, for example \codeformula = factor(t) - 1, the package also allows a wide range of specifications including non-linear functions and naming parameters in the function, including allowing the same parameter to appear in multiple places. For example, the function can be specified as \codeformula = int + b_1*exp(b_2*x). Names of data are assumed to be multiplied by a parameter as in the standard linear predictor \code x would produce . To include data without a parameter, it can be wrapped in brackets such as \code (x), which can also provide a way of specifying offsets, although this can also be achieved by directly specifying the offset.

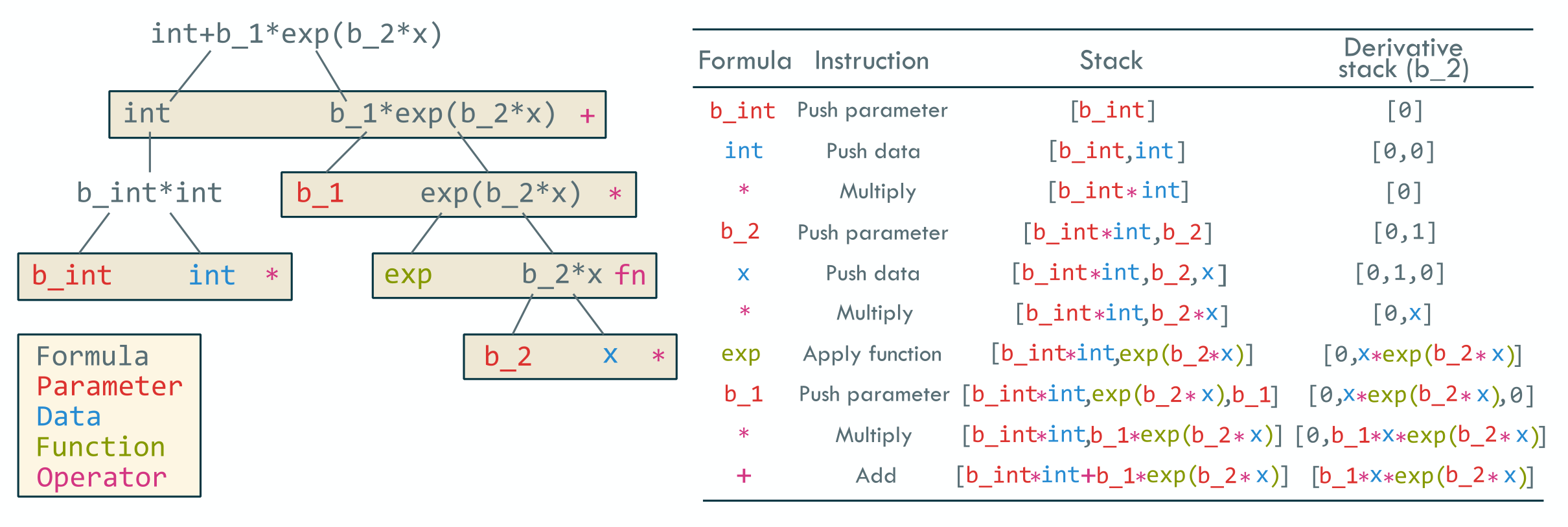

We use a recursive, operator precedence algorithm to parse the formula and generate a reverse Polish notation representing the formula. The algorithm first splits the formula at the first ‘+’ or ‘-’ symbol outside of a bracket, then if there are none, at a multiply (‘*’) or divide (‘/’) symbol outside of a bracket, then a power (‘’̂) symbol, and finally brackets, until a token is produced (e.g. \codeb_2 or \codeexp). The resulting token is first checked against available functions, then if it is not a function it is checked against the column names of the data, and if it is not in the data it is considered the name of a parameter. The resulting tree structure can then be parsed up each of its branches to produce an instruction set. Figure 1 shows an example of the formula parsing algorithm, and calculation of the value of the formula. This scheme also allows for an auto-differention approach to obtain the first and second derivatives where required.

2.3 Covariance specification

We adapt and extend the random effects specification approach of the popular \proglangR package \pkglme4 (Bates et al., 2015) and other related packages, building on the flexible formula parsing and calculation described above, to allow for relatively complex structures through the specification of a covariance function (see Section 3 for a summary of related packages). A covariance function is specified as an additive formula made up of components with structure \code(z|f(j)). The left side of the vertical bar specifies the covariates in the model that have a random effects structure; using \code(1|f(j)) specifies a ‘random intercept’ model. The right side of the vertical bar specifies the covariance function \codef for that term using variable \codej named in the data. Multiple covariance functions on the right side of the vertical bar are multiplied together, i.e., \code(1|f(j)*g(t)). The currently implemented functions (as of version 0.6.1) are listed in Table 3.

| Function | Code | ||

|---|---|---|---|

| Group membership | \codegr(x) | ||

| Exponential | \codefexp(x) | ||

| \codefexp0(x) | |||

| Squared Exponential | \codesqexp(x) | ||

| \codesqexp0(x) | |||

| Autoregressive order 1 | \codear(x) | ||

| Bessel | > 0 | \codebessel(x) | |

| Matern | \codematern(x) | ||

| Compactly supported* | |||

| Truncated Power 2 | , | \codetruncpow2(x) | |

| Truncated Power 3 | , | \codetruncpow3(x) | |

| Truncated Power 4 | , | \codetruncpow4(x) | |

| Cauchy | , | \codecauchy(x) | |

| Cauchy 3 | , | \codecauchy3(x) | |

| Gaussian process | |||

| approximations | |||

| Nearest Neighbour | See section 2.6 | \codenngp_*(x) | |

| Hilbert Space | See section 2.6 | \codehsgp_*(x) |

One combines smaller functions to provide the desired overall covariance function. For example, for a stepped-wedge cluster randomised trial we could consider the standard specification (see, for example, Li et al. (2021)) with an exchangeable random effect for the cluster level (\codej), and a separate exchangeable random effect for the cluster-period (with \codet representing the discrete time period), which would be \code (1|gr(j))+(1|gr(j,t)). Alternatively, we could consider an autoregressive cluster-level random effect that decays exponentially over time so that in a linear mixed model, for person in cluster at time , , for , for , and for . This function would be specified as \code (1|gr(j)*ar1(t)). We could also supplement the autoregressive cluster-level effect with an individual-level random intercept, where \codeind specified the identifier of the individual, as \code (1|gr(j)*ar1(t)) + (1|gr(ind)), and so forth. To add a further random parameter on some covariate \codex we must add an additional term, for example, \code (1|gr(j)*ar1(t)) + (1|gr(ind)) + (x|gr(ind)). As another example, to implement a spatial Gaussian process model with exponential covariance function and two Cartesian coordinates \codex and \codey with autoregressive temporal decay we can specify \code (1|ar(t)*fexp0(x,y).

2.3.1 Covariance function parameters

The \codecovariance argument of the call to \codeModel is optional and if used, is typically used to specify the values of the parameters in the model. It can also receive a list, which can contain covariance function parameters, a random effects specification, and a particular data frame. Where parameter values are provided, the elements of the vector correspond to each of the functions in the covariance formula in the order they are written. For example,

-

•

Formula: \code (1|gr(j))+(1|gr(j,t)); parameters: \codec(0.05,0.01) describes the covariance function for

-

•

Formula: \code (1|gr(j)*fexp0(t)); parameters: \codec(0.05,0.8) describes the covariance function

The Euclidean distance is used for all the functions. For example \code (1|fexp0(x,y)) will generate a covariance matrix where the covariance between two observations with positions and is where is the Euclidean distance. For some of the covariance functions, we provide two parameterisations (for example, \codefexp and \codefexp0). The aim of these functions is to provide a version that is compatible with other functions with leading covariance parameters as, for example, a model specified as \codegr(j)*fexp(t) would not be identifiable as there would be two free parameters multiplying the exponential function.

2.4 Covariance Calculation and Storage

The formula specifying the random effects is translated into a form that can be used to efficiently generate the value at any position in the matrix so that it can be quickly updated when the parameter values change, which facilitates functionality such as model fitting when using an arbitrary combination of covariance functions.

The matrices and are stored using sparse compressed row storage (CRS) format since for a large number of cases and its Cholesky factorisation are sparse. For example, a large number of covariance function specifications and their related study designs lead to a block diagonal structure for :

| (3) |

Internally, the formula into parsed into blocks, both determined by the presence of a \codegr function in any particular random effect specification, and for each additive component of the specification. These blocks may be of differing dimensions and have different formulae and parameters. The data defining each element is a Euclidean distance and within each (likely dense) block, only the data for the strictly lower triangular portion needs to be stored. These distances are calculated once and stored in a flat data structure. We use a reverse Polish notation for to specify the instructions for a given block, as with the linear predictor, along with parameter indices and other necessary information.

By default, the Cholesky decomposition , such that , is calculated using a sparse matrix LDL algorithm (Davis, 2005), which also provides an efficient forward substitution algorithm for computing quadratic forms in the multivariate Gaussian likelihood (see Section 3). Additionally, an efficient permutation is calculated on instantiation of the class using the approximation minimum degree algorithm (Furrer et al., 2006; Amestoy et al., 2004), which may provide a performance gain when factorising the matrix by ensuring the resulting factorisation is sparse. We provide a basic \proglangC++ sparse matrix class, the LDL decomposition, and basic operations in the \pkgSparseChol package for \proglangR.

As an alternative we can directly exploit the block diagonal structure of . We can directly generate the decomposition of each block separately. We use the Cholesky–Banachiewicz algorithm for this purpose. Many calculations can also be broken down by block, for example . This example can also be further simplified using a forward substitution algorithm (see Section 3.5). To switch between sparse and dense matrix methods, one can use \codemodel$sparse(TRUE) and \codemodel$sparse(FALSE), respectively. The default is to use sparse methods.

2.5 Compactly Supported Covariance Functions

For many types of GLMM, the matrix is dense and not blocked or sparse. For example, geospatial statistical models often specify spatial or spatio-temporal Gaussian process models for the random effects (Diggle et al., 1998). For even moderately sized data sets, this can result in very slow model fitting for the multivariate Gaussian likelihood. There are several approaches to reducing model fitting time. One approach is to use a compactly supported covariance function that leads to a sparse matrix while still approximating a Gaussian process. This method can be viewed as ‘covariance tapering’ (Kaufman et al., 2008), although the approach implemented here is what they describe as the ‘one taper’ method, which may be biased in some circumstances. As shown in Table 3, we include several compactly supported and parameterised covariance functions, which are described in Gneiting (2002). A covariance function with compact support has value zero beyond some ‘effective range’. Using these functions can result in a sparse matrix , which is then amenable to the sparse matrix methods in this package. To implement these functions with an effective range of , beyond which the covariance is zero, one must divide each variable by . For example, if there are two spatial dimensions, \codex and \codey, in the data frame \codedata then one would create \codedata$xr <- data$x/r, and equivelently for \codey. Then, one could use the covariance function, for example, \code truncpow3(xr,yr).

2.6 Gaussian Process Approximations

As an alternative to compactly supported covariance functions, one can instead use a Gaussian process approximation for large, dense covariance matrices. We provide two such approximations in this package.

2.6.1 Nearest Neighbour Gaussian Process

The multivariate Gaussian likelihood can be rewritten as the product of the conditional densities:

Vecchia (1988) proposed that one can approximate by limiting the conditioning sets for each to a maximum size of . For geospatial applications, (Datta et al., 2016a, b) proposed the nearest neighbour Gaussian process (NNGP), in which the conditioning sets are limited to the ‘nearest neighbours’ of each observation.

Let be the set of up to nearest neighbours of with index less than . The approximation is:

which leads to:

| (4) | ||||

where is the th nearest neighbour of . Equation (4) can be more compactly written as where is a sparse, strictly lower triangular matrix, with a diagonal matrix with entries and (Finley et al., 2019). The approximate covariance matrix can then be written as . We implement the algorithms described by Finley et al. (2019) to generate the matrices and . The cost of generating these matrices is owing to the need to solve linear systems of up to size . An efficient algorithm for calculating the quadratic form in the mulivariate Gaussian likelihood is also used.

A nearest neighbour approximation can be used by prefixing \codenngp_ to the covariance function name with most of the functions in the package. For example, a nearest neighbour Gaussian process approximation using an exponential covariance function in two dimensions can be used by specifying in the formula \code(1|nngp_fexp(x,y)). The approximation parameters including the number of nearest neighbours can be set (or returned) using the function \codemodelnngp().

2.6.2 Hilbert Space Gaussian Process

Low, or reduced, rank approximations aim to approximate the matrix with a matrix with rank . The optimal low-rank approximation is where is a diagonal matrix of the leading eigenvalues of and the matrix of the corresponding eigenvectors. However, the computational complexity of generating the eigendecomposition scales the same as matrix inversion. Solin and Särkkä (2020) propose an efficient method to approximate the eigenvalues and eigenvectors using Hilbert space methods, so we refer to it as a Hilbert Space Gaussian Process (HSGP). Riutort-Mayol et al. (2023) provides further discussion of these methods.

Stationary covariance functions, including those in the Matern class like exponential and squared exponential, can be represented in terms of their spectral densities. For example, the spectral density function of the squared exponential function in dimensions is:

Consider first a unidimensional space with support on . The eigenvalues (which are the diagonal elements of ) and eigenvectors (which form the columns of ) of the Laplacian operator in this domain are:

and

Then the approximation in one dimension is

where . This result can be generalised to multiple dimensions. The total number of eigenvalues and eigenfunctions in multiple dimensions is the combination of all univariate eigenvalues and eigenfunctions over all dimensions. The matrix does not depend on the covariance parameters are can be pre-computed, so only the product needs to be re-calculated during model fitting, which scales as . Riutort-Mayol et al. (2023) provide a detailed analysis of the reduced rank GP for unidimensional linear Gaussian models, examining in particular how the choice of and affect performance of posterior inferences and model predictions.

The HSGP is only currently available with the exponential or squared expoential covariance functions as either \codehsgp_fexp(x) or \codehsgp_sqexp(x). The approximation parameters including the number of basis functions and the boundary condition can be set (or returned) using the function \codemodelhsgp().

2.7 Computation of matrix Z

The matrix is constructed in a similar way as other packages, such as described for \pkglme4 (Bates et al., 2015). is comprised of the matrices corresponding to each block . For a model formula \code(1|f(x1,x2,…)), the dimension of the corresponding matrix is rows and number of columns equal to the number of unique rows of the combination of the variables in \codex1,x2,…. The th row and th column of is then equal to 1 if the th individual has the value corresponding to the th unique combination of the variables and zero otherwise. For formulae specifying random effects on covariates (“variable slopes models”), e.g. \code(z|f(x1,x2,…)), then the th row and th column of is further multiplied by . is also stored in CRS format and uses sparse matrix methods for multiplication and related calculations.

2.8 Additional Arguments

For Gaussian models, and other distributions requiring an additional scale parameter , one can also specify the option \codevar_par which is the conditional variance at the individual level. The default value is 1. Currently (version 0.6.1), the package supports the following families (link functions): Gaussian (identity, log), Poisson (log, identity), Binomial (logit, log, probit, identity), Gamma (log, inverse, identity), and Beta (logit). For the beta distribution one must provide \codeBeta() to the \codefamily argument.

2.9 Approximation of the Covariance Matrix

The \codeModel class can provide an approximation to the covariance matrix with the member function \code$Sigma(). This approximation is also used to calculate the expected information matrix with \code$information_matrix() and estimate power in the member function \code$power() (see Section 2.12). We use the first-order approximation based on the marginal quasi-likelihood proposed by Breslow and Clayton (1993):

| (5) |

where , which are recognisable as the GLM iterated weights (Breslow and Clayton, 1993; McCullagh and Nelder, 1989). For Gaussian-identity mixed models this approximation is exact. The diagonal of the matrix can be obtained using the member function \code$w_matrix(). The information matrix for is:

| (6) |

Zeger et al. (1988) suggest that when using the marginal quasilikelihood, one can improve the approximation to the marginal mean by “attenuating” the linear predictor for non-linear models. For example, with the log link the “attenuated” mean is and for the logit link with . To use “attenuation” one can set \codemod$use_attenutation(TRUE), the default is not to use attenutation.

2.10 Updating Parameters

The parameters of the covariance function and linear predictor can updated using the function \codeupdate_parameters(), which then triggers re-generation of the matrices and updates the data in the underlying \proglangC++ classes: \codemodel$update_parameters( cov.pars = c(0.1,0.9)).

2.11 Class Inheritance

The \pkgR6 class system provides many of the standard features of other object orientated systems, including class inheritance. The classes specified in the \pkgglmmrBase package can be inherited from to provide the full range of calculations to other applications. As an example we can define a new class that has a member function that returns the log determinant of the matrix : {CodeChunk} {CodeInput} CovDet <- R6::R6Class("CovDet", inherit = Covariance, public = list( det = function() return(Matrix::determinant(selfmodulus) )) cov <- CovDetdet() [1] -72.26107 More complex applications may include classes to implement simulation-based analyses that simulate new data and fit a GLMM using one of the package’s model fitting methods.

2.12 Power Calculation

Power and sample size calculations are an important component of the design of many studies, particularly randomised trials, which we use as an example. Cluster randomised trials frequently use GLMMs for data analysis, and hence are the basis for estimating the statistical power of a trial design (Hooper et al., 2016). Different approaches are used to estimate power or calculate sample size given an assumed correlation structure, most frequently using “design effects” (Hemming et al., 2020). However, given the large range of possible models and covariance structures, many software packages implement a narrow range of specific models and provide wrappers to other functions. For example, we identified eight different \proglangR packages available on CRAN or via a R Shiny web interface for calculating cluster trial power or sample size. These packages and their features are listed in Tables 4 and 5. As is evident, the range of models is relatively limited. Beyond this specific study type the R package \pkgsimr provides functions to simulate data from GLMMs and estimate statistics like power using Monte Carlo simulation for specific designs.

| Package | Custom Designs | Data simulation | Power by simulation | Power by approx. | Non-simple randomisation | Optimal designs |

| \pkgSWSamp | ✓ | ✓ | ✓ | ✓ | ||

| \pkgsamplingDataCRT | ✓ | ✓ | ✓ | |||

| \pkgShinycRCT | ✓ | ✓ | ||||

| \pkgswCRTdesign | ✓ | ✓ | ||||

| \pkgclusterPower | ✓ | ✓ | ||||

| \pkgCRTdistpower | ✓ | ✓ | ||||

| \pkgswdpwr | ✓ | ✓ | ||||

| \pkgSteppedPower | ✓ | ✓ | ||||

| \pkgglmmrBase | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| Package | Non- canonical link | Binom./ Poisson | Other dist. | Compound symmetry | Temporal decay | Random slopes | Covariates | Other functions |

| \pkgSWSamp | ✓ | ✓ | ||||||

| \pkgsamplingDataCRT | ✓ | |||||||

| \pkgShinycRCT | ✓ | ✓ | ✓ | |||||

| \pkgswCRTdesign | ✓ | ✓ | ||||||

| \pkgclusterPower | ✓ | ✓ | ||||||

| \pkgCRTdistpower | ✓ | |||||||

| \pkgswdpwr | ✓ | ✓ | ✓ | |||||

| \pkgSteppedPower | ✓ | ✓ | ||||||

| \pkgglmmrBase | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

As an alternative, the flexible model specification and range of functionality of \pkgglmmrBase can be used to quickly estimate the power for a particular design and model. The \codeModel class member function \code$power() estimates power by calculating the information matrix described above. The square root of the diagonal of this matrix provides the (approximate) standard errors of . The power of a two-sided test for the th parameter at a type I error level of is given by where is the Gaussian cumulative distribution function. As an example, to estimate power for a stepped-wedge parallel cluster randomised trial (see Hooper et al. (2016)) with 10 clusters and 11 time periods and ten individuals per cluster period, where we use a Binomial-logit model with time period fixed effects set to zero, and use a auto-regressive covariance function with parameters 0.25 and 0.7: {CodeChunk} {CodeInput} data <- nelder( (cl(10) * t(11)) > i(10)) datat > datanew(formula = factor(t) + int - 1 + (1|gr(cl)*ar1(t)), data = data, covariance = c(0.05,0.7), mean = c(rep(0,12),0.5), family = binomial()) model

2.13 Data Simulation

The \codeglmmrBase package also simplifies data simulation for GLMMs. Data simulation is a commonly used approach to evaluate the properties of estimators and statistical models. The \codeModel class has member function \codesim_data(), which will generate either vector of simulated output (argument \codetype="y"), a data frame that combines the linked dataset with the newly simulated data (argument \codetype="data"), or a list containing the simulated data, random effects, and matrices and (argument \codetype="all"). To quickly simulate data new random effects are drawn as and then the random effects component of the model simulated as . Simulation only of the random effects terms can be done with the relevant function in the \codeCovariance class, \code$covariance$simulate_re().

3 Model Fitting

The parameters and standard errors of GLMMs are difficult to estimate in a maximum likelihood context given the intergral in the likelihood in Equation 2. Several approximations and associated software are available for GLMMs, however, there are few options for fitting more complex models. The \codeModel class includes two functions: \codeMCML, for MCML model fitting, and \codeLA, which uses a Laplace approximation, both described below.

3.1 Existing software

Software for fitting generalised linear mixed models has been available in \proglangR environment (R Core Team, 2021) for many years. The widely-used package \pkglme4 provides maximum likelihood and restricted maximum likelihood (REML) estimators (Bates et al., 2015). \pkglme4 builds on similar methods proposed by e.g. Bates and DebRoy (2004) and Henderson (1982). For non-linear models it uses Laplacian approximation or adaptive Gaussian quadrature. However, \pkglme4 only allows for compound symmetric (or exchangable) covariance structures, i.e. group-membership type random effects, and linear, additive linear predictors. Function \codeglmmPQL() in package \pkgMASS (Venables and Ripley, 2002) implements Penalized quasi-likelihood (PQL) methods for GLMMs, but is again limited to the same covariance structures, and linear, additive model structure. The package \pkgnlme (Pinheiro et al., 2022) also uses REML approaches for linear mixed models and approximations for models with mean specifications with non-linear functions of covariates discussed in Lindstrom and Bates (1990). \pkgnlme provides a wider range of covariance structures, such as autoregressive functions, which are also available nested within a broader group structure for linear, additive linear predictors. The package providing Frequentist model estimation of GLMMs with the broadest support for different specifications, including many different covariance functions, is \pkgglmmTMB, which uses the \pkgTMB (Template Model Builder) package to support model specification and implementation of Laplacian Approximation to estimate models, although also only permits linear, additive linear predictors.

Outside of R, GLMM model fitting is also provided in other popular statistical software packages. Stata offers a range of functions for fitting mixed models. The \codextmixed function offers both maximum likelihood and restricted maximum likelihood methods to estimate linear mixed models with multi-level group membership random effect structures and heteroscedasticity. For GLMMs, there is a wide range of models that allow for random effects, many of which are accessed through the \codemeglm suite of commands. Estimation can use Gauss-Hermite quadrature or Laplace approximation, but random effect structures are generally limited to group membership type effects. For the software SAS, \codePROC MIXED, and \codePROC GLIMMIX provide GLMM model fitting using either quadrature or Laplace approximation methods. These commands provide a relatively broad random of random effect coviariance structures, including autoregressive, Toeplitz, and exponential structures. \codePROC NLMIXED allows for non-linear functions of fixed and random effects; it uses a Gaussian quadrature method to approximate the integral in the log-likelihood, and a Newton-Raphson step for empirical Bayes estimation of the random effects. However, \codePROC NLMIXED only allows for group membership random effects. Both Stata and SAS also provide a range of options for standard error estimation and correction. Both software packages are proprietary.

We also provide in-built robust and bias-corrected standard error estimates where required. In R, the packages \pkglmerTest and \pkgpbkrtest provide a range of corrections to standard errors for models fit with \codelme4. The package architecture means that \pkgglmmrBase can provide these standard errors for the range of models available in the package, and generally in less time as they do not require refitting of the model. Stata and SAS can calculate bias-corrected and robust standard error estimates with the commands described above.

We also note that there are also several packages for Bayesian model fitting in R, including \pkgbrms (Bürkner, 2017) and \pkgrstanarm, which interface with the Stan statistical software (described below), and \pkgMCMCglmm. Stan is a fully-fledged probabilistic programming language that implements MCMC, and allows for a completely flexible approach to model specification (Carpenter et al., 2017). Stan can also provide limited maximum likelihood fitting functionalist and related tools for calculating gradients and other model operations.

Evidently, there already exists a good range of support for GLMM model fitting available in \proglangR. \pkgglmmrBase may be seen to provide a complement to existing resources rather than another substitute. As we discuss below, in a maximum likelihood context, the existing software all provide approximations rather than full likelihood model fitting. These approximations are typically accurate and fast, but they can also frequently fail to converge and in more complex models the quality of the approximation may degrade, which has lead to methods for bias corrections and improvements to these approximations (e.g. Breslow and Lin (1995); Lin and Breslow (1996); Shun and McCullagh (1995); Capanu et al. (2013)). \pkgglmmrBase provides an alternative that can be used to support more complex analyses, flexible covariance specification, and provide a comparison to ensure approximations are reliable, while also providing an array of other functionality.

3.2 Stochastic Maximum Likelihood Estimation

Markov Chain Monte Carlo Maximum Likelihood (MCML) are a family of algorithms for estimating GLMMs using the full likelihood that treat the random effect terms as missing data, which are simulated on each iteration of the algorithm. Estimation can then be achieved to an arbitrary level of precision depending on the number of samples used. Approximate methods such as Laplace approximation or quadrature provide significant computational advantages over such approaches, however they may trade-off the quality of parameter and variance estimates versus full likelihood approaches. Indeed, the quality of the Laplace approximation method for GLMMs can deteriorate if there are smaller numbers of clustering groups, smaller numbers of observations to estimate the variance components, or if the random effect variance is relatively large.

The package provides full likelihood model fitting for the range of GLMMs that can be specified using the package via the MCML algorithms described by McCulloch (1997) or stochastic approximation expectation maximisation (SAEM) (Jank, 2006). Each algorithm has three main steps per iteration: draw samples of the random effects using Markov Chain Monte Carlo (MCMC), the mean function parameters are then estimated conditional on the simulated random effects, and then the parameters of the multivariate Gaussian likelihood of the random effects are then estimated. The process is repeated until convergence. We describe each step in turn and optimisations.

3.3 MCMC sampling of random effects

Reliable convergence of the algorithm requires a set of independent samples of the random effects on the th iteration, i.e. realisations from the distribution of the random effect conditional on the observed data, and with fixed parameters. MCMC methods can be used to sample from the ‘posterior density’ . To improve sampling, instead of generating samples of directly, we instead sample from the model:

| (7) | ||||

where , and is the identity matrix. Once samples of are returned, we then transform the samples as .

We use Stan for MCMC sampling. Stan implements Hamiltonian Monte Carlo (HMC) with advances such as the No-U-Turn Sampler (NUTS) (Carpenter et al., 2017; Homan and Gelman, 2014), which automatically ‘tunes‘ the HMC parameters. Stan can generally achieve a good effective sample size per unit time and so lead to better convergence of the MCMCML algorithm than standard MCMC algorithms.

Each iteration of the MCMC sampling must evaluate the log-likelihood (and its gradient). Since the elements of both and are all independent in this version of the model, evaluating the log-likelihood is parallelisable within a single chain, which significantly improves running time.

3.4 Estimation of mean function parameters

There are several methods to generate new estimates of and conditional on , , and . We aim to minimise the negative log-likelihood:

| (8) |

There are three different approaches:

-

•

Monte Carlo Newton Raphson (MCNR): If the linear predictor is linear and additive in the data and parameters, then we can use the explicit formulation:

where the expectations are with respect to the random effects. We estimate the expectations using the realised samples from the MCMC step of the algorithm. For many GLMMs, the likelihoods can be multimodal Searle et al. (1992), which means the MCNR algorithm may fail to converge at all.

-

•

Monte Carlo Quasi-Newton (MCQN): As the gradient is relatively inexpensive to calculate, one can make use of quasi-Newton algorithms, including L-BFGS.

-

•

Monte Carlo Expectation Maximisation (MCEM): We use the BOBYQA algorithm, a numerical, derivative free optimser that allows bounds for expectation maximisation (Powell, 2009).

3.5 Estimation of covariance parameters

The final step of each iteration is to generate new estimates of given the samples of the random effects. We aim to minimise

| (9) |

The multivariate Gaussian density is:

where is the th diagonal element of .

As described in Section 2.5, we can take two approaches to the Cholesky factorisation of matrix , either exploiting the block diagonal structure or using sparse matrix methods. In both cases the quadratic form can be rewritten as , so we can obtain by solving the linear system using forward substitution, which, if is also sparse provides efficiency gains. The log determinant is . In the case of using a block diagonal formulation, we calculate the form:

where and indicates the start and end indexes of the vector, respectively, corresponding to block .

The gradient of the log-likelihood with respect to is:

The quadratic form can similarly be solved by applying forward and backward substitution. The L-BFGS-B algorithm can be used then to minimise the log-likelihood. However, the inversion of and calculation of its partial derivatives may make the gradient computationally demanding. A derivative-free algorithm, BOBYQA, while requiring more function evaluations may be more efficient at solving for the parameter values. Both methods are available.

3.6 Stochastic Approximation Expectation Maximisation

The efficiency of the MCML algorithms can be improved in two ways: first, one can dynamically alter the number of MCMC samples per step, which is discussed in the next section, and second, one can make use of MCMC sample information from previous iterations, reducing the number of samples per iteration. Stochastic Approximation Expectation Maximisation (SAEM) is a Ruppert-Monroe algorithm that can be used to approximate the log likelihoods (8) and (9) (Jank, 2006). For the log-likelihood on the th iteration is:

and equivalently for , where for . This approach makes use of the all the MCMC samples from every iteration, where the contribution of previous values diminish over the iterations depending on the parameter . Larger values of result in faster ‘forgetting’. For some applications, Ruppert-Polyak averaging can further improve convergence of the algorithms (Polyak and Juditsky, 1992), where the estimate of the log-likelihood on iteration is:

3.7 Stopping Criteria and MCMC Sample Sizes

We provide two types of rule for terminating the algorithm. First, one can monitor the differences in the parameter estimates between successive iterations, and terminate the algorithm when the largest difference falls below some tolerance. However, this method may lead to premature termination. A running mean could prevent such issues, however, a perhaps preferable method is to assess the changes in the estimates of the log-likelihood:

An expectation maximisation algorithm is guaranteed to improve the objective function value over time. However, the estimates of the log-likelihood are subject to Monte Carlo error and so may increase or decrease at convergence. Caffo et al. (2005) proposed a stopping criteria that monitors the probability of convergence. The estimated upper bound for the difference is:

where is the quantile of a standard Gaussian distribution and is the estimated standard deviation of . This upper bound will be negative with probability at convergence.

Caffo et al. (2005) use a similar argument to dynamically alter the number of MCMC samples on each iteration to acheive convergence with probability . The propose:

We provide MCML algorithm with and without dynamic sample sizes.

3.8 Model Estimation

The member function \codeMCML fits the model. There are multiple options to control the algorithm and set its parameters; most options are determined by the relevant parameters of the \codeModel object. The default fitting method is SAEM without Ruppert-Polyak averaging, and with the log-likelihood stopping rule with probability 0.95, as this has been found to provide the most efficient and reliable model fitting in many tests.

3.9 Laplace Approximation

We also provide model fitting using a Laplace approximation to the likelihood with the \codeModel member function \codeLA(). We include this approximation as it often provides a faster means of fitting the models in this package. These estimates may be of interest in their own right, but can also serve as useful starting values for the MCML algorithm. We approximate the log-likelihood of the re-parameterised model given in Equation (7) as:

| (10) |

As before, we use the first-order approximation (with or without attenutation), . We iterate model fitting to obtain estimates of , and and then to then obtain estimates of until the values converge. We provide two iterative algorithms for model fitting, using a similar pattern to the MCNR, and MCEM algorithms. First, the values of and are updated. We can either use a scoring algorithm approach, or numerical optimisation. For the former method the score system we use is:

| (11) |

where is a diagonal matrix with elements . Where there are non-linear functions of parameters in the linear predictor, a first-order approximation is used where is replaced by an matrix with row equal to evaluated at the values of . This system of score equations is highly similar to those used in other software packages and articles on approximate inference for GLMMs (e.g. Bates and DebRoy (2004); Bates et al. (2015); Breslow and Clayton (1993)). The alternative approach fits and by minimising the log-likelihood in Equation (10) using the BOBYQA algorithm. In the second step, the variance parameters are updated by again minimising (10), fixing and to their current estimates.

3.10 Standard errors

There are several options for the standard errors of and returned by either \codeLA or \codeMCML and are accessible through a \codeModel object. The currently implemented methods are described here.

3.10.1 GLS standard errors

The default GLS standard errors are given by the square root of the diagonal of . The standard errors for the covariance parameter estimates are estimated from the inverse expected information matrix for , , the elements of which are given by:

Using the expression for given in Section 2.9, we can write

for parameters of the covariance function, and for the variance parameter of a Gaussian distribution. Then, the information matrix for the covariance parameters has elements:

and equivalently for the scale parameter such as . The partial derivatives of matrix can then readily be obtained using the auto-differentiation scheme implemented in the calculation of the elements of the matrix.

3.11 Small sample corrections

The GLS standard errors with maximum likelihood estimate of the model parameters are known to exhibit a small-sample bias and underestimate the standard error when, for example, the number of higher-level groups or clusters is small. The small sample bias exists for two reasons: the standard errors fail to take into account the variability from estimating the variance parameters and ; and, the GLS estimator is itself a biased estimator of the variance of the fixed effect parameters. So the standard GLS standard error can underestimate the sampling error of , resulting in overly narrow confidence intervals. There have been a range of corrections proposed in the literature, typically with regards to the degrees of freedom of reference distributions for related test statistics (e.g. Satterthwaite (1946); Kenward and Roger (1997)). We can also use the tools in this package to generate a bias corrected variance-covariance matrix for using the approach proposed by Kenward and Roger (1997). The correction is valid for restricted maximum likelihood (REML) estimates of and as the standard maximum likelihood estimates of these parameters are biased downwards due to not accounting for the loss of degrees of freedom from estimating (Harville, 1977). At the time of writing, we have not implemented REML with the Laplace approximation methods described in the preceding section. However one can view the estimates of the variance components from the MCML algorithms as representing REML estimates, since the estimated sufficient statistics for do not depend on (Laird and Ware, 1982). Thus, using these estimates and to apply the small sample bias correction, we implement as:

Then, letting and

the bias corrected variance covariance matrix for is

The degrees of freedom correction is also given in Kenward and Roger (1997), which is the same degrees of freedom correction originally proposed by Satterthwaite (1946). The Kenward-Roger correction, though, can over-estimate the standard errors in very small samples in some cases. Kenward and Roger (2009) proposed an improved correction for covariance functions not linear in parameters (such as the autoregressive or exponential functions). The improved correction adds an additional adjustment factor. We have implemented these corrections in the package, which can returned directly from a \codeModel object using the \codesmall_sample_correction() member function, or they can be returned during model fitting by selecting the appropriate option for the \codese argument of the \codeMCML and \codeLA functions. For use in other calculations one can also retrieve the first and second-order partial derivatives of with respect to the covariance function parameters using \codepartial_sigma().

3.12 Modified Box correction

Finally, we also provide the “modified Box correction” for Gaussian-identity GLMMs given by Skene and Kenward (2010).

3.13 Additional inputs and outputs

One can specify weights for the model when generating a new \codeModel object. For binomial models the number of trials can also be passed to the model via the \codetrials argument, and offsets can be given in the same way via the \codeoffset argument.

The \codeMCML and \codeLA functions return an object of (S3) class \codemcml for which print and summary methods are provided. The print function returns standard regression output and basic diagnostics. We implement the conditional Akaike Information Criterion method described by Vaida and Blanchard (2005) for GLMMs, and approximate conditional and marginal R-squared values using the method described by Nakagawa and Schielzeth (2013) for GLMMs. The object returned by \codeMCML also contains other potentially useful output. In particular, there is often interest in the random effects themselves. As these are simulated during the MCMCML algorithm, the final set of samples are returned as a matrix facilitating further analysis.

3.14 Prediction

One can generate predictions from the model at new values of , , and the variables that define the covariance function. Conditional on the estimated or simulated values of , the distribution of the random effects at new values is:

where is the covariance matrix of the random effects at the observed values, is the covariance matrix at the new values, and is the covariance between the random effects at the observed and new values. Where there are multiple samples of , the conditional mean in averaged across them. Following a model fit, we can generate the linear predictor and mean and covariance at new locations using the member function \code$predict(newdata). This differs from the functionality of the function \code$fitted(), which generates the linear predictor (with or without random effects) for the existing model at the observed data locatons, and \code$sim_data(), which simulates outcome data at the existing data values.

4 Examples

We provide two examples illustrating model fitting and related functionality to demonstrate how \pkgglmmrBase may be useful in certain statistical workflows.

4.1 Cluster randomised trial

We consider a stepped-wedge cluster randomised trial (see Hemming et al. (2020), for example) with six cluster sequences and seven time periods, and ten individuals per cluster period cross-sectionally sampled. All clusters start in the control state and in each period one cluster switches to the intervention state. We simulate data from a Poisson-log GLMM with cluster and cluster-period exchangeable covariance function for the example. The Poisson-log model is, for individual in cluster at time :

where is an indicator for treatment status, is the indicator function, and is a random effect. For the data generating process we use and range from -1.5 to -0.3. We specify the exchangeable correlation structure where and . See Li et al. (2021) for a discussion of such models in the context of cluster randomised trials.

We can specify the model as follows, where we have included the parameter values: {CodeChunk} {CodeInput} data <- nelder( (cl(6)*t(7))>i(10)) datat > datanew( formula = int + factor(t)-1 + (1|gr(cl))+(1|gr(cl,t)), covariance = c(0.05, 0.01), mean = c(0.5,seq(-1.5,-0.3,by=0.2)), data = data, family = poisson()) y <- model

4.2 Geospatial data analysis with approximate covariance

As described in Section 2.5 and elsewhere, fitting models with large, dense covariance matrices is computationally intensive. We provide several approximations in this package to reduce fitting times. Here, we provide an example using the nearest neighbour Gaussian process, and generating predictions across an area of interest.

We generate a 600 random points in the square . We then simulate data from a Gaussian-identity model with a spatial Gaussian process with exponential covariance function: {CodeChunk} {CodeInput} n <- 600 df <- data.frame(x = runif(n,-1,1), y = runif(n,-1,1)) sim_model <- Modelsim_data() We then generate a new model with nearest neighbour Gaussian process covariance, and where we name the intercept parameter \codebaseline and include sensible starting values: {CodeChunk} {CodeInput} analysis_model <- Modelset_trace(1) # for verbose output analysis_modelsamps <- 50 fit_sp <- analysis_model

5 C-Optimal Experimental Designs with glmmrOptim

The \pkgglmmrOptim package provides a set of algorithms and methods to solve the c-optimal experimental design problem for GLMMs. More detail on the methods in this package and a comparison of their performance can be found in Watson and Pan (2022).

We assume there are total possible observations indexed by whose data generating process is described by a GLMM described in Section 1.1. The observations (indexes) are grouped into ‘units’, for example clusters or individual people, which are labelled as . These units may contain just a single observation/index. The set of all units is the design space and a ‘design’ is . The optimal experimental design problem is then to find the design of size that minimises the c-optimality function:

| (12) |

where is the information matrix described in Equation 6 associated with design . There is no exact solution to this problem, in part because of the complexity resulting from the non-zero correlation between observations and experimental units.

There are several algorithms that can be used to generate approximate solutions. A full discussion of this problem in the context of GLMMs and evaluation of relevant algorithms is given in (Watson and Pan, 2022) (see also Fedorov (1972); Wynn (1970); Nemhauser and Wolsey (1978); Fisher et al. (1978)). Here, we briefly describe the relevant algorithms and their implementation in the \pkgglmmrOptim package.

5.1 Existing software

The package \pkgglmmrOptim provides algorithms for identifying approximate c-optimal experimental designs when the observations and experimental units may be correlated. In \proglangR, there are several packages that provide related functionality. The \pkgskpr package provides D, I, Alias, A, E, T, and G-optimal, but not c-optimal, designs for models including with correlated observations, but only where the experimental units are uncorrelated. The \proglangR package \pkgAlgDesign also provides algorithms for estimating D, A, and I-optimal experimental designs, including for models with correlated observations, but again without correlation between experimental units. \pkgAlgDesign implements a version of the Greedy Algorithm (described below) for D-optimality. Both packages utilise, among other approaches, the approximation described below in Section 5.3. Other relevant packages include \pkgOptimalDesign, which uses the commercial software \pkgGurobi, and the packages \pkgFrF2 and \pkgconf.design, which consider factorial designs specifically. Only \pkgskpr provides both optimal experimental design algorithms and associated methods like Monte Carlo simulation and power calculation. \pkgglmmrBase can provide power calculations using the functions discussed in Section 2.12, as well as the other suite of methods to interrogate and fit relevant models once an optimal design has been identified, which emphasizes the usefulness of the linked set of packages we present.

5.2 Combinatorial Optimisation Algorithms

There are three basic combinatorial algorithms relevant to the c-optimal design problem. ‘Combinatorial’ here means choosing discrete elements (experimental units) from the set of to minimise Equation (12).

Algorithm 3 describes the ‘local search algorithm’. This algorithm starts with a random design of size and then makes the optimal swap between an experimental unit in the design and one not currently in the design. It proceeds until no improving swap can be made. Algorithm 4 shows the ‘reverse greedy search algorithm’ that instead starts from the complete design space and iteratively removes the worst experimental unit until a design of size is achieved. A third algorithm, the ‘greedy search’ is also included in the package, which starts from a small number of units and iteratively adds the best unit. However, this algorithm performs worse than the other two in empirical comparisons and so is not discussed further here. We offer these three algorithms in the \pkgglmmrOptim package. We allow the user to use any combination of these algorithms in succession. While such combinations do not necessarily have any theoretical guarantees, they may be of interest to users of the package. A more complete discussion of the methods provided by this package can be found in Watson and Pan (2022), with further examples in Watson et al. (2023).

5.2.1 Computation

We use several methods to improve computational time to execute these algorithms. The most computationally expensive step is the calculation of after adding or swapping an experimental unit, since the calculation of the information matrix requires inversion of the covariance matrix (see Equation (6)). However, we can avoid inverting on each step by using rank-1 up- and down-dating.

For a design with observations with inverse covariance matrix we can obtain the inverse of the covariance matrix of the design with one observation removed , as follows. Without loss of generality we assume that the observation to be removed is the last row/column of . We can write as

where is the principal submatrix of , is a column vector of length and is a scalar. Then,

For a design with observations with inverse covariance matrix , we aim now to obtain the inverse covariance matrix of the design . Recall that is a design effect matrix with each row corresponding to an observation. We want to generate . Note that:

where is the column vector corresponding to the elements of with rows in the current design and column corresponding to , and is the scalar . Also now define:

so that

and

and and , both of which are length column vectors. So we can get from using a rank-1 update as and similarly . Using the Sherman-Morison formula:

and

So we have calculated the updated inverse with only matrix-vector multiplication, which has complexity rather than the required if we just took a new inverse of matrix .

Other steps we include to improve efficiency are to check if any experimental units are repeated, if so then we can avoid checking a swap of an experimental unit for itself. Internally, the program only stores the unique combinations of rows of and and tracks the counts of each in or out of the design. Finally, when the function is executed (see Section 5.7), a check is performed to determine whether the experimental units are all uncorrelated with one another. If they are uncorrelated then we can implement an alternative approach to calculating , since we can write the information matrix as:

| (13) |

where we use to indicate the rows of in condition , and the submatrix of in . Thus, rather than using a rank-1 up- or down-date procedure iterated over the observations in each experimental unit, we can calculate the ‘marginal’ information matrix associated with each experimental unit and add or subtract it from as required. This method is generally faster as the number of observations per experimental unit is often much smaller than .

5.3 Approximate Unit Weights

If the experimental units are uncorrelated, we can use a different method to combinatorial optimisation. We assume that all the experimental units in are unique (the software automatically removes duplicates when using this method), and we place a probability measure over them where ; is then an approximate design. The weights can be interpreted as the proportion of ‘effort’ placed on each experimental unit. We can rewrite Equation (13) for the information matrix of this design as:

| (14) |

where the subscript indicates the submatrix relating to the indexes in . The problem is then to find the optimal design .

Holland-Letz et al. (2011) and Sagnol (2011) generalise Elfving’s Theorem (Elfving, 1952), which provides a geometric characterisation of the c-optimal design problem in these terms, to the case of correlation within experimental units. Sagnol (2011) shows that this problem can be written as a second order cone program, and as such be solved with interior point methods for conic optimisation problems. We modify this program to solve Holland-Letz et al. (2011) version of the theorem and include it in the \pkgglmmrOptim package, which we implement using the \pkgCVXR package for conic optimisation.

5.4 Optimal Mixed Model Weights

The final alternative algorithm identifies the optimal mixed model weights. The best linear unbiased estimator for the linear combination can be written as

where is a vector of weights. It can be shown, see Watson, Girling, and Hemming REF, that the experimental unit weights that minimise the bias are generated by Algorithm 3. As before these weights can then be used to generate optimal counts for each experimental unit.

5.5 Rounding

The approximate weighting approaches return the set of weights corresponding to each unique experimental unit. These weights need to be converted into an exact design with integer counts of each experimental unit . The problem of allocating a fixed set of items to categories according to a set of proportions is known as the apportionment problem. There a several rounding methods used for the apportionment problem proposed by the founding fathers of the United States for determining the number of representatives from each state. PUKELSHEIM and RIEDER (1992) show that a modified version of Adams’ method is most efficient when there needs to be at least one experimental unit of each type. In other cases the methods of Hamilton, Webster, or Jefferson may be preferred. We provide the function \codeapportion(), which generates exact designs from all these methods for a desired sample size and set of weights. The output of this function is automatically provided when the approximate weighting method is used.

5.6 Robust c-Optimal Designs

The preceding discussion has assumed that the correct model specification is known. However, in many scenarios there may be multiple plausible models, and a design optimal for one model or set of parameters, may perform poorly for another. Robust optimal design methods aim to produce an (approximate) optimal design over multiple candidate models. We implement a method amendable to the combinatorial algorithms described in Section 5.2 following Dette (1993). Let represent a GLMM model. We assume, following Dette (1993), that the true model belongs to a class of GLMMs and we define a vector , where and , which represents the prior weights or prior probabilities of each model. There are two robust c-optimality criteria we can use. The first:

was proposed by Dette (1993) and Läuter (1974). The second is the weighted mean

Both criteria result in functions with the appropriate properties to ensure the local search algorithm maintains its theoretical guarantees. Dette (1993) generalises Elfving’s theorem to this robust criterion, however, further work is required to ‘doubly’ generalise it to both correlated observations and robust criterion. While there may be a straightforward combination of the work of Dette (1993) and that of Holland-Letz et al. (2011) and Sagnol (2011); translating this into a program for conic optimisation methods is a topic for future research.

5.7 Implementation

The \pkgglmmrOptim package adds an additional \codeDesignSpace class, which references one or more \codeModel objects. The \codeModel objects must represent the same number of observations, with each row of and representing an observation. The other arguments to initialise the class are (optionally) the weights on each design, units of each observation. The observations are assumed to be separate experimental units unless otherwise specified.

The main member function of the \codeDesignSpace class is \codeoptimal, which runs one of the algorithms described above and returns the rows in the optimal design, or weights for each experimental unit, along with other relevant information. If the experimental units are uncorrelated with one another then the approximate weighting method is used by default; combinatorial algorithms can instead be used with the option \codeuse_combin=TRUE. The user can run one or more of the combinatorial algorithms sequentially. The algorithms are numbered as 1 is the local search, 2 is the greedy search, and 3 is the reverse greedy search. Specifying \codealgo=1 will run the local search. Specifying, \codealgo=c(3,1) will first run a reverse greedy search and then run a local search on the resulting design. We note that some combinations will be redundant, for example, running a greedy search after a reverse greedy search will have no effect since the resulting design will already be of size . However, some users may have interest in combining the approaches. A list (one per model in the design space) containing the vectors in Equation (12) must be provided.

For certain problems, an optimal design may include all of the same value of one or more dichotomous covariates, which would result in a non-positive definite information matrix. For example, some models include adjustment for discrete time periods, but not all time periods feature in the subset of observations in the optimal design. The program checks that the information matrix is positive definite at each step, and if not, it reports which columns may be causing the failure. These columns can then be removed using the \coderm_cols argument of the \codeoptimal function.

5.8 Examples

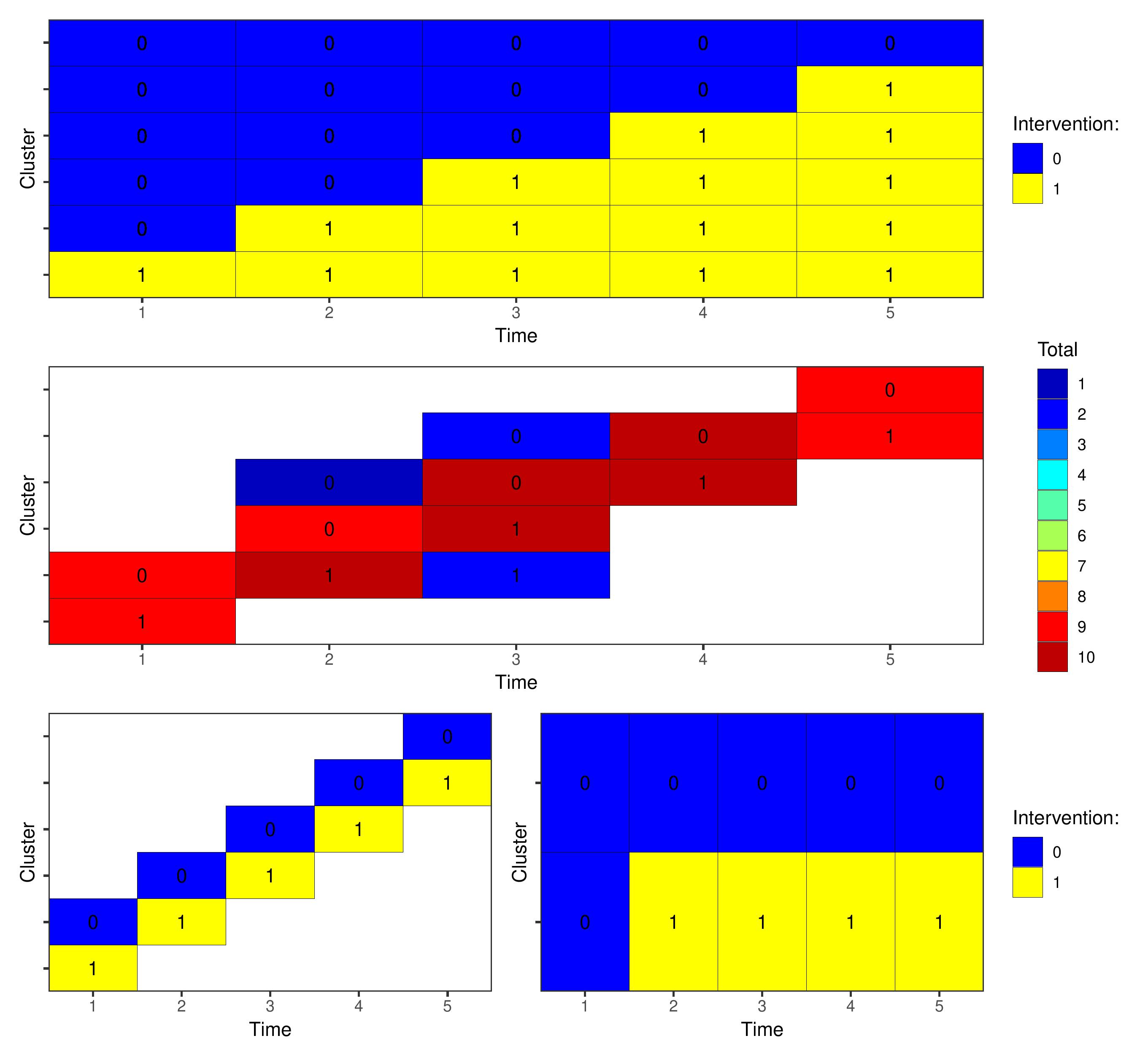

We present a set of examples relating to identifying an optimal cluster trial design within a design space consisting of six possible sequences over five time periods shown in the top row of Figure 2. There are six clusters and five time periods, and we may observe a maximum of ten individuals per cluster-period. Girling and Hemming (2016) and Watson et al. (2023) consider similar design problems.

The function that executes the algorithms in the \codeDesignSpace class is \codeoptim. The argument \codealgo specifies the algorithm with: 1 = local search, 2 = greedy search, 3 = reverse greedy search, and “girling” for the optimal mixed model weights (named after the proposer). One can also string together algorithms to run in sequence such as \codealgo = c(3,1).

5.8.1 Correlated experimental units with a Single Observation

First, we consider the case when each observation is a separate experimental unit so we may observe any number of individuals in a time period. We will identify an approximate optimal design with 100 observations with a maximum of ten observations in a single cluster-period. As the experimental units are correlated we will use the local search algorithm (option \codealgo=1). Given that the algorithm is not guaranteed to produce the optimal design, we run it ten times and select the design with lowest value of the objective function. We will specify a Gaussian model with identity link function and exchangeable covariance function: {CodeChunk} {CodeInput} #simulate data df <- nelder(formula( (cl(6) * t(5)) > ind(10))) dft >= dfnew(formula = factor(t) + int - 1+(1|gr(cl)) + (1|gr(cl,t)), covariance = c(0.05,0.01), mean = rep(0,6), data = df, family=gaussian()) ds <- DesignSpaceoptimal(m=100,C=list(c(rep(0,5),1)),algo=1) #run the algorithm The middle panel of Figure 2 shows the approximate optimal design produced by the algorithm. Code to reproduce the plots is provided in the replication materials.

5.8.2 Correlated experimental units with Multiple Observations

We secondly consider the case where each cluster period is an experimental unit containing ten observations, and we aim to select a design of size ten cluster-periods. {CodeChunk} {CodeInput} # update the experimental units ds <- DesignSpaceoptimal(m=10,C=list(c(rep(0,5),1)),algo=1) The bottom left panel of Figure 2 again shows the approximate optimal design produced by the algorithm, reflecting the ‘staircase’ design from the previous example.

5.8.3 Uncorrelated experimental units

Finally, we conside the case where each whole cluster represents an experimental unit and we aim to pick two of these six clusters. In this example, the experimental units are uncorrelated. By default the \codeoptim function will use the second-order cone program and return optimal weights for each experimental unit. We can force the function to instead use the local or greedy search algorithms with the option \codeforce_hill=TRUE: {CodeChunk} {CodeInput} # update the experimental units dscl opt3 <- ds

6 Discussion

In this article we describe the \pkgglmmrBase package for R that provides functionality for model specification, analysis, fitting, and other functions for GLMMs. We also describe the \pkgglmmrOptim package that extends its functionality. The number of packages in R alone that provide similar functionality attests to the growing popularity of GLMMs in statistical analyses. For example, we identified eight R packages for calculating power for cluster-randomised trials alone using similar models. Our intention with \pkgglmmrBase is to provide a broad set of tools and functions to support a wide range of GLMM related analyses, while maintaining a relatively simple interface through the \pkgR6 class system. For an analysis like a power calculation, the package can provide a direct estimate, any of the intermediary steps for similar analyses, or related functionality like data simulation. The power analysis can also be applied to a design identified through the \pkgglmmrOptim package as classes in this package inherit the functions of the \pkgglmmrBase classes. This support is intended to reduce the requirement for multiple different packages for different model structures where comparisons may be required.

The \pkgglmmrBase package provides MCML model fitting, along with a Laplace approximation. The package provides a wider range of covariance functions than is available in other packages in \proglangR, it allows for non-linear functions of parameters, and it can provide robust and bias-corrected standard errors. We plan to continue to expand this functionality and available outputs, and encourage users to suggest features. The package can therefore provide a complementary approach to GLMM model fitting alongside the existing software in \proglangR and other software. MCML may be especially useful when the approximations provided by packages like \pkglme4 or \pkgglmmTMB may fail to produce reliable estimates or their algorithms may not converge. For simpler models, such as those with exchangable covariance functions or large numbers of observations, methods like PQL and Laplacian approximation can produce highly reliable estimates, and in a fraction of the time that MCML can. However, in more complex cases there can be differences. More complex covariance structures are of growing interest to more accurately represent data generating processes. We have used a running example of a cluster randomised trial over multiple time periods. Early efforts at modelling used a single group-membership random effect, whereas more contemporary approaches focus on temporal decay models, like autoregressive random effects (Li et al., 2021). However, \pkgglmmTMB is one of very few packages, prior to \pkgglmmrBase to offer model fitting for this covariance structure in \proglangR; SAS provides similar functionality but not in conjunction with other features like non-linear fixed effect specifications.