Generalization and Memorization: The Bias Potential Model

Abstract

Models for learning probability distributions such as generative models and density estimators behave quite differently from models for learning functions. One example is found in the memorization phenomenon, namely the ultimate convergence to the empirical distribution, that occurs in generative adversarial networks (GANs). For this reason, the issue of generalization is more subtle than that for supervised learning. For the bias potential model, we show that dimension-independent generalization accuracy is achievable if early stopping is adopted, even though in the long term the model either memorizes the samples or diverges.

Keywords: probability distribution, machine learning, generalization error, curse of dimensionality, early stopping.

1 Introduction

Distribution learning models such as GANs have achieved immense popularity from their empirical success in learning complex high-dimensional probability distributions, and they have found diverse applications such as generating images [7] and paintings [21], writing articles [8], composing music [31], editing photos [5], designing new drugs [33] and new materials [29], generating spin configurations [52] and modeling quantum gases [11], to name a few.

As a mathematical problem, distribution learning is much less understood. Arguably, the most fundamental question is the generalization ability of these models. One puzzling issue is the following.

0. Generalization vs. memorization:

Let $P_*$ be the target distribution, and $P_*^{(n)}$ be the empirical distribution associated with $n$ sample points. Let $P_f$ be the probability distribution generated by some machine learning model parametrized by the function $f$ in some hypothesis space $\mathcal{F}$. It has been argued, for example in the case of GAN, that as training proceeds, one has [23]

$$P_{f_t} \to P_*^{(n)} \quad \text{as } t \to \infty, \tag{1}$$

where $f_t$ is the parameter we obtain at training step $t$. We refer to (1) as the “memorization phenomenon”. When it happens, the model learned does not give us anything other than the samples we already have.

Despite this, these distribution-learning models perform surprisingly well in practice, being able to come close to the unseen target and allowing us to generate new samples. This counterintuitive result calls for a closer examination of their training dynamics, beyond the statement (1).

There are many other mysteries for distribution learning, and we list a few below.

1. Curse of dimensionality:

The superb performance of these models (e.g. on generating high-resolution, lifelike and diverse images [7, 14, 45]) indicates that they can approximate the target $P_*$ with satisfactorily small error. Yet, in theory, this should not be possible, because to estimate a general distribution in $\mathbb{R}^d$ with error $\epsilon$, we need on the order of $\epsilon^{-d}$ samples (discussed below), which becomes astronomical for real-world tasks. For instance, the BigGAN [7] was trained on the ILSVRC dataset [39] with images of resolution , but the theoretical sample size should be like .

Of course, for restricted distribution families like the Gaussians, the sample complexity is only . Yet, one is really interested in complex distributions such as the distribution of facial images that a priori do not belong to any known family, so these tasks require the models to possess not only a dimension-independent sample complexity but also the universal approximation property.

2. The fragility of the training process:

It is well-known that distribution-learning models like GANs and VAE (variational autoencoder) are difficult to train. They are especially vulnerable to issues like mode collapse [12, 40], instability and oscillation [34], and vanishing gradient [1]. The current treatment is to find by trial-and-error a delicate combination of the right architectures and hyper-parameters [34]. The need to understand these issues calls for a mathematical treatment.

This paper offers a partial answer to these questions. We focus on the bias potential model, an expressive distribution-learning model that is relatively transparent, and uncover the mechanisms for its generalization ability.

Specifically, we establish a dimension-independent a priori generalization error estimate with early-stopping. With appropriate function spaces $\mathcal{F}$, the training process consists of two regimes:

- First, by implicit regularization, the training trajectory $P_{f_t}$ comes very close to the unseen target $P_*$, and this is when early-stopping should be performed.
- Afterwards, $P_{f_t}$ either converges to the sample distribution $P_*^{(n)}$ or it diverges.

This paper is structured as follows. In Section 2, we introduce the bias potential model and pose it as a continuous calculus of variations problem. Section 3 analyzes the training behavior of this model and presents this paper’s main results on generalization error and memorization. Section 4 presents some numerical examples. Section 5 contains all the proofs. Section 6 concludes this paper with remarks on future directions.

Notation: denote vectors by bold letters $\mathbf{x}$. Let $C(K)$ be the space of continuous functions over some subset $K \subseteq \mathbb{R}^d$ equipped with the supremum norm. Let $\mathcal{P}(K)$ be the space of probability measures over $K$, $\mathcal{P}_{ac}(K)$ the subset of absolutely continuous measures, and $\mathcal{P}_2(K)$ the subset of measures with finite second moments. Denote the support of a distribution $P$ by $\mathrm{sprt}\,P$. Let $W_2$ be the Wasserstein metric over $\mathcal{P}_2(K)$.

1.1 Related works

- Generalization ability: Among distribution-learning models, GANs have attracted the most attention and their generalization ability has been discussed in [2, 53, 3, 24] from the perspective of the neural network-based distances. For trained models, dimension-independent generalization error estimates have been obtained only for certain restricted models, such as GANs whose generators are linear maps or one-layer networks [49, 27, 22].

- Curse of dimensionality (CoD): If the sampling error is measured by the Wasserstein metric $W_2$, then for any absolutely continuous $P_* \in \mathcal{P}_{ac}(\mathbb{R}^d)$ and any $\delta > 0$, it always holds that [48]

  $$W_2(P_*, P_*^{(n)}) \gtrsim n^{-\frac{1}{d-\delta}}.$$

  To achieve an error of $\epsilon$, the required sample size is $\epsilon^{-(d-\delta)}$.

  If sampling error is measured by KL divergence, then $\mathrm{KL}(P_* \| P_*^{(n)}) = \infty$ since $P_*^{(n)}$ is singular. If kernel smoothing is applied to $P_*^{(n)}$, it is known that the error rate deteriorates with the dimension [47] (technically, [47] measures the difference between densities, but one should expect that CoD would likewise be present for KL divergence).

- Continuous perspective: [19, 16] provide a framework to study supervised learning as continuous calculus of variations problems, with emphasis on the role of the function representation, e.g. continuous neural networks [18]. In particular, the function representation largely determines the trainability [13, 38] and generalization ability [17, 15, 20] of a supervised-learning model. This framework can be applied to studying distribution learning in general, and we use it to analyze the bias potential model.

- Exponential family: The density function of the bias potential model is an instance of an exponential family. These distributions have long been applied to density estimation [4, 10] with theoretical guarantees [50, 43]. Yet, existing theories focus only on black-box estimators, instead of the training process. It has also been popular to adopt mixtures of exponential distributions [26, 25, 36], but these are not covered in this paper.

2 Bias Potential Model

This section introduces the bias potential model, a simple distribution-learning model proposed by [46, 6] and also known as “variationally enhanced sampling”.

To pose a supervised learning model as a calculus of variations problem, one needs to consider four factors: function representation, training objective, training rule, and discretization [19]. For distribution learning, there is the additional factor of distribution representation, namely how probability distributions are represented through functions. These are general issues for any distribution learning model. For future reference, we go through these components in some detail.

2.1 Distribution representation

The bias potential model adopts the following representation:

$$P_V(\mathrm{d}\mathbf{x}) = \frac{1}{Z}\, e^{-V(\mathbf{x})}\, P_0(\mathrm{d}\mathbf{x}), \qquad Z = \mathbb{E}_{P_0}\big[e^{-V}\big], \tag{2}$$

where $V$ is some potential function and $P_0$ is some base distribution. This representation commonly appears in statistical mechanics as the Boltzmann distribution. It is suitable for density estimation, and can also be applied to generative modeling via sampling techniques like MCMC, Langevin diffusion [37], hit-and-run [28], etc.

Typically the partition function $Z$ can be ignored, since it does not need to be evaluated explicitly, either during training or in most of the sampling algorithms.
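Because only the ratio $e^{-V(\mathbf{x}')}/e^{-V(\mathbf{x})}$ enters the acceptance test of a Metropolis sampler, $Z$ cancels. The following is a minimal sketch of random-walk Metropolis for (2), assuming $P_0$ is uniform on $[-1,1]^d$; the function name, the proposal scale and the linear test potential are illustrative choices, not part of the model.

```python
import numpy as np


def metropolis_bias_potential(V, d, n_steps, step=0.5, seed=0):
    """Random-walk Metropolis for P_V(dx) ∝ exp(-V(x)) P_0(dx), with P_0 = Uniform([-1, 1]^d).

    Only differences V(x') - V(x) are used, so the partition function Z is never computed.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(d)
    vx = V(x)
    samples = np.empty((n_steps, d))
    for k in range(n_steps):
        prop = x + step * rng.standard_normal(d)
        # Outside the support of P_0 the target density is zero, so such proposals are rejected.
        if np.all(np.abs(prop) <= 1.0):
            vp = V(prop)
            # Accept with probability min(1, exp(V(x) - V(prop))).
            if rng.uniform() < np.exp(min(0.0, vx - vp)):
                x, vx = prop, vp
        samples[k] = x
    return samples


# Hypothetical potential V(x) = 2 x_1, which tilts the mass toward x_1 = -1.
samples = metropolis_bias_potential(lambda x: 2.0 * x[0], d=1, n_steps=50_000)
burned = samples[5_000:]
print(burned.mean())  # the exact mean of this P_V is -(coth 2 - 1/2), about -0.537
```

Any MCMC scheme that only uses density ratios (Langevin, hit-and-run, etc.) shares this property.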

2.2 Training objective

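Since the representation (2) is defined by a density function, it is natural to define a density-based training objective. Given a target distribution $P_*$, one convenient choice is the backward KL divergence

$$\mathrm{KL}(P_* \| P_V) = \mathbb{E}_{P_*}\Big[\log \frac{\mathrm{d}P_*}{\mathrm{d}P_V}\Big].$$

An alternative way introduced in [46] is to define the “biased distribution”

$$P_*^V = \frac{e^{V} P_*}{\mathbb{E}_{P_*}[e^{V}]},$$

so that $P_*^V = P_0$ iff $P_V = P_*$. Then, we can define an objective by the forward KL

$$\mathrm{KL}(P_0 \| P_*^V) = \mathbb{E}_{P_0}\Big[\log \frac{\mathrm{d}P_0}{\mathrm{d}P_*^V}\Big].$$

Removing constant terms, we obtain the following objectives

$$L^-(V) = \mathbb{E}_{P_*}[V] + \log \mathbb{E}_{P_0}\big[e^{-V}\big], \qquad L^+(V) = -\mathbb{E}_{P_0}[V] + \log \mathbb{E}_{P_*}\big[e^{V}\big]. \tag{3}$$

Both objectives are convex in $V$ (Lemma 5.2). Suppose $P_*$ can be written as (2) with potential $V_*$; then $P_V = P_*$ iff $V = V_* + c$ for some constant $c$ iff $\mathrm{KL}(P_* \| P_V) = 0$ or $\mathrm{KL}(P_0 \| P_*^V) = 0$, so each objective has a unique global minimizer up to constants. Otherwise, if $P_*$ does not have the form (2), then the minimizer does not exist.

In practice, when $P_*$ is available only through its samples $P_*^{(n)}$, we simply substitute all the expectation terms $\mathbb{E}_{P_*}$ in (3) by $\mathbb{E}_{P_*^{(n)}}$.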
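As a concrete reading of (3) with this sample substitution, the sketch below estimates $L^-$ and $L^+$ from samples of $P_*$, with quadrature nodes standing in for $P_0$. The 1D setup ($P_0$ uniform on $[-1,1]$, target generated by $V_*(x) = 2x$ and sampled by inverting its CDF) and the helper names are assumptions made for illustration; a log-sum-exp shift keeps $\log\mathbb{E}[e^{\pm V}]$ numerically stable.

```python
import numpy as np


def log_mean_exp(v):
    """Numerically stable log(mean(exp(v)))."""
    vmax = np.max(v)
    return vmax + np.log(np.mean(np.exp(v - vmax)))


def loss_minus(V, target_x, base_x):
    """L^-(V) = E_{P*}[V] + log E_{P0}[exp(-V)], with E_{P*} replaced by a sample mean."""
    return np.mean(V(target_x)) + log_mean_exp(-V(base_x))


def loss_plus(V, target_x, base_x):
    """L^+(V) = -E_{P0}[V] + log E_{P*}[exp(V)], with E_{P*} replaced by a sample mean."""
    return -np.mean(V(base_x)) + log_mean_exp(V(target_x))


# Illustrative setup: P0 = Uniform[-1, 1] (represented by quadrature nodes) and
# P* generated by V*(x) = 2x, sampled exactly by inverting its CDF.
rng = np.random.default_rng(0)
base_x = np.linspace(-1.0, 1.0, 2001)
u = rng.uniform(size=20_000)
target_x = -0.5 * np.log(np.exp(2.0) - u * (np.exp(2.0) - np.exp(-2.0)))

for c in [0.0, 1.0, 2.0, 4.0]:
    V = lambda x, c=c: c * x
    print(c, loss_minus(V, target_x, base_x), loss_plus(V, target_x, base_x))
```

Both losses should be smallest near $c = 2$, and adding a constant to $V$ leaves them unchanged, consistent with uniqueness only up to constants.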

2.3 Function representation

A good function representation (or function space $\mathcal{F}$) should have two conflicting properties:

1. $\mathcal{F}$ is expressive so that distributions generated by $\mathcal{F}$ satisfy the universal approximation property.
2. $\mathcal{F}$ has small complexity so that the generalization gap is small.

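One approach is to adopt an integral transform-based representation [19],

$$V(\mathbf{x}) = \mathbb{E}_{\rho(\theta)}\big[\phi(\mathbf{x}; \theta)\big],$$

for some feature function $\phi$ and parameter distribution $\rho$. Then, $V$ can be approximated with Monte-Carlo rate $O(m^{-1/2})$ by

$$V_m(\mathbf{x}) = \frac{1}{m} \sum_{j=1}^{m} \phi(\mathbf{x}; \theta_j), \tag{4}$$

where $\theta_j$ are i.i.d. samples from $\rho$.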
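To see the Monte-Carlo rate of (4) at work, the sketch below uses a hypothetical 1D feature $\phi(x;\theta) = a\max(wx+b, 0)$ with $a \sim \mathcal{N}(1,1)$ independent of $(w,b) \sim \mathrm{Uniform}([-1,1]^2)$. For this choice the exact potential on $[-1,1]$ is $V(x) = (1 + x^2/3)/4$ (integrate over $b$ first, then over $w$), so the sup-norm error of $V_m$ can be measured directly; it should shrink roughly like $m^{-1/2}$.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def v_m(x, a, w, b):
    """Monte-Carlo potential (4): V_m(x) = (1/m) sum_j a_j relu(w_j x + b_j)."""
    return relu(np.outer(x, w) + b) @ a / a.size


def v_exact(x):
    """E[a] * E[relu(w x + b)] for a ~ N(1, 1), (w, b) ~ Uniform([-1, 1]^2), |x| <= 1."""
    return (1.0 + x**2 / 3.0) / 4.0


rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 201)
errors = {}
for m in [100, 1_000, 10_000]:
    a = 1.0 + rng.standard_normal(m)
    w, b = rng.uniform(-1.0, 1.0, size=(2, m))
    errors[m] = np.max(np.abs(v_m(x, a, w, b) - v_exact(x)))
    print(m, errors[m])
```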

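Let us consider function representations built from neural networks:

- 2-layer neural networks:

  $$V_\rho(\mathbf{x}) = \mathbb{E}_{\rho(a, \mathbf{w}, b)}\big[a\, \sigma(\mathbf{w} \cdot \mathbf{x} + b)\big], \tag{5}$$

  with the Barron norm

  $$\|V\|_{\mathcal{B}} = \inf_{\rho}\, \mathbb{E}_{\rho}\big[|a|\, (\|\mathbf{w}\|_1 + |b|)\big], \tag{6}$$

  where the infimum is over all parameter distributions $\rho$ that represent $V$.
- Random feature models: for a fixed parameter distribution $\rho_0(\mathbf{w}, b)$ and a parameter function $a \in L^2(\rho_0)$,

  $$V_a(\mathbf{x}) = \mathbb{E}_{\rho_0(\mathbf{w}, b)}\big[a(\mathbf{w}, b)\, \sigma(\mathbf{w} \cdot \mathbf{x} + b)\big], \tag{7}$$

  with the RKHS norm

  $$\|V\|_{\mathcal{H}} = \inf_{a}\, \|a\|_{L^2(\rho_0)}. \tag{8}$$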

It is straightforward to establish the universal approximation theorem for these two representations, and we provide such results below. Denote by $\mathcal{P}_C(K)$ the distributions over $K$ with continuous density functions, and by $\|\cdot\|_{TV}$ the total variation distance, which is equivalent to the $L^1$ norm when restricted to $\mathcal{P}_{ac}(K)$.

Proposition 2.1 (Universal approximation).

Let $K \subset \mathbb{R}^d$ be any compact set with positive Lebesgue measure, let $P_0$ be the uniform distribution over $K$, and let $\mathcal{F}$ be any class of functions that is dense in $C(K)$. Then, the class of probability distributions (2) generated by $V \in \mathcal{F}$ and $P_0$ is

- dense in $\mathcal{P}(K)$ under the Wasserstein metric $W_p$ ($1 \le p < \infty$),
- dense in $\mathcal{P}_{ac}(K)$ under the total variation norm $\|\cdot\|_{TV}$,
- dense in $\mathcal{P}_C(K)$ under KL divergence.

Given Assumption 5.1, this result applies if $\mathcal{F}$ is the Barron space $\mathcal{B}$ or the RKHS $\mathcal{H}$.

The Monte-Carlo approximation (4) suggests that these continuous models can be approximated efficiently by finite neural networks. Specifically, we can establish the following a priori error estimates:

Proposition 2.2 (Efficient approximation).

Suppose that the base distribution is compactly-supported in a ball , and the activation function is Lipschitz with . Given , for every , there exists a finite 2-layer network with neurons that satisfies:

where is the distribution generated by . Similarly, assume that the fixed parameter distribution in (7) is compactly-supported in a ball , then given , for every , there exists such that

2.4 Training rule

We consider the simplest training rule, the gradient flow.

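For continuous function representations, there are generally two kinds of flows:

- Non-conservative gradient flow, which evolves the parameter function $a$ of the random feature model (7):

  $$\frac{\mathrm{d}}{\mathrm{d}t} a_t = -\frac{\delta L}{\delta a}(a_t).$$

- Conservative gradient flow, which transports the parameter distribution $\rho$ of the 2-layer network (5), with $\theta = (a, \mathbf{w}, b)$:

  $$\partial_t \rho_t + \nabla_\theta \cdot (\rho_t\, \mathbf{u}_t) = 0, \tag{9}$$

  $$\mathbf{u}_t(\theta) = -\nabla_\theta \frac{\delta L}{\delta \rho}(\rho_t)(\theta). \tag{10}$$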

2.5 Discretization

So far we have only discussed the continuous formulation of distribution learning models. In practice, we implement these continuous models using discretized versions, with the hope that the discretized models inherit these properties up to a controllable discretization error.

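Let us focus on the discretization in the parameter space, and in particular, the most popular “particle discretization”, since this is the analog of Monte-Carlo for dynamic problems. Consider the parameter distribution $\rho$ of the 2-layer net (5) and its approximation by the empirical distribution

$$\rho^{(m)} = \frac{1}{m} \sum_{j=1}^{m} \delta_{\theta_j},$$

where the particles $\theta_j = (a_j, \mathbf{w}_j, b_j)$ are i.i.d. samples of $\rho$. The potential function represented by this empirical distribution is given by:

$$V_m(\mathbf{x}) = \frac{1}{m} \sum_{j=1}^{m} a_j\, \sigma(\mathbf{w}_j \cdot \mathbf{x} + b_j).$$

Suppose we train $\rho^{(m)}_t$ by conservative gradient flow (9,10) with the objective $L$. The continuity equation (9) implies that, for any smooth test function $g$, we have

$$\frac{\mathrm{d}}{\mathrm{d}t}\, \frac{1}{m} \sum_{j=1}^{m} g(\theta_j(t)) = \frac{1}{m} \sum_{j=1}^{m} \nabla g(\theta_j) \cdot \mathbf{u}_t(\theta_j),$$

so each particle moves with the velocity field, $\frac{\mathrm{d}}{\mathrm{d}t}\theta_j = \mathbf{u}_t(\theta_j)$.

Meanwhile, we also have

$$\nabla_{\theta_j} L(V_m) = \frac{1}{m}\, \nabla_\theta \frac{\delta L}{\delta \rho}(\rho^{(m)}_t)(\theta_j).$$

Thus we have recovered the gradient flow for finite scaled 2-layer networks:

$$\frac{\mathrm{d}}{\mathrm{d}t} \theta_j = -m\, \nabla_{\theta_j} L(V_m), \qquad j = 1, \dots, m.$$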

This example shows that the particle discretization of continuous 2-layer networks (5) leads to the same result as the mean-field modeling of 2-layer nets [30, 38].
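The scaling by $m$ can be checked numerically: $m$ times the ordinary gradient of $L^-(V_m)$ with respect to a particle $\theta_j$ should coincide with $\nabla_\theta$ of the first variation $\frac{\delta L^-}{\delta\rho}(\theta) = \int a\,\sigma(wx+b)\,\mathrm{d}(P_* - P_{V_m})(x)$ evaluated at $\theta_j$. The sketch below does this in 1D on a quadrature grid and then runs a few steps of scaled gradient descent. The tanh activation (chosen so that finite differences are accurate), the target $V_*(x) = 2x$, the step size and all names are illustrative assumptions.

```python
import numpy as np

# Illustrative 1D setup: P0 = Uniform[-1, 1] on a grid, target P* generated by V*(x) = 2x.
xs = np.linspace(-1.0, 1.0, 401)
p0 = np.full(xs.size, 1.0 / xs.size)
pstar = p0 * np.exp(-2.0 * xs)
pstar /= pstar.sum()


def potential(a, w, b):
    """Finite scaled 2-layer net V_m(x) = (1/m) sum_j a_j tanh(w_j x + b_j) on the grid."""
    return np.tanh(np.outer(xs, w) + b) @ a / a.size


def loss(a, w, b):
    """L^-(V_m) = E_{P*}[V_m] + log E_{P0}[exp(-V_m)]."""
    V = potential(a, w, b)
    return pstar @ V + np.log(p0 @ np.exp(-V))


def first_variation_grad(a, w, b):
    """grad_theta of dL/drho(theta) = int a tanh(w x + b) d(P* - P_V)(x), at every particle."""
    V = potential(a, w, b)
    pv = p0 * np.exp(-V)
    pv /= pv.sum()
    diff = pstar - pv                      # signed measure P* - P_V on the grid
    act = np.tanh(np.outer(xs, w) + b)     # shape (grid, m)
    dact = 1.0 - act**2                    # tanh'(w x + b)
    ga = diff @ act
    gw = diff @ (a * dact * xs[:, None])
    gb = diff @ (a * dact)
    return ga, gw, gb


rng = np.random.default_rng(0)
m = 5
a, w, b = rng.standard_normal((3, m))
ga, gw, gb = first_variation_grad(a, w, b)

# Central finite differences of L with respect to a_j and w_j, to be compared after scaling by m.
eps = 1e-6
eye = np.eye(m)
fd_a = np.array([(loss(a + eps * e, w, b) - loss(a - eps * e, w, b)) / (2 * eps) for e in eye])
fd_w = np.array([(loss(a, w + eps * e, b) - loss(a, w - eps * e, b)) / (2 * eps) for e in eye])
print(np.max(np.abs(m * fd_a - ga)), np.max(np.abs(m * fd_w - gw)))

# Scaled gradient descent: theta_j <- theta_j - lr * m * grad_{theta_j} L.
loss_before = loss(a, w, b)
lr = 0.1
for _ in range(200):
    da, dw, db = first_variation_grad(a, w, b)
    a, w, b = a - lr * da, w - lr * dw, b - lr * db
loss_after = loss(a, w, b)
```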

3 Training Dynamics

This section studies the training behavior of the bias potential model and presents the main result of this paper, on the relation between generalization and memorization: When trained on a finite sample set,

- With early stopping, the model reaches a dimension-independent generalization error rate.
- As $t \to \infty$, the model necessarily memorizes the samples unless it diverges.

3.1 Trainability

We begin with the training dynamics on the population loss. First, we consider the random feature model (7) and establish global convergence:

Proposition 3.1 (Trainability).

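Suppose that the target distribution $P_*$ is generated by a potential $V_* = V_{a_*}$ ($a_* \in L^2(\rho_0)$). Suppose that our distribution $P_t$ is generated by potential $V_{a_t}$ with parameter function $a_t$ trained by gradient flow on either of the objectives (3). Then,

$$L(a_t) - L(a_*) \le \frac{\|a_0 - a_*\|^2_{L^2(\rho_0)}}{2t},$$

that is, $\mathrm{KL}(P_* \| P_t) \le \frac{\|a_0 - a_*\|^2}{2t}$ for $L^-$, and $\mathrm{KL}(P_0 \| P_*^{V_{a_t}}) \le \frac{\|a_0 - a_*\|^2}{2t}$ for $L^+$.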

Next, for 2-layer neural networks, we show that whenever the conservative gradient flow converges, it must converge to the global minimizer. In particular, it will not be trapped at bad local minima and thus avoids mode collapse. This result is analogous to the global optimality guarantees for supervised learning and regression problems [13, 38].

Proposition 3.2.

Assume that the distribution $P_t$ is generated by potential $V_{\rho_t}$, a 2-layer network with parameter distribution $\rho_t$ trained by gradient flow on either of the objectives (3). Assume that Assumption 5.2 holds. If the flow $\rho_t$ converges in the $W_2$ metric (or any $W_p$, $p \ge 1$) to some $\rho_\infty$ as $t \to \infty$, then $\rho_\infty$ is a global minimizer of $L$: Let $V_\infty$ be the corresponding 2-layer network, then

3.2 Generalization ability

Now we consider the most important issue for the model, the generalization error, and prove that a dimension-independent a priori error rate is achievable within a convenient early-stopping time interval.

We study the training dynamics on the empirical loss. For convenience, we make the following assumptions:

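- Let the base distribution $P_0$ in (2) be supported on $[-1,1]^d$ (the $l^\infty$ unit ball). Without loss of generality, we use the $l^\infty$ norm on $\mathbf{x}$.

- Let the objective be $L = L^-$ from (3) (the analysis of $L^+$ would be more involved). Recall that if the target $P_*$ is generated by a potential $V_*$, then

  $$L(V) - L(V_*) = \mathrm{KL}(P_* \| P_V).$$

  Denote by $L^{(n)}$ the empirical loss that corresponds to $L$:

  $$L^{(n)}(V) = \mathbb{E}_{P_*^{(n)}}[V] + \log \mathbb{E}_{P_0}\big[e^{-V}\big].$$

- Model $V$ by the random feature model (7) with RKHS norm from (8). Assume that the activation function $\sigma$ is ReLU, and that the fixed parameter distribution $\rho_0$ is supported inside the $l^1$ unit ball, that is, $\|\mathbf{w}\|_1 + |b| \le 1$ for $\rho_0$-almost all $(\mathbf{w}, b)$. Denote $(\mathbf{x}, 1)$ by $\tilde{\mathbf{x}}$ and $(\mathbf{w}, b)$ by $\tilde{\mathbf{w}}$, so the activation can be written as $\sigma(\tilde{\mathbf{w}} \cdot \tilde{\mathbf{x}})$.

Remark 1 (Universal approximation).

If we further assume that $\rho_0$ covers all directions (e.g. $\rho_0$ is uniform over the sphere) and $P_0$ is uniform over some $K \subseteq [-1,1]^d$, then Proposition 2.1 implies that this model enjoys universal approximation over distributions on $K$.

- Training rule: We train $a$ by gradient flow (Section 2.4). Let $a_t$ and $a_t^{(n)}$ be the training trajectories under $L$ and $L^{(n)}$. Assume the same initialization $a_0 = a_0^{(n)}$.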

Theorem 3.3 (Generalization ability).

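Suppose $P_*$ is generated by a potential function $V_* = V_{a_*}$ ($a_* \in L^2(\rho_0)$). For any $\delta \in (0,1)$, with probability $1-\delta$ over the sampling of $P_*^{(n)}$, the testing error of the distribution $P_t^{(n)}$ trained on $L^{(n)}$ is bounded by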

Corollary 3.4.

Given the condition of Theorem 3.3, if we choose an early-stopping time such that

then the testing error obeys

This rate is significant in that it is dimension-independent up to a negligible term. Although the upper bound is slower than the desirable Monte-Carlo rate of $O(n^{-1/2})$, it is much better than the $O(n^{-1/d})$ rate, and we believe there is room for improvement. In addition, the early-stopping time interval is reachable within a time that is dimension-independent and the width of this interval is at least on the order of .

This result is enabled by the function representation of the model, specifically:

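1. Learnability: If the target $P_*$ lives in the right space for our function representation, then the optimization rate (for the population loss $L$) is fast and dimension-independent. In this case, the right space consists of distributions generated by random feature models, and the rate is provided by Proposition 3.1.
2. Insensitivity to the sampling error: The model is insensitive to the sampling error $P_*^{(n)} - P_*$, so the trajectories trained on $L^{(n)}$ and on $L$ stay close for a long time. This is quantified by Lemmas 3.5 and 3.6.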

Lemma 3.5.

For any distribution $P_*$ supported on $[-1,1]^d$ and any $\delta \in (0,1)$, with probability $1-\delta$ over the i.i.d. sampling of the empirical distribution $P_*^{(n)}$, we have

Lemma 3.6.

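Let $L$ be a convex Fréchet-differentiable function over a Hilbert space $H$ with Lipschitz constant $l$. Let $E$ be a Fréchet-differentiable function with Lipschitz constant $\epsilon$. Define two gradient flow trajectories $x_t, y_t$:

$$\frac{\mathrm{d}}{\mathrm{d}t} x_t = -\nabla L(x_t), \qquad \frac{\mathrm{d}}{\mathrm{d}t} y_t = -\nabla (L + E)(y_t), \qquad x_0 = y_0,$$

where $L + E$ represents a perturbed function. Then,

$$|L(x_t) - L(y_t)| \le l\, \epsilon\, t$$

for all time $t \ge 0$.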
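A discrete-time analogue of Lemma 3.6 can be checked directly. If $L$ is convex and smooth enough that the gradient-descent map is nonexpansive, and the perturbation satisfies $\|\nabla E\| \le \epsilon$, the two iterates drift apart by at most $\epsilon\eta$ per step, so $\|x_k - y_k\| \le \epsilon t$ and $|L(x_k) - L(y_k)| \le l\epsilon t$ with $t = k\eta$. The sketch assumes $L = \mathrm{logsumexp}$ (convex, 1-Lipschitz and 1-smooth) and a hypothetical sinusoidal perturbation; neither comes from the paper.

```python
import numpy as np


def logsumexp(x):
    """Convex L(x) = log sum_i exp(x_i), computed stably."""
    xmax = np.max(x)
    return xmax + np.log(np.sum(np.exp(x - xmax)))


def grad_L(x):
    """Gradient of logsumexp: the softmax, whose Euclidean norm is at most 1 (so l = 1)."""
    p = np.exp(x - np.max(x))
    return p / p.sum()


def grad_E(y, eps):
    """Gradient of the hypothetical perturbation E(y) = eps * sum(sin(y)) / sqrt(d); norm <= eps."""
    return eps * np.cos(y) / np.sqrt(y.size)


d, eps, eta, n_steps = 10, 0.05, 0.5, 400
rng = np.random.default_rng(0)
x = rng.standard_normal(d)
y = x.copy()                                        # same initialization x_0 = y_0
dist, gap = [], []
for _ in range(n_steps):
    x = x - eta * grad_L(x)                         # gradient descent on L
    y = y - eta * (grad_L(y) + grad_E(y, eps))      # gradient descent on L + E
    dist.append(np.linalg.norm(x - y))
    gap.append(abs(logsumexp(x) - logsumexp(y)))
t = eta * np.arange(1, n_steps + 1)                 # elapsed time t = k * eta
```

The step size $\eta = 0.5$ is within $2/\beta$ for this 1-smooth $L$, which is what makes the unperturbed update nonexpansive.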

Numerical examples for the training process and generalization error are provided in Section 4.
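The early-stopping trade-off behind Corollary 3.4 can be sketched with a bound of the generic shape $A/t + Bt/\sqrt{n}$, i.e. an optimization term decaying like $1/t$ (as in Proposition 3.1) plus a perturbation term growing linearly in $t$ (as in Lemma 3.6). The constants $A$, $B$ below are placeholders, not the constants of Theorem 3.3. Minimizing over $t$ gives $t^* = \sqrt{A\sqrt{n}/B}$ and a minimum of $2\sqrt{AB}\,n^{-1/4}$. If the optimization term instead decayed like $Ce^{-ct}$, the optimum would be $t^* = c^{-1}\log(cC\sqrt{n}/B)$, with a minimum of order $\log n/\sqrt{n}$.

```python
import numpy as np

A, B = 1.0, 1.0   # placeholder constants for the optimization and perturbation terms
C, c = 1.0, 1.0   # placeholder constants for a hypothetical exponential optimization term


def bound_algebraic(t, n):
    return A / t + B * t / np.sqrt(n)


def best_algebraic(n):
    """t* = sqrt(A sqrt(n) / B), with minimum value 2 sqrt(A B) n^(-1/4)."""
    return np.sqrt(A * np.sqrt(n) / B), 2.0 * np.sqrt(A * B) * n ** -0.25


def bound_exponential(t, n):
    return C * np.exp(-c * t) + B * t / np.sqrt(n)


def best_exponential(n):
    """t* = log(c C sqrt(n) / B) / c; the minimum value is of order log(n) / sqrt(n)."""
    t_star = np.log(c * C * np.sqrt(n) / B) / c
    return t_star, bound_exponential(t_star, n)


ts = np.linspace(0.01, 1000.0, 200_001)
for n in [10**4, 10**6, 10**8]:
    print(n, best_algebraic(n), best_exponential(n))
```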

3.3 Memorization

Although the model enjoys good generalization accuracy with early stopping, we show that in the long term the solution necessarily deteriorates.

Proposition 3.7 (Memorization).

Hence, the model either memorizes the samples or diverges (i.e. it has more than one limit point, all of which are degenerate), even though this may not manifest within realistic training time.

The proof is based on the following observation.

Lemma 3.8.

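Let $K \subset \mathbb{R}^d$ be a compact set with positive Lebesgue measure, let the base distribution $P_0$ be uniform over $K$, and let $k$ be a continuous and integrally strictly positive definite kernel on $K$. Given any target distribution $P_* \in \mathcal{P}(K)$ and any initialization $V_0 \in C(K)$, train the potential $V_t$ by

$$\partial_t V_t(\mathbf{x}) = -\int_K k(\mathbf{x}, \mathbf{x}')\, \mathrm{d}(P_* - P_t)(\mathbf{x}'),$$

where $P_t$ is the distribution generated by $V_t$.

If $P_t$ has only one weak limit, then $P_t$ converges weakly to $P_*$. Else, none of the limit points cover the support of $P_*$.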
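The flow in Lemma 3.8 is easy to simulate on a grid. The sketch below makes assumed choices (a Gaussian kernel, which is integrally strictly positive definite; $K = [-1,1]$ discretized into 201 nodes; an empirical target of 10 grid points) and takes explicit Euler steps of $\partial_t V_t = -\int k(\cdot, \mathbf{x}')\,\mathrm{d}(P_*^{(n)} - P_t)(\mathbf{x}')$. With step size 0.5, which is small relative to $\max k(\mathbf{x},\mathbf{x}) = 1$, the empirical loss decreases at every step while the mass of $P_t$ on the sample points grows, a slow drift toward memorization.

```python
import numpy as np

# Assumed setup: grid on K = [-1, 1], uniform P0, Gaussian kernel with bandwidth 0.1.
xs = np.linspace(-1.0, 1.0, 201)
p0 = np.full(xs.size, 1.0 / xs.size)
kernel = np.exp(-((xs[:, None] - xs[None, :]) ** 2) / (2 * 0.1**2))

rng = np.random.default_rng(0)
idx = rng.choice(xs.size, size=10, replace=False)   # sample locations (distinct grid nodes)
p_emp = np.zeros(xs.size)
p_emp[idx] = 1.0 / idx.size                          # empirical target P*^(n)


def model(V):
    """Grid weights of P_V ∝ exp(-V) P0."""
    w = p0 * np.exp(-(V - V.min()))
    return w / w.sum()


def empirical_loss(V):
    """L^(n)(V) = E_{P*^(n)}[V] + log E_{P0}[exp(-V)], evaluated stably."""
    vmin = V.min()
    return p_emp @ V + np.log(p0 @ np.exp(-(V - vmin))) - vmin


V = np.zeros(xs.size)   # V_0 = 0, so training starts from P_0
eta = 0.5
losses, mass_on_samples = [], []
for _ in range(5_000):
    P = model(V)
    losses.append(empirical_loss(V))
    mass_on_samples.append(P[idx].sum())
    # Euler step of dV/dt(x) = -sum_x' k(x, x') (P*^(n) - P_t)(x').
    V = V - eta * kernel @ (p_emp - P)
```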

A numerical demonstration of memorization is provided in Section 4.

3.4 Regularization

Instead of early stopping, one can also consider explicit regularization: With the empirical loss $L^{(n)}$, define the problem

$$\min_{\|V\| \le R} L^{(n)}(V)$$

for some appropriate functional norm $\|\cdot\|$ and adjustable bound $R$. For the special case of random feature models (8), this problem becomes

$$\min_{\|a\|_{L^2(\rho_0)} \le R} L^{(n)}(a), \tag{11}$$

where $L^{(n)}(a)$ denotes $L^{(n)}$ with potential generated by $a$.

By convexity, $L^{(n)}(a_t)$ can always converge to the minimum value as $t \to \infty$ if $a_t$ is trained by gradient flow constrained to the ball $\{\|a\|_{L^2(\rho_0)} \le R\}$. Denote the minimizer of (11) by $a_R^{(n)}$ (which exists by Lemma 5.7) and denote the corresponding distribution by $P_R^{(n)}$.
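After discretization, constraining the flow to the ball in (11) amounts to projected gradient descent in $L^2(\rho_0)$. The sketch below assumes a 1D grid for $P_0$, $m = 200$ ReLU random features with $|w| + |b| \le 1$ (so each feature lies in $[0,1]$ and the loss is 1-smooth in this norm, allowing step size 1), and a hypothetical empirical target of 20 grid points. The projection rescales $a$ radially whenever $\|a\|_{L^2(\rho_0)} > R$.

```python
import numpy as np

rng = np.random.default_rng(0)
xs = np.linspace(-1.0, 1.0, 401)
p0 = np.full(xs.size, 1.0 / xs.size)
m = 200
w = rng.uniform(-1.0, 1.0, m)
b = (1.0 - np.abs(w)) * rng.uniform(-1.0, 1.0, m)   # |w| + |b| <= 1
features = np.maximum(np.outer(xs, w) + b, 0.0)    # phi_j(x) in [0, 1], shape (grid, m)
samples = rng.choice(xs.size, size=20)             # hypothetical sample indices on the grid
p_emp = np.bincount(samples, minlength=xs.size) / samples.size


def loss(a):
    """Empirical L^(n)(a) = E_{P*^(n)}[V_a] + log E_{P0}[exp(-V_a)]."""
    V = features @ a / m
    vmin = V.min()
    return p_emp @ V + np.log(p0 @ np.exp(-(V - vmin))) - vmin


def grad(a):
    """Gradient in the L^2(rho_0) inner product <a, c> = mean(a * c)."""
    V = features @ a / m
    pv = p0 * np.exp(-(V - V.min()))
    pv /= pv.sum()
    return (p_emp - pv) @ features


def project(a, R):
    """Projection onto the ball {||a||_{L^2(rho_0)} <= R}."""
    norm = np.sqrt(np.mean(a**2))
    return a if norm <= R else a * (R / norm)


R, eta = 2.0, 1.0
a = np.zeros(m)
losses, norms = [loss(a)], [0.0]
for _ in range(2_000):
    a = project(a - eta * grad(a), R)
    losses.append(loss(a))
    norms.append(np.sqrt(np.mean(a**2)))
```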

Proposition 3.9.

Given the condition of Theorem 3.3, choose any . With probability over the sampling of , the minimizer satisfies

4 Numerical Experiments

Corollary 3.4 and Proposition 3.7 tell us that the training process roughly consists of two phases: the first phase in which a dimension-independent generalization error rate is reached, and a second phase in which the model deteriorates into memorization or divergence. We now examine how these happen in practice.

4.1 Dimension-independent error rate

The key aspect of the generalization estimate of Corollary 3.4 is that its sample complexity is dimension-independent.

To verify dimension-independence, we estimate the exponent for varying dimension . We adopt the set-up of Theorem 3.3 and train our model by SGD on a finite sample set . Specifically, is uniform over , the target and trained distributions are generated by the potentials , these potentials are random feature functions (7) with being uniform over the sphere , with parameter functions and ReLU activation. The samples are obtained by Projected Langevin Monte Carlo [9]. We approximate using samples (particle discretization) and set . We initialize training with and train by scaled gradient descent with learning rate .
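For reference, projected Langevin Monte Carlo [9] alternates a Langevin step with the Euclidean projection onto the convex support, which for $[-1,1]^d$ is coordinate-wise clipping. The sketch below runs many chains in parallel. The step size, the chain and step counts, and the helper for the gradient of a ReLU random-feature potential are illustrative assumptions and need not match the settings of the experiments.

```python
import numpy as np


def projected_langevin(grad_V, d, n_chains=2_000, n_steps=500, step=0.01, seed=0):
    """Projected Langevin Monte Carlo on K = [-1, 1]^d, run for many parallel chains:
    x <- Proj_K(x - step * grad V(x) + sqrt(2 * step) * xi), with xi standard Gaussian."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, size=(n_chains, d))
    for _ in range(n_steps):
        noise = rng.standard_normal((n_chains, d))
        x = np.clip(x - step * grad_V(x) + np.sqrt(2.0 * step) * noise, -1.0, 1.0)
    return x


def relu_rf_grad(a, W, bias):
    """Gradient of the (hypothetical) ReLU random-feature potential
    V(x) = (1/m) sum_j a_j relu(w_j . x + b_j), vectorized over a batch x of shape (N, d)."""
    def grad_V(x):
        active = (x @ W.T + bias) > 0.0          # shape (N, m)
        return (active * a) @ W / a.size          # shape (N, d)
    return grad_V


# Sanity example: the linear potential V(x) = 2 x_1 pushes mass toward x_1 = -1.
samples = projected_langevin(lambda x: np.tile([2.0, 0.0], (x.shape[0], 1)), d=2)
print(samples.mean(axis=0))
```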

The generalization error is measured by $\mathrm{KL}(P_* \| P_t^{(n)})$. Denote the optimal stopping time by

$$t_{opt} = \arg\min_{t}\, \mathrm{KL}(P_* \| P_t^{(n)})$$

and the corresponding optimal error by $L_{opt} = \mathrm{KL}(P_* \| P_{t_{opt}}^{(n)})$. The most difficult part of this experiment turned out to be the computation of the KL divergence: Monte-Carlo approximation led to excessive variance. Therefore we computed $\mathrm{KL}(P_* \| P_t^{(n)})$ by numerical integration on a uniform grid on $[-1,1]^d$. This limits the experiments to low dimensions.
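Computing KL by quadrature is straightforward because, with equal cell volumes, the grid weights of $P_V \propto e^{-V}$ only need to be normalized. The sketch below uses the midpoint rule on $[-1,1]^d$ with a hypothetical resolution parameter `n_per_dim`; its cost grows like `n_per_dim**d`, which is why this approach is restricted to low dimensions. As a check, it is compared with the closed form for linear potentials in 1D, $\mathrm{KL}(P_{c_1} \| P_{c_2}) = (c_2 - c_1)\,\mathbb{E}_{P_{c_1}}[x] + \log\frac{\sinh c_2 / c_2}{\sinh c_1 / c_1}$ with $\mathbb{E}_{P_c}[x] = -(\coth c - 1/c)$, where $P_c \propto e^{-cx}$.

```python
import numpy as np


def grid_kl(V_target, V_model, d, n_per_dim=200):
    """KL(P_target || P_model) for P_V ∝ exp(-V) on [-1, 1]^d via the midpoint rule.

    V_target and V_model map an array of shape (N, d) to an array of shape (N,).
    """
    h = 2.0 / n_per_dim
    nodes = -1.0 + h * (np.arange(n_per_dim) + 0.5)
    grid = np.stack(np.meshgrid(*([nodes] * d), indexing="ij"), axis=-1).reshape(-1, d)

    def log_probs(V):
        logw = -V(grid)
        shift = logw.max()
        return logw - (shift + np.log(np.sum(np.exp(logw - shift))))

    lp, lq = log_probs(V_target), log_probs(V_model)
    return float(np.sum(np.exp(lp) * (lp - lq)))


def kl_linear_1d(c1, c2):
    """Closed form of KL(P_c1 || P_c2) for P_c ∝ exp(-c x) on [-1, 1]."""
    mean_c1 = -(1.0 / np.tanh(c1) - 1.0 / c1)
    return (c2 - c1) * mean_c1 + np.log(np.sinh(c2) / c2) - np.log(np.sinh(c1) / c1)


print(grid_kl(lambda x: 2.0 * x[:, 0], lambda x: x[:, 0], d=1, n_per_dim=2000), kl_linear_1d(2.0, 1.0))
```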

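For each dimension $d$, we estimate the exponent of the optimal error $L_{opt}$ by linear regression between $\log L_{opt}$ and $\log n$. The sample size $n$ is varied over a range of values, and each setting is repeated 20 times with a new sample set $P_*^{(n)}$. Also, we solve for the dependence of the optimal stopping time $t_{opt}$ on $n$ by linear regression between $\log t_{opt}$ and $\log n$. Here are the results: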

| Dimension $d$ | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Exponent of optimal error $L_{opt}$ | | | | | |
| Exponent of optimal stopping time $t_{opt}$ | 0.30 | 0.29 | 0.27 | 0.26 | 0.31 |

Our experiments suggest that the generalization error of the early-stopping solution decays at a dimension-independent rate in $n$, and that the optimal early-stopping time is around $n^{0.3}$. This error is much better than the upper bound given by Corollary 3.4, indicating that our analysis has much room for improvement.
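The exponents in the table come from least-squares fits on a log-log scale. A minimal sketch of that step follows, using synthetic data only: a made-up exponent of -0.7 with multiplicative noise and 20 repetitions per sample size, not the measured errors.

```python
import numpy as np


def fit_exponent(sample_sizes, values):
    """Slope of the least-squares line log(values) ≈ slope * log(n) + intercept."""
    slope, _intercept = np.polyfit(np.log(sample_sizes), np.log(values), deg=1)
    return slope


rng = np.random.default_rng(0)
true_exponent = -0.7                                   # synthetic, for illustration only
ns = np.repeat(np.array([100, 200, 400, 800, 1600, 3200]), 20)
errors = 3.0 * ns.astype(float) ** true_exponent * np.exp(0.1 * rng.standard_normal(ns.size))
print(fit_exponent(ns, errors))
```

Fitting all repetitions jointly, rather than averaging errors first, weights each run equally in log space.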

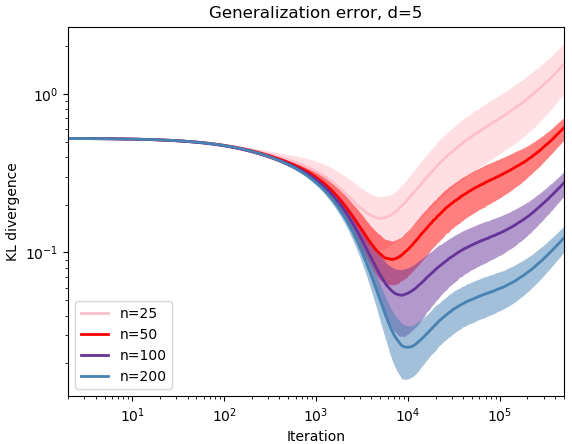

Shown in Figure 1 is the generalization error during training, for dimension .

All error curves go through a rapid descent, followed by a slower but gradual ascent due to memorization. In fact, the convergence rate prior to the optimal stopping time appears to be exponential. Note that if exponential convergence indeed holds, then the generalization error estimate of Corollary 3.4 can be improved to .

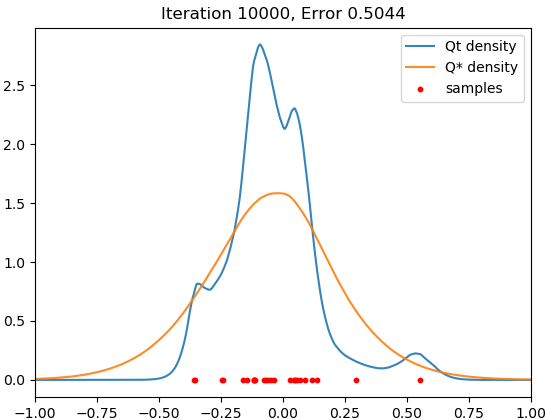

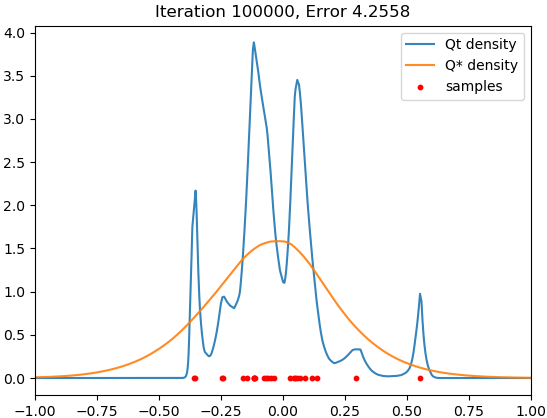

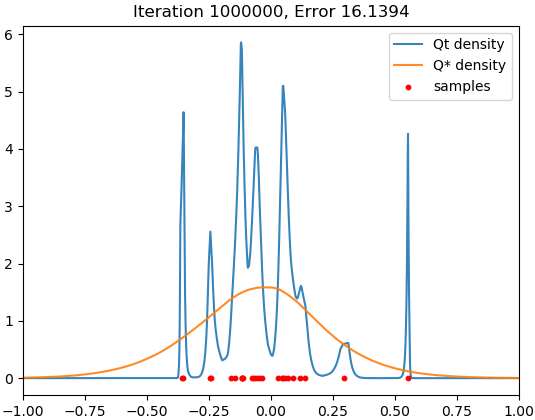

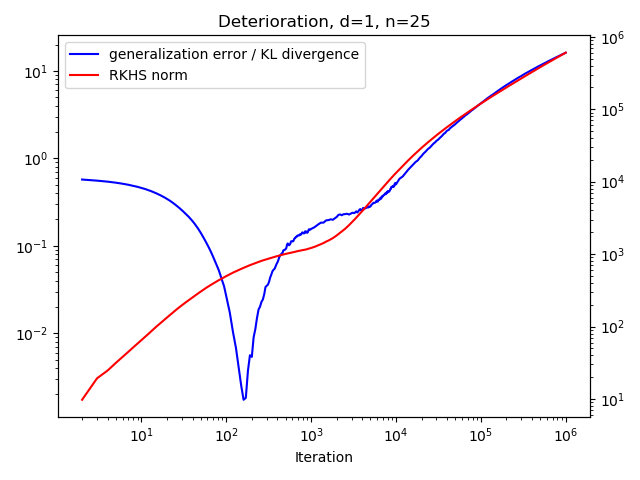

4.2 Deterioration and memorization

Proposition 3.7 indicates that as $t \to \infty$ the model either memorizes the sample points or diverges. Our experiments show that in practice we obtain memorization.

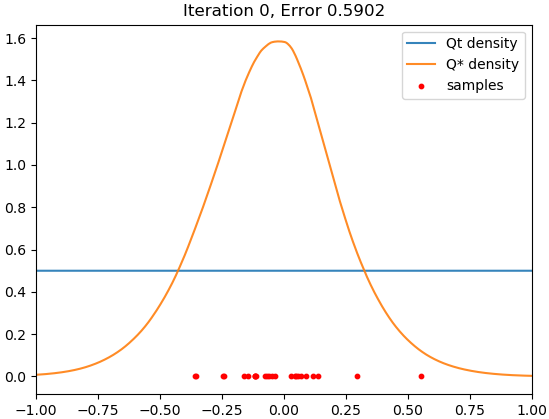

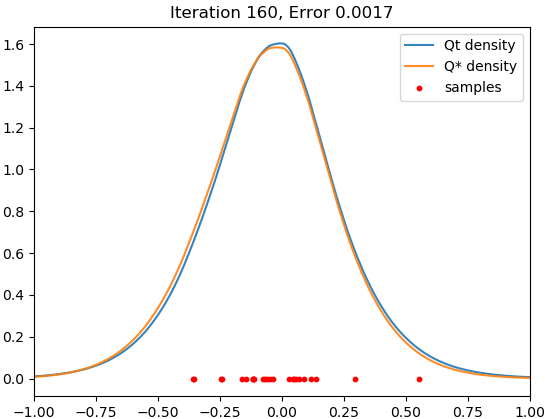

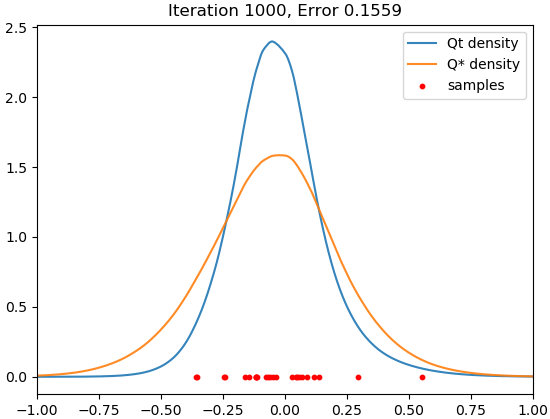

We adopt the same set-up as in Section 4.1. Since memorization occurs very slowly with SGD, we accelerate training using Adam. Figure 2 shows the result for .

We see that there is a time interval during which the trained model closely fits the target distribution, but it eventually concentrates around the samples, and this memorization process does not seem to halt within realistic training time.

Figure 3 suggests that memorization is correlated with the growth of the function norm of the potential.

5 Proofs

5.1 Proof of the Universal Approximation Property

Assumption 5.1.

For 2-layer neural networks (5), assume that the activation function is continuous and is not a polynomial.

For the random feature model (7), assume that the activation function $\sigma$ is continuous, non-polynomial and grows at most linearly at infinity, $|\sigma(z)| \le C(1 + |z|)$. In addition, we assume that the fixed parameter distribution $\rho_0$ has full support over $\mathbb{R}^{d+1}$. (See Theorem 1 of [44] for more general conditions.) Alternatively, one can assume that $\sigma$ is ReLU and $\rho_0$ covers all directions, that is, for all $\tilde{\mathbf{w}} \neq 0$, we have $\lambda \tilde{\mathbf{w}} \in \mathrm{sprt}\,\rho_0$ for some $\lambda > 0$.

Proof of Proposition 2.1.

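Denote the set of distributions generated by $\mathcal{F}$ by

$$\mathcal{P}_{\mathcal{F}} = \{P_V : V \in \mathcal{F}\}.$$

First, for any $Q \in \mathcal{P}_C(K)$, assume that its density function $q = \mathrm{d}Q/\mathrm{d}P_0$ is strictly positive over $K$. Then, $-\log q \in C(K)$. Let $V_k \in \mathcal{F}$ be a sequence that approximates $-\log q$ in the supremum norm, and let $P_k$ be the distributions (2) generated by $V_k$. It follows that

$$\mathrm{KL}(Q \| P_k) \le 2\, \|V_k + \log q\|_\infty \to 0.$$

For the general case $Q \in \mathcal{P}_C(K)$, define for any $\epsilon \in (0,1)$,

$$Q_\epsilon = (1 - \epsilon)\, Q + \epsilon\, P_0,$$

whose density is continuous and strictly positive.

For each $\epsilon$, let $P_\epsilon$ be a distribution generated by some $V \in \mathcal{F}$ such that $\mathrm{KL}(Q_\epsilon \| P_\epsilon) \le \epsilon$. Then,

where the inequality follows from the convexity of KL. Hence, the set $\mathcal{P}_{\mathcal{F}}$ is dense in $\mathcal{P}_C(K)$ under KL divergence.

Next, consider the total variation norm. Since $\mathcal{P}_C(K)$ is dense in $\mathcal{P}_{ac}(K)$ under $\|\cdot\|_{TV}$, and since Pinsker’s inequality bounds $\|\cdot\|_{TV}$ from above in terms of the KL divergence, we conclude that $\mathcal{P}_{\mathcal{F}}$ is also dense in $\mathcal{P}_{ac}(K)$.

Now consider the $W_p$ metric. $W_p^p$ can be seen as an optimal transport cost with cost function $\|\mathbf{x} - \mathbf{y}\|^p$, so for any $P, Q \in \mathcal{P}(K)$,

$$W_p^p(P, Q) \le \mathrm{diam}(K)^p\, \|P - Q\|_{TV}.$$

Since $\mathcal{P}_{ac}(K)$ is dense in $\mathcal{P}(K)$ under the $W_p$ metric, we conclude that $\mathcal{P}_{\mathcal{F}}$ is dense in $\mathcal{P}(K)$.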

Finally, note that for any ,

So is dense in . ∎

5.2 Estimating the Approximation Error

Lemma 5.1.

For any base distribution and any potential functions ,

Proof.

Denote . Then,

∎

Proof of Proposition 2.2.

The proof follows the standard argument of Monte-Carlo estimation (Theorem 4 of [18]). First, consider the case . For any , let be a parameter distribution of with path norm . Define the finite neural network

where are i.i.d. samples from . Denote .

Let be the distribution generated by . The approximation error is given by

By Lemma 5.1,

Given that , we can bound

Meanwhile, denote the empirical measure on by . Then, its expected path norm is bounded by

Define the events

By Markov’s inequality,

Since ,

Hence, there exists such that

The argument for the case is the same. ∎

5.3 Proof of Trainability

Lemma 5.2.

The objectives $L^\pm$ from (3) are convex in $V$.

Proof.

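Since the remaining terms of (3) are linear in $V$, it suffices to show that $V \mapsto \log \mathbb{E}_{P}[e^{-V}]$ is convex for any probability distribution $P$ (take $P = P_0$ for $L^-$, and $P = P_*$ with $V$ replaced by $-V$ for $L^+$): Given any two potential functions $V_1, V_2$ and any $\lambda \in [0,1]$, Hölder’s inequality with exponents $1/\lambda$ and $1/(1-\lambda)$ implies that

$$\mathbb{E}_{P}\big[e^{-\lambda V_1 - (1-\lambda) V_2}\big] \le \big(\mathbb{E}_{P}[e^{-V_1}]\big)^{\lambda}\, \big(\mathbb{E}_{P}[e^{-V_2}]\big)^{1-\lambda},$$

and taking logarithms gives the convexity.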

∎

Proof of Proposition 3.1.

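For the target potential function $V_*$, denote its parameter function by $a_*$, so that $V_* = V_{a_*}$. Let the objective $L$ be either $L^-$ or $L^+$. The mapping

$$a \mapsto V_a = \mathbb{E}_{\rho_0}\big[a(\mathbf{w}, b)\, \sigma(\mathbf{w} \cdot \mathbf{x} + b)\big]$$

is linear while $L$ is convex in $V$ by Lemma 5.2, so $L(V_a)$ is convex in $a$ and we simply write the objective as $L(a)$. Define the Lyapunov function

$$\mathcal{E}(t) = t\, \big(L(a_t) - L(a_*)\big) + \tfrac{1}{2}\, \|a_t - a_*\|^2_{L^2(\rho_0)}.$$

Then,

$$\frac{\mathrm{d}\mathcal{E}}{\mathrm{d}t} = L(a_t) - L(a_*) - t\, \|\nabla L(a_t)\|^2 - \langle \nabla L(a_t),\, a_t - a_* \rangle.$$

By convexity, for any $a$, $L(a_*) \ge L(a) + \langle \nabla L(a),\, a_* - a \rangle$.

Hence, $\mathrm{d}\mathcal{E}/\mathrm{d}t \le 0$. We conclude that $\mathcal{E}(t) \le \mathcal{E}(0)$, which implies

$$L(a_t) - L(a_*) \le \frac{\|a_0 - a_*\|^2_{L^2(\rho_0)}}{2t}.$$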

∎
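The $1/t$ rate has a standard discrete counterpart: gradient descent with step $\eta \le 1/\beta$ on a convex, $\beta$-smooth objective satisfies $L(a_k) - L(a_*) \le \|a_0 - a_*\|^2/(2\eta k)$. The sketch below checks this for $L^-$ with a random feature model on a 1D grid. It assumes ReLU features with $|w| + |b| \le 1$ (so the features lie in $[0,1]$ and $L^-$ is 1-smooth in $L^2(\rho_0)$, permitting $\eta = 1$), a hypothetical target parameter $a_*$, and $a_0 = 0$. On the grid, $L^-(a_k) - L^-(a_*)$ equals $\mathrm{KL}(P_* \| P_{a_k})$ exactly.

```python
import numpy as np

rng = np.random.default_rng(0)
xs = np.linspace(-1.0, 1.0, 401)
p0 = np.full(xs.size, 1.0 / xs.size)
m = 100
w = rng.uniform(-1.0, 1.0, m)
b = (1.0 - np.abs(w)) * rng.uniform(-1.0, 1.0, m)    # |w| + |b| <= 1
features = np.maximum(np.outer(xs, w) + b, 0.0)     # phi_j(x) in [0, 1]


def model(a):
    """Grid weights of P_a ∝ exp(-V_a) P0 with V_a = (1/m) sum_j a_j phi_j."""
    V = features @ a / m
    p = p0 * np.exp(-(V - V.min()))
    return p / p.sum()


a_star = 3.0 * rng.standard_normal(m)                # hypothetical target parameters
p_star = model(a_star)


def loss(a):
    """L^-(a) = E_{P*}[V_a] + log E_{P0}[exp(-V_a)]."""
    V = features @ a / m
    vmin = V.min()
    return p_star @ V + np.log(p0 @ np.exp(-(V - vmin))) - vmin


eta, n_steps = 1.0, 500
a = np.zeros(m)
init_dist_sq = np.mean((a - a_star) ** 2)           # ||a_0 - a_*||^2 in L^2(rho_0)
gaps, bounds = [], []
for k in range(1, n_steps + 1):
    a = a - eta * (p_star - model(a)) @ features    # gradient in the L^2(rho_0) inner product
    gaps.append(loss(a) - loss(a_star))             # equals KL(P* || P_{a_k}) on the grid
    bounds.append(init_dist_sq / (2 * eta * k))
```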

Assumption 5.2.

We make the following assumptions on the activation function $\sigma$, the initialization of $\rho_t$, and the base distribution $P_0$:

1. The weights are restricted to the sphere .
2. The activation is universal in the sense that for any distributions ,
3. $\sigma$ is continuously differentiable with a Lipschitz derivative $\sigma'$. (For instance, $\sigma$ might be sigmoid or mollified ReLU.)
4. The initialization has full support over . Specifically, the support of contains a submanifold that separates the two components, and , for some .
5. $P_0$ is compactly-supported.

Proof of Proposition 3.2.

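The proof follows the arguments of [13, 38]. For convenience, denote $(\mathbf{x}, 1)$ by $\tilde{\mathbf{x}}$ and $(\mathbf{w}, b)$ by $\tilde{\mathbf{w}}$, so the activation is simply $\sigma(\tilde{\mathbf{w}} \cdot \tilde{\mathbf{x}})$. Denote the training objective by $L$ ($L^-$ or $L^+$). From a particle perspective, the flow (10) can be written as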

| (12) | ||||

where if and if .

Since the velocity field (12) is locally Lipschitz over , the induced flow is a family of locally Lipschitz diffeomorphisms, and thus preserves the submanifold given by Assumption 5.2. Denote by and the projections of onto . It follows that has full support over for all time .

Since is a stationary point of , the velocity field (12) vanishes at almost everywhere. In particular, for all in the support of ,

We show that this condition holds for all . Denote . Assume to the contrary that does not vanish on . Let be a maximizer of . Without loss of generality, let ; the same reasoning applies to .

Since in , the bias potential converges to uniformly over the compact support of . Since all are supported on , the velocity field (12) converges locally uniformly to

For sufficiently large, we can study the flow with this approximate field. Let be any point with sufficiently close to , consider a trajectory initialized from with a large . If , then becomes increasingly negative, while follows a gradient ascent on and converges to (or any maximizer nearby). Else, , but if is sufficiently close to , then is very small (since and is Lipschitz in ), so will stay around and remains positive. Then, eventually becomes negative, and converges to .

Since has positive mass in any neighborhood of at time , this mass will remain in as . This is a contradiction since is disjoint from . It follows that vanishes on all of . Then for any ,

By Assumption 5.2, we conclude that , or equivalently and (up to an additive constant). ∎

5.4 Proof of Generalization Ability

Proof of Lemma 3.5.

Proof of Lemma 3.6.

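Denote the inner product and norm of $H$ by $\langle \cdot, \cdot \rangle$ and $\|\cdot\|$. Then,

$$\frac{\mathrm{d}}{\mathrm{d}t}\, \frac{1}{2}\|x_t - y_t\|^2 = -\langle \nabla L(x_t) - \nabla L(y_t),\, x_t - y_t \rangle + \langle \nabla E(y_t),\, x_t - y_t \rangle.$$

Since $L$ is convex, $\langle \nabla L(x) - \nabla L(y),\, x - y \rangle \ge 0$ for any $x, y \in H$. Therefore,

$$\frac{\mathrm{d}}{\mathrm{d}t}\, \frac{1}{2}\|x_t - y_t\|^2 \le \|\nabla E(y_t)\|\, \|x_t - y_t\| \le \epsilon\, \|x_t - y_t\|,$$

so that $\|x_t - y_t\| \le \epsilon t$. By Lipschitz continuity, $|L(x_t) - L(y_t)| \le l\, \|x_t - y_t\| \le l \epsilon t$. ∎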

Proof of Theorem 3.3.

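For any time $t$, the testing error can be decomposed into

$$\mathrm{KL}(P_* \| P_t^{(n)}) = L(a_t^{(n)}) - L(a_*) = \big[L(a_t^{(n)}) - L(a_t)\big] + \big[L(a_t) - L(a_*)\big].$$

The second term is bounded by Proposition 3.1, while the first term can be bounded by Lemma 3.6. The Hilbert space in Lemma 3.6 corresponds to the space $L^2(\rho_0)$ of parameter functions for the random feature model, the convex objective corresponds to the objective $L$ over $a$,

$$L(a) = \mathbb{E}_{P_*}[V_a] + \log \mathbb{E}_{P_0}\big[e^{-V_a}\big],$$

and the perturbation term corresponds to $L^{(n)} - L$,

$$(L^{(n)} - L)(a) = \mathbb{E}_{P_*^{(n)}}[V_a] - \mathbb{E}_{P_*}[V_a].$$

The remaining task is to estimate the two Lipschitz constants in Lemma 3.6.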

First, we have . For any , let be the modeled distribution,

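where in the last step, since all distributions are supported on $[-1,1]^d$, $|\sigma(\tilde{\mathbf{w}} \cdot \tilde{\mathbf{x}})| \le 1$.

Next, the estimate of the Lipschitz constant of $L^{(n)} - L$ has been provided by Lemma 3.5, because for any $a$,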

∎

5.5 Proof of Memorization

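Let $\mathcal{M}(K)$ be the space of finite signed measures on $K$. We say that a kernel $k$ is integrally strictly positive definite if

$$\iint k(\mathbf{x}, \mathbf{x}')\, \mathrm{d}\mu(\mathbf{x})\, \mathrm{d}\mu(\mathbf{x}') > 0 \quad \text{for all nonzero } \mu \in \mathcal{M}(K).$$

Equip $\mathcal{M}(K)$ with the inner product

$$\langle \mu, \nu \rangle_k = \iint k(\mathbf{x}, \mathbf{x}')\, \mathrm{d}\mu(\mathbf{x})\, \mathrm{d}\nu(\mathbf{x}'),$$

from which we define the MMD (maximum mean discrepancy) distance

$$\mathrm{MMD}(P, Q) = \|P - Q\|_k = \sqrt{\langle P - Q,\, P - Q \rangle_k}.$$

Let $\mathcal{H}_k$ be the RKHS generated by $k$ with inner product $\langle \cdot, \cdot \rangle_{\mathcal{H}_k}$. Then the MMD inner product is the RKHS inner product on the mean embeddings $\mu \mapsto \int k(\cdot, \mathbf{x})\, \mathrm{d}\mu(\mathbf{x})$,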

Lemma 5.3.

When restricted to the subset $\mathcal{P}(K)$, the MMD distance induces the weak topology and thus $\mathcal{P}(K)$ is compact.

Proof.

By Lemma 2.1 of [42], the MMD distance metrizes the weak topology of $\mathcal{P}(K)$, which is compact by Prokhorov’s theorem. ∎

As $\mathcal{P}(K)$ is a convex subset of $\mathcal{M}(K)$, we can define the tangent cone at each point $P \in \mathcal{P}(K)$ by

and equip it with the MMD norm, $\|\cdot\|_k$.

Given the gradient flow defined in Lemma 3.8, the distribution evolves by

We can extend this flow to a dynamical system on in positive time , defined by

| (13) | ||||

Each is a tangent vector in .

Note that we can rewrite and in terms of the RKHS norm: Let be the mean embeddings of ,

It follows that and are uniformly continuous over the compact space .

Lemma 5.4.

Given any initialization , there exists a unique solution , to the dynamics (13).

Proof.

The integral form of (13) can be written as

| (14) |

where we adopt the Bochner integral on . In the spirit of the classical Picard-Lindelöf theorem, we consider the vector space equipped with sup-norm

On the complete subspace , define the operator by

Define the sequence and .

Denote the set of fixed points of (13) by

Also, define the set of distributions that have larger supports than the target distribution

Lemma 5.5.

We have the following inclusion

Given any initialization , let , be the trajectory defined by Lemma 5.4 and let be the set of limit points in MMD metric

then .

Proof.

For any fixed point , we have for -almost every . By continuity, we have

| (15) |

If we further suppose that , then this equality holds for -almost all , so

Since is integrally strictly positive definite, we have . It follows that

or equivalently .

Lemma 5.6.

Given any initialization , if the limit point set contains only one point , then and thus . Else, is contained in .

Proof.

For any open subset that intersects , we have . Also

So remains positive for all finite . It follows that and for all .

First, consider the case . Assume for contradiction that for some . Equation (15) implies that

and thus

In particular, there exists some measurable subset and some such that

By continuity, there exists some open subset () such that its closure satisfies

Meanwhile, since intersects , we have for all . Whereas (15) implies that is disjoint from .

Since is continuous over and is compact, there exists some neighborhood such that

Since the trajectory converges in the MMD distance to , there exists some time such that for all , . It follows that

so that for all . Yet, Lemma 5.3 implies that converges weakly to , so that

A contradiction. We conclude that the limit point does not belong to . By Lemma 5.5, we must have .

Next, consider the case when has more than one point. Inequality (16) implies that the MMD distance is monotonically decreasing along the flow . Suppose that , then and thus , a contradiction. Hence, . ∎

Proof of Lemma 3.8.

Since , the initialization has full support over and thus . If converges weakly to some limit , Lemma 5.3 implies that also converges in MMD metric to . Then, Lemma 5.6 implies that the limit must be .

If there is more than one limit point, then Lemma 5.6 implies that all limit points belong to and thus do not cover the full support of . ∎

Proof of Proposition 3.7.

We simply set . Note that since is trained by

the training dynamics for the potential is the same as in Lemma 3.8

with kernel defined by

It is straightforward to check that is integrally strictly positive definite: For any , if

then for -almost all , . It follows that for all random feature models from (7), we have . Assuming Remark 1, the random feature models are dense in by Proposition 2.1, so this equality holds for all . Hence, and is integrally strictly positive definite.

Hence, Lemma 3.8 implies that if has exactly one limit point, then converges weakly to . Otherwise, no limit point can cover the support of , and thus none of them has full support over . Since the true target distribution is generated by a continuous potential , it has full support and thus does not belong to and for all . Similarly, we must have

otherwise some subsequence of would converge to a limit with full support. ∎
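The kernel in the proof above is induced by the random feature model. The sketch below estimates such a kernel by Monte Carlo and checks the estimate against a closed form. For illustration only, we assume the kernel has the form k(x, x') = E_{w∼ρ_0}[σ(w·x) σ(w·x')], with σ = ReLU and ρ_0 uniform on the unit sphere. These choices may differ from the model (7). Under these assumptions, k(x, x') = |x||x'|(sin θ + (π - θ) cos θ)/(2πd), where θ is the angle between x and x'. This is the degree-1 arc-cosine kernel, rescaled by 1/d because |w| = 1 instead of w ∼ N(0, I_d).

```python
import numpy as np

def rf_kernel_mc(x, y, m=100_000, seed=0):
    """Monte Carlo estimate of k(x, y) = E_w[relu(w.x) relu(w.y)], w uniform on the unit sphere."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=(m, x.shape[1]))
    w /= np.linalg.norm(w, axis=1, keepdims=True)   # normalized Gaussians are uniform on the sphere
    phi_x = np.maximum(x @ w.T, 0.0)                # (n, m) random ReLU features
    phi_y = np.maximum(y @ w.T, 0.0)                # (p, m)
    return phi_x @ phi_y.T / m

def rf_kernel_exact(x, y):
    """Closed form |x||y| (sin t + (pi - t) cos t) / (2 pi d), with t the angle between x and y."""
    d = x.shape[1]
    nx = np.linalg.norm(x, axis=1)[:, None]
    ny = np.linalg.norm(y, axis=1)[None, :]
    cos_t = np.clip((x @ y.T) / (nx * ny), -1.0, 1.0)
    t = np.arccos(cos_t)
    return nx * ny * (np.sin(t) + (np.pi - t) * cos_t) / (2.0 * np.pi * d)

rng = np.random.default_rng(1)
x = rng.normal(size=(30, 4))
x /= np.linalg.norm(x, axis=1, keepdims=True)       # unit-norm inputs keep entries of order 1/d

G_mc = rf_kernel_mc(x, x)
G_exact = rf_kernel_exact(x, x)
print(np.abs(G_mc - G_exact).max())                 # Monte Carlo error, shrinking like 1/sqrt(m)
```

A Gram matrix of the form ΦΦ^T/m is always positive semidefinite. The integral strict positive definiteness shown in the proof is a stronger property of the limiting kernel, and no finite sample can exhibit it directly.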

5.6 Proof for the Regularized Model

Lemma 5.7.

For any , there exists a minimizer of (11).

Proof.

Since the closed ball is weakly compact in , it suffices to show that the mapping

is weakly continuous over (e.g., by showing that the term can be expressed as the uniform limit of a sequence of weakly continuous functions over ). Then every minimizing sequence of in has a subsequence that converges weakly to a minimizer of (11). ∎

6 Discussion

Let us summarize some of the insights obtained in this paper:

- For distribution-learning models, good generalization can be characterized by dimension-independent a priori error estimates for early-stopping solutions. As demonstrated by the proof of Theorem 3.3, such estimates are enabled by two conditions:

  1. Fast global convergence is guaranteed for learning distributions that can be represented by the model, with an explicit and dimension-independent rate. For our example, this results from the convexity of the model.

  2. The model is insensitive to the sampling error , so memorization happens very slowly and early-stopping solutions generalize well. For our example, this is enabled by the small Rademacher complexity of the random feature model.

- Memorization seems inevitable for all sufficiently expressive models (Proposition 3.7), and the generalization error eventually deteriorates to either or . Thus, instead of the long-time limit , one needs to consider early stopping.

  The basic approach, as suggested by Theorem 3.3, is to choose an appropriate function representation such that, with absolute constants , there exists an early-stopping interval with and

  (18)

  Then, with a reasonably large sample set (polynomial in the precision ), the early-stopping interval becomes sufficiently wide and hard to miss, and the corresponding generalization error is satisfactorily small.

- A distribution-learning model can be posed as a calculus of variations problem. Given a training objective and a distribution representation , this problem is entirely determined by the function representation or function space . Given a training rule, the choice of function representation then determines the trainability (Proposition 3.2) and the generalization ability (Theorem 3.3) of the model.
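Condition 2 above rests on the small Rademacher complexity of the random feature model. The sketch below illustrates this point with a standard bound for norm-bounded linear classes. It does not reproduce the estimate used in the proof of Theorem 3.3. We fix m random ReLU features φ(x) = relu(Wx)/√m, so that ⟨c, φ(x)⟩ = (1/m) Σ_j a_j relu(w_j·x) with c = a/√m. We then consider the class {x ↦ ⟨c, φ(x)⟩ : |c|_2 ≤ B}. The feature distribution, the input distribution, and the names below are illustrative assumptions. For this class the empirical Rademacher complexity equals (B/n) E_ε|Σ_i ε_i φ(x_i)|_2. By Jensen's inequality, this is at most (B/n)(Σ_i |φ(x_i)|²)^{1/2} ≤ B max_i|φ(x_i)|/√n. The bound has no explicit dependence on the input dimension d or on m.

```python
import numpy as np

def relu_features(x, w):
    """phi(x) = relu(W x) / sqrt(m), so <a / sqrt(m), phi(x)> = (1/m) sum_j a_j relu(w_j . x)."""
    return np.maximum(x @ w.T, 0.0) / np.sqrt(w.shape[0])

def empirical_rademacher(phi, B=1.0, draws=4000, seed=0):
    """Monte Carlo estimate of E_eps sup_{|c|_2 <= B} (1/n) sum_i eps_i <c, phi(x_i)>.

    For an l2 ball the supremum is explicit: (B/n) |sum_i eps_i phi(x_i)|_2.
    """
    rng = np.random.default_rng(seed)
    n = phi.shape[0]
    eps = rng.choice([-1.0, 1.0], size=(draws, n))   # i.i.d. Rademacher signs
    return float((B / n) * np.linalg.norm(eps @ phi, axis=1).mean())

def rademacher_bound(phi, B=1.0):
    """Jensen's inequality: E|sum_i eps_i phi_i| <= sqrt(sum_i |phi_i|^2)."""
    n = phi.shape[0]
    return float((B / n) * np.sqrt(np.sum(phi ** 2)))

rng = np.random.default_rng(2)
d, m, n = 10, 1000, 100
w = rng.normal(size=(m, d))
w /= np.linalg.norm(w, axis=1, keepdims=True)       # feature directions w_j, uniform on the sphere
x = rng.normal(size=(n, d))
x /= np.linalg.norm(x, axis=1, keepdims=True)       # sample points on the unit sphere
phi = relu_features(x, w)

est, bound = empirical_rademacher(phi), rademacher_bound(phi)
print(f"estimate {est:.4f}, bound {bound:.4f}")
```

Doubling n roughly divides both numbers by √2. This O(1/√n) decay makes the model insensitive to the sampling error.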

Future work can be developed from the above insights:

- Generalization error estimates for GANs

  The Rademacher complexity argument should be applicable to GANs to bound the deviation , where are the generators trained on and , respectively. The difficulty, however, lies in the convergence analysis. Unlike that of bias potential models, the training objective of GANs is non-convex in the generator , and the solutions to are in general not unique.

- Mode collapse

  If we regard mode collapse as a form of bad local minima, then its analysis can benefit from a study of the critical points of GAN, once GAN is posed as a calculus of variations problem. Unlike the bias potential model, whose parameter function ranges over the Hilbert space , GANs are formulated on the Wasserstein manifold, whose tangent space depends significantly on the current position . In particular, the behavior of the gradient flow differs depending on whether is absolutely continuous, and we expect that successful GAN models can maintain the absolute continuity of the trajectory .

- New designs

  The design of distribution-learning models can benefit from mathematical understanding. For instance, in view of the early-stopping interval (18), can there be training rules better than gradient flow that reduce or postpone so that early stopping becomes easier to perform?

References

- [1] Arjovsky, M., and Bottou, L. Towards principled methods for training generative adversarial networks, 2017.

- [2] Arora, S., Ge, R., Liang, Y., Ma, T., and Zhang, Y. Generalization and equilibrium in generative adversarial nets (GANs). arXiv preprint arXiv:1703.00573 (2017).

- [3] Bai, Y., Ma, T., and Risteski, A. Approximability of discriminators implies diversity in GANs, 2019.

- [4] Barron, A. R., and Sheu, C.-H. Approximation of density functions by sequences of exponential families. The Annals of Statistics (1991), 1347–1369.

- [5] Bau, D., Strobelt, H., Peebles, W., Wulff, J., Zhou, B., Zhu, J.-Y., and Torralba, A. Semantic photo manipulation with a generative image prior. ACM Transactions on Graphics 38, 4 (Jul 2019), 1–11.

- [6] Bonati, L., Zhang, Y.-Y., and Parrinello, M. Neural networks-based variationally enhanced sampling. In Proceedings of the National Academy of Sciences (2019), vol. 116, pp. 17641–17647.

- [7] Brock, A., Donahue, J., and Simonyan, K. Large scale GAN training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096 (2018).

- [8] Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E., Litwin, M., Gray, S., Chess, B., Clark, J., Berner, C., McCandlish, S., Radford, A., Sutskever, I., and Amodei, D. Language models are few-shot learners, 2020.

- [9] Bubeck, S., Eldan, R., and Lehec, J. Sampling from a log-concave distribution with projected Langevin Monte Carlo. Discrete & Computational Geometry 59, 4 (2018), 757–783.

- [10] Canu, S., and Smola, A. Kernel methods and the exponential family. Neurocomputing 69, 7-9 (2006), 714–720.

- [11] Casert, C., Mills, K., Vieijra, T., Ryckebusch, J., and Tamblyn, I. Optical lattice experiments at unobserved conditions and scales through generative adversarial deep learning, 2020.

- [12] Che, T., Li, Y., Jacob, A., Bengio, Y., and Li, W. Mode regularized generative adversarial networks. arXiv preprint arXiv:1612.02136 (2016).

- [13] Chizat, L., and Bach, F. On the global convergence of gradient descent for over-parameterized models using optimal transport. In Advances in neural information processing systems (2018), pp. 3036–3046.

- [14] Donahue, J., and Simonyan, K. Large scale adversarial representation learning. In Advances in neural information processing systems (2019), pp. 10542–10552.

- [15] E, W., Ma, C., and Wang, Q. A priori estimates of the population risk for residual networks. arXiv preprint arXiv:1903.02154 (2019).

- [16] E, W., Ma, C., Wojtowytsch, S., and Wu, L. Towards a mathematical understanding of neural network-based machine learning: what we know and what we don’t, 2020.

- [17] E, W., Ma, C., and Wu, L. A priori estimates for two-layer neural networks. arXiv preprint arXiv:1810.06397 (2018).

- [18] E, W., Ma, C., and Wu, L. Barron spaces and the compositional function spaces for neural network models. arXiv preprint arXiv:1906.08039 (2019).

- [19] E, W., Ma, C., and Wu, L. Machine learning from a continuous viewpoint. arXiv preprint arXiv:1912.12777 (2019).

- [20] E, W., Ma, C., and Wu, L. On the generalization properties of minimum-norm solutions for over-parameterized neural network models. arXiv preprint arXiv:1912.06987 (2019).

- [21] Elgammal, A., Liu, B., Elhoseiny, M., and Mazzone, M. CAN: Creative adversarial networks, generating “art” by learning about styles and deviating from style norms, 2017.

- [22] Feizi, S., Farnia, F., Ginart, T., and Tse, D. Understanding GANs in the LQG setting: Formulation, generalization and stability. IEEE Journal on Selected Areas in Information Theory 1, 1 (2020), 304–311.

- [23] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. Generative adversarial nets. In Advances in neural information processing systems (2014), pp. 2672–2680.

- [24] Gulrajani, I., Raffel, C., and Metz, L. Towards GAN benchmarks which require generalization. arXiv preprint arXiv:2001.03653 (2020).

- [25] Jewell, N. P. Mixtures of exponential distributions. Ann. Statist. 10, 2 (06 1982), 479–484.

- [26] Kiefer, J., and Wolfowitz, J. Consistency of the maximum likelihood estimator in the presence of infinitely many incidental parameters. Ann. Math. Statist. 27, 4 (12 1956), 887–906.

- [27] Lei, Q., Lee, J. D., Dimakis, A. G., and Daskalakis, C. SGD learns one-layer networks in WGANs, 2020.

- [28] Lovász, L., and Vempala, S. The geometry of logconcave functions and sampling algorithms. Random Structures & Algorithms 30, 3 (2007), 307–358.

- [29] Mao, Y., He, Q., and Zhao, X. Designing complex architectured materials with generative adversarial networks. Science Advances 6, 17 (2020).

- [30] Mei, S., Montanari, A., and Nguyen, P.-M. A mean field view of the landscape of two-layer neural networks. Proceedings of the National Academy of Sciences 115, 33 (2018), E7665–E7671.

- [31] Oord, A. v. d., Dieleman, S., Zen, H., Simonyan, K., Vinyals, O., Graves, A., Kalchbrenner, N., Senior, A., and Kavukcuoglu, K. WaveNet: A generative model for raw audio. arXiv preprint arXiv:1609.03499 (2016).

- [32] Pinkus, A. Approximation theory of the MLP model in neural networks. Acta Numerica 8, 1 (1999), 143–195.

- [33] Prykhodko, O., Johansson, S. V., Kotsias, P.-C., Arús-Pous, J., Bjerrum, E. J., Engkvist, O., and Chen, H. A de novo molecular generation method using latent vector based generative adversarial network. Journal of Cheminformatics 11, 74 (Dec 2019), 1–11.

- [34] Radford, A., Metz, L., and Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434 (2015).

- [35] Rahimi, A., and Recht, B. Uniform approximation of functions with random bases. In 2008 46th Annual Allerton Conference on Communication, Control, and Computing (2008), IEEE, pp. 555–561.

- [36] Redner, R. A., and Walker, H. F. Mixture densities, maximum likelihood and the EM algorithm. SIAM Review 26, 2 (1984), 195–239.

- [37] Roberts, G. O., and Tweedie, R. L. Exponential convergence of Langevin distributions and their discrete approximations. Bernoulli 2, 4 (1996), 341–363.

- [38] Rotskoff, G. M., and Vanden-Eijnden, E. Trainability and accuracy of neural networks: An interacting particle system approach, 2019.

- [39] Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., Berg, A. C., and Fei-Fei, L. Imagenet large scale visual recognition challenge, 2015.

- [40] Salimans, T., Goodfellow, I., Zaremba, W., Cheung, V., Radford, A., and Chen, X. Improved techniques for training GANs. In Advances in neural information processing systems (2016), pp. 2234–2242.

- [41] Shalev-Shwartz, S., and Ben-David, S. Understanding machine learning: From theory to algorithms. Cambridge University Press, 2014.

- [42] Simon-Gabriel, C.-J., Barp, A., and Mackey, L. Metrizing weak convergence with maximum mean discrepancies, 2020.

- [43] Sriperumbudur, B., Fukumizu, K., Gretton, A., Hyvärinen, A., and Kumar, R. Density estimation in infinite dimensional exponential families. Journal of Machine Learning Research 18 (2017).

- [44] Sun, Y., Gilbert, A., and Tewari, A. On the approximation properties of random ReLU features. arXiv preprint arXiv:1810.04374 (2018).

- [45] Vahdat, A., and Kautz, J. NVAE: A deep hierarchical variational autoencoder. arXiv preprint arXiv:2007.03898 (2020).

- [46] Valsson, O., and Parrinello, M. Variational approach to enhanced sampling and free energy calculations. Physical review letters 113 (2014).

- [47] Wand, M. P., and Jones, M. C. Kernel smoothing. CRC Press, 1994.

- [48] Weed, J., and Bach, F. Sharp asymptotic and finite-sample rates of convergence of empirical measures in Wasserstein distance. arXiv preprint arXiv:1707.00087 (2017).

- [49] Wu, S., Dimakis, A. G., and Sanghavi, S. Learning distributions generated by one-layer relu networks. In Advances in Neural Information Processing Systems 32. Curran Associates, Inc., 2019, pp. 8107–8117.

- [50] Yuan, L., Kirshner, S., and Givan, R. Estimating densities with non-parametric exponential families. arXiv preprint arXiv:1206.5036 (2012).

- [51] Zhang, C., Bengio, S., Hardt, M., Recht, B., and Vinyals, O. Understanding deep learning requires rethinking generalization. arXiv preprint arXiv:1611.03530 (2016).

- [52] Zhang, L., E, W., and Wang, L. Monge-Ampère flow for generative modeling. arXiv preprint arXiv:1809.10188 (2018).

- [53] Zhang, P., Liu, Q., Zhou, D., Xu, T., and He, X. On the discrimination-generalization tradeoff in GANs. arXiv preprint arXiv:1711.02771 (2017).