Gibbs posterior concentration rates under sub-exponential type losses

Abstract

Bayesian posterior distributions are widely used for inference, but their dependence on a statistical model creates some challenges. In particular, there may be lots of nuisance parameters that require prior distributions and posterior computations, plus a potentially serious risk of model misspecification bias. Gibbs posterior distributions, on the other hand, offer direct, principled, probabilistic inference on quantities of interest through a loss function, not a model-based likelihood. Here we provide simple sufficient conditions for establishing Gibbs posterior concentration rates when the loss function is of a sub-exponential type. We apply these general results in a range of practically relevant examples, including mean regression, quantile regression, and sparse high-dimensional classification. We also apply these techniques in an important problem in medical statistics, namely, estimation of a personalized minimum clinically important difference.

Keywords and phrases: classification; generalized Bayes; high-dimensional problem; M-estimation; model misspecification.

1 Introduction

A major selling point of the Bayesian framework is that it is normative: to solve a new problem, one only needs a statistical model/likelihood, a prior distribution for the model parameters, and the means to compute the corresponding posterior distribution. Bayesians’ obligation to specify a prior attracts criticism, but their need to specify a likelihood has a number of potentially negative consequences too, especially when the quantity of interest has meaning independent of a statistical model, like a quantile. On the one hand, even if the posited model is “correct,” it is rare that all the parameters of that model are relevant to the problem at hand. For example, if we are interested in a quantile of a distribution, which we model as skew-normal, then the focus is only on a specific real-valued function of the model parameters. In such a case, the non-trivial effort invested in dealing with these nuisance parameters, e.g., specifying prior distributions and designing computational algorithms, is effectively wasted. On the other hand, in the far more likely case where the posited model is “wrong,” that model misspecification can negatively impact one’s conclusions about the quantity of interest. For example, both skew-normal and Pareto models have a quantile, but the quality of inferences drawn about that quantile will vary depending on which of these two models is chosen.

The non-negligible dependence on the posited statistical model puts a burden on the data analyst, and those reluctant to take that risk tend to opt for a non-Bayesian approach. After all, if one can get a solution without specifying a statistical model, then it is impossible to incur model misspecification bias. But in taking such an approach, they give up the normative advantage of Bayesian analysis. Can they get the best of both worlds? That is, can one construct a posterior distribution for the quantity of interest directly, incorporating available prior information, without specifying a statistical model and incurring the associated model misspecification risks, and without the need for marginalization over nuisance parameters? Fortunately, the answer is Yes, and this is the present paper’s focus.

The so-called Gibbs posterior distribution is the proper prior-to-posterior update when data and the interest parameter are linked by a loss function rather than a likelihood (Catoni, 2004; Zhang, 2006; Bissiri et al., 2016). Intuitively, the Gibbs and Bayesian posterior distributions coincide when the loss function linking data and parameter is a (negative) log-likelihood. In that case the properties of the Gibbs posterior can be inferred from the literature on Bayesian asymptotics in both the well-specified and misspecified contexts. For cases where the link is not through a likelihood, the large-sample behavior of the Gibbs posterior is less clear, and elucidating this behavior under some simple and fairly general conditions is our goal here.

As a practical example, medical investigators may want to know if a treatment whose effect has been judged to be statistically significant is also clinically significant in the sense that the patients feel better post-treatment. Therefore, they are interested in inference about the effect size cutoff beyond which patients feel better; this is called the minimum clinically important difference or MCID, e.g., Jaeschke et al. (1989). Estimation of the MCID boils down to a classification problem, and standard Bayesian approaches to binary regression do not perform well in this setting; misspecifying the link function leads to bias, and nonparametric modeling of the link function is inefficient (Choudhuri et al., 2007). Instead, we found that a Gibbs posterior distribution, as described above, provided a very reasonable and robust solution to the MCID problem (Syring and Martin, 2017). In some applications, one seeks a “personalized” or subject-specific cutoff that depends on a set of additional covariates. This personalized MCID could be high- or even infinite-dimensional, and our previous Gibbs posterior analysis is not equipped to handle such situations. But the framework developed here is; see Section 6.

In the following sections we lay out and apply conditions under which a Gibbs posterior distribution concentrates, asymptotically, on a neighborhood of the true value of the inferential target as the sample size increases. Our focus is not on the most general set of sufficient conditions for concentration; rather, we aim for conditions that are both widely applicable and easily verified. To this end, we consider loss functions of a sub-exponential type, ones that satisfy an inequality similar to the moment-generating function bound for sub-exponential random variables (Boucheron et al., 2012). We can apply this condition in a variety of problems, from regression to classification, and in both fixed- and high-dimensional settings. An added advantage is that our conditions lead to straightforward proofs of concentration.

Section 2 provides some background and formally defines the Gibbs posterior distribution. In Section 3, we state our theoretical objectives and present our main results, namely, sets of sufficient conditions under which the Gibbs posterior achieves a specified asymptotic concentration rate. A unique attribute of the Gibbs posterior distribution is its dependence on a tuning parameter called the learning rate, and our results cover a constant, vanishing sequence, and even data-dependent learning rates. Section 4 further discusses verifying our conditions and extends our conditions and main results to handle certain unbounded loss functions. Section 5 applies our general theorems to establish Gibbs posterior concentration rates in a number of practically relevant examples, including nonparametric curve estimation, and high-dimensional sparse classification. Section 6 formulates the personalized MCID application, presents a relevant Gibbs posterior concentration rate result, and gives a brief numerical illustration. Concluding remarks are given in Section 7, and proofs, etc. are postponed to the appendix.

2 Background on Gibbs posteriors

2.1 Notation and definitions

Consider a measurable space $(\mathbb{U}, \mathcal{U})$, with $\mathcal{U}$ a $\sigma$-algebra of subsets of $\mathbb{U}$, on which a probability measure $P$ is defined. A random element $U \sim P$ need not be a scalar, and many of the applications we have in mind involve $U = (X, Y)$ or $U = (X, Y, Z)$, where $Y$ denotes a “response” variable and $X$ or $(X, Z)$ denotes a “predictor” variable, and $P$ encodes the dependence between the entries in $U$. Then the real-world phenomenon under investigation is determined by $P$ and our goal is to make inference on a relevant feature of $P$, which we define as a given functional $\theta = \theta(P)$, taking values in $\Theta$. Note that $\theta$ could be finite-, high-, or even infinite-dimensional.

The specific way $\theta$ relates to $P$ guides our posterior construction. Suppose there is a loss function, $\ell_\theta(u)$, that measures how closely a generic value of $\theta$ agrees with a data point $u$. (As is customary, “$\theta$” will denote both the quantity of interest and a generic value of that quantity; when we need to distinguish the true from a generic value, we will write “$\theta^\star$.”) For example, if $u = (x, y)$ is a predictor–response pair, and $\theta$ is a function, then the loss might be

$$\ell_\theta(u) = |y - \theta(x)| \quad \text{or} \quad \ell_\theta(u) = 1\{y \neq \theta(x)\}, \tag{1}$$

depending on whether $y$ is continuous or discrete/binary, where $1\{A\}$ denotes the indicator function at the event $A$. Another common situation is when one specifies a statistical model, say, $\{P_\theta : \theta \in \Theta\}$, indexed by a parameter $\theta$, and sets $\ell_\theta(u) = -\log p_\theta(u)$, where $p_\theta$ is the density of $P_\theta$ with respect to some fixed dominating measure. In all of these cases, the idea is that a loss is incurred when there is a certain discrepancy between $\theta$ and the data point $u$. Then our inferential target is the value of $\theta$ that minimizes the risk or average loss/discrepancy.

Definition 2.1.

Consider a real-valued loss function $\ell_\theta(u)$ defined on $\mathbb{U} \times \Theta$, and define the risk function $R(\theta) = P\ell_\theta$, the expected loss with respect to $P$; throughout, $Pf$ denotes expected value of $f(U)$ with respect to $P$. Then the inferential target is

$$\theta^\star \in \arg\min_{\theta \in \Theta} R(\theta). \tag{2}$$

Given that estimation/inference is our goal, our focus will be on the case where the risk minimizer, $\theta^\star$, is unique, so that the “$\in$” in (2) is an equality. But this is not absolutely necessary for our theory. Indeed, the main results in Section 3 remain valid even if the risk minimizer is not unique, and we make a few brief comments about this extension in the discussion following Theorem 3.2.

The risk function itself is unavailable, since it depends on $P$, and, therefore, so is $\theta^\star$. However, suppose that we have an independent and identically distributed (iid) sample $U^n = (U_1, \ldots, U_n)$ of size $n$, with each $U_i$ having marginal distribution $P$ on $\mathbb{U}$. The iid assumption is not crucial, but it makes the notation and discussion easier; an extension to independent but not identically distributed (inid) cases is discussed in the context of an example in Section 5.4. In general, we have data $U^n$ taking values in the measurable space $(\mathbb{U}^n, \mathcal{U}^n)$, with joint distribution denoted by $P^n$. From here, we can naturally replace the unknown risk in Definition 2.1 with an empirical version and proceed accordingly.

Definition 2.2.

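For a loss function $\ell_\theta$ as described above, define the empirical risk as

$$R_n(\theta) = \mathbb{P}_n \ell_\theta = \frac{1}{n} \sum_{i=1}^n \ell_\theta(U_i), \tag{3}$$

where $\mathbb{P}_n = n^{-1} \sum_{i=1}^n \delta_{U_i}$, with $\delta_u$ the Dirac point-mass measure at $u$, is the empirical distribution.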

Naturally, if the inferential target is the risk minimizer, then it makes sense to estimate that quantity based on data $U^n$ by minimizing the empirical risk, i.e.,

$$\hat\theta_n \in \arg\min_{\theta \in \Theta} R_n(\theta). \tag{4}$$

This is the M-estimator based on an objective function determined by the loss $\ell_\theta$; when $\theta \mapsto \ell_\theta$ is differentiable, the root of $\dot R_n$, the derivative of $R_n$, is a Z-estimator and “$\dot R_n(\theta) = 0$” is often called an estimating equation (Godambe, 1991; van der Vaart, 1998). Since $R_n$ need not be smooth or convex, and is an average over a finite set of data, it is possible that its minimizer is not unique, even if $\theta^\star$ is. These computational challenges are, in fact, part of what motivates the Gibbs posterior, as we discuss below.
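The non-uniqueness of the empirical risk minimizer is easy to see in a toy case. The sketch below is an illustration only, assuming a scalar target and the absolute-error loss $|u - \theta|$; the function name `empirical_risk` and the four-point sample are hypothetical. With an even number of observations, every point between the two middle order statistics attains the same minimal empirical risk.

```python
import numpy as np

def empirical_risk(theta, data):
    """Empirical risk R_n(theta) for the absolute-error loss |u - theta|."""
    return float(np.mean(np.abs(np.asarray(data, dtype=float) - theta)))

# Toy sample with an even number of points (hypothetical data).
data = [1.0, 2.0, 3.0, 4.0]

# Evaluate the empirical risk just outside and inside the interval [2, 3].
risks = {t: empirical_risk(t, data) for t in (1.9, 2.0, 2.5, 3.0, 3.1)}

for theta, risk in risks.items():
    print(f"theta = {theta:.1f}, R_n(theta) = {risk:.3f}")
```

The empirical risk is flat on the interval between the two middle observations and strictly larger outside it, so any point in that interval is an M-estimator here.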

There is a rich literature on the asymptotic distribution properties of M-estimators, which can be used for developing hypothesis tests and confidence intervals (Maronna et al., 2006; Huber and Ronchetti, 2009). As an alternative, one might consider a Bayesian approach to quantify uncertainty, but there is an immediate obstacle, namely, no statistical model/likelihood connecting the data to the quantity of interest. If we did have a statistical model, with a density function $p_\theta$, then the most natural loss is $\ell_\theta(u) = -\log p_\theta(u)$ and the likelihood is $e^{-n R_n(\theta)}$. It is, therefore, tempting to follow that same strategy for general losses, resulting in a sort of generalized posterior distribution for $\theta$.

Definition 2.3.

Given a loss function $\ell_\theta$ and the corresponding empirical risk $R_n$ in Definition 2.2, define the Gibbs posterior distribution as

$$\Pi_n(d\theta) \propto e^{-\omega n R_n(\theta)} \, \Pi(d\theta), \quad \theta \in \Theta, \tag{5}$$

where $\Pi$ is a prior distribution and $\omega > 0$ is a so-called learning rate (Holmes and Walker, 2017; Syring and Martin, 2019; Grünwald, 2012; van Erven et al., 2015). The dependence of $\Pi_n$ on $\omega$ will generally be omitted from the notation, but see Sections 2.2 and 3.3.

We will assume that the right-hand side of (5) is integrable in $\theta$, so that the proportionality constant is well-defined. Integrability holds whenever the loss function is bounded from below, like for those in (1), but this could fail in some cases where the loss is not bounded away from $-\infty$, e.g., when $\ell_\theta$ is a negative log-density. In such cases, extra conditions on the prior distribution would be required to ensure the Gibbs posterior is well-defined.

An immediate advantage of this approach, compared to the M-estimation strategy described above, is that the user is able to incorporate available prior information about $\theta$ directly into the analysis. This is especially important in cases where the quantity of interest has a real-world meaning, as opposed to being just a model parameter, where having genuine prior information is the norm rather than the exception. Additionally, even though there is no likelihood, the same computational methods, such as Markov chain Monte Carlo (Chernozhukov and Hong, 2003) and variational approximations (Alquier et al., 2016), common in Bayesian analysis, can be employed to numerically approximate the Gibbs posterior.
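For a scalar target, the Gibbs posterior can be approximated without any sampler by normalizing the exponentiated, scaled empirical risk on a grid. The following is a minimal sketch, not the computational method used in the paper: it assumes the target is a median (absolute-error loss), and the function name `gibbs_posterior_grid`, the simulated data, the grid, the learning rate value, and the normal prior are all hypothetical choices made for illustration. In higher dimensions one would turn to Markov chain Monte Carlo or variational methods instead.

```python
import numpy as np

def gibbs_posterior_grid(data, grid, omega, log_prior):
    """Gibbs posterior weights on a 1-d grid for the loss |u - theta|.

    Returns weights proportional to exp(-omega * n * R_n(theta)) * prior(theta),
    normalized to sum to one over the grid.
    """
    data = np.asarray(data, dtype=float)
    grid = np.asarray(grid, dtype=float)
    # Empirical risk R_n(theta) = mean_i |U_i - theta|, one value per grid point.
    emp_risk = np.abs(data[None, :] - grid[:, None]).mean(axis=1)
    # Unnormalized log posterior on the grid.
    log_post = -omega * data.size * emp_risk + log_prior(grid)
    log_post -= log_post.max()  # subtract the max for numerical stability
    weights = np.exp(log_post)
    return weights / weights.sum()

rng = np.random.default_rng(0)
# Hypothetical heavy-tailed sample whose population median is 1.
data = rng.standard_t(df=3, size=200) + 1.0
grid = np.linspace(-2.0, 4.0, 1201)

def log_prior(theta):
    # Hypothetical N(0, 10^2) prior, up to an additive constant.
    return -0.5 * (theta / 10.0) ** 2

post = gibbs_posterior_grid(data, grid, omega=1.0, log_prior=log_prior)
post_mean = float(np.sum(grid * post))
print("Gibbs posterior mean:", post_mean, "sample median:", float(np.median(data)))
```

No model for the data is specified anywhere in this sketch; only the loss, the prior, and the learning rate enter the computation.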

We have opted here to define the Gibbs posterior directly as an object to be used and studied, but there is a more formal, more principled way in which Gibbs posteriors emerge. In the PAC-Bayes literature, the goal is to construct a randomized estimator that concentrates in regions of $\Theta$ where the risk, $R(\theta)$, or its empirical version, $R_n(\theta)$, is small (Valiant, 1984; McAllester, 1999; Alquier, 2008; Guedj, 2019). That is, the Gibbs posterior can be viewed as a solution to an optimization problem rather than a solution to the updating-prior-beliefs problem. More formally, for a given prior $\Pi$ on $\Theta$, suppose the goal is to find

$$\inf_{\mu} \Bigl\{ \int R_n(\theta) \, \mu(d\theta) + (\omega n)^{-1} K(\mu, \Pi) \Bigr\},$$

where the infimum is over all probability measures $\mu$ that are absolutely continuous with respect to $\Pi$, and $K(\mu, \Pi)$ denotes the Kullback–Leibler divergence. Then it can be shown (e.g., Zhang, 2006; Bissiri et al., 2016) that the unique solution is $\mu = \Pi_n$, the Gibbs posterior defined in (5). Therefore, the Gibbs posterior distribution is the measure minimizing a penalized risk, averaged with respect to a given prior, $\Pi$.
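The optimization characterization can be checked numerically when the parameter space is finite. The sketch below is an illustration under stated assumptions: the objective is taken to be the averaged empirical risk plus the Kullback–Leibler divergence from the prior divided by $\omega n$, and the names `gibbs_weights`, `penalized_risk`, and `omega_n`, along with the random risk values and prior, are hypothetical. It compares the objective at the Gibbs weights with its closed-form minimum value and with the objective at many random competitors.

```python
import numpy as np

def gibbs_weights(emp_risk, prior, omega_n):
    """Gibbs posterior on a finite set: weights proportional to prior * exp(-omega_n * R_n)."""
    log_w = np.log(prior) - omega_n * emp_risk
    log_w -= log_w.max()  # numerical stability
    w = np.exp(log_w)
    return w / w.sum()

def penalized_risk(mu, emp_risk, prior, omega_n):
    """Objective: sum_theta mu(theta) R_n(theta) + KL(mu || prior) / omega_n."""
    support = mu > 0
    kl = np.sum(mu[support] * np.log(mu[support] / prior[support]))
    return float(np.dot(mu, emp_risk) + kl / omega_n)

rng = np.random.default_rng(1)
k = 6                                       # size of the finite parameter set
emp_risk = rng.uniform(0.0, 1.0, size=k)    # hypothetical empirical risk values
prior = rng.dirichlet(np.ones(k))           # hypothetical prior with full support
omega_n = 5.0                               # plays the role of omega * n

gibbs = gibbs_weights(emp_risk, prior, omega_n)
best = penalized_risk(gibbs, emp_risk, prior, omega_n)

# At the Gibbs weights the objective equals -log(sum prior * exp(-omega_n R_n)) / omega_n.
closed_form = float(-np.log(np.sum(prior * np.exp(-omega_n * emp_risk))) / omega_n)

# Random competitor measures should never achieve a smaller objective value.
others = [penalized_risk(rng.dirichlet(np.ones(k)), emp_risk, prior, omega_n)
          for _ in range(1000)]
print(best, closed_form, min(others))
```

The penalty term keeps the minimizer from collapsing onto the single point with smallest empirical risk; a larger `omega_n` weakens the penalty and moves the solution closer to that point mass.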

2.2 Learning rate

Readers familiar with M-estimation may not recognize the learning rate, $\omega$. This does not appear in the M-estimation context because all that influences the optimization problem (and the corresponding asymptotic distribution theory) is the shape of the loss/risk function, not its magnitude or scale. On the other hand, the learning rate is an essential component of the Gibbs posterior distribution in (5) since the distribution depends on both the shape and scale of the loss function. Data-driven strategies for tuning the learning rate are available (Holmes and Walker, 2017; Syring and Martin, 2019; Lyddon et al., 2019; Wu and Martin, 2022, 2021).

Here we focus on how the learning rate affects posterior concentration. In typical examples, our results require the learning rate to be a sufficiently small constant. That constant depends on features of $P$, which are generally unknown, so, in practice, the learning rate can be taken to be a slowly vanishing sequence, which has a negligible effect on the concentration rate. In more challenging examples, we require the learning rate to vanish sufficiently fast in $n$; this is also the case in Grünwald and Mehta (2020).

2.3 Relation to other generalized posterior distributions

A generalized posterior is any data-dependent distribution other than a well-specified Bayesian posterior. Examples include Gibbs and misspecified Bayesian posteriors, which we compare first.

A key characteristic of the misspecified Bayesian posterior is that it is accidentally misspecified. That is, the data analyst does his/her best to posit a sound model, , for the data-generating process and obtains the corresponding posterior for the model parameter . That model will typically be misspecified, i.e., , so the aforementioned posterior will, under certain conditions, concentrate around the point that minimizes the Kullback–Leibler divergence of from (Kleijn and van der Vaart,, 2006; De Blasi and Walker,, 2013; Ramamoorthi et al.,, 2015). For a feature of interest, typically , so there is generally a bias that the data analyst can do nothing about. A Gibbs posterior, on the other hand, is purposely misspecified—no attempt is made to model . Rather, it directly targets the feature of interest via the loss function that defines it. This strategy avoids model misspecification bias, but its point of view also sheds light on the importance of the choice of learning rate. Since the data analyst knows the Gibbs posterior is not a correctly specified Bayesian posterior, they know the learning rate must be handled with care.

A number of authors have studied generalized posterior distributions formed using a likelihood raised to a power $\eta \in (0, 1)$; see, e.g., Martin et al. (2017) and Grünwald and van Ommen (2017) among others. These $\eta$-generalized posteriors tend to be robust to misspecification of the probability model, and data-driven methods to tune $\eta$ are developed in, e.g., Grünwald and van Ommen (2017). These posteriors coincide with Gibbs posteriors based on a log-loss and with the learning rate $\omega$ corresponding to $\eta$.

A common non-Bayesian approach to statistics bases inferences on moment conditions of the form for functions , rather than on a fully-specified likelihood. A number of authors have extended this framework to posterior inference. Kim, (2002) uses the moment conditions to construct a so-called limited-information likelihood to use in place of a fully-specified likelihood in a Bayesian formulation. Similarly, Chernozhukov and Hong, (2003) construct a posterior by taking a (pseudo) log-likelihood equal to a quadratic form determined by the set of moment conditions. Chib et al., (2018) studies Bayesian exponentially-tilted empirical likelihood posterior distributions, also defined by moment conditions. In some cases the Gibbs posterior distribution coincides with the above moment-based methods. For instance, in the special case that the risk is differentiable at with derivative , risk-minimization corresponds to the single moment condition .

3 Asymptotic concentration rates

3.1 Objective

A large part of the Bayesian literature concerns the asymptotic concentration properties of posterior distributions. Roughly, if data are generated from a distribution $P$ for which the quantity of interest takes value $\theta^\star$, then, as $n \to \infty$, the posterior distribution ought to concentrate its mass around that same $\theta^\star$. As we will show, optimal concentration rates are possible with Gibbs posteriors, so the robustness achieved by not specifying a statistical model has no cost in terms of (asymptotic) efficiency.

Towards this, for a fixed $\theta^\star$, let $d(\theta, \theta^\star)$ denote a divergence measure on $\Theta$ in the sense that $d(\theta, \theta^\star) \geq 0$ for all $\theta$, with equality if and only if $\theta = \theta^\star$. The divergence measure could depend on the sample size $n$ or other deterministic features of the problem at hand, especially in the independent but not iid setting; see Section 5.4. Our objective is to provide conditions under which the Gibbs posterior will concentrate asymptotically, at a certain rate, around $\theta^\star$ relative to the divergence measure $d$. Throughout this paper, $(\varepsilon_n)$ denotes a deterministic sequence of positive numbers with $\varepsilon_n \to 0$, which will be referred to as the Gibbs posterior concentration rate.

Definition 3.1.

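The Gibbs posterior $\Pi_n$ in (5) asymptotically concentrates around $\theta^\star$ at rate (at least) $\varepsilon_n$, with respect to $d$, if

$$P^n \Pi_n(\{\theta : d(\theta, \theta^\star) > M_n \varepsilon_n\}) \to 0 \quad \text{as } n \to \infty, \tag{6}$$

where $M_n$ is either a (deterministic) sequence satisfying $M_n \to \infty$ arbitrarily slowly or is a sufficiently large constant, $M_n \equiv M$.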

In the PAC-Bayes literature, the Gibbs posterior distribution is interpreted as a “randomized estimator,” a generator of random values that tend to make the risk difference small. For iid data and with risk divergence , a concentration result like that in Definition 3.1 makes this strategy clear, since the -probability of the event would be .

If the Gibbs posterior concentrates around $\theta^\star$ in the sense of Definition 3.1, then any reasonable estimator derived from that distribution, such as the mean, should inherit the $\varepsilon_n$ rate at $\theta^\star$ relative to the divergence measure $d$. This can be made formal under certain conditions on $d$; see, e.g., Corollary 1 in Barron et al. (1999) and the discussion following the proof of Theorem 2.5 in Ghosal et al. (2000).

Besides concentration rates, in certain cases it is possible to establish distributional approximations to Gibbs posteriors, i.e., Bernstein–von Mises theorems. Results for finite-dimensional problems and with sufficiently smooth loss functions can be found in, e.g., Bhattacharya and Martin (2022) and Chernozhukov and Hong (2003).

3.2 Conditions

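Here we discuss a general strategy for proving Gibbs posterior concentration and the kinds of sufficient conditions needed for the strategy to be successful. To start, set $A_n = \{\theta : d(\theta, \theta^\star) > M_n \varepsilon_n\}$. Then our first step towards proving concentration is to express $\Pi_n(A_n)$ as the ratio

$$\Pi_n(A_n) = \frac{N_n(A_n)}{D_n} = \frac{\int_{A_n} e^{-\omega n \{R_n(\theta) - R_n(\theta^\star)\}} \, \Pi(d\theta)}{\int_{\Theta} e^{-\omega n \{R_n(\theta) - R_n(\theta^\star)\}} \, \Pi(d\theta)}. \tag{7}$$

The goal is to suitably upper and lower bound $N_n(A_n)$ and $D_n$, respectively, in such a way that the ratios of these bounds vanish. Two sufficient conditions are discussed below, the first primarily dealing with the loss function and aiding in bounding $N_n(A_n)$ and the second primarily concerning the prior distribution and aiding in bounding $D_n$. Both conditions concern the excess loss $\ell_\theta - \ell_{\theta^\star}$ and its mean and variance:

$$m(\theta, \theta^\star) = P(\ell_\theta - \ell_{\theta^\star}) \quad \text{and} \quad v(\theta, \theta^\star) = P(\ell_\theta - \ell_{\theta^\star})^2 - m(\theta, \theta^\star)^2.$$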

3.2.1 Sub-exponential type losses

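Our method for proving posterior concentration requires a vanishing upper bound on the expectation of the numerator term in (7). Since the integrand, $e^{-\omega n \{R_n(\theta) - R_n(\theta^\star)\}}$, in $N_n(A_n)$ is non-negative, Fubini’s theorem says it suffices to bound its expectation. Further, by independence

$$P^n e^{-\omega n \{R_n(\theta) - R_n(\theta^\star)\}} = \bigl\{ P e^{-\omega (\ell_\theta - \ell_{\theta^\star})} \bigr\}^n,$$

which reveals that the key to bounding $P^n N_n(A_n)$ is to bound $P e^{-\omega (\ell_\theta - \ell_{\theta^\star})}$, the expected exponentiated excess loss. In order for the bound on $P^n N_n(A_n)$ to vanish it must be that $P e^{-\omega (\ell_\theta - \ell_{\theta^\star})} < 1$, but this is not enough to identify the concentration rate in (6). Rather, the speed at which $\{P e^{-\omega (\ell_\theta - \ell_{\theta^\star})}\}^n$ vanishes must be a function of $d(\theta, \theta^\star)$, and we take this relationship as our key condition for Gibbs posterior concentration. When this holds we say the loss function is of sub-exponential type.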

Condition 1.

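There exists an interval $(0, \bar\omega)$ and constants $K, r > 0$, such that for all $\omega \in (0, \bar\omega)$ and for all sufficiently small $\varepsilon > 0$, for $\theta \in \Theta$

$$d(\theta, \theta^\star) > \varepsilon \implies P e^{-\omega (\ell_\theta - \ell_{\theta^\star})} < e^{-K \omega \varepsilon^r}. \tag{8}$$

(The constant $r > 0$ that appears here and below can take on different values depending on the context. The case $r = 2$ is common, but some “non-regular” problems require $r = 1$; see Section 5.5.)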

An immediate consequence of Condition 1 and the definition of $N_n(A_n)$ is the key finite-sample, exponential bound on the Gibbs posterior numerator

$$P^n N_n(A_n) \leq e^{-K \omega n M_n^r \varepsilon_n^r}. \tag{9}$$

For some intuition behind Condition 1, consider the following. Let be a real-valued function such that the random variable has a distribution with sufficiently thin tails. This is automatic when is bounded, but suppose has a moment-generating function, i.e., is sub-exponential. For bounding the moment-generating function, the dream case would be if . Unfortunately, Jensen’s inequality implies the dream is an impossibility. It is possible, however, to show

for suitable depending on certain features of (and of ). If it could also be shown, e.g., that , then we are in a “near-dream” case where

The little extra needed beyond sub-exponential to achieve the “near-dream” case bound above is why we refer to such as being sub-exponential type.

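Towards verifying Condition 1 we briefly review some developments in Grünwald and Mehta (2020); further comments can be found in Section 3.4. These authors focus on an annealed expectation which, for a real-valued function $f$ of the random element $U$ and a fixed constant $\omega > 0$, is defined as $P^{\text{ann}(\omega)} f = -\omega^{-1} \log P e^{-\omega f}$. With this, we find that $P e^{-\omega (\ell_\theta - \ell_{\theta^\star})} = e^{-\omega P^{\text{ann}(\omega)} (\ell_\theta - \ell_{\theta^\star})}$. So an upper bound as we require in Condition 1 is equivalent to a corresponding lower bound on $P^{\text{ann}(\omega)} (\ell_\theta - \ell_{\theta^\star})$. The “strong central condition” in Grünwald and Mehta (2020) states that,

$$P^{\text{ann}(\omega)} (\ell_\theta - \ell_{\theta^\star}) \geq 0 \quad \text{for all } \theta \in \Theta.$$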

The above inequality implies and, in turn, that as . The other conditions in Grünwald and Mehta, (2020) aim to lower-bound the annealed expected excess loss by a suitable function of the excess risk. For example, Lemma 13 in Grünwald and Mehta, (2020) shows that a “witness condition” implies

so, if is of the order for some , then we recover Condition 1. Therefore, our Condition 1 is exactly what is needed to control the numerator of the Gibbs posterior, and the strong central and witness conditions developed in Grünwald and Mehta, (2020) and elsewhere constitute a set of sufficient conditions for our Condition 1.

A subtle difference between our approach and Grünwald and Mehta’s is that the bounds in the above two displays are required for all ; Condition 1 only deals with bounded away from . This difference arises because we directly target a bound on the Gibbs posterior probability, , an integration over , whereas Grünwald and Mehta, (2020) targets a bound on the Gibbs posterior mean of , an integration over all of . In Example 3.8 of van Erven et al., (2015) the global requirement in Grünwald and Mehta, (2020)’s condition is a disadvantage as van Erven et al., (2015) illustrate it creates some challenges in checking the bounds in the above two displays.

Besides Condition 1, other strategies for bounding in (7) have been employed in the literature. For example, empirical process techniques are used in Syring and Martin, (2017); Bhattacharya and Martin, (2022) to prove Gibbs posterior concentration in finite-dimensional applications. Generally, such proofs hinge on a uniform law of large numbers, which can be challenging to verify in non-parametric problems. Chernozhukov and Hong, (2003) require the stronger condition that in -probability. When it holds they immediately recover an in-probability bound on , irrespective of , but they do not obtain a finite-sample bound on . Their analysis is limited to finite-dimensional parameters and empirical risk functions that are smooth in a neighborhood of . This latter condition excludes important examples like the misclassification-error loss function; see Section 5.5.

There are situations in which Condition 1 does not hold, and we address this formally in Section 4. As an example, note that both Condition 1 and the witness condition used in Grünwald and Mehta, (2020) are closely related to a Bernstein-type condition relating the first two moments of the excess loss: for constants . For the “check” loss used in quantile estimation, the Bernstein condition is generally not satisfied and, consequently, neither Grünwald and Mehta’s witness condition nor our Condition 1—at least not in their original forms—can be verified. Similarly, if the excess loss, , a function of data , is heavy-tailed, then the moment-generating function bound in Condition 1 does not hold. For these cases, some modifications to the basic setup are required, which we present in Section 4.

3.2.2 Prior distribution

Generally, the prior must place enough mass on certain “risk-function” neighborhoods . This is analogous to the requirement in the Bayesian literature that the prior place sufficient mass on Kullback–Leibler neighborhoods of ; see, e.g., Shen and Wasserman, (2001) and Ghosal et al., (2000). Some version of the following prior bound is needed

| (10) |

for as in Condition 1. Lemma 1 in the appendix, along with (10), provides a lower probability bound on the denominator term defined in (7). That is,

| (11) |

where . Both the form of the risk-function neighborhoods and the precise lower bound in (10) depend on the concentration rate and the learning rate, so the results in Section 3.3 all require their own version of the above prior bound.

Grünwald and Mehta, (2020) only require bounds on the prior mass of the larger neighborhoods . Under their condition, we can derive a lower bound on similar to Lemma 1 in Martin et al., (2013). However, our proofs require an in-probability lower bound on , which in turn requires stronger prior concentration like that in (10). While there are important examples where the lower bounds on and are of different orders, none of the applications we consider here are of that type. Therefore, the stronger prior concentration condition in (10) does not affect the rates we derive for the examples in Section 5. Moreover, as discussed following the statement of Theorem 3.2 below, our finite-sample bounds are better than those in Grünwald and Mehta, (2020), a direct consequence of our method of proof that uses a smaller neighborhood .

3.3 Main results

In this section we present general results on Gibbs posterior concentration. Proofs can be found in Section 1 of the supplementary material. Our first result establishes Gibbs posterior concentration, under Condition 1 and a local prior condition, for sufficiently small constant learning rates.

Theorem 3.2.

Let be a vanishing sequence satisfying for a constant . Suppose the prior satisfies

| (12) |

for and for divergence measure , the same as above, and learning rate for some . If the loss function satisfies Condition 1, then the Gibbs posterior distribution in (5) has asymptotic concentration rate , with a large constant as in Definition 3.1.

For a brief sketch of the proof, bound the posterior probability of $A_n$ by

where is as above. Taking expectation of both sides, and applying (11) and the consequence (9) of Condition 1, we get

Then the right-hand side is generally of order . To compare with the results in Grünwald and Mehta, (2020, Example 2), their upper bound on is , which vanishes arbitrarily slowly.

For the case where the risk minimizer in (2) is not unique, certain modifications of the above argument can be made, similar to those in Kleijn and van der Vaart, (2006, Theorem 2.4). Roughly, to our Theorem 3.2 above, we would add the requirements that (12) and Condition 1 hold uniformly in , where is the set of risk minimizers. Then virtually the same proof shows that , where .

Theorem 3.2 is quite flexible and can be applied in a range of settings; see Section 5. However, one case in which it cannot be directly applied is when is bounded. For example, in sufficiently smooth finite-dimensional problems, we have and the target rate is . The difficulty is caused by the prior bound in (12), since it is impossible—at least with a fixed prior—to assign mass bounded away from 0 to a shrinking neighborhood of . One option is to add a logarithmic factor to the rate, i.e., take , so that is a power of . Alternatively, a refinement of the proof of Theorem 3.2 lets us avoid slowing down the rate.

Theorem 3.3.

Consider a finite-dimensional , taking values in for some . Suppose that the target rate is such that is bounded for some constant . If the prior satisfies

| (13) |

and if Condition 1 holds, then the Gibbs posterior distribution in (5), with any learning rate , has asymptotic concentration rate at with respect to any divergence satisfying and for any diverging, positive sequence in Definition 3.1.

The learning rate is critical to the Gibbs posterior’s performance, but in applications it may be challenging to determine the upper bound for which Condition 1 holds. For a simple illustration, suppose the excess loss is normally distributed with variance satisfying , a kind of Bernstein condition. In this case, Condition 1 holds for , with , if . Bernstein conditions can be verified in many practical examples, but the factor would rarely be known. Consequently, we need to be sufficiently small, but the meaning of “sufficiently small” depends on unknowns involving . However, any positive, vanishing learning rate sequence satisfies for all sufficiently large . And if vanishes arbitrarily slowly, then it has no effect on the Gibbs posterior concentration rate; see Section 5.6. All we require to accommodate a vanishing learning rate is a slightly stronger -dependent version of the prior concentration bound in (12).
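The normal illustration above can be checked directly from the Gaussian moment-generating function. The following is a sketch under stated assumptions, with symbols introduced here for illustration: $Z$ denotes the excess loss, $m > 0$ its mean, $\sigma^2$ its variance, $c > 0$ the Bernstein-type constant with $\sigma^2 \le c\,m$, and $\omega > 0$ the learning rate.

```latex
% Assumptions for this sketch: Z ~ N(m, sigma^2), with sigma^2 <= c m and m > 0.
\begin{align*}
P e^{-\omega Z}
  &= \exp\bigl\{ -\omega m + \tfrac{1}{2} \omega^2 \sigma^2 \bigr\}
     && \text{(Gaussian moment-generating function)} \\
  &\le \exp\bigl\{ -\omega m + \tfrac{1}{2} c \, \omega^2 m \bigr\}
     && \text{(Bernstein-type bound } \sigma^2 \le c\, m \text{)} \\
  &= \exp\bigl\{ -\omega m \bigl( 1 - \tfrac{1}{2} c \, \omega \bigr) \bigr\}.
\end{align*}
```

The exponent is a negative multiple of $\omega m$ exactly when $\omega < 2/c$, so the admissible range of learning rates is governed by $c$, a feature of the data-generating distribution that is typically unknown.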

Theorem 3.4.

Let be a vanishing sequence and be a learning rate sequence satisfying for a constant . Consider a Gibbs posterior distribution in (5) based on this sequence of learning rates. If the prior satisfies

| (14) |

and if Condition 1 holds for , then the Gibbs posterior distribution in (5), with learning rate sequence , has concentration rate at for a sufficiently large constant in Definition 3.1.

The proof of Theorem 3.4 is almost identical to that of Theorem 3.2, hence omitted. But to see the basic idea, we mention two key observations. First, since Condition 1 holds for for all sufficiently large , and since is deterministic, by the same argument producing the bound in (9), we get

Second, the same argument producing the bound in (11) shows that

Then the only difference between this situation and that in Theorem 3.2 is that, here, the numerator bound only holds for “sufficiently large ,” instead of for all . This makes Theorem 3.4 slightly weaker than Theorem 3.2, since it provides no finite-sample bounds.

When the constant learning rate is absorbed by and there is no difference between the prior bounds in (12) and (14). But, the prior probability assigned to the -neighborhood of does not depend on , so if it satisfies (12), then the only way it could also satisfy (14) is if is bigger than it would have been without a vanishing learning rate. In other words, Theorem 3.2 requires whereas Theorem 3.4 requires , which implies that for a given vanishing Theorem 3.2 potentially can accommodate a faster rate . Therefore, we see that a vanishing learning rate can slow down the Gibbs posterior concentration rate if it does not vanish arbitrarily slowly. There are applications that require the learning rate to vanish at a particular -dependent rate, and these tend to be those where adjustments like in Section 4 are needed; see Sections 5.3.2 and 5.5.2.

If we can use the data to estimate consistently, then it makes sense to choose a learning rate sequence depending on this estimator. Suppose our data-dependent learning rate satisfies with probability converging to as . For the conclusion of Theorem 3.2 holds for all sufficiently large ; and see Section 4.2 in the Supplementary Material. One advantage of this strategy is that it avoids using a vanishing learning rate, which may slow concentration.

Theorem 3.5.

Fix a positive deterministic learning rate sequence such that the conditions of Theorem 3.4 hold and as a result has asymptotic concentration rate . Consider a random learning rate sequence satisfying

| (15) |

Then , the Gibbs posterior distribution in (5) scaled by the random learning rate sequence , also has concentration rate at for a sufficiently large constant in Definition 3.1.

3.4 Checking Condition 1

Of course, Condition 1 is only useful if it can be checked in practically relevant examples. As mentioned in Section 3.2, Grünwald and Mehta’s strong central and witness conditions are sufficient for Condition 1. A pair of slightly stronger, but practically verifiable, conditions requires that the excess loss be sub-exponential with first and second moments that obey a Bernstein condition. For the examples we consider in Section 5 where Condition 1 can be used, these two conditions are convenient.

3.4.1 Bounded excess losses

For bounded excess losses, i.e., for all , Lemma 7.26 in Lafferty et al. (2010) gives:

| (16) |

Whether Condition 1 holds depends on the choice of and the relationship between and as defined by the Bernstein condition:

| (17) |

Denote the bracketed expression in the exponent of (16) by . When , the and functions are of the same order and, if , and then Condition 1 holds with . Since for small , it suffices to take the learning rate a sufficiently small constant.
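As a numerical companion to the discussion of (16) and (17), the sketch below assumes that the bracketed factor has the familiar Bennett-type form g(x) = (e^x - 1 - x)/x^2, evaluated at the product of the loss bound and the learning rate. This form, the linear Bernstein condition `variance <= bernstein_const * mean`, and all function and argument names are assumptions made for illustration, not quotations from the source.

```python
import math

def bennett_factor(x):
    """g(x) = (exp(x) - 1 - x) / x**2, with g(0) = 1/2 by continuity."""
    if abs(x) < 1e-4:
        # Taylor expansion avoids catastrophic cancellation near zero.
        return 0.5 + x / 6.0 + x * x / 24.0
    return (math.expm1(x) - x) / (x * x)

def condition1_constant(omega, loss_bound, bernstein_const):
    """K = 1 - omega * bernstein_const * g(loss_bound * omega).

    Under variance <= bernstein_const * mean, the exponent
    -omega * mean + omega**2 * variance * g(loss_bound * omega)
    is at most -K * omega * mean, so K > 0 is what is needed.
    """
    return 1.0 - omega * bernstein_const * bennett_factor(loss_bound * omega)
```

Because `bennett_factor` stays close to 1/2 for small arguments, `condition1_constant` is positive for every sufficiently small constant learning rate and turns negative once the learning rate is too large.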

On the other hand, when is larger than , i.e., the Bernstein condition holds with , Condition 1 requires that depend on and . Suppose . For all small enough , we can set and Condition 1 is again satisfied with .

The above strategies are implemented in the classification examples in Section 5.5, where we also discuss connections to the Tsybakov (Tsybakov, 2004) and Massart (Massart and Nedelec, 2006) conditions. For the former, see Theorem 22 and the subsequent discussion in Grünwald and Mehta (2020), where the learning rate ends up being a vanishing sequence depending on the concentration rate and the Bernstein exponent .

3.4.2 Sub-exponential excess losses

Unbounded but light-tailed loss differences may also satisfy Condition 1. Both sub-exponential and sub-Gaussian random variables (Boucheron et al., 2012, Sec. 2.3–4) admit an upper bound on their moment-generating functions. When the loss difference is sub-Gaussian,

| (18) |

for all , where the variance proxy may depend on . If is sub-exponential, then the above bound holds for all for some indexing the tail behavior of .

If is upper-bounded by for a constant , then the above bound can be rewritten as

| (19) |

Then Condition 1 holds if the loss difference is sub-Gaussian and sub-exponential if and , respectively.

In practice it may be awkward to assume is sub-exponential, but in certain problems it is sufficient to make such an assumption about features of , which may be more reasonable. See Section 5.4 for an application of this idea to a fixed-design regression problem where the fact that the response variable is sub-Gaussian implies the excess loss is itself sub-Gaussian.
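The distinction between the two tail classes can be checked on textbook examples. The sketch below uses a Rademacher variable, whose moment-generating function is bounded by a Gaussian-type bound for every argument, and a centered unit-rate exponential variable, for which the same kind of bound holds only on a restricted interval. The variance proxies (1 and 2) and the interval of radius 1/2 are standard choices for these two examples and are assumptions of this illustration, not quantities from the paper.

```python
import math

def rademacher_mgf(lam):
    """E[exp(lam * Z)] for Z uniform on {-1, +1}; equals cosh(lam)."""
    return math.cosh(lam)

def centered_exponential_mgf(lam):
    """E[exp(lam * (Z - 1))] for Z ~ Exponential(1); finite only for lam < 1."""
    if lam >= 1.0:
        return math.inf
    return math.exp(-lam) / (1.0 - lam)

def gaussian_type_bound(lam, variance_proxy):
    """exp(lam**2 * variance_proxy / 2), the sub-Gaussian-type upper bound."""
    return math.exp(0.5 * lam ** 2 * variance_proxy)
```

The Rademacher bound holds for all real arguments, whereas for the exponential example the bound with variance proxy 2 holds when the argument is at most 1/2 in absolute value and fails closer to 1. That restricted range is the feature that separates sub-exponential from sub-Gaussian behavior.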

4 Extensions

4.1 Locally sub-exponential type loss functions

In some cases the moment generating function bound in Condition 1 can be verified in a neighborhood of but not for all . For example, suppose is Lipschitz with respect to a metric with uniformly bounded Lipschitz constant , and that, for some ,

That is, the Bernstein condition (17) holds but for different values of depending on . This is the case for quantile regression; see Section 5.6. This class of problems, where the Bernstein exponent varies across , apparently has not been considered previously. For example, Grünwald and Mehta (2020) only consider cases where the Bernstein exponent is constant across the entire parameter space; consequently, their results assume is bounded (e.g., Grünwald and Mehta, 2020, Example 10), so (17) holds trivially with exponent .

To address this problem, our idea is simple. We propose to introduce a sieve that is large enough that we can safely assume it contains but small enough that the and functions can be appropriately controlled on it. Towards this, let be an increasing sequence of subsets of , indexed by the sample size . The “size” of will play an important role in the result below. While more general sieves are possible, to keep the notion of size concrete, let , so that size is controlled by the non-decreasing sequence , which would typically satisfy as . The metric in the definition of is at the user’s discretion; it would be chosen so that provides information that can be used to control the excess loss . This leads to the following straightforward modification of Condition 1.

Condition 2.

For with size controlled by , there exists an interval , a constant , and a sequence such that, for all and for all small ,

| (20) |

Aside from the restriction , the key difference between the bounds here and in Condition 1 is in the exponent. Instead of there being a constant , there is a sequence which is determined by the sieve’s size, controlled by . If the sequence is increasing, as we expect it will be, then we can anticipate that the overall concentration rate would be adversely affected—unless the learning rate is vanishing suitably fast. The following theorem explains this more precisely.

Condition 2 can be used exactly as Condition 1 to upper bound . However, the Gibbs posterior probability assigned to must be handled separately.

Theorem 4.1.

Let be a sequence of subsets for which the loss function satisfies Condition 2 for a sequence , a constant , and a learning rate for all sufficiently large . Let be a vanishing sequence satisfying . Suppose the prior satisfies

| (21) |

for some and the same , , and as above. Then the Gibbs posterior in (5) satisfies

Consequently, if

| (22) |

then the Gibbs posterior has concentration rate for all large constants in Definition 3.1.

There are two aspects of Theorem 4.1 that deserve further explanation. We start with the point about separate handling of . Condition (22) is easy to check for well-specified Bayesian posteriors, but these results do not carry over even to a misspecified Bayesian model. Of course, (22) can always be arranged by restricting the support of the prior distribution to , which is the suggestion in Kleijn and van der Vaart (2006, Theorem 2.3) for the Bayesian case and how we handle (22) for our infinite-dimensional Gibbs example in Section 5.6. However, as (22) suggests, this is not entirely necessary. Indeed, Kleijn and van der Vaart (2006) describe a trade-off between model complexity and prior support, and offer a more complicated form of their sufficient condition, which they do not explore in that paper. The fact is, without a well-specified likelihood, checking a condition like our (22) or Equation (2.13) in Kleijn and van der Vaart (2006) is a challenge, at least in infinite-dimensional problems. For finite-dimensional problems, it may be possible to verify (22) directly using properties of the loss functions. For example, we use convexity of the check loss function for quantile estimation to verify (22) in Section 5.1.

Next, how might one proceed to check Condition 2? Go back to the Lipschitz loss case at the start of this subsection. For a sieve as described above, if and are in , then is bounded by a multiple of . This, together with the Lipschitz property and (16), implies

where . Suppose the user chooses and such that ; then we can replace by a constant . If there exist functions and such that

| (23) |

then the above upper bound simplifies to

Then Condition 2 holds with , and it remains to balance the choices of and to achieve the best possible concentration rate ; see Section 5.6.

4.2 Clipping the loss function

When the excess loss is heavy-tailed, i.e., not sub-exponential, like in Section 5.3, its moment-generating function does not exist and, therefore, Condition 1 cannot be satisfied. In this section, we assume the loss function is non-negative or lower-bounded by a negative constant. In the latter case we can work with the shifted loss— minus its lower bound. Many practically useful loss functions are non-negative, including squared-error loss, which we cover in Section 5.3.

In such cases, it may be reasonable to replace the heavy-tailed loss with a clipped version

where is a diverging clipping sequence. Since is bounded in for each fixed , the strategy for checking Condition 1 described in Section 3.4.1 suggests that, for certain choices of , places vanishing mass on the sequence of sets , where . This makes the the (moving) target of the Gibbs posterior, instead of the fixed . On the other hand, if the loss function admits more than one finite moment, then for a corresponding, increasing clipping sequence the clipped risk neighborhoods of contain the risk neighborhoods of for all large , that is,

| (24) |

Then we further expect concentration of the clipped loss-based Gibbs posterior at with respect to the excess risk divergence at rate . Condition 3 and Theorem 4.2 below provide a set of sufficient conditions under which these expectations are realized and a concentration rate can be established.
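A minimal sketch of the clipping idea follows, assuming the clipped loss is the pointwise minimum of a non-negative loss and the current value of the clipping sequence. Squared-error loss is used as the example, and the function names and the argument `threshold` are hypothetical.

```python
def squared_error(y, prediction):
    """Squared-error loss, a non-negative loss that can be heavy-tailed."""
    return (y - prediction) ** 2

def clipped_loss(loss_value, threshold):
    """Clip a non-negative loss at a positive threshold (pointwise minimum)."""
    if loss_value < 0:
        raise ValueError("this sketch assumes a non-negative loss")
    return min(loss_value, threshold)

def clipped_empirical_risk(ys, predictions, threshold):
    """Average clipped squared-error loss over paired observations."""
    total = sum(clipped_loss(squared_error(y, p), threshold)
                for y, p in zip(ys, predictions))
    return total / len(ys)
```

By construction the clipped loss never exceeds the threshold, so for each fixed sample size it is a bounded loss, and the clipped empirical risk is never larger than the unclipped one.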

Condition 3.

Let be the loss. Define as the clipped loss and as a sieve, depending on constants .

1. For some , the sequence is finite for all .

2. There exists a sequence , and a sequence , such that for all sequences and for all sufficiently small ,

| (25) |

Theorem 4.2.

For a given loss and sieve , suppose that Condition 3 holds for ; and let be as defined in Condition 3.1, for . Let be a vanishing sequence such that , and suppose the prior satisfies

| (26) |

Then the Gibbs posterior in (5) based on the clipped loss satisfies

where . Consequently, if

then the Gibbs posterior has asymptotic concentration rate at with respect to the excess risk .

The setup here is more complicated than in previous sections, so some further explanation is warranted. First, we sketch out how Condition 3.1 leads to the critical property (24). A well-known bound on the expectation of a non-negative random variable, plus the moment bound in Condition 3.1, for , and Markov’s inequality leads to

| (27) |

This, in turn, implies . If , then the difference between the risk and the clipped risk is vanishing, so we can bound by a multiple of the excess risk for all sufficiently large . In the application we explore in Section 5.3, is related to the radius of the sieve , which grows logarithmically in , while is related to the polynomial tail behavior of , so that happens naturally if . Additional details are given in the proof of Theorem 4.2, which can be found in Appendix A.
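The tail-integral argument can be checked numerically. For a non-negative variable X, the gap between E[X] and E[min(X, t)] equals the integral of P(X > u) over u > t, and Markov's inequality applied to a finite moment of order s > 1 bounds that integral by E[X^s] t^(1-s) / (s - 1). The sketch below compares this bound with the exact gap for a Pareto variable with unit scale. The Pareto choice and all names are assumptions made for illustration only.

```python
def pareto_clipping_bias(t, shape):
    """Exact E[X] - E[min(X, t)] for X ~ Pareto(scale=1, shape), t >= 1, shape > 1."""
    return t ** (1.0 - shape) / (shape - 1.0)

def pareto_moment(s, shape):
    """E[X**s] for X ~ Pareto(scale=1, shape); finite only when s < shape."""
    if s >= shape:
        raise ValueError("moment of order s is infinite unless s < shape")
    return shape / (shape - s)

def markov_tail_bound(t, s, moment):
    """Integral over u in (t, inf) of Markov's bound moment / u**s, for s > 1."""
    return moment * t ** (1.0 - s) / (s - 1.0)
```

With shape 3 and s = 2, for instance, the exact gap is 1/(2t^2) while the bound is 3/t. Both vanish as the clip grows, and more finite moments give faster decay.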

Second, how might Condition 3.2 be checked? Start by defining the excess clipped risk and the corresponding variance . Now suppose it can be shown that there exists a function such that

is the size index of the sieve. This amounts to the excess clipped loss satisfying a Bernstein condition (17), with exponent . The excess clipped loss itself is (and maybe substantially smaller, depending on the form of ). So, we can apply the moment-generating function bound in (16) for bounded excess losses to get

where , for a constant , provided that . Now it is easy to see that the above display implies Condition 3.2.

For the rate calculation with respect to the excess risk, the decomposition of based on (27) implies we need , subject to the constraint , for as above. As we see in Section 5.3, , , and can often be taken as powers of , so the critical components determining the rate are and . The optimal rate depends on the upper bound of the excess clipped loss, which, in the worst case, equals . To apply (16) we need the learning rate to vanish like the reciprocal of this bound, so we take . Then, we determine to satisfy and , up to a log term. The clipping sequence is sufficient, and yields the rate , modulo log terms.

5 Examples

This section presents several illustrations of the general theory presented in Sections 3 and 4. The strategies laid out in Sections 3.4, 4.1, and 4.2 are put to use in the following examples to verify our sufficient conditions for Gibbs posterior concentration. All proofs of results in this section can be found in Appendix C.

5.1 Quantile regression

Consider making inferences on the conditional quantile of a response given a predictor . We model this quantile, denoted , as a linear combination of functions of , that is, , for a fixed, finite dictionary of functions and where is a coefficient vector with . Here we assume the model is well-specified so the true conditional quantile is for some . The standard check loss for quantile estimation is

| (28) |

We show minimizes in the proof of Proposition 1 below. It can be shown that is -Lipschitz, with , and convex, so the strategy in Section 4.1 is helpful here for verifying Condition 2, and Lemma 2 in Section B can be used to verify (22).
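For readers who want to experiment, here is a short Python sketch of the standard check (pinball) loss and its empirical risk in the simplest intercept-only case. The intercept-only simplification and the function names are assumptions of this illustration; the Lipschitz constant computed is the one with respect to the residual.

```python
def check_loss(residual, tau):
    """Check (pinball) loss rho_tau(u) = u * (tau - 1{u < 0})."""
    return residual * (tau - (1.0 if residual < 0 else 0.0))

def empirical_check_risk(theta, ys, tau):
    """Average check loss when the quantile is modeled by a single constant theta."""
    return sum(check_loss(y - theta, tau) for y in ys) / len(ys)

def lipschitz_constant(tau):
    """rho_tau is Lipschitz in its argument with constant max(tau, 1 - tau)."""
    return max(tau, 1.0 - tau)
```

With `tau = 0.5` the empirical risk is half the mean absolute deviation, so it is minimized at a sample median; other values of `tau` tilt the penalty toward over- or under-prediction.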

Inference on quantiles is a challenging problem from a Bayesian perspective because the quantile is well-defined irrespective of any particular likelihood. Sriram et al. (2013) interpret the check loss as the negative log-density of an asymmetric Laplace distribution and construct a corresponding pseudo-posterior using this likelihood, but their posterior is effectively a Gibbs posterior as in Definition 3 of the main article.

With a few mild assumptions about the underlying distribution , our general result in Theorem 2 can be used to establish Gibbs posterior concentration at rate .

Assumption 1.

1. The marginal distribution of is such that exists and is positive definite;

2. the conditional distribution of , given , has at least one finite moment and admits a continuous density such that is bounded away from zero for -almost all ; and

3. the prior has a density bounded away from 0 in a neighborhood of .

Proposition 1.

Under Assumption 1, if the learning rate is sufficiently small, then the Gibbs posterior concentrates at with rate with respect to .
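To visualize what concentration means here, the sketch below computes a Gibbs posterior for a single quantile on a finite grid, with weights proportional to the exponential of minus the learning rate times the sample size times the empirical check risk. The uniform prior on the grid, the intercept-only model, and the function names are simplifying assumptions of this illustration, not the setting of Proposition 1.

```python
import math

def check_loss(residual, tau):
    """Check loss rho_tau(u) = u * (tau - 1{u < 0})."""
    return residual * (tau - (1.0 if residual < 0 else 0.0))

def gibbs_posterior_on_grid(ys, grid, tau, omega):
    """Normalized Gibbs posterior weights on a grid under a uniform prior."""
    n = len(ys)
    log_w = []
    for theta in grid:
        risk = sum(check_loss(y - theta, tau) for y in ys) / n
        log_w.append(-omega * n * risk)
    shift = max(log_w)  # subtract the maximum for numerical stability
    w = [math.exp(v - shift) for v in log_w]
    total = sum(w)
    return [v / total for v in w]
```

Running this with a fixed learning rate and increasing sample sizes shows the weights piling up in an ever narrower band around the sample quantile, which is the qualitative behavior the proposition quantifies.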

5.2 Area under receiver operator characteristic curve

The receiver operator characteristic (ROC) curve and corresponding area under the curve (AUC) are diagnostic tools often used to judge the effectiveness of a binary classifier. Suppose a binary classifier produces a score characterizing the likelihood an individual belongs to Group versus Group . We can estimate an individual’s group by where different values of the cutoff score may provide more or less accurate estimates. Suppose and are independent scores corresponding to individuals from Group and Group , respectively. The specificity and sensitivity of the test of that rejects when are defined by and . When the type 1 and 2 errors of the test are equally costly the optimal cutoff is the value of maximizing , or, in other words, the test maximizing the sum of power and one minus the type 1 error probability. The ROC is the parametric curve in which provides a graphical summary of the tradeoff between Type 1 and Type 2 errors for different choices of the cutoff. The AUC, equal to , gives an overall numerical summary of the quality of the binary classifier, independent of the choice of threshold.

Our goal is to make posterior inferences on the AUC, but the usual Bayesian approach immediately runs into the kinds of problems we see in the examples in Section 5.1 and later in Section 6. The parameter of interest is one-dimensional, but it depends on a completely unknown joint distribution . Within a Bayesian framework, the options are to fix a parametric model for this joint distribution and risk model misspecification or work with a complicated nonparametric model. Wang and Martin (2020) constructed a Gibbs posterior for the AUC that avoids both of these issues.

Suppose and denote random samples of size and , respectively, of binary classifier scores for individuals belonging to Groups 0 and 1, and denote . Wang and Martin (2020) consider the loss function

| (29) |

for which the risk satisfies . If we interpret as a function of , then it makes sense to write the empirical risk function as

| (30) |

Note the minimizer of the empirical risk is equal to

| (31) |
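The empirical risk minimizer can be illustrated with a few lines of Python. The sketch assumes that the loss is the squared difference between the parameter and the indicator that a Group 1 score exceeds a Group 0 score, so that the minimizer is the proportion of correctly ordered pairs (a Mann-Whitney type statistic). Both the form of the loss and the direction of the comparison are assumptions of this illustration.

```python
def empirical_auc(scores_group0, scores_group1):
    """Fraction of (group 0, group 1) pairs in which the group-1 score is larger."""
    m, n = len(scores_group0), len(scores_group1)
    wins = sum(1 for x in scores_group0 for y in scores_group1 if x < y)
    return wins / (m * n)

def empirical_risk(theta, scores_group0, scores_group1):
    """Average of (theta - 1{x < y})**2 over all pairs (assumed loss form)."""
    m, n = len(scores_group0), len(scores_group1)
    total = sum((theta - (1.0 if x < y else 0.0)) ** 2
                for x in scores_group0 for y in scores_group1)
    return total / (m * n)
```

Because the empirical risk is a quadratic function of `theta`, its minimizer is the average of the pairwise indicators, which is exactly what `empirical_auc` returns.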

Wang and Martin (2020) prove concentration of the Gibbs posterior at rate under the following assumption.

Assumption 2.

1. The sample sizes satisfy .

2. The prior distribution has a density function that is bounded away from zero in a neighborhood of .

Wang and Martin (2020) note that their concentration result holds for fixed learning rates and deterministic learning rates that vanish more slowly than . As discussed in Syring and Martin (2019), one motivation for choosing a particular learning rate is to calibrate Gibbs posterior credible intervals to attain a nominal coverage probability, at least approximately. With this goal in mind, Wang and Martin (2020) suggest the following random learning rate. Define the covariances

| (32) |

Wang and Martin (2020) note the asymptotic covariance of is given by

| (33) |

and that the Gibbs posterior variance can be made to match this, at least asymptotically, by using the random learning rate

| (34) |

where and are the corresponding empirical covariances:

| (35) |

The hope is that the Gibbs posterior with the learning rate has asymptotically calibrated credible intervals. It turns out that the concentration result in Wang and Martin (2020), along with Theorem 4, implies that the Gibbs posterior with a slightly adjusted version of the learning rate also concentrates at rate . The adjustment to the learning rate has the effect of slightly widening Gibbs posterior credible intervals, so their asymptotic calibration is not adversely affected.

Proposition 2.

Suppose Assumption 2 holds and let denote any diverging sequence. Then, the Gibbs posterior with learning rate concentrates at rate with respect to .

5.3 Finite-dimensional regression with squared-error loss

Consider predicting a response using a linear function by minimizing the sum of squared-error losses , with , over a parameter space . Suppose the covariate-response variable pairs are iid with taking values in a compact subset . To complement this example we present a more flexible, non-parametric regression problem in Section 5.4 below. For the current example, we focus on how the tail behavior of the response variable affects posterior concentration; see Assumptions 3 and 4 below.

5.3.1 Light-tailed response

When the response is sub-exponential, so is the excess loss, and by the argument outlined in Section 3.4 we can verify Condition 1 for and with . Then, the Gibbs posterior distribution concentrates at rate as a consequence of Theorem 3.3.

Assumption 3.

1. The response , given , is sub-exponential with parameters for all ;

2. is bounded and its marginal distribution is such that exists and is positive definite with eigenvalues bounded away from ; and

3. the prior has a density bounded away from in a neighborhood of .

Proposition 3.

If Assumption 3 holds, and the learning rate is a sufficiently small constant, then the Gibbs posterior concentrates at rate with respect to .

5.3.2 Heavy-tailed response

As discussed in Section 3.4, Condition 1 can be expected to hold when the response is light-tailed, but not when it is heavy-tailed. However, for a capped loss, , with increasing , and for a suitable sieve on the parameter space, we can show concentration of the Gibbs posterior at the risk minimizer via the argument given in Section 4.2.

Assumption 4.

The marginal distribution of satisfies for .

Define a sieve by for an increasing sequence , e.g., . The moment condition in Assumption 4 implies three important properties of for :

• The excess clipped loss satisfies .

• The risk and clipped risk are equivalent up to an error of order .

• The clipped loss satisfies .

These three properties can be used to verify Condition 3 using the argument sketched out in Section 4.2 and to verify the prior bound in (26) required to apply Theorem 4.2.

Proposition 4.

Proposition 4 continues to hold if we replace in the definitions of and by satisfying . That means we only need an accurate lower bound for to construct a consistent Gibbs posterior for , albeit with a slower concentration rate.

It is clear the clipping sequence may bias the clipped risk minimizer. For a given clip, the bias is less for light-tailed (large ) losses compared to heavy-tailed losses because a loss in excess of the clip is rarer for the former. This explains why the clipping sequence decreases in .

Note that our can be compared to the rate derived in Grünwald and Mehta (2020, Example 11). Indeed, up to log factors, their rate, , is smaller than ours for but larger for . That is, their rate is slightly better when the response has between 2 and 4 moments, while ours is better when the response has 4 or more moments. Also, their result assumes that the parameter space is fixed and bounded, whereas we avoid this assumption with a suitably chosen sieve.

5.4 Mean regression curve

Let be independent, where the marginal distribution of depends on a fixed covariate through the mean, i.e., the expected value of is , . For simplicity, set , corresponding to an equally-spaced design. Then the goal is estimation of the mean function , which resides in a specified function class defined below.

A natural starting point is to define an empirical risk based on squared error loss, i.e.,

| (36) |

However, any function that passes through the observations would be an empirical risk minimizer, so some additional structure is needed to make the solution to the empirical risk minimization problem meaningful. Towards this, as is customary in the literature, we parametrize the mean function as a linear combination of a fixed set of basis functions, . That is, we consider only functions , where

| (37) |

Note that we do not assume that is of the specified form; more specifically, we do not assume existence of a vector such that . The idea is that the structure imposed via the basis functions will force certain smoothness, etc., so that minimization of the risk over this restricted class of functions would identify a suitable estimate.

This structure changes the focus of our investigation from the mean function to the -vector of coefficients . We now proceed by first constructing a Gibbs posterior for and then obtain the corresponding Gibbs posterior for by pushing the former through the mapping . In particular, define the empirical risk function in terms of :

| (38) |

where and where is the matrix whose entry is , assumed to be positive definite; see below. Given a prior distribution for —which determines a prior for through the aforementioned mapping—we can first construct the Gibbs posterior for as in (5) with the pseudo-likelihood . If we write for this Gibbs posterior for , then the corresponding Gibbs posterior for is given by

| (39) |

Therefore, the concentration properties of are determined by those of .

We can now proceed very much like we did before, but the details are slightly more complicated in the present inid case. Taking expectation with respect to the joint distribution of is, as usual, the average of marginal expectations; however, since the data are not iid, these marginal expectations are not all the same. Therefore, the expected empirical risk function is

| (40) |

where is the marginal distribution of and where is the row of . Since the expected empirical risk function depends on , through , so too does the risk minimizer

| (41) |

If has finite variance, then differs from by only an additive constant not depending on , and this becomes a least-squares problem, with solution

| (42) |

where is the -vector . Our expectation is that the Gibbs posterior for will suitably concentrate around , which implies that the Gibbs posterior for will suitably concentrate around . Finally, if the above holds and the basis representation is suitably flexible, then will be close to in some sense and, hence, we achieve the desired concentration.

The flexibility of the basis representation depends on the dimension . Since need not be of the form , a good approximation will require that be increasing with . How fast must increase depends on the smoothness of . Indeed, if has smoothness index (made precise below), then many systems of basis functions—including Fourier series and B-splines—have the following approximation property: there exists an such that for every

| (43) |

Then the idea is to set the approximation error in (43) equal to the target rate of convergence, which depends on and on , and then solve for .
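The balancing step can be sketched numerically. The code below assumes an approximation error of order J^(-alpha), for J basis functions and smoothness index alpha, and an estimation-type error of order (J/n)^(1/2), then equates the two. The second term and all symbol and function names are assumptions of this illustration; the source only states that the target rate depends on the number of basis functions and the sample size.

```python
import math

def approximation_error(J, alpha):
    """Assumed order of the basis approximation error, J**(-alpha)."""
    return J ** (-alpha)

def estimation_error(J, n):
    """Assumed order of the estimation error for J coefficients, sqrt(J / n)."""
    return math.sqrt(J / n)

def balanced_dimension(n, alpha):
    """Solve J**(-alpha) = sqrt(J / n), giving J = n**(1 / (2*alpha + 1))."""
    return n ** (1.0 / (2.0 * alpha + 1.0))

def balanced_rate(n, alpha):
    """Common value of both errors at the balancing J: n**(-alpha / (2*alpha + 1))."""
    return n ** (-alpha / (2.0 * alpha + 1.0))
```

Under these assumptions, smoother targets (larger `alpha`) need fewer basis functions and give a rate closer to the parametric root-n rate. In practice the dimension would be rounded to an integer.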

For Gibbs posterior concentration at or near the optimal rate, we need the prior distribution for to be sufficiently concentrated in a bounded region of the -dimensional space in the sense that

| (44) |

for the same as in (43), for a small constant , and for all small .

Assumption 5.

1. The function belongs to a class of Hölder smooth functions parametrized by and . That is, satisfies

where the superscript “” means derivative and is the integer part of ;

2. for a given , the response is sub-Gaussian, with variance and variance proxy (both of which can depend on ) uniformly upper bounded by ;

3. the eigenvalues of are bounded away from zero and ;

4. the approximation property (43) holds; and

5. the prior for satisfies (44) and has a bounded density on the -dimensional parameter space.

The bounded variance assumption is implied, for example, if the variance of is a smooth function of in , which is rather mild. And assuming the eigenvalues of are bounded is not especially strong since, in many cases, the basis functions would be orthonormal. In that case, the diagonal and off-diagonal entries of would be approximately 1 and 0, respectively, and the bounds are almost trivial. The conditions on the prior distribution are weak; as we argue in the proof, they can be satisfied by taking the joint prior density to be the product of independent prior densities on the components of , where each component density is strictly positive.

Proposition 5.

If Assumption 5 holds, and the learning rate is a sufficiently small constant, then the Gibbs posterior for concentrates at with rate with respect to the empirical norm , where .

We should emphasize that the quantity of interest, , is high-dimensional, and the rate given in Proposition 5 is optimal for the given smoothness ; there are not even any nuisance logarithmic terms.

The simpler fixed-dimensional setting with constant can be analyzed similarly as above. In that case, we can simultaneously weaken the requirement on the response in Assumption 5.2 from sub-Gaussian to sub-exponential, and strengthen the conclusion to a root- concentration rate.

5.5 Binary classification

Let be a binary response variable and a -dimensional predictor variable. We consider classification rules of the form

| (45) |

and the goal is to learn the optimal vector, i.e., , where is the misclassification error probability, and is the joint distribution of . This optimal is such that if and if , where is the conditional probability function. Below we construct a Gibbs posterior distribution that concentrates around this optimal at rate that depends on certain local features of that function.

Suppose our data consists of iid copies , , of from , and define the empirical risk function

| (46) |

In addition to the empirical risk function, we need to specify a prior, and here the prior plays a significant role in the Gibbs posterior concentration results.

A unique feature of this problem, which makes the prior specification a little different than in a linear regression problem, is that the scale of does not affect classification performance, e.g., replacing with gives exactly the same classification performance. To fix a scale, we follow Jiang and Tanner (2008), and

• assume that the component of is of known importance and always included in the classifier,

• and constrain the corresponding coefficient, , to take values .

This implies that the and components of the vector should be handled very differently in terms of prior specification. In particular, is a scalar with a discrete prior—which we take here to be uniform on —and , being potentially high-dimensional, will require setting-specific prior considerations.
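The empirical misclassification risk and the scale invariance noted above can be sketched as follows. The sketch assumes labels coded as 0 and 1 and a classifier that predicts 1 when the linear score is positive; this coding, the decision rule, and the function names are assumptions of the illustration.

```python
def classify(x, theta):
    """Linear classifier: predict 1 when the inner product of x and theta is positive."""
    return 1 if sum(xi * ti for xi, ti in zip(x, theta)) > 0 else 0

def misclassification_rate(xs, ys, theta):
    """Empirical risk: proportion of observations whose label is predicted wrongly."""
    errors = sum(1 for x, y in zip(xs, ys) if classify(x, theta) != y)
    return errors / len(ys)

def rescale(theta, factor):
    """Multiply every coefficient by the same factor."""
    return [factor * t for t in theta]
```

Multiplying the coefficient vector by any positive factor leaves every predicted label unchanged, so the empirical risk cannot identify the scale. Fixing the magnitude of one coefficient, as described above, removes this ambiguity.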

The characteristic that determines the difficulty of a classification problem is the distribution of or, more specifically, how concentrated is near the value , where one could do virtually no worse by classifying according to a coin flip. The set is called the margin, and conditions that control the concentration of the distribution of around are generally called margin conditions. Roughly, if has a “jump” or discontinuity at the margin, then classification is easier and does not have to be so tightly concentrated around . On the other hand, if is smooth at the margin, then the classification problem is more challenging in the sense that more data near the margin is needed to learn the optimal classifier, hence, tighter concentration of near is required.

In Sections 5.5.1 and 5.5.2 that follow, we consider two such margin conditions, namely, the so-called Massart and Tsybakov conditions. The first is relatively strong, corresponding to a jump in at the margin, and the result we establish in Proposition 6 is accordingly strong. In particular, we show that the Gibbs posterior achieves the optimal and adaptive concentration rate in a class of high-dimensional problems () under a certain sparsity assumption on . The Tsybakov margin condition we consider below is weaker than the first, in the sense that can be smooth near the “” boundary and, as expected, the Gibbs posterior concentration rate result is not as strong as the first.

5.5.1 Massart’s noise condition

Here we allow the dimension of the coefficient vector to exceed the sample size , i.e., we consider the so-called high-dimensional problem with . Accurate estimation and inference are not possible in high-dimensional settings without imposing some low-dimensional structure on the inferential target, . Here, as is typical in the literature on high-dimensional inference, we assume that is sparse in the sense that most of its entries are exactly zero, which corresponds to most of the predictor variables being irrelevant to classification. Below we construct a Gibbs posterior distribution for that concentrates around the unknown sparse at a (near) optimal rate.

Since the sparsity in is crucial to the success of any statistical method, the prior needs to be chosen carefully so that sparsity is encouraged in the posterior. The prior for will treat and as independent, and the prior for will be defined hierarchically. Start with the reparametrization , where denotes the configuration of zeros and non-zeros in the vector, and denotes the -vector of non-zero values. Following Castillo et al. (2015), for the marginal prior for , we take

where the is a prior for the size and the first factor on the right-hand side is the uniform prior for of the given size . Various choices of are possible, but here we take the complexity prior , a truncated geometric density, where and are fixed (and here arbitrary) hyperparameters; a similar choice is also made in Martin et al. (2017). Second, for the conditional prior of , given , again following Castillo et al. (2015), we take its density to be

a product of many Laplace densities with rate to be specified.
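To make the hierarchical construction above more concrete, here is a minimal sketch of drawing one coefficient vector from a prior of this type: a size from a truncated geometric-type complexity prior, a configuration chosen uniformly among those of that size, and independent Laplace values on the non-zero entries. The names `q`, `a`, `c`, `lam`, both function names, and the specific form `f(s) ∝ (c * q**a)**(-s)` are assumptions made for illustration; they are in the spirit of the complexity priors in Castillo et al. (2015) but are not claimed to match the exact prior used here.

```python
import numpy as np

def complexity_prior_pmf(q, a=1.0, c=2.0):
    """Truncated geometric-type pmf on {0, ..., q}.

    Assumed illustrative form: f(s) proportional to (c * q**a)**(-s).
    """
    s = np.arange(q + 1)
    log_w = -s * (np.log(c) + a * np.log(q))
    w = np.exp(log_w - log_w.max())  # subtract the max for numerical stability
    return w / w.sum()

def sample_sparse_coefficients(q, lam, rng, a=1.0, c=2.0):
    """Draw (beta, support) from the hierarchical sparse prior sketch."""
    pmf = complexity_prior_pmf(q, a, c)
    s = int(rng.choice(q + 1, p=pmf))          # size of the configuration
    support = np.sort(rng.permutation(q)[:s])  # uniform over subsets of size s
    beta = np.zeros(q)
    # Laplace density with rate lam corresponds to scale 1 / lam
    beta[support] = rng.laplace(loc=0.0, scale=1.0 / lam, size=s)
    return beta, support

rng = np.random.default_rng(0)
beta, support = sample_sparse_coefficients(q=50, lam=1.0, rng=rng)
```

Because the assumed complexity prior decays geometrically in the size, most draws have very few non-zero entries, which is the sense in which such a prior encourages sparsity.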

Assumption 6.

1. The marginal distribution of is compactly supported, say, on .

2. The conditional distribution of , given , has a density with respect to Lebesgue measure that is uniformly bounded.

3. The rate parameter in the Laplace prior satisfies .

4. The optimal is sparse in the sense that , where is the configuration of non-zero entries in , and .

5. There exists such that .

The first two parts of Assumption 6 correspond to Conditions and in Jiang and Tanner (2008), and Assumption 6.5 above is precisely the margin condition imposed in Equation (5) of Massart and Nedelec (2006); see also Mammen and Tsybakov (1999) and Koltchinskii (2006). This concisely states that there is either a jump in the function at the margin or that the marginal distribution of is not supported near the margin; in either case, there is separation between the and cases, which makes the classification problem relatively easy.

With these assumptions, we get the following Gibbs posterior asymptotic concentration rate result. Note that, in order to preserve the high-dimensionality in the asymptotic limit, we let the dimension increase with the sample size . So, the data sequence actually forms a triangular array but, as is common in the literature, we suppress this formulation in our notation.

Proposition 6.

Consider a classification problem as described above, with . Under Assumption 6, the Gibbs posterior, with sufficiently small constant learning rate, concentrates at rate with respect to .

This result shows that, even in very high dimensional settings, the Gibbs posterior concentrates on the optimal rule at a fast rate. For example, suppose that the dimension is polynomial in , i.e., for any , while the “effective dimension,” or complexity, is sub-linear, i.e., for . Then we get that has Gibbs posterior probability converging to 1 as . That is, rates better than can easily be achieved, and even arbitrarily close to is possible. Compare this to the rates in Propositions 2–3 in Jiang and Tanner (2008), also in terms of risk difference, that cannot be faster than . Further, the concentration rate in Proposition 6 is nearly the optimal rate corresponding to an oracle who has knowledge of . That is, the Gibbs posterior concentrates at nearly the optimal rate adaptively with respect to the unknown complexity.

5.5.2 Tsybakov’s margin condition

Next, we consider classification under the more general Tsybakov margin condition (e.g., Tsybakov, 2004). The problem setup is the same as above, except that here we consider the simpler low-dimensional case, with the number of predictors small relative to . Since the dimension is no longer large, prior specification is much simpler. We will continue to assume, as before, that the component of has a constrained coefficient , to which we assign a discrete uniform prior. Otherwise, we simply require that the prior have a (marginal) density, , for , with respect to Lebesgue measure on .

Assumption 7.

1. The marginal prior density for is continuous and bounded away from 0 near .

2. There exists and such that for all sufficiently small .

The concentration of the marginal distribution of around controls the difficulty of the classification problem, and Assumption 7.2 concerns exactly this. Note that smaller implies is less concentrated around , so we expect our Gibbs posterior concentration rate, say, , to be a decreasing function of . The following result confirms this.

Proposition 7.

Suppose Assumption 7 holds and, for the specified , let

Then the Gibbs posterior distribution, with learning rate , concentrates at rate with respect to .

Note that Massart’s condition from Section 5.5.1 corresponds to Tsybakov’s condition above with . In that case, the Gibbs posterior concentration rate we recover from Proposition 7 is , which is achieved with a suitable constant learning rate. This is within a logarithmic factor of the optimal rate for finite-dimensional problems. Moreover, for both the and cases, we expect that the logarithmic factor could be removed following an approach like that described in Theorem 3.3, but we do not explore this possible extension here.

We should emphasize that this case is unusual because the learning rate depends on the (likely unknown) smoothness exponent . This means the rate in Proposition 7 is not adaptive to the margin. However, this dependence is not surprising, as it also appears in Grünwald and Mehta (2020, Section 6). The reason the learning rate depends on is that the Tsybakov margin condition in Assumption 7.2 implies the Bernstein condition in (17) takes the form

Therefore, in order to verify Condition 1 using the strategy in Section 3.4, we need and to have the same order when . This requires that the learning rate depend on , in particular, .

5.6 Quantile regression curve

In this section we revisit inference on a conditional quantile, covered in Section 5.1. The conditional quantile of a response given a covariate is modeled by a linear combination of basis functions :

In Section 5.1, we made the rather restrictive assumption that the true conditional quantile function belonged to the span of a fixed set of basis functions. In practice, it may not be possible to identify such a set of functions, which is why we considered using a sample-size dependent sequence of sets of basis functions in Section 5.4 to model a smooth function, . When the degree of smoothness, , of is known we can choose the number of basis functions to use in order to achieve the optimal concentration rate. But, in practice, may not be known, which creates a challenge because, as mentioned, the number of terms needed in the basis function expansion modeling depends on this unknown degree of smoothness.

To achieve optimal concentration rates adaptive to unknown smoothness, the choice of prior is crucial. In particular, the prior must support a very large model space in order to guarantee it places sufficient mass near . Our approximation of by a linear combination of basis functions suggests a hierarchical prior for , similar to Section 5.5.1, with a marginal prior for the number of basis functions and a conditional prior for , given . The resulting prior for is given by a mixture,

| (47) |

Then, in order for to place sufficient mass near , it is sufficient that the marginal and conditional priors satisfy the following conditions: the marginal prior for satisfies for some for every

| (48) |

and, the conditional prior for given satisfies for every

| (49) |

for the same as in (43) and for some constant for all sufficiently small . Fortunately, many simple choices of are satisfactory for obtaining adaptive concentration rates, e.g., a Poisson prior on and a -dimensional normal conditional prior for , given ; see Conditions (A1) and (A2) and Remark 1 in Shen and Ghosal (2015). Besides the conditions in (48) and (49), we need to make a minor modification of to make it suitable for our proof of Gibbs posterior concentration; see below.

Similar to our choice in Section 5.1 we link the data and parameter through the check loss function

where . See Koltchinskii (1997) for a proof that is minimized at . It is straightforward to show the check loss is -Lipschitz with . From there, if were bounded, we could use Condition 1 to compute the concentration rate. However, to handle an unbounded response, we need the flexibility of Condition 2 and Theorem 4.1. To verify (22), we found it necessary to limit the complexity of the parameter space by imposing a constraint on the prior distribution, namely, that the sequence of prior distributions places all its mass on the set for some diverging sequence ; see Assumption 8.4. This constraint implies the prior depends on , and we refer to this sequence of prior distributions by . Given the hierarchical prior in (47), one straightforward way to define a sequence of prior distributions satisfying the constraint is to restrict and renormalize to , i.e., define as

| (50) |

This particular construction of in (50) is not the only way to define a sequence of priors satisfying the restriction to , but it is convenient. That is, if places mass on a sup-norm neighborhood of (see the proof of Proposition 8), then, by construction, in (50) places at least mass on the same neighborhood.
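One simple way to draw from a restricted-and-renormalized prior of this kind is rejection sampling: draw from the unrestricted hierarchical prior and keep the draw only if it lands in the constraint set. The sketch below assumes, purely for illustration, a shifted Poisson prior on the number of basis functions, standard normal coefficients, a cosine basis on [0, 1], and a sup-norm bound `delta` that is checked on a finite grid (so the constraint is only approximated). The names `cosine_basis`, `sample_restricted_prior`, `mean_J`, and `delta` are hypothetical.

```python
import numpy as np

def cosine_basis(x, J):
    """Evaluate the first J cosine basis functions on [0, 1] (illustrative choice)."""
    j = np.arange(J)
    return np.cos(np.pi * np.outer(x, j))  # shape (len(x), J)

def sample_restricted_prior(delta, rng, mean_J=3.0, grid_size=201, max_tries=10_000):
    """Rejection sampler for the prior restricted to functions bounded by delta.

    Accepted draws follow the unrestricted prior conditioned on the
    (grid-approximated) constraint set, i.e., restricted and renormalized.
    """
    grid = np.linspace(0.0, 1.0, grid_size)
    for _ in range(max_tries):
        J = 1 + int(rng.poisson(mean_J))     # number of basis functions, at least 1
        theta = rng.normal(size=J)           # coefficients given J
        f_grid = cosine_basis(grid, J) @ theta
        if np.max(np.abs(f_grid)) <= delta:  # grid approximation of the sup-norm
            return J, theta
    raise RuntimeError("no draw accepted; delta may be too small")

J, theta = sample_restricted_prior(delta=5.0, rng=np.random.default_rng(1))
```

The acceptance probability equals the unrestricted prior mass of the constraint set, so the sampler becomes more efficient as the bound grows.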

We should emphasize that this restriction of the prior to is only a technical requirement needed for the proof, but it is not unreasonable. Since the true function is bounded, it is eventually in the growing support of the prior . Similar assumptions have been used in the literature on quantile curve regression; for example, Theorem 6 in Takeuchi et al. (2006) requires that the parameter space consists only of bounded functions, which is a stricter assumption than ours here.
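Returning to the check loss that links data and parameter in this section, the following sketch implements it in its standard form and checks a Lipschitz bound numerically. The names `check_loss`, `tau`, and `lipschitz_bound` are illustrative. The bound `max(tau, 1 - tau)` follows because the loss is piecewise linear in the fitted value with slopes `-tau` and `1 - tau`; whether this is the exact constant intended in the text above is an assumption.

```python
import numpy as np

def check_loss(y, fx, tau):
    """Check (pinball) loss: (y - fx) * (tau - 1{y < fx})."""
    u = np.asarray(y, dtype=float) - np.asarray(fx, dtype=float)
    return u * (tau - (u < 0))

tau = 0.25
lipschitz_bound = max(tau, 1.0 - tau)

# Numerical check of |loss(y, f1) - loss(y, f2)| <= bound * |f1 - f2|
rng = np.random.default_rng(0)
y = rng.normal(size=1000)
f1 = rng.normal(size=1000)
f2 = rng.normal(size=1000)
gap = np.abs(check_loss(y, f1, tau) - check_loss(y, f2, tau))
lipschitz_ok = bool(np.all(gap <= lipschitz_bound * np.abs(f1 - f2) + 1e-12))
```

Underestimation and overestimation are penalized asymmetrically: with `tau = 0.25`, a residual of `2` costs `0.5` while a residual of `-2` costs `1.5`.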

Assumption 8.

1. The function is Hölder smooth with parameters (see Assumption 5.1);

2. the basis functions satisfy the approximation property in (43);

3. the covariate space is compact and there exists a such that the conditional density of , given , is continuous and bounded away from by a constant in the interval for every ; and,

4.

Proposition 8.

Define . If the learning rate satisfies for some and Assumption 8 holds, then the Gibbs posterior distribution concentrates at rate with respect to .

Since the mathematical statement does not give sufficient emphasis to the adaptation feature, we should follow up on this point. That is, is the optimal rate (Shen and Ghosal, 2015) for estimating an -Hölder smooth function, and it is not difficult to construct an estimator that achieves this, at least approximately, if is known. However, is unknown in virtually all practical situations, so it is desirable for an estimator, Gibbs posterior, etc. to adapt to the unknown . The concentration rate result in Proposition 8 says that the Gibbs posterior achieves nearly the optimal rate adaptively in the sense that it concentrates at nearly the optimal rate as if were known.

The concentration rate in Proposition 8 depends on the complexity of the parameter space as determined by in Assumption 8.4. For example, if the sup-norm bound on were known, then and the learning rate could be taken as constants and the rate would be optimal up to a factor. On the other hand, if greater complexity is allowed, e.g., for some power , then the concentration rate takes on an additional factor, which is not a serious concern.

6 Application: personalized MCID

6.1 Problem setup

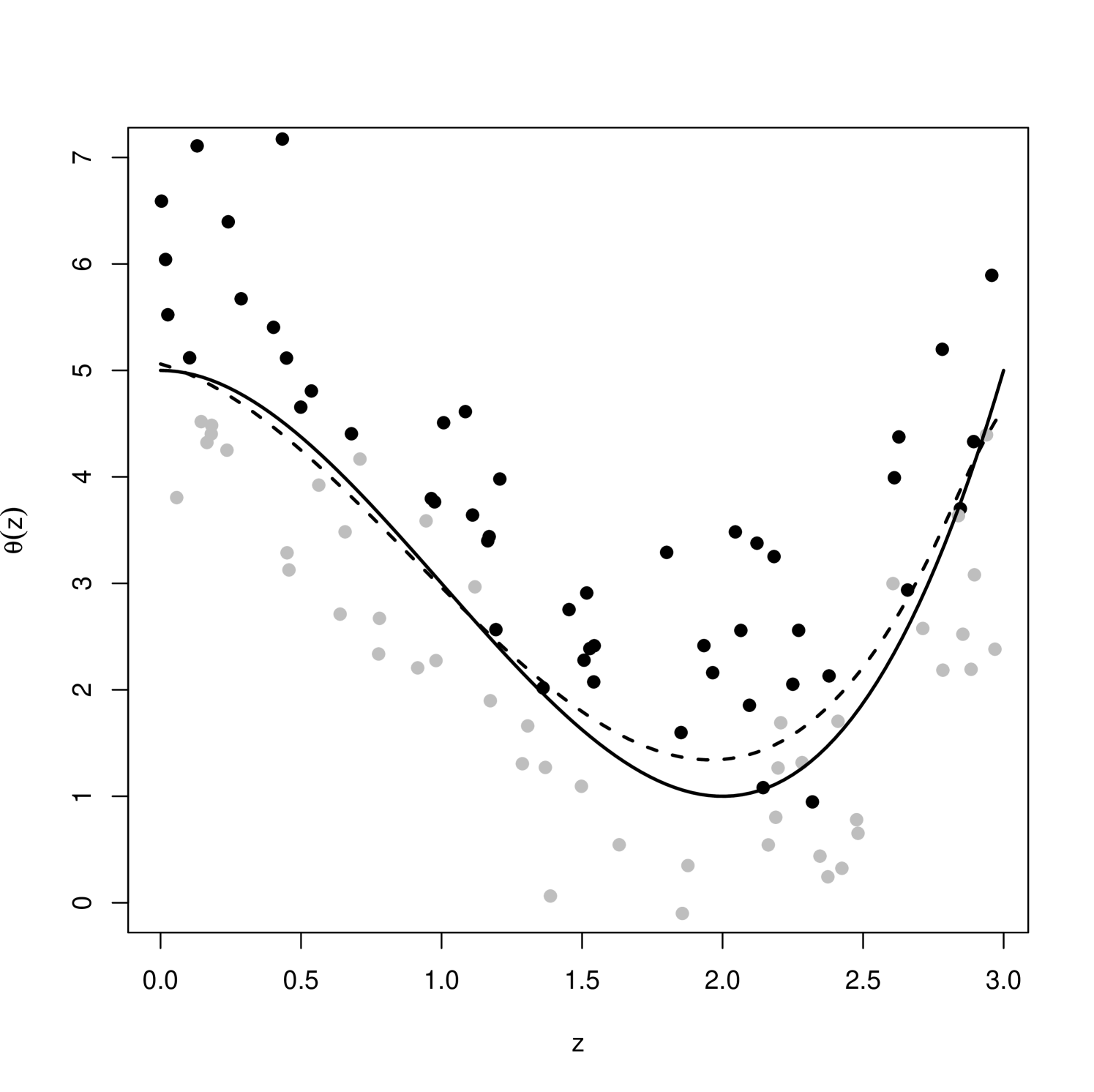

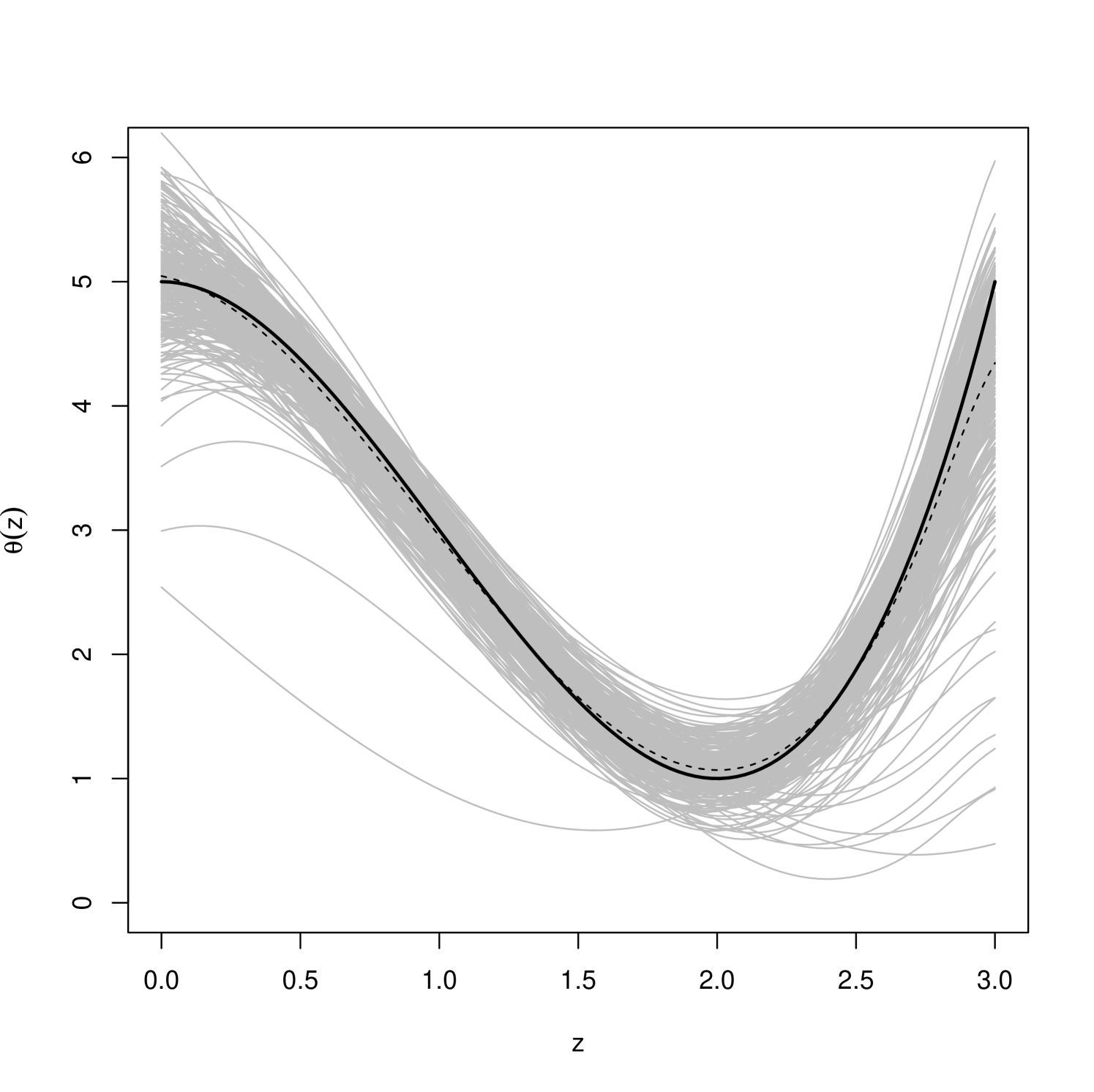

In the medical sciences, physicians who investigate the efficacy of new treatments are challenged to determine both statistically and practically significant effects. In many applications some quantitative effectiveness score can be used for assessing the statistical significance of the treatment, but physicians are increasingly interested also in patients’ qualitative assessments of whether they believed the treatment was effective. The aim of the approach described below is to find the cutoff on the effectiveness score scale that best separates patients by their reported outcomes. That cutoff value is called the minimum clinically important difference, or MCID. For this application we follow up on the MCID problem discussed in Syring and Martin (2017) with a covariate-adjusted, or personalized, version. In medicine, there is a trend away from the classical “one size fits all” treatment procedures, to treatments that are tailored more-or-less to each individual. Along these lines, naturally, doctors would be interested to understand how that threshold for practical significance depends on the individual, hence there is interest in a so-called personalized MCID (Hedayat et al., 2015; Zhou et al., 2020).
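As a toy illustration of the "cutoff that best separates patients" idea, the sketch below computes an empirical, non-personalized cutoff by minimizing the misclassification rate over observed scores. This is only a simplified sketch under stated assumptions: outcomes are coded as +1 (treatment reported effective) and -1 (not effective), the classifier predicts +1 when the score is at or above the cutoff, and the data are made up for the example. The function names are hypothetical, and the covariate-adjusted version discussed in this section is not implemented.

```python
import numpy as np

def misclassification_rate(cutoff, score, outcome):
    """Fraction of patients whose reported outcome disagrees with the cutoff rule."""
    predicted = np.where(score >= cutoff, 1, -1)
    return float(np.mean(predicted != outcome))

def empirical_cutoff(score, outcome):
    """Search the observed scores for the cutoff with the smallest empirical risk."""
    candidates = np.unique(score)  # sorted unique observed scores
    risks = np.array([misclassification_rate(c, score, outcome) for c in candidates])
    best = int(np.argmin(risks))   # first minimizer if there are ties
    return float(candidates[best]), float(risks[best])

# Made-up toy data: effectiveness scores and patient-reported outcomes
score = np.array([-2.0, -1.0, 0.0, 1.0, 2.0, 3.0])
outcome = np.array([-1, -1, -1, 1, 1, 1])
cutoff, risk = empirical_cutoff(score, outcome)
```

On this perfectly separated toy sample the search returns a cutoff of `1.0` with zero empirical risk; with real data the minimizer is typically not unique and the minimum risk is positive.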