Gibbs sampling for mixtures in order of appearance: the ordered allocation sampler

Abstract

Gibbs sampling methods are standard tools to perform posterior inference for mixture models. These have been broadly classified into two categories: marginal and conditional methods. While conditional samplers are more widely applicable than marginal ones, they may suffer from slow mixing in infinite mixtures, where some form of truncation, either deterministic or random, is required. In mixtures with random number of components, the exploration of parameter spaces of different dimensions can also be challenging. We tackle these issues by expressing the mixture components in the random order of appearance in an exchangeable sequence directed by the mixing distribution. We derive a sampler that is straightforward to implement for mixing distributions with tractable size-biased ordered weights, and that can be readily adapted to mixture models for which marginal samplers are not available. In infinite mixtures, no form of truncation is necessary. As for finite mixtures with random dimension, a simple updating of the number of components is obtained by a blocking argument, thus, easing challenges found in trans-dimensional moves via Metropolis-Hastings steps. Additionally, sampling occurs in the space of ordered partitions with blocks labelled in the least element order, which endows the sampler with good mixing properties. The performance of the proposed algorithm is evaluated in a simulation study.

Keywords: Dirichlet process; Pitman-Yor process; size-biased permutations; stick-breaking construction; species sampling models.

1 Introduction

Mixture models represent one of the most successful applications of Bayesian methods. Bayesian inference proceeds by placing a prior on the mixing distribution, whose atoms and their sizes represent the mixture component parameters and the weights, respectively. An important issue is that the number of components is rarely known in advance. The nonparametric approach consists in modelling the mixing distribution with infinitely many support points, and infer the number of components through the number of groups observed in the data (e.g. Escobar and West, 1995). Another alternative is to assign a prior to this unknown quantity (cf. Richardson and Green, 1997; Miller and Harrison, 2018). Posterior inference for mixture models is customarily based on Gibbs sampling methods which have been broadly classified into two categories: marginal and conditional samplers. Marginal methods (Escobar and West, 1995; Neal, 2000) are termed this way because they partially integrate out the mixing distribution and exploit the generalized Pólya urn scheme representation (Blackwell and MacQueen, 1973; Pitman, 2006) of the prediction rule of a sample from the mixing distribution. By doing so marginal samplers avoid dealing with a potentially infinite or random model dimension. They are well suited for models such as the Dirichlet (Ferguson, 1973; Escobar and West, 1995), the Pitman-Yor (Pitman and Yor, 1997; Ishwaran and James, 2001) and mixtures of finite mixtures (Miller and Harrison, 2018). However, they are challenging to adapt to mixing priors without a tractable prediction rule. In alternative, one can use conditional methods which include the mixing distribution and update it as a component of the sampler. While being more widely applicable they bring some issues in their design and implementation. In infinite mixture models, some sort of truncation either deterministic or random is necessary to avoid dealing with infinitely many mixture components. Finite dimensional approximations of the mixing prior were proposed in (Ishwaran and James, 2001). Of random truncation type are the exact conditional samplers derived by Walker (2007); Kalli et al. (2011) and Papaspiliopoulos and Roberts (2008). It has been observed that they require Metropolis-Hastings steps that swap components’ labels so to speed up mixing (Porteous et al., 2006; Papaspiliopoulos and Roberts, 2008). As for mixtures with a random number of components, the main challenge of conditional samplers is the need to explore parameter spaces of different dimensions. The standard method is the reversible jump MCMC algorithm (Richardson and Green, 1997) but this can be difficult to implement.

In this paper we contribute both methodologically and computationally to mitigate these issues by developing a novel conditional Gibbs sampling method named the ordered allocation sampler. The sampler works with the mixture components in the random order in which they are discovered. To derive it we use in depth the theory of species sampling models set forth in Pitman (1995, 1996a, 1996b) where, in particular, it is established that the law of the weights in order of appearance corresponds to the distribution of the weights that is invariant under size-biased permutations. This one admits a simple stick-breaking representation for the Dirichlet and the Pitman-Yor processes. Working with this specific rearrangement of mixture components allows us to exploit a (conditional) prediction rule in the sampler. Thus, it bears similarities with marginal methods such as the fact that mixing takes place in the space of partitions and not in the space of cluster’s labels as it occurs in other conditional samplers (Porteous et al., 2006). Empirical studies confirm that this endows our sampler with nice mixing properties. A second major advantage of our proposal is that, since data points can not be generated from more than distinct components, at most the sampler needs to update the first components in order of appearance. This is especially relevant for infinite mixture models as it avoids truncation. In particular, for Pitman-Yor processes with slowly decaying weights, our sampler proves to be very convenient computationally wise. A third important consequence is that marginalization over the weights in order of appearance yields exactly the exchangeable partition probability function (EPPF Pitman, 1996b). For mixtures with random dimension, this translates to a simple way of updating the number of components without resorting to a reversible jump step. Finally, as other conditional methods, the ordered allocation sampler allows direct inference on the mixing distribution and can be adapted to a wide range of mixing priors.

The rest of the paper is organized as follows. In Section 2 we provide background theory on species sampling priors in mixture models. It will set the stage for the ordered allocation sampler. In Section 3 we first derive the sampler for models with tractable size-biased permuted weights, and later we show how to adapt it when the law of this arrangement of the weights is not available in explicit form. In Section 4 we illustrate the performance of our sampler with well-known real and simulated datasets. Some concluding remarks and discussion points are brought in Section 5. Proofs, technical details and additional illustrations are in the Appendix.

2 Species sampling models

In Bayesian mixture models we model exchangeable data, , taking values in a Borel space, , as conditionally independent and identically distributed (iid) from

| (1) |

where is a density for each , and the mixing distribution, , is an almost surely discrete random probability measure over the Borel parameter space . Species sampling models, introduced and studied by Pitman (1996b), constitute a very general class of random probability measures that provide a convenient prior specification for the mixing distribution . In species sampling models, , the atoms, , are iid from a diffuse distribution, , over , and are independent of . The weights are positive random variables with almost surely, and the number of support points, , can be finite, infinite or random. A sequence, , is a species sampling sequence driven by if it is exchangeable and the almost sure limit of the empirical distributions, is a species sampling model. By de Finetti’s theorem, the latter is equivalent to the existence of a species sampling model, , such that given , are conditionally iid according to (cf. Theorem 1.1 and Proposition 1.4 of Kallenberg, 2005). The laws of and determine each other, and both are fully determined by that of , and the diffuse distribution, . A key aspect to note is that the law of is invariant under weights permutations. That is, is equal in distribution to for every permutation of , which means that working with an ordering of the weights or another does not change the mixing prior. This is reflected through the so called exchangeable partition probability function (EPPF), given by

| (2) |

where the sum ranges over all -tupples of distinct positive integers, and for . In fact, describes the probability that a sample, , of size , exhibits exactly distinct values, with corresponding frequencies, (Pitman, 1996b, 2006). Whenever can be computed in closed form, the prediction rule, , of is available and can be described in terms of a generalized Pólya urn scheme (cf Blackwell and MacQueen, 1973).

The invariance under permutations of , as well as the complexity of computing the unordered sum in (2), have motivated the study of weights permutations that simplify the analysis. An ordering of the weights of paramount importance is the size-biased permutation, , given by , and defined by

| (3) |

In other words, and are sampled without replacement from and , respectively, with probabilities . By construction the distribution of is invariant under size-biased permutations. As shown by Pitman (1995, 1996a), the EPPF (2) can be computed through

| (4) |

hence, if the distribution of is available, it becomes easier to compute . Another advantage of working with size-biased permutations is that coincides with the long-run proportion of indexes such that , where is the th distinct value to appear in . Furthermore, the conditional law of given and admits a simple prediction rule as detailed next.

Theorem 1.

Let be a species sampling model over the Borel space and let be a sequence with values in . Define the th distinct value to appear in through , where , for and . Then is an species sampling sequence driven by if and only if the following hold:

-

i.

exhibits distinct values, , in order of appearance, and are iid from . Furthermore, for , where satisfies (3).

-

ii.

The almost sure limits

exist, , almost surely, and is invariant under size-biased permutations. Moreover, is given by , with as in 2.i.

-

iii.

, and the conditional prediction rule of given and is

for every , where is the number of distinct values in .

-

iv.

, , and are independent of elements in .

Theorem 1 is based on theory laid down in Pitman (1995, 1996b), nonetheless, we provide a self-contained proof in Appendix A, due to the crucial role it plays in the derivation of the new sampler.

The canonical example of species sampling models in Bayesian nonparametric statistics is the Dirichlet process (Ferguson, 1973). It has support points and its size-biased permuted weights, , admit the stick-breaking representation

| (5) |

where are iid from the Beta distribution (Sethuraman, 1994). The Dirichlet model can be generalized to the two-parameter -model (Pitman, 2006) which features size-biased permuted weights as in (5) with independent according to one of the following two regimes:

- a)

-

b)

Given , , and . In agreement with the notation we have established stands for the number of support points of . It turns out that the law of corresponds to that of the size-biased permutation of symmetric Dirichlet weights, (Pitman, 1996a). When is fixed and is random belongs to the class of Gibbs-type priors (see De Blasi et al., 2015, for a recent review), while the allied mixture model corresponds to the mixture of finite mixtures of Miller and Harrison (2018).

Another type of species sampling models for which a stick-breaking characterization of size-biased weights is available are homogeneous normalized random measures with independent increments (cf. Regazzini et al., 2003; Favaro et al., 2016). Unfortunately, such characterization remains elusive for most species sampling models used in mixture modelling, examples are finite dimensional approximations of the Pitman-Yor process (Ishwaran and James, 2001), the Geometric process (Fuentes-García et al., 2010), the probit stick-breaking process (Rodríguez and Dunson, 2011) and exchangeable stick-breaking processes studied by Gil–Leyva and Mena (2021). For all these species sampling priors the weights can be defined in terms of a stick-breaking decomposition, , for some sequence of random variables with values in , yet is not invariant under size-biased permutations.

3 The ordered allocation sampler

As mentioned in Section 2, in mixture models data points are treated as conditionally iid from a random density as in (1). Whenever the mixing distribution, , is a species sampling model we can equivalently assume , independently for , where is a species sampling sequence driven by . In this setting, marginal samplers integrate out and exploit the exchangeability of as well as the prediction rule, , to derive an algorithm for posterior inference (cf. Neal, 2000; Favaro and Teh, 2013; Miller and Harrison, 2018). Instead, conditional samplers (e.g. Ishwaran and James, 2001; Papaspiliopoulos and Roberts, 2008; Kalli et al., 2011) include the mixing distribution, , and update its atoms, , and weights, , as components of the sampler. The ordered allocation sampler is a conditional sampler as it includes the mixing distribution, , however similarly to marginal samplers it relies on a prediction rule for species sampling sequences. Explicitly, motivated by Theorem 1 we work with the atoms, , and weights, , of in the order in which they were discovered by . As commonly done in other samplers, we augment the model with latent allocation variables that identify each observation, , with the mixture component it was sampled from. Here, in accordance with the order of appearance we introduce what we call ordered allocation variables, , given by if and only if was sampled from , i.e. . Thus, , and , independently for . If denotes the number of distinct values in then coincides with , and necessarily takes a value in . More precisely, iii of Theorem 1 allows us to compute

| (6) |

for , independently of elements in (see also iv of Theorem 1). This yields the augmented likelihood

| (7) |

where , , , and is the event that is a partition of with blocks in the least element order, in particular , for , and . The full conditional distributions required at each iteration of the sampler are proportional to the product of (7) times the prior distributions, and , of the atoms and weights of in order of appearance. We first derive the ordered allocation sampler for those species sampling mixing distributions where the prior of can be modelled directly as is the case of the -model. Latter we explain how to adapt the sampler for the more general case where the law of is not available.

3.1 Ordered allocation sampler for size-biased weights

Updating of the ordered allocation variables :

| (8) |

This is the fundamentally novel part of the algorithm. Differently from other conditional samplers, the allocation variables can not be updated independently of each other for two main reasons: (i) might change as a consequence of an update in , and (ii) the least element order of must be preserved, as specified by the indicator . Instead, the updating of resembles the way marginal algorithms update allocation variables (cf. Neal, 2000) in the sense that we will update one at a time by conditioning on the current value of the remaining ordered allocation variables. To do so, we first identify the set, , of admissible moves for , which contains all positive integers for which the event remains true after setting . That is, for , define , where , and add if, under the assumption , the sets are non-empty, for , and satisfy . An example that illustrates how to determine is available in Appendix D. With this notation at hand we can rewrite (8):

| (9) |

Next, we need to weight the admissible moves according to (9). To this aim, note that for each , either or . This means that we can divide (9) by and obtain

| (10) |

for , and , for . Once we have identified , sampling from (10) is straight-forward, as its support is .

Updating of component parameters in order of appearance :

| (11) |

Since , for , we simply sample from its prior distribution. For , sampling from (11) is easy if and form a conjugate pair. Otherwise, the problem of updating the non-empty components parameters is identical as in conditional samplers and some marginal ones. The advantage with respect to conditional algorithms is that the occupied component parameters are precisely the first in the sequence .

Updating of size-biased weights :

| (12) |

If the stick-breaking representation (5) is available, we can update via sampling from its full conditional. Noting that for each , we find

For example, for the -model we know that apriori , independently, according to one of the two regimes (a) or (b) spelled out in Section 2. In this case, we update , independently for , and we sample , for , as apriori.

Before we move on, there are two points worth highlighting concerning the updating of . The first one is that while the stick-breaking decomposition simplifies this step it is not a requirement, what is needed is a posterior characterization of the weights in order of appearance. The Pitman-Yor multinomial process studied by Lijoi et al. (2020) illustrates this point. The second crucial remark is that sampling and , for , is needed for only a few as required by the occupation of new components when updating . Being that , at most we will require to update and , for . This is specially relevant for infinite mixture models as it assures the sampler unfolds in a finite dimensional space even when the model dimension, , is infinite. In fact, if the model dimension is deterministic, the ordered allocation sampler is practically identical for finite and infinite mixture models. The case of random is treated next.

Updating the model dimension :

If the model dimension is random, our proposal here is to update , and the non occupied component parameters, , as a block from

| (13) |

Here we keep “ ” to denote all random terms other than , and . We first sample from its marginal, i.e. (13) after integrating over and :

| (14) |

The expectation is taken with respect to the conditional distribution of given and treating as constants. In particular, since , (14) equals zero for . Taking this into account and recognizing, in the conditional expectation, the EPPF of the species sampling model given , cf. (4), we obtain

| (15) |

This is a remarkably simple expression for the updating of the model dimension as it only requires the conditional EPPF given . In Appendix B we provide an example on how to update for mixtures of finite mixtures and for the choice of detailed by Gnedin (2010). After updating , we sample and , conditioning on . Thus is sampled from (12) as detailed before, and the non occupied component parameters, , from the prior , cf. (11). Note that the blocking argument is remarkably simple when compared with the Metropolis-Hasting steps of the reversible jump MCMC algorithm.

3.2 Ordered allocation sampler for non size-biased weights

In this section we adapt the ordered allocation sampler to species sampling priors that do not enjoy an explicit characterization of the size-biased weights . This makes our sampler applicable to mixture models for which marginal samplers are not available, so increasing substantially its scope (examples can be found at the end of Section 2). To this aim recall that is a rearrangement of the weights in any arbitrary order, , i.e. , where is sampled without replacement from with probabilities , as defined in (3). The key idea is to include as part of the sampler. As we will see, this augmentation yields a conditional sampler that inherits the advantages of the algorithm in Section 3.1 without requiring a closed-form expression of or the EPPF. As for the component parameters in order of appearance, , we will continue to model them directly, as i.i.d. from , independently of and , cf. iv in Theorem 1. Thus, instead of (7), we work with the augmented likelihood

| (16) |

It is straightforward to see that the updating of the ordered allocation variables and the component parameters remain identical. Hence, we will only explain how to update the weights in order of appearance via and , as well as the model dimension, , whenever this quantity is random.

Updating of through and :

| (17) |

where is the event that for every . In this part, as a notational device, we keep “ ” to denote all random terms other than . Also, we distinguish the indexes of the occupied components, , from the remaining ones, . The key idea to attain simple updating steps is to sample from its full conditional, which can be expressed as a weighted permutation of indexes, and separately and as a block.

We first focus on the updating of . From (17) we get

| (18) |

after noting that is a constant with respect to , because . The event indicates that this is about sampling from a weighted permutation of the integers corresponding to current values of . Namely, we sample from

where , is the normalizing constant and is the space of permutations of . Afterwards we simply apply to the indexes of the current value of so to obtain the updated value . Now, to sample from we follow Zanella (2020) by adopting a Metropolis–Hastings scheme using a locally-balanced informed proposal distribution, cf. Example 3 therein. For the reader’s convenience, we recall briefly how it works. Let be the neighborhood of given by all permutations obtained by switching two indexes, (i.e. if and only if there exist such that , and for all ). Instead of using a random walk scheme, consisting in proposing a new value of , say , uniformly over , and accepting it with probability , we bias the proposal towards high probability regions of the target. To do so, we set the proposal distribution to be , where is the normalizing constant. Then the new value, , is accepted with probability . In the simulation study we initialized as the identity function over and performed Metropolis-Hastings steps at each iteration. As explained by Zanella (2020) the appeal of considering a locally-balanced proposal, such as , is that it is roughly -reversible when is large with respect to , thus the acceptance probability tends to be high. Otherwise, if is small, one can opt to sample exactly from (18) by enumerating all possible permutations of .

As for the updating of and , we first sample from the conditional distribution obtained from after integrating over . We get

where , and , that is if and only if there exist such that and otherwise. When , we can update via sampling from

For instance, if a priori , independently for , then a posteriori for and , for . Further examples on how to update can be found in Appendix C. After updating we sample from

| (19) |

That is, are sampled without replacement from with probabilities proportional to . This can be achieved by sampling sequentially as a priori, cf. (3).

This way of updating , although theoretically valid, has the disadvantage that switches among indexes in and indexes in only occur when changes as a consequence of an update in . To facilitate the mixing one can include the following acceleration step after updating from (18) and before updating from (19). We suggest to sample each with from

| (20) |

i.e. conditioning on the current values of , for and , with integrated out. Hence, the indicator above only dictates , with . If the number of components is finite, the support of (20) consists of positive integers and sampling directly from this distribution is trivial. Otherwise, when , we can treat as a latent variable with distribution as in (19), and update the pair from

| (21) |

In practice, it is enough to sample from as in (3) and later either leave unchanged or switch the values of and , with probabilities determined by (21). It is worth emphasizing that this procedure has to be repeated for all , and that each time we discard because it is only playing the role of an auxiliary variable to draw samples from (20).

Similarly as with the sampler in Section 3.1, this sampler unfolds in a finite dimensional space even when the model dimension is infinite. In general, we will only need to update and , for , when required by the updating the ordered allocation variables, . At most iterations this will be necessary for only a few indexes . As for the weights, it is the updating of what will determine how many entries of must be updated. Thus, at most we will need to update for where is the latest entry of we were required to update.

Updating of :

If the model dimension is random, our proposal is to update , , and as a block from the full conditional . Here we use “ ” to denote all random terms other than , , and . Integrating over , and , we first sample from

| (22) |

where the expectation is taken with respect to the conditional distribution of given , and treating and as constants. Later we sample and from (17) as previously explained, and the empty component parameters, , from the prior, cf. (11). In contrast to (15), the EPPF does not appear in (22), instead it is enough to compute an expectation of the weights. This is very convenient, being that when law of is not available, typically the EPPF is hard to compute as mentioned in Section 2.

3.3 Acceleration step

There is a very simple modification of the ordered allocation sampler that can greatly improve its performance. To motivate it, first note that the set of admissible moves, , of is always contained in , with and . Recalling that indicates from which component of the mixture was sampled, this means that while the latest data points will be able to reallocate to virtually all observed components, the first data points will rarely be reassigned to a different component. Furthermore, since component parameters and weights are labelled in the order in which they were discovered by , the initial order of data points can dictate how often there are label switches of components and thus affect the mixing properties of the sampler. To overcome this, it is enough to exploit the exchangeability of and add a step, after updating , in which we randomly permute the data points obtaining , where is a uniform permutation of . Accordingly, we modify obtaining defined by if and only if equals the th distinct value to appear in . This way, the ordered allocation variables, , that correspond to the permuted data set, , preserve the induced clustering structure, and the least element order as dictated by the event now holds for with (see Appendix D for an example). In accordance, for the sampler in Section 3.2 we will also need to change the values of so to obtain , where now indicates which weight in is the th one to be discovered by . To do so we simply have to set if and only if the th distinct value to appear in equals . After doing so we can move on with the updating of (if it is random) and each of the observed component parameters, , and weights, , identically as before, although now they are labelled in order in which they were discovered by .

For the simulation study we will present in the following section, this acceleration step was included in all implementations of the ordered allocation. Nonetheless, in Appendix E we present a few runs of the ordered allocation sampler without it to illustrate its effect.

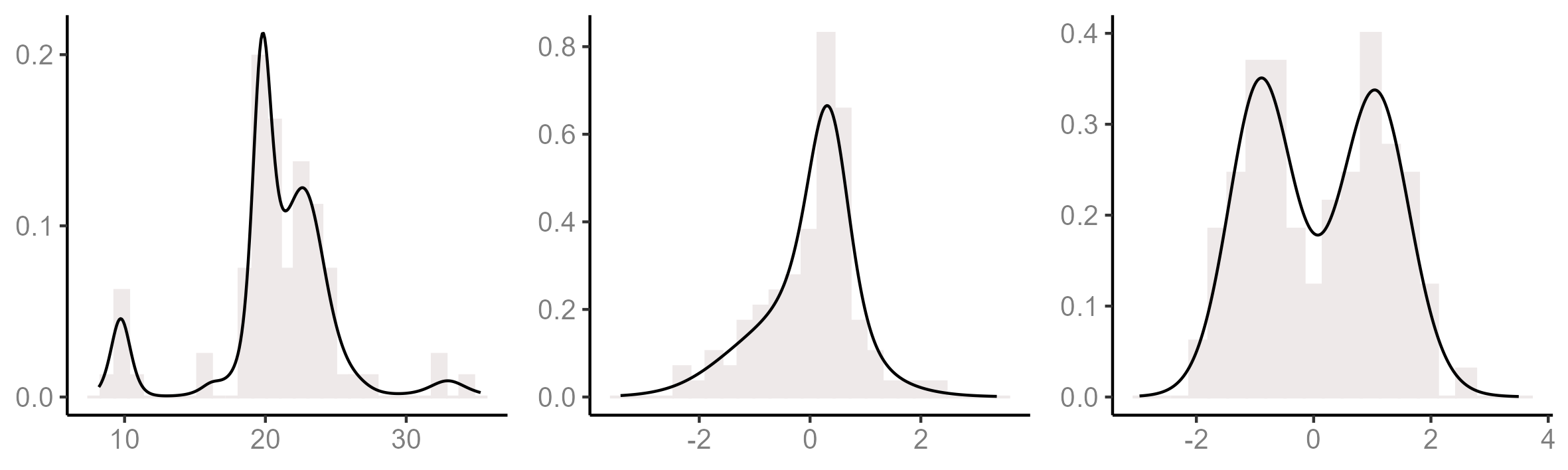

4 Simulation study

In this section we present a simulation study to compare the mixing of the ordered allocation sampler against that of a marginal sampler and a conditional sampler. Following Kalli et al. (2011), three different data sets have been considered (histograms are displayed in Figure 1). The first data set is the data, consisting of the velocities of distinct galaxies diverging away from our galaxy. The other two data sets are the and data sets first introduced in Green and Richardson (2001). The consists of data points simulated from the mixture . In the the observations come from the mixture . To each data set we fitted a mixture of Gaussian distributions with random location and scale parameters, i.e. , and with five different mixing priors specifications. First we consider a mixture of finite mixtures (MFM, Miller and Harrison, 2018) specifically a mixing prior with random dimension, , and symmetric Dirichlet weights, , with . As for , we used the prior (Gnedin, 2010) with . The remaining mixing priors we considered are a Dirichlet process (DP) with total mass parameter , a Pitman-Yor process (PY) with parameters , a Geometric process (GP, Fuentes-García et al., 2010) and an Exchangeable Stick-Breaking process (ESB, Gil–Leyva and Mena, 2021). Further specifications of the Geometric and the Exchangeable stick-breaking processes can be found in Appendix C. In all cases the distribution of the component parameters was fixed to with hyperparameters , and .

The marginal sampler we have implemented is Algorithm 8 in Neal (2000) for DP, PY and MFM. In particular for MFM, Algorithm 8 was adapted following Miller and Harrison (2018). No marginal samplers are available for GP and ESB as these priors lack a Pólya urn scheme representation. As for the conditional sampler, we implemented the (dependent) slice-efficient sampler, as described by Kalli et al. (2011), for all models but MFM. The ordered allocation sampler (OAS in short) was used to implement all considered mixing priors. In particular, we used the algorithm in Section 3.1 for MFM, DP and PY, as these priors enjoy a tractable law of the size-biased permuted weights. The algorithm of Section 3.2 was used for GP and ESB. In Appendix C we explain how to update the weights of the GP and ESB priors.

To monitor algorithmic performance we explored the convergence of the number of occupied components, , and the deviance, , of the estimated density (cf Green and Richardson, 2001). The deviance can be computed by where is the number of data points associated to . More precisely, we considered the chains and attained from iterations after the burn-in period. In each case we estimated the integrated autocorrelation time (IAT), , where stands for -lag autocorrelation of the monitored chain. As done by Kalli et al. (2011), was estimated through , where is the estimated autocorrelation at lag and . This is a very useful summary statistic for quantifying the convergence of an MCMC algorithm, smaller values of corresponding to better performance. For each sampler and mixing prior, we considered iterations after a burn-in period of iterations. Table 1 reports estimates of the IAT for and with standard errors appearing in parenthesis, the latter computed following Section 3 of Sokal (1997).

| data | data | ||||||

|---|---|---|---|---|---|---|---|

| Marginal | OAS | Conditional | Marginal | OAS | Conditional | ||

| MFM | 14.43(0.38) | 26.17(0.93) | — | 563.5(61.3) | 855.5(94.8) | — | |

| 33.55(0.92) | 89.42(3.07) | — | 337.7(26.3) | 535.2(64.7) | — | ||

| DP | 12.30(0.23) | 23.76(0.57) | 119.2(9.95) | 22.42(0.54) | 25.81(0.63) | 120.5(10.3) | |

| 13.68(0.25) | 32.49(0.81) | 190.2(16.3) | 9.26(0.13) | 18.99(0.41) | 100.8(7.18) | ||

| PY | 13.48(0.24) | 21.59(0.52) | 83.33(4.63) | 53.85(1.45) | 62.91(2.10) | 322.9(37.0) | |

| 12.43(0.23) | 35.62(0.84) | 115.7(6.23) | 12.02(0.24) | 20.96(0.56) | 138.7(13.1) | ||

| GP | — | 11.01(0.33) | 40.76(2.50) | — | 50.64(1.55) | 158.7(7.86) | |

| — | 61.67(1.89) | 621.2(172.5) | — | 45.34(1.21) | 129.8(6.43) | ||

| ESB | — | 24.29(0.68) | 245.4(28.3) | — | 61.29(1.97) | 184.5(18.6) | |

| — | 59.27(2.16) | 632.4(88.9) | — | 26.78(0.91) | 120.1(10.7) | ||

| data | ||||

|---|---|---|---|---|

| Marginal | OAS | Conditional | ||

| MFM | 122.9(8.04) | 143.0(9.28) | — | |

| 65.15(3.89) | 109.4(7.99) | — | ||

| DP | 7.84(0.16) | 13.87(0.35) | 35.61(1.68) | |

| 6.30(0.07) | 13.38(0.22) | 52.00(2.18) | ||

| PY | 39.91(1.33) | 58.11(2.21) | 257.1(22.8) | |

| 6.24(0.14) | 12.40(0.30) | 58.10(3.35) | ||

| GP | — | 57.45(1.76) | 148.4(5.81) | |

| — | 55.85(1.65) | 146.0(5.94) | ||

| ESB | — | 48.48(1.52) | 76.51(3.48) | |

| — | 19.93(0.58) | 35.53(1.42) | ||

We observe that, when applicable, Algorithm 8 outperforms the other samplers, and that the OAS has better mixing properties than the slice sampler. On average, the IAT corresponding to the OAS is roughly times bigger than that of Algorithm 8, and the IAT of the slice sampler is approximately times larger than that of the OAS. In general, it has been found that conditional algorithms perform worse than marginal samplers. This can be explained by the fact that in conditional algorithms mixing takes place in the space of all possible values of the (usual) allocation variables, , given by if and only is . Instead, in marginal algorithms the labels of the allocation variables are irrelevant, which means that the sampler searches is the space of partitions of , generated by the ties among allocation variables (cf. Porteous et al., 2006). Now, in the OAS, mixing occurs in the space of all possible values of the ordered allocation variables, , which unequivocally define an ordered partition of , with blocks in the least element order. Since there exists a one to one correspondence between (unordered) partitions of and partitions, of the same set, ordered according to the least element, we find that marginal samplers and the OAS search in the exact same space. This explains the better mixing properties of the OAS when compared with the slice sampler. Still, Algorithm 8 has better performances compared with the OAS, which is mainly due to the restricted support of in the OAS.

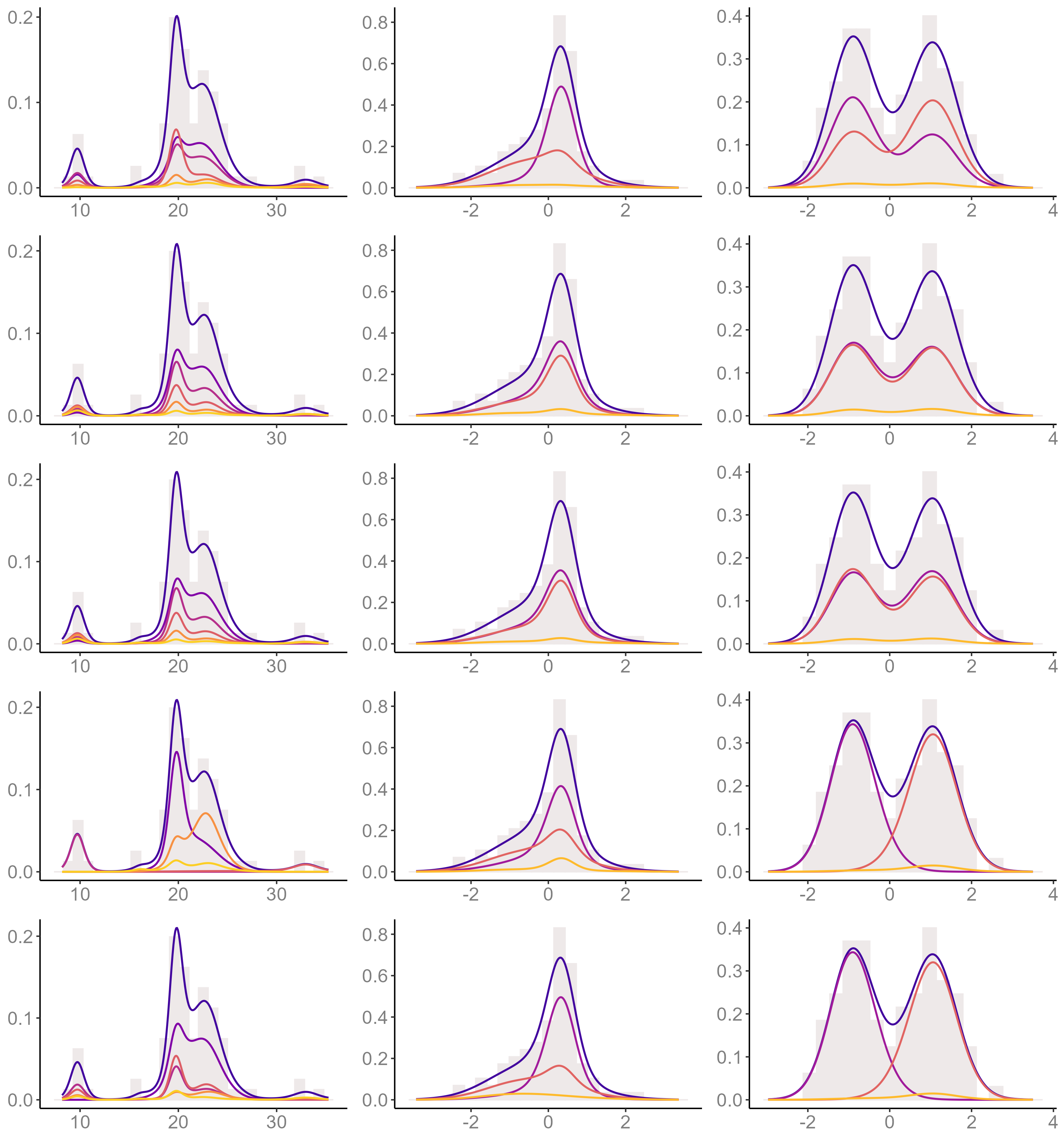

In Appendix E we extend this study for the DP model to further compare the distinct versions of the OAS in Sections 3.1 and 3.2, as well as the effect of the acceleration step in Section 3.3. There we also show the graph of the estimated weighted densities by component, so to illustrate in more details how the different samplers mix over component labels.

5 Discussion

The ordered allocation sampler exploits the conditional law of a species sampling sequence given the atoms and the weights in order of appearance. The idea of sorting the parameters by order of appearance is analogous to that of Chopin (2007) for devising sequential Monte Carlo algorithm for hidden Markov models. A key difference is that in our framework we retain exchangeability of the data, while in hidden Markov model the data possess a precise temporal order.

Mixture models with a random dimension have been long known for their appeal from a modelling perspective and for their optimal asymptotic properties (Rousseau and Mengersen, 2011; Shen et al., 2013). However, posterior computation had remained somehow elusive until the advent of the marginal sampler by Miller and Harrison (2018). The ordered allocation sampler is a valid alternative, it is simple to implement, and more broadly applicable. The sampler has been illustrated for mixtures of finite mixtures, but it readily applies to symmetric Dirichlet distributed weights whose parameter can depend on the number of components. For example, we can use it to implement Dirichlet-multinomial mixing priors, or “ sparse finite mixtures ” as termed by Frühwirth-Schnatter and Malsiner-Walli (2019), where . As for mixtures with infinitely many components, the sampler completely avoids the truncation problem. In fact, it is practically identical for the case where is a finite fixed number and the case where is infinite. Other conditional samplers are designed for the case where is fixed, , or is random and there are clear distinctions between samplers that are designed for one case or another. To the best of our knowledge, the ordered allocation sampler is the first conditional sampler that treats in a unified manner the distinct assumptions on .

As highlighted throughout the paper, the ordered allocation sampler enjoys nice properties in terms of applicability and mixing performance. Nonetheless, there are areas of improvements. In other conditional samplers allocation variables are updated independently of each other in a block, rather that one at a time. In big data settings this is a significant advantage over the ordered allocation sampler. Another drawback is that the number of occupied components, , can not change as freely, from one iteration to the next one, as it does in other samplers. This explains the higher IAT of when compared against the marginal method. The ordered allocation sampler may need additional modifications to address these issues, which is an interesting direction for future research.

References

- (1)

- Blackwell and MacQueen (1973) Blackwell, D. and MacQueen, J. B. (1973). Ferguson distributions via Polya urn schemes, Ann. Statist. 1(2): 353 – 355.

- Chopin (2007) Chopin, N. (2007). Inference and model choice for sequentially ordered hidden markov models, JRSSB 69(2): 269–284.

- De Blasi et al. (2015) De Blasi, P., Favaro, S., Lijoi, A., Mena, R. H., Prünster, I. and Ruggiero, M. (2015). Are Gibbs-type priors the most natural generalization of the Dirichlet process?, IEEE Transactions on Pattern Analysis and Machine Intelligence 37: 212–229.

- Escobar and West (1995) Escobar, M. D. and West, M. (1995). Bayesian density estimation and inference using mixtures, J. Amer. Statist. Assoc. 90: 577–588.

- Favaro et al. (2016) Favaro, S., Lijoi, A., Nava, C., Nipoti, B., Prünster, I. and Teh, Y. W. (2016). On the stick-breaking representation for homogeneous NRMIs, Bayesian Anal. 11: 697–724.

- Favaro and Teh (2013) Favaro, S. and Teh, Y. W. (2013). MCMC for normalized random measure mixture models, Statistical Science 28(3): 335 – 359.

- Ferguson (1973) Ferguson, T. (1973). A Bayesian analysis of some nonparametric problems, Ann. Statist. 1(2): 209–230.

- Frühwirth-Schnatter and Malsiner-Walli (2019) Frühwirth-Schnatter, S. and Malsiner-Walli (2019). From here to infinity: sparse finite versus dirichlet process mixtures in model-based clustering, Advances in Data Analysis and Classification 13: 33–64.

- Fuentes-García et al. (2010) Fuentes-García, R., Mena, R. H. and Walker, S. G. (2010). A new Bayesian nonparametric mixture model, Communications in Statistics - Simulation and Computation 39(4): 669–682.

- Gil–Leyva and Mena (2021) Gil–Leyva, M. F. and Mena, R. H. (2021). Stick-breaking processes with exchangeable length variables, Journal of the American Statistical Association p. in press.

- Gnedin (2010) Gnedin, A. (2010). A species sampling model with finitely many types, Electronic Communications in Probability 15: 79–88.

- Green and Richardson (2001) Green, P. J. and Richardson, S. (2001). Modeling heterogeneity with and without the Dirichlet process, Scand. J. Stat. 28: 355–375.

- Ishwaran and James (2001) Ishwaran, H. and James, L. F. (2001). Gibbs sampling methods for stick-breaking priors, J. Amer. Statist. Assoc. 96: 161–173.

- Kallenberg (2005) Kallenberg, O. (2005). Probabilistic Symmetries and Invariance Principles, first edn, Springer.

- Kalli et al. (2011) Kalli, M., Griffin, J. E. and Walker, S. (2011). Slice sampling mixtures models, Statist. Comput. 21: 93–105.

- Lijoi et al. (2020) Lijoi, A., Prünster, I. and Rigon, T. (2020). The Pitman–Yor multinomial process for mixture modelling, Biometrika 107(4): 891–906.

- Miller and Harrison (2018) Miller, J. W. and Harrison, M. T. (2018). Mixture models with a prior on the number of components, J. Amer. Statist. Assoc. 113(521): 340–356.

- Neal (2000) Neal, R. M. (2000). Markov Chain Sampling Methods for Dirichlet Process Mixture Models, J. Comput. Graph. Statist. 9(2): 249–265.

- Papaspiliopoulos and Roberts (2008) Papaspiliopoulos, O. and Roberts, G. O. (2008). Retrospective Markov chain Monte Carlo methods for Dirichlet process hierarchical models, Biometrika 95: 169–186.

- Pitman (1995) Pitman, J. (1995). Exchangeable and partially exchangeable random partitions, Probab. Theory Relat. Fields 102: 145–158.

- Pitman (1996a) Pitman, J. (1996a). Random discrete distributions invariant under size-biased permutation, Adv. Appl. Probab. 28(2): 525–539.

- Pitman (1996b) Pitman, J. (1996b). Some developments of the Blackwell-MacQueen urn scheme, in T. F. et al. (ed.), Statistics, Probability and Game Theory; Papers in honor of David Blackwell, Vol. 30 of Lecture Notes-Monograph Series, Institute of Mathematical Statistics, Hayward, California, pp. 245–267.

- Pitman (2006) Pitman, J. (2006). Combinatorial Stochastic Processes, Vol. 1875 of École d’été de probabilités de Saint-Flour, first edn, Springer-Verlag Berlin Heidelberg, New York.

- Pitman and Yor (1997) Pitman, J. and Yor, M. (1997). The two-parameter Poisson-Dirichlet distribution derived from a stable subordinator, Ann. Probab. 25(2): 855–900.

- Porteous et al. (2006) Porteous, I., Ihler, A., Smyth, P. and Welling, M. (2006). Gibbs sampling for (coupled) infinite mixture models in the stick breaking representation, Proceedings of the Twenty-Second Conference on Uncertainty in Artificial Intelligence (UAI2006), pp. 385–392.

- Regazzini et al. (2003) Regazzini, E., Lijoi, A. and Prünster, I. (2003). Distributional results for means of normalized random measures with independent increments, Ann. Statist. 31(2): 560–585.

- Richardson and Green (1997) Richardson, S. and Green, P. J. (1997). On Bayesian analysis of mixtures with an unknown number of components, J. R. Stat. Soc. Ser. B 59: 731–792.

- Rodríguez and Dunson (2011) Rodríguez, A. and Dunson, D. B. (2011). Nonparametric Bayesian models through probit stick-breaking processes, Bayesian Anal. 6(1): 145–178.

- Rousseau and Mengersen (2011) Rousseau, J. and Mengersen, K. (2011). Asymptotic behaviour of the posterior distribution in overfitted mixture models, J. R. Stat. Soc. Ser. B 73(5): 689–710.

- Sethuraman (1994) Sethuraman, J. (1994). A constructive definition of Dirichlet priors, Stat. Sin. 4: 639–650.

- Shen et al. (2013) Shen, W., Tokdar, S. T. and Ghosal, S. (2013). Adaptive Bayesian multivariate density estimation with Dirichlet mixtures, Biometrika 100: 623–640.

- Sokal (1997) Sokal, A. (1997). Monte Carlo Methods in Statistical Mechanics: Foundations and New Algorithms, in C. DeWitt-Morette, P. Cartier and A. Folacci (eds), Functional Integration: Basics and Applications, Springer US, Boston, MA, pp. 131–192.

- Walker (2007) Walker, S. G. (2007). Sampling the Dirichlet mixture model with slices, Communications in Statistics-Simulation and Computation 36(1): 45–54.

- Zanella (2020) Zanella, G. (2020). Informed proposals for local MCMC in discrete spaces, J. Amer. Statist. Assoc. 115(530): 852–865.

Appendix

Appendix A Proof of Theorem 1

Lemma A.1.

Let be a random variable taking values in and let be a sequence in with . Let be defined by

Then is invariant under size-biased permutations if and only if is a symmetric function of .

The proof of Lemma A.1 can be found in Pitman (1995, 1996a). Actually, Pitman derived it more in general for a sequence taking values in the infinite dimensional simplex . The statement in Lemma A.1 easily follows by transforming into a sequence in by appending zeros, i.e. for . For simplicity we will first take for granted Lemma A.1, later in Remark A.1 we explain how to derive a self-contained proof.

Proof of Theorem 1:

(Sufficiency): Assume is a species sampling sequence driven by the species sampling model . By de Finetti’s theorem,

almost surely. As and , we get that outside a -null event, for every , and for each there exist such that . This together with the diffuseness of , yield that exhibits exactly distinct values, , almost surely. This means that we can define given by if and only if , recalling that and for , . This way, is the th distinct value of in order of appearance. Next we prove that satisfies equation (3) in the main document. To this aim, note that is -measurable and vice versa. Moreover is -measurable and is -measurable. As is conditionally iid from , this implies

and for ,

This is

Hence, given , satisfies (3). Moreover, as is independent of , we also get that is independent of , which are iid from . This yields that are also iid from , so i is proved.

Using de Finetti’s theorem once more, we get

where is the number of distinct values in . Since the directing random measure of an exchangeable sequence is unique almost surely, this assures , and for , the long run proportion of indexes such that is

almost surely. As (3) holds for , is a size-biased permutation of , which yields ii.

As for iii, first note that by definition, are the distinct values in order of appearance in , for every , in particular . Now, as is conditionally iid from , we get that for each and every ,

Thus,

By definition, under the event we must have , i.e.

As for iv, first note that is -measurable and is -measurable. The last assertion relies on , where is the inverse permutation of . From the proof of i and the hypothesis, we have that , and are independent of . Thus, for every ,

which proves iv.

(Necessity): Assume i–iv hold. We first prove that is exchangeable. Fix and define the random partition, of generated by the random equivalence relation if and only of . In other words where . Using iii, a simple counting argument implies

| (A1) |

for every partition of , and where . Taking expectations in (A1),

By ii and Lemma A.1, the function

is symmetric. This shows , at most depends on the number of blocks of and the frequencies, , of each block, through a symmetric function. In other words, is exchangeable, in the sense that for every permutation, , of , is equal in distribution to , where

Now, fix and note

The last equality follows from the fact that are iid from , and is independent of and , which together with (A1) imply is independent of . By taking expectations in the last equation we find,

As is exchangeable,

hence

which proves is exchangeable. Finally, by de Finetti’s theorem we know that directing random measure of is given by

and by i and ii we conclude

∎

Remark A.1.

The sufficiency of Lemma A.1, which we require to prove the necessity of Theorem 1, can be easily derived using the sufficiency of Theorem 1, thus provide a self-contained proof of Theorem 1. Namely, in the context of Lemma A.1 let be invariant under size-biased permutations, and let be any sequence of weights whose size-biased permutation has the law of . Then we can construct a species sampling model over a Borel space and a species sampling sequence driven by . By the sufficiency of Theorem 1, we get that for every ,

| (A2) |

where are the distinct values that exhibits in order of appearance, and denotes the size-biased permutation of . Now, let be the random partition of generated by the random equivalence relation if and only if . Then, by construction is exchangeable, and a simple counting argument, using (A2), yields

where . Since is equal in distribution to and is exchangeable, we conclude

is a symmetric function of .

Appendix B Mixtures of finite mixtures with Gnedin (2010) prior on

In this section we illustrate how to update the model dimension by sampling from (15). We consider a mixture model with symmetric Dirichlet weights , and random with prior distribution

where is a known constant. As mentioned in Section 2, the size-biased permuted weights admit stick-breaking representation , and for independent random variables, where and . Thus, the ordered allocation sampler as derived in Section 3.1 can be used to implement this model.

First note that using (4) and the stick-breaking decomposition of , we can compute the conditional EPPF given :

where and , using the convention that the empty product equals one. Hence, (15) simplifies to

Following Gnedin (2010), we obtain

Thus, we can explicitly compute

In particular, using notation

and recursively for ,

Thus, to update , sample , and set when .

Appendix C Geometric and exchangeable stick-breaking processes

To illustrate the ordered allocation sampler derived in Section 3.2 we chose two species sampling mixing priors for which the law of is not available. These are the geometric process and the exchangeable stick-breaking process. The geometric process (Fuentes-García et al.; 2010) is a species sampling model with decreasingly ordered weights, , given by where is a random variable taking values in . The exchangeable stick-breaking process (Gil–Leyva and Mena; 2021) instead has weights , where is an exchangeable sequence with values in . Here we consider to be a species sampling sequence driven by a Dirichlet process over , with total mass parameter and base measure . Next we refer to as Dirichlet driven exchangeable stick-breaking process.

To fully specialize the ordered allocation sampler in Section 3.2 for this two mixing priors it is enough to explain how to update via sampling from

| (C3) |

where , , , and “ ” refers to all the random variables involved excluding and . Note that by excluding we are also excluding because these two sequences characterize each other. It is worth noting that the following description can be readily adapted to the updating of in the slice-efficient sampler.

For the geometric process we have that for every , hence it suffices to update from

where for each . In particular if a priori, then we update .

Now, for Dirichlet driven exchangeable stick-breaking processes, the updating of is more delicate due to the non-trivial dependence among elements in . We will first focus on updating . To this aim note that since is a species sampling sequence driven by a Dirichlet process with total mass parameter and base measure , we can compute

where are the distinct values that exhibits, and , with if and only if (cf. Pitman; 1996b; Neal; 2000). Thus (C3) yields

Now to update we can first sample from

which is a product of independent Beta distributions. Afterwards, for each , we can update which value does take among the ones observed in the rest of the ’s or if it takes a new unobserved value. Say that are the distinct values in , in no particular order, and assume without loss of generality that if and only if for each . Then it is enough to sample from

where and

If the updated value we simply set otherwise if we sample . Once we have updated , we can update for by sampling sequentially from , which happens to coincide with the prior prediction rule . As is a species sampling sequence driven by a Dirichlet process, , with total mass parameter and base measure , it is well known that

where are the distinct values in , and (cf. Pitman; 1996b). Thus updating for is easy, and it will be required only for a few .

In general, this way of updating for Dirichlet driven exchangeable stick-breaking processes is actually an adaptation of Algorithm 2 in Neal (2000), however, other marginal methods such as Algorithm 8 can also be exploited. In fact, by taking into account the underlying Dirichlet process, , of , even a version of the slice sampler or the ordered allocation sampler could have been used. To conclude, we mention that for the simulation study in Section 4 of the main document we fixed the hyperparameters , and for both geometric and Dirichlet driven exchangeable stick-breaking models.

Appendix D Ordered allocation variables

Here we discuss the set of admissible moves for the updating of the ordered allocation variable . Some general rules for determining can be envisioned: (i) if is different from any other , that is , then cannot change, unless ; and (ii) , so for larger , there are more possible admissible moves, in particular, cannot change. As for illustration, let and say that before updating , are such that the blocks of the partition in the least element order are , and . Clearly cannot change. As for , the admissible moves are thus will be sampled from

Say that we sample , so that now , , . Since the blocks must be in least element order, the admissible moves for are , hence will be sampled from

Assume we sample so now , , and . Given that can not be an empty set, under the current configuration, the only admissible move for is , i.e. cannot change. Finally, the admissible moves for are , and we will sample from

Finally, assuming that we sample , the initial configuration , and , is updated to and .

In Section 3.3 we discuss an acceleration step that consists in randomly permuting the data points at each iteration of the sampler. Next we provide an example on how to modify the ordered allocation variables so to preserve the induced clustering structure, as well as the least element order of the partition induced by the modified variables. Let us consider again, as starting values of the ones corresponding to the partition , and , so

Also consider the permutation , i.e. , so that the permuted data set is . The ordered allocation variables, , induce the following clustering of data points:

Thus, the original partition becomes with respect to the new labeling of the observations. Let us check that the ordered allocation variables, , that correspond to , can be obtained as explained in Section 3.3, that is if and only if equals the th distinct value to appear in . We have

so that the distinct values of in order of appearance are . Then,

We conclude that

which in fact yields the partition in least element order .

Appendix E Extended simulation study for the DP model

In this section we provide further illustrations of the two versions of the ordered allocation sampler derived in Sections 3.1 and 3.2 of the main paper, and of the importance of the acceleration step in Section 3.3. We consider the Dirichlet process mixing prior and we repeat the simulation study of Section 4 implementing, together with Algorithm 8 in Neal (2000) (Marginal), the dependent slice-efficient sampler by Kalli et al. (2011) (Conditional) and the sampler of Section 3.1 with the acceleration step (OAS1), the sampler of Section 3.2 with the acceleration step (OAS2) and the sampler of Section 3.1 without the acceleration step (OAS1*). Table E1 reports the IAT of the deviance () and number occupied components () for these 5 different samplers. In Figure E1 we show the estimated weighted densities, , of each component , as well as the estimated density, , where is the largest index , for which is not identical to zero. We have computed

for a window of iterations after the burn-in period has elapsed. Here are the sampled component parameters as labelled (or indexed) at iteration , and is the number of data points assigned to the th component at iteration . In particular, for the ordered allocation samplers, components are labelled in the order in which they were discovered by the (possibly permuted) dataset at each iteration. For the marginal sampler, components are labelled this way at the first iteration, and at subsequent iterations relabelling only occurred to delete gaps, i.e. so that the first indexes, , refer to the observed components. As for the slice sampler, components were never relabelled.

We will first focus on exploring the effects of the acceleration step in Section 3.3. As can be observed in Figure E1 the ordered allocation sampler without data permutations (OAS1*, 4th row) is more prone to label components consistently throughout iterations, this is reflected through the propensity of to be unimodal. In contrast, for the ordered allocation sampler that includes the acceleration step of Section 3.3 (OAS1, 2nd row) has the shape of the estimated density . Thus is multimodal when is (as is the case of the dataset). This indicates that more label-switches occur in the OAS1, which is an expected consequence of the fact that by permuting data points, components are discovered in a distinct order. Comparing against the graphs of the marginal sampler (1st row), we see that by including the acceleration step, label-switches occur in a more similar way as they naturally do in marginal samplers, which is the only type of sampler where labels are completely irrelevant. In terms of algorithmic performance, in Table E1 we see that the inclusion of data permutations at each iteration represents a significant improvement of the mixing properties, as the IAT corresponding to the OAS1 are much smaller than those of the OAS1*. The one exception is the IAT of for the dataset, which is very similar for the OAS1 and the OAS1*. This is due to the fact that, although this dataset comes from more than one Gaussian component, it only has one mode. Thus, it is not clear from which component does each data point come from, this is turn leads to frequent label-switches even if one does not permute the dataset.

We now turn to explore the algorithmic performance of the OAS2 compared against that the OAS1, when the latter applies, i.e. the distribution of the size-biased permuted weights, is available. In Table E1 we see that for the dataset, the IAT values of the OAS2 compare very well with those of the OAS1. For the other two datasets instead there is a difference between the IAT values of the OAS1 and the OAS2. To explain why this happens, recall that the key distinction between the OAS1 and the OAS2 is that the first one updates directly, while the OAS2 relies on the the indexes . In particular for the DP model, the OAS2 ignores the fact that the distribution of is available. As mentioned in the main paper, to update the OAS2 first updates (i.e. those of weights of occupied components) and later , hence swaps between and , with , mainly occur when occupied components are created or removed as a consequence of an update of . Now, the and datasets were both simulated from a mixture of two (more or less) balanced Gaussian components. Since we have implemented a Gaussian mixture, at most iterations the sampler will effectively recognize that there are only two large occupied components (see the 2nd and 3rd columns of Figure E1 for an illustration). Thus the mixing of will be affected because components are rarely created of removed. Furthermore, as typically there will be only two large “ occupied ” weights and the rest of them will be very small, it is extremely unlikely that their indexes get swapped. Instead, the dataset was not generated from a Gaussian mixture, which forces the model to rely on different Gaussian components of varying sizes to estimate the density (cf. 1st column of Figure E1). This means that the number of occupied components will change frequently and some of the “ occupied ” weights will be small at many iterations thus facilitating the mixing of weights’ indexes.

| Marginal | OAS1 | OAS2 | OAS1* | Conditional | ||

|---|---|---|---|---|---|---|

| 12.30(0.23) | 23.76(0.57) | 26.76(0.84) | 106.9(7.98) | 119.2(9.95) | ||

| 13.68(0.25) | 32.49(0.81) | 36.36(1.09) | 115.2(7.18) | 190.2(16.3) | ||

| 22.42(0.54) | 25.81(0.63) | 35.98(1.00) | 26.92(0.75) | 120.5(10.3) | ||

| 9.26(0.13) | 18.99(0.41) | 27.97(0.88) | 44.48(0.60) | 100.8(7.18) | ||

| 7.84(0.16) | 13.87(0.35) | 20.82(0.57) | 50.47(3.20) | 35.61(1.68) | ||

| 6.30(0.07) | 13.38(0.22) | 25.43(0.87) | 39.28(1.33) | 52.00(2.18) | ||