Global constraints on vector-like WIMP effective interactions

Abstract

In this work we combine information from relic abundance, direct detection, cosmic microwave background, positron fraction, gamma rays, and colliders to explore the existing constraints on couplings between Dark Matter and Standard Model constituents when no underlying model or correlation is assumed. For definiteness, we include independent vector-like effective interactions for each Standard Model fermion. Our results show that low Dark Matter masses below 20 GeV are disfavoured at the level with respect to higher masses, due to the tension between the relic abundance requirement and upper constraints on the Dark Matter couplings. Furthermore, large couplings are typically only allowed in combinations which avoid effective couplings to the nuclei used in direct detection experiments.

I Introduction

The search for Dark Matter (DM) in the form of thermal relics represents one of the most active lines of research in astro-particle and particle physics. Indeed, there is an overwhelming number of dedicated experimental searches for DM, most of them concentrating on the so-called Weakly Interacting Massive Particles (WIMPs) paradigm Bergstrom (2000). These are classified into three different categories: (1) indirect detection searches, where the DM would annihilate or decay into SM particles which can be detected, (2) direct detection searches, where the DM would scatter against the protons and neutrons in a detector, producing an observable recoil, and (3) collider searches, where the DM would be produced in high-energy collisions, thus leading to events with missing momentum.111A complementary search strategy is the search for DM self-interactions which would impact structure formation as well as stellar evolution in particular scenarios (see e.g. Tulin et al. (2013); Hochberg et al. (2014); Vincent et al. (2015a, b)). Since the focus of this work is to probe the interactions between DM and visible particles these constraints will not be considered here.

In light of all these searches it is essential to look at the global picture of where we stand concerning the WIMP scenario. This is certainly a rather complicated task, given the enormous zoo of models available in the literature with an associated plethora of free parameters. Even for a single model, brute-force scans of the corresponding parameter space represent a significant computational challenge. Consequently, the usual practice is to either rely on simplified realisations of those models or to fix some of the free parameters, thus scanning only hyper-surfaces in the model parameter space. 222The literature in this respect is quite vast. Some examples relevant to our work are Beltran et al. (2009, 2010); Fox et al. (2011, 2012); Mambrini and Zaldivar (2011); Cheung et al. (2012a), in the context of effective field theory (see below).

On the other hand, Monte Carlo methods constitute an efficient alternative to scan over a multi-dimensional parameter space. However, for WIMPs, most of the Monte Carlo scans are performed in the context of supersymmetric models, where the neutralino is usually selected as the preferred DM candidate (e.g., Refs. Cabrera et al. (2013); Roszkowski et al. (2012); Strege et al. (2014), however see Cheung et al. (2012b) as an example of a non-SUSY search).

The main goal of this work is to explore the present status of our knowledge of DM couplings to the different SM constituents in as much generality as possible. In order to gain in model independence, it is thus interesting to be more agnostic about the DM interactions with the SM constituents and parametrize them through an effective field theory (EFT) approach. In particular, this approach can reveal how constrained the DM interactions with the different SM fields are in the light of currently available data, when no further assumptions are made. Since allowing all type of Lorentz structures for these effective operators would provide too much freedom with hundreds of operators that cannot be bounded, we rather choose to exemplify the different interactions between a Dirac fermion DM and the SM by operators of the form

| (1) |

with independent coefficients for all SM particle species with chiralities . This type of interaction is well motivated by scenarios where the DM communicates with the SM via a vector portal. Moreover, scalar or tensor interactions typically require Higgs insertions, leading to higher dimensional and hence more suppressed operators. Additional dimension six operators with a vector-like structure involving the Higgs, such as , could be included as well. For DM heavier than the Higgs, this operator would provide an additional annihilation channel relevant for indirect searches and relic abundance constraints, via . However, as we will see, above 100 GeV the global upper bounds for any individual coupling correspond to the ones obtained from relic abundance alone (with no constraint for the top quark coupling, since that channel is not kinematically allowed). Therefore, we expect a similar behaviour for the Higgs, that is, no constraints for DM masses below the Higgs mass and recovering the relic abundance constraint for higher DM masses. Even with this restriction, if independent couplings for all SM constituents are allowed, 15 independent parameters remain to be constrained. Thus, given the large dimensionality of the parameter space, we make use of a nested sampling Monte Carlo algorithm to scan it efficiently.

When constraining the DM EFT parameter space, we consider bounds from all types of experiments where a WIMP signal is being actively sought for, i.e., direct detection (namely, LUX Akerib et al. (2014) and EDELWEISS Ahmed et al. (2011)), indirect detection (AMS Aguilar et al. (2013) positron fraction data and Fermi-LAT data for dwarf galaxies Ackermann et al. (2015)), cosmic microwave background (CMB) and relic density constraints from Planck Ade et al. (2015), and monojet and monophoton searches in colliders (from LHC Aad et al. (2015) and LEP data Fox et al. (2011)).333Contrary to the cases of direct and indirect detection strategies, for collider searches the use of the EFT framework -mainly at the LHC- may not be optimal. We take this issue into account when recasting the limits coming from LHC. See Sec.III. Also, note that other searches different from monojets can be interesting in the context of specific models (see e.g. Ref. Khachatryan et al. (2015).

Additional information on some annihilation channels such as , , or come from the non-observation of indirect neutrino signals from the Sun in SuperKamiokande Choi et al. (2015) and IceCube Aartsen et al. (2016). However, the bound is only relevant if apart from a large DM coupling to the final state particle, a sizeable coupling to first family quarks is also present. Since we are assuming all couplings to be independent, this bound will always be satisfied in the fit by choosing a set of parameters with one of the relevant couplings being very small. Thus, the inclusion of these experiments would not change our results.

We derive bounds on the coefficients accompanying each effective operator as a function of the DM mass, as well as bounds on the DM mass itself. These constitute the most general constraints which can be derived assuming that DM interacts with the SM as in Eq. (1).

The outline of the work is as follows. In Sec. II we describe the set of effective operators that will be jointly analyzed, introducing the parameters to be constrained. Sec. III lists the set of experimental constraints considered, and how these have been implemented. Some details regarding the numerical tools employed in the fit are explained in Sec. IV. Finally, Sec. V summarizes our results and we present our conclusions in Sec. VI.

II The Effective Field Theory Framework

In order to explore how much freedom the present global data on DM allows for its couplings to the SM, we will define a series of working models characterized by a set of independent effective operators, described by the following effective Lagrangian

| (2) |

where the effective current is given by

| (3) |

the index denotes the quark generations such that , , , , , and , while the sum over runs over all right-handed (RH) SM fermions. The coefficients are the couplings of the operators in the effective Lagrangian. As the effective operators are of dimension six, these coefficients will have a mass dimension of minus two. In expr.(2), represents a Dirac fermion DM.

This set of operators provide enough freedom so as to parameterize DM interactions with different strength to the various SM particles while, at the same time, keeping the set of operators at a viable level. Indeed, allowing extra operators would not only imply a more challenging numerical analysis, but would also be rather uninformative since mostly any value could be fitted and particular UV completions tend to have a much more limited set of free parameters. Furthermore, for simplicity and due to the generally stronger constraints, we will not consider flavour-changing operators between the SM fermion bilinears Kamenik and Smith (2012). Despite these restrictions, 15 different operators fall into this category, parametrizing the DM interactions with the 3 quark and lepton doublets as well as the 3 singlets of up-type and down-type quarks and charged leptons. Starting from this general setup, we will also define more restrictive working models that can exemplify other interesting scenarios such as leptophilic, leptophobic or flavour-blind setups, characterized by different correlations among the couplings. The models considered in this work are the following:

-

1.

General model: The first model makes no additional assumptions regarding the coefficients , which are all allowed and free to vary independently. This represents the least restrictive choice possible in our given EFT framework, and includes a total of 15 coefficients in addition to the mass of the DM.

-

2.

Flavour-blind: In this model, all operators involving fermions with the same gauge quantum numbers, e.g., all left-handed (LH) quarks, are assumed to also have the same couplings to DM and therefore have the same coefficients in the effective lagrangian. We are then left with five different coupling parameters which are related to those of the general model as

(4) For the latter three groups of coefficients, we will generally use the notation for the coefficient of the first generation to refer to it within this model.

-

3.

Family-oriented: In this model, we make the assumption that the effective couplings with DM are equal for all particles belonging to the same generation. This can be considered a quite crude proxy to flavour theories were the successive SM families are characterized by hierarchical couplings or charges in order to explain the observed mass hierarchy between them, following the philosophy of the Froggatt-Nielsen mechanism444See Calibbi et al. (2015) for a realisation in the context of dark matter. Froggatt and Nielsen (1979); Leurer et al. (1993, 1994); Ibanez and Ross (1994); Binetruy and Ramond (1995). This leaves only three independent operator coefficients,

(5) This is the model with the fewest number of free parameters which we will consider and, as such, the correlations among the couplings will allow to obtain strong constraints, particularly for the coupling .

-

4.

Leptophobic: This model assumes that DM does not have significant interactions with any of the leptons. As a result, all of the coefficients associated to operators involving leptons are set to zero, i.e.,

(6) with . At the same time, no restrictions are imposed on the operators involving quarks and the model therefore contains nine free parameters.

-

5.

Leptophilic: In direct contrast to the leptophobic model, this model instead considers the situation where the only relevant DM interactions with the visible sector are those involving leptons. In this model, we therefore set all of the coefficients for operators involving quark fields to zero, i.e.,

(7) where indicates the generation. In this situation, we are left with six independent coupling parameters for the DM interactions with leptons.

We wish to stress that these models are only meant to be phenomenological tools to assess our present global knowledge of how well constrained the DM couplings to the SM fermions are when not assumed to be universal or related through any particular UV completion. We will thus allow all couplings to vary freely when fitting the present data without questioning the apparent naturalness (or lack thereof) of the preferred regions. In particular, as we will see in Sec. V, most models prefer so as to avoid the very stringent limits coming from direct detection searches. Thus, even if these cancellations may seem unnatural, we will not avoid them by adding artificial “naturalness priors” to guide the Monte Carlo in any way, in the spirit of allowing the Monte Carlo to choose the points in parameter space which provide the best fit to the data. Symmetry arguments could perhaps be invoked in a particular UV completion when trying to reproduce the best fit found in the effective description in a natural way.

Moreover, these working models are not intended to be self-consistent low energy descriptions of any particular UV completion. In fact, due to the limited amount of operators considered, we are not allowed to take our effective theory description beyond tree level processes. Indeed, radiative corrections could induce other operators with interesting phenomenology.555For recent works in an EFT framework including one loop processes, see e.g. Refs. Crivellin et al. (2014); D’Eramo and Procura (2015) For example, in the leptophilic model the lepton legs could be closed in a loop and, through the emission of a virtual photon, induce a coupling to first generation quarks. Therefore, the strong bounds from direct searches would also apply to these models and put a constraint on the lepton couplings (see Ref. Kopp et al. (2009)). In a similar fashion, other signals at the LHC such as dijet or dileptons could be generated from loop-induced 4-SM-fermion operators. Furthermore, when moving from the flavour basis to the mass basis, the DM couplings to the SM doublets will induce flavour-changing operators that, when the DM legs are closed in a loop, could contribute to the oscillations of neutral kaons or other FCNC processes, for which stringent experimental constraints would apply. In full consistency, these operators should have been included from the start, since they are compatible with the particle content and symmetries of the theory and they are required to renormalize the divergences stemming from the loop contributions. However, when doing so, new unbounded coefficients are introduced and, in order to avoid these stringent limits, the fit will prefer the points in the parameter space where these new coefficients exactly cancel the loop-induced contributions. Thus we will simply not consider these loop-induced constraints in our list of observables, since in our approach they would not imply additional constraints on our parameter space unless particular relations between the couplings are invoked.

III Considered constraints on DM

In our numerical analysis, the DM contributions to the different DM observables are computed with micrOmegas Belanger et al. (2014). These are then compared to the current experimental bounds. A chi-square function is then computed for each observable independently. The total is obtained as the sum of all separate contributions

| (8) |

where is an index which runs over the observables and is a vector containing the coupling coefficients of the model being tested.

The contribution to the chi-square from each experiment is computed assuming that the limit on the physical quantity being bounded (i.e., relic abundance, the thermally averaged cross section, etc) by the experimental collaborations behaves like a gaussian. The chi-square can be expressed as a function of the number of events as:

| (9) |

where and correspond to the number of signal and background events, respectively, and refers to the systematic uncertainty on the number of events. Notice that, given the unfortunate lack of a positive DM signal in any of the observables described below with the exception of the relic abundance constraint, in this expression it has been assumed that the observed number of events corresponds with the expected background. In some cases, small upward or downward fluctuations over that background will be present and thus small deviations with respect to the scaling derived below can take place. However, notice that we will normalize this scaling with the data presented by each experimental collaboration itself. Thus, our function will always reproduce correctly the result obtained by the collaboration at the confidence level at which it is reported (typically 90 or 95%) and the deviations should be small for the purpose of our exploration as long as CL close to these limits are investigated.

Experimental collaborations present their results as bounds on a given quantity at a certain confidence level (CL). This can be translated into a certain number of events allowed at that CL. For a bound at 68% CL, for instance, we get666When the experimental result is quoted at a different CL, it can be simply rescaled to 68% CL within a gaussian approximation. :

| (10) |

By taking the ratio between Eqs. (9) and (10), we get the following expression for the chi-square:

| (11) |

The expression above can be further simplified in the two following limits:

-

•

Statistically-dominated: in this case, the number of background events and the systematic errors can be neglected, and we can therefore write the chi-square contribution as:

(12) -

•

Background/Systematics-dominated: in this case, the signal events can be neglected with respect to the background and/or the systematic error, and the chi-square simplifies to:

(13)

By making use of Eqs. (12) or (13), all the finer details of the detector response cancel in the ratio. We will therefore use the experimental bounds on the different observables reported by the collaborations instead of the number of events (which are not always publicly available).

All the experiments considered in this work fall in the background/systematics-dominated case, and therefore we will apply Eq. (13). The only exception to this will be the case of dwarf galaxies studied by the Fermi-LAT collaboration Ackermann et al. (2015). These are dark-matter–dominated objects and constitute extremely clean probes to test for dark matter interactions with SM particles. We have explicitly checked the behaviour of the chi-square for a subset of the observed dwarf galaxies, using publicly available data from the collaboration from Ref. Ackermann et al. (2015), and we find that the behaviour is in between the two extreme cases identified above. We therefore opt for the most conservative approach in this case, which corresponds to Eq. (12), when using the limit provided by the collaboration (which has been obtained using the full data sample).

In the following, we describe the full set of observables sensitive to interactions between the DM and the SM fermions which have been included in our simulations.

Relic abundance

The abundance of DM in the Universe is well-known from the PLANCK measurements Ade et al. (2015). At present, its central value is

| (14) |

with an error of at . We will assume that our DM fermion constitutes all of the observed relic density and that it is produced in the early Universe through thermal freeze-out. The predicted relic density is computed for each set of values for the model parameters, and the corresponding contribution to the chi-square function is given by

| (15) |

In general, within the thermal freeze-out scenario, the relic abundance of DM is mainly governed by the thermally averaged total DM annihilation cross section

| (16) |

Here, runs over all fermion fields contributing to the annihilation cross section, and is a weight associated to the dimension of the SM gauge group representation of the corresponding fermion field. As we will show in the results section, this combination is subject to very strong constraints and dictates the possible ranges in which the coefficients may lie. In particular, due to the finite and non-zero relic abundance and the assumption of thermal freeze-out, this implies that at least one of the coefficients must be non-zero and that none of them can be too large. It should be kept in mind that, alternatively, under the assumption of freeze-in the correct relic abundance could be generated via extremely small couplings to all SM fermions Hall et al. (2010).

Direct detection

The contribution to the chi-square from direct detection experiments is computed assuming that the limit on the spin-independent cross section given by the experimental collaborations behaves like a gaussian and is background-dominated (see Eq. (13)). Therefore, the contribution to the chi-square coming from each direct detection experiment can be computed as

| (17) |

where we have expressed the limit at the 68% CL (after rescaling from the 90% quoted by the collaborations). Note that, for , the appropriate value of the chi-square function and the experimental limit are recovered.

For , we implement direct detection constraints from LUX Akerib et al. (2014) and, so as to have an independent target material, EDELWEISS-II Ahmed et al. (2011). Our setup generally leads to a spin-independent contribution (except when ) which will be constrained by the LUX and EDELWEISS-II results. For our set of operators, the spin-dependent contribution cancels out Cheung et al. (2012a). The spin-independent dark-matter–nucleus coupling will relate to the quark couplings as

| (18) |

where is the mass number and the atomic number of the target nucleus. With this dependence on the couplings, there is a possible degeneracy in the DM–quark couplings for which the cross-section vanishes. For , this degeneracy occurs for . Thus, direct detection experiments will only allow large couplings to the first generation quarks if this particular relation is fulfilled. As we will show in Sec. V, this is clearly seen from the numerical results of our simulations.

Cosmic Microwave Background (CMB)

DM annihilations result in an injection of power into the Intergalactic Medium (IGM) per unit volume equal to Chen and Kamionkowski (2004); Padmanabhan and Finkbeiner (2005)

| (19) |

where is the redshift, is the DM abundance (critical density) today, is the thermally averaged annihilation cross section, and the statistical factor corresponds to DM being a Dirac particle. On the other hand, CMB probes such as the WMAP and Planck satellites can set limits on the deposited power into the IGM around the CMB epoch (), which is related to the injection power as Slatyer et al. (2009); Slatyer (2013); Lopez-Honorez et al. (2013).

| (20) |

where the efficiency function depends on the DM annihilation channel, . For this reason, experiments usually quote their limits through the quantity

| (21) |

where is the thermally averaged partial annihilation cross section into particles . This quantity has been constrained by Planck+lensing data at the 95% CL Ade et al. (2015), here we rescale that bound to the CL obtaining: cm3s-1GeV-1 so as to build our function . In order to implement these bounds we use the tabulated values of from Ref. Slatyer et al. (2009), to compute , and compare it with the experimental constraints. The contribution added to the total is given by

| (22) |

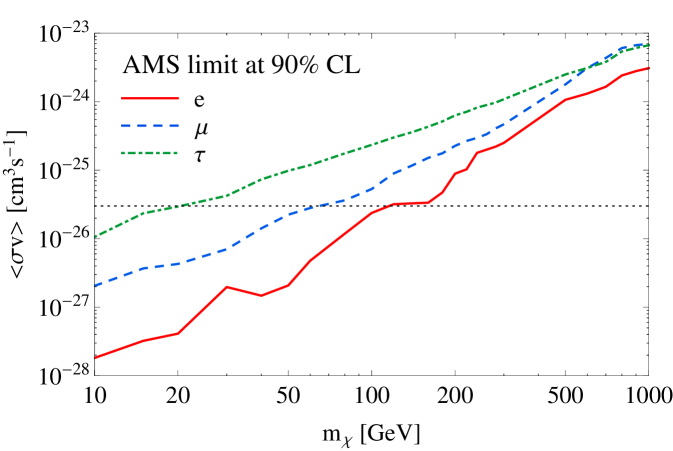

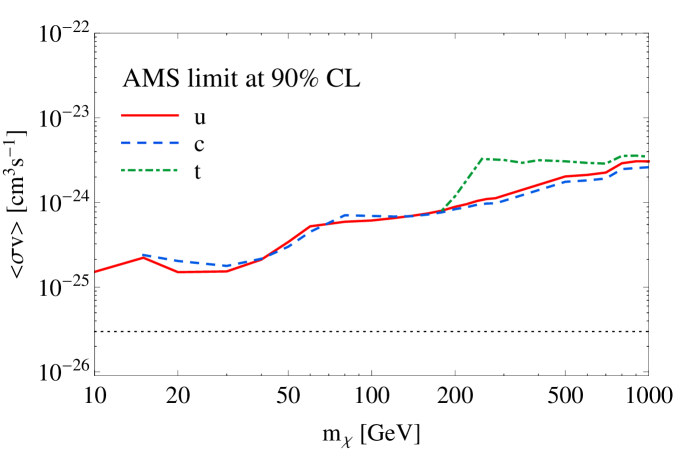

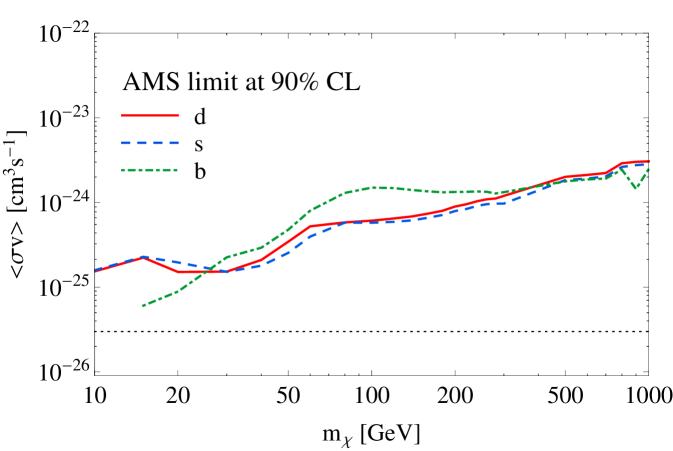

Positron fraction

The measurements from AMS02 on the positron fraction Aguilar et al. (2013) are used to derive upper bounds on DM annihilation cross sections. The contribution to electron and positron fluxes from DM annihilation are computed using micrOmegas. The contributions coming from astrophysical sources, on the other hand, are parameterized by

| (23) |

as in Ref. Ibarra et al. (2014), where the last term in the positron flux represents a point source with a hard spectrum cut , while the other terms model the diffuse background. Both DM and background fluxes are affected by the solar modulation, which can be explicitly taken into account by computing the flux at the top of the atmosphere () under the force field approximation:

| (24) |

where refer to the parameters accounting for the modulation. The differential positron fraction on top of the atmosphere is then given by

| (25) |

The function is built by binning the predicted positron fraction and comparing to the experimentally measured values,

| (26) |

where the uncertainties in each bin are taken directly from Ref. Aguilar et al. (2013) by adding the statistical and systematic errors in quadrature.

We have checked that allowing the electron flux to vary does not affect the fit in any substantial way and have therefore fixed the parameters for this flux to the values which provide the best-fit to Fermi electron flux data Ackermann et al. (2010). In particular, the value of the solar modulation parameter for the electron flux which gives a best-fit to the Fermi data is found to be equal to zero. Moreover, the solar modulation parameter for positrons was found to have a negligible impact on the fit, while creating some numerical degeneracies. Therefore, we choose to fix this parameter to zero as well during the fit, as described in detail in App. A. However, it should be noted that the solar modulation only affects the low energy part of the spectrum and therefore our analysis of AMS02 is expected to be accurate for .

In order to take into account the lack of knowledge on the astrophysical backgrounds, we profile over the positron flux parameters in Eq. (23) in our simulations,777The minimisation procedure was performed with the GSL libraries Galassi et al. (2013). without imposing any priors on them. This is done for each set of parameters independently, as explained in detail in App. A.

Gamma rays from dwarfs

Dwarf spheroidal satellite galaxies (dSphs), being DM-dominated objects, constitute very clean laboratories to test DM interactions. A search for -rays coming from Milky Way dSphs has been performed by the Fermi-LAT collaboration Ackermann et al. (2015). We make use of the present CL bounds (rescaled to the CL) on the thermally averaged cross section for DM annihilation from Ref. Ackermann et al. (2015), which are derived under the assumption of 100% DM annihilation into some specific channel . However, in our EFT approach, DM may annihilate to all fermionic channels with different branching fractions , which will depend on the set of couplings for a given model , and each particular channel will contribute to -ray emission with a different weight, given its different subsequent decay chains. This weight is inversely proportional to the final experimental bound that can be derived for that particular channel . We may therefore recast the experimental bound on the total cross section as

| (27) |

As already mentioned, the contribution to the chi-square coming from dwarf galaxy measurements is not completely dominated by either background/systematics nor by statistics. Therefore, we conservatively assume that it is the latter which dominates the measurement in this case. The contribution to the is thus given by

| (28) |

where is the total annihilation cross section today as predicted by the model being tested with couplings and DM mass .

Monojets at LHC

Here we take the most recent 95% CL LHC results (rescaled to the CL) from monojet+ analyses Aad et al. (2015), applied to the effective vector interaction operator , where a universal coupling to up and down-type quarks of both chiralities was assumed.888In view of the possible issues regarding the validity of the EFT approach in collider searches Busoni et al. (2014), we consider the limits from Aad et al. (2015) which respect the validity criteria of EFT. In the next LHC run, the issues with the validity of the EFT will be even more relevant, and therefore an analysis in terms of Simplified Models Buchmueller et al. (2015); Abdallah et al. (2014); Buckley et al. (2015); Abdallah et al. (2015); Abercrombie et al. (2015) might be preferable. Notice that, if opposite couplings are assumed for the different chiralities, the same bound is recovered Goodman et al. (2010). Indeed, any interference effect between the different effective operators will be chirality suppressed. Thus, it is safe to assume that the total contribution can be obtained as the incoherent sum of the individual ones. Since a full collider simulation is beyond of the scope of this work, we recast the existing limits on the coefficient of the effective operator as a function of the DM mass. Since the constraint mainly stems from events with large , for which the valence quarks dominate the parton distribution function of the proton, we assume that only the first generation quarks participate. Thus, the contribution to the function will be given by

| (29) |

where .

Mono-photons at LEP

Similarly to the monojet case, the 95% CL constraint (rescaled to the CL) on the coefficient of the operator, , by LEP mono-photon+ searches is considered Fox et al. (2011). The contribution to the is thus:

| (30) |

where .

IV Numerical details

All the phenomenological models described in Sec. II have been implemented in CalcHEP Belyaev et al. (2013) using FeynRules Alloul et al. (2014). The result is then fed into micrOmegas Belanger et al. (2014) for the computation of the DM observables.

With the chi-square function implemented as described above, the parameter spaces of the different models need to be efficiently explored in order to derive constraints. While a simple grid scan may be viable in the case of the family-oriented model (with only three parameters), it quickly becomes prohibitively expensive for models with a larger number of free parameters. In particular, the general model with 15 free parameters would be unfeasible to scan in this fashion. Moreover, we expect the parameter space to be affected by several degeneracies. These further decrease the effectiveness of a grid scan and require the grid spacing to be very small in order to find the global minimum. For these reasons, we have opted to perform our scans using the nested sampling Skilling (2004) Monte Carlo algorithm implemented in the MultiNest software Feroz et al. (2009). This method is particularly designed for handling parameter space degeneracies as well as for preferentially scanning the regions of parameter space where the likelihood function is large. Although the MultiNest software was initially designed for computing Bayesian evidence, it produces a sample of the parameter space with the corresponding likelihood values as a by-product. In this paper, we take a purely frequentist approach and only consider the values obtained from the likelihood. Thus, MultiNest is used only for its capability of efficiently sampling the regions with relatively low values.

In our MultiNest simulations, the number of live points was set to 750, the tolerance to 0.2 and the efficiency to 0.9. Constant efficiency mode was not used. The range of parameters scanned was adapted to the values of the coefficients required to reproduce the correct relic abundance, for each of the dark matter masses considered. The scan was done in linear scale over both positive and negative values for the coefficients accompanying each of the operators. The number of distinct samples obtained ranges between and , depending on the particular model and DM mass considered.

V Results

This section describes the results obtained from a global fit using the models described in Sec. II. All constraints considered in Sec. III have been included in our simulations.

Note that the dimensionful couplings will depend on the effective theory mass scale as . In this section, we will use units of TeV-2 for the couplings and the rough limit may be used to assess the range of validity of the EFT assumption.

Using the function built as described in Sec. III, we have derived two main types of constraints on the parameters of the model:

-

i)

For a fixed DM mass , we scan the parameter space for all couplings in each model. This results in a sample of points in the parameter space, which is generally concentrated in the regions corresponding to the lowest values of the function. We use these to perform parameter estimation and determine the allowed regions for the different couplings within a given model, for given values of .

-

ii)

The calculation described in (i) can be repeated for several values of the DM mass. By minimizing the global over all couplings in the model, the resulting ,

(31) allows parameter estimation of the DM mass . By deriving confidence intervals around the global minimum of a given model, this will indicate whether there are any values of the DM mass which are disfavoured by current data. Since the simulations are computationally rather expensive, they have been performed for a few values of the DM mass, namely , 50, 100, 200, 500 and 1000 GeV. Our minimization in the variable is therefore performed in a discrete fashion, using only this set of values. However, from GeV on, we find essentially no change in the value.

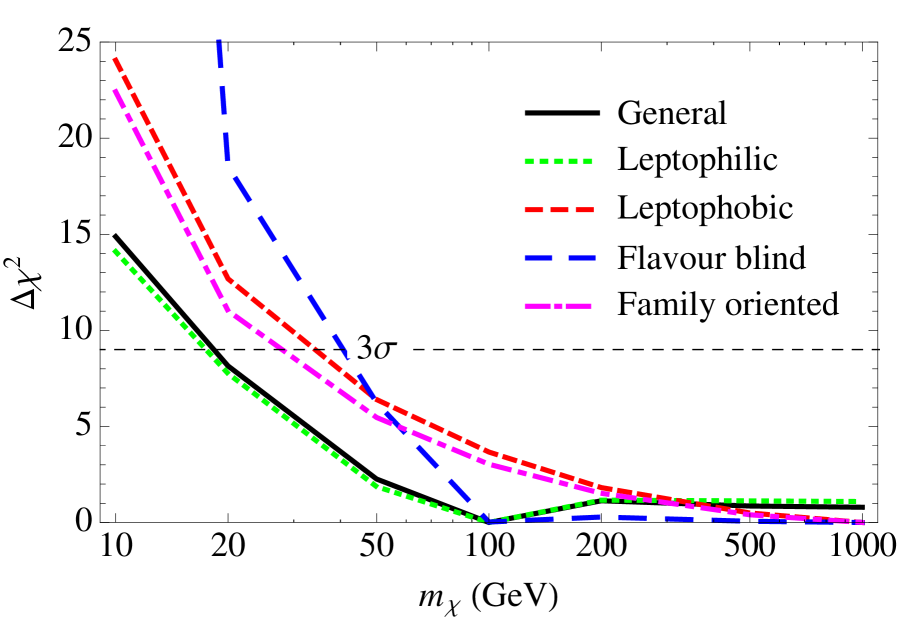

Our main results are summarized in Figs. 1 and 2. Figure 1 shows the value of the (see Eq. (31)) obtained for the different models under consideration, as indicated in the legend, see item (ii) above. The dashed horizontal line shows the value of the corresponding to limit for 1 d.o.f.. As can be seen from this figure, DM masses around 40 GeV and below are disfavored at with respect to higher masses for most models under consideration. The only exceptions are the general and leptophilic models, for which only masses below 20 GeV are disfavored.

The fact that very light DM masses are generally disfavored can be understood from the complementarity between different data sets. As can be seen from Eq. (16), the annihilation cross section is proportional to , while the energy density scales as . Thus, models with very light DM masses will require large couplings to the SM in order to satisfy relic density constraints. However, since there is no positive signal from DM in collider, direct or indirect detection data, a significant tension between the different data sets occurs, which eventually increases the minimum value of the . The tension is stronger in models where DM either does not couple to leptons (leptophobic), or in models where the lepton couplings are related to others (i.e., flavour-blind or family-oriented). For the general and leptophilic models, the tension is relaxed since the bounds which mainly constrain the couplings to leptons (LEP, CMB and AMS data) are not as strong as those constraining the quark couplings (direct detection, LHC and FermiLAT bounds). Finally, one can observe a slight preference in the fit for DM masses around 100 GeV for all models except for the leptophobic and family-oriented. This can be understood from the mild preference of AMS data for a DM signal around this mass, since smaller masses are too strongly constrained, while heavier masses do not produce an appreciable signal. It should be stressed out, however, that this preference is far from being statistically significant. This feature is absent in the leptophobic and family-oriented models since the required fermion couplings for this signal to take place are either absent or constrained to be very small by other experimental bounds.

|

|

|

|

|

|

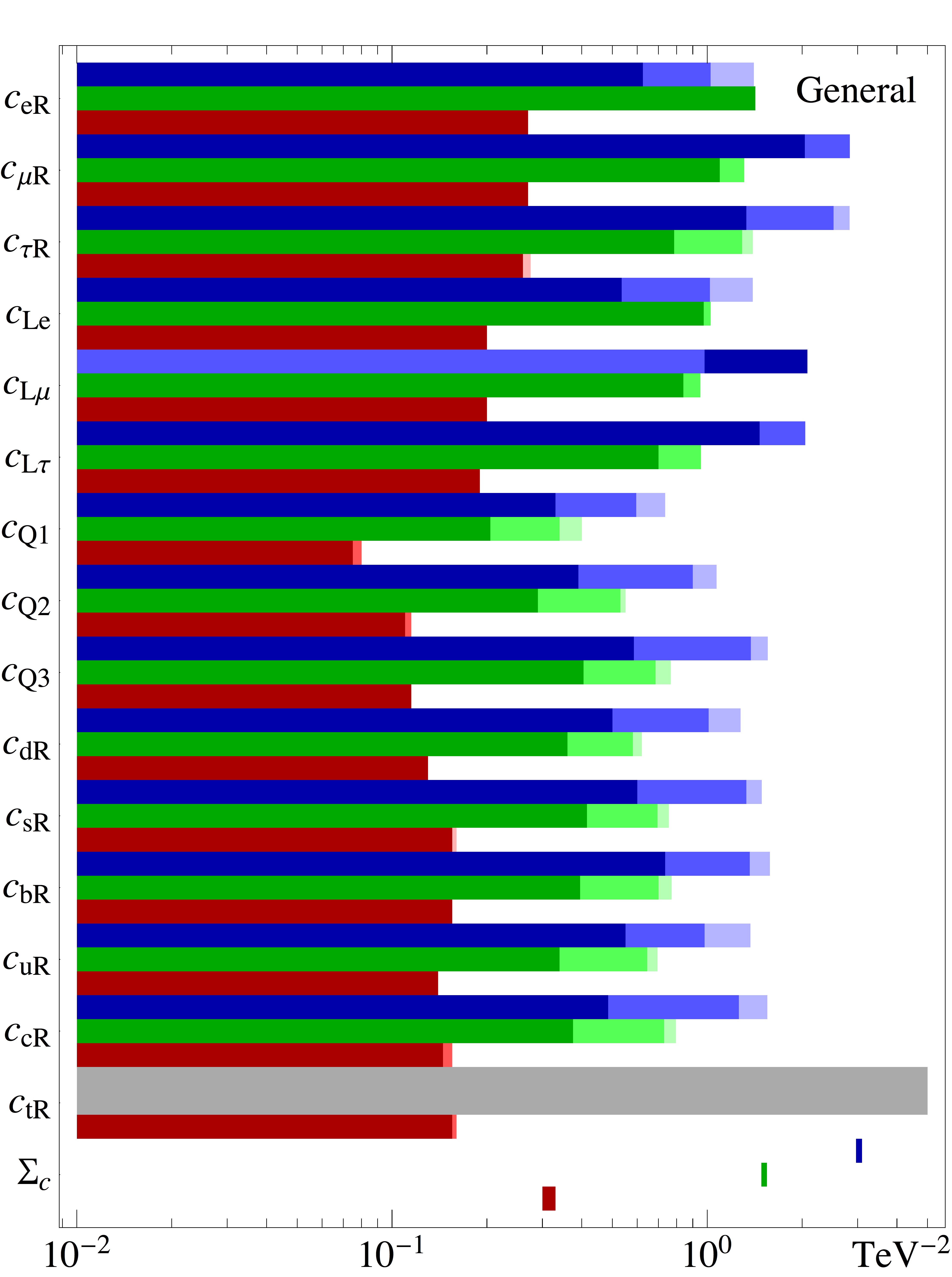

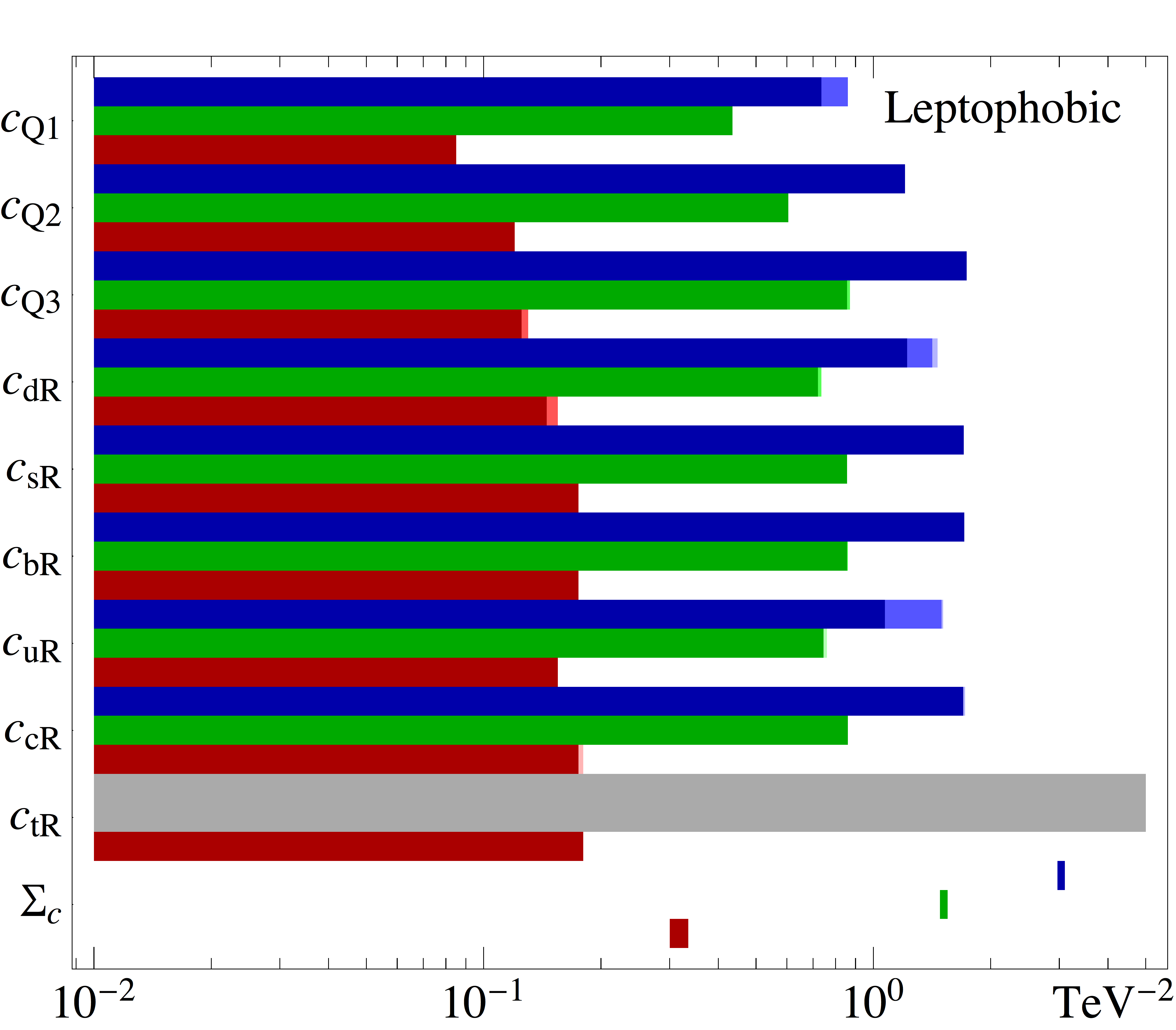

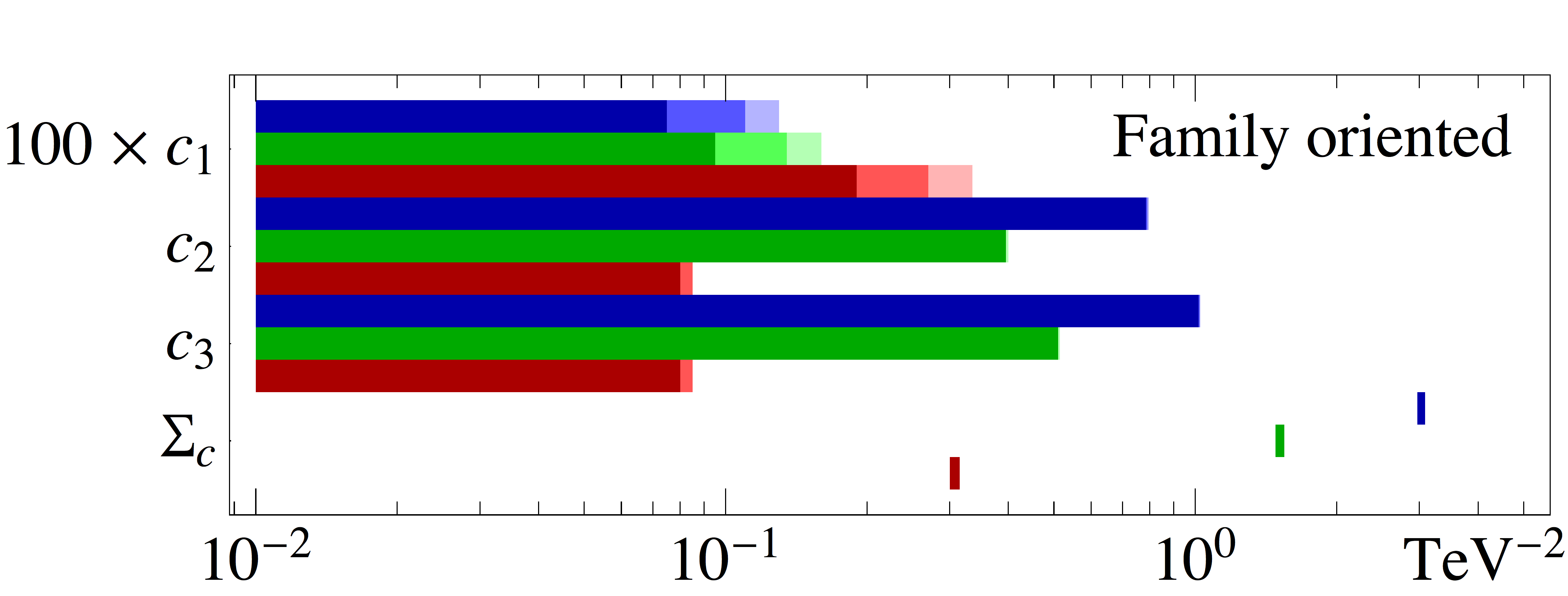

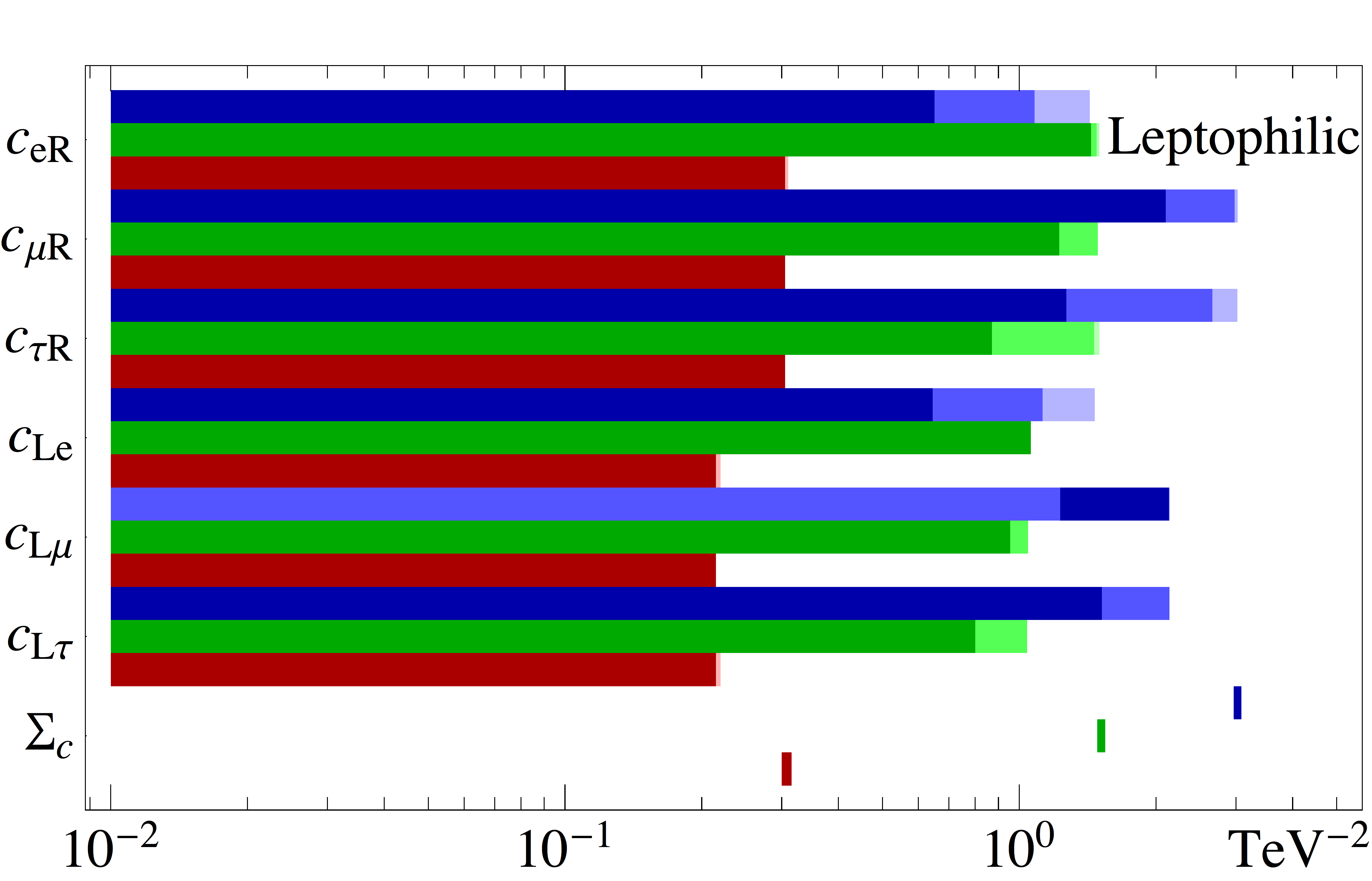

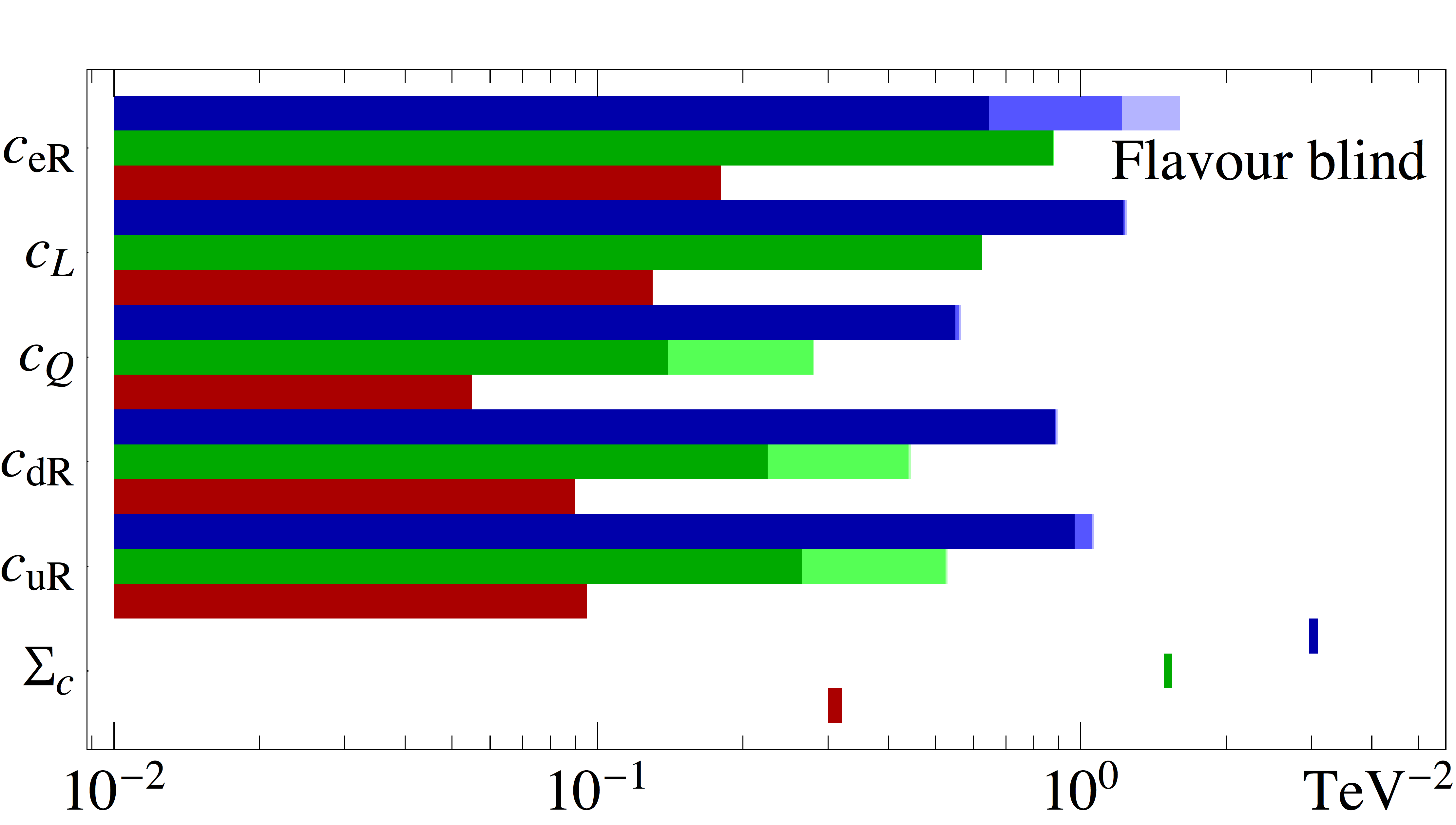

The second main result of this paper is shown in Fig. 2, where the allowed regions at 1, 2 and 3 (for 1 d.o.f.) are shown for all couplings associated to the effective operators and for the five models under consideration, see Sec. II. For each coupling, our results are shown for three different values of the DM mass, 10 GeV (upper/blue bands), 100 GeV (middle/green bands) and 500 GeV (lower/red bands). Each panel also shows the constraint imposed by relic density on the weighted sum of the squares of all couplings, i.e., as defined in Eq. (16).

The tension between different data sets can be understood from the results found in Fig. 2. As already explained, the constraint from relic density tends to bring the couplings to larger values as the DM mass is decreased. This is observed when comparing the upper/blue and lower/red bands, for all models under consideration, and in particular for the allowed values of . One can clearly see how the tension between different data sets can lead to having only one coupling as the major contributor to the relic density for a given model. For instance, for the general and leptophilic models with GeV, the relic density constraint is satisfied with a sizable coupling to the second family lepton doublet, and the preferred region for this coupling at does not include zero. This can be understood as follows. First, the bounds on couplings to quarks of all generations are very strongly constrained by direct detection and Fermi-LAT experiments. Moreover, on the leptonic side, the bounds on RH leptons from both AMS (electrons) and Fermi-LAT (taus) are very strong. In addition, LH couplings would also imply DM annihilations through muon neutrinos, so that the indirect searches are relaxed while the relic abundance constraint is more easily fulfilled. As such, the weakest global bound appears for the second lepton generation, which can accommodate the required relic abundance without being in tension with AMS or Fermi-LAT.

Nevertheless, a significant tension persists, as seen in Fig. 1. This is particularly the case for light DM masses, see, e.g., the bands corresponding to 50 GeV in Fig. 2. However, for 100 GeV mass (and heavier) the situation is different. For such large values, the indirect detection constraints are already weaker than the relic abundance one. Thus, since a coupling to LH fermions implies two annihilation channels, both contributing to decrease the DM density, we see that the LH couplings are more constrained than the corresponding RH couplings. Similarly, due to color multiplicity factors, couplings to quarks are more strongly constrained than those to leptons (in particular the LH ones).

Another interesting example is found in the panel for the flavour-blind model. Since the coupling to the muon is equal to that of the electron and tau (which are very strongly constrained) the relic abundance cannot be obtained out of annihilation to the second lepton doublet, as for the previous cases. For GeV, we see from the figure that the DM would prefer to annihilate to up-type RH quarks instead (the 1 region for this coupling is around the value of 1). However, this results in a strong tension with the Fermi-LAT data, and this mass is therefore disfavored at with respect to the best fit at higher mass (see Fig.1).

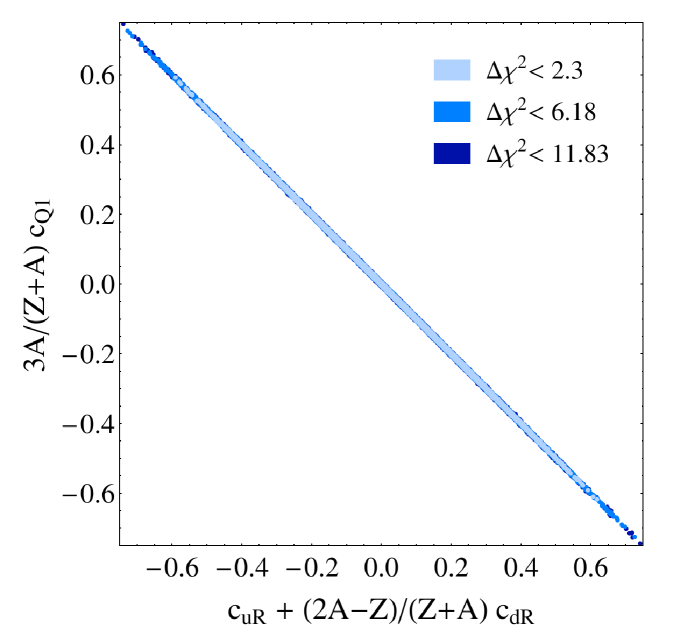

Finally, as was already mentioned in Sec. III, we have found two interesting degeneracies among different parameters in the models considered in this work. The first one is due to the constraints coming from direct detection experiments, which allows for a degeneracy between the couplings to quarks. This is shown in the left panel in Fig. 3, where the allowed confidence regions for the , and couplings are depicted, showing the degeneracy explicitly. For GeV, the most stringent bound on the effective operators involving the first generation of quarks comes from direct detection experiments. Nevertheless, as can be seen from this figure, the allowed regions extend to rather large values of the couplings, as long as the relation is satisfied, given the values of and of the Xenon material used by LUX (see Sec. III for details). While the inclusion of EDELWEISS-II, with a different target material, could in principle lift this degeneracy, the difference in the ratio of protons and neutrons is not large enough for this task. The final limits found in Fig. 3 for the degeneracy line are rather stemming from the relic abundance constraint.

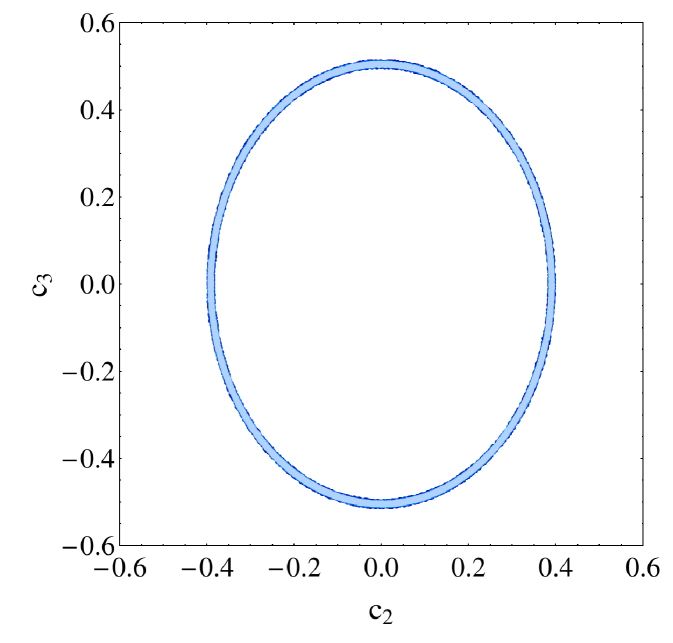

The second degeneracy stems from the strong constraints from relic abundance on , which generally imply that all couplings must lie on the surface of a hyperellipsoid. In particular, for the family oriented scenario, this reduces to the ellipse displayed in the right panel in Fig. 3, where the allowed regions at 1, 2 and 3 are shown in the plane for 2 d.o.f.. Given that the strongest constraints from colliders and direct detection experiments apply to the first generation, and in this model all particles in the first generation have the same coupling, the constraints on are very strong since the direct detection degeneracy relation cannot be fulfilled. This is shown in Fig. 2, where it can be seen that the coupling to the first family is restricted to at for this model. This model only has two additional couplings, and . Therefore, in order to satisfy relic density constraints, either the coupling to the second or the third family (or both) have to be different from zero. As a consequence, the allowed region is shaped as an ellipse in the plane.

A final remark regarding the validity of the EFT is in order. As can be seen from eq. (16) and from Fig. 2, the weighted sum of the coefficients required to obtain the correct thermal relic abundance goes like . On the other hand, for DM annihilation, the EFT validity condition reads . Since is a combination of couplings, we can generically write it as . Therefore, by combining the validity condition with the relic abundance requirement, a maximum DM mass is obtained for which the EFT ceases to be valid about TeV) . Notice however that we do not expect the data to strongly constrain thermal DM in this regime.

VI Conclusions

In this work we have explored how strongly the combination of present data from very different experimental probes can constrain the different couplings between Dark Matter (DM) and the Standard Model (SM) particle content. The focus of the work was a bottom-up approach to understand what data itself is able to tell us regarding DM interactions, minimizing when possible any theoretical input or bias. To this end, we make use of an Effective Field Theory (EFT) approach to attain the desired model-independence at the expense of assuming that DM–SM interactions are mediated by heavy particles that can be integrated out of the theory. Furthermore, we have allowed independent couplings of DM to all SM fields so as to minimize any theoretical bias, but we have limited these interactions to flavour-conserving ones in the SM sector, since generally stronger constraints apply to them. Finally, if all possible Lorentz structures were simultaneously allowed, the parameter space would become too large to explore and constrain with present data. Thus, we restrict our analysis to Dirac DM fermions which interact with the SM constituents through independent operators of the form

| (32) |

with independent coefficients for all SM fermions and chiralities .

This working model, which is not intended to reproduce any particular ultraviolet completion, provides the necessary parametrization to assess through a joint analysis the constraints on the individual interaction strengths from present data. We constrain 15 independent coefficients through a combination of 8 experimental probes comprising relic abundance, direct and indirect DM searches as well as collider limits. In addition to this general setup, we also explore how these constraints are affected when correlations between the different coefficients are allowed, or when some of the operators are forbidden. In particular, we studied the completely leptophilic, leptophobic and flavour-blind cases, as well a family-oriented scenario in which all members of the same generation share the same coupling to the DM particle.

From our global fits we find that for DM masses GeV the tension with respect to the best fit between the couplings necessary to reproduce the observed DM relic abundance and the upper bounds from null results exceeds the level, for the general and leptophilic scenarios considered, while the same happens for GeV for the leptophobic, flavour blind and family oriented cases. For these three cases, the rises very steeply as the DM mass decreases such that for GeV this tension reaches the level, within our EFT framework.

Furthermore we find that, due to the slightly weaker present constraints, couplings to the second-generation lepton doublet are preferred, whenever they are available within the model under study. If this coupling is not available or is instead further constrained by an assumed correlation, as is the case of the flavour-blind scenario, a more sizable coupling to the right-handed, up-type quark singlet is instead preferred for similar reasons. Thus, it would be interesting to improve our present constraints on these couplings. Finally, we also find that, since all couplings are assumed to be independent and free to vary in the fit, the very stringent constraints stemming from direct search experiments such as LUX imply that either the first generation quark couplings to DM are extremely small or that they are related to each other such that the different contributions to these processes cancel against each other, i.e., .

DM searches worldwide are now probing and constraining essentially all possible interaction channels between DM and the known matter constituents through extremely different and complementary and search techniques. In this context, and given our lack of a unique theoretical DM paradigm, it is important to test different DM models against present data with bottom-up approaches where theoretical biases are not imposed. Unfortunately, true and complete model independence cannot be achieved. Indeed, while EFT offers an ideal frame for this sort of studies, its adoption already enforces some assumptions, such as the decoupling nature of the mediating particle or the uniqueness of the DM candidate. Moreover, true model independence would imply the inclusion of hundreds of independent operators with different flavour and chiral structures, rendering the analysis too general to actually provide any useful information. In this work we have reduced the number of theoretical assumptions taking a first step which implies some unavoidable restrictions to the number and types of effective operators included. It would be very interesting to supplement our results with additional complementary analyses, including different Lorentz structures, different DM fields (Majorana fermions, or scalars), or with extensions beyond the EFT approach by allowing one (or several) generic light mediator(s).

Acknowledgements.

We are happy to acknowledge stimulating discussions with Felix Kahlhoefer, Olga Mena, Miguel Peiro, Pantelis Tziveloglou and Aaron Vincent. The work of MB was supported by the Göran Gustafsson Foundation. PC, EFM and PM acknowledge financial support by the European Union through the ITN INVISIBLES (PITN-GA-2011-289442). EFM also acknowledges support from the EU through the FP7 Marie Curie Actions CIG NeuProbes (PCIG11-GA-2012-321582) and the Spanish MINECO through the “Ramón y Cajal” programme (RYC2011-07710) and the project FPA2009-09017. EFM and PM were also supported by the Spanish MINECO through the Centro de excelencia Severo Ochoa Program under grant SEV-2012-0249. The work of BZ is supported by the IISN and by the Belgian Federal Science Policy through the Interuniversity Attraction Pole P7/37. Fermilab is operated by the Fermi Research Alliance under contract no. DE-AC02-07CH11359 with the U.S. Department of Energy. The work of PC was partially supported by the U.S. Department of Energy under contract DE-SC001363. PC and PM would like to thank the Mainz Institute for Theoretical Physics for its hospitality and partial support during the completion of this work. EFM and PM thank the Aspen Center for Physics for its hospitality and the support of the National Science Foundation grant PHY-1066293 and the Simons Foundation for their stay there.Appendix A Fitting procedure for the AMS02 positron flux data

As already explained in Sec. III, the measurements from AMS on the positron fraction are also used to derive upper bounds on DM annihilation cross sections. The main issue that has to be dealt with when doing so, however, are the large uncertainties on the positron and electron fluxes from astrophysical sources. It is common to use a linear combination of two power laws to parametrize them (see, e.g., Refs Ibarra et al. (2014); Bergstrom et al. (2013); Kopp (2013)), where typically the positron flux includes an exponential cut-off at high energies. Our parametrization for the electron and positron fluxes is shown in Eq. (23). For the propagation of charged cosmic rays in the astrophysical media, we use the MED parameters defined in Ref. Donato et al (2003).

In principle, both DM and the astrophysical contribution to the electron and positron fluxes are affected by the solar modulation. This can be explicitly taken into account by computing the flux at the top of the atmosphere () under the force field approximation, see Eq. (24). Therefore, under these assumptions our background already depends on 9 parameters

| (33) |

To get the total positron fraction, one would add to the background the additional contribution from DM annihilation, which in principle depends on the solar modulation parameter as well, see Eq. (25).

In principle, the best thing would be to perform a fit to the AMS02 data where, for each value of the DM mass, the minimum of the is searched for after marginalizing over the 9 parameters listed above. However, this is computationally rather expensive. Furthermore, we found that severe degeneracies take place among the different parameters, which complicates the problem even further.

However, the problem can be considerably simplified by considering the following. In principle, the positron flux will be the most sensitive to the DM annihilation signal, while the electron flux will be mainly dominated by astrophysical backgrounds instead. This allows to reduce the number of parameters in the fit significantly: since the electron flux will be independent from the signal, it can be fitted independently and leave the parameters fixed during the fit. This is done using the parametrization in Eq. (23) for the electron flux, and the publicly available data999The AMS02 data in Ref. Aguilar et al. (2013) contains only the positron fraction and fluxes, but not the electron data. Therefore, we use the Fermi LAT data in this case, which is publicly available Ackermann et al. (2010). on the electron flux from the Fermi LAT collaboration Ackermann et al. (2010).

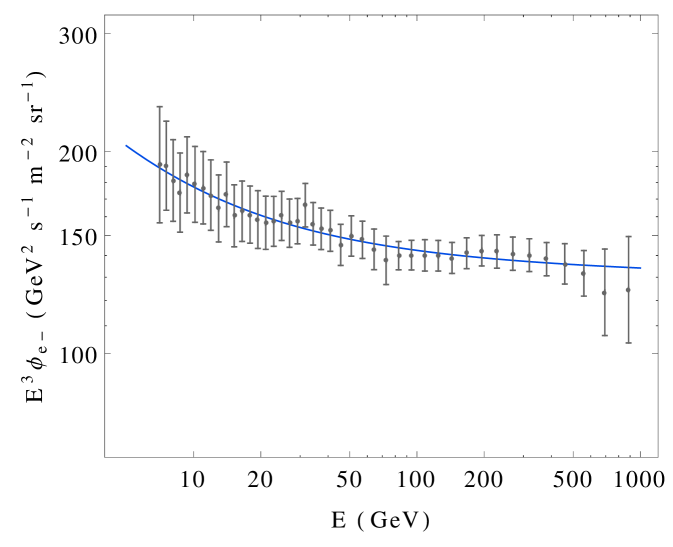

When fitting the Fermi LAT data, a fit is performed considering both the low-energy and high-energy data sets, between 7 GeV and 1 TeV. The resulting curve is shown in the left panel in Fig. 4, together with the data points as extracted from Ref. Ackermann et al. (2010). The uncertainties in each bin are computed by adding in quadrature their statistical and systematic errors.101010In the case of asymmetric systematic errors, we (conservatively) take the largest value. We find that the parameters which give a best-fit to the Fermi LAT electron flux data are

| (34) |

For these values of the parameters, we find a minimum .

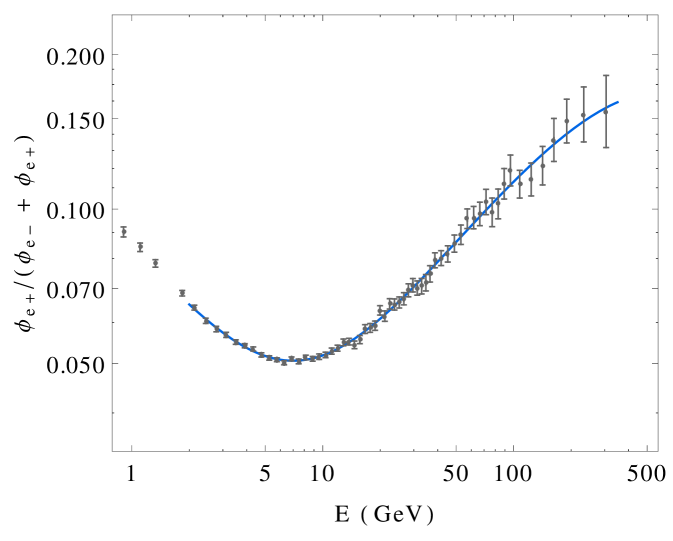

Finally, when deriving the constraint from AMS data, the total positron flux is obtained as the addition of the astrophysical background (which depends on and ) and the contribution from DM annihilation. In the absence of an extra contribution from DM annihilation, we find that the following parameters give a best-fit to the positron fraction data from AMS

| (35) |

with . The positron fraction obtained with these parameters can be seen in the right panel in Fig. 4 together with the AMS data. Again in this case, the errors are taken as the sum in quadrature of the statistical and systematic errors in each bin.

In our simulations, however, we include the DM annihilation to the positron flux and we let the parameters in Eq. (33) vary during the fit. When doing so, we find that the solar modulation parameter does not have a major impact in the fit while it generates some numerical degeneracies with other parameters, which are difficult to deal with. Therefore, we also fix this parameter to the value which gives a best-fit to the AMS02 data using the background contribution alone. The rest of the parameters in Eq. (33) are left free during the fit, and will be fitted for each value of the DM mass and the couplings in an independent way.

Finally, even though in our simulations we consider several couplings between the DM and SM particles at once, it is useful to look at the limits obtained when only one coupling to a SM fermion is allowed at a time. This allows to illustrate the interplay between different data sets and where the tension in the fit for low values of the DM mass may come from. The limits on the DM-SM interaction cross section are shown in Fig. 5 for leptons and in Fig. 6 for quarks, as a function of the DM mass. Since in our fit we are combining AMS with relic abundance constraints, a significant tension will only take place when the limit on the interaction cross section gets below the value needed for relic abundance. Therefore, one can already see from this figure that the AMS data will be most effective in constraining the couplings of the DM to electrons (for GeV) and muons (for GeV), but will be less efficient for other DM fermions (for instance, for taus it will only significantly affect the fit for GeV).

References

- Bergstrom (2000) L. Bergstrom, Rept. Prog. Phys. 63, 793 (2000), eprint hep-ph/0002126.

- Tulin et al. (2013) S. Tulin, H.-B. Yu, and K. M. Zurek, Phys. Rev. D87, 115007 (2013), eprint 1302.3898.

- Hochberg et al. (2014) Y. Hochberg, E. Kuflik, T. Volansky, and J. G. Wacker, Phys. Rev. Lett. 113, 171301 (2014), eprint 1402.5143.

- Vincent et al. (2015a) A. C. Vincent, P. Scott, and A. Serenelli, Phys. Rev. Lett. 114, 081302 (2015a), eprint 1411.6626.

- Vincent et al. (2015b) A. C. Vincent, A. Serenelli, and P. Scott, JCAP 1508, 040 (2015b), eprint 1504.04378.

- Beltran et al. (2009) M. Beltran, D. Hooper, E. W. Kolb, and Z. C. Krusberg, Phys. Rev. D80, 043509 (2009), eprint 0808.3384.

- Beltran et al. (2010) M. Beltran, D. Hooper, E. W. Kolb, Z. A. C. Krusberg, and T. M. P. Tait, JHEP 09, 037 (2010), eprint 1002.4137.

- Fox et al. (2011) P. J. Fox, R. Harnik, J. Kopp, and Y. Tsai, Phys. Rev. D84, 014028 (2011), eprint 1103.0240.

- Fox et al. (2012) P. J. Fox, R. Harnik, J. Kopp, and Y. Tsai, Phys. Rev. D85, 056011 (2012), eprint 1109.4398.

- Mambrini and Zaldivar (2011) Y. Mambrini and B. Zaldivar, JCAP 1110, 023 (2011), eprint 1106.4819.

- Cheung et al. (2012a) K. Cheung, P.-Y. Tseng, Y.-L. S. Tsai, and T.-C. Yuan, JCAP 1205, 001 (2012a), eprint 1201.3402.

- Cabrera et al. (2013) M. E. Cabrera, J. A. Casas, and R. R. de Austri, JHEP 07, 182 (2013), eprint 1212.4821.

- Roszkowski et al. (2012) L. Roszkowski, E. M. Sessolo, and Y.-L. S. Tsai, Phys. Rev. D86, 095005 (2012), eprint 1202.1503.

- Strege et al. (2014) C. Strege, G. Bertone, G. J. Besjes, S. Caron, R. Ruiz de Austri, A. Strubig, and R. Trotta, JHEP 09, 081 (2014), eprint 1405.0622.

- Cheung et al. (2012b) K. Cheung, Y.-L. S. Tsai, P.-Y. Tseng, T.-C. Yuan, and A. Zee, JCAP 1210, 042 (2012b), eprint 1207.4930.

- Akerib et al. (2014) D. S. Akerib et al. (LUX), Phys. Rev. Lett. 112, 091303 (2014), eprint 1310.8214.

- Ahmed et al. (2011) Z. Ahmed et al. (CDMS, EDELWEISS), Phys. Rev. D84, 011102 (2011), eprint 1105.3377.

- Aguilar et al. (2013) M. Aguilar et al. (AMS), Phys.Rev.Lett. 110, 141102 (2013).

- Ackermann et al. (2015) M. Ackermann et al. (Fermi-LAT) (2015), eprint 1503.02641.

- Ade et al. (2015) P. A. R. Ade et al. (Planck) (2015), eprint 1502.01589.

- Aad et al. (2015) G. Aad et al. (ATLAS), Eur. Phys. J. C75, 299 (2015), eprint 1502.01518.

- Khachatryan et al. (2015) V. Khachatryan et al. (CMS), JHEP 01, 096 (2015), eprint 1411.6006.

- Choi et al. (2015) K. Choi et al. (Super-Kamiokande), Phys. Rev. Lett. 114, 141301 (2015), eprint 1503.04858.

- Aartsen et al. (2016) M. G. Aartsen et al. (IceCube) (2016), eprint 1601.00653.

- Kamenik and Smith (2012) J. F. Kamenik and C. Smith, JHEP 03, 090 (2012), eprint 1111.6402.

- Calibbi et al. (2015) L. Calibbi, A. Crivellin, and B. Zaldivar, Phys. Rev. D92, 016004 (2015), eprint 1501.07268.

- Froggatt and Nielsen (1979) C. D. Froggatt and H. B. Nielsen, Nucl. Phys. B147, 277 (1979).

- Leurer et al. (1993) M. Leurer, Y. Nir, and N. Seiberg, Nucl. Phys. B398, 319 (1993), eprint hep-ph/9212278.

- Leurer et al. (1994) M. Leurer, Y. Nir, and N. Seiberg, Nucl. Phys. B420, 468 (1994), eprint hep-ph/9310320.

- Ibanez and Ross (1994) L. E. Ibanez and G. G. Ross, Phys. Lett. B332, 100 (1994), eprint hep-ph/9403338.

- Binetruy and Ramond (1995) P. Binetruy and P. Ramond, Phys. Lett. B350, 49 (1995), eprint hep-ph/9412385.

- Crivellin et al. (2014) A. Crivellin, F. D’Eramo, and M. Procura, Phys. Rev. Lett. 112, 191304 (2014), eprint 1402.1173.

- D’Eramo and Procura (2015) F. D’Eramo and M. Procura, JHEP 04, 054 (2015), eprint 1411.3342.

- Kopp et al. (2009) J. Kopp, V. Niro, T. Schwetz, and J. Zupan, Phys. Rev. D80, 083502 (2009), eprint 0907.3159.

- Belanger et al. (2014) G. Belanger, F. Boudjema, A. Pukhov, and A. Semenov, Comput.Phys.Commun. 185, 960 (2014), eprint 1305.0237.

- Hall et al. (2010) L. J. Hall, K. Jedamzik, J. March-Russell, and S. M. West, JHEP 03, 080 (2010), eprint 0911.1120.

- Chen and Kamionkowski (2004) X.-L. Chen and M. Kamionkowski, Phys. Rev. D70, 043502 (2004), eprint astro-ph/0310473.

- Padmanabhan and Finkbeiner (2005) N. Padmanabhan and D. P. Finkbeiner, Phys. Rev. D72, 023508 (2005), eprint astro-ph/0503486.

- Slatyer et al. (2009) T. R. Slatyer, N. Padmanabhan, and D. P. Finkbeiner, Phys. Rev. D80, 043526 (2009), eprint 0906.1197.

- Slatyer (2013) T. R. Slatyer, Phys. Rev. D87, 123513 (2013), eprint 1211.0283.

- Lopez-Honorez et al. (2013) L. Lopez-Honorez, O. Mena, S. Palomares-Ruiz, and A. C. Vincent, JCAP 1307, 046 (2013), eprint 1303.5094.

- Ibarra et al. (2014) A. Ibarra, A. S. Lamperstorfer, and J. Silk, Phys. Rev. D89, 063539 (2014), eprint 1309.2570.

- Ackermann et al. (2010) M. Ackermann et al. (Fermi-LAT), Phys. Rev. D82, 092004 (2010), eprint 1008.3999.

- Galassi et al. (2013) M. Galassi et al., ISBN 0954612078 (2013), URL http://www.gnu.org/software/gsl/.

- Busoni et al. (2014) G. Busoni, A. De Simone, E. Morgante, and A. Riotto, Phys.Lett. B728, 412 (2014), eprint 1307.2253.

- Buchmueller et al. (2015) O. Buchmueller, M. J. Dolan, S. A. Malik, and C. McCabe, JHEP 01, 037 (2015), eprint 1407.8257.

- Abdallah et al. (2014) J. Abdallah et al. (2014), eprint 1409.2893.

- Buckley et al. (2015) M. R. Buckley, D. Feld, and D. Goncalves, Phys. Rev. D91, 015017 (2015), eprint 1410.6497.

- Abdallah et al. (2015) J. Abdallah et al. (2015), eprint 1506.03116.

- Abercrombie et al. (2015) D. Abercrombie et al. (2015), eprint 1507.00966.

- Goodman et al. (2010) J. Goodman, M. Ibe, A. Rajaraman, W. Shepherd, T. M. P. Tait, and H.-B. Yu, Phys. Rev. D82, 116010 (2010), eprint 1008.1783.

- Belyaev et al. (2013) A. Belyaev, N. D. Christensen, and A. Pukhov, Comput. Phys. Commun. 184, 1729 (2013), eprint 1207.6082.

- Alloul et al. (2014) A. Alloul, N. D. Christensen, C. Degrande, C. Duhr, and B. Fuks, Comput. Phys. Commun. 185, 2250 (2014), eprint 1310.1921.

- Skilling (2004) J. Skilling, AIP Conference Proceedings 735 pp. 395–405 (2004).

- Feroz et al. (2009) F. Feroz, M. P. Hobson, and M. Bridges, Mon. Not. Roy. Astron. Soc. 398, 1601 (2009), eprint 0809.3437.

- Bergstrom et al. (2013) L. Bergstrom, T. Bringmann, I. Cholis, D. Hooper, and C. Weniger, Phys. Rev. Lett. 111, 171101 (2013), eprint 1306.3983.

- Kopp (2013) J. Kopp, Phys. Rev. D88, 076013 (2013), eprint 1304.1184.

- Donato et al (2003) F. Donato, N. Fornengo, D. Maurin, and P. Salati, Phys.Rev. D69, 063501 (2004), eprint astro-ph/0306207.