Globally Stable Neural Imitation Policies

Abstract

Imitation learning mitigates the resource-intensive nature of learning policies from scratch by mimicking expert behavior. While existing methods can accurately replicate expert demonstrations, they often exhibit unpredictability in unexplored regions of the state space, thereby raising major safety concerns when facing perturbations. We propose SNDS, an imitation learning approach aimed at efficient training of scalable neural policies while formally ensuring global stability. SNDS leverages a neural architecture that enables the joint training of the policy and its associated Lyapunov candidate to ensure global stability throughout the learning process. We validate our approach through extensive simulations and deploy the trained policies on a real-world manipulator arm. The results confirm SNDS’s ability to address instability, accuracy, and computational intensity challenges highlighted in the literature, positioning it as a promising solution for scalable and stable policy learning in complex environments.

I Introduction

Imitation learning (IL) is a pivotal notion attempting to overcome the diverse safety and complexity challenges of policy learning by emulating expert behavior [1, 2]. IL facilitates the training of intricate motion policies without resorting to an exhaustive search in the robot’s state space [3, 4]. Nonetheless, only a handful of IL methods offer formal guarantees pertaining to the stability of the resulting policies. Global stability assumes a critical role when deploying the policy in stochastic environments prone to external perturbations. Notably, it assures that the policy can recover effectively and predictably to a predetermined target, even in uncharted regions of the state space that lie beyond the scope of expert demonstrations.

Prior research in safe IL is focused on autonomous and time-invariant dynamical systems to model and train motion policies [4]. These policies are optimized across a set of expert trajectories, yielding a proper action (velocity) contingent on the current state (position). The optimization process ensures global stability through established theoretical frameworks, such as Lyapunov or contraction theories [5, 6, 7, 8, 9, 10, 11]. Nevertheless, reproducing complex trajectories in high-dimensional state spaces with the previous methods can be impractical due to computational complexity, non-convex optimization, and sample inefficiency. To address computational cost, previous research [7, 9, 11] tends to restrict the class of functions of the contraction metric or the Lyapunov candidate, which in turn limits the imitation performance of the optimized policy. Most notably, a common limitation of these methods appears in the absence of neural policy representation, which are recognized for their scalability, efficient gradient-based optimization, and domain transfer capabilities [12].

Recently developed stable neural policies [13, 14] mainly employ diffeomorphism and invertible mappings to transform a simple stable policy into a highly nonlinear one. Yet, the training stage requires multiple demonstrations, leads to policies with quasi-stability, and hinders incremental learning for slightly different expert trajectories. Neural IL methods, on the other hand, offer flexible and precise policies [15, 16, 17, 18], but lack the required safety and reliability outside the region covered by expert demonstrations and are often data hungry. Similar limitations persist in inverse reinforcement learning [19, 20], where computational complexity is fueled by having reinforcement learning in an inner loop [15].

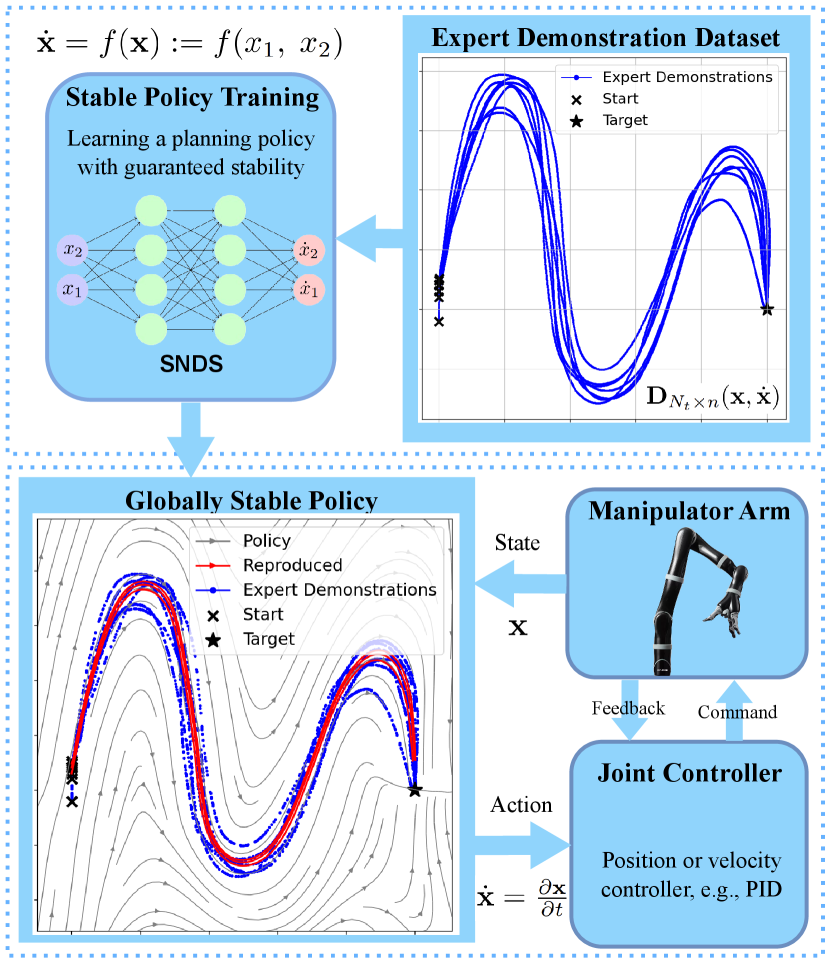

To tackle these challenges, we represent policies with Stable Neural Dynamical Systems (SNDS), where we jointly train a neural dynamical system policy, alongside a secondary, convex-by-design network that guarantees global stability. Subsequently, a joint optimization process trains the neural policy by minimizing a novel differentiable loss aimed at strengthening the alignment between policy rollouts and expert demonstrations. SNDS, therefore, benefits from an expressive and stable neural representation, allowing for safe and scalable approximation of the underlying dynamical system. We present an overview of our framework in Figure 1, and outline our key contributions below.

-

•

Designing SNDS—a stable-by-design neural representation for nonlinear dynamical systems as motion policies based on expert demonstrations.

-

•

Providing formal stability analysis founded on Lyapunov theory and convex neural networks.

-

•

Formulating a differentiable trajectory alignment loss function, inspired by forward Euler’s method.

-

•

Empirical evaluation of SNDS’s effectiveness in higher state space dimensions for complex trajectories, both in simulation and real-world scenarios.

II Background

II-A Preliminaries

Dynamical system. A dynamical system (DS) describes the evolution of a state, , in the ambient space , over time [21]. We consider an autonomous, time-invariant DS modeled with a first-order ordinary differential equation, , where the system yields the time derivative for each , without any control input and independent of time.

Lyapunov stability theory. is globally asymptotically stable (GAS) at an equilibrium, , if for any initial state, the system approaches as time progresses towards infinity. The Lyapunov stability theory is a widely used tool to analyze the GAS property of dynamical systems. According to the theory, a system exhibits GAS if there exists a positive-definite function , referred to as the Lyapunov potential function (LPF), satisfying for all , and at the equilibrium.

Input convex neural networks (ICNN). ICNN’s special architecture enables universal approximation of convex functions while maintaining convexity during training [22, 23]. An -layer ICNN, , is formulated as follows for :

where denotes the layer output, and and are real-value weights. In contrast to feedforward networks, ICNN design exploits , that are positive weights connecting the input directly to each layer, and can be any convex, non-decreasing activation function.

II-B Problem statement

Consider a policy that functions within a state space denoted by . The state space may correspond to a robot’s task () or configuration () space. The policy outputs an action in , such as velocity or torque, which determines the change in the state over time. We denote the state variable by , and assume that the yielded action indicates the time derivative of the current state, denoted by . In this context, our primary objective is to learn a globally stable imitation policy to map to , provided a dataset of expert’s state-action pairs.

Let be the number of trajectories demonstrated by the expert. Each trajectory contains state-actions. The dataset of expert trajectories stacks all state-action pairs,

| (1) |

where is the dataset entry corresponding to the -th sample of the -th trajectory. The dataset holds samples. We assume that the trajectories share a common target endpoint, , and have zero velocity at the target, i.e., and .

The mapping between states and actions can be modeled with a time-invariant autonomous DS [5], denoted by:

| (2) |

In Equation 2, the function corresponds to an ordinary differential equation describing the true underlying DS. The term accounts for the effect of measurement noise present in the expert’s demonstrations, which is incorporated into the estimated DS. Our subsequent objective is to acquire a noise-free approximation of , denoted as . This estimated function, , can be perceived as a policy that maps states to actions in real-time, thereby guiding the robot with the right set of actions to imitate the demonstrated trajectories within the state space.

Alternatively, a trajectory can be generated through forward Euler’s method. Imagine a robot situated at , the policy generates an action , and the next state is subsequently calculated through , where determines the granularity of the discretization.

III Methodology

We model the policy, , and the corresponding LPF, , with two neural networks in Section III-A. This allows us to accurately imitate an expert’s behavior, while providing formal GAS analysis in Section III-B. Lastly, we introduce a differentiable loss function in Section III-C to improve both sample efficiency and imitation accuracy of SNDS.

III-A Dynamical system policy formulation

Let be a standard and unrestricted feedforward neural network which models the unstable policy. Although the model can capture intricate expert demonstrations, training solely on results in an unstable policy which cannot predictably recover even from mild perturbations, as depicted in Figure 2. Hence, in this section, we enforce the trained policy to have the GAS property by satisfying the conditions of Lyapunov stability theory in Section II-A.

The first step is to define a positive-definite and differentiable LPF. As mentioned in Section II-A, ICNNs can approximate any convex function. To ensure that the LPF is positive definite, we employ a well-studied technique [17, 18] to define it as,

| (3) |

where is still a convex function. The first term ensures , while the addition of the state’s -norm with a negligible , along with convexity of ICNN guarantee that . Hence, the LPF with this architecture, , satisfies the positive-definite condition required by Lyapunov stability theory.

Next, to satisfy the negative derivative condition of Lyapunov theory, i.e., , we modify the projection expression in [23] to enforce GAS while training . The policy resulting from projecting onto the half space, , is formulated as follows,

| (4) |

In Equation 4, if the network output fails to meet the Lyapunov condition, i.e., , the output is projected so that always fulfills the condition. The process is simplified with a ReLU activation function. Note that the original formulation in [23] enforces exponential stability, which is too restrictive for our problem. Hence, we further relax the projection to guarantee GAS.

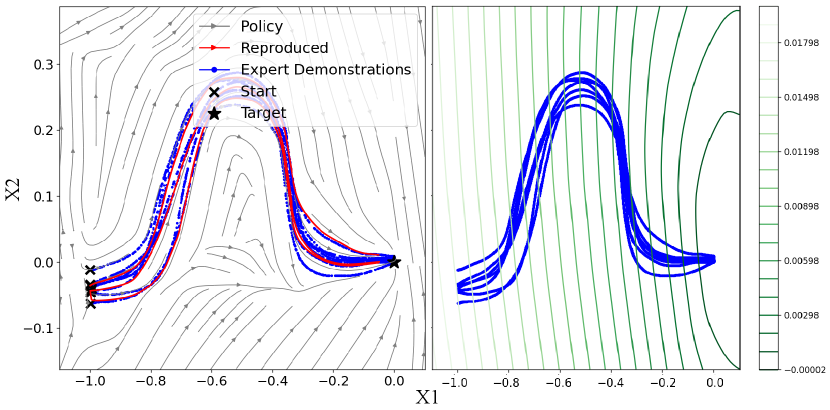

Figure 3 portrays an example of trained and , and the resulting global stability. The differentiable and globally stable , can be trained using efficient gradient-based methods within its parameters, as long as and are defined using automatic differentiation tools.

III-B Global asymptotic stability guarantees

To establish that defines a function with GAS property, we use proof by contradiction to show that regardless of initial state, , every trajectory converges to the target, that is, .

Proposition 1.

The dynamical system, , in Equation 4 is globally asymptotically stable with the Lyapunov function, , defined in Equation 3, and any two arbitrary networks, and , with bounded real-valued weights.

We leverage proof by contradiction for this proposition. Let be a trajectory not converging to the target, meaning that . Since is decreasing, as formulated in Equation 4, and , as defined in Equation 3, there must exist a value such that . We reason that for every .

Now consider the set of all . This set is compact. More precisely, for any open cover 111An open cover for a set is a collection of open sets whose union contains the original set. of that set, there exists a finite subcover, which means that a finite number of the open sets from the cover are sufficient to cover the original set. Hence, takes its supremum, and over this set. This conclusion is justified based on the projection introduced in Equation 4. The core integration in the proof of Lyapunov theorem,

indicates a contradiction when, and :

The above equation contradicts the fact that . Hence, all trajectories must converge to the desired target, .

Remark 2.

Proposition 1 only requires to be convex. The policy network, , remains fairly unrestricted to capture intricate expert demonstrations.

Thus, the proposed SNDS formally establishes global stability using an efficient gradient-based search for the LPF within the class of convex functions.

III-C SRVF training loss

When constructing an optimization loss, the literature often opts for the conventional Mean Squared Error (MSE) [5, 13, 3] to gauge the dissimilarity between the policy’s output and the actual velocity labels over each batch of data. Consequently, the MSE loss is solely focused on the precision of estimated velocity. While reproducing exact velocities is essential, even slight inaccuracies at one instance can lead to accumulated error in the reproduced trajectories. The accumulation happens as a result of using forward Euler’s method that relies on previous estimates of to yield the next ones. Therefore, a more effective loss function should promote the accuracy of trajectories generated through forward Euler’s method during policy rollouts.

Employing forward Euler’s method to generate a full trajectory makes the gradient-based optimization intractable as a result of repetitive integrations. To mitigate this effect, we propose a limited horizon combination of the Square-Root Velocity Functions (SRVF) [25] and traditional MSE to design the loss function. SRVF methods involve normalizing the curve’s velocity, with a focus on changes in curve shape. Assuming a trajectory , we shape the features by the definition:

| (5) |

Given the state-action pairs, , and the differentiable dynamics , we define the loss as,

| (6) |

where are discount factors decreasing as the loss horizon expands. The intuition behind Equation 6 is rather straightforward: is a differentiable function encapsulating both the current label mismatch, and deviation from the generated trajectory for a fixed horizon of consecutive samples. As long as the horizon is limited, stochastic optimizers, such as ADAM [26], can optimize the loss on expert’s training data.

IV Experiments

We utilize two sets of motion planning data in our experiments. Our primary dataset is sourced from the well-known LASA Handwriting dataset [24], which contains records of handwritten trajectories on a tablet. The second dataset consists of more complex motions gathered by [7] in the same way. Our real-world experiments are only based on the latter due to its complex nature.

IV-A Evaluation

We compare our approach against three existing baselines: Behavioral Cloning (BC) [27, 28], Linear Parameter-Varying Dynamical System (LPV-DS) [7], and Stable Dynamical System Learning Using Euclideanizing Flows (SDS-EF) [13]. Among these methods, only BC lacks formal global asymptotic stability guarantees. Nevertheless, comparing SNDS against BC enables us to gauge SNDS’s accuracy and global stability against an unrestricted neural policy.

To assess the effectiveness of SNDS in comparison with the named baselines, we carry out the learning process for each method on designated handwriting dataset motions. For each motion, we randomly split the demonstrated dataset in the dataset into train () and test () sets. The policy learning stage is carried out on the training data, and the optimal policy is used to generate policy rollouts, , using the Euler’s method. Policies are subsequently evaluated by calculating Mean-Squared Error (MSE),

| (7) |

and Dynamic Time Warping (DTW),

| (8) |

for handwriting dataset and experiments in higher state space dimensions to better capture discrepancies between policy rollouts and expert demonstrations. Within Equation 8, represents an alignment path, is the set of all admissible paths, is Euclidean distance [29], and . Notice that to calculate DTW, we need to generate an entire trajectory, while with MSE, only the generated actions are compared against true labels. We conduct the training and assessment procedures repeatedly with 10 random seeds, and present the mean and standard deviation of the results.

IV-B Handwriting dataset policies

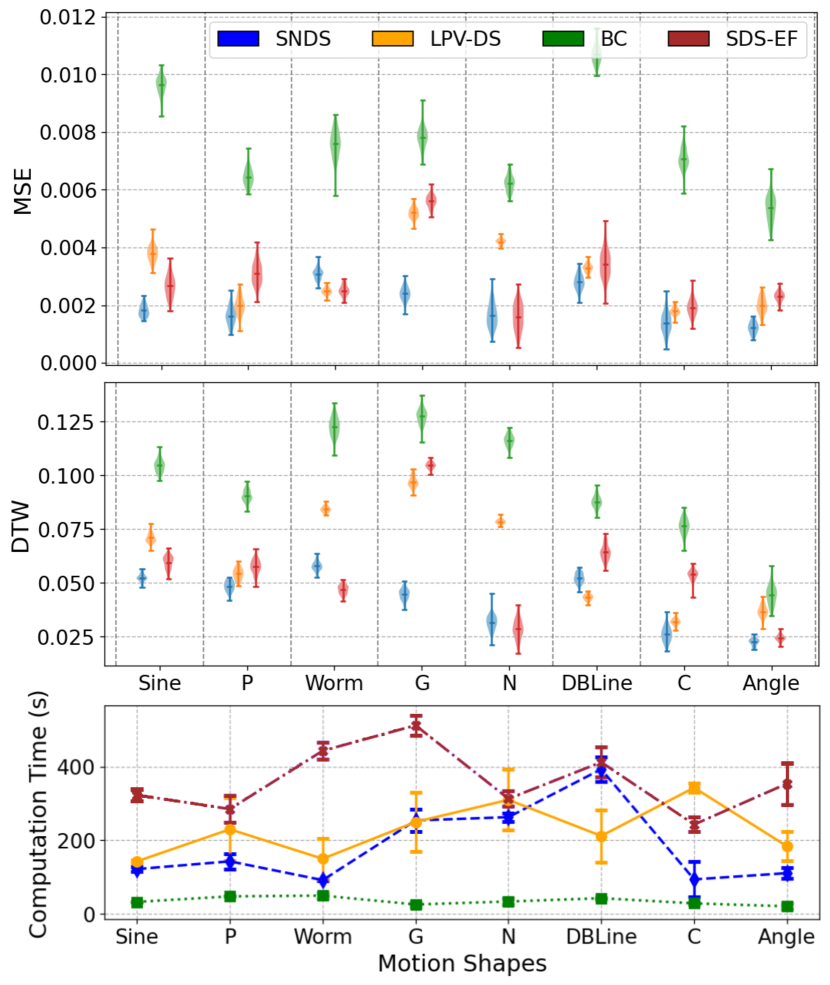

We apply SNDS and selected baselines to each set of demonstrations as explained in Section IV-A, and compare the reproduction accuracy and computation time 222Note that we restrict our computational resources to a single Core-i7 Gen8 CPU, despite that SNDS and SDS-EF can leverage parallel computing. across various motions in the handwriting dataset. Figure 4 presents a numerical comparison of both reproduction accuracy and computation time between SNDS and the selected baselines. The results indicate an acceptable level of reproduction accuracy while providing GAS certificates. In rare cases, SNDS shows a higher error compared to baselines, but outperforms others for the majority of elected motions, and maintains a reasonable computation time.

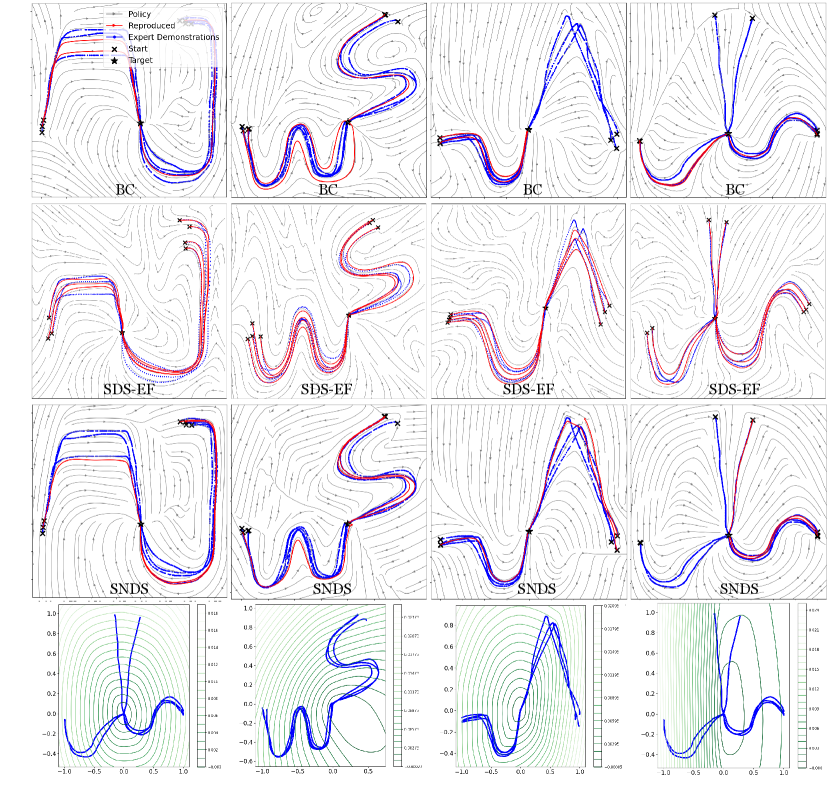

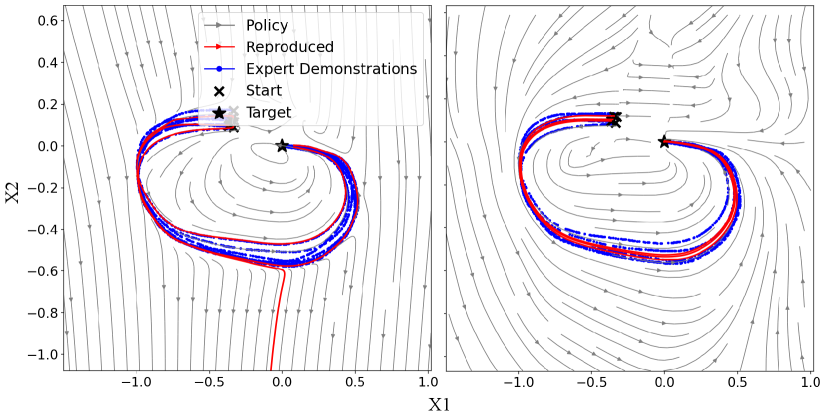

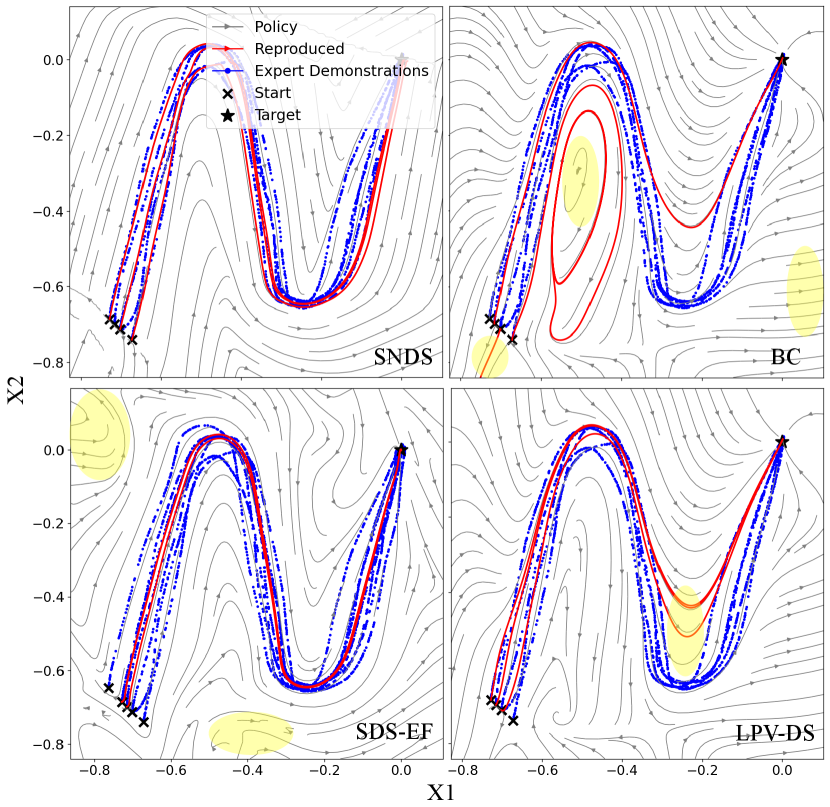

To closely examine the GAS property of each method, we plot the acquired policies using streamlines and generated two simulated rollouts with Euler’s method in Figure 5. It becomes apparent that SNDS, and LPV-DS provide predictability through guaranteeing GAS, while other approaches, such as BC and SDS-EF fail to render similar certificates. On a general note, even though we observe unstable policies trained with SDS-EF, instabilities occur only for a subset of motions in the handwriting dataset, and tend to intensify in regions further away from expert’s demonstrations.

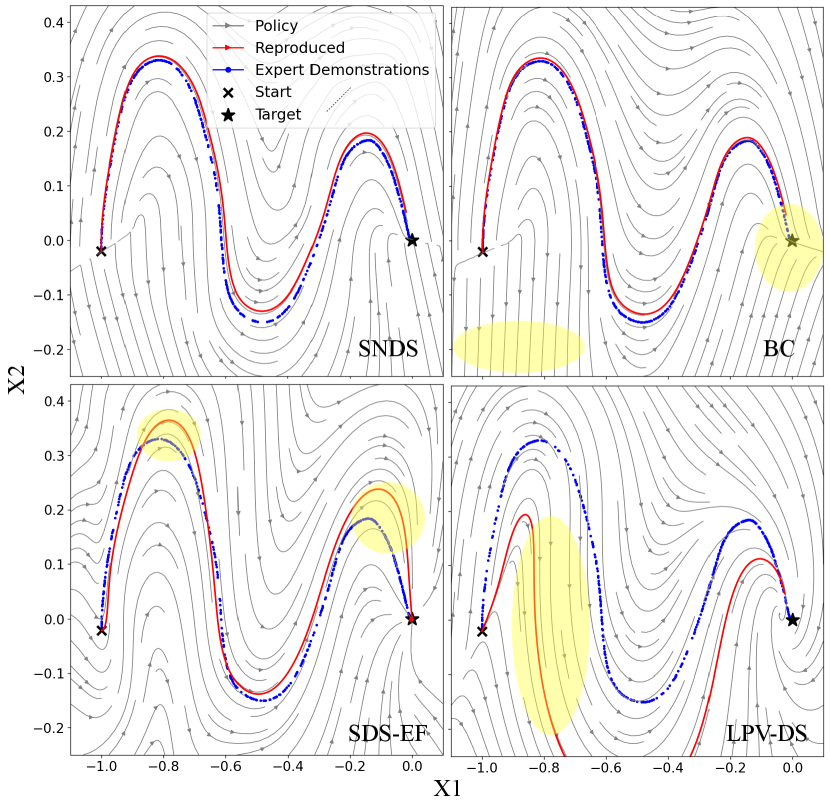

To further showcase the sample inefficiency associated with Gaussian mixture model and diffeomorphism-based methods, such as LPV-DS and SDS-EF, respectively, we repeat the experiments with the training set reduced to only a single demonstration. The learned policies in this scenario are illustrated in Figure 6, indicating the sample efficiency of SNDS when compared to LPV-DS and SDS-EF in the presence of limited demonstration samples.

IV-C SE(3) policy training

To illustrate the applicability of our method in high-dimensional state spaces, such as SE(3), we consider both the position and orientation of the robot and redefine the state variable, , accordingly. We pick the snake motion—an overly complex and nonlinear motion presented in [7] and 2 different motions in the handwriting dataset, namely Sine- and G-shaped motions, to consolidate our higher dimensional performances. We use synthetic demonstrations for orientation, as the demonstrations only comprise translation and linear velocity. After training SNDS on this data, the policy generates trajectories in SE(3) that guide the end-effector toward the desired pose.

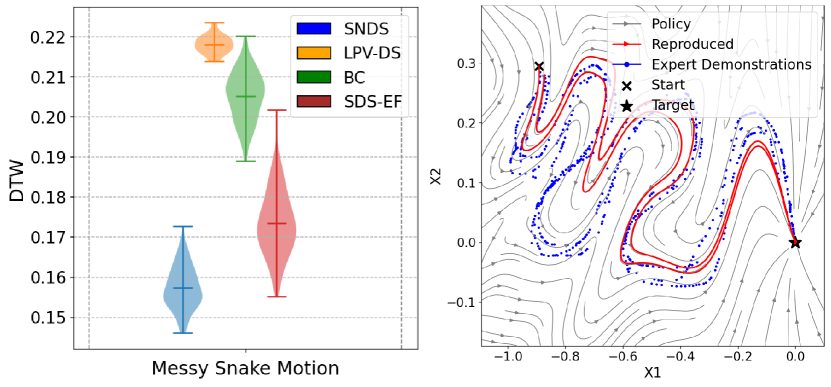

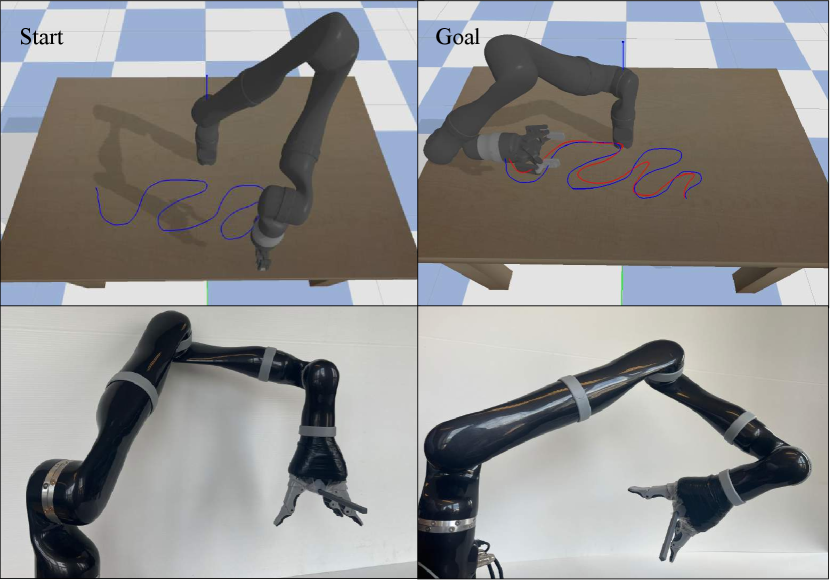

In Figure 7, we compare the performance of our method against the baselines trained with the Messy Snake data in SE(3). We utilize the DTW metric to evaluate the policy rollouts against the original trajectories. Next, we deploy the policy trained with SNDS on the simulated arm in PyBullet. Figure 8 offers an overview of policy deployment in simulation trained on the Messy Snake data.

Finally, we deploy the learned policy directly on a similar real-world manipulator platform. Recordings of experiments conducted with the Kinova Jaco2 arm are part of the supplementary video. Upon viewing the footage, it is evident that the robot reproduces the same motion as the simulation. The smooth sim-to-real transfer is made possible by the presence of Lyapunov stability conditions. The learned policy is robust to model error, since the Lyapunov condition ensures that the robot consistently progresses toward the target.

V Discussion

The experiments demonstrate that employing SNDS to model a policy results in improved predictability and safety when recovering from states far beyond those covered in the original demonstrations. SNDS proves to be more effective by utilizing expressive and scalable neural architecture in comparison to the state of the art. Consequently, SNDS can acquire highly nonlinear stable policies in larger state spaces with reduced computational overhead. Our approach also reduces the restrictive assumptions regarding the class of Lyapunov functions to convexity, while the adopted projection technique ensures a smooth training process on a variety of expert data, as evident by the experiments.

While SNDS offers global convergence and predictability, there remains a concern regarding physically impossible trajectories for robots to replicate, particularly when considering manipulators and their joint or torque limits. Future enhancements could address this by incorporating safe regions using control barrier functions. Moreover, it is worth noting that modeling the Lyapunov candidate as a strictly convex function is not an essential requirement. Future research could explore invertible transformations to ease this restriction. Additionally, integrating obstacle avoidance and joint constraints into our formulation are promising paths to explore beyond the current scope. More ambitious extensions might delve into the implications of utilizing SNDS policies in reinforcement learning or training and deploying stable policies on legged robots, especially for gait control.

VI Conclusion

We outlined a training process to learn expressive neural policies through imitating expert demonstrations. We effectively enforce global stability throughout the training process by adhering to the conditions of Lyapunov stability theory. This ensures that the resulting policy reliably converges to a predetermined target, regardless of initial conditions, velocity and time variations, or unexpected perturbations encountered during deployment. Our theoretical findings are accompanied by simulation and real-world benchmarking against best-performing methods in the field.

VII Reproducability

Network architecture and hyperparameters. Our approach employs a 4-hidden layer feed-forward neural network for policy, with Leaky ReLU activations and layer sizes of 2-256-256-128-128-2. For the Lyapunov function, we utilize an ICNN architecture [22] with 3 hidden layers of 2-128-128-128-1 nodes along with Leaky ReLU and Soft plus activations as required by the original design. We set and to limit the cost function’s horizon. Other parameters, e.g., learning rate and batch size, are selected and explained in our code repository. We use grid-search to pick the hyperparameters if computationally feasible.

Datasets specifications. The Handwriting Dataset [24] contains 30 sets of 2D handwriting motions recorded from a Tablet-PC via user input. There are seven demonstrations per motion, starting from slightly different initial positions but ending at (0, 0). Each demonstration encompasses position (2 × 1000) as learning features and velocity (2 × 1000) as labels. We pick the following representative set of motion for our experiments: Sine, P, Worm, G, N, DBLine, C, Angle. Note that the Messy Snake motion is not a part of this dataset. The Messy Snake data, gathered in [7], comprises three demonstrations with various sample sizes per demonstration, totaling to (2 × 1592), and we sample a single demonstration for policy learning and deployment.

Computational resources. We utilize a single machine with NVIDIA GeForce RTX 4050 GPU, Intel Core i7-13620H CPU, and 32 GB DDR4 RAM.

Codebase and reproduction. Consult README.md in our GitHub repository github.com/aminabyaneh/stable-imitation-policy to access SNDS experiments.

Acknowledgment

We express our heartfelt gratitude to Anya Forestell for her invaluable hardware support during our experiments.

References

- [1] S. Schaal, “Is imitation learning the route to humanoid robots?” Trends in cognitive sciences, vol. 3, no. 6, pp. 233–242, 1999.

- [2] A. Hussein, M. M. Gaber, E. Elyan, and C. Jayne, “Imitation learning: A survey of learning methods,” ACM Computing Surveys (CSUR), vol. 50, no. 2, pp. 1–35, 2017.

- [3] N. Figueroa and A. Billard, “Locally active globally stable dynamical systems: Theory, learning, and experiments,” The International Journal of Robotics Research, vol. 41, no. 3, pp. 312–347, 2022.

- [4] M. Hersch, F. Guenter, S. Calinon, and A. Billard, “Dynamical system modulation for robot learning via kinesthetic demonstrations,” IEEE Transactions on Robotics, vol. 24, no. 6, pp. 1463–1467, 2008.

- [5] S. M. Khansari-Zadeh and A. Billard, “Learning stable nonlinear dynamical systems with gaussian mixture models,” IEEE Transactions on Robotics, vol. 27, no. 5, pp. 943–957, 2011.

- [6] S. M. Khansari-Zadeh and A. Billard, “Learning control Lyapunov function to ensure stability of dynamical system-based robot reaching motions,” Robotics and Autonomous Systems, vol. 62, no. 6, pp. 752–765, 2014.

- [7] N. Figueroa and A. Billard, “A physically-consistent bayesian non-parametric mixture model for dynamical system learning,” in 2nd Annual Conference on Robot Learning, 2018, pp. 927–946.

- [8] K. Neumann and J. J. Steil, “Learning robot motions with stable dynamical systems under diffeomorphic transformations,” Robotics and Autonomous Systems, vol. 70, pp. 1–15, 2015.

- [9] H. Ravichandar, I. Salehi, and A. Dani, “Learning partially contracting dynamical systems from demonstrations,” in Conference on Robot Learning. PMLR, 2017, pp. 369–378.

- [10] F. Khadivar, I. Lauzana, and A. Billard, “Learning dynamical systems with bifurcations,” Robotics and Autonomous Systems, vol. 136, p. 103700, 2021.

- [11] A. Abyaneh and H.-C. Lin, “Learning Lyapunov-stable polynomial dynamical systems through imitation,” in 7th Annual Conference on Robot Learning, 2023.

- [12] Y.-C. Chang, N. Roohi, and S. Gao, “Neural Lyapunov control,” Advances in neural information processing systems, vol. 32, 2019.

- [13] M. A. Rana, A. Li, D. Fox, B. Boots, F. Ramos, and N. Ratliff, “Euclideanizing flows: Diffeomorphic reduction for learning stable dynamical systems,” in Learning for Dynamics and Control. PMLR, 2020, pp. 630–639.

- [14] J. Zhang, H. B. Mohammadi, and L. Rozo, “Learning riemannian stable dynamical systems via diffeomorphisms,” in 6th Annual Conference on Robot Learning, 2022.

- [15] J. Ho and S. Ermon, “Generative adversarial imitation learning,” Advances in neural information processing systems, vol. 29, p. 4572–4580, 2016.

- [16] J. Fu, K. Luo, and S. Levine, “Learning robust rewards with adversarial inverse reinforcement learning,” in International Conference on Learning Representations, 2018.

- [17] H. Dai, B. Landry, L. Yang, M. Pavone, and R. Tedrake, “Lyapunov-stable neural-network control,” in Robotics: Science and Systems, 2021.

- [18] A. Coulombe and H.-C. Lin, “Generating stable and collision-free policies through Lyapunov function learning,” in International Conference on Robotics and Automation, 2023, pp. 3037–3043.

- [19] P. Abbeel and A. Y. Ng, “Apprenticeship learning via inverse reinforcement learning,” in International conference on Machine learning, 2004, p. 1.

- [20] B. D. Ziebart, A. L. Maas, J. A. Bagnell, A. K. Dey, et al., “Maximum entropy inverse reinforcement learning.” in Association for the Advancement of Artificial Intelligence, vol. 8, 2008, pp. 1433–1438.

- [21] R. L. Devaney, An introduction to chaotic dynamical systems. CRC press, 2021.

- [22] B. Amos, L. Xu, and J. Z. Kolter, “Input convex neural networks,” in International Conference on Machine Learning. PMLR, 2017, pp. 146–155.

- [23] J. Z. Kolter and G. Manek, “Learning stable deep dynamics models,” Advances in neural information processing systems, vol. 32, 2019.

- [24] S. M. Khansari-Zadeh and A. Billard, “LASA Handwriting Dataset,” https://cs.stanford.edu/people/khansari/download.html#SEDS_reference, 2011.

- [25] M. Bruveris, “Optimal reparametrizations in the square root velocity framework,” SIAM Journal on Mathematical Analysis, vol. 48, no. 6, pp. 4335–4354, 2016.

- [26] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in International Conference on Learning Representations, 2015.

- [27] D. A. Pomerleau, “Alvinn: An autonomous land vehicle in a neural network,” in Advances in neural information processing systems, vol. 1, 1988, pp. 305–313.

- [28] F. Torabi, G. Warnell, and P. Stone, “Behavioral cloning from observation,” in Proceedings of the 27th International Joint Conference on Artificial Intelligence, 2018, pp. 4950–4957.

- [29] E. Keogh and C. A. Ratanamahatana, “Exact indexing of dynamic time warping,” Knowledge and information systems, vol. 7, pp. 358–386, 2005.

Appendix

VII-A Efficient trajectory generation

Section III-C introduces a novel loss that penalizes discrepancies not only in the action (velocity) space but also in the state space. Penalizing the policy in the state space is intuitive: by using the forward Euler method, we can efficiently estimate the loss directly in the state space. Consider a scenario where all but one of the velocities are perfectly imitated. Even a single discrepancy in velocity can lead to a significant deviation in the state space, resulting in a substantial loss, while the corresponding loss in the action space remains minimal.

To address this issue, the loss formulation relies on short-horizon, efficient forward simulation of the policy to obtain trajectories in the state space. In this section, we briefly discuss two methods for generating such trajectories.

Direct solution. Remember the following equation in Section III-C:

where we can simply calculate a limited number of Euler steps to optimize the policy in the state space. This can be readily implemented by solving the DS policy for a given initial state using the Euler method. By selecting a sufficiently small step size, , and an appropriate solution horizon, , it is possible to train accurate policies while maintaining acceptable computation speed. Importantly, when the policy is modeled with automatic differentiation tools, the entire solution remains differentiable. Additionally, with a small horizon, the memory consumption and computational complexity are manageable. This is our chosen approach due to its efficiency and ease of implementation.

Fixed-point differentiation. Another approach involves solving the DS policy for a fixed horizon and given initial condition using the implicit function theorem. This method works by treating the entire trajectory as the solution of an implicit equation governing the system’s dynamics—in this case, our DS policy, . Unlike traditional numerical methods (like Euler’s method), which explicitly compute each step of the trajectory, the implicit function theorem enables backpropagation through the entire trajectory by implicitly differentiating the final state with respect to the initial conditions and model parameters. This allows for efficient gradient computation in a memory-friendly way, as only the final state and system dynamics need to be maintained, avoiding the storage of all intermediate states. Solving the DS policy through the implicit function theorem offers a powerful alternative to explicit numerical methods, particularly when managing memory and computational resources. However, we observed that for small horizons (a few forward Euler steps), the numerical method is computationally faster, and the additional memory consumption is negligible. Therefore, while the implicit function approach is valuable for more complex or extended horizons, the simplicity and efficiency of the numerical method make it preferable for short horizons in our use case.

VII-B Smoothness and numerical stability

Non-smooth behavior can be observed in the policy, and is attributed to the use of a non-differentiable activation function with the projection operation, which introduces discontinuities in the gradient at zero. Even if the activation function is smooth, the denominator in Equation 4 can become infinitesimal, essentially breaking the training process. To address this issue, we propose substituting ReLU with a smooth approximation, such as Softplus, or a customized activation suggested in [23]. Once the activation function is smooth and differentiable, incorporating a small regularization term in the denominator of the projection operation in Equation 4 helps to prevent abrupt changes in the output by stabilizing the computation when the denominator approaches zero.

The smoothness of all trajectories is of utmost importance, especially when imitating expert behavior in the real-world, where the robot is expected to follow smooth and reliable trajectories in the entire state space. Further refinements can be achieved by applying gradient clipping, which controls extreme gradient values that could otherwise contribute to non-smoothness. By limiting the gradient magnitude, we ensure that the function behaves more consistently across different input values. One last precaution is to ensure that the underlying Lyapunov candidate is approximated smoothly, possibly by adjusting its architecture to a Lipschitz bounded network.

VII-C Additional experiments

For additional experiments, we pick the multimodel motions in the handwriting dataset. These motions consist of multiple motions converging to a global equilibrium from different directions, and with initial states that are far apart. Figure 9 presents a thorough collection of empirical results, as SNDS is compared against two other baselines: SDS-EF and BC.