Going Deeper into Permutation-Sensitive Graph Neural Networks

Abstract

The invariance to permutations of the adjacency matrix, i.e., graph isomorphism, is an overarching requirement for Graph Neural Networks (GNNs). Conventionally, this prerequisite can be satisfied by the invariant operations over node permutations when aggregating messages. However, such an invariant manner may ignore the relationships among neighboring nodes, thereby hindering the expressivity of GNNs. In this work, we devise an efficient permutation-sensitive aggregation mechanism via permutation groups, capturing pairwise correlations between neighboring nodes. We prove that our approach is strictly more powerful than the 2-dimensional Weisfeiler-Lehman (2-WL) graph isomorphism test and not less powerful than the 3-WL test. Moreover, we prove that our approach achieves the linear sampling complexity. Comprehensive experiments on multiple synthetic and real-world datasets demonstrate the superiority of our model.

1 Introduction

The invariance to permutations of the adjacency matrix, i.e., graph isomorphism, is a key inductive bias for graph representation learning [1]. Graph Neural Networks (GNNs) invariant to graph isomorphism are more amenable to generalization as different orderings of the nodes result in the same representations of the underlying graph. Therefore, many previous studies [2, 3, 4, 5, 6, 7, 8] devote much effort to designing permutation-invariant aggregators to make the overall GNNs permutation-invariant (permutation of the nodes of the input graph does not affect the output) or permutation-equivariant (permutation of the input permutes the output) to node orderings.

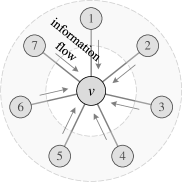

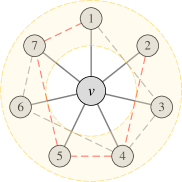

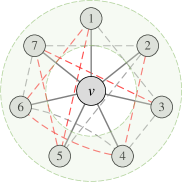

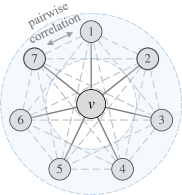

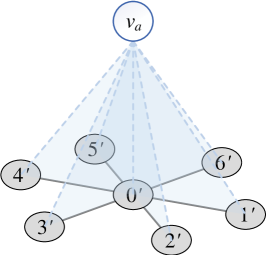

Despite their great success, Kondor et al. [9] and de Haan et al. [10] expound that such a permutation-invariant manner may hinder the expressivity of GNNs. Specifically, the strong symmetry of these permutation-invariant aggregators presumes equal statuses of all neighboring nodes, ignoring the relationships among neighboring nodes. Consequently, the central nodes cannot distinguish whether two neighboring nodes are adjacent, failing to recognize and reconstruct the fine-grained substructures within the graph topology. As shown in Figure 1(a), the general Message Passing Neural Networks (MPNNs) [4] can only explicitly reconstruct a star graph from the 1-hop neighborhood, but are powerless to model any connections between neighbors [11]. To address this problem, some latest advances [11, 12, 13, 14] propose to use subgraphs or ego-nets to improve the expressive power while preserving the property of permutation-invariance. Unfortunately, they usually suffer from high computational complexity when operating on multiple subgraphs [14].

In contrast, a permutation-sensitive (as opposed to permutation-invariant) function can be regarded as a “symmetry-breaking” mechanism, which breaks the equal statuses of neighboring nodes. Typical permutation-sensitive functions are Recurrent Neural Networks (RNNs), e.g., the Simple Recurrent Network (SRN) [15], Gated Recurrent Unit (GRU) [16], and Long Short-Term Memory (LSTM) [17]. The relationships among neighboring nodes, e.g., the pairwise correlation between each pair of neighboring nodes, are explicitly modeled in the permutation-sensitive paradigm. These pairwise correlations help capture whether two neighboring nodes are connected, thereby exploiting the local graph substructures to improve the expressive power. We illustrate a concrete example in Appendix D.

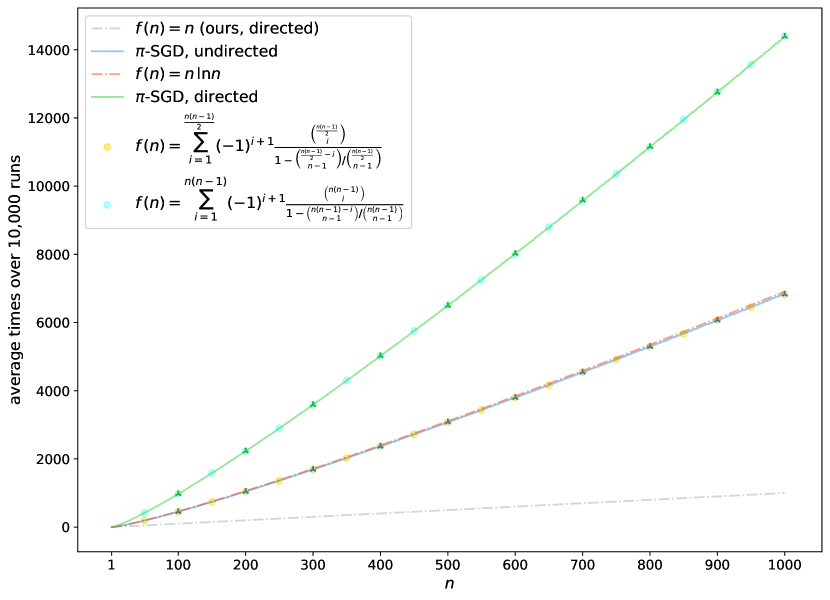

Different permutation-sensitive aggregation functions behave differently when modeling pairwise correlations. GraphSAGE with an LSTM aggregator [5] in Figure 1(b) is capable of modeling some pairwise correlations among the sampled subset of neighboring nodes. Janossy Pooling with the $\pi$-SGD strategy [18] in Figure 1(c) samples random permutations of all neighboring nodes, thus modeling pairwise correlations more efficiently. The number of modeled pairwise correlations is proportional to the number of sampled permutations. After sampling permutations with a costly nonlinear complexity of $\mathcal{O}(n \ln n)$ (see Appendix K for detailed analysis), all the pairwise correlations between neighboring nodes can be modeled and all the possible connections are covered.

In fact, previous works [18, 1] have shown that incorporating permutation-sensitive functions into GNNs is indeed an effective way to improve their expressive power. Janossy Pooling [18] and Relational Pooling [1] both design the most powerful GNN models by exploiting permutation-sensitive functions to cover all possible permutations. They explicitly learn all representations of the underlying graph with possible node orderings to guarantee the permutation-invariance and generalization capability, overcoming the limited generalization of permutation-sensitive GNNs [19]. However, the complete modeling of all permutations also leads to an intractable computational complexity $\mathcal{O}(|\mathcal{V}|!)$. Thus, we aim to design a powerful yet efficient GNN, which can guarantee the expressive power and significantly reduce the complexity with a minimal loss of generalization capability.

Different from explicitly modeling all permutations, we propose to sample a small number of representative permutations to cover all pairwise correlations (as shown in Figure 1(d)) by the permutation-sensitive functions. Accordingly, the permutation-invariance is approximated by the invariance to pairwise correlations. Moreover, we mathematically analyze the complexity of permutation sampling and reduce it from $\mathcal{O}(n \ln n)$ to $\mathcal{O}(n)$ via a well-designed Permutation Group (PG). Based on the proposed permutation sampling strategy, we then devise an aggregation mechanism and theoretically prove that its expressivity is strictly more powerful than the 2-WL test and not less powerful than the 3-WL test. Thus, our model is capable of significantly reducing the computational complexity while guaranteeing the expressive power. To the best of our knowledge, our model achieves the lowest time and space complexity among all the GNNs beyond the 2-WL test so far.

2 Related Work

Permutation-Sensitive Graph Neural Networks.

Loukas [20] first analyzes that it is necessary to sacrifice the permutation-invariance and -equivariance of MPNNs to improve their expressive power when nodes lose discriminative attributes. However, only a few models (GraphSAGE with LSTM aggregators [5], RP with $\pi$-SGD [18], CLIP [21]) are permutation-sensitive GNNs. These studies provide either theoretical proofs or empirical results that their approaches can capture some substructures, especially triangles, which can be regarded as special cases of our Theorem 4. Despite their powerful expressivity, the nonlinear complexity of sampling or coloring limits their practical application.

Expressive Power of Graph Neural Networks.

Xu et al. [7] and Morris et al. [22] first investigate the GNNs’ ability to distinguish non-isomorphic graphs and demonstrate that the traditional message-passing paradigm [4] is at most as powerful as the 2-WL test [23], which cannot distinguish some graph pairs like regular graphs with identical attributes. In order to theoretically improve the expressive power beyond the 2-WL test, a direct way is to equip nodes with distinguishable attributes, e.g., identifier [1, 20], port numbering [24], coloring [24, 21], and random feature [25, 26]. Another line of research [22, 8, 27, 28, 29, 30] considers high-order relations to design more powerful GNNs but suffers from high computational complexity when handling high-order tensors and performing global computations on the graph. Some pioneering works [10, 12] use the automorphism group of local subgraphs to obtain more expressive representations and overcome the problem of global computations, but their pre-processing stages still require solving the NP-hard subgraph isomorphism problem. Recent studies [24, 31, 20, 32] also characterize the expressive power of GNNs from the perspective of what they cannot learn.

Leveraging Substructures for Learning Representations.

Previous efforts mainly focused on the isomorphism tasks, but did little work on understanding their capacity to capture and exploit the graph substructure. Recent studies [21, 11, 10, 19, 33, 25, 34, 35, 36] show that the expressive power of GNNs is highly related to the local substructures in graphs. Chen et al. [11] demonstrate that the substructure counting ability of GNN architectures not only serves as an intuitive theoretical measure of their expressive power but also is highly relevant to practical tasks. Barceló et al. [35] and Bouritsas et al. [36] propose to incorporate some handcrafted subgraph features to improve the expressive power, while they require expert knowledge to select task-relevant features. Several latest advances [19, 34, 37, 38] have been made to enhance the standard MPNNs by leveraging high-order structural information while retaining the locality of message-passing. However, the complexity issue has not been satisfactorily solved because they introduce memory/time-consuming context matrices [19], eigenvalue decomposition [34], and lifting transformation [37, 38] in pre-processing.

Relations to Our Work.

Some crucial differences between related works [5, 1, 21, 11, 19, 25, 34, 37] and ours can be summarized as follows: (i) we propose to design powerful permutation-sensitive GNNs while approximating the property of permutation-invariance, balancing the expressivity and computational efficiency; (ii) our approach realizes the linear complexity of permutation sampling and reaches the theoretical lower bound; (iii) our approach can directly learn substructures from data instead of pre-computing or strategies based on handcrafted structural features. We also provide detailed discussions in Appendix H.3 for [5], K.2 for [18, 1], and L.1 for [37, 38].

3 Designing Powerful Yet Efficient GNNs via Permutation Groups

In this section, we begin with the analysis of theoretically most powerful but intractable GNNs. Then, we propose a tractable strategy to achieve linear permutation sampling and significantly reduce the complexity. Based on this strategy, we design our permutation-sensitive aggregation mechanism via permutation groups. Furthermore, we mathematically analyze the expressivity of permutation-sensitive GNNs and prove that our proposed model is more powerful than the 2-WL test and not less powerful than the 3-WL test via incidence substructure counting.

3.1 Preliminaries

Let $G = (\mathcal{V}, \mathcal{E})$ be a graph with vertex set $\mathcal{V} = \{v_1, v_2, \ldots, v_N\}$ and edge set $\mathcal{E}$, directed or undirected. Let $\mathbf{A} \in \mathbb{R}^{N \times N}$ be the adjacency matrix of $G$. For a node $v \in \mathcal{V}$, $d_v$ denotes its degree, i.e., the number of 1-hop neighbors of node $v$, which is equivalent to $n$ in this section for simplicity. Suppose these $n$ neighboring nodes of the central node $v$ are randomly numbered as $u_1, \ldots, u_n$ (also abbreviated as $1, \ldots, n$ in the following); the set of neighboring nodes is then represented as $\mathcal{N}(v)$ (or $S = \{1, \ldots, n\}$). Given a set of graphs $\{G_1, G_2, \ldots\}$, each graph $G$ has a label $y_G$. Our goal is to learn a representation vector $\mathbf{h}_G$ of the entire graph $G$ and classify it into the correct category from $C$ classes. In this paper, we use the normal $G$ to denote a graph and the Gothic $\mathfrak{G}$ to denote a group. The necessary backgrounds of graph theory and group theory are attached in Appendixes A and B. The rigorous definition of the $k$-WL test is provided in Appendix C.

3.2 Theoretically Most Powerful GNNs

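Relational Pooling (RP) [1] proposes the theoretically most powerful permutation-invariant model by averaging over all permutations of the nodes, which can be formulated as follows:

$$\mathbf{h}_G = \frac{1}{|S_{\mathcal{V}}|} \sum_{\pi \in S_{\mathcal{V}}} f\left(\mathbf{h}_{\pi v_1}, \mathbf{h}_{\pi v_2}, \ldots, \mathbf{h}_{\pi v_N}\right) \tag{1}$$

where $\pi v_i$ denotes the result of acting $\pi \in S_{\mathcal{V}}$ on $v_i \in \mathcal{V}$, $S_{\mathcal{V}}$ is the symmetric group on the set $\{1, \ldots, N\}$ (or $\mathcal{V}$), $f$ is a sufficiently expressive (possibly permutation-sensitive) function, and $\mathbf{h}_{v_i}$ is the feature vector of node $v_i$.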

The permutation-sensitive functions, especially sequential models, are capable of modeling the $n$-ary dependency [18, 1] among $n$ input nodes. Meanwhile, the different input node orderings lead to a total of $n!$ different $n$-ary dependencies. These $n$-ary dependencies indicate the relations and help capture the topological connections among the corresponding nodes, thereby exploiting the substructures within these nodes to improve the expressive power of GNN models. For instance, the expressivity of Eq. (1) is mainly attributed to the modeling of all possible $N$-ary dependencies (full-dependencies) among all $N$ nodes, which can capture all graphs isomorphic to $G$. However, it is intractable and practically prohibitive to model all permutations ($N!$ $N$-ary dependencies) due to the extremely high computational cost. Thus, it is necessary to design a tractable strategy to reduce the computational cost while maximally preserving the expressive power.

3.3 Permutation Sampling Strategy

Intuitively, the simplest way is to replace $n$-ary dependencies with 2-ary dependencies, i.e., the pairwise correlations in Section 1. Moreover, since the inductive bias of locality results in lower complexity on sparse graphs [39, 34], we restrict the permutation-sensitive functions to aggregate information and model the 2-ary dependencies in the 1-hop neighborhoods. Thus, we will further discuss how to model all 2-ary dependencies between $n$ neighboring nodes with the lowest sampling complexity $\mathcal{O}(n)$.

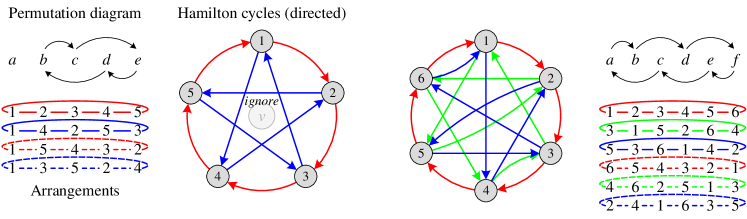

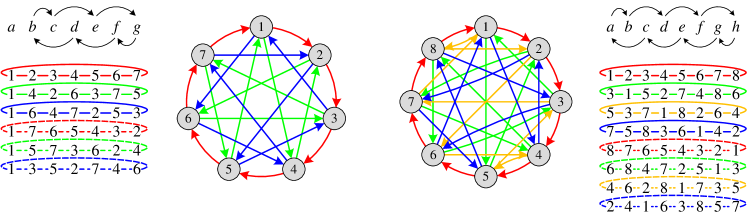

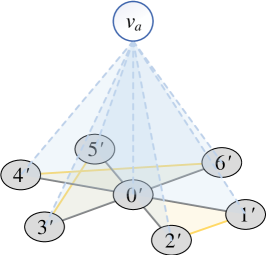

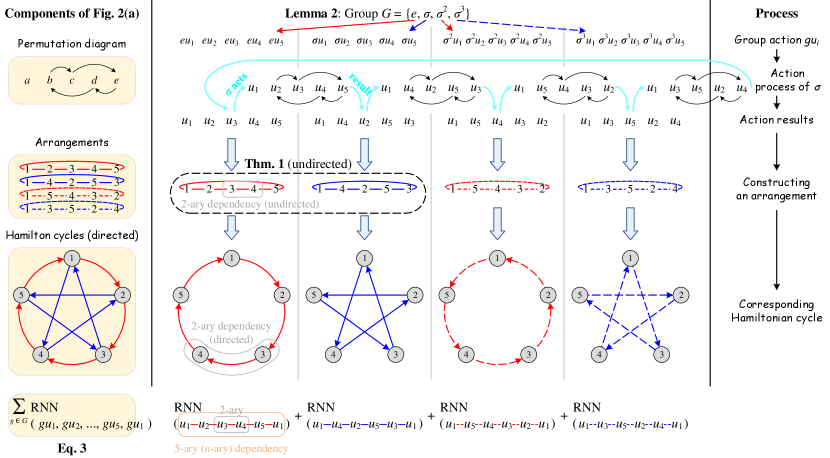

Suppose $n$ neighboring nodes are arranged as a ring; we define this ring as an arrangement. An initial arrangement can be simply defined as $1 - 2 - \cdots - n - 1$, including an $n$-ary dependency $\{1 - 2 - \cdots - n - 1\}$ and $n$ 2-ary dependencies $\{1 - 2,\ 2 - 3,\ \ldots,\ n - 1\}$ (the last one links node $n$ back to node $1$). Since a permutation adjusts the node ordering in the arrangement, we can use a permutation to generate a new arrangement, which corresponds to a new $n$-ary dependency covering $n$ 2-ary dependencies. The following theorem provides a lower bound of the number of arrangements to cover all 2-ary dependencies.

Theorem 1.

Let $n$ ($n \geq 4$) denote the number of 1-hop neighboring nodes around the central node $v$. There are $\lfloor (n-1)/2 \rfloor$ kinds of arrangements in total, satisfying that their corresponding 2-ary dependencies are disjoint. Meanwhile, after at least $\lfloor n/2 \rfloor$ arrangements (including the initial one), all 2-ary dependencies have been covered at least once.

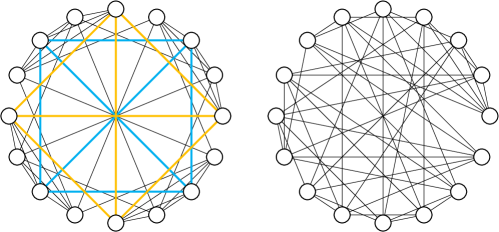

We first give a sketch of the proof. Construct a simple undirected graph $G' = (\mathcal{V}', \mathcal{E}')$, where $\mathcal{V}'$ denotes the $n$ neighboring nodes (abbreviated as nodes in the following), and $\mathcal{E}'$ represents an edge set in which each edge indicates the corresponding 2-ary dependency has been covered in some arrangements. Each arrangement corresponds to a Hamiltonian cycle in graph $G'$. In addition, we define the following permutation $\sigma$ to generate new arrangements:

| (2) |

After performing the permutation $\sigma$ once, a new arrangement is generated and a Hamiltonian cycle is constructed. Since every pair of nodes can form a 2-ary dependency, covering all 2-ary dependencies is equivalent to constructing a complete graph $K_n$. Besides, as a $K_n$ has $\frac{n(n-1)}{2}$ edges and each Hamiltonian cycle has $n$ edges, a $K_n$ can only be constructed with at least $\lceil (n-1)/2 \rceil = \lfloor n/2 \rfloor$ Hamiltonian cycles. It can be proved that after performing the permutation $\sigma$ for $\lfloor n/2 \rfloor - 1$ times in succession (excluding the initial one), all 2-ary dependencies are covered at least once. Detailed proof of Theorem 1 is provided in Appendix E.
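The counting argument above can be checked numerically. The following is a minimal sketch that builds $\lfloor n/2 \rfloor$ ring arrangements with the classical zigzag (Walecki-style) rotation and verifies that they cover every pair of nodes. The rotation used here is an assumption made for illustration and is not necessarily the permutation of Eq. (2); the helper names `zigzag_ring`, `covering_arrangements`, and `covered_pairs` are hypothetical, and nodes are 0-indexed.

```python
from itertools import combinations


def zigzag_ring(r):
    """Zigzag order 0, 1, r-1, 2, r-2, ..., r//2 on a ring with an even number r of slots."""
    seq = [0]
    for k in range(1, r // 2 + 1):
        seq.append(k)
        if k < r // 2:
            seq.append(r - k)
    return seq


def covering_arrangements(n):
    """Return floor(n/2) ring arrangements of nodes 0..n-1 (illustrative construction)."""
    r = n if n % 2 == 0 else n - 1      # number of nodes that take part in the rotation
    base = zigzag_ring(r)
    arrangements = []
    for shift in range(r // 2):
        ring = [(x + shift) % r for x in base]
        if n % 2 == 1:
            ring.append(n - 1)          # for odd n, the last node keeps a fixed slot
        arrangements.append(ring)
    return arrangements


def covered_pairs(ring):
    """Unordered pairs (2-ary dependencies) covered by one ring arrangement."""
    n = len(ring)
    return {frozenset((ring[i], ring[(i + 1) % n])) for i in range(n)}


if __name__ == "__main__":
    for n in range(4, 10):
        rings = covering_arrangements(n)
        covered = set().union(*(covered_pairs(r) for r in rings))
        all_pairs = {frozenset(p) for p in combinations(range(n), 2)}
        print(n, len(rings), covered == all_pairs)
```

For odd $n$ the arrangements produced this way are edge-disjoint, so the $n(n-1)/2$ pairs are each covered exactly once; for even $n$ a few pairs are necessarily covered twice because $\lfloor n/2 \rfloor \cdot n > n(n-1)/2$.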

Note that Theorem 1 has the constraint because all 2-ary dependencies have already been covered in the initial arrangement when , and there is only a single node when . If , , respectively (the case of is trivial). Thus the permutation defined in Theorem 1 is available for an arbitrary , while Eq. (2) shows the general case with a large .

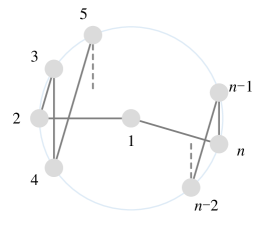

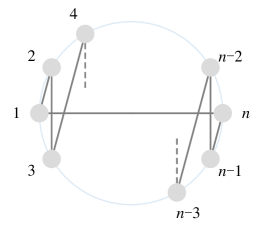

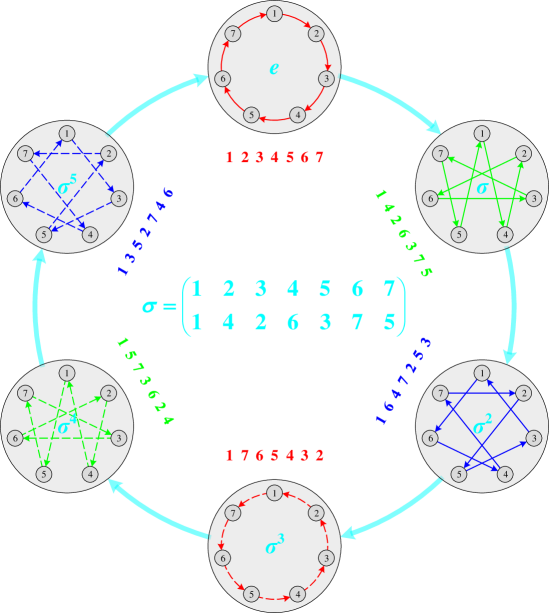

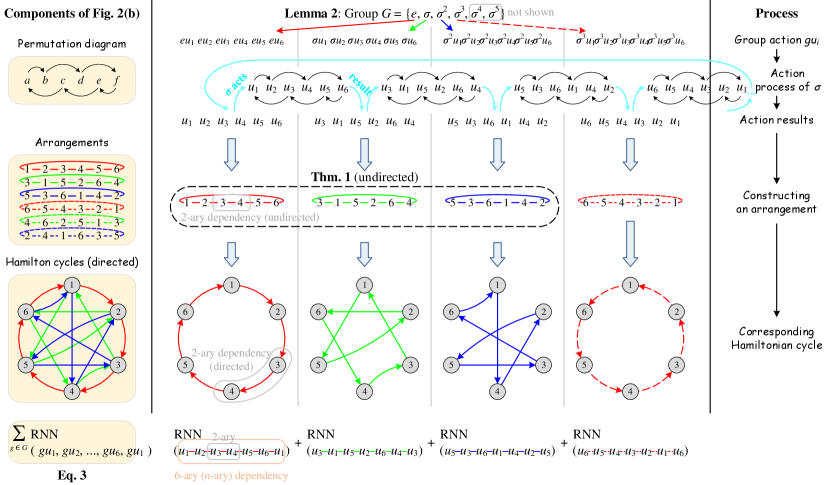

According to the ordering of $n$ neighboring nodes in the arrangement, we can apply a permutation-sensitive function to model an $n$-ary dependency among these $n$ nodes while covering $n$ 2-ary dependencies. Since the input orderings $u_i \to u_j$ and $u_j \to u_i$ lead to different results in the permutation-sensitive function, these dependencies and the corresponding Hamiltonian cycles (the solid arrows in Figure 2) are modeled in a directed manner. We continue performing the permutation $\sigma$ for $\lfloor n/2 \rfloor$ times successively to get additional $\lfloor n/2 \rfloor$ arrangements (the dashed lines in Figure 2) and reversely directed Hamiltonian cycles (not shown in Figure 2). After the bi-directional modeling, edges in Hamiltonian cycles are transformed into undirected edges. Figure 2 briefly illustrates the above process for a specific $n$. In conclusion, all 2-ary dependencies can be modeled in an undirected manner by the tailored permutations. The number of permutations is $n$ if $n$ is even and $n - 1$ if $n$ is odd, ensuring the linear sampling complexity $\mathcal{O}(n)$.
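The bi-directional modeling described above can be illustrated with the same zigzag rotation used as a stand-in for the tailored permutation (an assumption for illustration; the function names are hypothetical). In this sketch, applying the rotation for a further $\lfloor n/2 \rfloor$ steps yields exactly the reversely directed cycles, so the total number of orderings is $n$ for even $n$ and $n - 1$ for odd $n$.

```python
def zigzag_ring(r):
    """Zigzag order 0, 1, r-1, 2, r-2, ..., r//2 on a ring with an even number r of slots."""
    seq = [0]
    for k in range(1, r // 2 + 1):
        seq.append(k)
        if k < r // 2:
            seq.append(r - k)
    return seq


def directed_arrangements(n):
    """All orderings obtained by rotating the zigzag ring r times (r = n or n - 1)."""
    r = n if n % 2 == 0 else n - 1
    base = zigzag_ring(r)
    rings = []
    for shift in range(r):
        ring = [(x + shift) % r for x in base]
        if n % 2 == 1:
            ring.append(n - 1)          # fixed node for odd n
        rings.append(ring)
    return rings


def directed_edges(ring):
    """Directed consecutive pairs of a ring, including the closing edge."""
    n = len(ring)
    return {(ring[i], ring[(i + 1) % n]) for i in range(n)}


if __name__ == "__main__":
    for n in (4, 5, 6, 7):
        rings = directed_arrangements(n)
        half = n // 2
        reversed_ok = all(
            directed_edges(rings[i + half]) == {(b, a) for a, b in directed_edges(rings[i])}
            for i in range(half)
        )
        print(n, len(rings), reversed_ok)
```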

In fact, all permutations above form a permutation group. In order to incorporate the strategy proposed by Theorem 1 into the aggregation process of GNN, we propose to use the permutation group and group action, defined as follows.

Lemma 2.

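For the permutation $\sigma$ of $n$ indices, $\mathfrak{G} = \{e, \sigma, \sigma^2, \ldots, \sigma^{n-2}\}$ is a permutation group isomorphic to the cyclic group $\mathbb{Z}_{n-1}$ if $n$ is odd. And $\mathfrak{G} = \{e, \sigma, \sigma^2, \ldots, \sigma^{n-1}\}$ is a permutation group isomorphic to the cyclic group $\mathbb{Z}_n$ if $n$ is even.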

Corollary 3.

The map denoted by is a group action of on .

3.4 Network Architecture

Without loss of generality, we apply the widely-used Recurrent Neural Networks (RNNs) as the permutation-sensitive function to model the dependencies among neighboring nodes. Letting the group elements (i.e., permutations) in $\mathfrak{G}$ act on $S$, our proposed strategy in Section 3.3 is formulated as:

| (3) |

where $g u_i$ denotes the result of acting $g \in \mathfrak{G}$ on $u_i \in S$, and $\mathbf{h}_v^{(k)}$ is the feature vector of central node $v$ at the $k$-th layer. We provide more discussion on the groups and model variants in Appendixes J.2 and J.3. Eq. (3) takes advantage of the locality and permutation group $\mathfrak{G}$ to simplify the group actions in Eq. (1), which acts the symmetric group $S_{\mathcal{V}}$ on vertex set $\mathcal{V}$, thereby avoiding the complete modeling of $N!$ permutations. Meanwhile, Eq. (3) models all 2-ary dependencies and achieves the invariance to 2-ary dependencies. Thus, we can conclude that Eq. (3) realizes the efficient approximation of permutation-invariance with low complexity. In practice, we merge the central node $v$ into RNN for simplicity:

| (4) |

Then, we apply a READOUT function (e.g., SUM) to obtain the graph representation $\mathbf{h}_G^{(k)}$ at the $k$-th layer and combine representations learned by different layers to get the score $\mathbf{s}$ for classification:

| (5) |

Here, $\mathbf{W}^{(k)}$ represents a learnable scoring matrix for the $k$-th layer. Finally, we input the score $\mathbf{s}$ to the softmax function and obtain the predicted class of graph $G$.
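As a toy illustration of the aggregation idea behind Eq. (3), the sketch below sums an order-sensitive function over a small set of group-generated orderings. Everything here is an assumption made for illustration: the RNN is replaced by a simple function that only looks at consecutive pairs on the ring, the orderings come from the classical zigzag rotation rather than the permutation of Eq. (2), features are scalars, and all names are hypothetical. For odd $n$, every ordered pair of neighbors is visited exactly once, so the aggregated value does not depend on how the neighbors were initially numbered.

```python
from itertools import permutations


def zigzag_ring(r):
    """Zigzag order 0, 1, r-1, 2, r-2, ..., r//2 on a ring with an even number r of slots."""
    seq = [0]
    for k in range(1, r // 2 + 1):
        seq.append(k)
        if k < r // 2:
            seq.append(r - k)
    return seq


def directed_arrangements(n):
    """Orderings generated by rotating the zigzag ring (n of them for even n, n - 1 for odd n)."""
    r = n if n % 2 == 0 else n - 1
    base = zigzag_ring(r)
    rings = []
    for shift in range(r):
        ring = [(x + shift) % r for x in base]
        if n % 2 == 1:
            ring.append(n - 1)
        rings.append(ring)
    return rings


def pair_sensitive(seq):
    """Toy order-sensitive function: sum of phi(a, b) = a * a * b over consecutive ring pairs."""
    n = len(seq)
    return sum(seq[i] * seq[i] * seq[(i + 1) % n] for i in range(n))


def aggregate(features):
    """Sum the toy function over all generated orderings of the neighbor features."""
    rings = directed_arrangements(len(features))
    return sum(pair_sensitive([features[j] for j in ring]) for ring in rings)


if __name__ == "__main__":
    feats = [1, 2, 3, 4, 5]
    # The toy function itself depends on the input order ...
    print(pair_sensitive([1, 2, 3]), pair_sensitive([3, 2, 1]))
    # ... but the aggregate over the generated orderings is the same for every numbering.
    print({aggregate(list(p)) for p in permutations(feats)})
```

For even $n$ some pairs are visited twice by this construction, so the toy sum is then no longer exactly symmetric; a learned RNN additionally captures higher-order dependencies that this pairwise stand-in ignores.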

Complexity.

We briefly analyze the computational complexity of Eq. (3). Suppose the input and output dimensions are both for each layer, let denote the maximum degree of graph . In the worst-case scenario, Eq. (3) requires summing over terms processed in a serial manner. Since there is no interdependence between these terms, they can also be computed in a parallel manner with the time complexity of (caused by RNN computation), while sacrificing the memory to save time. Let denote the number of edges. Table 2 compares our approach with other powerful GNNs on the per-layer space and time complexity. The results of baselines are taken from Vignac et al. [19]. Since the complexity analysis of GraphSAGE [5], MPSN [37], and CWN [38] involves many other notations, we analyze GraphSAGE in Appendix H.3, and MPSN and CWN in Appendix L.1. In a nutshell, our approach theoretically outperforms other powerful GNNs in terms of time and space complexity, even being on par with MPNN.

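Table 2: Testing MAE (lower is better) for incidence triangle counting, reported as mean ± standard deviation over 5 runs.

| Model | Erdős-Rényi random graph | Random regular graph |
| --- | --- | --- |
| GCN [3] | 0.599 ± 0.006 | 0.500 ± 0.012 |
| SAGE [5] | 0.118 ± 0.005 | 0.127 ± 0.011 |
| GIN [7] | 0.219 ± 0.016 | 0.342 ± 0.005 |
| rGIN [25] | 0.194 ± 0.009 | 0.325 ± 0.006 |
| RP [1] | 0.058 ± 0.006 | 0.161 ± 0.003 |
| LRP [11] | 0.023 ± 0.011 | 0.037 ± 0.019 |
| PG-GNN | 0.019 ± 0.002 | 0.027 ± 0.001 |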

3.5 Expressivity Analysis

In this subsection, we theoretically analyze the expressive power of a typical category of permutation-sensitive GNNs, i.e., GNNs with RNN aggregators (Theorem 4), and that of our proposed PG-GNN (Proposition 5). We begin with GIN [7], which possesses the equivalent expressive power as the 2-WL test [7, 30]. In fact, the variants of GIN can be recovered by GNNs with RNN aggregators (see Appendix I for details), which implies that this category of permutation-sensitive GNNs can be at least as powerful as the 2-WL test. Next, we explicate why they go beyond the 2-WL test from the perspective of substructure counting.

Triangular substructures are rich in various networks, and counting triangles is an important task in network analysis [40]. For example, in social networks, the formation of a triangle indicates that two people with a common friend will also become friends [41]. A triangle $\Delta_{ijk}$ is incident to the node $i$ if $j$ and $k$ are adjacent and node $i$ is their common neighbor. We define the triangle $\Delta_{ijk}$ as an incidence triangle over node $i$ (also $j$ and $k$), and denote the number of incidence triangles over node $i$ as $\tau_i$. Formally, the number of incidence triangles over each node in an undirected graph can be calculated as follows (proof and discussion for the directed graph are provided in Appendix H.1):

$$\boldsymbol{\tau} = \frac{1}{2}\left(\mathbf{A}^2 \odot \mathbf{A}\right)\mathbf{1}_N \tag{6}$$

where $\boldsymbol{\tau} \in \mathbb{R}^N$ and its $i$-th element $\tau_i$ represents the number of incidence triangles over node $i$, $\odot$ denotes element-wise product (i.e., Hadamard product), and $\mathbf{1}_N = (1, 1, \ldots, 1)^\top \in \mathbb{R}^N$ is a sum vector.
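As a small worked check of the incidence triangle count, the sketch below evaluates the matrix form $\frac{1}{2}(\mathbf{A}^2 \odot \mathbf{A})\mathbf{1}$ on a toy undirected graph and compares it with a direct count of adjacent neighbor pairs. The function names and the example graph are hypothetical and chosen only for illustration; the adjacency matrix is assumed to be symmetric with a zero diagonal.

```python
from itertools import combinations


def incidence_triangles(adj):
    """Per-node triangle counts via 0.5 * (A^2 elementwise-times A) * 1."""
    n = len(adj)
    a2 = [[sum(adj[i][k] * adj[k][j] for k in range(n)) for j in range(n)]
          for i in range(n)]
    return [sum(a2[i][j] * adj[i][j] for j in range(n)) // 2 for i in range(n)]


def incidence_triangles_bruteforce(adj):
    """Per-node count of adjacent pairs among the node's 1-hop neighbors."""
    n = len(adj)
    counts = []
    for v in range(n):
        nbrs = [u for u in range(n) if adj[v][u]]
        counts.append(sum(1 for a, b in combinations(nbrs, 2) if adj[a][b]))
    return counts


def trace_of_cube(adj):
    """tr(A^3), i.e., the number of closed walks of length 3."""
    n = len(adj)
    return sum(adj[i][j] * adj[j][k] * adj[k][i]
               for i in range(n) for j in range(n) for k in range(n))


if __name__ == "__main__":
    # Triangle 0-1-2 with a pendant node 3 attached to node 2.
    adj = [
        [0, 1, 1, 0],
        [1, 0, 1, 0],
        [1, 1, 0, 1],
        [0, 0, 1, 0],
    ]
    print(incidence_triangles(adj))             # per-node counts from the matrix form
    print(incidence_triangles_bruteforce(adj))  # the same counts from neighbor pairs
    print(sum(incidence_triangles(adj)), trace_of_cube(adj) // 2)
```

The last line illustrates that summing the per-node counts agrees with $\frac{1}{2}\mathrm{tr}(\mathbf{A}^3)$ on this example, since each triangle is incident to three nodes and contributes six closed walks of length 3.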

Besides the WL-test, the capability of counting graph substructures also characterizes the expressive power of GNNs [11]. Thus, we verify the expressivity of permutation-sensitive GNNs by evaluating their abilities to count triangles.

Theorem 4.

Let denote the feature inputs on graph , and be a general GNN model with RNN aggregators. Suppose that is initialized as the degree of node , and each node is distinguishable. For any and , there exists a parameter setting for so that after samples,

where is the final output value generated by and is the number of incidence triangles.

Detailed proof can be found in Appendix H.2. Theorem 4 concludes that, if the input node features are node degrees and nodes are distinguishable, there exists a parameter setting for a general GNN with RNN aggregators such that it can approximate the number of incidence triangles to arbitrary precision for every node. Since 2-WL and MPNNs cannot count triangles [11], we conclude that this category of permutation-sensitive GNNs is more powerful. However, the required samples are related to and proportional to the mixing time (see Appendix H.2), leading to a practically prohibitive aggregation complexity. Many existing permutation-sensitive GNNs like GraphSAGE with LSTM and RP with -SGD suffer from this issue (see Appendixes H.3 and K.2 for more discussion).

On the contrary, our approach can estimate the number of incidence triangles in linear sampling complexity $\mathcal{O}(n)$. According to the definition of incidence triangles and the fact that they always appear within $v$’s 1-hop neighborhood, we know that the number of connections between the central node $v$’s neighboring nodes is equivalent to the number of incidence triangles over $v$. Meanwhile, Theorem 1 and Eq. (3) ensure that all 2-ary dependencies between $n$ neighboring nodes are modeled with $\mathcal{O}(n)$ sampling complexity. These dependencies capture the information of whether two neighboring nodes are connected, thereby estimating the number of connections and counting incidence triangles in linear sampling complexity.

Recently, Balcilar et al. [34] claimed that the trace (tr) and Hadamard product ($\odot$) operations are crucial requirements to go further than 2-WL to reach 3-WL from the perspective of Matrix Language [42, 43]. In fact, for any two neighbors $u_i$ and $u_j$ of the central node $v$, the locality and 2-ary dependency of Eq. (3) introduce the information of $\mathbf{A}^2$ (i.e., the path $u_i - v - u_j$) and $\mathbf{A}$ (i.e., the edge $u_i - u_j$), respectively. Thus Eq. (3) can mimic Eq. (6) to count incidence triangles. Moreover, we also prove that $\sum_{i=1}^{N} \tau_i = \frac{1}{2}\mathrm{tr}(\mathbf{A}^3)$ (see Appendix H.1 for details), which indicates that PG-GNN can realize the trace (tr) operation when we use SUM or MEAN (i.e., the sum over nodes, optionally scaled by $\frac{1}{N}$) as the graph-level READOUT function. Note that even though MPNNs and the 2-WL test are equipped with distinguishable attributes, they still have difficulty performing triangle counting since they cannot implement the trace or Hadamard product operations [34].

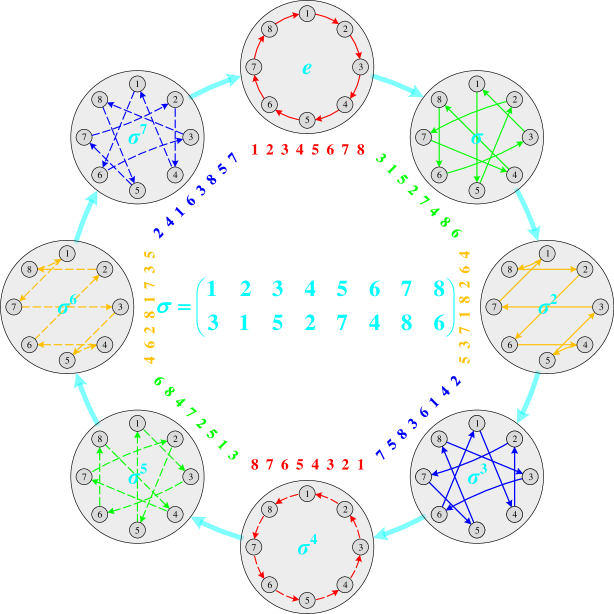

Beyond the incidence triangle, we can also leverage 2-ary dependencies of $u_i - u_j$, $u_i - u_k$, and $u_j - u_k$ to discover the incidence 4-clique formed by $v$, $u_i$, $u_j$, and $u_k$, which is completely composed of triangles and only appears within $v$’s 1-hop neighborhood. In this way, the expressive power of PG-GNN can be further improved by its capability of counting incidence 4-cliques. As illustrated in Figure 7, these incidence 4-cliques help distinguish some pairs of non-isomorphic strongly regular graphs while the 3-WL test fails. Consequently, the expressivity of our model is guaranteed to be not less powerful than 3-WL. Here, “A is no/not less powerful than B” means that there exists a pair of non-isomorphic graphs such that A can distinguish them but B cannot; this terminology follows the standard definition in the literature [11, 37, 38, 13].
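To make the notion of an incidence 4-clique concrete, the following sketch counts, for each node, the triples of its 1-hop neighbors that are mutually adjacent. This is a brute-force reference count for illustration only, not the learned estimator of PG-GNN; the function name and example graphs are hypothetical.

```python
from itertools import combinations


def incidence_4_cliques(adj):
    """For each node v, count neighbor triples (i, j, k) that are pairwise adjacent."""
    n = len(adj)
    counts = []
    for v in range(n):
        nbrs = [u for u in range(n) if adj[v][u]]
        counts.append(sum(
            1 for i, j, k in combinations(nbrs, 3)
            if adj[i][j] and adj[i][k] and adj[j][k]
        ))
    return counts


if __name__ == "__main__":
    # Complete graph on 5 nodes: every node lies in C(4, 3) = 4 four-cliques.
    k5 = [[0 if i == j else 1 for j in range(5)] for i in range(5)]
    print(incidence_4_cliques(k5))

    # Complete graph on 4 nodes plus a pendant node 4 attached to node 0.
    g = [[0 if i == j else 1 for j in range(4)] + [0] for i in range(4)]
    g.append([0, 0, 0, 0, 0])
    g[0][4] = g[4][0] = 1
    print(incidence_4_cliques(g))
```

Only the three pairwise checks among neighbors are needed, because adjacency to the central node is already implied by membership in its 1-hop neighborhood.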

From the analysis above, we confirm the expressivity of PG-GNN as follows. The strict proof and more detailed discussion on PG-GNN and 3-WL are provided in Appendix I.

Proposition 5.

PG-GNN is strictly more powerful than the 2-WL test and not less powerful than the 3-WL test.

4 Experiments

In this section, we evaluate PG-GNN on multiple synthetic and real-world datasets from a wide range of domains. Dataset statistics and details are presented in Appendix M.1. The hyper-parameter search space and final hyper-parameter configurations are provided in Appendix M.2. Computing infrastructures can be found in Appendix M.3. The code is publicly available at https://github.com/zhongyu1998/PG-GNN.

4.1 Counting Substructures in Random Graphs

We conduct synthetic experiments of counting incidence substructures (triangles and 4-cliques) on two types of random graphs: Erdős-Rényi (ER) random graphs and random regular graphs [11]. The incidence substructure counting task is designed on the node level, which is more rigorous than traditional graph-level counting tasks. Table 2 summarizes the results measured by Mean Absolute Error (MAE, lower is better) for incidence triangle counting. We report the average and standard deviation of testing MAEs over 5 runs with 5 different seeds. In addition, the testing MAEs of PG-GNN on ER and random regular graphs are 0.029 ± 0.002 and 0.023 ± 0.001 for incidence 4-clique counting, respectively. Overall, the negligible MAEs of our model support our claim that PG-GNN is powerful enough for counting incidence triangles and 4-cliques.

Another phenomenon is that permutation-sensitive GNNs consistently outperform permutation-invariant GNNs on substructure counting tasks. This indicates that permutation-sensitive GNNs are capable of learning these substructures directly from data, without explicitly assigning them as node features, but the permutation-invariant counterparts like GCN and GIN fail. Therefore, permutation-sensitive GNNs can implicitly leverage the information of characteristic substructures in representation learning and thus benefit real-world tasks in practical scenarios.

Table 3: Classification accuracy (%) on TUDataset benchmarks, reported as mean ± standard deviation over 10-fold cross-validation.

| Model | PROTEINS | NCI1 | IMDB-B | IMDB-M | COLLAB |
| --- | --- | --- | --- | --- | --- |
| WL [44] | 75.0 ± 3.1 | 86.0 ± 1.8 | 73.8 ± 3.9 | 50.9 ± 3.8 | 78.9 ± 1.9 |
| DGCNN [45] | 75.5 ± 0.9 | 74.4 ± 0.5 | 70.0 ± 0.9 | 47.8 ± 0.9 | 73.8 ± 0.5 |
| IGN [8] | 76.6 ± 5.5 | 74.3 ± 2.7 | 72.0 ± 5.5 | 48.7 ± 3.4 | 78.4 ± 2.5 |
| GIN [7] | 76.2 ± 2.8 | 82.7 ± 1.7 | 75.1 ± 5.1 | 52.3 ± 2.8 | 80.2 ± 1.9 |
| PPGN [28] | 77.2 ± 4.7 | 83.2 ± 1.1 | 73.0 ± 5.8 | 50.5 ± 3.6 | 80.7 ± 1.7 |
| CLIP [21] | 77.1 ± 4.4 | N/A | 76.0 ± 2.7 | 52.5 ± 3.0 | N/A |
| NGN [10] | 71.7 ± 1.0 | 82.7 ± 1.4 | 74.8 ± 2.0 | 51.3 ± 1.5 | N/A |
| WEGL [46] | 76.5 ± 4.2 | N/A | 75.4 ± 5.0 | 52.3 ± 2.9 | 80.6 ± 2.0 |
| SIN [37] | 76.5 ± 3.4 | 82.8 ± 2.2 | 75.6 ± 3.2 | 52.5 ± 3.0 | N/A |
| CIN [38] | 77.0 ± 4.3 | 83.6 ± 1.4 | 75.6 ± 3.7 | 52.7 ± 3.1 | N/A |
| PG-GNN (Ours) | 76.8 ± 3.8 | 82.8 ± 1.3 | 76.8 ± 2.6 | 53.2 ± 3.6 | 80.9 ± 0.8 |

4.2 Real-World Benchmarks

Datasets.

We evaluate our model on 7 real-world datasets from various domains. PROTEINS and NCI1 are bioinformatics datasets; IMDB-BINARY, IMDB-MULTI, and COLLAB are social network datasets. They are all popular graph classification tasks from the classical TUDataset [47]. We follow Xu et al. [7] to create the input features for each node. More specifically, the input node features of bioinformatics graphs are categorical node labels, and the input node features of social networks are node degrees. All the input features are encoded in a one-hot manner. In addition, MNIST is a computer vision dataset for the graph classification task, and ZINC is a chemistry dataset for the graph regression task. They are both modern benchmark datasets, and we obtain the features from the original paper [48], but do not take edge features into account. We summarize the statistics of all 7 real-world datasets in Table 7, and more details about these datasets can be found in Appendix M.1.

Evaluations.

For TUDataset, we follow the same data split and evaluation protocol as Xu et al. [7]. We perform 10-fold cross-validation with random splitting and report our results (the average and standard deviation of testing accuracies) at the epoch with the best average accuracy across the 10 folds. For MNIST and ZINC, we follow the same data splits and evaluation metrics as Dwivedi et al. [48]; please refer to Appendix M.1 for more details. The experiments are performed over 4 runs with 4 different seeds, and we report the average and standard deviation of testing results.
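The TUDataset reporting rule described above (select the single epoch with the best accuracy averaged over folds, then report the mean and standard deviation at that epoch) can be sketched as follows. The function name, the input layout `acc[fold][epoch]`, and the use of the population standard deviation are assumptions made for illustration; the original protocol may compute the deviation differently.

```python
from statistics import mean, pstdev


def best_epoch_report(acc):
    """acc[fold][epoch] holds test accuracies; returns (best_epoch, mean, std) at that epoch."""
    n_epochs = len(acc[0])
    # Average accuracy across folds for every epoch.
    avg_per_epoch = [mean(fold[e] for fold in acc) for e in range(n_epochs)]
    # One shared epoch is selected for all folds.
    best = max(range(n_epochs), key=lambda e: avg_per_epoch[e])
    at_best = [fold[best] for fold in acc]
    return best, mean(at_best), pstdev(at_best)


if __name__ == "__main__":
    # Two folds, three epochs, accuracies in percent (toy numbers).
    acc = [
        [50, 70, 60],
        [60, 90, 80],
    ]
    epoch, avg, std = best_epoch_report(acc)
    print(epoch, avg, std)
```

Selecting one shared epoch, rather than the best epoch per fold, keeps the reported number tied to a single training budget across all folds.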

Baselines.

We compare our PG-GNN with multiple state-of-the-art baselines: Weisfeiler-Lehman Graph Kernels (WL) [44], Graph SAmple and aggreGatE (GraphSAGE) [5], Gated Graph ConvNet (GatedGCN) [49], Deep Graph Convolutional Neural Network (DGCNN) [45], 3-WL-GNN [22], Invariant Graph Network (IGN) [8], Graph Isomorphism Network (GIN) [7], Provably Powerful Graph Network (PPGN) [28], Ring-GNN [50], Colored Local Iterative Procedure (CLIP) [21], Natural Graph Network (NGN) [10], (Deep-)Local Relation Pooling (LRP) [11], Principal Neighbourhood Aggregation (PNA) [51], Wasserstein Embedding for Graph Learning (WEGL) [46], Simplicial Isomorphism Network (SIN) [37], and Cell Isomorphism Network (CIN) [38].

Results and Analysis.

Tables 3 and 4 present a summary of the results. The results of baselines in Table 3 are taken from their original papers, except WL, which is taken from Xu et al. [7], and IGN, which is taken from Maron et al. [28], to preserve the same evaluation protocol. The results of baselines in Table 4 are taken from Dwivedi et al. [48], except PPGN and Deep-LRP, which are taken from Chen et al. [11], and PNA, which is taken from Corso et al. [51]. Our model achieves the best results on IMDB-B, IMDB-M, COLLAB, and MNIST, and remains competitive on the remaining datasets.

From Tables 3 and 4, we notice that our model outperforms other approaches on all social network datasets, but slightly underperforms the main baselines on molecular datasets such as NCI1 and ZINC. Recall that in Section 3.5, we demonstrate that our model is capable of estimating the number of incidence triangles. The capability of counting incidence triangles benefits our model on graphs with many triangular substructures, e.g., social networks. However, triangles rarely exist in chemical compounds (verified in Table 7) due to their instability in the molecular structures. Thus our model achieves sub-optimal performance on molecular datasets. If we extend the 1-hop neighborhoods to 2-hop (or even $k$-hop) in Eq. (3), our model will exploit more sophisticated substructures such as pentagon (cyclopentadienyl) and hexagon (benzene ring), which will benefit tasks on molecular graphs but increase the complexity. Thus, we leave it to future work.

Table 4: Results and average running time per epoch on MNIST (accuracy, %) and ZINC (MAE), reported as mean ± standard deviation.

| Model | MNIST Accuracy | MNIST Time / Epoch | ZINC MAE | ZINC Time / Epoch |
| --- | --- | --- | --- | --- |
| GraphSAGE [5] | 97.31 ± 0.10 | 113.12s | 0.468 ± 0.003 | 3.74s |
| GatedGCN [49] | 97.34 ± 0.14 | 128.79s | 0.435 ± 0.011 | 5.76s |
| GIN [7] | 96.49 ± 0.25 | 39.22s | 0.387 ± 0.015 | 2.29s |
| 3-WL-GNN [22] | 95.08 ± 0.96 | 1523.20s | 0.407 ± 0.028 | 286.23s |
| Ring-GNN [50] | 91.86 ± 0.45 | 2575.99s | 0.512 ± 0.023 | 327.65s |
| PPGN [28] | N/A | N/A | 0.256 ± 0.054 | 334.69s |
| Deep-LRP [11] | N/A | N/A | 0.223 ± 0.008 | 72s |
| PNA [51] | 97.41 ± 0.16 | N/A | 0.320 ± 0.032 | N/A |
| PG-GNN (Ours) | 97.51 ± 0.07 | 82.60s | 0.282 ± 0.011 | 6.92s |

4.3 Running Time Analysis

As discussed above, one of the most important advantages of PG-GNN over other powerful GNNs is efficiency. To evaluate this, we compare the average running times of PG-GNN and the baselines on two large-scale benchmarks, MNIST and ZINC. Table 4 also presents the average running time per epoch for the various models. As shown in Table 4, PG-GNN is significantly faster than other powerful baselines and is even on par with several variants of MPNNs. Thus, we can conclude that our approach outperforms other powerful GNNs in terms of running time. We also provide a memory cost analysis in Tables 5 and 6; please refer to Appendix L.2 for more details.

5 Conclusion and Future Work

In this work, we devise an efficient permutation-sensitive aggregation mechanism via permutation groups, capturing pairwise correlations between neighboring nodes while ensuring linear sampling complexity. We shed light on the reasons why permutation-sensitive functions can improve GNNs’ expressivity. Moreover, we propose to approximate the property of permutation-invariance to significantly reduce the complexity with a minimal loss of generalization capability. In conclusion, we take an important step toward a better understanding of permutation-sensitive GNNs.

However, Eq. (3) only models a small portion of $n$-ary dependencies while covering all 2-ary dependencies. Although these 2-ary dependencies are invariant to an arbitrary permutation, the invariance to higher-order dependencies may not be guaranteed. It would be interesting to extend the 1-hop neighborhoods to 2-hop (or even $k$-hop) in Eq. (3), thereby completely modeling higher-order dependencies and exploiting more sophisticated substructures, which is left for future work.

Acknowledgements

We would like to express our sincere gratitude to the anonymous reviewers for their insightful comments and constructive feedback, which helped us polish this paper better. We thank Suixiang Gao, Xing Xie, Shiming Xiang, Zhiyong Liu, and Hao Zhang for their helpful discussions and valuable suggestions. This work was supported in part by the National Natural Science Foundation of China (61976209, 62020106015), CAS International Collaboration Key Project (173211KYSB20190024), and Strategic Priority Research Program of CAS (XDB32040000).

References

- Murphy et al. [2019a] Ryan Murphy, Balasubramaniam Srinivasan, Vinayak Rao, and Bruno Ribeiro. Relational pooling for graph representations. In Proceedings of the 36th International Conference on Machine Learning, pages 4663–4673, 2019a.

- Duvenaud et al. [2015] David Duvenaud, Dougal Maclaurin, Jorge Aguilera-Iparraguirre, Rafael Gómez-Bombarelli, Timothy Hirzel, Alán Aspuru-Guzik, and Ryan P Adams. Convolutional networks on graphs for learning molecular fingerprints. In Advances in Neural Information Processing Systems, pages 2224–2232, 2015.

- Kipf and Welling [2017] Thomas N Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. In Proceedings of the 5th International Conference on Learning Representations, 2017.

- Gilmer et al. [2017] Justin Gilmer, Samuel S Schoenholz, Patrick F Riley, Oriol Vinyals, and George E Dahl. Neural message passing for quantum chemistry. In Proceedings of the 34th International Conference on Machine Learning, pages 1263–1272, 2017.

- Hamilton et al. [2017] William L Hamilton, Rex Ying, and Jure Leskovec. Inductive representation learning on large graphs. In Advances in Neural Information Processing Systems, pages 1024–1034, 2017.

- Ying et al. [2018] Rex Ying, Jiaxuan You, Christopher Morris, Xiang Ren, William L Hamilton, and Jure Leskovec. Hierarchical graph representation learning with differentiable pooling. In Advances in Neural Information Processing Systems, pages 4800–4810, 2018.

- Xu et al. [2019] Keyulu Xu, Weihua Hu, Jure Leskovec, and Stefanie Jegelka. How powerful are graph neural networks? In Proceedings of the 7th International Conference on Learning Representations, 2019.

- Maron et al. [2019a] Haggai Maron, Heli Ben-Hamu, Nadav Shamir, and Yaron Lipman. Invariant and equivariant graph networks. In Proceedings of the 7th International Conference on Learning Representations, 2019a.

- Kondor et al. [2018] Risi Kondor, Hy Truong Son, Horace Pan, Brandon Anderson, and Shubhendu Trivedi. Covariant compositional networks for learning graphs. arXiv preprint arXiv:1801.02144, 2018.

- de Haan et al. [2020] Pim de Haan, Taco Cohen, and Max Welling. Natural graph networks. In Advances in Neural Information Processing Systems, pages 3636–3646, 2020.

- Chen et al. [2020] Zhengdao Chen, Lei Chen, Soledad Villar, and Joan Bruna. Can graph neural networks count substructures? In Advances in Neural Information Processing Systems, pages 10383–10395, 2020.

- Thiede et al. [2021] Erik Henning Thiede, Wenda Zhou, and Risi Kondor. Autobahn: Automorphism-based graph neural nets. In Advances in Neural Information Processing Systems, pages 29922–29934, 2021.

- Zhao et al. [2022] Lingxiao Zhao, Wei Jin, Leman Akoglu, and Neil Shah. From stars to subgraphs: Uplifting any GNN with local structure awareness. In Proceedings of the 10th International Conference on Learning Representations, 2022.

- Bevilacqua et al. [2022] Beatrice Bevilacqua, Fabrizio Frasca, Derek Lim, Balasubramaniam Srinivasan, Chen Cai, Gopinath Balamurugan, Michael M Bronstein, and Haggai Maron. Equivariant subgraph aggregation networks. In Proceedings of the 10th International Conference on Learning Representations, 2022.

- Elman [1990] Jeffrey L Elman. Finding structure in time. Cognitive Science, 14(2):179–211, 1990.

- Cho et al. [2014] Kyunghyun Cho, Bart van Merriënboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, pages 1724–1734, 2014.

- Hochreiter and Schmidhuber [1997] Sepp Hochreiter and Jürgen Schmidhuber. Long short-term memory. Neural computation, 9(8):1735–1780, 1997.

- Murphy et al. [2019b] Ryan L Murphy, Balasubramaniam Srinivasan, Vinayak Rao, and Bruno Ribeiro. Janossy pooling: Learning deep permutation-invariant functions for variable-size inputs. In Proceedings of the 7th International Conference on Learning Representations, 2019b.

- Vignac et al. [2020] Clément Vignac, Andreas Loukas, and Pascal Frossard. Building powerful and equivariant graph neural networks with structural message-passing. In Advances in Neural Information Processing Systems, pages 14143–14155, 2020.

- Loukas [2020] Andreas Loukas. What graph neural networks cannot learn: Depth vs width. In Proceedings of the 8th International Conference on Learning Representations, 2020.

- Dasoulas et al. [2020] George Dasoulas, Ludovic Dos Santos, Kevin Scaman, and Aladin Virmaux. Coloring graph neural networks for node disambiguation. In Proceedings of the 29th International Joint Conference on Artificial Intelligence, pages 2126–2132, 2020.

- Morris et al. [2019] Christopher Morris, Martin Ritzert, Matthias Fey, William L Hamilton, Jan Eric Lenssen, Gaurav Rattan, and Martin Grohe. Weisfeiler and Leman go neural: Higher-order graph neural networks. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence, pages 4602–4609, 2019.

- Weisfeiler and Leman [1968] Boris Weisfeiler and Andrei A Leman. A reduction of a graph to a canonical form and an algebra arising during this reduction. Nauchno-Technicheskaya Informatsiya, 2(9):12–16, 1968.

- Sato et al. [2019] Ryoma Sato, Makoto Yamada, and Hisashi Kashima. Approximation ratios of graph neural networks for combinatorial problems. In Advances in Neural Information Processing Systems, pages 4081–4090, 2019.

- Sato et al. [2021] Ryoma Sato, Makoto Yamada, and Hisashi Kashima. Random features strengthen graph neural networks. In Proceedings of the 2021 SIAM International Conference on Data Mining, pages 333–341, 2021.

- Abboud et al. [2021] Ralph Abboud, İsmail İlkan Ceylan, Martin Grohe, and Thomas Lukasiewicz. The surprising power of graph neural networks with random node initialization. In Proceedings of the 30th International Joint Conference on Artificial Intelligence, pages 2112–2118, 2021.

- Maron et al. [2019b] Haggai Maron, Ethan Fetaya, Nimrod Segol, and Yaron Lipman. On the universality of invariant networks. In Proceedings of the 36th International Conference on Machine Learning, pages 4363–4371, 2019b.

- Maron et al. [2019c] Haggai Maron, Heli Ben-Hamu, Hadar Serviansky, and Yaron Lipman. Provably powerful graph networks. In Advances in Neural Information Processing Systems, pages 2156–2167, 2019c.

- Keriven and Peyré [2019] Nicolas Keriven and Gabriel Peyré. Universal invariant and equivariant graph neural networks. In Advances in Neural Information Processing Systems, pages 7092–7101, 2019.

- Azizian and Lelarge [2021] Waïss Azizian and Marc Lelarge. Expressive power of invariant and equivariant graph neural networks. In Proceedings of the 9th International Conference on Learning Representations, 2021.

- Garg et al. [2020] Vikas Garg, Stefanie Jegelka, and Tommi Jaakkola. Generalization and representational limits of graph neural networks. In Proceedings of the 37th International Conference on Machine Learning, pages 3419–3430, 2020.

- Tahmasebi et al. [2020] Behrooz Tahmasebi, Derek Lim, and Stefanie Jegelka. Counting substructures with higher-order graph neural networks: Possibility and impossibility results. arXiv preprint arXiv:2012.03174, 2020.

- You et al. [2021] Jiaxuan You, Jonathan Gomes-Selman, Rex Ying, and Jure Leskovec. Identity-aware graph neural networks. In Proceedings of the 35th AAAI Conference on Artificial Intelligence, pages 10737–10745, 2021.

- Balcilar et al. [2021] Muhammet Balcilar, Pierre Héroux, Benoit Gaüzère, Pascal Vasseur, Sébastien Adam, and Paul Honeine. Breaking the limits of message passing graph neural networks. In Proceedings of the 38th International Conference on Machine Learning, pages 599–608, 2021.

- Barceló et al. [2021] Pablo Barceló, Floris Geerts, Juan Reutter, and Maksimilian Ryschkov. Graph neural networks with local graph parameters. In Advances in Neural Information Processing Systems, pages 25280–25293, 2021.

- Bouritsas et al. [2022] Giorgos Bouritsas, Fabrizio Frasca, Stefanos P Zafeiriou, and Michael M Bronstein. Improving graph neural network expressivity via subgraph isomorphism counting. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- Bodnar et al. [2021a] Cristian Bodnar, Fabrizio Frasca, Yuguang Wang, Nina Otter, Guido F Montúfar, Pietro Liò, and Michael Bronstein. Weisfeiler and Lehman go topological: Message passing simplicial networks. In Proceedings of the 38th International Conference on Machine Learning, pages 1026–1037, 2021a.

- Bodnar et al. [2021b] Cristian Bodnar, Fabrizio Frasca, Nina Otter, Yu Guang Wang, Pietro Liò, Guido F Montúfar, and Michael Bronstein. Weisfeiler and Lehman go cellular: CW networks. In Advances in Neural Information Processing Systems, pages 2625–2640, 2021b.

- Battaglia et al. [2018] Peter W Battaglia, Jessica B Hamrick, Victor Bapst, Alvaro Sanchez-Gonzalez, Vinícius Flores Zambaldi, Mateusz Malinowski, Andrea Tacchetti, David Raposo, Adam Santoro, Ryan Faulkner, et al. Relational inductive biases, deep learning, and graph networks. arXiv preprint arXiv:1806.01261, 2018.

- Al Hasan and Dave [2018] Mohammad Al Hasan and Vachik S Dave. Triangle counting in large networks: A review. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 8(2):e1226, 2018.

- Mitzenmacher and Upfal [2017] Michael Mitzenmacher and Eli Upfal. Probability and computing: Randomization and probabilistic techniques in algorithms and data analysis. Cambridge University Press, 2017.

- Brijder et al. [2019] Robert Brijder, Floris Geerts, Jan Van Den Bussche, and Timmy Weerwag. On the expressive power of query languages for matrices. ACM Transactions on Database Systems, 44(4):1–31, 2019.

- Geerts [2021] Floris Geerts. On the expressive power of linear algebra on graphs. Theory of Computing Systems, 65(1):179–239, 2021.

- Shervashidze et al. [2011] Nino Shervashidze, Pascal Schweitzer, Erik Jan Van Leeuwen, Kurt Mehlhorn, and Karsten M Borgwardt. Weisfeiler-Lehman graph kernels. Journal of Machine Learning Research, 12(77):2539–2561, 2011.

- Zhang et al. [2018] Muhan Zhang, Zhicheng Cui, Marion Neumann, and Yixin Chen. An end-to-end deep learning architecture for graph classification. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, pages 4438–4445, 2018.

- Kolouri et al. [2021] Soheil Kolouri, Navid Naderializadeh, Gustavo K Rohde, and Heiko Hoffmann. Wasserstein embedding for graph learning. In Proceedings of the 9th International Conference on Learning Representations, 2021.

- Morris et al. [2020] Christopher Morris, Nils M Kriege, Franka Bause, Kristian Kersting, Petra Mutzel, and Marion Neumann. TUDataset: A collection of benchmark datasets for learning with graphs. ICML 2020 Workshop on Graph Representation Learning and Beyond (GRL+), 2020. URL www.graphlearning.io.

- Dwivedi et al. [2020] Vijay Prakash Dwivedi, Chaitanya K Joshi, Thomas Laurent, Yoshua Bengio, and Xavier Bresson. Benchmarking graph neural networks. arXiv preprint arXiv:2003.00982, 2020.

- Bresson and Laurent [2017] Xavier Bresson and Thomas Laurent. Residual gated graph convnets. arXiv preprint arXiv:1711.07553, 2017.

- Chen et al. [2019] Zhengdao Chen, Soledad Villar, Lei Chen, and Joan Bruna. On the equivalence between graph isomorphism testing and function approximation with GNNs. In Advances in Neural Information Processing Systems, pages 15894–15902, 2019.

- Corso et al. [2020] Gabriele Corso, Luca Cavalleri, Dominique Beaini, Pietro Liò, and Petar Veličković. Principal neighbourhood aggregation for graph nets. In Advances in Neural Information Processing Systems, pages 13260–13271, 2020.

- West [2001] Douglas Brent West. Introduction to graph theory. Prentice Hall, 2001.

- Artin [2011] Michael Artin. Algebra. Pearson Prentice Hall, 2011.

- Birkhoff and Mac Lane [2017] Garrett Birkhoff and Saunders Mac Lane. A survey of modern algebra. CRC Press, 2017.

- Grohe [2017] Martin Grohe. Descriptive complexity, canonisation, and definable graph structure theory, volume 47. Cambridge University Press, 2017.

- Cai et al. [1992] Jin-Yi Cai, Martin Fürer, and Neil Immerman. An optimal lower bound on the number of variables for graph identification. Combinatorica, 12(4):389–410, 1992.

- Deo [2017] Narsingh Deo. Graph theory with applications to engineering and computer science. Courier Dover Publications, 2017.

- Harary and Manvel [1971] Frank Harary and Bennet Manvel. On the number of cycles in a graph. Matematickỳ časopis, 21(1):55–63, 1971.

- Chung et al. [2012] Kai-Min Chung, Henry Lam, Zhenming Liu, and Michael Mitzenmacher. Chernoff-Hoeffding bounds for Markov chains: Generalized and simplified. In Proceedings of the 29th International Symposium on Theoretical Aspects of Computer Science, pages 124–135, 2012.

- Haykin [2010] Simon Haykin. Neural networks and learning machines, 3/E. Pearson Education India, 2010.

- Chen et al. [2016] Xiaowei Chen, Yongkun Li, Pinghui Wang, and John CS Lui. A general framework for estimating graphlet statistics via random walk. Proceedings of the VLDB Endowment, 10(3):253–264, 2016.

- Hardiman and Katzir [2013] Stephen J Hardiman and Liran Katzir. Estimating clustering coefficients and size of social networks via random walk. In Proceedings of the 22nd International Conference on World Wide Web, pages 539–550, 2013.

- LeCun et al. [1998] Yann LeCun, Léon Bottou, Yoshua Bengio, and Patrick Haffner. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- Achanta et al. [2012] Radhakrishna Achanta, Appu Shaji, Kevin Smith, Aurelien Lucchi, Pascal Fua, and Sabine Süsstrunk. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Transactions on Pattern Analysis and Machine Intelligence, 34(11):2274–2282, 2012.

- Irwin et al. [2012] John J Irwin, Teague Sterling, Michael M Mysinger, Erin S Bolstad, and Ryan G Coleman. ZINC: A free tool to discover chemistry for biology. Journal of Chemical Information and Modeling, 52(7):1757–1768, 2012.

- Ioffe and Szegedy [2015] Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, pages 448–456, 2015.

- Glorot and Bengio [2010] Xavier Glorot and Yoshua Bengio. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the 13th International Conference on Artificial Intelligence and Statistics, pages 249–256, 2010.

- Kingma and Ba [2015] Diederik P Kingma and Jimmy Lei Ba. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, 2015.

- Hagberg et al. [2008] Aric Hagberg, Pieter Swart, and Daniel S Chult. Exploring network structure, dynamics, and function using NetworkX. In Proceedings of the 7th Python in Science Conference, pages 11–15, 2008.

- Paszke et al. [2019] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems, pages 8026–8037, 2019.

- Wang et al. [2019] Minjie Wang, Lingfan Yu, Da Zheng, Quan Gan, Yu Gai, Zihao Ye, Mufei Li, Jinjing Zhou, Qi Huang, Chao Ma, et al. Deep graph library: Towards efficient and scalable deep learning on graphs. ICLR Workshop on Representation Learning on Graphs and Manifolds, 2019.

Appendix A Background on Graph Theory

Given a graph $G = (V, E)$, a walk in $G$ is a finite sequence of alternating vertices and edges such as $v_0, e_1, v_1, e_2, \ldots, e_m, v_m$, where each edge $e_i = (v_{i-1}, v_i)$. A walk may have repeated edges. A trail is a walk in which all the edges are distinct. A path is a trail in which all vertices (hence all edges) are distinct (except, possibly, $v_0 = v_m$). A trail or path is closed if $v_0 = v_m$, and a closed path containing at least one edge is a cycle [52].

A Hamiltonian path is a path in a graph that passes through each vertex exactly once. A Hamiltonian cycle is a cycle in a graph that passes through each vertex exactly once. A Hamiltonian graph is a graph that contains a Hamiltonian cycle.

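Let $G = (V, E)$ and $G' = (V', E')$ be graphs. If $G' \subseteq G$ and $G'$ contains all the edges $(u, v) \in E$ with $u, v \in V'$, then $G'$ is an induced subgraph of $G$, and we say that $V'$ induces $G'$ in $G$.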

An empty graph is a graph whose edge-set is empty. A regular graph is a graph in which each vertex has the same degree. If each vertex has degree $r$, the graph is $r$-regular. A strongly regular graph in the family SRG($n, r, \lambda, \mu$) is an $r$-regular graph with $n$ vertices, where every two adjacent vertices have $\lambda$ common neighbors, and every two non-adjacent vertices have $\mu$ common neighbors.

A complete graph is a simple undirected graph in which every pair of distinct vertices is adjacent. We denote the complete graph on $n$ vertices by $K_n$. A tournament is a directed graph in which each edge of a complete graph is given an orientation. We denote the tournament on $n$ vertices by $\vec{K}_n$. A clique of a graph $G$ is a complete induced subgraph of $G$. A clique of size $k$ is called a $k$-clique.

The local clustering coefficient of a vertex quantifies how close its neighbors are to being a clique (complete graph). The local clustering coefficient $c_v$ of a vertex $v$ is given by the number of links between the vertices within its neighborhood $\mathcal{N}(v)$ divided by the number of links that could possibly exist between them, defined as $c_v = \frac{2\,|\{(u, w) \in E : u, w \in \mathcal{N}(v)\}|}{d_v (d_v - 1)}$ with $d_v = |\mathcal{N}(v)|$. This measure is 1 if every neighbor connected to $v$ is also connected to every other vertex within the neighborhood.
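The definition above can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the function name `local_clustering`, the adjacency-dictionary representation, and the convention of returning 0 for vertices of degree below 2 are choices made here, not taken from the paper.

```python
from itertools import combinations


def local_clustering(adj, v):
    """Local clustering coefficient of vertex v.

    adj maps each vertex to the set of its neighbours (simple undirected graph).
    """
    nbrs = adj[v]
    d = len(nbrs)
    if d < 2:
        return 0.0  # assumed convention: no pair of neighbours exists
    # Count the links that exist between pairs of neighbours of v.
    links = sum(1 for u, w in combinations(nbrs, 2) if w in adj[u])
    # Divide by the number of links that could possibly exist, d(d-1)/2.
    return 2.0 * links / (d * (d - 1))


# A triangle {0, 1, 2} with a pendant vertex 3 attached to vertex 0.
adj = {0: {1, 2, 3}, 1: {0, 2}, 2: {0, 1}, 3: {0}}
print(local_clustering(adj, 0), local_clustering(adj, 1))
```

Vertex 1 has coefficient 1 because its two neighbors are adjacent, whereas only one of the three possible links exists among the neighbors of vertex 0.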

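Let $G$ and $H$ be graphs. An isomorphism between $G$ and $H$ is a bijective map $\varphi: V(G) \to V(H)$ that maps pairs of connected vertices to pairs of connected vertices, and likewise for pairs of non-connected vertices, i.e., $(u, v) \in E(G)$ iff $(\varphi(u), \varphi(v)) \in E(H)$ for all $u$ and $v$ in $V(G)$.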

Appendix B Background on Group Theory

Since we deal with finite sets in this paper, all the following definitions are about finite groups.

For an arbitrary element $g$ in a group $G$, the order of $g$ is the smallest positive integer $m$ such that $g^m = e$, where $e$ is the identity element. $\{e, g, g^2, \ldots, g^{m-1}\}$ is the cyclic subgroup generated by $g$ and is often denoted by $\langle g \rangle$. A cyclic group is a group that is equal to one of its cyclic subgroups: $G = \langle g \rangle$ for some element $g$, and the element $g$ is called a generator. The cyclic group with $n$ elements is denoted by $\mathbb{Z}_n$ [53, 54].

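A permutation of a finite set $S$ is a bijective map from $S$ to itself. Cauchy’s two-line notation denotes such a permutation by listing the “natural” order of all the elements of $S$ in the first row and, for each one, its image below it in the second row: $\sigma = \begin{pmatrix} 1 & 2 & \cdots & n \\ \sigma(1) & \sigma(2) & \cdots & \sigma(n) \end{pmatrix}$. A cycle of length $k$ (or $k$-cycle) is a permutation $\sigma$ for which there exists an element $i$ in $S$ such that $i, \sigma(i), \sigma^2(i), \ldots, \sigma^{k-1}(i)$ are the only elements moved by $\sigma$. Cycle notation denotes such a cycle (or $k$-cycle) by $(i \ \sigma(i) \ \sigma^2(i) \ \cdots \ \sigma^{k-1}(i))$.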

A permutation group is a group whose elements are permutations of a given set $S$, with the group operation “$\circ$” being the composition of permutations. The permutation group on the set $S$ is denoted by $\mathrm{Perm}(S)$. A symmetric group is a group whose elements are all permutations of a given set $S$. The symmetric group on the set $S$ is denoted by $\mathrm{Sym}(S)$ [53]. Every permutation group is a subgroup of a symmetric group.

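A group action of a group $G$ on a set $X$ is a map $\alpha: G \times X \to X$, denoted by $(g, x) \mapsto \alpha(g, x)$ (with $\alpha(g, x)$ often shortened to $gx$ or $g \cdot x$), that satisfies the following two axioms:

a) identity: $e \cdot x = x$, for all $x \in X$, where $e$ is the identity element of $G$.

b) associative law: $(g \circ h) \cdot x = g \cdot (h \cdot x)$, for all $g, h \in G$ and $x \in X$, where $\circ$ denotes the operation or composition in $G$.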

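Let $G$ and $H$ be groups. A homomorphism is a map $f$ from $G$ to $H$ such that $f(ab) = f(a) f(b)$ for all $a$ and $b$ in $G$. An isomorphism from $G$ to $H$ is a bijective group homomorphism, i.e., a bijective map $f: G \to H$ such that $f(ab) = f(a) f(b)$ for all $a$ and $b$ in $G$ [53]. We use the symbol $\cong$ to denote that two groups $G$ and $H$ are isomorphic, i.e., $G \cong H$.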

Appendix C Definition of k-WL Test

There are different definitions of the $k$-dimensional Weisfeiler-Lehman ($k$-WL) test for $k \geq 2$; in this work, we follow the definition in Chen et al. [11]. Note that the $k$-WL test here is equivalent to the $k$-WL tests in [55, 22, 28, 30], and the $(k-1)$-WL test in [56] (Grohe [55] calls this version as -WL′). The $(k+1)$-WL test has been proven to be strictly more powerful than the $k$-WL test [56].

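The $k$-WL algorithm is a generalization of 1-WL: it colors tuples from $V^k$ instead of nodes. For any $k$-tuple $s = (i_1, \ldots, i_k) \in V^k$ and each $j \in [k] = \{1, \ldots, k\}$, define the $j$-th neighborhood

$$N_j(s) = \{(i_1, \ldots, i_{j-1}, u, i_{j+1}, \ldots, i_k) \mid u \in V\}.$$

That is, the $j$-th neighborhood $N_j(s)$ of the $k$-tuple $s$ is obtained by replacing the $j$-th component of $s$ with every node from $V$.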

Given a pair of graphs $G^{[1]}$ and $G^{[2]}$, we use the $k$-WL algorithm to test them for isomorphism. Suppose that the two graphs have the same number of vertices, since otherwise they can be told apart easily. Without loss of generality, we assume that they share the same set of vertex indices $V$ (but may differ in $E$). The $k$-WL test uses the following coloring procedure.

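1) For each of the graphs, at iteration 0, the test assigns an initial color in the color space $\Gamma$ to each $k$-tuple according to its atomic type, i.e., two $k$-tuples $s$ and $s'$ in $V^k$ get the same color if the subgraphs induced from the nodes of $s$ and $s'$ are isomorphic.

2) In each iteration $t > 0$, the test computes a $k$-tuple coloring $c_k^{(t)}: V^k \to \Gamma$. More specifically, let $c_k^{(t)}(s)$ denote the color of $s$ in $G^{[1]}$ assigned at the $t$-th iteration, and let $c_k'^{(t)}(s')$ denote the color assigned for $s'$ in $G^{[2]}$. Define

$$C_j^{(t)}(s) = \mathrm{HASH}_1^{(t)}\Big(\big\{\!\!\big\{ c_k^{(t-1)}(w) \mid w \in N_j(s) \big\}\!\!\big\}\Big),$$

where $\mathrm{HASH}_1^{(t)}$ is a hash function that maps injectively from the space of multisets of colors to some intermediate space. Then let

$$c_k^{(t)}(s) = \mathrm{HASH}_2^{(t)}\Big( c_k^{(t-1)}(s), \big( C_1^{(t)}(s), \ldots, C_k^{(t)}(s) \big) \Big),$$

where $\mathrm{HASH}_2^{(t)}$ maps injectively from its input space to the color space $\Gamma$, and $c_k'^{(t)}(s')$ are updated iteratively in the same way.

3) The test will terminate and return the result that the two graphs are not isomorphic if the following two multisets differ at some iteration $t$:

$$\big\{\!\!\big\{ c_k^{(t)}(s) \mid s \in V^k \big\}\!\!\big\} \neq \big\{\!\!\big\{ c_k'^{(t)}(s') \mid s' \in V^k \big\}\!\!\big\}.$$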
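The coloring procedure can be sketched in Python for small unlabeled graphs. This is a minimal sketch under stated assumptions rather than a reference implementation: the names `atomic_type` and `k_wl_distinguishes` are hypothetical, the injective hash functions are emulated by a lookup table shared by both graphs, and the atomic type of a tuple is encoded as the equality and adjacency pattern of its components.

```python
from collections import Counter
from itertools import product


def atomic_type(adj, s):
    """Equality/adjacency pattern of the ordered tuple s (its initial colour)."""
    k = len(s)
    return tuple((s[a] == s[b], s[b] in adj[s[a]])
                 for a in range(k) for b in range(k))


def k_wl_distinguishes(adj1, adj2, k=2, rounds=3):
    """Return True if the colour histograms of the two graphs ever differ."""
    graphs = (adj1, adj2)
    nodes = [sorted(g) for g in graphs]
    tuples = [list(product(ns, repeat=k)) for ns in nodes]
    colors = [{s: atomic_type(g, s) for s in ts}
              for g, ts in zip(graphs, tuples)]
    for _ in range(rounds + 1):
        # Step 3: compare the multisets of tuple colours.
        if Counter(colors[0].values()) != Counter(colors[1].values()):
            return True
        palette = {}  # shared injective relabelling (stands in for the hashes)
        refined = []
        for g in range(2):
            new = {}
            for s in tuples[g]:
                signature = [colors[g][s]]
                for j in range(k):
                    # Multiset of colours over the j-th neighbourhood of s.
                    ms = Counter(colors[g][s[:j] + (u,) + s[j + 1:]]
                                 for u in nodes[g])
                    signature.append(frozenset(ms.items()))
                new[s] = palette.setdefault(tuple(signature), len(palette))
            refined.append(new)
        colors = refined
    return False


# A hexagon versus two disjoint triangles: both are 2-regular on 6 nodes.
hexagon = {i: {(i - 1) % 6, (i + 1) % 6} for i in range(6)}
triangles = {0: {1, 2}, 1: {0, 2}, 2: {0, 1}, 3: {4, 5}, 4: {3, 5}, 5: {3, 4}}
print(k_wl_distinguishes(hexagon, triangles, k=2))
print(k_wl_distinguishes(hexagon, triangles, k=3))
```

On this pair the sketch does not separate the graphs for $k = 2$, while for $k = 3$ the initial coloring already differs because only one of the graphs contains 3-tuples that induce a triangle.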

Appendix D Distinguishing Non-Isomorphic Graph Pairs: Permutation-Sensitive vs. Permutation-Invariant Aggregation Functions

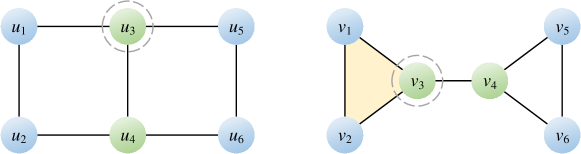

Let be an arbitrary aggregation function. For a node , let ( for blue, for green) denote the initial node feature, denote the feature transformed by . In the initial stage, we have:

Figure 3 illustrates a pair of non-isomorphic graphs that 2-WL test and most permutation-invariant aggregation functions fail to distinguish. Suppose is permutation-invariant, we take the sum aggregator SUM as an example to illustrate this process. After the first round of iteration, the transformed feature of each node is:

We can find that the distributions of node features of these two graphs are the same. Similarly, after each round of iteration, these two graphs always produce the same distributions of node features. Hence we can conclude that the 2-WL test and the permutation-invariant function SUM fail to distinguish these two graphs.

In contrast, suppose is permutation-sensitive, we take a generic permutation-sensitive aggregator as an example to illustrate its process. Here is the -th input node feature, is the corresponding transformed feature with , and the learnable parameter measures the pairwise correlation between and . For the left graph , we focus on node . Let the input ordering of neighboring nodes be , i.e., , then only encodes the pairwise correlation between and . Thus, we have

For the right graph , we focus on node . Let the input ordering of neighboring nodes be , i.e., , then also encodes the pairwise correlation between and . Thus, we have

After the first round of iteration, the node feature of differs from the of . Hence we can conclude that the permutation-sensitive aggregation function can distinguish these two graphs. Moreover, the weight ratio of and in is , which is smaller than that in , i.e., . This fact indicates that, in , focuses more on encoding the pairwise correlation between and . In contrast, in , focuses more on encoding the pairwise correlation between and , thereby exploiting the triangular substructure such as . It is worth noting that when , the function is and degenerates to the permutation-invariant function SUM, resulting in .
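The contrast between the two kinds of aggregators can be illustrated with a toy Python sketch. The aggregator below is a hypothetical stand-in chosen for illustration, not the function used in this appendix: it adds to the plain sum a term, weighted by an assumed scalar `w`, over products of consecutive inputs in the given (cyclic) ordering.

```python
def pairwise_aggregate(xs, w):
    """Toy aggregator: sum of inputs plus w times products of consecutive inputs.

    The consecutive pairs depend on the input ordering, so the result is
    permutation-sensitive whenever w != 0 (hypothetical example only).
    """
    n = len(xs)
    total = sum(xs)
    pairwise = sum(xs[i] * xs[(i + 1) % n] for i in range(n))
    return total + w * pairwise


order_a = [1.0, 2.0, 3.0, 4.0]
order_b = [1.0, 3.0, 2.0, 4.0]  # same multiset of features, different ordering

print(pairwise_aggregate(order_a, 0.5), pairwise_aggregate(order_b, 0.5))
print(pairwise_aggregate(order_a, 0.0), pairwise_aggregate(order_b, 0.0))
```

With a nonzero weight the two orderings yield different outputs, and with a zero weight the toy aggregator reduces to SUM and the outputs coincide.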

Appendix E Proof of Theorem 1

Theorem 1. Let $n$ denote the number of 1-hop neighboring nodes around the central node $v$. There are $\lfloor \frac{n-1}{2} \rfloor$ kinds of arrangements in total, satisfying that their corresponding 2-ary dependencies are disjoint. Meanwhile, after at least $\lfloor \frac{n}{2} \rfloor$ arrangements (including the initial one), all 2-ary dependencies have been covered at least once.

Proof.

Construct a simple undirected graph $G' = (V', E')$, where $V'$ denotes the $n$ neighboring nodes (abbreviated as nodes in the following) around the central node $v$, and $E'$ represents an edge set in which each edge indicates that the corresponding 2-ary dependency has been covered in some arrangement. Thus, each arrangement corresponds to a Hamiltonian cycle in graph $G'$. For any two arrangements, detecting whether their corresponding 2-ary dependencies are disjoint is analogous to finding two edge-disjoint Hamiltonian cycles. Since every pair of nodes can form a 2-ary dependency, the first problem can be translated into finding the maximum number of edge-disjoint Hamiltonian cycles in a complete graph $K_n$, and the second problem can be translated into finding the minimum number of Hamiltonian cycles needed to cover a complete graph $K_n$.

Since a $K_n$ has $\frac{n(n-1)}{2}$ edges and each Hamiltonian cycle has $n$ edges, there are at most $\lfloor \frac{n-1}{2} \rfloor$ edge-disjoint Hamiltonian cycles in a $K_n$. In addition, we can specifically construct $\lfloor \frac{n-1}{2} \rfloor$ edge-disjoint Hamiltonian cycles as follows. If $n$ is odd, keep the nodes fixed on a circle with node 1 at the center, and rotate the node numbers on the circle clockwise by $360^\circ/(n-1)$, while the graph structure always remains unchanged as the initial arrangement shown in Figure 4(a). Each rotation can be formulated as the following permutation:

Observe that each rotation generates a new Hamiltonian cycle containing completely different edges from before. Thus we have $\frac{n-3}{2}$ new Hamiltonian cycles with all edges disjoint from the ones in Figure 4(a) and among themselves [57]. If $n$ is even, the node arrangement can be initialized as shown in Figure 4(b), and $\frac{n-4}{2}$ new Hamiltonian cycles can be constructed successively in a similar way. We thus conclude that there are $\lfloor \frac{n-1}{2} \rfloor$ kinds of arrangements in total, satisfying that their corresponding 2-ary dependencies are disjoint.

Furthermore, if $n$ is odd, $K_n$ has $\frac{n(n-1)}{2}$ edges, which is divisible by the length $n$ of each Hamiltonian cycle. Therefore, we can exactly cover all edges by the above $\frac{n-1}{2}$ kinds of arrangements. On the contrary, if $n$ is even, $K_n$ has $\frac{n(n-1)}{2}$ edges, which is indivisible by the length $n$ of each Hamiltonian cycle, leaving $\frac{n}{2}$ edges uncovered by the above $\frac{n-2}{2}$ kinds of arrangements. Thus we continue to perform the permutation once more, i.e., $\frac{n}{2}$ kinds of arrangements in total, to cover all edges, at the cost of $\frac{n}{2}$ edges being covered twice.
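As a worked check of this counting argument, consider one odd and one even case, writing $n$ for the number of neighboring nodes (the specific values 7 and 6 are chosen here for illustration only):

$$
\begin{aligned}
n = 7:&\quad |E(K_7)| = \tfrac{7 \cdot 6}{2} = 21 = 3 \cdot 7, \quad \text{so } \tfrac{n-1}{2} = 3 \text{ edge-disjoint Hamiltonian cycles cover every edge exactly once;} \\
n = 6:&\quad |E(K_6)| = \tfrac{6 \cdot 5}{2} = 15, \quad \tfrac{n-2}{2} = 2 \text{ edge-disjoint cycles cover } 2 \cdot 6 = 12 \text{ edges, leaving } 15 - 12 = 3 = \tfrac{n}{2}; \\
&\quad \text{a third cycle brings the total to } \tfrac{n}{2} = 3 \text{ arrangements and } 3 \cdot 6 = 18 \text{ covered edge slots, so } 18 - 15 = 3 \text{ edges are covered twice.}
\end{aligned}
$$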

As discussed in the main body, these arrangements and the corresponding Hamiltonian cycles are modeled by the permutation-sensitive function in a directed manner. In addition, we also expect to reverse these directed Hamiltonian cycles by performing the permutation successively, thereby transforming them into an undirected manner. However, the permutation above cannot satisfy this requirement if $n$ is even. Thus, we propose to revise the permutation into the following one:

where is the same as when is odd, but a little different when is even. If is even, is an -cycle, but is an -cycle. The corresponding initial node arrangement after revision is shown in Figure 4(c). After adding a virtual node 0 at the center in Figure 4(c), becomes the same as with in Figure 4(a), which can cover all edges with kinds of arrangements. Moreover, after performing for times in succession, it can cover a complete graph bi-directionally but fails.

In conclusion, after performing or for times in succession (excluding the initial one), all 2-ary dependencies have been covered at least once.
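For odd $n$, the rotation construction in the proof can be checked numerically with a short Python sketch. Several details are assumptions made for illustration: the function names are hypothetical, the code labels the fixed center as node 0 (the proof uses node 1), and the zigzag initial arrangement is the classical layout for this kind of construction, assumed here to play the role of Figure 4(a).

```python
from itertools import combinations


def rotation_cycles(n):
    """Edge-disjoint Hamiltonian cycles of K_n for odd n >= 3.

    Node 0 is the fixed centre; nodes 1..n-1 sit on a circle and are
    rotated by one position per step (assumed zigzag initial arrangement).
    """
    if n < 3 or n % 2 == 0:
        raise ValueError("this sketch only handles odd n >= 3")
    m = (n - 1) // 2
    zigzag = [0]                      # circle positions 0, 1, -1, 2, -2, ..., m
    for t in range(1, m):
        zigzag += [t, 2 * m - t]
    zigzag.append(m)
    cycles = []
    for r in range(m):                # m = (n - 1) / 2 arrangements in total
        ring = [1 + (p + r) % (2 * m) for p in zigzag]
        cycles.append([0] + ring)     # centre -> zigzag path -> back to centre
    return cycles


def cycle_edges(cycle):
    """Undirected edges of a closed cycle given as a list of nodes."""
    size = len(cycle)
    return {frozenset((cycle[i], cycle[(i + 1) % size])) for i in range(size)}


def covers_complete_graph_exactly(n):
    """True if the cycles are pairwise edge-disjoint and cover all of K_n."""
    edge_sets = [cycle_edges(c) for c in rotation_cycles(n)]
    union = set().union(*edge_sets)
    all_edges = {frozenset(p) for p in combinations(range(n), 2)}
    disjoint = sum(len(e) for e in edge_sets) == len(union)
    return disjoint and union == all_edges


print(rotation_cycles(5))
print(all(covers_complete_graph_exactly(n) for n in (3, 5, 7, 9, 11)))
```

Each of the $\frac{n-1}{2}$ arrangements is a Hamiltonian cycle with $n$ edges, so disjointness together with full coverage matches the count $\frac{n(n-1)}{2}$ used in the proof.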

Appendix F Proof of Lemma 2

Theorem F.1.

The order of any permutation is the least common multiple of the lengths of its disjoint cycles [54].

Proposition F.2.

The order of a cyclic group is equal to the order of its generator [53].

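Lemma 2. For the permutation $\sigma$ of $n$ indices, $G = \{e, \sigma, \sigma^2, \ldots, \sigma^{n-2}\}$ is a permutation group isomorphic to the cyclic group $\mathbb{Z}_{n-1}$ if $n$ is odd. And $G = \{e, \sigma, \sigma^2, \ldots, \sigma^{n-1}\}$ is a permutation group isomorphic to the cyclic group $\mathbb{Z}_n$ if $n$ is even.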

Proof.

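If $n$ is odd, we first find the order of the permutation $\sigma$. Let $\sigma_1$ be the cycle that fixes the node at the center and $\sigma_2$ be the cycle that rotates the remaining $n-1$ nodes on the circle; then the permutation $\sigma$ can be represented as the product of these two disjoint cycles, i.e., $\sigma = \sigma_1 \sigma_2$. Here $\sigma_1$ is a 1-cycle of length 1, and $\sigma_2$ is an $(n-1)$-cycle of length $n-1$. Using Theorem F.1, the order of the permutation $\sigma$ is the least common multiple of 1 and $n-1$: $\mathrm{lcm}(1, n-1) = n-1$, which indicates that $\sigma^{n-1} = e$. Therefore, $G = \{e, \sigma, \sigma^2, \ldots, \sigma^{n-2}\}$ is a permutation group generated by $\sigma$, i.e., $G = \langle \sigma \rangle$. According to the definition of the cyclic group (see Appendix B), $G$ is isomorphic to a cyclic group. By Proposition F.2, the order of the group $G$ is equal to the order of its generator $\sigma$, i.e., $|G| = n-1$. Thus, $G$ is a permutation group isomorphic to the cyclic group $\mathbb{Z}_{n-1}$.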

Similarly, we can prove that $G = \{e, \sigma, \sigma^2, \ldots, \sigma^{n-1}\}$ is a permutation group isomorphic to the cyclic group $\mathbb{Z}_n$ if $n$ is even.
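Theorem F.1 and the order argument used in this proof can be checked with a small Python sketch. The permutation `sigma` below is an illustrative example for $n = 5$ with one fixed point and one 4-cycle; it is an assumption made for the example and is not copied from the paper's equations, and the function names are hypothetical.

```python
from math import gcd


def cycle_lengths(perm):
    """Lengths of the disjoint cycles of perm (a dict element -> image)."""
    seen, lengths = set(), []
    for start in perm:
        if start in seen:
            continue
        length, x = 0, start
        while x not in seen:
            seen.add(x)
            x = perm[x]
            length += 1
        lengths.append(length)
    return lengths


def order(perm):
    """Order of perm: least common multiple of its cycle lengths (Theorem F.1)."""
    result = 1
    for length in cycle_lengths(perm):
        result = result * length // gcd(result, length)
    return result


def power(perm, k):
    """k-fold composition of perm with itself (k = 0 gives the identity)."""
    result = {x: x for x in perm}
    for _ in range(k):
        result = {x: perm[result[x]] for x in perm}
    return result


# Assumed example for n = 5: node 1 is fixed, the other four nodes form one cycle.
sigma = {1: 1, 2: 4, 3: 2, 4: 5, 5: 3}
identity = {x: x for x in sigma}

print(sorted(cycle_lengths(sigma)), order(sigma))
print(power(sigma, order(sigma)) == identity)
```

Here the cycle lengths are 1 and 4, so the order is $\mathrm{lcm}(1, 4) = 4 = n - 1$, and the powers $e, \sigma, \sigma^2, \sigma^3$ are pairwise distinct.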

Theorem F.3 (Cayley’s Theorem).

Every finite group is isomorphic to a permutation group [53].

The conclusion of Lemma 2 is also consistent with Cayley’s Theorem, one of the most fundamental results in group theory.

Appendix G Proof of Corollary 3 and the Diagram of Group Action

Corollary 3. The map denoted by is a group action of on .

Proof.

Let be the identity element of and be the identity permutation. And let denote the composition in . For all and , we have

Thus, the map defines a group action of the permutation group on the set .
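The two axioms checked in this proof (the identity acts trivially, and acting by a composition equals acting in succession) can be verified exhaustively on a small case. The sketch below assumes the natural action of a permutation on an index, g · s = g(s), and uses a hypothetical cyclic group generated by a 5-cycle; the function names are illustrative only.

```python
from itertools import product


def compose(g, h):
    """Composition g∘h of permutations stored as tuples: (g∘h)(s) = g[h[s]]."""
    return tuple(g[h[s]] for s in range(len(h)))


def act(g, s):
    """Assumed natural action of a permutation on an index: g · s = g(s)."""
    return g[s]


def is_group_action(group, points):
    """Check the identity and compatibility axioms for every element and point."""
    identity = tuple(range(len(points)))
    identity_ok = all(act(identity, s) == s for s in points)
    compatible = all(
        act(compose(g, h), s) == act(g, act(h, s))
        for g, h in product(group, repeat=2)
        for s in points
    )
    return identity_ok and compatible


# Hypothetical example: the cyclic group generated by the 5-cycle (0 1 2 3 4).
n = 5
generator = tuple((i + 1) % n for i in range(n))
group, current = [], tuple(range(n))
for _ in range(n):
    group.append(current)
    current = compose(generator, current)
points = list(range(n))
print(is_group_action(group, points))
```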

To better understand Lemma 2 and Corollary 3, we provide diagrams to illustrate the group actions of the permutation groups and when and , respectively. As shown in Figures 5(a) and 5(b), the overall frameworks with big light-gray circles and cyan arrows represent the Cayley diagrams of the permutation groups and constructed by Lemma 2, respectively. The center of each subfigure presents the corresponding generator . Each big light-gray circle represents an element (i.e., a permutation) of group , marked at the center of the circle. And each cyan arrow indicates the relationship exists between two group elements . After acts on the elements of the set , the corresponding images are presented as the colored numbers next to the big light-gray circle. Finally, the 2-ary dependencies (colored arrows) between neighboring nodes (small dark-gray circles) are modeled according to the action results of , shown in each big light-gray circle.

Appendix H Proofs About Incidence Triangles

H.1 Proof of Eq. (6)

Proof.

Let , , where and denote the element of and , respectively. Since equals 1 iff nodes and are adjacent in , equals the number of walks of length 2 from nodes to in . In addition, a walk of length 2 from to and an edge from to form a triangle containing both and . Therefore, the element of equals , which indicates how many triangles contain both and . We can use a sum vector to sum up each row of and get a result vector, whose -th element gives twice the number of incidence triangles of node . Here the “twice” comes from the fact that each incidence triangle over node has two walks of length 2 starting from node , that is, and . Hence after dividing each element of the result vector by 2, we finally obtain .

For the second equation, we have

Remark. The -th diagonal entry of is equal to twice the number of triangles in which the -th node is contained [41]. In addition, each triangle has three vertices. Hence we can divide the sum of the diagonal entries by 6 to obtain the total number of triangles in graph , i.e., [58].

For directed graphs, we also have similar results:

where and its -th element denotes the number of directed incidence triangles over node .
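For the undirected case, the counting argument of this subsection can be checked numerically. The sketch below, written in plain Python with hypothetical helper names, takes an adjacency matrix `A`, forms the element-wise product of the squared adjacency matrix with `A`, sums each row and halves the result to obtain the incidence triangles per node, and divides the trace of the cubed adjacency matrix by 6 to obtain the total number of triangles. The example graph is an assumption chosen for illustration.

```python
def matmul(X, Y):
    """Product of two square matrices given as nested lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def incidence_triangles(A):
    """Per-node triangle counts: row sums of (A^2 ⊙ A), divided by 2."""
    n = len(A)
    A2 = matmul(A, A)
    return [sum(A2[i][j] * A[i][j] for j in range(n)) // 2 for i in range(n)]


def total_triangles(A):
    """Total number of triangles: trace(A^3) / 6."""
    A3 = matmul(matmul(A, A), A)
    return sum(A3[i][i] for i in range(len(A))) // 6


# Hypothetical example: triangle 0-1-2 with a pendant node 3 attached to node 2.
A = [
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
]
tau = incidence_triangles(A)
print(tau, total_triangles(A))
```

Summing the per-node counts and dividing by 3 gives the same total, since each triangle is incident to exactly three nodes.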

H.2 Proof of Theorem 4

Theorem H.1 (Chernoff-Hoeffding Bound for Discrete Time Markov Chain [59]).

Let be an ergodic Markov chain with state space and stationary distribution . Let be its -mixing time for . Let denote an -step random walk on starting from an initial distribution on , i.e., . Define . For every step , let be a weight function such that the expectation for all . Define the total weight of the walk by . There exists some constant (which is independent of , and ) such that for

or equivalently

Theorem H.2.

Any nonlinear dynamic system may be approximated by a recurrent neural network to any desired degree of accuracy and with no restrictions imposed on the compactness of the state space, provided that the network is equipped with an adequate number of hidden neurons [60].

Theorem 4. Let denote the feature inputs on graph , and be a general GNN model with RNN aggregators. Suppose that is initialized as the degree of node , and each node is distinguishable. For any and , there exists a parameter setting for so that after samples,

where is the final output value generated by and is the number of incidence triangles.

Proof.

Without loss of generality, we discuss how to estimate the number of incidence triangles for an arbitrary node based on its neighbors . Let , and let denote the subgraph induced by , with an adjacency matrix . We add a symbol “” to all notations of the induced subgraph to distinguish them from those of graph . For each node , denotes the degree of in graph , denotes the number of incidence triangles of in graph . In particular, , . Our goal is to estimate for an arbitrary node in graph , which is equal to in graph .

A simple random walk (SRW) with steps on graph , denoted by , is defined as follows: start from an initial node in , then move to one of its neighboring nodes chosen uniformly at random, and repeat this process times. This random walk on graph can be viewed as a finite Markov chain with the state space , and the transition probability matrix of this Markov chain is defined as

Let denote the sum of degrees in graph . After many random walk steps, the probability converges to , and the vector is called the stationary distribution of this random walk.
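The stationarity claim can be checked exactly on a small graph. The sketch below assumes the standard form of these objects for a simple random walk on a connected undirected graph: the transition probability from u to v is 1/deg(u) when the two nodes are adjacent, and the stationary probability of v is deg(v) divided by the sum of all degrees. The function names and the example graph are hypothetical.

```python
from fractions import Fraction


def transition_matrix(A):
    """P[u][v] = 1/deg(u) if u and v are adjacent, else 0 (no isolated nodes)."""
    degrees = [sum(row) for row in A]
    return [[Fraction(a, d) for a in row] for row, d in zip(A, degrees)]


def degree_distribution(A):
    """Candidate stationary distribution: deg(v) / (sum of all degrees)."""
    degrees = [sum(row) for row in A]
    total = sum(degrees)
    return [Fraction(d, total) for d in degrees]


def step(dist, P):
    """One step of the chain applied to a distribution (row vector times P)."""
    n = len(P)
    return [sum(dist[u] * P[u][v] for u in range(n)) for v in range(n)]


# Hypothetical example: triangle 0-1-2 with a pendant node 3 attached to node 2.
A = [
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
]
P = transition_matrix(A)
pi = degree_distribution(A)
print(step(pi, P) == pi)
```

Exact rational arithmetic is used so that the fixed-point check does not depend on a floating-point tolerance.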

The mixing time of a Markov chain is the number of steps it takes for a random walk to approach its stationary distribution. We adopt the definition in [59, 61, 41] and define the mixing time as follows:

where is the stationary distribution of the Markov chain defined above, is the initial distribution when starting from state , is the transition matrix after steps, and is the variation distance between two distributions.

Later on, we will exploit node samples taken from a random walk to construct an estimator , then use the mixing time based Chernoff-Hoeffding bound [59] to compute the number of steps/samples needed, thereby guaranteeing that our estimator is within of the true value with the probability of at least .

Given a random walk on graph , we define a new variable for every , then we have

| (7) |

The second equality holds because there are equal probability combinations of , out of which only combinations form a triangle or its reverse , where is connected to , i.e., .

To estimate , we introduce two variables and , defined as follows:

Using the linearity of expectation and Eq. (7), we obtain

| (8) |

Similarly, we have

| (9) |

Recall that is a subgraph induced by , where are neighbors of an arbitrary node . Therefore, the maximum degree of graph is , which is equal to . In addition, we have , and . Substituting them in Eq. (8) and Eq. (9), we get

| (10) |

and

| (11) |

From Eq. (10) and Eq. (11) we can isolate and get

| (12) |

Since is the feature input, the coefficient can be considered as a constant factor here. Intuitively, both and converge to their expected values, and thus the estimator converges to as well. Next, we will find the number of steps/samples for convergence.

Since in only depends on a 3-node history, we observe a related Markov chain that remembers the three latest visited nodes. Accordingly, has states, and has the same transition probability as in . Define each state for . Let such that all values of are in . By Eq. (7), Eq. (8), and Eq. (10), we have . Define , assume that thus . By Theorem H.1 and Eq. (10), we have

| (13) |

Extracting from , we obtain , where , and are all constants.

Let , by Eq. (9) and Eq. (11) we have . Define , assume that thus . By Theorem H.1 and Eq. (11), we have

| (14) |

Extracting from , we obtain , where , and are all constants.

Since (see Appendix A in [62] for details), choose . Eq. (13) and Eq. (14) find the number of steps/samples , which guarantees both and are within of their expected values with the probability of at least . Since the probability of or deviating from their expected value is at most , the probability of either or deviating is at most :

The first line is a summary of Eq. (13) and Eq. (14). The inequalities “” hold due to Eq. (12), and the fact of both and when . We thus conclude that after samples, .

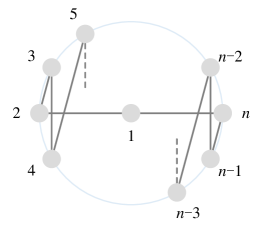

However, if and is a star graph, the number of samples . To avoid that, we add an artificial node and connect it to all nodes in , as illustrated in Figure 6. Since , , we only need to subtract a from the estimated result , and the number of samples can then be reduced to .

We have proved that we can estimate the number of incidence triangles for an arbitrary node based on its neighbors by a random walk. Considering the random walk as a nonlinear dynamic system, the universal approximation ability of RNNs (Theorem H.2) implies that this random walk can be approximated by an RNN to any desired degree of accuracy. Therefore, if the input sequence of the RNN follows the random walk above, the RNN aggregator can mimic this random walk on the subgraph induced by and its 1-hop neighbors when aggregating, and finally output . This completes the proof.

H.3 Analysis of GraphSAGE

Theorem H.3.

Let denote the input features for Algorithm 1 (proposed in GraphSAGE) on graph , where is any compact subset of . Suppose that there exists a fixed positive constant such that for all pairs of nodes. Then we have that there exists a parameter setting for Algorithm 1 such that after iterations

where are final output values generated by Algorithm 1 and are node clustering coefficients [5].

According to Theorem H.3, GraphSAGE can approximate the clustering coefficients in a graph to arbitrary precision. In addition, since GraphSAGE with LSTM aggregators is a special case of our proposed Theorem 4, it can also approximate the number of incidence triangles to arbitrary precision. In fact, the number of incidence triangles is related to the local clustering coefficient . More specifically, . Therefore, the conclusion of Theorem 4 is consistent with that of Theorem H.3. However, Theorem 4 reveals that the required samples are related to and proportional to the mixing time , leading to a practically prohibitive aggregation complexity.
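To make the link between incidence triangles and the local clustering coefficient concrete, the sketch below assumes the standard definition of the local clustering coefficient for an undirected graph: twice the number of incidence triangles of a node divided by d(d - 1), where d is the node degree. The adjacency-set representation, the function names, and the convention of returning 0 for nodes of degree below 2 are choices made for this illustration.

```python
from fractions import Fraction
from itertools import combinations


def incidence_triangles(adj, v):
    """Number of adjacent pairs among the neighbors of v."""
    return sum(1 for a, b in combinations(sorted(adj[v]), 2) if b in adj[a])


def local_clustering(adj, v):
    """Assumed standard definition: 2 * triangles / (d * (d - 1))."""
    d = len(adj[v])
    if d < 2:
        return Fraction(0)  # convention for nodes with fewer than two neighbors
    return Fraction(2 * incidence_triangles(adj, v), d * (d - 1))


# Hypothetical example: triangle 0-1-2 with a pendant node 3 attached to node 2.
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}
coefficients = {v: local_clustering(adj, v) for v in adj}
print(coefficients)
```

Given the node degree, either quantity determines the other, which is why approximating one to arbitrary precision is consistent with approximating the other.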

To overcome this problem and improve the efficiency, GraphSAGE performs neighborhood sampling and suggests sampling 2-hop neighborhoods for each node. Suppose the neighborhood sample sizes of 1-hop and 2-hop are and , then the sampling complexity is . Accordingly, the memory and time complexity of GraphSAGE with LSTM are and .

Appendix I Proof of Proposition 5

Theorem I.1.

2-WL and MPNNs cannot induced-subgraph-count any connected pattern with 3 or more nodes [11].

Lemma I.2.

Proposition 5. PG-GNN is strictly more powerful than the 2-WL test and not less powerful than the 3-WL test.

Proof.

We first verify that the GIN (with the equivalent expressive power as the 2-WL test) [7] can be instantiated by a GNN model with RNN aggregators (including our proposed PG-GNN). Consider a single layer of GIN:

| (15) |

where has a linear mapping and a bias term . Without loss of generality, we take the Simple Recurrent Network (SRN) [15] as the RNN aggregator in Eq. (3), formulated as follows:

Let , , , the initial state , the activation function be an identity function. And let the input sequence of the RNN aggregator be an arbitrarily ordered sequence of the set . Then any GIN with Eq. (15) can be instantiated by a GNN model with RNN aggregators (in particular, a PG-GNN with Eq. (3)), which implies that the permutation-sensitive GNNs can be at least as powerful as the 2-WL test.
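The reduction from a recurrent aggregator to sum aggregation can be illustrated with a minimal sketch. It assumes a simple recurrent update of the form h_t = W x_t + U h_{t-1} + b with identity activation and zero initial state, and the simplest parameter choice (W and U equal to the identity matrix, b equal to zero); these are assumptions for illustration and may differ from the exact parameter setting used in the proof. Under this choice the final state is the sum of the inputs, so every ordering of the neighbors yields the same output.

```python
from itertools import permutations


def matvec(M, x):
    """Matrix-vector product for nested-list matrices."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def srn_aggregate(sequence, W, U, b):
    """Run h_t = W x_t + U h_{t-1} + b (identity activation) from h_0 = 0."""
    h = [0] * len(b)
    for x in sequence:
        h = [wx + uh + bi for wx, uh, bi in zip(matvec(W, x), matvec(U, h), b)]
    return h


dim = 2
identity = [[1 if i == j else 0 for j in range(dim)] for i in range(dim)]
zero_bias = [0] * dim

# Hypothetical neighbor features of a central node.
neighbors = [[1, 0], [2, 5], [-3, 4]]

# Final hidden state for every possible ordering of the neighbors.
outputs = {
    tuple(srn_aggregate(order, identity, identity, zero_bias))
    for order in permutations(neighbors)
}
print(outputs)
```

With general weights and a nonlinear activation the output does depend on the ordering, which is the permutation sensitivity that the rest of the proof exploits.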

Next, we prove that PG-GNN is strictly more powerful than MPNNs and the 2-WL test from the perspective of substructure counting. Without loss of generality, we take an arbitrary node into consideration. According to the definition of incidence triangles and the fact that they always appear in the 1-hop neighborhood of the central node, the number of connections between neighboring nodes of the central node equals the number of incidence triangles over . Theorem 1 ensures that all the 2-ary dependencies can be modeled by Eq. (3). If we aim to capture the connections between two arbitrary neighbors of the central node, we can use an MSE loss between the predicted and ground-truth counting values to guide our model to learn the correct 2-ary dependencies, thereby capturing the correct connections and counting the number of connections between neighboring nodes. If we instead focus on specific downstream tasks (e.g., graph classification), these 2-ary dependencies will be learned adaptively with the guidance of a specific loss function (e.g., cross-entropy loss). Thus PG-GNN is capable of counting incidence triangles.³ Moreover, since the incidence 4-cliques always appear in the 1-hop neighborhood of the central node and every 4-clique is entirely composed of triangles, PG-GNN can also leverage 2-ary dependencies to count incidence 4-cliques, similar to counting incidence triangles. Thus PG-GNN can count all 3-node graphlets ( , ), even 4-cliques ( ) incident to node .

³ In fact, since PG-GNN can count incidence triangles, it is also capable of counting all incidence 3-node graphlets. There are only two types of 3-node graphlets, i.e., wedges ( ) and triangles ( ), let be the number of incidence triangles over and be the number of 1-hop neighbors, then we have incidence wedges.

In addition, Chen et al. [11] proposed Theorem I.1, which implies that 2-WL and MPNNs cannot count any connected induced subgraph with 3 or more nodes. Since the incidence wedges, triangles, and 4-cliques are all connected induced subgraphs with 3 or more nodes, the above arguments demonstrate that the expressivity of PG-GNN goes beyond the 2-WL test and MPNNs.

To round off the proof, we finally prove that PG-GNN is not less powerful than the 3-WL test. Consider a pair of strongly regular graphs in the family SRG(16,6,2,2): the 4×4 Rook's graph and the Shrikhande graph. As illustrated in Figure 7, only the Rook's graph (left) possesses 4-cliques (some are emphasized by colors), whereas the Shrikhande graph (right) possesses no 4-cliques. Since PG-GNN is capable of counting incidence 4-cliques, our approach can distinguish this pair of strongly regular graphs. However, by virtue of Lemma I.2 and the fact that 2-FWL is equivalent to 3-WL [28], the 3-WL test fails to distinguish them. Thus PG-GNN is not less powerful than the 3-WL test.⁴

⁴ More accurately, PG-GNN is outside the WL hierarchy, and thus it is not easy to fairly compare it with 3-WL. On the one hand, PG-GNN can distinguish some strongly regular graphs that 3-WL cannot. On the other hand, 3-WL considers all the 3-tuples , which form a superset of (induced) subgraphs, but PG-GNN only considers the induced subgraphs and thus cannot completely achieve 3-WL. In summary, 3-WL and PG-GNN have their own unique merits. However, since 3-WL needs to consider all 3-tuples, the problem of complexity is inevitable. In contrast, PG-GNN breaks from the WL hierarchy to make a trade-off between expressive power and computational efficiency.

In conclusion, our proposed PG-GNN is strictly more powerful than the 2-WL test and not less powerful than the 3-WL test.
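The separating example used in the proof can be reproduced by brute force. The sketch below builds the 4×4 Rook's graph (cells of a 4×4 board, adjacent when they share a row or a column) and the Shrikhande graph, here constructed in its standard form as a Cayley graph on Z4 × Z4 with connection set {±(1,0), ±(0,1), ±(1,1)}. It then counts triangles and 4-cliques by enumerating node subsets. The function names are hypothetical, and the exhaustive enumeration is only meant for graphs of this size.

```python
from itertools import combinations


def rook_graph(n=4):
    """n x n Rook's graph: cells are adjacent if they share a row or column."""
    nodes = [(i, j) for i in range(n) for j in range(n)]
    adj = {u: set() for u in nodes}
    for u, v in combinations(nodes, 2):
        if u[0] == v[0] or u[1] == v[1]:
            adj[u].add(v)
            adj[v].add(u)
    return adj


def shrikhande_graph():
    """Cayley graph on Z4 x Z4 with connection set ±(1,0), ±(0,1), ±(1,1)."""
    steps = [(1, 0), (3, 0), (0, 1), (0, 3), (1, 1), (3, 3)]
    nodes = [(i, j) for i in range(4) for j in range(4)]
    return {
        (i, j): {((i + a) % 4, (j + b) % 4) for a, b in steps}
        for i, j in nodes
    }


def count_cliques(adj, k):
    """Number of k-node subsets whose nodes are pairwise adjacent."""
    return sum(
        all(v in adj[u] for u, v in combinations(subset, 2))
        for subset in combinations(adj, k)
    )


rook, shrikhande = rook_graph(), shrikhande_graph()
for name, graph in (("Rook", rook), ("Shrikhande", shrikhande)):
    degrees = {len(neighbors) for neighbors in graph.values()}
    print(name, degrees, count_cliques(graph, 3), count_cliques(graph, 4))
```

Both graphs are 6-regular and contain the same number of triangles, so degree and triangle statistics alone do not separate them; the 4-clique count does.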

Appendix J Details of the Proposed Model

In this section, we discuss the proposed model in detail. The notations follow the definitions in Section 3.1, i.e., let denote the number of 1-hop neighbors of the central node . Suppose these neighbors are randomly numbered as (also abbreviated as for simplicity), the set of neighboring nodes is represented as (or ).

J.1 Illustration of the Proposed Model