Draft \SetWatermarkLightness0.85 \SetWatermarkScale1.3

GotFlow3D: Recurrent Graph Optimal Transport for Learning 3D Flow Motion in Particle Tracking

Abstract

Flow visualization technologies such as particle tracking velocimetry (PTV) are broadly used in understanding the all-pervasiveness three-dimensional (3D) turbulent flow from nature and industrial processes. Despite the advances in 3D acquisition techniques, the developed motion estimation algorithms in particle tracking remain great challenges of large particle displacements, dense particle distributions and high computational cost. By introducing a novel deep neural network based on recurrent Graph Optimal Transport, called GotFlow3D, we present an end-to-end solution to learn the 3D fluid flow motion from double-frame particle sets. The proposed network constructs two graphs in the geometric and feature space and further enriches the original particle representations with the fused intrinsic and extrinsic features learnt from a graph neural network. The extracted deep features are subsequently utilized to make optimal transport plans indicating the correspondences of particle pairs, which are then iteratively and adaptively retrieved to guide the recurrent flow learning. Experimental evaluations, including assessments on numerical experiments and validations on real-world experiments, demonstrate that the proposed GotFlow3D achieves state-of-the-art performance against both recently-developed scene flow learners and particle tracking algorithms, with impressive accuracy, robustness and generalization ability, which can provide deeper insight into the complex dynamics of broad physical and biological systems.

Index Terms:

Graph Neural Networks, Recurrent Neural Networks, Optimal Transport, Fluid Motion Estimation, Point Clouds.1 Introduction

Visualization and measurement of the turbulent flow can reveal dynamic behaviors of great complexity in fluid mechanics, biological locomotion and soft materials, which has long been recognized by Leonardo da Vinci and carried out to track fine particles in turbulent flows of the aortic heart valve in the 1400s [1]. Among the diverse flow visualization technologies, particle tracking velocimetry (PTV) is commonly used for quantitative and non-intrusive measurement of global velocity field, that can provide a deeper perception into the complex flow phenomena [2]. In particular, tracking the suspended particles embedded within a fluid medium (e.g., liquid, gas or other material with continuous deformation) in PTV has been playing a significant role in elucidating natural phenomena formation processes [3, 4], hydrodynamics of biological locomotion [5], tissue-scale or intracellular flow in organisms [6, 7, 8, 9], biological collective phenomena or transport processes [10, 11], dynamic mechanisms of liquids [12] as well as atomic rearrangement motion in solids [13].

The PTV algorithms rely on the detection and tracking of individual particles between consecutive particle images, resulting in Lagrangian velocity vectors that can considerably represent the local motions of the fluid flow. In general, the particles are detected and localized with sub-pixel spatial coordinates in different ways depending on various experimental setups (e.g., defocusing PTV [14] or traction force microscopy [15]). Compared to other developed flow analysis techniques, e.g., particle image velocimetry (PIV) [16], PTV shows the great superiority for providing flow fields with substantially increased spatial resolution as well as improved accuracy by avoiding bias errors due to the spatial averaging in PIV [2]. Moreover, due to the individual particle tracking manner, PTV demonstrates better performance in measuring the flows with strong velocity gradients and inhomogeneity of particle spatial distribution due to Saffman effect [16], especially in a three-dimensional (3D) spatial domain.

Despite the aforementioned advantages, the ever-growing PTV demands in scientific researches and engineering applications pose great challenges to classical particle tracking algorithms, which need to be further generalized to tricky flow scenarios involving diverse motion patterns, large dynamic velocity ranges, dense particle distribution, etc. In addition, the classical approaches commonly involve complicated iterative algorithms with adjustable parameters or introduce additional temporal smoothness from multiple frames [17, 2], which are generally time-consuming in real experiments. To overcome these limitations, we incorporate deep neural networks developed for 3D point clouds to solve the fundamental problem of learning the 3D flow motion from double-frame particle sets in PTV experiments.

Deep learning in fluid mechanics has attracted increasing attention and developed rapidly over the past few years [18]. In the community of flow measurement, PIV has thrived due to the development of deep neural networks, especially convolutional neural networks (CNN) [19, 20, 21, 22]. However, due to the difficulty of applying CNN-based approaches to cope with the unstructured grid particle data, PTV has until recently benefited few from deep learning and conversely still remains room for improvement. A recent work on learning-based PTV has been proposed by [23], where a recurrent neural network is applied to deal with the particle linking issue. However, multiple frames of particle trajectories are required as input and a pre-trained model is specialized for one flow case. On the other hand, pioneered by the recent PointNet-based backbone [24], numerous approaches have been put forwards to estimate scene flow (mostly rigid motion) from unstructured 3D point clouds [25, 26, 27, 28]. Inspired by the scene flow learning network, a preliminary investigation has been conducted to estimate 2D flow in PTV analysis, which shows a comparable performance with classical state-of-the-art PTV approaches on accuracy, robustness and efficiency [29]. In this work, we present how to extract spatial geometric information from the basic PTV data (merely 3D particle positions of two frames) with graph neural network (GNN) and generalize the scene flow to complex non-rigid flow motion (more common in physical phenomena) using our proposed optimal-transport guided RNN framework. The designed deep learning model is called GotFlow3D. The learnt flow by GotFlow3D can directly indicates the physical dynamics or be implemented as a-priori knowledge of the flow field into predictor-corrector schemes [2] to boost the particle tracking. Our propose method is a new paradigm in PTV technology to recover the complex particle motion, which plays a significant role for further dynamic behavior analysis in physical and biological systems.

2 Methods

2.1 Problem Setup

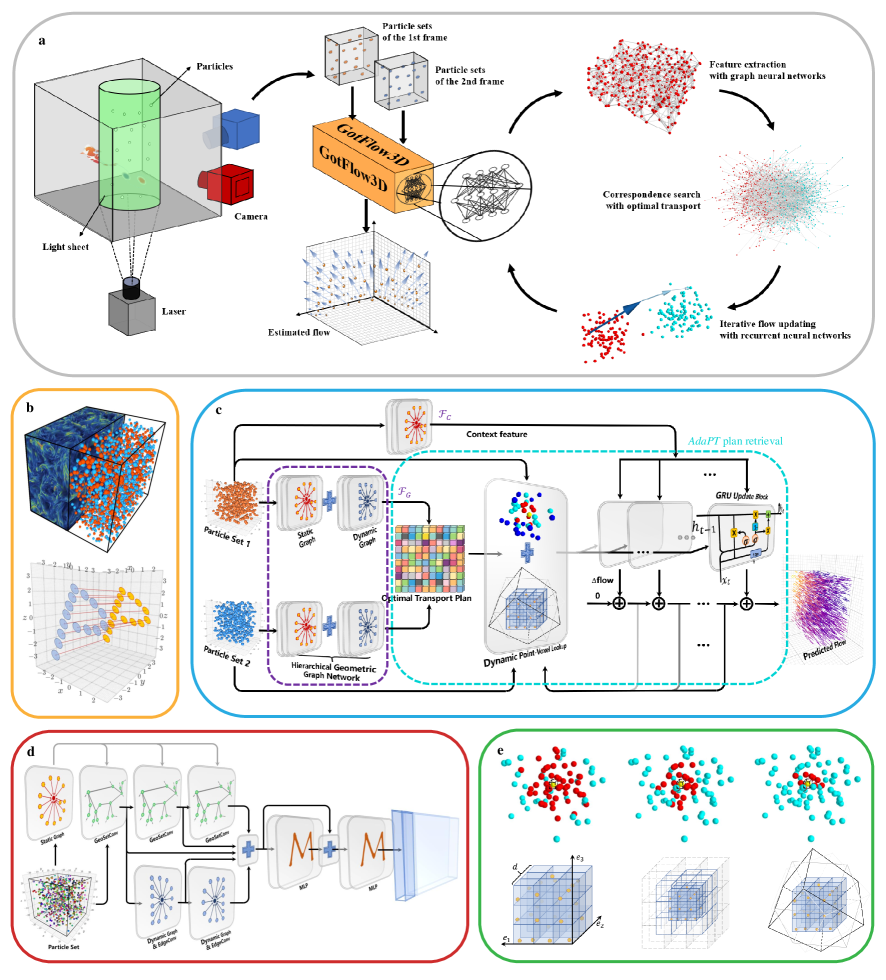

To better understand the principles of the proposed model, we first describe the formulation of the complex flow learning problem. The whole framework is shown in Figure 1(a). The proposed model consumes, as inputs, two unorganized consecutive 3D particle sets , and produces the dense vector field . The particle sets can be presented as the source set with particles and the target set with particles, where each particle (or ) suspends in various flow media and faithfully follows the media flow motion. Note that the attributes of each particle (or ) may contain the 3D coordinate together with some additional state features (e.g., intensity or color information). To enhance the generalization for various flow measurement tasks, we only consider the 3D coordinate (i.e., ) in this work.

Each element in represents the predicted flow motion corresponding to each particle . Given the source set and the target set , the iteratively updated flow (in the -th iteration) gradually translates the source to , which is expected to approach the target . Before the iterative process, we first employ the GNNs to extract the geometric pointwise feature , which is adopted to assign the optimal transport plan . Then, the recurrent neural network updates the flow by adaptively seeking the neighbors of in and following the guidance of the corresponding optimal transport plan.

To promote the learning of complex non-rigid fluid flow beyond scene flow, the properties of the flow motion in fluids should be taken into consideration. For examples, multi-scale eddies and chaotic property changes frequently exist in the complex fluid flow, which poses a great challenge to flow estimators. The challenge encourages an emphasis on both the extraction of large-scale motion fields (e.g., laminar flow) and the refinement of small-scale structures (e.g., vortex). In addition, the spatial local structures assembled with neighboring particles show approximately motion invariance and geometric similarities due to the local smooth fluid motion. All these properties are considered while designing the deep neural network.

2.2 GotFlow3D

This section introduces the proposed method used to learn the complex flow motion from two consecutive 3D particle sets. As shown in Figure 1(c), this model is designed to extract the geometric patterns of the 3D particle sets in the flow media, make the optimal transport plan that encodes the point correspondences, and iteratively undate the flow motion by adaptively retrieving the corresponding transport plan between the two particle sets. In the following, we present the architecture of GotFlow3D, which is constructed by three essential modules performing (1) graph-based feature extraction; (2) adaptive optimal transport plan lookup; and (3) iterative flow updating. Eventually, we also describe the implementation and training details of GotFlow3D.

2.2.1 Graph-based Feature Extraction

To ensure an accurate flow estimation, it is of great importance to capture reliable pointwise features, which are supposed to be discriminative enough for exact correspondences searching. Considering that the 3D coordinate is the only available input, the key point is to learn pointwise features representing the local geometry information. To achieve the effective feature exploration on the unstructured and irregular input format, we construct two kinds of geometric graphs on the particle sets and employ the static-dynamic fusion GNN (SDGNN) to derive the deep features of each particle. Specifically, we construct the static graph on 3D spatial space and the dynamic graph on high-dimensional feature space.

We first define the static graph , where the vertices are composed of the entire given particle set (or ). The edges are yielded by connecting each particle (vertex) to its -nearest spatial neighbors with the 3D Euclidean distance metric. The edges combining and its neighbors form local geometric structures and further generate the associated vertex feature , , where denotes the 3D directional vector. In addition, and represent the radial distance, azimuthal angle and polar angle in spherical coordinates, respectively, which supplement the relative spatial relationship of the neighboring vertices from another perspective. Such spherical representations further provide rotation invariant correspondences, which are advantageous in the estimation of complex motions and larger deformations [30]. The static graph for a particle set needs to be computed only once before training and is kept for the following retrieval.

As shown in Figure 1(d), given and , we employ the SDGNN to encode the local geometric characteristics to generate the distinctive feature descriptor for each particle. We denote the extracted pointwise feature of vertex at the -th layer as . In the deep hierarchical SDGNN, graph convolution operations are performed on the local geometric structure of each vertex to map the vertex feature of the previous layer to a new feature in the subsequent layer. Inspired by the PointNet++ framework [31], we propose a GeoSetConv layer to further embed the vertex features of the static graph to a high-dimensional feature space to better characterize the local geometric structures:

| (1) |

here denotes the feature extracted from the static graph at the -th layer, and depicts the -nearest neighbors of vertex in the static graph. In addition, is the -th GeoSetConv layer, which is implemented as multi-layer perceptrons (MLPs). And denotes the max-pooling operation, which aggregates features over the neighbors. The is initialized by the 3D coordinate of in the first GeoSetConv layer.

To further enrich the feature representations describing the detailed topological information, we explore the feature learning on the dynamic feature graph, as EdgeConv did in [32]. It is the first attempt at applying the dynamic graph CNN to extract features in the flow learning task. The following experiments also demonstrate that the introduction of the dynamic feature graph contributes to the improvement of the accuracy. The dynamic graph is constructed and updated by aggregating the -nearest neighbors on the high-dimensional feature space generated at each layer. Specifically, for each layer, we build the dynamic graph , where shares the same vertices as that of and the edges link feature level neighbors to form local structures in the feature manifold. Then, we formulate the update of the vertex feature of on the dynamic graph at the -th layer as

| (2) |

here depicts the index of the -nearest neighbors of in the feature space. And denotes the -th EdgeConv layer, which is also implemented as MLPs. Different from the static graph, whose receptive field is limited in the local neighborhood and hinders the acquirement of long-term dependencies, the dynamic graph enlarges the receptive field to the whole input set. The dynamic graph learns to construct a special receptive field by assigning more attention to the neighbors with similar feature (flow) properties.

Then, the proposed hierarchical SDGNN incorporates the extrinsic geometric topology and the intrinsic feature correspondences by fusing the learnt features from the two types of graphs. More precisely, we further concatenate the hierarchical features of these graphs from different layers of the GNN. Finally, several MLPs with a skip connection are employed to derive the final deep pointwise feature

| (3) |

where depicts the MLPs and denotes the concatenation of features from different graphs and different layers. The extracted pointwise feature is adopted for the following optimal transport planning to infer the particle correspondences via the feature similarity. Furthermore, we employ another feature encoder to extract the context feature of , which provides additional context information to the flow update. Such context feature encoder simply consists of several SetConv layers from PointNet++, which only operates on the spatial static graph.

2.2.2 Adaptive Point-Voxel Optimal Transport

Optimal transport. Optimal transport theory [33] guides the transportation and allocation between two distributions. In this work, we regard the correspondences searching of two particle sets as the transportation and allocation of the particle mass, where each particle () is set to have a mass of (). Then, the cost has to be paid to transport each unit of mass from to . Therefore, the key issue of optimal transport is to seek a transport plan transforming a source particle distribution to a target particle distribution whilst minimizing the transport cost [34]. In more detail, the computation of a transport plan between two particle sets can be formulated as

| (4) | ||||

where is the transport cost from particle to , and denotes the assigned quantity of mass to be transported from to . In addition, we define and in the equality constraints. The constraint manifests that the mass of each particle from the source distribution is supposed to be completely transported to the target and each particle of the target distribution is expected to be filled up with the transported mass.

However, due to the measurement noise in real experiments such as 3D reconstruction errors and object occlusions, the map reflecting the correspondences between two particle sets is neither injective nor surjective. Therefore, the mass constraints cannot hold ideally. To cope with this problem, it is preferred to apply the constraint relaxation to solve the optimal transport problem. We follow the formulation proposed by FLOT [26] to employ the Sinkhorn algorithm [35] to efficiently optimize the transport plan with an entropic regularization and a mass regularization:

| (5) | ||||

here represents the -divergence. In addition, and denote the entropic and the mass regularization parameter, which are also learnt through the network training. A small leads to a sparse transport plan, while a high tends to reinforce the effect of the mass constraint. Then, we define the transport cost between all pairs as the dissimilarity of the extracted pointwise feature via the cosine similarity:

| (6) |

where denotes the -norm. A small cost between two particles generally corresponds to a high plan, which implies a latent possibility of particle correspondences. The transport plan is constructed only once and is kept available for the following retrieval, which conduces to avoid numerous repeat matrix calculations.

Adaptive point-voxel optimal transport. In this paper, we propose a novel approach to adaptively retrieve the optimal transport plan for the iterative flow updating. For each iteration , given the currently estimated flow , the source is translated to , where . The estimated flow is initialized at and accumulated by the previous flow updates as

| (7) |

where denotes the index of the iteration. Next, we can consult the transport plan between and to guide the flow update of the next iteration. More specifically, the consultation is adopted by searching the neighbors of the warped source in the target and retrieving the corresponding transport plan . This iterative transport plan consultation contributes to lowering the difficulty of long range motion estimation and promote the refinement of small scale motion.

An essential step in the above-mentioned algorithm is: how to efficiently retrieve abundance and reliable information () from the full transport plan () through the neighborhood relation reasoning of the unstructured particle set. To address this issue, the proposed approach further generates the adaptive point-voxel optimal transport (AdaPT) plan during each consultation based on the original point-voxel operation [28]. More precisely, we integrate the captured point-based transport plan and the voxel-based transport plan to offer the long-range and small-scale correspondence of particle pairs in the consultation:

| (8) |

First, we present the generation of the point-based transport plan using a dynamic -nearest neighbors searching, where the number of the located neighbors are gradually reduced as the iteration progressed. Such construction adaptively narrows the search region to refine the fine-grained correspondence along with the increasing of iterations. From the deep point of view, the learning of the subsequent smaller residual flow amounts to cope with particles with relatively larger spatial distance. Accordingly, we dynamically reduce the searched neighboring particles to adapt to sparsely seeded regions, in which small number of neighbors perform better as noted in [30]. The computation of and the attenuation of are formulated as:

| (9) |

| (10) |

here denotes the index set of the -nearest neighbors of in , and is the corresponding retrieved transport plan between and . In addition, we further combine the retrieved and the relative spatial relation of the corresponding neighbors to deep encode the final plan , where the local spatial relation plays a similar role as the geometric attention weight. The deep encoding is performed by the MLPs with a operation over the dimension. Furthermore, is the initial value of , while is set to a constant value.

To further cope with the large-scale motion embedded in the long-range correspondence of transport plan, we innovatively construct adaptively deformable multi-scale cuboids, which compartmentalize the geometric space containing particles into pyramid voxels to yield the voxel-based transport plan . Specifically, each constructed cuboid is centered at each particle of and collects the enveloped neighbors in . As depicted in Figure 1(e), the cuboid is composed of identical sub-cuboids, whose length (), width () and height () vectors are adaptively defined according to the several recent flow updates as:

| (11) |

where and denote the normalized length of the current updated flow and the last updated flow over all particles in , respectively. Such normalization encourages a longer-range correspondence search when large variations appear on the flow. And calculates the cosine similarity between and . Such similarity calculation also reinforces a longer-range correspondence search when recent flow updates keep consistent but prompts a smaller-range correspondence search when they remain inconsistent. Moreover, , and are unit orthogonal basis vectors of the three principal orientations used to guide the search direction of the correspondence, which are established as:

| (12) |

where denotes the inner product of two vectors, while represents the outer product; means that the vector is normalized to unit length. Furthermore, we define a basic scale parameter , which controls the size deformation of the cuboid:

| (13) |

where denotes the mean distance between each particle and its -nearest neighbors in the input set, which is utilized to obtain the local particle concentration information. And is a learnable scale parameter optimized with the network training, which contributes to an adaptive process of particle sets with various particle densities. In addition, is the index of the pyramid iteration, which gradually doubles the length size of the sub-cuboid to retrieve the multi-scale voxel-based plan. The adaptive variation of the multi-scale cuboid size makes it possible to cover farther desired particles.

As illustrated above, the constructed cuboid presents the powerful deformability to adapt to current flow updates and input particle densities, while showing puissant capabilities to capture long-range correspondences with the multi-scale strategy. Then, the cuboid partitions the enveloped neighboring particles in into sub-cuboids, which indicate the relative direction of the neighbours to the central particle in . Furthermore, the neighboring particles and the corresponding transport plan in each sub-cuboid are aggregated and averaged for the final calculation of :

| (14) |

here denotes the neighboring particles of in enveloped in the -th sub-cuboid for the -th pyramid iteration. And is the average over the transport plan of particles in the -th sub-cuboid. Moreover, and denote the concatenation over all pyramid iterations and all sub-cuboids, respectively. There followed another MLPs , which is used to implement the similar deep encoding for the final plan .

GRU flow refinement. Inspired by RAFT [36], we finally employ a recurrent GRU to estimate the flow update , which mimics the iterative strategy in the optimization algorithm. The applied GRU consumes the integrated AdaPT plan , the current flow estimation , the context feature and the hidden state transmitted from the last iteration, while yielding a new hidden state encoding the future flow information:

| (15) |

| (16) |

| (17) |

| (18) |

where denotes the concatenated feature of , and . And is the element-wise product. In addition, denotes the weights of convolutional layers in different steps. Then, the is passed through a SetConv layer and several convolutional layers to predict the flow update .

2.2.3 Implementation Details

In this framework, there are some predefined parameters in the graph construction and the AdaPT plan retrieval process. The number of the searched neighboring particles used to construct the static graph and the dynamic graph is set as . For the AdaPT plan retrieval, the dynamic is initialized as , while is fixed to 2. And when the iteration exceeds 8, the is fixed to 16. The resolution of the cuboid and the number of the pyramid iteration are both set as 3. In addition, the is set as 3 to calculate the mean local particle distance. Moreover, the scale parameter is initialized as 0.5 and has a special learning rate, which is 0.1 times the global learning rate, to enhance the training stability. The dimension of the final pointwise feature extracted by the hierarchical GNN is set as 128. Furthermore, the and used to control the two regularizations are initialized as 0 and 1, respectively. And the flow is iteratively updated for 8 iterations.

The network training are performed with supervised loss using the entire estimated flow sequence :

| (19) |

where denotes the flow estimation of the -th iteration, while is the ground truth flow. In addition, denotes the distance, and is the total number of flow update iterations. Moreover, denotes the total number of the particles. Mini-batch training is employed with a batch size of 16. Then, we further impose an exponentially increased weight to the estimated flow sequence:

| (20) |

where is set to 0.8. The network is trained on six TITIAN XP GPUs for 40 epoches using Adam optimizer [37] with a initial learning rate of 0.001.

2.3 Datasets

2.3.1 Synthetic training datasets

Training data with ground-truth information plays an indispensable role in the network optimization with supervised learning strategies. However, the difficulty of recovering highly accurate ground-truth flow from real data enforces us turn to the simulated data from computational fluid dynamics (CFD), which is capable of generating various flow cases representing the true fluid mechanical characteristics by solving the Navier–Stokes equations [16].

To perform the flow supervision, we create the first synthetic 3D fluid flow dataset named FluidFlow3D, which is characterized by two consecutive particle sets , and the corresponding ground-truth flow set . In particular, we first generate a particle set , which comprises more than two thousand randomly distributed particles in the 3D observation volume. The observation volume is also defined in the random location of the CFD computational domain. Next, we use the public CFD data from Johns Hopkins Turbulence Database (JHTDB) [38], which is proven to provide physically correct simulated flow structures, to generate the ground-truth flow . This is achieved by applying the built-in functions to query the JHTDB database with the second order accurate Runge-Kutta integration scheme in time and the fourth order Lagrange interpolation in space, which are executed on the database nodes. The flow structures queried from JHTDB includes the incompressible isotropic turbulent flow, the incompressible magneto-hydrodynamic (MHD) turbulence, the fully developed turbulent channel flow and the transitional boundary layer flow. Therefore, we can get the next frame of the given particle set using the integrated trajectory with a designated time interval, subsequently get the the corresponding ground-truth flow . The sketch of this generation procedure is illustrated in Figure 1(b). In addition to the data from JHTDB, several flow benchmarks, including the uniform flow and the Beltrami flow [39], are also generated to increase the diversity of the training dataset. Eventually, the synthetic training dataset consists of six categories of typical flow cases.

Furthermore, to enhance the generalization capabilities of the trained network for realistic experimental configurations, we augment the above flow cases with various flow conditions. The measurement performance in PTV experiments is highly limited by large dynamic velocity ranges and high particle densities. These two factors can be characterized by the particle displacement ratio , which has been widely used in the PTV community [40] and can comprehensively depict the flow conditions:

| (21) |

here and denote the average distance between particles in the whole set (e.g., ) and the maximum displacement between two input sets, respectively. It is reported that the classical flow estimators can easily achieve satisfactory performance for flow cases with , while facing more challenges when gets smaller [40]. In our training dataset, we change the characteristic observation volume to vary (the number of particles remains constant) and alter the time interval to obtain different maximum displacements. As a result, this important parameter in the synthetic dataset ranges from to , much beyond the normal range of real PTV experiments. Moreover, to mimic the real-world applications, we add some noises to create more realistic cases, which mainly arise from the physical motion of particles in and out of the field of view, the measurement noise and the occlusion. To this end, we shuffle the particles in to obtain the second set , which directly disorders the correspondence. Then, we randomly replace of the particles in with new random particles. Such noises eliminates of the correspondence of , while introducing new isolated particles in with no correspondence. Here we set with level of noise for this dataset. Finally, we generate a synthetic dataset with 16K training samples and 1.6K validation samples. A table summarizing the synthetic dataset is given in Table III.

2.3.2 Testing Dataset

A family of synthetic FluidFlow3D-derived datasets, a public turbulent cylinder wake flow dataset (denoted by CylinderFlow) [41] and a real-world dataset (denoted by SphericalIndentationFlow) across different domains are adopted to conduct the experimental evaluations.

FluidFlow3D-family. Apart from the aforementioned FluidFlow3D, we further transform FluidFlow3D to a new dataset with normalized spatial scale (denoted by FluidFlow3D-norm) to compute the unified evaluation metric among flow cases with different observation volumes. The FluidFlow3D-norm is used for the comparison with the state-of-the-art methods and ablation studies. To analyze the robustness, we create a synthetic subset FluidFlow3D-noise with the different noise level. Furthermore, another subset FluidFlow3D-ratio regarding various is also generated.

CylinderFlow. This public dataset contains Eulerian velocities and Lagrangian particle trajectories of the cylinder wake flow with a Reynolds number of 3900 [41]. Such flow data is also calculated using CFD. In addition, the fourth-order Runge-Kutta scheme in time and trilinear interpolations in space are utilized to transport about 200,000 particles to obtain the Lagrangian trajectories. Trajectories of two 3D observation volumes are available for the assessment of tracking algorithms.

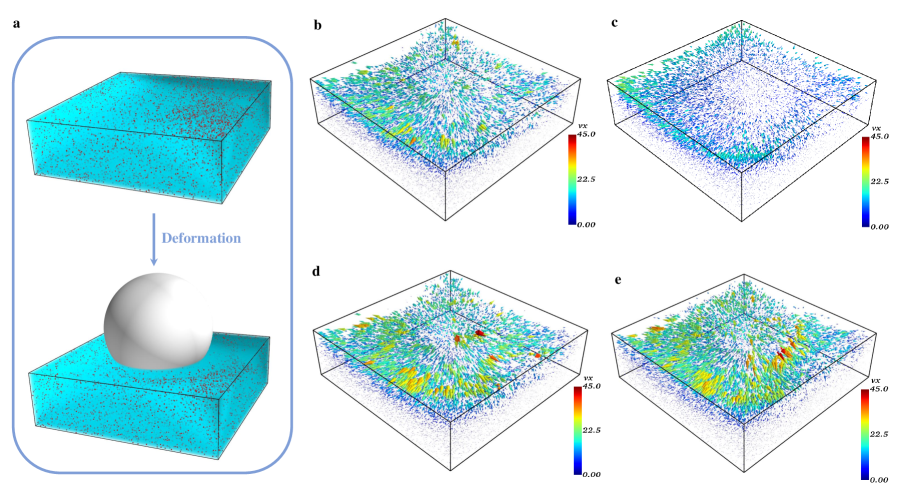

DeformationFlow. This experiment is conducted by measuring the volumetric deformation of a soft polyacrylamide (PA) hydrogel exerted by the spherical indentation [30]. A stainless steel sphere is placed on the surface of the PA hydrogel, which leads to the indentation deformation due to the force of gravity. Then the 3D volumetric image of the hydrogel material as well as the embedded fluorescent particles are scanned both before and after the deformation with multiphoton microscopy.

2.4 Evaluation Metrics

To quantitatively assess and compare the performance of all the methods, we adopt the widely used four evaluation metrics in scene flow learning tasks [25, 27, 26, 28], including EPE, Acc Strict, Acc Relax and Outliers. The end-point-error EPE averaged over each particle is defined as:

| (22) |

where denotes the distance. The Acc Strict is calculated as the percentage of particles with the relative EPE error 5% , while the Acc Relax is defined as the percentage of particles with the relative EPE error 10% . In addition, the Outliers are denoted as the percentage of particles with EPE 0.3 unit or the relative error 10%. Moreover, in the comparison with tracking algorithms, two metrics are employed as the assessment principles for the tracking accuracy and tracking reliability, including the yield rate (), the reliability rate ():

| (23) |

where denotes the ratio of correct matches (denoted by ) to all ground-truth matches (), while is the proportion of correct matches to all extracted matches ().

3 Results

In this section, a great deal of evaluations on experiments are presented to comprehensively assess the performance of GotFlow3D in the complex flow learning task. We compare GotFlow3D to the state-of-the-art methods and verify the robustness to the noise as well as various displacement ratios. There followed the validation on a public fluid flow dataset, where the superiority of GotFlow3D to traditional PTV methods and the promotion of GotFlow3D to the tracking accuracy are investigated. Finally, a PTV experiment is conducted to further investigate the generalization of GotFlow3D to real-world experimental data.

3.1 Comparison with SOTA scene flow learners

We first present quantitative results on the test set of FluidFlow3D-norm by comparing our model with other four representative state-of-the-art scene flow learning methods, including the FlowNet3D [25], FLOT [26], PointPWC-Net [27] and PV-RAFT [28]. All of these baseline methods are trained on the training set of FluidFlow3D-norm with the training hyper-parameters reported in their articles.

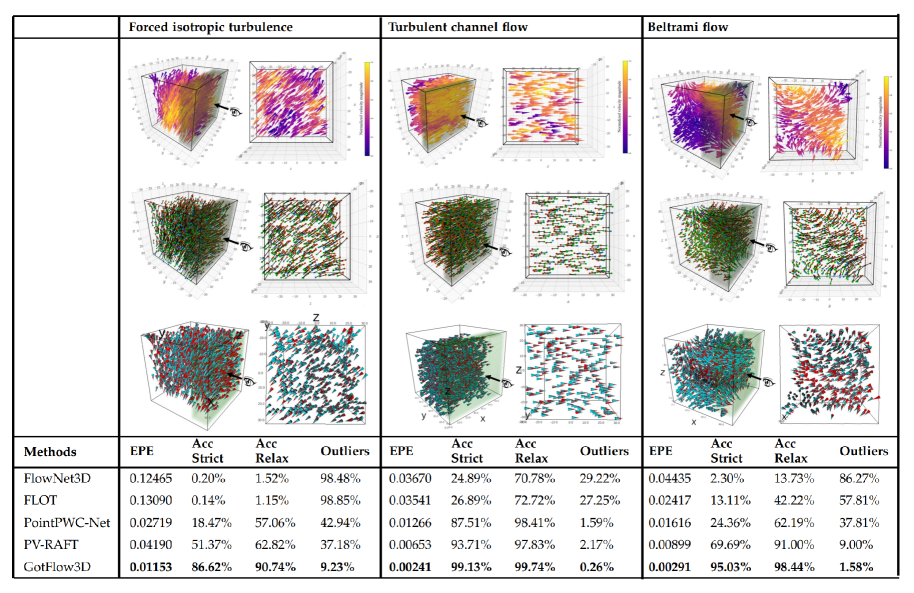

The comparisons of the EPE, Acc Strict, Acc Relax and Outliers metrics of these methods are listed in Table 1. As revealed in the table, our proposed model outperforms other baseline methods and achieves the state-of-the-art performance on all of the four metrics. Specifically, GotFlow3D surpasses FlowNet3D and FLOT by more than an order of magnitude on the EPE, significantly boosts the Acc Strict and Acc Relax and brings the Outliers to a extremely low level.

To visualize more detials, we conduct comparative experiments over different flow cases in FluidFlow3D-norm and verify the generalization and superiority of the proposed model. Three typical examples are demonstrated in Figure 2, while the errors for all the flow cases are given in Table IV. As shown in Figure 2 and Table IV, GotFlow3D achieves the state-of-the-art performance with the smallest EPE for all flow cases. In addition, GotFlow3D presents the highest Acc Strict (Relax) and the smallest Outlier except for the Uniform flow case. In the Uniform flow case, we observe that PV-RAFT shows comparable results with GotFlow3D. PV-RAFT is slightly better, and they both achieve over 99% accuracy and contain less than 0.02% outliers. We suspect that such a good performance in this relatively simple case is attributed to the GRU module employed both in GotFlow3D and PV-RAFT. However, in some of the complex flow cases (e.g., Forced isotropic turbulence, Forced MHD turbulence and Beltrami flow) which contain abundant fine-grained small-scale flow structures, the other flow estimators including PV-RAFT provide worse results, whereas GotFlow3D consistently performs the best on the EPE and Outliers even coping with the difficult datasets containing complicated flow scenes and diverse motion patterns.

To further depict the learning performance of GotFlow3D, we visualize the estimated flow from GotFlow3D with diverse fashions in Figure 2. Specifically, we plot the results of three test data samples from FluidFlow3D-norm, including the Forced isotropic turbulence (left), Turbulent channel flow (middle) and Beltrami flow (right). Moreover, we present the predicted flow fields (top) to show the capability of GotFlow3D to learn complex flow distributions, which involve numerous small-scale flow structures. In the middle row, the source particle (red), transported particles (green) using the learnt flow and the corresponding target particle (blue) are visualized to qualitatively demonstrate the tiny end point error, which also illustrates how GotFlow3D copes with the flow cases containing both small and large displacements. Furthermore, we also manifest both the ground-truth flow (red) and the flow estimations (cyan) in the bottom row. It can be observed that the estimations maintain highly consistency with the ground truth.

3.2 Robustness against experimental conditions

To further quantify the generalization and robustness of GotFlow3D facing extreme flow conditions, we conduct comparisons across different methods on dedicated datasets with wide range of velocity and varying noise levels. The metrics reported in this section are obtained by taking the average over 100 samples for each investigated condition.

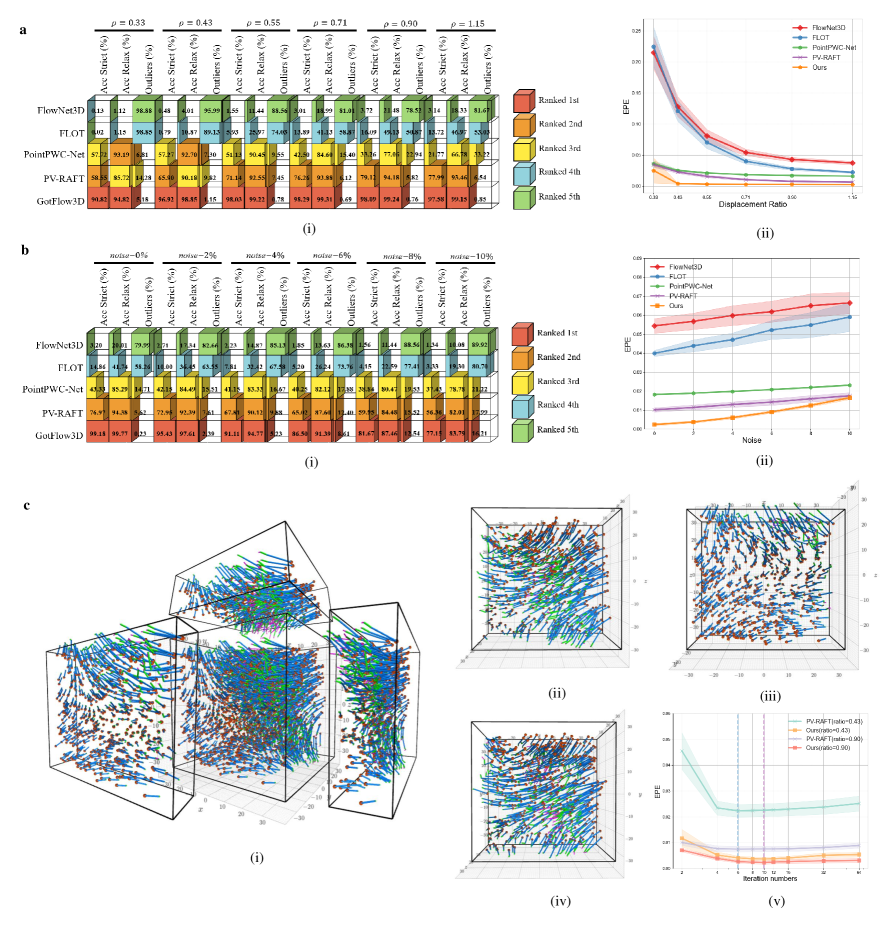

To investigate the influence of the dynamic velocity range, we first present the assessment with respect to different displacement ratios on the subset FluidFlow3D-ratio. This data subset is generated by simulating the Beltrami flow with varied velocity magnitudes, which leads to a series of ranging from 0.33 to 1.15, covering most situations in real experiments. We demonstrate the Acc Strict, Acc Relax and Outliers of all flow learners in Figure 3(a)(i), also plot the corresponding EPEs as a function of in Figure 3(a)(ii). As shown, GotFlow3D leads the way in EPE, Acc Strict (Relax) and Outliers compared to other methods while surpasses the FlowNet3D and FLOT to a great extent. When facing the flow condition with a extreme low of 0.33, GotFlow3D consistently shows impressive performances. GotFlow3D presents a relatively higher variance on EPE than PV-RAFT and PointPWC-Net in the case when in Figure 3(a)(ii), which may be due to that the of 0.33 is lower than the of the training set (from 0.35 to 6.58). Other than that, the EPE curve of GotFlow3D nearly remains flat with low variances when increases from 0.43 to 1.15. We can observe that PV-RAFT also shows comparable results and approaches GotFlow3D when facing conditions with large displacement ratio (i.e., the case where flow estimators are easier to track particles). Nevertheless, the outperformance of GotFlow3D convincingly reveals the robustness toward diverse flow cases with wide dynamic velocity ranges.

Likewise, the performance of all these flow estimators are demonstrated with respect to various noise levels defined in Section 2.3.1. This comparison is assessed on the subset FluidFlow3D-noise, which is also generated using Beltrami flow with mean of 0.71. We investigate the noise level ranging from 0 % to 10%, which is reasonable to mimic the noises in real experiments. The evaluation metrics among different learning methods are shown in Figure 3(b)(i), while the EPEs are ploted against the noise in Figure 3(b)(ii). It is obviously seen that the metrics of all methods inevitably get worse with the increase of noise level, which is expected. Furthermore, it can be observed that GotFlow3D achieves the best performance on EPE, Acc Strict (Relax) and Outliers among all investigated noise levels and surpasses FlowNet3D as well as FLOT by a large margin (more than 50%) on Acc Strict (Relax) and Outliers. When it comes to a condition with high level noise, GotFlow3D does not possess much superiority over PV-RAFT while the gap between them gradually narrows. The impressive performance indicates the robustness of GotFlow3D facing some degree of noise levels.

To better illustrate the effects of the recurrent flow estimation, we investigate the performance of the network inference with different iteration numbers in the recurrent flow updating. The iteration number is a user-defined parameter in inference stage, which can be set higher to learn flow with large displacements and can be defined lower to reduce the computational cost. This investigation is assessed on two flow subsets from FluidFlow3D-ratio: a more difficult case of and an easier case of , and the comparison is only performed between the learning methods with recurrent unit (i.e., PV-RAFT and GotFlow3D). As shown in Figure 3(c)(v), both methods yield a remarkable improvement on EPE as the iteration number increases to 6. However, the error of PV-RAFT becomes slightly higher when the iteration number exceeds 6 (marked with a blue dashed line) for both values of . On the other hand, the optimal iteration number of GotFlow3D is 10 (marked with a purple dashed line) for both the flow cases. These curves indicate that the iteration number has a profound impact on the performance of recurrent approaches. However, it can be found that the GotFlow3D is more accurate and less sensitive in different configurations. It also indicates that it is better to chose an iteration number close to the pre-defined value of the training process while applied to real flow scenarios. To understand the recurrent mechanism more intuitively, we visualize the recurrently updated flow of GotFlow3D by plotting trajectories of the transported particles in several iterations. As shown in Figure 3(c)(i-iv), the source particles (red) are transported using the estimated flow in iteration 1 (blue), the updated residual flow from iteration 1 to 2 (green) and the updated residual flow from iteration 2 to 32 (purple). It can be observed that the major flow motion is estimated in the first two iterations while the residual flow continues to refined slowly in the remaining iterations.

In addition to the aforementioned assessments, an ablation study regarding different modules in GotFlow3D is also performed to demonstrate the effectiveness of each module. The results are summarized in Table V. We also compute the inference time of GotFlow3D to present the computational efficiency. GotFlow3D takes about 0.1 s to estimate the flow of one sample containing about 2000 particles on a NVIDIA RTX 3090 GPU, which is promising for online flow visualization and measurement.

3.3 GotFlow3D for shedding flow with a high Reynolds number

PTV is one of the most primary techniques to analyse 3D turbulent flows by tracking particles. In this section, we perform further comparisons between GotFlow3D and other advanced open-source tracking algorithms on a public dataset CylinderFlow. We should note that GotFlow3D is a motion estimator, which does not perform particle matching and linking as the open-source PTV algorithms do. Therefore, we present the assessment by generalizing GotFlow3D as a customized motion predictor to provide existing PTV algorithms with additional motion prior information (i.e., initialization), which is supposed to promote the particle matching between adjacent frames. Two open-source particle tracking algorithms, i.e., T-PT [42] and SerialTrack [30], are implemented as evaluation benchmarks, which have been proven two of the state-of-the-art double-frame 3D tracking algorithms. T-PT is a topology-based particle tracking algorithm that encodes relative spatial neighbors as feature descriptors to deal with large displacements, while SerialTrack resolves large deformation and rotational motion with scale and rotation invariant augmented particle tracking. Both the two algorithms have been successfully applied to reveal complex motion behaviors in biological and physical applications [15, 43].

| 30 () | 60 () | 90 () | |||||||||||||

| Methods | |||||||||||||||

| T-PT [42] | 161727 | 195504 | 161875 | 82.72 | 99.91 | 118865 | 193903 | 119046 | 61.30 | 99.85 | 98202 | 192375 | 98324 | 51.05 | 99.88 |

| GotFlow3D + T-PT | 192196 | 195504 | 192450 | 98.31 | 99.86 | 166232 | 193903 | 166908 | 85.73 | 99.60 | 131451 | 192375 | 132087 | 68.33 | 99.52 |

| SerialTrack [30] | 181334 | 195504 | 189493 | 92.75 | 95.69 | 123643 | 193903 | 187647 | 63.76 | 65.89 | 95334 | 192375 | 187501 | 49.55 | 50.84 |

| GotFlow3D + SerialTrack | 192350 | 195504 | 194303 | 98.39 | 98.99 | 178556 | 193903 | 190297 | 92.08 | 93.83 | 154322 | 192375 | 187211 | 80.21 | 82.43 |

To better show the promotion brought to these PTV algorithms from GotFlow3D, we conduct three comparisons with different frame intervals, including the first frame and the 30th frame (denoted as experiment 30), the first frame and the 60th frame (denoted as experiment 60), the first frame and the 90th frame (denoted as experiment 90). Larger time interval generally leads to relatively larger displacement motion, and the three investigated experiments correspond to of 1.06 (30), 0.52 (60) and 0.35 (90), respectively. For evaluation of PTV algorithms, the yield rate () and reliability rate () are used as metrics for comparison, which strongly indicate the tracking accuracy and tracking reliability in PTV applications. As shown in Table 2, the and the correct matches of all methods decrease as the frame interval gets longer. T-PT consistently presents the highest among all the three experiments, showing the tracking reliability of this method. However, the of T-PT is relatively low, which means it misses a large number of particle tracks. SerialTrack outperforms T-PT on and in conditions of high (experiment 30 and 60) but is slightly surpassed by T-PT in the challenging experiment 90. Impressively, the integrated implementations named “GotFlow3D+T-PT” and “GotFlow3D+SerialTrack” improve the performance of T-PT and SerialTrack to an extremely high level, indicating that much more correct matches are extracted. In particular, the proposed GotFlow3D boosts the of T-PT by 15-24% and that of SerialTrack by 6-30%. The highest improvement of regarding T-PT comes from the experiment 60 (about 24%), while that regarding SerialTrack is obtaiend from the experiment 90 (about 30%). It can be seen that GotFlow3D yields larger promotion coping with the conditions with relatively larger displacements. When facing the condition with short frame interval of 30 (i.e., high value), “GotFlow3D+T-PT” and “GotFlow3D+SerialTrack” achieve a nearly perfect performance with both and approaching 100%. The of “GotFlow3D+T-PT” and “GotFlow3D+SerialTrack” surpasses SerialTrack but is not superior to T-PT, since GotFlow3D also boosts the extraction of more matches, which may lead to the decrease of .

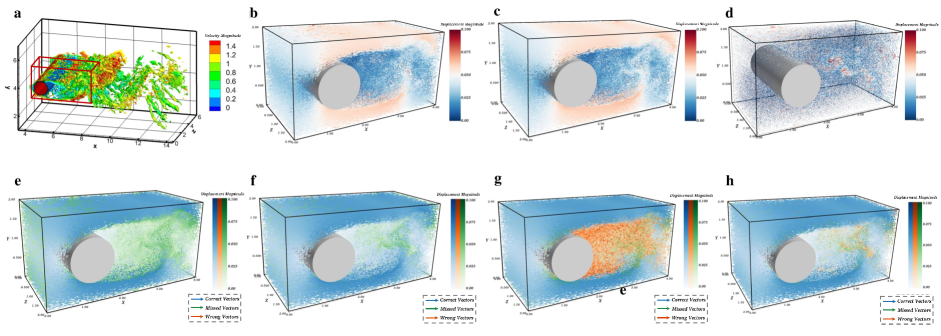

To visually validate the contribution of GotFlow3D, we plot the estimated cylinder flow as well as the ground-truth flow of the experiment 60. An overview of the flow field in a large domain is shown in Figure 4(a), where a subdomain, that we mainly focus on, is outlined. As shown in Figure 4(b), GotFlow3D itself has fulfilled an excellent recovery of the cylinder wake flow structure, which is nearly aligned with the ground truth (Figure 4c). We also present the residual flow (i.e., the error between estimated flow and the ground truth) in Figure 4(d), where the error mainly exists in a small region behind the cylinder. Furthermore, the tracking results of the two open-source PTV algorithms as well as their combinations with GotFlow3D are visually illustrated in Figure 4(e-h). We present the correct matches in blue vectors, missed matches in green vectors (i.e., the complementary set of correct matches in possible matches) and wrong matches in red vectors (i.e., the complementary set of correct matches in extracted matches), respectively. It can be seen that T-PT strives to maintain a high tracking reliability, and consequently misses plenty of potential matches, which however are successfully brought back by GotFlow3D. On the contrary, SerialTrack focuses on the extraction of more potential matches, and therefore results in abundant wrong matches, which are also well corrected by GotFlow3D. It is worth noting that although our synthetic training dataset cannot cover all flow scenarios (e.g., the cylinder flow), the impressive performance GotFlow3D makes it a promising method to be generalized to more potential and realistic applications.

3.4 GotFlow3D for deformation of sphere indentation

To further investigate the generalization to real experiments, we apply GotFlow3D to recover the 3D spherical indentation deformation data DeformationFlow. An illustration of the reference and deformed experimental configurations is shown in Figure 5(a). About 35000 particles (plotted as red dots) are simultaneously traced in the investigated domain of 1024 voxels 1024 voxels 445 voxels. The investigated flow motion of the hydrogel deformation exhibits an approximate displacement ratio of according to our estimation. The velocity vectors computed by T-PT, SerialTrack, the integrated “GotFlow3D+T-PT” and “GotFlow3D+SerialTrack” are presented in Figure 5 for comparison. Parameters of SerialTrack are set as the recommended ones from the original paper [30].

The recovered displacements of these methods are visualized in Figure 5(b-e). It can be observed that “GotFlow3D+T-PT” (Figure 5d) and “GotFlow3D+SerialTrack” (Figure 5e) yield denser 3D flow fields than T-PT and SerialTrack (Figure 5a-b). The improvement of spatial resolution comes from the flow prediction of GotFlow3D, which contributes to covering more fine details of the revealed complex flow structures. With GotFlow3D combined, the tracking algorithms can extract more potential matches and demonstrate more flow structures. Moreover, the estimations of “GotFlow3D+T-PT” and “GotFlow3D+SerialTrack” are nearly consistent with the results of T-PT and SerialTrack in the hydrogel region of relatively small displacements, which is far away from the sphere and mainly distributed with blue vectors. However, both original T-PT and SerialTrack fail to deal with the flow in the region near the contact surface between the sphere and the hydrogel. They provide sparse flow fields with small magnitudes, but relatively large particle displacements are expected. On the contrary, “GotFlow3D+T-PT” and “GotFlow3D+SerialTrack” show a great capability in extracting large deformation fields, especially in the contact surface region. Through the comparison, we can conclude that GotFlow3D, which is trained using synthetic flow, can be effectively generalized to more complex flow scenarios in real-world experiments and show excellent performance in resolving large displacements in particle tracking.

4 Discussion

In this paper, we introduce a complete pipeline to employ the deep neural network called GotFlow3D to learn the 3D fluid motion from two consecutive unorganized particle sets. The contributions of this work are manifold. A static-dynamic fusion graph neural network (SDGNN) is first proposed to derive the distinctive pointwise feature of particle sets by fusing the extracted geometric information from the static spatial graph and the dynamic feature graph. We present a novel approach to retrieve the adaptive point-voxel optimal transport (AdaPT) plan for the iterative flow learning. The retrieval of the AdaPT plan effectively provides the long-range and small-scale correspondence of particle pairs. We generate the first 3D synthetic fluid flow dataset named FluidFlow3D for the network training based on the physical simulations from CFD. This dataset covers a great deal of complex non-rigid motion under different flow conditions beyond the common rigid motion in scene flow tasks. The generated dataset is available for broader research in both the fluid mechanics community and the computer vision community. Comprehensive evaluations on synthetic and real-world experimental data have shown that our approach rivals state-of-the-art scene flow networks and other advanced PTV methods. In particular, our approach demonstrates competitive accuracy to the prior art, excellent robustness to various flow conditions and impressive generalization to real-world applications.

We devise a new framework to learn 3D complex fluid flow motion from consecutive particle sets in particle tracking experiments, which draws inspiration from some pioneering learning-based networks. However, there are obvious differences between GotFlow3D and the existing scene flow learners. The design of the proposed network only follows the basic similar concept of RAFT to estimate the flow in an iterative manner. In fact, RAFT performs the flow estimation on regular images, while our GotFlow3D targets at irregular particle sets. PV-RAFT adopts a similar iterative flow update to deal with point clouds, which however pays more attention to the basic point-voxel correlation fields. In comparison, we propose to adaptively retrieve a more efficient AdaPT plan to boost the recurrent flow learning guided by optimal transport. Both FLOT and GotFlow3D seek guidance from optimal transport theory, but implement that in different ways. The former directly employs optimal transport to compute a coarse flow, whereas the latter performs the AdaPT plan retrieval to extract more reliable correspondence information for the subsequent iterative flow refinement. Apart from the above-demonstrated differences, the innovation of the proposed GotFlow3D is also embodied in the design of the SDGNN, which makes the first attempt to combine features from the dynamic feature graph and that of the static spatial graph in flow motion learning tasks. Such combination of new modules presents a significant improvement on the accuracy of flow estimation according to the ablation study shown in Table V.

In summary, a novel deep learning based model - GotFlow3D - is proposed for learning complex flow motion from two particle sets, which provides a new perspective for introducing deep learning approaches in the further analysis of particle tracking applications encountered in a wide range of biological and physical systems. We present the first synthetic dataset for further deep learning developments in PTV community. GotFlow3D shows state-of-the-art accuracy on the PTV database and surpasses the existing scene flow neural networks on most flow cases. Robustness analysis further validates the stable performance of GotFlow3D under challenging flow cases and extreme experimental conditions, which are normally encountered in reality. GotFlow3D also achieves a significant performance on the experiment of cylinder flow, which does not even exist in the training database, and brings a great improvement to the existing PTV approaches. Moreover, GotFlow3D has been generalized to a real-world particle tracking application of sphere indentation, where GotFlow3D shows the superiority of improving the spatial resolution and resolving large displacements.

Future extensions to deep learning approaches in PTV analysis include Bayesian framework of GotFlow3D, which could provide not only flow estimation but also the uncertainty quantification of the measurement. Moreover, GotFlow3D is currently designed as an universal tool, which simply consumes spatial coordinates of particles. More properties of particles (e.g., intensity) could be utilized to further improve the correspondence searching and make GotFlow3D customized tools in specific applications. It is also a promising research topic to incorporate physics-informed constraints (e.g., governing equations of the flow motion) in specific applications while training the neural networks.

| Flow case | Observation Volume | ||

| Forced isotropic turbulence | {(0.25, 0.25, 0.25), (0.5, 0.5, 0.5)} | {0.03, 0.04, 0.05} | [0.36, 2.50] |

| Forced MHD turbulence | {(0.25, 0.25, 0.25), (0.5, 0.5, 0.5)} | {0.06, 0.07, 0.08} | [0.58, 2.33] |

| Transitional boundary flow | {(0.5, 1, 0.5) , (, 2, )} | {0.12, 0.16, 0.20} | [0.48, 2.24] |

| Turbulent channel flow | (0.25, 0.5, 0.25) | {0.03, 0.04, 0.05, 0.06, 0.07, 0.08} | [0.51, 1.63] |

| Beltrami flow | (2, 2, 2) | { [0.9, 1.0], [1.1, 1.2], [1.3, 1.4] } | [0.35, 6.58] |

| Uniform flow | (0.25, 0.25, 0.25) | {0.03, 0.04, 0.05} | [0.51, 3.11] |

| Method | Forced isotropic turbulence | Forced MHD turbulence | Transitional boundary flow | |||||||||

| EPE | Acc Strict | Acc Relax | Outliers | EPE | Acc Strict | Acc Relax | Outliers | EPE | Acc Strict | Acc Relax | Outliers | |

| FlowNet3D | 0.12465 | 0.20% | 1.52% | 98.48% | 0.09400 | 0.06% | 0.45% | 99.55% | 0.02003 | 67.23% | 91.01% | 8.99% |

| FLOT | 0.13090 | 0.14% | 1.15% | 98.85% | 0.09842 | 0.04% | 0.28% | 99.72% | 0.01715 | 70.37% | 94.63% | 5.37% |

| PointPWC-Net | 0.02719 | 18.47% | 57.06% | 42.94% | 0.01714 | 8.46% | 33.53% | 66.47% | 0.01163 | 89.13% | 98.29% | 1.71% |

| PV-RAFT | 0.04190 | 51.37% | 62.82% | 37.18% | 0.02594 | 41.44% | 61.37% | 38.63% | 0.00324 | 99.65% | 99.94% | 0.063% |

| GotFlow3D | 0.01153 | 86.62% | 90.74% | 9.26% | 0.00596 | 83.005% | 91.81% | 8.19% | 0.00222 | 99.87% | 99.97% | 0.028% |

| Method | Turbulent channel flow | Beltrami flow | Uniform flow | |||||||||

| EPE | Acc Strict | Acc Relax | Outliers | EPE | Acc Strict | Acc Relax | Outliers | EPE | Acc Strict | Acc Relax | Outliers | |

| FlowNet3D | 0.03670 | 24.89% | 70.78% | 29.22% | 0.04435 | 2.30% | 13.73% | 86.27% | 0.03748 | 25.41% | 79.23% | 20.77% |

| FLOT | 0.03541 | 26.89% | 72.72% | 27.28% | 0.02417 | 13.11% | 42.22% | 57.78% | 0.02059 | 68.25% | 96.45% | 3.55% |

| PointPWC-Net | 0.01266 | 87.51% | 98.41% | 1.59% | 0.01616 | 24.36% | 62.19% | 37.81% | 0.02140 | 64.31% | 97.32% | 2.68% |

| PV-RAFT | 0.00653 | 93.71% | 97.83% | 2.17% | 0.00899 | 69.69% | 91.00% | 9.00% | 0.00316 | 99.87% | 99.99% | 0.015% |

| GotFlow3D | 0.00241 | 99.13% | 99.74% | 0.26% | 0.00291 | 95.03% | 98.44% | 1.56% | 0.00244 | 99.85% | 99.98% | 0.019% |

| Method | Module options | Metrics | |||||||||

| Feature Extraction | Correspondence Retrieval | EPE | Outliers | ||||||||

| Static Graph | Dynamic Graph | SDGNN | Correlation Fields | Optimal Transport | Point-Voxel Lookup | AdaPT | |||||

| + | + | + | |||||||||

| Network1 | ✔ | ✗ | ✗ | ✔ | ✗ | ✔ | ✗ | ✗ | ✗ | 0.01651 | 16.39% |

| Network2 | ✔ | ✗ | ✗ | ✗ | ✔ | ✔ | ✗ | ✗ | ✗ | 0.01208 | 10.24% |

| Network3 | ✔ | ✗ | ✗ | ✗ | ✔ | ✗ | ✔ | ✗ | ✗ | 0.01177 | 9.85% |

| Network4 | ✔ | ✗ | ✗ | ✗ | ✔ | ✗ | ✗ | ✔ | ✗ | 0.01036 | 9.43% |

| Network5 | ✔ | ✗ | ✗ | ✗ | ✔ | ✗ | ✗ | ✗ | ✔ | 0.00916 | 7.96% |

| Network6 | ✗ | ✔ | ✗ | ✗ | ✔ | ✗ | ✗ | ✗ | ✔ | 0.00603 | 4.46% |

| Network7 | ✗ | ✗ | ✔ | ✗ | ✔ | ✗ | ✗ | ✗ | ✔ | 0.00487 | 3.63% |

Acknowledgments

This work was supported in part by the National Key R&D Program of China (No. 2019YFB1705800), in part by the National Natural Science Foundation of China under grant No. 61973270, in part by the Foundation for Innovative Research Groups of the National Natural Science Foundation of China under grant No. 61621002.

References

- [1] M. Kemp, “Leonardo da Vinci’s laboratory: studies in flow,” Nature, vol. 571, no. 7765, pp. 322–324, 2019.

- [2] D. Dabiri and C. Pecora, Particle Tracking Velocimetry. IOP Publishing Bristol, 2020.

- [3] A. Kopitca, K. Latifi, and Q. Zhou, “Programmable assembly of particles on a Chladni plate,” Science advances, vol. 7, no. 39, p. eabi7716, 2021.

- [4] B. Ferdowsi, C. P. Ortiz, M. Houssais, and D. J. Jerolmack, “River-bed armouring as a granular segregation phenomenon,” Nature communications, vol. 8, no. 1, pp. 1–10, 2017.

- [5] D. L. Hu, B. Chan, and J. W. Bush, “The hydrodynamics of water strider locomotion,” Nature, vol. 424, no. 6949, pp. 663–666, 2003.

- [6] B. He, K. Doubrovinski, O. Polyakov, and E. Wieschaus, “Apical constriction drives tissue-scale hydrodynamic flow to mediate cell elongation,” Nature, vol. 508, no. 7496, pp. 392–396, 2014.

- [7] H. Mestre, T. Du, A. M. Sweeney, G. Liu, A. J. Samson, W. Peng, K. N. Mortensen, F. F. Stæger, P. A. Bork, L. Bashford et al., “Cerebrospinal fluid influx drives acute ischemic tissue swelling,” Science, vol. 367, no. 6483, p. eaax7171, 2020.

- [8] Z. Zhang, M. Hwang, T. J. Kilbaugh, A. Sridharan, and J. Katz, “Cerebral microcirculation mapped by echo particle tracking velocimetry quantifies the intracranial pressure and detects ischemia,” Nature communications, vol. 13, no. 1, pp. 1–15, 2022.

- [9] M. Guo, A. J. Ehrlicher, M. H. Jensen, M. Renz, J. R. Moore, R. D. Goldman, J. Lippincott-Schwartz, F. C. Mackintosh, and D. A. Weitz, “Probing the stochastic, motor-driven properties of the cytoplasm using force spectrum microscopy,” Cell, vol. 158, no. 4, pp. 822–832, 2014.

- [10] Y. Peng, Z. Liu, and X. Cheng, “Imaging the emergence of bacterial turbulence: Phase diagram and transition kinetics,” Science advances, vol. 7, no. 17, p. eabd1240, 2021.

- [11] S. Schuerle, A. P. Soleimany, T. Yeh, G. Anand, M. Häberli, H. Fleming, N. Mirkhani, F. Qiu, S. Hauert, X. Wang et al., “Synthetic and living micropropellers for convection-enhanced nanoparticle transport,” Science advances, vol. 5, no. 4, p. eaav4803, 2019.

- [12] H. Punzmann, N. Francois, H. Xia, G. Falkovich, and M. Shats, “Generation and reversal of surface flows by propagating waves,” Nature physics, vol. 10, no. 9, pp. 658–663, 2014.

- [13] P. Y. Huang, S. Kurasch, J. S. Alden, A. Shekhawat, A. A. Alemi, P. L. McEuen, J. P. Sethna, U. Kaiser, and D. A. Muller, “Imaging atomic rearrangements in two-dimensional silica glass: watching silica’s dance,” Science, vol. 342, no. 6155, pp. 224–227, 2013.

- [14] F. Pereira, H. Stüer, E. C. Graff, and M. Gharib, “Two-frame 3D particle tracking,” Measurement science and technology, vol. 17, no. 7, p. 1680, 2006.

- [15] S. E. Leggett, M. Patel, T. M. Valentin, L. Gamboa, A. S. Khoo, E. K. Williams, C. Franck, and I. Y. Wong, “Mechanophenotyping of 3D multicellular clusters using displacement arrays of rendered tractions,” Proceedings of the National Academy of Sciences, vol. 117, no. 11, pp. 5655–5663, 2020.

- [16] M. Raffel, C. Willert, F. Scarano, C. Kähler, S. Wereley, and J. Kompenhans, Particle Image Velocimetry: A Practical Guide. Cham: Springer International Publishing, 2018.

- [17] C. Cierpka, B. Lütke, and C. J. Kähler, “Higher order multi-frame particle tracking velocimetry,” Experiments in Fluids, vol. 54, no. 5, pp. 1–12, 2013.

- [18] S. L. Brunton, B. R. Noack, and P. Koumoutsakos, “Machine learning for fluid mechanics,” Annual Review of Fluid Mechanics, vol. 52, pp. 477–508, 2020.

- [19] S. Cai, S. Zhou, C. Xu, and Q. Gao, “Dense motion estimation of particle images via a convolutional neural network,” Experiments in Fluids, vol. 60, no. 4, pp. 1–16, 2019.

- [20] S. Cai, J. Liang, Q. Gao, C. Xu, and R. Wei, “Particle image velocimetry based on a deep learning motion estimator,” IEEE Transactions on Instrumentation and Measurement, vol. 69, no. 6, pp. 3538–3554, 2019.

- [21] J. Liang, S. Cai, C. Xu, and J. Chu, “Filtering enhanced tomographic PIV reconstruction based on deep neural networks,” IET Cyber-Systems and Robotics, vol. 2, no. 1, pp. 43–52, 2020.

- [22] C. Lagemann, K. Lagemann, S. Mukherjee, and W. Schröder, “Deep recurrent optical flow learning for particle image velocimetry data,” Nature Machine Intelligence, vol. 3, no. 7, pp. 641–651, 2021.

- [23] K. Mallery, S. Shao, and J. Hong, “Dense particle tracking using a learned predictive model,” Experiments in Fluids, vol. 61, no. 10, pp. 1–14, 2020.

- [24] C. R. Qi, H. Su, K. Mo, and L. J. Guibas, “Pointnet: Deep learning on point sets for 3d classification and segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 652–660.

- [25] X. Liu, C. R. Qi, and L. J. Guibas, “Flownet3D: Learning scene flow in 3D point clouds,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 529–537.

- [26] G. Puy, A. Boulch, and R. Marlet, “Flot: Scene flow on point clouds guided by optimal transport,” in European conference on computer vision. Springer, 2020, pp. 527–544.

- [27] W. Wu, Z. Y. Wang, Z. Li, W. Liu, and L. Fuxin, “PointPWC-Net: Cost volume on point clouds for (self-) supervised scene flow estimation,” in European conference on computer vision. Springer, 2020, pp. 88–107.

- [28] Y. Wei, Z. Wang, Y. Rao, J. Lu, and J. Zhou, “PV-RAFT: point-voxel correlation fields for scene flow estimation of point clouds,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 6954–6963.

- [29] J. Liang, S. Cai, C. Xu, T. Chen, and J. Chu, “DeepPTV: Particle Tracking Velocimetry for complex flow mmtion via deep neural networks,” IEEE Transactions on Instrumentation and Measurement, 2021.

- [30] J. Yang, Y. Yin, A. K. Landauer, S. Buyuktozturk, J. Zhang, L. Summey, A. McGhee, M. K. Fu, J. O. Dabiri, and C. Franck, “SerialTrack: scale and rotation invariant augmented lagrangian particle tracking,” arXiv preprint arXiv:2203.12573, 2022.

- [31] C. R. Qi, L. Yi, H. Su, and L. J. Guibas, “Pointnet++: Deep hierarchical feature learning on point sets in a metric space,” Advances in neural information processing systems, vol. 30, 2017.

- [32] Y. Wang, Y. Sun, Z. Liu, S. E. Sarma, M. M. Bronstein, and J. M. Solomon, “Dynamic graph cnn for learning on point clouds,” Acm Transactions On Graphics (tog), vol. 38, no. 5, pp. 1–12, 2019.

- [33] C. Villani, Optimal transport: old and new. Springer, 2009, vol. 338.

- [34] G. Peyré, M. Cuturi et al., “Computational optimal transport: With applications to data science,” Foundations and Trends® in Machine Learning, vol. 11, no. 5-6, pp. 355–607, 2019.

- [35] M. Cuturi, “Sinkhorn distances: lightspeed computation of optimal transport,” Advances in neural information processing systems, vol. 26, 2013.

- [36] Z. Teed and J. Deng, “Raft: Recurrent all-pairs field transforms for optical flow,” in European conference on computer vision. Springer, 2020, pp. 402–419.

- [37] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [38] Y. Li, E. Perlman, M. Wan, Y. Yang, C. Meneveau, R. Burns, S. Chen, A. Szalay, and G. Eyink, “A public turbulence database cluster and applications to study lagrangian evolution of velocity increments in turbulence,” Journal of Turbulence, no. 9, p. N31, 2008.

- [39] C. R. Ethier and D. Steinman, “Exact fully 3D Navier–Stokes solutions for benchmarking,” International Journal for Numerical Methods in Fluids, vol. 19, no. 5, pp. 369–375, 1994.

- [40] H. Maas, A. Gruen, and D. Papantoniou, “Particle tracking velocimetry in three-dimensional flows,” Experiments in fluids, vol. 15, no. 2, pp. 133–146, 1993.

- [41] A. R. Khojasteh, S. Laizet, D. Heitz, and Y. Yang, “Lagrangian and Eulerian dataset of the wake downstream of a smooth cylinder at a Reynolds number equal to 3900,” Data in brief, vol. 40, p. 107725, 2022.

- [42] M. Patel, S. E. Leggett, A. K. Landauer, I. Y. Wong, and C. Franck, “Rapid, topology-based particle tracking for high-resolution measurements of large complex 3D motion fields,” Scientific reports, vol. 8, no. 1, pp. 1–14, 2018.

- [43] M. T. Scimone, H. C. Cramer III, E. Bar-Kochba, R. Amezcua, J. B. Estrada, and C. Franck, “Modular approach for resolving and mapping complex neural and other cellular structures and their associated deformation fields in three dimensions,” Nature protocols, vol. 13, no. 12, pp. 3042–3064, 2018.