Gradient Temporal Difference with Momentum: Stability and Convergence

Abstract

Gradient temporal difference (Gradient TD) algorithms are a popular class of stochastic approximation (SA) algorithms used for policy evaluation in reinforcement learning. Here, we consider Gradient TD algorithms with an additional heavy ball momentum term and provide choice of step size and momentum parameter that ensures almost sure convergence of these algorithms asymptotically. In doing so, we decompose the heavy ball Gradient TD iterates into three separate iterates with different step sizes. We first analyze these iterates under one-timescale SA setting using results from current literature. However, the one-timescale case is restrictive and a more general analysis can be provided by looking at a three-timescale decomposition of the iterates. In the process we provide the first conditions for stability and convergence of general three-timescale SA. We then prove that the heavy ball Gradient TD algorithm is convergent using our three-timescale SA analysis. Finally, we evaluate these algorithms on standard RL problems and report improvement in performance over the vanilla algorithms.

1 Introduction

In reinforcement learning (RL), the goal of the learner or the agent is to maximize its long term accumulated reward by interacting with the environment. One important task in most of RL algorithms is that of policy evaluation. It predicts the average accumulated reward an agent would receive from a state (called value function) if it follows the given policy. In model-free learning, the agent does not have access to the underlying dynamics of the environment and has to learn the value function from samples of the form (state, action, reward, next-state). Two very popular algorithms in the model-free setting are Monte-Carlo (MC) and temporal difference (TD) learning (see Sutton and Barto (2018), Sutton (1988)). It is a well known fact that TD learning diverges in the off-policy setting (see Baird (1995)). A class of algorithms called gradient temporal difference (Gradient TD) were introduced in (Sutton, Maei, and Szepesvári 2009) and (Sutton et al. 2009) which are convergent even in the off-policy setting. These algorithms fall under a larger class of algorithms called linear stochastic approximation (SA) algorithms.

A lot of literature is dedicated to studying the asymptotic behaviour of SA algorithms starting from the work of (Robbins and Monro 1951). In recent times, the ODE method to analyze asymptotic behaviour of SA (Ljung 1977; Kushner and Clark 1978; Borkar 2008b; Borkar and Meyn 2000) has become quite popular in the RL community. The Gradient TD methods were shown to be convergent using the ODE approach. A generic one-timescale (One-TS) SA iterate has the following form:

| (1) |

where are the iterates. The function is assumed to be a Lipschitz continuous function. is a Martingale difference noise sequence and is the step-size at time-step . Under some mild assumptions, the iterate given by (1) converges (see Borkar 2008b; Borkar and Meyn 2000). When is a linear map of the form , the matrix is often called the driving matrix. The three Gradient TD algorithms: GTD (Sutton, Maei, and Szepesvári 2009), GTD2 and TDC (Sutton et al. 2009) consist two iterates of the following form:

| (2) |

| (3) |

where , . See section 2 for exact form of the iterates. The two iterates still form a One-TS SA scheme if , where is a constant and a two-timescale (two-TS) scheme if .

Separately, adding a momentum term to accelerate the convergence of iterates is a popular technique in stochastic gradient descent (SGD). The two most popular schemes are the Polyak’s Heavy ball method (Polyak 1964), and Nesterov’s accelerated gradient method (Nesterov 1983). A lot of literature is dedicated to studying momentum with SGD. Some recent works include (Ghadimi, Feyzmahdavian, and Johansson 2014; Loizou and Richtárik 2020; Gitman et al. 2019; Ma and Yarats 2019; Assran and Rabbat 2020). Momentum in the SA setting, which is the focus of the current work, has limited results. Very few works study the effect of momentum in the SA setting. A recent work by (Mou et al. 2020) studies SA with momentum briefly and shows an improvement of mixing rate. However, the setting considered is restricted to linear SA and the driving matrix is assumed to be symmetric. Further, the iterates involve an additional Polyak-Ruppert averaging (Polyak 1990). Here, in contrast, we analyze the asymptotic behaviour of the algorithm and make none of the above assumptions. A somewhat distant paper is by (Devraj, Bušíć, and Meyn 2019) that introduces Matrix momentum in SA and is not equivalent to heavy ball momentum.

A very recent work by (Avrachenkov, Patil, and Thoppe 2020) studied One-TS SA with heavy ball momentum in the univariate case (i.e., in iterate (1)) in the context of web-page crawling. The iterates took the following form:

| (4) |

The momentum parameter was chosen to decompose the iterate into two recursions of the form given by (2) and (3). We use such a decomposition for Gradient TD methods with momentum. This leads to three separate iterates with three step-sizes. We analyze these three iterates and provide stability (iterates remain bounded throughout) and almost sure (a.s.) convergence guarantees.

1.1 Our Contribution

-

•

We first consider the One-TS decomposition of Gradient TD with momentum iterates and show that the driving matrix in this case is Hurwitz (all eigen values are negative). Thereafter we use the theory of One-TS SA to show that the iterates are stable and convergent to the same TD solution.

-

•

Next, we consider the Three-TS decomposition. We provide the first stability and convergence conditions for general Three-TS recursions. We then show that the iterates under consideration satisfy these conditions.

-

•

Finally, we evaluate these algorithms for different choice of step-size and momentum parameters on standard RL problems and report an improvement in performance over their vanilla counterparts.

2 Preliminaries

In the standard RL setup, an agent interacts with the environment which is a Markov Decision Process (MDP). At each discrete time step , the agent is in state takes an action receives a reward and moves to another state . Here and are finite sets of possible states and actions respectively. The transitions are governed by a kernel . A policy is a mapping that defines the probability of picking an action in a state. We let be the transition probability matrix induced by . Also, represents the steady-state distribution for the Markov chain induced by and the matrix is a diagonal matrix of dimension with the entries on its diagonals.The state-value function associated with a policy for state is

where is the discount factor.

In the linear architecture setting, policy evaluation deals with estimating through a linear model , where is a feature associated with the state and is the parameter vector. We define the TD-error as and as an matrix where the row is . In the i.i.d setting it is assumed that the tuple ) (where ) is drawn independently from the stationary distribution of the Markov chain induced by . Let and , where the expectations are w.r.t. the stationary distribution of the induced chain. The matrix is negative definite (see Maei (2011); Tsitsiklis and Van Roy (1997)). In the off-policy case, the importance weight is given by , where and are the target and behaviour policies respectively. Introduced in (Sutton, Maei, and Szepesvári 2009), Gradient TD are a class of TD algorithms that are convergent even in the off-policy setting. Next, we present the iterates associated with the algorithms GTD (Sutton, Maei, and Szepesvári 2009), GTD2, TDC (Sutton et al. 2009).

-

•

GTD:

(5) (6) -

•

GTD2:

(7) (8) -

•

TDC:

(9) (10)

The objective function for GTD is Norm of Expected Error defined as . The GTD algorithm is derived by expressing the gradient direction as = . Here . If both the expectations are sampled together, then the term would be biased by their correlation. An estimate of the second expectation is maintained as a long-term quasi-stationary estimate (see (5)) and the first expectation is sampled (see (6)). For GTD2 and TDC, a similar approach is used on the objective function Mean Square Projected Bellman Error defined as . Here, is the projection operator that projects vectors to the subspace and is the Bellman operator defined as . As originally presented, GTD and GTD2 are one-timescale algorithms ( is constant) while TDC is a two-timescale algorithm (). It was shown in all the three cases that .

3 Gradient TD with Momentum

Although, Gradient TD starts with a gradient descent based approach, it ends up with two-TS SA recursions. Momentum methods are known to accelerate the convergence of SGD iterates. Motivated by this, we examine momentum in the SA setting, and ask if the SA recursions for Gradient TD with momentum even converge to the same TD solution. We probe the heavy ball extension of the three Gradient TD algorithms where, we keep an accumulation of the previous gradient values in . Then, at time step the new gradient value multiplied by the step size is added to the current accumulation vector multiplied by the momentum parameter as below:

The parameter is then updated in the negative of the direction , i.e., Since is computed as a long-term estimate of , its update rule remains same. The momentum parameter is usually set to a constant in the stochastic gradient setting. An exception to this can however be found in (Gitman et al. 2019; Gadat, Panloup, and Saadane 2016), where . Here, we consider the latter case. Substituting into the iteration of and noting that , the iterates for GTD with Momentum (GTD-M) can be written as:

| (11) |

| (12) |

Similarly the iterates for GTD2-M are given by:

| (13) |

| (14) |

Finally, the iterates for TDC-M are given by:

| (15) |

| (16) |

We choose the momentum parameter as in (Avrachenkov, Patil, and Thoppe 2020) as follows: , where is a positive sequence s.t. as and is a constant. Note that as . We later provide conditions on and to ensure a.s. convergence. As we would see in section 4, the condition on in the One-TS setting is restrictive. Specifically, it depends on the norm of the driving matrix . This motivates us to look at the Three-TS setting and then the corresponding condition on is less restrictive. Using the momentum parameter as above,

Rearranging the terms and dividing by , we get:

We let

Then, the GTD-M iterates in (11) and (12) can be re-written with the following three iterates:

| (17) | |||

| (18) | |||

| (19) |

A similar decomposition can be done for the GTD2-M and TDC-M iterates.

4 Convergence Analysis

In this section we analyze the asymptotic behaviour of the GTD-M iterates given by (17), (18) and (19). Throughout the section, we consider . We first consider the One-TS case when and , for some real constants . Subsequently, we consider the Three-TS setting where and as .

4.1 One-Timescale Setting

We begin by analyzing GTD-M using a one-timescale SA setting. We let for simplicity. The iterates of GTD-M can then be re-written as:

| (20) |

where,

Equation (20) can be re-written in the general SA scheme as:

| (21) |

Here , where the expectations are w.r.t. the stationary distribution of the Markov chain induced by the target policy . . In particular,

where recall that and

Lemma 1.

Assume, . Then, the matrix is Hurwitz.

Proof.

Let be an eigenvalue of . The characteristic equation of the matrix is given by:

Using the following formula for determinant of block matrices

we have,

Therefore, from the characteristic equation of , we have that

There must exist a non-zero vector , such that

where is the conjugate transpose of the vector and . The above equation reduces to the following cubic-polynomial equation:

where . Using Routh-Hurwitz criterion, a cubic polynomial has all roots with negative real parts iff and . In our case, . The last inequality follows from the fact that is negative definite and therefore . Finally, and follows from. Therefore and the claim follows. ∎

Consider the following assumptions:

1.

All rewards and features are bounded, i.e., and . Also, the matrix has full rank, where is an matrix where the sth row is .

2.

The step-sizes satisfy ,

and the momentum parameter satisfies:

3.

The samples () are drawn i.i.d from the stationary distribution of the Markov chain induced by target policy .

Theorem 2.

Proof.

Assumption 1 ensures that and 3 ensures that the function is well defined. Now, using Lemma 1 and (Borkar and Meyn 2000) we can show that the iterates in (20) remain stable. Then using the third extension from (Chapter-2 pp. 17, Borkar (2008b)) we can show that as . Thereafter using the formula for inverse of block matrices it can be shown that as . See Appendix A1 for a detailed proof. ∎

Similar results can be proved for the GTD2-M and TDC-M iterates.

4.2 Three Timescale Setting

We consider the three iterates for GTD-M in (17), (18) and (19) under the following criteria for step-sizes: and as . We provide the first conditions for stability and a.s. convergence of generic three-TS SA recursions. We emphasize that the setting we look at in Theorem 3 is more general than the setting at hand of GTD-M iterates. Although stability and convergence results exist for one-TS and two-TS cases, this is the first time such results have been provided for the case of three-TS recursions. We next provide the general iterates for a three-TS recursion along with the assumptions used while analyzing them. Consider the following three iterates:

| (22) |

| (23) |

| (24) |

and the following assumptions:

-

(B1)

are Lipchitz continuous, with Lipchitz constants and respectively.

-

(B2)

, , are step-size sequences that satisfy

-

(B3)

are Martingale difference sequences w.r.t. the filtration where,

, and constants . The terms satisfy as .

-

(B4)

-

(i)

The ode has a globally asymptotically stable equilibrium (g.a.s.e) , and is Lipchitz continuous.

-

(ii)

The ode has a globally asymptotically stable equilibrium , where is Lipchitz continuous.

-

(iii)

The ode , has a globally asymptotically stable equilibrium .

-

(i)

-

(B5)

The functions satisfy as uniformly on compacts. The ODE: has a unique globally asymptotically stable equilibrium , where is Lipschitz continuous. Further, .

-

(B6)

The functions satisfy as uniformly on compacts. The ODE: has a unique globally asymptotically stable equilibrium , where is Lipschitz continuous. Further, .

-

(B7)

The functions satisfy as uniformly on compacts. The ODE: has the origin in as its unique globally asymptotically stable equilibrium.

Remark 2.

Conditions give sufficient conditions that ensure that the iterates remain stable. Specifically it ensures that . Conditions along with the stability of iterates ensures a.s. convergence of the iterates.

Proof.

See Appendix A2. ∎

Next we use theorem 3, to show that the iterates of GTD-M a.s. converge to the TD solution . Consider the following assumption on step-size sequences instead of 2.

4.

The step-sizes satisfy ,

and the momentum parameter satisfies:

Theorem 4.

Proof.

We transform the iterates given by (17), (18) and (19) into the standard SA form given by (22), (23) and (24). Let . Let, and . Then, (17) can be re-written as:

where,

Next, (18) can be re-written as:

where,

Finally, (19) can be re-written as:

where,

The functions are linear in and hence Lipchitz continuous, therefore satisfying . We choose the step-size sequences such that they satisfy . One popular choice is Next, and , are martingale difference sequences w.r.t by construction. , . The first part of is satisfied with , and any . The fact that follows from the bounded features and bounded rewards assumption in 1. Next, observe that since . For a fixed , consider the ODE For , is the unique g.a.s.e, is linear and therefore Lipchitz continuous. This satisfies (i). Next, for a fixed , has as its unique g.a.s.e and is Lipschitz. This satisfies . Finally, to satisfy , consider,

Since, is negative definite, therefore, is negative definite. Therefore, is the unique g.a.s.e. Next, we show that the sufficient conditions for stability of the three iterates are satisfied. The function, uniformly on compacts as . The limiting ODE: has as its unique g.a.s.e. is Lipschitz with , thus satisfying assumption .

The function, uniformly on compacts as . The limiting ODE has as its unique g.a.s.e. is Lipchitz with . Thus assumption is satisfied.

Finally, uniformly on compacts as and the ODE: has origin as its unique g.a.s.e. This ensures the final condition . By theorem 3,

Specifically, . ∎

Similar analysis can be provided for GTD2-M and TDC-M iterates. See Appendix A3 for details.

5 Experiments

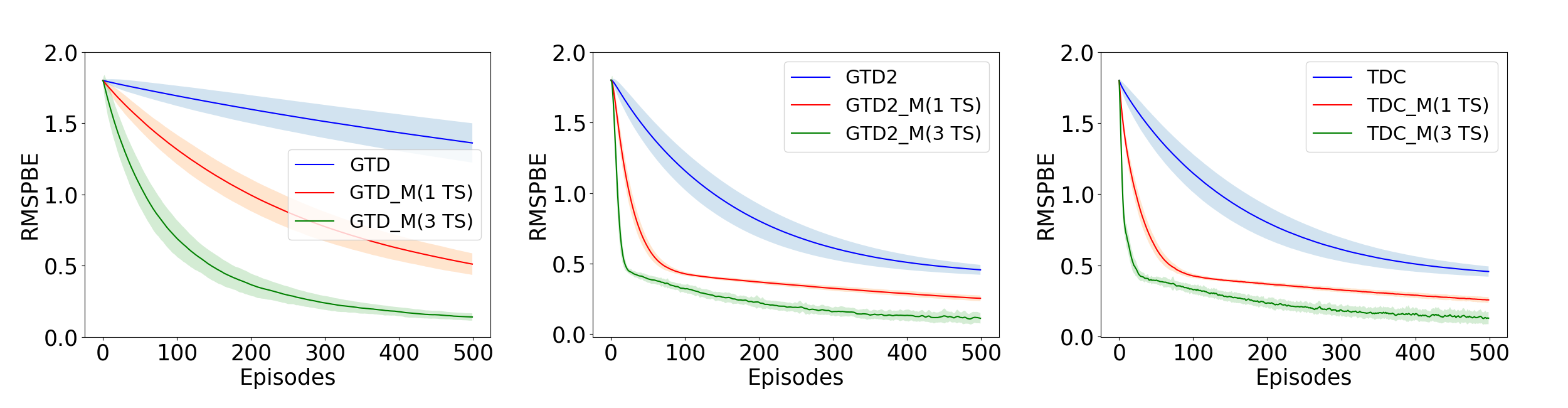

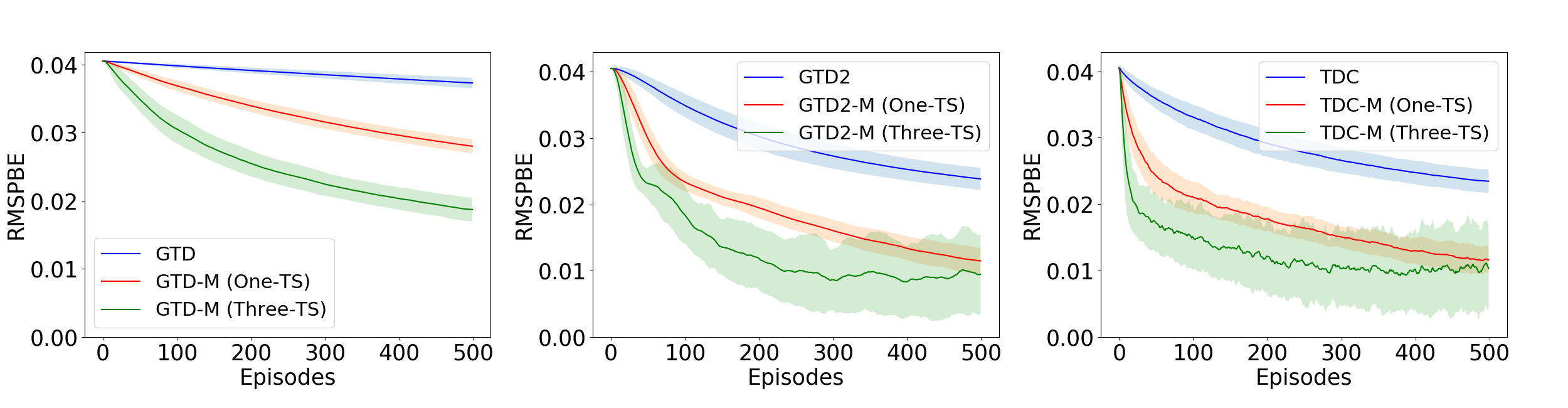

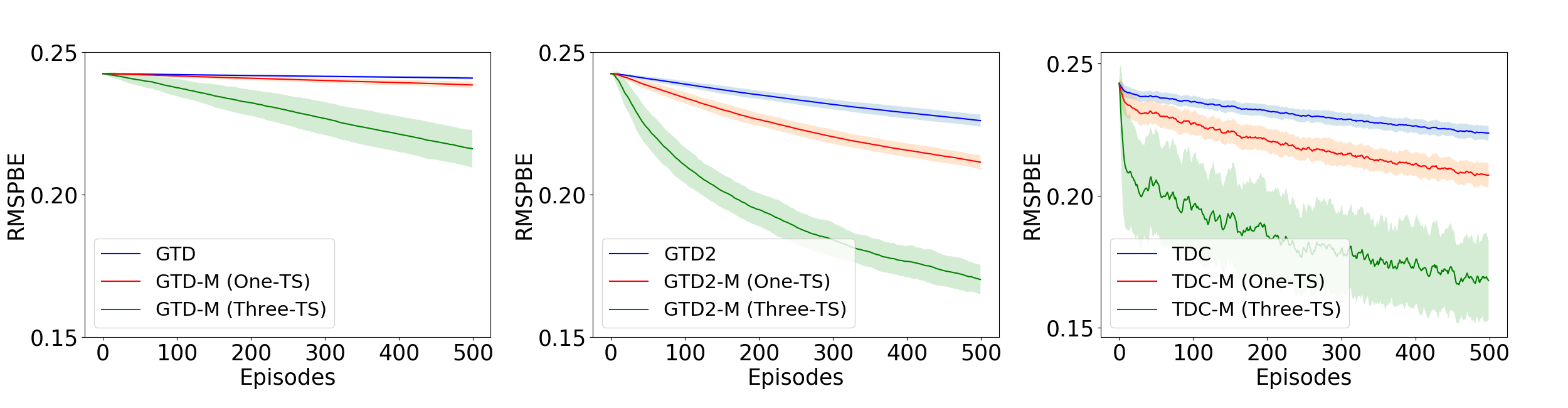

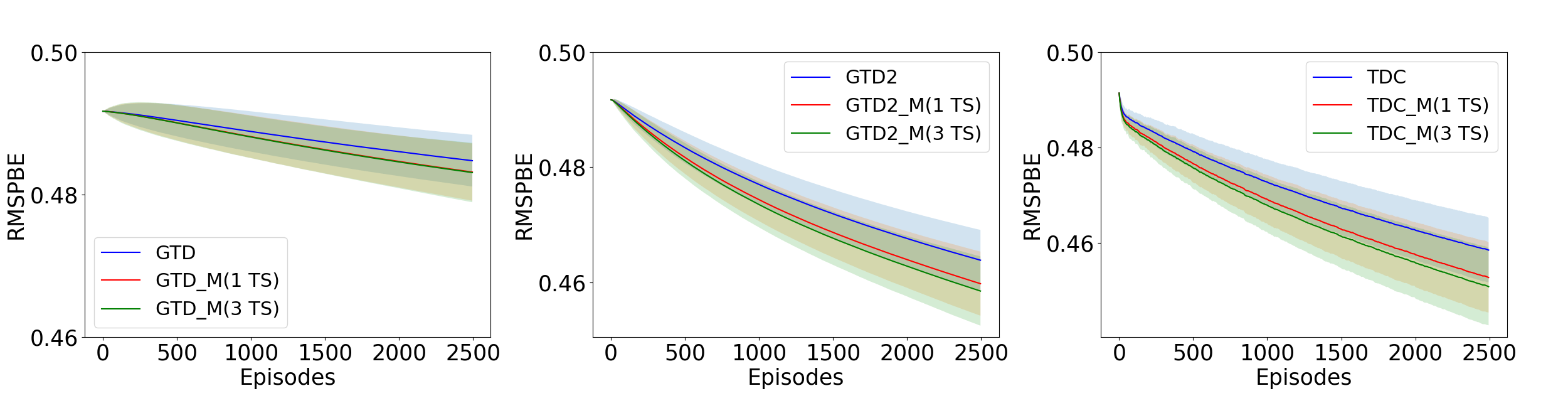

We evaluate the momentum based GTD algorithms defined in section 3 to four standard problems of policy evaluation in reinforcement learning namely, Boyan Chain (Boyan 1999), 5-State random walk (Sutton et al. 2009), 19-State Random Walk (Sutton and Barto 2018) and Random MDP (Sutton et al. 2009). See Appendix A4 for a detailed description of the MDP settings and (Dann, Neumann, and Peters 2014) for details on implementation. We run the three algorithms, GTD, GTD2 and TDC along with their heavy ball momentum variants in One-TS and Three-TS settings and compare the RMSPBE (Root of MSPBE) across episodes. Figure-1 to Figure-4 plot these results. We consider decreasing step-sizes of the form: in all the examples. Table 1 summarizes the different step-size sequences used in our experiment.

In one-TS setting, we require . Since , we must have . In the Three-TS setting, thus implying, and . Although our analysis requires square summability: , such choice of step-size makes the algorithms converge very slowly.

Recently, (Dalal et al. 2018a) showed convergence rate results for Gradient TD schemes with non-square summable

| Boyan Chain | ||||

|---|---|---|---|---|

| Vanilla | 0.25 | 0.125 | - | - |

| One-TS | 0.25 | 0.125 | 0.125 | 1 |

| Three-TS | 0.25 | 0.125 | 0.2 | 0.1 |

| 5-state RW | ||||

| Vanilla | 0.25 | 0.125 | - | - |

| One-TS | 0.25 | 0.125 | 0.125 | 1 |

| Three-TS | 0.25 | 0.125 | 0.2 | 0.1 |

| 19-State RW | ||||

| Vanilla | 0.125 | 0.0625 | - | - |

| One-TS | 0.125 | 0.0625 | 0.0625 | 1 |

| Three-TS | 0.125 | 0.0625 | 0.1 | 0.1 |

| Random Chain | ||||

| Vanilla | 0.5 | 0.25 | - | - |

| One-TS | 0.5 | 0.25 | 0.25 | 1 |

| Three-TS | 0.5 | 0.25 | 0.3 | 0.1 |

step-sizes also (See Remark 2 of (Dalal et al. 2018a)). Therefore, we look at non-square summable step-sizes here, and observe that in all the examples the iterates do converge. The momentum parameter is chosen as in 2.

In all the examples considered, the momentum methods outperform their vanilla counterparts. Since, in the Three-TS setting, a lower value of can be chosen, this ensures that the momentum parameter is not small in the initial phase of the algorithm as in the One-TS setting. This in turn helps to reduce the RMSPBE faster in the initial phase of the algorithm as is evident from the experiments.

6 Related Work and Conclusion

To the best of our knowledge no previous work has specifically looked at Gradient TD methods with an added heavy ball term. The use of momentum specifically in the SA setting is very limited. Section 4.1 of (Mou et al. 2020) does talk about momentum; however the problem looked at is that of SGD with momentum and the driving matrix is assumed to be symmetric (see Appendix H of their paper). We do not make any such assumption here. The work of (Devraj, Bušíć, and Meyn 2019), indeed looks at momentum in SA setting. However, they introduce a matrix momentum term which is not equivalent to heavy ball momentum. Acceleration in Gradient TD methods has been looked at in (Pan, White, and White 2017). The authors provide a new algorithm called ATD and the acceleration is in form of better data efficiency. However, they do not make use of momentum methods.

In this work we have introduced heavy ball momentum in Gradient Temporal difference algorithms for the first time. We decompose the two iterates of these algorithms into three separate iterates and provide asymptotic convergence guarantees of these new schemes under the same assumptions made by their vanilla counterparts. Specifically, we show convergence in the One-TS regime as well as Three-TS regime. In both the cases, the momentum parameter gradually goes 1. Three-TS formulation gives us more flexibility in choosing the momentum parameter. Specifically, compared to the One-TS setting, a larger momentum parameter can be chosen during the initial phase in the Three-TS case. We observe improved performance with these new schemes when compared with the original algorithms.

As a step forward from this work, the natural direction would be to look at more sophisticated momentum methods such as Nesterov’s accelerated method (Nesterov 1983). Also, here we only provide the convergence guarantees of these new momentum methods. A particularly interesting step would be to quantify the benefits of using momentum in SA settings. Specifically, it would be interesting to extend weak convergence rate analysis of (Konda and Tsitsiklis 2004; Mokkadem and Pelletier 2006) to Three-TS regime. Also, extending the recent convergence rate results in expectation and high probability of GTD methods (Dalal et al. 2018b; Gupta, Srikant, and Ying 2019; Kaledin et al. 2019; Dalal, Szorenyi, and Thoppe 2020) to these momentum settings would be interesting works for the future.

References

- Assran and Rabbat (2020) Assran, M.; and Rabbat, M. 2020. On the Convergence of Nesterov’s Accelerated Gradient Method in Stochastic Settings. Proceedings of the 37th International Conference on Machine Learning, PMLR, 119: 410–420.

- Avrachenkov, Patil, and Thoppe (2020) Avrachenkov, K.; Patil, K.; and Thoppe, G. 2020. Online Algorithms for Estimating Change Rates of Web Pages. arXiv, 2009.08142.

- Baird (1995) Baird, L. 1995. Residual Algorithms: Reinforcement Learning with Function Approximation. In In Proceedings of the Twelfth International Conference on Machine Learning, 30–37. Morgan Kaufmann.

- Borkar (2008a) Borkar, V. 2008a. Stochastic Approximation: A Dynamical Systems Viewpoint. Cambridge University Press. ISBN 9780521515924.

- Borkar (2008b) Borkar, V. S. 2008b. Stochastic Approximation: A Dynamical Systems Viewpoint. Cambridge University Press. ISBN 9780521515924.

- Borkar and Meyn (2000) Borkar, V. S.; and Meyn, S. P. 2000. The O.D.E. Method for Convergence of Stochastic Approximation and Reinforcement Learning. SIAM Journal on Control and Optimization, 38(2): 447–469.

- Boyan (1999) Boyan, J. 1999. Least-Squares Temporal Difference Learning. In ICML.

- Dalal, Szorenyi, and Thoppe (2020) Dalal, G.; Szorenyi, B.; and Thoppe, G. 2020. A Tale of Two-Timescale Reinforcement Learning with the Tightest Finite-Time Bound. Proceedings of the AAAI Conference on Artificial Intelligence, 34(04): 3701–3708.

- Dalal et al. (2018a) Dalal, G.; Szorenyi, B.; Thoppe, G.; and Mannor, S. 2018a. Finite Sample Analysis of Two-Timescale Stochastic Approximation with Applications to Reinforcement Learning. arXiv:1703.05376.

- Dalal et al. (2018b) Dalal, G.; Thoppe, G.; Szörényi, B.; and Mannor, S. 2018b. Finite Sample Analysis of Two-Timescale Stochastic Approximation with Applications to Reinforcement Learning. In Bubeck, S.; Perchet, V.; and Rigollet, P., eds., Proceedings of the 31st Conference On Learning Theory, volume 75 of Proceedings of Machine Learning Research, 1199–1233. PMLR.

- Dann, Neumann, and Peters (2014) Dann, C.; Neumann, G.; and Peters, J. 2014. Policy Evaluation with Temporal Differences: A Survey and Comparison. Journal of Machine Learning Research, 15(24): 809–883.

- Devraj, Bušíć, and Meyn (2019) Devraj, A. M.; Bušíć, A.; and Meyn, S. 2019. On Matrix Momentum Stochastic Approximation and Applications to Q-learning. 57th Annual Allerton Conference on Communication, Control, and Computing, 749–756.

- Gadat, Panloup, and Saadane (2016) Gadat, S.; Panloup, F.; and Saadane, S. 2016. Stochastic Heavy ball. Electronic Journal of Statistics, 12: 461–529.

- Ghadimi, Feyzmahdavian, and Johansson (2014) Ghadimi, E.; Feyzmahdavian, H. R.; and Johansson, M. 2014. Global convergence of the Heavy-ball method for convex optimization. arXiv:1412.7457.

- Gitman et al. (2019) Gitman, I.; Lang, H.; Zhang, P.; and Xiao, L. 2019. Understanding the role of momentum in stochastic gradient methods. Advances in Neural Information Processing Systems, 9630–9640.

- Gupta, Srikant, and Ying (2019) Gupta, H.; Srikant, R.; and Ying, L. 2019. Finite-Time Performance Bounds and Adaptive Learning Rate Selection for Two Time-Scale Reinforcement Learning. arXiv:1907.06290.

- Kaledin et al. (2019) Kaledin, M.; Moulines, E.; Naumov, A.; Tadic, V.; and Wai, H. 2019. Finite Time Analysis of Linear Two-timescale Stochastic Approximation with Markovian Noise. Conference on Learning Theory, 125: 2144–2203.

- Konda and Tsitsiklis (2004) Konda, V.; and Tsitsiklis, J. 2004. Convergence rate of linear two-time-scale stochastic approximation. Annals of Applied Probability, 14.

- Kushner and Clark (1978) Kushner, H.; and Clark, D. 1978. Stochastic Approximation Methods for constrained and unconstrained systems. Springer.

- Lakshminarayanan and Bhatnagar (2017) Lakshminarayanan, C.; and Bhatnagar, S. 2017. A Stability Criterion for Two-Timescale Stochastic Approximation Schemes. Automatica, 79: 108–114.

- Ljung (1977) Ljung, L. 1977. Analysis of recursive stochastic algorithms. IEEE Transactions on Automatic Control, 22(4): 551–575.

- Loizou and Richtárik (2020) Loizou, N.; and Richtárik, P. 2020. Momentum and stochastic momentum for stochastic gradient, Newton, proximal point and subspace descent methods. Computational Optimization and Applications, 77: 653–710.

- Ma and Yarats (2019) Ma, J.; and Yarats, D. 2019. Quasi-hyperbolic momentum and adam for deep learning. International Conference on Learning Representations.

- Maei (2011) Maei, H. R. 2011. Gradient Temporal-Difference Learning Algorithms. Ph.D. thesis, University of Alberta, CAN. AAINR89455.

- Mokkadem and Pelletier (2006) Mokkadem, A.; and Pelletier, M. 2006. Convergence rate and averaging of nonlinear two-time-scale stochastic approximation algorithms. The Annals of Applied Probability, 16(3): 1671 – 1702.

- Mou et al. (2020) Mou, W.; Li, C. J.; Wainwright, M. J.; Bartlett, P. L.; and Jordan, M. I. 2020. On Linear Stochastic Approximation: Fine-grained Polyak-Ruppert and Non-Asymptotic Concentration. Proceedings of Thirty Third Conference on Learning Theory, PMLR, 125: 2947–2997.

- Nesterov (1983) Nesterov, Y. 1983. A method of solving a convex programming problem with convergence rate . Soviet Mathematics Doklady, 269: 543–547.

- Pan, White, and White (2017) Pan, Y.; White, A.; and White, M. 2017. Accelerated Gradient Temporal Difference Learning. arXiv:1611.09328.

- Polyak (1964) Polyak, B. 1964. Some methods of speeding up the convergence of iteration methods. Ussr Computational Mathematics and Mathematical Physics, 4: 1–17.

- Polyak (1990) Polyak, B. 1990. New stochastic approximation type procedures. Avtomatica i Telemekhanika, 7: 98–107.

- Robbins and Monro (1951) Robbins, H.; and Monro, S. 1951. A Stochastic Approximation Method. The Annals of Mathematical Statistics, 22(3): 400 – 407.

- Sutton and Barto (2018) Sutton, R.; and Barto, A. 2018. Reinforcement Learning: An Introduction. Cambridge, MA, USA: A Bradford Book. ISBN 0262039249.

- Sutton et al. (2009) Sutton, R.; Maei, H.; Precup, D.; Bhatnagar, S.; Silver, D.; Szepesvári, C.; and Wiewiora, E. 2009. Fast Gradient-Descent Methods for Temporal-Difference Learning with Linear Function Approximation. In Proceedings of the 26th Annual International Conference on Machine Learning, ICML ’09, 993–1000. New York, NY, USA: Association for Computing Machinery. ISBN 9781605585161.

- Sutton (1988) Sutton, R. S. 1988. Learning to Predict By the Methods of Temporal Differences. Machine Learning, 3(1): 9–44.

- Sutton, Maei, and Szepesvári (2009) Sutton, R. S.; Maei, H.; and Szepesvári, C. 2009. A Convergent O(n) Temporal-difference Algorithm for Off-policy Learning with Linear Function Approximation. In Koller, D.; Schuurmans, D.; Bengio, Y.; and Bottou, L., eds., Advances in Neural Information Processing Systems, volume 21. Curran Associates, Inc.

- Tsitsiklis and Van Roy (1997) Tsitsiklis, J.; and Van Roy, B. 1997. An analysis of temporal-difference learning with function approximation. IEEE Transactions on Automatic Control, 42(5): 674–690.

Appendix

A1 Proof of Theorem 2

Consider the One timescale recursion for the GTD-M iterates given by (21) as given below:

Here , where the expectations are w.r.t. the stationary distribution of the Markov chain induced by the target policy . . In particular,

where recall that and We show that the conditions in Chapter 2 of (Borkar 2008b) hold and thereafter use Theorem 2 of (Borkar 2008b) to show convergence to the TD solution.

-

(A1)

The map is linear in and therefore Lipschitz continuous with Lipschitz constant .

-

(A2)

The step-size sequence satisfies the required conditions (cf. assumption 2 of the current paper).

-

(A3)

By construction is a martingale difference sequence w.r.t the filtration . Also, . (A3) is satisfied with . follows from the fact that the rewards are uniformly bounded and the features are normalized (see assumption 1).

-

(A4)

To ensure (A4), we show that (A5) of (Chapter 3, pp.22, Borkar (2008b)) holds and then use Theorem 7 of (Borkar 2008b). The functions . For any compact set , as uniformly. Consider the ODE

Observe that since and , we have . Since we have assumed that therefore, , and hence from lemma 1, we have that is Hurwitz. Hence, the origin is a unique globally asymptotically stable equilibrium (g.a.s.e) for the above ODE. This in turn implies that the iterates remain bounded i.e., a.s. . By (Theorem 2, Chapter 2 of Borkar (2008b)) converges to an internally chain transitive invariant set of the ODE . The only such point of the ODE is its equilibrium point . By (Corollary 4, Chapter 2 of Borkar (2008b)),

A straightforward calculation for the inverse of the block matrix gives us that

A2 Proof of Theorem 3

We first start by assuming that the iterates remain stable (cf. assumption (B5)) and show that the three timescale recursions converge. Subsequently we provide conditions which ensure that the iterates remain stable. We consider general three timescale recursions as given below:

| (25) |

| (26) |

| (27) |

where , and . Next we consider the following assumptions:

-

(B1)

are Lipschitz continuous.

-

(B2)

are Martingale difference sequences w.r.t. where,

for some constants , .

-

(B3)

, , are step-size sequences that satisfy

-

(B4)

-

(i)

The ODE has a globally asymptotically stable equilibrium , where is Lipschitz continuous.

-

(ii)

The ODE has a globally asymptotically stable equilibrium , where is Lipschitz continuous.

-

(iii)

The ODE , has a globally asymptotically stable equilibrium

-

(i)

-

(B5)

Proof.

We start with the following Lemma that characterizes the set to which the iterates converge.

Lemma 6.

Proof.

We first consider the fastest timescale of and show that:

We rewrite the iterates (25), (26) and (27) as:

| (28) |

| (29) |

| (30) |

where,

Using third extension from Chapter-2 of (Borkar 2008a), converges to an internally chain transitive invariant set of the ODE

For initial conditions , the internally chain transitive invariant set of the above ODE is . Therefore,

| (31) |

Next we consider the middle timescale . (26) and (27) can be re-written as:

| (32) |

where,

The iteration for can be re-written as:

| (33) |

where,

Since, , therefore as . Again using third extension from Chapter-2 of (Borkar 2008a), it can be seen that (32) and (33) converges to an internally chain transitive invariant set of the ODE

For initial conditions , the internally chain transitive invariant set of the above ODE is . Therefore,

| (34) |

Combining (31) and (34) we get:

∎

Finally, we consider the slowest timescale of . We define the piece wise linear continuous interpolation of the iterates as:

where, Also, let , denote the unique solution to the below ODE starting at :

with . Using the arguments as in Theorem-2, Chapter-6 of (Borkar 2008a), it can be shown that for any

Subsequently arguing as in proof of Theorem-2, Chapter-2 of (Borkar 2008a), we get:

Using Lemma 6, we get:

∎

Next we provide sufficient conditions for to hold. Consider the following additional assumptions:

-

(B6)

The functions satisfy as uniformly on compacts. For fixed , the ODE

has its unique globally asymptotically stable equilibrium , where is Lipschitz continuous. Further, , i.e.,

has origin in as unique globally asymptotically stable equilibrium.

-

(B7)

The functions satisfy as uniformly on compacts. For fixed , the ODE

has its unique globally asymptotically stable equilibrium , where is Lipschitz continuous. Further, , i.e.,

has origin in as its unique globally asymptotically stable equilibrium.

-

(B8)

The functions satisfy as uniformly on compacts. The ODE

has the origin in as its unique globally asymptotically stable equilibrium.

Theorem 7.

Under assumptions - and -,

Proof.

We begin with the fastest time scale determined by the step size . Consider the following definitions:

-

(F1)

Define

Let , and

-

(F2)

Given and a constant define

One can find a subsequence such that and as .

-

(F3)

The scaling sequence is defined as:

-

(F4)

The scaled iterates for are:

where, ,

-

(F5)

Next we define the linearly interpolated trajectory for the scaled iterates as follows:

-

(F6)

Let denote the trajectory of the ODE:

with , and .

First we state four lemmas for ODEs with two external inputs. The proofs of these lemmas follow exactly as Lemmas 2, 3, 4 and 5 of (Lakshminarayanan and Bhatnagar 2017). Subsequently when we analyze the middle timescale (timescale of ) and slow timescale (timescale of ) recursions, we restate the corresponding lemmas for ODEs with one and no external inputs respectively. Let and denote the solution to the ODEs

respectively, with initial condition and the external inputs and . Throughout the paper, and denote the ball of radius around and respectively.

Lemma 8.

Let be a compact set, and be fixed external inputs. Then under , given , such that

Lemma 9.

Let , , , be a given time interval and . Let , then

where as .

Lemma 10.

Let , then given and , , and such that , and external inputs and . Then,

Lemma 11.

Let and let hold. Then given and such that for any external input satisfying , ,

The next lemma uses the convergence result of three time scale iterates under the stability assumption of (Theorem 5) and shows that the scaled iterates defined in converge.

Lemma 12.

Under ,

-

(i)

For , a.s. for some constant

-

(ii)

Proof.

-

(i)

Follows as in (Lemma 4, Chapter-3, pp. 24, Borkar (2008a)).

- (ii)

∎

In particular, Lemma 12(i) shows that along the fastest timescale between instants and , the norm of the scaled iterate can grow at most by a factor starting from . Next, Lemma 12(ii) shows that the scaled iterate asymptotically tracks the ODE defined in . The next theorem bounds in terms of and . We define the linearly interpolated trajectory of the three iterates as: ,

Theorem 13.

Under assumptions - and ,

-

(i)

For large, and (here is the sampling frequency as in (F2) and is as in Lemma 11 with ), if , for some then

-

(ii)

a.s. for some .

-

(iii)

Proof.

-

(i)

We have Since, , this implies . Therefore, . Next we show that

For ,

Since, ,

(35) Therefore,

The second inequality follows from the fact that . A similar analysis proves . Next we show that

Here we are considering the case when iterates are blowing up. Therefore let . Then,

Let and . From lemma 11, such that

whenever and . Choose and . Since and for the ODE defined in and From and , it follows that and Using Lemma 12(ii), for large enough . Also observe that . Using these, we have . Finally since

we have

Choosing , proves the claim.

(ii) and (iii) follow along the lines of arguments in (Lakshminarayanan and Bhatnagar 2017) Lemma 6 (ii) and (iii) respectively. ∎

Next we consider the middle timescale of and re-define the following terms:

-

(M1)

Define

Let and

-

(M2)

Given and a constant define

One can find a subsequence such that , and as .

-

(M3)

The scaling sequence is defined as:

-

(M4)

The scaled iterates for are:

where, ,

-

(M5)

Next, we define the linearly interpolated trajectory for the scaled iterates as follows:

-

(M6)

Let denote the trajectory of the ODE:

with , and .

As before we state a few lemmas for ODEs with one external input. These follow along the lines of Lemmas 2-5 of (Lakshminarayanan and Bhatnagar 2017). Let and denote the solution to the ODEs

respectively, with initial condition and the external input .

Lemma 14.

Let be a compact set and . Then under , given , such that

Lemma 15.

Let , , be a given time interval and . Let , then

where as .

Lemma 16.

Let then given and , , and such that , and external input ,

Lemma 17.

Let and holds. Then given and such that for any external input satisfying , ,

Lemma 18.

Under ,

-

(i)

For , a.s. for some constant

-

(ii)

For sufficiently large , we have

Proof.

See Lemma 9 of (Lakshminarayanan and Bhatnagar 2017) ∎

Theorem 19.

Proof.

-

(i)

Since , . We first show that .

Since , . Therefore,

Next we show that , where is as defined in Theorem 13. Here again we are considering the case when the iterates are blowing up. Therefore let . Now, from Theorem 13 ,we know and therefore, . With this we have,

Now we proceed as in Theorem 13 (i). Let . From Lemma 17, such that

whenever . Choose . Since for the ODE defined in (M6) and and we choose from , it follows that Using Lemma 18(ii), s.t. for large enough and . Choose . Also observe that . Using these, we have . Finally since

we have

Choosing , proves the claim.

(ii) and (iii) follow along the lines of arguments in (Lakshminarayanan and Bhatnagar 2017), Lemma 6 (ii) and (iii), respectively.

∎

Finally we consider the slowest timescale corresponding to . As before we redefine the terms as follows:

-

(S1)

Define

Let and

-

(S2)

Given and a constant define

There exists some subsequence such that and as .

-

(S3)

The scaling sequence is defined as:

-

(S4)

The scaled iterates for are:

where, ,

-

(S5)

Next we define the linearly interpolated trajectory for the scaled iterates as follows:

-

(S6)

Let denote the trajectory of the ODE:

with , and .

We again state some results on ODEs, this time with no external input. These again follow along the lines of Lemma 2-5 in (Lakshminarayanan and Bhatnagar 2017). Let and denote the solution to the ODEs

respectively with initial condition .

Lemma 20.

Let be a compact set . Then under , given , such that

Lemma 21.

Let , be a given time interval and . Then

where as .

Lemma 22.

Given and , and such that , , ,

Lemma 23.

Let and let hold. Then given and , then

Lemma 24.

Under ,

-

(i)

For , a.s. for some constant

-

(ii)

For sufficiently large , we have

Proof.

See Lemma 9 (ii) and (iii) of (Lakshminarayanan and Bhatnagar 2017). ∎

Theorem 25.

Proof.

- (i)

- (ii)

(iii) and (iv) follow along the lines of arguments as in Lemma 10 (iii) and (iv) of (Lakshminarayanan and Bhatnagar 2017). ∎

Now from Theorem 25 (iii), it follows that the slow timescale iterates are bounded a.s. ( a.s. ) which in turn implies that the middle timescale iterates are bounded using Theorem 19 ( i.e., a.s. ). Finally the fast timescale iterates are bounded because of Theorem 13 and the fact that both middle timescale and slow timescale iterates are bounded showing a.s. Combining these we have a.s, thereby proving Theorem 7. ∎

The slightly more general version where each iterate could have small perturbation terms as given below:

| (36) |

| (37) |

| (38) |

with can be shown to converge to the same solution. Since the additional error terms are , their contribution is asymptotically negligible. See arguments in third extension of (Chapter 2, pp. 17 of Borkar (2008b) ) that handles this case for one-timescale iterates.

A3 Convergence of GTD-2 M and TDC-M

Here we provide the asymptotic convergence guarantees of the momentum variants of the remaining two Gradient TD methods namely GTD2-M and TDC-M. The analysis is similar to that of GTD-M in Theorem 4 and is provided here for completeness. We show that the assumptions (B1) - (B7) of the main paper are satisfied and thereby invoke Theorem 3 to show convergence.

A3.1 Asymptotic convergence of GTD2-M

We re-write the iterates for GTD2-M below:

| (39) |

| (40) |

As before, choosing , where is a positive sequence and is a constant, we can decompose the two iterates into three recursions as below:

| (41) | |||

| (42) | |||

| (43) |

Theorem 26.

Proof.

We transform the iterates given by (41), (42) and (43) into the standard SA form given by (22), (23) and (24). Let . Let, and . Then, (41) can be re-written as:

where,

Next, (42) can be re-written as:

where,

Here, and . Finally, (43) can be re-written as:

where,

The functions are linear in and hence Lipchitz continuous, therefore satisfying . We choose the step-size sequences such that they satisfy . One popular choice is

Next, and , are martingale difference sequences w.r.t by construction. Next,

The first part of is satisfied with , and any . The fact that follows from the bounded features and bounded rewards assumption in 1. Next, observe that since . For a fixed , consider the ODE

For , is the unique g.a.s.e, is linear and therefore Lipchitz continuous. This satisfies (i). Next, for a fixed ,

has as its unique g.a.s.e because is negative definite. Also is linear in and therefore Lipschitz. This satisfies . Finally, to satisfy , consider,

Since is negative definite and is positive definite, therefore, is negative definite. Therefore, is the unique g.a.s.e.

Next, we show that the sufficient conditions for stability of the three iterates are satisfied. The function, uniformly on compacts as . The limiting ODE:

has as its unique g.a.s.e. is Lipschitz with , thus satisfying assumption .

The function, uniformly on compacts as . The limiting ODE

has as its unique g.a.s.e. since is negative definite. is Lipchitz with . Thus assumption is satisfied.

Finally, uniformly on compacts as and the ODE:

has origin in as its unique g.a.s.e. This ensures the final condition . By theorem 3,

Specifically, . ∎

A3.2 Asymptotic Convergence of TDC-M

We re-write the iterates for TDC-M below:

| (44) |

| (45) |

As before, choosing , where is a positive sequence and is a constant, we can decompose the two iterates into three recursions as below:

| (46) | |||

| (47) | |||

| (48) |

Theorem 27.

Proof.

We transform the iterates given by (46), (47) and (48) into the standard SA form given by (22), (23) and (24). Let . Let, and . Then, (46) can be re-written as:

where,

Next, (46) can be re-written as:

where,

Here, and . Finally, (46) can be re-written as:

where,

The functions are linear in and hence Lipchitz continuous, therefore satisfying . We choose the step-size sequences such that they satisfy . One popular choice is

Observe that, and , are martingale difference sequences w.r.t by construction. Next,

. The first part of is satisfied with , and any . The fact that follows from the bounded features and bounded rewards assumption in 1. Next, observe that since . For a fixed , consider the ODE

For , is the unique g.a.s.e, is linear and therefore Lipchitz continuous. This satisfies (i). Next, for a fixed ,

has as its unique g.a.s.e because is negative definite. Also is linear in and therefore Lipschitz. This satisfies . Finally, to satisfy , consider,

Now, = . Since, is negative definite and is positive definite, therefore is negative definite and hence the above ODE has as its unique g.a.s.e.

Next, we show that the sufficient conditions for stability of the three iterates are satisfied. The function, uniformly on compacts as . The limiting ODE:

has as its unique g.a.s.e. is Lipschitz with , thus satisfying assumption .

The function, uniformly on compacts as . The limiting ODE

has as its unique g.a.s.e. since is negative definite. is Lipschitz with . Thus assumption is satisfied.

Finally, uniformly on compacts as . Consider the ODE:

Now, = . Since, is negative definite and is positive definite, therefore is negative definite and hence the above ODE has origin as its unique g.a.s.e. This ensures the final condition . By Theorem 3,

Specifically, . ∎

A4 Experiment Details

Here we briefly describe the MDP settings considered in section 5.

-

1.

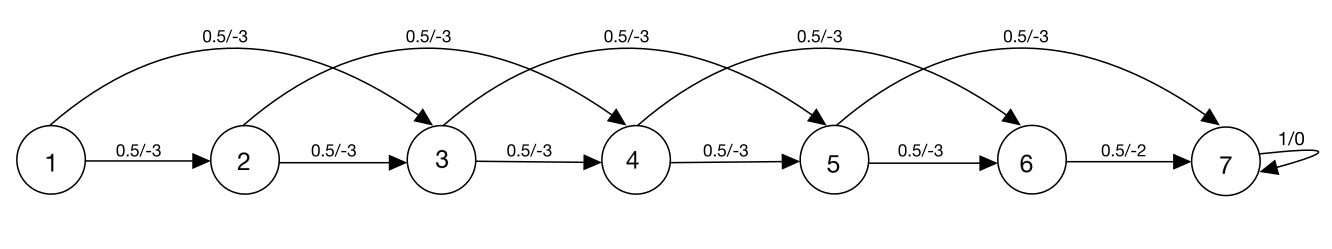

Example-1 (Boyan Chain): It consists of a linear arrangement of 14 states. From each of the first 13 states, one can move to the next state or the next to next state with equal probability. The last state is an absorbing state. The reward at each transition is -3 except the transition from state-6 to state-7 where it is -2. The discount factor is set to . The following figure shows the corresponding MDP for 7 state Boyan Chain.

Figure 5: 7 state Boyan Chain from (Boyan 1999) -

2.

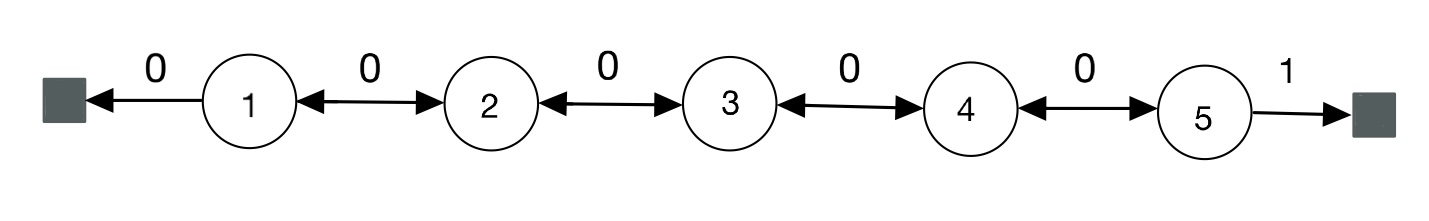

Example-2 (5-State Random Walk): It consists of a linear arrangement of 5 states with two terminal states. There is a single action at each state. From each state one either moves left or right with equal probability. Moving left from state 1 results in episode termination yielding a reward of 0. Similarly, moving right from state 5 also results in episode termination, however, yielding a reward of +1. The reward associated with all other transitions is 0 and the discount factor . The following figure shows the corresponding MDP.

Figure 6: 5-State Random Walk from (Sutton et al. 2009) -

3.

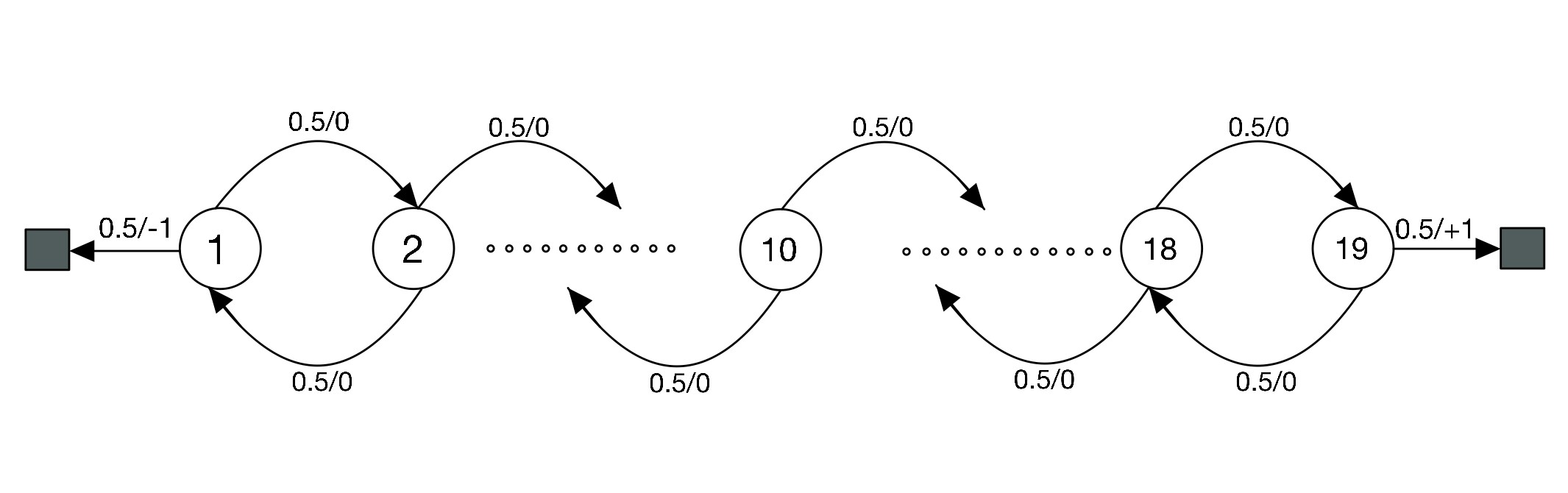

Example-3 (19-State Random Walk): It consists of a linear arrangement of 19 states. From each state one either moves left or right with equal probability. Moving left from state 1 results in episode termination yielding a reward of -1. Similarly, moving right from state 19 also results in episode termination, however, yielding a reward of +1. The reward associated with all other transitions is 0 and the discount factor . The following figure shows the corresponding MDP:

Figure 7: 19 State Random Walk from (Sutton and Barto 2018) -

4.

Example-4 (Random MDP): This is a randomly generated discrete MDP with 20 states and 5 actions in each state. The transition probabilities are uniformly generated from with a small additive constant. The rewards are also uniformly generated from . The policy and the start state distribution are also generated in a similar way and the discount factor . See (Dann, Neumann, and Peters 2014) for a more detailed description.