Graph-Embedded Multi-Agent Learning for Smart Reconfigurable THz MIMO-NOMA Networks

Abstract

With the accelerated development of immersive applications and the explosive increment of internet-of-things (IoT) terminals, 6G would introduce terahertz (THz) massive multiple-input multiple-output non-orthogonal multiple access (MIMO-NOMA) technologies to meet the ultra-high-speed data rate and massive connectivity requirements. Nevertheless, the unreliability of THz transmissions and the extreme heterogeneity of device requirements pose critical challenges for practical applications. To address these challenges, we propose a novel smart reconfigurable THz MIMO-NOMA framework, which can realize customizable and intelligent communications by flexibly and coordinately reconfiguring hybrid beams through the cooperation between access points (APs) and reconfigurable intelligent surfaces (RISs). The optimization problem is formulated as a decentralized partially-observable Markov decision process (Dec-POMDP) to maximize the network energy efficiency, while guaranteeing the diversified users’ performance, via a joint RIS element selection, coordinated discrete phase-shift control, and power allocation strategy. To solve the above non-convex, strongly coupled, and highly complex mixed integer nonlinear programming (MINLP) problem, we propose a novel multi-agent deep reinforcement learning (MADRL) algorithm, namely graph-embedded value-decomposition actor-critic (GE-VDAC), that embeds the interaction information of agents, and learns a locally optimal solution through a distributed policy. Numerical results demonstrate that the proposed algorithm achieves highly customized communications and outperforms traditional MADRL algorithms.

Index Terms:

Reconfigurable intelligent surface, THz, MIMO-NOMA, MADRL, distributed optimization.I Introduction

The upcoming 6G era confronts a variety of emerging applications, involving immersive applications such as ultra-high definition (UHD) video and virtual reality/augmented reality (VR/AR), as well as Internet of Things (IoT) applications like wearable devices and smart homes [1]. To meet the unprecedented challenges raised by ultra wide-band communications and massive IoT connectivity, terahertz (THz) massive multiple-input-multiple-output non-orthogonal multiple access (MIMO-NOMA) has become an essential technology for 6G, which can provide Gbps-order ultra-fast transmission speed and support millions of connections. Generally, THz MIMO-NOMA systems utilize the large-scale antenna array with hybrid beamforming structure [2], which can compensate the severe fading over high-frequency THz bands, and reduce the hardware complexity and power consumption. In addition, assisted with the MIMO-NOMA technology [3, 4], highly spatial-correlated users can be grouped into one cluster and supported by a single radio frequency (RF) chain, which can significantly improve spectral efficiency and connective density [5]. To employ the massive MIMO-NOMA technology for THz communications, the authors in [6] proposed an energy-efficient user clustering, hybrid precoding, and power optimization scheme, where the blockage probability and unreliability of line-of-sight (LoS) links have been ignored. Nevertheless, due to the high obscuration susceptibility, the application of THz MIMO-NOMA network may suffer from serious transmission unreliability and intermittency resulting from either wall blockage or human-body blockage effect [7], which may significantly degrade the experiences of unreliability-sensitive 6G immersive applications.

Fortunately, the newly-emerged reconfigurable intelligent surface (RIS) technology is regarded as a promising way to overcome the shortcomings in THz MIMO-NOMA communications [8, 9, 10]. Specifically, RISs can dynamically transform and reshape spatial beams, and thus construct virtual LoS links between transmitters and receivers to avoid blockage [11]. Meanwhile, a smart radio environment can also be created to achieve significant spectrum/energy efficiency improvement and flexible scheduling [12, 13]. Given the aforementioned benefits, increasingly research efforts have been devoted to the RIS-aided MIMO-NOMA networks operating in low frequencies. In [14], the authors proposed a joint passive and active beamforming method for RIS-aided multiple input single output (MISO)-NOMA network, which obtained a locally optimal solution based on a second-order cone programming (SOCP)-alternating direction method of multipliers (ADMM) algorithm. The authors in [15] further studied the joint passive and active beamforming in RIS-aided MISO-NOMA networks under both ideal and non-ideal RIS assumptions. Furthermore, the authors in [16] proposed joint deployment and beamforming frameworks for RIS-aided MIMO-NOMA networks based on deep reinforcement learning. However, the existing methods are inapplicable to 6G THz MIMO-NOMA communications: 1) The existing MIMO-NOMA mechanisms are incapable to deal with the extremely heterogenous quality-of-service (QoS) requirements of 6G users. 2) Compared with low-frequency MIMO communications, THz MIMO communications face a more prominent transmission unreliability problem. 3) Since THz MIMO-NOMA networks usually have high-dimensional spatial channel information, existing centralized and iterative optimization algorithms usually lead to unacceptable high complexity and information exchange overhead to schedule the complicated THz MIMO-NOMA scenarios.

Therefore, we aim to propose a novel smart reconfigurable MIMO-NOMA THz framework that can realize customizable and intelligent indoor communications with high energy efficiency and low complexity based on a machine learning mechanism in this work. Here, we consider two types of heterogeneous users, namely IoT users and super-fast-experience (SE) users. Specifically, SE users, like VR/AR and UHD video, require ultra-high-speed and reliable immersive communication experiences, while the densely connected IoT users, like smart cleaners and smart watches, tolerate sporadic and unreliability-tolerant traffic transmission. Different from the conventional systems, we introduce a low-complexity and decentralized learning-based framework that can jointly design the user clustering, NOMA decoding, and hybrid beam reconfiguration schemes in a cooperative setting with multiple APs and RISs: 1) By adaptively aligning users’ equivalent spatial channels as well as customizing NOMA decoding orders based on the QoS requirements, the intra-cluster interference suffered by SE users can be completely eliminated. 2) We adjust the highly-directional hybrid beams through the cooperation among APs and RISs, which can ensure tailored spatial data channels and mitigate both inter-cluster and inter-AP interference via active hybrid beamforming and coordinated passive beamforming. 3) To overcome the non-ideal discrete phase-shift control, we exploit a dynamic RIS element selection structure for the hybrid beam reconfiguration, which can flexibly refrain unfavorable and negative reflections via an element-wise ON/OFF control to enhance energy efficiency. Overall, the proposed framework can realize customizable hybrid spatial and power-domain multiplexing, as well as improving the multi-domain resource utilization.

Based on the above framework, we propose a long-term joint RIS element selection, coordinated discrete phase-shift control, and power allocation learning strategy. The objective function is formulated to maximize the system energy efficiency as well as satisfying users’ data rate and reliability, which is an NP-hard mixed-integer nonlinear programming (MINLP) problem. To efficiently solve the non-convex, strongly coupled, and highly complex MINLP problem online, we transfer it into a decentralized partially observable Markov decision process (Dec-POMDP). Thereafter, we introduce a novel cooperative multi-agent reinforcement learning (MADRL) method, namely graph-embedded value-decomposition actor-critic (GE-VDAC), to efficiently coordinate multi-AP and multi-RIS in a distributed manner. The proposed GE-VDAC algorithm can not only improve generalization ability of multi-agent learning, but also reduce information exchange overhead.

The main contributions of this work can be summarized as follows.

-

•

We propose a novel smart reconfigurable THz MIMO-NOMA framework, which can realize highly customizable and intelligent communications to support ultra-wide bands and ultra-dense connections. The hybrid spatial beams are smartly and cooperatively reconfigured through multi-AP and multi-RIS coordinations, where dynamic RIS element selection, on-demand data enhancement, flexible interference suppression, and efficient hybrid spatial and power-domain multiplexing are allowed.

-

•

The long-term joint RIS element selection, coordinated discrete phase-shift control, and power allocation optimization problem is formulated by a Dec-POMDP model. Under customized user clustering, NOMA decoding, and sub-connected hybrid beamforming schemes, the Dec-POMDP model can maximize the expected system energy efficiency while satisfy extremely heterogeneous data rate and reliability requirements for different users.

-

•

To efficiently solve the non-convex, strongly coupled, and highly complex MINLP problem online, we propose a novel distributed MADRL algorithm, namely GE-VDAC, which learns the decomposed local policies by embedding the agents’ interaction information into dimension-reduced and permutation-invariant features. We show that the proposed GE-VDAC can not only converge to a locally optimal solution, but also achieve a better coordination and generalization with low information exchange overhead.

-

•

We present numerical results to verify the effectiveness of the proposed strategy. The proposed GE-VDAC achieves higher system energy efficiency and faster learning speed than traditional MADRL algorithms. Moreover, both reliable and ultra-high-speed communications can be achieved by SE users despite the increment of connected users.

The rest of this paper is organized as follows. Section II describes the smart reconfigurable THz MIMO-NOMA network. Section III presents the formulated Dec-POMDP problem, and Section VI proposes the GE-VDAC based distributed MADRL algorithm. Numerical results are presented in Section V before the conclusion in Section VI.

Notation: We denote the imaginary unit by , and represent vectors and matrices by lower and upper boldface symbols, respectively. denotes the first partial derivative of function with respect to . represents the statistical expectation. and denote the absolute value and the Euclidean norm, respectively. Moreover, means the permutation operation. The main notations used throughout this paper is summarized in Table I.

| Notations | Definitions |

| , | number of antennas and RF chains |

| number of antennas in a subset | |

| analog and digital beamformers at AP | |

| , | number of SE and IoT users associated to each AP |

| () | user number (set) served by RF chain of AP |

| phase-shift matrix of RIS | |

| , | ON/OFF state and phase shift of element at RIS |

| , | power allocation of user and cluster |

| equivalent channel from AP to user | |

| direct channel from AP to user | |

| channel from RIS to user | |

| channel from AP to RIS | |

| , | traffic queue and virtual queue of user |

| , , | joint observation, state, and action vectors |

| vectorized node feature of the -th type agent | |

| vectorized feature of edge | |

| embedded feature of edge | |

| , | hidden and local embedded states of agent |

II Smart Reconfigurable THz MIMO-NOMA Framework

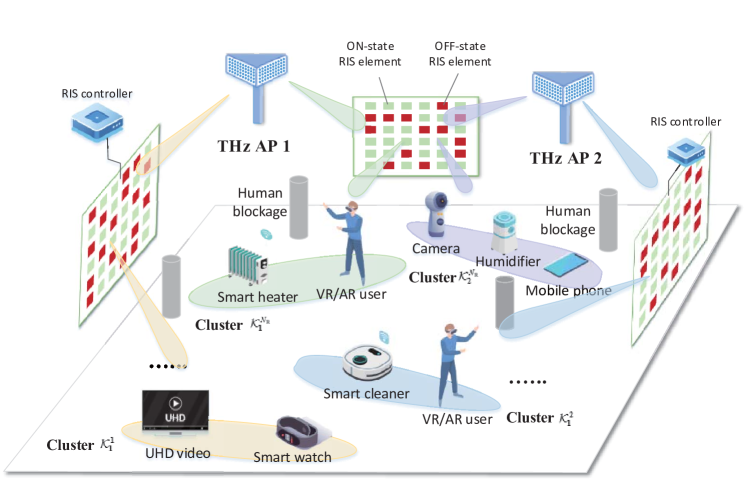

As shown in Fig. 1, we consider an indoor downlink massive MIMO-NOMA THz network that serves densely distributed SE users and IoT users under multiple pre-installed THz APs and RISs. Denote the set of APs and RISs as and , respectively. By coordinating and cooperating with neighboring APs and RISs, each AP serves a set of SE users and a set of IoT users, which have utterly diversified QoS requirements in terms of transmission data rate and reliability. For simplicity, we assume and . Here, we denote , , and . To reduce hardware complexity and energy dissipation, we assume each user equips with a single antenna and the APs apply the sub-connected hybrid beamforming structure [17]. Moreover, each AP is equipped with antennas and RF chains, where each RF chain connects to a subset of antennas via phase shifters with . Define the analog and digital beamforming matrixes at each AP as and . Denote the phase-shift matrix of each RIS by . Based on the MIMO-NOMA technology, the highly spatial-correlated SE and IoT users can be grouped into one cluster, which is served by a reconfigured beam transmitted from an AP antenna subarray that is connected with a RF chain. Define as user in cluster under AP , and be the set of users in cluster under AP . Furthermore, the whole system is divided into time slots, indexed by .

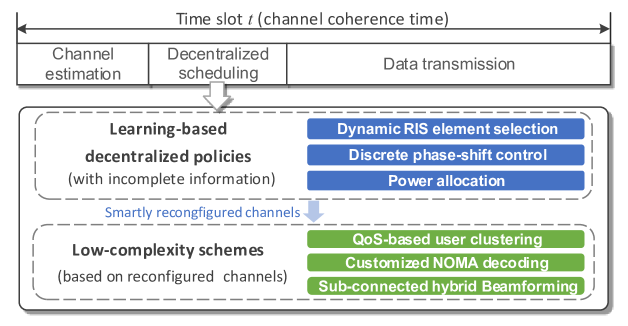

In general, we aim to propose a learning-based mechanism for the smart reconfigurable THz MIMO-NOMA network to jointly coordinate both multiple APs and RISs, which has the flow chart as shown in Fig. 2. Decentralized scheduling begins after channel estimation, which contains the process of learning-based decentralized policies and low-complexity schemes. In the learning-based decentralized policies, we can determine the dynamic RIS element selection, discrete phase-shift control, and power allocation with incomplete system information. Based on the smartly reconfigured channels, each AP can further obtain QoS-based user clustering, customized NOMA decoding order, and sub-connected hybrid beamformer using low-complexity schemes. Thereafter, data transmissions can be performed.

Without loss of generality, we assume each RIS equips with a low-cost RF chain that can estimate the CSI based on the semi-passive RIS channel estimation methods [18]. Specifically, at the beginning of each time slot, APs would send pilot signals to neighboring users to estimate the direct channels. Meanwhile, RISs would turn off their reflecting elements to sense the channels, where the indirect channels can be estimated by processing the received signals. Both direct and indirect channels can be estimated under compressed sensing or deep learning methods, which are out of the scope of this work. For the sake of expression, we ignore the time slot index from Section II-A to II-G.

II-A RIS Element Selection and Discrete Phase-Shift Control

We suppose that each RIS consists of reflecting elements, which is controlled by a software-defined RIS controller. Since the continuous phase control is hard to realize in practice, a finite-resolution passive phase shifter is utilized for each reflecting element. To save energy consumption as well as overcoming the non-ideal reflecting effect due to the discrete phase-shift control, we propose to leverage a dynamic RIS element selection structure during transmissions111Note that dynamic RIS element selection requires a complex design for the RIS array, which might be realized in the near future. In this work, we assume the reflecting elements in RISs can be dynamically turned ON/OFF by controlling the PIN diodes.. By flexibly controlling the ON/OFF states and the phase shifts of passive phase shifters, the dynamic selection structure can prevent unfavorable reflections and achieve higher energy efficiency.

Based on the dynamic selection structure, the phase-shift matrix on RIS can be given by

| (1) |

where denotes the ON/OFF state of the -th RIS element, given by

| (2) |

The discrete phase shift of a selected element at RIS is determined by an integer and the RIS resolution bit , which can be written as

| (3) |

II-B Channel Model

The equivalent channel vector from AP to user via multiple RISs is defined as , where is the equivalent channel from subarray at AP to user . Here, is given by

| (4) |

where , , and denote the direct spatial channels of AP -user , RIS -user , and AP -RIS links, respectively.

We assume LoS paths always exist between the APs and its neighboring RISs. However, the LoS paths for the AP-user and RIS-user links may be blocked. Therefore, channel can be modeled by

| (5) |

where is the antenna gain, is the path loss determined by the frequency and the distance between AP and user . indicates the existence of the LoS path, which is determined by the LoS probability based on the indoor THz blockage model [7]. Moreover, signifies the number of NLoS paths, and is the reflection coefficient for NLoS path [19]. denotes the angle of departure (AoD) for the downlink channel between AP and user . Given AoD , the array response vector can be denoted by . According to [20], the path loss includes both spreading loss and absorption loss, i.e.,

| (6) |

where is the frequency-dependent medium absorption coefficient, is the distance, and is the light speed.

II-C User Clustering

In traditional CSI-based massive MIMO-NOMA networks, the user clustering usually contains a cluster-head selection (CHS) procedure that chooses cluster head based on channel conditions, followed by which the remaining users are grouped with the highest channel-correlated cluster heads [21]. However, the CSI-based user clustering is inflexible to guarantee the ultra-high data rate and mitigate interference for SE users. To achieve customizable and intelligent communication experiences, we extend the traditional CSI-based user clustering to a QoS-based user clustering. Relying on the smartly reconfigured beams, users’ spatial channels can be flexibly aligned to achieve adaptive user clustering. Specifically, we group multiple IoT users with a single SE user. In this way, we can ensure multiplexing gain for SE users while enhancing IoT user connections. In light of this, we assume the number of RF chains activated at each AP is equal to its serving SE users, i.e., . Let represent the SE user, and , be the IoT user. The low-complexity user clustering scheme based on the reconfigured channels can be stated as follows.

The SE users are firstly selected as the cluster heads of different clusters. Then, we define the channel spatial correlation between an ungrouped IoT user and the cluster head as

| (7) |

Thereafter, multiple IoT users can be non-orthogonally grouped into the same cluster, where the cluster head achieves the strongest reconfigured channel correlations with them. The computational complexity of the QoS-based clustering scheme is . In comparison, the conventional CSI-based clustering scheme has the computational complexity of [21].

II-D Customized NOMA Decoding

Relying on the adaptively aligned channels through RISs, the NOMA user decoding order in each cluster can be flexibly customized based on QoS requirements without degrading the system performance. Exploiting the NOMA successive interference cancellation (SIC), SE users can subtract signals of other users within the same cluster, and completely eliminate intra-cluster interference based on power-domain multiplexing to realize ultra-high-speed and reliability-guaranteed communications. To realize this goal, each SE user with the highest QoS requirements in its cluster would be decoded at last, while the data signals of IoT users in each cluster would be decoded first.

To ensure the SIC at each IoT users in the same cluster can be successfully carried out, the NOMA decoding order of IoT users are rearranged according to equivalent channel gains as . Thereafter, the SE user would sequentially decode the signals of the former IoT users to completely cancel intra-cluster interference. To successfully cancel interference from IoT user , imposed to SE user , we have the following SIC constraint

| (8) |

where is the signal-to-interference-and-noise ratio (SINR) of IoT user for decoding its signal, and is the SINR to decode the signal of IoT user at SE user . We denote if the SIC constraint (8) can be satisfied, i.e., the SE user can cancel the intra-cluster interference from IoT user . Otherwise , and the intra-cluster interference from IoT user would be taken as noise at the SE user. In this way, can be expressed as

| (9) |

where is the power spectral density of additional white Gaussian noise (AWGN), is the power allocation coefficient for user , and is the power consumption of RF chain at AP .

II-E Sub-Connected Hybrid Beamforming

We design the analog beamforming at each AP to increase effective data gain of each cluster, and design the digital beamforming for suppressing residual inter-cluster interference that is not mitigated by RISs based on zero-forcing (ZF).

The analog beamforming matrix with the sub-connected structure can be formulated as

| (10) |

where for , and has an amplitude . The sub-connected analog beamforming is designed to improve the effective gains toward cluster heads that are highly spatial-correlated to the cluster members. Considering -bits quantized phase shifters in each antenna subarray, the -th element of the active analog beamforming vector is given by [6, 17]

| (11) |

where is the quantized phase calculated by

| (12) |

To further suppress the effective inter-cluster interference that hasn’t been canceled by RISs, we design the ZF digital beamforming as follows. Let denote the digital beamforming vector for cluster at AP . The cluster center is given as . Denote . Therefore, the ZF digital beamforming is calculated as

| (13) |

By introducing column power normalizing, the baseband precoding matrix can be expressed as

| (14) |

II-F Transmission Rate

Define as the transmitted symbols with normalized power, and as the transmitted signal in cluster . Define as the AWGN. The baseband signal received by each SE user after SIC can be formulated as

| (15) |

Therefore, the SINR of each SE user can be written as

| (16) |

Moreover, the SINR of IoT users , can be given by

| (17) |

Hence, the data rate of each user at each time slot can be expressed as

| (18) |

II-G Power Consumption

The total power consumption for the proposed networks at each time slot includes both transmit and circuit power, which can be formulated as

| (19) |

where denotes the inefficiency of the phase shifter in the THz network, is the circuit power consumption at each user, and denotes hardware energy dissipated at each RIS element depending on the RIS resolution bit [23]. Moreover, is the circuit power consumption at each AP, which is given by

| (20) |

where is the baseband power consumption, and , and denote power consumption of per RF chain, per phase shifters and per power amplifies, respectively.

Therefore, the network energy efficiency at each time slot can be formulated as

| (21) |

II-H Reliability Model

Suppose the THz MIMO-NOMA networks maintain a traffic buffer queue for each user, , which aims to transmit specific data volume in a predefined time. At the beginning of each time slot , the queue length of user can be updated by

| (22) |

where is the transmission data rate of (18), denotes the traffic arrival column at time slot following the poisson distribution, and .

To ensure reliable transmission, we should guarantee the queue stability and keep the outages below a predefined threshold [22]. The queue stability is ensured by

| (23) |

Moreover, the outage probability, i.e., the probability that the traffic queue length of device exceeds the threshold , is limited by a certain threshold . Therefore, the reliability conditions can be formulated as

| (24) |

III Lyapunov Optimization Based Dec-POMDP Problem

In this Section, we first formulate the constrained Dec-POMDP problem, and then transfer the constrained Dec-POMDP problem into a normal Dec-POMDP problem based on the Lyapunov optimization theory.

III-A Constrained Dec-POMDP Model

We aim to dynamically find a stationary online policy , which can jointly optimize the dynamic RIS element selection, coordinated discrete phase-shift control, and transmit power allocation by observing the environment state at each time slot . Under the given user clustering, NOMA decoding, and hybrid beamforming schemes, the joint policy dynamically maximizes the expected system energy efficiency for the smart reconfigurable THz MIMO-NOMA network, while satisfying various QoS requirements in terms of data rate and transmission reliability of users. Therefore, the online objective function can be formulated as follows

| (25a) | ||||

| (25b) | ||||

| (25c) | ||||

| (25d) | ||||

| (25e) | ||||

| (25f) | ||||

where . Constraint (25b) ensures the minimum data rate of each user, (25c) denotes the total transmit power should be less than the maximum power , (25d) signifies the binary ON/OFF state of RIS elements, and constraint (25e) represents the discrete phase-shift control. Note that the SIC constraint (8) is ignored since it has been implied in the SINR expression (16) via the indicator .

This online policy can be modeled as a constrained Markov Decision Process (MDP). However, solving in a centralized way is computationally inefficient due to the large-scale joint state-action space and the heavy overhead of high-dimensional information exchange from multiple APs and RISs to the centralized controller. To tackle in an efficient and low-complexity manner, we model the long-term energy efficiency optimization problem as a constrained Dec-POMDP. In detail, the POMDP provides a generalized framework to describe an MDP with incomplete information, while Dec-POMDP extends POMDP to the decentralized settings as . Here, denotes a set of agents. , , and denote the state, observation, and action spaces. At each slot , given the true state , each agent partially observes a local observation, based on which it can determine a local action using decentralized policy . The joint observation and action vectors of all agents are denoted by and , and the decentralized policy means the probability of taking joint action at the joint state . Furthermore, is the probability of transferring into a new state by taking action at state . Given the global reward function and the discount factor , the agents can cooperate and coordinate to maximize the discounted return. In this work, we model APs and RISs as two types of agents, whose set is denoted as . Let denote the set of the -th type agents, where represent APs and RISs respectively. We specify the formulated Dec-PoMDP as follows.

III-A1 Observation

The joint observation can be expressed as , where is the local observation of agent that involves part of the environment information, given by

| (26) |

| (27) |

where denotes the local action-observation history of agent , and and denotes the neighboring agent sets of RIS and AP , respectively. Furthermore, we define and as the vectorized channels. Note that in our settings, the joint observation also represents the true environment state, i.e., .

III-A2 Action

In this work, we consider a long-term joint RIS element selection, coordinated discrete phase-shift control, and power allocation optimization problem. Therefore, we define the joint action vector as , where is the local action of agent that can be expressed as

| (28) |

Here, is the downlink power allocation vector of AP agent , and and denote the dynamic reflecting element selection and the discrete phase-shift control of RIS agent , respectively.

III-A3 Decentralized Policy

The joint policy is decomposed into multiple decentralized and parallel local policies for each agent . At each time slot , all the agents take local actions based on the observed local observations to cooperatively maximize the global reward.

III-A4 Global Reward

Let be the data rate constraint violation. Then, the global reward can be defined as , which maximizes the network energy efficiency as well as satisfying data rate requirements. Here, , and is a non-negative parameter that imposes a penalty for data rate violation. However, due to the intractable long-term average queue stability and reliability constraints, it is infeasible to directly use the general MADRL methods to solve the constrained Dec-POMDP problem [24, 25]. Hence, we recast the constrained Dec-POMDP into a standard Dec-POMDP based on the Lyapunov optimization theory [26].

III-B Equivalently Transferred Dec-POMDP

Based on the Markov’s inequality [27], we can obtain the upper bound of the outage probability . Therefore, the reliability constraint (24) can be strictly guaranteed by ensuring

| (29) |

To tackle the long-term average constraints (23) and (29), we apply the virtual queue technique and Lyapunov optimization theory [26] to recast the intractable constrained Dec-POMDP as a normal Dec-POMDP, which can be solved by general MADRL algorithms. To meet the reliability constraints (29), we construct a virtual queue to model the instantaneous deviation of queue length constraints for each user . Here, evolves as follows

| (30) |

where we initialize at , .

Let , . Define as the combined queue vector. Then, we introduce the Lyapunov function to measure the satisfaction status of the reliability constraint, which should be maintained under a low value. Moreover, the one-step Lyapunov drift is defined as , which can be upper bounded by the following Lemma.

Lemma 1.

Define , then can be upper bounded by

| (31) |

where is a constant, with and . Moreover, and are fixed values at each time slot.

Proof.

See Appendix A. ∎

Based on the Lyapunov optimization, the original constrained Dec-POMDP of maximizing the system energy efficiency while ensuring the long-term reliability constraints can be transformed into minimising the following drift-minus-bonus function

| (32) |

where denotes the positive coefficient that controls the tradeoff between energy efficiency and transmission reliability, and the inequality is implied by Lemma 1 and . By ignoring the independent terms from control variables, can be rearranged as

| (33a) | ||||

| (33b) | ||||

IV GE-VDAC Algorithm for Distributed RIS Configuration and Power Allocation

IV-A Review of MADRL Methods

The standard Dec-POMDP problem can be solved based on general cooperative MADRL algorithms [28],[29]. By exploiting the remarkable representation ability of deep neural network (DNN) for approximating arbitrary non-convex functions, MADRL algorithms can learn the mapping from agents’ observations to actions through exploitation and exploration. However, MADRL algorithms commonly suffer partial observability, environment instability, and credit assignment problems [28]. To cope with environment instability, the multi-actor critic framework based on centralized-training and distributed-execution (CTDE) design is proposed [29], which learns distributed policies with a centralized critics. However, since it utilizes a unified critic value to compute local policy gradients, agents cannot fairly learn to contribute for global optimization, leading to the so-called multiagent credit assignment problem [28], [30], also referred to as indolent agent. Therefore, the idea of different rewards has been introduced, which computes the individual reward for each agent to evaluate their contributions and motivate coordinations. QMIX [31] is the widely utilized mechanism that decomposes the joint state-action value into monotonic individual state-action functions for different agents. To overcome the monotonicity limitation of QMIX, QTRAN with higher generalization of value factorization can be leveraged [32]. Nevertheless, both QMIX and QTRAN as extensions of the deep Q-learning are mainly used to deal with the problems with discrete action spaces. In [33], value-decomposition actor-critic (VDAC) is introduced to incorporate the value decomposition into the advantage actor-critic (A2C) framework, which has continuous action space and higher sample efficiency.

In our proposed framework, it’s critical to facilitate coordination among multiple APs and RISs to mitigate interference, improve system energy efficiency, and guarantee diversified QoS requirements. Under the realistic partially observable environment, although the joint design of RIS configuration and power allocation has been decomposed into distributed local policies, information still need to be exchanged among neighboring agents to achieve fully coordination. However, considering the high-dimensional CSI information resulting from the massive MIMO structure, directly exchanging information among interacting neighboring agents during each execution can cause high communication overhead and latency. Therefore, the existing MADRL algorithms are still inefficient to solve the highly coupled Dec-POMDP problem. In this section, we propose GE-VDAC, a novel MADRL algorithm that can tackle the multiagent credit assignment as well as the above problems.

IV-B The Proposed GE-VDAC Framework

GE-VDAC extends the commonly-used CTDE design in the existing MADRL algorithms, which realizes more efficient cooperative learning by integrating two techniques, i.e., graph embedding and different rewards. The interplay among interacting agents are modeled as a directed communication graph. Instead of directly exchanging high-dimensional information, the neighboring agents exchange low-dimensional embedded features learned by graph embedding, thus requiring fewer information exchange to achieve efficient coordination under partially observable environment. Moreover, the graph-embedded features possess permutation-invariant property. By learning distributed DRL policies over the graph-embedded observation space that has reduced dimension and enjoys permutation-invariant property, the learning speed and the generalization ability can be improved.

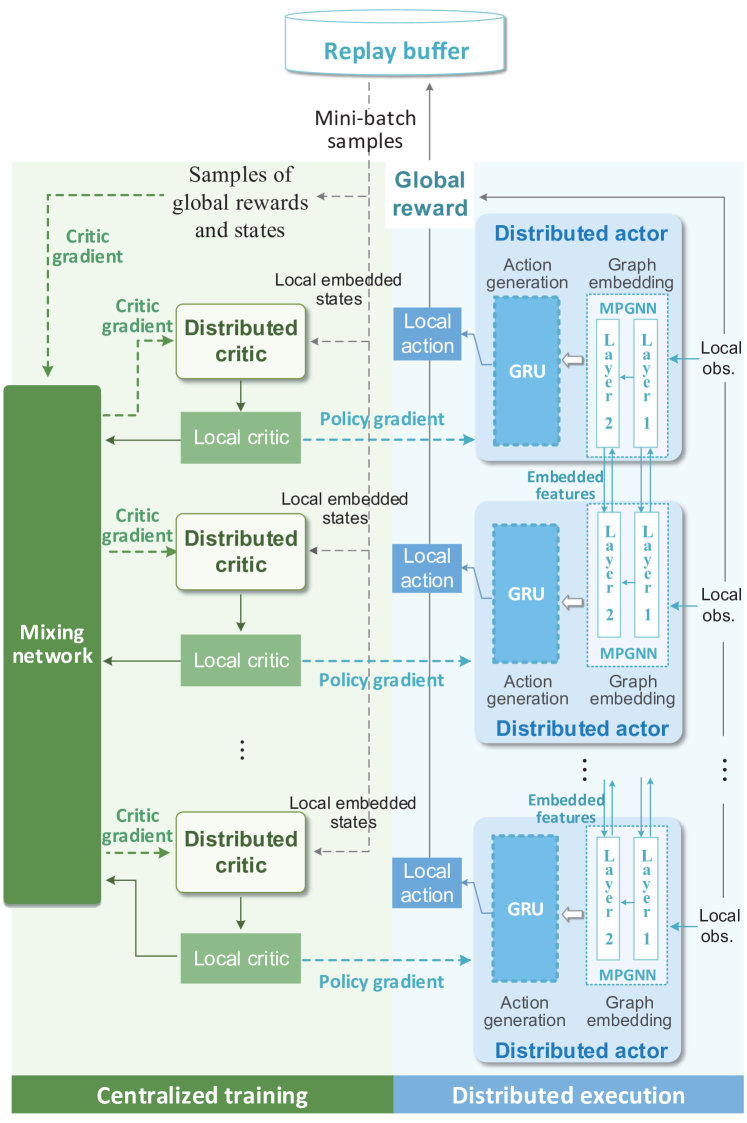

As shown in Fig. 3, the GE-VDAC jointly trains three neural networks, i.e., the distributed graph-embedded actors, the distributed critics, and the centralized mixing network. For simplicity, we assume the agents belonging to the same type share the neural network parameters of both the distributed actor and critic . The distributed graph-embedded actor contains a semi-distributed graph embedding module learning graph-embedded features for efficient information exchange, in conjunction with a fully-distributed action generation module that predicts local action based on the local embedded state attained by the graph embedding module. The graph embedding module is implemented as message passing graph neural network (MPGNN) [34],[35]. Moreover, the action generation module mainly comprises a gated recurrent unit (GRU) [36], which is a simplified variant of long-short term memory (LSTM) that can achieve comparative performance [37]. Two fully-connected (FC) layers are connected with the GRU before and after, respectively. On the other hand, the distributed critic evaluates an individual value of the local embedded state, which achieves differential reward to judge each agent’s contribution for global optimization. Meanwhile, a global mixing network is trained to combine the distributed critics, which guarantees that equivalent policy improvement can be achieved based on the value decomposition.

IV-C Graph-Embedded Actor

IV-C1 Graph Modeling

To characterize the interplay among neighboring agents, we model the THz reconfigurable massive MIMO-NOMA networks as a directed communication graph , where agents are modeled as two types of heterogeneous nodes , and interplay among agents are modeled as edges . maps a node to its -dimensional node feature . Denote tuple as a directed edge from the source node to the destination node . maps an edge to the -dimensional edge feature . Both and are extracted from the local observation of node .

The node feature of a AP node contains the spatial channel information from AP to its associated users, the queue information of its associated users, and the local action-observation history of AP , defined as

| (36) |

Moreover, the node feature of a RIS node includes the local action-observation history, i.e., .

The edge feature depicts the interplay effect of agent to , which can be mathematically denoted as

| (37) |

Specifically, for an AP-RIS link, the edge feature is the spatial channels from AP to the users served by neighboring APs of RIS . For an AP-AP link, the edge feature denotes the channel from AP to the users associated with AP . For a RIS-AP link, the edge feature includes the channels between RIS and both AP and its associated users.

To learn the structured representation of the THz reconfigurable massive MIMO-NOMA networks, we integrate MPGNN [35] into the distributed actor/critic networks for embedding the graph. Therefore, node/edge features of the directed graph over high-dimensional joint state space can be embedded into permutation-invariant features over low-dimensional space. By exchanging the learned low-dimensional and permutation-invariant embedded features among neighboring agents, it’s capable to improve generalization and enhance coordination among APs and RISs, while only requiring low information exchange overhead.

IV-C2 Graph-Embedded Actor

The graph-embedded actor (policy) comprises a semi-distributed graph embedding module which learns the embedding of the graph, followed by a distributed action generation module that outputs action by taking the local embedded sate as inputs. Here, we define as the graph embedding sub-policy, and define , , as the action generation sub-policies, which are parameterized as MPGNNs and GRUs, respectively. Let and denote the MPGNN and GRU weight parameters shared among agents , respectively.

We maintain a MPGNN at each distributed node . Similar to the multi-layer perceptron (MLP), MPGNN exploits a layer-wise structure. In each MPGNN layer, each agent first transmits embedded information to its neighbor agents, and then aggregates embedded information from neighbor agents and updates its local hidden state.

Define the edges and as the outbound and inbound edges of node . Let and denote the sets of neighbor agents that are linked with agent through outbound and inbound edges of agent , respectively. In layer , each agent locally embeds its node feature and the outbound edge feature as

| (38) |

where denotes the hidden state of MPGNN layer at agent , and is the distributed embedding function. Thereafter, each agent transmits the outbound embedded feature to its neighbor agents , and receives the inbound embedded feature from . Thereafter, the received embedded features are aggregated with a aggregation function , which is a permutation-invariant function such as , and . By combining the local hidden state and the aggregated features using the combining function , agent obtains the hidden state of layer as

| (39) |

Combining (38) and (39), the update rule of the -th MPGNN layer at node can be rewritten as

| (40) |

where is the hidden/output state at -th layer for agent , and .

After the graph embedding module, agent would predict local action utilizing GRU based on the output local embedded state , which is given by

| (41) |

The local action for each agent is sampled from the action generation sub-policy .

The coordinated scheduling for APs and RISs based on the distributed graph-embedded actors can be summarized as Algorithm 1. At each time slot , APs and RISs exchange embedded features and obtain their local embedded state through MPGNN. Thereafter, each agent samples its local action based on the action generation sub-policy of the local actor. By observing the reconfigured channels, each AP further decides the user clustering, hybrid beamforming and IoT user decoding order. In this way, the customized user clustering, NOMA decoding and hybrid beamforming schemes are considered as part of the environment feedback, which would be implicitly learned by the distributed actors through exploration and exploitation. The experiences are then joined into the replay memory buffer for centralized learning.

IV-C3 Policy improvement

Denote the combined parameters of graph embedding module and action generation module in the distributed actor (policy) as . Here, the distributed actors are trained to maximize the following performance function:

| (42) |

where is the joint state transition by following the joint policy . Therefore, we calculate policy gradient based on the advantage function, which is given by

| (43) |

where is the actual input of the graph-embedded actor. Moreover, denotes the temporal difference (TD) advantage, which is given by

| (44) |

Here, and denote the global state value and the global state-action value, respectively.

IV-D Value Decomposition

To address credit assignment problem during training, we rely on the value-decomposition critics [33] to train the distributed actors, which decomposes the global state value into local critics that are combined with a mixing function as

| (45) |

where is the local state value for agent .

Now, we are ready to introduce the following Lemma.

Lemma 2.

Any action that can improve agent ’s local critic value at local embedded state will also improve the global state value , if the other agents stay at the same local embedded states taking actions , and the following relationship holds

| (46) |

Proof.

Based on (46), the global reward monotonically increase with when the other agents stay at the same local embedded states taking actions . Therefore, if a local action is capable to improve , obviously it can also improve . ∎

Remark 1.

Based on Lemma 2, it’s rational to approximately decompose the high-complexity global critic into distributed critics combined by a mixing network with non-negative network weight parameters, thus reducing complexity and achieving difference rewards.

During centralized training, each agent attains a differential reward based on the local graph-embedded features to evaluate its contribution for global reward improvement, which can further facilitate agents’ coordination. The weights of mixing network are generated through separate hypernetworks [38]. Each hypernetwork takes the joint state and state-value as input to compute the weights of one layer of the mixing network [31]. To guarantee that the output weights are non-negative, the absolute activation function is exploited at the hypernetworks.

Define as the weight parameters of distributed critic that are shared among agents , and denote as the weights of the mixing network . The distributed critic and mixing network are optimized by mini-batch gradient descent to minimize the following critic loss:

| (47) |

where is the -step return bootstrapped from the last state, with upper-bounded by . Therefore, the mixing networks can be updated by

| (48) |

where is the learning rate for mixing network update.

To reduce complexity, we further share the weight parameters of non-output layers between distributed critics and actors for agents of the same type, similar to [33]. Denote the combined weight parameters of the distributed actors and critics networks as . Therefore, the critic gradient with respect to can be given by

| (49) |

Hence, the update rule of the distributed actor/critic networks can be derived as

| (50) |

where and are the learning rate for policy improvement and critic learning, respectively.

The whole GE-VDAC based MADRL algorithm is summarized in Algorithm 2.

IV-E Theoretical Analysis of GE-VDAC

IV-E1 Permutation invariance

Firstly, we show that GE-VDAC has permutation-invariant property, which can lead to better generalization. Consider a graph , whose node and edge features are denoted by and . Let denote the permutation operator. Let and denote the permutation of nodes (agents) and graph features, respectively. Given two graphs and , if their exists a permutation satisfying , then and are isomorphic, denoted as .

Definition 1.

Given two isomorphic communication graphs with permutation , the distributed actors/critics are permutation invariant if the output joint action vector and joint critic vector satisfy

| (51) |

Traditional centralized/multi-agent actor-critic algorithms usually exchange information directly and utilize neural networks such as MLP, convolutional neural network (CNN) and recurrent neural network (RNN) to learn actor and critic, which cannot achieve the permutation invariance property. Despite a sample and all of its agents’ permutations actually represent the same environments, they would be viewed as utterly different samples. Since traditional algorithms cannot adapt to agents’ permutations intelligently, all permutations of one sample should be fed to train actors and critics, leading to poor generalization and learning speed. However, by extending the traditional DRL algorithms, the graph embedding based distributed actors and critics in GE-VDAC enjoy the permutation invariance property, as shown below.

Proposition 1.

If the aggregation functions , utilized in (39) and (40) are permutation invariant, the distributed actors/critics based on GE-VDAC satisfy permutation invariance.

Proof.

Considering the update rule of hidden states in (40), if aggregation functions , are permutation invariant, the graph embedding features obtained by MPGNN , enjoy permutation invariant property [35], i.e., . Given agents’ permutation , we have

| (52) |

Therefore, we can obtain , which implies the permutation equivalence of the local embedded states. Considering the definition of the local critics, we can achieve

| (53) |

Moreover, since the local actions are sampled from and , we have

| (54) |

which signifies . This ends the proof. ∎

Based on Proposition 1, the learned distributed actors/critics in GE-VDAC are insusceptible to the agents’ permutations. Therefore, GE-VDAC can improve the generalization and the learning speed in multi-agent cooperative learning.

IV-E2 Convergence Guarantee

We also demonstrate that the proposed GE-VDAC algorithm can achieve the locally optimal policy. The convergence of the GE-VDAC algorithm can be theoretically guaranteed with the following theorem.

Theorem 1.

The proposed algorithm can converge to a locally optimal policy for the Dec-POMDP, i.e.,

| (55) |

Proof.

See Appendix B. ∎

IV-E3 Complexity Analysis

The computational complexity of the proposed GE-VDAC algorithm mainly comes from MPGNNs and GRUs. For each MPGNN, the local embedding function and the combining function are parameterized as two-layer MLPs with and neurons in each MLP layer, respectively. Thus, the computational complexity of local embedding, aggregation, and combination at agent in MPGNN layer can be respectively written as , , and , where the outdegree/indegree indicates the number of outbound/inbound edges of agent , is the size of the local embedding , and represents the size of the output vector at layer that is initialized as . Since all the agents can be calculated in parallel, the computational complexity of the whole MPGNN layers is , where implies the maximal complexity of agents in MPGNN layer , i.e.,

| (56) |

On the other hand, since the complexity of GRU per weight and time step is , its computational complexity depends on the number of GRU weight parameters [39]. For agent , the total parameter number of one GRU cell is , where denotes the size of the GRU input defined in (41), and is the number of neurons for each gate in the GRU cell. Based on the above analyses, the computational complexity of the proposed GE-VDAC algorithm can be obtained by

| (57) |

In comparison, the computational complexity of the traditional VDAC algorithm [33] (without information interaction) can be obtained by modifying the GRU input size and ignoring the MPGNN part in the GE-VDAC algorithm as , where is the maximal size of the GRU input at all agents. On the other hand, an advanced VDAC algorithm with direct information exchange among agents, denoted as IE-VDAC, has the complexity of , where .

V Numerical Results

In this section, we present numerical results to demonstrate the effectiveness of the proposed smart reconfigurable massive MIMO-NOMA networks. We consider a network with APs uniformly deployed on the ceiling of an m2 indoor room. Furthermore, we respectively consider RISs mounted on the walls. When , RIS is deployed in the middle of one wall. When , RISs are symmetrically and uniformly spaced on walls, respectively. Each AP equips RF chains to serve SE users and IoT users. For simplicity, we assume that the numbers of users associated with each AP are the same. The minimum rate requirements for SE users and IoT users are Gbit/s and Gbit/s, respectively. We further assume both SE and IoT users arrive the network under the Poisson distribution with the density of Gbit/s and Gbit/s, respectively. Moreover, the maximum queue lengths for SE users and IoT users are set as Gbit/s and Gbit/s, and the violation probability is limited by . On the other hand, each RIS equips with cost-effective RIS elements with quantization bit . The LoS probabilities between AP-device and RIS-device links are calculated according to the human blockage model in [7]. Moreover, the reflection coefficient of NLoS path is given by [19], where denotes the Fresnel reflection coefficient, and is the Rayleigh roughness factor. represents the angle of incidence and reflection, means the refractive index, and e-3 denotes the standard deviation of the surface characterizing the material roughness. Furthermore, the AWGN power spectral density is dBm/Hz. The simulation parameters and the neural network (NN) settings are summarized in Table II. For GE-VDAC, we empirically learn MPGNN layers with local embedding size and output size .

| Parameters | Values | NN parameters | Values |

| Frequency | = THz | GNN layers | |

| Absorption coeff. | Local embedding | ||

| Bandwidth | GHz | MLP neurons | |

| Antenna gain | dBi | Combining function | |

| Time slots | MPGNN outputs | ||

| Inefficiency | 1st FC layer | ||

| Maximum power | W | GRU neurons | |

| Baseband power | W | Last FC layer | |

| RF chain power | W | Learning rates ( , and ) | |

| Phase shifter power | W | ||

| Amplifier power | W | ||

| RIS element power | W |

We compare the proposed GE-VDAC algorithm with the following three baselines:

-

•

Central critic: the multi-agent centralized-critic distributed-actor algorithm, which extends the traditional multi-agent actor-critic algorithm [29] to the hybrid discrete and continuous action space.

-

•

VDAC: the fully distributed VDAC algorithm [33] without information exchange among agents.

-

•

IE-VDAC: the VDAC algorithm with direct Information Exchange among neighboring agents.

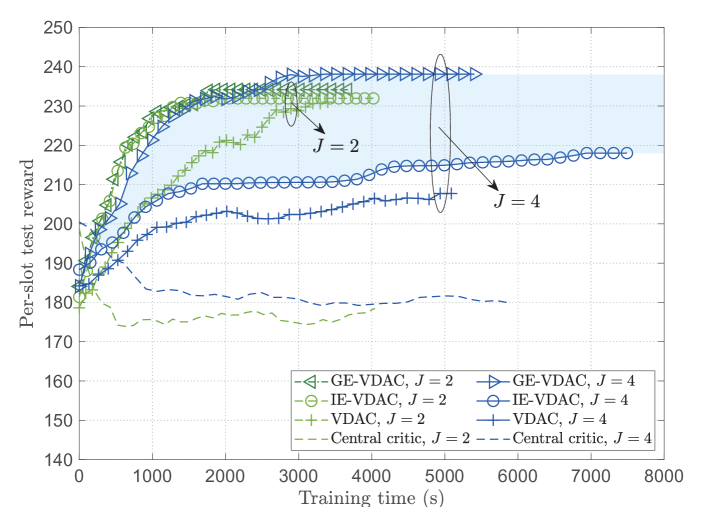

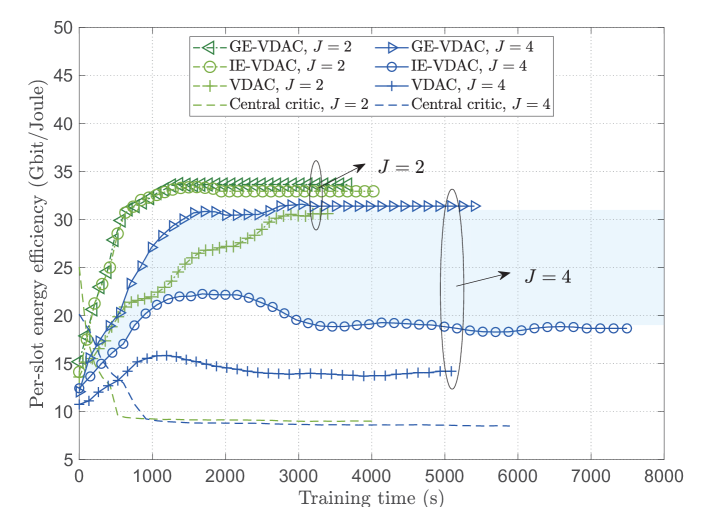

The convergence performance comparisons among different algorithms are shown in Fig. 4, where all of the agents are trained for time steps, and we set , , . We can see that the value-decomposition based algorithms (VDAC, IE-VDAC, GE-VDAC) outperform central critic algorithm, since the coordination among agents is enhanced through difference rewards. The fully distributed VDAC algorithm requires no information exchange overhead and takes the least training time, but has lower learning speed and expected reward than both IE-VDAC and GE-VDAC. With less information exchange overhead than IE-VDAC, GE-VDAC can achieve the highest expected reward and learning speed, and the performance gap increases with , demonstrating its ability to improve generalization and enhance agents’ coordination. Moreover, since GE-VDAC utilizes the dimension-reduced embedded features, it has lower complexity than IE-VDAC and takes less training time, and the time gap is larger as increases.

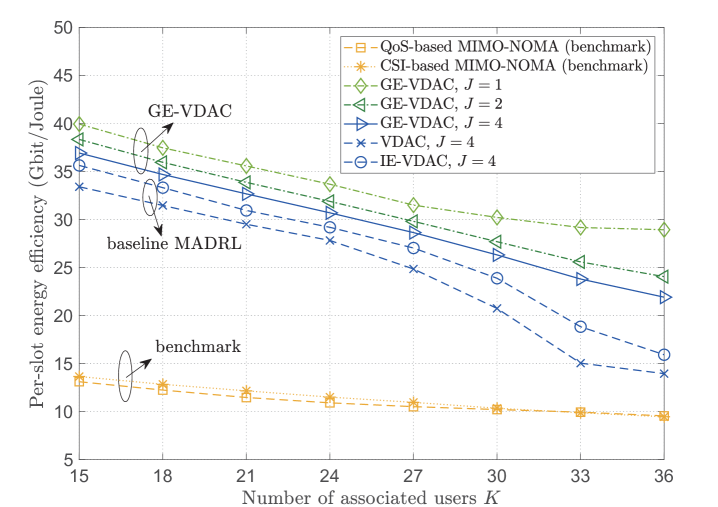

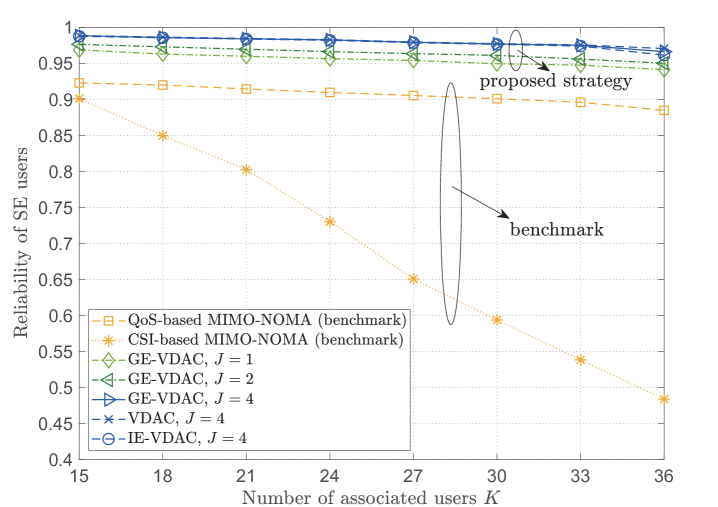

In Fig. 5, we show the energy efficiency and reliability performance of the smart reconfigurable THz MIMO-NOMA networks under different associated user numbers . Here, we further consider conventional THz massive MIMO-NOMA without the aid of RISs as benchmarks, which utilizes equal power allocation under channel-based/QoS-based user clustering and NOMA decoding. We can see that the CSI-based MIMO-NOMA scheme has the lowest reliability of SE users, and the performance gap dramatically grows as the associated users increase. Without the aid of RISs, the QoS-based MIMO-NOMA scheme can provide higher reliability than CSI-based MIMO-NOMA scheme, but leads to the lowest energy efficiency. However, the proposed algorithms can achieve the highest global energy efficiency, while guaranteeing the reliability of SE users. Even when the number of IoT users increases, the reliability of SE users can be guaranteed to be larger than , which verifies that the proposed reconfigurable THz MIMO-NOMA can provide on-demand QoS for heterogeneous users with efficient spectral utilization.

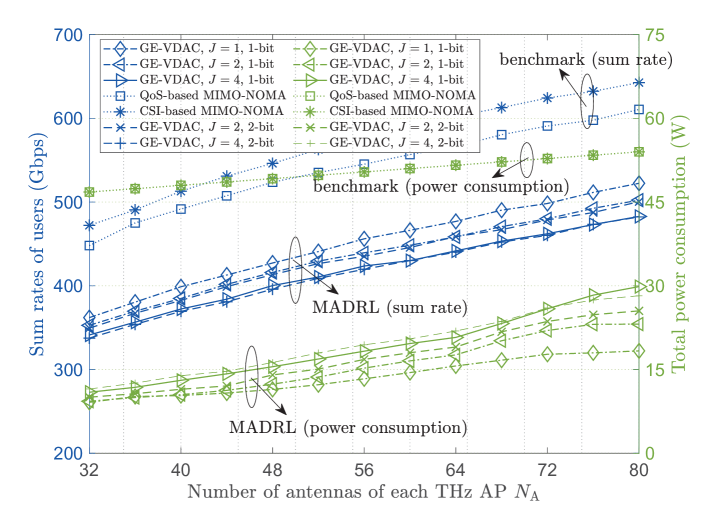

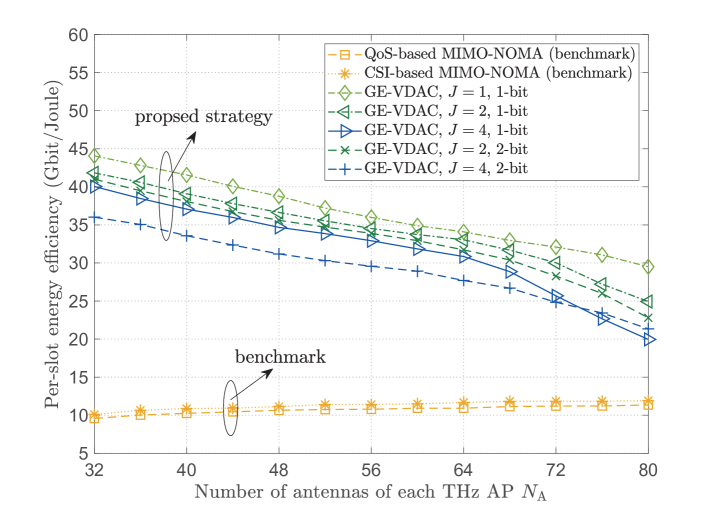

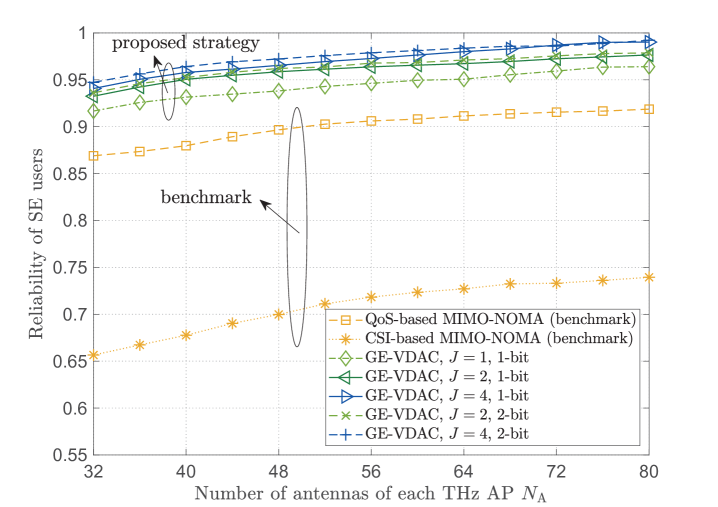

Fig. 6 and Fig. 7 present the performance of the proposed networks under different number of RISs and RIS quantization bit . As shown in Fig. 6, when and increase, the power consumption increases due to the higher hardware power dissipation. Meanwhile, the sum rate of users increases with but decreases with , since the spatial stream interferences become more severe as growing. In Fig. 7(a), the energy efficiency declines with and based on the proposed algorithms due to the higher circuit power dissipation. Compared with -bit RISs, -bit RISs result in higher power dissipation to achieve competitive data rate. Nevertheless, -bit RISs can achieve higher energy efficiency when and the number of antennas is large, since they have higher flexibility to suppress the severe spatial interference. Furthermore, Fig. 7(b) shows the reliability of SE users increases with , , and , which demonstrates the effectiveness of massive MIMO structure and the cooperative RISs to avoid blockage.

VI Conclusion

In this work, we propose a novel smart reconfigurable THz MIMO-NOMA framework, which empowers customizable and intelligent indoor communications. We first propose specific schemes of customized user clustering, NOMA decoding, and hybrid beamforming. Thereafter, we formulate a long-term Dec-POMDP problem under a joint dynamic RIS element selection, coordinated discrete phase-shift control, and power allocation strategy, which maximizes the network energy efficiency while ensuring heterogenous data rate and reliability for on-demanded users. To efficiently solve the intractable highly complex MINLP problem, we further develop a decentralized GE-VDAC based MADRL algorithm. We theoretically demonstrate that the GE-VDAC algorithm achieves locally optimal convergence and better generalization. Numerical results show that the proposed algorithm can achieve higher energy efficiency and faster learning speed compared to traditional MADRL methods. Furthermore, we can also obtain highly customized communications through efficient resource utilization.

Appendix A Proof of Lemma 1

Considering the definition of and , we have

| (58) |

Appendix B Proof of the Theorem 1

For the sake of expression, we ignore the time slot index in the following proof. The joint policy can be written as the product of the graph embedding sub-policy and the independent action generation sub-policies:

| (61) |

Therefore, the policy gradient in (43) can be rewritten as

| (62) |

where is the joint policy parameter vector.

References

- [1] M. Giordani, M. Polese, M. Mezzavilla, S. Rangan, and M. Zorzi, “Toward 6G networks: use cases and technologies,” IEEE Commun. Mag., vol. 58, no. 3, pp. 55-61, Mar. 2020.

- [2] L. Yan, C. Han, and J. Yuan, “A dynamic array-of-subarrays architecture and hybrid precoding algorithms for terahertz wireless communications,” IEEE J. Sel. Areas Commun., vol. 38, no. 9, pp. 2041-2056, Sept. 2020.

- [3] Y. Liu, Z. Qin, M. Elkashlan, Z. Ding, A. Nallanathan, and L. Hanzo, “Nonorthogonal multiple access for 5G and beyond,” Proc. IEEE, vol. 105, no. 12, pp. 2347-2381, Dec. 2017.

- [4] Y. Liu, H. Xing, C. Pan, A. Nallanathan, M. Elkashlan, and L. Hanzo, “Multiple-antenna-assisted non-orthogonal multiple access,” IEEE Wireless Commun., vol. 25, no. 2, pp. 17-23, Apr. 2018.

- [5] M. Zeng, A. Yadav, O. A. Dobre, G. I. Tsiropoulos, and H. V. Poor, “Capacity comparison between MIMO-NOMA and MIMO-OMA with multiple users in a cluster,” IEEE J. Sel. Areas Commun., vol. 35, no. 10, pp. 2413-2424, Oct. 2017.

- [6] H. Zhang, H. Zhang, W. Liu, K. Long, J. Dong, and V. C. M. Leung, “Energy efficient user clustering, hybrid precoding and power optimization in terahertz MIMO-NOMA systems,” IEEE J. Sel. Areas Commun., vol. 38, no. 9, pp. 2074-2085, Sept. 2020.

- [7] Y. Wu, J. Kokkoniemi, C. Han, and M. Juntti, “Interference and coverage analysis for terahertz networks with indoor blockage effects and line-of-sight access point association,” IEEE Trans. Wireless Commun., vol. 20, no. 3, pp. 1472-1486, Mar. 2021.

- [8] S. Gong, X. Lu, D. T. Hoang, D. Niyato, L. Shu, D. I. Kim, and Y. C. Liang, “Toward smart wireless communications via intelligent reflecting surfaces: A contemporary survey,” IEEE Commun. Surveys Tuts., vol. 22, no. 4, pp. 2283-2314, 4th Quart. 2020.

- [9] M. D. Renzo, A. Zappone, M. Debbah, M. Alouini, C. Yuen, J. Rosny, and S. Tretyakov, “Smart radio environments empowered by reconfigurable intelligent surfaces: How it works, state of research, and the road ahead,” IEEE J. Sel. Areas Commun., vol. 38, no. 11, pp. 2450-2525, Nov. 2020.

- [10] Y. Liu, X. Liu, X. Mu, T. Hou, J. Xu, M. D. Renzo, and N. Al-Dhahir, “Reconfigurable intelligent surfaces: principles and opportunities,” IEEE Commun. Surveys Tuts., vol. 23, no. 3, pp. 1546-1577, 3rd Quart. 2021.

- [11] C. Chaccour, M. Naderi Soorki, W. Saad, M. Bennis, and P. Popovski, “Risk-based optimization of virtual reality over terahertz reconfigurable intelligent surfaces,” in Proc. IEEE Int. Conf. Commun. (ICC), Jun. 2020, pp. 1-6.

- [12] Y. Liu, X. Mu, X. Liu, M. D. Renzo, Z. Ding, and R. Schober, “Reconfigurable intelligent surface (RIS) aided multi-user networks: Interplay between NOMA and RIS,” 2020, arXiv:2011.13336. [Online]. Available: https://arxiv.org/abs/2011.13336.

- [13] X. Mu, Y. Liu, L. Guo, J. Lin, and N. Al-Dhahir, “Capacity and optimal resource allocation for IRS-assisted multi-user communication systems,” IEEE Trans. on Commun., vol. 69, no. 6, pp. 3771-3786, Jun. 2021.

- [14] Y. Li, M. Jiang, Q. Zhang, and J. Qin, “Joint beamforming design in multi-cluster MISO NOMA intelligent reflecting surface-aided downlink communication networks,” 2019, arXiv:1909.06972. [Online]. Available: http://arxiv.org/abs/1909.06972.

- [15] X. Mu, Y. Liu, L. Guo, J. Lin, and N. Al-Dhahir, “Exploiting intelligent reflecting surfaces in NOMA networks: Joint beamforming optimization,” IEEE Trans. Wireless Commun., vol. 19, no. 10, pp. 688-6898, Oct. 2020.

- [16] X. Liu, Y. Liu, Y. Chen, and H. V. Poor, “RIS enhanced massive non-orthogonal multiple access networks: Deployment and passive beamforming design,” IEEE J. Sel. Areas Commun., vol. 39, no. 4, pp. 1057-1071, Apr. 2021.

- [17] X. Zhu, Z. Wang, L. Dai, and Q. Wang, “Adaptive hybrid precoding for multiuser massive MIMO,” IEEE Commun. Lett., vol. 20, no. 4, pp. 776-779, Apr. 2016.

- [18] Q. Wu, S. Zhang, B. Zheng, C. You, and R. Zhang, “Intelligent reflecting surface-aided wireless communications: A tutorial,” IEEE Trans. on Commun., vol. 69, no. 5, pp. 3313-3351, May 2021.

- [19] R. Piesiewicz, C. Jansen, D. Mittleman, T. Kleine-Ostmann, M. Koch, and T. Kurner, “Scattering analysis for the modeling of THz communication systems,” IEEE Trans. Antennas Propag., vol. 55, no. 11, pp. 3002-3009, Nov. 2007.

- [20] J. M. Jornet and I. F. Akyildiz, “Channel modeling and capacity analysis for electromagnetic wireless nanonetworks in the terahertz band,” IEEE Trans. Wireless Commun., vol. 10, no. 10, pp. 3211-3221, Oct. 2011.

- [21] L. Dai, B. Wang, M. Peng, and S. Chen, “Hybrid precoding-based millimeter-wave massive MIMO-NOMA with simultaneous wireless information and power transfer,” IEEE J. Sel. Areas Commun., vol. 37, no. 1, pp. 131-141, Jan. 2019.

- [22] S. Samarakoon, M. Bennis, W. Saad, and M. Debbah, “Distributed federated learning for ultra-reliable low-latency vehicular communications,” IEEE Trans. Commun., vol. 68, no. 2, pp. 1146-1159, Nov. 2019.

- [23] C. Huang, A. Zappone, G. C. Alexandropoulos, M. Debbah, and C. Yuen, “Reconfigurable intelligent surfaces for energy efficiency in wireless communication,” IEEE Trans. Wireless Commun., vol. 18, no. 8, pp. 4157-4170, Aug. 2019.

- [24] S. Khairy, P. Balaprakash, L. X. Cai, and Y. Cheng, “Constrained deep reinforcement learning for energy sustainable multi-UAV based random access IoT networks with NOMA,” IEEE J. Sel. Areas Commun., vol. 39, no. 4, pp. 1101-1115, Apr. 2021.

- [25] W. Wu, P. Yang, W. Zhang, C. Zhou, and S. Shen, “Accuracy-guaranteed collaborative DNN inference in industrial IoT via deep reinforcement learning,” IEEE Trans. Ind. Inform., vol. 17, no. 7, pp. 4988-4998, Jul. 2021.

- [26] M. J. Neely, “Stochastic network optimization with application to communication and queueing systems,” Synthesis Lectures on Communication Networks, vol. 3, no. 1, pp. 1-211, 2010.

- [27] B. K. Ghosh, “Probability inequalities related to Markov’s theorem,” Amer. Statist., vol. 56, no. 3, pp. 186-190, Aug. 2002.

- [28] T. T. Nguyen, N. D. Nguyen, and S. Nahavandi, “Deep reinforcement learning for multiagent systems: A review of challenges, solutions, and applications,” IEEE Trans. Cybern., vol. 50, no. 9, pp. 3826-3839, Sept. 2020.

- [29] R. Lowe, Y. Wu, A. Tamar, J. Harb, P. Abbeel, and I. Mordatch, “Multi-agent actor-critic for mixed cooperative-competitive environments,” Proc. Adv. Neural Inf. Process. Syst., pp. 6379-6390, 2017.

- [30] D. H. Wolpert and K. Tumer, “Optimal payoff functions for members of collectives,” Adv. Complex Syst., vol. 4, pp. 265-279, 2001.

- [31] T. Rashid, M. Samvelyan, C. S. De Witt, G. Farquhar, J. Foerster, and S. Whiteson, “QMIX: Monotonic value function factorisation for deep multi-agent reinforcement learning,” 2018, arXiv:1803.11485. [Online]. Available: http://arxiv.org/abs/1803.11485.

- [32] K. Son, D. Kim, W. J. Kang, D. E. Hostallero, and Y. Yi, “QTRAN: Learning to factorize with transformation for cooperative multi-agent reinforcement learning,” 2019, arXiv:1905.05408. [Online]. Available: http://arxiv.org/abs/1905.05408.

- [33] J. Su, S. Adams, and P. A. Beling, “Value-decomposition multi-agent actor-critics,” Proc. 35th AAAI Conf. Artif. Intell., pp. 11352-11360, 2021.

- [34] K. Xu, W. Hu, J. Leskovec, and S. Jegelka, “How powerful are graph neural networks?” Proc. Int. Conf. Learn. Represent., May 2019. [Online]. Available: https://openreview.net/forum?id=ryGs6iA5Km.

- [35] Y. Shen, Y. Shi, J. Zhang, and K. B. Letaief, “Graph neural networks for scalable tadio tesource management: Architecture design and theoretical analysis,” IEEE J. Sel. Areas Commun., vol. 39, no. 1, pp. 101-115, Jan. 2021.

- [36] K. Cho, B. Van Merriënboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio, “Learning phrase representations using RNN encoder-decoder for statistical machine translation,” 2014, arXiv:1406.1078. [Online]. Available: http://arxiv.org/abs/1406.1078.

- [37] J. Chung, C. Gulcehre, K. Cho, and Y. Bengio, “Empirical evaluation of gated recurrent neural networks on sequence modeling,” 2014, arXiv:1412.3555. [Online]. Available: http://arxiv.org/abs/1412.3555.

- [38] D. Ha, A. Dai, and Q. V. Le, “Hypernetworks,” 2016, arXiv:1609.09106. [Online]. Available: http://arxiv.org/abs/1609.09106.

- [39] J. Xie, J. Fang, C. Liu, and X. Li, “Deep learning-based spectrum sensing in cognitive radio: A CNN-LSTM approach”, IEEE Commun. Lett., vol. 24, no. 10, pp. 2196-2200, Oct. 2020.

- [40] R. S. Sutton, D. A. McAllester, S. P. Singh, and Y. Mansour, “Policy gradient methods for reinforcement learning with function approximation,” Advances Neural Inform. Processing Syst., Vol. 99, pp. 1057-1063, Nov. 2000.

- [41] V. R. Konda and J. N. Tsitsiklis, “Actor-critic algorithms,” Advances Neural Inform. Processing Syst., pp. 1008-1014, 2000.