Graphical constructions of simple exclusion processes with applications to random environments

Abstract.

We show that the symmetric simple exclusion process (SSEP) on a countable set is well defined by the stirring graphical construction as soon as the dynamics of a single particle is. The resulting process is Feller, its Markov generator is derived on local functions, and duality at the level of the empirical density field holds. We also provide a general criterion ensuring that local functions form a core for the generator. We then move to the simple exclusion process (SEP) and show that the graphical construction leads to a well defined Feller process under a percolation-type assumption corresponding to subcriticality in a percolation model with random inhomogeneous parameters. We derive its Markov generator on local functions which, under an additional general assumption, form a core for the generator. We discuss applications of the above results to SSEPs and SEPs in random environments, where the standard assumptions used to construct the process and investigate its basic properties (by the analytic approach or by graphical constructions) are typically violated. As detailed in [14], our results for SSEP also allow us to extend the quenched hydrodynamic limit in path space obtained in [11] by removing Assumption (SEP) used there.

Keywords: Feller process, Markov generator, exclusion process, graphical construction, duality, empirical density field, random environment.

MSC2020 Subject Classification: 60K35, 60K37, 60G55, 82D30

1. Introduction

Given a countable set $S$ and given non-negative numbers $c_{x,y}$ associated to $(x,y)\in S\times S$ with $x\neq y$, the simple exclusion process (SEP) with rates $c_{x,y}$ is the interacting particle system on $\{0,1\}^S$ roughly described as follows. At most one particle can lie on a site and each particle, when sitting at site $x$, attempts to jump to a site $y$ with probability rate $c_{x,y}$; the jump is then allowed only if the site $y$ is empty. One can think of a family of continuous-time random walks with jump probability rates $c_{x,y}$, apart from the hard-core interaction. The SEP is called symmetric (and will be denoted as SSEP below) when $c_{x,y}=c_{y,x}$. Of course, conditions have to be imposed to have a well defined process for all times. For example, when the particle system consists of a single particle, the random walk with jump probability rates $c_{x,y}$ has to be well defined for all times: the holding time parameter $c_x:=\sum_{y\in S}c_{x,y}$ is finite for all $x\in S$ and a.s. no explosion occurs (whatever the starting site is).
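To make the informal description of the SEP concrete, here is a minimal Python sketch on a finite set of sites, where no well-posedness issue arises. It is a standard Gillespie-type simulation, not the graphical construction used in this paper; the function name `simulate_sep` and the dictionary encoding of rates and configurations are illustrative choices. Every ordered pair $(x,y)$ carries an exponential clock of rate $c_{x,y}$, and a ring is a jump attempt that succeeds only if $x$ is occupied and $y$ is empty.

```python
import random


def simulate_sep(rates, eta0, t_max, seed=0):
    """Simulate a simple exclusion process on a finite set up to time t_max.

    rates: dict mapping ordered pairs (x, y), x != y, to rates c_{x,y} >= 0.
    eta0:  dict mapping each site to 0 or 1 (initial configuration).
    Returns the configuration at time t_max as a new dict.
    """
    rng = random.Random(seed)
    eta = dict(eta0)
    t = 0.0
    pairs = [(xy, c) for xy, c in rates.items() if c > 0]
    total = sum(c for _, c in pairs)
    if total == 0:
        return eta  # no clock ever rings
    while True:
        # The superposition of all clocks of the ordered pairs rings at rate `total`.
        t += rng.expovariate(total)
        if t > t_max:
            return eta
        # Select which clock rang, proportionally to its rate.
        u = rng.random() * total
        for (x, y), c in pairs:
            u -= c
            if u <= 0:
                break
        # Exclusion rule: the attempted jump x -> y succeeds only if x is occupied and y is empty.
        if eta[x] == 1 and eta[y] == 0:
            eta[x], eta[y] = 0, 1
```

Since attempts from empty sites have no effect, attaching a clock to every ordered pair (rather than only to occupied sites) yields the same law while keeping the code short.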

The analytic approach in [20] assures that the SEP is well defined and is a Feller process with state space $\{0,1\}^S$ if

$$\sup_{x\in S}\sum_{y\in S}\big(c_{x,y}+c_{y,x}\big)<+\infty\,.\qquad(1)$$

This follows by combining the Hille-Yosida Theorem (cf. [20, Theorem 2.9, Chapter 1]) with [20, Theorem 3.9, Chapter 1]. Indeed, conditions (3.3) and (3.8) in [20, Theorem 3.9, Chapter 1] are both equivalent to (1), as derived in Appendix A. For the SSEP, (1) can be rewritten as $\sup_{x\in S}\sum_{y\in S}c_{x,y}<+\infty$. It turns out that (1) is too restrictive an assumption when dealing with SEPs or SSEPs in a random environment, i.e. with random rates and possibly with a random set $S$. In this case we will write $S_\omega$ and $c_{x,y}(\omega)$ in order to stress the dependence on the environment $\omega$. For example, one could consider the SSEP on $\mathbb{Z}^d$ with nearest-neighbor jumps and i.i.d. unbounded jump probability rates associated to the undirected edges. Or, starting with a simple point process $\omega$ on $\mathbb{R}^d$ (i.e. a random locally finite subset of $\mathbb{R}^d$ [10]), e.g. a Poisson point process, one could consider the SSEP on $\omega$ with jump probability rates of the form $c_{x,y}(\omega)=\varphi(|x-y|)$ for all $x\neq y$ in $\omega$ and for a fixed decaying function $\varphi$. One could also consider the Mott variable range hopping (v.r.h.), without any mean-field approximation, which describes the electron transport in amorphous solids such as doped semiconductors in the regime of strong Anderson localization at low temperature [1, 22, 23]. Starting with a marked simple point process $\omega=\{(x,E_x)\}$ (by definition, $\{x\}$ is a simple point process and $E_x\in\mathbb{R}$ is called the mark of $x$ [10]), Mott v.r.h. corresponds to the SEP on $\{x\}$ whose rates $c_{x,y}(\omega)$, $x\neq y$, decay exponentially in the distance $|x-y|$ and depend on the energy marks $E_x$, $E_y$.

The list of examples can be made much longer (cf. e.g. [11, 13, 14] for others). The above models anyway show that, in some contexts with disorder, condition (1) is typically not satisfied (i.e. for almost all $\omega$ condition (1) is violated with $S=S_\omega$ and $c_{x,y}=c_{x,y}(\omega)$). On the other hand, it is natural to ask whether the above SEPs a.s. exist and are Feller processes, how their Markov generator behaves on good (e.g. local) functions, whether local functions form a core, and so on.
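As a hedged numerical illustration of why (1) fails in the first example above, take nearest-neighbor conductances on $\mathbb{Z}$ that are i.i.d. exponential with mean one (a distributional choice made only for this sketch) and look at the box maxima of the holding rates $c_x=c_{x-1,x}+c_{x,x+1}$. Since these conductances are unbounded, the maxima grow without bound (slowly, roughly logarithmically in the box size), so the supremum in (1) is a.s. infinite even though each $c_x$ is finite. The function name is hypothetical.

```python
import random


def box_maxima_of_holding_rates(radii, seed=0):
    """For i.i.d. Exp(1) conductances on the edges {x, x+1} of Z, return
    max_{|x| <= n} c_x for each n in `radii`, where c_x = w(x-1) + w(x)
    and w(x) denotes the conductance of the edge {x, x+1}.
    """
    rng = random.Random(seed)
    n_max = max(radii)
    # Conductances of the edges {x, x+1} for -n_max-1 <= x <= n_max.
    w = {x: rng.expovariate(1.0) for x in range(-n_max - 1, n_max + 1)}
    # Holding rate at x: sum of the conductances of the two edges at x.
    c = {x: w[x - 1] + w[x] for x in range(-n_max, n_max + 1)}
    return [max(c[x] for x in range(-n, n + 1)) for n in radii]
```

Of course, a finite sample only illustrates the divergence; the almost-sure statement follows from the unboundedness of the exponential law.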

To address the above questions we leave the analytic approach of [20] and move to graphical constructions. The graphical approach has a long tradition in interacting particle systems, in particular also for the investigation of attractiveness and duality. Graphical constructions of SEP and SSEP are discussed e.g. in [18, 19] (also for more general exclusion processes) and in [25, Chapter 2], and briefly in [20, p. 383, Chapter VIII.2] for SEP and in [20, p. 399, Chapter VIII.6] for SSEP as stirring processes. For the graphical constructions of other particle systems we mention in particular [20, Chapter III.6] and [7] (see also the references in [20, Chapter III.7]). On the other hand, the above references again make assumptions not compatible with many applications to particles in a random environment (e.g. finite range jumps or rates of the form $c_{x,y}=p(x,y)$, $p(\cdot,\cdot)$ being a probability kernel).

Let us describe our contributions. In Section 3 we consider the SSEP on a countable set $S$ (of course, the interesting case is $S$ infinite). Under the only assumption that the continuous-time random walk on $S$ with jump probability rates $c_{x,y}$ is well defined for all times (called Assumption SSEP below), we show that the stirring graphical construction leads to a well defined Feller process, with the right form of generator on local functions and on other good functions (see Propositions 3.2, 3.3, 3.4, 3.5 in Section 3). We also provide a general criterion ensuring that local functions form a core for the generator (see Proposition 3.6). Due to its relevance for the study of hydrodynamics and fluctuations of the empirical field for SSEPs in random environments (see e.g. [5, 11, 16]), we also investigate duality properties of the SSEP at the level of the empirical field (see Section 3.3). Finally, in Section 4 we discuss some applications to SSEPs in a random environment. We point out that the construction of the SSEP on a countable set $S$, when the single random walk is well defined, can also be obtained by duality and Kolmogorov's theorem as in [4, Appendix A]. However, the analysis there is limited to the existence of the stochastic process.

We then move to the SEP. Under what we call Assumption SEP, which is inspired by Harris' percolation argument [9, 18], in Section 5 we show that the graphical construction leads to a well defined Feller process and we explicitly derive its generator on local functions and other good functions (see Propositions 5.8, 5.10, 5.11, 5.12). The analysis generalizes the one in [25, Chapter 2] (done for $S=\mathbb{Z}^d$ and $c_{x,y}$ of the form $p(y-x)$ for a finite range probability $p$ on $\mathbb{Z}^d$).

Checking the validity of Assumption SEP consists of proving subcriticality in suitable percolation models with random inhomogeneous parameters. Also for SEP we provide a general criterion assuring that local functions form a core for the generator (see Propositions 5.14, 5.16).

In Section 6 we discuss some applications to SEPs in a random environment. We point out that in [11] we assumed what we called there “Assumption (SEP)”, which corresponds to the validity of the present Assumption SEP for a.a. realizations of the environment. In particular, in [11] we checked its validity for some classes of SEPs in a random environment. In Section 6 we recall these results in the present language. As a byproduct, we also derive the existence (and several properties of its Markov semigroup on continuous functions) of Mott v.r.h. on a marked Poisson point process.

We point out that the SEPs treated in [11] are indeed SSEPs. As a byproduct of our results in Section 3, the quenched hydrodynamic limit derived in [11] remains valid even without Assumption (SEP) there. This application will be detailed in [14].

Outline of the paper. Section 2 is devoted to notation and preliminaries. In Section 3 we describe the stirring graphical construction and our main results for SSEP (the analogous results for SEP are given in Section 5). In Section 4 we discuss some applications to SSEPs in a random environment (the analogous applications for SEP are discussed in Section 6). The other sections and Appendix A are devoted to proofs.

2. Notation and preliminaries

Given a topological space $X$ we denote by $\mathcal{B}(X)$ the σ-algebra of its Borel subsets. We think of $X$ as a measurable space with σ-algebra of measurable subsets given by $\mathcal{B}(X)$.

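Given a metric space $X$ with metric $d$ satisfying $d(x,y)\le1$ for all $x,y\in X$ (this can be assumed at the cost of replacing $d$ by $d\wedge1$), $D(\mathbb{R}_+,X)$ is the space of càdlàg paths from $\mathbb{R}_+:=[0,+\infty)$ to $X$ endowed with the Skorohod distance associated to $d$. We denote this distance by $d_{\rm S}$ and for completeness we recall its definition (see [8, Chapter 3]). Let $\Lambda$ be the family of strictly increasing bijective functions $\lambda:\mathbb{R}_+\to\mathbb{R}_+$ such that

$$\gamma(\lambda):=\sup_{s>t\ge0}\left|\log\frac{\lambda(s)-\lambda(t)}{s-t}\right|<+\infty\,.$$

Then, given $\xi,\xi'\in D(\mathbb{R}_+,X)$, the distance $d_{\rm S}(\xi,\xi')$ is defined as

$$d_{\rm S}(\xi,\xi'):=\inf_{\lambda\in\Lambda}\left[\gamma(\lambda)\vee\int_0^\infty e^{-u}\,\sup_{t\ge0}d\big(\xi(t\wedge u),\xi'(\lambda(t)\wedge u)\big)\,du\right].$$

Due to [8, Proposition 5.3, Chapter 3], $\xi_n\to\xi$ in $D(\mathbb{R}_+,X)$ if and only if there exists a sequence $(\lambda_n)_{n\ge1}$ in $\Lambda$ such that

$$\lim_{n\to\infty}\gamma(\lambda_n)=0\quad\text{and}\quad\lim_{n\to\infty}\sup_{0\le t\le T}d\big(\xi_n(t),\xi(\lambda_n(t))\big)=0\quad\forall T>0\,.\qquad(2)$$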

If $X$ is separable, then the Borel σ-algebra of $D(\mathbb{R}_+,X)$ coincides with the σ-algebra generated by the coordinate maps $D(\mathbb{R}_+,X)\ni\xi\mapsto\xi(t)\in X$, $t\ge0$ (see [8, Proposition 7.1, Chapter 3]). If $X$ is Polish (i.e. it is a complete separable metric space), then also $D(\mathbb{R}_+,X)$ is Polish (see [8, Theorem 5.6, Chapter 3]).

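We now discuss two examples that will be frequently used in what follows. We will take $\mathbb{N}:=\{0,1,2,\dots\}$ endowed with the discrete topology and in particular with distance $\mathbb{1}(n\neq m)$ between $n,m\in\mathbb{N}$. $D(\mathbb{R}_+,\mathbb{N})$ will be endowed with the Skorohod metric, denoted in this case by $\rho_{\rm S}$. Since $\mathbb{N}$ is separable, $\mathcal{B}(D(\mathbb{R}_+,\mathbb{N}))$ is generated by the coordinate maps $\xi\mapsto\xi(t)$, $t\ge0$.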

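Given a countable infinite set $S$, we fix once and for all an enumeration

$$S=\{x_1,x_2,x_3,\dots\}\qquad(3)$$

of $S$ and we endow $\{0,1\}^S$ with the metric

$$d(\sigma,\sigma'):=\sum_{n\ge1}2^{-n}\,|\sigma(x_n)-\sigma'(x_n)|\,,\qquad\sigma,\sigma'\in\{0,1\}^S\,.\qquad(4)$$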
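The metric (4) makes two configurations close exactly when they agree on the first sites of the enumeration (3). The Python sketch below (function name hypothetical) evaluates its truncation to the first `n_terms` sites; the neglected tail is at most $2^{-n_{\rm terms}}$, and the full sum never exceeds $\sum_{n\ge1}2^{-n}=1$, consistent with the normalization $d\le1$ required in the definition of the Skorohod distance.

```python
def config_distance(sigma, sigma_prime, n_terms):
    """Truncation of the metric (4):
    sum_{n=1}^{n_terms} 2^{-n} |sigma(x_n) - sigma'(x_n)|.

    sigma, sigma_prime: callables n -> {0, 1} giving the occupation of the
    n-th site x_n of the enumeration (3), for n >= 1.
    The neglected tail is at most 2^{-n_terms}.
    """
    return sum(2.0 ** (-n) * abs(sigma(n) - sigma_prime(n)) for n in range(1, n_terms + 1))
```

For instance, configurations differing only at the third enumerated site are at distance $2^{-3}=0.125$.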

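Then this metric induces the product topology on $\{0,1\}^S$ ($\{0,1\}$ has the discrete topology). We point out that $\{0,1\}^S$ is a Polish space. Indeed, $\{0,1\}^S$ is a compact metric space and therefore it is also complete and separable. As a consequence also $D(\mathbb{R}_+,\{0,1\}^S)$ is Polish. Given a path $\eta\in D(\mathbb{R}_+,\{0,1\}^S)$ and a time $t\ge0$, we will sometimes write $\eta_t$ instead of $\eta(t)$. In particular, $\eta_t(x)$ will be the value at $x\in S$ of the configuration $\eta_t$. Moreover, we will usually write $(\eta_t)_{t\ge0}$ instead of $\eta$ to denote a generic path in $D(\mathbb{R}_+,\{0,1\}^S)$.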

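Since $\{0,1\}^S$ is separable, the σ-algebra $\mathcal{B}(D(\mathbb{R}_+,\{0,1\}^S))$ is generated by the coordinate maps $\eta\mapsto\eta_t$, $t\ge0$. Since $\mathcal{B}(\{0,1\}^S)$ is generated by the coordinate maps $\sigma\mapsto\sigma(x)$, $x\in S$, we conclude that $\mathcal{B}(D(\mathbb{R}_+,\{0,1\}^S))$ is generated by the maps $\eta\mapsto\eta_t(x)$ as $t$ and $x$ vary in $\mathbb{R}_+$ and $S$, respectively. This will be used in what follows.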

3. Graphical construction, Markov generator and duality of SSEP

Let $S$ be an infinite countable set. We denote by $\mathcal{E}_S$ the family of unordered pairs of elements of $S$, i.e.

$$\mathcal{E}_S:=\big\{\{x,y\}:\ x,y\in S,\ x\neq y\big\}\,.\qquad(5)$$

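To each pair $\{x,y\}\in\mathcal{E}_S$ we associate a number $c_{\{x,y\}}\in[0,+\infty)$. To simplify the notation, we write $c_{x,y}$ instead of $c_{\{x,y\}}$. Note that $c_{x,y}=c_{y,x}$ for all $x\neq y$ in $S$. Moreover, to simplify the formulas below, we set

$$c_{x,x}:=0\,,\qquad c_x:=\sum_{y\in S}c_{x,y}\,,\qquad x\in S\,.$$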

The following assumption, in force throughout all this section, will assure that the graphical construction of the symmetric simple exclusion process (SSEP) as stirring process is well posed.

Assumption SSEP. We assume that the following two conditions are satisfied:

(C1) For all $x\in S$ it holds $c_x<+\infty$;

(C2) For each $x\in S$ the continuous-time random walk on $S$ starting at $x$ and with jump probability rates $c_{x,y}$ a.s. has no explosion.

When Conditions (C1) and (C2) are satisfied, we say that the random walk on $S$ with jump rates (or conductances) $c_{x,y}$ is well defined (for all times). This random walk (also called conductance model, cf. [3]) is built in terms of waiting times and jumps as follows. Having arrived at (or starting at) $x$, the random walk waits an exponential time with mean $1/c_x$. If $c_x=0$, this waiting time is infinite. If $c_x>0$, once its waiting time is over, the random walk jumps to another site $y$ chosen with probability $c_{x,y}/c_x$ (independently from the rest). Condition (C2) says that, a.s., the jump times in the above construction have no accumulation point and therefore the random walk is well defined for all times.
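The holding-time/jump-chain description above translates directly into a simulation on a finite (or finitely explored) graph. The following Python sketch is illustrative only: the function name `conductance_walk` and the adjacency-dictionary format are assumptions, and on an infinite graph a finite-time simulation cannot detect explosion, which is precisely what Condition (C2) rules out.

```python
import random


def conductance_walk(conductances, x0, t_max, seed=0):
    """Random walk with conductances c_{x,y}, built from holding times and jumps.

    conductances: dict mapping each site x to a dict {y: c_{x,y}}.
    Returns the list of (jump time, site) pairs up to t_max, starting with (0.0, x0).
    """
    rng = random.Random(seed)
    t, x = 0.0, x0
    path = [(t, x)]
    while True:
        nbrs = conductances.get(x, {})
        c_x = sum(nbrs.values())
        if c_x == 0:
            return path  # infinite holding time: x is absorbing
        t += rng.expovariate(c_x)  # exponential waiting time with mean 1/c_x
        if t > t_max:
            return path
        # Jump to y with probability c_{x,y} / c_x.
        ys = list(nbrs)
        x = rng.choices(ys, weights=[nbrs[y] for y in ys])[0]
        path.append((t, x))
```

A site with $c_x=0$ is absorbing, matching the convention that the waiting time is then infinite.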

3.1. Graphical construction of the SSEP

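We consider the product space $\Gamma:=D(\mathbb{R}_+,\mathbb{N})^{\mathcal{E}_S}$ endowed with the product topology (recall that $D(\mathbb{R}_+,\mathbb{N})$ is endowed with the Skorohod metric $\rho_{\rm S}$, see Section 2). We write $\xi=(\xi_{x,y})_{\{x,y\}\in\mathcal{E}_S}$ for a generic element of $\Gamma$. The product topology on $\Gamma$ is induced by the metric

$$d_\Gamma(\xi,\xi'):=\sum_{1\le i<j}2^{-i-j}\,\rho_{\rm S}\big(\xi_{x_i,x_j},\xi'_{x_i,x_j}\big)\,,\qquad(6)$$

where $(x_n)_{n\ge1}$ is the enumeration of $S$ fixed in (3).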

Definition 3.1 (Probability measure $\mathbb{P}$).

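We associate to each pair $\{x,y\}\in\mathcal{E}_S$ a Poisson process $(N_{x,y}(t))_{t\ge0}$ with intensity $c_{x,y}$ and with $N_{x,y}(0)=0$, such that the $N_{x,y}$'s are independent processes when varying the pair $\{x,y\}$. We define $\mathbb{P}$ as the law on $\Gamma$ of the random object $(N_{x,y})_{\{x,y\}\in\mathcal{E}_S}$ and we denote by $\mathbb{E}$ the expectation associated to $\mathbb{P}$.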

We stress that, since the pairs are unordered, we have $N_{x,y}=N_{y,x}$ and $\xi_{x,y}=\xi_{y,x}$.

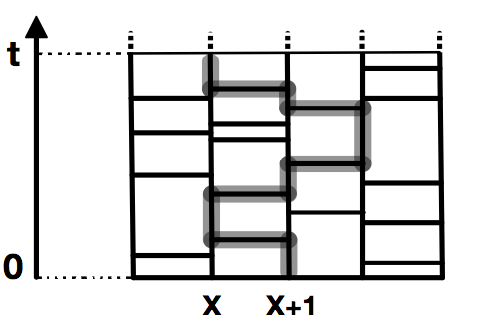

We briefly recall the graphical construction of the SSEP as stirring process (see also Figure 1). The detailed description and the proof that a.s. it is well posed will be provided in Section 7.

Given $\xi\in\Gamma$, $x\in S$ and $t\ge0$ we define $X^x_t[\xi]\in S$ as the output of the following algorithm (see Definition 7.4 for details). Start at $x$ and consider the set of all jump times not exceeding $t$ of the paths of the form $\xi_{x,y}$ with $y\in S$. If this set is empty, then stop and define $X^x_t[\xi]:=x$; otherwise take the maximum value $s_1$ in this set. If $s_1$ is a jump time of $\xi_{x,z_1}$, then move to $z_1$ and consider now the set of all jump times strictly smaller than $s_1$ of the paths of the form $\xi_{z_1,y}$ with $y\in S$. If this set is empty, then stop and define $X^x_t[\xi]:=z_1$; otherwise take the maximum value $s_2$ in this set and repeat the above step. Iterate this procedure until the set of jump times is empty. Then the algorithm stops and its output $X^x_t[\xi]$ is the last site of $S$ visited by the algorithm. Roughly, to determine $X^x_t[\xi]$ it is enough to follow the path in the graph of Figure 1 starting at $x$ at time $t$, going back in time and crossing a horizontal edge every time one appears. Then $X^x_t[\xi]$ is the site visited by the path at time $0$. In Section 7 we will prove that the above construction is well posed for all $x\in S$ and $t\ge0$ if $\xi\in\Gamma_*$, where $\Gamma_*$ is a suitable Borel subset of $\Gamma$ with $\mathbb{P}(\Gamma_*)=1$.
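For a finite collection of Poisson rings, the backward algorithm above can be sketched as follows, assuming (as holds $\mathbb{P}$-a.s.) that ring times are pairwise distinct. Scanning the rings with time not exceeding $t$ from the latest to the earliest, and crossing every edge that touches the current site, is equivalent to repeatedly picking the latest earlier ring at the current site. The encoding of rings as triples `(s, a, b)` and the function names are hypothetical; the second function builds the configuration at time $t$ by assigning to each site $x$ the initial occupation of the site returned by the backward search from $(x,t)$.

```python
def backward_site(rings, x, t):
    """Follow the stirring graph backward from site x at time t down to time 0.

    rings: list of (s, a, b), meaning that the Poisson clock of the unordered
    pair {a, b} rings at time s (ring times assumed pairwise distinct).
    Returns the site visited by the backward path at time 0.
    """
    site = x
    # Scan rings from the latest to the earliest; rings after time t are ignored.
    for s, a, b in sorted(rings, reverse=True):
        if s > t:
            continue
        # Cross the horizontal edge {a, b} if the backward path currently sits on it.
        if site == a:
            site = b
        elif site == b:
            site = a
    return site


def stirring_configuration(rings, sigma, t):
    """Configuration at time t: site x gets the initial occupation of backward_site(rings, x, t)."""
    return {x: sigma[backward_site(rings, x, t)] for x in sigma}
```

With the rings `[(0.2, 0, 1), (0.7, 1, 2)]`, a particle initially at $0$ is moved to $1$ at time $0.2$ and to $2$ at time $0.7$; accordingly, the backward search started from site $2$ at time $1$ returns site $0$.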

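Having defined $X^x_t[\xi]$, given $\sigma\in\{0,1\}^S$ we set

$$\eta^\sigma_t[\xi](x):=\sigma\big(X^x_t[\xi]\big)\,,\qquad x\in S\,,\ t\ge0\,.$$

Then $\eta^\sigma_t[\xi]\in\{0,1\}^S$. In Lemma 7.12 in Section 7 we show that $\eta^\sigma[\xi]:=(\eta^\sigma_t[\xi])_{t\ge0}\in D(\mathbb{R}_+,\{0,1\}^S)$ and that the map $(\sigma,\xi)\mapsto\eta^\sigma[\xi]$ is measurable.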

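We write $\mathcal{F}$ for the σ-algebra of Borel subsets of $D(\mathbb{R}_+,\{0,1\}^S)$. Since $\{0,1\}^S$ is separable, $\mathcal{F}$ is generated by the coordinate maps $\eta\mapsto\eta_t$, $t\ge0$ (see Section 2). We also define $\mathcal{F}_t$ as the σ-algebra generated by the coordinate maps $\eta\mapsto\eta_s$ with $s\in[0,t]$. Then $\big(D(\mathbb{R}_+,\{0,1\}^S),\mathcal{F},(\mathcal{F}_t)_{t\ge0}\big)$ is a filtered measurable space. For each $\sigma\in\{0,1\}^S$ we define $\mathbf{P}_\sigma$ as the probability measure on the above filtered measurable space given by

$$\mathbf{P}_\sigma(A):=\mathbb{P}\big(\{\xi\in\Gamma_*:\ \eta^\sigma[\xi]\in A\}\big)\,,\qquad A\in\mathcal{F}\,.$$

In what follows, we write $\mathbf{E}_\sigma$ for the expectation w.r.t. $\mathbf{P}_\sigma$. Similarly to [25, Theorem 2.4] we get: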

Proposition 3.2 (Construction of SSEP).

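The family of probability measures $\{\mathbf{P}_\sigma\}_{\sigma\in\{0,1\}^S}$ on the filtered measurable space $\big(D(\mathbb{R}_+,\{0,1\}^S),\mathcal{F},(\mathcal{F}_t)_{t\ge0}\big)$ is a Markov process (called symmetric simple exclusion process with conductances $(c_{x,y})_{\{x,y\}\in\mathcal{E}_S}$), i.e.

(i) $\mathbf{P}_\sigma(\eta_0=\sigma)=1$ for all $\sigma\in\{0,1\}^S$;

(ii) for any $A\in\mathcal{F}$ the function $\{0,1\}^S\ni\sigma\mapsto\mathbf{P}_\sigma(A)\in[0,1]$ is measurable;

(iii) for any $\sigma\in\{0,1\}^S$, $t\ge0$ and $A\in\mathcal{F}$ it holds $\mathbf{P}_\sigma\big((\eta_{t+s})_{s\ge0}\in A\,\big|\,\mathcal{F}_t\big)=\mathbf{P}_{\eta_t}(A)$, $\mathbf{P}_\sigma$-a.s.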

For the proof of the above proposition see Section 7.1.

3.2. Markov semigroup and infinitesimal generator

We write $C(\{0,1\}^S)$ for the space of real continuous functions on $\{0,1\}^S$ endowed with the uniform norm.

Proposition 3.3 (Feller property).

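Given $f\in C(\{0,1\}^S)$ and given $t\ge0$, the map $P_tf:\{0,1\}^S\to\mathbb{R}$ defined as $P_tf(\sigma):=\mathbf{E}_\sigma[f(\eta_t)]$ belongs to $C(\{0,1\}^S)$. In particular, the SSEP with conductances $(c_{x,y})$ is a Feller process.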

For the proof of the above proposition see Section 7.2.

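Due to the Markov property in Proposition 3.2, $(P_t)_{t\ge0}$ is a semigroup on $C(\{0,1\}^S)$. Moreover, by using dominated convergence and the fact that $t\mapsto\eta^\sigma_t[\xi]$ is right-continuous for $\xi\in\Gamma_*$ (cf. Lemma 7.12), it is simple to check that $(P_t)_{t\ge0}$ is a strongly continuous semigroup. Its infinitesimal generator $\mathcal{L}$ is then the Markov generator of the SSEP with conductances $(c_{x,y})$. We recall that $\mathcal{L}$ has domain

$$\mathcal{D}(\mathcal{L}):=\Big\{f\in C(\{0,1\}^S):\ \lim_{t\downarrow0}\frac{P_tf-f}{t}\ \text{exists in }C(\{0,1\}^S)\Big\}$$

and is defined as $\mathcal{L}f:=\lim_{t\downarrow0}\frac{P_tf-f}{t}$, where the above limit is in $C(\{0,1\}^S)$.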

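Proposition 3.4 (Infinitesimal generator on local functions).

Any local function $f$ belongs to $\mathcal{D}(\mathcal{L})$ and

$$\mathcal{L}f(\eta)=\sum_{\{x,y\}\in\mathcal{E}_S}c_{x,y}\big(f(\eta^{x,y})-f(\eta)\big)\,,\qquad\eta\in\{0,1\}^S\,,$$

where the configuration $\eta^{x,y}$ is obtained from $\eta$ by exchanging the occupation variables at $x$ and $y$, i.e.

$$\eta^{x,y}(z):=\begin{cases}\eta(y)&\text{if }z=x\,,\\ \eta(x)&\text{if }z=y\,,\\ \eta(z)&\text{otherwise}\,.\end{cases}\qquad(9)$$

Moreover, we recall that a function $f:\{0,1\}^S\to\mathbb{R}$ is called local if, for some finite $A\subset S$, $f(\eta)$ is determined by $(\eta(x))_{x\in A}$ (note that any local function is also continuous on $\{0,1\}^S$). In what follows, we will denote by $\mathcal{C}_{\rm loc}$ the set of local functions, which is dense in $C(\{0,1\}^S)$.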
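On a finite set of pairs, the standard SSEP generator $\sum_{\{x,y\}}c_{x,y}\,(f(\eta^{x,y})-f(\eta))$, which Proposition 3.4 identifies on local functions, can be evaluated directly, with $\eta^{x,y}$ the configuration obtained by exchanging the occupation variables at $x$ and $y$ as in (9). The Python sketch below uses hypothetical helper names and encodes unordered pairs as `frozenset`s. For the local function $f(\eta)=\eta(0)$ the formula reduces to $\sum_y c_{0,y}(\eta(y)-\eta(0))$, which gives a quick sanity check.

```python
def swap(eta, x, y):
    """Return eta^{x,y}: eta with the occupation variables at x and y exchanged."""
    new = dict(eta)
    new[x], new[y] = eta[y], eta[x]
    return new


def ssep_generator(f, eta, conductances):
    """Evaluate sum over unordered pairs {x, y} of c_{x,y} * (f(eta^{x,y}) - f(eta)).

    conductances: dict mapping frozenset({x, y}) to c_{x,y} >= 0 (finitely many pairs).
    """
    total = 0.0
    for pair, c in conductances.items():
        x, y = tuple(pair)  # the order does not matter, since the swap is symmetric
        total += c * (f(swap(eta, x, y)) - f(eta))
    return total
```

For instance, with $c_{0,1}=1$, $c_{0,2}=2$ and $\eta=(0,1,1)$ on sites $0,1,2$, the sketch returns $1\cdot(1-0)+2\cdot(1-0)=3$.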

The proof of Proposition 3.4, given in Section 7.3, has several similarities with the one in [11, Appendix B], where another graphical construction is used.

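As in [20], given $f\in C(\{0,1\}^S)$ and $x\in S$, we set

$$\Delta_f(x):=\sup\big\{|f(\eta)-f(\zeta)|:\ \eta,\zeta\in\{0,1\}^S,\ \eta(z)=\zeta(z)\ \forall z\neq x\big\}\,.\qquad(10)$$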

We also define

| (11) |

Then Proposition 3.4 can be extended to a larger class of functions by approximation. Indeed we have:

Proposition 3.5 (Infinitesimal generator on further good functions).

Let satisfy and . Then and , where the r.h.s. is an absolutely convergent series of functions in .

The proof of the above proposition is given in Section 7.4. Note that if $f$ is a local function, then $\Delta_f(x)=0$ except for a finite set of elements $x\in S$. In particular, local functions satisfy both conditions required in Proposition 3.5.

For the next result we recall that a set is a core of if and the graph of in is the closure of the set . We denote by the continuous–time random walk on with jump probability rates (which is well defined by Assumption SSEP) and we denote by the expectation referred to the random walk starting at . Moreover, we set and we recall that .

Proposition 3.6 (Core for ).

Suppose that

| (12) |

Then the family of local functions is a core for .

The proof of the above proposition is given in Section 7.5.

Remark 3.7.

Our attention to deal in (12) with and not with is motivated by the applications to random walks in random environment (see Section 4). Indeed, dealing with the countable set , (12) is valid for almost any environment if, fixed , for almost any environment it holds for all .

We note that, due to the symmetry $c_{x,y}=c_{y,x}$, condition (1) ensuring the validity of the analytic construction of the SSEP in [20], and in particular of [20, Theorem 3.9], reads

$$\sup_{x\in S}c_x<+\infty\,.\qquad(13)$$

When (13) is satisfied, [20, Theorem 3.9] provides also a core for the generator of SSEP, which is given by . It is then standard to derive from this result that is a core for (see e.g. Remark 7.13 in Section 7.4 and use that the graph of is closed since is a Markov generator [20, Chapter 1]). All the above results from [20] are indeed included in ours since, under (13), Assumption SSEP is fulfilled, condition (12) is automatically satisfied and whenever .

3.3. Duality with the rw

Due to its relevance for the study of hydrodynamics and fluctuations of the empirical field for SSEPs in random environment (see e.g. [5, 11, 16]), we focus here on duality at the level of the density field. Apart from Lemma 3.9, the notions and results presented below are discussed in [11, Sections 6 and 8] (the proofs in [11] can be easily adapted to our notation, since it is enough to take there and to replace and there by and respectively). We denote by the set of functions which are zero outside a finite subset of . Since has the discrete topology, corresponds to the set of real functions with compact support. We write for the counting measure on and we introduce the set given by

We then consider the bilinear form with domain given by

On we introduce the norm with . One can easily derive from Condition (C1) that . We then call the closure of in w.r.t. the norm (see [11, Section 6]). By arguing as in [15, Example 1.2.5] the bilinear form restricted to is a regular Dirichlet form. As a consequence, there exists a unique nonpositive self-adjoint operator in such that equals the domain of and for any (cf. [15, Theorem 1.3.1]). By [15, Lemma 1.3.2 and Exercise 4.4.1] is the infinitesimal generator of the strongly continuous Markov semigroup on associated to the random walk on with jump probability rates (defined in terms of holding times and jump probabilities as after Assumption SSEP). In particular, we have where is the expectation referred to the random walk starting at . In what follows we write for the domain of the operator .

Definition 3.8.

Given a function such that , we define as .

Note that the series in the r.h.s. of the definition of is absolutely convergent by the assumption on and the symmetry of the ’s (indeed , while is finite since we have ). In particular, if then is well defined.

Although not necessary to prove the hydrodynamic limit of SSEPs on point processes, the following result has its own interest since it makes the generator explicit on local functions:

Lemma 3.9.

If , then and .

The above lemma is proved in Section 7.6.

We can now describe the duality between the SSEP with conductances $c_{x,y}$ and the random walk with probability rates $c_{x,y}$ at the level of the density field (i.e. the empirical measure). To this aim we recall that, given $\eta\in\{0,1\}^S$, the empirical measure $\pi[\eta]$ is the atomic measure on $S$ given by

$$\pi[\eta]:=\sum_{x\in S}\eta(x)\,\delta_x\,.\qquad(14)$$

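Given a real function $f$ on $S$ integrable w.r.t. $\pi[\eta]$, we will write $\pi[\eta](f)$ or simply $\pi(f)$ for the integral of $f$ w.r.t. $\pi[\eta]$, i.e. $\sum_{x\in S}\eta(x)f(x)$. Trivially, if $f$ is as in the lemma below, then $f$ is integrable w.r.t. $\pi[\eta]$ for all $\eta\in\{0,1\}^S$.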

Recall that $\mathcal{L}$ is the infinitesimal generator of the semigroup $(P_t)_{t\ge0}$ on $C(\{0,1\}^S)$ associated to the SSEP with conductances $(c_{x,y})$.

Lemma 3.10 (see [11, Lemma 8.2]).

Suppose that satisfies

| (15) |

Then the map is continuous and indeed is an absolutely convergent series in . This map belongs to the domain of and

| (16) |

the r.h.s. of (16) being an absolutely convergent series in .

If in addition to (15) we have (for example, if ), then and in particular we have the duality relation

| (17) |

Identities of the form (17) are relevant to study hydrodynamics and fluctuations of the density field since they are associated with Dynkin's martingales. We point out that above we have considered the Markov semigroup of the random walk in $L^2(\nu)$, $\nu$ being the counting measure on $S$, since it is particularly convenient for the stochastic homogenization analysis as in [12]. Of course, one could as well consider the Markov semigroup on other functional spaces, such as the space of continuous functions on $S$ vanishing at infinity endowed with the uniform norm.
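On a finite set $S$, where every sum is finite, the duality at the level of the empirical measure reduces to an algebraic identity: applying the SSEP generator to $\eta\mapsto\sum_x\eta(x)f(x)$ gives $\sum_x\eta(x)\sum_y c_{x,y}(f(y)-f(x))$. Indeed, exchanging $\eta(x)$ and $\eta(y)$ changes $\sum_z\eta(z)f(z)$ by $(\eta(y)-\eta(x))(f(x)-f(y))$, and $c_{x,y}=c_{y,x}$. The Python sketch below (function names hypothetical) checks this finite-volume identity; it is not a substitute for the infinite-volume statement of Lemma 3.10, where absolute convergence of the series must be verified.

```python
def generator_on_field(f, eta, c):
    """SSEP generator applied to eta -> sum_x eta(x) f(x), evaluated at eta.

    f: dict site -> real value; c: dict frozenset({x, y}) -> c_{x,y} >= 0.
    """
    def field(e):
        return sum(e[x] * f[x] for x in e)

    total = 0.0
    for pair, cxy in c.items():
        x, y = tuple(pair)
        swapped = dict(eta)
        swapped[x], swapped[y] = eta[y], eta[x]  # the configuration eta^{x,y}
        total += cxy * (field(swapped) - field(eta))
    return total


def rw_generator(f, c):
    """Random-walk generator: (Lf)(x) = sum_y c_{x,y} (f(y) - f(x))."""
    out = {x: 0.0 for x in f}
    for pair, cxy in c.items():
        x, y = tuple(pair)
        out[x] += cxy * (f[y] - f[x])
        out[y] += cxy * (f[x] - f[y])
    return out
```

Both sides are linear in $f$ and in $\eta$, so checking the identity on all configurations of a small set already exercises every term.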

4. Applications to SSEPs in a random environment

We discuss some applications of the results presented in the previous section to SSEPs in a random environment. We consider for simplicity $S=\mathbb{Z}^d$, but the arguments and results we will present can be extended to more general graphs (see e.g. [14]).

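We take $S=\mathbb{Z}^d$. We denote by $\mathbb{E}_d$ the set of undirected edges of the lattice $\mathbb{Z}^d$. We take $\Omega:=[0,+\infty)^{\mathbb{E}_d}$, endowed with the product topology and the Borel σ-algebra $\mathcal{B}(\Omega)$. We let $\mathcal{P}$ be a probability measure on $\Omega$. Given $\omega\in\Omega$ and $\{x,y\}\in\mathbb{E}_d$ we write $\omega_{x,y}$ instead of $\omega_{\{x,y\}}$. Given $z\in\mathbb{Z}^d$ we write $\tau_z$ for the shift $\tau_z:\Omega\to\Omega$, $(\tau_z\omega)_{x,y}:=\omega_{x+z,y+z}$.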

Given the generic environment $\omega\in\Omega$, we set $c_{x,y}(\omega):=\omega_{x,y}$ if $\{x,y\}\in\mathbb{E}_d$ and $c_{x,y}(\omega):=0$ otherwise. We write $(X^\omega_t)_{t\ge0}$ for the continuous-time random walk in the environment $\omega$ with jump rates $c_{x,y}(\omega)$ and with state space $\mathbb{Z}^d\cup\{\partial\}$, $\partial$ being a cemetery state (in case of explosion). The properties stated in Section 3 hold $\mathcal{P}$-a.s. if Conditions (C1) and (C2) are satisfied $\mathcal{P}$-a.s. Trivially (C1) is always satisfied. For (C2) (i.e. the random walk a.s. does not explode) we have the following criterion:

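Proposition 4.1.

Condition (C2) is satisfied $\mathcal{P}$-a.s. in the following three cases:

(i) $d=1$ and $\mathcal{P}$ is stationary w.r.t. shifts;

(ii) $\mathcal{P}$ is stationary w.r.t. shifts and $E_{\mathcal{P}}[c_0]<+\infty$, where $c_0(\omega):=\sum_{y\in\mathbb{Z}^d}c_{0,y}(\omega)$;

(iii) $d\ge2$ and under $\mathcal{P}$ the coordinates $\omega_b$, as $b$ varies in $\mathbb{E}_d$, are i.i.d.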

The proof of Item (ii) will be an extension of the arguments used in [6, Lemma 4.3], since here we assume neither that $\mathcal{P}$ is ergodic nor that $\omega_b>0$ for all $b\in\mathbb{E}_d$. Item (iii) will follow from the results of [2].

Proof.

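We start with Item (i). Since $\mathcal{P}$ is stationary, it is enough to restrict to the random walk starting at the origin. Let $\omega$ be an environment for which the random walk starting at the origin explodes with positive probability. Since the sum of infinitely many independent exponential variables with parameters bounded from above by a finite constant diverges a.s., if the random walk explodes with positive probability (in path space), then the parameter $c_x(\omega)$ has to diverge for $x\to+\infty$ or for $x\to-\infty$.

Now, given $M\in\mathbb{N}$, consider the random set $A_M(\omega):=\{x\in\mathbb{Z}:\ c_x(\omega)\le M\}$. For each $k\in\mathbb{Z}$ consider the event $B_k:=\{\omega:\ A_M(\omega)\neq\emptyset,\ \max A_M(\omega)=k\}$. By the stationarity of $\mathcal{P}$, $\mathcal{P}(B_k)$ does not depend on $k$. On the other hand, the events $B_k$, $k\in\mathbb{Z}$, are disjoint. Since $\sum_{k\in\mathbb{Z}}\mathcal{P}(B_k)\le1$, we conclude that $\mathcal{P}(B_k)=0$ for each $k$ and $\mathcal{P}(\cup_{k\in\mathbb{Z}}B_k)=0$. By reasoning similarly for $\min A_M(\omega)$, we conclude that $\mathcal{P}$-a.s., for any $M\in\mathbb{N}$, the set $A_M(\omega)$ is empty or it is unbounded from the left and from the right. Since $A_M(\omega)\ni0$ as soon as $M\ge c_0(\omega)$, this implies that $\mathcal{P}$-a.s. $\lim_{x\to+\infty}c_x(\omega)=+\infty$ is violated and, similarly, $\mathcal{P}$-a.s. $\lim_{x\to-\infty}c_x(\omega)=+\infty$ is violated. This allows us to conclude that for $\mathcal{P}$-a.a. $\omega$ the random walk a.s. does not explode.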

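We now move to Item (ii). At the cost of enlarging the probability space by marking edges with i.i.d. non-degenerate random variables, independent from the rest, we can assume that

$$\mathcal{P}\big(\tau_x\omega\neq\tau_y\omega\ \ \forall x\neq y\text{ in }\mathbb{Z}^d\big)=1\,.\qquad(18)$$

We let $m:=E_{\mathcal{P}}[c_0]$. If $m=0$ then $c_0=0$ $\mathcal{P}$-a.s. and, by stationarity, $c_x=0$ for all $x\in\mathbb{Z}^d$ $\mathcal{P}$-a.s. In this case, $\mathcal{P}$-a.s., all sites are absorbing for the random walk and therefore (C2) is trivially satisfied. From now on we restrict to the case $m>0$. We define $\mathcal{Q}$ as the probability measure on $\Omega$ given by $\mathcal{Q}(d\omega):=m^{-1}c_0(\omega)\,\mathcal{P}(d\omega)$. Due to the stationarity of $\mathcal{P}$, it is enough to consider the random walk starting at the origin and show that a.s. there is no explosion.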

We introduce the discrete-time Markov chain on with jump probability rates defined as follows: if we set , if we set when for some and otherwise. We write for the above Markov chain when starting at . Note that, due to (18), is well defined for –a.a. and therefore for –a.a. . Since , the probability measure is reversible for the above Markov chain. We write for the law on the path space of the Markov chain with initial distribution . Since is reversible, is invariant w.r.t. shifts.

We now introduce a sequence of i.i.d. exponential times of mean one defined on another probability space . We can take with the product topology, endowed with the Borel σ-algebra, and we can take for all . Then is stationary w.r.t. time-shifts when thought of as probability measure on the path space . We write for the σ-algebra of shift-invariant subsets of . By the ergodic theorem the limit exists –a.s. and equals the expectation of w.r.t. conditioned to , which is a random variable with values in . As a consequence, where . We observe that is concentrated on , and and are mutually absolutely continuous when restricted to this set. On the other hand, if , we trivially have that (the definition of is similar to the one of ). We conclude that .

Finally we can build the continuous-time random walk with conductances and starting at the origin by defining its jump process (i.e. the sequence of states visited by in chronological order) as an additive functional of the Markov chain (here we use again (18)) and by using the exponential times as waiting times. The construction is standard: when the environment is , starts at the origin and remains there until time , afterwards it jumps to the site such that and remains there for a time and so on. By (18), for –a.a. the above construction is well defined (e.g. the above is univocally determined). The event then corresponds to non-explosion of the trajectory. Since , we conclude that for –a.a. condition (C2) is fulfilled.

We conclude with Item (iii). We define as the graph with edges with and with vertices given by the points belonging to the above edges. We write and for the vertex set and the edge set of , respectively. For we set and, given , we set , where the infimum is taken over all paths from to in . Then by [2, Lemma 2.5] the r.w. a.s. does not explode if for any connected component of there exists and such that

| (19) |

We now define and for any . Given , we set , where the infimum is taken over all paths from to in the lattice . For we have since . Since in addition paths in are also paths in the lattice , we get that for any . In particular, given as in (19), the bound in (19) is true if it holds

| (20) |

We observe that under the new conductances , with , are i.i.d. and bounded from below by 1. This is exactly the context of [2]. Then the bound (20) holds for $\mathcal{P}$-a.a. $\omega$ due to Theorem 4.3 and Lemma 2.11 in [2]. We conclude that for $\mathcal{P}$-a.a. $\omega$ condition (C2) is fulfilled. ∎

The result in Proposition 4.1–(ii) is extended in [14] to prove the a.s. non-explosion (i.e. Condition (C2)) for –a.a. for a very large class of random walks on random graphs in , with also a random vertex set (given by a simple point process).

We now give a simple criterion (based on Proposition 3.6) to verify that the set of local functions is a core for the generator for –a.a. environments :

Proposition 4.2.

Suppose that is stationary w.r.t. shifts and . Then for -a.a. condition (12) is satisfied. In particular, for –a.a. the family of local functions is a core for the generator .

We point out that the SSEP considered in Proposition 4.2 is well defined due to Proposition 4.1–(ii).

Proof.

Given the environment , consider the process environment viewed from the particle , i.e. . By the definition of the translations we have . Moreover, because of the symmetry of the jump rates, we have that is a reversible (and therefore invariant) probability measure for the process. As a consequence, we have

| (21) |

where is the expectation w.r.t. the process environment viewed from the particle starting at the environment . By our assumption, (21) is finite. This implies that, for any , for –a.a. . Hence, there exists measurable with such that, for all , for any . This means that, for all , for all . We set . By the stationarity of we have and therefore . Moreover, for all and , and therefore it holds for all . Using that , we get that (12) is satisfied for all . Proposition 3.6 allows to conclude. ∎

The proof of Proposition 4.2 can be easily adapted to more general SSEPs in a random environment , with symmetric jump rates and on a random graph as in [12, 14]. More precisely, when considering models as in [12] with symmetric jump rates, the condition assuring (12) becomes where is the Palm distribution associated to (of course, one does not need to require all the assumptions in [12]). One can apply the above observation for example to the random walk on the infinite cluster of a supercritical percolation on also with random conductances (assuring anyway stationarity). In this case would be the probability measure conditioned to the event that is in the infinite cluster (see [12, Eq. (12)]).

5. Graphical construction and Markov semigroup of SEP

We discuss here the graphical construction and the Markov semigroup of the simple exclusion process (SEP) on the countable set $S$, when the jump rates are not necessarily symmetric.

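We denote by $\mathcal{E}^{\rm o}_S$ the family of ordered pairs of elements of $S$, i.e.

$$\mathcal{E}^{\rm o}_S:=\{(x,y):\ x,y\in S,\ x\neq y\}\,.$$

To each pair $(x,y)\in\mathcal{E}^{\rm o}_S$ we associate a number $c_{x,y}\in[0,+\infty)$. It is convenient to set

$$c_{x,x}:=0\,,\qquad x\in S\,.$$

Note that $c_{x,y}$ is not assumed to be symmetric in $x$, $y$.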

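We consider the product space $\Gamma^{\rm o}:=D(\mathbb{R}_+,\mathbb{N})^{\mathcal{E}^{\rm o}_S}$ endowed with the product topology. This topology is induced by a metric $d_{\Gamma^{\rm o}}$, defined similarly to (6): $d_{\Gamma^{\rm o}}(\xi,\xi'):=\sum_{i\neq j}2^{-i-j}\rho_{\rm S}\big(\xi_{x_i,x_j},\xi'_{x_i,x_j}\big)$. We write $\xi=(\xi_{x,y})_{(x,y)\in\mathcal{E}^{\rm o}_S}$ for a generic element of $\Gamma^{\rm o}$.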

Definition 5.1 (Probability measure $\mathbb{P}$).

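We associate to each pair $(x,y)\in\mathcal{E}^{\rm o}_S$ a Poisson process $(N_{x,y}(t))_{t\ge0}$ with intensity $c_{x,y}$ and with $N_{x,y}(0)=0$, such that the $N_{x,y}$'s are independent processes when varying the pair $(x,y)$ in $\mathcal{E}^{\rm o}_S$. We define $\mathbb{P}$ as the law on $\Gamma^{\rm o}$ of the random object $(N_{x,y})_{(x,y)\in\mathcal{E}^{\rm o}_S}$ and we denote by $\mathbb{E}$ the expectation associated to $\mathbb{P}$.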

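The graphical construction of the SEP presented below is based on Harris' percolation argument [7, 18]. To justify this construction we need a percolation-type assumption. To this aim we define

$$\hat\xi_{x,y}:=\xi_{x,y}+\xi_{y,x}\,,\qquad\{x,y\}\in\mathcal{E}_S\,;$$

above $\mathcal{E}_S$ is defined as in (5). Note the symmetry relation $\hat\xi_{x,y}=\hat\xi_{y,x}$ and that $\hat\xi_{x,y}$ belongs to $D(\mathbb{R}_+,\mathbb{N})$. When $\xi$ is sampled with distribution $\mathbb{P}$, $(\hat\xi_{x,y})_{\{x,y\}\in\mathcal{E}_S}$ is a collection of independent processes and in particular $\hat\xi_{x,y}$ is a Poisson process with parameter $c_{x,y}+c_{y,x}$.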

We set for all .

From now on we make the following assumption, in force throughout all this section:

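Assumption SEP. There exists $t_0>0$ such that for $\mathbb{P}$-a.a. $\xi$ the undirected graph with vertex set $S$ and edge set $\big\{\{x,y\}\in\mathcal{E}_S:\ \hat\xi_{x,y}(t_0)-\hat\xi_{x,y}(0)\ge1\big\}$ has only connected components with finite cardinality.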

We point out that for $\mathbb{P}$-a.a. $\xi$ it holds $\xi_{x,y}(0)=0$ for all $(x,y)\in\mathcal{E}^{\rm o}_S$ and therefore $\hat\xi_{x,y}(0)=0$ for all $\{x,y\}\in\mathcal{E}_S$. Hence, Assumption SEP remains unchanged if we replace $\hat\xi_{x,y}(0)$ by zero there. On the other hand, the above choice is better suited to the construction of similar graphs for further time intervals as in Lemma 5.2 below. Due to the memoryless property of Poisson processes, Assumption SEP implies the following property (we omit the proof since it is standard):

Lemma 5.2.

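For $\mathbb{P}$-a.a. $\xi$ the following holds: for each $k\in\mathbb{N}$ the undirected graph with vertex set $S$ and edge set $\big\{\{x,y\}\in\mathcal{E}_S:\ \hat\xi_{x,y}((k+1)t_0)-\hat\xi_{x,y}(kt_0)\ge1\big\}$ has only connected components with finite cardinality.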

Trivially, for . We also point out that the properties appearing in Assumption SEP and Lemma 5.2 define indeed measurable subsets of :

Lemma 5.3.

Given the set of configurations such that the graph has only connected components with finite cardinality is a Borel subset of .

The proof of the above lemma is trivial and therefore omitted (simply note that corresponds to the fact that, for some site , for each integer there exist distinct sites in such that for all , where ).

Trivially, Assumption SEP can be reformulated as follows:

Equivalent formulation of Assumption SEP: Given , consider the random graph with vertex set obtained by putting an edge between in with probability , independently when varying among . Then, for some , the above random graph has a.s. only connected components with finite cardinality.
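The equivalent formulation can be explored numerically on a finite piece of the site set. The sketch below is only illustrative and rests on assumptions not stated legibly above: it takes the edge probability to be 1 - exp(-t0 (r(x,y) + r(y,x))), i.e. the probability that the superposed Poisson process attached to the unordered pair rings at least once in [0, t0]; it works on the finite segment {0, ..., n-1}; and the rate function `rate` and the helper `cluster_sizes` are hypothetical. On a finite set every component is trivially finite, so this shows how cluster sizes shrink as t0 decreases rather than testing Assumption SEP itself.

```python
import math
import random


def find(parent, x):
    # Union-find "find" with path halving.
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def cluster_sizes(n, rate, t0, max_range, rng):
    """Sample the random graph of the equivalent formulation on the finite
    segment {0, ..., n-1}: the unordered pair {x, y} (0 < y - x <= max_range)
    is an edge with probability 1 - exp(-t0 * (rate(x, y) + rate(y, x))),
    independently over pairs. Returns component sizes, largest first."""
    parent = list(range(n))
    for x in range(n):
        for y in range(x + 1, min(n, x + max_range + 1)):
            p = 1.0 - math.exp(-t0 * (rate(x, y) + rate(y, x)))
            if rng.random() < p:
                parent[find(parent, x)] = find(parent, y)
    sizes = {}
    for x in range(n):
        root = find(parent, x)
        sizes[root] = sizes.get(root, 0) + 1
    return sorted(sizes.values(), reverse=True)


def rate(x, y):
    # Hypothetical rates decaying exponentially with the distance |x - y|.
    return math.exp(-abs(x - y))


if __name__ == "__main__":
    rng = random.Random(0)
    for t0 in (0.05, 0.5, 5.0):
        print(t0, cluster_sizes(2000, rate, t0, max_range=20, rng=rng)[:3])
```

Smaller values of t0 give fewer open edges and hence smaller clusters, which is why it suffices to require the finiteness of the components for some t0 > 0.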

Remark 5.4.

By stochastic domination, to check Assumption SEP one can as well replace by any other family such that for any .

In Assumption SEP above we have not required any summability property such as Condition (C1) in Assumption SSEP. Indeed, such a condition is not necessary, due to the following fact proved in Section 8:

Lemma 5.5.

Assumption SEP implies for all that , , .

Recall the definition of given in Lemma 5.3.

Definition 5.6 (Set ).

We define as the family of such that

- (i) ;

- (ii) the sum is finite for all and ;

- (iii) given any in the set of jump times of and the set of jump times of are disjoint and moreover all jumps equal ;

- (iv) for all .

Lemma 5.7.

is measurable, i.e. , and .

We briefly describe the graphical construction of the SEP for under Assumption SEP.

Given we first define a trajectory in starting at by an iterative procedure. We set . Suppose that the trajectory has been defined up to time , . As all connected components of have finite cardinality. Let be such a connected component and let

| (22) |

The local evolution with and is described as follows. Start with as configuration at time in . At time move a particle from to with if, just before time , it holds:

- (i) site is occupied and site is empty;

- (ii) .

Note that, since , the set (22) is indeed finite and there exists at most one ordered pair satisfying (i) and (ii). After this first step, repeat the same operation, in increasing order, for the times . Then move to another connected component of , repeat the above construction, and so on. As the connected components are disjoint, the resulting path does not depend on the order in which we choose the connected components in the above algorithm (we could as well treat all connected components simultaneously). This procedure defines . Starting with and progressively increasing by we get the trajectory .
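On a finite set with finitely many Poisson marks, every graph has finite components and a single time slice suffices, so the construction can be checked directly. The sketch below uses hypothetical helpers (`sample_marks`, `run_marks`, `components`, `run_by_components`) and an asymmetric nearest-neighbour rate function chosen only for illustration. It applies the exclusion rule mark by mark and verifies that evolving the connected components one at a time gives the same configuration as processing all marks globally in time order. It assumes that no two marks share a time, which holds almost surely.

```python
import random
from collections import defaultdict


def sample_marks(n, rate, t, rng):
    # Independent Poisson processes on the ordered pairs (x, y), x != y,
    # restricted to [0, t]; each mark is recorded as (time, x, y).
    marks = []
    for x in range(n):
        for y in range(n):
            lam = rate(x, y)
            if x != y and lam > 0:
                s = rng.expovariate(lam)
                while s <= t:
                    marks.append((s, x, y))
                    s += rng.expovariate(lam)
    return marks


def run_marks(eta, marks):
    # Process marks in increasing time: at a mark on (x, y) a particle moves
    # from x to y iff x is occupied and y is empty just before that time.
    eta = list(eta)
    for _, x, y in sorted(marks):
        if eta[x] == 1 and eta[y] == 0:
            eta[x], eta[y] = 0, 1
    return eta


def components(n, marks):
    # Connected components of the undirected graph whose edges are the
    # pairs {x, y} carrying at least one mark (in either direction).
    parent = list(range(n))

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]
            a = parent[a]
        return a

    for _, x, y in marks:
        parent[find(x)] = find(y)
    groups = defaultdict(list)
    for v in range(n):
        groups[find(v)].append(v)
    return list(groups.values())


def run_by_components(eta, marks):
    # Evolve one connected component at a time; marks never join two
    # different components, so the order of the components is irrelevant.
    eta = list(eta)
    for comp in components(len(eta), marks):
        members = set(comp)
        eta = run_marks(eta, [m for m in marks if m[1] in members])
    return eta


def rate(x, y):
    # Hypothetical asymmetric nearest-neighbour rates.
    if y == x + 1:
        return 1.0
    if y == x - 1:
        return 0.3
    return 0.0


if __name__ == "__main__":
    rng = random.Random(3)
    marks = sample_marks(8, rate, 2.0, rng)
    eta0 = [1, 0, 1, 1, 0, 0, 1, 0]
    print(run_marks(eta0, marks), run_by_components(eta0, marks))
```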

The filtered measurable space is defined as in Section 3.1. Again the space of real continuous functions on is endowed with the uniform topology. Given , we define as the probability measure on the above filtered measurable space given by for all . By Lemma 8.3 in Section 8 the set is indeed measurable and therefore is well defined.

Similarly to Propositions 3.2, 3.3 and 3.4 we have the following results (see Sections 8.1 and 8.2 for their proofs):

Proposition 5.8 (Construction of SEP).

The family of probability measures on the filtered measurable space is a Markov process (called simple exclusion process with rates ), i.e.

- (i) for all ;

- (ii) for any the function is measurable;

- (iii) for any and it holds –a.s.

Remark 5.9.

By changing in the graphs , for –a.a. the path constructed above does not change, and this for any . In particular, the above SEP does not depend on the particular for which Assumption SEP holds.

Proposition 5.10 (Feller property).

Given and given , the map belongs to . In particular, the SEP with rates is a Feller process.

Proposition 5.11 (Infinitesimal generator on local functions).

Local functions belong to the domain of the infinitesimal generator of the SEP with rates . Moreover, for any local function , we have

| (23) |

The series in the r.h.s. is an absolutely convergent series of functions in .
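On a finite set of sites the series in (23) becomes a finite sum. The sketch below assumes that (23) takes the standard form of the SEP generator, namely the sum over ordered pairs (x, y) of r(x,y) η(x)(1 − η(y)) [f(η^{x,y}) − f(η)], where η^{x,y} exchanges the occupation variables at x and y; the function names and the example rates are hypothetical. It also checks that for symmetric rates this expression coincides with the SSEP form, the sum over unordered pairs of c(x,y)[f(η^{x,y}) − f(η)], since an exchange between two sites with equal occupation does not change η.

```python
import itertools


def swap(eta, x, y):
    # eta^{x,y}: exchange the occupation variables at x and y.
    out = list(eta)
    out[x], out[y] = eta[y], eta[x]
    return tuple(out)


def sep_generator(f, eta, rate):
    # Sum over ordered pairs (x, y) of r(x, y) eta(x) (1 - eta(y)) [f(eta^{x,y}) - f(eta)].
    n = len(eta)
    total = 0.0
    for x in range(n):
        for y in range(n):
            if x != y and eta[x] == 1 and eta[y] == 0:
                total += rate(x, y) * (f(swap(eta, x, y)) - f(eta))
    return total


def ssep_generator(f, eta, cond):
    # Sum over unordered pairs {x, y} of c(x, y) [f(eta^{x,y}) - f(eta)].
    n = len(eta)
    return sum(cond(x, y) * (f(swap(eta, x, y)) - f(eta))
               for x in range(n) for y in range(x + 1, n))


def example_cond(x, y):
    # Hypothetical symmetric rates.
    return 1.0 / (1 + abs(x - y))


def example_local(eta):
    # A local function depending on sites 0, 2 and 3 only.
    return eta[0] * eta[2] + 0.5 * eta[3]


if __name__ == "__main__":
    for eta in itertools.product((0, 1), repeat=4):
        print(eta, sep_generator(example_local, eta, example_cond),
              ssep_generator(example_local, eta, example_cond))
```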

We now show that Proposition 5.11 can be extended to a larger class of functions. To this aim, given , recall the definition of given in (10) and recall that (cf. (11)). While in the symmetric case we considered , we now set

Similarly to Proposition 3.5 we have the following:

Proposition 5.12 (Infinitesimal generator on further good functions).

Let satisfy and . Then and , where the r.h.s. is an absolutely convergent series of functions in .

We point out that local functions satisfy both and , hence Proposition 5.12 is an extension of Proposition 5.11. The proof of Proposition 5.12 is given in Section 8.3.

Finally we provide a criterion assuring that the family of local functions is a core for the generator . To this aim we need the following:

Definition 5.13 (Set and ).

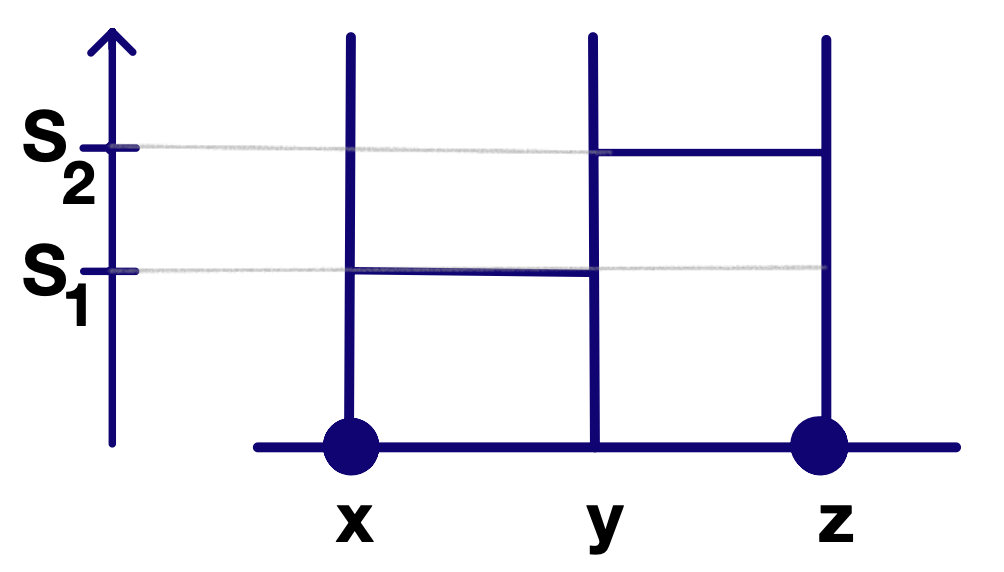

Given , and we define the set as follows. First we let be the connected component of in the graph . Then, we let be the union of the connected components in the graph of as varies in . In general, we introduce iteratively by defining as the union of the connected components in the graph of as varies in . We then set and for , .

Properties and relevance of and will be discussed in Remark 8.1 in Section 8. We can now describe the above mentioned criterion:

Proposition 5.14 (Core for ).

Suppose that

| (24) | |||

| (25) |

Then the family of local functions is a core for .

Remark 5.15.

The above conditions (24) and (25) correspond to a countable family of requests. Indeed, since (24) and (25) are trivially satisfied for as , (24) and (25) are equivalent respectively to (26) and (27):

| (26) | |||

| (27) |

Below we write that in if is an edge of . Since corresponds to the connected component of in , we have if and only if . Given in , let

| (28) |

The following result reduces the verification of (24) and (25) to a percolation problem associated to the random graph :

Proposition 5.16.

6. Applications to SEPs in a random environment

We now consider the SEP with state space and jump probability rates depending on a random environment . More precisely, we have a probability space and the environment is a generic element of .

Due to the results presented in Section 5, to have –a.s. a well defined process built by the graphical construction and enjoying the properties stated in Propositions 5.8, 5.10 and 5.11, it is sufficient to check that for –a.a. Assumption SEP is valid with and . This becomes an interesting problem in percolation theory since one has a percolation problem in a random environment. By restating the content of Propositions 5.1 and 5.2 in [11] in the present setting ( here plays the role of the conductance of there) we have the two criteria below, where () denotes the set of directed (undirected) edges of the lattice .

Proposition 6.1.

[11, Proposition 5.1] Let . Suppose that is stationary w.r.t. shifts. Take and for and otherwise. Then for –a.a. Assumption SEP is satisfied if at least one of the following conditions is fulfilled:

- (i) –a.s. there exists a constant such that for all ;

- (ii) under the random variables are independent when varying in ;

- (iii) for some under the random variables are –dependent when varying in .

The –dependence in Item (iii) means that, given with distance at least , the random fields and are independent (see e.g. [17, Section 7.4]).

Proposition 6.2.

[11, Proposition 5.2] Suppose that there is a measurable map into the set of locally finite subsets of , such that is a Poisson point process (PPP) when is sampled according to . Take . Suppose that, for –a.a. , for any , where is a fixed bounded function such that the map belongs to . Then for –a.a. Assumption SEP is satisfied.

By taking the above proposition implies the following (recall Mott v.r.h. discussed in the Introduction):

Corollary 6.3.

For –a.a. the Mott v.r.h. on a marked Poisson point process (without the mean field approximation) can be built as SEP by the graphical construction and it is a Feller process, whose Markov generator is given by (23) on local functions.

We now give an application of Proposition 5.16 to check that is a core for the generator. We discuss a special case in which (33) can be violated (further generalizations are of course possible; we only aim to illustrate the criterion):

Proposition 6.4.

Let . Take and for and otherwise. Suppose that the random variables with are i.i.d. and have finite -moment for some (i.e. the expectation of is finite). Then, for –a.a. environments , the family of local functions is a core for the generator of the SEP.

We point out that for the above model Assumption SEP is satisfied due to Proposition 6.1.

Proof.

Given the environment , we write and instead of and (cf. Definition 5.1 and Eq. (28)) in order to stress the dependence on . Let be the critical probability for the Bernoulli bond percolation on . for , while for . In both cases we can fix such that for all . Given we consider the undirected graph with vertex set and edges given by the pairs such that (i) or (ii) and . When is an edge of we say that it is open, otherwise we say that it is closed. Note that is a subgraph of . Moreover, under , the random graph corresponds to a Bernoulli bond percolation on [17] with parameter

Since we can fix small enough to assure that . As a consequence, –a.s. the graph is subcritical. Due to the results of subcritical Bernoulli bond percolation (cf. [17, Theorem (5.4)]), there exists such that the –probability that two points are connected in is bounded by . Hence also the –probability that two points are connected in is bounded by , i.e.

| (34) |

where denotes the expectation w.r.t. .

To get that is a core for –a.s. we apply Proposition 5.16. Since we just need that –a.s. the countable family of conditions (29) with and holds, it is enough to prove that given and condition (29) holds –a.s. and to this aim it is enough to show that

| (35) |

By applying the Schwarz inequality several times, using that for since and using (34), as detailed in an example below, we can bound the expectation in (35) by

| (36) |

for some constant determined by and . This yields (35). For example, for , we have

By similar arguments one can check condition (31). In particular, as above, it is enough to prove that given and it holds

| (37) |

The only difference here is that one has to use Hölder’s inequality in the first step. In particular, one has to bound the expectation in (37) by

| (38) |

Due to the stationarity of and our moment assumption, the expectation is bounded uniformly in , while the first expectation in (38) is bounded by (36) as already observed. This concludes the proof. ∎

7. Graphical construction, Markov generator and duality of SSEP: proofs

In this section we detail the graphical construction of the SSEP, paying particular attention to measurability issues. At the end we provide the proofs of Propositions 3.2, 3.3, 3.4, 3.5, 3.6 and Lemma 3.9 presented in Section 3.

Let us start with the graphical construction. For later use we note that is the –algebra generated by the coordinate maps , as varies in (see the metric (6)). Since is generated by the coordinate maps with (see Section 2), we get that is the –algebra generated by the maps as varies in and varies in .

Given , is a càdlàg path with values in , hence has a finite set of jump times on any interval , . Given and , we define as

| (39) |

Since each map is measurable, the same holds for the map . We point out that the path is not necessarily càdlàg. For example, take such that, for ,

Then equals zero for and equals for .

Definition 7.1.

We define as the family of such that

- (i) for all , is finite for all and the map is càdlàg with jumps of value ;

- (ii) for any the path has only jumps of value ;

- (iii) given any in , the set of jump times of and the set of jump times of are disjoint;

- (iv) for all .

Lemma 7.2.

is measurable, i.e. , and .

Proof.

It is a standard fact that the ’s satisfying (ii), (iii) and (iv) form a measurable set with –probability one. By property (ii) for all and the path is weakly increasing. Hence , where . Since the map is measurable, also the set is measurable. Moreover this set has -probability since and, by (C1), . We conclude that is measurable and .

To conclude it is enough to show that . Trivially . To prove that we just need to show that, given and , is càdlàg with jumps of value . Let us first prove that is càdlàg. To this aim we fix and take . We note that , for any and (for the first two properties use that , for the third one use that ). Then, by dominated convergence applied to the sum over with , we get that (i.e. is right-continuous). Similarly, for , we get that (i.e. has left limits). Due to the above identities, if is a jump time of the càdlàg path , then the jump value is . The above expression and properties (ii) and (iii) imply that . ∎

Definition 7.3.

Let . Given and we define as the set of jump times of which lie in . Similarly, given , we define as the set of jump times of which lie in .

Note that, if , then and is finite for all and . Moreover, cannot be a jump time of or since both paths and are càdlàg. In particular, and .

We introduce as an abstract state not in . Given and given , we associate to each an element in as follows:

Definition 7.4 (Definition of for and ).

We first consider the set . If is empty, then we set and we stop. If is nonempty, then we define

as the largest time in and as the unique point in

such that (note that and are well defined since ).

In general, arrived at with , we consider the set . If is empty, then we set and we stop. If is nonempty, then we define as the largest time in and as the unique point in such that .

If the algorithm stops in a finite number of steps, then has been defined by the algorithm itself and belongs to . If the algorithm does not stop, then we set .
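The backward algorithm of Definition 7.4 can be sketched on a finite set with finitely many marks, where it always stops, so the abstract state ∂ never appears. The sketch assumes, consistently with Remark 7.5, that (41) reads the configuration at time t at site x off the initial configuration at the site reached by tracing back from (x, t); the helper names are hypothetical. As a sanity check it compares the result with forward stirring, in which every mark exchanges the contents of the two endpoints of its edge.

```python
import random


def trace_back(x, t, marks):
    """Backward algorithm on finitely many marks.
    marks: list of (time, (a, b)), a jump time of the Poisson process on the
    unordered edge {a, b}; all times are assumed distinct. Starting from site x
    at time t, jump to the other endpoint of the latest mark at the current
    site (times <= t at the first step, strictly earlier afterwards) and stop
    when no such mark exists. Returns the final site."""
    site, bound, first = x, t, True
    while True:
        cands = [(s, e) for s, e in marks
                 if site in e and (s <= bound if first else s < bound)]
        if not cands:
            return site
        s, (a, b) = max(cands, key=lambda m: m[0])
        site = b if a == site else a
        bound, first = s, False


def ssep_by_tracing(eta, t, marks):
    # Assumed form of (41): the occupation of x at time t is the initial
    # occupation of the site reached by tracing back from (x, t).
    return [eta[trace_back(x, t, marks)] for x in range(len(eta))]


def ssep_forward(eta, t, marks):
    # Forward stirring: each mark with time <= t exchanges the contents of a and b.
    eta = list(eta)
    for s, (a, b) in sorted(marks):
        if s <= t:
            eta[a], eta[b] = eta[b], eta[a]
    return eta


if __name__ == "__main__":
    rng = random.Random(7)
    marks = []
    for _ in range(30):
        a = rng.randrange(5)
        marks.append((rng.uniform(0.0, 5.0), (a, a + 1)))
    eta0 = [1, 0, 0, 1, 1, 0]
    print(ssep_by_tracing(eta0, 2.5, marks), ssep_forward(eta0, 2.5, marks))
```

Since the current time strictly decreases at every step and there are finitely many marks, the loop terminates; in the infinite setting of the text this is exactly what can fail, which is why the state ∂ is introduced.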

Remark 7.5.

Note that according to the above algorithm (indeed, in this case as has no jump at time being càdlàg).

We endow the countable set with the discrete topology. Below, when considering functions with domain , we consider as a measurable space with –algebra .

Lemma 7.6.

Given and , the map is measurable.

Proof.

We just need to show that, given , the set is measurable. Let us call the number of steps in the algorithm of Definition 7.4. Below are as in the above algorithm. Moreover, to simplify the notation, we take .

We first take . The above set is then the union of the countable family of sets , where , , and satisfy for . Let us show for example that the set is measurable (the case with is similar, just more involved from a notational viewpoint). We claim that

| (40) |

where

To prove our claim take . Since , we know that the map takes value in and is càdlàg with jumps equal to . Recall that is the last time in the nonempty set . This implies that for . Given and let with . Then, by the above observation, . Since is càdlàg, there exists small such that for all . Therefore, by taking such that and using that , we have that . By definition of we know that has no jumps in , thus implying that . This concludes the proof that, if , then belongs to r.h.s. of (40).

Suppose now that belongs to the r.h.s. of (40). Let us prove that . First we observe that, for some and for all , we can find such that . By definition of we have for all and . To simplify the notation let and . By the above properties and since , has exactly one jump time in . Setting , are subsequent points in . If the above jump time lies in , then it must be and . If the above jump time lies in , then it must be and . This proves that the sequence is weakly decreasing in , while the sequence is weakly increasing in . Since in addition the terms and differ by , we obtain that converges to some from the left and converges to the same from the right. It cannot be otherwise it would be for and we would have (due to the definition of ), thus contradicting the right continuity of . Hence . We claim that and . By taking the limit in the properties defining and using that , and are càdlàg, we get , , , . This implies that and . At this point we have proved our claim (40).

Having (40), which involves countable intersections and unions, the measurability of follows from the measurability of (for the latter recall that the maps and are measurable).

When , the analysis is the same. One has just to consider in addition the event , which is trivially measurable. ∎

Lemma 7.7.

Given and , the path is càdlàg.

Proof.

We prove the càdlàg property at , the case is similar. We fix , . Since , is càdlàg and therefore has no jump in and in for small enough. By the first step in the algorithm in Definition 7.4 we conclude respectively that for any and for for any . ∎

Let us point out an important symmetry (referred to below as reflection invariance) of the law . We define the jump times of the random path . Setting , we have that , , are i.i.d. exponential random variables of parameter . Another characterization is that is a Poisson point process with intensity . As a consequence, given , the set is a Poisson point process on with intensity , i.e. the numbers of points in disjoint Borel subsets of are independent random variables and the number of points in a given Borel subset of is a Poisson random variable with parameter times the Lebesgue measure of . By the above characterization of the Poisson point process, it follows that is also a Poisson point process on with intensity .

Due to the above reflection invariance, the independence of the Poisson processes and the algorithm in Definition 7.4, we get that, by sampling with probability , the random variable is distributed as the state at time of the random walk with conductances starting at , with the convention that corresponds to the explosion of the above random walk.

The above observation will be crucial in proving Lemma 7.9 below. We first give the following definition:

Definition 7.8.

We define the set as .

Lemma 7.9.

The set is measurable and .

Proof.

Let . By Lemma 7.7, . Due to Lemma 7.6 the set is measurable. Due to the above interpretation of the distribution of in terms of the random walk with random conductances and due to Condition (C2), we have . Then, since is the countable intersection of the measurable sets , , we conclude that is measurable and . As , the same holds for . ∎

Definition 7.10.

For each , and , we define as

| (41) |

We note that, given , it holds by Remark 7.5. We refer to Figure 2 for an example illustrating our definitions.

Lemma 7.11.

For fixed , the map is continuous in (for fixed ) and measurable in (for fixed ).

Proof.

We prove the continuity in for fixed . Recall the metric on defined in (4). Fix . We have that if for all . By (41) this holds if for all . Let us now choose large enough that . Since we have for all if , we conclude that whenever we have for all and therefore . This proves the continuity in .

We prove the measurability in for fixed . The Borel –algebra is generated by the sets as varies in . Then to prove the measurability in , for fixed , we just need to show that is measurable for any . By (41) we can rewrite as the countable union . Then the measurability of follows from the measurability of the sets , which follows from Lemmas 7.6 and 7.9. ∎

For the next result recall that .

Lemma 7.12.

For each and , the path belongs to . Moreover, for fixed , the map

| (42) |

is measurable in .

Proof.

Let and . We first check that the path belongs to . We first prove its right continuity, i.e. given we show that . Due to (4) we just need to show that for any . By (41) it is enough to show that, for any , for any sufficiently near to . This follows from Lemma 7.7. We now prove that the path has limit from the left at . Given , by Lemma 7.7, the left limit is well defined. Moreover, since , this limit is in . Let for any . We claim that . Again, due to (4), we just need to show that , i.e. , for any . This follows from the fact that, for each , for all , for small enough. This concludes the proof that belongs to .

As discussed at the end of Section 2, is generated by the maps with varying in . This means that is the smallest –algebra such that the sets of the form , with , are measurable. To prove the measurability of (42) in for fixed , we just need to prove that the inverse image of via (42) is measurable, i.e. that the set is measurable. But this is exactly the measurability in (for fixed ) of the map in Lemma 7.11. ∎

7.1. Proof of Proposition 3.2

Item (i) holds since, as already observed, given it holds by Remark 7.5.

We move to Item (ii). We will use Dynkin’s π–λ Theorem (see e.g. [9]) as in the proof of Item (b) in [25, Theorem 2.4]. We fix , and . Due to Lemma 7.11, for fixed , the maps are continuous. Therefore, also

is continuous in for each fixed . Trivially if and only if for all , otherwise . We then consider the set . Note that as is generated by the coordinate maps, moreover is measurable in by Lemma 7.11. Since and , by dominated convergence and the above continuity in of , we get that the map is continuous.

We now consider the family of sets such that the map is measurable. Due to the above discussion, contains the family given by events of the form with , , and . The above family is a π–system, i.e. if . We claim that, on the other hand, is a λ–system, i.e. (a) , (b) if and , then , (c) if and (i.e. and ), then . Before justifying our claim, let us conclude the proof of Item (ii). By Dynkin’s π–λ Theorem, contains the –algebra generated by , which is indeed as discussed at the end of Section 2. Hence , thus corresponding to Item (ii).

We conclude by proving our claim. The check of (a) and (b) is trivial. Let us focus on (c). Let with . We need to prove that . We first observe that for each path it holds as by definition of . This implies that, for each and , as , where and . Then, by dominated convergence, as , for any . Since the map is measurable. As a byproduct of the above limit, we get that the map is the pointwise limit of measurable functions, and therefore it is measurable. This concludes the proof of (c).

We now focus on Item (iii). The proof is very close to the one of Item (c) in [25, Theorem 2.4], although the graphical construction is different. Given and , we define as the element of such that . We note that for any . We also denote by the collection of functions as varies in . We claim that for all and it holds

| (43) |

To check the above identity, observe that by the graphical construction where , and therefore (defining as in (43))

Take now and . We can think of as a subset of . We set . Then, using (43),

| (44) |

Since and depend only on , which is independent under from and since and have the same law under , we have

By collecting our observations we get

The above family of identities leads to Item (iii).

7.2. Proof of Proposition 3.3

Since is compact, is uniformly bounded. For fixed the map is measurable (as a composition of measurable functions, see Lemma 7.11) and is bounded in modulus by . For fixed and a sequence in with , due to the continuity in of (see Lemma 7.11) and due to the continuity of , we have as for all . Then, by dominated convergence, we get that as .

7.3. Proof of Proposition 3.4

Let be a local function and take finite such that is defined in terms only of with . We set . By (C1) we have

| (45) |

From the above bound and since it is simple to prove that the r.h.s.’s of (7) and (8) are absolutely convergent series in defining the same function, that we denote by . Hence we just need to prove that .

From now on is assumed to be in (see Definition 7.8), which has -probability one. Since , with sampled by , is a Poisson random variable with finite parameter , it holds

| (46) |
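The mechanism behind the generator computation is that, over a short window of length h, a Poisson clock with finite rate rings at least twice only with probability of order h². The precise form of (46) is not legible in this version; assuming it expresses this fact, the sketch below evaluates P(N ≥ 2) = 1 − e^{−x}(1 + x) for N Poisson with mean x = λh and checks numerically that it is o(h). The rate value and function name are hypothetical.

```python
import math


def prob_at_least_two(lam, h):
    # P(N >= 2) for N ~ Poisson(lam * h): 1 - e^{-x} (1 + x) with x = lam * h,
    # written with expm1 to limit cancellation for small x.
    x = lam * h
    return -math.expm1(-x) - x * math.exp(-x)


if __name__ == "__main__":
    lam = 3.0  # hypothetical finite total rate
    for h in (1e-1, 1e-2, 1e-3):
        p = prob_at_least_two(lam, h)
        print(h, p, p / h, p / (lam * h) ** 2)  # p/h -> 0, p/(lam h)^2 -> 1/2
```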

Recall that . We introduce on the total order such that if and only if . When , we define the pair as the only edge in such that , with the rule that we call the minimal (w.r.t. ) point in . This rule is introduced in order to have a univocally defined labelling of the points in the above edge.

Recall (39). We observe that implies that for all with , , , for all and . implies also that if . Let . By the above observations and the graphical construction in Definition 7.4, also implies that for , and . Hence, on when occurs.

As already observed, if and , then must occur. Hence

| (47) |

We claim that, as ,

| (48) |

To prove our claim we estimate

| (49) |

Note that in the last bound we have used that is a Poisson random variable with parameter , which is independent of . By (45) and the dominated convergence theorem applied to the r.h.s. in (49), we get that the r.h.s. of (49) is . By combining this result with (47), we get (48).

Using that , when , (46) and (48), we can write

| (50) |

Above, to simplify the notation, for the intersection of events we used the comma instead of the symbol (we keep the same convention also below).

If in (50) we replace by , the global error is of order . Indeed, since the first event is included in the second one, we have

and the last expression is of order due to (48). By making the above replacement we have

| (51) |

We apply the dominated convergence theorem to the measure on giving weight to and to the –parametrized functions . The above functions are dominated by the constant function , which is integrable as . By dominated convergence we conclude that . By combining this observation with (51) we conclude that

The above expression implies that and that .

7.4. Proof of Proposition 3.5

We first point out a property of frequently used also in the rest: if and satisfy for all for some (possibly ), then it holds

| (52) |

The proof of (52) is standard. Indeed, define , , by setting if and if (recall that ). Then and as , thus implying that due to the continuity of and therefore . To conclude the proof of (52) it is enough to observe that and differ at most at (thus implying that ) and are equal if .
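Formula (52) is not fully legible in this version. Assuming it is the bound delivered by the telescoping argument above, namely |f(η) − f(ξ)| ≤ Σ over the sites x where η and ξ differ of Δ_x f, with Δ_x f = sup |f(η^x) − f(η)| and η^x the configuration η flipped at x, the argument can be checked exhaustively on {0,1}^n. The sketch builds the interpolating configurations explicitly; all names are hypothetical.

```python
import itertools
import random


def flip(eta, x):
    # eta^x: the configuration eta flipped at site x.
    out = list(eta)
    out[x] = 1 - out[x]
    return tuple(out)


def oscillations(f, n):
    # Delta_x f = max_eta |f(eta^x) - f(eta)| over {0, 1}^n, for each site x.
    configs = list(itertools.product((0, 1), repeat=n))
    return [max(abs(f(flip(e, x)) - f(e)) for e in configs) for x in range(n)]


def telescoping_chain(eta, xi):
    # eta^(k) agrees with xi on sites 0..k-1 and with eta elsewhere;
    # eta^(0) = eta, eta^(n) = xi, and consecutive terms differ at most at site k.
    n = len(eta)
    return [tuple(xi[:k]) + tuple(eta[k:]) for k in range(n + 1)]


def bound_holds(f, n, tol=1e-12):
    # Check |f(eta) - f(xi)| <= sum over sites where eta, xi differ of Delta_x f.
    delta = oscillations(f, n)
    configs = list(itertools.product((0, 1), repeat=n))
    for eta in configs:
        for xi in configs:
            chain = telescoping_chain(eta, xi)
            steps = sum(abs(f(chain[k + 1]) - f(chain[k])) for k in range(n))
            bound = sum(delta[x] for x in range(n) if eta[x] != xi[x])
            if abs(f(eta) - f(xi)) > steps + tol or steps > bound + tol:
                return False
    return True


if __name__ == "__main__":
    rng = random.Random(0)
    table = {c: rng.random() for c in itertools.product((0, 1), repeat=4)}
    print(bound_holds(table.__getitem__, 4))
```

In the text the same chain is infinite, and the continuity of f is what allows passing to the limit along it.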

Let now be as in Proposition 3.5. We set . Since by (52), we get . Then, since , it holds

| (53) |

This proves the absolute convergence of as series of functions in . Let us call the resulting function.

Since is a Markov generator, its graph is closed in (cf. [20, Chapter 1]). Hence, to conclude, it is enough to exhibit a sequence of local functions such that and as (indeed this implies that and ). To this aim, given , we define if and otherwise. We also set . Trivially is a local function. Moreover, by (52), , which goes to zero as since . It remains to prove that . To this aim we fix . Due to (53) and since , we can fix such that

| (54) |

Note that , hence (54) holds also with instead of for any . As a consequence and due to the series representation of and (cf. (8)) we conclude that

| (55) |

For and we have that and coincide on and also and coincide on and therefore by (52)

| (56) |

By combining (55) and (56) and setting , we conclude that for . Hence, by the bound , . By the arbitrariness of we conclude that , thus proving the proposition.

Remark 7.13.

For later use we stress that, in the proof of Proposition 3.5, for any with and we have built a sequence such that and as .

7.5. Proof of Proposition 3.6

We set . We start by showing that if is a core then also is a core. If is a core, then for any there exists a sequence such that and as . To prove that is a core, it is then enough to show that for any there exists a sequence such that and . This follows from the proof of Proposition 3.5 and in particular from Remark 7.13.

Now it remains to show that is a core for . By a slight modification of [8, Proposition 3.3] it is enough to show the following: (i) is dense in ; (ii) and (iii) for any and . The difference with [8, Proposition 3.3] is that in (iii) we require that only for and not for all . The reader can easily check that the short proof of [8, Proposition 3.3] still works. Indeed, the invariance of is used in the proof only to show that, given , belongs to .

Let us check the above properties (i), (ii) and (iii). Property (i) is well known [20]. The inclusion in (ii) follows from Proposition 3.5 since and for any . If we prove that (thus completing (ii)), then automatically we have (iii) since, by definition of , for any . Hence, it remains to prove that . To this aim we first show that, given ,

| (57) |

where the probability refers to the random walk starting at . To prove (57) suppose that and in are equal except at . Recall that, by the graphical construction, and . Since and , we get that for all such that . Therefore, by (52),

It then follows that

From the above estimate we get (57).

7.6. Proof of Lemma 3.9

We need to prove for any that

| (58) |

Since functions are finite linear combinations of Kronecker’s functions, it is enough to consider the case for a fixed . To this aim we write for the number of jumps performed by the random walk in the time window . Recall that . We write for the probability on the path space associated to the random walk starting at . We have

As a consequence (recall that ) to get (58) it is enough to prove the following:

| (59) | |||

| (60) | |||

| (61) |

(59) is trivial. (60) can be rewritten as (recall that )

Since and for all , the above limit follows from the dominated convergence theorem if we show that . To this aim we observe that, since , it must be . Then we can bound .

8. Graphical construction and Markov generator of SEP: proofs

We start with the proof of Lemma 5.5.

Proof of Lemma 5.5.

Trivially it is enough to prove the first bound. To this aim we argue by contradiction and assume that Assumption SEP holds and that for some . Without loss of generality, we can suppose that (see (3)). Due to the superposition property of independent Poisson point processes, under , , , is a Poisson process with parameter . Hence, for fixed and , . Since by hypothesis , we conclude that . Since in addition the sequence of events is monotone decreasing, we get that . Note that means that has degree at most in the graph . As a consequence, for any fixed , –a.s. the vertex has infinite degree in the graph , thus contradicting Assumption SEP. ∎

Recall the set introduced in Definition 5.6. Moreover, recall the sets and introduced in Definition 5.13.

Remark 8.1.

By the graphical construction and since , is a finite set and is a Borel subset of for all . Fix now with . Then, for any , the value depends on only through the restriction of to and, knowing that , it depends on on through the values with in and .

The following lemma is the SEP version of Lemma 7.11:

Lemma 8.2.

For fixed , the map is continuous in (for fixed ) and measurable in (for fixed ).

Proof.

For we have and the claim is trivially true. We fix and take such that .

We first prove the continuity in for fixed . By (4), given , we have if for all . Recall Definition 5.13 and set . By Remark 8.1 we have for all whenever and coincide on , which is automatically satisfied if is small enough since is finite. This proves the continuity in .

We now prove the measurability in for fixed . We just need to prove that, fixed and , the set is measurable in . Given finite, call the event in that the SEP on built by the standard graphical construction on has value at at time ( is given by the pairs with in ). Since in this case all is finite, is Borel in . We define as the Borel set in obtained as product set of with . Then, see Remark 8.1,

By Remark 8.1 the set is Borel in , which concludes the proof. ∎

Lemma 8.3.

For each and , the path belongs to . Moreover, for fixed , the map

| (63) |

is measurable in .

Proof.

Let us show that belongs to . We start with the right continuity. Fix and let with . Due to (4) we just need to show that for any . By Remark 8.1, as , the set is finite and if has no jump time in for all with . Since is finite and the jump times of form a locally finite set, we conclude that the above condition is satisfied for sufficiently close to . This proves the right continuity. To prove that has left limit in , by (4) we just need to show that, for any , has left limit in , i.e. it is constant in the time window for some . By Remark 8.1 and since this follows from the fact that, by taking close to , for all with the path has no jump in (as is finite).

8.1. Proof of Propositions 5.8 and 5.10

The proof of Proposition 5.8 is verbatim the proof of Proposition 3.2, with Lemma 7.11 replaced by Lemma 8.2, except for the derivation of (43) (which can nevertheless be obtained from the graphical construction). The proof of Proposition 5.10 is verbatim the proof of Proposition 3.3, with Lemma 7.11 replaced by Lemma 8.2.

8.2. Proof of Proposition 5.11

The proof largely follows [11, Appendix B]. Since the notation there is slightly different and some steps have to be changed, we give the proof for completeness.

Below will always vary in , without further mention. Given we denote by the undirected graph with vertex set and edge set . As is a subgraph of , all connected components of the graph are finite. Moreover, as , it is easy to check that can be obtained by the graphical construction of Section 5 but working with the graph instead of .
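What makes a construction on a graph with finite connected components workable is that it can be run component by component. On a finite window, splitting an undirected graph into its connected components is a union-find computation, sketched below. The sketch is ours. The function name `components` and the input format (a finite vertex collection and a list of pairs) are hypothetical, and the list of pairs stands for whatever edge set defines the graph in the proof.

```python
def components(sites, edges):
    """Connected components of the undirected graph (sites, edges)."""
    parent = {x: x for x in sites}

    def find(x):
        # Follow parent pointers to the root, halving paths along the way.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Merge the components of the two endpoints of every edge.
    for x, y in edges:
        rx, ry = find(x), find(y)
        if rx != ry:
            parent[rx] = ry

    # Group vertices by the root of their tree.
    comps = {}
    for x in sites:
        comps.setdefault(find(x), set()).add(x)
    return list(comps.values())

# Usage: six vertices, three edges.
print(components(range(6), [(0, 1), (1, 2), (4, 5)]))  # [{0, 1, 2}, {3}, {4, 5}]
```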

Let be a local function such that depends only on with and finite. We set . By Lemma 5.5 we have

| (64) |

By (64) it is easy to check that the r.h.s. of (23) is an absolutely convergent series in , thus defining a function in that we denote by . Hence we just need to prove that .
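For a concrete picture of the series in (23) on a finite set, here is a minimal sketch. It assumes that (23) has the standard SEP form L f(η) = Σ_{x,y} r(x,y) η(x)(1 − η(y)) [f(η^{x,y}) − f(η)], where η^{x,y} exchanges the values at x and y. That form is our assumption, since the display is not reproduced here. The helper name `sep_generator` is hypothetical. The locality shortcut in the loop (pairs not touching the support of f contribute zero) is the same mechanism that makes the series converge absolutely.

```python
def sep_generator(f, support, eta, rates):
    """Apply the (assumed) SEP generator to a local function f at eta:
        L f(eta) = sum_{x,y} r(x,y) eta(x) (1 - eta(y)) [f(eta^{x,y}) - f(eta)].
    f depends only on the sites in `support`, so a pair (x, y) with neither
    endpoint in `support` leaves f unchanged and can be skipped.
    """
    total = 0.0
    for (x, y), r in rates.items():
        if x not in support and y not in support:
            continue  # f(eta^{x,y}) == f(eta)
        if eta[x] == 1 and eta[y] == 0:
            jumped = dict(eta)
            jumped[x], jumped[y] = 0, 1
            total += r * (f(jumped) - f(eta))
    return total

# Usage: f(eta) = eta(1), a function supported on site 1.
rates = {(0, 1): 2.0, (1, 0): 0.5, (1, 2): 3.0, (2, 1): 1.0}
eta = {0: 1, 1: 0, 2: 1}
print(sep_generator(lambda e: e[1], {1}, eta, rates))  # 3.0
```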

Since is a Poisson random variable with finite parameter , we have . When , we define the pair as the unique edge in such that . To have an unambiguous labelling, as in the proof of Proposition 3.4, if the pair has only one point in , then we call this point and the other one . Otherwise, we call the minimal (w.r.t. the enumeration (3)) point inside the pair.
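The Poisson estimates used in this kind of argument are elementary. Below is a generic version for a Poisson random variable N with parameter a ≥ 0, where a plays the role of the finite parameter above. Which of these bounds the proof uses is not shown in the extracted text.

```latex
\begin{align*}
P(N\ge 1) &= 1-e^{-a}\le a,\\
P(N\ge 2) &= 1-e^{-a}(1+a) =: g(a), \qquad g(0)=0,\quad g'(a)=a\,e^{-a}\le a,\\
\text{hence}\quad P(N\ge 2) &\le \int_0^a s\,ds=\frac{a^2}{2}.
\end{align*}
```

In particular, two or more rings within a short window are of second order in the parameter.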

Claim 8.4.

Let be the event that (i) and (ii) is not a connected component of . Then .

Proof of Claim 8.4.

We first show that , where

To this end, suppose first that and . Then must be a connected component in (otherwise we would contradict ). Hence, implies that and . By , is not a connected component of , and therefore there exists a point such that or . The first case cannot occur as , and . For the same reason, in the second case it must be . Hence, there exists such that , thus concluding the proof that .

To prove that , it is enough to show that . To this end, we first estimate by

By applying the dominated convergence theorem to the measure giving weight to the pair with and and by using (64), we get . ∎

We define as the event that (i) and (ii) is a connected component of . Since and due to Claim 8.4 we get

| (65) |

We set . Given we set

Trivially, by the graphical construction, if , then for all . On the other hand, if takes place, then for all if and , otherwise for all . By (65) and the above observations and since , we can write

As and is bounded we can rewrite the above r.h.s. as

As uniformly in , by the dominated convergence theorem (use (64)) we can conclude that .
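The limit just obtained says that, on local functions, the generator is the time derivative at t = 0 of the expectation of f along the process. Here is a toy numerical check under our own assumptions. There are two sites {0, 1} and a single particle started at 0, so the SEP reduces to a two-state chain. The jump rates are a = r(0,1) and b = r(1,0), the local function is f(η) = η(1), and the generator is written in the standard SEP form Σ r(x,y) η(x)(1 − η(y)) [f(η^{x,y}) − f(η)]. The helper name `prob_at_site1` is hypothetical.

```python
import math

def prob_at_site1(a, b, t):
    """Two-state chain: probability that a particle started at site 0 sits at
    site 1 at time t, when it jumps 0 -> 1 at rate a and 1 -> 0 at rate b."""
    # a / (a + b) * (1 - exp(-(a + b) t)); expm1 avoids cancellation for small t.
    return -a / (a + b) * math.expm1(-(a + b) * t)

a, b, t = 2.0, 0.5, 1e-6
# eta has its particle at 0, so f(eta) = eta(1) = 0 and E[f(eta_t)] = prob_at_site1(a, b, t).
difference_quotient = (prob_at_site1(a, b, t) - 0.0) / t
# Only the pair (0, 1) contributes: L f(eta) = r(0,1) * 1 * (1 - 0) * (1 - 0) = a.
generator_value = a
print(difference_quotient, generator_value)
```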

8.3. Proof of Proposition 5.12

The proof is a slight modification of the proof of Proposition 3.5 for the symmetric case. We give it for the reader’s convenience. Let be as in Proposition 5.12 and let . Since by (52), and

| (66) |

This proves the absolute convergence of as a series of functions in . Let us call the resulting function.

Since is closed, to conclude it is enough to exhibit a sequence of local functions such that and as . To this end, define , and as in the proof of Proposition 3.5. By what was proved there, we know that is a local function and , hence it goes to zero as since . It remains to prove that . We fix . Due to (66) we can fix such that

| (67) |

Since , (67) also holds with instead of for any . As a consequence, due to the series representation of and (cf. (23)) and due to (56), we conclude that for

| (68) |

Setting , we have proved that for . Hence, by the bound and the arbitrariness of , .

Remark 8.5.

For later use we stress that, in the above proof, for any with and we have built a sequence such that and as .

8.4. Proof of Proposition 5.14

We set . As in the proof of Proposition 3.6, due to Remark 8.5, we get that if is a core, then so is . Moreover, due to [8, Proposition 3.3], to show that is a core it is enough to prove the following: (i) is dense in ; (ii) and (iii) for any and . Given Proposition 5.12, the only nontrivial property to check is that . So we focus on this.

First we show that, for any and , it holds

| (69) |

Given , let be equal except at most at . Then, by denoting by the expectation w.r.t. , we get

| (70) |

By Remark 8.1 and the graphical construction of SEP, we get that if . Hence, by (52), we can bound the last term in (70) by

| (71) |

By combining (70) and (71) we have

| (72) |

From (72) we get

(73)

(74)

Given , we have for all except for a finite set. Hence, we get due to (24) and (73), while due to (25) and (74). This completes the proof that .
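The bounds (70)–(71) rest on running the SEP from two configurations that differ at most at one site, using the same graphical construction. Below is a minimal sketch of this coupling on a finite set. It assumes the usual convention that a ring of the clock on (x, y) moves a particle from x to y when y is empty. The function `coupled_sep` and the dictionary encoding are hypothetical. On a finite set the coupling preserves the coordinatewise order, and each process conserves its number of particles. So two configurations differing at exactly one site keep differing at exactly one site (a second-class particle).

```python
import random

def coupled_sep(eta0, zeta0, rates, t_max, seed=0):
    """Basic coupling of two SEPs on a finite set: both use the SAME Poisson
    clocks on ordered pairs (x, y). A ring on (x, y) moves a particle from x
    to y in each configuration where x is occupied and y is empty."""
    rng = random.Random(seed)
    events = []
    for (x, y), r in rates.items():
        if r <= 0:
            continue
        s = rng.expovariate(r)
        while s <= t_max:
            events.append((s, x, y))
            s += rng.expovariate(r)
    events.sort()
    eta, zeta = dict(eta0), dict(zeta0)
    for _, x, y in events:
        for conf in (eta, zeta):  # same ring applied to both configurations
            if conf[x] == 1 and conf[y] == 0:
                conf[x], conf[y] = 0, 1
    return eta, zeta

# Usage: nearest-neighbour rates on {0,...,4}; zeta0 differs from eta0 only at site 1.
rates = {}
for x in range(4):
    rates[(x, x + 1)] = 1.0
    rates[(x + 1, x)] = 0.7
eta0 = {0: 1, 1: 1, 2: 0, 3: 1, 4: 0}
zeta0 = dict(eta0)
zeta0[1] = 0
eta_t, zeta_t = coupled_sep(eta0, zeta0, rates, t_max=5.0, seed=1)
print([x for x in eta_t if eta_t[x] != zeta_t[x]])  # a single site
```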

8.5. Proof of Proposition 5.16

As already observed after Proposition 5.14, (24) is equivalent to (26) and (25) is equivalent to (27). Both (26) and (27) can be rewritten as

| (75) |

By definition of , given we have the following equality of events (where is the random object):

| (76) |

where , and so on. We point out that, when , (76) has to be read as . Since under the graphs , , ,… are i.i.d. and since , by (76) and a union bound we have

| (77) |

Hence we get

| (78) |

Trivially, by setting and and using that , the last sum can be written as .

Appendix A Derivation of (1)

In this appendix we show that Conditions (3.3) and (3.8) in [20, Chapter I.3] are both equivalent to (1). In the notation of [20, Chapter I.3], given in , one defines the measure on as , and one sets for with .

Condition (3.3) in [20, Chapter I.3] is given by , where . In our case we have

and therefore the above-mentioned condition is equivalent to (1).
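The equivalence amounts to comparing a maximum with a sum. The following sketch writes c(x,y) for the jump rates and rests on two assumptions (our reading, since the displays are not reproduced here). First, for T = {x, y}, the rate c_T of [20, Chapter I.3] equals max{c(x,y), c(y,x)}. Second, (1) is the bound sup_x Σ_y (c(x,y) + c(y,x)) < ∞. Under these assumptions:

```latex
\[
\max\{c(x,y),c(y,x)\} \;\le\; c(x,y)+c(y,x) \;\le\; 2\max\{c(x,y),c(y,x)\},
\]
so that, summing over $y\neq x$ and taking the supremum over $x$,
\[
\sup_x \sum_{y\neq x} \max\{c(x,y),c(y,x)\} <\infty
\iff
\sup_x \sum_{y\neq x} \big(c(x,y)+c(y,x)\big) <\infty .
\]
```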

Given and , as in [20, Chapter I.3] we define

where denotes the total variation norm. In our case, if then . If with in , then

Since Condition (3.8) in [20, Chapter I.3] is given by , this condition is also equivalent to (1).

Acknowledgements. I thank the anonymous referee for the corrections and comments. I am very grateful to Ráth Balázs for the stimulating discussions during the Workshop “Large Scale Stochastic Dynamics” (2022) at MFO. I also thank the organizers of this event. I thank the very heterogeneous population (made of different animal and plant species) of my homes in Codroipo and Rome, where this work has been written.

References

- [1] V. Ambegaokar, B.I. Halperin, J.S. Langer; Hopping conductivity in disordered systems. Phys. Rev. B 4, 2612–2620 (1971).

- [2] M.T. Barlow, J.-D. Deuschel; Invariance principle for the random conductance model with unbounded conductances. Ann. Probab. 38, 234–276 (2010).

- [3] M. Biskup; Recent progress on the random conductance model. Probab. Surveys 8, 294–373 (2011).

- [4] A. Chiarini, S. Floreani, F. Redig, F. Sau; Fractional kinetics equation from a Markovian system of interacting Bouchaud trap models. arXiv:2302.10156

- [5] A. Chiarini, A. Faggionato; Density fluctuations of symmetric simple exclusion processes on random graphs in with random conductances. In preparation.

- [6] A. De Masi, P.A. Ferrari, S. Goldstein, W.D. Wick; An invariance principle for reversible Markov processes. Applications to random motions in random environments. J. Stat. Phys. 55, 787–855 (1989).

- [7] R. Durrett; Ten lectures on particle systems. In: P. Bernard (ed.), Lectures on Probability Theory. Lecture Notes in Mathematics 1608, 97–201. Springer, Berlin, 1995.

- [8] S.N. Ethier, T.G. Kurtz; Markov processes: characterization and convergence. J. Wiley, New York, 1986.

- [9] R. Durrett; Probability - Theory and Examples. Cambridge Series in Statistical and Probabilistic Mathematics, Cambridge University Press, Cambridge 2019.

- [10] D.J. Daley, D. Vere-Jones; An Introduction to the Theory of Point Processes. New York, Springer Verlag, 1988.

- [11] A. Faggionato; Hydrodynamic limit of simple exclusion processes in symmetric random environments via duality and homogenization. Probab. Theory Relat. Fields 184, 1093–1137 (2022).

- [12] A. Faggionato; Stochastic homogenization of random walks on point processes. Ann. Inst. H. Poincaré Probab. Statist. 59, 662–705 (2023).

- [13] A. Faggionato; Scaling limit of the directional conductivity of random resistor networks on simple point processes. Ann. Inst. H. Poincaré Probab. Statist., to appear (preprint arXiv:2108.11258)

- [14] A. Faggionato; Graphs with random conductances on point processes: quenched RW homogenization, SSEP hydrodynamics and resistor networks. Forthcoming.

- [15] M. Fukushima, Y. Oshima, M. Takeda; Dirichlet forms and symmetric Markov processes. Second edition. De Gruyter, Berlin, 2010.

- [16] P. Gonçalves, M. Jara; Scaling limit of gradient systems in random environment. J. Stat. Phys. 131, 691–716 (2008).

- [17] G. Grimmett; Percolation. Second edition. Die Grundlehren der mathematischen Wissenschaften 321. Springer, Berlin, 1999.

- [18] T.E. Harris; Nearest neighbor Markov interaction processes on multidimensional lattices. Adv. in Math. 9, 66–89 (1972).

- [19] T.E. Harris; Additive set-valued Markov processes and graphical methods. Ann. Probab. 6, 355–378 (1978).

- [20] T. M. Liggett; Interacting particle systems. Grundlehren der Mathematischen Wissenschaften 276, Springer, Berlin, 1985.

- [21] T. M. Liggett; Stochastic interacting systems: contact, voter and exclusion processes. Grundlehren der mathematischen Wissenschaften 324, Springer, Berlin, 1999.

- [22] A. Miller, E. Abrahams; Impurity conduction at low concentrations. Phys. Rev. 120, 745–755 (1960).

- [23] N.F. Mott; Electrons in glass. Nobel Lecture, 8 December, 1977. Available online at https://www.nobelprize.org/uploads/2018/06/mott-lecture.pdf

- [24] R. Meester, R. Roy; Continuum percolation. Cambridge Tracts in Mathematics 119. First edition, Cambridge University Press, Cambridge, 1996.

- [25] T. Seppäläinen; Translation Invariant Exclusion Processes. Online book available at https://www.math.wisc.edu/~seppalai/excl-book/ajo.pdf, 2008.