Grasping in the Dark: Zero-Shot Object Grasping Using Tactile Feedback

Abstract

Grasping and manipulating a wide variety of objects is a fundamental skill that will determine the success and widespread adoption of robots in homes. Several end-effector designs for robust manipulation have been proposed, but they mostly work only when provided with prior information about the objects or when equipped with external sensors for estimating object shape or size. Such approaches are limited to many-shot or known objects and are prone to estimation errors from external estimation systems.

We propose an approach to grasp and manipulate previously unseen or zero-shot objects, i.e., objects without any prior on their shape, size, material and weight properties, using only feedback from tactile sensors, in contrast to the state-of-the-art. Such an approach provides robust manipulation of objects either when the object model is not known or when it is estimated incorrectly by an external system. Our approach is inspired by how animals and humans manipulate objects, i.e., by using feedback from their skin. Our grasping and manipulation revolves around the simple notion that objects slip if not grasped stably. This slippage can be detected and counteracted for a robust grasp that is agnostic to the type, shape, size, material and weight of the object. At the crux of our approach is a novel tactile feedback based controller that detects and compensates for slip during grasp. We successfully evaluate and demonstrate our proposed approach on many real world experiments using the Shadow Dexterous Hand equipped with BioTac SP tactile sensors for different object shapes, sizes, weights and materials. We obtain an overall success rate of 73.5%.

I Introduction

Robotic agents, and their respective research fields, have generally proven useful in structured environments crafted specifically for them to operate in. As robotics researchers, we envision robots performing various tasks in our homes in the near future. For such robots to be successful when deployed “in the wild”, they have to grasp and manipulate objects of various shapes, sizes, materials and weights, which may or may not be present in their database.

Having the ability to grasp robustly and repeatedly is the primary way by which robots can affect their environment, and is the first step to performing more complicated and involved tasks. Robust grasping involves reliable perception of the object form (inference of pose, type of object and other properties) before grasping and a robust and continuous feedback loop to ensure that the object does not slip (or fall) during grasping and manipulation.

In this work, we focus on the latter since it is required for grasping previously unseen or zero-shot objects, i.e., objects of unknown size, shape, material and weight, utilizing tactile feedback. Our method is inspired by the amazing grasping abilities of animals and humans [1, 2] for novel objects, and how they are able to “grasp in the dark” (with their eyes closed). To showcase that our method does not rely on an external perception input but rather only relies on tactile proprioception, we also demonstrate our method working on transparent objects that cannot be sensed robustly using traditional perception hardware, such as cameras or LIDARs. We formally define our problem statement and summarize a list of our contributions next.

I-A Problem Formulation and Contributions

A gripper is equipped with tactile sensors and an object of unknown shape, size, material and weight is placed in front of the gripper. The problem we address is as follows: Can we grasp and manipulate an unseen and unknown object (zero-shot) using only tactile sensing?

We postulate that a robust grasp is achieved when the force applied to an object is just sufficient to counteract gravity, thus suspending the object in a state of static friction between the object and the finger. Our framework allows for robust grasping of previously unseen (zero-shot) objects of varied shapes, sizes, and weights in the absence of visual input, due to our reliance solely on tactile feedback. Humans are capable of this feat from an early age [3], and it is an important ability to have in scenarios where visual perception is unavailable due to occlusions or where the object is not present in the robot’s knowledge-base. Our contributions are summarized as follows:

- We propose a tactile-only grasping framework for unseen or zero-shot objects.

- Extensive real-world experiments showing the efficacy of the proposed approach on a variety of common day-to-day objects of various shapes, sizes, textures and rigidity. We also include challenging objects such as a soft-toy and a transparent cup demonstrating that our approach is robust.

II Related Work

Most of the research in tactile-related grasping can be broadly divided into three categories: those that rely on purely tactile input, those that use vision-based approaches, and those that perform end-to-end learning of grasping in simulation and attempt to transfer it to a real robot. We discuss some of the recent work in all three categories, along with their respective pros and cons.

II-1 Tactile Grasping

In 2015, [4] presented their force estimation and slip detection for grip control using the BioTac sensors where they try to classify “slip events” by looking at force estimation for the fingers. They proposed a grip controller, which helps adapt the grasp if slip is detected. They only used the pressure sensor data and only considered 2 or 3 fingers, and compared it with an IMU placed on the object itself.

In 2016, the BiGS: BioTac Grasp Stability dataset [5] was released, which equipped a Barrett three-fingered hand with BioTac sensors and measured grasp stability on a set of objects, classified into cylindrical, box-like and ball-like geometries. In 2018, [6] presented their work on non-matrix tactile sensors, such as the BioTac, and how to exploit the local connectivity to predict grasp stability. They introduced the concept of “tactile images” and used only single readings of the sensor to achieve a high rate of detection compared to multiple sequential readings.

In 2019, TactileGCN [7] was presented. The authors used a graph CNN to predict grasp stability and they used the BioTac sensor data to construct the graph, but used only three fingers to grasp. They employed the concept of “tactile images” to convert grasp stability into an image classification problem. Their approach only deals with static grasps and does not consider the dynamic interaction between objects and the fingers.

Another work [8] tackled this problem by extracting features from high-dimensional tactile images and inferring relevant information to improve grasp quality, but the approach is restricted to flat, dome- and edge-like shapes.

The work by [9] used the FingerVision [10] sensor mounted on a parallel gripper to generate a set of tactile manipulation skills, such as stirring, in-hand rotation, and opening objects with a specified force. However, FingerVision is only appropriate for demonstrating proof of concept since it has a large form factor and is not robust.

In 2020, a new tactile sensor, “DIGIT”, was presented in [11], together with a method that learns to manipulate small objects with a multi-fingered hand from raw, high-resolution tactile readings. In [12], the authors use the BioTac sensor as a way to stabilize objects during grasp using a grip force controller. The underlying assumption is that the shape of the object is known a priori, and repeatability with different shapes and sizes remains an ongoing challenge.

II-2 Visual Input (with Tactile Input) Grasping

In [13], the authors demonstrate a data set of slow-motion actions (picking and placing) organized as manipulation taxonomies. In [14], an end-to-end action-conditioned grasping model is trained in a self-supervised manner that learns re-grasping from raw visuo-tactile data, where the robot receives tactile input intermittently. The work in [15] leverages the innovation in machine vision, optimization and motion generation to develop a low-cost glove-free teleoperation solution to grasping and manipulation.

II-3 Simulation Based Grasping

Perhaps the most popular papers in this category are [16, 17] from OpenAI, in which the authors demonstrate a massively parallel learning environment for the Shadow Dexterous Hand and learn in-hand manipulation of a cube, as well as the solving of a Rubik’s cube, entirely in simulation, after which they transfer the learned policies to a physical robot. While impressive, their transfer learning approach requires near-perfect information about the joint angles of the Hand, as well as visual feedback regarding the position of the object. Levine, et al. [18] perform large-scale data collection and training on 14 manipulators for learning hand-eye coordination, going directly from pixel-space to task-space. In [19], a model-free deep reinforcement learning approach that can be scaled up to learn a variety of manipulation behaviors in the real world is proposed, using general purpose neural networks. State-Only Imitation Learning (SOIL) is developed in [20] by training an inverse dynamics model to predict the action between consecutive states. Research using perception and learning has seen limited progress because vision does not provide any information regarding contact forces and regularly fails to reconstruct the scene due to occlusion or the material properties of the object. In addition, the process of learning is time consuming, requires large amounts of data, and sometimes does not transfer to a real robot [21].

II-A Organization of the paper

We provide an overview of our approach in Sec. III, followed by a detailed description of our hardware setup and software pipeline in Secs. IV and V. We then present our experiments in Sec. VI along with an analysis of the results in Sec. VII. Finally, we conclude our work in Sec. VIII with parting thoughts for future work.

III Overview

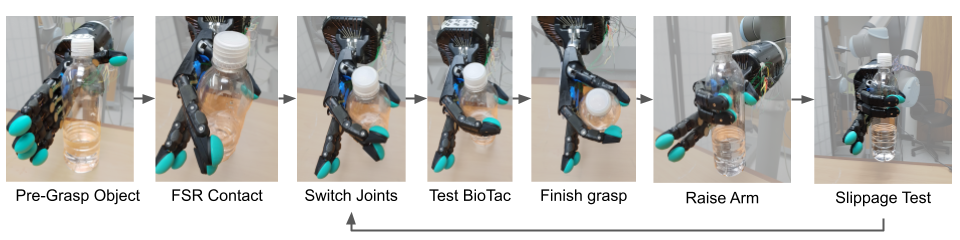

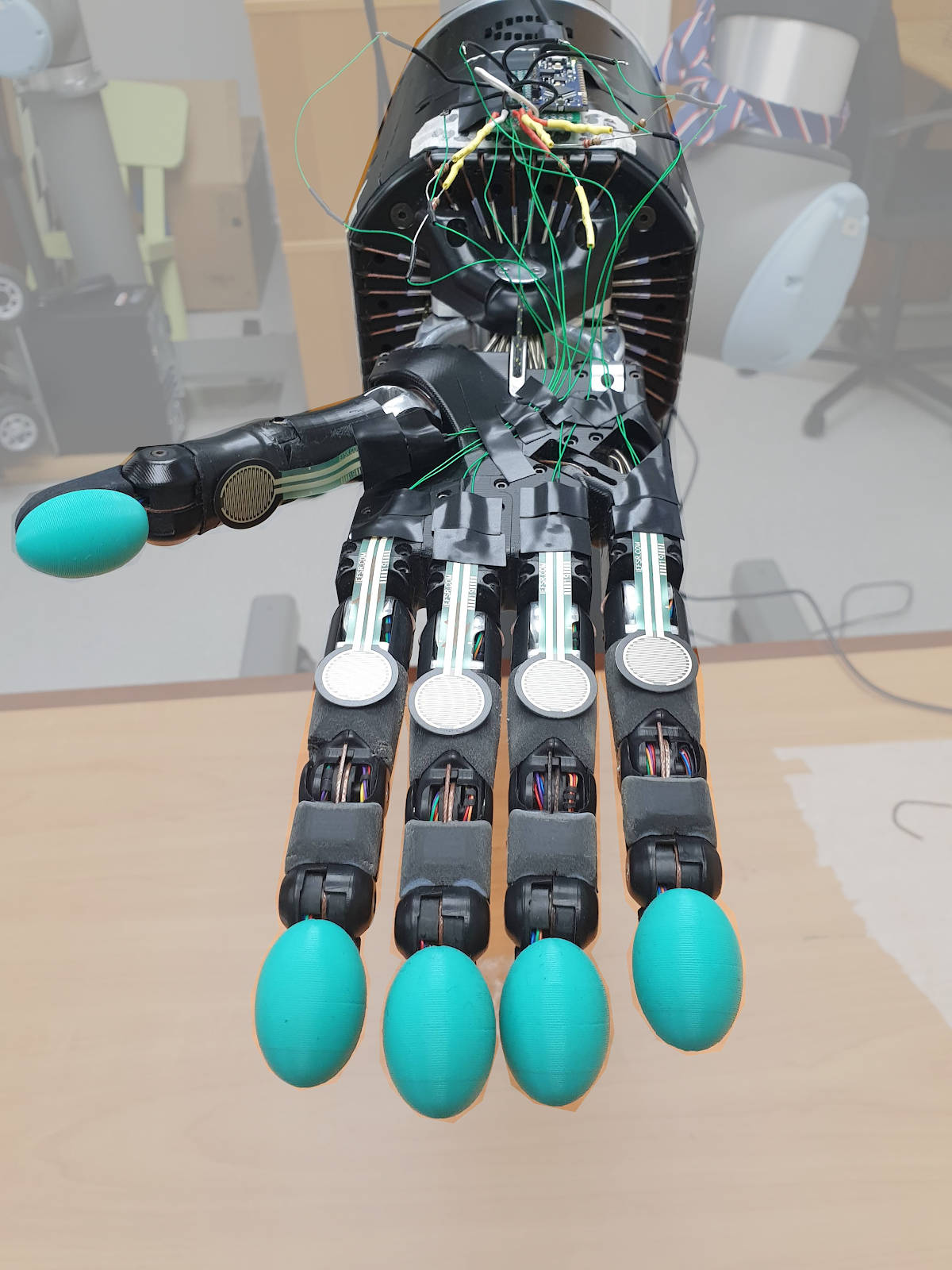

Our approach to solving the problem of grasping zero-shot objects is to define different actions for the robot and execute them accordingly. Figs. 1 and 2 show the implementation and plots of these actions. How the robot is controlled to execute each of these actions is described in the rest of this section. Our proposed execution approach is implemented on a combination of the Shadow Dexterous Hand (we will call this ShadowHand, for brevity) equipped with BioTac SP tactile sensors (we will call this BioTac, for brevity) attached to a UR-10 robotic manipulator. An overview of our approach is as follows:

- FSR Contact: Control each finger such that its proximal phalanx (the phalanx nearest to the palm) reaches the object.

- Switch Joints: Control each finger’s configuration such that its distal phalanx (the fingertip) reaches the object.

- Raise Arm: Move the robotic arm upwards while controlling the robotic hand’s configuration to prevent the object from slipping.

Before understanding our grasping framework, i.e., slip detection followed by a control policy for slip compensation, it is important to understand the basic structure of the ShadowHand. This will help the reader gain an intuition about the formulation of our control policy. The hardware setup is explained in Sec. IV, followed by our software pipeline in Sec. V.

IV Hardware Setup

IV-A Kinematic Structure of the Shadow Dexterous Hand

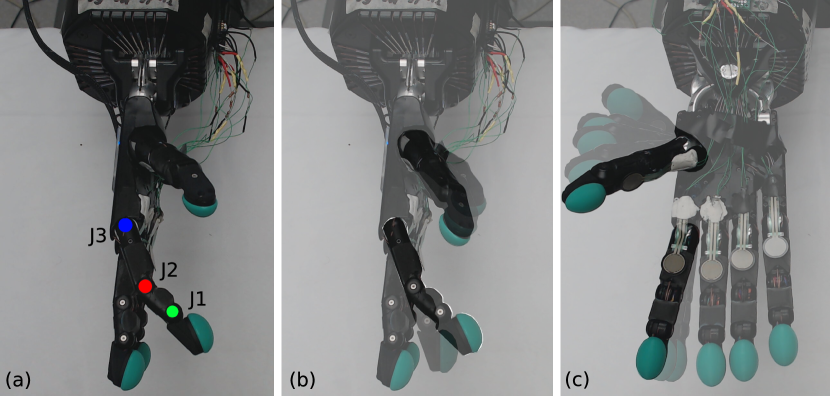

The ShadowHand has four fingers and an opposable thumb. Each of the fingers has four joints, while the thumb has five joints. A representation of motion is shown in Fig. 3.

Fig. 3a demonstrates one finger and the joints specification it follows. Each finger has three links, also called phalanges, with one joint in between. From the top of the finger to the base, these are called the distal, middle and proximal phalanges respectively.

The fingers can be controlled by sending joint position values. The joint has two controllable ranges of motion, along the sagittal and transverse axes. This joint also has a minimum and maximum range of to respectively. The joints and are different in that, similar to the human hand, they are coupled internally at a kinematic level and do not move independently. They individually have a range of motion between to , but are underactuated. This means that the angle of the middle joint, i.e. is always greater than or equal to the angle of the distal joint, i.e. which allows the middle phalanx to bend while the distal phalanx remains straight.

Currently available commercial tactile sensors lie on a spectrum spanning from accuracy on one end to form factor on the other. These sensors can either have high accuracy while sacrificing anthropomorphic form factor or can be designed similar to human fingers, while having a relatively poor accuracy at tactile sensing. The choice of the sensor depends on the task at hand, which in our case is to grasp zero-shot objects. To this end, we select the ShadowHand equipped with the BioTac sensors in an effort to be biomimetic.

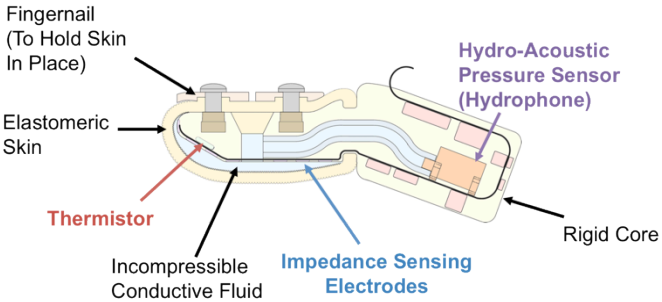

Using a combination of impedance sensing electrodes, hydro-acoustic pressure sensors and thermistors, the BioTac sensor is capable of sensing three of the most important sensory inputs that one needs for grasping, namely deformation and motion of stimuli across the skin, the pressure being applied on the finger and temperature flux across the surface. The internal cross-section of the BioTac is shown in Fig. 4.

The human hand can grasp objects of various shapes, sizes and masses without having seen them previously. This ability to grasp previously unseen objects in the absence of visual cues is possible only due to the presence of tactile sensing over a large surface area, through the skin. In Fig. 5, the highlighted parts show the primary regions of contact when grasping is performed. These regions make first contact with the object being grasped and apply the most force, due to the large surface area. To mimic similar tactile characteristics on the ShadowHand, we equip it with additional sensors at the base of each finger and the thumb.

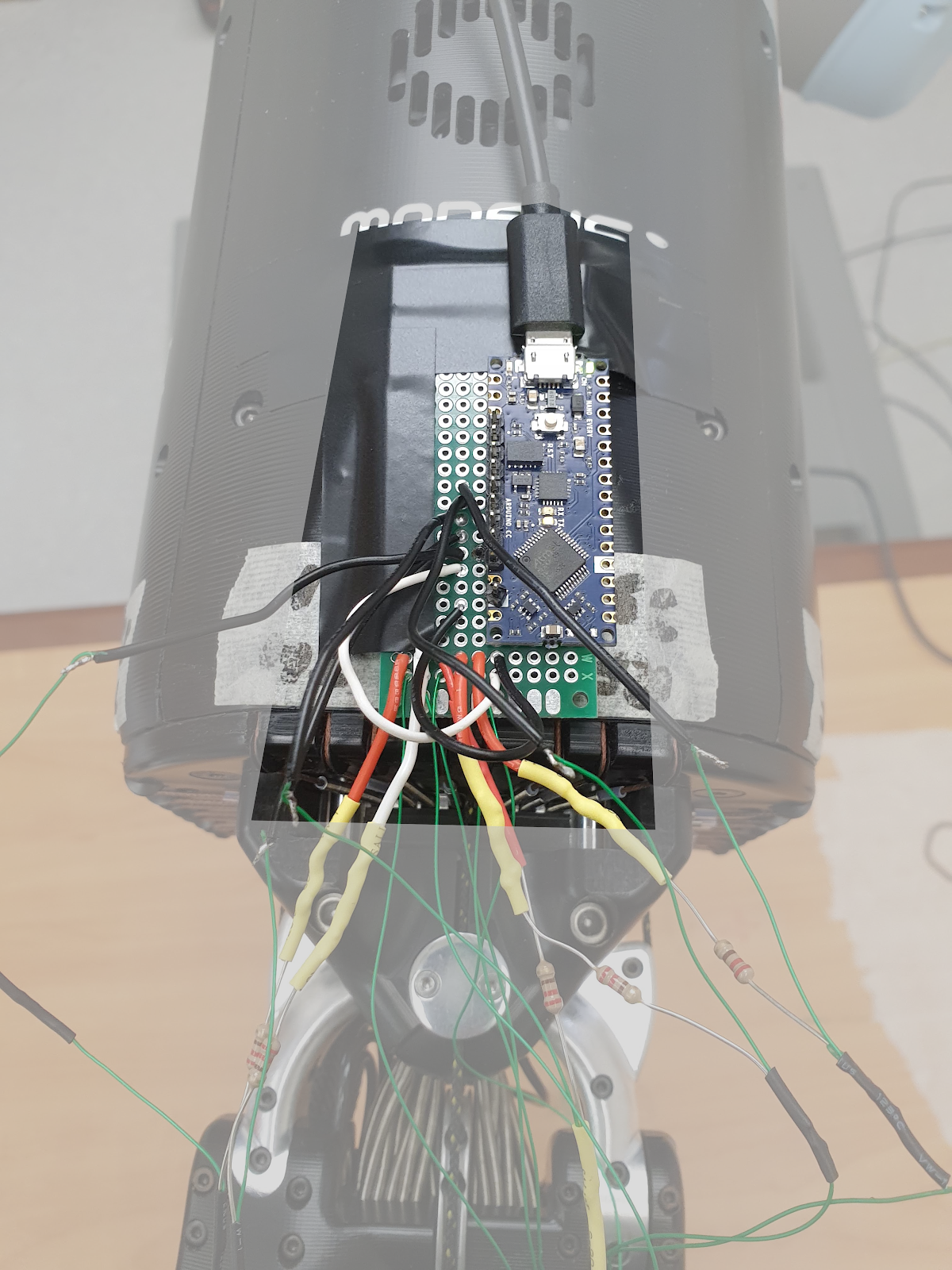

We utilize Force Sensitive Resistors (FSRs) for this purpose, which are flexible pads that change resistance when pressure is applied to the sensitive area (see Fig. 5). These FSRs are read through a voltage divider circuit (Fig. 5), and have a voltage drop inversely proportional to the resistance of the FSR.
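The voltage divider relationship can be illustrated with a short sketch. It assumes the common wiring in which the FSR sits between the supply and the measurement node and a fixed resistor sits between that node and ground. The 5 V supply, the 10 kΩ fixed resistor and both function names are hypothetical choices for illustration and are not taken from the setup described here.

```python
def divider_output_voltage(r_fsr_ohm, r_fixed_ohm=10_000.0, v_supply=5.0):
    """Voltage across the fixed resistor of an FSR voltage divider.

    Assumed wiring (hypothetical): supply -> FSR -> node -> fixed resistor -> ground.
    Pressing the FSR lowers its resistance, which raises the node voltage.
    """
    return v_supply * r_fixed_ohm / (r_fixed_ohm + r_fsr_ohm)


def fsr_resistance_from_voltage(v_out, r_fixed_ohm=10_000.0, v_supply=5.0):
    """Invert the divider equation to recover the FSR resistance."""
    if not 0.0 < v_out < v_supply:
        raise ValueError("v_out must lie strictly between 0 and v_supply")
    return r_fixed_ohm * (v_supply - v_out) / v_out


# Example with hypothetical component values.
v_light_touch = divider_output_voltage(30_000.0)   # high resistance, low voltage
v_firm_press = divider_output_voltage(2_000.0)     # low resistance, high voltage
recovered = fsr_resistance_from_voltage(v_light_touch)
```

Under this wiring the measured voltage rises monotonically as the FSR resistance falls, which is what makes a simple calibration curve from reading to force feasible.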

We calibrate our sensors using a ground-truth force measurement unit, for 0N to 50N of force, using simple regression. We map a series of readings from the FSR to the corresponding force values in Newtons on the force measurement unit, and fit a regression line to these points. This is sufficient to measure contact forces between the fingers and an object during grasping.
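The calibration step above can be sketched as an ordinary least-squares line fit. This is a minimal illustration assuming NumPy. The function names and the sample pairs are hypothetical, and the synthetic numbers are not measured data.

```python
import numpy as np


def fit_fsr_calibration(raw_readings, forces_newton):
    """Fit force = slope * reading + intercept by least squares."""
    slope, intercept = np.polyfit(raw_readings, forces_newton, deg=1)
    return float(slope), float(intercept)


def fsr_to_force(raw_reading, slope, intercept):
    """Convert a raw FSR reading into an estimated force in Newtons."""
    return slope * raw_reading + intercept


# Synthetic calibration pairs (hypothetical values spanning 0 N to 50 N).
raw = np.array([0.0, 100.0, 200.0, 300.0, 400.0, 500.0])
force = np.array([0.0, 10.0, 20.0, 30.0, 40.0, 50.0])

slope, intercept = fit_fsr_calibration(raw, force)
estimated = fsr_to_force(250.0, slope, intercept)
```

A straight line is only an approximation of a real FSR response, but as noted above it is sufficient when the goal is to detect and roughly quantify contact.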

V Software Pipeline

V-A Grasp Controller

Our pipeline, defined in Algos. 1, 2, starts at the pre-grasp pose where the fingers are fully extended and the thumb is bent at the base to a angle, which is optimal for grasping most objects due to having the maximum volume coverage by the trajectories of the finger tips. We explain the distinct parts of our pipeline in the following sections.

V-A1 Initialization

The controller begins by performing a tare operation using 50 readings of each BioTac sensor and computing their respective means. Successive readings are then min-max normalized, within an adjustable threshold of of this mean, to ensure that each sensor’s biases are taken into account, as well as to provide a standardized input to the control loop.
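One possible reading of the tare and normalization step is sketched below, assuming NumPy. The class name, the `band_fraction` parameter and its value in the example are hypothetical. The sketch also assumes that the normalization band is symmetric around the tare mean, which is an interpretation rather than something stated in the text.

```python
import numpy as np


class TareNormalizer:
    """Tare a sensor on its resting readings, then min-max normalize new ones."""

    def __init__(self, tare_readings, band_fraction):
        # Baseline is the mean of the resting (no contact) readings.
        self.baseline = float(np.mean(tare_readings))
        half_width = abs(self.baseline) * band_fraction
        if half_width == 0.0:
            raise ValueError("baseline and band_fraction must be non-zero")
        # Assumed band: baseline +/- band_fraction * baseline.
        self.low = self.baseline - half_width
        self.high = self.baseline + half_width

    def normalize(self, reading):
        """Map a reading into [0, 1] relative to the band, clipping outliers."""
        scaled = (reading - self.low) / (self.high - self.low)
        return float(np.clip(scaled, 0.0, 1.0))


# 50 resting readings, as in the text; the values themselves are synthetic.
normalizer = TareNormalizer(np.full(50, 1000.0), band_fraction=0.1)
at_rest = normalizer.normalize(1000.0)
pressed = normalizer.normalize(1050.0)
saturated = normalizer.normalize(5000.0)
```

Clipping keeps the control loop input bounded even when a reading falls outside the band established at tare time.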

V-A2 Hand-Object Contact

Once the initialization process is complete and baseline readings have been established, the hand controller begins actuating the joints of all the fingers and of the thumb. This is done by sending the appropriate joint control commands. The current joint values are obtained from the ShadowHand, checked against the maximum joint limits of each finger ( for ), and increased by a small angle . The and joints of the fingers and thumb respectively are moved until it registers a contact with the object, as measured by the FSR readings. This establishes an initial reference point for the hand to begin refining the grasp, and the controller switches to the control policy for the coupled joints.
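The contact-seeking loop described above can be sketched as follows. The hardware is replaced by a simulated finger so that the example runs on its own. `SimulatedFinger`, `close_until_contact`, the step size, the joint limit and the contact threshold are all hypothetical and do not correspond to the ShadowHand interface.

```python
class SimulatedFinger:
    """Stand-in for one finger: the FSR fires once the joint passes contact_angle."""

    def __init__(self, contact_angle):
        self.angle = 0.0
        self.contact_angle = contact_angle

    def read_joint(self):
        return self.angle

    def send_joint(self, target):
        self.angle = target

    def read_fsr(self):
        return 1.0 if self.angle >= self.contact_angle else 0.0


def close_until_contact(finger, joint_limit, step, contact_threshold, max_iters=1000):
    """Increase the joint angle in small steps until the FSR registers contact.

    Returns True on contact, False if the joint limit is reached first.
    """
    for _ in range(max_iters):
        if finger.read_fsr() >= contact_threshold:
            return True
        current = finger.read_joint()
        if current >= joint_limit:
            return False
        # Never command a target beyond the joint limit.
        finger.send_joint(min(current + step, joint_limit))
    return False


touching = SimulatedFinger(contact_angle=0.5)
made_contact = close_until_contact(touching, joint_limit=1.5, step=0.05, contact_threshold=0.5)

missing = SimulatedFinger(contact_angle=10.0)  # object is out of reach
no_contact = close_until_contact(missing, joint_limit=1.5, step=0.05, contact_threshold=0.5)
```

Checking the limit before each increment mirrors the description above, where the current joint value is compared against the maximum before a small angle is added.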

V-A3 Preliminary Grasp

At this stage, since the base of each finger and thumb have made initial contact with the object, the control policy switches to the coupled joints so that the fingers can begin to “wrap around” the object. We now activate the coupled and joints (controlled using a virtual joint defined in Eq. 1) of the fingers and the joint on the thumb. In this stage, the is inversely proportional to the normalized sensor data from the BioTac sensor.

| (1) |

Intuitively, this means that when there is little or no contact between the fingers and the object, the controller sends out larger joint angle targets, causing the fingers to move larger distances. Once contact is made, the controller moves the fingers at progressively smaller increments, thus allowing for a more stable and refined grasp.

We use a Bézier curve to generate an easing function that maps our normalized BioTac sensor data to a normalized angle (in radians), between the joint limits of the respective joint. This mapping is then converted into a usable control output .

The Bézier curve is generated by the parametric formula

| (2) |

where and are the Bézier control points, is the instantaneous normalized BioTac reading and is the mapped Bézier curve output. We then compute the control output as follows

| (3) |

where and .

We set a termination threshold on the BioTac sensor values such that the Hand controller stops executing as soon as a minimal level of contact is detected. Once all the fingers and the thumb have reached the preliminary grasp state, we exit the control loop.
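The easing behaviour of this stage can be illustrated with a generic cubic Bézier curve. This sketch does not reproduce Eqs. 2 and 3. The choice of a cubic curve, the scalar control points, the step bounds and both function names are assumptions made purely for illustration.

```python
def cubic_bezier(t, p0, p1, p2, p3):
    """Evaluate a one-dimensional cubic Bezier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    return u**3 * p0 + 3 * u**2 * t * p1 + 3 * u * t**2 * p2 + t**3 * p3


def eased_step(normalized_reading, step_min, step_max,
               control_points=(1.0, 1.0, 0.0, 0.0)):
    """Map a normalized tactile reading to a joint increment.

    With the assumed control points the curve falls smoothly from 1 to 0,
    so weak contact gives a large step and firm contact gives a small step.
    """
    t = min(max(normalized_reading, 0.0), 1.0)
    ease = cubic_bezier(t, *control_points)
    return step_min + ease * (step_max - step_min)


# Hypothetical step bounds in radians.
no_contact_step = eased_step(0.0, step_min=0.01, step_max=0.1)
mid_contact_step = eased_step(0.5, step_min=0.01, step_max=0.1)
firm_contact_step = eased_step(1.0, step_min=0.01, step_max=0.1)
```

Moving the two inner control points changes how quickly the step shrinks after first contact while the endpoints stay fixed, which is the main appeal of an easing curve over a linear map.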

V-B Slippage Detection

One of the crucial aspects of our proposed grasping pipeline is the ability to detect and react to objects slipping between the fingers while grasping and moving. This reactive nature of our controller allows for precise force application on zero-shot objects, i.e., objects of unknown masses, sizes or shapes.

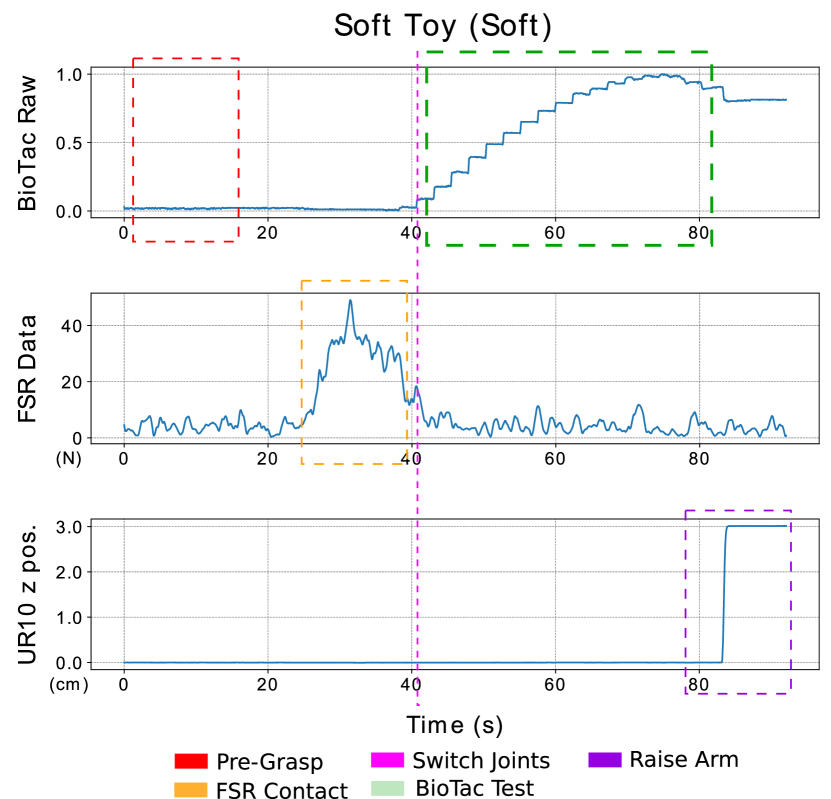

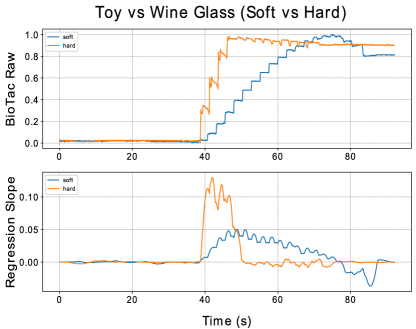

For slippage detection, we utilize data from the BioTac sensor. Fig. 6 shows two sets of plots of sensor readings captured while grasping a wine glass, without and during slip respectively. From top to bottom, the graphs represent the BioTac readings, the FSR readings and the position of the UR-10 along the z-axis. For visual clarity, we plot data for only the first finger. The difference in readings during slip versus without slip is quite evident, with several micro-vibrations in the BioTac data while the object slowly slips off the hand. This is due to the frictional properties of the BioTac skin, as well as the weight of the object. These vibrations are absent when the object does not slip, and the readings maintain a mostly stable baseline. Our slip detection algorithm works by measuring and tracking the change in gradient of the sensor readings over a non-overlapping time-window of .

We use linear regression [22] to obtain the instantaneous slope over the time-window, and perform the comparison at consecutive intervals, as shown in Fig. 7. Consequently, by measuring the relative change in gradient, we are able to judge how fast the object is slipping, and provide larger or smaller control commands as necessary.
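The windowed slope comparison can be sketched as follows, assuming NumPy. The window length in samples, the threshold on slope change, the function names and the synthetic signals are hypothetical and only illustrate the idea.

```python
import numpy as np


def window_slopes(signal, window_size):
    """Fit a line to each non-overlapping window and return the slopes."""
    signal = np.asarray(signal, dtype=float)
    n_windows = len(signal) // window_size
    x = np.arange(window_size)
    slopes = []
    for i in range(n_windows):
        chunk = signal[i * window_size:(i + 1) * window_size]
        slope, _ = np.polyfit(x, chunk, deg=1)
        slopes.append(slope)
    return np.array(slopes)


def detect_slip(signal, window_size, slope_change_threshold):
    """Flag a slip wherever the slope changes sharply between consecutive windows."""
    slopes = window_slopes(signal, window_size)
    changes = np.abs(np.diff(slopes))
    return changes > slope_change_threshold, changes


# Synthetic signals: a steady grasp, and one that starts drifting halfway through.
stable = np.ones(40)
slipping = np.concatenate([np.ones(20), 1.0 - 0.05 * np.arange(1, 21)])

stable_flags, _ = detect_slip(stable, window_size=10, slope_change_threshold=0.01)
slip_flags, slip_changes = detect_slip(slipping, window_size=10, slope_change_threshold=0.01)
```

The magnitude stored in `slip_changes` could be used to scale the corrective command, in the spirit of issuing larger or smaller commands depending on how fast the object is slipping.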

VI Experimental Results

VI-A Experiment Setup

The setup that we use to implement our pipeline includes several robotic and sensing hardware components, primarily the UR-10 manipulator and the Shadow Dexterous Hand, equipped with SynTouch BioTac tactile sensors [23]. Our approach utilizes a switching controller architecture, where we deploy different strategies for controlling the UR-10 arm and the different joints of the Shadow Hand [24] with feedback between controllers. The underlying control inputs come from various tactile sensors and the joint angles of the Shadow Hand.

We also introduce the shadowlibs library (GitHub: kanishkaganguly/shadowlibs), a software toolkit that contains several utility functions for controlling the Shadow Hand.

VI-B Results

Fig. 8(a), bottom row (L-R): Grasping of Softball, Soft Toy, Box and Wine Glass.

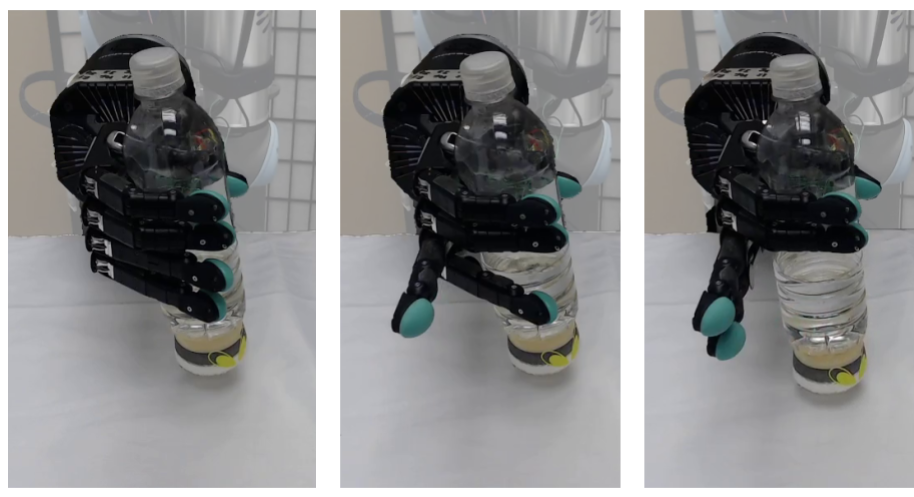

We demonstrate our algorithm on a set of objects with varied shapes and sizes. The upper row of Fig. 8(a) shows the dataset used, and the lower row of Fig. 8(a) shows successful grasps of four classes of objects, namely spherical, non-rigid, cuboidal, and transparent cylindrical respectively. We are able to grasp a wine glass, a soft toy, a ball and a box without any human intervention and without prior knowledge of their shapes, sizes or weights. The criteria for successful grasp were based on the ability to not only grasp the object entirely, but also to lift it and hold it in suspension for 10 seconds. Table I summarizes our results for various objects in our dataset. The accompanying video submission shows a detailed view of the grasping process, with cases for slip and without, as well as results for the other objects.

Table I: Grasp success rate for each object in our dataset.

| Object | Success (%) |
|---|---|
| Bottle | 85 |
| Transparent Wine Glass | 80 |
| Tuna Can | 70 |
| Football | 60 |
| Softball | 65 |
| Jello Box | 70 |
| Electronics Box | 85 |
| Apple | 75 |
| Tomato | 70 |
| Soft Toy | 75 |
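As a quick consistency check, the overall success rate can be recomputed from the per-object rates above. The sketch assumes the overall figure is the unweighted mean across the ten objects, which matches the reported 73.5%. The variable names are illustrative.

```python
# Per-object success rates (%) copied from the table above.
success_rates = {
    "Bottle": 85,
    "Transparent Wine Glass": 80,
    "Tuna Can": 70,
    "Football": 60,
    "Softball": 65,
    "Jello Box": 70,
    "Electronics Box": 85,
    "Apple": 75,
    "Tomato": 70,
    "Soft Toy": 75,
}

# Unweighted mean over all objects (assumed aggregation).
overall_success = sum(success_rates.values()) / len(success_rates)
print(f"Overall success rate: {overall_success:.1f}%")  # Overall success rate: 73.5%
```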

Table II: Comparison with other methods.

| Paper | Method | Num. Objects | Success (%) |
|---|---|---|---|
| Li, et al. [25] | Simulation | 3 | 53.3 |
| Liu, et al. [26] | Simulation | 50 | 66.0 |
| Saxena, et al. [27] | Vision | 9 | 87.8 |
| Wu, et al. [28] | Reinf. Learning | 10 | 98.0 |
| Zapata-Impata, et al. [29] | GCN | 51 | 76.6 |
| Ours | Tactile based Control | 10 | 73.5 |

VII Analysis

As can be seen from Fig. 7, there is a clear distinction between the BioTac’s response to soft versus hard objects. The resulting slopes are also clearly distinguishable in their rate of change. Intuitively, softer objects slip more slowly than harder objects. This opens up interesting future avenues for material-adaptive grasping using our approach.

We also demonstrate in Fig. 8(b) that our controller is able to adjust on-the-fly to changes in the number of fingers in contact with the object. In particular, the object remains in a stable grasp even after two fingers are removed from contact, showing the adaptive and robust nature of our approach. Such an approach has built-in recovery from possible failure of finger joints.

Lastly, as can be seen from the results, our proposed method, while simple, is quite adept at zero-shot object grasping. Compared to other methods, as shown in Table II, such as those that use vision or learning, we are able to achieve comparable accuracy. Since our approach only relies on tactile data, we can also robustly grasp transparent objects with relative ease.

VIII Conclusions

In this work, we develop a simple closed-loop formulation to grasp and manipulate a zero-shot object (object without a prior on shape, size, material or weight) with only tactile feedback. Our approach is based on the concept that we need to compensate for object slip to grasp correctly. We present a novel tactile-only closed-loop feedback controller to compensate for object slip. We experimentally validate our approach in multiple real-world experiments with objects of varied shapes, sizes, textures and weights using a combination of the Shadow Dexterous Hand equipped with BioTac SP tactile sensors. Our approach achieves a success rate of 73.5%. As a parting thought, our approach can be augmented by a zero-shot segmentation method [30] to push the boundaries of learning new objects through interaction.

References

- [1] I. Camponogara and R. Volcic, “Grasping movements toward seen and handheld objects,” Scientific Reports, vol. 9, no. 1, p. 3665, Mar 2019. [Online]. Available: https://doi.org/10.1038/s41598-018-38277-w

- [2] R. Breveglieri, A. Bosco, C. Galletti, L. Passarelli, and P. Fattori, “Neural activity in the medial parietal area v6a while grasping with or without visual feedback,” Scientific Reports, vol. 6, no. 1, p. 28893, Jul 2016. [Online]. Available: https://doi.org/10.1038/srep28893

- [3] R. K. Clifton, D. W. Muir, D. H. Ashmead, and M. G. Clarkson, “Is visually guided reaching in early infancy a myth?” Child Development, vol. 64, no. 4, pp. 1099–1110, 1993. [Online]. Available: http://www.jstor.org/stable/1131328

- [4] Z. Su, K. Hausman, Y. Chebotar, A. Molchanov, G. E. Loeb, G. S. Sukhatme, and S. Schaal, “Force estimation and slip detection/classification for grip control using a biomimetic tactile sensor,” in IEEE-RAS International Conference on Humanoid Robots (Humanoids). IEEE, 2015, pp. 297–303. [Online]. Available: http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=7363558&tag=1

- [5] Y. Chebotar, K. Hausman, Z. Su, A. Molchanov, O. Kroemer, G. Sukhatme, and S. Schaal, “Bigs: Biotac grasp stability dataset,” 05 2016.

- [6] B. S. Zapata-Impata, P. Gil, and F. T. Medina, “Non-matrix tactile sensors: How can be exploited their local connectivity for predicting grasp stability?” CoRR, vol. abs/1809.05551, 2018. [Online]. Available: http://arxiv.org/abs/1809.05551

- [7] A. Garcia-Garcia, B. S. Zapata-Impata, S. Orts-Escolano, P. Gil, and J. Garcia-Rodriguez, “Tactilegcn: A graph convolutional network for predicting grasp stability with tactile sensors,” arXiv preprint arXiv:1901.06181, 2019.

- [8] N. Pestell, L. Cramphorn, F. Papadopoulos, and N. F. Lepora, “A sense of touch for the shadow modular grasper,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 2220–2226, 2019.

- [9] B. Belousov, A. Sadybakasov, B. Wibranek, F. Veiga, O. Tessmann, and J. Peters, “Building a library of tactile skills based on fingervision,” in 2019 IEEE-RAS 19th International Conference on Humanoid Robots (Humanoids), 2019, pp. 717–722.

- [10] A. Yamaguchi, “Fingervision,” http://akihikoy.net/p/fv.html, 2017.

- [11] M. Lambeta, P.-W. Chou, S. Tian, B. Yang, B. Maloon, V. R. Most, D. Stroud, R. Santos, A. Byagowi, G. Kammerer et al., “Digit: A novel design for a low-cost compact high-resolution tactile sensor with application to in-hand manipulation,” IEEE Robotics and Automation Letters, vol. 5, no. 3, pp. 3838–3845, 2020.

- [12] F. Veiga, B. Edin, and J. Peters, “Grip stabilization through independent finger tactile feedback control,” Sensors (Basel, Switzerland), vol. 20, no. 6, p. 1748, Mar 2020. [Online]. Available: https://pubmed.ncbi.nlm.nih.gov/32245193

- [13] Y. C. Nakamura, D. M. Troniak, A. Rodriguez, M. T. Mason, and N. S. Pollard, “The complexities of grasping in the wild,” in 2017 IEEE-RAS 17th International Conference on Humanoid Robotics (Humanoids). IEEE, 2017, pp. 233–240.

- [14] R. Calandra, A. Owens, D. Jayaraman, J. Lin, W. Yuan, J. Malik, E. H. Adelson, and S. Levine, “More than a feeling: Learning to grasp and regrasp using vision and touch,” IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 3300–3307, 2018.

- [15] A. Handa, K. Van Wyk, W. Yang, J. Liang, Y.-W. Chao, Q. Wan, S. Birchfield, N. Ratliff, and D. Fox, “Dexpilot: Vision-based teleoperation of dexterous robotic hand-arm system,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 9164–9170.

- [16] OpenAI, M. Andrychowicz, B. Baker, M. Chociej, R. Józefowicz, B. McGrew, J. Pachocki, A. Petron, M. Plappert, G. Powell, A. Ray, J. Schneider, S. Sidor, J. Tobin, P. Welinder, L. Weng, and W. Zaremba, “Learning dexterous in-hand manipulation,” CoRR, vol. abs/1808.00177, 2018. [Online]. Available: http://arxiv.org/abs/1808.00177

- [17] OpenAI, I. Akkaya, M. Andrychowicz, M. Chociej, M. Litwin, B. McGrew, A. Petron, A. Paino, M. Plappert, G. Powell, R. Ribas, J. Schneider, N. Tezak, J. Tworek, P. Welinder, L. Weng, Q. Yuan, W. Zaremba, and L. Zhang, “Solving rubik’s cube with a robot hand,” CoRR, vol. abs/1910.07113, 2019. [Online]. Available: http://arxiv.org/abs/1910.07113

- [18] S. Levine, P. Pastor, A. Krizhevsky, and D. Quillen, “Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection,” 2016.

- [19] H. Zhu, A. Gupta, A. Rajeswaran, S. Levine, and V. Kumar, “Dexterous manipulation with deep reinforcement learning: Efficient, general, and low-cost,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 3651–3657.

- [20] I. Radosavovic, X. Wang, L. Pinto, and J. Malik, “State-only imitation learning for dexterous manipulation,” arXiv preprint arXiv:2004.04650, 2020.

- [21] Y. Liu, Z. Li, H. Liu, and Z. Kan, “Skill transfer learning for autonomous robots and human-robot cooperation: A survey,” Robotics and Autonomous Systems, p. 103515, 2020.

- [22] J. M. Stanton, “Galton, pearson, and the peas: A brief history of linear regression for statistics instructors,” Journal of Statistics Education, vol. 9, no. 3, 2001. [Online]. Available: https://doi.org/10.1080/10691898.2001.11910537

- [23] “https://www.syntouchinc.com/en/sensor-technology/.” [Online]. Available: https://www.syntouchinc.com/en/sensor-technology/

- [24] P. Tuffield and H. Elias, “The shadow robot mimics human actions,” Industrial Robot: An International Journal, 2003.

- [25] M. Li, K. Hang, D. Kragic, and A. Billard, “Dexterous grasping under shape uncertainty,” Robotics and Autonomous Systems, vol. 75, pp. 352–364, 2016.

- [26] M. Liu, Z. Pan, K. Xu, K. Ganguly, and D. Manocha, “Deep differentiable grasp planner for high-dof grippers,” 2020.

- [27] A. Saxena, L. Wong, M. Quigley, and A. Y. Ng, “A vision-based system for grasping novel objects in cluttered environments,” in Robotics Research, M. Kaneko and Y. Nakamura, Eds. Berlin, Heidelberg: Springer Berlin Heidelberg, 2011, pp. 337–348.

- [28] B. Wu, I. Akinola, J. Varley, and P. Allen, “Mat: Multi-fingered adaptive tactile grasping via deep reinforcement learning,” 2019.

- [29] P. G. Zapata-Impata and F. Torres, “Tactile-driven grasp stability and slip prediction,” Robotics, 2019. [Online]. Available: https://www.mdpi.com/2218-6581/8/4/85

- [30] C. D. Singh, N. J. Sanket, C. M. Parameshwara, C. Fermuller, and Y. Aloimonos, “NudgeSeg: Zero-shot object segmentation by repeated physical interaction,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021.