Hamiltonian MCMC Methods for Estimating Rare Events Probabilities in High-Dimensional Problems

Abstract

Accurate and efficient estimation of rare events probabilities is of significant importance, since often the occurrences of such events have widespread impacts. The focus in this work is on precisely quantifying these probabilities, often encountered in reliability analysis of complex engineering systems, based on an introduced framework termed Approximate Sampling Target with Post-processing Adjustment (ASTPA), which herein is integrated with and supported by gradient-based Hamiltonian Markov Chain Monte Carlo (HMCMC) methods. The developed techniques in this paper are applicable from low- to high-dimensional stochastic spaces, and the basic idea is to construct a relevant target distribution by weighting the original random variable space through a one-dimensional output likelihood model, using the limit-state function. To sample from this target distribution, we exploit HMCMC algorithms, a family of MCMC methods that adopts physical system dynamics, rather than solely using a proposal probability distribution, to generate distant sequential samples, and we develop a new Quasi-Newton mass preconditioned HMCMC scheme (QNp-HMCMC), which is particularly efficient and suitable for high-dimensional spaces. To eventually compute the rare event probability, an original post-sampling step is devised using an inverse importance sampling procedure based on the already obtained samples. The statistical properties of the estimator are analyzed as well, and the performance of the proposed methodology is examined in detail and compared against Subset Simulation in a series of challenging low- and high-dimensional problems.

Keywords Hamiltonian MCMC Quasi-Newton Rare Event Probability Reliability Estimation High-dimensional Spaces Inverse Importance Sampling.

1 Introduction

In this work, we develop a framework for estimation of rare events probabilities, a commonly encountered important problem in several engineering and scientific applications, often observed in the form of probability of failure () estimation or, alternatively, reliability estimation. In many practical applications, failure probabilities are fortunately very low, from to even and lower, and calculating such small probabilities presents many numerical and mathematical challenges, particularly in cases with high dimensional random spaces and/or expensive computational models, that practically limit the afforded number of model calls. The number of model calls is thus of great importance in these problems and one of the critical parameters that limits or prohibits use of several available techniques in the literature.

The reliability estimation problem has a long history in the engineering community [1, 2, 3, 4, 5]. One of the significant early successes was the discovery of the so called First Order Reliability Method (FORM) [6, 7], long investigated by Der Kiureghian, Ditlevsen and co-workers [8, 9], and many others, e.g., Shinozuka [10], providing also several enhancements, including second order effects (SORM) by Breitung [11]. In FORM/SORM methods, the search for the most probable failure point (MPP) is usually performed by gradient-based optimization methods [12, 13]. Although these asymptotic approximation methods are usually computationally inexpensive, they have several limitations and may involve considerable errors, particularly in high-dimensional problems or in problems with highly nonlinear limit-state functions [6, 14]. As such, various sampling-based methods have also been suggested by the reliability community, e.g., Schuëller and Pradlwarter [15], to tackle the problem in its utmost generality, with crude Monte Carlo approaches being prohibitive for this type of problems due to their excessive computational demands. Only some of many notable contributions can be seen in [16, 17, 18, 19, 20], describing and studying the state-of-the art-Subset Simulation and its enhancements, originally presented in [21], and in [22, 23, 24] utilizing importance sampling schemes, often also combined with the cross-entropy method [25, 26, 27, 28, 29]. Alternative approaches include directional and line Sampling [30, 31, 32, 33], the PHI2 method for time-variant reliability [34], and asymptotic sampling strategies [35, 36], among others. The problem of estimating rare event probabilities has also attracted a great deal of attention in other relevant communities and in mathematical literature, with several suggested methods sharing many similarities with FORM/SORM approaches, e.g.,[37], and Subset Simulation, such as in approaches involving Sequential Monte Carlo samplers for rare events [38, 39, 40], interacting particle system methodologies [41, 42], multilevel splitting methods [43, 44] and forward flux sampling [45], to name but a few.

In this paper, we are presenting a new solution approach to the problem by combining gradient-based approaches, already familiar to the engineering community and often available in computational tools, with Markov Chain Monte Carlo (MCMC) sampling methods in the form of Hamiltonian MCMC. MCMC methods [46] are plausibly the most broadly accepted ones to generate samples from target distributions, in cases where direct sampling is not possible. Despite notable successes, many MCMC methods scale poorly with the number of dimensions and can become inefficient. For complicated multivariate models, classic methods such as random-walk Metropolis-Hastings [47] and Gibbs sampling [48] may require an unacceptably long time and number of samples to adequately explore the target distribution. Originally developed by Duane et al. [49] and to a large extent understood and popularized through the works of Neal [50, 51], Hamiltonian Markov Chain Monte Carlo (HMCMC), usually called Hamiltonian Monte Carlo (HMC) in the literature, produces Markov chain samples based on Hamiltonian dynamics principles, is characterized by much better scalability [51, 52] and faster mixing rates, is capable of generating samples with much weaker auto-correlation, even in complex high-dimensional random spaces [53], and has enjoyed broad-spectrum successes in most general settings [54]. Balanced against these features and achievements is of course the need for multiple gradient evaluations in each HMCMC iteration, making the method more computationally intensive per iteration than other algorithms, such as random-walk Metropolis-Hastings and Metropolis-adjusted Langevin [55, 56], for example. Girolami and Calderhead introduced a Riemannian Manifold Hamiltonian Monte Carlo (RMHMC) approach in [57, 58] that has demonstrated significant successes in many challenging problems but requires computing higher-order derivatives of the target distribution. Overall, the two impediments to using Hamiltonian MCMC methods are the required gradients, since analytical formulas are not always available and numerical techniques are computationally costly, particularly in high dimensions, and the heedful tuning of the involved parameters [51]. The first issue can in certain cases be solved by automatic differentiation (e.g. [54, 59]) and stochastic gradient approaches [60], while for the second a fully automated state-of-the-art HMCMC algorithm has been developed by Hoffman and Gelman, known as the No-U-Turn Sampler (NUTS [61]). NUTS introduces, among others, an expensive tree building procedure, in order to trace when the Hamiltonian trajectory turns back on itself. Many of these approaches are not however relevant and/or applicable to the analyzed problem in this work, since, in general, many rare event and reliability problems involve complex, computationally expensive models, complicating and/or precluding use of automatic differentiation and data-based stochastic gradient techniques, as well as methodologies that require a considerably high number of model calls per problem, such as NUTS.

A new computationally efficient sampling framework for estimation of rare events probabilities is thus presented in this work, having exceptional performance in quantifying low failure probabilities for any type of reliability problems described in both low and high dimensional stochastic spaces. The introduced methodology is termed Approximate Sampling Target with Post-processing Adjustment (ASTPA) and comprises a sampling and a post-processing phase. The sampling target in ASTPA is constructed by appropriately combining the multi-dimensional random variable space with a cumulative distribution function that utilizes the limit-state function. Having acquired the samples, an adjustment step is then applied, in order to account for the fact that the samples are drawn from an approximate target distribution, and to thus correctly quantify the rare event probability. An original inverse importance sampling procedure is devised for this adjustment step, taking its name from the fact that the samples are already available. Although the ASTPA framework is general and can be combined with any appropriate Monte Carlo sampling method, it becomes substantially efficient when directly supported by gradient-based Hamiltonian Markov Chain Monte Carlo (HMCMC) samplers. To address the scalability issues a typical HMCMC sampler may manifest in high-dimensional spaces, a new Quasi-Newton mass preconditioned HMCMC approach is also developed. This new sampling scheme follows an approximate Newton direction and estimates the pertinent Hessian in its burn-in stage, only based on gradient information and the BFGS approximation, and eventually utilizes the computed Hessian as a preconditioned mass matrix in the main non-adaptive sampling stage. An approximate analytical expression for the uncertainty of the computed estimation is also derived, showcasing significant accuracy with numerical results, and all involved user-defined parameters of ASTPA are thoroughly analyzed and general default values are suggested. Finally, to fully examine the capabilities of the proposed methodology, its performance is demonstrated and compared against Subset Simulation in a series of challenging low- and high-dimensional problems.

2 Failure Probability Estimation

The failure probability PF for a system, that is the probability of a defined unacceptable system performance, can be expressed as a -fold integral, as:

| (1) |

where is the random vector T, is the failure event, g() is the limit-state function that can include one or several distinct failure modes and defines the system failure by g() 0, I(.) denotes the indicator function with () = 1 if g() 0 and () = 0 otherwise, is the expectation operator, and is the joint probability density function (PDF) for . As is common practice for problems of this type, in this work the joint PDF of is the standard normal one, due to its rotational symmetry and exponential probability decay. In most cases this is not restrictive, since it is uncomplicated to transform the original random variables X to , e.g. [62]. When this is not the case, however, and the probabilistic characterization of X can be defined in terms of marginal distributions and correlations, the Nataf distribution (equivalent to Gaussian copula) is commonly used to model the joint PDF, and the mapping to the standard normal space can be then accomplished [8, 63].

The focus in this work is to analyze the described integration in Eq.˜1 under very general settings, including the following challenging sampling context: (i) Computation of Eq.˜1 can only be done in approximate ways; (ii) the relationship between and is not explicitly known and for any we can merely check whether it belongs to the failure set or not, i.e. calculate the value (); (iii) the computational effort for evaluating () for each value of is assumed to be quite significant, so that it is essential to minimize the number of such function evaluations (model calls); (iv) the probability of failure PF is assumed to be very small, e.g. in order of ; (v) the parameter space is assumed to be high-dimensional, in the order of and more, for example. Under these general settings, several sampling methods, including direct Monte Carlo approaches and NUTS [61], become highly inefficient and fail to address the problem effectively. Subset Simulation (SuS) [21] has however proven successful and robust in dealing with problems of this type and is shown to outperform other relevant methods in numerous papers, e.g. [24, 31]. SuS relies on a modified component-wise Metropolis MCMC method that can successfully work in high dimensions and does not require a burn-in sampling stage. A notable adaptive conditional sampling (aCS) methodology within the SuS framework is also introduced by Papaioannou et al. in [16], providing important advantages and enhanced performance in several cases. Relevant SuS variants are thus utilized in this work, for validation and comparison purposes with our presented methodology that completely deviates from SuS and is efficiently supported by a direct Hamiltonian MCMC sampling approach [64].

3 Hamiltonian Markov Chain Monte Carlo

3.1 Standard HMCMC with leapfrog integrator

Based on the aforementioned discussion in the previous sections, Hamiltonian dynamics can be used to produce distant Markov chain samples, thereby avoiding the slow exploration of the state space that results from the diffusive behavior of simple random-walk proposals. This Hamiltonian approach was firstly introduced to molecular simulations by Alder and Wainwright in [65], in which the motion of the molecules was deterministic. Duane et al. in [49] united the MCMC and molecular dynamics approaches. Given -dimensional variables of interest with (unnormalized) density (.), the Hamiltonian Monte Carlo method introduces -dimensional auxiliary momentum variables z and samples from the joint distribution characterized by:

| (2) |

where is proposed to be a symmetric distribution. With and being uniquely described up to normalizing constants, the functions and are introduced as the potential energy and kinetic energy, owing to the concept of the canonical distribution [51] and the physical laws which motivate the Hamiltonian Markov Chain Monte Carlo algorithm. The total energy is often termed the Hamiltonian . The kinetic energy function is unconstrained and can be formed in various ways according to the implementation. In most typical cases, the momentum is sampled by a zero-mean normal distribution [51, 53], and accordingly the kinetic energy can be written as: , where M is a symmetric, positive-definite inverse covariance matrix, termed mass matrix.

HMCMC generates a Metropolis proposal on the joint state-space by sampling the momentum and simulating trajectories of Hamiltonian dynamics in which the time evolution of the state is governed by Hamilton’s equations, expressed typically by:

| (3) |

where denotes here the log-density of the target distribution. Hamiltonian dynamics prove to be an effective proposal generation mechanism because the distribution is invariant under the dynamics of Eq.˜3. These dynamics enable a proposal, triggered by an approximate solution of Eq.˜3, to be distant from the current state, yet with high probability acceptance. The solution to Eq.˜3 is analytically intractable in general and thus the Hamiltonian equations need to be numerically solved by discretizing time using some small step size, . A symplectic integrator that can be used for the numerical solution is the leapfrog one and works as follows:

| (4) |

The main advantage of using the leapfrog integrator is its simplicity, that is volume-preserving, and that it is reversible, due to its symmetry, by simply negating z, in order to generate a valid Metropolis proposal. See Neal [51] and Betancourt [53] for more details on energy-conservation, reversibility and volume-preserving integrators and their connections to HMCMC. It is noted that in the above leapfrog integration algorithm, the computationally expensive part is the one model call per step to acquire the term. With the trajectory or else path length, taking leapfrog steps approximates the evolution , providing the exact solution in the limit .

Typically, a simple Gaussian momentum is used for the Hamiltonian, (or ) and the mass matrix M is often set to the identity matrix, I. A generic procedure for drawing samples via HMCMC is described in Algorithm˜1, where again is the log-density of the target distribution of interest, are initial values, and L is the number of leapfrog steps, as explained before. For each HMCMC step, the momentum is first resampled and then the L leapfrog updates are performed, as seen in Eq.˜4, before a typical accept/reject MCMC Metropolis step takes place.

3.2 HMCMC parameters

The HMCMC performance and efficiency is well known to rely on selecting suitable values for the and L parameters. For a fixed trajectory length , the stepsize balances the trade-off between accuracy and computational cost. In this work, we select the stepsize in such a way so that the corresponding average acceptance rate is approximately 65, as values between 60 and 80 are typically assumed optimal [51, 52, 61]. The dual averaging algorithm of Hoffman and Gelman [61] is adopted to perform this task, used here only in the burn-in phase, to tune . To determine the number of leapfrog steps, , we estimate an appropriate to use trajectory length based on a few simulation runs, so as to have a sufficiently high so called normalized Expected Square Jumping Distance (ESJD), 2, as introduced in [66], and then we randomly perturb each trajectory length in the range to further avoid periodicity ( denotes the -th iteration of HMCMC). In all our numerical experiments herein, we determine and in this manner, as we have found it to work well in practice. The role of these parameters and techniques for determining them have been quite extensively studied in the literature and for more details we refer to [51, 52, 61].

4 Quasi-Newton mass preconditioned HMCMC (QNp-HMCMC)

In complex high-dimensional problems, the performance of the typical HMCMC sampler, presented as Algorithm˜1, may deteriorate and a prohibitive number of model calls could be required. A variety of methods have been proposed in the literature to address this issue. Among others, a Riemannian Manifold Hamiltonian Monte Carlo (RMHMC) has been suggested in [57] that takes advantage of the manifold structure of the variable space, at the cost of calculating second- and third-order derivatives of distributions and using a generalized leapfrog scheme, requiring additional model calls per leapfrog step. Although possible in some cases regarding second-order derivatives, e.g. [67], in the majority of cases higher-order derivatives are not provided by computational models, such as finite element models. In addition, the computational cost still increases importantly and extra model calls per leapfrog step are usually restrictive for computationally expensive models.

In this work, we instead address the high-dimensionality performance issue in a different, Newton-type context, without needing additional model calls per leapfrog step and by only using the Hessian information of the target distribution, either the exact one, when relevant information is provided freely by the computational model, or, even more general, an approximate one that does not increase the computational cost. An approximate Hessian can be given in a systematic manner based on already available gradient information, similar to Quasi-Newton methods used in nonlinear programming [68]. The well-known BFGS approximation [68] is thus utilized for our Quasi-Newton type Hamiltonian MCMC approach and all numerical examples in this work are analyzed accordingly, based solely on this most general approximate Hessian case. Let , consistent with the previous sections. Given the - estimate , where is an approximation to the inverse Hessian at , the BFGS update can be expressed as:

| (5) |

where I is the identity matrix, , and where denotes the log density of the target distribution, as before. There is a long history of efficient BFGS updates for very large systems and several numerical techniques can be used, including sparse and limited-memory approaches.

Two relevant studies in the literature on Quasi-Newton extensions and connections to MCMC algorithms can be found in [69, 70]. Our developed method, however, has fundamental differences that are summarized in that we are focusing on Hamiltonian methods, we are using two integrated coupled phases, an adaptive and a non-adaptive, and finally we consistently incorporate the Quasi-Newton outcomes in both stages of momentum sampling and simulation of Hamiltonian dynamics. In more detail, in the adaptive burn-in phase of the algorithm we are still sampling the momentum from an identity mass matrix, , but the ODEs of Eq.˜3 now become:

| (6) |

which is equivalent to the implicit linear transformation , and W is given by Eq.˜5. Accordingly, the leapfrog integrator is then reformulated as:

| (7) |

Hence, this new dynamic scheme efficiently and compatibly adjusts both the z and evolutions based on the local structure of the target distribution, and also features a Quasi-Newton direction for the momentum variables, allowing large jumps across the state space. The final estimation of the approximated inverse Hessian matrix, W, in the adaptive burn-in phase is then used in the subsequent non-adaptive phase of the algorithm as a preconditioned mass (inverse covariance) matrix, , used to sample the Gaussian momentum . As such, typical Hamiltonian dynamics are now used, albeit with this properly constructed mass matrix that takes into account the scale and correlations of the position variables, leading to significant efficiency gains, particularly in high-dimensional problems. The BFGS procedure in Eq.˜5 normally provides a symmetric, positive-definite W matrix in an optimization context. However, in our case we are using BFGS under different settings that may not satisfy the curvature condition , resulting in occasional deviations from positive-definiteness. Several standard techniques can be then implemented to ensure positive-definiteness, such as a damped BFGS updating [68] or the simple addition , where is some appropriate number. A straightforward method to determine is to choose it larger than the absolute value of the minimum eigenvalue of . Another technique involves utilizing a semidefinite programming approach to identify an optimized diagonal matrix to add to . Alternatively, W can be updated only when the curvature condition is satisfied, which directly guarantees positive definiteness. To further ensure the stability of the sampler, a positive threshold can be introduced to the curvature condition instead of zero, e.g., . This latter approach has been used and has worked well in this work. Since the final estimation of W in the adaptive burn-in phase is then extensively utilized in the subsequent non-adaptive phase, we suggest use of a directly provided positive-definite matrix W at this step. This can simply be accomplished by adding one more burn-in iteration step at the end of the burn-in phase, until an appropriate sample, directly supported by a positive definite W matrix is identified.

Our derived Quasi-Newton mass preconditioned Hamiltonian Markov Chain Monte Carlo (QNp-HMCMC) method is concisely summarized and presented in Algorithm˜2. Overall, QNp-HMCMC is a practical, efficient approach that only requires already available gradient information and provides important insight about the geometry of the target distribution, eventually improving computational performance and enabling faster mixing.

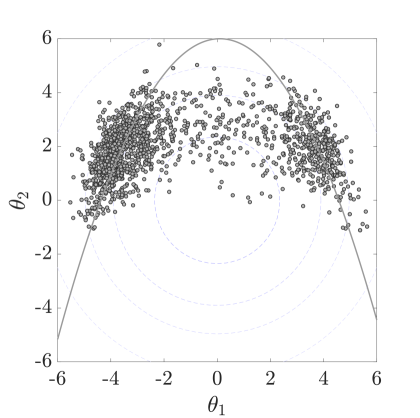

5 Approximate Sampling Target with Post-processing Adjustment (ASTPA)

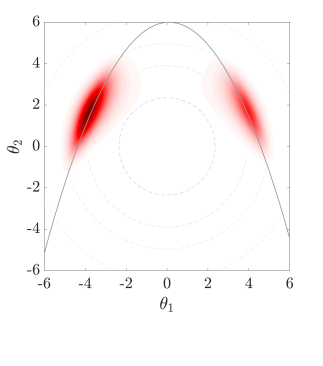

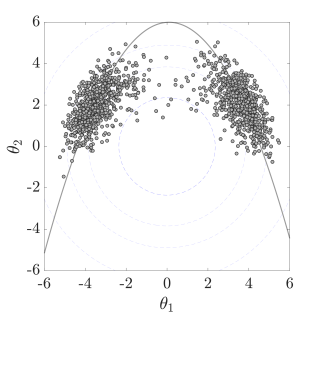

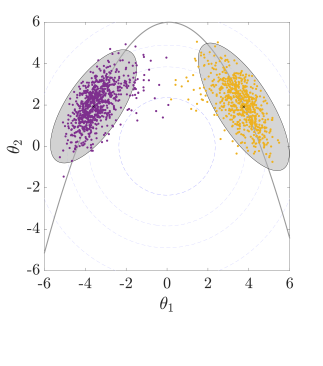

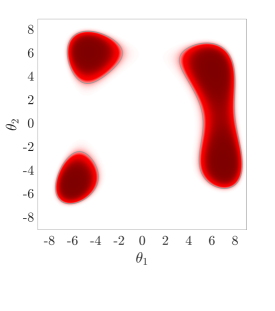

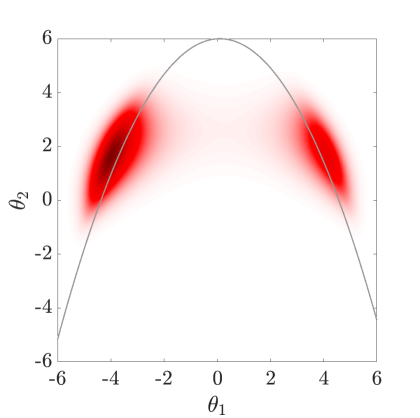

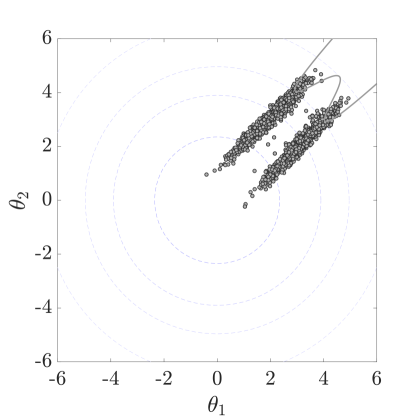

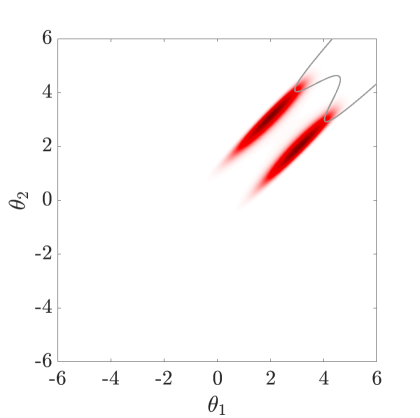

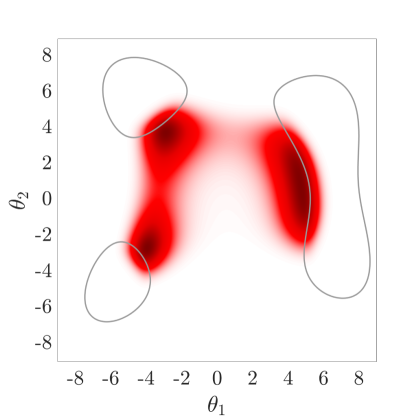

In order for an appropriate number of samples to discover and enter the relevant regions, contributing to the rare event probability estimation, a suitable approximate target distribution is constructed in this work, as analyzed in Section˜5.1, then sampled by Hamiltonian MCMC methods that can effectively reach regions of interest. For their initial stage, our HMCMC samplers have an adaptive annealed phase. This adaptive phase will be thoroughly explained later in Section˜5.3. To estimate the pertinent probability, Eq.˜1 needs to be then adjusted accordingly, since the samples are sampled from our constructed target distribution and not . An original post-sampling step is devised at this stage, using an inverse importance sampling procedure, described in Section˜5.2, i.e. first sample, then choose the importance sampling density automatically, based on the samples. Fig.˜1 concisely portrays the overall approach by using a bimodal target distribution with . The gray curves represent the parabolic limit-state function of this problem, with the failure domain being outside . The left figure displays the constructed target distribution, which in this simple 2D case can be visualized. The middle figure shows drawn samples from the target distribution by our suggested QNp-HMCMC algorithm, described in Section˜4, and the right figure demonstrates the inverse importance sampling step. All these different steps will be discussed in detail in the following sections.

5.1 Target distribution formulation

The basic idea is to construct an approximate sampling target distribution that places higher importance to the failure regions, to efficiently guide the samples to these domains of interest, and then the probability of failure can be quantified using an inverse importance sampling procedure. Eq.˜1 can be computed by directly sampling . However, this direct approach cannot be practically and effectively used in most general cases since the support domain of the non-smooth indicator function is only the failure regions ( g() 0), making it challenging of locating and adequately sampling the failure domains, especially in cases of high-dimensional and multi-modal spaces. In this work, the indicator function is hence approximated by a one-dimensional output likelihood function, that is based on the limit-state expression , supporting the entire domain . This likelihood function, , is expressed as a logistic cumulative distribution function, , with, mean, , and a dispersion factor , as:

|

|

|

| (8) |

where both , , parameters are described in detail in Section˜5.1.1, is the limit-state function, and is a scaling constant. A similar approach to approximate the indicator function can be seen in [24, 29], whereas it was used in a completely different context, in order to quantify the probability of failure using a sequential importance sampling method. The non-normalized target PDF is then defined as:

| (9) |

and combining Eqs.˜8 and 9, the is finally expanded as:

| (10) |

where denotes the multivariate standard normal distribution PDF in this work, , describing the multidimensional variable space . This approximate target distribution is smooth and supports both the safe and failure domains, emphasizing on the failure one, and can be sampled efficiently, particularly by the gradient-based HMCMC samplers which can take informed large jumps across the state space, exploring all regions of interest. The in Sections˜3 and 4, is thus the logarithmic form of the distribution, .

The reason for the scaling is mainly to properly and generally adjust the influence of the limit-state surface on the whole standard normal space , and to standardize parameter values, aiming at a universal algorithm and settings. Eq.˜11 defines recommended values for the scaling constant and the suggested range was found to work well in practice. Nonetheless, it is worth mentioning that these values may need further investigation when the proposed framework is directly used in non-Gaussian spaces.

| (11) |

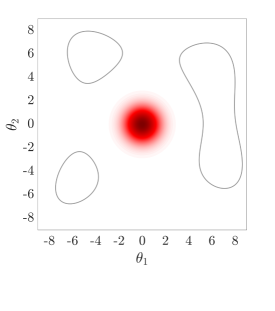

Fig.˜2 illustrates the described approach in constructing the target distribution in Eq.˜9 and Eq.˜10 under the ASTPA framework, emphasizing also on the effect of the scaling constant . The grey island-shaped curve is the well-known multi-modal Himmelblau’s function (particularly popular in mathematical optimization), seen also in Eq.˜30, and it characterizes our limit-state function , with the failure domain being inside of the curves and in this case. Fig.˜2(a) illustrates the bivariate normal distribution which defines the (, ) space. The limit state function here provides , so the scaling constant can be defined as , following Eq.˜11. Fig.˜2(b) portrays our prescribed 1-D likelihood function , described by a logistic cumulative distribution function, as shown in Eq.˜8. Fig.˜2(c) shows the non-normalized target PDF determined as a product of and without considering however the scaling constant here. Fig.˜2(d) instead represents the non-normalized target PDF constructed with the suggested value of . These two figures can thus clearly explain the role of the scaling constant in attracting samples to the regions of interest.

|

|

|

|

| (a) | (b) | (c) | (d) |

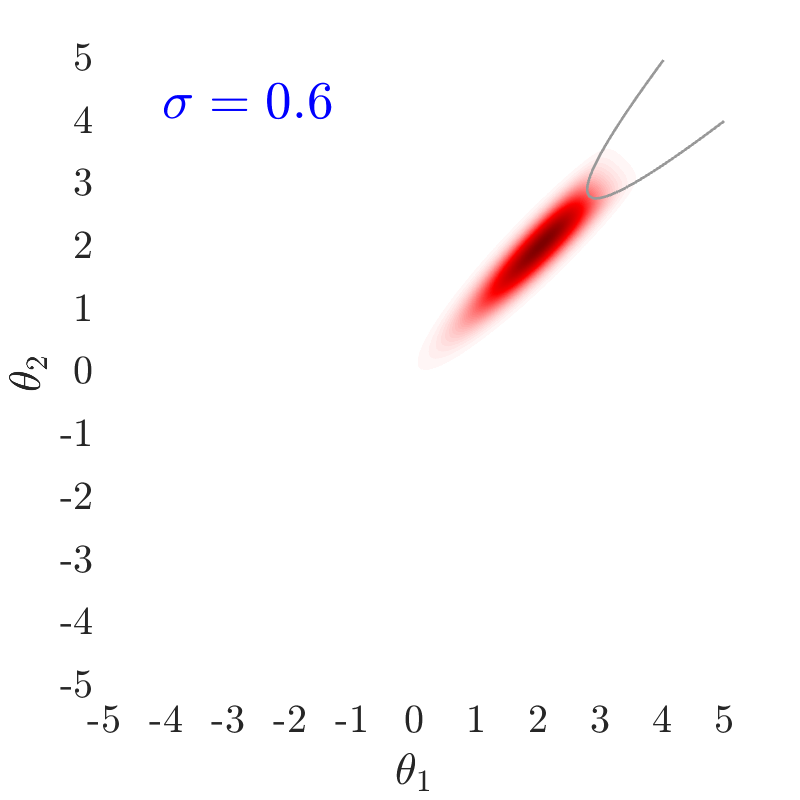

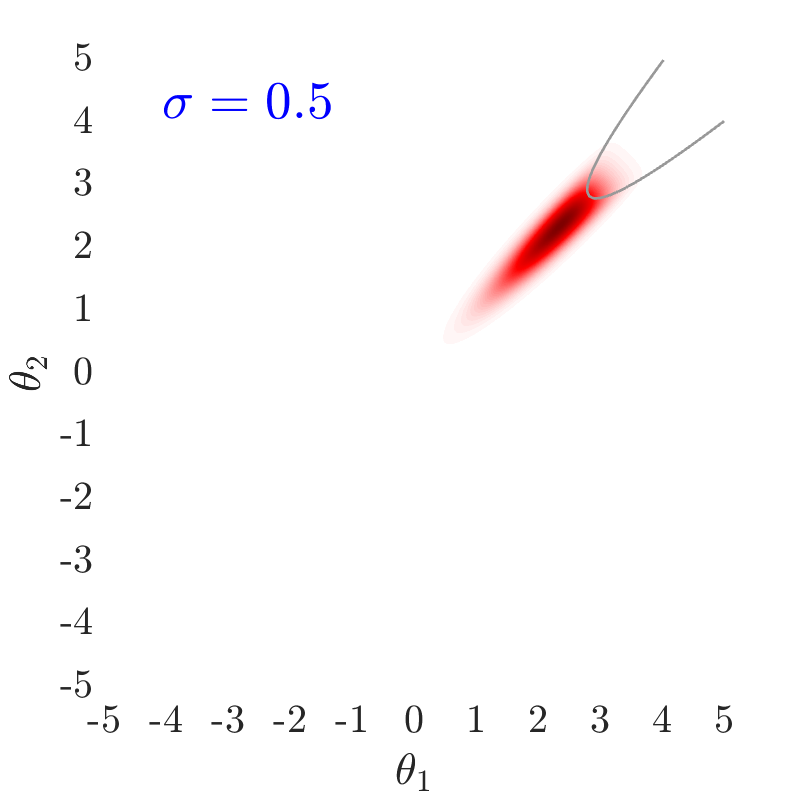

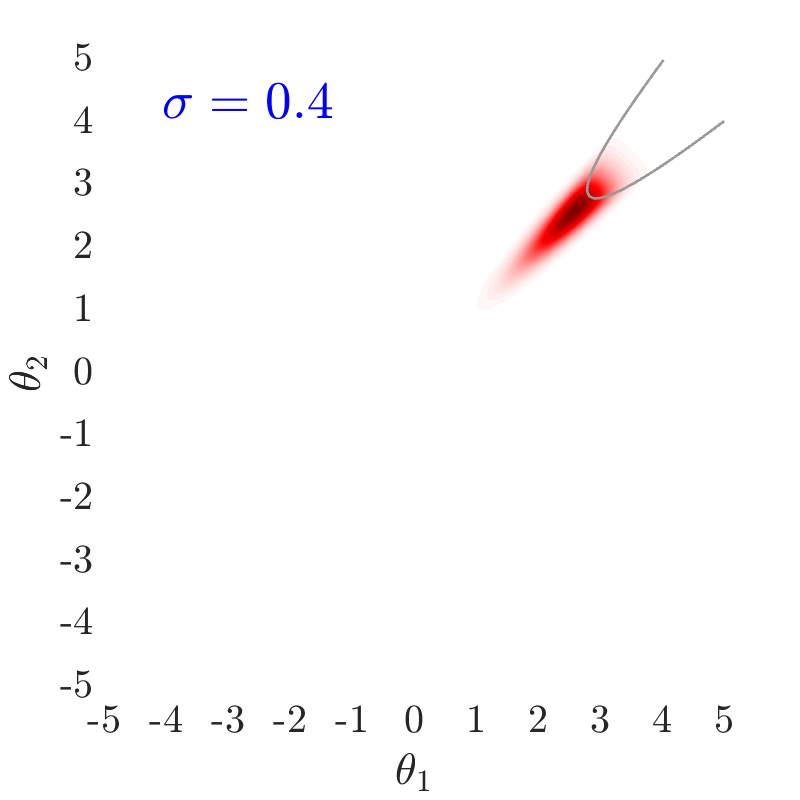

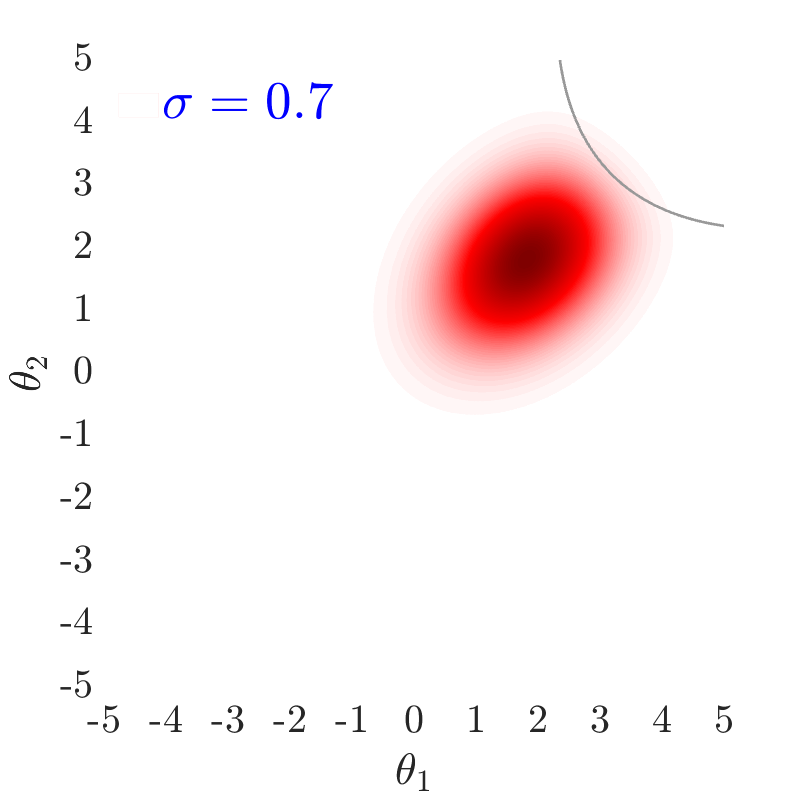

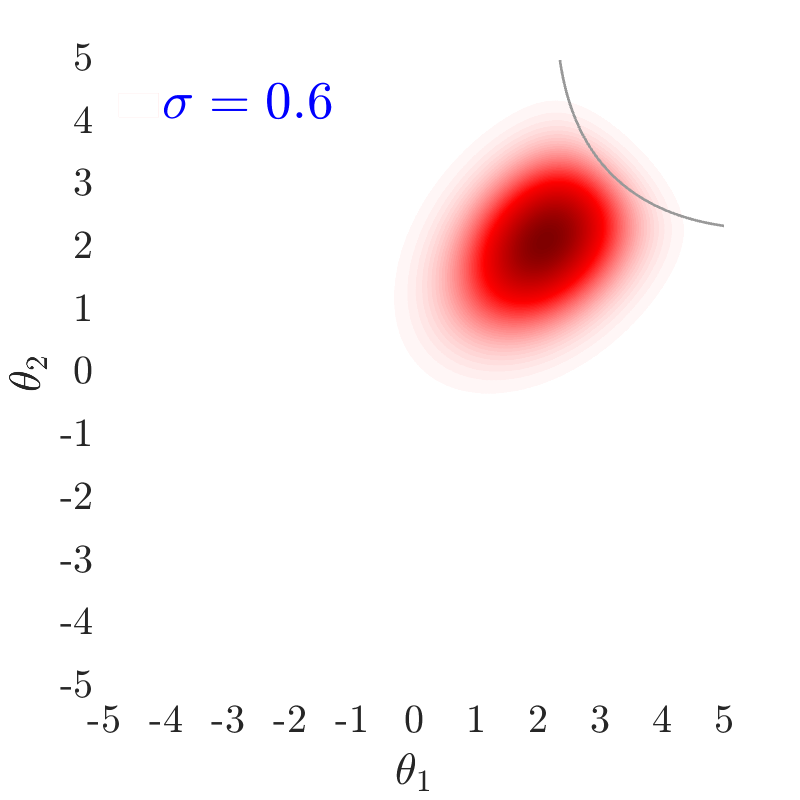

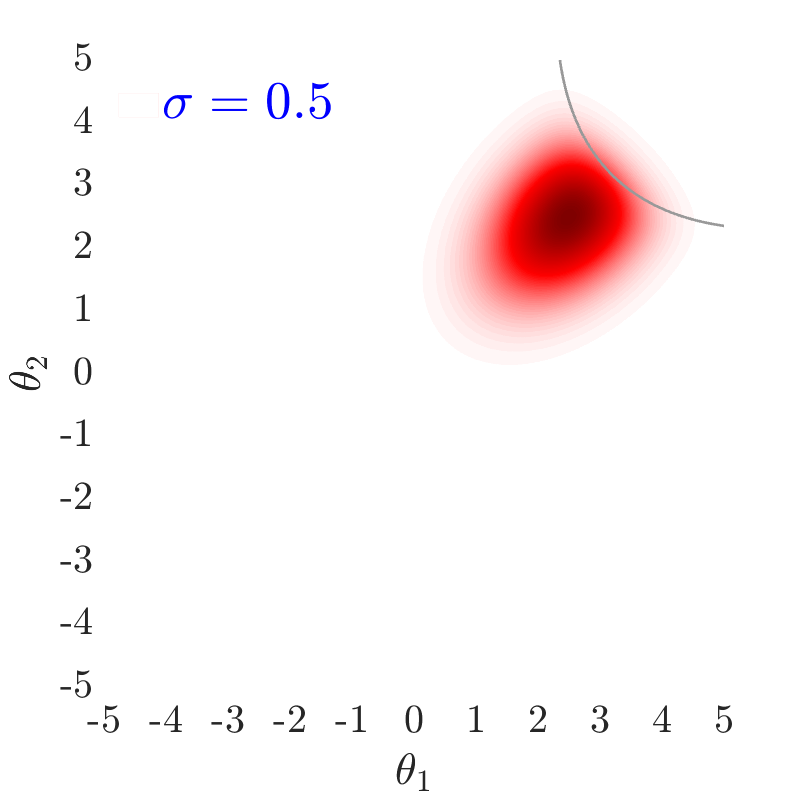

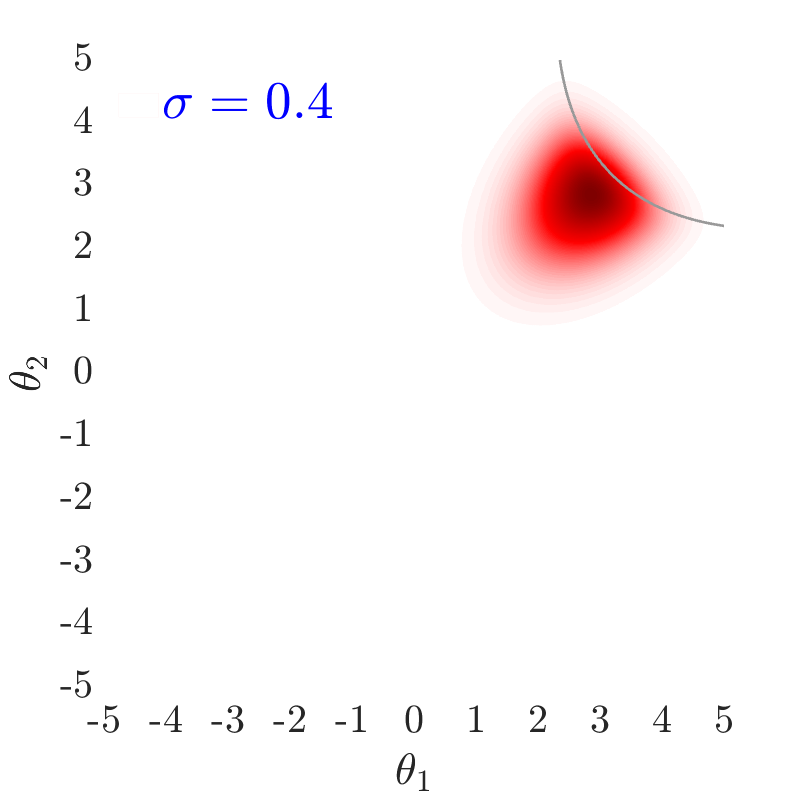

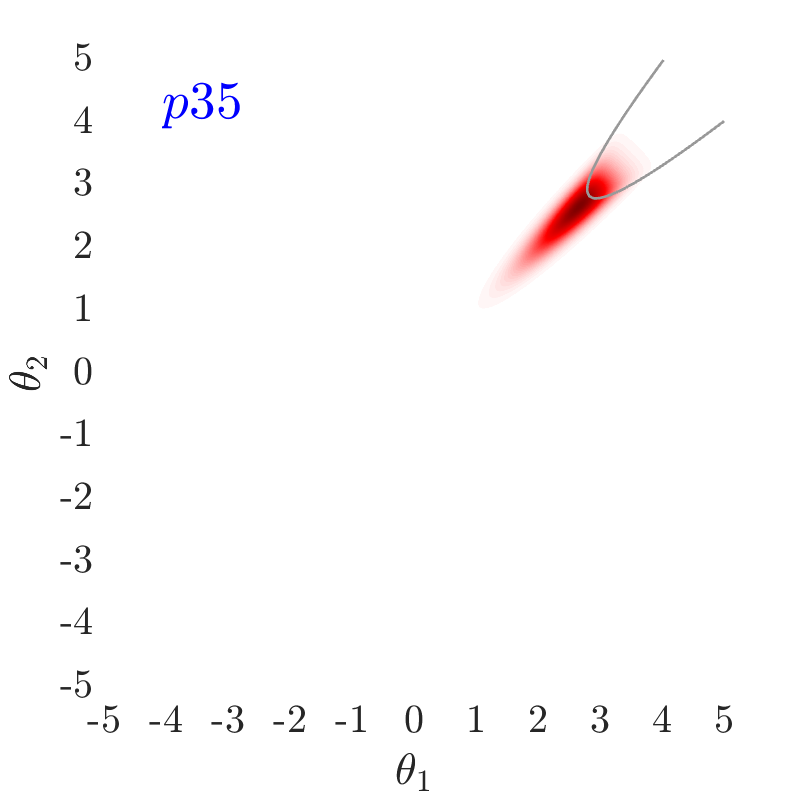

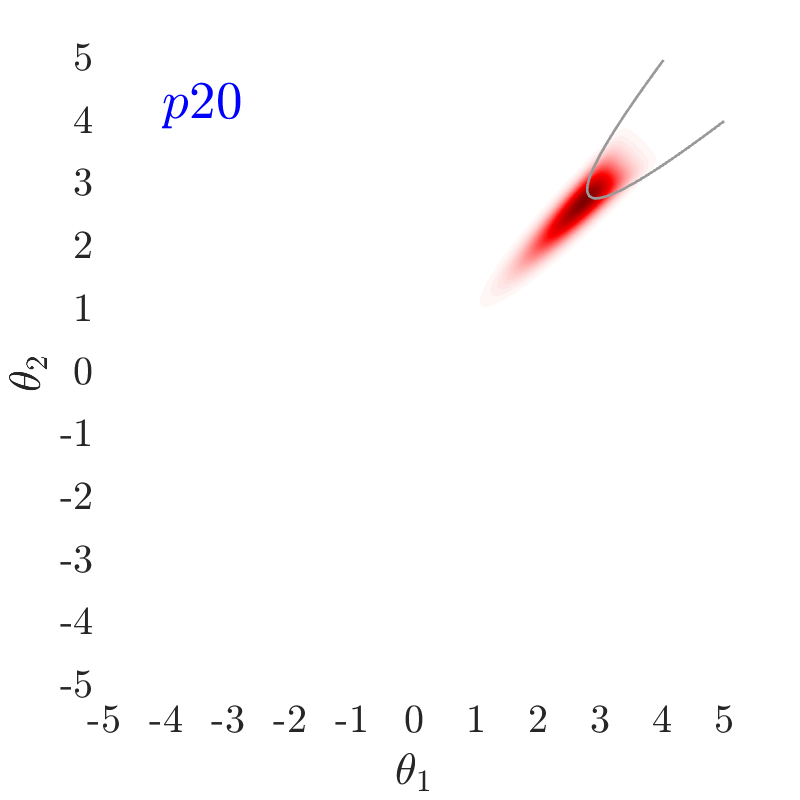

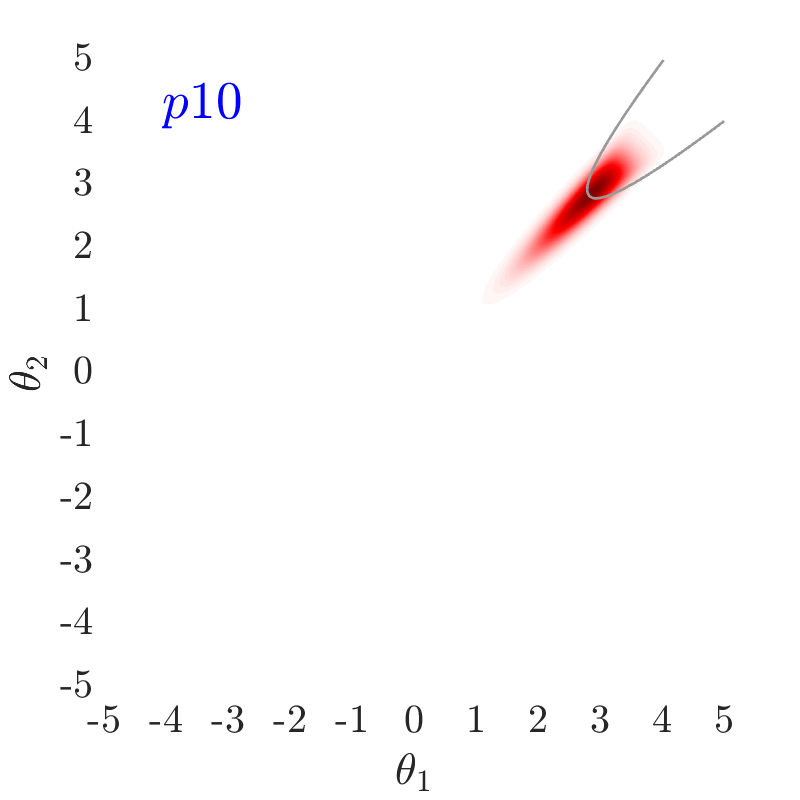

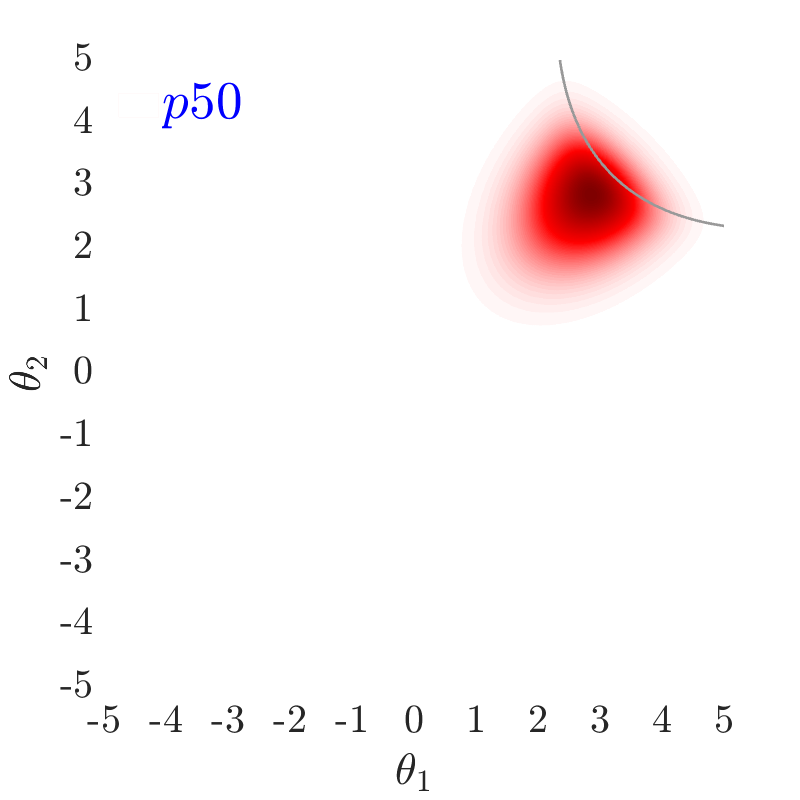

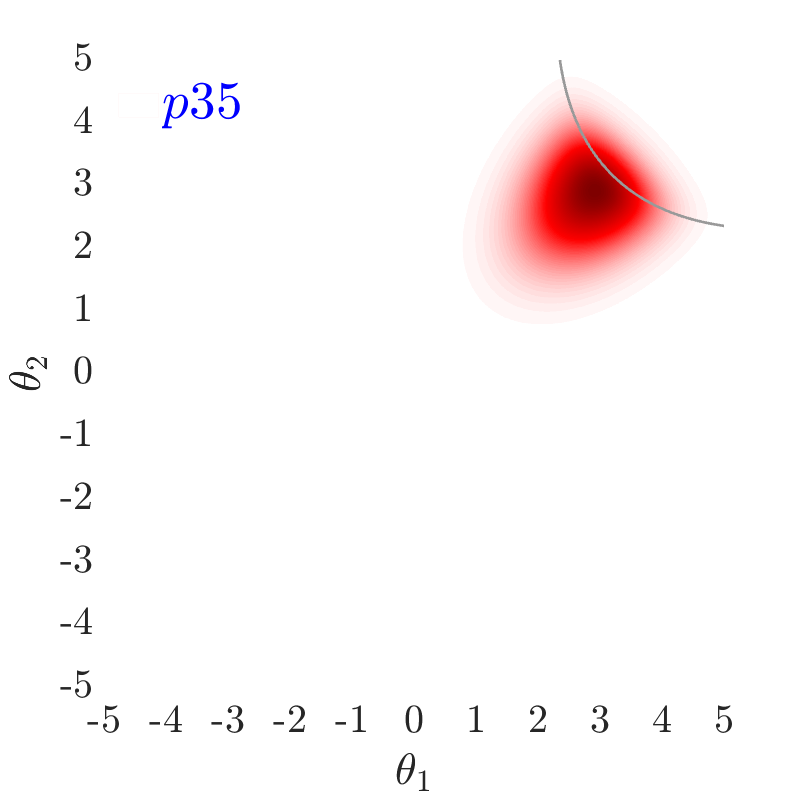

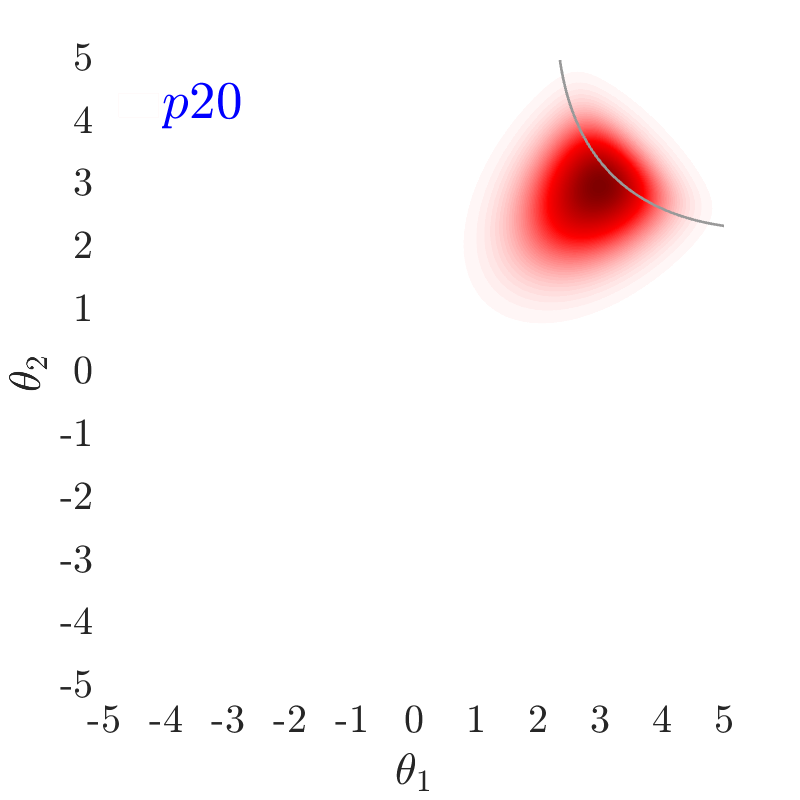

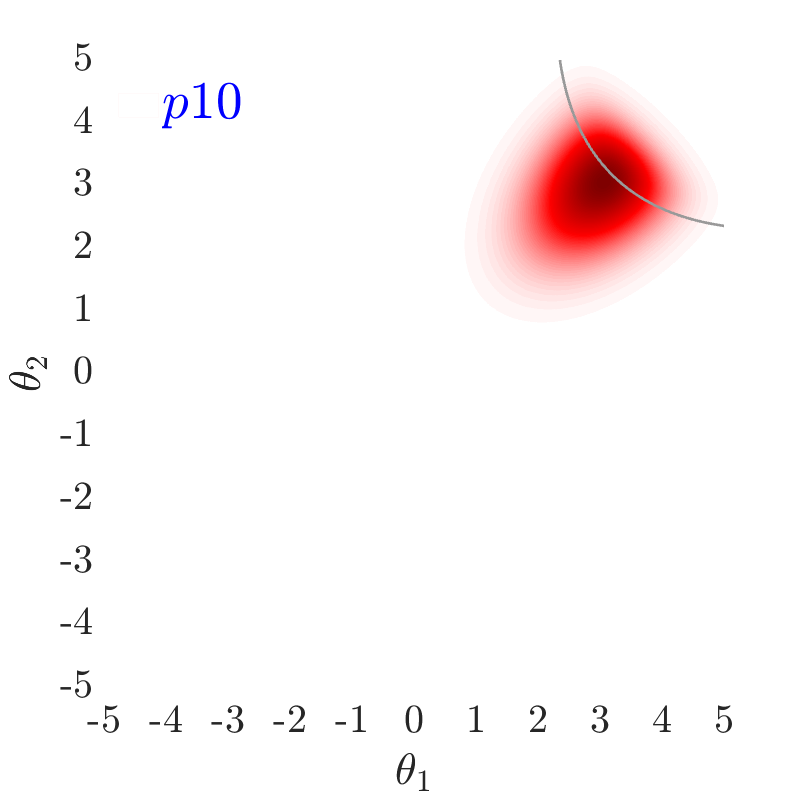

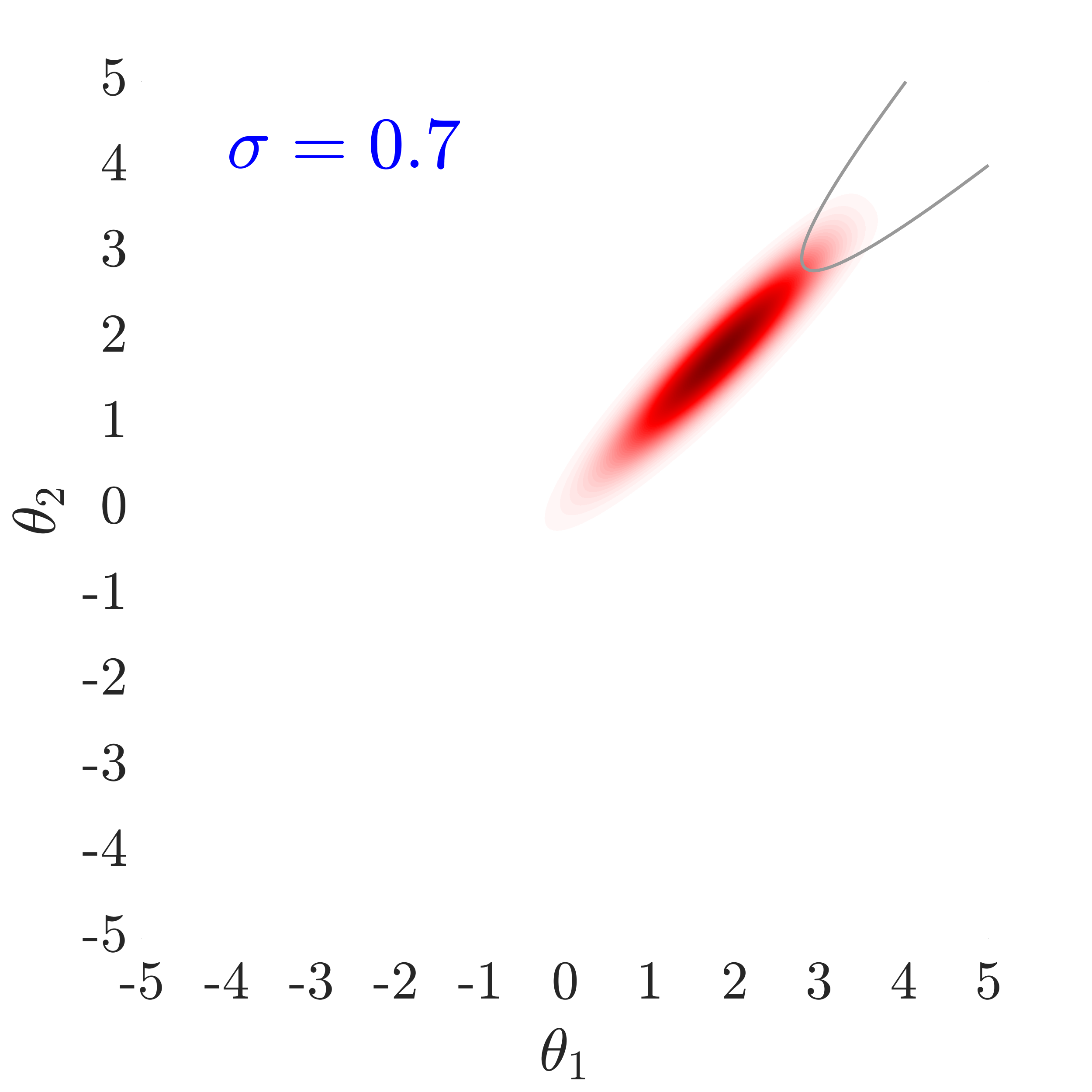

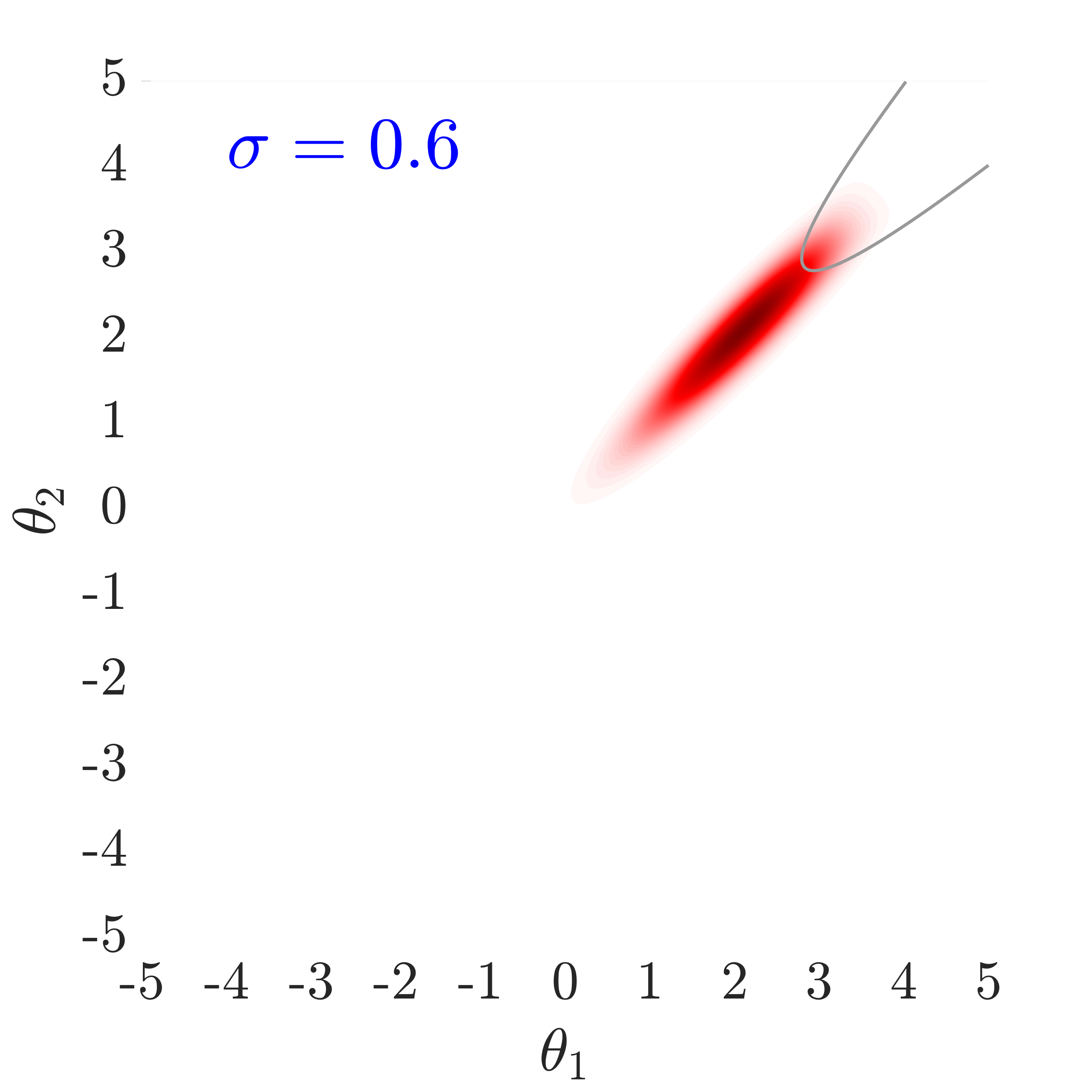

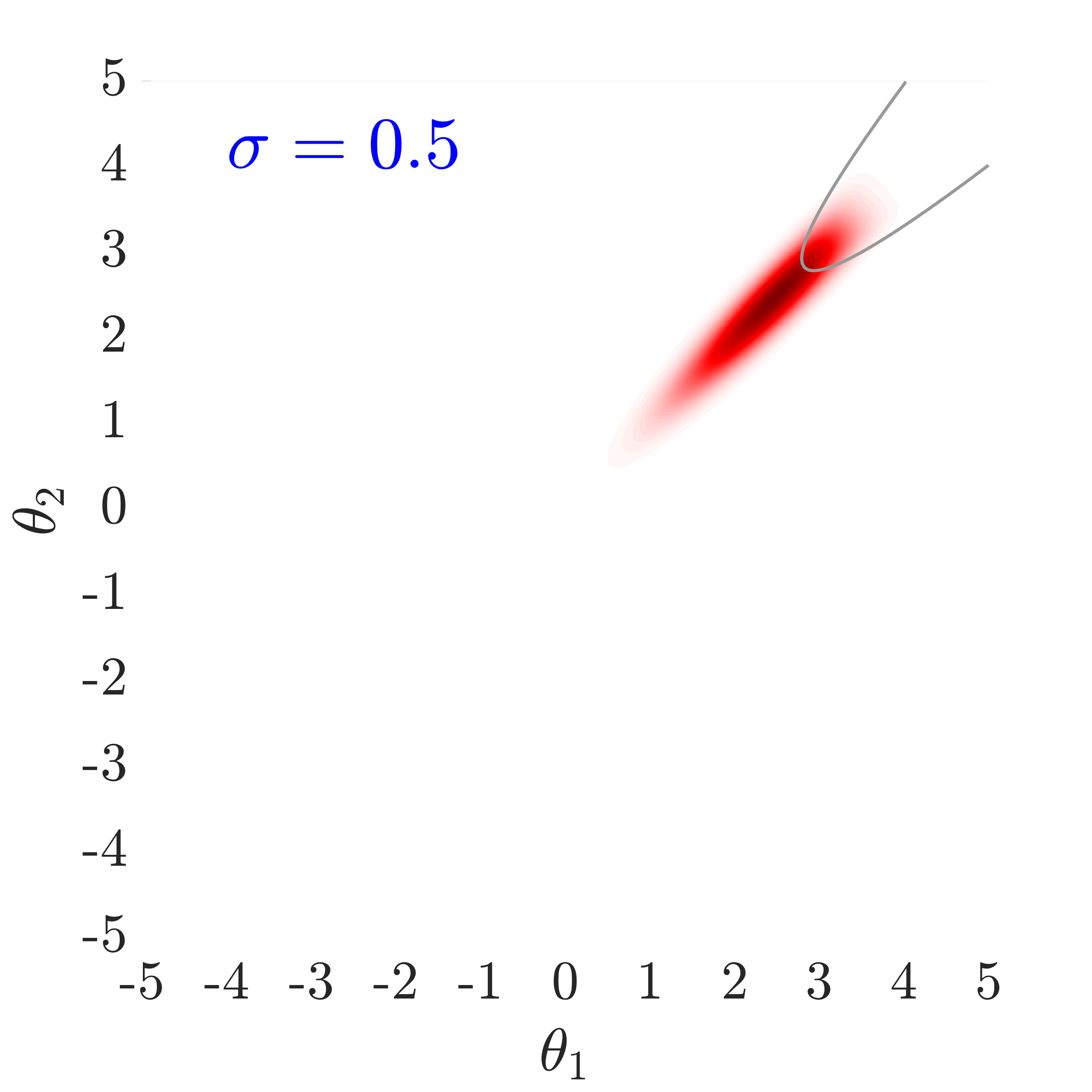

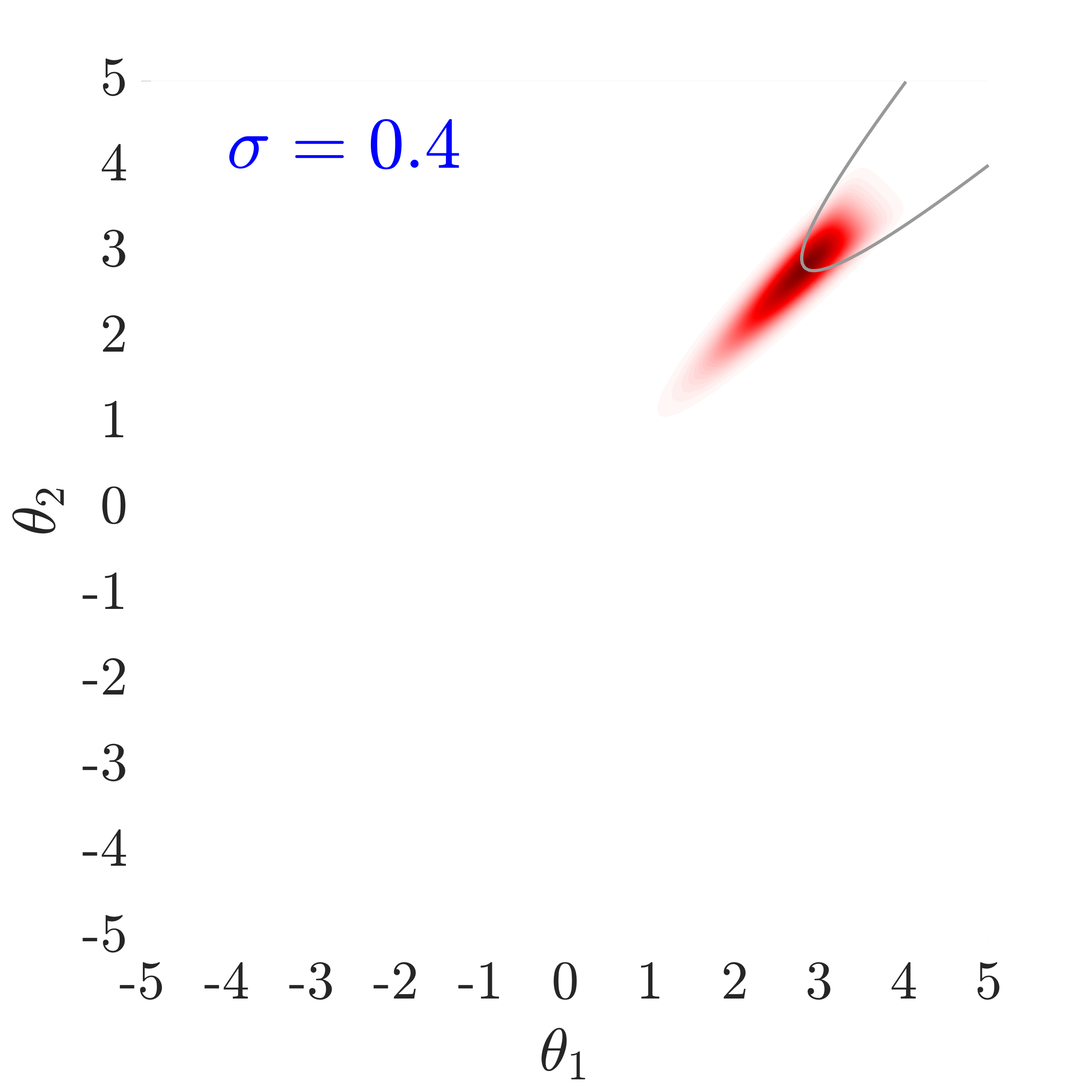

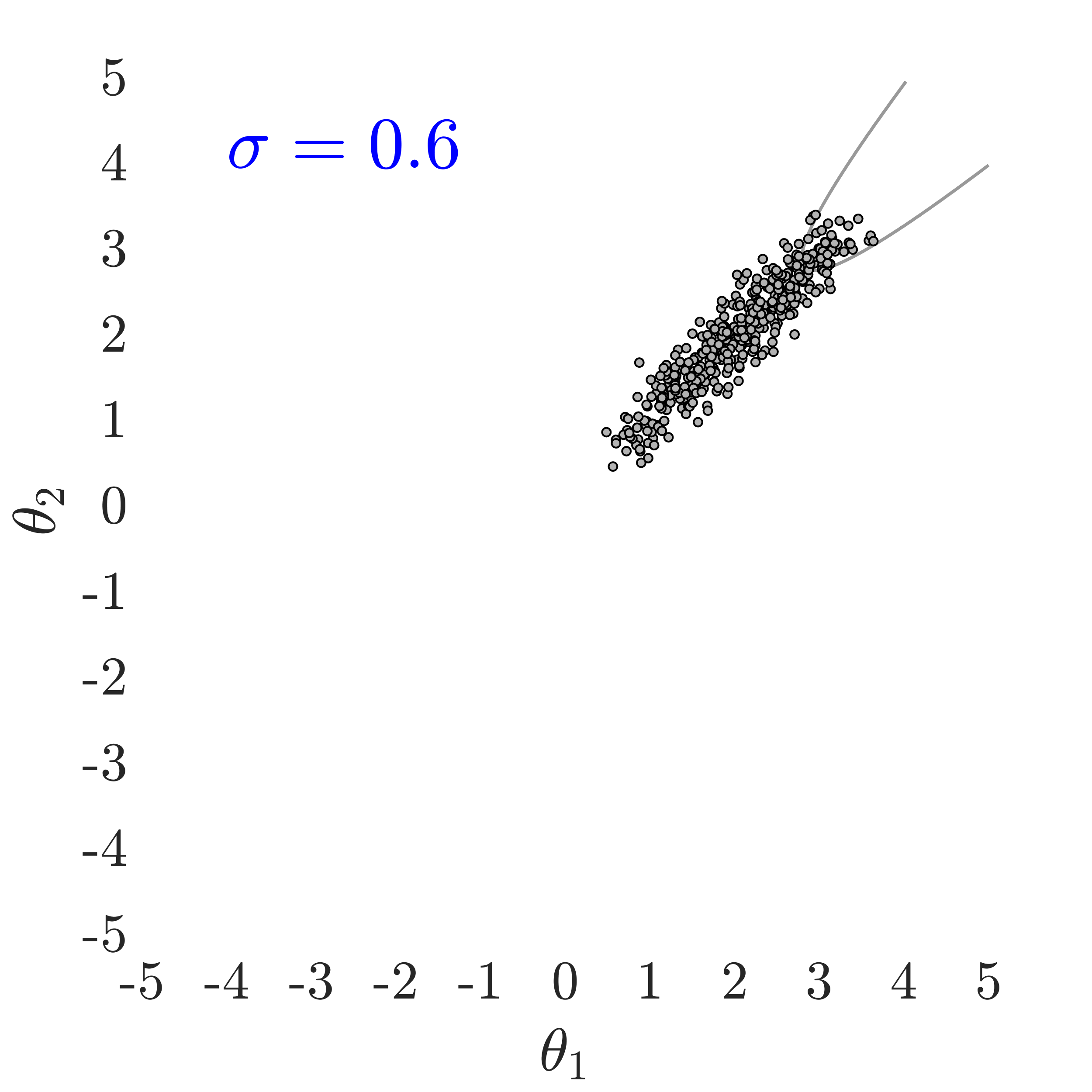

5.1.1 Impact of mean () and dispersion factor ()

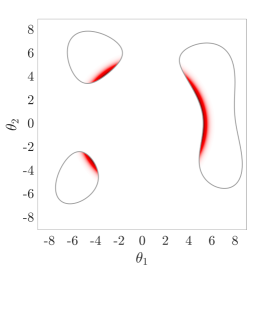

The use of in this work, largely follows ideas presented in [24], albeit at a completely different context. The value of also has an important effect on the convergence and the computational efficiency of our HMCMC sampling methods. In Fig.˜3 the effect of on the target distribution is displayed based on two 2D examples on the space, with a small failure probability () and a unimodal failure region. A higher value of can reduce the number of model calls as the constructed target becomes more dispersed compared to the case of lower values, thus being comparatively easier to be located and sampled, but an insufficient number of samples may then reach inside the failure regions. In contrast, reducing concentrates the target distribution within the regions of interest, making it, however, a more challenging sampling target, often requiring a higher number of model calls to be located and sampled. Choosing the value of the likelihood dispersion factor, , is therefore a trade-off. The suggested value of for our methodology is in the range . Fine tuning higher decimal values of in that range is not usually necessary. It is generally recommended to use higher values in multi-modal cases, enabling longer state jumps, even between modes, and lower values, , for cases with very small failure probability, e.g. around and lower, and/or high-dimensional problems, in order to attract more samples into the failure domain.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| C.o.V | C.o.V | C.o.V | C.o.V |

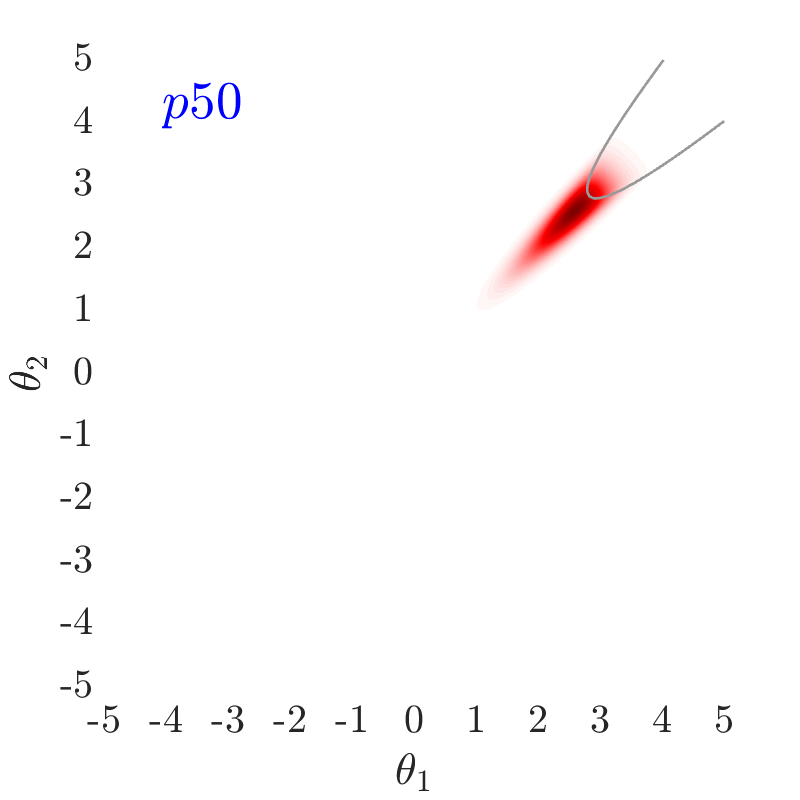

For the mean parameter, , we are interested in generally locating it into the failure region, , to enhance the sampling efficiency. As such, we are describing through a percentile, , of the logistic CDF and its quantile function, :

| (12) |

Placing a chosen percentile of the logistic CDF on the limit-state surface , results in and the can be then given as:

| (13) |

Therefore, as also shown in Fig.˜4, by reducing , the of the likelihood function further moves into the failure domain and consequently more samples can infiltrate into this region and can contribute to the failure probability estimation. In Fig.˜4, , for example, denotes the 50th percentile (). In all examples presented in this paper, the percentile, , is used to define the value , which is found to yield good efficiency. Eq.˜13 and parameter can now be used in Eq.˜10 to fully define the non-normalized target distribution .

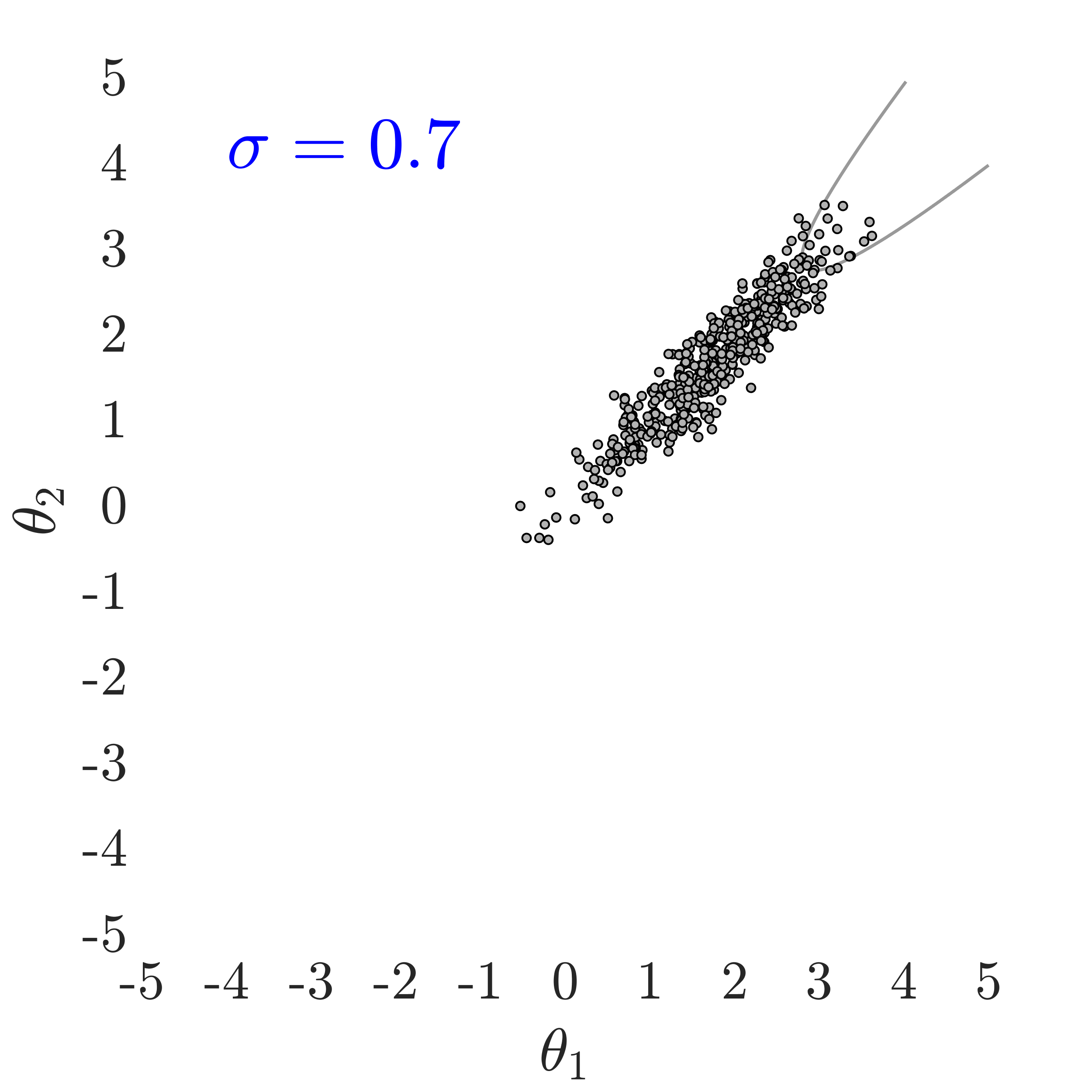

Fig.˜5 displays the effect of on sampling the approximate target distribution, showcasing how smaller values of push the samples further inside the failure regions. It also compares the and Coefficient of Variation (C.o.V), computed according to Eqs.˜16 and 26, based on 500 independent simulations with a number of model calls in all cases. Smaller values of result in a more accurate estimate of and a lower C.o.V, due to having the presence of more samples in the failure region.

5.2 Inverse Importance Sampling

An original post-sampling step is devised at this stage, termed inverse importance sampling (IIS), that can succesfully employ the already acquired samples and the normalized target distribution as the importance sampling density function. Hence, the probability of failure can be now computed as follows:

| (14) | |||

| (15) |

is the original distribution, denotes the non-normalized sampling target distribution, and is its normalizing constant. After some simplifications we get:

| (16) |

with the number of already acquired samples. To now calculate the normalizing constant , once again we resort to an importance sampling scheme in the following manner:

| (17) |

where can be a computed Gaussian Mixture Model (GMM), based again on the already available samples and the generic Expectation-Maximization (EM) algorithm [71], as indicatively seen in the right plot of Fig.˜1. A typical GMM expression can be then given by:

| (18) |

where is the PDF, is the weight, is the mean vector and is the covariance matrix of the Gaussian component, that can all be estimated, for all components, by the EM algorithm [71] based on the samples. When a GMM can be accurately fitted, the original HMCMC samples can be used directly to compute the normalizing constant , i.e., , and in Eq.˜16 and Eq.˜17. A precisely fitted GMM, with a sufficiently large number of components, adequately acts as a representative distribution for the original samples. However, given that the accuracy of GMMs may often deteriorate, particularly in high-dimensional and challenging multi-modal cases, due to the large number of parameters that need to be identified [29], similar to many other mixture models, additional samples from the computed can be required, just in order to accurately evaluate the normalizing constant . In this latter, more general case, even a very approximately fitted GMM with diagonal covariance matrices, particularly appropriate for high-dimensional cases, works effectively, since it is solely used for computing the constant , while the already obtained HMCMC samples are instead used in Eq.˜16. Drawing each of the i.i.d. samples requires only one model call and is called IIS sample in the following sections for the sake of clarity and simplicity. As a general guide, using IIS samples around of the total number of samples results in a good estimate for and eventually Eq.˜16.

5.3 Adaptation during the burn-in phase

A burn-in sampling phase is required in the ASTPA framework, regardless of the used MCMC sampling scheme. For the presented HMCMC methods in this work, the burn-in samples are usually adequate to be around of the total number of samples. To improve the overall sampling efficiency, we are taking advantage of this burn-in phase, that guides the chain to the appropriate target distribution, by also performing a series of adaptations and relevant parameter tuning steps. In particular, the stepsize and the trajectory length are established in this burn-in stage, as explained in Section˜3.2, and then used throughout the rest of the analysis, while the precondtioned mass matrix M, used in our QNp-HMCMC algorithm, is also computed in the burn-in phase and then utilized for the remainder of the sampling, as discussed in Section˜4.

In addition to these sampling related parameters, we are also adjusting the target distribution in this sampling stage, through the parameters and . Following the discussion in Section˜5.1.1, these two parameters are automatically evolving toward their constant values in this adaptive phase, to assist in guiding the samples to the final target distribution. The likelihood dispersion factor initiates with and then follows an exponential decay until its prescribed constant value , as:

| (19) |

where is the assigned number of total burn-in samples and denotes the iteration counter based on a fully completed leapfrog step. A similar exponential growth scheme is also used for , starting based on in Eq.˜13, i.e. , and reaching the constant value given by the prescribed percentile in Eq.˜13, as:

| (20) |

| Dispersion factor () | Trajectory length () | Step size () | Total model calls | Burn-in | IIS samples | |

|---|---|---|---|---|---|---|

| [0.1 0.8] | 0.7 | dual averaging [61] | 5,000-10,000 | 10 | 20 |

5.4 Statistical properties of the estimator

Based on Eq.˜16 and given samples , the sample estimator is expressed:

| (21) |

where

| (22) |

with variances:

| (23) |

| (24) |

Assuming and are independent random variables, the variance of can be given as:

| (25) |

The Coefficient of Variation (C.o.V) can then be provided as:

| (26) |

where denotes the used Markov chain samples, taking into account the fact that the samples are not independent and identically distributed (i.i.d) in this case. HMCMC samplers typically showcase low autocorrelation and thus thinning the sample size from to to enhance independence is often not required. In any case, can be easily determined by examining the sample autocorrelation and choosing an appropriate thinning lag, if needed. In this work, for the C.o.V calculation and in Eq.˜23, we used every sample for all examples. The same thinning process can also be used for in Eq.˜22, if wanted, although we have not done this in this work and all acquired samples have been used for the probability of failure estimation.

5.5 Summary of the ASTPA parameters

The required input parameters for the presented methodology are summarized in Table˜1, together with some suggested generic values for reliability estimation problems. The constant likelihood dispersion factor follows the suggestions in Section˜5.1.1 and can generally be in the range of . The mean, , is chosen to be provided by the percentile in Eq.˜13, and the trajectory length can be based on the ESJD metric, as explained in Section˜3.2, with a generic value being . The dual averaging algorithm of [61] is adopted here to automatically provide the used constant step size in the non-adaptive sampling phase, and the required minimum number of model calls for the QNp-HMCMC method is roughly suggested to be 5,000-10,000 for high-dimensional problems and target probabilities lower than . Naturally, the required number of model calls and samples is case dependent and convergence of the estimator can be checked through Eq.˜16, Eq.˜23, and/or Eq.˜24. For the burn-in phase less than of the total number of samples are often required, so a value can be generally suggested. Finally, to compute the normalizing constant of the approximate target, we can generally use IIS samples around of the total number of samples.

6 Numerical Results

Several numerical examples are studied in this section to examine the performance and efficiency of the proposed methods. In all examples, input parameters follow the provided guidelines in Section˜5.5. To compute the normalizing constant , IIS samples around of the total number of samples have been drawn from a computed GMM with diagonal covariance matrices and, generally, and Gaussian components for low and high dimensional problems, respectively. Results are compared with the Component-Wise Metropolis-Hastings based Subset Simulation (CWMH-SuS) [21], with two proposal distributions, a uniform one of width 2 and a standard normal one. The adaptive Conditional Sampling (aCS) SuS variant introduced in [16] is also used in all examples, implemented based on the online provided code by the authors [72]. In all examples, the SuS parameters are chosen as = 1,000 and 2,000 for low- and high-dimensional problems, when needed, respectively, with the number of samples in each subset level, and , where is the samples percentile for determining the appropriate subsets. Comparisons are provided in terms of accuracy and computational cost, reporting the PF estimation and the mean number of limit-state function calls. Analytical gradients are provided in all examples, hence one limit-state/model call can provide both the relevant function values and gradients. The number of limit-state function evaluations for the HMCMC-based methods has been determined based on reported C.o.V values , as estimated by 500 independent simulations. C.o.V values estimated by the analytical expression in Eq.˜26 are also reported in parenthesis, for both HMCMC and QNp-HMCMC methods. The reference failure probabilities are obtained based on the mean estimation of 100 independent simulations using crude Monte Carlo samples, where applicable for larger reference probabilities, and SuS method with = 100,000 for smaller reference probabilities. The problem dimensions are denoted by and all ASTPA parameters are carefully chosen for all examples but are not optimized for any one. Comparative and perhaps improved alternate performance might thus be achieved with a different set of parameters.

6.1 Example 1: Nonlinear convex limit-state function

The first example consists of a nonlinear convex limit-state function characterized by two independent standard normal random variables and a low failure probability ( 4.73E-6):

| (27) |

Table˜2 compares the number of model calls, C.o.V and obtained by SuS and the two HMCMC algorithms. For the HMCMC algorithms, the trajectory length is chosen equal to the default value of 0.7 and the likelihood dispersion factor, , is 0.4. The burn-in sample size is set to 150 samples. As shown, the suggested approaches perform noticeably better than SuS, and aCS-SuS results outperform the original SuS approach with two different proposal distributions. The QNp-HMCMC method exhibits an excellent performance with only 844 model calls in this problem, on average. The simulated QNp-HMCMC samples and the analytical target distribution based on the limit-state function of Eq.˜27 can be seen in the two figures on the right hand side in Fig.˜5.

| 500 Independent Simulations | CWMH-SuS | aCS-SuS | HMCMC | QNp-HMCMC | ||

|---|---|---|---|---|---|---|

| Number of total model calls | 5,462 | 5,552 | 5,453 | 1,873 | 836 | |

| C.o.V | 1.06 | 1.55 | 0.94 | 0.140.15 | 0.150.15 | |

| (Ref. 4.73E-6) | 4.88E-6 | 5.11E-8 | 4.52E-6 | 4.73E-6 | 4.72E-6 | |

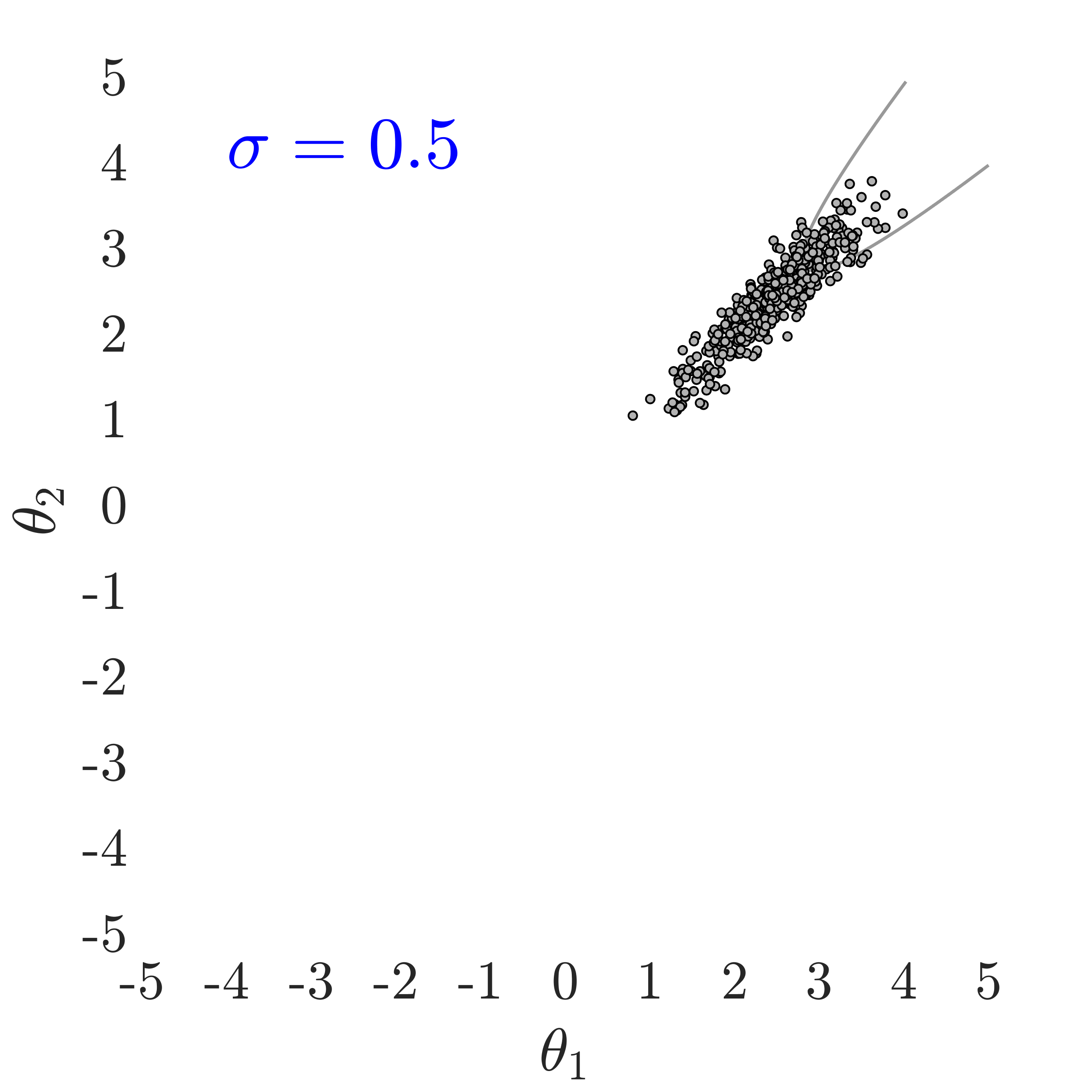

6.2 Example 2: Parabolic/Concave limit-state function

This example is based on the following limit state function with two standard normal random variables [73]:

| (28) |

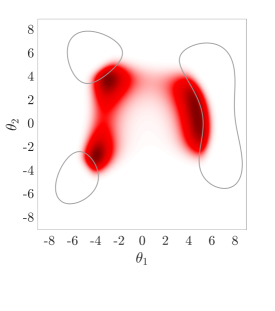

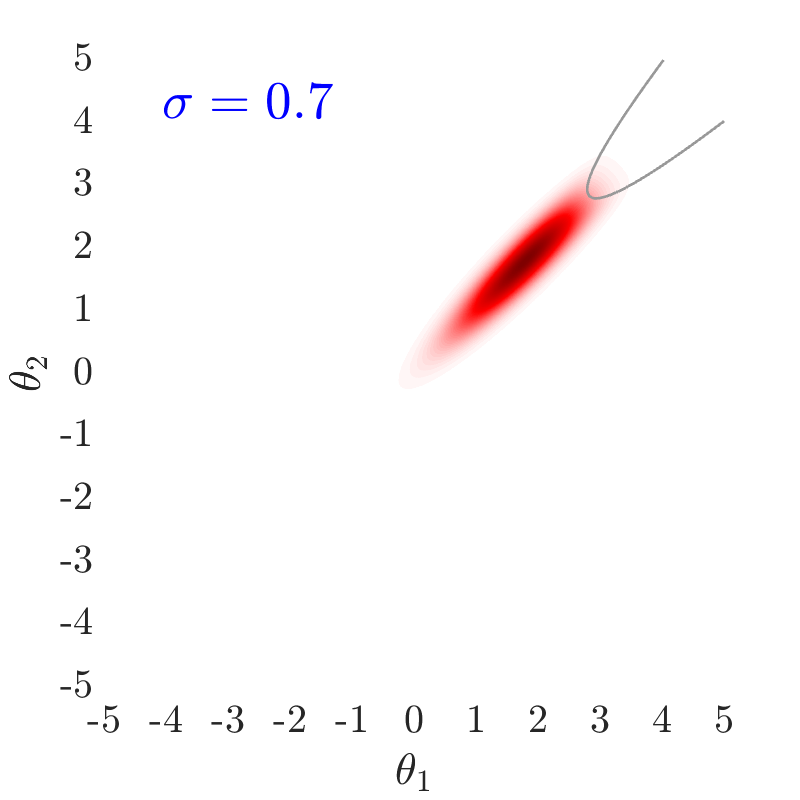

where , and are deterministic parameters chosen as , and . The probability of failure is 3.95E-5 and the limit-state function describes two main failure modes, as also seen in Fig.˜6. For the HMCMC-based algorithms, the likelihood dispersion factor, , is 0.7, the burn-in sample size is 200 samples, and the trajectory length is set to .

Table˜3 compares the number of model calls, the C.o.V and the obtained by all methods. The HMCMC-based approaches provide significantly smaller C.o.V values than SuS, with fewer model calls, and the HMCMC in particular outperforms all other methods in this two dimensional problem. The QNp-HMCMC samples are also shown in Fig.˜6 and can accurately represent the two important failure regions. The circular dash lines in Fig.˜6(a) represent the probability contour of the standard normal space , for probability levels of , , and , similarly provided for Figs.˜7 and 8 as well.

| 500 Independent Simulations | CWMH-SuS | aCS-SuS | HMCMC | QNp-HMCMC | ||

|---|---|---|---|---|---|---|

| Number of total model calls | 4,559 | 4,565 | 4,562 | 3,306 | 3,306 | |

| C.o.V | 0.62 | 0.65 | 0.63 | 0.090.06 | 0.090.06 | |

| (Ref. 3.95E-5) | 4.19E-5 | 4.14E-5 | 4.09E-5 | 3.93E-5 | 3.88E-5 | |

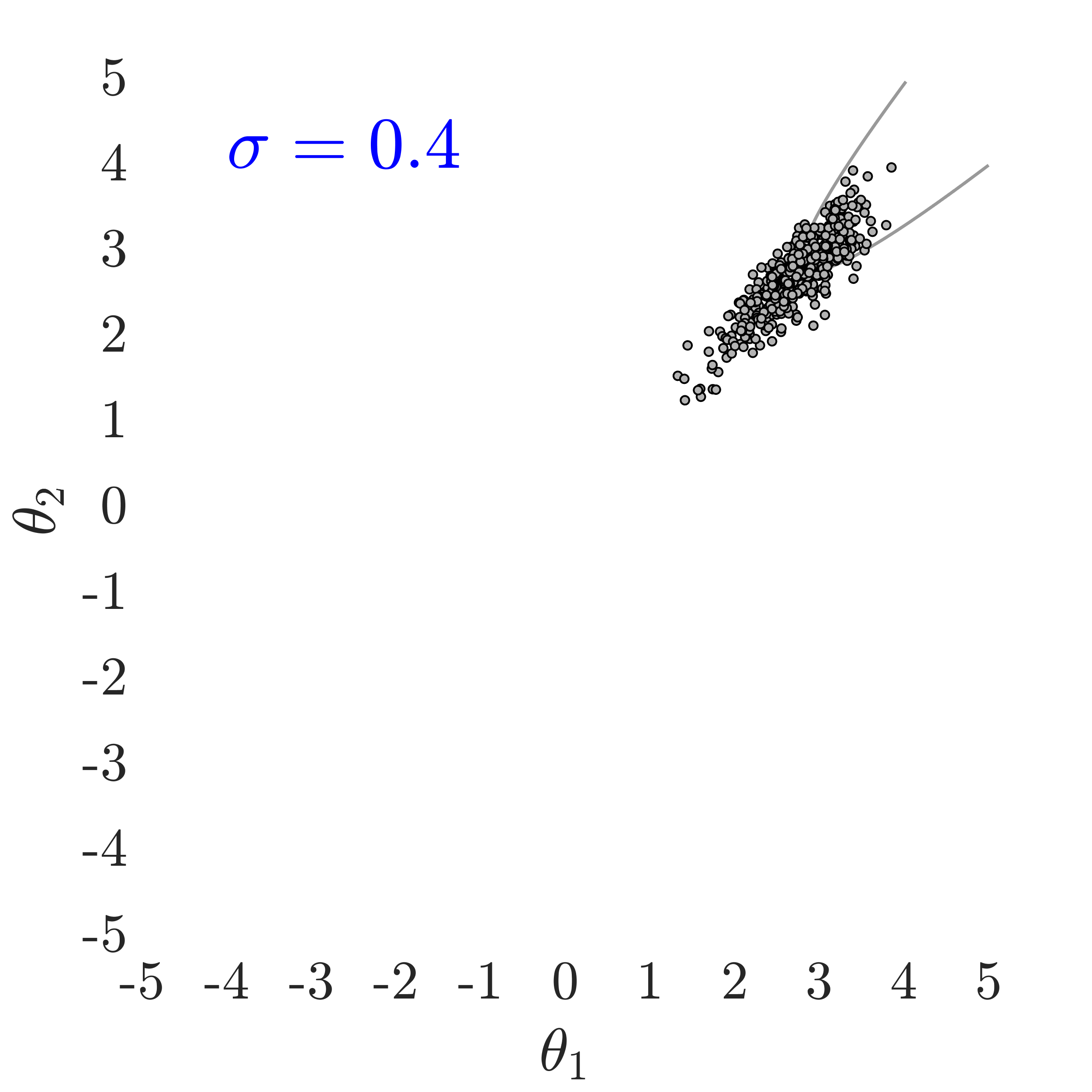

6.3 Example 3: Quartic bimodal limit-state function

The third example is a quartic bimodal limit-state function with very low probability of failure ( 5.90E-8), defined by the following limit-state function in the standard normal space:

| (29) |

Table˜4 compares the performance of the HMCMC methods with the ones by SuS. The trajectory length is chosen , and the likelihood dispersion factor, , is 0.5. The burn-in sample size is set to 200 samples. HMCMC-based methods, particularly QNp-HMCMC, once more provide significantly lower C.o.V values with fewer model calls than SuS approaches, that perform rather poorly in this example, with aCS-SuS performing the best among them. Fig.˜7 displays related target distribution based on the limit-state function of Eq.˜29, as well as the samples generated by the QNp-HMCMC method.

| 500 Independent Simulations | CWMH-SuS | aCS-SuS | HMCMC | QNp-HMCMC | ||

|---|---|---|---|---|---|---|

| Number of total model calls | 7,327 | 7,536 | 7,380 | 6,277 | 2,696 | |

| C.o.V | 1.64 | 2.45 | 1.59 | 0.280.27 | 0.270.27 | |

| (Ref. 5.90E-8) | 6.13E-8 | 5.86E-8 | 6.12E-8 | 5.90E-8 | 5.89E-8 | |

6.4 Example 4: The Himmelblau Function

In nonlinear optimization, a commonly used fourth order polynomial test function is the so-called Himmelblau [74] function. Here we adopt and modify this function, as:

| (30) |

which is particularly suitable for reliability examples with multiple separated failure domains. and are assumed to be independent standard normal random variables and the constant is used to define different levels of the failure probability. Table˜5 compares the number of model calls, coefficient of variation and obtained by SuS and the family of HMCMC algorithms. For the HMCMC algorithms, the trajectory length is chosen as and the burn-in is set to 200 samples. The is beyond the upper bound, , and as discussed in Section˜5, we perform the scaling with . The likelihood dispersion factor used in this example is mentioned in Table˜5, and has been chosen according to the guidelines in Section˜5.1.1, considering both multimodality and the different levels of the failure probabilities. The Subset Simulation results are based on 1,000.

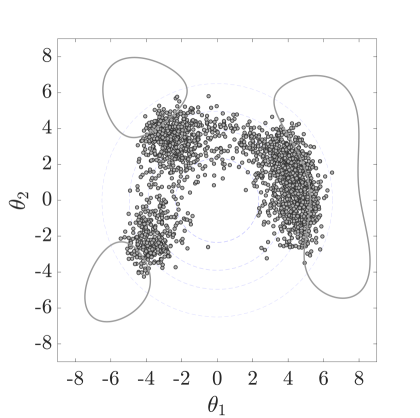

It is again shown here that the HMCMC approach gives significantly smaller C.o.V than SuS and also outperforms it in terms of the estimation. Fig.˜8 also demonstrates that the HMCMC samples accurately describe all the three important failure regions.

|

|

500 Independent Simulations | CWMH-SuS | aCS-SuS | HMCMC | QNp-HMCMC | |

|---|---|---|---|---|---|---|

| Number of total model calls | 3,833 | 3,833 | 3,821 | 3,100 | 3,100 | |

| C.o.V | 0.42 | 0.50 | 0.35 | 0.100.06 | 0.130.06 | |

| (Ref. 1.65E-4 ) | 1.69E-4 | 1.78E-4 | 1.68E-4 | 1.64E-4 | 1.62E-4 | |

| Number of total model calls | 6,471 | 6,528 | 6,463 | 3,600 | 3,600 | |

| C.o.V | 0.87 | 1.67 | 0.54 | 0.160.10 | 0.160.10 | |

| (Ref. 2.77E-7) | 3.14E-7 | 3.33E-7 | 2.89E-7 | 2.77E-7 | 2.77E-7 | |

6.5 Example 5: Cantilever beam

In this last two-dimensional example, a cantilever beam problem is studied [75]. The beam is illustrated in Fig.˜9, with cross-section width , height , beam length , and transverse loads and . The beam failure mode in this case is the maximum deflection exceeding the allowable value, , given by the limit-state function:

| (31) |

where and , is the elastic modulus, , and . and follow independent normal distributions and .

The normally distributed variables and are transformed into the standard normal space and the limit-state function in the space becomes:

| (32) |

|

|

500 Independent Simulations | CWMH-SuS | aCS-SuS | HMCMC | QNp-HMCMC | |

|---|---|---|---|---|---|---|

| Number of total model calls | 6,056 | 6,069 | 6,062 | 1,900 | 1,900 | |

| C.o.V | 0.80 | 0.93 | 0.50 | 0.150.17 | 0.140.17 | |

| (Ref. 1.01E-6 ) | 1.07E-6 | 1.08E-6 | 1.05E-6 | 1.01E-6 | 1.01E-6 | |

| Number of total model calls | 7,561 | 7,586 | 7,569 | 3,200 | 3,200 | |

| C.o.V | 1.11 | 1.41 | 0.60 | 0.190.24 | 0.190.24 | |

| (Ref. 1.97E-8) | 2.14E-8 | 2.22E-8 | 2.03E-8 | 1.97E-8 | 1.98E-8 | |

For the HMCMC-based algorithms, the trajectory length is chosen and the likelihood dispersion factor, , is 0.2. The burn-in sample size is set to 200 samples. Table˜6 compares the number of model calls, C.o.V and for two different failure probability levels obtained by SuS and the HMCMC-based methods. As shown, the HMCMC-based approaches noticeably again outperform the SuS methods.

6.6 Example 6: High-dimensional reliability example with linear limit-state function

In this first high-dimensional example, a linear limit-state function of independent standard normal random variables is considered:

| (33) |

where denotes again the related problem dimensions, and the pertinent probability of failure is equal to , independent of , with the cumulative distribution function of the standard normal distribution. To investigate the effect of different failure probability levels, in relation to the HMCMC-based methods and SuS performance, a sequence of values for and are studied. Table˜7 summarizes the comparative results through the mean number of model calls, mean and C.o.V of the estimated probabilities. For both HMCMC-based methods, the likelihood dispersion factor, , is equal to 0.3, the burn-in sample size is 300, and the trajectory length, , for all failure probability levels is chosen equal to 0.7, the default value. SuS results are again based on 1,000.

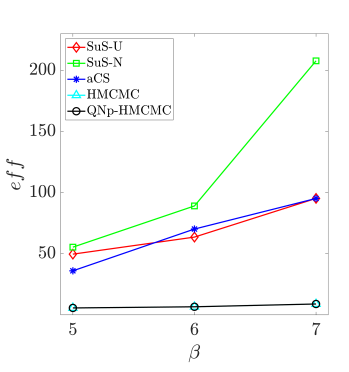

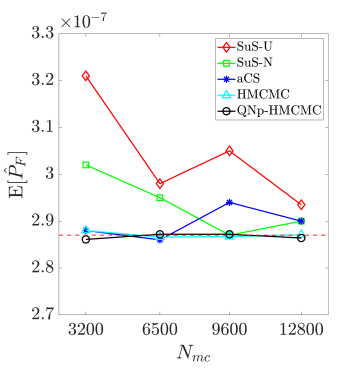

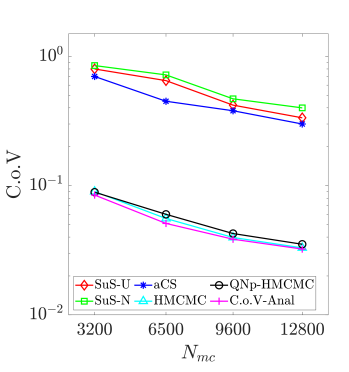

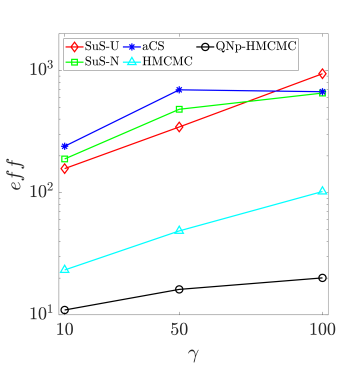

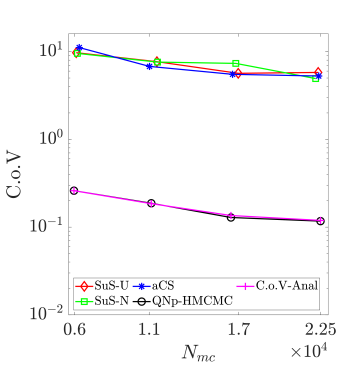

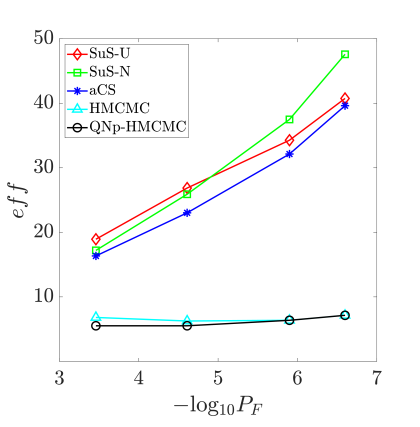

As reported in Table˜7, the HMCMC-based approaches exhibit for all probability levels accurate and stable results in terms of C.o.V., outperforming all SuS results. Defining the "unit C.o.V" eff as C.o.V = eff, with the total number of model calls, an index can now be used that appropriately considers both accuracy and computational effort [76], with a lower eff value exhibiting higher efficiency, of course. Fig.˜10a displays the eff variation in relation to the reliability index for , confirming the HMCMC approaches efficiency for various failure probability levels. SuS-U and SuS-N in the figure stand for the SuS results with uniform and standard normal proposals, respectively. Fig.˜10b showcases the mean estimate for and , based on the number of model calls. As shown, the HMCMC methods provide a consistent unbiased estimator after a certain, relatively small, number of model evaluations. Finally, in Fig.˜10c a similar plot is provided for the C.o.V results, with the proposed HMCMC-based framework exhibiting again excellent overall performance. The CoV-Anal curve in the figure represents the QNp-HMCMC C.o.V estimation based on the analytical expression in Eq.˜26.

| 500 Independent Simulations | CWMH-SuS | aCS-SuS | HMCMC | QNp-HMCMC | |||

| 100 | Number of total model calls | 6,418 | 6,443 | 6,409 | 2,225 | 2,225 | |

| C.o.V | 0.62 | 0.69 | 0.45 | 0.120.12 | 0.120.11 | ||

| (Ref. 2.87E-7) | 2.97E-7 | 2.94E-7 | 2.86E-7 | 2.86E-7 | 2.87E-7 | ||

| 100 | Number of total model calls | 8,711 | 8,798 | 9,279 | 2,226 | 2,228 | |

| C.o.V | 0.62 | 0.95 | 0.58 | 0.140.13 | 0.140.13 | ||

| (Ref. 0.99E-9) | 1.05E-9 | 1.01E-9 | 1.03E-9 | 0.98E-9 | 0.99E-9 | ||

| 100 | Number of total model calls | 11,458 | 11,473 | 11,922 | 2,736 | 2,735 | |

| C.o.V | 0.89 | 1.94 | 0.77 | 0.170.16 | 0.170.16 | ||

| (Ref. 1.28E-12) | 1.36E-12 | 1.32E-12 | 1.31E-12 | 1.28E-12 | 1.28E-12 | ||

| 500 | Number of total model calls | 8,760 | 8,808 | 9,271 | 5,439 | 5,532 | |

| C.o.V | 0.67 | 1.05 | 0.60 | 0.250.19 | 0.240.19 | ||

| (Ref. 0.99E-9) | 1.01E-9 | 1.01E-9 | 1.04E-9 | 1.00E-9 | 0.99E-9 | ||

| 500 | Number of total model calls | 11,334 | 11,870 | 11,908 | 5,634 | 5,583 | |

| C.o.V | 0.92 | 2.25 | 0.82 | 0.270.25 | 0.300.24 | ||

| (Ref. 1.28E-12) | 1.45E-12 | 1.15E-12 | 1.39E-12 | 1.16E-12 | 1.16E-12 | ||

6.7 Example 7: High-dimensional problem with quadratic nonlinearity

This example involves a quadratic limit-state function expressed in the standard normal space, as:

| (34) |

where is the problem dimension, defines the level of the failure probability, and affects the level of nonlinearity. To investigate the effect of dimensionality and nonlinearity, in relation to the HMCMC-based methods and SuS performance, different values for and are studied. Table˜8 presents the mean number of limit-state function evaluations, sample mean estimator, and C.o.V of the failure probabilities for various methods. For the HMCMC-based methods, the likelihood dispersion factor, , and the trajectory length, , are shown in Table˜8, and the burn-in sample size is set to 500 samples. The Subset Simulation results for all approaches are based on 2,000.

As seen in Table˜8, the QNp-HMCMC approach significantly outperforms all other methods, in all considered metrics, confirming its superiority, robustness, applicability and suitability in challenging, nonlinear, high-dimensional problems. By increasing the level of nonlinearity, through the parameter , HMCMC requires an excessive number of model calls in order to adequately explore the domain, while the QNp-HMCMC approach exhibits a largely invariant performance.

| 500 Independent Simulations | CWMH-SuS | aCS-SuS | HMCMC | QNp-HMCMC | |||

| 100 | Number of total model calls | 12,109 | 12,192 | 12,093 | 21,198 | 4,695 | |

| C.o.V | 1.43 | 1.71 | 2.18 | 0.160.15 | 0.160.15 | ||

| (Ref. 1.15E-6) | 1.22E-6 | 1.31E-6 | 1.23E-6 | 1.16E-6 | 1.16E-6 | ||

| 100 | Number of total model calls | 13,758 | 14,200 | 14,442 | 53,664 | 5,924 | |

| C.o.V | 2.94 | 4.04 | 5.78 | 0.210.21 | 0.210.21 | ||

| (Ref. 5.63E-7) | 4.29E-7 | 4.70E-7 | 5.86E-7 | 5.69E-7 | 5.63E-7 | ||

| 100 | Number of total model calls | 14,697 | 15,849 | 14,171 | 154,440 | 5,956 | |

| C.o.V | 7.76 | 5.19 | 5.63 | 0.260.26 | 0.260.26 | ||

| (Ref. 2.23E-6) | 2.40E-6 | 1.59E-6 | 2.83E-6 | 2.26E-6 | 2.24E-6 | ||

| 200 | Number of total model calls | 11,785 | 12,098 | 13,872 | 78,414 | 6,510 | |

| C.o.V | 3.57 | 3.67 | 3.43 | 0.250.25 | 0.250.24 | ||

| (Ref. 5.06E-6) | 5.89E-6 | 5.33E-6 | 4.62E-6 | 5.08E-6 | 5.05E-6 | ||

| 200 | Number of total model calls | 17,271 | 19,370 | 17,805 | 297,596 | 8,575 | |

| C.o.V | 8.09 | 11.85 | 6.64 | 0.310.31 | 0.290.29 | ||

| (Ref. 1.19E-6) | 1.36E-6 | 1.40E-6 | 1.08E-6 | 1.18E-6 | 1.17E-6 | ||

In Fig.˜11a, the computed eff values with respect to for are reported, based on Table˜8 results, confirming the QNp-HMCMC efficiency and robust behavior in all considered cases of nonlinearity. Fig.˜11b and Fig.˜11c study the effect of the number of model calls () on the mean estimate and C.o.V values for the case of and . The QNp-HMCMC method exhibits the best results and significantly outperforms all other approaches, while its results also consistently improve with increased sample sizes. HMCMC results are not reported in these two figures because the needed number of model calls in order to get meaningful results is quite high for this high-dimensional nonlinear problem, deeming this approach non-competitive in this case, and further noting the superior QNp-HMCMC performance and suitability in challenging high-dimensional spaces. The CoV-Anal curve in Fig.˜11c again represents the QNp-HMCMC C.o.V estimation based on the analytical expression in Eq.˜26, showcasing excellent agreement with numerical results.

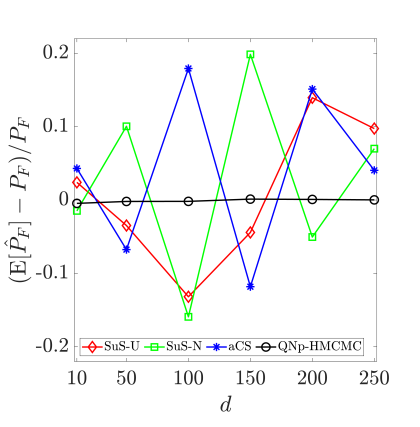

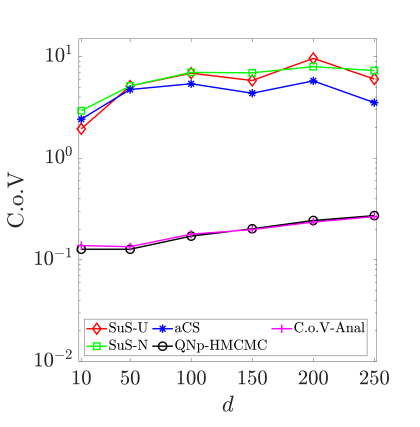

Finally, Fig.˜12 investigates the effect of the number of dimensions on the relative bias and C.o.V of the estimates, for the cases. The threshold is adjusted to have a failure probability of around . Again here, HMCMC results are not reported since they require a quite higher number of limit-state function evaluations, after or so, than all other methods, to achieve meaningful and comparable results. The same number of model calls is used for all methods shown in Fig.˜12, approximately 11,000-12,000 total model calls, which differs from Table˜8 settings and results for the similar cases that are studied and reported there, and the results are based on 500 independent simulations. QNp-HMCMC results are shown to provide an essentially unbiased estimator and very low C.o.V. values for all analyzed cases, in contrast to SuS results, reporting very high C.o.V. values. The analytical C.o.V expression in Eq.˜26 is also here in very good agreement with numerical results.

6.8 Example 8: High-dimensional highly nonlinear problem

To further investigate the QNp-HMCMC performance in challenging high-dimensional nonlinear problems, the limit-state function in this example is expressed in the standard normal space, as:

| (35) |

where is the problem dimension, equal to 100. The parameters for the HMCMC approaches are chosen as , and 500 burn-in samples. Table˜9 summarizes the computed results for various probability levels, acquired by adjusting the threshold value, and as shown the QNp-HMCMC approach once more achieves excellent results, superior to all other methods. In Fig.˜13a the eff metric is accordingly reported for the three different failure probability levels in Table˜9, with QNp-HMCMC exhibiting the best and stable performance, with very significant efficiency gains in relation to other methods, and particularly for decreasing failure probability levels.

| 500 Independent Simulations | CWMH-SuS | aCS-SuS | HMCMC | QNp-HMCMC | ||

|---|---|---|---|---|---|---|

| Number of total model calls | 9,380 | 9,593 | 10,593 | 28,026 | 7,295 | |

| C.o.V | 0.86 | 1.13 | 0.86 | 0.220.21 | 0.230.21 | |

| (Ref. 3.40E-5 ) | 3.38E-5 | 3.26E-5 | 3.62E-5 | 3.37E-5 | 3.41E-5 | |

| Number of total model calls | 12,948 | 13,704 | 13,965 | 32,009 | 7,924 | |

| C.o.V | 2.60 | 3.27 | 2.25 | 0.230.22 | 0.220.22 | |

| (Ref. 7.96E-7) | 8.26E-7 | 7.32E-7 | 7.99E-7 | 7.97E-7 | 7.96E-7 | |

| Number of total model calls | 17,304 | 17,793 | 17,974 | 31,948 | 7,889 | |

| C.o.V | 6.33 | 5.85 | 6.91 | 0.260.25 | 0.240.24 | |

| (Ref. 6.75E-9) | 8.84E-9 | 4.68E-9 | 7.32E-9 | 6.93E-9 | 6.86E-9 | |

6.9 Example 9: A thirty four-story structural example

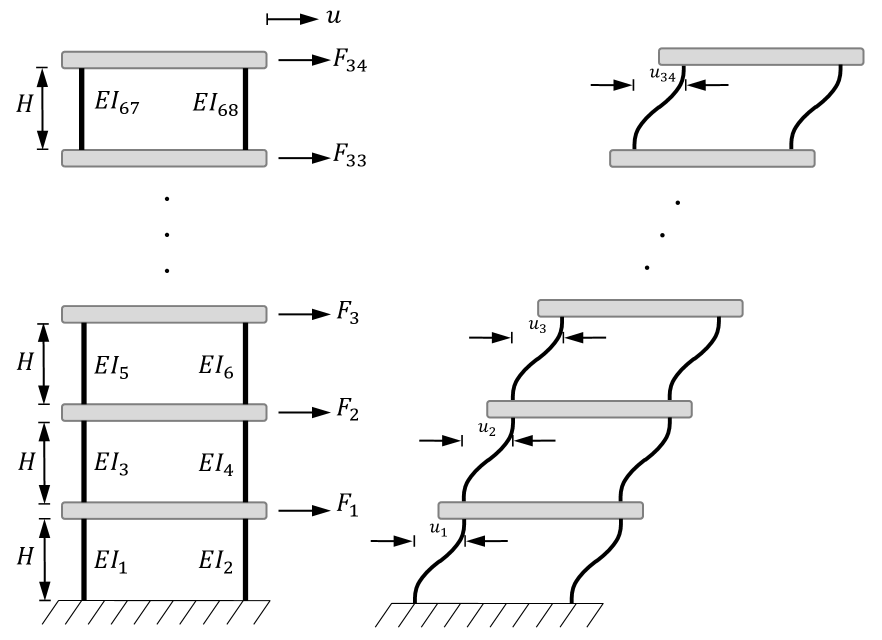

A thirty four-story structure is analyzed in this modified example from [77], as represented in Fig.˜14, and is subjected to thirty four static loads . The floor slabs/beams are assumed to be rigid and all columns have identical length, = 4 , and different flexural stiffnesses . Loads and stiffnesses are random variables and the total number of random variables is in this problem. The loads are assumed normally distributed, with a mean value of 2 and a C.o.V of 0.4, while stiffnesses are also normally distributed, with a mean value of 20 and a C.o.V of 0.2. Based on linear elastic behavior and excluding gravity effects, the top story displacement, , can be calculated by adding the interstory relative displacements, , as:

| (36) | ||||

and is the used limit-state function indicating failure when u exceeds a threshold value , chosen as 0.21, 0.22, 0.23 and 0.235 m. All random variables are transformed into the standard normal space for the analysis, and for the HMCMC-based methods the likelihood dispersion factor, , equals 0.3, and the burn-in samples are 400. In this example, 0<g(0)< 2, and as discussed in Section˜5, we perform the scaling with .

|

|

500 Independent Simulations | CWMH-SuS | aCS-SuS | HMCMC | QNp-HMCMC | |

|---|---|---|---|---|---|---|

| Number of total model calls | 7,400 | 7,400 | 7,400 | 2,741 | 2,520 | |

| C.o.V | 0.22 | 0.20 | 0.19 | 0.130.12 | 0.110.11 | |

| (Ref. 3.47E-4) | 3.56E-4 | 3.46E-4 | 3.39E-4 | 3.45E-4 | 3.47E-4 | |

| Number of total model calls | 9,200 | 9,200 | 9,200 | 2,723 | 2,519 | |

| C.o.V | 0.28 | 0.27 | 0.24 | 0.120.11 | 0.110.10 | |

| (Ref. 2.48E-5) | 2.52E-5 | 2.54E-5 | 2.42E-5 | 2.48E-5 | 2.48E-5 | |

| Number of total model calls | 11,468 | 11,475 | 11,470 | 2,819 | 2,819 | |

| C.o.V | 0.32 | 0.35 | 0.30 | 0.120.11 | 0.120.10 | |

| (Ref. 1.26E-6) | 1.27E-6 | 1.30E-6 | 1.22E-6 | 1.26E-6 | 1.26E-6 | |

| Number of total model calls | 12,804 | 12,836 | 12,815 | 3,019 | 3,019 | |

| C.o.V | 0.36 | 0.42 | 0.35 | 0.130.11 | 0.130.11 | |

| (Ref. 2.56E-7) | 2.62E-7 | 2.53E-7 | 2.41E-7 | 2.52E-7 | 2.50E-7 | |

Table˜10 summarizes all computed results, that once more validate the outstanding performance of the proposed HMCMC-based framework, particularly in high-dimensional, very low target probability problems. In Fig.˜13b, these findings are further supported by the reported eff metric for the four considered failure probability levels in Table˜10, showcasing again important advantages in relation to other methods.

7 Conclusions

A novel approach for estimation of rare event and failure probabilities, termed Approximate Sampling Target with Post-processing Adjustment (ASTPA), is presented in this paper, suitable for low- and high-dimensional problems, very small probabilities, and multiple failure modes. ASTPA can provide an accurate, unbiased probability estimation with an efficient number of limit-state function evaluations. The basic idea of ASTPA is to construct a relevant target distribution by weighting the high-dimensional random variable space through a likelihood model, using the limit-state function. Although this framework is general, to sample from this target distribution we utilize gradient-based Hamiltonian MCMC schemes in this work, including our newly developed Quasi-Newton based mass preconditioned HMCMC algorithm (QNp-HMCMC) that can sample very adeptly, particularly in difficult cases with high-dimensionality and very small rare event probabilities. Finally, an original post-sampling step is also devised, using the introduced inverse importance sampling procedure, based on the samples. The performance of the proposed methodology is carefully examined and compared very successfully against Subset Simulation in a series of low- and high-dimensional problems. As a general guideline, QNp-HMCMC is recommended to be used for problems with more than 20 dimensions, where traditional HMCMC schemes may not perform that well. However, even in lower dimensions QNp-HMCMC performs extremely well and is still a very competitive algorithm. Since we are utilizing gradient-based sampling methods in this work, all of our analyses and results are based on the fact that analytical gradients can be computed. In cases where numerical schemes are needed for the gradient evaluations, then HMCMC methods will not be competitive in relation to Subset Simulation. It should also be pointed out that different feature combinations of the HMCMC and QNp-HMCMC algorithms can be possible, based on problem-specific characteristics. Some of the ongoing and future works are directed toward exploring various ASTPA variants, sampling directly from non-Gaussian spaces, without the need for Gaussian transformations, and estimating high-dimensional first-passage problems under various settings [78, 79].

Acknowledgements

This material is based upon work partially supported by the U.S. National Science Foundation under CAREER Grant No. 1751941. The authors would also like to thank Prof. Dr. Daniel Straub and Dr. Iason Papaioannou at the Technical University of Munich, for very fruitful scientific discussions in relation to the presented formulation, and particularly for their helpful insights on the construction of the target distribution.

References

- [1] R. E. Melchers and A. T. Beck, Structural Reliability Analysis and Prediction. Wiley, 2018.

- [2] O. Ditlevsen and H. O. Madsen, Structural Reliability Methods. Department of Mechanical Engineering, Technical University of Denmark, 2007. Available at http://od-website.dk/books/OD-HOM-StrucRelMeth-Ed2.3.7.pdf.

- [3] S.-K. Au and Y. Wang, Engineering Risk Assessment with Subset Simulation. Wiley, 2014.

- [4] E. Nikolaidis, D. Ghiocel, and S. Singhal, Engineering Design Reliability Handbook. CRC press, 2005.

- [5] M. Lemaire, A. Chateauneuf, and J. Mitteau, Structural Reliability. Wiley, 2009.

- [6] R. Rackwitz, “Reliability analysis—A review and some perspectives,” Structural Safety, vol. 23, no. 4, pp. 365–395, 2001.

- [7] K. Breitung, “40 years FORM: Some new aspects?,” Probabilistic Engineering Mechanics, vol. 42, pp. 71–77, 2015.

- [8] A. Der Kiureghian and P.-L. Liu, “Structural reliability under incomplete probability information,” Journal of Engineering Mechanics, vol. 112, no. 1, pp. 85–104, 1986.

- [9] O. Ditlevsen and P. Bjerager, “Methods of structural systems reliability,” Structural Safety, vol. 3, no. 3-4, pp. 195–229, 1986.

- [10] M. Shinozuka, “Basic analysis of structural safety,” Journal of Structural Engineering, vol. 109, no. 3, pp. 721–740, 1983.

- [11] K. Breitung, “Asymptotic approximations for multinormal integrals,” Journal of Engineering Mechanics, vol. 110, no. 3, pp. 357–366, 1984.

- [12] P.-L. Liu and A. Der Kiureghian, “Optimization algorithms for structural reliability,” Structural Safety, vol. 9, no. 3, pp. 161–177, 1991.

- [13] T. Haukaas and A. Der Kiureghian, “Strategies for finding the design point in non-linear finite element reliability analysis,” Probabilistic Engineering Mechanics, vol. 21, no. 2, pp. 133–147, 2006.

- [14] M. Valdebenito, H. Pradlwarter, and G. Schuëller, “The role of the design point for calculating failure probabilities in view of dimensionality and structural nonlinearities,” Structural Safety, vol. 32, no. 2, pp. 101–111, 2010.

- [15] G. Schuëller and H. Pradlwarter, “Benchmark study on reliability estimation in higher dimensions of structural systems–an overview,” Structural Safety, vol. 29, no. 3, pp. 167–182, 2007.

- [16] I. Papaioannou, W. Betz, K. Zwirglmaier, and D. Straub, “MCMC algorithms for Subset Simulation,” Probabilistic Engineering Mechanics, vol. 41, pp. 89–103, 2015.

- [17] K. M. Zuev and L. S. Katafygiotis, “Modified Metropolis–Hastings algorithm with delayed rejection,” Probabilistic Engineering Mechanics, vol. 26, no. 3, pp. 405–412, 2011.

- [18] K. M. Zuev, J. L. Beck, S.-K. Au, and L. S. Katafygiotis, “Bayesian post-processor and other enhancements of Subset Simulation for estimating failure probabilities in high dimensions,” Computers & Structures, vol. 92, pp. 283–296, 2012.

- [19] S.-K. Au and E. Patelli, “Rare event simulation in finite-infinite dimensional space,” Reliability Engineering & System Safety, vol. 148, pp. 67–77, 2016.

- [20] Z. Wang, M. Broccardo, and J. Song, “Hamiltonian Monte Carlo methods for Subset Simulation in reliability analysis,” Structural Safety, vol. 76, pp. 51–67, 2019.

- [21] S.-K. Au and J. L. Beck, “Estimation of small failure probabilities in high dimensions by Subset Simulation,” Probabilistic Engineering Mechanics, vol. 16, no. 4, pp. 263–277, 2001.

- [22] S.-K. Au and J. L. Beck, “A new adaptive importance sampling scheme for reliability calculations,” Structural Safety, vol. 21, no. 2, pp. 135–158, 1999.

- [23] S.-K. Au and J. L. Beck, “Important sampling in high dimensions,” Structural Safety, vol. 25, no. 2, pp. 139–163, 2003.

- [24] I. Papaioannou, C. Papadimitriou, and D. Straub, “Sequential importance sampling for structural reliability analysis,” Structural Safety, vol. 62, pp. 66–75, 2016.

- [25] R. Y. Rubinstein and D. P. Kroese, The cross-entropy method: A unified approach to Monte Carlo simulation, randomized optimization and machine learning. Information Science & Statistics, Springer, 2004.

- [26] N. Kurtz and J. Song, “Cross-entropy-based adaptive importance sampling using Gaussian mixture,” Structural Safety, vol. 42, pp. 35–44, 2013.

- [27] S. Geyer, I. Papaioannou, and D. Straub, “Cross entropy-based importance sampling using Gaussian densities revisited,” Structural Safety, vol. 76, pp. 15–27, 2019.

- [28] D. Y. Yang, J. Teng, and D. M. Frangopol, “Cross-entropy-based adaptive importance sampling for time-dependent reliability analysis of deteriorating structures,” Structural Safety, vol. 66, pp. 38–50, 2017.

- [29] I. Papaioannou, S. Geyer, and D. Straub, “Improved cross entropy-based importance sampling with a flexible mixture model,” Reliability Engineering & System Safety, vol. 191, p. 106564, 2019.

- [30] P. Koutsourelakis, H. Pradlwarter, and G. Schuëller, “Reliability of structures in high dimensions, Part I: Algorithms and applications,” Probabilistic Engineering Mechanics, vol. 19, no. 4, pp. 409–417, 2004.

- [31] H. Pradlwarter, G. Schueller, P. Koutsourelakis, and D. Charmpis, “Application of line sampling simulation method to reliability benchmark problems,” Structural Safety, vol. 29, no. 3, pp. 208–221, 2007.

- [32] P. Bjerager, “Probability integration by directional simulation,” Journal of Engineering Mechanics, vol. 114, no. 8, pp. 1285–1302, 1988.

- [33] J. Nie and B. R. Ellingwood, “Directional methods for structural reliability analysis,” Structural Safety, vol. 22, no. 3, pp. 233–249, 2000.

- [34] C. Andrieu-Renaud, B. Sudret, and M. Lemaire, “The PHI2 method: A way to compute time-variant reliability,” Reliability Engineering & System Safety, vol. 84, no. 1, pp. 75–86, 2004.

- [35] C. Bucher, “Asymptotic sampling for high-dimensional reliability analysis,” Probabilistic Engineering Mechanics, vol. 24, no. 4, pp. 504–510, 2009.

- [36] A. Naess, B. Leira, and O. Batsevych, “System reliability analysis by enhanced Monte Carlo simulation,” Structural Safety, vol. 31, no. 5, pp. 349–355, 2009.

- [37] S. Tong and G. Stadler, “Large deviation theory-based adaptive importance sampling for rare events in high dimensions,” arXiv preprint arXiv:2209.06278, 2022.

- [38] A. M. Johansen, P. Del Moral, and A. Doucet, “Sequential Monte Carlo samplers for rare events,” in 6th International Workshop on Rare Event Simulation, pp. 256–267, 2006.

- [39] F. Cérou, P. Del Moral, T. Furon, and A. Guyader, “Sequential Monte Carlo for rare event estimation,” Statistics and Computing, vol. 22, no. 3, pp. 795–808, 2012.

- [40] P. Del Moral, A. Doucet, and A. Jasra, “Sequential Monte Carlo samplers,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), vol. 68, no. 3, pp. 411–436, 2006.

- [41] P. Del Moral and J. Garnier, “Genealogical particle analysis of rare events,” The Annals of Applied Probability, vol. 15, no. 4, pp. 2496–2534, 2005.

- [42] F. Cérou, P. Del Moral, F. Le Gland, and P. Lezaud, “Genetic genealogical models in rare event analysis,” Alea, vol. 1, p. 181–203, 2006.

- [43] A. Guyader, N. Hengartner, and E. Matzner-Løber, “Simulation and estimation of extreme quantiles and extreme probabilities,” Applied Mathematics & Optimization, vol. 64, no. 2, pp. 171–196, 2011.

- [44] C. Walter, “Moving particles: A parallel optimal multilevel splitting method with application in quantiles estimation and meta-model based algorithms,” Structural Safety, vol. 55, pp. 10–25, 2015.

- [45] R. J. Allen, C. Valeriani, and P. R. ten Wolde, “Forward flux sampling for rare event simulations,” Journal of Physics: Condensed Matter, vol. 21, no. 46, p. 463102, 2009.

- [46] S. Brooks, A. Gelman, G. Jones, and X.-L. Meng, Handbook of Markov Chain Monte Carlo. CRC press, 2011.

- [47] N. Metropolis, A. W. Rosenbluth, M. N. Rosenbluth, A. H. Teller, and E. Teller, “Equation of state calculations by fast computing machines,” The Journal of Chemical Physics, vol. 21, no. 6, pp. 1087–1092, 1953.

- [48] S. Geman and D. Geman, “Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images,” IEEE Transactions on Pattern Analysis and Machine Intelligence, no. 6, pp. 721–741, 1984.

- [49] S. Duane, A. D. Kennedy, B. J. Pendleton, and D. Roweth, “Hybrid Monte Carlo,” Physics Letters B, vol. 195, no. 2, pp. 216–222, 1987.

- [50] R. M. Neal, Bayesian Learning for Neural Networks. Springer, 1995.

- [51] R. M. Neal, “MCMC using Hamiltonian dynamics.,” arXiv preprint arXiv:1206.1901, 2012.

- [52] A. Beskos, N. Pillai, G. Roberts, J.-M. Sanz-Serna, and A. Stuart, “Optimal tuning of the hybrid Monte Carlo algorithm,” Bernoulli, vol. 19, no. 5A, pp. 1501–1534, 2013.

- [53] M. Betancourt, “A conceptual introduction to Hamiltonian Monte Carlo,” arXiv preprint arXiv:1701.02434, 2017.

- [54] B. Carpenter, A. Gelman, M. D. Hoffman, D. Lee, B. Goodrich, M. Betancourt, M. Brubaker, J. Guo, P. Li, and A. Riddell, “Stan: A probabilistic programming language,” Journal of Statistical Software, vol. 76, no. 1, 2017.

- [55] G. O. Roberts and J. S. Rosenthal, “Optimal scaling for various Metropolis-Hastings algorithms,” Statistical Science, vol. 16, no. 4, pp. 351–367, 2001.

- [56] G. O. Roberts and J. S. Rosenthal, “Optimal scaling of discrete approximations to Langevin diffusions,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), vol. 60, no. 1, pp. 255–268, 1998.

- [57] M. Girolami and B. Calderhead, “Riemann manifold Langevin and Hamiltonian Monte Carlo methods,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), vol. 73, no. 2, pp. 123–214, 2011.

- [58] W. Chen, Z. Wang, M. Broccardo, and J. Song, “Riemannian Manifold Hamiltonian Monte Carlo based subset simulation for reliability analysis in non-Gaussian space,” Structural Safety, vol. 94, p. 102134, 2022.

- [59] A. Griewank, “On automatic differentiation,” Mathematical Programming: recent developments and applications, vol. 6, no. 6, pp. 83–107, 1989.

- [60] T. Chen, E. Fox, and C. Guestrin, “Stochastic gradient Hamiltonian Monte Carlo,” in International Conference on Machine Learning, pp. 1683–1691, 2014.

- [61] M. D. Hoffman and A. Gelman, “The No-U-turn sampler: Adaptively setting path lengths in Hamiltonian Monte Carlo,” Journal of Machine Learning Research, vol. 15, no. 1, pp. 1593–1623, 2014.

- [62] M. Hohenbichler and R. Rackwitz, “Non-normal dependent vectors in structural safety,” Journal of the Engineering Mechanics Division, vol. 107, no. 6, pp. 1227–1238, 1981.

- [63] R. Lebrun and A. Dutfoy, “An innovating analysis of the Nataf transformation from the copula viewpoint,” Probabilistic Engineering Mechanics, vol. 24, no. 3, pp. 312–320, 2009.

- [64] H. Nikbakht and K. G. Papakonstantinou, “A direct Hamiltonian MCMC approach for reliability estimation,” in 3rd International Conference on Uncertainty Quantification in Computational Sciences and Engineering, 2019.

- [65] B. J. Alder and T. E. Wainwright, “Studies in molecular dynamics. I. General method,” The Journal of Chemical Physics, vol. 31, no. 2, pp. 459–466, 1959.

- [66] Z. Wang, S. Mohamed, and N. Freitas, “Adaptive Hamiltonian and Riemann manifold Monte Carlo,” in International Conference on Machine Learning, pp. 1462–1470, 2013.

- [67] C. P. Andriotis, K. G. Papakonstantinou, and V. K. Koumousis, “Nonlinear programming hybrid beam-column element formulation for large-displacement elastic and inelastic analysis,” Journal of Engineering Mechanics, vol. 144, no. 10, p. 04018096, 2018.

- [68] J. Nocedal and S. J. Wright, “Numerical optimization,” Springer, 2006.

- [69] Y. Zhang and C. A. Sutton, “Quasi-Newton methods for Markov Chain Monte Carlo,” in Advances in Neural Information Processing Systems, pp. 2393–2401, 2011.

- [70] T. Fu, L. Luo, and Z. Zhang, “Quasi-Newton Hamiltonian Monte Carlo,” in Uncertainty in Artificial Intelligence, 2016.

- [71] G. McLachlan and D. Peel, Finite mixture models. Wiley, 2000.

- [72] E. R. A. group, “Subset Simulation (SuS) with the adaptive Conditional Sampling (aCS) approach, Matlab code,” 2018. Technical University of Munich. Available at https://www.bgu.tum.de/era/software/software00/subset-simulation/.

- [73] A. Der Kiureghian and T. Dakessian, “Multiple design points in first and second-order reliability,” Structural Safety, vol. 20, no. 1, pp. 37–49, 1998.

- [74] D. M. Himmelblau, Applied nonlinear programming. McGraw-Hill Companies, 1972.

- [75] X. Du, “First order and second reliability methods,” tech. rep., University of Missouri – Rolla, 2005.

- [76] S.-K. Au, J. Ching, and J. L. Beck, “Application of Subset Simulation methods to reliability benchmark problems,” Structural Safety, vol. 29, no. 3, pp. 183–193, 2007.

- [77] C. Bucher, Computational analysis of randomness in structural mechanics: Structures and infrastructures book series, vol. 3. CRC Press, 2009.

- [78] K. G. Papakonstantinou, E. Eshra, and H. Nikbakht, “Hamiltonian MCMC based framework for time-variant rare event uncertainty quantification,” in 14th International Conference on Applications of Statistics and Probability in Civil Engineering (ICASP), Dublin, Ireland, 2023.

- [79] K. G. Papakonstantinou, H. Nikbakht, and E. Eshra, “Quasi-Newton Hamiltonian MCMC sampling for reliability estimation in high-dimensional non-Gaussian spaces,” in 13th International Conference on Structural Safety & Reliability (ICOSSAR), Shanghai, China, 2022.