11email: 1027572886a@gmail.com

11email: {gaoliangcai,yanzuoyu,pengshuaipku}@pku.edu.cn 22institutetext: Huawei AI Application Research Center

22email: {dulin09,zhangziyin1}@huawei.com

Handwritten Mathematical Expression Recognition with Bidirectionally Trained Transformer

Abstract

Encoder-decoder models have made great progress on handwritten mathematical expression recognition recently. However, it is still a challenge for existing methods to assign attention to image features accurately. Moreover, those encoder-decoder models usually adopt RNN-based models in their decoder part, which makes them inefficient in processing long LaTeX sequences. In this paper, a transformer-based decoder is employed to replace RNN-based ones, which makes the whole model architecture very concise. Furthermore, a novel training strategy is introduced to fully exploit the potential of the transformer in bidirectional language modeling. Compared to several methods that do not use data augmentation, experiments demonstrate that our model improves the ExpRate of current state-of-the-art methods on CROHME 2014 by 2.23%. Similarly, on CROHME 2016 and CROHME 2019, we improve the ExpRate by 1.92% and 2.28% respectively.

Keywords:

handwritten mathematical expression recognition transformer bidirection encoder-decoder model1 Introduction

The encoder-decoder models have shown quite effective performance on various tasks such as scene text recognition [6] and image captioning [25]. Handwritten Mathematical Expression Recognition (HMER) aims to generate the math expression LaTeX sequence according to the handwritten math expression image. Since HMER is also an image to text modeling task, many encoder-decoder models [36, 34] have been proposed for it in recent years.

However, existing methods suffer from the lack of coverage problem [36] to varying degrees. This problem refers to two possible manifestations: over-parsing and under-parsing. Over-parsing means that some regions of HME image are redundantly translated multiple times, while under-parsing denotes that some regions remain untranslated.

Most encoder-decoder models are RNN-based models, which have difficulty modeling the relationship between two symbols that are far apart. Previous study [2] had noted this long-term dependency problem caused by gradient vanishing. This problem is exposed more obviously in HMER task. Compared to traditional natural language processing, LaTeX is a markup language designed by human, and thus has a clearer and more distinct syntactic structure, e.g., “{” and “}” are bound to appear in pairs. When dealing with long LaTeX sequences, it is difficult for RNN-based models to capture the relationship between two distant “{” and “}” symbol, resulting in lack of awareness of the LaTeX syntax specification.

Traditional autoregressive models [36, 34] use left-to-right (L2R) direction to predict symbols one by one in the inference phase. Such approaches may generate unbalanced outputs [15], which prefixes are usually more accurate than suffixes. To overcome this problem, existing study [15] employ two independent decoders, trained for left-to-right and right-to-left directions, respectively. This usually leads to more parameters and longer training time. Therefore, an intuitive attempt is to adapt a single decoder for bi-directional language modeling.

In this paper, we employ the transformer [22] decoder into HMER task, alleviating the lack of coverage problem [36] by using positional encodings. Besides, a novel bidirectional training strategy is proposed to obtain a Bidirectionally Trained TRansformer (BTTR) model. The strategy enables single transformer decoder to perform both L2R and R2L decoding. We further show that our BTTR model outperforms RNN-based ones in terms of both training parallelization and inferencing accuracy. The main contributions of our work are summarized as follows:

-

–

To the best of our knowledge, it is the first attempt to use end-to-end trained transformer decoder for solving HMER task.

-

–

The combination of image and word positional encodings enable each time step to accurately assign attention to different regions of input image, alleviating the lack of coverage problem.

-

–

A novel bidirectional training strategy is proposed to perform bidirectional language modeling in a single transformer decoder.

- –

-

–

We make our code available on the GitHub.111https://github.com/Green-Wood/BTTR

2 Related Work

2.1 HMER Methods

In the last decades, many approaches [4, 32, 37, 27, 13, 29, 28] related to HMER have been proposed. These approaches can be divided into two categories: grammar-based and encoder-decoder based. In this section, we will briefly review the related work in both categories.

2.1.1 Grammar Based

These methods usually consist of three parts: symbol segmentation, symbol recognition, and structural analysis. Researchers have proposed a variety of predefined grammars to solve HMER task, such as stochastic context-free grammars [1], relational grammars [16], and definite clause grammars [5, 3]. None of these grammar rules are data-driven, but are hand-designed, which could not benefit from large dataset.

2.1.2 Encoder-Decoder Based

In recent years, a series of encoder-decoder models have been widely used in various tasks [30, 31, 26]. In HMER tasks, Zhang et al. [36] observed the lack of coverage problem and proposed WAP model to solve the HMER task. In the subsequent studies, DenseWAP [34] replaced VGG encoder in the WAP with DenseNet [11] encoder, and improved the performance. Further, DenseWAP-TD [35] enhanced the model’s ability to handle complex formulas by substituting string decoder with a tree decoder. Wu et al. [24] used stroke information and formulated the HMER as a graph-to-graph(G2G) modeling task. Such encoder-decoder based models have achieved outstanding results in several CROHME competitions [18, 19, 17].

2.2 Transformer

Transformer [22] is a neural network architecture based solely on attention mechanisms. Its internal self-attention mechanism makes transformer a breakthrough compared to RNN in two aspects. Firstly, transformer does not need to depend on the state of the previous step as RNN does. Well-designed parallelization allows transformer to save a lot of time in the training phase. Secondly, tokens in the same sequence establish direct one-to-one connections through the self-attention mechanism. Such a mechanism fundamentally solves the gradient vanishing problem of RNN [2], making transformer more suitable than RNN on long sequences. In recent years, RNN is replaced by transformer in various tasks in computer vision and natural language processing [20, 8].

Recently, transformer has been used in offline handwritten text recognition. Kang et al. [14] first adopted transformer networks for the handwritten text recognition task and achieved state-of-the-art performance. For the task of mathematical expression, “Univ. Linz” method in CROHME 2019 [17] used a Faster R-CNN detector and a transformer decoder. Two subsystems were trained separately.

2.3 Right-to-Left Language Modeling

To solve the problem that traditional autoregressive models can only perform left-to-right(L2R) language modeling, many studies have attempted right-to-left(R2L) language modeling. Liu et al. [15] trained a R2L model separately. During the inference phase, hypotheses from L2R and R2L models are re-ranked to produce the best candidate. Furthermore, Zhang et al. [38] used R2L model to regularize L2R model in the training phase to obtain a better L2R model. Zhou et al. [39] proposed SB-NMT that utilized a single decoder to generate sequences bidirectionally. However, all of these methods increase model complexity. On the other hand, our approach achieves bidirectional language modeling on a single decoder while keeping the model concise.

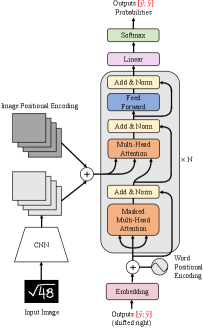

3 Methodology

In this section, we will detailed introduce the proposed BTTR model architecture, as illustrated in Fig. 1. In section 3.1, we will briefly describe the DenseNet [11] model used in the encoder part. In section 3.2 and 3.3, the positional encodings and transformer model used in the encoder and decoder part will be described in detail. Finally in section 3.4, we will introduce the proposed novel bidirectional training strategy, which allows to perform bidirectional language modeling in a single transformer decoder.

3.1 CNN Encoder

In the encoder part, DenseNet is used as the feature extractor for HME images. The main idea of DenseNet is to increase information flow between layers by introducing direct connections between each layer and all its subsequent layers. In this way, given output features from to layer, the output feature of layer can be computed by:

| (1) |

where denotes the concatenation operation of all the output features, and denotes a composite function of three consecutive layers: a batch normalization (BN) [12] layer, followed by a ReLU [9] layer and a convolution (Conv) layer.

Through concatenation operation in the channel dimension, DenseNet enables better propagation of gradient. The paper [11] states that by this dense connection, DenseNet can achieve better performance with fewer parameters compared to ResNet [10].

In addition to DenseNet, we also add a convolution layer in the encoder part to adjust the image feature dimension to the size of embedding dimension for subsequent processing.

3.2 Positional Encoding

The positional information of image features and word vectors can effectively help the model to identify regions that need to attend. In the previous studies [36, 34], although the RNN-based model inherently takes the order of word vectors into account, it neglects the positional information of image features.

In this paper, since the transformer model itself doesn’t have any sense of position for each input vector, we use two types of positional encodings to address this information. In detail, we use image positional encodings and word positional encodings to represent the image feature position and word vector position, respectively.

We refer to image features and word vector features as content-based, and the two types of positional encodings as position-based. As illustrated in Fig. 1, content-based and position-based features are summed up, as the input to transformer decoder.

3.2.1 Word Positional Encoding

Word positional encoding is basically the same as sinusoidal positional encoding proposed in the original transformer work [22]. Given position and dimension as input, the word positional encoding vector is defined as:

| (2) |

| (3) |

where is the index in dimension.

3.2.2 Image Positional Encoding

A 2-D normalized positional encoding is used to represent the image position features. We first compute sinusoidal positional encoding in each of the two dimensions and then concatenate them together. Given a 2-D position tuple and the same dimension as the word positional encoding, the image positional encoding vector is represented as:

| (4) |

| (5) |

where and are height and width of input images.

3.3 Transformer Decoder

For the decoder part, we use the standard transformer model [22]. Each basic transformer decoder layer module consists of four essential parts. In the following, we will describe the implementation details of these components.

3.3.1 Scaled Dot-Product Attention

This attention mechanism is essentially using the query to obtain the value from key-value pairs, based on the similarity between the query and key. The output matrix can be computed in parallel by the query , key , value , and dimension .

| (6) |

3.3.2 Multi-Head Attention

With multi-head mechanism, the scaled dot-product attention module can attend to feature-map from multiple representation subspaces jointly. With projection parameter matrices , we first project the query , key , and value into a subspace to compute the head .

| (7) |

Then all the heads are concatenated and projected with a parameter matrix and the number of heads .

| (8) |

3.3.3 Masked Multi-Head Attention

In the decoder part, due to the autoregressive property, the next symbol is predicted based on the input image and previously generated symbols. In the training phase, a lower triangle mask matrix is used to enable the self-attention module to restrict the attention region for each time step. Due to masked multi-head attention mechanism, the whole training process requires only one forward computation.

3.3.4 Position-wise Feed-Forward Network

Feed-Forward Network(FNN) consists of three operations: a linear transformation, a ReLU activation function and another linear transformation.

| (9) |

After multi-head attention, the information between positions has been fully exchanged. FFN enables each position to integrate its own internal information separately.

3.4 Bidirectional Training Strategy

First, two special symbols “” and “” are introduced in the dictionary to denote the start and end of the sequence. For the target LaTeX sequence , we denote the target sequence from left to right (L2R) as , and the right-to-left (R2L) target sequence as .

Conditioned on image and model parameter , the traditional autoregressive model need to compute the probability distribution:

| (10) |

where is the index in target sequence.

In this paper, since the transformer model itself does not actually care about the order of input symbols, we can use a single transformer decoder for bi-directional language modeling. Modeling both Eq. (10) and Eq. (11) at the same time.

| (11) |

To achieve this goal, a simple yet effective bidirectional training strategy is proposed, in which for each training sample, we generate two target sequences, L2R and R2L, from the target LaTeX sequence, and compute the training loss in the same batch (details in Section 4.2). Compared with unidirectional language modeling, our approach trains a model to perform bidirectional language modeling without sacrificing model conciseness. Experiment results in Section 5.3 verify the effectiveness of our bidirectional training strategy.

4 Implementation Details

4.1 Networks

In the encoder part, to make a fair comparison with the previous state-of-the-art method, we use the same DenseNet feature extractor as the DenseWAP model [34]. Specifically, three bottleneck layers are used in the backbone network and transition layers are added in between to reduce the number of feature-maps. In each bottleneck layer, we set the growth rate to , the depth of each block to , and the compression hyperpatameter of the transition layer to .

In the decoder part, we use the standard transformer model. We set the embedded dimension and model dimension to , the number of heads in the multi-head attention module to , the dimension of intermediate layers in the FFN to , and the number of transformer decoder layer to . The 0.3 dropout rate is used to prevent overfitting.

4.2 Training

Our training objective is to maximize the predicted probability of the ground truth symbols in Eq. (10) and Eq. (11), so we use the standard cross-entropy loss function to calculate the loss between the predicted probabilities w.r.t. the ground truth at each decoding position. Given the training sample , the objective function for optimization is shown as follows:

| (12) |

| (13) |

| (14) |

The model is trained from scratch using the Adadelta algorithm [33] with a weight decay of , , and . PyTorch framework is used to implement our model. The model is trained on four NVIDIA 1080Ti GPUs with GB memory.

4.3 Inferencing

In the inference phase, we aim to generate the most likely LaTeX sequence conditioned on the input image. Which can be fomulated as follows:

| (15) |

where denotes the input image and denotes the model parameter.

Unlike the training phase where a lower triangular mask matrix is used to generate the prediction for all time steps simultaneously. Since we have no ground truth of the previously predicted symbol, we can only predict symbols one by one until the “End” symbol appears or the predefined maximum length is reached.

Obviously, we cannot search for all possible sequences, thus a heuristic beam search is proposed to balance the computational cost with the quality of decoding. Further, taking advantage of the fact that our decoder is capable of bidirectional language modeling, approximate joint search [15] is used to improve the performance. The basic idea consists of three steps: (1) Firstly, a beam search is performed on L2R and R2L directions to obtain two k-best hypotheses.(2) Then, we reverse L2R hypotheses to R2L direction and R2L hypotheses to L2R direction and treat these hypotheses as ground truth to compute the loss values for each of them as in the training phase.(3) Finally, those loss values are added to their original hypothesis scores to obtain the final scores, which is then used to find the best candidate. In practice, we set beam size , the maximum length to 200, and length penalty .

5 Experiments

5.1 Datasets

We use the Competition on Recognition of Online Handwritten Mathematical Expressions (CROHME) benchmark, which currently is the largest dataset for handwritten mathematical expression to validate the proposed model. We use the same training dataset but different test datasets. The training set contains 8836 handwritten mathematical expressions, while the test sets of CROHME 2014/2016/2019 contain 986/1147/1199 expressions respectively.

In the CROHME dataset, each handwritten math expression is saved in a InkML file, which contains handwritten stroke trajectory information and ground truth in both MathML and LaTeX formats. We transform the handwritten stroke trajectory information in the InkML files to offline images in bitmap format for training and testing. With official evaluation tools provided by the CROHME 2019 [17] organizers and the ground truth in symLG format, we convert the predicted LaTeX sequences to symLG format and evaluate the performance.

5.2 Compare with state-of-the-art results

The results of some models on the CROHME 2014/2016/2019 datasets are shown in Table 1. To ensure the fairness of performance comparison, the methods we show all use only the officially provided 8836 training samples. Neither we nor the methods we compare use data augmentation.

We first provide results of three traditional handwritten mathematical formula recognition methods based on tree grammar in CROHME 2014 as the baseline, denoted as I, VI, and VII. For CROHME 2016, we provide the best performing “TOKYO” method as the baseline, which only used official training samples. For CROHME 2019 official methods, we provide “Univ. Linz” [17] method as the baseline. For Image-to-LaTeX methods, we use the previous state-of-the-art “WYGIWYS” [7], “PAL-v2” [23], “WAP” [36], “Weakly supervised WAP”(WS WAP) [21], “DenseWAP” [34] as well as the tree decoder-based “DenseWAP-TD” [35] method. The “Ours-Uni” and “Ours-Bi” methods denote the model trained using the vanilla transformer decoder and the model trained with the bidirectional training strategy on top of it.

In Table 1, by comparing “DenseWAP” with “Ours-Uni”, both are unidirectional string decoder based models, we can obtain 4.29% average performance improvement in ExpRate by simply replacing the RNN-based decoder with the vanilla transformer decoder.

Compared with other methods, our proposed BTTR model outperforms the previous state-of-the-art methods in nearly all metrics and is about 3.4% ahead of the “DenseWAP-TD” method in ExpRate, which explicitly encodes our prior knowledge about the LaTeX grammar through a tree decoder.

| Dataset | Model | ExpRate | StruRate | ||

| CROHME14 | I | 37.22 | 44.22 | 47.26 | - |

| VI | 25.66 | 33.16 | 35.90 | - | |

| VII | 26.06 | 33.87 | 38.54 | - | |

| WYGIWYS | 36.4 | - | - | - | |

| WAP | 40.4 | 56.1 | 59.9 | - | |

| DenseWAP | 43.0 | 57.8 | 61.9 | 63.2 | |

| PAL-v2 | 48.88 | 64.50 | 69.78 | - | |

| DenseWAP-TD | 49.1 | 64.2 | 67.8 | 68.6 | |

| WS WAP | 53.65 | - | - | - | |

| Ours-Uni | 48.17 | 59.63 | 63.29 | 65.01 | |

| Ours-Bi | 53.96 | 66.02 | 70.28 | 71.40 | |

| CROHME16 | TOKYO | 43.94 | 50.91 | 53.70 | 61.6 |

| WAP | 37.1 | - | - | - | |

| DenseWAP | 40.1 | 54.3 | 57.8 | 59.2 | |

| PAL-v2 | 49.61 | 64.08 | 70.27 | - | |

| DenseWAP-TD | 48.5 | 62.3 | 65.3 | 65.9 | |

| WS WAP | 51.96 | 64.34 | 70.10 | - | |

| Ours-Uni | 44.55 | 55.88 | 60.59 | 61.55 | |

| Ours-Bi | 52.31 | 63.90 | 68.61 | 69.40 | |

| CROHME19 | Univ. Linz | 41.49 | 54.13 | 58.88 | 60.02 |

| DenseWAP | 41.7 | 55.5 | 59.3 | 60.7 | |

| DenseWAP-TD | 51.4 | 66.1 | 69.1 | 69.8 | |

| Ours-Uni | 44.95 | 56.13 | 60.47 | 60.63 | |

| Ours-Bi | 52.96 | 65.97 | 69.14 | 70.06 |

5.3 Ablation Study

In Table 2, the first “IPE” column denotes whether to use image positional encoding or not. Secondly, the “Bi-Trained” column shows whether the bidirectional training strategy is used. On the “AJS” column, ✓ indicates the use of approximate joint search [15], while ✗ represents L2R search. The last “Ensemble” column denotes whether the ensemble method is used.

First, we can see that whether to use image positional encoding or not makes huge difference on the CROHME 2019 test set. This shows that image positional encoding improves the generalization ability of our model in different scales.

Comparing the \nth2 and the \nth3 rows in each dataset, we can see that the model trained using the bidirectional training strategy still outperforms the unidirectionally trained model by about 2.52% in ExpRate, though both of them using L2R search. This shows that while training a bidirectional language model, the bidirectional training strategy also helps the whole model to extract information from the images more comprehensively.

Further, using the properties of the bidirectional language model, we evaluate the decoding results of both L2R and R2L directions using approximate joint search, resulting in an improvement of about 4.68% in ExpRate.

Finally we report the results using the ensemble method, showing that this can significantly improve the overall recognition performance by about 3.35% in ExpRate. Specifically, We train five models initialized with different random seeds and average their prediction probabilities at each decoding step.

| Dataset | IPE | Bi-Trained | AJS | Ensemble | ExpRate |

| CROHME14 | ✗ | ✗ | ✗ | ✗ | |

| ✓ | ✗ | ✗ | ✗ | ||

| ✓ | ✓ | ✗ | ✗ | ||

| ✓ | ✓ | ✓ | ✗ | ||

| ✓ | ✓ | ✓ | ✓ | ||

| CROHME16 | ✗ | ✗ | ✗ | ✗ | |

| ✓ | ✗ | ✗ | ✗ | ||

| ✓ | ✓ | ✗ | ✗ | ||

| ✓ | ✓ | ✓ | ✗ | ||

| ✓ | ✓ | ✓ | ✓ | ||

| CROHME19 | ✗ | ✗ | ✗ | ✗ | |

| ✓ | ✗ | ✗ | ✗ | ||

| ✓ | ✓ | ✗ | ✗ | ||

| ✓ | ✓ | ✓ | ✗ | ||

| ✓ | ✓ | ✓ | ✓ |

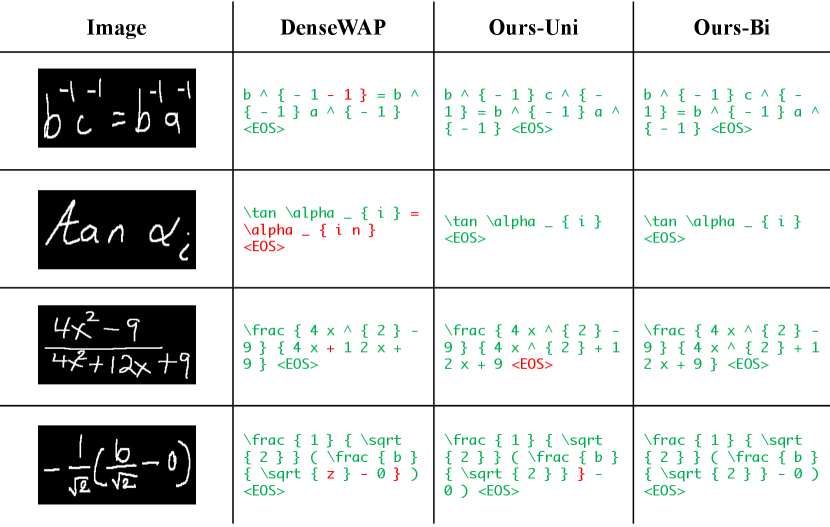

5.4 Case Study

As can be seen in Fig. 2, we give several case studies for the “DenseWAP”, “Ours-Uni” and “Ours-Bi” models. These three models use the same DenseNet encoder. The difference between these three models is that “DenseWAP” uses an RNN-based decoder, “Ours-Uni” adapts a vanilla transformer decoder, and “Ours-Bi” uses the bidirectional training strategy to train transformer decoder.

Firstly, comparing the prediction results between “DenseWAP” and “Ours-Uni”, we can see that for input images with complex structure, the “DenseWAP” model cannot predict all the symbols completely. Moreover, for input image with discontinuous structure, the right half is unnecessarily predicted twice. Problems mentioned above reflecting under-parsing and over-parsing phenomenon. However, the “Ours-Uni” and “Ours-Bi” models employed with positional encodings are able to identify all the symbols in these images accurately.

Secondly, by comparing the prediction results of “Ours-Uni” and “Ours-Bi”, we find that “Ours-Bi” gives more accurate predictions. Owing to the re-score mechanism in approximate joint search procedure, “Ours-Bi” avoids the asymmetry of “{” and “}” in the prediction results given by “Ours-Uni”.

6 Conclusion

In this paper, a novel bidirectionally trained trans former model is proposed for handwritten mathematical expression recognition task. Compared to previous approaches, our proposed BTTR model has the following three advantages: (1) Through image positional encoding, the model could capture the location information of the feature-map to guide itself to reasonably assign attention and alleviate the lack of coverage problem. (2) We take the advantage of the transformer model’s permutation-invariant property to train a decoder with bidirectional language modeling capability. The bidirectional training strategy enables BTTR model to make predictions in both L2R and R2L directions while ensuring model simplicity. (3) The RNN-based decoder is replaced by the transformer decoder to improve the parallelization of training process. Experiments demonstrate the effectiveness of our proposed BTTR model. Concretely speaking, our model gets ExpRate scores of 57.91%, 54.49%, and 56.88% on the CROHME 2014, CROHME 2016, and CROHME 2019 respectively.

Acknowledgments

This work is supported by the projects of National Key R&D Program of China (2019YFB1406303) and National Natural Science Foundation of China (No. 61876003), which is also a research achievement of Key Laboratory of Science, Technology and Standard in Press Industry (Key Laboratory of Intelligent Press Media Technology).

References

- [1] Alvaro, F., Sánchez, J.A., Benedí, J.M.: Recognition of on-line handwritten mathematical expressions using 2d stochastic context-free grammars and hidden markov models. Pattern Recognition Letters 35, 58–67 (2014)

- [2] Bengio, Y., Frasconi, P., Simard, P.: The problem of learning long-term dependencies in recurrent networks. In: IEEE international conference on neural networks. pp. 1183–1188. IEEE (1993)

- [3] Chan, K.F., Yeung, D.Y.: An efficient syntactic approach to structural analysis of on-line handwritten mathematical expressions. Pattern recognition 33(3), 375–384 (2000)

- [4] Chan, K.F., Yeung, D.Y.: Mathematical expression recognition: a survey. International Journal on Document Analysis and Recognition 3(1), 3–15 (2000)

- [5] Chan, K.F., Yeung, D.Y.: Error detection, error correction and performance evaluation in on-line mathematical expression recognition. Pattern Recognition 34(8), 1671–1684 (2001)

- [6] Cheng, Z., Bai, F., Xu, Y., Zheng, G., Pu, S., Zhou, S.: Focusing attention: Towards accurate text recognition in natural images. In: Proceedings of the IEEE international conference on computer vision. pp. 5076–5084 (2017)

- [7] Deng, Y., Kanervisto, A., Rush, A.M.: What you get is what you see: A visual markup decompiler. arXiv preprint arXiv:1609.04938 10, 32–37 (2016)

- [8] Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

- [9] Glorot, X., Bordes, A., Bengio, Y.: Deep sparse rectifier neural networks. In: Proceedings of the fourteenth international conference on artificial intelligence and statistics. pp. 315–323 (2011)

- [10] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 770–778 (2016)

- [11] Huang, G., Liu, Z., Van Der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 4700–4708 (2017)

- [12] Ioffe, S., Szegedy, C.: Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167 (2015)

- [13] Jiang, Z., Gao, L., Yuan, K., Gao, Z., Tang, Z., Liu, X.: Mathematics content understanding for cyberlearning via formula evolution map. In: Proceedings of the 27th ACM International Conference on Information and Knowledge Management. pp. 37–46 (2018)

- [14] Kang, L., Riba, P., Rusiñol, M., Fornés, A., Villegas, M.: Pay attention to what you read: Non-recurrent handwritten text-line recognition. arXiv preprint arXiv:2005.13044 (2020)

- [15] Liu, L., Utiyama, M., Finch, A., Sumita, E.: Agreement on target-bidirectional neural machine translation. In: Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. pp. 411–416 (2016)

- [16] MacLean, S., Labahn, G.: A new approach for recognizing handwritten mathematics using relational grammars and fuzzy sets. International Journal on Document Analysis and Recognition (IJDAR) 16(2), 139–163 (2013)

- [17] Mahdavi, M., Zanibbi, R., Mouchere, H., Viard-Gaudin, C., Garain, U.: Icdar 2019 crohme+ tfd: Competition on recognition of handwritten mathematical expressions and typeset formula detection. In: 2019 International Conference on Document Analysis and Recognition (ICDAR). pp. 1533–1538. IEEE (2019)

- [18] Mouchere, H., Viard-Gaudin, C., Zanibbi, R., Garain, U.: Icfhr 2014 competition on recognition of on-line handwritten mathematical expressions (crohme 2014). In: 2014 14th International Conference on Frontiers in Handwriting Recognition. pp. 791–796. IEEE (2014)

- [19] Mouchère, H., Viard-Gaudin, C., Zanibbi, R., Garain, U.: Icfhr2016 crohme: Competition on recognition of online handwritten mathematical expressions. In: 2016 15th International Conference on Frontiers in Handwriting Recognition (ICFHR). pp. 607–612. IEEE (2016)

- [20] Parmar, N., Vaswani, A., Uszkoreit, J., Kaiser, L., Shazeer, N., Ku, A., Tran, D.: Image transformer. In: International Conference on Machine Learning. pp. 4055–4064. PMLR (2018)

- [21] Truong, T.N., Nguyen, C.T., Phan, K.M., Nakagawa, M.: Improvement of end-to-end offline handwritten mathematical expression recognition by weakly supervised learning. In: 2020 17th International Conference on Frontiers in Handwriting Recognition (ICFHR). pp. 181–186. IEEE (2020)

- [22] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. Advances in neural information processing systems 30, 5998–6008 (2017)

- [23] Wu, J.W., Yin, F., Zhang, Y.M., Zhang, X.Y., Liu, C.L.: Handwritten mathematical expression recognition via paired adversarial learning. International Journal of Computer Vision pp. 1–16 (2020)

- [24] Wu, J.W., Yin, F., Zhang, Y., Zhang, X.Y., Liu, C.L.: Graph-to-graph: Towards accurate and interpretable online handwritten mathematical expression recognition. AAAI 2021 (2021)

- [25] Xu, K., Ba, J., Kiros, R., Cho, K., Courville, A., Salakhudinov, R., Zemel, R., Bengio, Y.: Show, attend and tell: Neural image caption generation with visual attention. In: International conference on machine learning. pp. 2048–2057 (2015)

- [26] Yan, Z., Ma, T., Gao, L., Tang, Z., Chen, C.: Persistence homology for link prediction: An interactive view. arXiv preprint arXiv:2102.10255 (2021)

- [27] Yan, Z., Zhang, X., Gao, L., Yuan, K., Tang, Z.: Convmath: A convolutional sequence network for mathematical expression recognition. In: 2020 25th International Conference on Pattern Recognition (ICPR). pp. 4566–4572. IEEE (2021)

- [28] Yuan, K., Gao, L., Jiang, Z., Tang, Z.: Formula ranking within an article. In: Proceedings of the 18th ACM/IEEE on Joint Conference on Digital Libraries. pp. 123–126 (2018)

- [29] Yuan, K., Gao, L., Wang, Y., Yi, X., Tang, Z.: A mathematical information retrieval system based on rankboost. In: Proceedings of the 16th ACM/IEEE-CS on Joint Conference on Digital Libraries. pp. 259–260 (2016)

- [30] Yuan, K., He, D., Jiang, Z., Gao, L., Tang, Z., Giles, C.L.: Automatic generation of headlines for online math questions. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 34, pp. 9490–9497 (2020)

- [31] Yuan, K., He, D., Yang, X., Tang, Z., Kifer, D., Giles, C.L.: Follow the curve: Arbitrarily oriented scene text detection using key points spotting and curve prediction. In: 2020 IEEE International Conference on Multimedia and Expo (ICME). pp. 1–6. IEEE (2020)

- [32] Zanibbi, R., Blostein, D.: Recognition and retrieval of mathematical expressions. International Journal on Document Analysis and Recognition (IJDAR) 15(4), 331–357 (2012)

- [33] Zeiler, M.D.: Adadelta: an adaptive learning rate method. arXiv preprint arXiv:1212.5701 (2012)

- [34] Zhang, J., Du, J., Dai, L.: Multi-scale attention with dense encoder for handwritten mathematical expression recognition. In: 2018 24th international conference on pattern recognition (ICPR). pp. 2245–2250. IEEE (2018)

- [35] Zhang, J., Du, J., Yang, Y., Song, Y.Z., Wei, S., Dai, L.: A tree-structured decoder for image-to-markup generation. In: ICML. p. In Press (2020)

- [36] Zhang, J., Du, J., Zhang, S., Liu, D., Hu, Y., Hu, J., Wei, S., Dai, L.: Watch, attend and parse: An end-to-end neural network based approach to handwritten mathematical expression recognition. Pattern Recognition 71, 196–206 (2017)

- [37] Zhang, X., Gao, L., Yuan, K., Liu, R., Jiang, Z., Tang, Z.: A symbol dominance based formulae recognition approach for pdf documents. In: 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR). vol. 1, pp. 1144–1149. IEEE (2017)

- [38] Zhang, Z., Wu, S., Liu, S., Li, M., Zhou, M., Xu, T.: Regularizing neural machine translation by target-bidirectional agreement. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 33, pp. 443–450 (2019)

- [39] Zhou, L., Zhang, J., Zong, C.: Synchronous bidirectional neural machine translation. Transactions of the Association for Computational Linguistics 7, 91–105 (2019)