Haptics-Enabled Forceps with Multi-Modal Force Sensing: Towards Task-Autonomous Surgery

Abstract

Many robotic surgical systems have been developed with micro-sized forceps for tissue manipulation. However, these systems often lack force sensing at the tool side and the manipulation forces are roughly estimated and controlled relying on the surgeon’s visual perception. To address this challenge, we present a vision-based module to enable the micro-sized forceps’ multi-modal force sensing. A miniature sensing module adaptive to common micro-sized forceps is proposed, consisting of a flexure, a camera, and a customised target. The deformation of the flexure is obtained by the camera estimating the pose variation of the top-mounted target. Then, the external force applied to the sensing module is calculated using the flexure’s displacement and stiffness matrix. Integrating the sensing module into the forceps, in conjunction with a single-axial force sensor at the proximal end, we equip the forceps with haptic sensing capabilities. Mathematical equations are derived to estimate the multi-modal force sensing of the haptics-enabled forceps, including pushing/pulling forces (Mode-I) and grasping forces (Mode-II). A series of experiments on phantoms and ex vivo tissues are conducted to verify the feasibility of the proposed design and method. Results indicate that the haptics-enabled forceps can achieve multi-modal force estimation effectively and potentially realize autonomous robotic tissue grasping procedures with controlled forces. A video demonstrating the experiments can be found at https://youtu.be/pi9bqSkwCFQ.

Index Terms:

Surgical robotics, autonomous surgery, multi-modal force sensing, tissue manipulation.

I Introduction

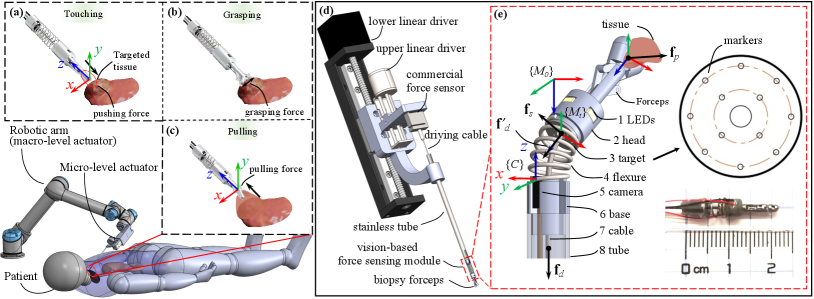

An increasing number of robotic systems have been developed with miniature instruments to facilitate minimally invasive surgery (MIS) in the past decade [1, 2]. For example, micro-sized forceps have been widely adopted in recently developed robotic systems for tissue manipulation in narrow spaces [3, 4, 5]. However, one notable limitation of these systems is their lack of force sensing at the tool side for tissue manipulation, including pushing (Fig. 1(a)), grasping (Fig. 1(b)), and pulling (Fig. 1(c)) forces.

Force sensing for tissue manipulation is critically needed for two reasons. Firstly, information about these forces can enhance surgeons’ operation and decision-making if integrated into the systems appropriately [6]. For instance, Talasaz et al. [7] verified that a haptics-enabled teleoperation system could perform better during a robot-assisted suturing task. Secondly, there is a consensus on the growth of autonomy in surgical robots [8] accompanied by more dexterous mechanisms such as snake-like robots [9, 10]. Force sensing at the tool side will play an essential role in the evolution of autonomous robotic surgery [8, 11].

Researchers have conducted various investigations to enable force sensing of different grippers for tissue manipulation. Zarrin et al. [12] fabricated a two-dimensional gripper that can measure grasping and axial forces with two Fiber Bragg grating (FBG) sensors embedded into the gripper’s jaws. Using the same principle, Lai et al. [13] developed a gripper that can estimate pulling and lateral forces. Although these FBG-based modules usually offer high force resolution, they are often sensitive to temperature variation, and current compensation methods require complex designs [14, 15, 16]. In addition, these sensors need highly expensive interrogation systems to work. By integrating capacitive sensing into the two jaws of a pair of surgical forceps, Kim et al. [17] equipped the forceps with multi-axis force sensing. However, capacitance-based methods are often susceptible to temperature and humidity variations, and additional sensing modules or complex design and fabrication are required for compensation [18, 19]. Moreover, surgical instruments must be sterilized, for example by autoclaving or dry heat, which may damage the sensing capabilities of tool-side electronic modules [20]. These limitations may invalidate the forceps’ force sensing capability in practical surgical tasks. In addition, all the above methods require re-machining the forceps’ two jaws to install the proposed sensors, making them unsuitable for micro-sized forceps.

Recently, vision-based methods have also been explored for developing low-cost force sensors. Ouyang et al. [21] developed a multi-axis force sensor with a camera tracking a fiducial marker supported by four compression springs. The displacement of the marker was captured by the camera and converted to force information by multiplying a linear transformation matrix. Fernandez et al. [22] presented a vision-based force sensor for a humanoid robot’s fingers with almost the same principle. In addition to providing multi-dimensional force feedback, vision-based force sensing is relatively robust to magnetic, electrical, and temperature variations. However, these vision-based sensors developed so far are large in size and can only estimate contact forces.

Although vision has been adopted for force estimation in surgery, current methods often rely on tissue/organ deformation, movement, and biomechanical properties, and cannot be used as a stand-alone sensing module [23, 24, 25].

In summary, despite many achievements for gripper force sensing based on various principles in the past few years, force estimation of the micro-sized forceps for MIS, especially their grasping and pulling force measurement, is still challenging. This paper proposes a method to enable multi-modal force sensing of the micro-sized forceps by combining a three-dimensional vision-based force sensing module at the tool side and a single-axis commercial strain gauge sensor at the proximal side, as shown in Fig. 1(d). The developed sensing module that can be easily integrated at the tool side is responsible for perceiving the interaction between the forceps and the manipulated tissues (Fig. 1(e)). The commercial strain gauge sensor mounted at the proximal side measures the force applied to the forceps’ driving cable. By integrating these pieces of sensed information, mathematical equations are derived to estimate the forceps’ touching, grasping, and pulling forces.

The main contributions of this article are two-fold:

1. A vision-based force-sensing module that can be easily integrated into micro-sized forceps is developed. An algorithm is designed to calculate the force applied to the sensing module with a registration method for tracking and estimating the pose of the sensing module’s target.

2. A design of haptics-enabled forceps is further proposed by assembling the widely used biopsy forceps with the developed sensing module embedded at the tool end and a single-axis strain gauge sensor mounted at the proximal end. Mathematical formulae are derived to estimate the multi-modal force sensing of the haptics-enabled forceps, including pushing/pulling force (Mode-I) and grasping force (Mode-II).

These contributions are validated by various carefully designed experiments on phantoms and ex vivo tissues. Results indicate that the haptics-enabled forceps can achieve multi-modal force estimation effectively and potentially realize autonomous robotic tissue grasping procedures with controlled forces.

II Haptics-Enabled Micro-Sized Forceps

II-A Design of A Vision-Based Force Sensing Module

II-A1 Overall Structure

The design of the sensing module (Fig. 1(e)) has followed two requirements: 1) small in size, 2) adaptive to micro-sized forceps, for example, the biopsy forceps. The module is designed in a cylindrical shape to minimize anisotropy, and the main components are a flexure, a camera, and a target. A central channel is reserved for the passage of instruments. The target, supported by the flexure, is manufactured with multiple holes that allow light to pass from a light source, and these holes can then be captured by a camera as markers for the estimation of the target’s pose, as depicted in Fig. 1(e). The module’s size and stiffness can be customized based on the instruments’ and applications’ needs. Here, we assemble a prototype with a diameter of 4mm and a length of 12mm and integrate it in a pair of biopsy forceps, as shown in the lower-right of Fig. 1(e).

II-A2 Flexure

Material properties and geometry play a dominant role in the multidimensional stiffness of the flexure. Different approaches have been adopted for flexure design, including the pseudo-rigid-body replacement method [26], topology optimization [27], etc. For force sensing in different surgical tissue manipulations, the flexure should also be changeable according to the task requirements. One commercial and readily available flexure is the compression spring [21, 22]. Although compression springs typically show dominant stiffness in the axial direction, they also exhibit a certain degree of stiffness in lateral directions and can be linearly approximated [28]. Moreover, they can be easily reconfigured by choosing different wire diameters, outer diameters, and coil numbers. For the prototype, we chose a stainless steel compression spring with a wire diameter of 0.5mm, outer diameter of 4mm, rest length of 5mm, and four coils. The selected spring has a force response range of 0–5N and 0–2N at axial and lateral displacements of 0–2mm and 0–1mm, respectively, which can meet the requirement of most tissue manipulations [29].

II-A3 Camera and Target

The camera for the sensing module should have a compact size, sufficient resolution, and surgery compatibility. For the prototype, we chose OVM6946 (Omnivision Inc., USA), a medical wafer-level endoscopy camera, which has been adopted in many surgical applications [30, 5]. It is 1mm in width and 2.27mm in length and has a 400×400 resolution and a 30Hz frame rate. To ensure continuous tracking and estimation, we fabricated the target with 12 circularly distributed 0.15mm-diameter holes used as markers for the camera to track and estimate the target pose, and a central hole is reserved for tools to pass through. These holes can also be customized based on the instruments and applications. Considering the integration into the biopsy forceps for MIS, the prototype’s target in Fig. 1(e) has a 3.4mm outer diameter and a 0.6mm-diameter central hole for the driving cable.

II-B Force Estimation of Sensing Module

Ideally, for a linear-helix compression spring, the external force applied to the sensing module’s head is approximately related to the spring’s displacement [28] by

$$
\mathbf{F}_s = \mathbf{K}_s\,\mathbf{d}_s \tag{1}
$$

where $\mathbf{F}_s$ denotes the external force, $\mathbf{K}_s$ denotes the stiffness matrix of the spring, and $\mathbf{d}_s$ is the displacement of the spring’s end relative to its relaxed original position. In this paper, $\mathbf{d}_s$ is equal to the target’s current displacement relative to its initial state.
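As a minimal sketch of the linear model in (1), the snippet below multiplies a stiffness matrix by a displacement vector. The function name `estimate_force`, the diagonal placeholder matrix, and the example displacement are assumptions made for illustration; they are not the calibrated values of the prototype.

```python
import numpy as np


def estimate_force(stiffness, displacement):
    """Linear spring model of (1): force = stiffness matrix times displacement."""
    stiffness = np.asarray(stiffness, dtype=float)
    displacement = np.asarray(displacement, dtype=float)
    if stiffness.shape != (3, 3) or displacement.shape != (3,):
        raise ValueError("expected a 3x3 stiffness matrix and a 3-vector displacement")
    return stiffness @ displacement


# Placeholder diagonal stiffness in N/mm (illustrative, NOT the calibrated matrix).
K_PLACEHOLDER = np.diag([2.0, 2.0, 2.5])

# Hypothetical target displacement in mm: 0.1 lateral (x) and 0.4 axial (z).
force = estimate_force(K_PLACEHOLDER, [0.1, 0.0, 0.4])
```

With a full (non-diagonal) stiffness matrix the same call also captures coupling between axes, which is why a matrix rather than three scalar gains is used.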

II-B1 Image-Based Target Pose Estimation

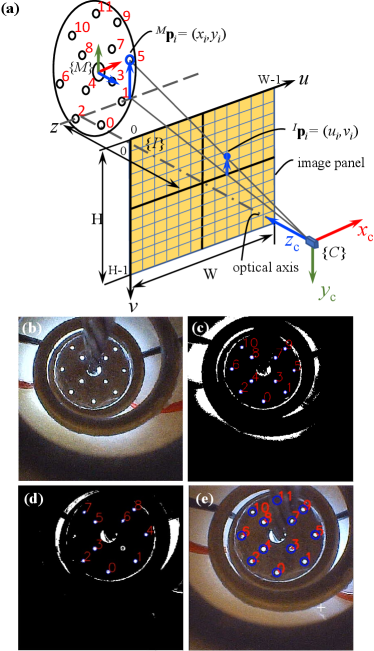

In order to estimate the target pose, each marker needs to be tracked by the camera first. However, as the raw image captured by the camera is full of noise, as seen in Fig. 2(b), markers cannot be detected robustly. Therefore, a bilateral filter and threshold are adopted to minimize the noise and preserve the boundary in images [31]. Then, markers are circled by a parameterized blob detection [32], as shown in Fig. 2(c). Finally, each marker’s central pixel position is returned.

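The positions of the markers in the target frame {M} are known. The projection between the $i$-th marker centre $\mathbf{P}_i$ and its corresponding pixel $\mathbf{p}_i$ in the image frame {I}, as shown in Fig. 2(a), can be formulated as

$$
s\,\tilde{\mathbf{p}}_i = \mathbf{A}\,[\,\mathbf{I}_{3\times 3}\;\;\mathbf{0}\,]\;{}^{C}\mathbf{T}_{M}\,\tilde{\mathbf{P}}_i,
\qquad
\mathbf{A} =
\begin{bmatrix}
f/d_x & 0 & u_0 \\
0 & f/d_y & v_0 \\
0 & 0 & 1
\end{bmatrix}
\tag{25}
$$

where ${}^{C}\mathbf{T}_{M}$ is the transformation from camera frame {C} to marker frame {M}, $\tilde{\mathbf{p}}_i$ is the homogeneous form of $\mathbf{p}_i$, $\tilde{\mathbf{P}}_i$ is the homogeneous form of $\mathbf{P}_i$, $i = 1, \dots, n$ ($n = 12$ for the prototype), $s$ is a scale factor, $f$ is the camera’s focal length, $d_x$ and $d_y$ are the width and height of each pixel, respectively, $(u_0, v_0)$ is the principal point, and the camera intrinsic matrix $\mathbf{A}$ is obtained via calibration with MATLAB camera toolbox [33].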

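According to the transformation relationship shown in Fig. 2(a), the current target pose relative to its initial state can be formulated as

$$
\mathbf{T}_{\Delta} = \mathbf{T}_0^{-1}\,\mathbf{T}_t \tag{26}
$$

where $\mathbf{T}_0$ and $\mathbf{T}_t$ are the camera-to-marker transformations at the initial and current states, respectively. The translation of $\mathbf{T}_{\Delta}$ is equal to the displacement in (1). The problem then becomes obtaining $\mathbf{T}_0$ and $\mathbf{T}_t$. Because the markers lie in a plane, the relationship between the rotation $\mathbf{R} = [\mathbf{r}_1\ \mathbf{r}_2\ \mathbf{r}_3]$ and translation $\mathbf{t}$ of such a transformation and the homography matrix $\mathbf{H} = [\mathbf{h}_1\ \mathbf{h}_2\ \mathbf{h}_3]$ can be formulated as

$$
[\mathbf{h}_1\ \mathbf{h}_2\ \mathbf{h}_3] = \lambda\,\mathbf{A}\,[\mathbf{r}_1\ \mathbf{r}_2\ \mathbf{t}],
\qquad
\mathbf{r}_3 = \mathbf{r}_1 \times \mathbf{r}_2
\tag{31}
$$

where $\mathbf{A}$ is the camera intrinsic matrix and $\lambda$ is a scale factor. Because $\mathbf{r}_1$ is a unit vector, $\lambda$ can be calculated by $\lambda = \lVert \mathbf{A}^{-1}\mathbf{h}_1 \rVert$. Therefore, to estimate $\mathbf{T}_0$ and $\mathbf{T}_t$, we only need to solve the homography matrices $\mathbf{H}_0$ and $\mathbf{H}_t$ of the initial and current states.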

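Because each homography matrix has eight independent variables, at least four independent projections between the marker positions $\mathbf{P}_i$ and their pixels $\mathbf{p}_i$ are needed to solve it, and each pair’s relationship can be formulated as

$$
s \begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix}
= \mathbf{H} \begin{bmatrix} X_i \\ Y_i \\ 1 \end{bmatrix}
\tag{32}
$$

where $(X_i, Y_i)$ and $(u_i, v_i)$ are the coordinates of the $i$-th marker in the frame {M} and its projection in the frame {I}, and $s$ is a scale factor. Based on this relationship and four pairs of projections, a set of equations can be established to solve $\mathbf{H}$. Substituting the solved $\mathbf{H}$ into (31), the target pose can be further obtained.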
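The two steps above, solving the homography from marker/pixel pairs as in (32) and then recovering the pose as in (31), can be sketched as follows. This is an illustrative direct-linear-transform (DLT) implementation in NumPy, not the authors' code. The intrinsic matrix, the 1.4mm marker radius, and the synthetic pose are placeholder assumptions used only to check the round trip.

```python
import numpy as np


def estimate_homography(marker_xy, pixel_uv):
    """Solve s*[u, v, 1]^T = H*[X, Y, 1]^T from >= 4 pairs with the DLT."""
    rows = []
    for (X, Y), (u, v) in zip(np.asarray(marker_xy, float), np.asarray(pixel_uv, float)):
        rows.append([X, Y, 1, 0, 0, 0, -u * X, -u * Y, -u])
        rows.append([0, 0, 0, X, Y, 1, -v * X, -v * Y, -v])
    # The homography is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fixes the scale; assumes the target is in front of the camera


def pose_from_homography(H, A):
    """Recover rotation R and translation t from H = lambda * A * [r1 r2 t]."""
    B = np.linalg.inv(A) @ H
    lam = np.linalg.norm(B[:, 0])  # r1 is a unit vector
    r1, r2, t = B[:, 0] / lam, B[:, 1] / lam, B[:, 2] / lam
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    # Project onto the nearest rotation matrix to absorb numerical noise.
    U, _, Vt = np.linalg.svd(R)
    return U @ Vt, t


# Synthetic round-trip check with placeholder intrinsics and pose.
A = np.array([[400.0, 0.0, 200.0], [0.0, 400.0, 200.0], [0.0, 0.0, 1.0]])
angles = np.arange(12) * 2 * np.pi / 12
markers = 1.4 * np.column_stack([np.cos(angles), np.sin(angles)])  # mm, in {M}
c, s = np.cos(0.1), np.sin(0.1)
R_true = np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])
t_true = np.array([0.1, -0.05, 5.0])

cam_pts = markers @ R_true[:, :2].T + t_true      # marker centres in {C}
pix_h = cam_pts @ A.T                             # homogeneous pixels
pixels = pix_h[:, :2] / pix_h[:, 2:]

H = estimate_homography(markers, pixels)
R_est, t_est = pose_from_homography(H, A)
```

Using all detected markers instead of exactly four turns the solve into a least-squares problem, which is more tolerant of pixel noise in the blob centres.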

II-B2 Marker Robust Registration

Ideally, by substituting four detected pixel positions $\mathbf{p}_i$ and their corresponding marker positions $\mathbf{P}_i$ into (32), we can calculate the homography matrix for each state. However, under external forces, the pose variation of the target causes occlusion or halation of some markers and results in wrong correspondences between the image and the markers. As a result, the correspondence between $\mathbf{p}_i$ and $\mathbf{P}_i$ changes over time, which invalidates the direct use of (32). Therefore, a registration process is required to label the currently detected markers’ pixel positions with their original indexes, as shown in Fig. 2(a), and we define this registered result as $\mathbf{p}_r^{t}$.

At the initial state $t = 0$, the target is installed perpendicularly to the optical axis. For the prototype we built, all the markers can be detected except the 11th marker (top marker), which is occluded by the channel, as shown in Fig. 2(b). Therefore, we define $\mathbf{P}^{0}$, obtained by removing the 11th marker from the full marker set $\mathbf{P}$, as the marker set at the initial state, and the homography $\mathbf{H}_0$ between $\mathbf{P}^{0}$ and the detected pixel positions $\mathbf{p}^{0}$ can be obtained according to (32). Substituting $\mathbf{H}_0$ and $\mathbf{P}_{11}$ back into (32), we can estimate $\hat{\mathbf{p}}_{11}^{0}$. Then, the complete pixel-position array of the initial state, $\bar{\mathbf{p}}^{0}$, can be obtained by appending $\hat{\mathbf{p}}_{11}^{0}$ to $\mathbf{p}^{0}$.

For the current state $t$, the pixel positions $\mathbf{q}_j^{t}$ of the detected markers ($j = 1, \dots, m$, where $m$ is the number of detected markers, $m \le n$, and $n$ is the total number of markers) are returned by the blob detection. Nevertheless, these detected markers are directly labelled from bottom to top in the image. For example, the blob detection result of a random pose is shown in Fig. 2(d). Assuming $\bar{\mathbf{p}}^{t-1}$ is known, we calculate the L1 norm of the distance vector between each $\mathbf{q}_j^{t}$ and $\bar{\mathbf{p}}_i^{t-1}$, $i = 1, \dots, n$, and define it as $e_{j,i}$. The marker in $\bar{\mathbf{p}}^{t-1}$ corresponding to $\mathbf{q}_j^{t}$ is the one that results in the minimal $e_{j,i}$, and its index is the desired $i^{*}$. Without loss of generality, the registration problem for the $j$-th detected hole is defined as

$$
i^{*} = \arg\min_{i \in \{1, \dots, n\}} \left\lVert \mathbf{q}_j^{t} - \bar{\mathbf{p}}_i^{t-1} \right\rVert_1 \tag{33}
$$

Repeating this registration for each detected marker and updating the indexes, we can obtain $\mathbf{p}_r^{t}$ with the original indexes. We define the corresponding marker position set of $\mathbf{p}_r^{t}$ as $\mathbf{P}^{t}$. Substituting $\mathbf{p}_r^{t}$ and $\mathbf{P}^{t}$ into (32), the homography matrix $\mathbf{H}_t$ of the current state can be calculated.

To ensure the image of the current state can be used as the reference for the next registration, i.e., the $t$+1 state, the complete pixel-position set of the current state should also be generated. By substituting the undetected markers’ coordinates in $\mathbf{P}$ and $\mathbf{H}_t$ into (32), we can estimate their corresponding pixel positions $\hat{\mathbf{p}}^{t}$. Then, $\bar{\mathbf{p}}^{t}$ can be obtained by merging $\mathbf{p}_r^{t}$ with $\hat{\mathbf{p}}^{t}$. For example, Fig. 2(e) shows the registration result and the generated complete image of Fig. 2(d).
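The registration step described above can be sketched as a nearest-neighbour search under the L1 norm against the previous complete pixel set, followed by filling in the undetected markers through the current homography. The function names, the circular pixel layout, and the homography used in the demonstration are hypothetical and serve only to exercise the logic.

```python
import numpy as np


def register_markers(detected_uv, previous_uv):
    """Give each detected blob the index of its L1-nearest marker in the previous frame."""
    detected_uv = np.asarray(detected_uv, dtype=float)
    previous_uv = np.asarray(previous_uv, dtype=float)
    # cost[j, i] = |u_j - u_i| + |v_j - v_i|
    cost = np.abs(detected_uv[:, None, :] - previous_uv[None, :, :]).sum(axis=2)
    return cost.argmin(axis=1)


def complete_pixel_set(detected_uv, indices, H, marker_xy):
    """Project every marker through H, then overwrite the detected ones with measurements."""
    marker_xy = np.asarray(marker_xy, dtype=float)
    homog = np.column_stack([marker_xy, np.ones(len(marker_xy))]) @ H.T
    full = homog[:, :2] / homog[:, 2:]
    full[indices] = np.asarray(detected_uv, dtype=float)
    return full


# Demonstration with a made-up layout: 12 markers on a circle in the image.
angles = np.arange(12) * 2 * np.pi / 12
unit_circle = np.column_stack([np.cos(angles), np.sin(angles)])
H_demo = np.array([[100.0, 0.0, 200.0], [0.0, 100.0, 200.0], [0.0, 0.0, 1.0]])
previous = 200.0 + 100.0 * unit_circle

# Four blobs returned in arbitrary order, slightly shifted from the previous frame.
detected = previous[[3, 0, 7, 9]] + np.array([2.0, -1.5])
indices = register_markers(detected, previous)
full = complete_pixel_set(detected, indices, H_demo, unit_circle)
```

Because each blob is matched independently, two blobs could in principle receive the same index if the target moves far between frames; this sketch assumes small inter-frame motion, which the 30Hz frame rate makes plausible.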

II-B3 Flexure Deformation Estimation

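The transformation $\mathbf{T}_0$ between the target and the camera at the initial state can be calculated by substituting the initial-state homography $\mathbf{H}_0$ and the camera intrinsic matrix $\mathbf{A}$ into (31). Similarly, the transformation $\mathbf{T}_t$ at the current state can be obtained by substituting the current-state homography $\mathbf{H}_t$ and $\mathbf{A}$ into (31). Then, the relative transformation $\mathbf{T}_{\Delta}$ can be calculated by substituting $\mathbf{T}_0$ and $\mathbf{T}_t$ into (26), which reflects the deformation of the flexure.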
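As a small worked example of (26), the sketch below builds two homogeneous transforms and extracts the relative translation that serves as the flexure displacement. The helper names and the two poses are assumptions chosen for illustration.

```python
import numpy as np


def make_transform(R, t):
    """Assemble a 4x4 homogeneous transform from a rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def relative_transform(T_initial, T_current):
    """Pose of the current target frame expressed in the initial target frame."""
    return np.linalg.inv(T_initial) @ T_current


# Hypothetical poses (mm): target initially 5 mm in front of the camera, then
# pushed 0.2 mm closer, shifted 0.1 mm sideways, and tilted 0.1 rad about x.
c, s = np.cos(0.1), np.sin(0.1)
R_tilt = np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])
T0 = make_transform(np.eye(3), [0.0, 0.0, 5.0])
Tt = make_transform(R_tilt, [0.1, 0.0, 4.8])

T_delta = relative_transform(T0, Tt)
displacement = T_delta[:3, 3]   # translation part, used as the displacement in (1)
```

The rotation block of the relative transform also carries the tilt of the target, which is not used for the force in (1) but is available for later extensions.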

II-B4 Force Calculation

The displacement of the spring is the translation vector of the relative transformation obtained from (26). Substituting this displacement and the spring’s stiffness matrix into (1), we can estimate the external force. Ideally, the stiffness matrix represents the characteristics of the spring that connects the camera and the target. However, this stiffness matrix should also account for the influence of the wires and the shell, as they act in parallel with the spring. To obtain the practical stiffness matrix, a calibration is carried out on the prototype and detailed in Section III. The above pose and force estimation method is summarized as Algorithm 1.

II-C Haptics-Enabled Forceps and Force Estimation

II-C1 Micro-level Forceps Actuator

To drive the forceps and conduct experimental verification, we designed a micro-level actuator, as shown in Fig. 1(d), which consists of upper and lower linear drivers that are responsible for grasping and pushing/pulling, respectively. The lower driver also compensates for the motion introduced by the flexure’s deformation when the upper driver actuates the forceps to grasp tissue. The compensation is achieved by moving the lower driver’s carrier in the opposite direction by the same amount as the flexure’s axial deformation estimated by the camera. A commercial single-axis force sensor (ZNLBS-5kg, CHINO, China), with a resolution of 0.015N, is installed collinearly with the forceps and connected to the driving cable to measure the driving force.

II-C2 Pushing/Pulling Force Estimation (Mode-I)

Integrating the sensing module into the forceps, we can enable its haptic sensing, and the haptics-enabled forceps are shown in Fig. 1(e). The base of the forceps is installed concentrically to the sensing module’s head, and the driving cable passes through the reserved central channel. The cylindrical base connects the forceps to its carrying instrument (stainless tube). A prototype is also shown in the inset of Fig. 1(e), which has a 4mm diameter and 22mm total length and is used in the following description and experiments. The proposed haptics-enabled forceps can be easily integrated into a robotic surgical system with the micro-level actuator, such as the robot presented in Fig. 1(a). The forces applied to the forceps when they push or pull a tissue are also shown in Fig. 1(e). is the pushing/pulling force from the interactive tissue. is the elastic force from the spring, which can be directly estimated by (1). is the driving force of the cable at the tool end. Ignoring friction, we assume equals measured by the single-axis force sensor connected to the driving cable at the proximal side. Then, can be formulated as . Finally, we can obtain the pushing/pulling force as

| (34) |

For tissue touching, the contact force can also be estimated by (34), regardless of whether the forceps are closed or open.

II-C3 Grasping Force Estimation (Mode-II)

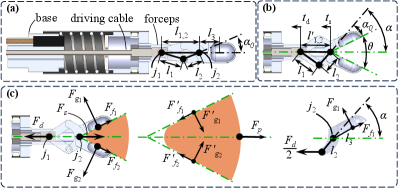

The estimation of the grasping force is more challenging because it relates to the geometry and driving force applied to the forceps’ cable, as depicted in Fig. 3. Although the sensing module can estimate the 3D elastic force and the orientation of , we mainly consider the situation where the forceps grasp and pull tissue straightly as grasping is performed in the release condition.

The forces applied to the forceps when they grasp and pull a tissue are shown in Fig. 3(c). For convenience, we adopt to denote the magnitude of force in the following sections. is the elastic force from the spring and is directly estimated by (1). is the driving force applied to the forceps’ hinge and measured by the proximal single-axis force sensor. and are contact and friction forces from the grasped tissue, as shown in Fig. 3(c). For ease of understanding, we define as the gripping force in the following description.

Assuming the net moment on one jaw about joint is zero, as sketched in the right inset of Fig. 3(c), we can further obtain the relationship between and as

| (35) |

where , the definitions of , , , , and are shown in Fig. 3(b). and can be calculated based on the geometric relationship of the forceps’ links. Fig. 3(a) shows the forceps’ geometry at the initial state, i.e., the forceps are in the closed form, and the flexure is relaxed. According to the relationship of forceps’ links at the initial state, we can get and formulate it as

| (36) |

where is the distance between forceps’ two joints and . When the forceps are open at angle , the geometry changes to Fig. 3(b). At this stage, the angle can be reformulated as

| (37) |

where , is the movement of the driving cable measured by the upper driver’s encoder of the micro-level actuator, and is the transformation of the sensor’s head estimated by the sensing module. Moreover, the relationship of , , and is

| (38) |

For a pair of forceps, we assume equals , and equals . Then, referring to (34), we can formulate as

| (39) |

III Experiments & Discussion

In this section, we first present the experiments on evaluating the method for target tracking and calibrating the stiffness matrix for external force estimation. Then, the evaluation of two modes of force estimation is described. Finally, groups of automatic robotic grasping procedures with the proposed system are illustrated as potential applications.

III-A Evaluation of Target’s Pose Estimation

III-A1 Experimental Setup

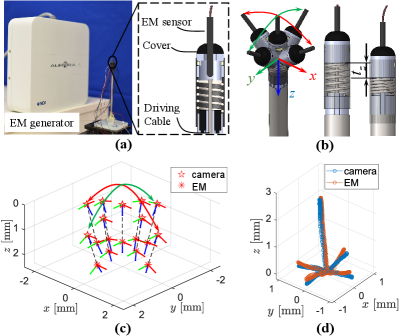

Fig. 4(a) shows the experimental setup for pose estimation evaluation with an electromagnetic (EM) tracking system (Northern Digital Inc, Canada) with resolutions of less than 0.1mm in position and 0.1° in orientation. The EM tracking sensor was installed concentrically with the proposed force sensing module. During the experiment, the sensor’s head was moved along the $x$ (red arrow), $y$ (green arrow), and $z$ (blue arrow) axes, as indicated in Fig. 4(b).

III-A2 Pose Estimation Results

The orientation comparison is based on nine pairs of samples distributed in the sensing module’s workspace, as plotted in Fig. 4(c). Here, we use (, , ) and (, , ) to denote the pitch, roll, and yaw angles of camera estimation and EM tracking results of the -th pair. The max mean and absolute maximum deviations between EM tracking and our estimation result are calculated by and , where . For position evaluation, the EM sensor and target were tracked continuously by the EM tracking system and camera, respectively, and the comparison is shown in Fig. 4(d). To calculate the mean and max deviations between the EM and camera-tracking traces, we further calculated the mean () and Hausdorff () distance by

| (42) |

where , and denote positions of the camera estimation of point and EM tracking of point , , =1336 and =2181 are the numbers of points recorded by the camera and EM tracking system, respectively. As listed in Table I, the max mean, absolute maximum, and root mean square (RMS) deviations of the orientation are 0.056rad, 0.147rad, and 0.041rad, and those of the position are 0.0368mm, 0.2265mm, and 0.0551mm. This indicates that the proposed method can track and estimate the target’s pose reliably.

Table I: Deviations between the camera-based pose estimation and EM tracking.

| Experiment | Max Mean | Maximum | RMS |
|---|---|---|---|
| Orientation | 0.056rad | 0.147rad | 0.041rad |
| Position | 0.0368mm | 0.2265mm | 0.0551mm |
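The mean and Hausdorff distances between the camera-estimated and EM-tracked traces can be computed as sketched below. The exact definitions used in the paper are not recoverable from this text, so the sketch assumes the mean is taken over camera points to their nearest EM point and that the Hausdorff distance is the symmetric one. The function name and sample points are illustrative.

```python
import numpy as np


def trace_deviation(cam_pts, em_pts):
    """Mean nearest-neighbour distance (camera -> EM) and symmetric Hausdorff distance."""
    cam_pts = np.asarray(cam_pts, dtype=float)
    em_pts = np.asarray(em_pts, dtype=float)
    # dists[i, j] = Euclidean distance between camera point i and EM point j
    dists = np.linalg.norm(cam_pts[:, None, :] - em_pts[None, :, :], axis=2)
    cam_to_em = dists.min(axis=1)
    em_to_cam = dists.min(axis=0)
    mean_dist = cam_to_em.mean()
    hausdorff = max(cam_to_em.max(), em_to_cam.max())
    return mean_dist, hausdorff


# Tiny made-up traces (mm); the two traces may contain different numbers of points.
cam_trace = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
em_trace = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.1], [2.0, 0.0, 0.0]]
mean_dist, hausdorff = trace_deviation(cam_trace, em_trace)
```

Nearest-neighbour matching avoids the need to time-synchronise the two streams, which matters here because the camera and EM tracker record different numbers of samples.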

III-B Stiffness Matrix Calibration and Verification

III-B1 Experimental Setup

We used a series of weights to calibrate the haptics-enabled forceps’ stiffness matrix, considering the influence of the driving cable and soft shell. The calibration platform is shown in Fig. 5(a). Here, the micro-level actuator is used to adjust the position and hold the forceps. An orientation module is installed concentrically to the forceps for adjusting the direction of the pulling force provided by a cable. This cable is connected to the forceps’ jaws, and the force applied to it is adjusted by adding/removing weights.

III-B2 Calibration and Verification

Two groups of data were collected for calibration and verification, respectively. When collecting the calibration data in the $x$-$y$ plane, as shown in Fig. 5(b), the orientation module aligned the cable with the $x$ and $y$ axes, and the force was increased from 0N to 0.49N at intervals of 0.07N. Then, for the $z$ axis, the driving force was increased from 0N to 5N at intervals of 0.5N. The target pose at each interval was recorded. Then, fitting the calibration data in MATLAB, we obtained the stiffness matrix as

| (46) |
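One plausible way to fit a stiffness matrix from such weight/displacement pairs is ordinary least squares on the linear model in (1). The sketch below uses NumPy rather than MATLAB, and both the fitting approach and the synthetic "true" matrix are assumptions for illustration; the paper's actual fitting routine and calibrated values are not reproduced here.

```python
import numpy as np


def calibrate_stiffness(displacements, forces):
    """Least-squares fit of K in f = K d from N paired samples (rows of N x 3 arrays)."""
    D = np.asarray(displacements, dtype=float)
    F = np.asarray(forces, dtype=float)
    # Row form of the model: f_row = d_row @ K^T, so solve D @ K^T = F.
    K_transposed, *_ = np.linalg.lstsq(D, F, rcond=None)
    return K_transposed.T


# Synthetic data following the loading pattern in the text: lateral loads of
# 0 to 0.49 N along x and y, axial loads of 0 to 5 N along z.
K_true = np.array([[2.0, 0.1, 0.0], [0.1, 2.0, 0.0], [0.0, 0.0, 2.5]])  # placeholder, N/mm
lateral = np.linspace(0.0, 0.49, 8)
axial = np.linspace(0.0, 5.0, 11)
forces = np.vstack([
    np.column_stack([lateral, np.zeros(8), np.zeros(8)]),
    np.column_stack([np.zeros(8), lateral, np.zeros(8)]),
    np.column_stack([np.zeros(11), np.zeros(11), axial]),
])
displacements = forces @ np.linalg.inv(K_true).T   # d = K^-1 f for each sample

K_est = calibrate_stiffness(displacements, forces)
```

Loading along all three axes is what makes the system well conditioned: with loads along only one axis, the columns of the matrix associated with the other axes could not be identified.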

To verify the calibrated , we rotated the orientation module for , as shown in Fig. 5(c), and collected the data following the same procedure of calibration. For the - panel the interval was set to 0.035N, while for the axis the interval was 0.02N. The comparison of weights and estimated forces are plotted in Fig. 5(d) and (e), which show the results of the - panel and the -axial direction, respectively. The absolute mean, maximum, and RMS errors of (, , ) in these three comparisons are (, , )N, (, , )N, and (0.8494, 0.5884, 4.9367)N, which are (1.86%, 1.30%, 0.76%), (6.71%, 4.46%, 3.97%), and (2.45%, 1.70%, 0.98%) of the measured force amplitude (MFA), respectively. This indicates that the calibrated can be used to estimate the force applied to the forceps.

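Table II: Errors between the estimated and measured pulling forces, in N (percentage of MFA).

| Error | o1 | o2 | o3 | o4 |
|---|---|---|---|---|
| Mean | 0.030 (4.41%) | 0.038 (5.15%) | 0.028 (4.01%) | 0.023 (3.91%) |
| Max | 0.077 (11.17%) | 0.131 (17.75%) | 0.128 (14.42%) | 0.064 (10.69%) |
| RMS | 0.038 (5.50%) | 0.049 (6.69%) | 0.043 (6.16%) | 0.028 (4.74%) |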

III-C Evaluation of Mode-I: Estimation

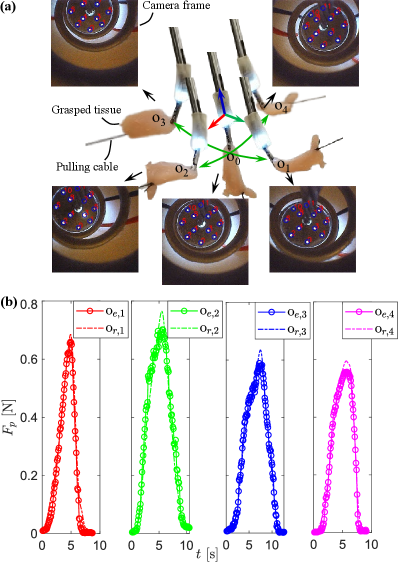

In this experiment, the grasped tissue was pulled in various directions by a cable connected to a force sensor. Fig. 6(a) shows the grasped tissue being pulled from the initial state o0 to the states o1, o2, o3, and o4. Snapshots show the states when the flexure has maximum deformation in different orientations, and the corresponding camera registration frames are attached below them. Fig. 6(b) shows the forces estimated by the proposed sensing module (oe,i) and those measured by the commercial sensor (or,i) connected to the tissue-pulling cable. Table II lists the comparison between oe,i and or,i. The absolute mean, maximum, and RMS errors of the four tests are under 0.038N (5.15% of MFA), 0.131N (17.75% of MFA), and 0.049N (6.69% of MFA), respectively. This means the forceps are valid for estimating the pulling force in various directions and meet the requirements of general surgical applications (for example, the clinical review [29] indicated that an absolute error of less than 0.28N can meet the requirement for tissue retraction with grasping in general surgery).
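The error statistics reported above (absolute mean, maximum, and RMS, each also as a percentage of the measured force amplitude) can be computed as sketched below. The text does not define MFA precisely, so this sketch assumes it is the peak-to-peak range of the reference signal; the function name and sample values are made up.

```python
import numpy as np


def error_stats(estimated, reference):
    """Absolute mean, max, and RMS error, plus each as a percentage of the MFA."""
    estimated = np.asarray(estimated, dtype=float)
    reference = np.asarray(reference, dtype=float)
    err = np.abs(estimated - reference)
    # Assumption: MFA is taken as the peak-to-peak range of the reference force.
    mfa = reference.max() - reference.min()
    stats = {
        "mean": err.mean(),
        "max": err.max(),
        "rms": np.sqrt(np.mean(err ** 2)),
    }
    percent = {name: 100.0 * value / mfa for name, value in stats.items()}
    return stats, percent


# Made-up force samples in newtons.
reference = [0.0, 0.2, 0.4, 0.6]
estimated = [0.0, 0.23, 0.38, 0.61]
stats, percent = error_stats(estimated, reference)
```

Reporting errors relative to the force amplitude makes tests with different loading ranges comparable, which is why both absolute and percentage values appear in the tables.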

III-D Evaluation of Mode-II: Estimation

III-D1 Experimental Setup

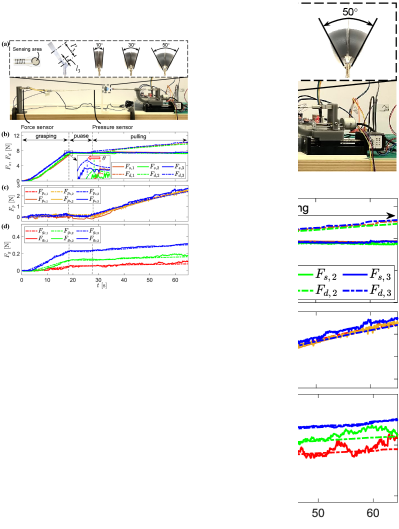

Fig. 7(a) shows the experimental setup, where a commercial pressure sensor (FlexiForce A201-1 lbs, Tekscan, USA), with a resolution of 0.02N, and a force sensor (ZNLBS-5kg, CHINO, China) were adopted as references. The pressure sensor was grasped by the forceps for direct measurement of the grasping force. Because the pressure sensor’s sensing area was a 10mm-diameter circle, 3D-printed additional surfaces were attached to the forceps’ jaws to ensure sufficient contact. The driving force reached around 7N during the grasping action in each experiment. Since, from (39), we can see the grasping force is also related to pulling force , we connected the pressure sensor to a commercial force sensor by a flexure to measure the applied pulling force.

III-D2 Evaluations

We carried out three experiments with different configurations. Each experiment was presented as a continuous process, and the results are shown in Fig. 7(b), (c), and (d), where the subscript $i$ ($i$ = 1, 2, 3) denotes the $i$-th experiment. The forceps grasped the pressure sensor and reached the target grasping force during 0–18s. After a pause phase (18–26s), the forceps pulled the grasped sensor backward for 25mm in the pulling phase (26–65s).

Fig. 7(b) depicts the driving force provided by the driving cable and the elastic force measured by our proposed sensing module. The two forces were almost equal in the grasping and pause phases. This is because the pulling force applied to the forceps was almost zero in these two phases, as there was no pulling action and the pressure sensor was floating. In contrast, in the pulling phase, the driving force increased while the elastic force remained constant. This is because the pressure sensor was being pulled while the flexure deformation remained constant. The difference between the driving force and the elastic force was the pulling force applied to the pressure sensor. As the three experiments followed the same procedure, their data almost overlap. However, because is configured differently, the time for performing the grasping is slightly different. The inset in Fig. 7(b) shows that the bigger is, the quicker the grasping finishes.

Fig. 7(c) shows the pulling force measured by the commercial sensor during the experimental procedure, and we compared it with the result estimated by the forceps. We can see that the pulling forces of the three experiments almost overlap, as the pulling distances were equal. A comparison between the commercial sensor results and our estimations is listed in Table III. The mean, maximum, and RMS errors of the three experiments are under 0.133N, 0.497N, and 0.167N, respectively. This also shows that the forceps are valid for estimating the pulling force when they grasp tissue.

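Table III: Errors of the pulling and grasping force estimation in the three experiments, in N (percentage of MFA).

| Force | Error | Experiment 1 | Experiment 2 | Experiment 3 |
|---|---|---|---|---|
| Pulling force [N] | Mean | 0.118 (4.81%) | 0.094 (3.88%) | 0.133 (5.50%) |
| | Max | 0.299 (12.21%) | 0.278 (11.48%) | 0.497 (20.51%) |
| | RMS | 0.145 (5.94%) | 0.112 (4.62%) | 0.167 (6.87%) |
| Grasping force [N] | Mean | 0.040 (8.13%) | 0.027 (4.90%) | 0.012 (2.00%) |
| | Max | 0.133 (27.33%) | 0.096 (17.37%) | 0.049 (8.05%) |
| | RMS | 0.049 (10.03%) | 0.036 (6.50%) | 0.016 (2.67%) |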

Fig. 7(d) compares the grasping force , where and denote the forces of -th experiment estimated by our proposed forceps and commercial pressure sensor, respectively. Because of the additional surfaces that are shown in the inlet of Fig. 7(a), in (39) was set as in these experiments. According to Fig. 7(d), we can see that when the driving force is equal, the grasping force is positively related to . This phenomenon conforms with the force calculation method (34) and (39), as the increased could entail greater and then result in increased . Table III lists the absolute mean, maximum, and RMS errors, and their percentage of MFA, between and of the three experiments. Their values are under 0.040N, 0.133N, and 0.049N, respectively. This indicates that the forceps are valid for estimating the grasping force and meet the requirements of surgical applications [29].

III-E Ex vivo Robotic Experiments

III-E1 Experimental Setup

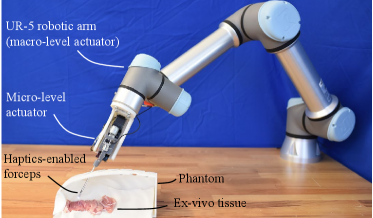

To further verify the feasibility of the proposed system, we conducted a group of robotic experiments on an ex vivo tissue. Fig. 8 shows the experimental setup, where a UR-5 robotic arm was adopted as the macro-level actuator. The ex vivo chicken tissue was placed in a human body phantom to simulate the lesion, and the instrument was implemented through a simulated minimally invasive port on the phantom.

III-E2 Automatic Tissue Grasping

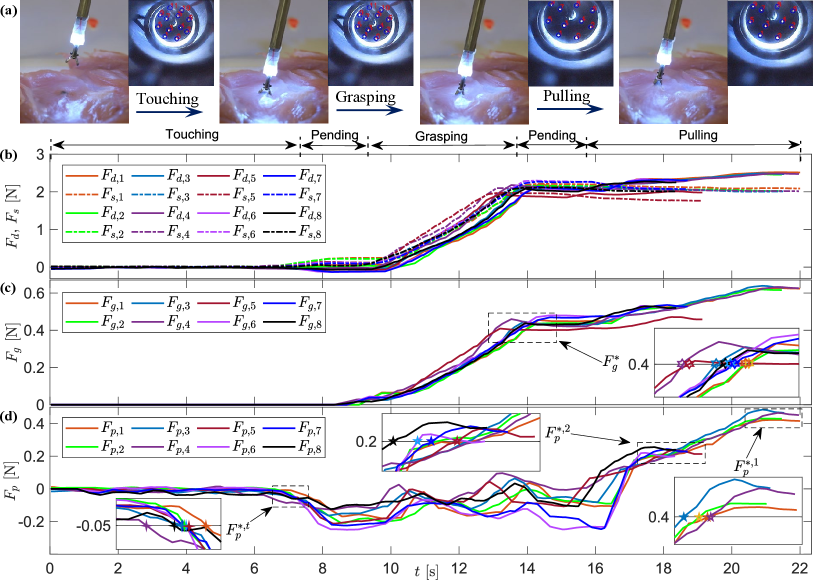

We carried out automatic tissue grasping procedures with one targeted grasping force and two different targeted pulling forces. The key experimental scenes and results are shown in Fig. 9, and the experiments can be roughly divided into five phases. Transitions between these phases were triggered automatically according to the grasping force and pushing/pulling force estimated by the haptics-enabled forceps.

With Mode-I enabled, the forceps were driven to touch the tissue during the touching phase (0–7.5s) until the estimated pushing force reached the touch-detection threshold, followed by a short pending period (7.5–9.5s). Then, Mode-II was enabled, and the forceps grasped the tissue in the grasping phase (9.5–13.5s) until the estimated grasping force reached the targeted value. Finally, after another short pending period (13.5–15.5s), the grasped tissue was pulled up and held for a while at the targeted pulling force. Because the targeted pulling forces for the first and second groups of experiments were different, the time consumed for pulling varied: it was around 6s for the first group and around 2s for the second.
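The force-triggered phase transitions described above can be sketched as a small state machine. This is an illustrative reading of the procedure, not the authors' controller: the phase names, the extra terminal `HOLDING` state, and all force values are hypothetical placeholders, and only the roughly 2s pending period is taken from the text.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Phase(Enum):
    TOUCHING = auto()
    PAUSE_AFTER_TOUCH = auto()
    GRASPING = auto()
    PAUSE_AFTER_GRASP = auto()
    PULLING = auto()
    HOLDING = auto()  # terminal state added for this sketch


@dataclass(frozen=True)
class Targets:
    # Force values are placeholders in newtons, not the experimental settings.
    touch_threshold: float = 0.1
    grasp_target: float = 0.5
    pull_target: float = 1.0
    pause_s: float = 2.0  # pending periods last about 2 s in the text


def next_phase(phase, push_pull_force, grasp_force, time_in_phase, targets):
    """Return the phase to enter given the latest force estimates."""
    if phase is Phase.TOUCHING and abs(push_pull_force) >= targets.touch_threshold:
        return Phase.PAUSE_AFTER_TOUCH
    if phase is Phase.PAUSE_AFTER_TOUCH and time_in_phase >= targets.pause_s:
        return Phase.GRASPING
    if phase is Phase.GRASPING and grasp_force >= targets.grasp_target:
        return Phase.PAUSE_AFTER_GRASP
    if phase is Phase.PAUSE_AFTER_GRASP and time_in_phase >= targets.pause_s:
        return Phase.PULLING
    if phase is Phase.PULLING and abs(push_pull_force) >= targets.pull_target:
        return Phase.HOLDING
    return phase


# Made-up samples: (push/pull force [N], grasp force [N], time in phase [s]).
samples = [
    (0.02, 0.0, 1.0), (0.12, 0.0, 7.5), (0.10, 0.0, 1.0), (0.10, 0.0, 2.0),
    (0.10, 0.3, 2.0), (0.10, 0.55, 4.0), (0.10, 0.55, 2.0),
    (0.60, 0.55, 1.0), (1.05, 0.55, 3.0),
]
phase = Phase.TOUCHING
history = []
for push_pull, grasp, elapsed in samples:
    phase = next_phase(phase, push_pull, grasp, elapsed, Targets())
    history.append(phase)
```

Keeping the transition logic in a pure function makes it straightforward to replay logged force data through it offline before running it on the robot.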

Fig. 9(b) shows the measured driving force and the estimated elastic force of these experiments. Fig. 9(c) shows the estimated grasping force together with the targeted grasping force for the grasping action. Fig. 9(d) shows the estimated pushing/pulling force together with the threshold for touch detection and the two target pulling forces for the first and second groups of experiments. The experimental results indicate that the haptics-enabled forceps can be implemented in robotic surgery for multi-modal force sensing. We also noticed that, during the grasping process, the pulling force varies notably, which can potentially indicate successful grasping.

III-F Discussion

The accuracy, sampling frequency, and resolution of the sensing module can be reconfigured with different cameras and flexures, which can be selected according to the surgical task requirements. Currently, referring to [28], we modeled the integrated haptics-enabled forceps linearly with calibrated parameters to keep the computation simple while retaining acceptable accuracy. The accuracy can potentially be improved with a non-linear model, which can be obtained by more advanced calibration and fitting methods. The sampling frequency of the proposed sensing module equals the camera frame rate, 30 Hz in the current prototype, and no filter has been applied to the collected data yet. The sensing resolution depends on the camera’s resolution and the spring’s stiffness, and that of the prototype is 0.001 N in the $x$-$y$ plane and 0.005 N along the $z$-axis. The resolution in the $x$-$y$ plane is finer than along the $z$-axis because the camera is more sensitive to displacement in the $x$-$y$ plane [21], and the spring stiffness is higher along the $z$-axis. To reduce the anisotropy in resolution, a hemisphere-shaped target is potentially helpful [22]. Moreover, to ensure the feasibility of closed-loop force control, filtering to improve accuracy and smoothness should also be studied carefully.
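As one possible starting point for the filtering mentioned above, the sketch below applies a first-order low-pass filter to force samples arriving at the 30 Hz camera rate. The paper applies no filter, so this technique, the class name `LowPassFilter`, and the 5 Hz cutoff are assumptions chosen purely for illustration.

```python
import math


class LowPassFilter:
    """First-order (exponential) low-pass filter for a uniformly sampled signal."""

    def __init__(self, cutoff_hz, sample_rate_hz=30.0):
        if cutoff_hz <= 0 or sample_rate_hz <= 0:
            raise ValueError("cutoff and sample rate must be positive")
        dt = 1.0 / sample_rate_hz                 # sample period in seconds
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)    # equivalent RC time constant
        self.alpha = dt / (rc + dt)               # smoothing factor in (0, 1)
        self.state = None

    def update(self, sample):
        """Feed one raw sample and return the filtered value."""
        if self.state is None:
            self.state = sample                   # initialise on the first sample
        else:
            self.state += self.alpha * (sample - self.state)
        return self.state


# Hypothetical step input in newtons; the cutoff is an assumed tuning value.
lpf = LowPassFilter(cutoff_hz=5.0)
outputs = [lpf.update(x) for x in [0.0, 1.0, 1.0, 1.0]]
print(outputs)
```

A lower cutoff gives a smoother estimate but adds lag, which matters at a 30 Hz sampling rate when the estimate closes a force control loop.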

Since the adopted sensing method relies on the spring’s deformation, which is estimated by the camera tracking the target mounted on the opposite end of the spring, the sensing module can only estimate forces applied between the target and the forceps’ tip. Additionally, this paper focuses on estimating the multi-modal forces when the forceps pull/push and grasp tissues, and only the displacement is utilized for force estimation. Because the estimated transformation also contains the target’s current pose information, the wrench is potentially estimable [21, 22] and will be considered in future work.
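The displacement-only estimate amounts to multiplying the flexure’s stiffness matrix by the measured displacement of the target. A minimal sketch under a linear model follows; the function name, the diagonal stiffness values, and the displacement are hypothetical and are not the calibrated values of the prototype.

```python
def force_from_displacement(stiffness, displacement):
    """Multiply a 3x3 stiffness matrix (N/mm) by a displacement vector (mm) to get force (N)."""
    if len(stiffness) != 3 or any(len(row) != 3 for row in stiffness):
        raise ValueError("stiffness must be a 3x3 matrix")
    if len(displacement) != 3:
        raise ValueError("displacement must have three components")
    # Row-by-row matrix-vector product.
    return [sum(k * d for k, d in zip(row, displacement)) for row in stiffness]


# Hypothetical stiffness: softer laterally, stiffer axially.
K = [
    [0.2, 0.0, 0.0],
    [0.0, 0.2, 0.0],
    [0.0, 0.0, 1.0],
]
d = [0.5, 0.0, -0.1]   # hypothetical target displacement in mm
force = force_from_displacement(K, d)
print(force)
```

The same relation shows why resolution is anisotropic: for a given smallest detectable displacement, a stiffer axis yields a coarser smallest detectable force.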

Additionally, the proposed haptics-enabled forceps have minimal tool-side circuitry, comprising only two micro-sized LEDs and an endoscopic camera, while the power supply and signal processing circuits are integrated at the proximal end and are removable. The adopted camera can be sterilized repeatedly, for example by ethylene oxide or STERRAD sterilization [20]. This improves the potential for the haptics-enabled forceps to be sterilized repeatedly as well.

Although the biopsy forceps are adopted as an example in this paper, the presented sensing module can be integrated into a wide range of forceps with similar structures. The sensing module could also be used as an independent sensor for other surgical applications, for example, palpation. Moreover, as the robotic experiments suggest, a successful grasp can potentially be indicated by the variation of the pulling force during each grasping process, which will be investigated in future work.

IV Conclusion

This paper presented a vision-based force sensing module that is adaptive to micro-sized biopsy forceps. An algorithm was designed to calculate the force applied to the sensing module with a registration method for tracking and estimating the pose of the sensing module’s target. By integrating the developed sensing module into the biopsy forceps, in conjunction with a single-axis force sensor at the proximal end, we further proposed haptics-enabled forceps. Mathematical equations were derived to estimate the multi-modal forces of the haptics-enabled forceps, including pushing/pulling force (Mode-I) and grasping force (Mode-II). The methods for estimating multi-modal forces were presented with experimental verification. Groups of automatic robotic ex vivo tissue grasping procedures were conducted to further verify the feasibility of the proposed sensing method and forceps. The results show that the proposed method can enable multi-modal force sensing of the micro-sized forceps, and the haptics-enabled forceps are potentially beneficial to automatic tissue manipulation in operations such as thyroidectomy, ENT surgery, and laparoscopic surgery. The forceps will be integrated into a dual-arm robotic surgical system to further study the benefits of multi-modal force sensing for MIS.

References

- [1] P. E. Dupont, B. J. Nelson, M. Goldfarb, B. Hannaford, A. Menciassi, M. K. O’Malley, N. Simaan, P. Valdastri, and G.-Z. Yang, “A decade retrospective of medical robotics research from 2010 to 2020,” Science Robotics, vol. 6, no. 60, p. eabi8017, 2021.

- [2] P. E. Dupont, N. Simaan, H. Choset, and C. Rucker, “Continuum robots for medical interventions,” Proceedings of the IEEE, vol. 110, no. 7, pp. 847–870, 2022.

- [3] L. Wu, F. Yu, T. N. Do, and J. Wang, “Camera frame misalignment in a teleoperated eye-in-hand robot: Effects and a simple correction method,” IEEE Transactions on Human-Machine Systems, vol. 53, no. 1, pp. 2–12, 2022.

- [4] F. Feng, Y. Zhou, W. Hong, K. Li, and L. Xie, “Development and experiments of a continuum robotic system for transoral laryngeal surgery,” International Journal of Computer Assisted Radiology and Surgery, vol. 17, no. 3, pp. 497–505, 2022.

- [5] Y. Cao, Z. Liu, H. Yu, W. Hong, and L. Xie, “Spatial shape sensing of a multisection continuum robot with integrated dtg sensor for maxillary sinus surgery,” IEEE/ASME Transactions on Mechatronics, 2022.

- [6] R. V. Patel, S. F. Atashzar, and M. Tavakoli, “Haptic feedback and force-based teleoperation in surgical robotics,” Proceedings of the IEEE, vol. 110, no. 7, pp. 1012–1027, 2022.

- [7] A. Talasaz, A. L. Trejos, and R. V. Patel, “The role of direct and visual force feedback in suturing using a 7-dof dual-arm teleoperated system,” IEEE Transactions on Haptics, vol. 10, no. 2, pp. 276–287, 2016.

- [8] G.-Z. Yang, J. Cambias, K. Cleary, E. Daimler, J. Drake, P. E. Dupont, N. Hata, P. Kazanzides, S. Martel, R. V. Patel et al., “Medical robotics—regulatory, ethical, and legal considerations for increasing levels of autonomy,” p. eaam8638, 2017.

- [9] A. Razjigaev, A. K. Pandey, D. Howard, J. Roberts, and L. Wu, “End-to-end design of bespoke, dexterous snake-like surgical robots: A case study with the raven ii,” IEEE Transactions on Robotics, 2022.

- [10] J. Wang, J. Peine, and P. E. Dupont, “Eccentric tube robots as multiarmed steerable sheaths,” IEEE Transactions on Robotics, vol. 38, no. 1, pp. 477–490, 2021.

- [11] A. Attanasio, B. Scaglioni, E. De Momi, P. Fiorini, and P. Valdastri, “Autonomy in surgical robotics,” Annual Review of Control, Robotics, and Autonomous Systems, vol. 4, pp. 651–679, 2021.

- [12] P. S. Zarrin, A. Escoto, R. Xu, R. V. Patel, M. D. Naish, and A. L. Trejos, “Development of a 2-dof sensorized surgical grasper for grasping and axial force measurements,” IEEE Sensors Journal, vol. 18, no. 7, pp. 2816–2826, 2018.

- [13] W. Lai, L. Cao, J. Liu, S. C. Tjin, and S. J. Phee, “A three-axial force sensor based on fiber bragg gratings for surgical robots,” IEEE/ASME Transactions on Mechatronics, vol. 27, no. 2, pp. 777–789, 2021.

- [14] A. Taghipour, A. N. Cheema, X. Gu, and F. Janabi-Sharifi, “Temperature independent triaxial force and torque sensor for minimally invasive interventions,” IEEE/ASME Transactions on Mechatronics, vol. 25, no. 1, pp. 449–459, 2019.

- [15] T. Li, A. Pan, and H. Ren, “A high-resolution triaxial catheter tip force sensor with miniature flexure and suspended optical fibers,” IEEE Transactions on Industrial Electronics, vol. 67, no. 6, pp. 5101–5111, 2019.

- [16] Q. Jiang, J. Li, and D. Masood, “Fiber-optic-based force and shape sensing in surgical robots: a review,” Sensor Review, vol. 43, no. 2, pp. 52–71, 2023.

- [17] U. Kim, Y. B. Kim, J. So, D.-Y. Seok, and H. R. Choi, “Sensorized surgical forceps for robotic-assisted minimally invasive surgery,” IEEE Transactions on Industrial Electronics, vol. 65, no. 12, pp. 9604–9613, 2018.

- [18] D.-Y. Seok, Y. B. Kim, U. Kim, S. Y. Lee, and H. R. Choi, “Compensation of environmental influences on sensorized-forceps for practical surgical tasks,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 2031–2037, 2019.

- [19] Y. Sun, Y. Liu, and H. Liu, “Temperature compensation for a six-axis force/torque sensor based on the particle swarm optimization least square support vector machine for space manipulator,” IEEE Sensors Journal, vol. 16, no. 3, pp. 798–805, 2015.

- [20] W. A. Rutala and D. J. Weber, Guideline for disinfection and sterilization in healthcare facilities (2008). Disinfection and Sterilization, Centers for Disease Control and Prevention, 2008.

- [21] R. Ouyang and R. Howe, “Low-cost fiducial-based 6-axis force-torque sensor,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 1653–1659.

- [22] A. J. Fernandez, H. Weng, P. B. Umbanhowar, and K. M. Lynch, “Visiflex: A low-cost compliant tactile fingertip for force, torque, and contact sensing,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 3009–3016, 2021.

- [23] N. Haouchine, W. Kuang, S. Cotin, and M. Yip, “Vision-based force feedback estimation for robot-assisted surgery using instrument-constrained biomechanical three-dimensional maps,” IEEE Robotics and Automation Letters, vol. 3, no. 3, pp. 2160–2165, 2018.

- [24] G. Fagogenis, M. Mencattelli, Z. Machaidze, B. Rosa, K. Price, F. Wu, V. Weixler, M. Saeed, J. E. Mayer, and P. E. Dupont, “Autonomous robotic intracardiac catheter navigation using haptic vision,” Science Robotics, vol. 4, no. 29, p. eaaw1977, 2019.

- [25] Z. Chua and A. M. Okamura, “Characterization of real-time haptic feedback from multimodal neural network-based force estimates during teleoperation,” in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022, pp. 1471–1478.

- [26] M. A. Pucheta and A. Cardona, “Design of bistable compliant mechanisms using precision–position and rigid-body replacement methods,” Mechanism and Machine Theory, vol. 45, no. 2, pp. 304–326, 2010.

- [27] J. Deng, K. Rorschach, E. Baker, C. Sun, and W. Chen, “Topology optimization and fabrication of low frequency vibration energy harvesting microdevices,” Smart Materials and Structures, vol. 24, no. 2, p. 025005, 2014.

- [28] S. Keller and A. Gordon, “Equivalent stress and strain distribution in helical compression springs subjected to bending,” The Journal of Strain Analysis for Engineering Design, vol. 46, no. 6, pp. 405–415, 2011.

- [29] A. K. Golahmadi, D. Z. Khan, G. P. Mylonas, and H. J. Marcus, “Tool-tissue forces in surgery: A systematic review,” Annals of Medicine and Surgery, vol. 65, p. 102268, 2021.

- [30] A. Banach, F. King, F. Masaki, H. Tsukada, and N. Hata, “Visually navigated bronchoscopy using three cycle-consistent generative adversarial network for depth estimation,” Medical Image Analysis, vol. 73, p. 102164, 2021.

- [31] H. Singh, “Advanced image processing using opencv,” in Practical Machine Learning and Image Processing. Springer, 2019, pp. 63–88.

- [32] A. Kaspers, “Blob detection,” M.S. thesis, Image Sciences Institute, Utrecht Univ., Utrecht, Netherlands, 2011.

- [33] A. Fetić, D. Jurić, and D. Osmanković, “The procedure of a camera calibration using camera calibration toolbox for matlab,” in 2012 Proceedings of the 35th International Convention MIPRO. IEEE, 2012, pp. 1752–1757.


Tangyou Liu received the B.S. degree in mechanical engineering from the Southwest University of Science and Technology (SWUST) in 2017, and the M.S. degree in mechanical engineering as an outstanding graduate from the Harbin Institute of Technology, Shenzhen (HITSZ) in 2021, supervised by Prof. Max Q.-H. Meng. He then worked at KUKA, China, as a system development engineer and won the KUKA China R&D Innovation Challenge. He received the IEEE ICRA 2023 Best Poster award. He is pursuing his Ph.D. degree in mechatronic engineering at the University of New South Wales (UNSW), Sydney, Australia, under the supervision of Dr. Liao Wu. His current research interests include medical and surgical robots.


Tinghua Zhang received the B.S. and M.S. degrees in mechanical engineering from Jimei University (JMU) in 2020 and Harbin Institute of Technology, Shenzhen (HITSZ) in 2023, respectively. He is now working at The Chinese University of Hong Kong (CUHK) as a research assistant. His research interest is mainly in medical robotics, including robotic OCT, optical tracking, and force sensing. He was a Best Student Paper finalist at IEEE ROBIO 2022.


Jay Katupitiya received his B.S. degree in Production Engineering from the University of Peradeniya, Sri Lanka, and his Ph.D. degree from the Katholieke Universiteit Leuven, Belgium. He is currently an associate professor at the University of New South Wales in Sydney, Australia. His main research interest is the development of sophisticated control methodologies for the precision guidance of field vehicles on rough terrain at high speeds.


Jiaole Wang received the B.E. degree in mechanical engineering from Beijing Information Science and Technology University, Beijing, China, in 2007, the M.E. degree from the Department of Human and Artificial Intelligent Systems, University of Fukui, Fukui, Japan, in 2010, and the Ph.D. degree from the Department of Electronic Engineering, The Chinese University of Hong Kong (CUHK), Hong Kong, in 2016. He was a Research Fellow with the Pediatric Cardiac Bioengineering Laboratory, Department of Cardiovascular Surgery, Boston Children’s Hospital and Harvard Medical School, Boston, MA, USA. He is currently an Associate Professor with the School of Mechanical Engineering and Automation, Harbin Institute of Technology, Shenzhen, China. His main research interests include medical and surgical robotics, image-guided surgery, human-robot interaction, and magnetic tracking and actuation for biomedical applications.


Liao Wu is a Senior Lecturer with the School of Mechanical and Manufacturing Engineering, University of New South Wales, Sydney, Australia. He received his B.S. and Ph.D. degrees in mechanical engineering from Tsinghua University, Beijing, China, in 2008 and 2013, respectively. From 2014 to 2015, he was a Research Fellow at the National University of Singapore. He then worked as a Vice-Chancellor’s Research Fellow at the Queensland University of Technology, Brisbane, Australia, from 2016 to 2018. Between 2016 and 2020, he was affiliated with the Australian Centre for Robotic Vision, an ARC Centre of Excellence. He has worked on applying Lie group theory to robotics, kinematic modeling and calibration, etc. His current research focuses on medical robotics, including flexible robots and intelligent perception for minimally invasive surgery.