HDMba: Hyperspectral Remote Sensing Imagery Dehazing with State Space Model

Abstract

Haze contamination in hyperspectral remote sensing images (HSI) can lead to spatial visibility degradation and spectral distortion. Haze in HSI exhibits spatial irregularity and an inhomogeneous spectral distribution, yet few dehazing networks are available for it. Current CNN- and Transformer-based dehazing methods fail to balance global scene recovery, local detail retention, and computational efficiency. Inspired by the ability of Mamba to model long-range dependencies with linear complexity, we explore its potential for HSI dehazing and propose the first HSI Dehazing Mamba (HDMba) network. Specifically, we design a novel window selective scan module (WSSM) that partitions features into windows, capturing local dependencies within each window and global correlations between windows. This approach improves the ability of conventional Mamba in local feature extraction. By modeling the local and global spectral-spatial information flow, we achieve a comprehensive analysis of hazy regions. The DehazeMamba layer (DML), constructed from the WSSM, and the residual DehazeMamba (RDM) blocks, composed of DMLs, are the core components of the HDMba framework. These components effectively characterize the complex distribution of haze in HSIs, aiding in scene reconstruction and dehazing. Experimental results on the Gaofen-5 HSI dataset demonstrate that HDMba outperforms other state-of-the-art methods in dehazing performance. The code will be available at https://github.com/RsAI-lab/HDMba.

Index Terms:

Hyperspectral imagery (HSI), dehazing, window selective scan, Mamba.

I Introduction

Optical remote sensing (RS) hyperspectral imagery (HSI) captures hundreds of contiguous narrow spectral bands, enabling the detection of subtle variations in ground surface characteristics. This capability makes HSI indispensable in environmental monitoring, agricultural assessment, and urban management [2]. However, atmospheric disturbances, such as haze, can cause significant distortions in spectral signatures, compromising the accuracy of surface feature identification and classification. Consequently, effective haze removal is essential to preserve the integrity and advantages of HSI in diverse remote sensing applications [3].

Conventional RS dehazing methods primarily rely on atmospheric scattering models and dark-object subtraction (DOS) models. Classical methods, such as the dark channel prior [4] and haze thickness map [5], are commonly used for model parameter estimation. Kang et al. [6] developed a DOS model-based HSI defogging model (Defog). These methods are often limited in dehazing efficacy and generalization due to their dependence on parameter settings and manual intervention. Deep learning techniques, particularly convolutional neural networks (CNNs), have been applied to RS dehazing, with models like FFANet [7], RSDehazeNet [8], CANet [9], and LKDNet [10] demonstrating notable generalization performance by mapping hazy images directly to clear images. Recent studies have integrated attention mechanisms in HSI dehazing, resulting in models like SGNet [11] and AACNet [12]. Despite these advances, the limited receptive field of convolutional kernels and pixel-to-pixel translation challenges these methods in capturing long-range contextual information in hazy regions, leading to scene structure discrepancies.

Thanks to its superior global modelling capability, the Transformer has been successfully applied to remote sensing dehazing, yielding impressive results. Models such as Restormer [13], DehazeFormer [14], and AIDTransformer [15] used U-shaped structures to extract deep global structural information. RSDformer [16] further enhanced image structure recovery by incorporating novel self-attention mechanisms to capture both local and global correlations. However, because the cost of self-attention grows quadratically with the number of tokens, these models incur significant computational overhead in image dehazing. Recently, Mamba [17], a novel state space model (SSM), has shown great potential in long-sequence modelling with linear complexity. It has been applied to various RS tasks, including semantic segmentation, pan-sharpening, and denoising [18]. While Mamba has been attempted for natural and RS-RGB image dehazing [19, 20], its efficacy in HSI dehazing remains unexplored.

Compared to conventional image dehazing, haze in HSI exhibits irregular and locally significant inhomogeneities in the spatial domain, with shortwave bands being more sensitive to haze than longwave bands. We aim to leverage Mamba to explore the complex haze distribution in HSI, achieving efficient haze removal and scene reconstruction. To this end, we developed the first HSI Dehazing Mamba (HDMba) framework and designed a window selective scan module (WSSM) to model the local-global spectral-spatial information flow, effectively reconstructing the scene and spectral details in HSI. The main contributions of this work are summarized as follows:

1) We introduce HDMba, the first framework to explore the potential of Mamba for HSI dehazing. The designed DehazeMamba block integrates SSM, convolution, and residual learning to effectively model complex haze distributions in HSI, recovering scene structure and texture details.

2) We propose a new WSSM that captures local dependencies along with contextual global interactions, improving the perception of local haze regions and the characterization of differences between hazy and haze-free regions.

3) We construct an HSI dehazing dataset with 2,000 image pairs using the Gaofen-5 Advanced Hyperspectral Imager (AHSI) sensor. This dataset, along with the available hyperspectral defogging dataset (HDD), is used to assess the dehazing performance and complexity of HDMba against other state-of-the-art dehazing networks.

II Proposed method

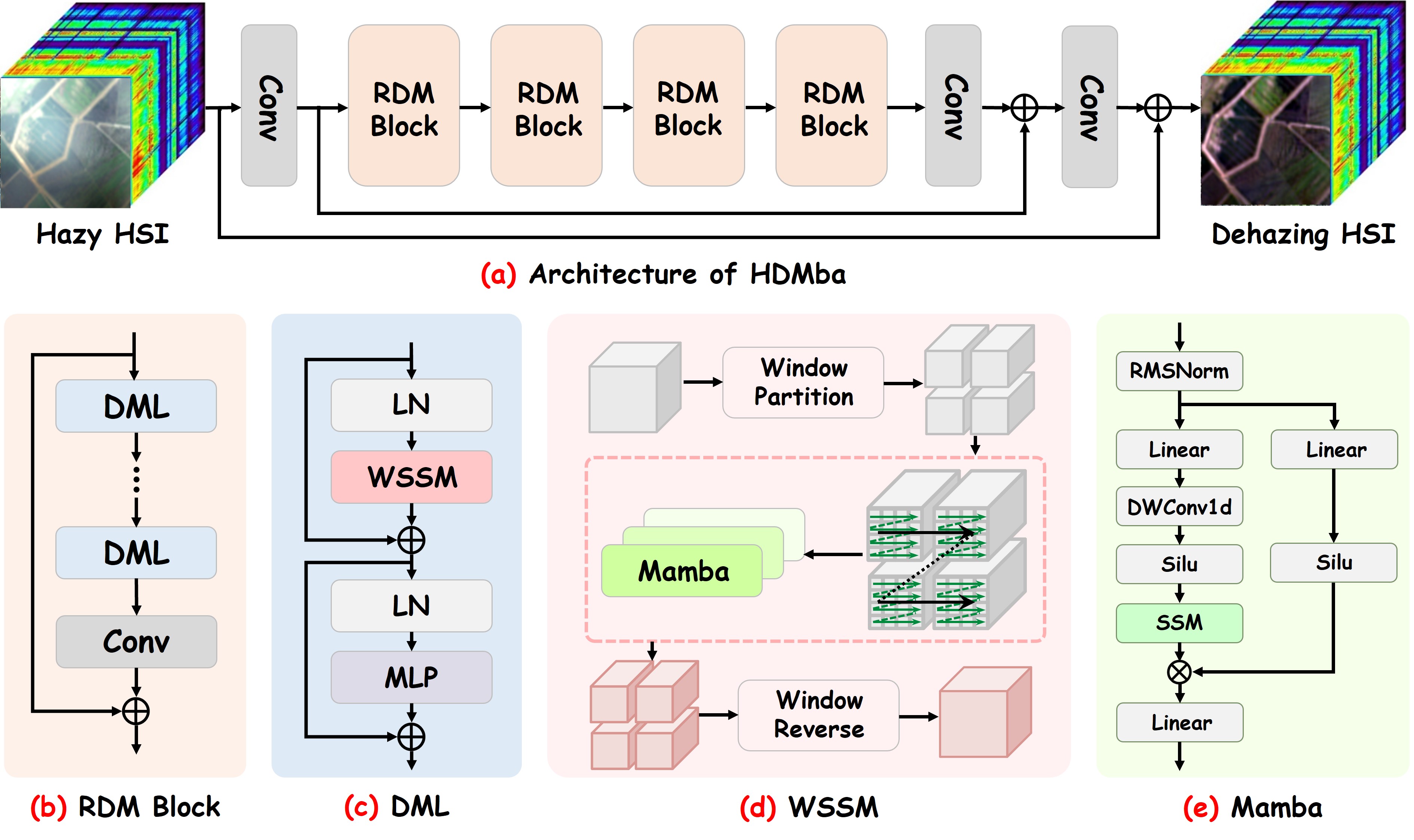

In this section, we first present the overall network framework of the proposed HDMba. Next, we introduce the key module, the Residual DehazeMamba (RDM) block, which consists of multiple DehazeMamba layers (DML). Finally, we describe the WSSM within the DML, detailing its approach to modelling and processing local and global information flows using Mamba.

II-A Network Architecture

To ensure effective and direct dehazing feature extraction, we adopted an end-to-end multi-scale feature extraction framework based on RDM blocks, as shown in Fig. 2 (a). The downsampling used in U-Net is discarded, which allows the high-frequency information to be preserved. HDMba first applies a 3×3 convolution layer to extract low-level features $F_0 \in \mathbb{R}^{H \times W \times C}$ from the hazy image $I \in \mathbb{R}^{H \times W \times B}$, where $H$ and $W$ represent the spatial dimensions, and $C$ and $B$ are the number of feature channels and the number of input bands, respectively. Then, $F_0$ is processed through several RDM blocks to extract deep features for scene recovery with the feature size of $H \times W \times C$, and this process can be expressed as:

$$F_i = \mathrm{RDM}_i(F_{i-1}), \quad i = 1, \dots, 4 \tag{1}$$

where $\mathrm{RDM}_i$ represents the $i$-th RDM block, and $F_i$ denotes the $i$-th spatial-spectral feature obtained by this block. The network is designed with 4 RDM blocks. To recover a clean scene from the deep feature $F_4$, two 3×3 convolution layers are concatenated with the shallow feature $F_0$ via skip connections. The global skip connection between the hazy image and the output enables the intermediate network to learn the irregular and uneven haze characteristics distributed across the spectral and spatial domains. A combination of mean squared error (MSE) and the $L_1$ norm is used as the loss function for network training:

$$\mathcal{L} = \lambda_1 \mathcal{L}_{\mathrm{MSE}} + \lambda_2 \mathcal{L}_{1} \tag{2}$$

where $\mathcal{L}_{\mathrm{MSE}}$ represents the MSE loss and $\mathcal{L}_{1}$ represents the $L_1$ norm. $\lambda_1$ and $\lambda_2$ are the weighting factors.
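As a rough illustration of the weighted loss described above, the sketch below combines an MSE term with a norm term in plain Python. It assumes the norm term is the mean absolute ($L_1$) error and that the weights 1 and 0.1 reported in the implementation details apply to the MSE and norm terms in that order; both points are assumptions, and the function names are hypothetical. A real training loop would use PyTorch tensors rather than flat lists.

```python
from typing import Sequence


def mse_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error over flattened pixel/band values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def l1_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error (assumed form of the norm term)."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def dehazing_loss(
    pred: Sequence[float],
    target: Sequence[float],
    lambda_mse: float = 1.0,   # assumed weight of the MSE term
    lambda_l1: float = 0.1,    # assumed weight of the norm term
) -> float:
    """Weighted sum of the MSE term and the norm term."""
    if not pred or len(pred) != len(target):
        raise ValueError("pred and target must be non-empty and equally long")
    return lambda_mse * mse_loss(pred, target) + lambda_l1 * l1_loss(pred, target)
```

With these weights the squared-error term dominates for large residuals, while the absolute-error term still contributes a gradient of constant magnitude for small ones.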

II-B Residual DehazeMamba block

Haze exhibits an irregular spatial distribution, making it essential to focus on local haze areas and extract crucial information from them for scene recovery. We designed the RDM block (Fig. 2 (b)) for deep local and long-range information modelling, which mainly contains multiple DMLs, represented as follows:

$$F_i^{j} = \mathrm{DML}_j(F_i^{j-1}), \quad j = 1, \dots, N \tag{3}$$

where $\mathrm{DML}_j$ represents the $j$-th DML, $F_i^{j}$ denotes the $j$-th spatial-spectral feature in the $i$-th RDM block, and $N$ is the number of DMLs. Each RDM block concludes with a 3×3 convolution layer, adding the residual features from the previous block via a skip connection. For each DML (Fig. 2 (c)), we combine a normalization layer with the WSSM and the MLP, respectively, to improve the nonlinear characterization while modelling spatial information. The process can be defined as follows:

$$Z' = \mathrm{WSSM}(\mathrm{LN}(Z)) \tag{4}$$

$$Z'' = \mathrm{MLP}(\mathrm{LN}(Z')) \tag{5}$$

where $Z$ represents the input feature embedding of the DML, $Z'$ and $Z''$ represent the outputs of $\mathrm{WSSM}$ and $\mathrm{MLP}$, respectively, and $\mathrm{LN}$ represents the Layer Normalization layer.

II-C Window selective scan module

Currently, most visual Mamba approaches primarily capture long-term dependencies by increasing scanning directions, but they lack the ability to effectively capture local spatial details and inter-regional correlations [21]. We proposed a novel WSSM to enhance the processing capacity in local areas where haze is distributed, as shown in Fig. 2 (d).

Specifically, for the input feature embedding $X \in \mathbb{R}^{H \times W \times C}$, the window partition [22] is first performed in the spatial dimension with a window size of $M \times M$, resulting in overlapping patches $P$. These patches then capture local dependencies through Mamba, while retaining the correlation of different local regions, ensuring a comprehensive analysis of haze regions in the image. Finally, through a window reverse operation, the output patches $P'$ from Mamba are gathered to obtain the final features $Y$. This process can be expressed as follows:

$$P = \mathrm{WindowPartition}(X) \tag{6}$$

$$P' = \mathrm{Mamba}(P) \tag{7}$$

$$Y = \mathrm{WindowReverse}(P') \tag{8}$$

where $P$ and $P'$ denote the patches before and after Mamba processing, respectively. For Mamba (Fig. 2 (e)), the input spatial feature sequence enters two branches after passing through RMSNorm. In the main branch, the features undergo successive processing by a linear layer, depth-wise separable convolution, a SiLU activation function, and SSM, effectively integrating haze characteristics from various regions. The other branch passes through a linear layer and a SiLU function, and its output is multiplied with the output of the main branch. A normalization layer produces the final output. The process can be represented as follows:

$$U_1 = \mathrm{SSM}(\sigma(\mathrm{DWConv}(\mathrm{Linear}(\mathrm{RMSNorm}(P))))) \tag{9}$$

$$U_2 = \sigma(\mathrm{Linear}(\mathrm{RMSNorm}(P))) \tag{10}$$

$$P' = \mathrm{Norm}(U_1 \odot U_2) \tag{11}$$

where $U_1$ and $U_2$ represent the outputs of the two branches, respectively. $\mathrm{DWConv}$ represents the depth-wise separable convolution, $\sigma$ denotes the SiLU activation function, and $\odot$ indicates element-wise multiplication.
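The partition, scan, and reverse steps of the WSSM can be sketched in plain Python on a single-channel grid. This is only an illustration under stated assumptions: windows are taken with stride equal to the window size (the stride is not given in the text), all windows are concatenated into one sequence so that the scan finishes one window before moving to the next, and `running_mean` is a hypothetical causal stand-in for the Mamba block, not the actual SSM. The function names are likewise illustrative.

```python
from typing import Callable, List, Sequence

Grid = List[List[float]]


def window_partition(grid: Sequence[Sequence[float]], m: int) -> List[List[float]]:
    """Split an H x W grid into m x m windows, each flattened row-major."""
    h, w = len(grid), len(grid[0])
    if h % m or w % m:
        raise ValueError("H and W must be divisible by the window size m")
    return [
        [grid[top + r][left + c] for r in range(m) for c in range(m)]
        for top in range(0, h, m)
        for left in range(0, w, m)
    ]


def window_reverse(windows: Sequence[Sequence[float]], m: int, h: int, w: int) -> Grid:
    """Inverse of window_partition: place each window back at its location."""
    grid = [[0.0] * w for _ in range(h)]
    per_row = w // m
    for idx, win in enumerate(windows):
        top, left = (idx // per_row) * m, (idx % per_row) * m
        for k, value in enumerate(win):
            grid[top + k // m][left + k % m] = value
    return grid


def running_mean(seq: Sequence[float]) -> List[float]:
    """Causal placeholder for Mamba: output t depends on tokens 0..t only."""
    out, total = [], 0.0
    for t, x in enumerate(seq, start=1):
        total += x
        out.append(total / t)
    return out


def wssm_sketch(
    grid: Sequence[Sequence[float]],
    m: int,
    seq_op: Callable[[Sequence[float]], List[float]],
) -> Grid:
    """Partition into windows, scan the window-ordered sequence, reverse."""
    h, w = len(grid), len(grid[0])
    windows = window_partition(grid, m)
    tokens = [v for win in windows for v in win]   # window-by-window order
    out = seq_op(tokens)
    size = m * m
    out_windows = [out[i:i + size] for i in range(0, len(out), size)]
    return window_reverse(out_windows, m, h, w)
```

Because neighbouring tokens in the scanned sequence are spatial neighbours inside a window, a recurrent operator sees local context first, while its state still carries information from earlier windows into later ones.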

III Experimental results

III-A Dataset and Implementation Details

To evaluate the effectiveness of the proposed HDMba, we used two Gaofen-5 HSI datasets: the HyperDehazing dataset (to be made publicly available at https://github.com/RsAI-lab/HyperDehazing) and HDD [6]. The HyperDehazing dataset is synthesized based on the DOS model using 100 clean scenes with 20 different haze thicknesses and 5 haze abundances. It contains a total of 2000 hazy and haze-free image pairs, each with a size of 512×512×305. 90% of this dataset was used for network training, while the remaining 10% was reserved for testing. HDD comprises 20 reference-free hazy images, each with dimensions 512×512×305. All images in this dataset were used for network testing. To meet memory requirements, we cropped the training data to 64×64 and the test data to 128×128.

We set the number of DMLs to $N = 4$ and the window size to $M = 8$. The loss weighting factors $\lambda_1$ and $\lambda_2$ are set to 1 and 0.1, respectively. The batch size is set to 4, and training runs for 10,000 iterations on each dataset. The Adam optimizer was employed, with $\beta_1 = 0.9$, $\beta_2 = 0.999$, and $\epsilon = 10^{-8}$. The initial learning rate was set to $1 \times 10^{-4}$ and adjusted with a cosine annealing strategy. The whole network was implemented in PyTorch on an NVIDIA GeForce RTX 3060 GPU.
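For intuition about the learning-rate schedule, the sketch below evaluates the standard closed-form cosine annealing curve. The initial rate of 1×10⁻⁴ and the 10,000 iterations come from the text; the minimum rate of 0 and a single annealing period spanning all iterations are assumptions, and the function name is hypothetical.

```python
import math


def cosine_annealed_lr(
    step: int,
    total_steps: int = 10_000,  # training iterations stated in the text
    base_lr: float = 1e-4,      # initial learning rate stated in the text
    min_lr: float = 0.0,        # assumed floor; not given in the text
) -> float:
    """Learning rate after `step` iterations of one cosine annealing period."""
    if not 0 <= step <= total_steps:
        raise ValueError("step must lie in [0, total_steps]")
    cosine = math.cos(math.pi * step / total_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + cosine)
```

Under these assumptions the rate starts at 1×10⁻⁴, passes through half that value at iteration 5,000, and decays to the floor at iteration 10,000.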

III-B Dehazing results

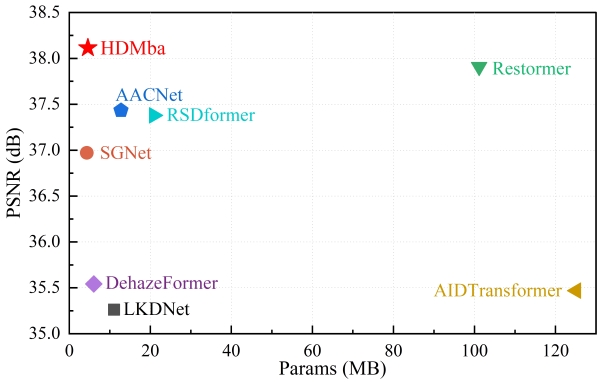

To quantitatively evaluate the dehazing performance, we used the structural similarity index measure (SSIM), peak signal-to-noise ratio (PSNR), universal image quality index (UQI), and spectral angle mapper (SAM) metrics for paired images, and the natural image quality evaluator (NIQE) and average gradient (AG) metrics for real images. The results are shown in Table I. Transformer-based methods generally outperform CNN-based methods, while HDMba achieves the best results on all metrics except UQI, where AACNet and SGNet score higher. Despite processing high-dimensional data, HDMba needs only 4.60M parameters, fewer than every Transformer-based network compared and most of the CNN-based ones.
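Two of the paired-image metrics have short closed forms, sketched below in plain Python using their common textbook definitions. The assumptions are that pixel values are scaled to [0, 1] (hence `max_value=1.0`) and that the spectral angle is returned in radians; the exact evaluation code and units behind Table I are not specified in the text, and the function names are illustrative.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB over flattened values."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0.0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(max_value ** 2 / mse)


def spectral_angle(pred_spectrum: Sequence[float], target_spectrum: Sequence[float]) -> float:
    """Angle in radians between two per-pixel spectra (smaller is better)."""
    dot = sum(p * t for p, t in zip(pred_spectrum, target_spectrum))
    norm = math.sqrt(sum(p * p for p in pred_spectrum)) * math.sqrt(
        sum(t * t for t in target_spectrum)
    )
    if norm == 0.0:
        raise ValueError("spectra must be non-zero")
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norm)))
```

The spectral angle ignores a uniform brightness scaling of the spectrum, which is why it complements PSNR: it isolates distortion in spectral shape from errors in overall intensity.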

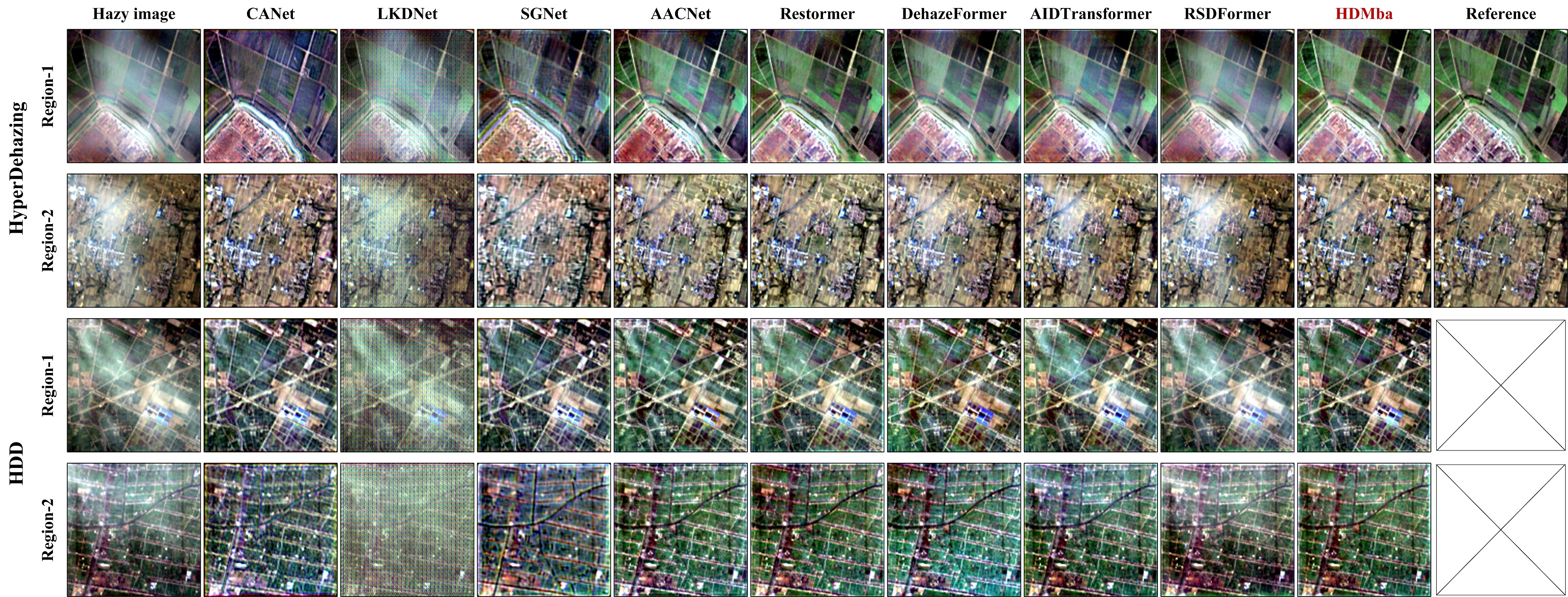

To visualize the effectiveness of dehazing, Fig. 3 presents the dehazed images produced by several state-of-the-art methods. It is evident that LKDNet, AIDTransformer, and RSDformer do not completely remove the haze. The scenes recovered by CANet and SGNet exhibit spectral distortions. While AACNet and Restormer manage to recover parts of the clean scene, some haze residue remains. In contrast, the proposed HDMba recovers the result closest to the clean scene, with a good reconstruction of surface details.

Table I: Quantitative comparison. SSIM, PSNR, UQI, and SAM are computed on HyperDehazing; NIQE and AG are computed on HDD.

| Category | Method | SSIM | PSNR | UQI | SAM | NIQE | AG | Params (M) |
|---|---|---|---|---|---|---|---|---|
| Model-based | Defog [6] | 0.7020 | 27.5621 | 0.7853 | 0.2637 | 17.8467 | 0.1983 | - |
| CNN-based | FFANet [7] | 0.9035 | 32.1437 | 0.9476 | 0.0891 | 18.6571 | 0.1357 | 4.69 |
| CNN-based | RSDehazeNet [8] | 0.9409 | 34.4354 | 0.9721 | 0.0672 | 17.9746 | 0.1470 | 1.51 |
| CNN-based | CANet [9] | 0.9542 | 34.6147 | 0.9743 | 0.0631 | 15.7951 | 0.2524 | 12.12 |
| CNN-based | LKDNet [10] | 0.9448 | 35.2614 | 0.9618 | 0.0588 | 19.7972 | 0.2616 | 11.06 |
| CNN-based | SGNet [11] | 0.9672 | 36.9704 | 0.9785 | 0.0568 | 16.3662 | 0.2032 | 4.32 |
| CNN-based | AACNet [12] | 0.9734 | 37.4322 | 0.9797 | 0.0425 | 15.2740 | 0.2556 | 12.76 |
| Transformer-based | Restormer [13] | 0.9702 | 37.9080 | 0.9755 | 0.0421 | 15.7965 | 0.2396 | 101.16 |
| Transformer-based | DehazeFormer [14] | 0.9708 | 35.5426 | 0.9739 | 0.0432 | 14.3863 | 0.2456 | 6.04 |
| Transformer-based | AIDTransformer [15] | 0.9723 | 35.4695 | 0.9736 | 0.0401 | 15.6097 | 0.2330 | 125.11 |
| Transformer-based | RSDformer [16] | 0.9709 | 37.3785 | 0.9743 | 0.0462 | 16.4790 | 0.2461 | 20.89 |
| Mamba-based | HDMba | 0.9763 | 38.1340 | 0.9765 | 0.0382 | 13.7959 | 0.2663 | 4.60 |

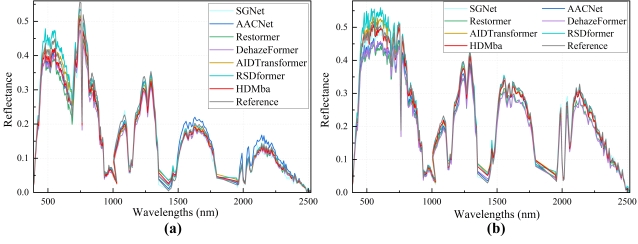

III-C Spectrum reconstruction analysis

As shown in Fig. 4, we selected building and vegetation scenes to compare spectral reconstruction performance. RSDformer has insufficient dehazing ability, resulting in spectral curves significantly higher than the reference. AIDTransformer exhibits similar issues (Fig. 4 (b)). AACNet, DehazeFormer, and Restormer produce spectra in the visible range that are lower than the reference, indicating excessive dehazing. In contrast, HDMba achieves spectra closest to the reference (Fig. 4 (b)), with spectral trends that are highly consistent despite some deviations in certain cases (Fig. 4 (a)).

III-D Performance across wavelengths

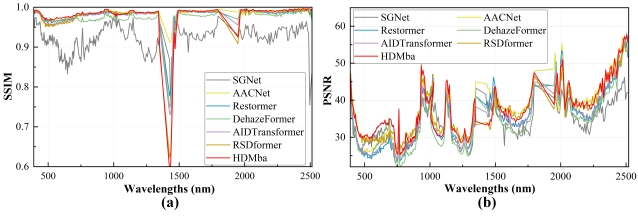

The effectiveness of HDMba in processing hazy HSIs across different bands is quantitatively evaluated using the SSIM and PSNR metrics, as shown in Fig. 5. The results indicate that HDMba outperforms the other methods across most bands, including SGNet (which has the lowest SSIM) and DehazeFormer (which has the lowest PSNR). It should be noted that the performance of HDMba degrades around 1430 nm and 1950 nm, wavelengths that lie close to the water and atmospheric absorption bands, where severe interference occurs. Nevertheless, our method exhibits excellent dehazing effects across nearly all spectral bands.

III-E Ablation Study

We conducted ablation experiments on HyperDehazing to investigate the effectiveness of each component of the proposed model. These experiments included evaluating the impact of constituent elements within the DML, as well as exploring the effect of different partition window sizes in the WSSM on the dehazing results.

1) Analysis of DML: We trained the network with variations in constituent elements of Mamba and MLP, presenting the corresponding dehazing results in Table II. It is evident that the combination of SSM, DWConv1d, and multiplication in Mamba yields excellent dehazing performance, with further enhancement achieved by incorporating MLP.

2) Analysis of window size: We assessed the effect of window size in the WSSM on dehazing, and the results are presented in Table III. As the window size grows from 2 to 16, SSIM rises from 0.9733 to 0.9787 and PSNR from 37.7522 to 38.3088, while the parameter count increases from 4.56M to 4.74M. We set the window size to 8 to strike a balance between performance and computational cost.

Table II: Ablation results for the DML components on HyperDehazing.

| Mamba: SSM | Mamba: DConv | Mamba: multiplication | MLP | SSIM | PSNR | Params (M) |
|---|---|---|---|---|---|---|
| | | | | 0.8062 | 28.1639 | 1.11 |
| | | | | 0.9696 | 36.1114 | 3.92 |
| | | | | 0.9680 | 36.2026 | 3.96 |
| | | | | 0.9737 | 36.4216 | 4.35 |
| | | | | 0.9783 | 37.2427 | 4.60 |

Table III: Effect of the WSSM window size on HyperDehazing.

| Window size | SSIM | PSNR | Params (M) |
|---|---|---|---|
| 2 | 0.9733 | 37.7522 | 4.56 |
| 4 | 0.9740 | 37.8846 | 4.57 |
| 8 | 0.9763 | 38.1340 | 4.60 |
| 16 | 0.9787 | 38.3088 | 4.74 |

IV Conclusion

In this paper, we propose a hyperspectral image dehazing network (HDMba) based on Mamba. The proposed residual DehazeMamba (RDM) blocks effectively characterize the complex haze distribution in HSI data, enhancing scene and texture detail recovery. Additionally, we design the window selective scan module (WSSM), which effectively extracts local haze region information and their differences from other regions, improving the perception of haze distribution and local changes. Extensive experimental results demonstrate that HDMba outperforms state-of-the-art methods in HSI dehazing performance and computational complexity. In future work, we will explore developing weakly supervised and generalized foundation models for HSI dehazing based on the proposed method.

References

- [1] A. F. H. Goetz, G. Vane, J. E. Solomon, and B. N. Rock, “Imaging spectrometry for Earth remote sensing,” Science, vol. 228, no. 4704, pp. 1147–1153, 1985.

- [2] H. Fu, G. Sun, L. Zhang, A. Zhang, J. Ren, X. Jia, and F. Li, “Three-dimensional singular spectrum analysis for precise land cover classification from UAV-borne hyperspectral benchmark datasets,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 203, pp. 115–134, 2023.

- [3] B. Rasti, Y. Chang, E. Dalsasso, L. Denis, and P. Ghamisi, “Image restoration for remote sensing: Overview and toolbox,” IEEE Geoscience and Remote Sensing Magazine, vol. 10, no. 2, pp. 201–230, 2022.

- [4] K. He, J. Sun, and X. Tang, “Single image haze removal using dark channel prior,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 12, pp. 2341–2353, 2011.

- [5] A. Makarau, R. Richter, R. Müller, and P. Reinartz, “Haze detection and removal in remotely sensed multispectral imagery,” IEEE Transactions on Geoscience and Remote Sensing, vol. 52, no. 9, pp. 5895–5905, 2014.

- [6] X. Kang, Z. Fei, P. Duan, and S. Li, “Fog model-based hyperspectral image defogging,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–12, 2022.

- [7] X. Qin, Z. Wang, Y. Bai, X. Xie, and H. Jia, “FFA-Net: Feature fusion attention network for single image dehazing,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 07, pp. 11908–11915, Apr. 2020.

- [8] J. Guo, J. Yang, H. Yue, H. Tan, C. Hou, and K. Li, “RSDehazeNet: Dehazing network with channel refinement for multispectral remote sensing images,” IEEE Transactions on Geoscience and Remote Sensing, vol. 59, no. 3, pp. 2535–2549, 2021.

- [9] X. Wen, Z. Pan, Y. Hu, and J. Liu, “An effective network integrating residual learning and channel attention mechanism for thin cloud removal,” IEEE Geoscience and Remote Sensing Letters, vol. 19, pp. 1–5, 2022.

- [10] P. Luo, G. Xiao, X. Gao, and S. Wu, “LKD-Net: Large kernel convolution network for single image dehazing,” in 2023 IEEE International Conference on Multimedia and Expo (ICME), 2023, pp. 1601–1606.

- [11] X. Ma, Q. Wang, and X. Tong, “A spectral grouping-based deep learning model for haze removal of hyperspectral images,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 188, pp. 177–189, 2022.

- [12] M. Xu, Y. Peng, Y. Zhang, X. Jia, and S. Jia, “AACNet: Asymmetric attention convolution network for hyperspectral image dehazing,” IEEE Transactions on Geoscience and Remote Sensing, vol. 61, pp. 1–14, 2023.

- [13] S. W. Zamir, A. Arora, S. Khan, M. Hayat, F. S. Khan, and M. Yang, “Restormer: Efficient transformer for high-resolution image restoration,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 5718–5729.

- [14] Y. Song, Z. He, H. Qian, and X. Du, “Vision transformers for single image dehazing,” IEEE Transactions on Image Processing, vol. 32, pp. 1927–1941, 2023.

- [15] A. Kulkarni and S. Murala, “Aerial image dehazing with attentive deformable transformers,” in 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2023, pp. 6294–6303.

- [16] T. Song, S. Fan, P. Li, J. Jin, G. Jin, and L. Fan, “Learning an effective transformer for remote sensing satellite image dehazing,” IEEE Geoscience and Remote Sensing Letters, vol. 20, pp. 1–5, 2023.

- [17] A. Gu and T. Dao, “Mamba: Linear-time sequence modeling with selective state spaces,” arXiv preprint arXiv:2312.00752, 2023.

- [18] R. Xu, S. Yang, Y. Wang, B. Du, and H. Chen, “A survey on Vision Mamba: Models, applications and challenges,” arXiv preprint arXiv:2404.18861, 2024.

- [19] H. Zhou, X. Wu, H. Chen, X. Chen, and X. He, “RSDehamba: Lightweight Vision Mamba for remote sensing satellite image dehazing,” arXiv preprint arXiv:2405.10030, 2024.

- [20] Z. Zheng and C. Wu, “U-shaped Vision Mamba for single image dehazing,” arXiv preprint arXiv:2402.04139, 2024.

- [21] T. Huang, X. Pei, S. You, F. Wang, C. Qian, and C. Xu, “LocalMamba: Visual state space model with windowed selective scan,” arXiv preprint arXiv:2403.09338, 2024.

- [22] M. Li, Y. Fu, and Y. Zhang, “Spatial-spectral transformer for hyperspectral image denoising,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 37, no. 1, pp. 1368–1376, Jun. 2023.