Hedging of time discrete auto-regressive stochastic volatility options

Abstract

Numerous empirical studies indicate the adequacy of the time discrete auto-regressive stochastic volatility models introduced by Taylor [Tay86, Tay05] in the description of the log-returns of financial assets. The pricing and hedging of contingent products that use these models for their underlying assets is a non-trivial exercise due to the incomplete nature of the corresponding market. In this paper we apply two volatility estimation techniques available in the literature for these models, namely Kalman filtering and the hierarchical-likelihood approach, in order to implement various pricing and dynamical hedging strategies. Our study shows that the local risk minimization scheme developed by Föllmer, Schweizer, and Sondermann is particularly appropriate in this setup, especially for at-the-money and in-the-money options or for low hedging frequencies.

Keywords: Stochastic volatility models, ARSV models, hedging techniques, incomplete markets, local risk minimization, Kalman filter, hierarchical-likelihood.

1 Introduction

Ever since Black, Merton, and Scholes introduced their celebrated option valuation formula [BS72, Mer76] a great deal of effort has been dedicated to reproducing that result using more realistic stochastic models for the underlying asset. In the discrete time modeling setup, the GARCH parametric family [Eng82, Bol86] has been a very popular and successful choice for which the option pricing and hedging problem has been extensively studied; see for example [Dua95, HN00, BEK+11, GCI10, Ort10], and references therein. Even though the GARCH specification can accommodate most stylized features of financial returns like leptokurticity, volatility clustering, and autocorrelation of squared returns, there are mathematical relations between some of its moments that impose undesirable constraints on some of the parameter values. For example, a well-known phenomenon [CPnR04] has to do with the relation that links the kurtosis of the process with the parameter that accounts for the persistence of the volatility shocks (the sum of the ARCH and the GARCH parameters, bound to be smaller than one in order to ensure stationarity). This relation implies that for time series with highly persistent volatility, like the ones observed in practice, the ARCH coefficient is automatically forced to be very small, which is in turn incompatible with having sizeable autocorrelation (ACF) for the squares (the ACF of the squares at lag is linear in this coefficient). This situation is aggravated when innovations with fat tails are used in order to better reproduce leptokurticity.

The structural rigidities associated with the finiteness of the fourth moment are of particular importance when using quadratic hedging methods, because there is an imperative need to remain in the category of square summable objects and finite kurtosis is a convenient way to ensure that (see, for example, [Ort10, Theorem 3.1 (iv)]).

The auto-regressive stochastic volatility (ARSV) models [Tay86, Tay05] that we will carefully describe later on in Section 2 are a parametric family designed in part to overcome the difficulties that we just explained. The defining feature of these models is the fact that the volatility (or a function of it) is determined by an autoregressive process that, unlike the GARCH situation, is exclusively driven by past volatility values and by designated innovations that are in principle independent from those driving the returns. The use of additional innovations introduces in the model a huge structural leeway because, this time around, the same constraint that ensures stationarity guarantees the existence of finite moments of arbitrary order which is of much value in the context of option pricing and hedging.

The modifications in the ARSV prescription that enrich the dynamics come at a price: conditional volatilities are no longer determined by the price process, as was the case in the GARCH situation. This causes added difficulties at the time of volatility and parameter estimation; in particular, a conditional likelihood cannot be written down in this context (see Section 2.1). This also has a serious impact when these models are used in derivatives pricing and hedging, because the existence of additional noise sources driving the dynamics accentuates the incomplete character of the corresponding market, which in turn complicates the pricing and hedging of contingent products.

The main goal of this paper is to show that appropriate implementations of the local risk minimization scheme developed by Föllmer, Schweizer, and Sondermann [FS86, FS91, Sch01] tailored to the ARSV setup provide an appropriate tool for pricing and hedging contingent products that use these models to characterize their underlying assets. The realization of this hedging scheme in the ARSV context requires two main tools:

• Use of a volatility estimation technique: as we already said, volatility for these models is not determined by the price process and hence becomes a genuinely hidden variable that needs to be estimated separately. In our work we will use a Kalman filtering approach advocated by [HRS94] and the so-called hierarchical likelihood (h-likelihood) strategy [LN96, LN06, dCL08, WLLdC11] which, roughly speaking, consists of carrying out a likelihood estimation while considering the volatilities as unobserved parameters that are part of the optimization problem. Even though both methods are adequate volatility estimation techniques, the h-likelihood technique has a much wider range of applicability, for it is not subject to the rigidity of the state space representation necessary for Kalman filtering and hence can be used for stochastic volatility models with complex link functions or when innovations are non-Gaussian.

• Use of a pricing kernel: this term refers to a probability measure equivalent to the physical one with respect to which the discounted prices are martingales. The expectation of the discounted payoff of a derivative product with respect to any of these equivalent measures yields an arbitrage free price for it. In our work we will also use these martingale measures in order to devise hedging strategies via local risk minimization. This is admittedly just an approximation of the optimal local risk minimization scheme that should be carried out with respect to the physical measure that actually quantifies the local risk. However, there are good reasons for using these equivalent martingale measures, mainly having to do with numerical computability and with the interpretation of the resulting value processes as arbitrage free prices. These arguments are analyzed in Section 3.2.

Regarding the last point, there are two equivalent martingale measures that we will be using. The first one is inspired by the Extended Girsanov Principle introduced in [EM98]. This principle allows the construction of a martingale measure in a general setup under which the process behaves as its “martingale component” used to do under the physical probability; this is the reason why this measure is sometimes referred to as the mean-correcting martingale measure, a denomination that we will adopt. This construction has been widely used in the GARCH context (see [BEK+11, Ort10] and references therein), where it admits a particularly simple and numerically efficient expression in terms of the probability density function of the model innovations. As we will see, in the ARSV case this feature is no longer available, and we will hence work with the expression coming from the predictable situation; even though in the ARSV case it produces a measure that does not satisfy the Extended Girsanov Principle, it is still a martingale measure. Secondly, in Theorem 2.3 we construct the so-called minimal martingale measure in the ARSV setup; the importance of this measure in our context is given by the fact that the value process of the local risk-minimizing strategy with respect to the physical measure for a derivative product coincides with its arbitrage free price when the minimal martingale measure is used as a pricing kernel. A concern with this measure in the ARSV setup is that it is in general signed; fortunately, the occurrence of negative Radon-Nikodym derivatives is extremely rare for the usual parameter values that one encounters in financial time series. Consequently, the bias introduced by censoring paths that yield negative Radon-Nikodym derivatives and using the result as a well-defined positive measure is hardly noticeable. A point that is worth emphasizing is that even though the value processes obtained when carrying out local risk minimization with respect to the physical and the minimal martingale measures are identical, the hedges are in general not the same and consequently neither are the hedging errors; this difference is studied in Proposition 3.6.

A general remark about local risk minimization that supports its choice in applications is its adaptability to different hedging frequencies. Most sensitivity based (delta) hedging methods for time series type underlyings are constructed by discretizing a continuous time hedging argument; consequently, when the hedging frequency diminishes, the use of such techniques loses pertinence. As we explain in Section 3.3, the local risk minimization hedging scheme can be adapted to prescribed changes in the hedging frequency, which regularly happens in real life applications.

The paper is organized in four sections. Section 2 contains a brief introduction to ARSV models and their dynamical features of interest to our study; this section contains two subsections that explain the volatility estimation techniques that we will be using and the martingale measures that we mentioned above. The details about the implementation of the local risk minimization strategy are contained in Section 3. Section 4 contains a numerical study where we carry out a comparison between the hedging performances obtained by implementing the local risk minimization scheme using the different volatility estimation techniques and the martingale measures introduced in Section 2. In order to enrich the discussion, we have added to the study two standard sensitivity based hedging methods that provide good results in other contexts, namely Black-Scholes [BS72] and an adaptation of Duan’s static hedge [Dua95] to the ARSV context. As a summary of the results obtained in that section, it can be said that local risk minimization outperforms sensitivity based hedging methods, especially when maturities are long and the hedging frequencies are low; this could be expected due to the adaptability feature of local risk minimization with respect to modifications in this variable. As to the different measures proposed, it is the minimal martingale measure that yields the best hedging performances when implemented using a Kalman based estimation of volatilities in the case of short and medium maturities, and h-likelihood for longer maturities and low hedging frequencies.

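Conventions and notations: The proofs of all the results in the paper are contained in the appendix in Section 6. Given a filtered probability space $(\Omega, P, \mathcal{F}, \{\mathcal{F}_t\}_{t \in \mathbb{N}})$ and two random variables $X$ and $Y$, we will denote by $E_t[X] := E[X \mid \mathcal{F}_t]$ the conditional expectation, by $\operatorname{cov}_t(X, Y) := E_t[XY] - E_t[X]E_t[Y]$ the conditional covariance, and by $\operatorname{var}_t(X) := E_t[X^2] - E_t[X]^2$ the conditional variance. A discrete-time stochastic process $\{X_t\}_{t \in \mathbb{N}}$ is predictable when $X_t$ is $\mathcal{F}_{t-1}$-measurable, for any $t \in \mathbb{N}$.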

Acknowledgments: we thank Alexandru Badescu for insightful comments about our work that have significantly improved its overall quality.

2 Auto-regressive stochastic volatility (ARSV) models

The auto-regressive stochastic volatility (ARSV) model was introduced in [Tay86] with the objective of capturing some of the most common stylized features observed in the excess returns of financial time series: volatility clustering, excess kurtosis, and autodecorrelation in the presence of dependence; this last feature can be visualized by noticing that financial log-returns time series exhibit autocorrelation at, say lag , close to zero while the autocorrelation of the squared returns is significantly different from zero. Let be the price at time of the asset under consideration, the risk-free interest rate, and the associated log-return. In this paper we will be considering the so-called standard ARSV model which is given by the prescription

| (2.1) |

where , is a real parameter, and . Notice that in this model, the volatility process is a non-traded stochastic latent variable that, unlike the situation in GARCH like models [Eng82, Bol86] is not a predictable process that can be written as a function of previous returns and volatilities.
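To make the prescription concrete, the following is a minimal simulation sketch. It assumes the usual way of writing Taylor's standard ARSV model: log-returns y_t = r + sigma_t * eps_t with standard Gaussian eps_t, and log-variance b_t = log(sigma_t^2) following the Gaussian AR(1) recursion b_t = gamma + phi * b_{t-1} + w_t with |phi| < 1. The symbol names, the function `simulate_arsv`, and the parameter values are illustrative assumptions, not taken from the text.

```python
import numpy as np

def simulate_arsv(n, r, gamma, phi, sigma_w, rng):
    """Simulate n log-returns of an (assumed) standard ARSV model.

    y_t = r + sigma_t * eps_t,           eps_t ~ N(0, 1)
    b_t = gamma + phi * b_{t-1} + w_t,   w_t ~ N(0, sigma_w^2),  b_t = log(sigma_t^2)
    """
    if not abs(phi) < 1:
        raise ValueError("stationarity requires |phi| < 1")
    mu_b = gamma / (1.0 - phi)               # stationary mean of b_t
    sd_b = sigma_w / np.sqrt(1.0 - phi**2)   # stationary standard deviation of b_t
    b = np.empty(n)
    b_prev = rng.normal(mu_b, sd_b)          # draw the initial state from the stationary law
    w = rng.normal(0.0, sigma_w, size=n)     # volatility innovations
    for t in range(n):
        b[t] = gamma + phi * b_prev + w[t]
        b_prev = b[t]
    sigma = np.exp(0.5 * b)                  # latent volatilities
    eps = rng.standard_normal(n)             # return innovations, independent of w
    return r + sigma * eps, sigma

rng = np.random.default_rng(0)
y, sigma = simulate_arsv(5000, r=0.0, gamma=-0.5, phi=0.95, sigma_w=0.2, rng=rng)
```

Drawing the initial state from the stationary distribution avoids the need for a burn-in period. Note that `sigma` is returned only for diagnostic purposes; in the setting of the paper it is a latent variable that is not observed.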

It is easy to prove that the unique stationary returns process induced by (2.1) available in the presence of the constraint is a white noise (the returns have no autocorrelation) with finite moments of arbitrary order. In particular, the marginal variance and kurtosis are given by

| (2.2) |

Moreover, let be the marginal variance of the stationary process , that is,

It can be shown [Tay86] that whenever is small and/or is close to one then the autocorrelation of the squared returns at lag can be approximated by

The existence of finite moments is particularly convenient in the context of quadratic hedging methods. For example, in Theorem 3.1 of [Ort10] it is shown that the finiteness of kurtosis is a sufficient condition for the availability of adequate integrability conditions necessary to carry out pricing and hedging via local risk minimization.
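For orientation, the stationary moments discussed above have well-known closed forms when the return innovations are Gaussian and independent of the volatility innovations. The display below is a sketch in assumed notation: $b_t = \log \sigma_t^2$ is a Gaussian AR(1) process with parameters $\gamma$, $\phi$, $\sigma_w$, and $x_t = \sigma_t \epsilon_t$ denotes the excess return. It follows from the moments of the log-normal distribution and may differ in form from the expressions used by the authors.

```latex
\begin{align*}
\mu_b &= \frac{\gamma}{1-\phi}, \qquad \sigma_b^2 = \frac{\sigma_w^2}{1-\phi^2}, \\
\operatorname{var}(x_t) &= E[\sigma_t^2] = \exp\!\left(\mu_b + \tfrac{1}{2}\sigma_b^2\right), \\
\operatorname{kurtosis}(x_t) &= \frac{3\,E[\sigma_t^4]}{E[\sigma_t^2]^2} = 3\exp\!\left(\sigma_b^2\right), \\
\operatorname{corr}\!\left(x_t^2, x_{t+h}^2\right) &= \frac{\exp\!\left(\sigma_b^2 \phi^h\right) - 1}{3\exp\!\left(\sigma_b^2\right) - 1}
\;\approx\; \frac{\exp\!\left(\sigma_b^2\right) - 1}{3\exp\!\left(\sigma_b^2\right) - 1}\,\phi^h .
\end{align*}
```

The approximation in the last line is accurate when $\sigma_b^2$ is small or $\phi$ is close to one, and it makes explicit that the kurtosis and the persistence of the squared returns are governed by different parameters.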

Let be the probability space where the model (2.1) has been formulated and let be the information set generated by the observables . This statement can be mathematically coded by setting , where is the sigma algebra generated by the prices . As several equivalent probability measures will appear in our discussion, we will refer to as the physical or historical probability measure.

Unless we indicate otherwise, in the rest of the discussion we will not assume that the innovations are Gaussian. We will also need the following marginal and conditional cumulant functions of with respect to the filtration .

If the innovations are Gaussian, we obviously have . When the random variables and are -independent, the following relation between and holds.

Lemma 2.1

Let be a random variable independent of and the filtration introduced above. Then

| (2.3) |

The cumulant functions that we just introduced are very useful at the time of writing down the conditional means and variances of price changes with respect to the physical probability; these quantities will show up frequently in our developments later on. Before we provide these expressions we introduce the following notation for discounted prices:

where denotes the risk-free interest rate. The tilde will be used in general for discounted processes. Using this notation and Lemma 2.1, a straightforward computation using the independence at any given time step of the innovations and the volatility process defined by (2.1), shows that

| (2.4) | |||||

| (2.5) |

2.1 Volatility and model estimation

The main complication in the estimation of ARSV models is due to the fact that does not determine the random variable and hence makes it impossible to write a likelihood function in the traditional sense. Many procedures have been developed over the years to get around this difficulty based on different techniques: moment matching [Tay86], generalized method of moments [MT90, JPR94, AS96], combinations of quasi-maximum likelihood with the Kalman filter [HRS94], simulated maximum likelihood [Dan94], MCMC [JPR94] and, more recently, hierarchical-likelihood [LN96, LN06, dCL08, WLLdC11] (abbreviated in what follows as h-likelihood). An excellent overview of some of these methods is provided in Chapter 11 of the monograph [Tay05].

In this paper we will focus on the Kalman and h-likelihood approaches since both are based on numerically efficient estimations of the volatility, a point of much importance in our developments.

State space representation and Kalman filtering. This approach is advocated by [HRS94] and consists of writing down the model (2.1) using a state space representation by setting

We assume in this paragraph that the innovations are Gaussian. Using these variables, (2.1) can be rephrased as the observation and state equations:

| (2.6) |

where . Kalman filtering cannot be directly applied to (2.6) due to the non-Gaussian character of the innovations of the observation equation; hence, the approximation in [HRS94] consists of using this procedure by thinking of as a Gaussian variable with mean and variance . The Kalman filter provides an algorithm to iteratively produce one-step ahead linear forecasts ; given that , these forecasts can be used to produce predictable estimations of the volatilities by setting and hence

| (2.7) |
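The filtering step described above can be sketched as follows. The sketch assumes the usual linearization of the model, in which the logarithm of the squared excess return equals the log-variance b_t plus the noise log(eps_t^2); for standard Gaussian eps_t this noise has mean approximately -1.27 and variance pi^2/2, and the quasi-likelihood approach treats it as Gaussian. The function `kalman_log_vol`, the symbol names, the guard against log(0), and the plug-in volatility exp(b/2) are assumptions made for illustration.

```python
import numpy as np

MEAN_LOG_CHI2 = -1.2704          # E[log(eps^2)] for eps ~ N(0, 1)
VAR_LOG_CHI2 = np.pi**2 / 2.0    # var[log(eps^2)] for eps ~ N(0, 1)

def kalman_log_vol(y, r, gamma, phi, sigma_w):
    """One-step-ahead forecasts of b_t = log(sigma_t^2) and their variances."""
    # Observation x_t = b_t + centered noise; the floor guards against log(0) (assumption).
    x = np.log(np.maximum((y - r) ** 2, 1e-300)) - MEAN_LOG_CHI2
    n = len(y)
    b_pred, p_pred = np.empty(n), np.empty(n)
    b = gamma / (1.0 - phi)              # stationary mean as initial forecast
    p = sigma_w**2 / (1.0 - phi**2)      # stationary variance as initial uncertainty
    for t in range(n):
        b_pred[t], p_pred[t] = b, p      # forecast of b_t based on data up to t-1
        gain = p / (p + VAR_LOG_CHI2)    # Kalman gain
        b_filt = b + gain * (x[t] - b)   # update with the observation at time t
        p_filt = (1.0 - gain) * p
        b = gamma + phi * b_filt         # predict b_{t+1}
        p = phi**2 * p_filt + sigma_w**2
    return b_pred, p_pred

rng = np.random.default_rng(0)
gamma, phi, sigma_w, r = -0.5, 0.95, 0.2, 0.0
y = r + np.exp(-5.0) * rng.standard_normal(500)   # placeholder returns for illustration
b_pred, p_pred = kalman_log_vol(y, r, gamma, phi, sigma_w)
sigma_hat = np.exp(0.5 * b_pred)                  # plug-in predictable volatility estimate
```

Since `b_pred[t]` only uses returns observed up to time t-1, the resulting volatility estimates are predictable in the sense used in the paper.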

The h-likelihood approach. This method [LN96, LN06, dCL08, WLLdC11] consists of carrying out a likelihood estimation while considering the volatilities as unobserved parameters that are part of the optimization problem. More specifically, let be the parameter vector, the vector that contains the unobserved , and let ; if and denote the corresponding probability density functions, the associated h-(log)likelihood is defined as

| (2.8) | |||||

The references listed above provide numerically efficient procedures to maximize (2.8) with respect to the variables and once the sample has been specified. An idea of much importance in our context is that, once the coefficients have been estimated, this procedure has an associated natural one-step-ahead forecast of the volatility, similar to the one obtained using the Kalman filter. The technique operates using the variables alternating prediction and updating; more specifically, we initialize the algorithm using the mean of the unique stationary solution of (2.1), that is, . We use the second equation in (2.1) to produce a linear prediction of based on the value of , namely . Once the quote is available, the h-likelihood approach can be used to update this forecast to the value by solving the optimization problem

This value can in turn be used to produce a forecast for by setting . If we continue this pattern we obtain a sequence of prediction/updating steps given by the expressions

These forecasts can be used to produce predictable estimations of the volatilities by setting

Remark 2.2

Even though both the Kalman and the h-likelihood methods constitute adequate estimation and volatility forecasting methods, the h-likelihood technique has a much wider range of applicability, for it is not subject to the rigidity of the state space representation and hence can be generalized to stochastic volatility models with more complex link functions than the second expression in (2.1), to situations where the innovations are non-Gaussian, or to situations where there is dependence between and .
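The prediction/updating recursion of the h-likelihood approach can be sketched as follows. The sketch assumes Gaussian innovations and assumes that the updating step maximizes, over the current log-variance b, the sum of the log-density of the new excess return given b and the log-density of b given its prediction; this reading of the optimization problem, the function names, and the bisection solver are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def update_b(z, m, sigma_w, tol=1e-10):
    """Maximize -b/2 - z^2 exp(-b)/2 - (b - m)^2 / (2 sigma_w^2) over b.

    z is the excess return, m the predicted log-variance. The objective is
    strictly concave, so its derivative has a single root, found by bisection.
    """
    def score(b):  # derivative of the objective, strictly decreasing in b
        return -0.5 + 0.5 * z * z * np.exp(-b) - (b - m) / sigma_w**2
    lo, hi = m - 1.0, m + 1.0
    while score(lo) < 0:      # widen the bracket until score(lo) >= 0
        lo -= 1.0
    while score(hi) > 0:      # widen the bracket until score(hi) <= 0
        hi += 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if score(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def hlik_forecasts(y, r, gamma, phi, sigma_w):
    """Alternate updating and prediction; returns one-step-ahead forecasts of b_t."""
    n = len(y)
    b_pred = np.empty(n)
    b_pred[0] = gamma / (1.0 - phi)                 # stationary mean as starting forecast
    for t in range(n - 1):
        b_upd = update_b(y[t] - r, b_pred[t], sigma_w)   # update once y_t is observed
        b_pred[t + 1] = gamma + phi * b_upd              # linear prediction of b_{t+1}
    return b_pred

rng = np.random.default_rng(0)
gamma, phi, sigma_w, r = -0.5, 0.95, 0.2, 0.0
y = r + np.exp(-5.0) * rng.standard_normal(500)    # placeholder returns for illustration
b_pred = hlik_forecasts(y, r, gamma, phi, sigma_w)
sigma_hat = np.exp(0.5 * b_pred)                   # plug-in predictable volatility estimate
```

Bisection is used here only because it is robust for a one-dimensional concave problem; a Newton iteration would also be a natural choice.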

2.2 Equivalent martingale measures for stochastic volatility models

Any technique for the pricing and hedging of options based on no-arbitrage theory requires the use of an equivalent measure for the probability space used for the modeling of the underlying asset under which its discounted prices are martingales with respect to the filtration generated by the observables. Measures with this property are usually referred to as risk-neutral or simply martingale measures and the Radon-Nikodym derivative that links this measure with the historical or physical one is called a pricing kernel.

A number of martingale measures have been formulated in the literature in the context of GARCH-like time discrete processes with predictable conditional volatility; see [BEK+11] for a good comparative account of many of them. Those constructions do not generalize to the SV context mainly due to the fact that the volatility process is not uniquely determined by the price process and hence it is not predictable with respect to the filtration generated by .

In this work we will explore two solutions to this problem that, when applied to option pricing and hedging, yield a good combination of theoretical computability and numerical efficiency. The first one has to do with the so-called minimal martingale measure and the second approach is based on an approximation inspired by the Extended Girsanov Principle [EM98].

The minimal martingale measure. As we will see in the next section, this measure is particularly convenient when using local risk minimization with respect to the physical measure [FS86, FS91, FS02] as a pricing/hedging technique, for it provides the necessary tools to interpret the associated value process as an arbitrage free price for the contingent product under consideration.

The minimal martingale measure is a measure equivalent to defined by the following property: every -martingale that is strongly orthogonal to the discounted price process , is also a -martingale. The following result spells out the specific form that takes when it comes to the ARSV models.

Theorem 2.3

Consider the price process associated to the ARSV model given by the expression (2.1). In this setup, the minimal martingale measure is determined by the Radon-Nikodym derivative that is obtained by evaluating at time the -martingale defined by

| (2.9) |

Proof. By Corollaries 10.28 and 10.29 and Theorem 10.30 in [FS02], the minimal martingale measure, when it exists, is unique and is determined by the Radon-Nikodym derivative obtained by evaluating at the -martingale

| (2.10) |

and is the martingale part in the Doob decomposition of with respect to . Hence we have

| (2.11) |

The equality (2.9) follows from (2.10), (2.11), (2.4), and (2.5).
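In general discrete-time notation, and as a sketch rather than a restatement of (2.9)–(2.11), the density process used in this proof can be written as follows. Here $X$ denotes the discounted price process, $\Delta X_k := X_k - X_{k-1}$, and $E_{k-1}$, $\operatorname{var}_{k-1}$ are conditional moments under the physical measure; these symbols are assumptions made for this display. The second line verifies that $X$ has conditionally centered increments under the resulting measure.

```latex
\begin{align*}
Z_t &= \prod_{k=1}^{t} \left( 1 - \frac{E_{k-1}[\Delta X_k]}{\operatorname{var}_{k-1}[\Delta X_k]}
\bigl( \Delta X_k - E_{k-1}[\Delta X_k] \bigr) \right), \\
E_{k-1}\!\left[ \frac{Z_k}{Z_{k-1}}\, \Delta X_k \right]
&= E_{k-1}[\Delta X_k] - \frac{E_{k-1}[\Delta X_k]}{\operatorname{var}_{k-1}[\Delta X_k]}\,
\operatorname{var}_{k-1}[\Delta X_k] = 0 .
\end{align*}
```

The term $\Delta X_k - E_{k-1}[\Delta X_k]$ is the increment of the martingale part in the Doob decomposition of $X$. Each factor can become negative when this increment is large and positive relative to the ratio of conditional variance to conditional mean, which is why the resulting measure may be signed.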

Remark 2.4

The measure obtained by using (2.9) is in general signed. Indeed, as the random variable is -adapted and is -predictable, the term can take arbitrarily negative values that can force to become negative. We will see in our numerical experiments that even though this is in general the case, negative occurrences are extremely unlikely for the usual parameter values that one encounters in financial time series. Consequently, the bias introduced by censoring paths that yield negative Radon-Nikodym derivatives and using as a well-defined positive measure is not noticeable.

Remark 2.5

The quantity in (2.9) is in general extremely difficult to compute. Lemma 2.1 allows us to rewrite it as a conditional expectation of a function that depends only on and hence it is reasonable to use the estimations and introduced in Section 2.1 to approximate . For example, if the innovations are Gaussian and we hence have , we will approximate

by or depending on the technique (Kalman or h-likelihood, respectively) used to estimate the conditional volatility.

The Extended Girsanov Principle and the mean correcting martingale measure. This construction has been introduced in [EM98] as an extension in discrete time and for multivariate processes of the classical Girsanov Theorem. This measure is designed so that when the process is considered with respect to it, its dynamical behavior coincides with that of its martingale component under the original historical probability measure. These martingale measures are widely used in the GARCH context (see for example [BEK+11, Ort10] and references therein).

In this paragraph we will work with models that are slightly more general than (2.1) in the sense that we will allow for predictable trend terms and generalized innovations, that is, our ARSV model will take the form

| (2.12) |

with an -measurable random variable.

Theorem 2.6

Given the model specified by (2.12), let be the conditional probability density function under of the random variable given and let be the stochastic discount factors defined by:

The process , is a -martingale such that and defines in the ARSV setup the martingale measure associated to Extended Girsanov Principle via the identity .

Remark 2.7

As and are independent processes, Rohatgi’s formula yields:

Unfortunately, there is no closed form expression for this integral even in the Gaussian setup. Though ad hoc numerical methods have been developed to treat it (see for instance [GLD04]), the computation of the stochastic discount factors for each time step may prove to be a computationally heavy task.
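As an illustration of the kind of numerical work involved, the density of a product of two independent random variables, one of them positive, is the integral over s of f_sigma(s) * f_eps(z / s) / s. The sketch below evaluates this integral by a trapezoidal rule for a log-normal volatility and a standard Gaussian innovation. The distributional choices, the function `product_density`, and the grid sizes are assumptions for illustration only; this is not one of the ad hoc methods cited above.

```python
import numpy as np

def product_density(z, m, v, n_grid=401):
    """Density at z of sigma * eps with log(sigma^2) ~ N(m, v), eps ~ N(0, 1), independent.

    The integral is computed in the variable u = log(sigma), which is Gaussian
    with mean m / 2 and standard deviation sqrt(v) / 2.
    """
    mu_u, sd_u = 0.5 * m, 0.5 * np.sqrt(v)
    u = np.linspace(mu_u - 8.0 * sd_u, mu_u + 8.0 * sd_u, n_grid)
    f_u = np.exp(-0.5 * ((u - mu_u) / sd_u) ** 2) / (sd_u * np.sqrt(2.0 * np.pi))
    s = np.exp(u)                                   # volatility values on the grid
    z = np.atleast_1d(np.asarray(z, dtype=float))[:, None]
    f_eps = np.exp(-0.5 * (z / s) ** 2) / np.sqrt(2.0 * np.pi)
    integrand = f_u * f_eps / s                     # f_u(u) * f_eps(z / s) / s
    du = u[1] - u[0]
    # Trapezoidal rule along the u axis, one value per entry of z.
    return du * (integrand.sum(axis=1) - 0.5 * (integrand[:, 0] + integrand[:, -1]))

z_grid = np.linspace(-0.1, 0.1, 2001)
f = product_density(z_grid, m=-10.0, v=0.4)
```

Even in this simple setting every density evaluation requires a full quadrature, which gives an idea of the cost of evaluating such discount factors at each time step along each simulated path.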

The point that we just raised in the remark leads us to introduce yet another martingale measure that mimics the good analytical properties of the Extended Girsanov Principle in the GARCH case but that, in the ARSV setup, only shares approximately its other defining features. In order to present this construction we define the market price of risk process:

| (2.13) |

to which we can associate what we will call the stochastic discount factor:

where is the conditional probability density function of the innovations with respect to the measure given .

Theorem 2.8

Using the notation that we just introduced, we have that

(i) The process , is a -martingale such that .

(ii) defines an equivalent measure such that under which the discounted price process determined by (2.12) is a martingale. By an abuse of language we will refer to as the ARSV mean correcting martingale measure.

3 Local risk minimization for ARSV options

In this section we use the local risk minimization pricing/hedging technique developed by Föllmer, Schweizer, and Sondermann (see [FS86, FS91, Sch01], and references therein) as well as the different measures and volatility estimation techniques introduced in the previous section to come up with prices and hedging ratios for European options that have an ARSV process as model for the underlying asset.

As we will see, this technique provides simultaneous expressions for prices and hedging ratios that, even though they require Monte Carlo computations in most cases, admit convenient interpretations based on the notion of hedging error minimization that can be adapted to various models for the underlying asset. This hedging approach has been studied in [Ort10] in the context of GARCH models with Gaussian innovations and in [BO11] for more general innovations. Additionally, the technique can be tuned in order to accommodate different prescribed hedging frequencies and hence adapts very well to realistic situations encountered by practitioners. On the negative side, like any other quadratic method, local risk minimization penalizes shortfall and windfall hedging errors equally, which may sometimes lead to inadequate hedging decisions.

3.1 Generalized trading strategies and local risk minimization

We now briefly review the necessary concepts on pricing by local risk minimization that we will need in the sequel. The reader is encouraged to consult Chapter 10 of the excellent monograph [FS02] for a self-contained and comprehensive presentation of the subject.

As we have done so far, we will denote by the price of the underlying asset at time . The symbol denotes the continuously compounded risk-free interest rate paid on the currency of the underlying in the period that goes from time to ; we will assume that is a predictable process. Denote by

The price at time of the riskless asset such that , is given by .

Let now be a European contingent claim that depends on the terminal value of the risky asset . In the context of an incomplete market, it will be in general impossible to replicate the payoff by using a self-financing portfolio. Therefore, we introduce the notion of generalized trading strategy, in which the possibility of additional investment in the riskless asset throughout the trading periods up to expiry time is allowed. All the following statements are made with respect to a fixed filtered probability space :

• A generalized trading strategy is a pair of stochastic processes such that is adapted and is predictable. The associated value process of is defined as

• The gains process of the generalized trading strategy is given by

• The cost process is defined by the difference

These processes have discounted versions , , and defined as:

Assume now that both and the are in . A generalized trading strategy is called admissible for whenever it is in and its associated value process is such that

The hedging technique via local risk minimization consists of finding the strategies that minimize the local risk process

| (3.1) |

within the set of admissible strategies . More specifically, the admissible strategy is called local risk-minimizing if

for all and each admissible strategy . It can be shown [FS02, Theorem 10.9] that an admissible strategy is local risk-minimizing if and only if the discounted cost process is a -martingale and it is strongly orthogonal to , in the sense that , -a.s., for any . More explicitly, whenever a local risk-minimizing strategy exists, it is fully determined by the backwards recursions:

| (3.2) | |||||

| (3.3) | |||||

| (3.4) |

The initial investment determined by these relations will be referred to as the local risk minimization option price associated to the measure .
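One backward step of such a recursion amounts to a conditional least-squares regression: the hedge ratio is the conditional covariance between the next discounted value and the discounted price increment divided by the conditional variance of that increment, and the current value is the conditional mean of the next value minus the hedge ratio times the conditional mean of the increment. The sketch below replaces conditional moments by sample moments over simulated one-step scenarios; the function `lrm_step`, the log-normal toy dynamics, the zero interest rate, and all parameter values are assumptions for illustration.

```python
import numpy as np

def lrm_step(v_next, ds):
    """Sample version of one backward local risk minimization step.

    v_next: samples of the discounted value one step ahead.
    ds:     samples of the discounted price increment over the same step.
    Returns the hedge ratio (cov / var) and the current value.
    """
    ds_centered = ds - ds.mean()
    xi = np.mean((v_next - v_next.mean()) * ds_centered) / np.mean(ds_centered**2)
    v_now = v_next.mean() - xi * ds.mean()
    return xi, v_now

rng = np.random.default_rng(1)
s0, strike = 100.0, 100.0
# Toy one-step dynamics with a small positive drift (not a martingale).
s1 = s0 * np.exp(0.0005 + 0.02 * rng.standard_normal(100_000))
payoff = np.maximum(s1 - strike, 0.0)        # call payoff at the end of the step
ds = s1 - s0
xi, v0 = lrm_step(payoff, ds)

# Cost increment of the strategy: mean zero and uncorrelated with the price increment.
cost = payoff - v0 - xi * ds
```

The two properties of `cost` noted in the last comment are the sample counterparts of the martingale and strong orthogonality conditions on the discounted cost process.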

3.2 Local risk minimization with respect to a martingale measure

As we have explicitly indicated in expressions (3.2)–(3.4), the option values and the hedging ratios that they provide depend on a previously chosen probability measure and a filtration. In the absence of external sources of information, the natural filtration to be used is the one generated by the price process. Regarding the choice of the probability measure, the first candidate that should be considered is the physical or historical probability associated to the price process due to the fact that from a risk management perspective this is the natural measure that should be used in order to construct the local risk (3.1).

In practice, the use of the physical probability encounters two major difficulties. First, when (3.2)–(3.4) are written down with respect to this measure, the resulting expressions are convoluted and numerically difficult to estimate due to the high variance of the associated Monte Carlo estimators; a hint of this difficulty in the GARCH situation can be seen in Proposition 2.5 of [Ort10]. Second, unless the discounted prices are martingales with respect to the physical probability measure or there is a minimal martingale measure available, the option prices that result from this technique cannot be interpreted as arbitrage free prices.

These reasons lead us to explore the local risk minimization strategy for martingale measures and, more specifically, for the martingale measures introduced in Section 2.2. Given that the trend terms that separate the physical measure from being a martingale measure are usually very small when dealing with daily or weekly financial returns, it is expected that the inaccuracy incurred by using a conveniently chosen martingale measure will be smaller than the numerical error that we would face in the Monte Carlo evaluation of the expressions corresponding to the physical measure; see [Ort10, Proposition 3.2] for an argument in this direction in the GARCH context. In any case, the next section will be dedicated to carrying out an empirical comparison between the hedging performances obtained using the different measures in Section 2.2, as well as the various volatility estimation techniques that we described and that are necessary to use them.

The following proposition is proved using a straightforward recursive argument in expressions (3.2)–(3.4) combined with the martingale hypothesis in its statement.

Proposition 3.1

Let be an equivalent martingale measure for the price process and be a European contingent claim that depends on the terminal value of the risky asset . The local risk minimizing strategy with respect to the measure is determined by the recursions:

| (3.5) | |||||

| (3.6) | |||||

| (3.7) |

where

| (3.8) |

Remark 3.2

When expression (3.7) is evaluated at it yields the initial investment necessary to set up the generalized local risk minimizing trading strategy that fully replicates the derivative and coincides with the arbitrage free price for that results from using as a pricing measure. Obviously, this connection only holds when local risk minimization is carried out with respect to a martingale measure.

Remark 3.3

Local risk-minimizing trading strategies computed with respect to a martingale measure also minimize [FS02, Proposition 10.34] the so-called remaining conditional risk, defined as the process , ; this is in general not true outside the martingale framework (see [Sch01, Proposition 3.1] for a counterexample). Analogously, local risk minimizing strategies are also variance-optimal, that is, they minimize (see [FS02, Proposition 10.37]). This is particularly relevant in the ARSV context, in which a standard sufficient condition (see [FS02, Theorem 10.40]) guaranteeing that local risk minimization with respect to the physical measure implies variance optimality does not hold; we recall that this condition requires the deterministic character of the quotient

Indeed, expressions (2.4) and (2.5), together with Lemma 2.3 imply that in our situation

which is obviously not deterministic.

Remark 3.4

The last equality in (3.8) is of much importance when using Monte Carlo simulations to evaluate (3.5)–(3.7) and suggests the correct estimator that must be used in practice: if we generate under the measure a set of price paths all of which start at at time and take values at time , then in view of the last equality in (3.8) we write

We warn the reader that an estimator for based on the Monte Carlo evaluation of

would have much more variance and once inserted in the denominator of (3.6) would produce unacceptable results.

Remark 3.5

The Monte Carlo estimation of the expressions (3.5)–(3.7) requires the generation of price paths with respect to the martingale measure . In the GARCH context this can be easily carried out for a variety of pricing kernels by rewriting the process in connection with the new equivalent measure in terms of new innovations (see for example [Dua95, Ort10, GCI10, BEK+11]). This method can be combined with modified Monte Carlo estimators that enforce the martingale condition and that have a beneficial variance reduction effect like, for example, the Empirical Martingale Simulation technique introduced in [DS98, DGS01]. Unfortunately, this is difficult to carry out in our context and, more importantly, it does not produce good numerical results. Given that all the pricing measures introduced in the previous section are constructed out of the physical one, the best option in our setup consists of carrying out the path simulation with respect to the physical measure and computing the expectations in (3.5)–(3.7) using the corresponding Radon-Nikodym derivative. More specifically, let be an equivalent martingale measure that is obtained out of the physical measure by constructing a Radon-Nikodym derivative of the form:

such that the process defined by is a -martingale that satisfies ; notice that all the measures introduced in the previous section are of that form. In that setup we define the process by that allows us to rewrite -expectations in terms of -expectations, that is, for any measurable function and any ,

The proof of this equality is a straightforward consequence of [FS02, Proposition A.11] and of the specific way in which the measure is constructed.
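The reweighting idea described in Remark 3.5 can be sketched in a few lines of Python. The example below is only a minimal illustration under the assumption that the change of measure takes the usual form, in which an expectation under the martingale measure equals the expectation under the physical measure of the same quantity multiplied by the relevant Radon-Nikodym weight; in the conditional setting of the remark, the weights play the role of the ratio process defined there. The function name and the two-point toy distribution are hypothetical.

```python
from typing import Sequence


def reweighted_expectation(values: Sequence[float],
                           rn_weights: Sequence[float]) -> float:
    """Estimate an expectation under the pricing measure from samples
    generated under the physical measure.

    values[i] is the quantity of interest on path i and rn_weights[i] is
    the realization of the Radon-Nikodym weight on the same path.
    """
    n = len(values)
    if n == 0 or n != len(rn_weights):
        raise ValueError("need two non-empty samples of equal length")
    return sum(f * z for f, z in zip(values, rn_weights)) / n


# Toy check (hypothetical numbers): under P the outcomes +1 and -1 are
# equally likely; under Q the outcome +1 has probability 0.75, so the
# Radon-Nikodym derivative equals 1.5 on +1 and 0.5 on -1.
samples = [1.0, -1.0]
weights = [1.5, 0.5]
q_mean = reweighted_expectation(samples, weights)             # E^Q[X] = 0.5
total_mass = reweighted_expectation([1.0, 1.0], weights)      # E^P[dQ/dP] = 1
```

A practical consequence of this design is that a single set of paths simulated under the physical measure can be reused for all the pricing measures under comparison, changing only the weights.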

3.3 Local risk minimization and changes in the hedging frequency

As we already pointed out, one of the major advantages of the local risk minimization hedging scheme when compared to other sensitivity based methods is its adaptability to prescribed changes in the hedging frequency. Indeed, suppose that the life of the option with maturity in time steps is partitioned into identical time intervals of duration ; this assumption implies the existence of an integer such that .

We now want to set up a local risk minimizing replication strategy for in which hedging is carried out once every time steps. We will denote by the hedging ratio at time that presupposes that the next hedging will take place at time . The value of such ratios will be obtained by minimizing the -spaced local risk process:

where is a cost process constructed out of value and gains processes, and that only take into account the prices of the underlying assets at time steps , in particular, given an integer such that

A straightforward modification of the argument in [FS02, Theorem 10.9] proves that the solution of this local risk minimization problem with modified hedging frequency with respect to a martingale measure is given by the expressions:

| (3.9) | |||||

| (3.10) | |||||

| (3.11) |

for any . As a follow up to what we pointed out in Remark 3.4, the denominator in (3.10) should be computed by first noticing that

and then using the appropriate Monte Carlo estimator for the variance, that is,

| (3.12) |

where are realizations of the price process under at time , using paths that have all the same origin at time . If the interest rate process is constant and equal to , then (3.12) obviously reduces to

3.4 Local risk minimization and the minimal martingale measure

Local risk-minimization requires picking a particular probability measure in the problem. We also saw that it is only when discounted prices are martingales with respect to that measure that the obtained value process for the option in question coincides with the arbitrage free price that one would obtain by using that martingale measure as a pricing kernel. This observation particularly concerns the physical probability which one would take as the first candidate to use this technique since it is the natural measure to be used when quantifying the local risk.

The solution of this problem is one of the motivations for the introduction of the minimal martingale measure that we defined in Section 2.2. Indeed, it can be proved (see Theorem 10.22 in [FS02]) that the value process of the local risk-minimizing strategy with respect to the physical measure coincides with the arbitrage free price for obtained by using as a pricing kernel. More specifically, if is the value process for obtained out of formulas (3.2)–(3.4) using the physical measure then

| (3.13) |

We emphasize that, as we pointed out in Remark 2.4, the minimal martingale measure in the ARSV setup is in general signed. Nevertheless, the occurrences of negative Radon-Nikodym derivatives are extremely unlikely, at least when dealing with Gaussian innovations, which justifies its use for local risk minimization.

We also underline that even though the value processes obtained when carrying out local risk minimization with respect to the physical and the minimal martingale measures are identical, the hedges are in general not the same and consequently neither are the hedging errors. In the next proposition we provide a relation that links both hedges and identify situations in which they coincide. Before we proceed we need to introduce the notion of global (hedging) risk process: it can be proved that once a probability measure has been fixed, if there exists a local risk-minimizing strategy with respect to it, then it is unique (see [FS02, Proposition 10.9]) and the discounted payoff can be decomposed as (see [FS02, Corollary 10.14])

| (3.14) |

with the discounted gains process associated to and , a sequence that we will call the (discounted) global (hedging) risk process. is a square integrable -martingale that satisfies and that is strongly orthogonal to in the sense that

The decomposition (3.14) shows that measures how far is from the terminal value of the self-financing portfolio uniquely determined by the initial investment and the trading strategy given by (see [LL08, Proposition 1.1.3]).

Proposition 3.6

Let be a European contingent claim that depends on the terminal value of the risky asset and let and be the local risk minimizing hedges associated to the minimal martingale measure and the physical measure , respectively. Let be the associated -global risk process. Then, for any :

| (3.15) |

If the processes and are either –strongly orthogonal or –independent, then .

4 Empirical study

The goal of this section is to compare the hedging performances obtained by implementing the local risk minimization scheme using the different volatility estimation techniques and martingale measures introduced in Section 2. In order to enrich the discussion, we will add to the list two standard sensitivity based hedging methods that provide good results in other contexts, namely standard Black-Scholes [BS72] and an adaptation of Duan’s static hedge [Dua95] to the ARSV context.

The way in which we proceed with the comparison consists of taking a particular ARSV model as the price path generating process and then carrying out the replication of a European call option according to the prescribed list of hedging methods for various moneyness, strikes and hedging frequencies. At the end of the life of each of these options, the square hedging error is recorded. This task is carried out for a number of independently generated price paths, which allows us to estimate a mean square hedging error that we will use to compare the different hedging techniques.
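The bookkeeping behind this comparison can be sketched as follows. The code is a minimal illustration, not the implementation used for the figures: it assumes a constant interest rate per time step, a self-financing portfolio whose discounted terminal value is the initial investment plus the discounted gains, and a hedge that is rebalanced once every `step` time steps. All function names and the toy numbers are hypothetical.

```python
import math
from typing import Callable, Sequence


def terminal_hedging_error(path: Sequence[float],
                           hedges: Sequence[float],
                           initial_value: float,
                           payoff: Callable[[float], float],
                           rate: float = 0.0,
                           step: int = 1) -> float:
    """Discounted payoff minus discounted terminal portfolio value.

    path[n] is the price at time step n, hedges[i] is the hedge ratio
    held between time steps i*step and (i+1)*step, and rate is the
    (assumed constant) interest rate per time step.
    """
    maturity = len(path) - 1
    if step < 1 or maturity < 1 or maturity % step != 0:
        raise ValueError("maturity must be a positive multiple of step")
    if len(hedges) != maturity // step:
        raise ValueError("one hedge ratio per hedging period is required")
    disc = [math.exp(-rate * n) * s for n, s in enumerate(path)]
    # Discounted gains accumulated over the hedging periods.
    gains = sum(xi * (disc[(i + 1) * step] - disc[i * step])
                for i, xi in enumerate(hedges))
    return math.exp(-rate * maturity) * payoff(path[-1]) - (initial_value + gains)


def mean_square_hedging_error(errors: Sequence[float]) -> float:
    """Mean of the squared terminal hedging errors over all paths."""
    if not errors:
        raise ValueError("at least one hedging error is required")
    return sum(e * e for e in errors) / len(errors)


def call(strike: float) -> Callable[[float], float]:
    """European call payoff with the given strike."""
    return lambda s: max(s - strike, 0.0)


# Toy one-period binomial example (hypothetical numbers) in which the
# hedge 0.5 with initial investment 5 replicates the call exactly.
paths = [[100.0, 110.0], [100.0, 90.0]]
errors = [terminal_hedging_error(p, [0.5], 5.0, call(100.0)) for p in paths]
mse = mean_square_hedging_error(errors)
```

In an incomplete market such as the ARSV one the errors do not vanish, and the mean square hedging error computed this way is the quantity we use to rank the hedging methods.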

The price generating ARSV process. The price process chosen for our simulations is obtained by taking the model that has as associated log-returns one of the ARSV prescriptions studied in [WLLdC11]. It consists of taking the following parameters in (2.1):

The use of formulas (2.2) shows that the resulting log-returns are very leptokurtic () and volatile (the stationary variance is which amounts to an annualized volatility of 47%). The intention behind this choice is to exacerbate the defects of the different hedging methods in order to better compare them.
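As a rough illustration of how such moments follow from the model parameters, the sketch below assumes that the log-returns take the common ARSV(1) form r_t = mu + sigma_t * eps_t with b_t = log(sigma_t^2) = gamma + phi * b_{t-1} + w_t, where eps_t is standard Gaussian and w_t is Gaussian with standard deviation sigma_w; this parametrization is our assumption and may differ from the exact convention of (2.1). Under it, the stationary variance and kurtosis follow from the log-normal moments of sigma_t^2. The parameter values below are hypothetical and are not the ones used in our simulations; the figure of 252 trading days per year is also an assumption.

```python
import math
import random
from typing import List, Tuple


def arsv_moments(gamma: float, phi: float, sigma_w: float) -> Tuple[float, float]:
    """Stationary variance and kurtosis of the log-returns in the assumed
    Gaussian ARSV(1) parametrization."""
    if not -1.0 < phi < 1.0:
        raise ValueError("stationarity requires |phi| < 1")
    mean_b = gamma / (1.0 - phi)               # stationary mean of b_t
    var_b = sigma_w ** 2 / (1.0 - phi ** 2)    # stationary variance of b_t
    variance = math.exp(mean_b + 0.5 * var_b)  # E[sigma_t^2]
    kurtosis = 3.0 * math.exp(var_b)           # 3 * E[sigma^4] / E[sigma^2]^2
    return variance, kurtosis


def simulate_arsv_returns(n: int, mu: float, gamma: float, phi: float,
                          sigma_w: float, seed: int = 0) -> List[float]:
    """Simulate n log-returns, starting b_t from its stationary law."""
    rng = random.Random(seed)
    mean_b = gamma / (1.0 - phi)
    var_b = sigma_w ** 2 / (1.0 - phi ** 2)
    b = rng.gauss(mean_b, math.sqrt(var_b))
    returns = []
    for _ in range(n):
        b = gamma + phi * b + rng.gauss(0.0, sigma_w)
        returns.append(mu + math.exp(0.5 * b) * rng.gauss(0.0, 1.0))
    return returns


# Hypothetical parameter values, chosen only for illustration.
variance, kurtosis = arsv_moments(gamma=-0.8, phi=0.9, sigma_w=0.3)
annualized_vol = math.sqrt(252 * variance)  # assumes 252 daily steps per year
sample = simulate_arsv_returns(50, 0.0, -0.8, 0.9, 0.3, seed=1)
```

The kurtosis formula makes explicit why this family can combine high volatility persistence (phi close to one) with heavy tails, which is the feature that we exploit when choosing a deliberately demanding test model.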

The hedging methods. Given the price paths generated by the ARSV process detailed in the previous paragraph, we will carry out the replication of European call options that have them as underlying asset using local risk minimization with respect to the two martingale measures introduced in Section 2.2, namely:

- ARSV mean correcting martingale measure, introduced in Theorem 2.8. The hedging method is abbreviated as LRM-MCMM in the figures.

- Minimal martingale measure. Denoted by and introduced in Theorem 2.3. The hedging method is abbreviated as LRM-MMM in the figures. This measure may be signed in the ARSV context but this happens with extremely low probability (see Remark 2.4); in our simulations we did not encounter this situation even a single time. Local risk minimization with respect to this measure yields the same value process as the physical probability but not the same hedging ratios; Proposition 3.6 spells out the difference and provides sufficient conditions for them to coincide.

The construction of all these measures requires conditional expectations of functions of the conditional variance of the log-returns with respect to the filtration generated by the price process. As this filtration does not determine the conditional volatilities, those expectations cannot be easily computed; we hence proceed by estimating the conditional volatilities via Kalman filtering and a prediction-update routine based on the h-likelihood approach, both briefly described in Section 2.1. Different volatility estimation techniques lead to different pricing/hedging kernels and hence to different hedging performances that we compare in our simulations.
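For readers who want a concrete picture of a Kalman based volatility estimate, the following Python sketch implements a scalar filter for the log-variance. It is a minimal illustration under assumptions that are ours and not necessarily those of Section 2.1: the log-variance follows b_t = gamma + phi * b_{t-1} + w_t, the observation is the logarithm of the squared demeaned return, y_t = b_t + log(eps_t^2), and the non-Gaussian noise log(eps_t^2) is replaced by a Gaussian one with the same mean (about -1.2704) and variance (pi^2/2), as in the quasi-likelihood approach of [HRS94]. All names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

LOG_CHI2_MEAN = -1.2704          # E[log eps^2] for a standard Gaussian eps
LOG_CHI2_VAR = math.pi ** 2 / 2  # Var[log eps^2] for a standard Gaussian eps


def kalman_log_variance(log_sq_returns: Sequence[float], gamma: float,
                        phi: float, sigma_w: float) -> List[Tuple[float, float]]:
    """Filtered mean and variance of the log-variance b_t for each
    observation y_t = log((r_t - mu)^2).

    The caller is responsible for handling zero returns before taking logs.
    """
    if not -1.0 < phi < 1.0:
        raise ValueError("stationarity requires |phi| < 1")
    # Initialize at the stationary distribution of b_t.
    b = gamma / (1.0 - phi)
    p = sigma_w ** 2 / (1.0 - phi ** 2)
    filtered = []
    for y in log_sq_returns:
        # Prediction step.
        b_pred = gamma + phi * b
        p_pred = phi ** 2 * p + sigma_w ** 2
        # Update step with the Gaussian approximation of the noise.
        gain = p_pred / (p_pred + LOG_CHI2_VAR)
        b = b_pred + gain * (y - (b_pred + LOG_CHI2_MEAN))
        p = (1.0 - gain) * p_pred
        filtered.append((b, p))
    return filtered


# Toy check (hypothetical parameters): observations that sit exactly at
# their unconditional mean leave the filtered state at its stationary mean.
stationary_mean = -0.8 / (1.0 - 0.9)
estimates = kalman_log_variance([stationary_mean + LOG_CHI2_MEAN] * 5,
                                gamma=-0.8, phi=0.9, sigma_w=0.3)
```

A volatility estimate is then obtained as exp(b / 2) from the filtered log-variance. The large observation noise variance explains why a filter of this kind reacts slowly to individual returns.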

We will complete the comparison exercise with two more methods:

- Black-Scholes: we ignore the fact that the price process is generated via an ARSV model and we handle the hedging using the standard Black-Scholes delta [BS72] as if the underlying were a realization of a log-normal process with constant drift and volatility given by the drift and marginal volatility (given by the expression (2.2)) of the ARSV model specified above.

- Duan’s static delta hedge: this is a generalization of the Black-Scholes delta hedge that has been introduced in [Dua95, Corollary 2.4] in the GARCH context. In that result the author computes the derivative of a European call price (the put delta can be readily obtained from put-call parity) with respect to the price of the underlying in an arbitrary incomplete situation in which that option price is simply obtained as the expectation of the discounted payoff with respect to a given pricing kernel , that is:

In those circumstances:

In our empirical study we will use this hedging technique using the various pricing kernels previously introduced. A dynamical refinement of this hedge can be obtained by considering additional dependences of the value process on the underlying price that may occur (like in our case) through the volatility process [GR99, BO11]. This will not be considered in our study.
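The two benchmark hedges just described can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the Black-Scholes delta is the textbook formula with a constant interest rate and volatility expressed per time step, and the static delta is written as we recall it from [Dua95, Corollary 2.4], namely the discounted expectation under the pricing kernel of the ratio between terminal and current price on the event that the call ends in the money. Function names and numbers are hypothetical, and the terminal prices are assumed to be equally weighted samples drawn under the pricing kernel (samples drawn under the physical measure would additionally need Radon-Nikodym weights).

```python
import math
from typing import Sequence


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def black_scholes_call_delta(spot: float, strike: float, rate: float,
                             sigma: float, steps: float) -> float:
    """Black-Scholes call delta; rate and sigma are per time step and
    steps is the number of time steps left to maturity."""
    if spot <= 0.0 or strike <= 0.0 or sigma <= 0.0 or steps <= 0.0:
        raise ValueError("spot, strike, sigma and steps must be positive")
    total_vol = sigma * math.sqrt(steps)
    d1 = (math.log(spot / strike) + rate * steps) / total_vol + 0.5 * total_vol
    return norm_cdf(d1)


def duan_static_call_delta(terminal_prices: Sequence[float], spot: float,
                           strike: float, rate: float, steps: float) -> float:
    """Monte Carlo estimate of the static call delta from terminal prices
    simulated under the pricing kernel, all started at the price spot."""
    n = len(terminal_prices)
    if n == 0 or spot <= 0.0:
        raise ValueError("need a non-empty sample and a positive spot")
    in_the_money = sum(s_T / spot for s_T in terminal_prices if s_T >= strike)
    return math.exp(-rate * steps) * in_the_money / n


# Toy values (hypothetical numbers).
bs_delta = black_scholes_call_delta(100.0, 100.0, 0.0, 0.2, 1.0)
static_delta = duan_static_call_delta([110.0, 90.0], 100.0, 100.0, 0.0, 1.0)
```

Neither of these two deltas adapts to the hedging frequency, which is the feature that distinguishes them from the local risk minimization hedges in the experiments.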

Numerical specifications. The comparisons in performance will be carried out by hedging batches of European call options with different maturities and three different moneyness values of 1.11, 1, and 0.90, and for a number of independent price paths. The initial price of the underlying is always equal to . We will assign to each hedging experiment a prescribed hedging frequency. The mean square hedging error associated to each individual hedging method will be computed by taking the mean of the terminal square hedging errors for each of the price paths. In the particular case of the local risk minimization method, where the hedges are obtained by computing the expressions (3.9)–(3.11), the conditional expectations that they contain will be estimated via Monte Carlo evaluations based on the use of price paths.

The hedging exercises. We now explain in detail the different hedging experiments that we have carried out.

-

•

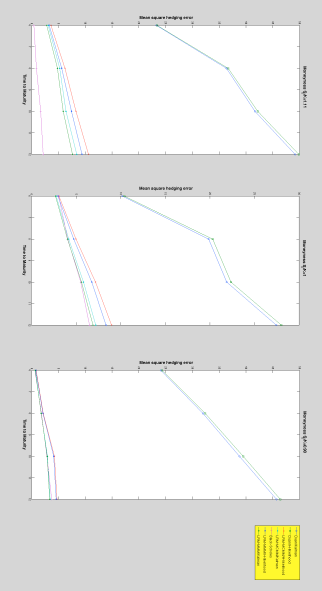

Exercise 1: all the hedging techniques explained in the paper are used. The four maturities considered are , , , and time steps. Hedging is carried out daily. The computation of the mean square hedging error is based on price paths. The first thing that we notice is that, even for these short maturities, the performance of Duan’s static hedge is very poor. This is specific to the ARSV situation for in the GARCH case this technique provides very competitive results [Ort10] even in the presence of processes excited by leptokurtic innovations [BO11]. Additionally, the Black-Scholes hedging scheme provides remarkably good results, specially for in and at the money options. Regarding the local risk minimization strategy, the best results are obtained when using a combination of a Kalman based estimation of the conditional volatility and the minimal martingale measure .

Figure 4.1: Experiment 1. All the hedging techniques explained in the paper are used. The four maturities considered are , , , and time steps. Hedging is carried out daily. The computation of the mean square hedging error is based on price paths. -

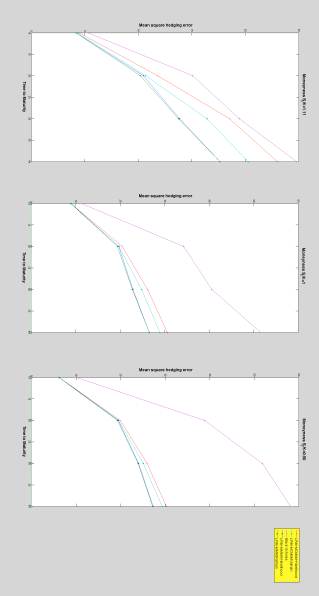

•

Exercise 2: we have retaken the specifications of Exercise , but this time around we have eliminated from the list of techniques under examination Duan’s static hedge due to its poor performance. The four maturities considered are , , , and time steps. Hedging is carried out every days. The main difference with the previous exercise is the degradation of the performance of the Black-Scholes scheme in comparison with local risk minimization. This is due to the fact that, unlike Black-Scholes, local risk minimization admits adjustment to the change of hedging frequency by using the formulas (3.9)–(3.11). Regarding local risk minimization it is still the combination of the minimal martingale measure with Kalman based estimation for the volatilities that performs the best.

Figure 4.2: Experiment 2. The four maturities considered are , , , and time steps. Hedging is carried out every days. The computation of the mean square hedging error is based on price paths. -

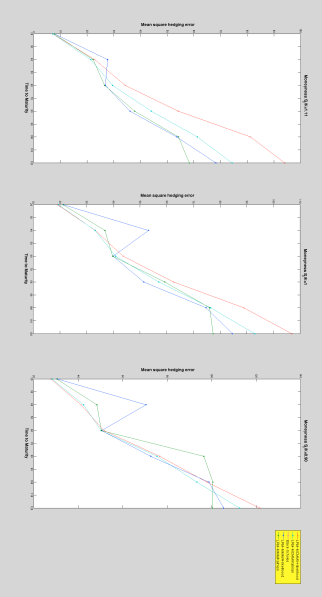

•

Exercise 3: we experiment with longer maturities and smaller hedging frequencies. We consider maturities of , , , , , and time steps with hedging carried out every days. The computation of the mean square hedging error is based on price paths. The novelty in these experiments with respect to the preceding ones is that in some instances the volatility estimation via –likelihood seems more appropriate than Kalman’s method in this setup.

Figure 4.3: Experiment 6. The hedging techniques used are spelled out in the legend. Six maturities are considered: , , , and time steps. Hedging is carried out every days. The computation of the mean square hedging error is based on price paths.

5 Conclusions

In this work we have reported on the applicability of the local risk minimization pricing/hedging technique in the handling of European options that have an auto-regressive stochastic volatility (ARSV) model as their underlying asset. The method has been implemented using the following martingale measures:

- The minimal martingale measure: even though it is in general signed, the probability of occurrence of negative Radon-Nikodym derivatives is extremely low and hence it can be used for our purposes.

- Mean correcting martingale measure: it is a measure that shares the same expression and good analytical and numerical properties as the measure obtained in the GARCH context out of the Extended Girsanov Principle. The reason to use this proxy instead of the genuine Extended Girsanov Principle is that in the ARSV case, this procedure produces a measure that is numerically expensive to evaluate.

An added difficulty when putting this scheme into practice, and one that is specific to ARSV models, has to do with the need to estimate the volatility which, even though in this situation it is not determined by the observable price process, is necessary in order to construct the pricing/hedging kernels. Two techniques are used to achieve that: Kalman filtering and hierarchical likelihood (h-likelihood). Both methods are adequate volatility estimation techniques; however, the h-likelihood technique has a much wider range of applicability, since it is not subject to the rigidity of the state space representation necessary for Kalman filtering and hence can be used for stochastic volatility models with complex link functions or when innovations are non-Gaussian.

We have carried out a numerical study to compare the hedging performance of the methods based on different measures and volatility estimation techniques. Overall, the most appropriate technique seems to be the choice that combines the minimal martingale measure with a Kalman filtering based estimation of the volatility. The h-likelihood based volatility estimation gains pertinence as maturities become longer and the hedging frequency diminishes.

6 Appendix

6.1 Proof of Lemma 2.1

Let be an arbitrary measurable random variable. As is independent of and by hypothesis it is also independent of , the joint law of the random variable can be written as the product . Hence, using Fubini’s Theorem we have

| (6.1) | |||||

At the same time, by the definition of , for any measurable random variable , the equality (6.1) implies that:

As is measurable and arbitrary, the almost sure uniqueness of the conditional expectation implies that

as required.

The result that we just proved is a particular case of the following Lemma that we state for reference. The proof follows the same pattern as in the previous paragraphs.

Lemma 6.1

Let be a probability space and a sub-σ-algebra. Let and be two random variables such that is simultaneously independent of and . For any measurable function define the mapping by . Then,

6.2 Proof of Theorem 2.6

The proof is a straightforward consequence of Theorem 3.1 in [EM98]. Indeed, it suffices to identify in the ARSV case the two main ingredients necessary to carry out the Extended Girsanov Principle, namely the one period excess discounted return defined by:

with , and the process uniquely determined by the relation:

The Theorem follows from expression (3.8) in [EM98].

6.3 Proof of Theorem 2.8

(i) We start by noticing that since is independent of both and , by Lemma 6.1,

| (6.2) |

where the real function is defined by

which substituted in (6.2) yields

| (6.3) |

This equality proves immediately the martingale property for . Indeed,

Finally, as is a -martingale

(ii) is by construction non-negative and . This guarantees (see, for example, Remarks after Theorem 4.2.1 in [LL08]) that is a probability measure equivalent to .

We now start proving that is a martingale measure by showing that:

| (6.4) |

Indeed,

Now, as is independent of both and , we can use Lemma 6.1 to prove that

| (6.5) |

Indeed, we can write

| (6.6) |

where the function is defined by

which substituted in (6.6) yields (6.5). Finally, if we insert in (6.5) the explicit expression (2.13) that defines the market price of risk, we obtain that

as required. The last equality in the previous expression follows from Lemma 2.1.

6.4 Proof of Proposition 3.6

Consider the unique decomposition (3.14) of the discounted payoff as a sum of the discounted -gains and -risk processes:

| (6.7) |

We recall that the minimal martingale measure is characterized by the property that every -martingale that is strongly orthogonal to the discounted price process , is also a -martingale. Hence, as the -martingale is -strongly orthogonal to , it is therefore a -martingale. Having this in mind, as well as (3.13), we take conditional expectations on both sides of the decomposition (6.7) and we obtain:

As is the local risk minimizing value process for both the and the measures, that is , if we multiply both sides of by and take conditional expectations we have:

If we divide both sides of this equality by and we use the relation (3.3) we obtain that

as required. Finally, we notice that

hence, either the -independence or the orthogonality of and imply that and hence .

References

- [AS96] T. G. Andersen and B. E. Sørensen. GMM estimation of a stochastic volatility model: A Monte Carlo study. Journal of Business and Economic Statistics, 14:328–352, 1996.

- [BEK+11] Alex Badescu, Robert J. Elliott, Reg Kulperger, Jarkko Miettinen, and Tak Kuen Siu. A comparison of pricing kernels for GARCH option pricing with generalized hyperbolic distributions. IJTAF, 14(5):669–708, 2011.

- [BO11] Alex Badescu and Juan-Pablo Ortega. Hedging GARCH options with generalized innovations. In preparation, 2011.

- [Bol86] Tim Bollerslev. Generalized autoregressive conditional heteroskedasticity. J. Econometrics, 31(3):307–327, 1986.

- [BS72] F. Black and M. Scholes. The valuation of option contracts and a test of market efficiency. J. Finance, 27:399–417, 1972.

- [CPnR04] M. Angeles Carnero, D. Peña, and E. Ruiz. Persistence and kurtosis in GARCH and stochastic volatility models. Journal of Financial Econometrics, 2(2):319–342, 2004.

- [Dan94] J. Daníelsson. Stochastic volatility in asset prices: estimation with simulated maximum likelihood. Journal of Econometrics, 64:375–400, 1994.

- [dCL08] Joan del Castillo and Youngjo Lee. GLM-methods for volatility models. Stat. Model., 8(3):263–283, 2008.

- [DGS01] Jin-Chuan Duan, Geneviève Gauthier, and Jean-Guy Simonato. Asymptotic distribution of the EMS option price estimator. Mgmt. Sci., 47(8):1122–1132, 2001.

- [DS98] Jin-Chuan Duan and Jean-Guy Simonato. Empirical martingale simulation of asset prices. Mgmt. Sci., 44(9):1218–1233, 1998.

- [Dua95] Jin-Chuan Duan. The GARCH option pricing model. Math. Finance, 5(1):13–32, 1995.

- [EM98] Robert J. Elliott and Dilip B. Madan. A discrete time equivalent martingale measure. Math. Finance, 8(2):127–152, 1998.

- [Eng82] Robert F. Engle. Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econometrica, 50(4):987–1007, 1982.

- [FS86] Hans Föllmer and Dieter Sondermann. Hedging of nonredundant contingent claims. In Contributions to mathematical economics, pages 205–223. North-Holland, Amsterdam, 1986.

- [FS91] Hans Föllmer and Martin Schweizer. Hedging of contingent claims under incomplete information. In Applied stochastic analysis (London, 1989), volume 5 of Stochastics Monogr., pages 389–414. Gordon and Breach, New York, 1991.

- [FS02] Hans Föllmer and Alexander Schied. Stochastic finance, volume 27 of de Gruyter Studies in Mathematics. Walter de Gruyter & Co., Berlin, 2002. An introduction in discrete time.

- [GCI10] D. Guegan, C. Chorro, and F. Ielpo. Option pricing for GARCH-type models with generalized hyperbolic innovations. Preprint, 2010.

- [GLD04] Andrew G. Glen, Lawrence M. Leemis, and John H. Drew. Computing the distribution of the product of two continuous random variables. Comput. Statist. Data Anal., 44(3):451–464, 2004.

- [GR99] R. Garcia and E. Renault. Note on hedging in ARCH-type option pricing models. Math. Finance, 8(8):153–161, 1999.

- [HN00] S. L. Heston and S. Nandi. A closed-form GARCH option valuation model. The Review of Financial Studies, 13(3):585–625, 2000.

- [HRS94] A. C. Harvey, E. Ruiz, and N. Shephard. Multivariate stochastic variance models. Review of Economic Studies, 61:247–264, 1994.

- [JPR94] E. Jacquier, N.G. Polson, and P.E. Rossi. Bayesian analysis of stochastic volatility models. Journal of Business and Economic Statistics, 12:371–417, 1994.

- [LL08] Damien Lamberton and Bernard Lapeyre. Introduction to stochastic calculus applied to finance. Chapman & Hall/CRC Financial Mathematics Series. Chapman & Hall/CRC, Boca Raton, FL, second edition, 2008.

- [LN96] Y. Lee and J. A. Nelder. Hierarchical generalized linear models. J. Roy. Statist. Soc. Ser. B, 58(4):619–678, 1996. With discussion.

- [LN06] Youngjo Lee and John A. Nelder. Double hierarchical generalized linear models. J. Roy. Statist. Soc. Ser. C, 55(2):139–185, 2006.

- [Mer76] R. Merton. Option pricing when underlying stock returns are discontinuous. J. Finan. Econ., 3:125–144, 1976.

- [MT90] A. Melino and S. M. Turnbull. Pricing foreign currency options with stochastic volatility. Journal of Econometrics, 45:239–265, 1990.

- [Ort10] J.-P. Ortega. GARCH options via local risk minimization. Quantitative Finance, 2010.

- [Sch01] M. Schweizer. A guided tour through quadratic hedging approaches. In Option pricing, interest rates and risk management, Handbook of Mathematical Finance, pages 538–574. Cambridge University Press, Cambridge, 2001.

- [Tay86] Stephen J. Taylor. Modelling Financial Time Series. John Wiley & Sons, Chichester, 1986.

- [Tay05] Stephen J. Taylor. Asset Price Dynamics, Volatility, and Prediction. Princeton University Press, Princeton, 2005.

- [WLLdC11] Woojoo Lee, Johan Lim, Youngjo Lee, and Joan del Castillo. The hierarchical-likelihood approach to autoregressive stochastic volatility models. Computational Statistics and Data Analysis, 55(55):248–260, 2011.