11email: {shenglaizeng, lizhuestc, heyh.uestc}@gmail.com, yuhf@uestc.edu.cn 22institutetext: Sch of Comp Sci & Tech, Harbin Institute of Technology, Shenzhen, China

22email: xuzenglin@hit.edu.cn 33institutetext: Sch of Comp Sci & Engin, Nanyang Technological University, Singapore

33email: {dniyato, han.yu}@ntu.edu.sg

Heterogeneous Federated Learning via Grouped Sequential-to-Parallel Training

Abstract

Federated learning (FL) is a rapidly growing privacy preserving collaborative machine learning paradigm. In practical FL applications, local data from each data silo reflect local usage patterns. Therefore, there exists heterogeneity of data distributions among data owners (a.k.a. FL clients). If not handled properly, this can lead to model performance degradation. This challenge has inspired the research field of heterogeneous federated learning, which currently remains open. In this paper, we propose a data heterogeneity-robust FL approach, FedGSP, to address this challenge by leveraging on a novel concept of dynamic Sequential-to-Parallel (STP) collaborative training. FedGSP assigns FL clients to homogeneous groups to minimize the overall distribution divergence among groups, and increases the degree of parallelism by reassigning more groups in each round. It is also incorporated with a novel Inter-Cluster Grouping (ICG) algorithm to assist in group assignment, which uses the centroid equivalence theorem to simplify the NP-hard grouping problem to make it solvable. Extensive experiments have been conducted on the non-i.i.d. FEMNIST dataset. The results show that FedGSP improves the accuracy by 3.7% on average compared with seven state-of-the-art approaches, and reduces the training time and communication overhead by more than 90%.

ederated learning Distributed data mining Heterogeneous data Clustering-based learning

Keywords:

F1 Introduction

Federated learning (FL) [1], as a privacy-preserving collaborative paradigm for training machine learning (ML) models with data scattered across a large number of data owners, has attracted increasing attention from both academia and industry. Under FL, data owners (a.k.a. FL clients) submit their local ML models to the FL server for aggregation, while local data remain private. FL has been applied in fields which are highly sensitive to data privacy, including healthcare [2], manufacturing [3] and next generation communication networks [4]. In practical applications, FL clients’ local data distributions can be highly heterogeneous due to diverse usage patterns. This problem is referred to as the non-independent and identically distributed (non-i.i.d.) data challenge, which negatively affects training convergence and the performance of the resulting FL model[5].

Recently, heterogeneous federated learning approaches have been proposed in an attempt to address this challenge. These works try to make class distributions of different FL clients similar to improve the performance of the resulting FL model. In [5, 6, 7], FL clients share a small portion of local data to build a common meta dataset to help correct deviations caused by non-i.i.d. data. In [8, 9], data augmentation is performed for categories with fewer samples to reduce the skew of local datasets. These methods are vulnerable to privacy attacks as misbehaving FL servers or clients can easily compromise the shared private data and the augmentation process. To align client data distributions without exposing the FL process to privacy risks, we group together heterogeneous FL clients so that each group can be perceived as a homogeneous “client” to participate in FL. This process does not involve any manipulation of private data itself and is therefore more secure.

An intuitive approach to achieve this goal is to assign FL clients to groups with similar overall class distribution, and use collaborative training to coordinate model training within and among groups. However, designing such an approach is not trivial due to the following two challenges. Firstly, assigning FL clients to a specified number of groups of equal group sizes to minimize the data divergence among groups (which can be reduced from the well-known bin packing problem [10]) is an NP-hard problem. Moreover, such group assignment process needs to be performed periodically in a dynamic FL environment, which introduces higher requirements for its effectiveness and execution efficiency. Secondly, even if the data distributions among groups are forced to be homogeneous, the data within each group can still be skewed. Due to the robustness of sequential training mode (STM) to data heterogeneity, some collaborative training approaches (e.g., [9]) adopt STM within a group to train on skewed client data. Then, the typical parallel training mode (PTM) can be applied among homogeneous groups. These methods are promising, but are still limited due to their static properties, which prevents them from adapting to the changing needs of FL at different stages. In FL, STM should be emphasized in the early stage to achieve a rapid increase in accuracy in the presence of non-i.i.d. data, while PTM should be emphasized in the later stage to promote convergence. In the static mode, the above parallelism degree must be carefully designed to realize a proper trade-off between sensitivity to heterogeneous data of PTM and overfitting of STM. Otherwise, the FL model performance may suffer.

To address these challenges, this paper proposes a new concept of dynamic collaborative Sequential-to-Parallel (STP) training to improve FL model performance in the presence of non-i.i.d. data. The core idea of STP is to force STM to be gradually transformed into PTM as FL model training progresses. In this way, STP can better refine unbiased model knowledge in the early stage, and promote convergence while avoiding overfitting in the later stage. To support the proposed STP, we propose a Federated Grouped Sequential-to-Parallel (FedGSP) training framework. FedGSP allows reassignment of FL clients into more groups in each training round, and introduces group managers to manage the dynamically growing number of groups. It also coordinates model training and transmission within and among groups. In addition, we propose a novel Inter-Cluster Grouping (ICG) method to assign FL clients to a pre-specified number of groups, which uses the centroid equivalence theorem to simplify the original NP-hard grouping problem into a solvable constrained clustering problem with equal group size constraint. ICG can find an effective solution with high efficiency (with a time complexity of ). We evaluate FedGSP on the most widely adopted non-i.i.d. benchmark dataset FEMNIST[11] and compare it with seven state-of-the-art approaches including FedProx[12], FedMMD[13], FedFusion[14], IDA[15], FedAdam, FedAdagrad and FedYogi[16]. The results show that FedGSP improves model accuracy by 3.7% on average, and reduces training time and communication overhead by more than 90%. To the best of our knowledge, FedGSP is the first dynamic collaborative training approach for FL.

2 Related Work

Existing heterogeneous FL solutions can be divided into three main categories: 1) data augmentation, 2) clustering-based learning, and 3) adaptive optimization.

Data Augmentation: Zhao et al. [5] proved that the FL model accuracy degradation due to heterogeneous local data can be quantified by the earth move distance (EMD) between the client and global data distributions. This result motivates some research works to balance the sample size of each class through data augmentation. Zhao et al. [5] proposed to build a globally shared dataset to expand client data. Jeong et al. [8] used the conditional generative network to generate new samples for categories with fewer samples. Similarly, Duan et al. [9] used augmentation techniques such as random cropping and rotation to expand client data. These methods are effective in improving the FL model accuracy by reducing data skew. However, they involve modifying clients’ local data, which can lead to serious privacy risks.

Clustering-based Learning: Another promising way to reduce data heterogeneity is through clustering-based FL. Sattler et al. [17] groups FL clients with similar class distributions into one cluster, so that FL clients with dissimilar data distributions do not interfere with each other. This method works well in personalized FL [18] where FL is perform within each cluster and an FL model is produced for each cluster. However, it is not the same as our goal which is to train one shared FL model that can be generalized to all FL clients. Duan et al. [9] makes the KullbackLeibler divergence of class distributions similar among clusters, and proposed a greedy best-fit strategy to assign FL clients.

Adaptive Optimization: Other research explores adaptive methods to better merge and optimize client- and server-side models. On the client side, Li et al. [12] added a proximal penalty term to the local loss function to constrain the local model to be closer to the global model. Yao et al. [13] adopted a two-stream framework and used transfer learning to transfer knowledge from the global model to the local model. A feature fusion method has been further proposed to better merge the features of local and global models [14]. On the server side, Yeganeh et al. [15] weighed less out-of-distribution models based on inverse distance coefficients during aggregation. Instead, Reddi et al. [16] focused on server-side optimization and introduced three advanced adaptive optimizers (Adagrad, Adam and Yogi) to obtain FedAdagrad, FedAdam and FedYogi, respectively. These methods perform well in improving FL model convergence.

Solutions based on data augmentation are at risky due to potential data leakage, while solutions based on adaptive optimization do not solve the problem of class distribution divergence causing FL model performance to degrade. FedGSP focuses on clustering-based learning. Different from existing research, it takes a novel approach of dynamic collaborative training, which allows dynamic scheduling and reassignment of clients into groups according to the changing needs of FL.

3 Federated Grouped Sequential-to-Parallel Learning

In this section, we first describe the concept and design of the STP approach. Then, we present the FedGSP framework which is used to support STP. Finally, we mathematically formulate the group assignment problem in STP, and present our practical solution ICG.

3.1 STP: The Sequential-to-Parallel Training Mode

Under our grouped FL setting, FL clients are grouped such that clients in the same group have heterogeneous data but the overall data distributions among the groups are homogeneous. Due to the difference in data heterogeneity, the training modes within and among groups are designed separately. We refer to this jointly designed FL training mode as the “collaborative training mode”.

Intuitively, the homogeneous groups can be trained in a simple parallel mode PTM because the heterogeneity of their data has been eliminated by client grouping. Instead, for FL clients in the same group whose local data are still skewed, the sequential mode STM can be useful. In STM, FL clients train the model in a sequential manner. A FL client receives the model from its predecessor client and delivers the local trained model to its successor client to continue training. In the special case of training with only one local epoch (i.e., ), STM is equivalent to centralized SGD, which gives it robustness against data heterogeneity.

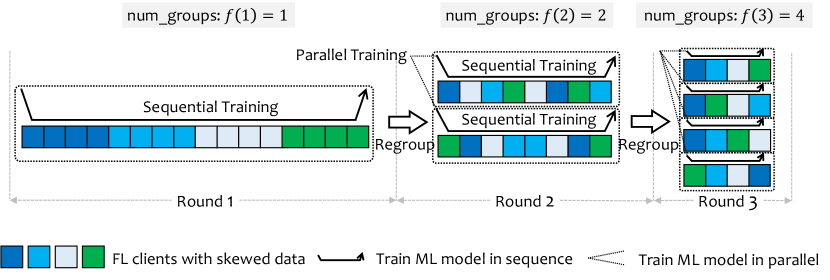

This naive collaborative training mode is static and has limitations. Therefore, we extend it to propose a more dynamic approach STP. As shown in Figure 1, STP reassigns FL clients into groups and shuffles their order in each round , where is a pre-specified group number growth function, with the goal to dynamically adjust the degree of parallelism. Then, STP can be smoothly transformed from (full) sequential mode to (full) parallel mode. This design can prevent catastrophic forgetting caused by the long “chain of clients” that causes the FL model to forget the data of previous clients and overfit the data of subsequent clients, and can also prevent the FL model from learning interfering information such as the order of clients. Moreover, the growing number of groups improves the parallelism efficiency, which promotes convergence and speeds up training when the global FL model is close to convergence.

The pseudo code of STP is given in Algorithm 1. In round , STP divides all FL clients into groups using the ICG grouping algorithm (Line 5), which will be described in Section 3.3. Due to the similarity of data among groups, each group can independently represent the global distribution, so only a small proportion of groups are required to participate in each round of training (Line 7). The first FL client in each group pulls the global model from the FL server (Line 9), and trains its local model using mini-batch SGD for one epoch (Line 11). The trained local model is then delivered to the next FL client to continue training (Line 12), until the last FL client is reached. The last FL client in each group sends the trained model to the FL server (Line 14). Models from all groups are aggregated to update the global FL model (Line 16). The above steps repeat until the maximum training round is reached. Finally, the well-trained global FL model is obtained (Line 18).

The choice of the growth function for the number of groups, , is critical for the performance of STP. We give three representative growth functions, including linear (smooth grow), logarithmic (fast first and slow later), and exponential (slow first and fast later) growth functions:

| (1) | |||||

| (2) | |||||

| (3) |

where the real number coefficient controls the growth rate, and the integer coefficient controls the initial number of groups and the growth span. We recommend to initialize , to a moderate value and explore the best setting in an empirical manner.

3.2 FedGSP: The Grouped FL Framework To Enable STP

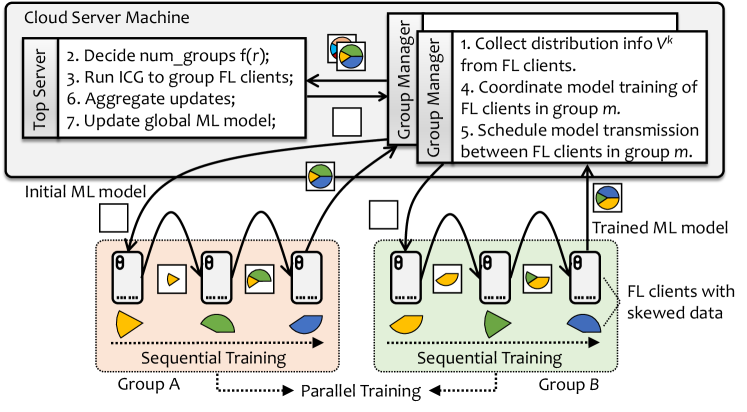

In this section, we describe the FedGSP framework that enables dynamic STP. FedGSP is generally a grouped FL framework that supports dynamic group management, as shown in Figure 2. The basic components include a top server (which acts as an FL server and performs functions related to group assignment) and a large number of FL clients. FL clients can be smart devices with certain available computing and communication capabilities, such as smart phones, laptops, mobile robots and drones. They collect data from the surrounding environment and use the data to train local ML models.

In addition, FedGSP creates group managers to facilitate the management of the growing number of groups in STP. The group managers can be virtual function nodes deployed in the same machine as the top server. Whenever a new group is built, a new group manager is created to assist the top server to manage this group by performing the following tasks:

1. Collect distribution information. The group manager needs to collect class distributions of FL clients and report them to the top server. These meta information will be used to assign FL clients to groups via ICG.

2. Coordinate model training. The group manager needs to coordinate the sequential training of FL clients in its group, as well as the parallel training with other groups, according to the rules of STP. Specifically, it needs to shuffle the order of clients and report resulting model to the top server for aggregation.

3. Schedule model transmission. In applications such as Industrial IoT systems, wireless devices can directly communicate with each other through wireless sensor networks (WSNs). However, this cannot be realized in most scenarios. Therefore, the group manager needs to act as a communication relay to schedule the transmission of ML models from one client to another.

3.3 ICG: The Inter-Cluster Grouping Algorithm

As required by STP, the equally sized groups containing heterogeneous FL clients should have similar overall class distributions. To achieve this goal, in this section, we first formalize the FL client grouping problem which is NP-hard, and then explain how to simplify to propose the ICG approach.

(A) Problem Modeling

Considering an -class classification task involving FL clients, STP needs to assign these clients to groups, where is determined by the group number growth function and the current round . Our goal is to find a grouping strategy in the 0-1 space to minimize the difference in class distributions of all groups, where represents the device is assigned to the group , is the class distribution matrix composed of -dimensional class distribution vectors of FL clients, represents the overall class distribution of group , and represents the distance between two class distributions. The problem can be formalized as follows:

| (4) | ||||

| (5) | ||||

| (6) | ||||

| (7) | ||||

| (8) | ||||

| (9) |

Constraint (5) ensures that the number of groups meets required by STP. Constraint (6) ensures that the groups have similar or equal size . Constraint (7) ensures that each client can only be assigned to one group at a time. The overall class distribution of the group is defined by Eq. (8), where is the class distribution vector of client . Constraint (9) restricts the decision variable to only take up a value of 0 or 1.

Proposition 1

Proof

The problem stated by Eq. (4) to Eq. (9) is actually a BPP with additional constraints, where items with integer weight and unit volume should be packed into the minimum number of bins of integer capacity . The difference is that Eq. (4) to Eq. (9) restricts the number of available bins to instead of unlimited, and the difference in the bin weights not to exceed . The input and output of BPP and Eq. (4) to Eq. (9) are matched, with only additional transformation complexity to set and to infinity. Therefore, BPP can call the solution of Eq. (4) to Eq. (9) in time to obtain its solution, which proves that the NP-hard BPP [10] can be reduced to the problem stated by Eq. (4) to Eq. (9). Therefore, Eq. (4) to Eq. (9) is also an NP-hard problem.

Therefore, it is almost impossible to find the optimal solution within a polynomial time. To address this issue, we adopt the centroid equivalence theorem to simplify the original problem to a constrained clustering problem.

(B) Inter-Cluster Grouping (ICG)

Consider a constrained clustering problem with points and clusters, where the size of all clusters is strictly the same .

Assumption 1

We make the following assumptions:

-

1.

is divisible by ;

-

2.

Take any point from cluster , the squared -norm distance between the point and its cluster centroid is bounded by .

-

3.

Take one point from each of clusters at random, the sum of deviations of each point from its cluster centroid meets .

Definition 1 (Group Centroid)

Given clusters of equal size, let group be constructed from one point randomly sampled from each cluster . Then, the centroid of group is defined as .

Proposition 2

If Assumption 1 holds, suppose the centroid of cluster is and the global centroid is . We have:

-

1.

The group and global centroids are expected to coincide, .

-

2.

The error between the group and global centroids is bounded by .

Proof

Proposition 2 indicates that there exists a grouping strategy and , (), so that the objective in Eq. (4) turns to and the expectation value reaches 0. This motivates us to use the constrained clustering model to solve in the objective Eq. (4). Therefore, we consider the constrained clustering problem below,

| (10) | ||||

| (11) | ||||

| (12) | ||||

| (13) |

where is a selector variable, means that client is assigned to cluster while 0 means not, represents the centroid of cluster . Eq. (10) is the standard clustering objective, which aims to assign clients to clusters so that the sum of the squared -norm distance between the class distribution vector and its nearest cluster centroid is minimized. Constraint (11) ensures that each cluster has the same size . Constraint (12) ensures that each client can only be assigned to one cluster at a time. In this simplified problem, Constraint (7) is relaxed to to satisfy the assumption that is divisible.

The above constrained clustering problem can be modeled as a minimum cost flow (MCF) problem and solved by network simplex algorithms [19], such as SimpleMinCostFlow in Google OR-Tools. Then, we can alternately perform cluster assignment and cluster update to optimize and , respectively. Finally, we construct groups, each group consists of one client randomly sampled from each cluster without replacement, so that their group centroids are expected to coincide with the global centroid. The pseudo code is given in Algorithm 2. ICG has a complexity of , where , and are the number of clients, groups, categories, and iterations, respectively. In our experiment, ICG is quite fast, and it can complete group assignment within only 0.1 seconds, with and .

4 Experimental Evaluation

4.1 Experiment Setup and Evaluation Metrics

Environment and Hyperparameter Setup. The experiment platform contains FL clients. The most commonly used FEMNIST[11] is selected as the benchmark dataset, which is specially designed for non-i.i.d. FL environment and is constructed by dividing 805,263 digit and character samples into 3,550 FL clients in a non-uniform class distribution, with an average of samples per client. For the resource-limited mobile devices, a lightweight neural network composed of 2 convolutional layers and 2 fully connected layers with a total of 6.3 million parameters is adopted as the training model. The standard mini-batch SGD is used by FL clients to train their local models, with the learning rate , the batch size and the local epoch . We test FedGSP for rounds. By default, we set the group sampling rate , the group number growth function and the corresponding coefficients , . The values of , , , will be further tuned in the experiment to observe their performance influence.

Benchmark Algorithms. In order to highlight the effect of the proposed STP and ICG separately, we remove them from FedGSP to obtain the naive version, NaiveGSP. Then, we compare the performance of the following versions of FedGSP through ablation studies:

-

1.

NaiveGSP: FL clients are randomly assigned to a fixed number of groups, the clients in the group are trained in sequence and the groups are trained in parallel (e.g., Astraea[9]).

-

2.

NaiveGSP+ICG: The ICG grouping algorithm is adopted in NaiveGSP to assign FL clients to a fixed number of groups strategically.

-

3.

NaiveGSP+ICG+STP (FedGSP): On the basis of NaiveGSP+ICG, FL clients are reassigned to a growing number of groups in each round as required by STP.

In addition, seven state-of-the-art baselines are experimentally compared with FedGSP. They are FedProx [12], FedMMD [13], FedFusion [14], IDA [15], and FedAdagrad, FedAdam, FedYogi from [16].

Evaluation Metrics. In addition to the fundamental test accuracy and test loss, we also define the following metrics to assist in performance evaluation.

Class Probability Distance (CPD). The maximum mean discrepancy (MMD) distance is a probability measure in the reproducing kernel Hilbert space. We define CPD as the kernel two-sample estimation with Gaussian radial basis kernel [20] to measure the difference in class probability (i.e., normalized class distribution) between two groups . Generally, the smaller the CPD, the smaller the data heterogeneity between two groups, and therefore the better the grouping strategy.

| (14) | ||||

Computational Time. We define in Eq. (15) to estimate the computational time cost, where the number of floating point operations (FLOPs) is M FLOPs per sample and M FLOPs for global aggregation, and G FLOPs per second is the computing throughput of the Qualcomm Snapdragon 835 smartphone chip equipped with Adreno 540 GPU.

| (15) |

Communication Time and Traffic. We define in Eq. (16) to estimate the communication time cost and in Eq. (17) to estimate the total traffic, where the FL model size is MB, the inbound and outbound transmission rates are (tested in the Internet by AWS EC2 r4.large 2 vCPUs with disabled enhanced networking). Eq. (16) to Eq. (17) consider only the cross-WAN traffic between FL clients and group managers, but the traffic between the top server and group managers is ignored because they are deployed in the same physical machine.

| (16) |

| (17) |

4.2 Results and Discussion

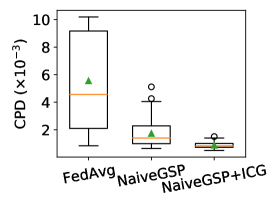

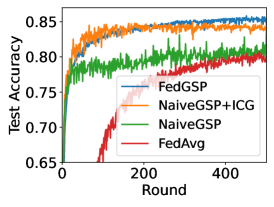

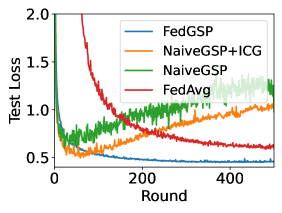

The effect of ICG and STP. We first compare the CPD of FedAvg[21], NaiveGSP and NaiveGSP+ICG in Figure 3a. These CPDs are calculated between every pair of FL clients. The results show that NaiveGSP+ICG reduces the median CPD of FedAvg by and NaiveGSP by . We also show their accuracy performance in Figure 3b. The baseline NaiveGSP quickly converges but only achieves the accuracy similar to FedAvg. Instead, NaiveGSP+ICG improves the accuracy by . This shows that reducing the data heterogeneity among groups can indeed effectively improve FL performance in the presence of non-i.i.d. data. Although NaiveGSP+ICG is already very effective, it still has defects. Figure 3c shows a rise in the loss value of NaiveGSP+ICG, which indicates that it has been overfitted. That is because the training mode of NaiveGSP+ICG is static, it may learn the client order and forget the previous data. Instead, the dynamic FedGSP overcomes overfitting and eventually converges to a higher accuracy , which proves the effectiveness of combining STP and ICG.

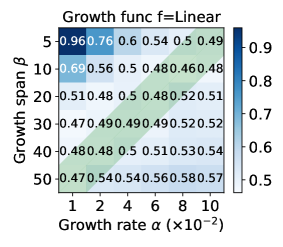

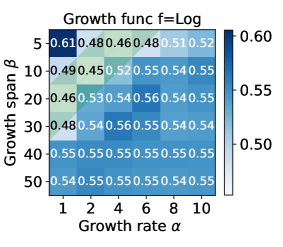

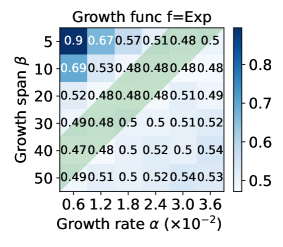

The effect of the growth function and its coefficients . To explore the performance influence of different group number growth functions , we conduct a grid search on and . The test loss heatmap is shown in Figure 4. The results show that the logarithmic growth function achieves smaller loss 0.453 with among 3 candidate functions. Besides, we found that both lower and higher lead to higher loss values. The reasons may be that a slow increase in the number of groups leads to more STM and results in overfitting, while a rapid increase in the number of groups makes FedGSP degenerate into FedAvg prematurely and suffers the damage of data heterogeneity. Therefore, we recommend to be a moderate value, as shown in the green area.

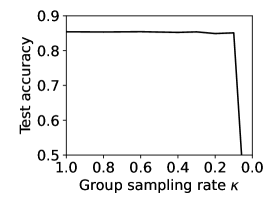

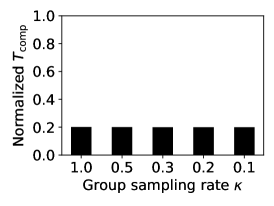

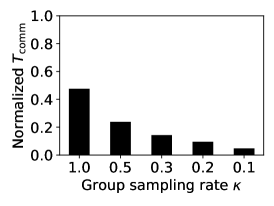

The effect of the group sampling rate . controls the participation rate of groups (also the participation rate of FL clients) in each round. We set to observe its effect on accuracy and time cost. Figure 5a shows the robustness of accuracy to different values of . This is expected because ICG forces the data of each group to become homogeneous, which enables each group to individually represent the global data. In addition, Figures 5b and 5c show that has a negligible effect on computational time , but a proportional effect on communication time because a larger means more model data are involved in data transmission. Therefore, we recommend that only of groups are sampled to participate in FL in each round to reduce the overall time cost. In our experiments, we set by default.

The performance comparison of FedGSP. We compare FedGSP with seven state-of-the-art approaches and summarize their test accuracy, test loss and training rounds (required to reach the accuracy of ) in Table 1. The results show that FedGSP achieves higher accuracy than FedAvg and reaches the accuracy of within only rounds. Moreover, FedGSP outperforms all the comparison approaches, with an average of higher accuracy, lower loss and less rounds, which shows its effectiveness to improve FL performance in the presence of non-i.i.d. data.

The time and traffic cost of FedGSP. Figure 6 visualizes the time cost and total traffic of FedGSP and FedAvg when they reach the accuracy of . The time cost consists of computational time and communication time , of which accounts for the majority due to the huge data traffic from hundreds of FL clients has exacerbated the bandwidth bottleneck of the cloud server. Figure 6 also shows that FedGSP spends less time and traffic than FedAvg, which benefits from a cliff-like reduction in the number of training rounds (only 34 rounds to reach the accuracy of ). Therefore, FedGSP is not only accurate, but also training- and communication-efficient.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0a463d0e-478d-403b-8763-3d55c89704cb/x12.png)

| Algorithm | Accuracy | Loss | Rounds |

|---|---|---|---|

| FedAvg | 80.1% | 0.602 | 470 |

| FedProx | 78.7% | 0.633 | |

| FedMMD | 81.7% | 0.587 | 336 |

| FedFusion | 82.4% | 0.554 | 230 |

| IDA | 82.0% | 0.567 | 256 |

| FedAdagrad | 81.9% | 0.582 | 297 |

| FedAdam | 82.1% | 0.566 | 87 |

| FedYogi | 83.2% | 0.543 | 93 |

| FedGSP | 85.4% | 0.453 | 34 |

5 Conclusions

In this paper, we addressed the problem of FL model performance degradation in the presence of non-i.i.d. data. We proposed a new concept of dynamic STP collaborative training that is robust against data heterogeneity, and a grouped framework FedGSP to support dynamic management of the continuously growing client groups. In addition, we proposed ICG to support efficient group assignment in STP by solving a constrained clustering problem with equal group size constraint, aiming to minimize the data distribution divergence among groups. We experimentally evaluated FedGSP on LEAF, a widely adopted FL benchmark platform, with the non-i.i.d. FEMNIST dataset. The results showed that FedGSP significantly outperforms seven state-of-the-art approaches in terms of model accuracy and convergence speed. In addition, FedGSP is both training- and communication-efficient, making it suitable for practical applications.

Acknowledgments. This work is supported, in part, by the National Key Research and Development Program of China (2019YFB1802800); PCL Future Greater-Bay Area Network Facilities for Large-Scale Experiments and Applications (LZC0019), China; National Research Foundation, Singapore under its AI Singapore Programme (AISG Award No: AISG2-RP-2020-019); the RIE 2020 Advanced Manufacturing and Engineering (AME) Programmatic Fund (No. A20G8b0102), Singapore; and Nanyang Assistant Professorship (NAP). Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not reflect the views of the funding agencies.

References

- [1] Kairouz, P., McMahan, H.B., Avent, B., et al.: Advances and open problems in federated learning. Foundations and Trends in Machine Learning 14(1-2), pp. 1-210, (2021). \doi10.1561/2200000083

- [2] Xu, J., Glicksberg, B.S., Su, C., et al.: Federated learning for healthcare informatics. Journal of Healthcare Informatics Research 5(1), pp. 1-19, (2021). \doi10.1007/s41666-020-00082-4

- [3] Khan, L.U., Saad, W., Han, Z., et al.: Federated learning for internet of things: Recent advances, taxonomy, and open challenges. IEEE Communications Surveys & Tutorials 23(3), pp. 1759-1799, (2021). \doi10.1109/COMST.2021.3090430

- [4] Lim, W.Y.B., Luong, N.C., Hoang, D.T., et al.: Federated learning in mobile edge networks: A comprehensive survey. IEEE Communications Surveys & Tutorials, 22(3), pp. 2031-2063, (2020). \doi10.1109/COMST.2020.2986024

- [5] Zhao, Y., Li, M., Lai, L., et al.: Federated learning with non-iid data. arXiv preprint arXiv:1806.00582, (2018).

- [6] Yao, X., Huang, T., Zhang, R.X., et al.: Federated learning with unbiased gradient aggregation and controllable meta updating. In: Workshop on Federated Learning for Data Privacy and Confidentiality, (2019).

- [7] Yoshida, N., Nishio, T., Morikura, M., et al.: Hybrid-fl for wireless networks: Cooperative learning mechanism using non-iid data. In: ICC 2020-2020 IEEE International Conference on Communications (ICC), pp. 1-7, (2020). \doi10.1109/ICC40277.2020.9149323

- [8] Jeong, E., Oh, S., Kim, H., et al.: Communication-efficient on-device machine learning: Federated distillation and augmentation under non-iid private data. In: Workshop on Machine Learning on the Phone and other Consumer Devices, (2018).

- [9] Duan, M., Liu, D., Chen, X., et al.: Astraea: Self-balancing federated learning for improving classification accuracy of mobile deep learning applications. In: 2019 IEEE 37th International Conference on Computer Design (ICCD), pp. 246-254, (2019). \doi10.1109/ICCD46524.2019.00038

- [10] Garey, M.R. and Johnson, D.S.: “Strong” NP-completeness results: Motivation, examples, and implications. Journal of the Association for Computing Machinery, 25(3), pp. 499-508, (1978). \doi10.1145/322077.322090

- [11] Caldas, S., Duddu, S.M.K., Wu, P., et al.: Leaf: A benchmark for federated settings. In: 33rd Conference on Neural Information Processing Systems (NeurIPS), (2019).

- [12] Li, T., Sahu, A.K., Zaheer, M., et al.: Federated optimization in heterogeneous networks. In: Proceedings of Machine Learning and Systems 2, pp. 429-450, (2020).

- [13] Yao, X., Huang, C. and Sun, L.: Two-stream federated learning: Reduce the communication costs. In: 2018 IEEE Visual Communications and Image Processing (VCIP), pp. 1-4, (2018). \doi10.1109/VCIP.2018.8698609

- [14] Yao, X., Huang, T., Wu, C., et al.: Towards faster and better federated learning: A feature fusion approach. In: 2019 IEEE International Conference on Image Processing (ICIP), pp. 175-179, (2019). \doi10.1109/ICIP.2019.8803001

- [15] Yeganeh, Y., Farshad, A., Navab, N., et al.: Inverse distance aggregation for federated learning with non-iid data. In: Domain Adaptation and Representation Transfer, and Distributed and Collaborative Learning, pp. 150-159, (2020). \doi10.1007/978-3-030-60548-3_15

- [16] Reddi, S., Charles, Z., Zaheer, M., et al.: Adaptive federated optimization. In: International Conference on Learning Representations, (2021).

- [17] Sattler, F., Müller, K.R. and Samek, W.: Clustered federated learning: Model-agnostic distributed multitask optimization under privacy constraints. IEEE Transactions on Neural Networks and Learning Systems 32(8), pp. 3710-3722, (2021). \doi10.1109/TNNLS.2020.3015958

- [18] Fallah, A., Mokhtari, A. and Ozdaglar, A.: Personalized federated learning: A meta-learning approach. In: 34th Conference on Neural Information Processing Systems (NeurIPS), (2020).

- [19] Bradley, P.S., Bennett, K.P. and Demiriz, A.: Constrained k-means clustering. Microsoft Research, Redmond, 20(0), p. 0, (2000).

- [20] Gretton, A., Borgwardt, K.M., Rasch, M.J.: A kernel two-sample test. Journal of Machine Learning Research, 13(1), pp. 723-773, (2012).

- [21] McMahan, B., Moore, E., Ramage, D., et al.: Communication-efficient learning of deep networks from decentralized data. In: Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, pp. 1273-1282, (2017).