Hiding Your Signals: A Security Analysis of PPG-based Biometric Authentication

Abstract

Recently, physiological signal-based biometric systems have received wide attention. Unlike traditional biometric features, physiological signals cannot be easily compromised, since they are usually unobservable to the human eye. The photoplethysmography (PPG) signal is easy to measure, which makes it more attractive than many other physiological signals for biometric authentication. However, the advent of remote PPG (rPPG) challenges this unobservability: an attacker can remotely steal rPPG signals by monitoring the victim's face, which poses a threat to PPG-based biometrics. Current attacks on PPG-based biometric authentication require the victim's PPG signal, so rPPG-based attacks have been neglected. In this paper, we first analyze the security of PPG-based biometrics, including user authentication and communication protocols. We evaluate the signal waveforms, heart rate, and inter-pulse-interval (IPI) information extracted by five rPPG methods: four traditional optical methods (CHROM, POS, LGI, PCA) and one deep learning method (CL_rPPG). We conduct experiments on five datasets (PURE, UBFC_rPPG, UBFC_Phys, LGI_PPGI, and COHFACE) to obtain a comprehensive set of results. Our empirical studies show that rPPG poses a serious threat to the authentication system: the success rate of the rPPG signal spoofing attack on user authentication reaches 0.35, and the bit hit rate in IPI-based security protocols reaches 0.6. Further, we propose an active defence strategy that hides the physiological signals in the face to resist the attack. It reduces the success rate of rPPG spoofing attacks on user authentication to 0.05 and the bit hit rate to 0.5, which is the level of a random guess. Our strategy effectively prevents the exposure of PPG signals and protects users' sensitive physiological data.

Index Terms:

Biometrics, Spoofing Attack, Signal Hiding, PPG, User Authentication, Key Exchange Protocol

I Introduction

Over the past decade, biometric systems have provided high authentication accuracy and user convenience, driving their widespread deployment. However, as biometrics have become popular, researchers have found that traditional biometric authentication is vulnerable to spoofing attacks. Traditional biometric features, such as faces and fingerprints, can be directly observed by the human eye, so an attacker can compromise the authentication system without strong technical knowledge. Researchers are therefore turning to a potentially more reliable and unobservable biological feature: the physiological signal.

Common physiological signals, including the electrocardiogram (ECG), the electroencephalogram (EEG), and photoplethysmography (PPG), are widely used as biometric features [1, 2, 3]. Compared to other physiological signals, PPG signals are easier to obtain, since they require only a light source and a light sensor, which has made them common in everyday devices. For example, popular wearable devices from Apple and Samsung use PPG sensors for health monitoring. Therefore, PPG signals are considered more practical and attractive than other physiological signals in real-world applications [3].

Currently, known threats against PPG-based biometric authentication require collecting the victim's PPG signal. This requires close contact with the victim, which significantly limits the feasibility of an attack. However, the emergence of remote PPG (rPPG) challenges the unobservability of PPG signals, which means that PPG-based biometric authentication may lose this advantage. rPPG captures PPG-like signals by monitoring subtle changes in the colour of a person's facial skin, usually by analyzing the colour changes of the face region in a video. rPPG signals have been successfully applied to infer physiological parameters, such as heart rate [4, 5] and blood pressure.

Although some studies explore the use of rPPG signals to attack inter-pulse interval (IPI)-based security protocols [6, 7, 8], they do not discuss viable defence strategies. In addition, there is still a research gap between rPPG signals and PPG-based biometric authentication. Existing rPPG methods are assessed mainly by heart rate accuracy, while the morphological characteristics of the signal are often neglected. In PPG-based biometric authentication, however, the morphological characteristics of the signal are the main contributors to the uniqueness of the user. Moreover, the rPPG signal is extracted from the face, while the PPG signal is mostly collected from the fingertip or wrist. Differences in skin tissue and in distance from the heart can lead to differences in shape and phase between the two signals. Thus, it is crucial to explore the potential of rPPG-based attacks to evaluate the security of PPG-based biometrics, which is one of the aims of this work.

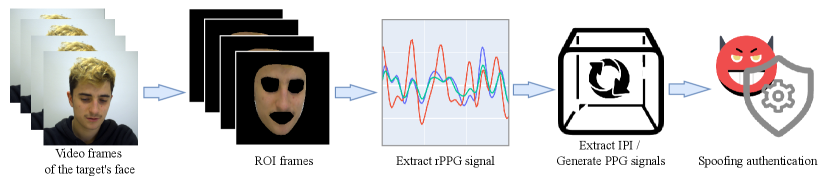

In this paper, we analyze the security of PPG-based biometric authentication, particularly in user authentication and communication protocols. As shown in Fig. 1, current rPPG methods only require access to video clips of the victim's face to carry out a remote attack, which increases the likelihood of the attack in practice. We first analyze rPPG-based spoofing attacks and some common defence strategies to reduce the threat. We then propose an effective defence strategy and evaluate its performance. The main contributions of this paper are as follows:

1. We analyze the potential threats of rPPG to PPG-based biometrics using five methods (CHROM, POS, LGI, PCA, CL_rPPG) on five datasets (PURE, UBFC_rPPG, UBFC_Phys, LGI_PPGI, COHFACE). The success rate of the spoofing attack on user authentication reaches 0.35, and the bit hit rate in IPI-based security protocols reaches 0.6.

2. We analyze a series of defence strategies to mitigate the threat of rPPG-based spoofing attacks on biometrics. We find that existing passive defence strategies against spoofing attacks can significantly degrade the performance of the user authentication model.

3. We propose a signal hiding method (SigH) that injects an arbitrary waveform into the face region of the video. This active defence strategy reduces the success rate of spoofing attacks on user authentication to 0.05 without affecting the performance of the authentication model, and it reduces the bit hit rate in IPI-based security protocols to 0.5.

The rest of the paper is organized as follows: Section II reviews related work, including PPG-based biometric authentication methods, existing attacks, and rPPG methods. Sections III and V describe the attack and defence strategies we use, respectively. Sections IV and VI provide the experimental results and discuss the performance of the attack and defence strategies, respectively. Finally, Section VII concludes this work.

II Related Work

II-A PPG-based Biometrics

In the biometrics field, PPG signals are mainly used in two areas: user authentication and communication protocols.

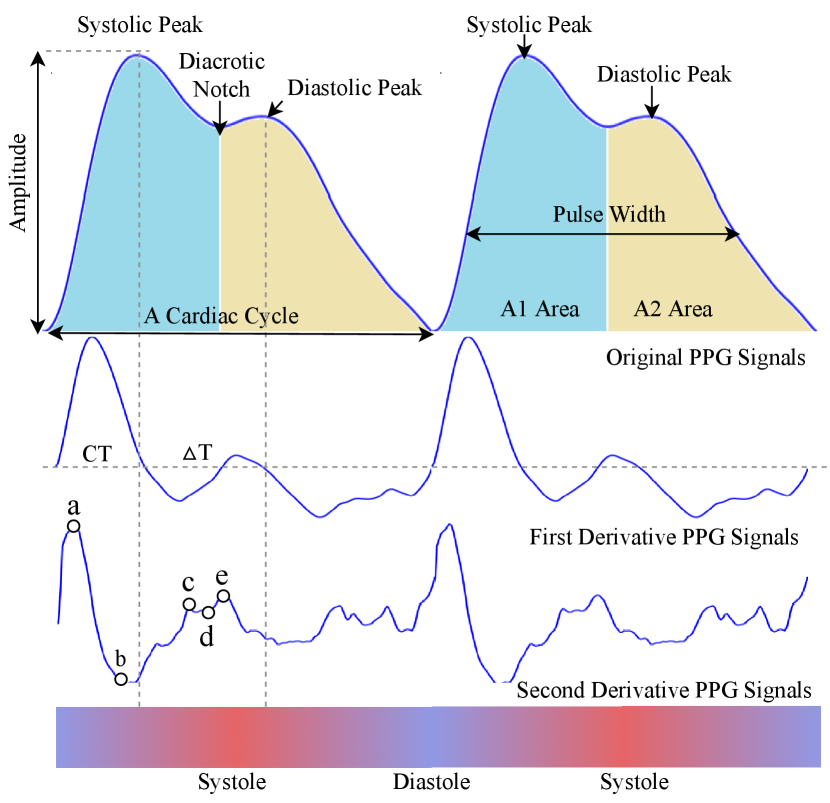

User Authentication: The PPG signal contains a wealth of personal cardiac information. The earliest studies used clinical features commonly found in PPG signals as biometric features [9, 10]. For example, as shown in Fig. 2, the ratio of to is related to arterial stiffness [11]. and areas are related to systemic vascular resistance [12]. However, some of these features are sensitive to changes over time. For example, changes with an individual's age [13]. As a result, researchers have applied several techniques to obtain stable features, such as filtering out noise-sensitive features with feature selection methods like Kernel Principal Component Analysis [14], and deriving robust features with the wavelet transform [15] and the short-time Fourier transform [16]. Generative adversarial networks (GANs) have also been used to synthesize temporally stable features of individuals [17].

After an individual template is constructed from the extracted signal features, matching against it proceeds as in other biometric authentication systems. Early authentication systems matched individuals by calculating the distance between features and templates [18, 9]. Gradually, machine learning models replaced simple distance calculations to improve template-matching performance. However, many machine learning models require manual feature extraction, through which system designers and engineers may introduce bias. Recently, deep learning methods have provided end-to-end authentication; for example, CNNs and LSTMs have been used to learn features from raw data automatically for user recognition [19, 3].
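To make the distance-based template matching of early systems [18, 9] concrete, here is a minimal sketch under our own assumptions: the template is the mean of a user's fixed-length cycles, the distance is Euclidean, and the acceptance threshold is a hypothetical value. The cited systems may use other features, distances, or thresholds, and the function names are illustrative.

```python
import numpy as np


def enroll(cycles):
    """Build a template as the mean of a user's enrolment cycles (n_cycles x n_samples)."""
    return np.asarray(cycles, dtype=float).mean(axis=0)


def match(template, probe, threshold):
    """Accept the probe if its Euclidean distance to the template is below the threshold."""
    return float(np.linalg.norm(template - np.asarray(probe, dtype=float))) < threshold


# Toy, synthetic "cycles" (not real PPG data).
t = np.linspace(0.0, 1.0, 60)
genuine = np.stack([np.sin(np.pi * t) + 0.01 * k for k in range(5)])
template = enroll(genuine)
accepted = match(template, np.sin(np.pi * t), threshold=0.5)   # True
rejected = match(template, np.cos(np.pi * t), threshold=0.5)   # False
```

The threshold trades false acceptances against false rejections, which is why learned models later replaced fixed distances.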

Communication Protocols: In body area sensor networks (BASNs) with strict computational resource constraints, traditional encryption schemes for information transmission are difficult to apply. Because PPG signals measured at different parts of the body are similar, they can serve as an entity identifier in a BASN symmetric encryption scheme, so that the pre-distribution of keys can be avoided [20]. IPI information extracted from the PPG signal is the most prevalent entity identifier. IPI denotes the time difference between two consecutive heartbeats; in the PPG signal, it is usually taken as the time difference between two consecutive pulse peaks. The timing of systolic peaks in the PPG signal is regulated by the sympathetic nervous system and influenced by various physiological factors. This characteristic allows researchers to extract entity identifiers from IPI information [21].

After the IPI is obtained, a quantization algorithm extracts the randomness of the entity identifier by encoding the signal into a binary representation. Two quantization methods exist. The first, proposed in [22], extracts a random value from the intermediate bits of the IPI. However, this method has an obvious issue: the high bits of the IPI are not random enough, while the low bits contain too much noise. The second, proposed in [21], uses the trend of the IPI sequence as the source of randomness. It improves randomness at the cost of excessive processing time.
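The intermediate-bit idea of [22] can be illustrated with a small sketch. The function name `middle_bits`, the IPI unit (integer milliseconds), the example IPI values, and the chosen bit window (`low=2`, `width=4`) are all hypothetical; [22] may select different bits.

```python
def middle_bits(ipi_ms, low=2, width=4):
    """Return `width` bits of an integer IPI (in ms), starting at bit `low` (LSB = bit 0).

    Skipping the lowest `low` bits drops the noisiest part; keeping only `width`
    bits drops the high-order bits, which vary little between heartbeats.
    """
    value = (ipi_ms >> low) & ((1 << width) - 1)
    return format(value, f"0{width}b")


ipis_ms = [812, 790, 845, 801]                   # hypothetical IPIs in milliseconds
key = "".join(middle_bits(x) for x in ipis_ms)   # '1011010100111000'
```

Both sides of a BASN link would run the same extraction on their own IPI measurements and compare the resulting bit strings.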

II-B Remote Photoplethysmography (rPPG)

Most rPPG signals are obtained by analyzing subtle changes in the facial colour channels of a video clip. Green light is absorbed by hemoglobin more readily than red and blue light, so in most circumstances the green channel contains the strongest PPG signal and offers a high signal-to-noise ratio for signal acquisition [23]. Two blind source separation methods, Independent Component Analysis and Principal Component Analysis, were proposed in [24, 25] to isolate approximately clean signals from the RGB channels. Unlike in contact PPG measurements, the light reflected by the skin in rPPG measurements includes the specular reflection of ambient light, leading to unpredictable normalization errors. CHROM [26] uses chrominance signals to eliminate specular reflection effects, assuming that all pixels in the face region contribute equally to the rPPG signal. Nevertheless, pixels collected from the face region may be affected by different levels of noise.

A recent research trend is to apply deep learning to rPPG acquisition in an end-to-end framework. DeepPhys [27] combines a CNN with an attention mechanism to capture the differences in the spatial and temporal distribution of signals between video frames. PhysNet [28] uses a spatio-temporal convolutional network to recover the rPPG signal from the video clip. Meta-rPPG [29] presents a transductive meta-learner that allows the deep learning model to adapt to the data distribution during deployment. A fully self-supervised training method was introduced in [30] to acquire rPPG signals. Beyond this progress, deep learning-based methods show good research potential for rPPG signal acquisition.

In summary, the research trend in rPPG signal acquisition is towards automated end-to-end solutions with high accuracy and resilience to noise. However, many existing works are evaluated only by the accuracy of the extracted heart rate, neglecting the morphological characteristics of the signal.

II-C Existing Attacks

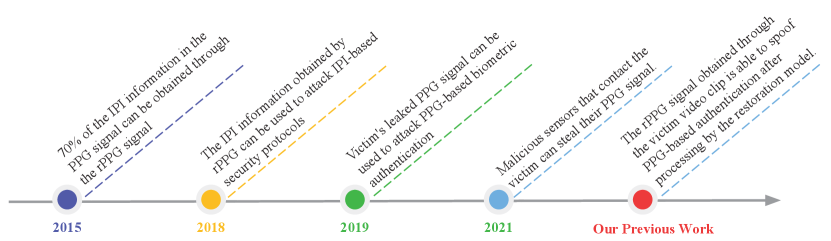

The first attack against IPI-based authentication using rPPG was proposed by Calleja et al. [7] in 2015. IPI signals are used to derive secure random sequences in the key distribution component of IPI-based authentication systems, whose security relies on the assumption that IPI can be measured only by contact devices. In fact, most of the IPI information acquired from PPG signals can also be obtained by non-contact techniques (rPPG). By analyzing the colour changes of the face in a video clip, the rPPG signal can be extracted in a manner similar to PPG. The extracted rPPG signal can then be used to generate IPI, violating the assumption of IPI-based authentication protocols [6]. Although rPPG does not accurately reproduce the IPI derived from ECG signals, it achieves accuracy similar to that of contact PPG. However, PPG-based biometric authentication currently relies mainly on the morphological characteristics of the PPG signal rather than on IPI information.

In previous attacks on PPG-based biometric authentication, researchers almost always assume that the victim's PPG signal has been compromised. Karimian et al. [8] used a non-linear dynamic model [31] to extract model parameters from the victim's PPG signal and then transformed these parameters using two Gaussian functions as mapping functions. A spoofing attack was then launched using the forged PPG signal. Another study stole a victim's PPG signal stealthily by installing a malicious PPG sensor on a device that the victim would touch [32]. From the recorded signals, the attacker can use a waveform generator to simulate the victim's PPG signal and compromise the authentication. However, contact-based PPG signals are difficult to obtain without the victim's awareness, which significantly limits the application scenarios of these attacks.

III Spoofing attacks on PPG-based Biometric Authentication

In this work, our goal is to conduct a comprehensive security analysis of PPG-based biometrics. Fig. 3 depicts our scheme for spoofing attacks on biometrics. We extract the rPPG signal from the face video clip, and then extract the IPI and reconstruct the PPG signal from the rPPG signal. We consider various rPPG methods (CHROM, POS, LGI, PCA, CL_rPPG) to spoof biometric authentication, and we extract IPI information from the rPPG signal to spoof IPI-based security protocols.

III-A Threat Model

PPG-based biometrics, including user authentication and IPI-based security protocols, are vulnerable to spoofing attacks. This paper assumes that the attacker can obtain a video clip of the victim's face. In the age of social networking, it is easy to retrieve videos with human faces from social media sites; for instance, YouTube and Facebook (Meta) host numerous HD video clips that an attacker can download. Furthermore, an attacker can record a video clip of a targeted victim's face through a surveillance camera, especially since hidden cameras often go unnoticed by the victim. In contrast to previous attack assumptions, our method requires no leaked PPG signals. In addition, we assume that the attacker knows the input format of the user authentication model. For instance, the model takes as input a PPG signal of one heartbeat cycle, resampled to 60 samples, with its amplitude normalized to [0, 1].
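The assumed input format can be sketched as follows. We assume linear interpolation for resampling and min-max scaling for normalization; the authentication model in [3] may preprocess its input differently, and `to_model_input` is a hypothetical name.

```python
import numpy as np


def to_model_input(cycle, length=60):
    """Resample one heartbeat cycle to `length` samples and scale it to [0, 1]."""
    cycle = np.asarray(cycle, dtype=float)
    src = np.linspace(0.0, 1.0, num=len(cycle))
    dst = np.linspace(0.0, 1.0, num=length)
    resampled = np.interp(dst, src, cycle)        # linear interpolation (assumption)
    lo, hi = resampled.min(), resampled.max()
    if hi == lo:                                  # flat segment: avoid division by zero
        return np.zeros(length)
    return (resampled - lo) / (hi - lo)           # min-max normalisation


raw_cycle = 10.0 + 3.0 * np.sin(np.linspace(0.0, np.pi, 47))   # toy cycle with 47 samples
x = to_model_input(raw_cycle)
```

Because the same transformation can be applied to a cycle cut from an rPPG signal, an attacker who knows this format can feed rPPG cycles to the model directly.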

III-B rPPG Acquisition

Before acquiring the rPPG signal, we use MTCNN [33] to detect and isolate the face region in each video frame. Then the non-skin parts of the face region, such as clothes and hair, which provide no PPG information, are filtered out by the skin detection algorithm proposed in [34]. We thus obtain a sequence of video frames containing only facial skin, where each frame is stored as a $W \times H \times 3$ matrix; $W$ and $H$ are the frame's width and height, and 3 is the number of colour channels. The average RGB value over the whole skin area is used as the R, G, B values of the current frame. We apply a bandpass filter to retain signal frequencies between 0.65 Hz and 4.0 Hz (i.e., between 39 and 240 bpm). The method in [35] is then used to remove the breathing component of the signal. This preprocessing removes the signal's noise and breathing component (signal trend).
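A rough sketch of this preprocessing chain is given below, assuming the skin masks have already been computed (MTCNN and the skin detector in [34] are not reproduced). The third-order Butterworth filter is an assumption, and because the detrending method of [35] is only cited here, smoothness-priors detrending with a hypothetical λ = 300 is used as a stand-in. The sketch follows the order in the text: band-pass filtering, then detrending.

```python
import numpy as np
from scipy.signal import butter, filtfilt


def rgb_trace(frames, skin_mask):
    """Average R, G, B over the skin pixels of each frame.

    frames: (T, H, W, 3) array; skin_mask: (T, H, W) boolean array.
    Returns a (T, 3) array of per-frame mean colour values.
    """
    return np.stack([frames[t][skin_mask[t]].mean(axis=0) for t in range(len(frames))])


def bandpass(x, fs, lo=0.65, hi=4.0, order=3):
    """Zero-phase Butterworth band-pass keeping 0.65-4.0 Hz (39-240 bpm)."""
    b, a = butter(order, [lo, hi], btype="bandpass", fs=fs)
    return filtfilt(b, a, x, axis=0)


def detrend_smoothness_priors(z, lam=300.0):
    """Remove slow trends with smoothness-priors detrending (a stand-in for [35])."""
    n = len(z)
    eye = np.eye(n)
    d2 = np.diff(eye, n=2, axis=0)  # (n-2, n) second-order difference operator
    return (eye - np.linalg.inv(eye + lam ** 2 * d2.T @ d2)) @ z


# Synthetic 10 s clip at 30 FPS: a 1.2 Hz (72 bpm) pulse in the green channel plus a slow drift.
fs = 30.0
t = np.arange(300) / fs
frames = np.full((300, 4, 4, 3), 100.0)
frames[..., 1] += (0.5 * np.sin(2 * np.pi * 1.2 * t) + 0.01 * t)[:, None, None]
skin_mask = np.ones((300, 4, 4), dtype=bool)

rgb = rgb_trace(frames, skin_mask)                    # (300, 3)
clean = detrend_smoothness_priors(bandpass(rgb, fs))  # noise and trend removed
```

The dense matrix inverse keeps the sketch short; for long clips a sparse solver would be the more practical choice.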

After removing noise, we extract the rPPG signal from the consecutive skin frames. As discussed in Section II-B, there are multiple methods for obtaining rPPG signals; we consider CHROM, POS, LGI, PCA, and CL_rPPG.

CHROM is based on the skin optical reflection model. The model assumes that the light reflected by the skin consists of diffuse and specular reflections, and that the PPG signal is contained in the diffuse reflection. CHROM eliminates the specular reflection component by using chrominance signals. It uses simple mathematical operations to obtain the PPG signal quickly and is resilient to motion artifacts. $X$ and $Y$ represent two orthogonal axes, and $R$, $G$, and $B$ represent the three colour channels of the video. The rPPG signal $S$ is calculated as follows:

$$X = 3R - 2G \tag{1}$$

$$Y = 1.5R + G - 1.5B \tag{2}$$

$$\alpha = \frac{\sigma(X)}{\sigma(Y)} \tag{3}$$

$$S = X - \alpha Y \tag{4}$$

where $\sigma(\cdot)$ denotes the standard deviation.
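The following sketch applies Equations (1)-(4) to a spatially averaged RGB trace. It assumes each channel is first normalized by its temporal mean, as in the original CHROM formulation, and processes the whole trace as a single window, whereas the original method also band-pass filters $X$ and $Y$ and combines overlapping windows. The input is synthetic.

```python
import numpy as np


def chrom(rgb):
    """CHROM pulse estimate from a (T, 3) RGB trace, processed as one window."""
    rgbn = rgb / rgb.mean(axis=0)          # normalise each channel by its temporal mean
    r, g, b = rgbn[:, 0], rgbn[:, 1], rgbn[:, 2]
    x = 3.0 * r - 2.0 * g                  # X = 3R - 2G
    y = 1.5 * r + g - 1.5 * b              # Y = 1.5R + G - 1.5B
    alpha = np.std(x) / np.std(y)          # alpha = sigma(X) / sigma(Y)
    return x - alpha * y                   # S = X - alpha * Y


fs = 30.0
t = np.arange(300) / fs
pulse = np.sin(2 * np.pi * 1.2 * t)
# Hypothetical skin trace: a small pulse added to each channel with different weights.
rgb = 100.0 + np.outer(pulse, [0.3, 0.8, 0.5])
s = chrom(rgb)
```

The output polarity depends on the channel weights, so the sketch should be compared with a reference signal up to sign.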

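POS is similar to CHROM: it is also based on the skin reflection model and removes specular reflections to obtain a PPG signal with a higher signal-to-noise ratio. First, POS uses a sliding window to temporally normalize the RGB values within the window. The temporal normalization is:

$$\mu_W = \frac{1}{|W|} \sum_{i \in W} C(i) \tag{5}$$

$$C_n(i) = \operatorname{diag}(\mu_W)^{-1}\, C(i), \quad i \in W \tag{6}$$

where $W$ denotes the time window, $i$ indicates the $i$-th frame, and $C(i) = [R(i), G(i), B(i)]^\top$ is the spatially averaged RGB value of that frame. The spatial pixel averaging step can reduce camera quantization errors. The normalized signal is then projected onto the plane $P$, and the two projected components are combined into the rPPG signal $h$:

$$S(i) = P\, C_n(i), \quad P = \begin{bmatrix} 0 & 1 & -1 \\ -2 & 1 & 1 \end{bmatrix} \tag{7}$$

$$\alpha = \frac{\sigma(S_1)}{\sigma(S_2)} \tag{8}$$

$$h = S_1 + \alpha S_2 \tag{9}$$

where $S_1$ and $S_2$ are the two rows of $S$.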
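A compact sketch of POS follows. The projection plane is the one from the original POS method; the 1.6 s window length, the overlap-add with a one-frame step, and the synthetic input are our assumptions. It is illustrative only and is not the pyVHR implementation used in the experiments.

```python
import numpy as np


def pos(rgb, fs, win_sec=1.6):
    """POS pulse estimate from a (T, 3) RGB trace using sliding windows and overlap-add."""
    n = rgb.shape[0]
    win = int(round(win_sec * fs))                          # window length in frames
    proj = np.array([[0.0, 1.0, -1.0], [-2.0, 1.0, 1.0]])   # projection plane P
    h = np.zeros(n)
    for start in range(n - win + 1):
        c = rgb[start:start + win].T                        # (3, win) window of RGB values
        cn = c / c.mean(axis=1, keepdims=True)              # temporal normalisation per channel
        s1, s2 = proj @ cn                                  # project onto the plane
        hw = s1 + (np.std(s1) / np.std(s2)) * s2            # h = S1 + alpha * S2
        h[start:start + win] += hw - hw.mean()              # overlap-add of zero-mean segments
    return h


fs = 30.0
t = np.arange(300) / fs
pulse = np.sin(2 * np.pi * 1.2 * t)
rgb = 100.0 + np.outer(pulse, [0.3, 0.8, 0.5])   # hypothetical skin trace
h = pos(rgb, fs)
```

Samples near the clip boundaries are covered by fewer windows, so their amplitude is lower than in the interior.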

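LGI extracts local group invariance features of the heart rate from face videos. The singular value decomposition of the RGB signal matrix $C$ is first performed, such that $C = U \Sigma V^\top$. With $u_1$ denoting the first column of $U$, the projection matrix $P$ and the projected signal $Y$ are defined as:

$$P = I - u_1 u_1^\top \tag{10}$$

$$Y = P\, C \tag{11}$$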
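The LGI projection can be sketched in a few lines. Taking the second row of the projected signal as the pulse is an assumption that mirrors what we understand common implementations to do; the synthetic RGB trace is also made up.

```python
import numpy as np


def lgi(rgb):
    """LGI pulse estimate from a (T, 3) RGB trace."""
    c = rgb.T                                           # (3, T) signal matrix C
    u, _, _ = np.linalg.svd(c, full_matrices=False)     # C = U Sigma V^T
    u1 = u[:, :1]                                       # dominant left singular vector, (3, 1)
    proj = np.eye(3) - u1 @ u1.T                        # P = I - u1 u1^T
    y = proj @ c                                        # Y = P C removes the dominant component
    return y[1]                                         # second row taken as the pulse (assumption)


fs = 30.0
t = np.arange(300) / fs
pulse = np.sin(2 * np.pi * 1.2 * t)
rgb = np.array([100.0, 80.0, 60.0]) + np.outer(pulse, [0.3, 0.8, 0.5])   # hypothetical trace
s = lgi(rgb)
```

The dominant singular direction is mostly the mean skin colour and illumination intensity, which is why projecting it out leaves the pulsatile variation.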

Principal component analysis (PCA) is a statistical method for reducing the dimensionality of features. PCA uses an orthogonal transformation to project observations of potentially correlated variables onto a set of linearly uncorrelated variables. Applied to the RGB traces, it separates the periodic pulse signal from the noise signals.
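One way to apply PCA to the RGB traces is sketched below. The rule for picking the pulse component (the largest share of spectral power at a single frequency in the 0.65-4.0 Hz band) is our assumption, since the text does not specify how the component is chosen; the noisy synthetic trace is also hypothetical.

```python
import numpy as np


def pca_pulse(rgb, fs, band=(0.65, 4.0)):
    """Project a (T, 3) RGB trace onto its principal components and return the
    component whose strongest in-band frequency holds the largest share of power."""
    x = (rgb - rgb.mean(axis=0)) / rgb.std(axis=0)      # centre and scale each channel
    _, _, vt = np.linalg.svd(x, full_matrices=False)    # rows of vt are principal axes
    comps = x @ vt.T                                    # (T, 3) component time series
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])

    def band_peak_ratio(sig):
        power = np.abs(np.fft.rfft(sig)) ** 2
        return power[in_band].max() / power.sum()

    best = max(range(comps.shape[1]), key=lambda i: band_peak_ratio(comps[:, i]))
    return comps[:, best]


rng = np.random.default_rng(0)
fs = 30.0
t = np.arange(300) / fs
pulse = np.sin(2 * np.pi * 1.2 * t)
rgb = 100.0 + np.outer(pulse, [0.3, 0.8, 0.5]) + 0.05 * rng.standard_normal((300, 3))
s = pca_pulse(rgb, fs)
```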

CL_rPPG is a deep learning-based rPPG signal extraction method. Traditional methods may lose vital heartbeat-related information through manually designed features, whereas deep learning-based approaches recover the rPPG signal directly from the raw face video. CL_rPPG uses PhysNet as the rPPG estimator. PhysNet is a spatio-temporal convolutional network that represents both long-term and short-term spatio-temporal contextual features. CL_rPPG outputs the PPG signal through upsampling interpolation and 3D convolution. CL_rPPG has two versions: supervised (CL_rPPG_P) and self-supervised (CL_rPPG_F). In the supervised mode, CL_rPPG_P is trained with the maximum cross-correlation between the ground-truth PPG signal and the estimated PPG signal as the loss function. In the self-supervised mode, CL_rPPG_F uses a frequency resampler to artificially generate negative samples with high heart rates for contrastive training, and the power spectral density mean squared error (PSD-MSE) is used to measure the difference between two signals.
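The two signal comparisons mentioned above can be illustrated with simplified NumPy versions. These sketches only compute the quantities on whole signals, without band limiting; the CL_rPPG code computes its losses on network outputs in its own way, and the function names are hypothetical.

```python
import numpy as np


def normalized_psd(x):
    """Power spectrum of a zero-mean signal, normalised to sum to 1."""
    p = np.abs(np.fft.rfft(x - x.mean())) ** 2
    return p / p.sum()


def psd_mse(x, y):
    """Mean squared error between the normalised power spectra of two signals."""
    return float(np.mean((normalized_psd(x) - normalized_psd(y)) ** 2))


def max_xcorr(x, y):
    """Maximum normalised circular cross-correlation over all lags."""
    xs = (x - x.mean()) / x.std()
    ys = (y - y.mean()) / y.std()
    xc = np.fft.irfft(np.fft.rfft(xs) * np.conj(np.fft.rfft(ys)), n=len(x)) / len(x)
    return float(xc.max())


t = np.arange(300) / 30.0
a = np.sin(2 * np.pi * 1.2 * t)
shifted_score = max_xcorr(a, np.roll(a, 7))         # 1.0: a pure time shift is ignored
same_psd = psd_mse(a, a)                            # 0.0 for identical spectra
other_psd = psd_mse(a, np.sin(2 * np.pi * 2.0 * t))  # > 0 for a different heart rate
```

A supervised loss would maximize `max_xcorr` against the ground truth, while PSD-MSE penalizes spectral differences, which is what makes frequency-resampled negatives useful.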

III-C IPI Recovery and Quantification

To spoof IPI-based security protocols, we need to recover the IPI information from the signal. We mark the peak locations of the signal and take the time differences between consecutive peaks as the IPI. Since the normal heart rate of adults is 50-120 beats per minute [17], there are at most two heartbeats per second. To minimize sample loss, we only exclude data where the interval between heartbeats is less than 0.5 s.
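The peak-based IPI recovery can be sketched with SciPy's `find_peaks`. Here we assume the minimum spacing of 0.5 s (120 bpm) is enforced through the `distance` argument, which suppresses the smaller of two peaks closer than 0.5 s rather than discarding data; this is a simplification of the exclusion rule described above, and the synthetic signal is hypothetical.

```python
import numpy as np
from scipy.signal import find_peaks


def ipi_from_signal(x, fs, min_gap_s=0.5):
    """Detect systolic peaks and return inter-pulse intervals in seconds.

    Peaks closer than `min_gap_s` (i.e. faster than 120 bpm) are suppressed.
    """
    peaks, _ = find_peaks(x, distance=max(1, int(round(min_gap_s * fs))))
    return np.diff(peaks) / fs


fs = 32.0
t = np.arange(0, 10, 1 / fs)
x = np.sin(2 * np.pi * 1.0 * t)   # synthetic 60 bpm pulse
ipi = ipi_from_signal(x, fs)      # nine intervals of 1.0 s
```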

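For IPI sequences to be used in key exchange protocols, they must be quantized. There are two mainstream quantization methods: trend-based and quantile function-based [20]. For the quantile-based method, we use the Gray code as the quantization function. First, we normalize the IPI time series $x$ to [0, 1] by the following equation:

$$\hat{x}_i = \frac{x_i - \min(x)}{\max(x) - \min(x)} \tag{12}$$

Then, we multiply the normalized value by 256 and convert the result to an 8-bit Gray code:

$$n_i = \lfloor 256\, \hat{x}_i \rfloor, \qquad g_i = n_i \oplus (n_i \gg 1) \tag{13}$$

Finally, according to Equation 14, the Gray-coded value is converted from decimal to binary, and the binary number is left-padded with zeros to eight bits:

$$c_i = \operatorname{pad}_8\big(\operatorname{bin}(g_i)\big) \tag{14}$$

where $\operatorname{bin}(\cdot)$ converts a decimal number to binary and $\operatorname{pad}_8(\cdot)$ fills it with leading zeros up to eight bits.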
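Equations (12)-(14) can be sketched in Python as follows. One step is our assumption: since the maximum IPI maps to 256, which does not fit in 8 bits, the value is clipped to 255. The example IPIs (in milliseconds) are made up.

```python
import numpy as np


def gray_quantize(ipi):
    """Quantize an IPI sequence into 8-bit Gray-code strings, following Eqs. (12)-(14)."""
    ipi = np.asarray(ipi, dtype=float)
    x = (ipi - ipi.min()) / (ipi.max() - ipi.min())   # Eq. (12): min-max normalisation
    n = np.minimum((x * 256).astype(int), 255)        # scale by 256; clip so x = 1 fits in 8 bits
    gray = n ^ (n >> 1)                               # Eq. (13): binary-reflected Gray code
    return [format(int(g), "08b") for g in gray]      # Eq. (14): binary, zero-padded to 8 bits


codes = gray_quantize([800, 900, 1000, 850])   # hypothetical IPIs in milliseconds
# codes == ['00000000', '11000000', '10000000', '01100000']
```

Gray coding ensures that neighbouring quantization levels differ in a single bit, so a small IPI measurement error between the two parties flips few key bits.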

For the trend-based quantization, we divide the range of IPI values into 16 intervals that are equiprobable under a normal distribution. Our setting is identical to that of IMDGuard [36]. We then encode the IPI sequence according to Algorithm 1.
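Algorithm 1 is not reproduced here, but the binning step can be sketched. This assumes a normal distribution fitted to the sequence itself (sample mean and standard deviation) and uses Python's `statistics.NormalDist`; IMDGuard [36] may estimate the distribution differently, and `equiprobable_bins` is a hypothetical name.

```python
from statistics import NormalDist, mean, stdev


def equiprobable_bins(ipis, n_bins=16):
    """Label each IPI with the index of one of `n_bins` intervals that are
    equiprobable under a normal distribution fitted to the sequence."""
    dist = NormalDist(mean(ipis), stdev(ipis))
    edges = [dist.inv_cdf(k / n_bins) for k in range(1, n_bins)]   # n_bins - 1 inner edges
    width = (n_bins - 1).bit_length()                                 # 4 bits for 16 bins
    labels = []
    for v in ipis:
        idx = sum(v > e for e in edges)                               # number of edges below v
        labels.append(format(idx, f"0{width}b"))
    return labels


labels = equiprobable_bins([0.8, 0.9, 1.0, 1.1, 1.2])   # hypothetical IPIs in seconds
```

Equiprobable bins give each label roughly the same prior probability, which is what makes the resulting bits close to uniformly random.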

IV Attack Evaluation

In this section, we evaluate the effectiveness of spoofing attacks using rPPG signals on five common public datasets. Experiments on spoofing user authentication were performed on UBFC-PHYS [37], with a state-of-the-art model that we trained as the target user authentication model (see Section IV-B). The threat of rPPG signals to IPI-based security protocols is explored on four datasets: PURE [38], UBFC_rPPG [39] (UBFC_1, UBFC_2), LGI_PPGI [40], and COHFACE [41]. We use trend-based and quantile function-based quantization (see Section III-C) as the target IPI-based security protocols. We then use the rPPG signal (see Section III-B) to perform spoofing attacks.

IV-A Datasets

To analyze the security of PPG-based user authentication, we mainly use the UBFC-PHYS dataset [37]. Owing to video quality, the rPPG signals recovered from the other datasets are insufficient to threaten the biometric system.

UBFC-PHYS captures facial videos ( resolution, 35 FPS, 227,474 Kbps) of 56 participants. Participants are about 1 meter away from the camera and the light source, and artificial lighting ensures consistent lighting conditions. Each participant recorded a one-minute video in each of three states ('resting', 'talking', and 'calculating'). Meanwhile, an Empatica E4 wristband synchronously collected the PPG signal from the wrist at a sampling rate of 65 Hz. We exclude videos in which faces are not correctly detected in the video frames due to participant activity. We perform repeated independent experiments with each user as the victim, treating the other users as non-victims. As shown in Table I, we extracted rPPG signals of 150 to 287 cardiac cycles per state for each victim; the number of cardiac cycles varies across individuals.

Table I: Number of cardiac cycles used in the user authentication experiments.

| Signals | Number of Cardiac Cycles |
|---|---|
| Non-victim PPG Signal | 11800+ |
| Victim's rPPG Signal | 150-287 |

To analyze the threat of rPPG for IPI-based protocols, we considered the four most commonly used rPPG datasets, PURE [38], UBFC_rPPG [39], LGI_PPGI [40] and COHFACE [41].

PURE contains facial videos of 10 participants, each recorded in six settings (steady, talking, small/medium rotation, and slow/fast translation). Each video clip is one minute long, with a resolution of 640 × 480 and a frame rate of 30 FPS. A finger-clip pulse oximeter simultaneously acquired the PPG signal at a 65 Hz sampling rate. Although there was facial movement in the videos, all signals were captured in the resting state.

UBFC_rPPG uses a webcam to record video (640 × 480 resolution, 30 FPS) and transmissive pulse oximetry (62 Hz) to obtain the PPG signal. It consists of two sub-datasets: in UBFC_1, participants were asked to remain stationary; in UBFC_2, participants played a mathematical game in front of a green screen.

LGI_PPGI records facial videos in four scenarios (resting, head movement, fitness, and conversation in an urban setting). The video clips were captured by a webcam (640 × 480 resolution, 25 FPS), and the sampling rate of the pulse oximeter is 60 Hz. However, only the videos of 6 of the original 25 participants are publicly available, so our experiments were conducted on these 6 participants.

COHFACE contains facial video clips (640 × 480 resolution, 20 FPS) of 40 subjects, with simultaneously acquired PPG signals (256 Hz). Subjects were asked to sit still in front of the camera. The videos are heavily compressed.

Table II: Success rate of spoofing attacks on user authentication in each state.

| Attack | Resting | Talking | Calculating |
|---|---|---|---|
| Random Attack | 0.14 | 0.15 | 0.15 |
| Victim rPPG Signal Attack | 0.25 | 0.19 | 0.21 |
| Mean rPPG Signal Attack | 0.34 | 0.35 | 0.35 |

IV-B Experimental Implementation

We use Keras (https://www.tensorflow.org/) to implement the state-of-the-art PPG-based user authentication model [3]. We use the virtual heart rate toolbox pyVHR (https://github.com/phuselab/pyVHR) [42] to implement the traditional rPPG methods (CHROM, POS, LGI, PCA), as it provides a uniform evaluation standard. For the deep learning method CL_rPPG, we use the open-source code provided by its authors (https://github.com/ToyotaResearchInstitute/RemotePPG/tree/master/iccv).

For the user authentication model, we label the victim's signals as class 1 and other users' signals as class 0. We aim to compare the threat that rPPG signals pose to the user authentication system with that of other users' PPG signals. We treat each user in turn as the victim and use one-tenth of the other users' data to train the user authentication model, which is realistic for authentication systems: it is possible to collect a large amount of data from the target user, but only a small amount from other users. The remaining data from other users are used as random attacks, which serve as the baseline for the rPPG spoofing attack. To explore the impact of signals collected in different states on spoofing attacks, we exclude the PPG signals of the 'talking' and 'calculating' states when training the user authentication model. The performance of the user authentication model is measured by the Equal Error Rate (EER). We refer to the model's false acceptance rate (FAR) on rPPG signals as the success rate of spoofing; a higher success rate indicates that the authentication system is more vulnerable to such attacks. Finally, we report the average results over all users.
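The evaluation protocol can be sketched as follows, assuming the model outputs a score where higher means "enrolled user". Choosing the operating threshold at the EER point, the function names, and the score values are our assumptions for illustration.

```python
import numpy as np


def eer(genuine, impostor):
    """Equal error rate and the threshold where FAR and FRR are closest.

    Scores: higher means more likely to be the enrolled user.
    """
    genuine, impostor = np.asarray(genuine, float), np.asarray(impostor, float)
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    far = np.array([(impostor >= th).mean() for th in thresholds])  # impostors accepted
    frr = np.array([(genuine < th).mean() for th in thresholds])    # genuine samples rejected
    i = int(np.argmin(np.abs(far - frr)))
    return float((far[i] + frr[i]) / 2.0), float(thresholds[i])


def spoof_success_rate(attack_scores, threshold):
    """FAR of the attack samples (e.g. rPPG cycles) at the operating threshold."""
    return float((np.asarray(attack_scores, float) >= threshold).mean())


# Hypothetical model scores for one victim.
rate_eer, th = eer([0.9, 0.8, 0.7, 0.6], [0.1, 0.2, 0.3, 0.65])
success = spoof_success_rate([0.7, 0.5, 0.66, 0.2], th)   # 0.5
```

Averaging `success` over all victims would give a per-state figure comparable to those in Table II.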

For IPI-based security protocols, we evaluate the recovered heart rate (HR) and IPI. The primary metrics are mean absolute error (MAE) and root-mean-square error (RMSE). We also use the Pearson correlation coefficient (PC) to compare the similarity between the PPG signal and the rPPG signal. Since IPI-based security protocols derive multi-bit codes from the IPI, we use the bit hit rate (BHR) to show how many code bits can be recovered from the rPPG signal. BHR is the percentage of recovered code bits among all code bits.
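The metrics above can be computed as in this sketch. Treating BHR as the fraction of matching bit positions, compared over the shorter of the two codes, is our reading of the definition; the function names and example values are hypothetical.

```python
import numpy as np


def mae(ref, est):
    """Mean absolute error between reference and estimated values."""
    ref, est = np.asarray(ref, float), np.asarray(est, float)
    return float(np.mean(np.abs(ref - est)))


def rmse(ref, est):
    """Root-mean-square error between reference and estimated values."""
    ref, est = np.asarray(ref, float), np.asarray(est, float)
    return float(np.sqrt(np.mean((ref - est) ** 2)))


def pearson(ref, est):
    """Pearson correlation coefficient between two sequences."""
    return float(np.corrcoef(ref, est)[0, 1])


def bit_hit_rate(ref_bits, est_bits):
    """Fraction of bit positions where the attacker's code equals the legitimate code."""
    n = min(len(ref_bits), len(est_bits))
    return sum(r == e for r, e in zip(ref_bits[:n], est_bits[:n])) / n


ref_ipi = [0.80, 0.90, 1.00, 0.85]   # hypothetical IPIs from the contact PPG, in seconds
est_ipi = [0.82, 0.88, 1.00, 0.86]   # hypothetical IPIs recovered from rPPG
bhr = bit_hit_rate("10110010", "10010011")   # 6 of 8 bits match -> 0.75
```

A BHR of 0.5 is what random guessing achieves on uniformly random bits, which is the reference point used when judging the defence.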

IV-C Attack in User Authentication

Tab. II shows the results of random attacks versus attacks using the victim's rPPG signal. The victim's rPPG signal achieves a higher success rate than random attacks, nearly doubling it in the resting state (0.25 versus 0.14). The mean-treated rPPG signal achieves an even higher success rate of 0.34 in the resting state. Although the success rate of the rPPG signal drops in the talking and calculating states, the mean treatment mitigates this effect, and the attack's success rate is 0.35 on average. This is a threat to the authentication model, since real-world authentication systems often allow users to make multiple attempts. Our analysis also shows that, in addition to video quality, individual differences significantly affect the attack's success rate: for some users, the success rate of the rPPG signal reaches 0.98, whereas other users' rPPG signals cannot be used for spoofing attacks. For these users, we found a significant difference between the morphology of their rPPG and PPG signals.

Table III: MAE, RMSE, and Pearson correlation (PC) between the IPIs recovered by each rPPG method and the IPIs from the PPG signal. Datasets: P = PURE, L = LGI_PPGI, U1 = UBFC_1, U2 = UBFC_2, C = COHFACE. CL_P and CL_F denote CL_rPPG_P and CL_rPPG_F; "-" means no result is reported.

| Metric (dataset) | CHROM | POS | LGI | PCA | CL_P | CL_F |
|---|---|---|---|---|---|---|
| MAE (P) | 0.1153 | 0.0762 | 0.0721 | 0.0729 | 0.0322 | 0.0592 |
| RMSE (P) | 0.1564 | 0.1114 | 0.1045 | 0.1051 | 0.0517 | 0.0796 |
| PC (P) | 0.7764 | 0.7985 | 0.8275 | 0.8296 | 0.9926 | 0.9925 |
| MAE (L) | 0.1572 | 0.1019 | 0.1001 | 0.1162 | - | - |
| RMSE (L) | 0.2008 | 0.1452 | 0.1419 | 0.1598 | - | - |
| PC (L) | 0.3597 | 0.3132 | 0.3230 | 0.3212 | - | - |
| MAE (U1) | 0.0771 | 0.0385 | 0.0481 | 0.0816 | - | - |
| RMSE (U1) | 0.1207 | 0.0694 | 0.0906 | 0.1319 | - | - |
| PC (U1) | 0.6451 | 0.7380 | 0.6829 | 0.6099 | - | - |
| MAE (U2) | 0.1319 | 0.0776 | 0.0867 | 0.1131 | 0.0457 | 0.0753 |
| RMSE (U2) | 0.1849 | 0.1332 | 0.1508 | 0.1804 | 0.1064 | 0.1484 |
| PC (U2) | 0.7554 | 0.8474 | 0.6730 | 0.6018 | 0.9248 | 0.8787 |
| MAE (C) | 0.1319 | 0.2271 | 0.2166 | 0.2265 | 0.2167 | 0.2282 |
| RMSE (C) | 0.1849 | 0.2954 | 0.2836 | 0.2957 | 0.4252 | 0.3980 |
| PC (C) | 0.0110 | 0.0600 | 0.0340 | 0.0060 | 0.9850 | 0.9880 |

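Table IV: Bit hit rate (BHR) of the IPI-trend encoding extracted from the original videos. Datasets: P = PURE, L = LGI_PPGI, U1 = UBFC_1, U2 = UBFC_2, C = COHFACE; "-" means no result is reported.

| Metric (dataset) | CHROM | POS | LGI | PCA | CL_P | CL_F |
|---|---|---|---|---|---|---|
| BHR (P) | 0.5596 | 0.6307 | 0.6460 | 0.6302 | 0.6395 | 0.6016 |
| BHR (L) | 0.5138 | 0.5756 | 0.5669 | 0.5638 | - | - |
| BHR (U1) | 0.5544 | 0.6295 | 0.6460 | 0.5735 | - | - |
| BHR (U2) | 0.5486 | 0.5810 | 0.5790 | 0.5448 | 0.6344 | 0.6527 |
| BHR (C) | 0.5146 | 0.5205 | 0.5198 | 0.5214 | 0.5743 | 0.5813 |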

IV-D Attack in IPI-based security protocols

Tab. III reports the MAE and RMSE between the IPIs extracted from the videos and those extracted from the PPG signals. On the high-quality original videos, the MAE of the recovered IPI is mostly below 0.1. CL_P reaches a minimum MAE of 0.03, and its recovered IPI has a Pearson correlation coefficient (PC) as high as 0.99 with the IPI of the PPG signal, meaning that the IPIs extracted by CL_P and from the PPG signals are very similar.

Tab. IV shows the BHR of the IPI-trend encoding extracted from the original videos in different datasets. The BHR on the original video clips is around 0.6, implying that an attacker can correctly recover about 60% of the bits of the PPG-based IPI security protocol. On UBFC_2, CL_F reaches the highest BHR of 0.65. Due to compression, the results on COHFACE are close to a random guess for every rPPG extraction method. Nevertheless, the BHR of CL_F and CL_P still reaches 0.58 and 0.57, respectively.

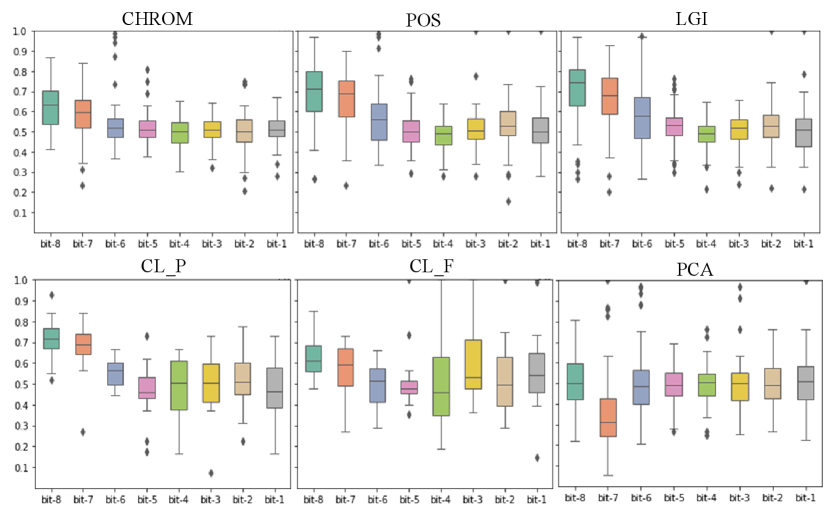

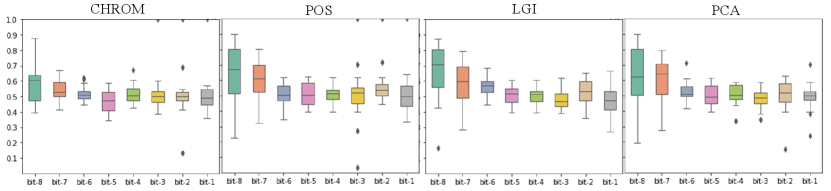

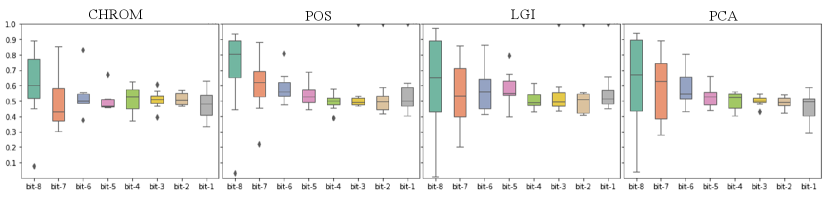

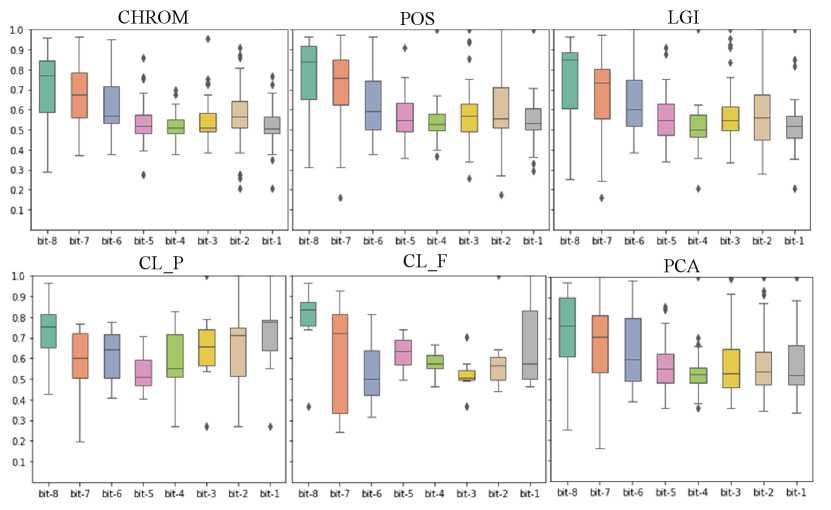

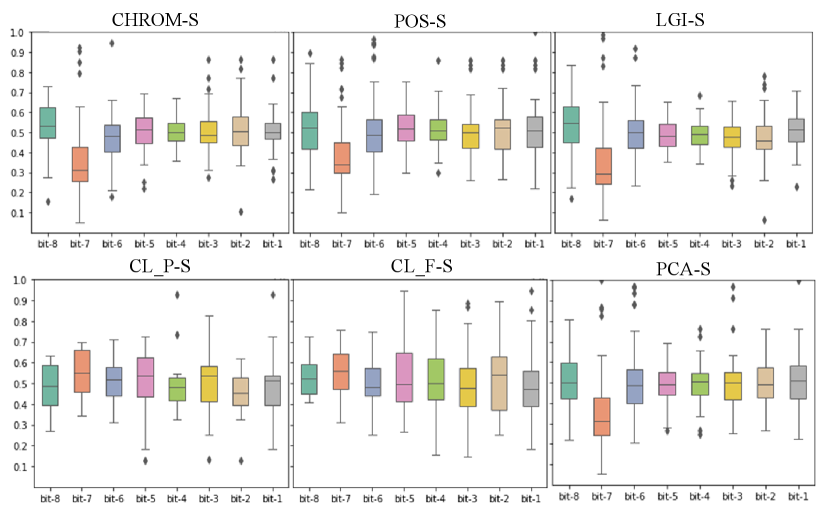

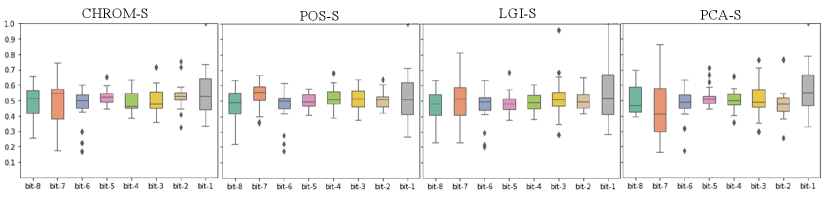

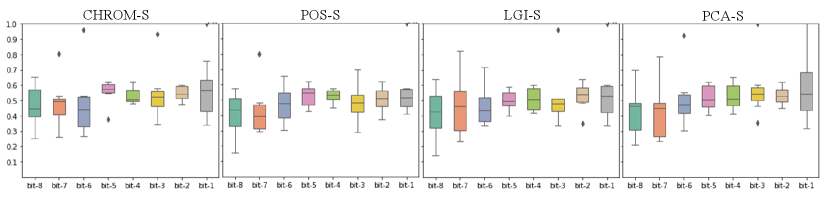

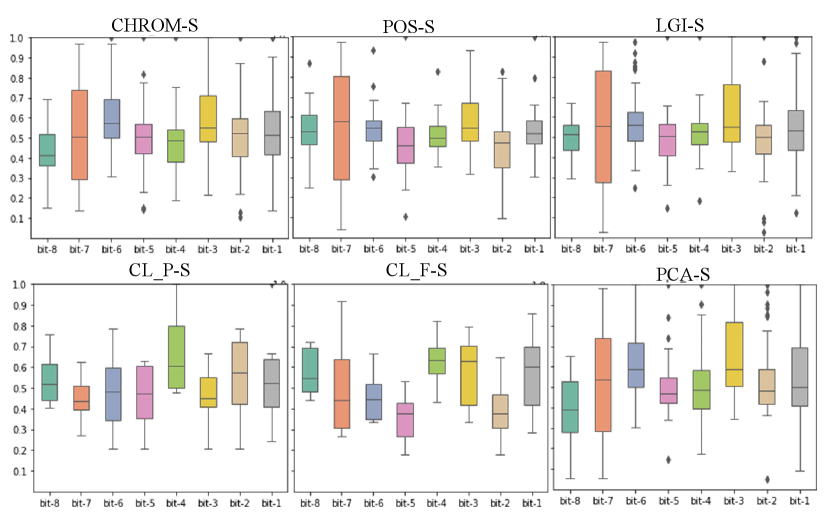

For quantile-based IPI coding, the randomness of a bit usually increases as its position decreases (lower-order bits are more random). Because the results on COHFACE were poor in the previous experiments, we do not conduct this experiment on COHFACE. As shown in Fig. 4, the BHR of the encoding extracted from the original video varies with the bit position: as the bit position decreases, so does the BHR. On LGI and UBFC_1, the BHR clearly decreases from 0.7 to 0.5 as the bit position decreases. On UBFC_2, the highest BHR of CL_F even exceeds 0.8. Although the BHR of CL_P does not vary with bit position, its overall BHR is higher than 0.65.

IV-E Discussion

The results in this section, obtained through an extensive analysis on widely used datasets, show that as rPPG extraction methods improve, state-of-the-art methods can accurately recover the IPI from rPPG signals. Both rPPG and PPG signals reflect individual cardiac activity, so they share a great deal of information. While this has facilitated the development of telemedicine, rPPG poses a real threat to PPG-based biometric authentication, for both user authentication and IPI-based security protocols. In user authentication, the spoofing attack achieves a success rate of 35%, 20 percentage points higher than that of random attacks. Even when the user is talking or calculating, the success rate of rPPG attacks remains higher than that of random attacks. In IPI-based security protocols, the state-of-the-art rPPG method recovers IPI with a BHR above 0.6. These results suggest that the rPPG and PPG signals share many morphological features, and the exposure of these features makes it easier for attackers to compromise PPG-based authentication systems.

V Defensive Strategies

In this section, we investigate passive and active defence strategies against the threats discussed in the previous section. Passive defence distinguishes regular from malicious access after an attacker has launched an attack, whereas active defence is applied before any attack is launched. For active defence, we hide the rPPG signal in the facial region of the video clip, making it difficult for an attacker to recover the PPG signal from the video.

V-1 Passive Defence

Passive defence is used primarily in the user authentication scenario, where the input is usually a fingertip or wrist PPG waveform. Because rPPG signals are collected from the face, they differ from fingertip or wrist PPG signals in both phase and morphology. Passive defence measures these phase and morphological differences to identify spoofing attacks.
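To make the notion of a phase difference concrete, the sketch below estimates the lag between two synchronously sampled pulse signals (for example, a facial rPPG trace and a fingertip PPG trace) from the peak of their cross-correlation. This is a generic signal-processing technique shown for illustration only, not the detector used in this paper; the function name and the assumption that both signals share one sampling rate are ours.

```python
import numpy as np


def estimate_lag_seconds(reference: np.ndarray, candidate: np.ndarray, fs: float) -> float:
    """Lag of `candidate` relative to `reference`, in seconds.

    Both signals are z-normalised and must share the sampling rate `fs`.
    A positive value means `candidate` arrives later than `reference`.
    """
    ref = (reference - reference.mean()) / reference.std()
    cand = (candidate - candidate.mean()) / candidate.std()
    # np.correlate(a, v, "full")[i] = sum_n a[n + k] * v[n] with k = i - (len(v) - 1)
    xcorr = np.correlate(cand, ref, mode="full")
    lags = np.arange(-(len(ref) - 1), len(cand))
    return float(lags[np.argmax(xcorr)] / fs)


def pulse(x: np.ndarray) -> np.ndarray:
    """Toy pulse waveform: a 1.2 Hz fundamental plus its second harmonic."""
    return np.sin(2 * np.pi * 1.2 * x) + 0.5 * np.sin(2 * np.pi * 2.4 * x)


# Synthetic demo: the same pulse shape, delayed by 0.2 s.
fs = 100.0
t = np.arange(1000) / fs
print(estimate_lag_seconds(pulse(t), pulse(t - 0.2), fs))  # prints 0.2
```

For periodic pulses the correlation also peaks at lags shifted by whole periods; the unnormalised "full" correlation favours the smallest shift because it sums over the largest overlap.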

Incremental Updating: Once an attack sample is available, we can react to the attack immediately by incrementally updating the model. In practice, attack samples can be obtained by capturing the rPPG signal from the user's face in advance; however, samples obtained this way may not accurately reproduce those used by an actual attacker.

Anomaly Detection: Also known as outlier analysis, this approach learns only from the user's own samples and then detects malicious samples as outliers. This paper uses Isolation Forest and One-Class SVM as anomaly detectors.
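A minimal sketch of the anomaly-detection route with scikit-learn's `IsolationForest` and `OneClassSVM`, both trained on the enrolled user's samples only. The 4-dimensional feature vectors are synthetic placeholders, and the spoofed samples are deliberately well separated from the genuine ones, which is far easier than real rPPG spoofs; `contamination=0.05` and `nu=0.05` are illustrative settings, not the configuration used in this paper.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)
# Hypothetical per-beat feature vectors (e.g. PPG morphology features).
genuine_train = rng.normal(0.0, 1.0, size=(300, 4))  # enrolled user's PPG beats
genuine_test = rng.normal(0.0, 1.0, size=(100, 4))   # later genuine attempts
spoofed = rng.normal(6.0, 1.0, size=(100, 4))        # stand-in for rPPG-derived beats

detectors = {
    "IsolationForest": IsolationForest(n_estimators=100, contamination=0.05, random_state=0),
    "OneClassSVM": OneClassSVM(kernel="rbf", gamma="scale", nu=0.05),
}

results = {}
for name, detector in detectors.items():
    detector.fit(genuine_train)  # no attack samples are needed for training
    # predict() returns +1 for inliers (accept) and -1 for outliers (reject).
    accept_genuine = float(np.mean(detector.predict(genuine_test) == 1))
    accept_spoof = float(np.mean(detector.predict(spoofed) == 1))
    results[name] = (accept_genuine, accept_spoof)
    print(f"{name}: genuine accepted {accept_genuine:.2f}, spoofs accepted {accept_spoof:.2f}")
```

Because a fixed fraction of genuine training samples is treated as outliers, some legitimate attempts are also rejected; this is the error that passive defence propagates to the authentication model.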

Passive defence usually takes effect only after an attack has succeeded. Moreover, both incremental updating and anomaly detection can degrade the performance of the original user authentication model.

V-2 Active Defence

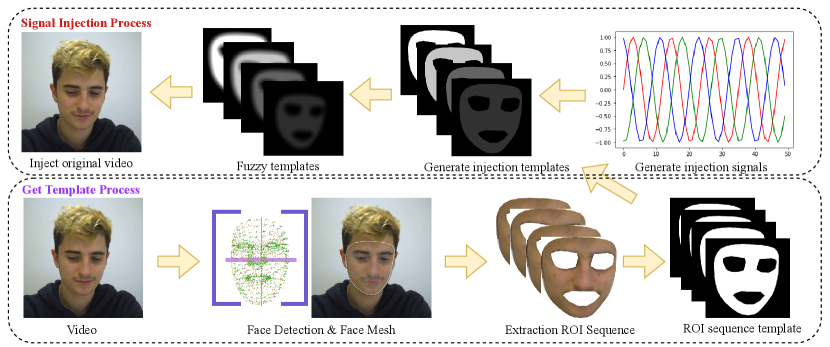

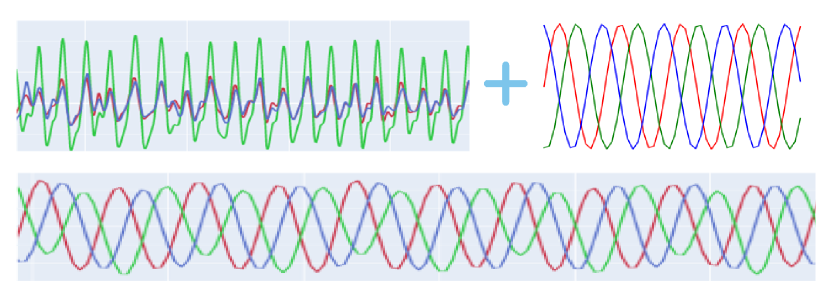

To address these limitations of passive defence, we propose an active defence strategy named SigH. As shown in Fig. 5, we hide the exposed rPPG signal by injecting a noise signal into the original video. This allows the defence to take effect before any attack while minimising the impact on the original model. Our active defence strategy consists of the following components:

Extraction of ROI area: First, we use BlazeFace [43] to detect the face area; it detects faces quickly (200 to 1000 FPS) on a mobile GPU. We then apply the method in [44] to obtain the facial landmarks; it enables real-time inference (100 to 1000 FPS) on individual camera inputs on mobile devices. This yields 468 3D facial landmarks that mark the locations of facial feature points, such as the tip of the nose and the tops of the left and right cheekbones.

| Method | F1-Score | Precision | Recall | Spoofing attack success rate (rPPG) | Spoofing attack success rate (Mean-rPPG) |
|---|---|---|---|---|---|
| Original Model | 0.8735 | 0.9127 | 0.8548 | 0.2517 | 0.3427 |
| Incremental Updating | 0.8269 | 0.8166 | 0.8645 | 0.0067 | 0.0275 |
| OneClassSVM | 0.7369 | 0.8141 | 0.7138 | 0.0420 | 0.0572 |
| IsolationForest | 0.7364 | 0.8265 | 0.7079 | 0.0430 | 0.0586 |

Create Injection Template: For each frame in the video, we use the facial region as the ROI, excluding the eye and mouth regions. In the template, the value of every RGB channel is set to 1 inside the ROI and 0 elsewhere:

$$T_t(x, y) = \begin{cases} 1, & (x, y) \in \mathrm{ROI}_t \\ 0, & \text{otherwise} \end{cases} \tag{15}$$

A sine signal $s(t)$ is then superimposed on the template:

$$\tilde{T}_t = s(t)\, T_t \tag{16}$$

The template is then blurred with a convolution kernel $K$, where $*$ denotes 2-D convolution:

$$\hat{T}_t = \tilde{T}_t * K \tag{17}$$

Finally, we superimpose the template sequence on the original video frames to complete the signal hiding, where $V'_t$ represents the $t$-th frame of the processed video and $V_t$ the $t$-th frame of the original video clip:

$$V'_t = V_t + \hat{T}_t \tag{18}$$
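The injection steps can be sketched in NumPy as follows. This is an illustrative approximation, not the authors' SigH implementation. It assumes that the per-frame ROI mask has already been computed (for instance by filling the face-mesh polygon and removing the eye and mouth polygons), that the sine signal is applied by multiplying the 0/1 template, that the convolution kernel is a k × k box (mean) filter, and that the same offset is added to all three colour channels. The sine frequency, amplitude, and kernel size are hypothetical choices.

```python
import numpy as np


def box_blur(img: np.ndarray, k: int) -> np.ndarray:
    """Separable k x k mean filter, standing in for the convolution kernel K."""
    kernel = np.ones(k) / k
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="same"), 0, rows)


def hide_signal(frames: np.ndarray, roi_masks: np.ndarray, fs: float,
                freq_hz: float = 1.8, amplitude: float = 2.0, k: int = 9) -> np.ndarray:
    """Inject a blurred sinusoid into the facial ROI of every frame.

    frames:    uint8 array of shape (T, H, W, 3), the original video frames V_t
    roi_masks: bool array of shape (T, H, W), True inside the ROI
               (face region with eyes and mouth already excluded)
    """
    out = np.empty_like(frames)
    for t, (frame, mask) in enumerate(zip(frames, roi_masks)):
        template = mask.astype(np.float64)                  # 1 in the ROI, 0 elsewhere
        s_t = amplitude * np.sin(2 * np.pi * freq_hz * t / fs)
        template = box_blur(s_t * template, k)              # modulate, then blur
        # Add the same offset to R, G and B, then round and clip to valid pixels.
        processed = np.rint(frame + template[..., None])
        out[t] = np.clip(processed, 0, 255).astype(np.uint8)
    return out


# Synthetic demo: a flat 32x32 "face" whose green channel pulses at 1.2 Hz.
fs, n_frames = 30.0, 300
t = np.arange(n_frames)
frames = np.full((n_frames, 32, 32, 3), 120.0)
frames[..., 1] = (120 + np.sin(2 * np.pi * 1.2 * t / fs))[:, None, None]
frames = np.rint(frames).astype(np.uint8)
masks = np.zeros((n_frames, 32, 32), dtype=bool)
masks[:, 8:24, 8:24] = True


def dominant_freq(video: np.ndarray) -> float:
    """Peak frequency (Hz) of the mean green value inside the demo ROI."""
    trace = video[:, 8:24, 8:24, 1].mean(axis=(1, 2))
    spectrum = np.abs(np.fft.rfft(trace - trace.mean()))
    return float(np.fft.rfftfreq(len(trace), d=1 / fs)[np.argmax(spectrum)])


hidden = hide_signal(frames, masks, fs, freq_hz=1.8, amplitude=3.0)
print(dominant_freq(frames), dominant_freq(hidden))  # about 1.2 Hz, then about 1.8 Hz
```

In this toy setting, the dominant frequency of the mean green trace inside the ROI moves from the simulated pulse (1.2 Hz) to the injected tone (1.8 Hz), while pixels far from the ROI are left unchanged.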

Fig. 6 shows the results of our signal injection in the video. We observe that the injected signal is completely different from the original signal.

| Video Type | Attack success rate (CHROM-rPPG) | Attack success rate (Mean-rPPG) |
|---|---|---|
| Original Video | 0.7005 | 1.0000 |
| SigH Video | 0.0291 | 0.0577 |

VI Defence Evaluation

In this section, we evaluate the defensive strategies described in Section V, using the same datasets as in the previous section. We use MediaPipe (https://google.github.io/mediapipe/) to obtain the face mesh for each video frame. The ROI template is then extracted from the face mesh using OpenCV (https://opencv.org/), after which we perform signal injection on the templates.

VI-A Defence in User Authentication

Tab. V shows the performance of the passive defence strategies. All passive defence strategies significantly reduce the attack's success rate: the success rate of rPPG attacks drops from 0.25 to 0.04 or below, and that of mean-treated rPPG attacks to 0.05 or below. However, we observe that passive defence seriously affects the performance of the authentication model. Incremental updating has the least impact on the authentication system, with the model's F1-Score dropping from 0.87 to 0.82; while it reduces the success rate of rPPG attacks, it also makes the model generalize less well. IsolationForest and OneClassSVM have a larger impact, reducing the model's F1-Score to around 0.73. Unfortunately, while blocking attacks, these detectors propagate their errors to the authentication model.

We propose an active defence strategy to solve these problems of passive defence. To better demonstrate the effect of the active defence strategy, we selected 13 video clips from the original videos whose attack success rate exceeded 0.5. As shown in Tab. VI, the success rate of attacks using the rPPG signal extracted from the original videos reaches 0.7, and that of the mean-treated rPPG signal even reaches 1. For videos processed by the active defence strategy, the success rate of rPPG attacks drops to 0.02 and that of mean-treated rPPG attacks to 0.05, nearly matching the passive defence. Moreover, the active defence strategy does not affect the original model's performance.

| Metric (dataset) | CHROM | POS | LGI | PCA | CL_P | CL_F |
|---|---|---|---|---|---|---|
| MAE-S (P) | 0.1729 | 0.2087 | 0.1661 | 0.1780 | 0.2039 | 0.1691 |
| RMSE-S (P) | 0.2222 | 0.2536 | 0.2153 | 0.2279 | 0.2422 | 0.2086 |
| PC-S (P) | -0.046 | -0.037 | -0.057 | -0.055 | 0.2897 | 0.3917 |
| MAE-S (L) | 0.2782 | 0.2676 | 0.2872 | 0.2899 | - | - |
| RMSE-S (L) | 0.3419 | 0.3274 | 0.3466 | 0.3509 | - | - |
| PC-S (L) | 0.0317 | 0.0112 | -0.009 | -0.005 | - | - |
| MAE-S (U1) | 0.3141 | 0.2481 | 0.2472 | 0.3417 | - | - |
| RMSE-S (U1) | 0.3687 | 0.3061 | 0.3051 | 0.3893 | - | - |
| PC-S (U1) | 0.0003 | 0.0115 | 0.0098 | -0.050 | - | - |
| MAE-S (U2) | 0.3597 | 0.3063 | 0.3170 | 0.4012 | 0.3610 | 0.2728 |
| RMSE-S (U2) | 0.4077 | 0.3574 | 0.3689 | 0.4403 | 0.4045 | 0.3200 |
| PC-S (U2) | 0.0397 | 0.0058 | 0.0163 | 0.0447 | 0.4759 | 0.7647 |
| MAE-S (C) | 0.2884 | 0.2948 | 0.2943 | 0.2942 | 0.4112 | 0.4629 |
| RMSE-S (C) | 0.3209 | 0.3242 | 0.3237 | 0.3234 | 0.4873 | 0.5359 |
| PC-S (C) | -0.007 | -0.033 | -0.025 | -0.023 | 0.3360 | 0.2036 |

| Metric (dataset) | CHROM | POS | LGI | PCA | CL_P | CL_F |
|---|---|---|---|---|---|---|
| BHR-S (P) | 0.5516 | 0.5923 | 0.5557 | 0.5758 | 0.5410 | 0.5935 |
| BHR-S (L) | 0.5412 | 0.5208 | 0.5179 | 0.5362 | - | - |
| BHR-S (U1) | 0.6042 | 0.5210 | 0.5374 | 0.5700 | - | - |
| BHR-S (U2) | 0.5735 | 0.5563 | 0.5406 | 0.5787 | 0.5709 | 0.5283 |
| BHR-S (C) | 0.5388 | 0.5432 | 0.5397 | 0.5396 | 0.5312 | 0.5259 |

VI-B Defence in IPI-based security protocols

Compared with the best IPI results from the original videos (CL_P on PURE), the IPI recovered from our processed videos has an MAE that increases to 0.2 and a PC that drops to 0.28. On the other datasets, the error also increases to some extent. However, the change is minimal on COHFACE because its video clips are compressed: the MAE of the IPI recovered from COHFACE already reaches 0.2 s, and its PC is around 0.05 for all methods except CL_P and CL_F, indicating that the IPI recovered by the other methods correlates poorly with the PPG IPI. After processing the video, the PC of even the state-of-the-art method drops to approximately 0.3.
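The MAE, RMSE and Pearson correlation (PC) used to compare recovered and reference IPIs can be computed as below. The sketch assumes that both IPI series are already aligned beat by beat and expressed in seconds; the function name and the sample values are hypothetical.

```python
import numpy as np


def ipi_errors(ipi_ref: np.ndarray, ipi_est: np.ndarray) -> dict:
    """MAE and RMSE (seconds) plus Pearson correlation of beat-aligned IPI series."""
    err = ipi_est - ipi_ref
    return {
        "MAE": float(np.mean(np.abs(err))),
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "PC": float(np.corrcoef(ipi_ref, ipi_est)[0, 1]),
    }


# Hypothetical reference (PPG) and recovered (rPPG) IPIs in seconds.
ref = np.array([0.80, 0.85, 0.90, 0.95])
est = np.array([0.82, 0.83, 0.92, 0.93])
print(ipi_errors(ref, est))  # MAE and RMSE are both 0.02 s here
```

A low MAE does not by itself imply a high PC: an estimator that outputs a nearly constant IPI close to the mean can have a small error yet carry almost no beat-to-beat information, which is why both kinds of metric are reported.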

In contrast to the results in Section IV-D, Tab. VIII shows that the BHR of videos processed by the active defence method is approximately 0.5, which is almost equal to random guessing. As shown in Fig. 7, the BHR of the processed videos stays at approximately 0.5 regardless of bit position. For example, on the original videos (Fig. 4(a)), the BHR of the LGI and POS methods falls gradually from 0.7 to 0.5 as the bit position decreases, whereas on the processed videos (Fig. 7(a)) the BHR does not change with bit position. This indicates that our active defence method effectively hides the user's PPG signal in the video.

VI-C Discussion

A common strategy to combat spoofing attacks is to introduce a detection component. However, the detection component is usually placed in front of the recognition pipeline, so its errors propagate to the recognition model and degrade overall recognition performance. In particular, when the spoofed signal is similar to a real signal, the model's false-negative rate increases significantly. We also observed that low-quality videos reduce the success rate of spoofing attacks. For example, the rPPG signals obtained from the COHFACE dataset are of relatively poor quality, and the attack success rate on COHFACE is lower than on the other datasets. Compared with the other datasets, the video clips in COHFACE have a frame rate of 20 FPS, resolution, and a bit rate of 255 Kbps. However, preventing attacks by sacrificing video quality is not always practical. We therefore propose an active defence that prevents leakage of the target's PPG signal by modifying the RGB values of the facial-skin pixels in each video frame. Video hosting platforms such as YouTube and TikTok could prevent such signal leakage by batch-processing users' videos.

VII Conclusion

Recently, emerging biometric solutions based on physiological signals have gained widespread attention. In particular, the PPG signal is easy to collect and has been considered unobservable to remote attackers. However, rPPG breaks this unobservability. To comprehensively analyze the impact of rPPG, we conducted experiments on five datasets (PURE, UBFC_rPPG, UBFC_Phys, LGI_PPGI, and COHFACE). We found that both user authentication and IPI-based security protocols are vulnerable to rPPG signal spoofing attacks. To mitigate these attacks, we propose an active defence scheme which, compared with passive defence, has a negligible impact on the performance of the original model. We recommend that video platforms apply active defence strategies in bulk before releasing HD videos, to mitigate rPPG signal leakage.

References

- [1] M. Wang, J. Hu, and H. A. Abbass, “Brainprint: EEG biometric identification based on analyzing brain connectivity graphs,” Pattern Recognition, vol. 105, p. 107381, 2020.

- [2] Y. Huang, G. Yang, K. Wang, H. Liu, and Y. Yin, “Learning joint and specific patterns: A unified sparse representation for off-the-person ECG biometric recognition,” IEEE Transactions on Information Forensics and Security, vol. 16, pp. 147–160, 2021.

- [3] D. Y. Hwang, B. Taha, D. S. Lee, and D. Hatzinakos, “Evaluation of the time stability and uniqueness in PPG-based biometric system,” IEEE Transactions on Information Forensics and Security, vol. 16, pp. 116–130, 2021.

- [4] M. Hu, F. Qian, D. Guo, X. Wang, L. He, and F. Ren, “ETA-rPPGNet: Effective time-domain attention network for remote heart rate measurement,” IEEE Transactions on Instrumentation and Measurement, vol. 70, pp. 1–12, 2021.

- [5] A. Dasari, S. K. A. Prakash, L. A. Jeni, and C. S. Tucker, “Evaluation of biases in remote photoplethysmography methods,” NPJ Digital Medicine, vol. 4, no. 1, pp. 1–13, 2021.

- [6] R. M. Seepers, W. Wang, G. de Haan, I. Sourdis, and C. Strydis, “Attacks on heartbeat-based security using remote photoplethysmography,” IEEE Journal of Biomedical and Health Informatics, vol. 22, no. 3, pp. 714–721, 2018.

- [7] A. Calleja, P. Peris-Lopez, and J. E. Tapiador, “Electrical heart signals can be monitored from the moon: Security implications for IPI-based protocols,” in Proceedings of the Information Security Theory and Practice, R. N. Akram and S. Jajodia, Eds., vol. 9311. Cham: Springer International Publishing, 2015, pp. 36–51.

- [8] N. Karimian, “How to attack PPG biometric using adversarial machine learning,” in Proceedings of the Autonomous Systems: Sensors, Processing, and Security for Vehicles and Infrastructure, vol. 11009. International Society for Optics and Photonics, 2019, p. 1100909.

- [9] Y. Gu, Y. Zhang, and Y. Zhang, “A novel biometric approach in human verification by photoplethysmographic signals,” in Proceedings of the 4th International IEEE EMBS Special Topic Conference on Information Technology Applications in Biomedicine, 2003, pp. 13–14.

- [10] A. R. Kavsaoğlu, K. Polat, and M. R. Bozkurt, “A novel feature ranking algorithm for biometric recognition with PPG signals,” Computers in Biology and Medicine, vol. 49, pp. 1–14, 2014.

- [11] K. Takazawa, N. Tanaka, M. Fujita, O. Matsuoka, T. Saiki, M. Aikawa, S. Tamura, and C. Ibukiyama, “Assessment of vasoactive agents and vascular aging by the second derivative of photoplethysmogram waveform,” Hypertension, vol. 32, no. 2, pp. 365–370, 1998.

- [12] A. A. Awad, A. S. Haddadin, H. Tantawy, T. M. Badr, R. G. Stout, D. G. Silverman, and K. H. Shelley, “The relationship between the photoplethysmographic waveform and systemic vascular resistance,” Journal of Clinical Monitoring and Computing, vol. 21, no. 6, pp. 365–372, 2007.

- [13] S. C. Millasseau, R. Kelly, J. Ritter, and P. Chowienczyk, “Determination of age-related increases in large artery stiffness by digital pulse contour analysis,” Clinical Science, vol. 103, no. 4, pp. 371–377, 2002.

- [14] X. Zhang, Z. Qin, and Y. Lyu, “Biometric authentication via finger photoplethysmogram,” in Proceedings of the 2nd International Conference on Computer Science and Artificial Intelligence (CSAI ’18). New York, NY, USA: Association for Computing Machinery, 2018, pp. 263–267.

- [15] U. Yadav, S. N. Abbas, and D. Hatzinakos, “Evaluation of PPG biometrics for authentication in different states,” in Proceedings of the International Conference on Biometrics, 2018, pp. 277–282.

- [16] R. Donida Labati, V. Piuri, F. Rundo, F. Scotti, and C. Spampinato, “Biometric recognition of PPG cardiac signals using transformed spectrogram images,” in Proceedings of the ICPR Workshop on Mobile and Wearable Biometrics (WMWB), vol. 12668. Springer, 2021, pp. 244–257.

- [17] D. Y. Hwang, B. Taha, and D. Hatzinakos, “PBGAN: Learning PPG representations from GAN for time-stable and unique verification system,” IEEE Transactions on Information Forensics and Security, vol. 16, pp. 5124–5137, 2021.

- [18] N. Akhter, H. Gite, G. Rabbani, and K. Kale, “Heart rate variability for biometric authentication using time-domain features,” in Proceedings of the International Symposium on Security in Computing and Communication. Kochi, India: Springer, 2015, pp. 168–175.

- [19] D. Biswas, L. Everson, M. Liu, M. Panwar, B.-E. Verhoef, S. Patki, C. H. Kim, A. Acharyya, C. Van Hoof, M. Konijnenburg, and N. Van Helleputte, “CorNET: Deep learning framework for PPG-based heart rate estimation and biometric identification in ambulant environment,” IEEE Transactions on Biomedical Circuits and Systems, vol. 13, no. 2, pp. 282–291, 2019.

- [20] J. Zhang, Y. Zheng, W. Xu, and Y. Chen, “H2K: A heartbeat-based key generation framework for ECG and PPG signals,” IEEE Transactions on Mobile Computing, 2021, doi:10.1109/TMC.2021.3096384.

- [21] H. Chizari and E. Lupu, “Extracting randomness from the trend of IPI for cryptographic operations in implantable medical devices,” IEEE Transactions on Dependable and Secure Computing, vol. 18, no. 2, pp. 875–888, 2019.

- [22] Q. Lin, W. Xu, J. Liu, A. Khamis, W. Hu, M. Hassan, and A. Seneviratne, “H2B: Heartbeat-based secret key generation using piezo vibration sensors,” in Proceedings of the 18th International Conference on Information Processing in Sensor Networks, 2019, pp. 265–276.

- [23] W. Verkruysse, L. O. Svaasand, and J. S. Nelson, “Remote plethysmographic imaging using ambient light,” Optics Express, vol. 16, no. 26, pp. 21434–21445, 2008.

- [24] M.-Z. Poh, D. J. McDuff, and R. W. Picard, “Non-contact, automated cardiac pulse measurements using video imaging and blind source separation,” Optics Express, vol. 18, no. 10, pp. 10762–10774, 2010.

- [25] M. Lewandowska, J. Rumiński, T. Kocejko, and J. Nowak, “Measuring pulse rate with a webcam — a non-contact method for evaluating cardiac activity,” in Proceedings of the Federated Conference on Computer Science and Information Systems. IEEE, 2011, pp. 405–410.

- [26] G. De Haan and V. Jeanne, “Robust pulse rate from chrominance-based rPPG,” IEEE Transactions on Biomedical Engineering, vol. 60, no. 10, pp. 2878–2886, 2013.

- [27] W. Chen and D. McDuff, “DeepPhys: Video-based physiological measurement using convolutional attention networks,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 349–365.

- [28] Z. Yu, W. Peng, X. Li, X. Hong, and G. Zhao, “Remote heart rate measurement from highly compressed facial videos: an end-to-end deep learning solution with video enhancement,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 151–160.

- [29] E. Lee, E. Chen, and C.-Y. Lee, “Meta-rPPG: Remote heart rate estimation using a transductive meta-learner,” in Proceedings of the European Conference on Computer Vision. Springer, 2020, pp. 392–409.

- [30] J. Gideon and S. Stent, “The way to my heart is through contrastive learning: Remote photoplethysmography from unlabelled video,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2021, pp. 3995–4004.

- [31] P. E. McSharry, G. D. Clifford, L. Tarassenko, and L. A. Smith, “A dynamical model for generating synthetic electrocardiogram signals,” IEEE Transactions on Biomedical Engineering, vol. 50, no. 3, pp. 289–294, 2003.

- [32] S. Hinatsu, D. Suzuki, H. Ishizuka, S. Ikeda, and O. Oshiro, “Basic study on presentation attacks against biometric authentication using photoplethysmogram,” Advanced Biomedical Engineering, vol. 10, pp. 101–112, 2021.

- [33] K. Zhang, Z. Zhang, Z. Li, and Y. Qiao, “Joint face detection and alignment using multitask cascaded convolutional networks,” IEEE Signal Processing Letters, vol. 23, no. 10, pp. 1499–1503, 2016.

- [34] S. Kolkur, D. Kalbande, P. Shimpi, C. Bapat, and J. Jatakia, “Human skin detection using RGB, HSV and YCbCr color models,” in Proceedings of the International Conference on Communication and Signal Processing. Atlantis Press, 2016, pp. 324–332.

- [35] M. P. Tarvainen, P. O. Ranta-Aho, and P. A. Karjalainen, “An advanced detrending method with application to HRV analysis,” IEEE Transactions on Biomedical Engineering, vol. 49, no. 2, pp. 172–175, 2002.

- [36] F. Xu, Z. Qin, C. C. Tan, B. Wang, and Q. Li, “IMDGuard: Securing implantable medical devices with the external wearable guardian,” in 2011 Proceedings IEEE INFOCOM. IEEE, 2011, pp. 1862–1870.

- [37] R. Meziatisabour, Y. Benezeth, P. De Oliveira, J. Chappe, and F. Yang, “UBFC-Phys: A multimodal database for psychophysiological studies of social stress,” IEEE Transactions on Affective Computing, 2021, doi:10.1109/TAFFC.2021.3056960.

- [38] R. Stricker, S. Müller, and H.-M. Gross, “Non-contact video-based pulse rate measurement on a mobile service robot,” in The 23rd IEEE International Symposium on Robot and Human Interactive Communication. IEEE, 2014, pp. 1056–1062.

- [39] S. Bobbia, R. Macwan, Y. Benezeth, A. Mansouri, and J. Dubois, “Unsupervised skin tissue segmentation for remote photoplethysmography,” Pattern Recognition Letters, vol. 124, pp. 82–90, 2019.

- [40] C. S. Pilz, S. Zaunseder, J. Krajewski, and V. Blazek, “Local group invariance for heart rate estimation from face videos in the wild,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2018, pp. 1254–1262.

- [41] G. Heusch, A. Anjos, and S. Marcel, “A reproducible study on remote heart rate measurement,” arXiv preprint arXiv:1709.00962, 2017.

- [42] G. Boccignone, D. Conte, V. Cuculo, A. D’Amelio, G. Grossi, and R. Lanzarotti, “An open framework for remote-PPG methods and their assessment,” IEEE Access, vol. 8, pp. 216083–216103, 2020.

- [43] V. Bazarevsky, Y. Kartynnik, A. Vakunov, K. Raveendran, and M. Grundmann, “BlazeFace: Sub-millisecond neural face detection on mobile GPUs,” arXiv preprint arXiv:1907.05047, 2019.

- [44] Y. Kartynnik, A. Ablavatski, I. Grishchenko, and M. Grundmann, “Real-time facial surface geometry from monocular video on mobile GPUs,” arXiv preprint arXiv:1907.06724, 2019.