High-Dimensional Inference for Generalized Linear Models

with Hidden Confounding

Abstract

Statistical inferences for high-dimensional regression models have been extensively studied for their wide applications ranging from genomics, neuroscience, to economics. However, in practice, there are often potential unmeasured confounders associated with both the response and covariates, which can lead to invalidity of standard debiasing methods. This paper focuses on a generalized linear regression framework with hidden confounding and proposes a debiasing approach to address this high-dimensional problem, by adjusting for the effects induced by the unmeasured confounders. We establish consistency and asymptotic normality for the proposed debiased estimator. The finite sample performance of the proposed method is demonstrated through extensive numerical studies and an application to a genetic data set.

Keywords: High-dimensional inference; Generalized linear model; Latent variable; Unmeasured confounder.

1 Introduction

Statistical inferences for high-dimensional regression models have received a growing interest due to the increasing number of complex data sets with high-dimensional covariates that are collected across many different scientific disciplines ranging from genomics to social science to econometrics (Peng et al., 2010; Belloni et al., 2012; Fan et al., 2014; Bühlmann et al., 2014). One of the most popularly used high-dimensional regression methods is the lasso linear regression, which assumes that the underlying regression coefficients are sparse (Tibshirani, 1996). However, the lasso penalty introduces non-negligible bias that renders high-dimensional statistical inference challenging (Wainwright, 2019). To address this challenge, Zhang and Zhang (2014) and van de Geer et al. (2014) proposed the debiasing method to construct confidence intervals for the lasso regression coefficients: the main idea is to first obtain the lasso estimator, and then correct for the bias of the lasso estimator using a low-dimensional projection method. We refer the reader to Javanmard and Montanari (2014), Belloni et al. (2014), Ning and Liu (2017), Chernozhukov et al. (2018), among others, for detailed discussions of the debiasing approach, and also Wainwright (2019) for an overview of high-dimensional statistical inference.

The aforementioned studies were established under the assumption that there are no unmeasured confounders that are associated with both the response and covariates. However, this assumption is often violated in observational studies. For instance, in genetic studies, the effect of certain segments of DNA on the gene expression may be confounded by population structure and microarray expression artifacts (Listgarten et al., 2010). Another example is in healthcare studies where the effect of nutrients intake on the risk of cancer may be confounded by physical wellness, social class, and behavioral factors (Fewell et al., 2007). Without adjusting for the unmeasured confounders, the resulting inferences from the standard debiasing methods could be biased and consequently, lead to spurious scientific discoveries.

Various methods have been proposed to perform valid statistical inferences for regression parameters in the presence of hidden confounders. One commonly used approach is instrumental variables regression, which typically requires domain knowledge to identify valid instrumental variables, making it challenging for high-dimensional applications (Kang et al., 2016; Burgess et al., 2017; Guo et al., 2018; Windmeijer et al., 2019). Recently, under a relaxed linear structural equation modeling framework, Guo et al. (2022) proposed a deconfounding approach for statistical inference for individual regression coefficients. Fan et al. (2023) developed a factor-adjusted debiasing method and conducted statistical estimation and inference for the coefficient vector. However, these two methods rely on the linearity assumption between the response and covariates, which may not hold when the response is not continuous but categorical (binary, count, etc.). Such categorical data commonly occurs in genetic, biomedical, and social science applications, and thus alternative methods are needed to perform valid statistical inferences in these cases.

To bridge the gap in the existing literature, we propose a novel framework to perform statistical inference in the context of high-dimensional generalized linear models with hidden confounding. The main idea of our proposed method involves estimating the unmeasured confounders using high-dimensional factor analysis techniques and then applying the debiasing method on the regression coefficient of interest, with the estimated unmeasured confounders treated as surrogate variables. This method does not rely on the linear model assumption or any specific model form and it is applicable to more general models beyond the generalized linear models, such as graphical models (Ren et al., 2015; Zhu et al., 2020) and additive hazards models (Lin and Ying, 1994; Lin and Lv, 2013).

Theoretically, we show that under some mild scaling conditions, the estimation errors of proposed estimators achieve comparable rates as that of the -penalized generalized linear model without unmeasured confounders. We further show that the debiased estimator for the coefficient of interest is asymptotically Gaussian after adjusting for the unmeasured confounders, which results in a valid statistical inference for high-dimensional generalized linear models with hidden confounding. It is worth highlighting that when using a factor model to relate covariates and unmeasured confounders, we make more general assumptions on the random noise compared to existing works. Specifically, we allow the random noise to be non-identically distributed. This represents a significant improvement over the majority of previous works, which assume that the random noise follows an independent and identical distribution (Guo et al., 2022; Fan et al., 2023). Furthermore, unlike existing methods under the linear framework (Guo et al., 2022; Fan et al., 2023), generalized linear models pose new challenges as many transformation and decomposition techniques commonly used for the linear models are not directly applicable. Consequently, more general and refined intermediate results are needed as “building blocks” in the proofs (see Remark 8 in Appendix D.1 for details).

Our paper is organized and structured as follows. In Section 2, we introduce the model setup and provide a comprehensive discussion of related literature. Section 3 presents our two-step approach for estimating the parameter of interest while adjusting for the effect of unmeasured confounders. Section 4 establishes the theoretical properties of the parameter including the estimation consistency as well as the asymptotic normality for the estimator. In Section 5, we demonstrate the performance of our proposed method and the validity of theoretical results via extensive simulation studies. We also provide an application to genetic data containing gene expression quantifications and stimulation statuses in mouse bone marrow-derived dendritic cells, where we identify significant gene expressions under stimulations, which are consistent with the experimental findings in genetic studies (Nemetz et al., 1999; Ather and Poynter, 2018; Jang et al., 2018; Toyoshima et al., 2019). Lastly, in Section 6, we provide concluding remarks and outline potential future directions for this research.

2 Generalized Linear Models with Hidden Confounding

In this section, we first set up a generalized linear model with hidden confounding and introduce a scientific application of our model framework. Then we will discuss related high-dimensional models in the existing literature.

2.1 Problem Setup

Consider a high-dimensional regression problem with a unidimensional response and a -dimensional observed covariates . In addition, assume that there is a -dimensional unmeasured confounders that are related to both and . Without loss of generality, we write , where is the covariate of interest and is a -dimensional vector of nuisance covariates. Furthermore, we denote as the univariate parameter of interest, as parameters for the nuisance covariates, and as parameters that quantify the effects induced by the unmeasured confounders. The goal is to perform statistical inference on the parameter .

We assume that given , , and the unmeasured confounders follows a generalized linear model with probability density (mass) function:

| (1) |

where is the scale parameter, and , , and are some known functions. As the distribution of belongs to the exponential family, we have and . For simplicity, we take . For notational convenience, let be a vector that includes all observed covariates and the unmeasured confounders, and let be the corresponding parameters. We now provide three commonly used examples.

Example 1 (Logistic Regression)

Let be a binary variable. Given covariates , , and unmeasured confounders , the response follows the logistic regression model with , , , and .

Example 2 (Poisson Regression)

Let be a discrete variable. Given covariates , , and unmeasured confounders , the response follows the Poisson regression model with , , , and .

Example 3 (Linear Regression)

Let be a real-valued response variable. Given covariates , , and unmeasured confounders , the response follows the linear regression model with and . The model parameters are , , and .

In addition, we assume that the relationship between covariates and unmeasured confounders is captured by the following model:

| (2) |

where is the loading matrix that describes the linear effect of unmeasured confounders on covariates , and is the random noise independent of . While similar model as in (2) is considered in Guo et al. (2022) and Fan et al. (2023), here we assume model (2) is an approximate factor model which allows for weak correlation and non-identical distribution of the random noise. This is a general setting compared to many existing works assuming to be identically and independently distributed (Guo et al., 2022; Fan et al., 2023). We will elaborate on this model setup and expound the assumptions for weakly correlated random noise in Sections 3 and 4.

The aforementioned structural equation modeling framework can be applied to many scientific applications. In the following, we provide one motivating example in genetic studies. Various authors have found that the effect of gene expression in response to the environmental conditions, e.g., viral or bacterial stimulation, might be confounded by unmeasured factors (Price et al., 2006; Lazar et al., 2013). One interesting scientific problem is to assess the effect of gene expression responding to the viral stimulation to cells, while adjusting for the confounding effects from the unmeasured variables. The viral stimulation status, a binary variable, is considered as the response variable . Therefore, a generalized linear model for modeling is preferred. In this example, is the viral stimulation status, is a vector of high-dimensional gene expression, and is a vector of possible unmeasured confounders. More details of the data application will be provided in Section 5.2.

2.2 Related Models in Existing Literature

In high-dimensional regression, the adjustment for the hidden confounding effects is a challenging and intriguing problem. There are various related models in the literature. For instance, to address the difficulty of model selection when , Paul et al. (2008) assumed that and are connected via a low-dimensional latent variable model: and , where the latent factors are associated with response and covariates are only used to infer the latent factors. However, the response and covariates are not directly associated. Different from the latent variable model in Paul et al. (2008), Bai and Ng (2006) considered an additional low-dimensional covariate to the latent variable model, that is expressed as and . Besides, there are other factor-adjusted models that can be extended to adjust hidden confounding. For example, Fan et al. (2020) studied the high-dimensional model selection problem when covariates are highly correlated. As most commonly used model selection methods may fail with highly-correlated covariates, they used a factor model to reduce the dependency among covariates and proposed a factor-adjusted regularized model section method. Fan et al. (2020) considered a generalized linear model between response and covariate, which together with the factor model forms a similar model framework as ours. However, the problem they studied is fundamentally different than our problem. They did not assume hidden confounding and the factor model is only used to identify a low rank part of highly-correlated covariates whereas in our problem, we focus on the regression problem with unmeasured confounders associated with response and covariates. Similar factor-adjusted methods have also been studied in other settings (e.g., Gagnon-Bartsch and Speed, 2011; Wang and Blei, 2019); however, as noted in Ćevid et al. (2020), related theoretical justifications are still underdeveloped in the literature.

Recently, many researchers studied the following linear hidden confounding model, which can be viewed as a special case of our framework,

| (3) |

Under this model, Kneip and Sarda (2011) used the principal component method to estimate the unmeasured confounders and then applied a selection procedure on a projected model. Ćevid et al. (2020) proposed a method that first performs the spectral transformation pre-processing step and then applies the lasso regression on the transformed response and covariates. However, these two works focused on estimation consistency and did not address inference issues. Several works have investigated statistical inference on the covariate coefficients. For example, Guo et al. (2022) proposed a doubly debiased lasso method to perform statistical inference for . Different from the approach of Guo et al. (2022) that implicitly adjusts for the hidden confounding effects, Fan et al. (2023) proposed to first use the principal component method to estimate unmeasured confounders and then construct the bias-corrected estimator for , which involves the decomposition of the estimation error relying on the linear form of the response and uses the projection of the response onto the factor space. The motivation of Fan et al. (2023) originated from Fan et al. (2020). When covariates are highly correlated, the leading factors are likely to have extra impacts on the response. So they augmented the factor into the sparse linear regression model between response and covariates, which is written as (3). However, these techniques are designed for linear models and may not be directly applicable to generalized linear model settings.

3 Estimation Method

In this section, we propose a novel framework to perform statistical inference for a parameter of interest in the context of high-dimensional generalized linear models with hidden confounding. In the proposed framework, we first estimate the unmeasured confounders using a factor analysis approach. Subsequently, the estimated unmeasured confounders are treated as surrogate variables for fitting a high-dimensional generalized linear model, and a debiased estimator is constructed to perform statistical inference.

Throughout this section, we assume that the observed data and the unmeasured confounders are realizations of (1) and (2). Moreover, the random noise has mean zero and variance Let , where denotes a diagonal matrix by setting off-diagonal entries in to zero. In the diagonal matrix , we denote the -th diagonal element to be , where is the element of . The model assumption on the random noise is general as it does not assume that the random noise is identical nor does it require the covariance matrix to be diagonal. The detailed theoretical assumptions regarding the random noise will be presented in Section 4.

Estimation of the Unmeasured Confounders: In this work, we consider the dimension as pre-specified. In practice, there are various methods to estimate the dimension of unmeasured confounders such as scree plot (Cattell, 1966), cross-validation method (Owen and Wang, 2016), information criteria method (Bai and Ng, 2002), the eigenvalue ratio method (Lam and Yao, 2012; Ahn and Horenstein, 2013), among others. In the implementation of our proposed method, we recommend the parallel analysis approach because of its good finite sample performance, easy implementation, and popularity in scientific applications (Hayton et al., 2004; Costello and Osborne, 2005; Brown, 2015) – it has shown superior performances compared to many other methods in various empirical studies (Zwick and Velicer, 1986; Peres-Neto et al., 2005). Detailed discussions on the estimation of the dimension of unmeasured confounders are presented in Appendix C.

We employ the maximum likelihood estimation to estimate the unmeasured confounders under (2). Without loss of generality, let and let be the sample variance of . Similarly, let be the sample variance of , where . Given unmeasured confounders , define an approximation of population variance of to be . Note that is not exactly the covariance matrix of because we do not restrict to be diagonal and define to be diagonal by setting the off-diagonal of to be zero. Based on the factor model in (2), the maximum likelihood estimators of , and are obtained as follows:

Computationally, we employ the Expectation-Maximization (EM) algorithm to obtain the maximum likelihood estimators as suggested in Bai and Li (2012) and Bai and Li (2016), where the authors proved the EM solutions are the stationary points for the likelihood function. Specifically, in this iterated EM algorithm, we use the principal components estimator as the initial estimator. Because principal component estimators are shown to be consistent estimators under similar model assumptions as ours (Fan et al., 2013; Wang and Fan, 2017), using the principal component estimators in initialization instead of using random initialization helps to improve algorithm efficiency and find more refined estimation results. At the -th iteration, denote the estimators at this step to be and . The EM algorithm updates the estimators to be

where ,

The iterative steps stop when and are less than certain tolerance value. Let and to be the estimators at the last step, and to be the matrix containing eigenvectors of corresponding to the descending eigenvalues. The maximum likelihood estimators are and . The estimator is obtained after estimating and . As is not our focus in this method, we omit its derivation details in this paper.

With and , we then estimate using the generalized least squares estimator:

| (4) |

Next, we treat the estimators as surrogate variables for the underlying unmeasured confounders to fit a high-dimensional generalized linear model. We then construct a debiased estimator for the parameter of interest by generalizing the decorrelated score method proposed by Ning and Liu (2017). Other debiasing approaches such as that of van de Geer et al. (2014) could also be similarly developed.

Initial -Penalized Estimator: Recall that . For vector , define . To fit a high-dimensional generalized linear model, we solve the -penalized optimization problem

where and is a known function, given a specific generalized linear model. Throughout the manuscript, for notational convenience, let be regression coefficients for the nuisance covariates and unmeasured confounders. Accordingly, we have , with the goal of performing statistical inference on . In the following, we construct a debiased estimator for , generalizing the approach in Ning and Liu (2017) to situations involving unmeasured confounders.

A Debiased Estimator: Before we unfold the details of constructing a debiased estimator, we start with introducing some notations. Let be the Fisher information matrix, and let , where and are corresponding block matrices of . In addition, let be the partial Fisher information matrix, where is a vector of nuisance covariates and unmeasured confounders. Finally, let with be the loss function.

We define as the generalized decorrelated score function, where and are the partial derivatives of the loss function with respect to and , respectively. Different from the existing definition of the decorrelated score function in Ning and Liu (2017), the generalized decorrelated score function takes into account the effects induced by the unmeasured confounders. Specifically, in the presence of unmeasured confounders, the generalized decorrelated score function is uncorrelated with the score function corresponding to the nuisance covariates as well as the unmeasured confounders, i.e., . The debiased estimator of is constructed by solving for from the first-order approximation of the generalized decorrelated score function . From the first-order approximation equation, we see that to establish the debiased estimator , we need to construct two estimators and , and the key is to estimate .

We estimate by solving the following convex optimization problem:

where is the th component of the loss function and is a sparsity tuning parameter for . Equivalently, the estimator is obtained by

| (5) |

The estimator is constructed with the intuition of finding a sparse vector such that the generalized decorrelated score function is approximately zero. This coincides with the intuition to solve for from the first-order approximation of the generalized decorrelated score function. Under the null hypothesis , we estimate the generalized decorrelated score function and the partial Fisher information matrix by

The debiased estimator can then be constructed as . We will show in Section 4 that the debiased estimator is asymptotically normal. Subsequently, the confidence interval for can be constructed as

| (6) |

where is the cumulative distribution function for the standard normal random variable.

4 Theoretical Results

Recall that our proposed method yields estimators , , and the debiased estimator . In this section, we first establish upper bounds for the estimation errors of and under the norm. Subsequently, we show that the debiased estimator is asymptotically normal.

For a vector , let for and let . For any matrix , let and represent the largest and smallest eigenvalues of , respectively. Moreover, for sequences and , we write if there exists a constant such that for all , and if and . For a sub-exponential random variable , we write as the sub-exponential norm. For a sub-Gaussian random variable , we write as the sub-Gaussian norm. Throughout the manuscript, we will use an asterisk on the upper subscript to indicate the population parameters. In addition, we define and as the cardinalities of and , respectively. All of our theoretical analysis are performed under the regime in which , , , and are allowed to increase, and the number of unmeasured confounders is fixed.

We start with some conditions on the factor model in (2). Similar conditions were also considered in Bai and Li (2016) in the context of high-dimensional approximate factor model.

Assumption 1

For some large constant ,

(a) , .

(b) with for some and all , and for all .

(c) with for some and all , and .

(d) For all ,

(e) and are estimated within the set for all . For positive definite matrices and , and , where is the Kronecker product.

Assumption 1 is more general than assumptions in classical factor analysis (Anderson and Amemiya, 1988; Bai, 2003; Fan et al., 2013; Bai and Li, 2016). Instead of constraining all the to have a diagonal covariance matrix, we now only require the higher-order moment of to be bounded, the diagonal entries ’s to be bounded, as well as the magnitudes of the correlations among entries of to be controlled in Assumption 1. The conditions are mild as we only control the magnitude of correlations rather than assuming zero correlations as in classical factor analysis. Assumption 1(e) is a regularity condition for restricting the parameters. Overall, Assumption 1 follows standard conditions for the approximate factor model in Bai and Li (2016) and is required for the estimation consistency of the unmeasured confounders.

Comparing Assumption 1 to the dense confounding assumption (A2) imposed on the linear framework in Guo et al. (2022), our assumption is mild as it holds for a broad regime of and . Specifically, the dense confounding assumption (A2) in Guo et al. (2022) is related to our assumption 1(e) that where is positive definite. Our assumption follows from classical factor analysis literature and as a result, we have , where is the -th singular value of the factor loading matrix . With being the coefficient for all covariates and being the individual coefficient of interest, the dense confounding assumption mainly requires that

where denotes the factor loading matrix with -th column removed. Guo et al. (2022) focuses on the setting and they point out that in the high-dimensional regime and under certain settings, the dense confounding assumption holds with high probability. Specifically, when the entries of are i.i.d. Sub-Gaussian with zero mean and variance , it holds that and the dense confounding assumption requires and to make diminish to zero. However, this condition is restricted to the high-dimensional regime and may not hold when is of relatively lower order.

Moreover, without loss of generality, we assume a working identifiability condition: and is a diagonal matrix with distinct entries. Note that the aforementioned working identifiability condition is for presentation purpose only and not an assumption on the model structure. As presented in Appendix B, when such a working identifiability condition is not satisfied, our theoretical results in Theorems 2 and 3 are still valid. We also illustrate this via simulation in Section 5.1. Next, we impose some assumptions on the generalized linear model with unmeasured confounders in (1).

Assumption 2

(a) The Fisher information satisfies , where is some constant.

(b) For some constant , , , and , where .

(c) The term is sub-exponential with .

(d) Assume that and with and with for constants , and , where for and sequence satisfies .

In the absence of unmeasured confounders, similar conditions in Assumption 2 can be implied from the conditions in Theorem 3.3 in van de Geer et al. (2014) and Assumption E.1 in Ning and Liu (2017). When and are binary or categorical, Assumption 2(b) holds with a constant . When and are sub-exponential random vectors, Assumption 2(b) holds with for some constant , with probability at least . Assumption 2(d) imposes mild regularity conditions on the function , and is commonly used in analyzing high-dimensional generalized linear models without unmeasured confounders. We require the function to be at least twice differentiable and and to be bounded. Specifically, can be implied by when exists, which is a weaker condition than the self-concordance property (Bach, 2010). This boundary assumption is important for the concentration of the Hessian matrix of the loss function. Assumption 2(d) holds for commonly used generalized linear models. For example, for logistic regression where , Assumption 2(d) holds with , and extended to infinity and it can be similarly verified to hold at for Poisson regression and for exponential regression with . For linear model, Assumption 2 can be relaxed as stated in Remark 4.

The theoretical analysis on the unmeasured confounders estimator is important in establishing the theoretical guarantee for our debiased method. As the decomposition techniques commonly used in linear models may not be applicable in generalized linear model settings, it is necessary to establish more general and refined intermediate results as the foundation of our theoretical analysis (see Remark 8 in Appendix D.1 for more details). We first present a uniform convergence result for the estimators of the unmeasured confounders.

From Proposition 1, the estimator of unmeasured confounders uniformly converges to at , which holds naturally under the high-dimensional regime . Moreover, the convergence rate is of a similar order to the convergence rates of principal component estimators (Fan et al., 2013; Wang and Fan, 2017). The estimation results of and are dependent on the accuracy of the estimator of unmeasured confounders and we next establish upper bounds on the estimation errors for and .

Theorem 2 (Estimation consistency)

The effect of unmeasured confounders enters the rate of the estimation error in our results through the term . Under the high-dimensional setting with , the unmeasured confounders effect is dominated by and as a result, the convergence rates in Theorem 2 are of the same order as in the oracle case when the unmeasured confounders are assumed to be known (van de Geer et al., 2014; Ning and Liu, 2017). With the estimation consistency established, we show that the debiased estimator from the proposed estimation method is asymptotically normal, and thus valid confidence intervals can be constructed.

Theorem 3 (Asymptotic normality)

The result in Theorem 3 is similar to existing inference results for high-dimensional generalized linear models without unmeasured confounders (van de Geer et al., 2014; Ning and Liu, 2017; Ma et al., 2021; Shi et al., 2021; Cai et al., 2023). Theorem 3 is established under the condition that the estimation errors of and in Theorem 2 are . As a consequence of the asymptotic normality result in Theorem 3 and that is consistent estimator for , the construction of the confidence interval in (6) is valid.

Remark 4

For the linear model, estimation consistency and asymptotic normality results in Theorems 2 and 3 hold with less stringent conditions than that in Assumption 2. Suppose Assumption 1 and Assumption 2(a) hold and assume that the random noise is independent sub-Gaussian random variable and is sub-Gaussian vector such that and for some constant . By choosing , if and , the estimation error bounds in Theorem 2 hold and the debiased estimator is asymptotically normal with limiting distribution (7). Similar assumptions are commonly imposed in many existing inference methods for high-dimensional linear models without unmeasured confounders (Zhang and Zhang, 2014; Javanmard and Montanari, 2014) as well as in the existence of confounders (Ćevid et al., 2020; Guo et al., 2022).

Remark 5

The sparsity assumption on is a standard assumption in high-dimensional regression models without unmeasured confounders. It may be more suitable and even weaker in our proposed framework with unmeasured confounders. In high-dimensional regression models without unmeasured confounders, the sparsity assumption on is implied by the sparse inverse population Hessian condition (van de Geer et al., 2014; Belloni et al., 2016; Ning and Liu, 2017; Jankova and van de Geer, 2018). Specifically, the sparse inverse population Hessian condition implies the sparsity of the coefficient parameter in a weighted node-wise lasso regression , which is similar to (5) but regresses the covariate of interest on the rest of the covariates .

In our proposed setting with unmeasured confounders, as shown from (5), we assume that in the weighted node-wise lasso regression , the coefficient of , denoted as , to be sparse. That is, is sparse, conditional on the unmeasured confounders . The sparsity assumption is mild and can be satisfied in many settings. For example, under the assumptions in classical factor analysis in which the covariance matrix of the random error is diagonal, is uncorrelated with the other covariates , conditioned on the unmeasured confounders . In this case, we have , conditioned on and thus the sparsity assumption holds naturally. We also want to point out that the imposed sparsity assumption may be weaker than that of existing work on high-dimensional inference without unmeasured confounders, where the coefficients of regression are assumed to be sparse. For instance, we allow to be densely correlated with marginally. In other words, we only require , the coefficient for in , to be sparse, conditional on the confounders while marginally the coefficients of could be dense.

Remark 6

For the simplicity of theoretical analysis, we assume the dimension of unmeasured confounders to be fixed and known, which is also assumed in Wang and Fan (2017), Fan et al. (2023), Wang (2022) and many other works. Nevertheless, our theoretical results hold as long as is consistently estimated. As introduced in Section 3, we use parallel analysis to estimate the dimension of unmeasured confounders in practice. Theoretically, it has been shown that parallel analysis consistently selects the dimension of unmeasured confounders in factor analysis (Dobriban, 2020). Specifically, when the dimension is relatively large compared to the sample size , each factor loads on not too few variables, and the signal size of unmeasured confounders is not too large, parallel analysis selects the number of factors with probability tending to one (Dobriban, 2020). These conditions can be satisfied under our framework, implying that the dimension of unmeasured confounders is consistently determined. Moreover, empirically we find that the proposed method still provides satisfying inference results under some overestimation of . Intuitively, as long as the corresponding linear combinations of the true underlying factors in the considered models can be well approximated by those of the estimated , the developed inference results for would still hold. To further illustrate this, we perform simulation studies in Appendix C to show that the overestimation of may not affect the asymptotic properties of the debiased estimator.

5 Numerical Studies

To illustrate the performance of our proposed method, we conduct numerical experiments including simulation studies and an application of our method to a genetic data set.

5.1 Simulations

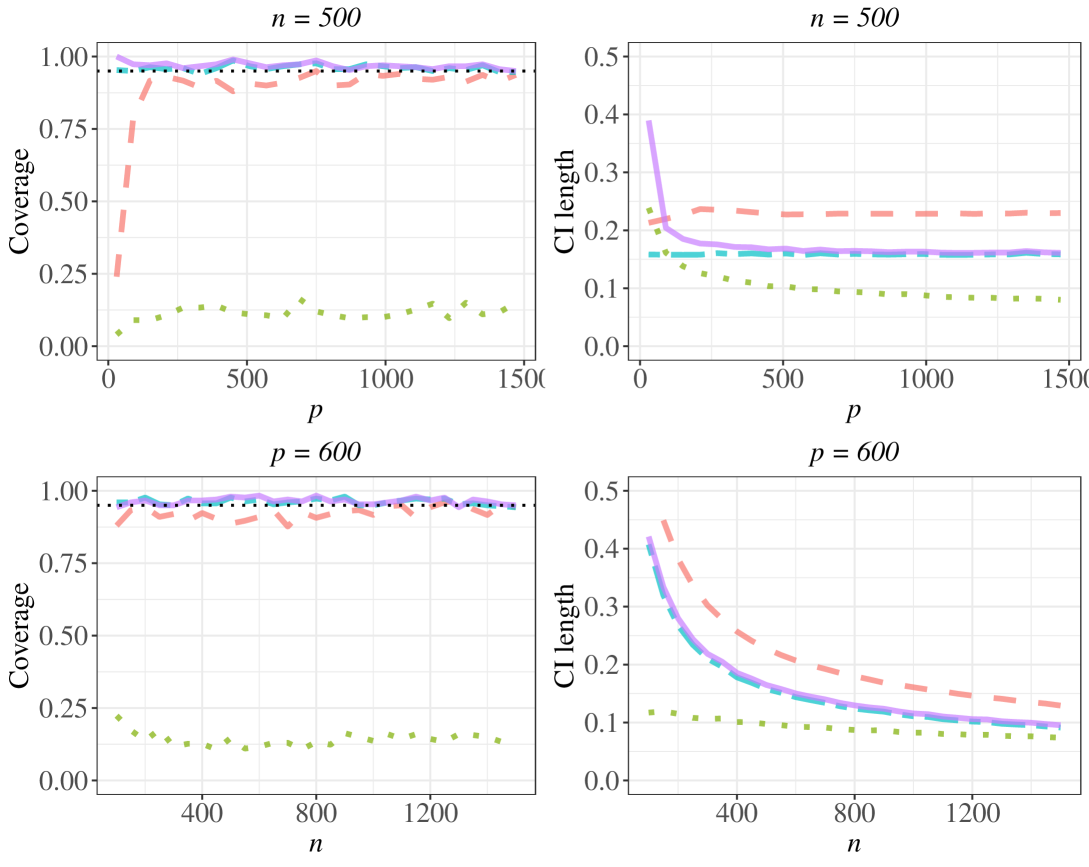

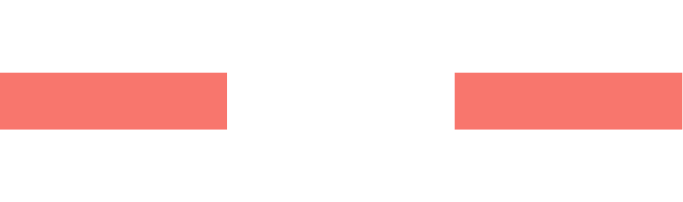

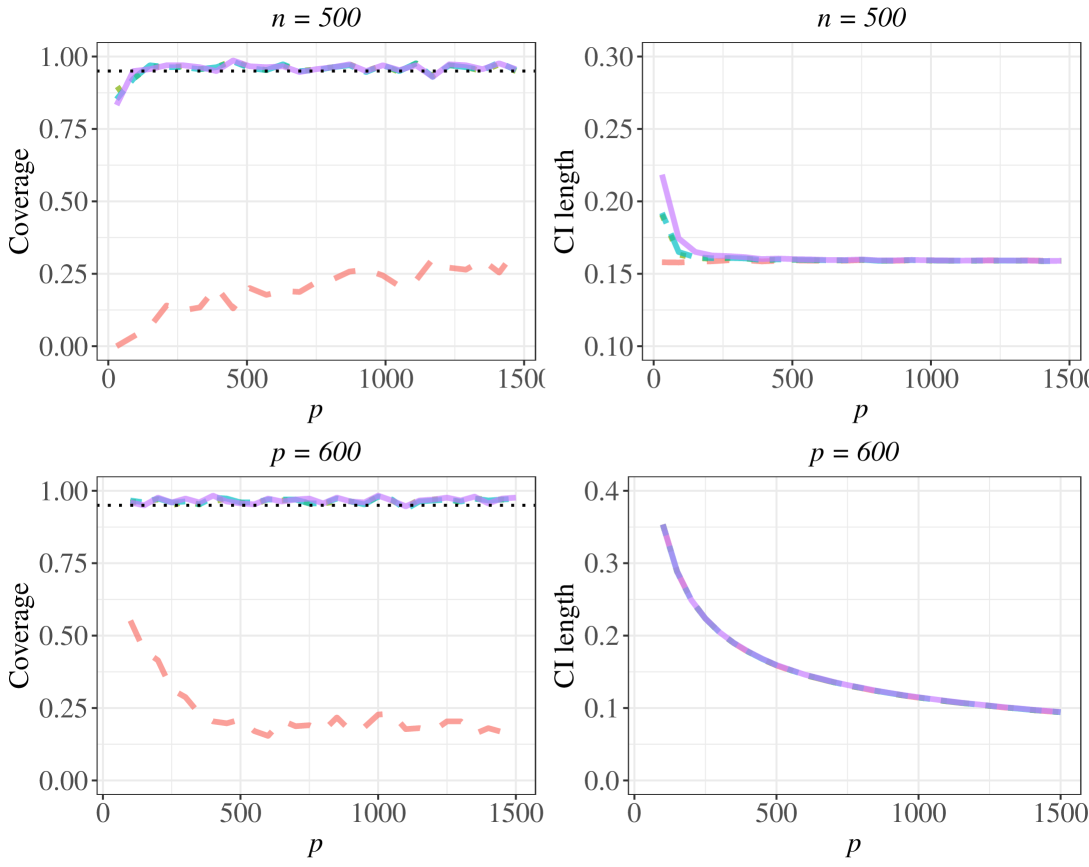

We consider two different models in (1), a linear regression model and a logistic regression model. We compare our method with two alternative approaches for performing high-dimensional inference: the oracle method where we perform the debiasing approach assuming that the true values of unmeasured confounders are known; and the naive method in which the unmeasured confounders are neglected in the estimation and we perform the debiasing approach with the observed covariates only. In addition, for the linear regression setting, we compare the proposed method with the doubly debiased lasso method proposed by Guo et al. (2022). The sparsity tuning parameters for all of the aforementioned methods are selected using 10-fold cross-validation. Our proposed method involves estimating the dimension of the unmeasured confounders, which we estimate using the parallel analysis (Horn, 1965; Dinno, 2009). To evaluate the performance across the different methods, we construct the 95% confidence intervals for the parameter of interest, and compute the average confidence length and the coverage probability of the true parameter over 300 independent replications.

First, we generate each entry of the unmeasured confounders and each entry of the random error from a standard normal distribution. We set

where , , and are vectors of dimension with all entries equal 0.5, 1, and 1.5, respectively (in our simulation, is set as integer values). The in the matrix denotes a vector of dimension with all entries equal 0. We then generate the covariates with and . It can be verified that the above setting satisfies the working identifiability condition described in Section 4. Later in this section, we also perform additional simulation studies as to illustrate the validity of the theory when the working identifiability condition does not hold.

We consider both the linear regression model and the logistic regression model: for linear regression, we generate the response according to , where the random noise is generated from a standard normal distribution; for logistic regression, the response is generated from a Bernoulli distribution with probability . The regression coefficients for the unmeasured confounders, parameter of interest, and nuisance parameters are set to be , , and , respectively.

) are our proposed method.

Blue two-dashed lines (

) are our proposed method.

Blue two-dashed lines ( ) represent the oracle case.

Green dotted lines (

) represent the oracle case.

Green dotted lines ( ) indicate the naive method.

Orange dashed lines

(

) indicate the naive method.

Orange dashed lines

( ) represent the doubly debiased lasso method.

) represent the doubly debiased lasso method.Results for the cases when and varies from 100 to 1500 and when and varies from 100 to 1500 under both linear regression model and logistic regression model are presented in Fig. 1 and Fig. 2, respectively. From Fig. 1, we see that the naive method suffers from undercoverage since the effects induced by the unmeasured confounders are not taken into account. Our proposed method has coverage at approximately 0.95 and is similar to that of the oracle method. The proposed method also has similar length of the confidence intervals as that of the oracle method. As a comparison, the doubly debiased lasso method suffers from undercoverage when is relatively small ( and ): our finding is consistent with that of Guo et al. (2022), where they indicate that when is relatively small to , bias from the hidden confounding effects can be relatively large, which leads to undercoverage of their method. As increases, we see that coverage for the doubly debiased lasso method approaches to 0.95, but its confidence interval lengths are larger than that of our proposed method, indicating our method is preferred in this case.

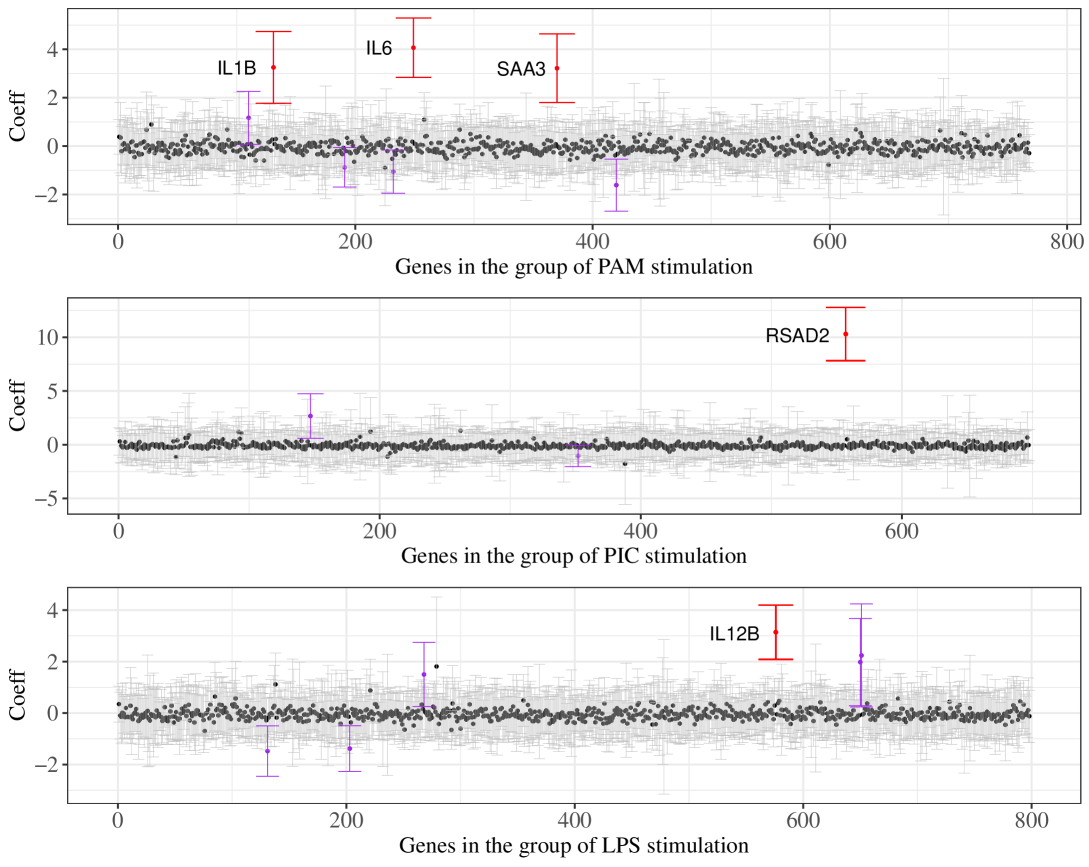

Under logistic regression model, our proposed method performs similarly to the oracle method in terms of both coverage and length of the confidence intervals. Results for doubly debiased lasso are not reported as it is not directly applicable to generalized linear model.

) are our proposed method.

Blue two-dashed lines (

) are our proposed method.

Blue two-dashed lines ( ) represent the oracle case.

Green dotted lines (

) represent the oracle case.

Green dotted lines ( ) indicate the naive method.

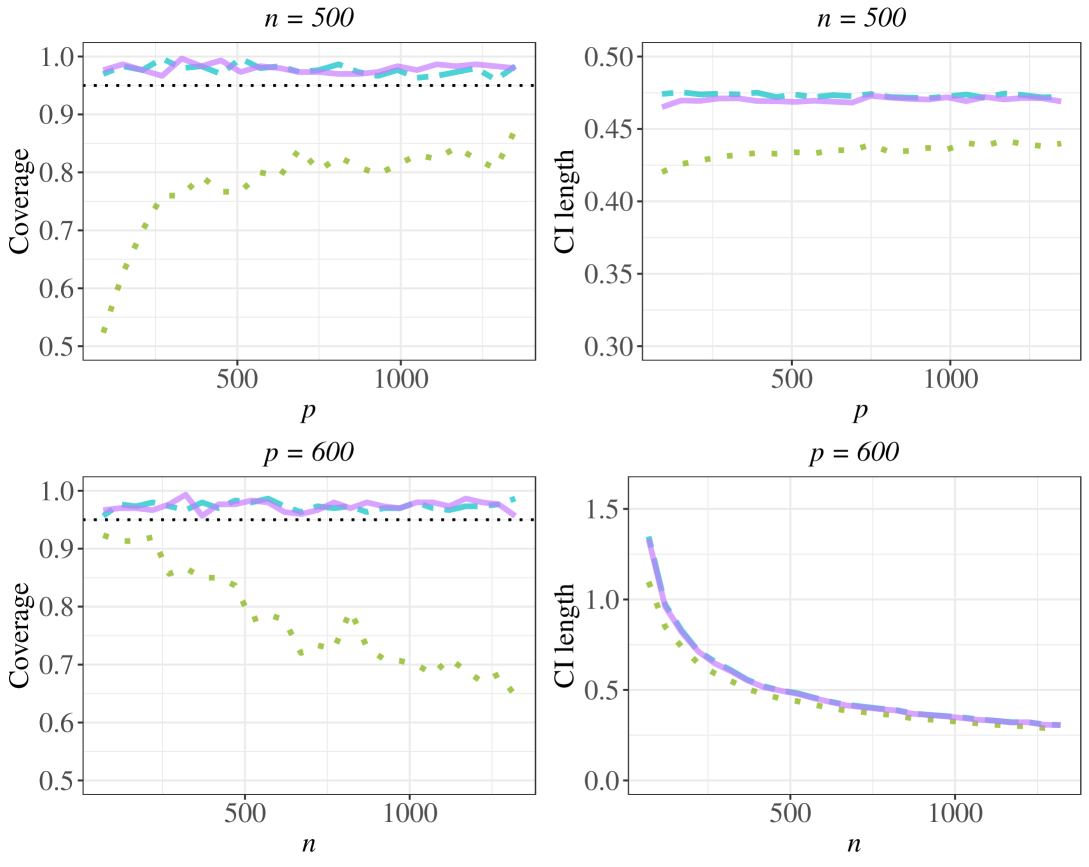

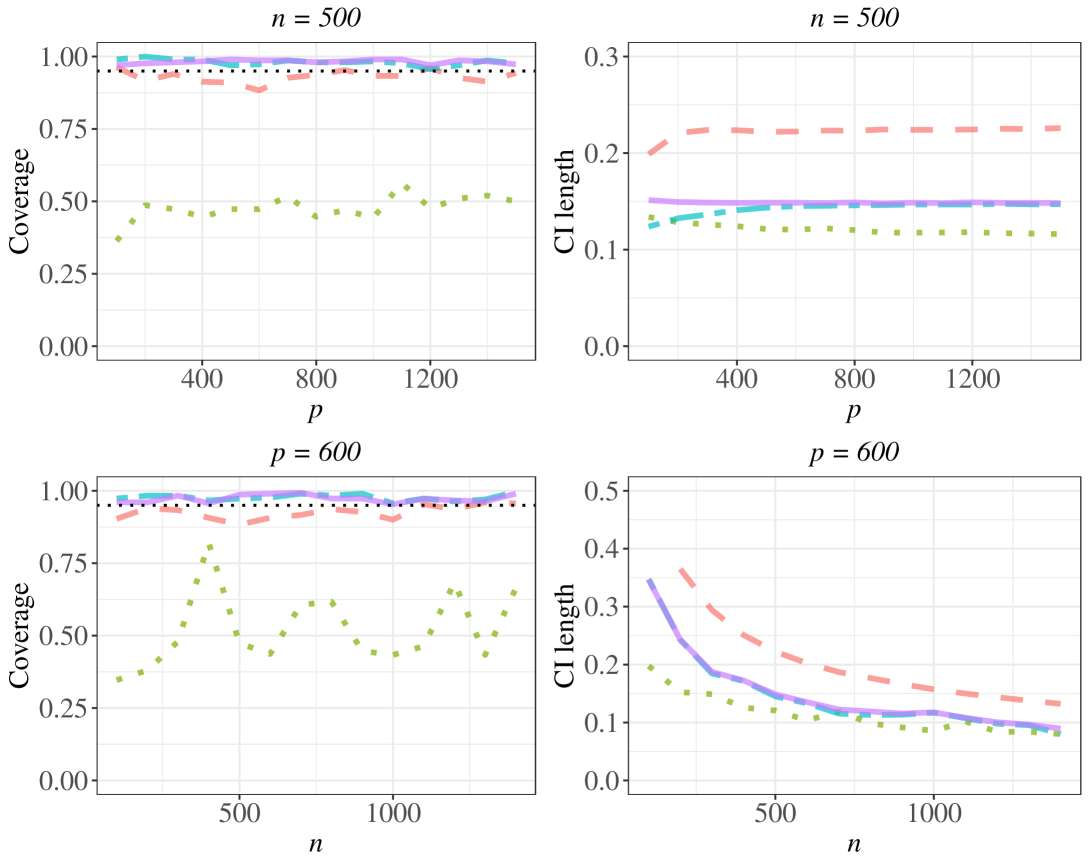

) indicate the naive method.In the remaining subsection, we show that when the working identifiability condition fails, our proposed method can still perform well. More discussions about the working identifiability condition are in Appendix B. Specifically, we set for and and . This loading matrix implies that each covariate vector is related to all the confounders. The identifiability condition fails in such case as is not a diagonal matrix. We continue with the parameter setting at the start of this section and the data generation process is unchanged except for replacing the original loading matrix with the new loading matrix where elements are uniformly distributed.

At varying dimensions and , we construct the confidence intervals using each method over 300 simulations, and then compute the coverage probabilities of the 95% confidence intervals on the true parameter and the average confidence interval lengths. Under the new loading matrix, the results for linear regression model are shown in Fig. 3 and the results for logistic regression model are shown in Fig. 4. We observe the coverage rates of our proposed method can still achieve the desirable 0.95 level and are close to the oracle case. The naive method performs worse than the proposed method, with coverage rates much less than the 0.95 level for most cases. For the linear regression results, although a little under coverage in some cases, the doubly debiased lasso method exhibits good performance in this setting with coverage rates approximating to the oracle case, while its average confidence interval length is larger than that of the proposed method.

) are our proposed method.

Blue two-dashed lines (

) are our proposed method.

Blue two-dashed lines ( ) represent the oracle case.

Green dotted lines (

) represent the oracle case.

Green dotted lines ( ) indicate the naive method.

Orange dashed lines(

) indicate the naive method.

Orange dashed lines( ) represent the doubly debiased lasso method.

) represent the doubly debiased lasso method.

) are our proposed method.

Blue two-dashed lines (

) are our proposed method.

Blue two-dashed lines ( ) represent the oracle case.

Green dotted lines (

) represent the oracle case.

Green dotted lines ( ) indicate the naive method.

) indicate the naive method. 5.2 Data Application

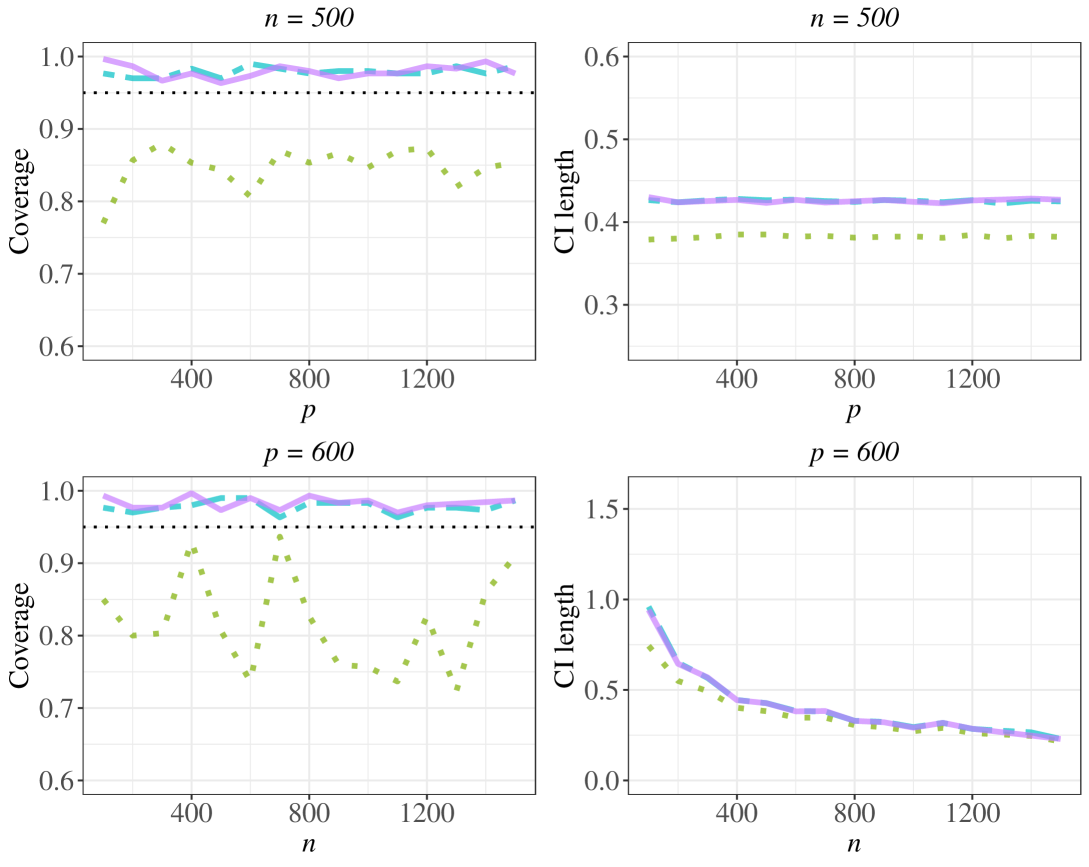

In this section, we apply the proposed method to a genetic data containing gene expression quantifications and stimulation statuses in mouse bone marrow derived dendritic cells. The data were also previously analyzed in Shalek et al. (2014) and Cai et al. (2023). Specifically, Cai et al. (2023) aimed to find significant gene expressions in response to three stimulations, which are (a) PAM, a synthetic mimic of bacterial lipopeptides; (b) PIC, a viralike ribonucleic acid; and (c) LPS, a component of bacteria. However, there may be potential unmeasured confounders that may lead to spurious discoveries. Our goal is to investigate the relationship between gene expressions levels and the different stimulations while accounting for possible unmeasured confounders.

We start with pre-processing the data: we consider expression profiles after six hours of stimulations and perform genes filtering, expression level transformation, and normalization. The details can be found in Cai et al. (2023). After the pre-processing steps, we have three groups of cells including 64 PAM stimulated cells, 96 PIC stimulated cells, and 96 LPS stimulated cells, respectively. Moreover, each of the three groups contains 96 control cells without any stimulation. The gene expression quantifications are computed on 768 genes for PAM stimulation group, 697 genes on PIC stimulation group, and 798 genes for LPS stimulation group. For each group of cells, the high-dimensional covariates are the gene expression levels, the stimulation status is a binary response variable recording whether a cell is stimulated.

We fit the data using our proposed method and the naive method in which we perform the debiasing approach without adjusting for unmeasured confounders. Applying each method, we construct 95% confidence intervals for the regression coefficient for every gene. In addition, we compute the -value and effect size for each gene using each of two methods, respectively. Our proposed method found that there are 7 possible confounders for the PAM and PIC stimulation groups, and 9 possible confounders for the LPS stimulation group. The 95% confidence intervals constructed with our proposed method for each of the three groups are shown in Fig. 5.

We see from Fig. 5 that the confidence intervals for some genes do not contain zero, suggesting that they are possibly associated with their respective stimulations. For valid statistical inference, we perform a Bonferroni correction to adjust for multiple hypothesis testing. As a comparison, we perform similar procedures using the confidence intervals constructed with the naive method to identify the significant genes. Genes that are significantly associated with the stimulation after Bonferroni correction from the proposed method and naive method respectively are reported in Table 1. The small -values and large effect sizes indicate that the corresponding genes are strongly associated with their respective stimulations. Some gene codes in Table 1 coincide with the findings in Cai et al. (2023), and their functional consequences to the stimulation are also supported in existing literature. Specifically, Cai et al. (2023) suggested that there exist significant association for “IL6” with PAM stimulation, “RSAD2” with PIC stimulation, and “CXCL10” and “IL12B” with LPS stimulation. Applying our proposed method in which the effects of unmeasured confounders are considered, we identify that “IL1B”, “IL6”, and “SAA3” are significant genes for PAM stimulated cells, “RSAD2” is significant for PIC stimulated cells, and “IL12B” is significant for LPS stimulated cells. On the other hand, when we apply the naive method which does not adjust for unmeasured confounders, we identify significant genes “IL1B”, “IL6” for PAM stimulated cells, “RSAD2” for PIC stimulated cells and “IL12B” for LPS stimulated cells. Comparing the proposed method with the naive method, our method that adjusts for unmeasured confounders identifies an additional gene “SAA3”. In the genetics literature, experimental studies support the finding that “SAA3” plays important roles in immune reactions (Ather and Poynter, 2018). For instance, mice lacking the gene “SAA3” develop metabolic dysfunction along with defects in innate immune development (Ather and Poynter, 2018). This comparison suggests that our proposed method can identify significant genes/variables that are not captured by existing debiasing procedures without for adjusting unmeasured confounders.

| Method | Stimulation | Gene code | -value | Effect size |

|---|---|---|---|---|

| Proposed method | PAM | IL1B | 1743 | 4295 |

| IL6 | 8940 | 6484 | ||

| SAA3 | 8800 | 4445 | ||

| PIC | RSAD2 | 4441 | 8144 | |

| LPS | IL12B | 5180 | 5841 | |

| Naive method | PAM | IL1B | 6908 | 4963 |

| IL6 | 2745 | 6990 | ||

| PIC | RSAD2 | 8604 | ||

| LPS | IL12B | 4187 | 5482 |

Moreover, many genetic studies support that in addition to the “SAA3”, genes discovered by our proposed method all play important roles in the immune response. The gene “IL1B” encodes protein in the family of interleukin 1 cytokine, which is an important mediator of the inflammatory response and its induction can contribute to inflammatory pain hypersensitivity (Nemetz et al., 1999). The genes “IL6” and “IL12B” are also known to encode cytokines that play key roles in hosting defense through stimulation and immune reactions (Müller-Berghaus et al., 2004; Toyoshima et al., 2019). The expression of the gene “RSAD2” is important in antiviral innate immune responses and “RSAD2” is also a powerful stimulator of adaptive immune response mediated via mature dendritic cells (Jang et al., 2018).

6 Discussion

This manuscript studies statistical inference problem for the high-dimensional generalized linear model under the presence of hidden confounding bias. We propose a debiasing approach to construct a consistent estimator of the individual coefficient of interest and its corresponding confidence intervals, which generalizes the existing debiasing approach to account for the effects induced by the unmeasured confounders. Theoretical properties were also established for the proposed procedure.

Our goal of this paper is to conduct statistical inference for individual coefficients . The purpose of adjusting for the unmeasured confounders in the proposed method is to use the latent information for the better inference of covariates coefficient , while the inference for the coefficient of unmeasured confounders is not the focus of the current work. This problem setting has wide scientific applications. For instance, as introduced in our data application, biologists are interested in the significance of the genes under the simulations with the unmeasured confounders adjusted. Moreover, in many practices with finite samples, consistent estimation of the number of factors may be problematic; our method only requires that the estimated factors can well approximate the confounders and the interpretation of the factors is of less interest in this setting. Here to investigate how the accuracy of the estimation of the dimension of unmeasured confounders affects the asymptotic distribution of debiased estimator, we conducted a simulation study in Appendix C and the results suggest that in practice, the overestimation of may not affect the asymptotic normality results, which may be because an abundant amount of unmeasured confounders can still fully capture the covariate information and would not incur bias in the point and interval estimation.

Nevertheless, the inference of unmeasured confounders is also of great interest in many econometrics and psychometrics applications. For instance, Fan et al. (2023) recently proposed an ANOVA-type testing procedure for testing the existence of unmeasured confounders or not. It would be interesting to develop similar inference testing procedures under the generalized linear models in applications when the inference of the unmeasured confounders of scientific interest.

Another interesting related problem is regarding the inference on group-wise covariate coefficients. We next briefly describe how to generalize our method to obtain the group-wise asymptotic distribution of via a bootstrap-assisted procedure for and any subset . The procedures follow simultaneous inference results from Zhang and Cheng (2017) and are shown as follows.

-

•

Step 1: For each , we construct a debiased estimator using our proposed method for . We generate a sequence of i.i.d. standard normal random variables and denote them as .

-

•

Step 2: Then, under the null hypothesis that for , let , where can be similarly calculated as and can be similarly calculated as in Section 3 by treating as the coefficient of interest and as the other coefficient for nuisance covariates and unmeasured confounders.

-

•

Step 3: Next, we calculate the critical value at significance level by .

With the debiased estimators and the critical value, we expect a similar result as Theorem 4.1 in Zhang and Cheng (2017) that the asymptotic distribution of satisfy . While the inference on group-wise maximum coefficients is an interesting problem, it is not the focus of this work and we leave the related theoretical proof for future research.

Besides the aforementioned extensions, there are several related problems worth investigating in the future. For instance, as discussed in Section 2.2, there are many models in existing literature related to our problem, in particular, the factor-adjusted method can be extended to the situation involving hidden confounding (Fan et al., 2020). In addition, in our theoretical analysis, we assume that the dimension of unmeasured confounders, , is fixed, which has also been imposed in the existing literature (e.g., Wang and Fan, 2017; Fan et al., 2023). One possible extension is to allow to grow as and increase, and it involves generalizations of the theoretical results on the maximum likelihood estimation for the unmeasured confounders. It would also be interesting to generalize the factor model to a nonlinear structure and investigate the theoretical properties of the debiased estimator under generalized factor model (Chen et al., 2020; Liu et al., 2023). Besides generalized linear models, the high-dimensional debiasing technique is also popularly studied in a variety of models such as Gaussian graphical models (Ren et al., 2015; Zhu et al., 2020) and additive hazards models (Lin and Ying, 1994; Lin and Lv, 2013). For instance, consider additive hazards models, which are popularly used in survival analysis and assume the conditional hazard function at time as with and covariates and unmeasured confounders. The relationship between covariates and unmeasured confounders are also modeled by a linear factor model. Our method can be used to perform inference on under the aforementioned model setup based on the quadratic loss function. Generalizing our proposed approach to these models to adjust for possible unmeasured confounders is also an interesting direction to investigate.

Appendix A. Preliminaries

We start with introducing some notations and definitions. For a vector , we let , , for and . For a matrix , let to be the maximum absolute column sum, to be the maximum of the absolute row sum, to be the maximum of the matrix entry, and to be the smallest and largest eigenvalues of and to be the Frobenius norm of . For sequences and , we write if there exists a constant such that for all , and if and . For any sub-exponential random variable , we define the sub-exponential norm as . For any sub-Gaussian random variable , we define the sub-Gaussian norm as .

Next, we give a review of our model framework. We assume the response given the covariate of interest , the nuisance covariates and the unmeasured confounders follows the generalized linear model with the probability density (mass) function to be

| (A8) |

and the relationship between and is

| (A9) |

We let to be a vector that includes all of the covariates and the unmeasured confounders, and let to be the corresponding parameters. For notational convenience, we also let and its coefficient , so . We denote as the coefficient for covariates , so .

Throughout the appendix, we use an asterisk on the upper subscript to indicate the population parameters. We assume that the observed data and the unmeasured confounders are realizations of (A8) and (A9). The noise for the factor model are denoted as with .

In the proposed inferential procedure, we first obtain the maximum likelihood estimator for unmeasured confounders . With the unmeasured confounder estimators, we let , and get an initial lasso estimator

| (A10) |

where the loss function is the negative log-likelihood function defined as

The gradient and Hessian of the loss function are commonly used in our subsequent proofs, which are expressed as

In constructing the debiased estimator, we define and , where and . We denote two sub-vectors of as and . We define the estimator for the sparse vector as

| (A11) |

where is equivalent to , the th component of the loss function. Equivalently, the estimator is obtained by

The estimator is used in estimating the generalized decorrelated score function and the partial Fisher information matrix. Under null hypothesis , the two estimators are

Our theoretical results are established under the asymptotic regime with . Regarding the factor model, as stated in Assumption 1 in Section 4 of the main text, we do not assume the random errors to be identically distributed nor does the model assumption require the covariance of to be diagonal. Specifically, we assume for some large constant : (a) , ; (b) with for some and all , and for all . (c) with for some and all , and . (d) For all ,

Regarding the loading matrix, we assume . There exist positive definite matrices and such that and . In addition, we also assume a working identifiability condition that and is a diagonal matrix with distinct entries.

For the assumptions regarding the generalized linear model relating and , as mentioned in Assumption 2 in Section 4 of the main text, we let , where and is some constant. The unmeasured confounders, the covariates and coefficients parameters are assumed to be bounded, that is, , , , and for some constant . We let to be sub-exponential with . In addition, we assume , with and with for constants , and , where for and sequence satisfying .

Appendix B. Discussion on Working Identifiability Condition

In this section, we make a detailed clarification on the working identifiability condition in the main text. Specifically, we show that when the working identifiability condition does not hold, we can transform the model into an identifiable model and the transformed identifiable model has identical parameter of interest compared to that of the pre-transformed model.

When the identifiability condition is not satisfied, the estimators are not fully identifiable in the sense that, for any invertible matrix , we have and to be valid maximum likelihood estimators. At , we can substitute the expression for into (4) to get the relationship between and as follows,

Suppose and are the asymptotically unbiased estimators for and respectively. Here we want to clarify that we use and to differentiate the unmeasured confounders before the transformation from that of transformed model but this does not imply that and are population parameters. we have and to be the asymptotically unbiased estimators for and .

When the working identifiability condition does not hold, that is, we have , and/or is not diagonal matrix with distinct entries, we can find an invertible matrix to transform the true parameters to satisfy the assumption. Then we build a correspondence between the true model and the model corresponding to the transformed parameters, showing that the two models have parameter of interest to be identical.

The transformed parameters are and , which are constructed in two steps. At first step, for any , we can find a matrix such that

At next step, given the matrix is symmetrical, there exists an orthogonal matrix whose columns correspond to the eigenvectors of as we could decompose this symmetric matrix into where has distinct eigenvalues in the diagonal.

We verify the transformed parameters satisfy the identifiability condition as follows. At and , we have

and

is a diagonal matrix with distinct entries.

We let , so accordingly the parameters for the transformed factor model are and . The relationship between the true model and the transformed model is as follows. The factor model structure corresponding to the true parameter is the same as the model corresponding to the transformed parameters as

The generalized linear framework according to the rotated true parameter is

whereas the framework according to true parameters is

At , and , the two frameworks are identical. That is, when the confounders are not identifiable, only the coefficient will be affected accordingly; the parameter of interest will not change and thus the theoretical results on are not affected.

Appendix C. Estimation of the Dimension of Unmeasured Confounders

In the numerical studies of this paper, we use parallel analysis (Horn, 1965) to estimate the dimension of unmeasured confounders. Because in factor analysis, parallel analysis is a popular approach to selecting the number of factors as it is accurate and easy to use (Hayton et al., 2004; Costello and Osborne, 2005; Brown, 2015). In the existing literature, several authors have conducted extensive simulation studies to assess the performance of parallel analysis relative to other existing approaches (Zwick and Velicer, 1986; Peres-Neto et al., 2005). They have shown that parallel analysis has better numerical performance in terms of selecting than many existing approaches (Zwick and Velicer, 1986; Peres-Neto et al., 2005). Furthermore, parallel analysis is also a commonly used statistical tool for dimension reduction (Lin et al., 2016), multiple testing dependence (Leek and Storey, 2008), and finds wide applications in other scientific disciplines including virology (Quadeer et al., 2014) and genetic studies (Leek and Storey, 2007).

The implementation of parallel analysis is as follows. With our given matrix , we denote columns in the design matrix as , respectively. Then we repeatedly generate matrices ’s where each matrix is generated by randomly permuting every column for . Next, we select the first factor when the top singular value of is larger than a certain percentile of the top singular value of the permuted matrices ’s. If the first factor is selected, we repeat this procedure to determine whether the second factor can be selected. The process is repeated until no more factor is selected. The main intuition of this approach is that the factor model is considered a summation of the signal (factors) and noise (random error). The permutation destroys the original signal structure and turns it into a matrix of random noise. Thus, identifying factors based on large singular values of can be interpreted as selecting factors that are above the noise level.

Besides parallel analysis, there are various methods to estimate the dimension of unmeasured confounders such as scree plot (Cattell, 1966), which empirically chooses the elbow point in the plot of descending eigenvalues of factors; method based on cross validation (Owen and Wang, 2016), which uses random held-out matrices of data to choose the number of factors; method based on information criteria including AIC and BIC to select the number of factors in high-dimensional factor model (Bai and Ng, 2002); the eigenvalue ratio method (Lam and Yao, 2012; Ahn and Horenstein, 2013), which chooses by where denotes the -th eigenvalue of and is a prespecified threshold which is often set to be in practice. Among these methods, the information-criteria-based method and eigenvalue ratio method have the theoretical guarantees to be consistent under similar assumptions as Assumption 1 in our paper. These assumptions follow the common conditions in theoretical analysis for the approximate factor model that allows weak correlation among the random error. Besides the method to determine the number of factors for linear factor models, Chen and Li (2021) proposed a method based on joint-likelihood-based information criterion to determine the number of factors for generalized linear factor models. All these selection methods are well-established tools and can possibly be used to select . Nonetheless, our theoretical results hold as long as the dimension of unmeasured confounders is consistently estimated. In our manuscript, we use the parallel analysis for good empirical performance, as illustrated in Peres-Neto et al. (2005).

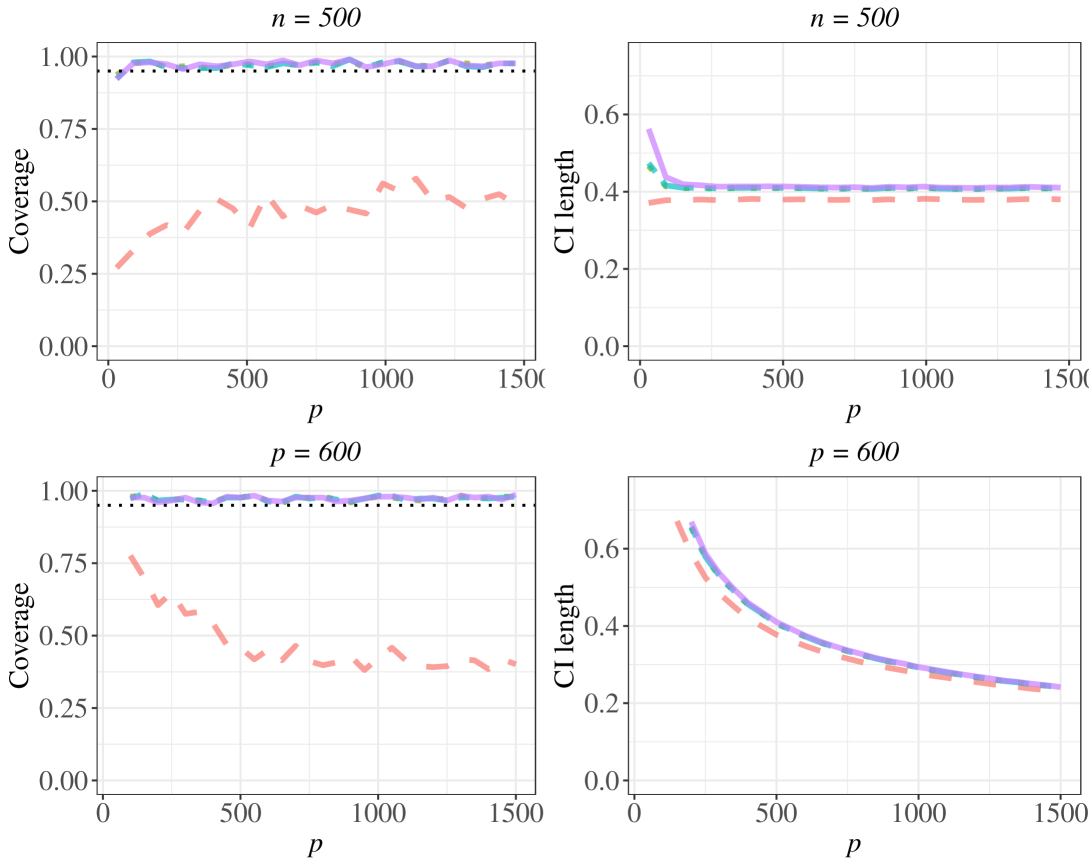

To further investigate how the accuracy of the estimation of affects the asymptotic distribution of the debiased estimation, we conduct simulation studies where we keep all the estimation settings to be the same as in Section 5.1 in the paper except manually replacing the estimated by parallel analysis with specified values: 2, 4, 5, and 10. Recall that we set the true , so this simulation provides us with some insights on how the overestimation and and underestimation of unmeasured confounder dimension affect the inference results.

The results under the logistic regression model at (a) and vary from 30 to 1500; (b) and varies from 100 to 1500 are presented in Fig. 6. The results under the linear model at the same regime of and are presented in Fig. 7. From the results, we find that the overestimation of appears not to affect the asymptotic normality results but the underestimation of can influence the asymptotic distribution of debiased estimation and further affects confidence interval estimation. Intuitively, as long as the corresponding linear combinations of the true underlying factors in the considered models can be well approximated by those of the estimated , the developed inference results for would still hold.

) represent the results at .

Green dotted lines (

) represent the results at .

Green dotted lines ( ) represent the results at .

Blue two-dashed lines (

) represent the results at .

Blue two-dashed lines ( ) represent the results at .

Purple solid lines (

) represent the results at .

Purple solid lines ( ) represent the results at .

) represent the results at .

) represent the results at .

Green dotted lines (

) represent the results at .

Green dotted lines ( ) represent the results at .

Blue two-dashed lines (

) represent the results at .

Blue two-dashed lines ( ) represent the results at .

Purple solid lines (

) represent the results at .

Purple solid lines ( ) represent the results at .

) represent the results at .Appendix D. Proof of Theorem 2

D.1 Estimation Consistency of

Theorem 2 shows that the estimators and can be consistently estimated. Before proving these results, we first introduce some lemmas that will be used in the proofs.

Lemma 7 (Concentration of the Gradient and Hessian)

;

.

Lemma 7 shows that there exist sub-exponential type of bounds on the gradient and linear combination of the Hessian of the loss functions. This is motivated by Assumption 3.2 in Ning and Liu (2017). They construct the loss function based on the observed covariates whereas our results are established upon the fact that the gradient and the hessian of the loss function involve not only observed covariates but also the estimated unmeasured confounders.

Remark 8

As the decomposition techniques commonly used in linear models may not be applicable in generalized linear model settings, it is necessary to establish stronger and more general intermediate results as the foundation of our theoretical analysis. For instance, different from linear models, where average estimation consistency on unmeasured confounders suffices, stronger uniform estimation consistency is necessary for the generalized linear framework. Specifically, in Fan et al. (2023), the linear model form and projection-based techniques enable the reduction of the gradient max-norm into , where and is the residual of the response after projecting in onto space of . To show the concentration of the gradient, a key step is to upper bound with by and , where they apply Cauchy-Schwartz inequality and use the Frobenius norm of estimated unmeasured confounders . Here is some transformation matrix and with a slight abuse of notation, we use , and to denote the matrix (vector) form of random error, unmeasured confounders, and responses. However in the generalized linear model, to derive the bound for gradient max-norm to be , we instead apply Bernstein inequality which requires the uniform estimation bound of unmeasured confounders, that is, . We leave the detailed proof of Lemma 7 in Appendix G.1.

We next obtain the upper bound for the estimation error of . To this end, we define the first-order approximation to the loss difference as . We will obtain upper and lower bounds of . As we will show, the norm is involved in both upper and lower bounds, combining which will give an inequality of and thus result in a bounded estimation error of .

Upper Bound for : recall that is a solution obtained from solving the convex optimization problem in (A10). And thus, we have the following optimality conditions and , where is a vector with entries

| (A14) |

This implies .

Recall that we denote the support set for as . We denote the difference between the estimator and the true parameter as , and its two sub-vectors are and . Similarly, we denote the sub-vectors of corresponding to non-zero entries as and that corresponding to zero entries as . With the notations introduced, the quadratic difference can then be written as

where the last inequality is by Hölder’s inequality.

From Lemma 7, we have . Since and by taking where is some constant, we further have

| (A15) | |||||

where the last inequality is because .

Lower Bound for : after establishing the upper bound for the quadratic difference, we next obtain a lower bound for it. Because is convex function, . We have from (A15), and based on this result and the restricted strong convexity condition for generalized linear model in Proposition 1 of Loh and Wainwright (2015),

| (A16) |

for some constant .

D.2 Estimation Consistency of

Before we present the proof for the estimation consistency of the estimator , we introduce additional results established based on the estimation consistency of and used in proving the estimation consistency of .

Remark 10

To prove the consistency of , we denote and establish the estimation error bound for . Recall that is a solution to (A11), that is

By definition, we have

Write , then the above inequality can be rearranged as

| (A21) |

The proof techniques are mostly motivated by Ning and Liu (2017). To present our proof, we define two quadratic difference terms as and . The left hand side of the above inequality is , and next we investigate the upper bound for in details.

For the right hand side of (A21), we first consider . Recall that we denote the support set for as . We also denote , , and . So and . Therefore we have

| (A22) | |||||

And according to Lagranian duality theory, an equivalent problem for (A11) is

for . This gives , which further results in from (A22).

We next consider the first term in (A21). Denote , which is a summation of two terms and denoted as

For , using a similar argument as in the proof of Lemma 7, we have , hence

| (A23) | |||||

For , by Assumption 2 that with and and applying Cauchy-Schwarz inequality, we have

| (A24) | |||||

The above upper bound of involves the expression of . We next investigate the relation between and . We apply Assumption 2 again on the difference between and as we do for bounding the term , and have

where the last inequality is by the estimation consistency results of in Appendix D.1 and by Proposition 1 that and . From the above result, we further apply triangular inequality and have

| (A26) |

Combining the above result on with (LABEL:Q_tau_taus) and taking , we get

| (A27) | |||||

The above result will be used to prove (A20), which will later be used to derive the error bound . Consider the following two cases.

Case 1: . We have (A20) naturally hold.

Case 2: . Then we can have

combining this result with (A27), we have . We next use this cone condition to derive the lower bound for for case 2.

Denote as a vector including a vector of zeros and the differences between estimated unmeasured confounders and the true confounders. Hence we have . We establish the lower bounds for first.

| (A28) | |||||

We next derive the bounds for the three terms in (A28) separately. For , we have

| (A29) |

For the second term in (A29), consider Assumption 2 that by letting and and applying Cauchy-Schwarz inequality, we have

| (A30) | |||||

From Assumption 2, . From the result for estimation consistency of in Appendix D.1, we have . In addition, we have . Based on these results and by the scaling condition and , we have

| (A31) |

Hence we have

| (A32) |

with probability tending to 1 as the second term in (A29) goes to 0. Recall that we denote . Because

where the last inequality is by the definition and properties of eigenvalue as well as matrix operations. Recall that we have obtained the cone condition , which results in . Moreover, using Bernstein’s inequality, it can be shown that . As , the term , which together with (A32) shows with probability tending to 1.

For , we can write it as

| (A33) |

Based on (A31) and

and under the scaling condition , we have the second term in (A33) goes to 0 and thus

| (A34) | |||||

Because we have

| (A35) | |||||

where the last inequality is from techniques similar to the proof of Lemma 7 Condition that and from Proposition 1 that . Because , under the scaling condition , combining (A34) and (A35) gives with probability tending to 1. Similarly, we have .

Summarizing the above results, we have that with a high probability as and . Substituting this result for the terms , and into (A28), and because , we have

| (A36) |

Recall we have proved (A27), which can be written as

From (A36), we have with high probability tending to 1. Substitute this result into the above inequality, we have

Cancelling the term , we have

which gives an upper bound for that

| (A37) |

which completes the proof of (A20) in case 2. Therefore, we prove (A20) holds in both cases. In both cases 1 and 2, by replacing with , we get (A19) as

To finally prove the estimation consistency of , or equivalently, to derive the error bound , we also consider two situations. If cone condition holds, we then apply similar techniques as in case 2 to derive (A36). Therefore

Otherwise, we have . From (A27), we have

which together with gives

where the last inequality is from (A20).

In conclusion, under either or , we prove

Appendix E. Proof of Theorem 3

Throughout the rest of appendix, we denote

From the Corollary S.1 in Bai and Li (2016), we have and . These results will play an important role in the following proofs. We next introduce a few technical lemmas as tools in the proof of Theorem 3.

Lemma 11 (Smoothness of loss function)

;

.

Lemma 11 shows the loss functions are smooth in a sense that they are second-order differentiable around the true parameter value. The conditions hold for quadratic loss functions as well as non-quadratic functions given the function is properly constrained.

Lemma 12 (Central limit theorem of score function)

Lemma 12 implies that a linear combination of the gradient of loss function, in other words, the score function is asymptotically normal.

Lemma 13 (Partial information estimator consistency)

With these result, we now prove the asymptotic normality of the debiased estimator, which generalizes the proof of Theorem 3.2 in Ning and Liu (2017) to the setting with unmeasured confounders.

The goal is to show the debiased estimator is asymptotically normal. First, by Lemma 12, we have (A38) hold. Therefore, it suffices to show that

which is equivalent to show

since is constant. Note that we have by the definition of estimated decorrelated score function, we next decompose the left hand side of the above expression and apply triangular inequality as

By an application of Lemma 11 condition , we write as

where the last inequality is by Hölder inequality with , . As a consequence of Lemma 7 condition , we have . In addition, Theorem 2 gives . Hence under the scaling condition of , we have

| (A39) |

By an application of Lemma 11 condition , we write as

| (A40) | |||||

From Theorem 2, we have . From Lemma 7 Condition (i), we have . Hence under the condition of , we have

| (A41) |

Appendix F. Proof of Proposition 1

To prove Proposition 1, we show that the estimated unmeasured confounders are bounded with convergence guarantees. From the expression of the estimator for unmeasured confounders in (4) of the main text and recall that and , we apply triangular inequality and have

For the first term in (LABEL:eq:max_uhat), we have

From Assumption 2, we have for all and some constant . For the norm , we apply the Cauchy-Schwarz inequality to bound the matrix norm

where the last equality follows because from Corollary S.1(b), from Corollary S.1(a) and from Proposition 1 in Bai and Li (2016). Therefore

| (A44) |

For the second term in (LABEL:eq:max_uhat), we have

| (A45) | |||||

For , we express the -dimensional vector as

| (A46) | |||||

We next investigate the bound for the term and then show the other two terms and are of smaller order and dominated by . From Assumption 2, we have , and . We apply triangular inequality and have

where the last three inequalities follow from matrix operation and properties of norms.

We have to be sub-exponential random variable since is bounded with . Combining the bound for with that and , we have , for and . So is sub-Gaussian random variable and thus is sub-exponential. As has mean zero, has mean zero and by Bernstein inequality

Apply union bound inequality, we have

where is a constant. At , the inequality

| (A47) |

holds with probability at least .

For the term , we apply Cauchy-Schwarz inequality and have

By Assumption 1(b), and are bounded in and as a result, we have

and

Because and by Berstein inequality,

and then applying union bound gives

From the above probability bound, we have and

| (A48) |

For the term , we apply Cauchy-Schwarz inequality and get

| (A49) |

By Proposition 1 in Bai and Li (2016), the first term

By a similar technique as in bounding and using the combination of Bernstein inequality and union bound, we can show , and therefore

| (A50) | |||||

Combining (A46), (A47), (A48) and (A50), we have

In addition, from Corollary S.1 (a) in Bai and Li (2016), we have with being finite constant as is symmetric and positive definite. Substituting these results into (A45), we have

| (A51) |

Combining (LABEL:eq:max_uhat) and (A51), we show

Appendix G. Proofs of Lemmas

G.1 Proof of Lemma 7

Proof of Condition (i). To prove the Condition (i) in Lemma 7, we need to show that

From Assumption 2, we have to be sub-exponential variable with mean 0 and . In addition, it is assumed that and . Denote . From Proposition 1, . Since , it can be shown that .

To prove condition (i), we focus on finding the appropriate sequence of such that the following probability tends to 0.

| (A52) |

where

We let and , then it can be verified that the probability , and tends to 0 under . Next, we look at , and to determine , and separately.

For , as is independent mean 0 sub-exponential random variables and , by Bernstein inequality, we have

Then applying union bound inequality, we have

so at , the probability tends to 0. Using similar techniques, we can show that at , the probability tends to 0; at , the probability tends to 0. Hence at

the probability (A52) tends to 0. As dominates in the above expression, we eventually have

which completes the proof for condition (i).

Proof of Condition . To show condition in Lemma 7 holds, we use a similar technique as in proving condition (i) and decomposing the probability as follows. Recall that and the two sub-vectors of are denoted as and . Denote .

| (A53) |

where .

Similarly as in proof of condition , we let with . From Assumption 2, we have , and as a result, . In addition, .

We let

It can be shown that , , and tends to 0 under . Then we determine , , and such that the probability (A53) tends to 0.

For , as is independent mean 0 sub-exponential random variables and

where we denote for notational simplicity. By Bernstein inequality, we have

Then applying union bound inequality, we have

so at , the probability tends to 0. Using similar techniques, we can show that at , the probability tends to 0; at , the probability tends to 0; at , the probability tends to 0. Hence at

the probability (A53) tends to 0. Since dominates , and , we have

This completes the proof for Condition .

G.2 Proof of Lemma 9

In this proof, we denote