High-order symplectic Lie group methods on using the polar decomposition

Abstract.

A variational integrator of arbitrarily high-order on the special orthogonal group is constructed using the polar decomposition and the constrained Galerkin method. It has the advantage of avoiding the second-order derivative of the exponential map that arises in traditional Lie group variational methods. In addition, a reduced Lie–Poisson integrator is constructed and the resulting algorithms can naturally be implemented by fixed-point iteration. The proposed methods are validated by numerical simulations on which demonstrate that they are comparable to variational Runge–Kutta–Munthe-Kaas methods in terms of computational efficiency. However, the methods we have proposed preserve the Lie group structure much more accurately and and exhibit better near energy preservation.

1. Introduction

1.1. Overview

Given a configuration manifold , variational integrators provide a useful method of deriving symplectic integrators for Lagrangian mechanics on the tangent bundle in terms of the Lagrangian , or for Hamiltonian mechanics on the cotangent bundle in terms of the Hamiltonian . It involves discretizing Hamilton’s principle or Hamilton’s phase space principle rather than the Euler–Lagrange or Hamilton’s equations. Discrete Lagrangian variational mechanics is described in terms of a discrete Lagrangian , which is an approximation of the exact discrete Lagrangian,

| (1) |

The discrete Hamilton’s principle states that the discrete action sum is stationary for variations that vanish at the endpoints. This yields the discrete Euler–Lagrange equations,

where denotes the partial derivative with respect to the -th argument. This defines an update map on , where . The update map can equivalently be described in terms of the discrete Legendre transforms,

| (2) |

which defines an update map on that automatically preserves the canonical symplectic structure on .

The order of the variational integrator depends on how accurately approximates . To derive a high-order discrete Lagrangian, a typical approach is the Galerkin method [14]. This involves considering the definition of the exact discrete Lagrangian, replacing with a finite-dimensional function space, and approximating the integral with a numerical quadrature formula. When the configuration manifold is a linear space and the polynomials of degree less than or equal to are chosen, the classical symplectic partitioned Runge–Kutta methods are recovered. Subsequently, Leok and Shingel [12] introduced the shooting-based discrete Lagrangian, which allows one to construct a symplectic integrator from an arbitrary one-step method.

When the configuration manifold is a Lie group , the construction of the discrete Lagrangian is more complicated than the case of linear space. Leok [11] proposed parametrizing curves on the Lie group using the exponential map, namely a curve connecting and that is represented by

where is a curve on the Lie algebra of with fixed endpoints and . This allows one to replace variations in by variations in on the Lie algebra , which is a linear space. This yields the following expression for the exact discrete Lagrangian,

| (3) |

where is the tangent lift of the exponential map. If is restricted to a finite-dimensional function space and the integral is replaced with a quadrature rule, we obtain the Galerkin Lie group variational integrators. The error analysis and implementation details for such methods can be found in [8, 3]. The above construction can be naturally extended to any retraction [1] on , which is a diffeomorphism from a neighborhood of to neighborhood of that satisfies a rigidity condition. The main disadvantage of Galerkin Lie group variational integrators is the term in (3). This implies that the resulting discrete Euler–Lagrange equations involve , which cannot be calculated exactly in general and requires the truncation of a series expansion.

1.2. Contributions

In this paper, we focus on the Lie group as our configuration space. By using the fact that every invertible square matrix can be uniquely decomposed into the product of an orthogonal matrix and a symmetric positive-definite matrix via the polar decomposition, we will circumvent the disadvantage discussed previously: Instead of parametrizing curves on by the exponential map or a retraction, is embedded naturally in the space , an open subset of . Given fixed endpoints and , we will construct interpolating polynomials in while ensuring that the internal points remain on by using the polar decomposition. Furthermore, we do not extend the Lagrangian to but instead project the trajectory onto in the same way. The variational integrator in Lagrangian form is derived following the usual variational approach for the constrained Galerkin method and the Hamiltonian form is derived using the discrete Legendre transforms.

For a system with rotational symmetry, we obtain a simpler integrator using Lie–Poisson reduction on the Hamiltonian side. Namely, if is -invariant, the constructed discrete Lagrangian is also -invariant and we can construct a reduced symplectic Lie–Poisson integrator. Lastly, we consider the dipole on a stick problem from [3], conduct numerical experiments using our method, and compare these to the variational Runge–Kutta–Munthe-Kaas methods (VRKMK) from the same reference.

2. Background

2.1. Notation

Recall that the Lie algebra of is the set , with the matrix commutator as the Lie bracket. Here, the inner products on and are introduced, and we identify these spaces with their dual spaces using the Riesz representations. For any , the inner product is given by

For any , the inner product is defined by

In addition, consider the operator , defined by . The following properties can be easily verified:

-

(a)

For any , .

-

(b)

For any , .

-

(c)

For any , .

In particular, we note that (c) gives a relationship between the two inner products. Lastly, given the choice of inner products, Riesz representation allows us to identify with and with .

2.2. Polar Decomposition

We introduce the polar decomposition and the construction of the retraction on described in [4]. Given , it decomposes uniquely as

where is the set of symmetric positive-definite matrices. This is the polar decomposition of , and we denote it as a coproduct mapping by . The map of interest is the projection defined by

where is the projection onto the first coordinate. In particular, when , we have . A fast and efficient algorithm for calculating the projection of the polar decomposition is by Newton iteration,

| (4) |

Now, this projection can be used to construct a retraction on from its Lie algebra relative to the identity element ,

where . This provides a diffeomorphism between a neighborhood of and a neighborhood of . To calculate its inverse, suppose that and take the transpose on both sides to obtain , which implies that . Thus, we have that

| (5) |

This is a Lyapunov equation, and it is well-known that matrix equations of the form have a unique solution if and only if for any , . For in the neighborhood of identity, its eigenvalues lie in the open right-half plane, which ensures that a unique solution to (5) exists. In principle, this Lyapunov equation can be solved using classical algorithms [2, 7]. For convenience, we denote the solution to the Lyapunov equation as

Next we introduce the tangent map and its adjoint for , which are essential for the derivation of the variational integrator.

2.2.1. The Tangent Map

Consider the polar decomposition and differentiate both sides to yield . By left-trivialization on , we can write , where . Rearranging gives , and since , we get that . Consequently, we may write it in the form of a Lyapunov equation,

| (6) |

We see that the tangent map of the polar decomposition is given by , where we solve the Lyapunov equation (6) to obtain .

2.2.2. The Adjoint of the Tangent Map

The adjoint of can be defined as

Recall that involves solving the Lyapunov equation (6). We aim to compute , so we define the following two maps,

where is fixed. Therefore, can be viewed as composition of and ,

and . We shall derive the expressions for and by considering the Riesz representations for our domains and codomains. For the adjoint of , let , then

Thus, , and is Hermitian. For , let and , then

Therefore, , and we obtain

where . Finally, we state a lemma that will be used later:

Lemma 1.

if and only if and for all .

3. Lagrangian Variational Integrators on the Rotation Group

Let the configuration manifold be the rotation group , and be a left-trivialized Lagrangian. We shall construct a discrete Lagrangian following the approach of constrained Galerkin methods on (see Appendix A). Denote the internal points by and the left-trivialized internal tangent vectors by . Fixing the endpoints and , we have

subject to the constraint

| (7) |

where the internal points are represented by

| (8) |

The expressions inside the parentheses in (7) and (8) correspond to a Runge–Kutta method in the embedding space. But, since these points may not lie on the Lie group , they are projected to using the polar decomposition.

Observe that (7) is equivalent to the condition that . Suppose that is small, and and are close enough to each other, then is in the neighborhood of . By Lemma 1, (7) holds if and only if , and so it is equivalent to

Now, we can construct a discrete Lagrangian with the constraint using a Lagrange multiplier ,

The corresponding variational integrator is given by

| (9a) | ||||

| (9b) | ||||

| (9c) | ||||

| (9d) | ||||

| (9e) | ||||

We shall compute the above equations more explicitly. It is easy to see that (9b) is equivalent to the constraint (7). Let us turn to (9a), where the main difficulty is the implicit dependence of on that involves solving a nonlinear system (8). Suppose that is fixed, and we vary such that with and , while remain fixed. Then,

Differentiating both sides and letting , we have that

| (10) |

where . Then, (10) can be rewritten as

| (11) |

In order to represent in terms of , we define three maps,

Then,

| (12) |

Now, we compute by evaluating and expressing as a left-trivialized cotangent vector. Since this is a straightforward calculation of the variation, we present the result here for equation (9a) (see section C.1 for details),

| (13) |

for any .

Recall that and its dual is given by

Therefore, let us derive the explicit expressions for the adjoints of our three proposed maps, so we may write explicitly. The adjoint of is easy to compute, and for any ,

| (14) |

where is in the -th position. For the adjoint of , we consider the identity

for any . Using the properties of the inner products again, we obtain the explicit expression (see section C.2 for details),

| (15) |

Similarly, we consider the same identity and techniques to obtain the explicit expression for (see section C.3 for details),

| (16) |

Combining (14), (15), and (16), for can be computed as follows,

| (17a) | ||||

| (17b) | ||||

We can first calculate from (17a) by using fixed-point iteration, and then substitute the result into (17b) to obtain . This combined with (13) gives an explicit formula for (9a).

We now derive an explicit formula for (9d). Notice that depends on by the nonlinear system in (8), so we can use the method of variations again. If we vary such that and , we obtain

Differentiating on both sides and letting , where is a left-trivialized tangent vector, we obtain

which can be rewritten as

Similar to the approach used for , we introduce a new map,

then . The explicit expression for can be written as (see section C.4 for details),

| (18) |

As such, can be computed as follows,

| (19a) | ||||

| (19b) | ||||

Now, we compute , which gives us (9d) explicitly (see section C.5 for details),

| (20) |

For equation (9e), it is easy to show that

| (21) |

Combining (13), (7), (8), (20), and (21), we obtain a Lagrangian variational integrator on ,

| (22a) | ||||

| (22b) | ||||

| (22c) | ||||

| (22d) | ||||

| (22e) | ||||

The integrator gives an update map on the cotangent bundle. In particular, one may solve for simultaneously using equations (22a)–(22d). Unfortunately, while (22b)–(22d) can be written in fixed-point form for the variables , , and , (22b) is implicit for . However, we can arrive at a fixed-point form for (22a) on the Hamiltonian side if is hyperregular.

4. Hamiltonian Variational Integrators on the Rotation Group

It is often desirable to transform a numerical method from the Lagrangian side to the Hamiltonian side, which is possible if is hyperregular. The same mechanical system can be represented by either a Lagrangian or a Hamiltonian, and they are related by the Legendre transform. In Euclidean space, this gives

and we have the following relationships,

Given a Lie group , a left-trivialized Lagrangian , and its corresponding Hamiltonian , it is easy to verify that similar relationships hold for these trivializations,

| (23) |

Using (23) and denoting the corresponding internal cotangent vectors by , (22) can be transformed to the Hamiltonian form as

| (24a) | ||||

| (24b) | ||||

| (24c) | ||||

| (24d) | ||||

| (24e) | ||||

| (24f) | ||||

In the above algorithm, is given explicitly by (24f) and only serves to reduce the redundancy in the computations because they are used numerous times in the other expressions. Similarly, shows up in both (24a) and (24d), so one can save computational effort by reusing the shared solution to (17a) and (19a). Then, the first four equations can be solved simultaneously by fixed-point iterations, meaning the variables are updated concurrently in each iteration. Observe that the fixed-point form for in (24d) requires solving a Lyapunov equation. Finally, is solved explicitly in (24e) after solving for . We shall call the integrators defined by (24) the variational polar decomposition method or VPD for short.

4.1. Lie–Poisson Integrator by Reduction

We consider a -invariant Hamiltonian system given by on the cotangent bundle . In this case, Hamilton’s equations can be reduced to a Lie–Poisson system on . If the Hamiltonian is hyperregular, then both the Lagrangian and the corresponding constrained Galerkin discrete Lagrangian will be -invariant. As such, (2) naturally reduces to yield a Lie–Poisson integrator (see Appendix B). We only consider the reduction on the Hamiltonian side due to the nature of the constrained Galerkin methods, which give an update map on the cotangent bundle.

The discrete Lagrangian we have constructed becomes

where

and is the reduced Lagrangian. It is easy to verify that our system is -invariant, meaning

where

Therefore, the variational integrator (24) can theoretically be reduced to a Lie–Poisson integrator. By letting and , (24) simplifies to

| (25a) | ||||

| (25b) | ||||

| (25c) | ||||

| (25d) | ||||

| (25e) | ||||

| (25f) | ||||

where is the reduced Hamiltonian. Multiplying by on both sides of yields

Suppose that is small and and are close, then is in the neighborhood of . By Lemma 1, this is equivalent to

which can be regarded as the fixed-point equation for . The first four equations can be solved using fixed-point iteration for the variables as in our previous discussions. Then, can be calculated explicitly.

In the above algorithm, we also need to figure out the reduced version of and . Note that (17a) and (17b) involve ; in addition, , and are reduced, so we need a reduced version of as well. Multiplying on the left by , we obtain

Then, is the polar decomposition of , and for ,

This is the reduced version of , and so can be computed as follows,

| (26a) | |||

| (26b) | |||

where are redefined to be . For , we need to compute for some , which is given by

Hence, we have

| (27a) | |||

| (27b) | |||

5. Numerical Experiments

We test our methods and compare them to the variational Runge–Kutta–Munthe-Kaas (VRKMK) methods from Bogfjellmo and Marthinsen [3] on the dipole on a stick problem that they considered. In particular, our configuration space is , and its Lie algebra is identified with . We shall only recall the mathematical expressions here, so for a thorough description of the system one should refer to the reference above. The right-trivialized and left-trivialized Hamiltonians, , can be written as

| (28) | ||||

| (29) |

where . Note that , with and . The constant vectors are and . Lastly, , and is our usual Euclidean norm.

Both forms of the Hamiltonian are written here because while our method was developed for left-trivialized systems using Hamilton’s principle, their method was developed for right-trivialized systems using the Hamilton–Pontryagin principle. As a result, both discretizations yield symplectic variational integrators for the Hamiltonian with their corresponding choice of trivialization. In particular, we have

| (30) |

as a relationship between the dual elements of the corresponding trivialization: is the dual representation of for the right-trivialization , and is the dual representation of for the left-trivialization . Consequently, we note that the left-trivialized cotangent vector in VPD (24) is computed as

where is the matrix derivative of with respect to . On the other hand, the right-trivialized cotangent vector is computed as

when implementing the VRKMK method.

For our tests, we also have the same initial data from [3],

for the VRKMK methods, and so (30) gives to complete the initial data for the VPD methods. Since both methods involve fixed-point iteration, we terminate the processes when the norm between the current and previous iteration is less than for each variable. In particular, the norm for vectors is the Euclidean norm and for matrices it is the induced matrix norm. Lastly, we ran these implementations in Wolfram Mathematica 12 on a personal computer with the following specifications: Operating system: Windows 10; CPU: 3.7GHz AMD Ryzen 5 5600X; RAM: G.Skill Trident Z Neo Series 32Gb DDR4 3600 C16; Storage: 1TB Rocket Nvme PCIe 4.0 writing/reading up to 5000/4400 MB/s.

5.1. Order Tests

| 1 |

We run both methods based on the one-, two-, and three-stage Gauss–Legendre methods and a third-order Runge–Kutta method for comparisons, and these methods are shown as Butcher tableaux in Table 1.

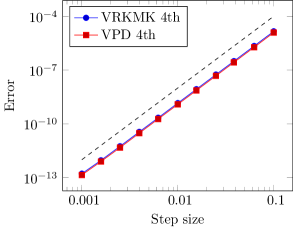

We compute the errors in from VRKMK and errors in from VPD with respect to a reference solution and these errors are shown in Figure 1. The reference solution was calculated using the sixth-order VPD method with step size . The errors for VRKMK is computed as while the errors for VPD is computed as . The black-dashed lines are reference lines for the appropriate orders: In the sixth order comparison plot, the errors from the fixed-point iteration are dominating for smaller step sizes, so the theoretical order is not observed in those regimes. Otherwise, both methods achieve their theoretical orders, and they are quite comparable, noting that VPD exhibits a noticeable, smaller error constant in the second order method.

5.2. Long-Term Behaviors

The long-term energy behaviors for both methods are presented in Figures 2 – 5. For second, third, and fourth order, we fixed the step size and ran integration steps to observe the energy errors. For second order, the energy errors have magnitude orders of for VRKMK and for VPD; for third order, they have magnitude orders of for VRKMK and for VPD; for fourth order, they have magnitude orders of for both methods.

For the sixth order, we consider step size to avoid the regime where the errors in the fixed-point iteration are dominating. This step size corresponds to the third point from the right in the order comparison plots. We also run integration steps and observe the energy errors in Figure 5. The energy error for the VPD method is stable with an order of magnitude of . On the other hand, the energy error in VRKMK exhibits a slow increase over the integrating time span. We investigated this phenomenon and attribute this to the framework of each method. In VPD, in (24b) and the internal points in (24c) are updated in each integration step via polar decomposition. As a result, the Lie group structure is always preserved up to machine precision for both the configuration space elements and internal points. However, in VRKMK, both and the internal points are updated by the left action of . This left action is a matrix multiplication which is performed to machine precision, but with a sufficient number of multiplications, the round-off error will still accumulate. Consequently, the Lie group structure is not as well-preserved in comparison, and this is illustrated in the comparison of orthogonality errors in Figure 6.

We also observed that projecting the sixth-order VRKMK method onto the rotation group using the polar decomposition at each timestep does not recover the near energy preservation typical of symplectic methods. Presumably, this is because the projection subtly compromises the symplecticity of the method. This is consistent with the observation made in [10], that both the Lie group structure and symplecticity needs to be preserved for the methods to exhibit near energy preservation.

5.3. Runtime Comparison

We also have data for runtime comparison of VRKMK and VPD for the two-stage and three-stage Gauss–Legendre methods in Figure 7. For each step size , we recorded the runtime of each method in seconds and repeated the execution 256 times to compute the average runtime. The runtime for VPD methods is on average longer than for VRKMK methods. This may be due in parts to the Lyapunov solutions and multiple layers of fixed-point iterations required in the polar decomposition in (4) and in the same equations (17a) and (19a).

6. Conclusion

By applying the polar decomposition in a constrained Galerkin method, we constructed high-order Lie group symplectic integrators on , which we refer to as the variational polar decomposition method. Furthermore, the integrator can be reduced to a Lie–Poisson integrator when the underlying mechanical system is group-invariant. We performed extensive numerical experiments comparing our proposed method to the variational Runge–Kutta–Munthe-Kaas method from [3]. These comparisons provide insights into each method and highlight an advantage of our proposed method, which is the preservation of Lie group structure up to machine precision for both the configurations and internal points. This appears to be important for high-order methods to exhibit the near energy preservation that one expects for symplectic integrators when applied to dynamics on Lie groups.

For future work, it would be interesting to explore the generalization of the proposed method to symmetric spaces, by applying the generalized polar decomposition [15]. This may be of particular interest in the construction of accelerated optimization algorithms on symmetric spaces, following the use of time-adaptive variational integrators for symplectic optimization [6] based on the discretization of Bregman Lagrangian and Hamiltonian flows [16, 5]. Examples of symmetric spaces include the space of Lorentzian metrics, the space of symmetric positive-definite matrices, and the Grassmannian.

Acknowledgements

This research has been supported in part by NSF under grants DMS-1010687, CMMI-1029445, DMS-1065972, CMMI-1334759, DMS-1411792, DMS-1345013, DMS-1813635, CCF-2112665, by AFOSR under grant FA9550-18-1-0288, and by the DoD under grant HQ00342010023 (Newton Award for Transformative Ideas during the COVID-19 Pandemic). The authors would also like to thank Geir Bogfjellmo and Håkon Marthinsen for sharing the code for their VRKMR method from [3], which we used in the numerical comparisons with our method.

Appendix A Constrained Galerkin Methods

Our Galerkin variational integrator will involve a discrete Lagrangian that differs from the classical construction in [14]. Traditionally in the linear space setting, (1) is approximated with a quadrature rule

and is approximated by polynomials with degree less than or equal to with fixed endpoints and . By choosing interpolation nodes with and interpolation values with and , can be expressed as on , where are Lagrange polynomials corresponding to the nodes . By taking variations with respect to the interpolation values , is varied over the finite-dimensional function space,

Consider the quadrature approximation of the action integral, viewing it as a function of the endpoint and interpolation values,

where . Then, a variational integrator (2) can be obtained as follows,

| (31) |

However, (31) is often impractical due to the complexity of evaluating and , which involve the Lagrange interpolating polynomials. In addition, computing the root of a system of nonlinear equations in (31) can be challenging because the formulation of a fixed-point problem could be complicated, and convergence issues could arise. In contrast, Runge-Kutta methods are already in fixed-point formulation and are convergent as long as consistency is satisfied.

Now, note that the finite-dimensional function space does not depend on the choice of nodes , and by a tricky elimination of , (31) can be simplified to yield

| (32a) | ||||||

| (32b) | ||||||

| (32c) | ||||||

where . When transformed to the Hamiltonian side, (32) simply recovers the symplectic partitioned Runge–Kutta method.

The same variational integrator can be derived in a much simpler way: Instead of performing variations on internal points , we will perform variations on the internal derivatives , subject to the constraint . Then, the internal points are reconstructed using the fundamental theorem of calculus and the degree of precision of the quadrature rule to obtain . Now, consider the quadrature approximation of the action integral, viewed as a function of the endpoint values and the internal velocities,

where is a Lagrange multiplier that enforces the constraint. Then, a variational integrator (2) can be obtained as follows,

| (33) |

Explicitly expanding (33) and eliminating the Lagrange multiplier yields (32) in a much more straightforward manner. This same technique, known as the constrained Galerkin method, is adopted on the rotation group in order to directly obtain a variational integrator in fixed-point form.

Appendix B Euler–Poincaré & Lie–Poisson Reductions

When the Lagrangian or Hamiltonian is left-invariant, the mechanical system can be symmetry reduced to evolve on the Lie algebra or its dual , respectively, assuming some regularity. On the Lagrangian side, this corresponds to Euler–Poincaré reduction [13], which is described by the Euler–Poincaré equations,

The above is expressed in terms of the reduced Lagrangian . As a result, this can be described in terms of a reduced variational principle with respect to constrained variations of form , where is an arbitrary path in the Lie algebra that vanishes at the endpoints, namely . The constraint on the variations are induced by the condition that together with arbitrary variations that vanish at the endpoints.

On the Hamiltonian side, this corresponds to Lie–Poisson reduction [13]. Recall that the Lie–Poisson structure on is given by

and together with the reduced Hamiltonian , they gives the Lie–Poisson equations on ,

If the discrete Lagrangian is also -invariant, meaning for some , then (2) can be reduced to a Lie–Poisson integrator [9],

| (34) |

where . This algorithm preserves the coadjoint orbits and, hence, the Poisson structure on .

Appendix C Detailed Derivations for the Lagrangian Variational Integrators

C.1. Derivations of

Initially, we have

where we can use the properties of the inner products to express the last term as follows,

Then, we continue using equation (12) to obtain,

We finally have

C.2. Explicit Expression for : A Derivation

C.3. Explicit Expression for : A Derivation

C.4. Explicit Expression for : A Derivation

C.5. Derivation of

We compute

Thus, we have

References

- Absil et al. [2008] P.-A. Absil, R. Mahony, and R. Sepulchre. Optimization Algorithms on Matrix Manifolds. Princeton University Press, 2008.

- Bartels and Stewart [1972] R. H. Bartels and G. W. Stewart. Solution of the matrix equation [f4]. Communications of the ACM, 15(9):820–826, 1972.

- Bogfjellmo and Marthinsen [2016] G. Bogfjellmo and H. Marthinsen. High-order symplectic partitioned Lie group methods. Foundations of Computational Mathematics, 16:493–530, 2016.

- Celledoni and Owren [2002] E. Celledoni and B. Owren. A class of intrinsic schemes for orthogonal integration. SIAM Journal on Numerical Analysis, 40(6):2069–2084, 2002.

- Duruisseaux and Leok [2021] V. Duruisseaux and M. Leok. A variational formulation of accelerated optimization on Riemannian manifolds. 2021.

- Duruisseaux et al. [2021] V. Duruisseaux, J. Schmitt, and M. Leok. Adaptive Hamiltonian variational integrators and applications to symplectic accelerated optimization. SIAM Journal on Scientific Computing, 43(4):A2949–A2980, 2021. doi: 10.1137/20M1383835.

- Golub et al. [1979] G. Golub, S. Nash, and C. Van Loan. A Hessenberg-Schur method for the problem . IEEE Transactions on Automatic Control, 24(6):909–913, 1979.

- Hall and Leok [2017] J. Hall and M. Leok. Lie group spectral variational integrators. Foundations of Computational Mathematics, 17:199–257, 2017.

- J.E. Marsden and Shkoller [1999] S. Pekarsky J.E. Marsden and S. Shkoller. Discrete Euler–Poincaré and Lie-Poisson equations. Nonlinearity, 12:1647–1662, 1999.

- Lee et al. [2007] T. Lee, M. Leok, and N. H. McClamroch. Lie group variational integrators for the full body problem in orbital mechanics. Celest. Mech. Dyn. Astr., 98(2):121–144, 2007.

- Leok [2005] M. Leok. Generalized Galerkin variational integrators. arXiv:math/0508360, 2005.

- Leok and Shingel [2012] M. Leok and T. Shingel. General techniques for constructing variational integrators. Frontiers of Mathematics in China, 7:273––303, 2012.

- Marsden and Ratiu [1999] J. E. Marsden and T. S. Ratiu. Introduction to Mechanics and Symmetry, volume 17 of Texts in Applied Mathematics. Springer-Verlag, second edition, 1999.

- Marsden and West [2001] J. E. Marsden and M. West. Discrete mechanics and variational integrators. Acta Numer., 10:357–514, 2001.

- Munthe-Kaas et al. [2001] Hans Z Munthe-Kaas, GRW Quispel, and Antonella Zanna. Generalized polar decompositions on lie groups with involutive automorphisms. Foundations of Computational Mathematics, 1(3):297–324, 2001.

- Wibisono et al. [2016] A. Wibisono, A. Wilson, and M. Jordan. A variational perspective on accelerated methods in optimization. Proceedings of the National Academy of Sciences, 113(47):E7351–E7358, 2016.