HooliGAN: Robust, High Quality Neural Vocoding

Abstract

Recent developments in generative models have shown that deep learning combined with traditional digital signal processing (DSP) techniques could successfully generate convincing violin samples [1], that source-excitation combined with WaveNet yields high-quality vocoders [2, 3] and that generative adversarial network (GAN) training can improve naturalness [4, 5].

By combining the ideas in these models we introduce HooliGAN, a robust vocoder that achieves state-of-the-art results, fine-tunes very well to smaller datasets (<30 minutes of speech data) and generates audio at 2.2MHz on GPU and 35kHz on CPU. We also show a simple modification to Tacotron-based models that allows seamless integration with HooliGAN.

Results from our listening tests show the proposed model’s ability to consistently output high-quality audio with a variety of datasets, big and small. We provide samples at the following demo page: https://resemble-ai.github.io/hooligan_demo/

Keywords: neural vocoder, text to speech, DDSP, GAN, NSF

1 Introduction

PREPRINT - UNDER REVIEW

Since the introduction of WaveNet [6], deep neural network based vocoders have been shown to be vastly superior in naturalness compared to traditional parametric vocoders. Unfortunately, the original WaveNet suffers from slow generation performance due to its high complexity and auto-regressive generation. Other works address this issue by reducing parameters and complexity (WaveRNN [7], LPCNet [8], FFTNet [9]) and/or replacing the auto-regressive generation with parallel generation (Parallel WaveNet [10], WaveGlow [11], Clarinet [12], MelGAN [4], Parallel WaveGAN [5]).

Most of these vocoders consume log mel spectrograms that are predicted by a text to acoustic model such as Tacotron [13, 14]. However, if there is sufficient noise in these predicted features, entropy increases in the vocoder, creating artifacts in the output signal. Phase is also an issue. If a vocoder is trained directly on discrete samples (e.g., with a cross-entropy loss between predicted and ground truth samples), it can result in a characteristic “smeared” sound quality. This is because a periodic signal composed of different harmonics can have an infinite number of variations in its discrete waveform while sounding identical. In this training scenario, the vocoder is forced to “solve for phase”.

Differentiable Digital Signal Processing (DDSP) [1], while not strictly a vocoder, showed that it is possible to leverage traditional DSP components like oscillators, filters, amplitude envelopes and convolutional reverb to generate convincing violin sounds, and that this can be done without needing to explicitly “solve for phase”. Meanwhile, the Neural Source Filter (NSF) [2, 3] family of vocoders shows that an F0 (fundamental frequency) driven source-excitation combined with neural filter blocks (i.e., simplified WaveNet blocks) generates outputs with naturalness competitive with vocoders that only take log mel spectrograms as input.

In this work we take the ideas behind DDSP and NSF and combine them into an efficient and robust model, whereby the source excitation is inspired by DDSP and the filtering is inspired by NSF’s neural filter blocks. To improve naturalness we also utilise the discriminator module and adversarial training outlined in MelGAN. What we arrive at is a model with impressive sound-quality, inference speed and robustness.

In the next section we review some key ideas from the DDSP, NSF and MelGAN models that we use in this work. In Section 3 we outline the HooliGAN model, and in Section 4 we evaluate the proposed model’s naturalness, robustness and performance. We then discuss our findings in Section 5.

2 Background

2.1 DDSP

DDSP [1] comprises three main parts: an encoder that takes in log mel spectrograms; a decoder that predicts sequences of parameters that drive an additive oscillator, noise filter coefficients (via spectra predictions), loudness distributions for the oscillator harmonics and amplitude envelopes for both the waveform and noise; and a learned impulse response that is convolved with the signal, in effect applying reverberation to the output.

While this model excels at generating realistic violin samples, when it comes to modelling speech, we found that it cannot model highly detailed transients on the sample level since the primary waveshaping components, i.e., the filter and envelopes, are operating on the frame level. Also, we found that the sinusoidal oscillator cannot generate convincing speech waveforms on its own.

Our interest in DDSP primarily concerns the additive oscillator and the model’s ability to learn time-varying amplitude envelopes.

2.2 NSF

NSF [2, 3] comprises two excitation sources, namely a fundamental pitch (F0) driven sinusoidal signal and a constant Gaussian noise signal, each of which is gated by an unvoiced/voiced (UV) switch, filtered by a simplified WaveNet (which they refer to as a neural filter) and passed through a Finite Impulse Response (FIR) filter.

While the sound quality of these vocoders is competitive, we surmise that some of the heavy lifting of the 6 WaveNet stacks could be offloaded to computationally lighter DSP components such as the additive oscillator and loudness envelopes as described in Section 3.2.

2.3 MelGAN

MelGAN [4] and Parallel WaveGAN [5] are the first competitive GAN-based vocoder models. While Parallel WaveGAN has superior naturalness in its output, MelGAN’s discriminator has widely-strided, large kernel size convolutions that are particularly well suited to a phase-agnostic training objective and we incorporate that into our proposed model.

3 HooliGAN

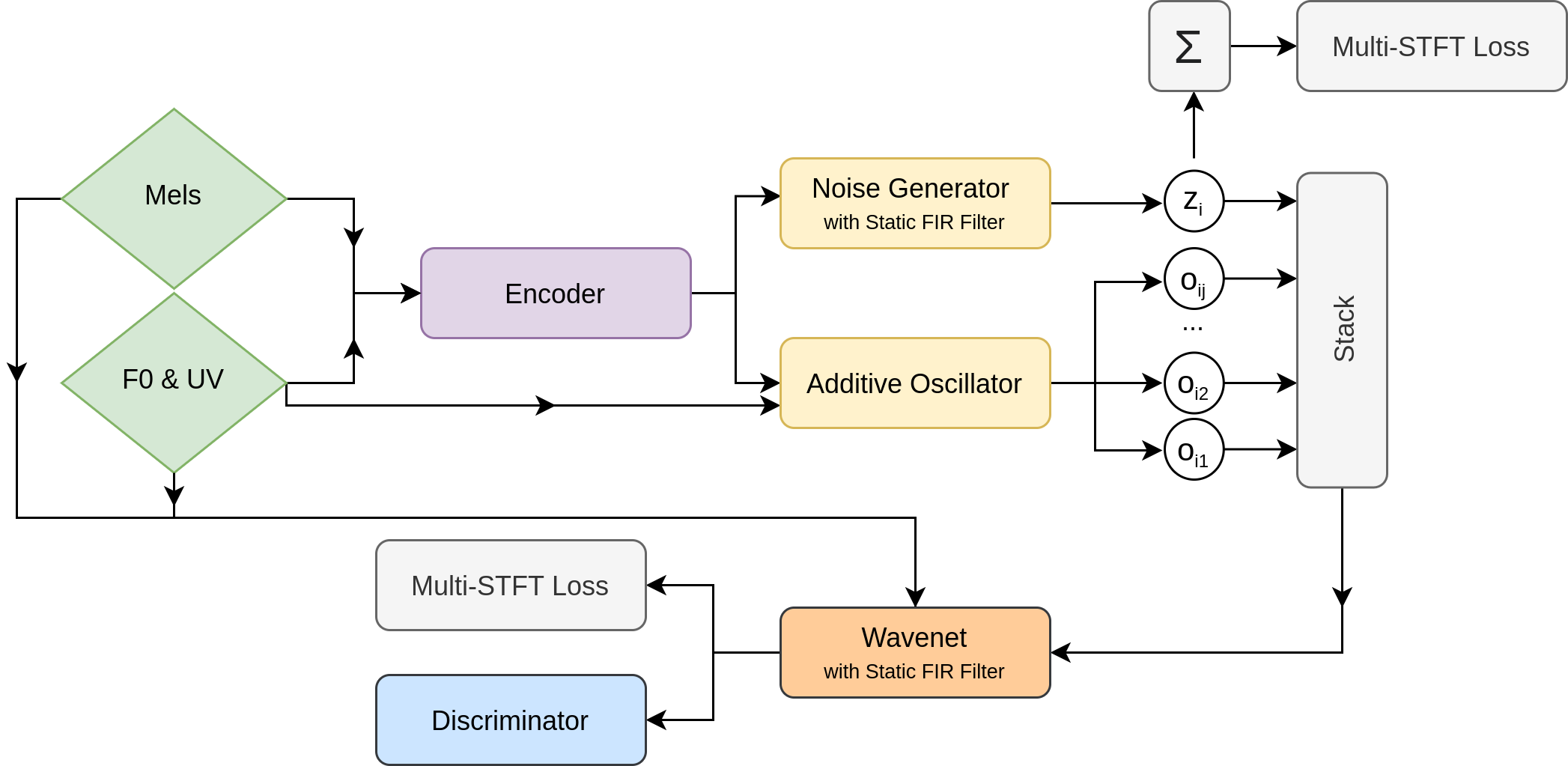

In the proposed model, as shown in Figure 1, we take as input log mel spectrograms $m_i$, an F0 pitch sequence $f_i$ and a UV voicing sequence $v_i$, which we extract from $f_i$ with:

$$v_i = \begin{cases} 1, & \text{if } f_i > 0 \\ 0, & \text{otherwise} \end{cases} \tag{1}$$

Note that for all equations $i$ is the time axis on the frame level and $t$ is the time axis on the sample level, while $k$ will be used interchangeably for the channel/harmonic axes.

3.1 Encoder

The log mel spectrogram, F0 and UV sequences are concatenated together and put through two 1D convolutional layers with 256 channels, a kernel size of 5 and LeakyReLU non-linearity [15]. A fully connected layer then outputs a sequence of vectors that is split in two along the channel axis, one half controlling the oscillator and the other the noise generator.

3.2 Additive Oscillator

The additive oscillator module takes in its half of the encoder output, transforms it with a fully connected layer and applies the modified sigmoid non-linearity described in [1]. We then use linear interpolation to upsample from the frame level to the sample level. Here the parameters are split up into a sequence of distributions for controlling the loudness of each harmonic and an overall amplitude envelope for all harmonics.

Before generating the harmonics we create frequency values $F_{t,k}$ for $K$ harmonics by taking the input F0 sequence $f_i$, upsampling it to the sample level with linear interpolation to get $f_t$ and multiplying that with the integer sequence $k = 1, \dots, K$:

$$F_{t,k} = k \, f_t, \qquad k \in \{1, \dots, K\} \tag{2}$$

Please note that before we upsample $f_i$, we interpolate across the unvoiced segments in order to avoid glissando artifacts resulting from the quick jumps from 0Hz to the voiced frequency.
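The F0 preparation described above can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the function names, the choice to hold the nearest voiced value at the edges of the sequence, and the alignment of frame $i$ with sample $i \cdot \text{hop}$ are ours and are not taken from the model code.

```python
def fill_unvoiced(f0):
    """Linearly interpolate F0 across unvoiced (zero-valued) frames."""
    voiced = [i for i, f in enumerate(f0) if f > 0]
    if not voiced:
        return list(f0)
    out = list(f0)
    # Assumption: hold the nearest voiced value before the first and
    # after the last voiced frame.
    for i in range(voiced[0]):
        out[i] = f0[voiced[0]]
    for i in range(voiced[-1] + 1, len(f0)):
        out[i] = f0[voiced[-1]]
    # Interpolate linearly inside each gap between voiced frames.
    for a, b in zip(voiced, voiced[1:]):
        for i in range(a + 1, b):
            w = (i - a) / (b - a)
            out[i] = (1 - w) * f0[a] + w * f0[b]
    return out


def upsample_linear(frames, hop):
    """Upsample a frame-level sequence to len(frames) * hop samples."""
    n = len(frames)
    out = []
    for t in range(n * hop):
        pos = t / hop
        i = min(int(pos), n - 1)
        j = min(i + 1, n - 1)
        w = pos - i
        out.append((1 - w) * frames[i] + w * frames[j])
    return out


def harmonic_frequencies(f_t, num_harmonics):
    """Multiply the sample-level F0 by the integer sequence 1..K."""
    return [[k * f for k in range(1, num_harmonics + 1)] for f in f_t]


f0_frames = [0.0, 100.0, 0.0, 0.0, 130.0]  # Hz, 0 marks unvoiced frames
filled = fill_unvoiced(f0_frames)
f_t = upsample_linear(filled, hop=4)
freqs = harmonic_frequencies(f_t, num_harmonics=3)
```

Filling the gaps before upsampling means the sample-level F0 never ramps between 0Hz and a voiced value, which is the glissando the text refers to.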

We then create a mask that zeroes every harmonic whose frequency value is above 3.3kHz. We found that if we did not mask out these higher frequencies, the WaveNet would become an identity function early in training and sound quality would not improve with further training. With $f_s$ denoting the sampling rate:

| (3) |

We get the oscillator phase for each time-step and harmonic with the cumulative summation operator along the time axis:

| (4) |

We then generate all harmonics with:

| (5) |

where the phase offset of each harmonic is randomised with values in $[-\pi, \pi]$. Finally we zero out the unvoiced part of the oscillator output by upsampling the UV sequence to the sample level with nearest-neighbour upsampling and broadcast-multiplying:

| (6) |
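The oscillator steps above (frequency mask, cumulative-sum phase, randomised phase offsets and UV gating) can be illustrated with a small pure-Python sketch. Several details are assumptions made for the example: frequencies are given in Hz and divided by the sampling rate inside the function, the cutoff is treated as inclusive, and a single `amps` array stands in for the product of the per-harmonic loudness distribution and the overall amplitude envelope.

```python
import math
import random


def additive_oscillator(freqs, amps, voicing, sample_rate,
                        cutoff_hz=3300.0, seed=0):
    """freqs, amps: [T][K] sample-level harmonic frequencies (Hz) and
    amplitudes. voicing: [T] sample-level 0/1 flags, i.e. the UV
    sequence after nearest-neighbour upsampling."""
    rng = random.Random(seed)
    num_harmonics = len(freqs[0])
    # One random phase offset per harmonic, drawn from [-pi, pi].
    phi = [rng.uniform(-math.pi, math.pi) for _ in range(num_harmonics)]
    phase = [0.0] * num_harmonics
    out = []
    for f_row, a_row, v in zip(freqs, amps, voicing):
        row = []
        for k in range(num_harmonics):
            # Running (cumulative) sum of the normalised frequency.
            phase[k] += 2.0 * math.pi * f_row[k] / sample_rate
            # Mask out harmonics above the cutoff frequency.
            keep = 1.0 if f_row[k] <= cutoff_hz else 0.0
            row.append(v * keep * a_row[k] * math.sin(phase[k] + phi[k]))
        out.append(row)
    return out


sr = 22050
T, K = 64, 40  # K is a hypothetical harmonic count for this example
freqs = [[200.0 * k for k in range(1, K + 1)] for _ in range(T)]
amps = [[1.0 / K] * K for _ in range(T)]
voicing = [1] * 32 + [0] * 32
harmonics = additive_oscillator(freqs, amps, voicing, sr)
```

With a 200Hz fundamental, harmonics 17 and above exceed 3.3kHz and are therefore silent in this sketch, and every harmonic is zero in the unvoiced second half.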

3.3 Noise Generator

The noise generator takes in its half of the encoder output and transforms it with a fully connected layer with modified sigmoid [1]. The result is upsampled with linear interpolation to the sample level to give the amplitude envelope for the noise. We then get the output with:

| (7) |

where is a learned parameter initialised with and . We then convolve with a 257 length impulse response with learnable parameters:

| (8) |
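As a rough illustration of the noise path, the sketch below scales noise by a sample-level envelope and then filters it with an impulse response. It is a simplification under explicit assumptions: unit-variance Gaussian noise is used in place of the learned parameter described above, the filter is applied causally, and the impulse response is a fixed list rather than a trained tensor.

```python
import random


def fir_filter(signal, impulse_response):
    """Causal FIR filter: y[t] = sum_j h[j] * x[t - j], same length as x."""
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for j, h in enumerate(impulse_response):
            if t - j < 0:
                break
            acc += h * signal[t - j]
        out.append(acc)
    return out


def shaped_noise(envelope, impulse_response, seed=0):
    """Envelope-scaled Gaussian noise followed by FIR filtering."""
    rng = random.Random(seed)
    # Assumption: standard normal noise, one draw per output sample.
    noise = [env * rng.gauss(0.0, 1.0) for env in envelope]
    return fir_filter(noise, impulse_response)


envelope = [0.0, 0.5, 1.0, 0.5]
identity_ir = [1.0] + [0.0] * 256  # 257 taps, passes the signal unchanged
y = shaped_noise(envelope, identity_ir, seed=1)
```

In the model the 257 taps are learnable, so the filter can colour the noise spectrum; the identity response here only makes the example easy to check.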

Table 1: MOS results for the analysis/synthesis experiment (Section 4.3.1).

| Model | MOS |
|---|---|
| MelGAN | 3.02 ± 0.08 |
| WaveGlow | 3.77 ± 0.07 |
| WaveRNN | 3.77 ± 0.07 |
| HooliGAN | 4.07 ± 0.06 |
| Ground Truth | 4.29 ± 0.06 |

3.4 WaveNet

We concatenate the stacked harmonics from the oscillator with shaped noise to get , the direct input to the WaveNet module. is upsampled with linear interpolation to and used as side-conditioning in the residual blocks.

We remove the gating function in favour of a simple tanh activation, similar to [2, 3], and also remove the residual skip collection. However, unlike NSF, where the WaveNet channels are reduced to 1 dimension at the output of each stack, we keep the number of channels constant throughout.

For the WaveNet hyperparameters we use 3 stacks, each with 10 layers. The convolutional layers have 64 channels and a kernel size of 5, with a dilation exponent of 2. We also note that this is approximately 2 times more computationally efficient than the NSF WaveNet, which has a total of 6 stacks.
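To give a sense of scale for these hyperparameters, the receptive field can be worked out with a few lines of Python. The calculation assumes that layer $l$ of each stack uses dilation $2^l$ and that the dilation pattern restarts at every stack, which is the usual WaveNet convention but is not spelled out in the text.

```python
def receptive_field(stacks, layers, kernel_size, dilation_base):
    """Receptive field (in samples) of stacked dilated convolutions."""
    # Assumption: dilations are base**0, base**1, ... within each stack.
    dilations = [dilation_base ** layer for layer in range(layers)]
    return 1 + stacks * (kernel_size - 1) * sum(dilations)


rf = receptive_field(stacks=3, layers=10, kernel_size=5, dilation_base=2)
rf_seconds = rf / 22050
print(rf, round(rf_seconds, 3))
```

Under these assumptions the receptive field is 12,277 samples, or roughly 0.56 seconds of audio at 22.05kHz.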

Finally we convolve the output of the WaveNet with a 257 length learned impulse response to get the predicted output:

| (9) |

While this component was originally designed to model reverberation via a long 1-2 second impulse response, early experiments had unwanted echo artifacts. However, with further tweaking we found that a much shorter impulse would help shape the frequency response in the output so we kept this component during development of the model.

3.5 Training Objectives

3.5.1 Spectral Loss

We use a multi-STFT (Short Time Fourier Transform) loss similar to DDSP [1] for both the output of the WaveNet and the WaveNet input where we sum along the stacked axis and add the noise to get a 1d signal:

| (10) |

Then we get the full multi-STFT loss by:

| (11) |

| (12) |

where is the ground truth audio and computes the magnitude of the STFT with FFT sizes and using 75% overlap.
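The general shape of a multi-resolution STFT loss can be sketched in pure Python as below. This is not the exact objective of Equations (11) and (12): the combination of an L1 distance on linear and log magnitudes follows the DDSP formulation [1] and is an assumption here, the Hann window is our choice, and the FFT sizes are small hypothetical values so that the naive DFT runs quickly.

```python
import cmath
import math


def stft_magnitude(signal, n_fft):
    """Magnitude STFT with a Hann window and 75% overlap (naive DFT)."""
    hop = n_fft // 4  # 75% overlap between consecutive frames
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / n_fft)
              for n in range(n_fft)]
    frames = []
    for start in range(0, len(signal) - n_fft + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(n_fft)]
        mags = []
        for k in range(n_fft // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / n_fft)
                      for n in range(n_fft))
            mags.append(abs(acc))
        frames.append(mags)
    return frames


def multi_stft_loss(pred, target, fft_sizes, eps=1e-5):
    """Sum over FFT sizes of mean L1 on linear and log magnitudes."""
    total = 0.0
    for n_fft in fft_sizes:
        p = stft_magnitude(pred, n_fft)
        q = stft_magnitude(target, n_fft)
        count = sum(len(row) for row in p)
        lin = sum(abs(a - b)
                  for pr, qr in zip(p, q) for a, b in zip(pr, qr))
        log = sum(abs(math.log(a + eps) - math.log(b + eps))
                  for pr, qr in zip(p, q) for a, b in zip(pr, qr))
        total += (lin + log) / count
    return total


x = [math.sin(2 * math.pi * 0.05 * n) for n in range(128)]
y = [math.sin(2 * math.pi * 0.11 * n) for n in range(128)]
sizes = (16, 32, 64)  # hypothetical FFT sizes for the example
same = multi_stft_loss(x, x, sizes)
diff = multi_stft_loss(x, y, sizes)
```

Because only magnitudes are compared, two signals whose harmonics differ in phase but not in level incur little penalty, which is the phase-agnostic behaviour this kind of loss is chosen for.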

3.5.2 Adversarial Losses

We use adversarial training similar to that described in [4, 5] and adapt unofficial open-source code (https://github.com/kan-bayashi/ParallelWaveGAN), using multiple MelGAN discriminators of the exact same architecture. To get the generator’s adversarial loss we use:

| (13) |

The generator’s feature matching loss , where denotes each convolutional layer of the discriminator model:

| (14) |

Our final generator loss , with and to prevent the multi-STFT loss overpowering the adversarial and feature-matching losses:

| (15) |

The discriminator loss is calculated with:

| (16) |

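Table 2: MOS results for Tacotron2 combined with each vocoder after finetuning on the 30 minute datasets (Section 4.3.3).

| Dataset | Vocoder Model | MOS |
|---|---|---|
| SpeakerA | WaveRNN | 4.10 ± 0.07 |
| SpeakerA | HooliGAN | 4.49 ± 0.05 |
| SpeakerB | WaveRNN | 3.18 ± 0.09 |
| SpeakerB | HooliGAN | 4.05 ± 0.06 |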

3.5.3 Training Schedule

First we pretrain the generator with only the multi-STFT loss for 100k steps similar to [5] after which we switch to the full adversarial loss ( and ). We use the RAdam optimiser [16] with a fixed learning rate throughout of for the generator and for the discriminator with and no weight-decay for both optimisers.

We train with a batch size of 16, with each training segment having a duration of 11,000 samples. The mel spectrograms have 80 frequency bins, a hop size of 12.5ms, a window of 50ms and an FFT size of 2048. We alter the F0 frames from Parselmouth/Praat such that they are centered like librosa’s spectrograms and have an equal hop size. We use a sampling rate of 22.05kHz. A single Nvidia RTX 2080 Ti card is utilised for all experiments and we train for between 500k and 1.5M steps.
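As a quick arithmetic check of this framing, the snippet below converts the stated hop and window durations to samples. The rounding down to whole samples is an assumption on our part; the text only gives the values in milliseconds.

```python
sample_rate = 22050
hop_seconds = 0.0125
window_seconds = 0.050
segment_samples = 11000

hop_exact = hop_seconds * sample_rate        # 275.625 samples
window_exact = window_seconds * sample_rate  # 1102.5 samples

# Assumption: the hop is rounded down to a whole number of samples.
hop_samples = int(hop_exact)
frames_per_segment = segment_samples // hop_samples
segment_seconds = segment_samples / sample_rate
```

With a 275 sample hop, an 11,000 sample training segment spans exactly 40 frames, or just under half a second of audio.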

3.6 Tacotron2 Modifications

Our text to acoustic model is Tacotron2 [14] which we modify as follows:

1. During training, we predict an F0 value along with each mel-spectrogram frame and re-scale the F0 to be in range [0, 1] by dividing by the maximum frequency parameter in the F0 estimation algorithm. During inference, we re-scale back to hertz and divide by the sample-rate to get the correct frequency value for the oscillator.

2. The predicted F0 bypasses the prenet and instead is concatenated to the prenet output and then reshaped with a fully connected layer before entering the decoder RNN.

3. The training objective is altered to become: (17) where is the mel spectrogram and .
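The F0 re-scaling in the first modification can be illustrated with the short sketch below. The 600Hz ceiling and the function names are hypothetical values chosen for the example; the text only says that the maximum frequency parameter of the F0 estimator is used.

```python
F0_MAX_HZ = 600.0    # hypothetical ceiling of the F0 estimator
SAMPLE_RATE = 22050


def f0_to_target(f0_hz):
    """Training: scale F0 in hertz to the range [0, 1]."""
    return f0_hz / F0_MAX_HZ


def target_to_oscillator(f0_scaled):
    """Inference: back to hertz, then to cycles per sample."""
    return f0_scaled * F0_MAX_HZ / SAMPLE_RATE


scaled = f0_to_target(300.0)
normalised = target_to_oscillator(scaled)
```

Unvoiced frames, which carry an F0 of 0, map to 0 in both directions, so the voicing decision survives the round trip.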

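Table 3: MOS results for text-to-speech vocoding with Tacotron2 trained on LJSpeech (Section 4.3.2).

| Model | MOS |
|---|---|
| WaveRNN | 3.65 ± 0.08 |
| HooliGAN | 4.18 ± 0.07 |
| Ground Truth | 4.28 ± 0.06 |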

4 Experiments

4.1 Datasets

We use four English language datasets in total for testing sound-quality:

1. LJSpeech [17]: a 24 hour single-speaker female dataset featuring Linda Johnson from LibriVox with 13,100 transcribed utterances.

2. VCTK [18]: a multi-speaker dataset with 109 unique speakers. Each speaker reads approximately 400 sentences. We downsample from 48kHz to 22.05kHz for our experiments.

3. SpeakerA: a 30 minute proprietary single-speaker female dataset with 582 utterances.

4. SpeakerB: a 30 minute proprietary single-speaker male dataset with 500 utterances.

4.2 MOS Design

For each experiment we recruit 30 workers from Amazon Mechanical Turk (AMT) for a Mean Opinion Score (MOS) study. We require that the workers have AMT’s high-performance “Master” status and live in English-speaking countries. We evaluate each model in each experiment with 20 samples. In order to avoid the “louder sounds better” perceptual bias we normalise all audio to -23 LUFS using the EBU R128 loudness standard (https://tech.ebu.ch/docs/r/r128-2014.pdf).
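For readers who want to reproduce scores of the form "mean ± interval", a minimal sketch is given below. It assumes that the ± values are 95% confidence intervals computed with a normal approximation; the text does not state how the intervals were obtained, and the ratings in the example are made up.

```python
import math
import statistics


def mos_with_interval(ratings, z=1.96):
    """Return the mean opinion score and a normal-approximation
    confidence half-width (z=1.96 corresponds to 95%)."""
    mean = statistics.mean(ratings)
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mean, half_width


ratings = [5, 4, 4, 3, 5, 4, 4, 5, 3, 4]  # made-up 1-5 ratings
mos, ci = mos_with_interval(ratings)
print(f"{mos:.2f} ± {ci:.2f}")
```

The half-width shrinks with the square root of the number of ratings, so studies with more ratings per model report tighter intervals for the same spread of opinions.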

4.3 Experiment Setup

To test the proposed model’s sound-quality we design four experiments.

4.3.1 Analysis / Synthesis

We compare the ability of the proposed model to invert ground-truth acoustic features against WaveRNN, MelGAN and WaveGlow. For MelGAN and WaveGlow we use the pretrained models released by Descript and Nvidia on PyTorch Hub (https://pytorch.org/hub/). For WaveRNN we use the pretrained Mixture of Logistics (MOL) model available in our GitHub repository (https://github.com/fatchord/WaveRNN). All pretrained models are trained on the LJSpeech dataset [17]. Since we cannot fully control the train/validation/test split of all these pretrained models, we need to ensure that the evaluation data we use was not seen by the models during training. To this end, we gather some recordings of Linda Johnson that were published after the release of the original LJSpeech dataset (https://librivox.org/the-great-events-by-famous-historians-volume-3-by-charles-f-horne/). We note that these newer recordings have an almost identical recording quality to those in the original dataset.

In Table 1 we see that HooliGAN achieves a leading MOS of 4.07. While the output is clear and high-quality, we do notice that the transients can sometimes be too short, with a click-like quality. We also note that both WaveRNN and WaveGlow have a “smeared” characteristic in their outputs, most likely because those models are trained directly on discrete waveforms. We surmise that MelGAN performed poorly mainly because its generator network is under-powered and its receptive field too small.

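Table 4: Parameter counts and inference speed (audio samples generated per second) on CPU and GPU.

| Model | Parameters | CPU speed | GPU speed |
|---|---|---|---|
| WaveGlow | 87.9M | 6kHz | 155kHz |
| WaveRNN | 4.5M | 20kHz | 43kHz |
| HooliGAN | 1.3M | 35kHz | 2.2MHz |
| MelGAN | 4.3M | 272kHz | 3.6MHz |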

4.3.2 Text-to-Speech Vocoding

In the second experiment, we test the ability to invert acoustic features predicted by Tacotron2 trained on LJSpeech. We pick sentences from the same evaluation data in Section 4.3.1. Table 3 summarises the results with HooliGAN outperforming WaveRNN by a wide margin. We also note that the HooliGAN MOS is quite close to that of ground truth in this experiment.

4.3.3 Finetuning on Small Datasets

In the third experiment, we compare the combination of Tacotron2 with the proposed model and WaveRNN when finetuning on datasets with only 30 minutes of data. We pretrain Tacotron2 and the vocoder models with VCTK and finetune afterwards, picking the best performing checkpoints for all models.

In Table 2 we see again that HooliGAN outperforms WaveRNN. While there is an improvement for both speakers, we note that the improvement is larger for the male speaker. We conclude that having an explicit pitch signal feeding the vocoder is more important for male voices than female as the harmonics tend to be too compressed in mel spectrograms from male voices.

4.3.4 Inference Speed

Finally, we test the inference speed of all models in this paper for both CPU and GPU with standard, non-optimised PyTorch [19] code. In Table 4 we see that MelGAN clearly outperforms all models. However, HooliGAN still performs respectably: while it has fewer parameters than MelGAN, the deeply-stacked nature of WaveNet limits overall speed. WaveRNN is slowed down by its auto-regressive generation and WaveGlow’s theoretically fast parallel generation is limited by high complexity from the large parameter count.
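The speeds in Table 4 are easier to interpret as real-time factors, i.e. generated samples per second divided by the sampling rate. The short calculation below does this conversion, assuming that every model generates audio at 22.05kHz.

```python
SAMPLE_RATE = 22050  # assumption: all models output 22.05kHz audio

# (CPU, GPU) generation speed in samples per second, from Table 4.
speeds = {
    "WaveGlow": (6e3, 155e3),
    "WaveRNN": (20e3, 43e3),
    "HooliGAN": (35e3, 2.2e6),
    "MelGAN": (272e3, 3.6e6),
}


def realtime_factor(samples_per_second, sample_rate=SAMPLE_RATE):
    """Seconds of audio generated per second of compute."""
    return samples_per_second / sample_rate


for name, (cpu, gpu) in speeds.items():
    print(f"{name}: CPU {realtime_factor(cpu):.2f}x, "
          f"GPU {realtime_factor(gpu):.1f}x")
```

Under this assumption HooliGAN runs at about 1.6 times real time on CPU and about 100 times real time on GPU, while WaveGlow and WaveRNN fall below real time on CPU.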

5 Conclusions and Future Work

As the outlined experiments show, HooliGAN outperforms all tested models in naturalness by a large margin across a variety of testing scenarios. We conclude that the source excitation method combined with traditional DSP techniques not only reduces the complexity of the model, but also improves the overall sound quality. In future work we will explore ways to better model transients, investigate other methods of source excitation to further reduce complexity, explicitly model background noise with DSP components and raise the sampling rate to CD-quality 44.1kHz.

6 Acknowledgements

We would like to thank Jeremey Hsu, Corentin Jemine, John Meade, Zihan Jin, Zak Semenov, Aditya Tirumala Bukkapatnam, Haris Khan, Tedi Papajorgji and Saqib Muhammad from Resemble AI for their feedback and support.

References

- [1] J. Engel, L. Hantrakul, C. Gu, and A. Roberts, “DDSP: Differentiable digital signal processing,” 2020.

- [2] X. Wang, S. Takaki, and J. Yamagishi, “Neural source-filter waveform models for statistical parametric speech synthesis,” 2019.

- [3] X. Wang and J. Yamagishi, “Neural harmonic-plus-noise waveform model with trainable maximum voice frequency for text-to-speech synthesis,” 2019.

- [4] K. Kumar, R. Kumar, T. de Boissiere, L. Gestin, W. Z. Teoh, J. Sotelo, A. de Brebisson, Y. Bengio, and A. Courville, “MelGAN: Generative adversarial networks for conditional waveform synthesis,” 2019.

- [5] R. Yamamoto, E. Song, and J.-M. Kim, “Parallel WaveGAN: A fast waveform generation model based on generative adversarial networks with multi-resolution spectrogram,” 2019.

- [6] A. van den Oord, S. Dieleman, H. Zen, K. Simonyan, O. Vinyals, A. Graves, N. Kalchbrenner, A. Senior, and K. Kavukcuoglu, “WaveNet: A generative model for raw audio,” 2016.

- [7] N. Kalchbrenner, E. Elsen, K. Simonyan, S. Noury, N. Casagrande, E. Lockhart, F. Stimberg, A. van den Oord, S. Dieleman, and K. Kavukcuoglu, “Efficient neural audio synthesis,” 2018.

- [8] J.-M. Valin and J. Skoglund, “LPCNet: Improving neural speech synthesis through linear prediction,” 2018.

- [9] Z. Jin, A. Finkelstein, G. J. Mysore, and J. Lu, “FFTNet: A real-time speaker-dependent neural vocoder,” in The 43rd IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Apr. 2018.

- [10] A. van den Oord, Y. Li, I. Babuschkin, K. Simonyan, O. Vinyals, K. Kavukcuoglu, G. van den Driessche, E. Lockhart, L. C. Cobo, F. Stimberg, N. Casagrande, D. Grewe, S. Noury, S. Dieleman, E. Elsen, N. Kalchbrenner, H. Zen, A. Graves, H. King, T. Walters, D. Belov, and D. Hassabis, “Parallel WaveNet: Fast high-fidelity speech synthesis,” 2017.

- [11] R. Prenger, R. Valle, and B. Catanzaro, “WaveGlow: A flow-based generative network for speech synthesis,” 2018.

- [12] W. Ping, K. Peng, and J. Chen, “ClariNet: Parallel wave generation in end-to-end text-to-speech,” 2018.

- [13] Y. Wang, R. Skerry-Ryan, D. Stanton, Y. Wu, R. J. Weiss, N. Jaitly, Z. Yang, Y. Xiao, Z. Chen, S. Bengio, Q. Le, Y. Agiomyrgiannakis, R. Clark, and R. A. Saurous, “Tacotron: Towards end-to-end speech synthesis,” 2017.

- [14] J. Shen, R. Pang, R. J. Weiss, M. Schuster, N. Jaitly, Z. Yang, Z. Chen, Y. Zhang, Y. Wang, R. Skerry-Ryan, R. A. Saurous, Y. Agiomyrgiannakis, and Y. Wu, “Natural TTS synthesis by conditioning WaveNet on mel spectrogram predictions,” 2017.

- [15] A. L. Maas, “Rectifier nonlinearities improve neural network acoustic models,” 2013.

- [16] L. Liu, H. Jiang, P. He, W. Chen, X. Liu, J. Gao, and J. Han, “On the variance of the adaptive learning rate and beyond,” 2019.

- [17] K. Ito, “The LJ Speech dataset,” https://keithito.com/LJ-Speech-Dataset/, 2017.

- [18] C. Veaux, J. Yamagishi, and K. MacDonald, “English multi-speaker corpus for CSTR voice cloning toolkit.” [Online]. Available: http://homepages.inf.ed.ac.uk/jyamagis/page3/page58/page58.html

- [19] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Kopf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, and S. Chintala, “PyTorch: An imperative style, high-performance deep learning library,” in Advances in Neural Information Processing Systems 32, H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, Eds. Curran Associates, Inc., 2019, pp. 8024–8035. [Online]. Available: http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf