Hovering Over the Key to Text Input in XR

Affiliations: 1 Google, USA. 2 University of Birmingham, UK. 3 University of Copenhagen, Denmark. 4 Arizona State University, USA. 5 Northwestern University, USA. Equal contribution.

Abstract

Virtual, Mixed, and Augmented Reality (XR) technologies hold immense potential for transforming productivity beyond the PC, which creates a critical need for better text input solutions in XR. However, achieving efficient text input in these environments remains a significant challenge. This paper examines the current landscape of XR text input techniques, focusing on the importance of keyboards (both physical and virtual) as essential tools. We discuss the unique challenges and opportunities presented by XR and synthesize key trends from existing solutions.

Index Terms:

Input Techniques, Keyboard, Text Entry, Extended Reality (XR), Virtual Reality, Spatial Computing

I Introduction

Keyboards remain the primary tool for efficient text input across personal computers and mobile devices. However, achieving comparably efficient text entry in XR environments has proven to be a significant challenge. Existing solutions are either inefficient, have limited accuracy, or require cumbersome physical setups. Without proper text input methods in XR, the development of productivity tools, immersive metaverse experiences, and potential killer apps for super-productivity remains hindered [1].

The unique challenges of XR environments necessitate tailored approaches. Technical requirements, such as high-resolution displays and accurate finger tracking, are not always met and can impede traditional input methods. Interestingly, the term “Metaverse”, popularized by Neal Stephenson’s “Snow Crash”, has an intriguing connection to keyboards. The Metaverse originally signified a space dominated by those proficient with the “Meta” key, a modifier key like the modern Ctrl, Shift, or Alt, which first appeared on the Stanford Artificial Intelligence Lab (SAIL) keyboard in 1970, marked by a black diamond (see http://en.wikipedia.org/w/index.php?title=Meta%20key). This historical link hints at the potential for innovative XR text entry methods to augment productivity in ways that extend beyond traditional written input and document creation. Maybe the real Meta-verse is just a keyboard-enabled XR.

This paper synthesizes the aspects that have been consistently explored as solutions, and those identified as challenges, in this field. We do so by surveying part of the growing body of research on novel XR text input techniques.

II Design Considerations

From the very beginning, the goal of keyboard design has been to let users enter text into a computer system by pressing keys as quickly and accurately as possible, by optimizing the spatial layout and by exploiting the sound and haptics of mechanical keys. In a way this is a legacy solution, as keyboards predate the digital world and go as far back as mechanical typewriters. With modern sensing and machine learning (ML) capabilities, text input has evolved significantly, and keyboards can change in shape and form and adapt to user needs [2].

Similar progress can be traced in text input for XR. In their 1997 paper, Mine et al. noted the difficulty of text entry in virtual environments [3]. One of the earliest attempts to address this challenge was VType [4], which used a commercial glove to track all ten fingers, mapped multiple characters to each finger, and relied on statistical analysis to disambiguate characters. Twenty-five years later, effective text entry remains an open challenge in consumer XR.

Currently, average typists who use ten fingers (touch typing) on a PC can achieve 40-60 words per minute (WPM), with peaks of 80 WPM, while hunt-and-peck typists achieve 27-37 WPM [5].

Several quantitative metrics beyond WPM also come into play, such as N-key rollover (independent recognition of simultaneous keypresses), throughput, and correctable typing errors (the percentage of word errors corrected by a language model, with character edit distance as a measure of correction accuracy). It is also important to track other typing errors, such as incorrect key registrations, multiple registrations from a single press, and missed key presses. Subjective metrics, such as the NASA Task Load Index, can offer insights into users’ cognitive workload. When WPM is very low, users tend to fall back on voice-to-text dictation [6], despite the privacy and throughput limitations of voice.
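As a rough illustration of two of these metrics, the sketch below computes WPM and a character-level error rate from a presented phrase and its transcription. It assumes the common text-entry convention that one word equals five characters and that timing starts with the first keystroke; the function names are illustrative and not taken from any cited work.

```python
def words_per_minute(transcribed: str, seconds: float) -> float:
    """WPM under the convention that one word is five characters."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    # The first keystroke starts the timer, so only len - 1 characters are timed.
    return (len(transcribed) - 1) / seconds * 60.0 / 5.0


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance (insertions, deletions, substitutions)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution or match
        previous = current
    return previous[-1]


def character_error_rate(presented: str, transcribed: str) -> float:
    """Edit distance normalised by the longer string, as a percentage."""
    longest = max(len(presented), len(transcribed))
    if longest == 0:
        return 0.0
    return 100.0 * edit_distance(presented, transcribed) / longest


if __name__ == "__main__":
    presented = "the quick brown fox"
    transcribed = "the quick brwn fox"  # one missing character
    print(words_per_minute(transcribed, seconds=6.0))
    print(character_error_rate(presented, transcribed))
```

Normalising by the longer string keeps the error rate between 0 and 100 even when the transcription is longer than the presented phrase.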

Across the board, XR text input performs worse than on a PC: virtual keyboards easily drop to 5-10 WPM, and even with physical keyboards users in VR retain only 60% of their typing speed and 80% of their accuracy [7]. One reason could be that the technical requirements for text entry in VR are stringent, especially for display resolution, field of view (FOV), and finger tracking. The complex technology stack that XR requires therefore calls for particular design considerations.

II-A Display Resolution

Until very recently it was hard to read any text on commercial head-mounted displays (HMDs), much less to write it. In 2017, VR displays usually had resolutions of 500-600 pixels per inch (PPI). By 2023, most consumer displays had reached 1200 PPI, although LCDs in laboratories had achieved about 2000 PPI [8]. Sufficiently large MicroOLED displays with higher PPI have only appeared in 2024 with the Apple Vision Pro, which has 3386 PPI. As of May 2024, LG has presented similar advances in MicroOLED, with up to 4000 PPI.

II-B Field of View

While XR headsets often advertise wide fields of view or FOV (e.g., H V), they still fall short when compared to the full extent of human vision (H V). Human peripheral vision often captures the user’s own body, but this is not available in XR, even though a larger FOV would be especially valuable for embodied interactions in XR [9].

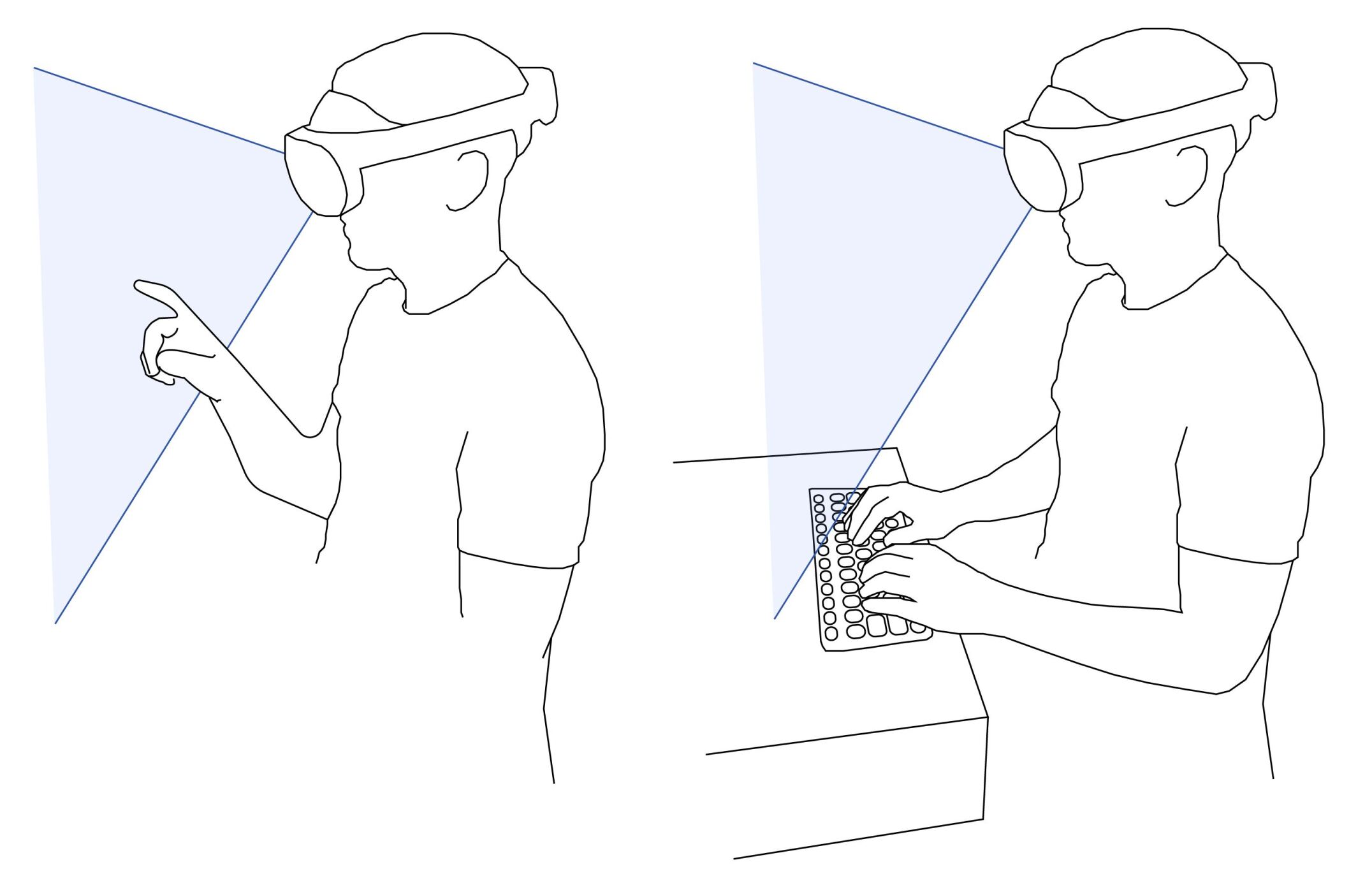

When using a physical keyboard in XR (either in passthrough or with a virtual proxy), the limited vertical FOV of current HMDs means that users often cannot see both the keyboard and the virtual content at the same time (Figure 1). Furthermore, the light seals of most HMDs prevent users from viewing their hands or keyboard by glancing below the display. Overall, this lack of “glanceability” for physical keyboards in XR reduces ergonomics and potentially hinders productivity.

II-C Finger Tracking and Typing

While virtual keyboards offer a promising solution to this, tracking fingers and surfaces from the oblique, occlusion-prone, egocentric views provided by headset-mounted cameras remains technically challenging [10]. Finger tracking from an egocentric perspective (with the camera mounted on the head) often fails precisely at the moment of surface touch. This problem is difficult to solve because of self-occlusion, where the back of the hand hides the fingers at certain hand orientations. Although many researchers are developing workarounds for text entry on surfaces in VR, it is still an unsolved problem [10, 11].

Without reliable finger tracking, we end up with typing solutions that often rely on only two fingers (‘hunt-and-peck’), which is slower and less accurate than using all ten fingers [12, 5].

The hunt-and-peck method involves searching for keys with one finger from each hand, often looking at the keyboard instead of the content that’s being written. While seemingly easier at first, it is ultimately inefficient and dramatically limits typing speed.

In contrast, ‘touch typing’ involves using all ten fingers, each assigned specific keys and resting on a “home row” [12]. Even when not an expert touch typist, using more fingers and relying less on looking at the keyboard allows one to focus on the content, reduce exertion, and type faster with fewer errors [5].

Up to now, the majority of text input methods for XR have fallen under the category of hunt-and-peck, either using direct touch with two index fingers or indirect raycasting from hands or controllers [13]. Expanding to support multi-finger text input has the potential to greatly increase throughput, though technical hurdles such as handling self-occlusion and better finger accuracy during hand tracking must still be resolved. Furthermore, we expect that the benefits of ten-finger typing may be more evident in certain scenarios, such as when typing on a surface-aligned keyboard.

II-D Hand Representations

While expert touch typists may rely less on visual cues, the majority of typists (even if proficient) tend to look at their keyboard at least occasionally during productivity sessions [14]. Furthermore, inexperienced typists using the ‘hunt-and-peck’ method heavily rely on visual feedback of both their hands and keyboard. The process of mastering a keyboard necessitates acquiring sensorimotor expertise through the integration of multiple sensory inputs. Visualizing the keyboard aids in learning the spatial arrangement of keys, while seeing the hands helps guide and maintain proper finger placement [15]. Tactile feedback from the keys confirms successful presses and reinforces alignment, while auditory clicks offer additional confirmation [16]. Finally, the visualized text output provides feedback on typing accuracy, closing the feedback loop [5].

Research suggests [15] that experienced typists can achieve comparable or even faster typing speeds (WPM) on a physical keyboard when hands are represented abstractly, which reduces visual occlusion. However, productivity drops significantly and mental workload increases when hand tracking is unavailable [15]. This highlights the critical role hand representations play in XR keyboard interactions [17], especially for novice or less-skilled typists.

II-E Flexible Keyboard Layouts

XR presents a unique opportunity to reimagine how we view and interact with our keyboard. With XR, it is possible to scale, duplicate, offset, or otherwise augment the displayed keyboard. We believe this presents an opportunity to potentially enhance the text entry experience.

For example, as mentioned in Section II-B, typing on a physical keyboard in limited-FOV XR creates an ergonomic problem because users must choose between looking down at their keyboard or up at the content. One potential solution is to duplicate the keyboard: one instance anchored to a physical keyboard (or tabletop) for tactile feedback and optimal hand positioning, and a second, visually re-projected instance positioned closer to the content. Such duplication could foster more robust motor control through cross-modal binding, which is crucial because typing is a motor control task. This approach may align with active inference frameworks, potentially minimizing prediction errors for a smoother typing experience [18].
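One way such a duplicated keyboard could be driven is sketched below: a tracked fingertip is expressed in the local frame of the physical keyboard and then redrawn in the frame of the re-projected copy, so that the hand representation stays aligned with the duplicate. This is a minimal sketch under stated assumptions; `KeyboardFrame`, `reproject`, and the example poses are hypothetical and do not correspond to any particular XR SDK.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass(frozen=True)
class KeyboardFrame:
    """Pose of a keyboard plane (hypothetical structure for illustration)."""
    origin: Vec3        # world position of a reference corner, in metres
    right: Vec3         # unit vector along the key rows
    up: Vec3            # unit vector across the rows, away from the user
    normal: Vec3        # unit vector pointing out of the key surface
    scale: float = 1.0  # displayed size relative to the physical keyboard


def to_local(frame: KeyboardFrame, point: Vec3) -> Vec3:
    """Express a world-space point in keyboard coordinates."""
    offset = (point[0] - frame.origin[0],
              point[1] - frame.origin[1],
              point[2] - frame.origin[2])
    return (_dot(offset, frame.right) / frame.scale,
            _dot(offset, frame.up) / frame.scale,
            _dot(offset, frame.normal) / frame.scale)


def to_world(frame: KeyboardFrame, local: Vec3) -> Vec3:
    """Map keyboard coordinates back into world space."""
    x, y, z = local
    return (
        frame.origin[0] + frame.scale * (x * frame.right[0] + y * frame.up[0] + z * frame.normal[0]),
        frame.origin[1] + frame.scale * (x * frame.right[1] + y * frame.up[1] + z * frame.normal[1]),
        frame.origin[2] + frame.scale * (x * frame.right[2] + y * frame.up[2] + z * frame.normal[2]),
    )


def reproject(point: Vec3, physical: KeyboardFrame, proxy: KeyboardFrame) -> Vec3:
    """Where to draw a fingertip tracked over the physical keyboard on the proxy."""
    return to_world(proxy, to_local(physical, point))


if __name__ == "__main__":
    # Physical keyboard lying flat on a table; proxy upright near the content, twice as large.
    physical = KeyboardFrame((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 1.0, 0.0))
    proxy = KeyboardFrame((0.0, 1.0, 2.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, -1.0), scale=2.0)
    print(reproject((0.1, 0.02, 0.05), physical, proxy))
```

Keeping the height above the keys (the normal component) in the mapping means the re-projected fingertip still appears to hover and land on the duplicate, rather than being flattened onto it.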

II-F Other considerations

Additionally, proprioception, task agency, and embodiment significantly impact the keyboard experience. Social acceptability (from both first-person and observer perspectives) should be considered as well. Finally, other design considerations such as ergonomic factors are crucial, including mental and physical fatigue, haptic feedback, and device availability.

III Opportunities for Keyboards in XR

III-A The Case for a Physical Keyboard

Physical keyboards offer distinct haptic feedback, familiar layouts, and the potential for high-throughput text input. In XR, keyboard representations often rely on either 3D tracking (e.g., Quest [19]) or passthrough visualization [20]. However, physical keyboards might not always be available, and they are also subject to some of the design considerations mentioned earlier, such as FOV, hand representations, and passthrough needs.

III-B The Need for a Virtual Keyboard

Virtual keyboards eliminate the need for external hardware and offer greater portability. However, virtual keyboards are associated with slow typing speeds (as low as 5 to 10 WPM, as noted in Section II).

III-B1 Mid-air Virtual Keyboards

Mid-air virtual keyboards are a conceptually intuitive and widely explored approach for text input in XR. They often mimic traditional keyboard layouts (e.g., QWERTY) and are positioned within the user’s field of view. Input typically involves hand-based pointing [21], controller-based pointing [22], or eye gaze [22, 23] for key selection. The appeal of this approach lies in its relative simplicity, potential for leveraging existing user familiarity, and minimal hardware requirements beyond standard XR devices.

However, mid-air virtual keyboards also face significant challenges. The absence of physical surfaces and haptic feedback makes precise key targeting difficult, leading to higher error rates [24]. Extended use frequently causes fatigue and discomfort in the arms and shoulders, particularly with hand-based input [25]. This lack of physicality and the reliance on ‘hunt-and-peck’ style interactions severely limit typing speed compared to traditional keyboards [26]. Additionally, mid-air keyboards can obstruct the virtual environment, potentially hindering immersion and task performance.

However, it is also possible that we will see a shift in the way users interact with mid-air keyboards. This could involve methods inspired by swipe-based smartphone typing [27], such as the work by Dudley et al. [28]; novel ergonomic raycast techniques tailored to XR, akin to the control-display (CD) gain of a mouse [29]; or even entirely new interaction modalities that capitalize on the unique sensing and ML capabilities of future XR devices.

III-B2 Surface-anchored Virtual Keyboards

A growing trend in XR text input is the use of surface-anchored virtual keyboards. The main advantages of leveraging surfaces are better ergonomics and additional haptic feedback [25]. Surfaces are not limited to tables: they range from dedicated wearables like watches [30] to the user’s own body, including fingernails [31] or fingertips [32]. By making use of a physical surface, these techniques offer some inherent haptic feedback, enhancing accuracy and reducing fatigue compared to mid-air keyboards [30]. The potential for compact, faster, and even discreet text entry makes surface-anchored solutions appealing in situations where traditional keyboards are impractical.

III-B3 ML-enabled Keyboards

In virtual keyboards, “tap detection” identifies individual key selections by the user. However, robust raw tap detection has proved challenging, so XR keyboards that rely solely on tap detection tend to be slow and prone to errors.
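To make the difficulty concrete, the sketch below shows a deliberately simple tap detector that thresholds the estimated fingertip height above a surface. It is an illustrative assumption rather than the method of any cited system: the function name, the millimetre thresholds, and the sample trace are all hypothetical. With a single threshold, tracking noise around the contact point registers one press several times; adding a separate release threshold (hysteresis) suppresses the duplicates but does nothing for missed or misplaced taps.

```python
from typing import List, Sequence


def detect_taps(heights_mm: Sequence[float],
                press_mm: float = 2.0,
                release_mm: float = 6.0) -> List[int]:
    """Return the sample indices at which a tap is registered.

    A tap fires when the fingertip drops to or below press_mm, and the
    detector re-arms only after the fingertip rises to or above release_mm.
    """
    if release_mm < press_mm:
        raise ValueError("release_mm must not be below press_mm")
    taps: List[int] = []
    pressed = False
    for index, height in enumerate(heights_mm):
        if not pressed and height <= press_mm:
            pressed = True
            taps.append(index)
        elif pressed and height >= release_mm:
            pressed = False
    return taps


if __name__ == "__main__":
    # Hypothetical fingertip heights: two intended taps, with jitter during the first.
    trace = [10.0, 5.0, 1.5, 2.5, 1.8, 7.0, 10.0, 1.0, 8.0]
    print(detect_taps(trace))                  # with hysteresis
    print(detect_taps(trace, release_mm=2.0))  # single threshold
```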

Machine learning (ML) decoding models offer a solution by analyzing sequences of taps rather than individual inputs. These models consider statistical patterns of language and user input behavior, enabling them to correct likely typos, predict words, and personalize suggestions [33, 34]. This significantly enhances accuracy and speeds up text entry, mirroring the transformative impact of predictive models such as those used by Gboard for smartphone typing.

Probabilistic language models (PLMs) like Bayesian Neural Networks (BNNs) are particularly well-suited for this task [35]. Their ability to manage uncertainty in tap data is crucial in real-world XR scenarios where environmental factors and varying user behavior might lead to noisy or ambiguous input. By distributing probabilities across potential interpretations, BNNs increase the accuracy of the text decoding process.
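The general idea of distributing probability across interpretations can be sketched with a much simpler model than a BNN: a Gaussian touch model combined with a word prior through Bayes' rule. The example below is a toy illustration and not the decoder of any cited system; the key layout, the `sigma` value, and the three-word lexicon with its probabilities are assumptions made up for the example.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]

# Approximate QWERTY key centres in key-width units (row stagger is an assumption).
ROWS = [("qwertyuiop", 0.0), ("asdfghjkl", 0.25), ("zxcvbnm", 0.75)]
KEY_CENTRES: Dict[str, Point] = {
    letter: (column + offset, float(row))
    for row, (letters, offset) in enumerate(ROWS)
    for column, letter in enumerate(letters)
}


def tap_log_likelihood(tap: Point, letter: str, sigma: float) -> float:
    """Log of an isotropic Gaussian centred on the key (constant term dropped)."""
    cx, cy = KEY_CENTRES[letter]
    squared = (tap[0] - cx) ** 2 + (tap[1] - cy) ** 2
    return -squared / (2.0 * sigma ** 2)


def nearest_keys(taps: Sequence[Point]) -> str:
    """Baseline: pick the closest key for every tap independently."""
    return "".join(
        min(KEY_CENTRES, key=lambda letter: math.dist(tap, KEY_CENTRES[letter]))
        for tap in taps
    )


def decode(taps: Sequence[Point], lexicon: Dict[str, float],
           sigma: float = 0.5) -> List[Tuple[str, float]]:
    """Rank lexicon words by log prior plus summed tap log-likelihoods."""
    scored = []
    for word, prior in lexicon.items():
        if len(word) != len(taps):
            continue  # this toy decoder ignores insertions and omissions
        score = math.log(prior) + sum(
            tap_log_likelihood(tap, letter, sigma) for tap, letter in zip(taps, word)
        )
        scored.append((word, score))
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    lexicon = {"hit": 0.02, "hot": 0.03, "hut": 0.005}  # made-up word priors
    taps = [(5.25, 1.0), (6.45, 0.0), (4.0, 0.0)]       # second tap falls between u and i
    print(nearest_keys(taps))
    print(decode(taps, lexicon)[0][0])
```

In this example the second tap lands slightly closer to `u` than to `i`, so independent nearest-key decoding and the sequence-level decoder can disagree: the word prior is allowed to outweigh a small spatial difference.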

Beyond PLMs, other ML approaches hold promise. Recurrent Neural Networks (RNNs) are adept at analyzing sequential data and capturing dependencies between taps [36]. When combined with Convolutional Neural Networks (CNNs), they can analyze both temporal and spatial patterns of taps [37], potentially enhancing accuracy in scenarios where precise tap location is informative (e.g., surface-based keyboards). As AI and large language models advance, their integration with XR text input becomes increasingly compelling. Techniques like “sensor tap-to-language token embedding” [38] could bridge the gap between raw input and sophisticated language models, leading to further breakthroughs in intuitive and efficient XR text input experiences.

IV Discussion and Conclusions

Good UI and interaction tools help us learn the limits of a technology. On a PC, a rarely used function key or shortcut beyond Ctrl+C is currently very hard to discover or learn. But a keyboard inside XR is, in the end, a software-designed UI that can facilitate that learning through contextual augmentation. The learning might then, surprisingly, transfer back to the PC and other less immersive devices. So we are not just augmenting the keyboard but augmenting the human.

The diverse use cases of XR, ranging from office work to gaming, entertainment, and on-the-go applications, paired with the rapid advances of XR technologies, introduce new interaction possibilities and challenges for text input.

This paper has showcased the main trends and challenges we have observed when exploring the field of text input and productivity in XR.

Experts in the real world unequivocally recommend learning touch typing. Although investing in touch typing might seem like extra effort, it is a valuable skill that pays off in the long run, saving time, improving accuracy, and boosting overall productivity. When it comes to XR typing, however, there are many choices and no clear recommendation.

Perhaps there is no single solution to text entry in XR. We may need one solution for physical keyboards and another for virtual keyboards, chosen depending on whether surfaces are available, together with improved ML algorithms and optimized typing assisted by existing devices such as phones or watches.

References

- [1] M. Gonzalez-Franco and A. Colaco, “Guidelines for productivity in virtual reality,” ACM Interactions Magazine, 2024.

- [2] L. Findlater and J. Wobbrock, “Personalized input: improving ten-finger touchscreen typing through automatic adaptation,” in ACM CHI, 2012, pp. 815–824.

- [3] M. R. Mine, F. P. Brooks Jr, and C. H. Sequin, “Moving objects in space: exploiting proprioception in virtual-environment interaction,” in 24th conference on Computer graphics and interactive techniques, 1997, pp. 19–26.

- [4] F. Evans, S. Skiena, and A. Varshney, “Vtype: Entering text in a virtual world,” International Journal of Human-Computer Studies, 1999.

- [5] A. M. Feit, D. Weir, and A. Oulasvirta, “How we type: Movement strategies and performance in everyday typing,” in ACM CHI, 2016, pp. 4262–4273.

- [6] S. Ruan, J. O. Wobbrock, K. Liou, A. Ng, and J. A. Landay, “Comparing speech and keyboard text entry for short messages in two languages on touchscreen phones,” ACM IMWUT, vol. 1, no. 4, pp. 1–23, 2018.

- [7] T. J. Dube and A. S. Arif, “Text entry in virtual reality: A comprehensive review of the literature,” in HCII 2019. Springer, 2019, pp. 419–437.

- [8] Y.-H. Wu, C.-H. Tsai, Y.-H. Wu, Y.-S. Cherng, M.-J. Tai, P. Huang, I.-A. Yao, and C.-L. Yang, “Breaking the limits of virtual reality display resolution: the advancements of a 2117-pixels per inch 4k virtual reality liquid crystal display,” Journal of Optical Microsystems, vol. 3, no. 4, p. 041208, 2023.

- [9] K. Nakano, N. Isoyama, D. Monteiro, N. Sakata, K. Kiyokawa, and T. Narumi, “Head-mounted display with increased downward field of view improves presence and sense of self-location,” IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 11, pp. 4204–4214, 2021.

- [10] Y. Gu, C. Yu, Z. Li, Z. Li, X. Wei, and Y. Shi, “Qwertyring: Text entry on physical surfaces using a ring,” ACM IMWUT, vol. 4, no. 4, pp. 1–29, 2020.

- [11] C. Liang, X. Wang, Z. Li, C. Hsia, M. Fan, C. Yu, and Y. Shi, “Shadowtouch: Enabling free-form touch-based hand-to-surface interaction with wrist-mounted illuminant by shadow projection,” in ACM UIST, 2023.

- [12] M. Rieger, “Motor imagery in typing: effects of typing style and action familiarity,” Psychonomic Bulletin & Review, vol. 19, pp. 101–107, 2012.

- [13] M. Speicher, A. M. Feit, P. Ziegler, and A. Krüger, “Selection-based text entry in virtual reality,” in ACM CHI, 2018, pp. 1–13.

- [14] S. Pinet, C. Zielinski, F.-X. Alario, and M. Longcamp, “Typing expertise in a large student population,” Cognitive Research: Principles and Implications, vol. 7, no. 1, p. 77, 2022.

- [15] J. Grubert, L. Witzani, E. Ofek, M. Pahud, M. Kranz, and P. O. Kristensson, “Effects of hand representations for typing in virtual reality,” in IEEE VR, 2018, pp. 151–158.

- [16] Z. Ma, D. Edge, L. Findlater, and H. Z. Tan, “Haptic keyclick feedback improves typing speed and reduces typing errors on a flat keyboard,” in 2015 IEEE World Haptics Conference (WHC), 2015, pp. 220–227.

- [17] R. Canales, A. Normoyle, Y. Sun, Y. Ye, M. D. Luca, and S. Jörg, “Virtual grasping feedback and virtual hand ownership,” in ACM Symposium on Applied Perception, 2019, pp. 1–9.

- [18] A. Maselli, P. Lanillos, and G. Pezzulo, “Active inference unifies intentional and conflict-resolution imperatives of motor control,” PLoS computational biology, vol. 18, no. 6, p. e1010095, 2022.

- [19] D. Abdlkarim, M. Di Luca, P. Aves, S.-H. Yeo, R. C. Miall, P. Holland, and J. M. Galea, “A methodological framework to assess the accuracy of virtual reality hand-tracking systems: A case study with the oculus quest 2,” BioRxiv, pp. 2022–02, 2022.

- [20] R. Gruen, E. Ofek, A. Steed, R. Gal, M. Sinclair, and M. Gonzalez-Franco, “Measuring system visual latency through cognitive latency on video see-through ar devices,” in IEEE VR, 2020, pp. 791–799.

- [21] G. Benoit, G. M. Poor, and A. Jude, “Bimanual word gesture keyboards for mid-air gestures,” in ACM CHI, 2017, pp. 1500–1507.

- [22] A. Gupta, N. Sendhilnathan, J. Hartcher-O’Brien, E. Pezent, H. Benko, and T. R. Jonker, “Investigating eyes-away mid-air typing in virtual reality using squeeze haptics-based postural reinforcement,” in ACM CHI, 2023, pp. 1–11.

- [23] K. Pfeuffer, H. Gellersen, and M. Gonzalez-Franco, “Design principles and challenges for gaze + pinch interaction in xr,” IEEE Computer Graphics and Applications, 2024.

- [24] A. Gupta, M. Samad, K. Kin, P. O. Kristensson, and H. Benko, “Investigating remote tactile feedback for mid-air text-entry in virtual reality,” in IEEE ISMAR, 2020, pp. 350–360.

- [25] Y. F. Cheng, T. Luong, A. R. Fender, P. Streli, and C. Holz, “Comfortable user interfaces: Surfaces reduce input error, time, and exertion for tabletop and mid-air user interfaces,” in IEEE ISMAR, 2022, pp. 150–159.

- [26] M. McGill, D. Boland, R. Murray-Smith, and S. Brewster, “A dose of reality: Overcoming usability challenges in vr head-mounted displays,” in ACM CHI, 2015, pp. 2143–2152.

- [27] S. Boustila, T. Guégan, K. Takashima, and Y. Kitamura, “Text typing in vr using smartphones touchscreen and hmd,” in IEEE VR, 2019, pp. 860–861.

- [28] J. J. Dudley, J. Zheng, A. Gupta, H. Benko, M. Longest, R. Wang, and P. O. Kristensson, “Evaluating the performance of hand-based probabilistic text input methods on a mid-air virtual qwerty keyboard,” IEEE Transactions on Visualization and Computer Graphics, 2023.

- [29] C. Kumar, R. Hedeshy, I. S. MacKenzie, and S. Staab, “Tagswipe: Touch assisted gaze swipe for text entry,” in ACM CHI, 2020, pp. 1–12.

- [30] H. Xia, T. Grossman, and G. Fitzmaurice, “Nanostylus: Enhancing input on ultra-small displays with a finger-mounted stylus,” in ACM UIST, 2015, pp. 447–456.

- [31] L. Chan, R.-H. Liang, M.-C. Tsai, K.-Y. Cheng, C.-H. Su, M. Y. Chen, W.-H. Cheng, and B.-Y. Chen, “Fingerpad: private and subtle interaction using fingertips,” in ACM UIST, 2013, pp. 255–260.

- [32] Z. Xu, P. C. Wong, J. Gong, T.-Y. Wu, A. S. Nittala, X. Bi, J. Steimle, H. Fu, K. Zhu, and X.-D. Yang, “Tiptext: Eyes-free text entry on a fingertip keyboard,” in ACM UIST, 2019, pp. 883–899.

- [33] O. Alharbi, W. Stuerzlinger, and F. Putze, “The effects of predictive features of mobile keyboards on text entry speed and errors,” ACM Human-Computer Interaction, vol. 4, no. ISS, pp. 1–16, 2020.

- [34] S. Zhai and P. O. Kristensson, “The word-gesture keyboard: reimagining keyboard interaction,” Communications of the ACM, vol. 55, no. 9, pp. 91–101, 2012.

- [35] P. Streli, J. Jiang, A. R. Fender, M. Meier, H. Romat, and C. Holz, “Taptype: Ten-finger text entry on everyday surfaces via bayesian inference,” in ACM CHI, 2022, pp. 1–16.

- [36] K. Mrazek and T. K. Mohd, “Using lstm models on accelerometer data to improve accuracy of tap strap 2 wearable keyboard,” in International Conference on Intelligent Human Computer Interaction. Springer, 2021, pp. 27–38.

- [37] M. Meier, P. Streli, A. Fender, and C. Holz, “Tapid: Rapid touch interaction in virtual reality using wearable sensing,” in IEEE VR, 2021, pp. 519–528.

- [38] M. Jin, Y. Zhang, W. Chen, K. Zhang, Y. Liang, B. Yang, J. Wang, S. Pan, and Q. Wen, “Position paper: What can large language models tell us about time series analysis,” arXiv preprint arXiv:2402.02713, 2024.