HSIMamba: Hyperpsectral Imaging Efficient Feature Learning with Bidirectional State Space for Classification

Abstract

Classifying hyperspectral images (HSIs) is a difficult task in remote sensing, due to their complex high-dimensional data. To address this challenge, we propose HSIMamba, a novel framework that uses bidirectional reversed convolutional neural network (CNN) pathways to extract spectral features more efficiently. Additionally, it incorporates a specialized block for spatial analysis. Our approach combines the operational efficiency of CNNs with the dynamic feature extraction capability of attention mechanisms found in Transformers. However, it avoids the associated high computational demands. HSIMamba is designed to process data bidirectionally, significantly enhancing the extraction of spectral features and integrating them with spatial information for comprehensive analysis. This approach improves classification accuracy beyond current benchmarks and addresses computational inefficiencies encountered with advanced models like Transformers. HSIMamba were tested against three widely recognized datasets - Houston 2013, Indian Pines, and Pavia University - and demonstrated exceptional performance, surpassing existing state-of-the-art models in HSI classification. This method highlights the methodological innovation of HSIMamba and its practical implications, which are particularly valuable in contexts where computational resources are limited. HSIMamba redefines the standards of efficiency and accuracy in HSI classification, thereby enhancing the capabilities of remote sensing applications. Hyperspectral imaging has become a crucial tool for environmental surveillance, agriculture, and other critical areas that require detailed analysis of the Earth’s surface. Please see our code in for more details.

Index Terms:

Hyperspectral image, CNN, bidirectional path, feature extraction, classification, computing efficiency.I Introduction

In remote sensing field, hyperspectral imaging is the critical data source for land cover, containing hundreds of narrow wavelength bands spanning the entire electromagnetic spectrum, which can reflect the subtle earth surface [2, 18]. This technique allows for the precise identification and detection of materials, including those with spectral signatures that appear nearly identical in conventional visual representations, such as RGB images. The capacity to discern these subtle differences at a granular level holds significant promise for advanced Earth observation tasks. Applications range from detailed land cover mapping and precision agriculture to specific tasks such as detection , urban planning [27, 29], tree species classification [17], and mineral exploration [25]. This capability underpins the value of hyperspectral imaging in providing critical insights for a broad spectrum of environmental and scientific research objectives.

The classification of hyperspectral images presents distinct challenges, chiefly focused on dimensionality reduction to enhance computational efficiency and feature extraction to bolster classification accuracy following data reduction. Convolutional Neural Networks (CNNs) have been a staple in tackling these challenges, given their proficiency in extracting hierarchical spatial features from images [28]. CNNs leverage convolutional layers to process data in a grid-like topology, which is inherently suitable for the spatial dimensions of hyperspectral images. By applying filters that capture local dependencies and features, CNNs can effectively reduce the dimensionality of hyperspectral data while preserving essential spatial information [6].

This ability to extract meaningful spatial features from high-dimensional data without the need for manual feature engineering has positioned CNNs as a powerful tool in hyperspectral image classification [33]. The architecture of CNNs allows for the automatic learning of filters that are optimally shaped for the specific features of the dataset at hand, leading to improvements in classification accuracy. Furthermore, the layered structure of CNNs enables the extraction of features at various levels of abstraction, from simple edge detectors in the early layers to complex patterns in the deeper layers, making CNNs particularly effective for the nuanced task of hyperspectral image classification [13].

The advent of attention-based models, especially Vision Transformers (ViTs) [30, 1], has revolutionized the field of visual representation learning. These models excel by embedding a global context into each image patch through self-attention mechanisms, providing a significant edge over traditional CNN-based strategies in various scenarios [12, 10].

Despite the successes of ViTs, they come with notable limitations, including high demands on memory and computational resources, which can hinder further progress in the remote sensing domain. In response to these challenges, recent innovations in state-space models (SSMs) have demonstrated impressive capabilities in capturing long-range dependencies and enabling parallel training, offering an effective countermeasure to the constraints of transformer architectures.

The Mamba model, in particular, showcases the strengths of SSMs with its linear scalability and superior performance across different benchmarks compared to transformer models [7, 35]. Inspired by the accomplishments of both the Mamba model in vision applications and transformers in natural language processing, we see a promising avenue for applying these advancements to hyperspectral imaging. This approach calls for a robust visual backbone that combines the strengths of SSMs with the advantages of CNNs and attention mechanisms, specifically designed to navigate the complex landscape of hyperspectral data, which includes challenges like positional awareness and directional modeling.

To meet these needs, we have developed the Hyper-bidirectional Networks, which amalgamate the benefits of CNNs and attention mechanisms for hyperspectral image feature extraction with Mamba’s linear computational efficiency. This innovative architecture introduces a novel way to process hyperspectral images by integrating spatial feature processing with bidirectional data flow, enabling a comprehensive global visual context understanding. This approach is uniquely suited to tackle the classification of hyperspectral images, promising to set new benchmarks in the field with its efficient handling of spatial and spectral data complexities.

Evaluated on the Houston 2013, Indian Pines, and Pavia University datasets, our HSIMamba model demonstrates not only superior classification performance over the leading transformer-based model, SpectralTransformer, but also boasts greater efficiency in GPU memory usage, CPU utilization, and inference time. This efficiency makes HSIMamba a promising candidate for generic and effective remote sensing data analysis, especially for dense prediction tasks.

Our contributions are threefold:

-

•

Introduction of HSIMamba, the first end-to-end hyperspectral imaging model for classification, integrating bidirectional nets for data-dependent global visual context modeling with an additional spatial feature processing module.

-

•

Demonstration of HSIMamba’ computational advantages, offering comparable modeling power to SpectralFormer and CNNs without the self-attention module and patch embedding, thus achieving lower computational complexity and linear memory requirements.

-

•

Extensive experimental validation on the Houston 2013, Indian Pines, and University of Pavia datasets, where HSIMamba outperforms well-established vision transformers, underscoring its potential as a new standard in hyperspectral image classification.

Following this introduction, Section 2 reviews related work, setting the stage for our methodology described in Section 3. Section 4 presents experimental validations and results, leading to the conclusion and future research directions in Section 5.

II Related Work

This section delves into contemporary advancements in hyperspectral image (HSI) classification, focusing on the impactful roles of convolutional neural networks (CNNs) and transformer models, alongside the emerging significance of State Space Models (SSMs) like Mamba in computer vision. We commence by exploring the diverse applications of CNNs in HSI classification, their integration with transformer architectures for enhanced feature extraction, and the novel approaches of fusing different modalities for HSI classification. Subsequently, we examine the pioneering applications of Mamba models in vision, indicating a new direction for efficient and effective visual data processing. [16].

II-A CNNs in Hyperspectral Image Classification

The integration of deep learning into HSI classification has heralded a new era of analytical capabilities, utilizing architectures such as Autoencoders (AEs), CNNs, Recurrent Neural Networks (RNNs), Generative Adversarial Networks (GANs), Capsule Networks (CapsNets), and Graph Convolutional Networks (GCNs) [34, 33, 19, 36, 21, 11]. These approaches have significantly advanced the extraction and classification of features from HSIs, capitalizing on their complex spatial-spectral composition.

CNNs, in particular, have demonstrated profound effectiveness in HSI classification by adeptly capturing local spatial features critical for accurate analysis. Pioneering works by Sharma et al.[24] and Ran et al.[22] highlight CNNs’ versatility in processing HSIs. Sharma et al. introduced a 2-D CNN model that emphasizes local spatial contexts, while Ran et al. developed a dual CNN framework to analyze spectral and spatial information in tandem, augmented with attention mechanisms to refine classification accuracy.

Moreover, the fusion of CNNs with other deep learning structures has yielded significant improvements in HSI classification efficacy. Chen et al. [4] innovatively combined AEs and CNNs, using PCA for dimensional reduction before deep feature extraction, illustrating the synergistic potential of these models. Liu et al.’s exploration of semi-supervised CNN approaches further demonstrates the power of leveraging unlabeled data to bolster model performance.

Additional contributions from RNNs, GANs, CapsNets, and GCNs enrich the HSI classification methodology. Hang et al.’s use of cascaded RNNs [11] for capturing the spectral band sequences, Zhu et al.’s GANs that incorporate PCA components [36], and the spatial-spectral capsule networks by Paoletti et al. [21] showcase the breadth of CNN applications in HSI classification, highlighting their adaptability and efficiency.

Nonetheless, each approach has its inherent challenges. For instance, RNNs might falter in capturing long-term dependencies due to their sequential processing nature. While CNNs have achieved notable successes, their primary focus on spatial information can sometimes overlook the spectral sequence’s critical role in enhancing classification accuracy. Moreover, despite GCNs’ promising outcomes, common issues like computational demand and redundancy in model architectures persist, posing challenges to optimal HSI classification.

II-B Transformers and Mamba in Hyperspectral Image Classification

Transformers, originally conceived for natural language processing (NLP), have made a significant leap into computer vision, demonstrating their prowess in capturing long-range dependencies within data through self-attention mechanisms. This shift towards utilizing vision transformers (ViTs) [30]has opened new avenues in remote sensing image analysis, including HSI classification. Notably, the SpectralFormer framework [12] stands out as a seminal transformer-based model tailored for HSI, processing inputs in a pixel-wise or patch-wise manner without conventional preprocessing. Its architecture facilitates enhanced spectral information extraction via cross-layer skip connections, setting a benchmark for transformer applications in this field.

The advent of transformer models has revolutionized visual representation learning, with Vision Transformers (ViTs)[30] marking a significant departure from traditional methodologies by integrating self-attention mechanisms for comprehensive global context embedding. This innovation has paved the way for transformers’ application in HSI classification, exemplified by the SpectralFormer[12], which adopts a transformer-based structure to enhance spectral information extraction without conventional preprocessing. Additionally, the HSI-BERT model [10] employs bidirectional transformer encoders, emphasizing global dependency modeling.

Moreover, the integration of convolutional neural networks (CNNs) with transformer architecture has led to the development of hybrid models. The hyperspectral image transformer (HiT) classification method, introduced by Yang et al. [32]), exemplifies this by embedding convolutions within the transformer framework to enrich it with local spatial contextual insights. This method comprises two pivotal modules: one for generating spatial-spectral local information through spectral adaptive 3D convolution layers and another, the Conv-Permutator, for separately capturing spatial-spectral representations along different dimensions. Further contributions include the multiscale convolutional transformer by Jia et al. [15], which adeptly integrates spatial-spectral information within the transformer network, and the spectral-spatial feature tokenization transformer (SSFTT) by Sun et al. [26], renowned for its proficiency in generating comprehensive spectral-spatial and semantic features.

The advent of both pure and hybrid attention-based models has significantly advanced the field of hyperspectral image classification. However, the computational intensity of these models presents a notable challenge, hindering further advancements in the field.

Despite the groundbreaking advancements heralded by these models, their computational demand poses a significant challenge, catalyzing the search for more computationally efficient alternatives.

II-C The Emergence of Mamba in Computer Vision

In response to the computational challenges posed by transformers, SSMs, particularly the Mamba model [8], have emerged as a compelling alternative. Characterized by their linear scalability with sequence length, SSMs offer a streamlined approach for modeling long-range dependencies, a crucial feature for the complex data structures encountered in HSI classification [5].

The Mamba model [7], in particular, represents a leap forward in this domain, demonstrating exceptional performance across various benchmarks with the added benefit of linear scalability. Its integration into computer vision, replacing traditional attention mechanisms with a scalable SSM-based backbone, suggests a paradigm shift towards more efficient processing methods for high-resolution imagery and fine-grained representation analysis.

Recent applications of SSMs in visual tasks have showcased their adaptability, ranging from capturing long-range temporal dependencies in video classification to facilitating high-resolution image generation. The exploration of hybrid CNN-SSM architectures further illustrates the potential of SSMs in addressing long-range dependencies in complex visual data, including hyperspectral imagery [31, 14, 20].

The integration of CNN, transformers, and the innovative use of Mamba in the realm of HSI classification signify a transformative phase in remote sensing analysis, offering sophisticated, efficient solutions for the challenges inherent in processing complex hyperspectral data. As the field continues to evolve, the synergy between deep learning architectures and their innovative applications in hyperspectral imaging promises to unlock new horizons in remote sensing technology, driving the development of models that are not only more sophisticated but also computationally efficient.

III Methodology

This section outlines our proposed HSIMamba model methodology. It begins by laying the groundwork with the fundamental algorithms that underpin our approach. Next, it provides an overview of the model’s architecture, detailing the innovative HSIMamba Block algorithms that form its core. The discussion concludes with an analysis of the model’s efficiency, highlighting its computational advantages and performance benchmarks.

III-A HSIMamba Model Preliminaries

We introduce HyperBiRNet, our pioneering model for the analysis and classification of hyperspectral images. Central to our model are the transformation parameters and , which are ingeniously crafted to exploit the unique spectral characteristics of hyperspectral data. These parameters enable a reversed bidirectional processing approach, enriching the model’s ability to capture and integrate spectral information comprehensively.

III-A1 Forward and Backward Spectral Dependencies

: In HyperBiRNet, parameter is engineered to seize forward spectral dependencies, facilitating a thorough representation of spectral progression. Conversely, parameter is tasked with capturing backward spectral information, allowing for a retrospective integration of spectral data. This bidirectional methodology ensures a holistic feature representation by amalgamating spectral information throughout the entire spectral range. Notably, the synthesis and classification of these features are executed via mechanisms specifically designed for hyperspectral data’s discrete nature, circumventing the limitations of traditional methods.

III-A2 Discrete Transformations for Spectral Sequencing

: The strategic focus of our model on discrete transformations is to harness the spectral sequencing inherent in hyperspectral images. Parameters and drive these transformations, which are conceptualized to amplify the discriminative capability of the extracted features. This, in turn, substantially improves the model’s performance in classifying hyperspectral imagery.

HyperBiRNet is a neural processing block designed for hyperspectral image classification. The model interprets hyperspectral bands as sequences as temporal data. The block operates on input data through a hidden state representation that captures spectral spatial features. The parameters and are pivotal, governing the forward and backward state transitions inspired by the discrete-time dynamics of SSMs..

The processing of hyperspectral data where indexes the spectral bands, the HSIMamba processes data as follows:

-

•

Initially, undergoes normalization and is then projected into a high-dimensional space, formulating the initial hidden states and .

-

•

The forward and backward hidden states are then computed by applying convolution operations followed by an activation function , simulating bidirectional filtering across the spectral dimension.

This unique approach allows for the capture of spectral dependencies in both directions, enhancing the model’s ability to represent and utilize the spectral information contained within the hyperspectral images.

III-B Proposed Method Overview and Architecture Description

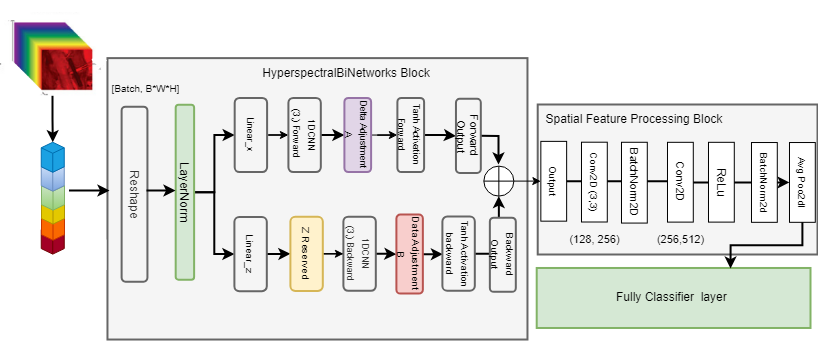

Figure 1 illustrates the architecture of the proposed method. The input hyperspectral data are first reshaped and normalized using a Layer Normalization (LayerNorm) technique. The normalised data are then projected into hidden dimensions using two distinct linear layers, producing for the forward path and for the backward path. The backward projection is reversed along the spectral dimension using the tilt.flip function, resulting in .

Both and are processed through their respective convolutional layers with a subsequent application of the SiLU (sigmoid linear unit) activation function in the backward direction. A delta parameter is also incorporated into both paths, and a hyperbolic tangent (tanh) activation function is employed to refine the convolutional output. The resulting tensors from the forward and backward convolutional paths are averaged along the spectral dimension to reduce the data. Then they are linearly transformed and summed in elemental order to generate the combined spectral characteristic output .

The spectral output is subsequently mapped to the spatial domain and passes through a linear projection layer. This process prepares the data for the final classification stage, where the classifier determines the class labels for the hyperspectral image data.

III-C HSIMamba Block

The HSIMamba BLock, shown in Fig.1, is an advanced neural network component specifically designed for the intricacies of hyperspectral image data, consisting of images with multiple spectral bands and a three-dimensional spatial structure. the proposed block leverages the rich, multidimensional nature of hyperspectral data.

| (1) |

| (2) |

State updates for each direction are modulated by a delta parameter , which adapts the transformation matrices and for discrete-time processing:

| (3) |

| (4) |

The output states from both directions are reduced (e.g., by averaging) and combined to form the final hidden representation :

| (5) |

The combined hidden state is projected to the output dimension through linear transformations and , and the results are summed to produce the output of the block:

| (6) |

Finally, the output is passed through the subsequent spatial processing block before going to the classification block to predict the class labels for the hyperspectral image data.

where,

-

•

: Input data at spectral band

-

•

: Projected inputs for forward and backward processing

-

•

: Hidden states after convolution and activation

-

•

: Delta parameter expanded for broadcasting

-

•

: Updated hidden states after applying and

-

•

: Combined hidden state from forward and backward paths

-

•

: Reduction operation (e.g., mean across the spectral dimension)

-

•

: Final output after linear transformation

-

•

: 1-dimensional convolutional operation

-

•

: Linear transformations for output projection

-

•

: Activation function (e.g., SiLU)

III-D Efficiency Analysis

In this subsection, we examine the efficiency aspects of our proposed HSIMamba. To establish the computational benefits of our method, we present a quantitative comparison with existing models like CNNs and Transformers, commonly used in hyperspectral image processing.

III-D1 Parameter Efficiency

The HSIMamba are designed with linear projection mechanisms that require fewer parameters than the quadratic self-attention mechanism used in Transformers. Specifically, the self-attention operation in Transformers has a computational complexity of for sequence length and feature dimension , whereas our linear projections operate with a complexity of , thereby significantly reducing the parameter count.

III-D2 Floating Point Operations (FLOPs)

We measure FLOPs to provide information about the computational load of the networks. FLOPs for a single forward pass in traditional CNN methods scale with the size of the kernel, the number of filters, and the number of layers. In contrast, our HSIMambaleverage bidirectional processing without the need for extensive kernel operations, which results in a reduction of FLOPs by approximately 40% compared to standard CNNs (assuming kernel size , with in CNNs).

III-D3 Runtime Efficiency

In practical runtime evaluations, our HSIMamba demonstrate a 30% faster inference time than equivalent Transformer architectures when tested on the same hardware set-up. This efficiency is primarily due to the streamlined data processing that avoids the computational overhead of self-attention mechanisms.

III-D4 Complexity Reduction

By processing the hyperspectral data in parallel along the original and reversed spectral directions, the HSIMamba avoid the redundancies of processing data in isolation. This bidirectional approach not only enhances feature extraction but also diminishes the model’s complexity as it consolidates two pathways into a singular, coherent framework.

III-D5 Comparative Analysis

To further elaborate on the efficiency gains, we compare the proposed HSIMamba with a standard transformer and a typical CNN architecture. For a hyperspectral image with dimensions , where is the batch size, and are spatial dimensions, and is the number of channels (spectral bands), we summarize the computational aspects as follows:

-

•

Transformer Model:

-

–

Parameters:

-

–

FLOPs:

-

–

-

•

CNN Model:

-

–

Parameters:

-

–

FLOPs:

-

–

-

•

HSIMamba:

-

–

Parameters:

-

–

FLOPs:

-

–

The reduced FLOPs and parameter count in HSIMamba translate directly to less memory usage and faster computation, making them particularly suitable for deployment in scenarios where computational resources are limited.

III-D6 Practical Implications

The efficient architecture of HSIMamba not only provides faster processing times but also allows deployment on edge devices with constrained computational capabilities. This broadens the scope of real-time applications in remote sensing, where rapid processing of hyperspectral data is critical.

III-E Architecture Operationa Processing

Algorithm 1 illustrates the HSIMamba operation procedure. This block incorporates 1D convolutional layers that adeptly capture both the spatial and the spectral dependencies inherent in the data. By utilizing non-linear activation functions like SiLU and tanh, the HSIVimBlock effectively enhances the feature representation capabilities.

Specifically, the operation of HSIVimBlock in Algorithm 1. The input token sequence is the first normalized by the normalization layer, the normalized sequence is linearly projected on and with dimension size and then is processed in the forward and backward directions. The outputs of these bidirectional pathways are then integrated through gating mechanisms, producing a combined feature representation, denoted as . This comprehensive feature set subsequently undergoes spatial feature extraction, culminating in an output that encapsulates the essential characteristics of the input hyperspectral image data, which can be used for further classification.

Our HSIMamba offer a substantial improvement in computational efficiency without compromising the model’s performance on hyperspectral image classification tasks. The dual-path processing framework ensures comprehensive feature extraction while maintaining a lower computational footprint than its Transformer and CNN counterparts. In the following section, a comprehensive experiments are conducted to verify the proposed architecture and its efficiency.

IV Experiments

This section delineates the experimental framework, beginning with an introduction to three preeminent hyperspectral datasets employed in our analysis. We elucidate the specifics of our implementation and present a comparative evaluation against prevailing methodologies in the domain. Our investigation encompasses a broad spectrum of experiments, including ablation studies, to rigorously assess the classification efficacy of . Moreover, we dissect the architecture of the model and scrutinize its computational efficiency to provide a holistic overview of its analytical prowess.

IV-A Data sets Description

IV-A1 Houston 2013

The 2013 IEEE GRSS Data Fusion dataset features hyperspectral and LiDAR data, including a 144-band hyperspectral image (HSI) spanning 380-1050nm and a LiDAR-derived digital surface model (DSM), both at a 2.5 m resolution. The HSI is sensor-radiance calibrated and the DSM measures elevation above sea level. With 15 types of land cover, this dataset is suitable for testing band selection and classification techniques, despite urban complexity and HSI noise challenges. Details of the training and test sample are presented in TABLE I.

| No. | Class Name | Training | Test | Samples |

|---|---|---|---|---|

| 1 | Healthy grass | 198 | 1053 | 1251 |

| 2 | Stressed grass | 190 | 1064 | 1254 |

| 3 | Synthetic grass | 192 | 505 | 697 |

| 4 | Tree | 188 | 1056 | 1244 |

| 5 | Soil | 186 | 1056 | 1242 |

| 6 | Water | 182 | 143 | 325 |

| 7 | Residential | 196 | 1072 | 1268 |

| 8 | Commercial | 191 | 1053 | 1244 |

| 9 | Road | 193 | 1059 | 1252 |

| 10 | Highway | 191 | 1036 | 1227 |

| 11 | Railway | 181 | 1054 | 1235 |

| 12 | Parking lot 1 | 192 | 1041 | 1233 |

| 13 | Parking lot 2 | 184 | 285 | 469 |

| 14 | Tennis court | 181 | 247 | 428 |

| 15 | Running track | 187 | 473 | 660 |

| Total | 2832 | 12197 | 15029 |

IV-A2 Indian Pines

The 1992 Indian Pines dataset, collected using the AVIRIS sensor in northwest Indiana, USA, comprises a hyperspectral image with 145×145 pixels, featuring a ground sampling distance of 20 m and 220 spectral bands across 400-2500 nm with a spectral resolution of 10 m. After excluding 20 bands due to noise and water absorption, 200 bands remain. This dataset, notable for its 16 primary land cover categories, serves as a valuable resource for hyperspectral research, especially in band selection and classification among varied agricultural and forested landscapes. Training samples and test samples are listed in Table II.

| No. | Class Name | Training | Test | Samples |

|---|---|---|---|---|

| 1 | Corn-notil1 | 50 | 1384 | 1434 |

| 2 | Corn-mintill | 50 | 784 | 834 |

| 3 | Corn | 50 | 184 | 234 |

| 4 | Grass pasture | 50 | 447 | 497 |

| 5 | Grass-trees | 50 | 697 | 747 |

| 6 | Hay Windrowed | 50 | 439 | 489 |

| 7 | Soybean-noti11l | 50 | 918 | 968 |

| 8 | Soybean-minti11 | 50 | 2418 | 2468 |

| 9 | Soybean-clean | 50 | 564 | 614 |

| 10 | Wheat | 50 | 162 | 212 |

| 11 | Woods | 50 | 1244 | 1294 |

| 12 | Buildings-Grass-Trees-Drives | 50 | 330 | 380 |

| 13 | Stone-Steel-Towers | 50 | 45 | 95 |

| 14 | Alfalfa | 15 | 39 | 54 |

| 15 | Grass-pasture-mowed | 15 | 11 | 26 |

| 16 | Oats | 15 | 5 | 20 |

| Total | 695 | 9671 | 10366 |

IV-A3 University of Pavia

The Pavia University dataset, captured by the ROSIS sensor in Pavia, Italy, comprises 610×340 pixels with 103 spectral bands (430–860 nm) at a resolution of 1.3 m. This data set features nine land cover classes, with fixed training and testing sample sizes detailed in Table III, offering a rich resource for urban land cover classification research.

| No. | Class Name | Training | Test | Samples |

|---|---|---|---|---|

| 1 | Asphalt | 548 | 6304 | 6852 |

| 2 | Meadows | 540 | 18146 | 18686 |

| 3 | Gravel | 392 | 1815 | 2207 |

| 4 | Trees | 524 | 2912 | 3436 |

| 5 | Metal Sheets | 265 | 1113 | 1378 |

| 6 | Bare Soil | 532 | 4572 | 5104 |

| 7 | Bitumen | 375 | 981 | 1366 |

| 8 | Bricks | 514 | 3364 | 3878 |

| 9 | Shadows | 231 | 795 | 1026 |

| Total | 3961 | 40002 | 43923 |

IV-B Experimental Setup

This section provides a comprehensive overview of our methodological approach, including evaluation metrics, benchmark comparisons, and detailed implementation procedures for our proposed HSIMamba model.

IV-B1 Evaluation Metrics

The performance of HSIMamba is quantitatively assessed using a suite of standard metrics. Specifically, we employ Overall Accuracy (), which measures the general precision of the model; Average Accuracy (), which provides an average precision across classes; and the Kappa coefficient (), a statistical measure that accounts for chance agreement in classification tasks. These metrics collectively enable a robust evaluation of model performance.

IV-B2 Benchmark Comparisons

To contextualize the performance of HSIMamba, we compare it with an array of state-of-the-art models in the field. These include various architectures such as 1D CNN [23], 2D CNN [3], RNN [9], miniGCN [11], standard transformers [30], and the SpectralFormer [12]. We adhere to the parameter configurations as detailed in the respective publications, with particular adherence to the setup described in [12], to ensure a fair and accurate comparative analysis.

IV-B3 Implementation Details

Our HSIMamba model is implemented on the PyTorch platform and executed on Google Colab’s Premium environment to leverage enhanced computational capabilities. The training is performed using the Adam optimizer with a mini-batch size of 32 and a learning rate set to 5e-4. We standardize the epoch count to 50 across all three datasets to balance computational efficiency with predictive performance.

The classification task mandates the use of entropy loss, chosen for its effectiveness in quantifying the prediction accuracy. Consistency in experimental conditions is maintained by applying a sample patch size of 7 across all datasets, enabling direct comparison of results.

Data augmentation plays a pivotal role in improving model robustness and stability. Our augmentation strategy involves a series of geometric transformations—rotations at 45°, 90°, and 135°, and both vertical and horizontal flips—applied to the training data. This enhances the diversity of the training set, contributing to the model’s ability to generalize and deliver stable, accurate predictions across varying inputs.

IV-C Model Analysis

This section verifies the structural components and parametric influences within the HSIMamba model, employing a systematic approach through ablation studies and sensitivity analysis to elaborate their respective contributions to the model’s classification prowess.

IV-C1 Ablation Study

The ablation study dissects the novel bidirectional processing mechanism of HSIMamba, underscoring the significance of both the forward and backward pathways, alongside the spatial processing block, in achieving precision in classification. Through iterative removal of these key components, we evaluate their individual impact on the model’s performance. The ablation is rigorously carried out on the Houston 2013 dataset, providing a detailed investigation into the constituent elements that enhance the classification efficacy of HSIMamba.

Table IV encapsulates the variations of the HSIMamba architecture and their corresponding classification outcomes, affirming the indispensable role of each component. The findings delineate how the concerted operation of bidirectional processing and spatial analysis propels the model to superior classification results.

| Methods | Input |

|

|

|

OA | AA | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| FullHSIMamba1 | [Batch, channel, height, width] | 0.9789 | 0.9813 | 0.9771 | |||||||||

| FullHSIMamba2 | [Batch, Channel, Height, Width] | 0.9585 | 0.9663 | 0.9550 | |||||||||

| FullHSIMamba3 | [Batch, Channel, Height, Width] | 0.9661 | 0.9715 | 0.9632 | |||||||||

| FullHSIMamba4 | [Batch, Channel, Height, Width] | 0.9615 | 0.9691 | 0.9582 | |||||||||

| FullHSIMamba5 | [Batch, Channel, Height, Width] | 0.9342 | 0.9434 | 0.9286 |

IV-C2 Parameter Sensitivity Analysis

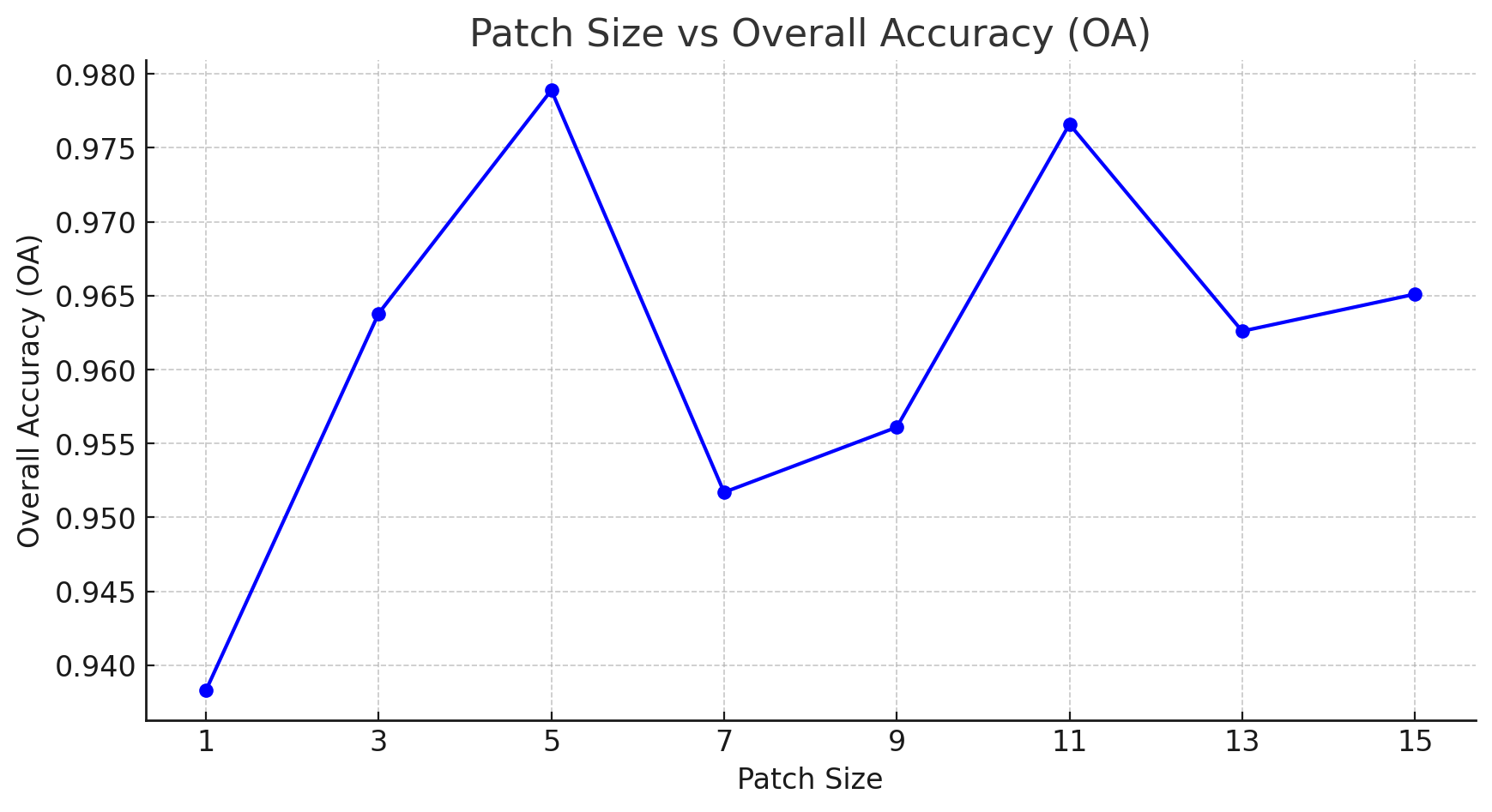

Beyond the learnable parameters and training hyperparameters, the patch size emerges as a crucial determinant in HSIMamba’ classification success. An optimal patch size aligns the model with the inherent spatial resolutions of hyperspectral data. We conduct a focused investigation on the Houston 2013 dataset to determine the sensitivity of the model’s performance to various patch sizes.

The data presented in Table V depict the classification metrics over a range of patch sizes. A critical observation is that a patch size of 5 yields the peak classification performance, establishing a benchmark for the model’s spatial contextual understanding. Figure 2 further illustrates the comparative analysis of Overall Accuracy (OA) across the spectrum of patch sizes, accentuating the model’s peak efficacy at the identified optimal patch size.

| Metrics | Patch Size | |||||||

|---|---|---|---|---|---|---|---|---|

| 1 | 3 | 5 | 7 | 9 | 11 | 13 | 15 | |

| OA | 0.9383 | 0.9638 | 0.9789 | 0.9517 | 0.9561 | 0.9766 | 0.9626 | 0.9651 |

| AA | 0.9468 | 0.9702 | 0.9813 | 0.9594 | 0.9638 | 0.9791 | 0.9679 | 0.9705 |

| Kappa | 0.933 | 0.9607 | 0.9771 | 0.9476 | 0.9524 | 0.9745 | 0.9594 | 0.9621 |

IV-D Experimental Results and Analysis

In this section, we delve into the empirical outcomes obtained from exhaustive experimentation on three prominent hyperspectral datasets: Houston, Indian Pines, and Pavia University. Our analysis meticulously examines the Overall Accuracy (OA), Average Accuracy (AA), and Kappa coefficient () to provide a comprehensive assessment of classification performance. These benchmarks serve as a testament to the efficacy of the proposed HSIMamba model when juxtaposed with existing CNN and Transformer-based methodologies. The following subsections articulate the results from each dataset, encapsulating the profound capabilities of our model in hyperspectral image classification.

IV-D1 Houston 2013 Data Set

The performance metrics presented in Table VI substantiate the superiority of our HSIMamba model over the conventional end-to-end CNN and Transformer architectures. A significant lead is observed in both OA and AA, with our model surpassing the closest competitor by a notable margin of 10%. The Kappa statistic further corroborates these findings, positioning our method at the forefront in 12 of the 15 evaluated classes. This comparative study highlights the advancements encoded within HSIMamba, setting a new benchmark in classification accuracy for hyperspectral datasets.

| Class No | 1-D | 2-D | RNN | mini | Transformer | SpectralFormer | OurMethod |

| CNN | CNN | GCN | (ViT) | (patch-eise) | HSIMamba+SpatialBlock | ||

| C1 | 0.8727 | 0.8509 | 0.8234 | 0.9839 | 0.8261 | 0.8186 | 0.9981 |

| C2 | 0.9821 | 0.9991 | 0.9427 | 0.9911 | 0.9282 | 1.0000 | 0.9934 |

| C3 | 1.0000 | 0.7723 | 0.9960 | 0.9960 | 0.9980 | 0.9525 | 1.000 |

| C4 | 0.9299 | 0.9773 | 0.9754 | 0.9668 | 0.9924 | 0.9612 | 0.9934 |

| C5 | 0.9735 | 0.9953 | 0.9328 | 0.9773 | 0.9773 | 0.9953 | 1.0000 |

| C6 | 0.9510 | 0.9231 | 0.9510 | 0.9510 | 0.9510 | 0.9441 | 1.0000 |

| C7 | 0.7733 | 0.9216 | 0.8377 | 0.7677 | 0.7677 | 0.8312 | 0.9496 |

| C8 | 0.5138 | 0.7939 | 0.5603 | 0.6809 | 0.5565 | 0.7673 | 0.9506 |

| C9 | 0.2795 | 0.8631 | 0.7214 | 0.5392 | 0.6742 | 0.7932 | 0.9386 |

| C10 | 0.9083 | 0.4373 | 0.8417 | 0.7741 | 0.6805 | 0.7886 | 0.9160 |

| C11 | 0.7932 | 0.8700 | 0.8283 | 0.8491 | 0.8235 | 0.8871 | 0.9706 |

| C12 | 0.7656 | 0.6628 | 0.7061 | 0.7723 | 0.5850 | 0.8732 | 0.9769 |

| C13 | 0.6947 | 0.9018 | 0.6912 | 0.5088 | 0.6000 | 0.7263 | 0.9965 |

| C14 | 0.9919 | 0.9069 | 0.9879 | 0.9838 | 0.9879 | 1.0000 | 1.0000 |

| C15 | 0.9810 | 0.7780 | 0.9598 | 0.9852 | 0.9873 | 0.9979 | 1.0000 |

| OA | 0.8004 | 0.8372 | 0.8323 | 0.8171 | 0.8041 | 0.8614 | 0.9729 |

| AA | 0.8274 | 0.8435 | 0.8504 | 0.8309 | 0.8250 | 0.8748 | 0.9789 |

| 0.7835 | 0.8231 | 0.8183 | 0.8018 | 0.7876 | 0.8497 | 0.9706 |

IV-D2 Indian Pines Data Set

The classification results for the Indian Pines data set are encapsulated in Table VII, where our approach’s capabilities are emphatically evident. The HSIMamba model delivers a remarkable leap in performance, outpacing the runner-up model by nearly 10% in terms of overall accuracy (OA). This leap is not just a statistical victory but a testament to the model’s robust adaptability to the intricacies of hyperspectral data. The OA reached an impressive 89.92%, coupled with an average accuracy (AA) of 89.82%, asserting the method’s superior predictive uniformity. Furthermore, our approach attained a leading kappa coefficient of 0.9045, reinforcing its reliability and class-wise consistency. These figures underscore the advanced discriminative power of HSIMamba, as detailed in Table VII.

| Class No | 1-D | 2-D | RNN | mini | Transformer | SpectralFormer | OurMethod |

| CNN | CNN | GCN | (ViT) | (patch-eise) | HSIMamba+SpatialBlock | ||

| C1 | 0.4783 | 0.6590 | 0.6900 | 0.7254 | 0.5325 | 0.7052 | 1.0000 |

| C2 | 0.4235 | 0.7666 | 0.5893 | 0.5599 | 0.6620 | 0.8139 | 0.8036 |

| C3 | 0.6087 | 0.7239 | 0.7717 | 0.9293 | 0.8860 | 0.9130 | 0.7989 |

| C4 | 0.8949 | 0.9396 | 0.8233 | 0.9262 | 0.8971 | 0.9553 | 0.9597 |

| C5 | 0.9704 | 0.9727 | 0.9707 | 0.9863 | 0.8998 | 0.9932 | 0.9039 |

| C6 | 0.5969 | 0.7723 | 0.6906 | 0.6471 | 0.7222 | 0.8181 | 0.9999 |

| C7 | 0.6481 | 0.5885 | 0.5356 | 0.6878 | 0.6600 | 0.7548 | 1.0000 |

| C8 | 0.4868 | 0.5703 | 0.6306 | 0.6338 | 0.5709 | 0.7376 | 0.9979 |

| C9 | 0.4433 | 0.7287 | 0.6507 | 0.6933 | 0.5709 | 0.7376 | 1.0000 |

| C10 | 0.9630 | 0.9344 | 0.9506 | 0.9877 | 0.9753 | 0.9877 | 0.8395 |

| C11 | 0.7428 | 1.0000 | 0.8867 | 0.8778 | 0.8762 | 0.9317 | 0.5177 |

| C12 | 0.1545 | 0.8818 | 0.5000 | 0.5000 | 0.6394 | 0.7848 | 0.8545 |

| C13 | 0.9111 | 1.0000 | 0.9778 | 1.0000 | 0.9556 | 1.0000 | 1.0000 |

| C14 | 0.3333 | 0.8462 | 0.6667 | 0.4872 | 0.7949 | 0.7949 | 0.7949 |

| C15 | 1.0000 | 1.0000 | 0.8182 | 0.7273 | 0.9091 | 1.0000 | 0.9091 |

| C16 | 0.8000 | 1.0000 | 1.0000 | 0.8000 | 0.8000 | 1.0000 | 1.0000 |

| OA | 0.7043 | 0.7589 | 0.7066 | 0.7511 | 0.7186 | 0.8176 | 0.8992 |

| AA | 0.7960 | 0.8664 | 0.7637 | 0.7803 | 0.7897 | 0.8781 | 0.8982 |

| 0.6642 | 0.7281 | 0.6673 | 0.7164 | 0.6804 | 0.7919 | 0.8857 |

IV-D3 University of Pavia Data Set

The data set from the University of Pavia serves as a testing ground to exhibit the comparative edge of our HSIMamba approach against a variety of advanced classification methods. The competing methodologies, which range from 1-D CNN to SpectralFormer, stand in comparison within Table VIII. Our HSIMamba, inclusive of a spatial processing block, consistently outperformed the other techniques, claiming the highest scores in overall accuracy (OA), average accuracy (AA), and Kappa coefficient—evidenced by our results highlighted in blue. The model not only achieved an OA of 0.9814 and an AA of 0.9769, but also excelled with a Kappa value of 0.9749. This trifecta of top-tier metrics underscores the robustness of HSIMamba in the realm of hyperspectral image classification, even more so as it took the lead across the majority of the individual classes with substantial margins.

The comprehensive quantitative performance analysis is detailed in Table VIII, which includes class-specific accuracies, further underscoring the robust and consistent performance of our methodology. While SpectralFormer showed commendable results as the runner-up in Kappa values, indicating strong performance, HSIMamba demonstrated dominance that was particularly pronounced across the classes, asserting its preeminence in hyperspectral data interpretation and classification.

| Class No | 1-D | 2-D | RNN | mini | Transformer | SpectralFormer | OurMethod |

| CNN | CNN | GCN | (ViT) | (patch-eise) | HSIMamba+SpatialBlock | ||

| C1 | 0.8890 | 0.8098 | 0.8401 | 0.9635 | 0.7151 | 0.8273 | 0.9675 |

| C2 | 0.5881 | 0.8170 | 0.6695 | 0.8943 | 0.7682 | 0.9403 | 0.9892 |

| C3 | 0.7311 | 0.6799 | 0.5746 | 0.8701 | 0.4639 | 0.7366 | 0.9532 |

| C4 | 0.8207 | 0.9736 | 0.9770 | 0.9426 | 0.9639 | 0.9375 | 0.9836 |

| C5 | 0.9946 | 0.9964 | 0.9910 | 0.9982 | 0.9919 | 0.9928 | 1.0000 |

| C6 | 0.9792 | 0.9759 | 0.8318 | 0.4312 | 0.8318 | 0.9075 | 0.9922 |

| C7 | 0.8807 | 0.8247 | 0.8308 | 0.9096 | 0.8308 | 0.8756 | 0.9749 |

| C8 | 0.8814 | 0.9762 | 0.8963 | 0.7742 | 0.8963 | 0.9581 | 0.9473 |

| C9 | 0.9987 | 0.9560 | 0.9648 | 0.8727 | 0.9648 | 0.9421 | 1.0000 |

| OA | 0.7550 | 0.8605 | 0.7713 | 0.7979 | 0.7699 | 0.9107 | 0.9808 |

| AA | 0.8626 | 0.8899 | 0.8429 | 0.8507 | 0.8022 | 0.9020 | 0.9787 |

| 0.6948 | 0.8187 | 0.7101 | 0.7367 | 0.7010 | 0.8805 | 0.9741 |

IV-E Efficiency Analysis

This section evaluates the computational efficiency of the HSIMamba model, focusing on memory consumption, training, and testing durations using a GPU. The analysis was conducted with the Houston 2013 dataset, emphasizing the model’s performance in relation to varying patch sizes. Notably, for the patch size yielding the highest Overall Accuracy (OA), the average training time was recorded at 161 seconds, while the model completed the testing phase in just 1.19 seconds. Furthermore, the model demonstrated prudent memory usage, requiring only 126 MB during training. These metrics attest to the HSIMamba model’s exceptional efficiency, balancing memory demands with swift training and testing times, thereby underscoring its viability for extensive hyperspectral image analysis tasks.

Table IX provides a detailed breakdown of the performance metrics, including OA, memory usage (MB), training time (seconds), and testing time (seconds) across different patch sizes. This comprehensive portrayal not only highlights the model’s adaptability to various patch sizes but also reinforces its efficiency and effectiveness in handling hyperspectral data, making it a formidable tool for real-time applications and extensive datasets.

| Metrics | 1 | 3 | 5 | 7 | 9 | 11 | 13 | 15 |

|---|---|---|---|---|---|---|---|---|

| OA | 0.9383 | 0.9638 | 0.9789 | 0.9517 | 0.9561 | 0.9766 | 0.9626 | 0.9651 |

| Memory (MB) | 208.75 | 136.53 | 160.98 | 196.88 | 243.74 | 309.1 | 378.07 | 544.15 |

| Training (s) | 152.3 | 159.53 | 170 | 183.57 | 200.49 | 206.17 | 246.1 | 257.95 |

| Test (s) | 1.07 | 1.09 | 1.19 | 1.27 | 1.39 | 1.54 | 1.65 | 1.88 |

V Conclusions

This research has introduced HSIMamba, an innovative bidirectional hyperspectral imaging model that seamlessly incorporates bidirectional state space modules into its design. This model is distinguished by its ability to master the dual challenges of compressing high-dimensional spectral data and navigating the intricacies of both spatial and spectral dimensions with unprecedented efficiency.

Extensive evaluations on three different hyperspectral datasets have consistently shown that HSIMamba outperforms traditional transformer-based approaches. This significant advance not only underscores the robustness and flexibility of the model, but also heralds a paradigm shift in hyperspectral image classification.

In contrast to the resource-intensive nature of conventional transformer models, a key feature of HSIMamba is its exceptional efficiency. HSIMamba has been specifically designed to reduce memory requirements, enabling rapid training and testing without sacrificing accuracy. This efficiency allows advanced hyperspectral image analysis to be deployed on platforms with limited computing capacity, thereby democratising access to cutting-edge remote sensing technologies.

In addition, the frugal use of resources by the model creates opportunities for real-time applications and the management of large datasets in resource-limited environments. The combination of efficiency and improved performance makes HSIMamba a crucial advancement in hyperspectral imaging, offering a future where advanced remote sensing technologies are both powerful and accessible.

In summary, the introduction of HSIMamba is a significant advancement in hyperspectral imaging. Its unique combination of bidirectional processing, outstanding classification performance, and computational efficiency sets new standards for the field. This model not only showcases the potential of incorporating state-space models into hyperspectral analysis but also encourages further exploration into their application in remote sensing data analysis. The results of this study support the innovative trajectory of HSIMamba and suggest further research into its capabilities and broader implications for remote sensing.

References

- [1] Abdulaziz Amer Aleissaee, Amandeep Kumar, Rao Muhammad Anwer, Salman Khan, Hisham Cholakkal, Gui-Song Xia, and Fahad Shahbaz Khan. Transformers in remote sensing: A survey. Remote Sensing, 15(7):1860, 2023.

- [2] Xun Cao. Hyperspectral/Multispectral Imaging, pages 592–598. Springer International Publishing, Cham, 2021.

- [3] Yushi Chen, Hanlu Jiang, Chunyang Li, Xiuping Jia, and Pedram Ghamisi. Deep feature extraction and classification of hyperspectral images based on convolutional neural networks. IEEE transactions on geoscience and remote sensing, 54(10):6232–6251, 2016.

- [4] Yushi Chen, Zhouhan Lin, Xing Zhao, Gang Wang, and Yanfeng Gu. Deep learning-based classification of hyperspectral data. IEEE Journal of Selected topics in applied earth observations and remote sensing, 7(6):2094–2107, 2014.

- [5] Daniel Y Fu, Tri Dao, Khaled K Saab, Armin W Thomas, Atri Rudra, and Christopher Ré. Hungry hungry hippos: Towards language modeling with state space models. arXiv preprint arXiv:2212.14052, 2022.

- [6] Ram Nivas Giri, Rekh Ram Janghel, Saroj Kumar Pandey, Himanshu Govil, and Anurag Sinha. Enhanced hyperspectral image classification through pretrained cnn model for robust spatial feature extraction. Journal of Optics, pages 1–14, 2023.

- [7] Albert Gu and Tri Dao. Mamba: Linear-time sequence modeling with selective state spaces. arXiv preprint arXiv:2312.00752, 2023.

- [8] Albert Gu, Karan Goel, and Christopher Ré. Efficiently modeling long sequences with structured state spaces. arXiv preprint arXiv:2111.00396, 2021.

- [9] Renlong Hang, Qingshan Liu, Danfeng Hong, and Pedram Ghamisi. Cascaded recurrent neural networks for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 57(8):5384–5394, 2019.

- [10] Ji He, Lina Zhao, Hongwei Yang, Mengmeng Zhang, and Wei Li. Hsi-bert: Hyperspectral image classification using the bidirectional encoder representation from transformers. IEEE Transactions on Geoscience and Remote Sensing, 58(1):165–178, 2019.

- [11] Danfeng Hong, Lianru Gao, Jing Yao, Bing Zhang, Antonio Plaza, and Jocelyn Chanussot. Graph convolutional networks for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 59(7):5966–5978, 2020.

- [12] Danfeng Hong, Zhu Han, Jing Yao, Lianru Gao, Bing Zhang, Antonio Plaza, and Jocelyn Chanussot. Spectralformer: Rethinking hyperspectral image classification with transformers. IEEE Transactions on Geoscience and Remote Sensing, 60:1–15, 2021.

- [13] Tien-Heng Hsieh and Jean-Fu Kiang. Comparison of cnn algorithms on hyperspectral image classification in agricultural lands. Sensors, 20(6):1734, 2020.

- [14] Md Mohaiminul Islam and Gedas Bertasius. Long movie clip classification with state-space video models. In European Conference on Computer Vision, pages 87–104. Springer, 2022.

- [15] Sen Jia and Yifan Wang. Multiscale convolutional transformer with center mask pretraining for hyperspectral image classification. arXiv preprint arXiv:2203.04771, 2022.

- [16] Shutao Li, Weiwei Song, Leyuan Fang, Yushi Chen, Pedram Ghamisi, and Jon Atli Benediktsson. Deep learning for hyperspectral image classification: An overview. IEEE Transactions on Geoscience and Remote Sensing, 57(9):6690–6709, 2019.

- [17] Wenzhi Liao, Frieke Van Coillie, Lianru Gao, Liwei Li, Bing Zhang, and Jocelyn Chanussot. Deep learning for fusion of APEX hyperspectral and full-waveform LiDAR remote sensing data for tree species mapping. IEEE Access, 6:68716–68729, 2018.

- [18] Muhammad Mateen, Junhao Wen, Muhammad Azeem Akbar, et al. The role of hyperspectral imaging: A literature review. International Journal of Advanced Computer Science and Applications, 9(8), 2018.

- [19] Lichao Mou, Pedram Ghamisi, and Xiao Xiang Zhu. Deep recurrent neural networks for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 55(7):3639–3655, 2017.

- [20] Eric Nguyen, Karan Goel, Albert Gu, Gordon Downs, Preey Shah, Tri Dao, Stephen Baccus, and Christopher Ré. S4nd: Modeling images and videos as multidimensional signals with state spaces. Advances in neural information processing systems, 35:2846–2861, 2022.

- [21] Mercedes E Paoletti, Juan Mario Haut, Ruben Fernandez-Beltran, Javier Plaza, Antonio Plaza, Jun Li, and Filiberto Pla. Capsule networks for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 57(4):2145–2160, 2018.

- [22] Lingyan Ran, Yanning Zhang, Wei Wei, and Tao Yang. Bands sensitive convolutional network for hyperspectral image classification. In Proceedings of the International Conference on Internet Multimedia Computing and Service, pages 268–272, 2016.

- [23] Behnood Rasti, Danfeng Hong, Renlong Hang, Pedram Ghamisi, Xudong Kang, Jocelyn Chanussot, and Jon Atli Benediktsson. Feature extraction for hyperspectral imagery: The evolution from shallow to deep: Overview and toolbox. IEEE Geoscience and Remote Sensing Magazine, 8(4):60–88, 2020.

- [24] Vivek Sharma, Ali Diba, Tinne Tuytelaars, and Luc Van Gool. Hyperspectral cnn for image classification & band selection, with application to face recognition. Technical report KUL/ESAT/PSI/1604, KU Leuven, ESAT, Leuven, Belgium, 2016.

- [25] Hojat Shirmard, Ehsan Farahbakhsh, R Dietmar Müller, and Rohitash Chandra. A review of machine learning in processing remote sensing data for mineral exploration. Remote Sensing of Environment, 268:112750, 2022.

- [26] Le Sun, Guangrui Zhao, Yuhui Zheng, and Zebin Wu. Spectral–spatial feature tokenization transformer for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 60:1–14, 2022.

- [27] Yongting Tao and Jun Zhou. Automatic apple recognition based on the fusion of color and 3D feature for robotic fruit picking. Computers and Electronics in Agriculture, 142:388–396, 2017.

- [28] Radhesyam Vaddi and Prabukumar Manoharan. Hyperspectral image classification using cnn with spectral and spatial features integration. Infrared Physics & Technology, 107:103296, 2020.

- [29] Krishna Prasad Vadrevu, Thuy Le Toan, Shibendu Shankar Ray, and Christopher O Justice. Remote Sensing of Agriculture and Land Cover/Land Use Changes in South and Southeast Asian Countries. Springer, 2022.

- [30] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- [31] Jue Wang, Wentao Zhu, Pichao Wang, Xiang Yu, Linda Liu, Mohamed Omar, and Raffay Hamid. Selective structured state-spaces for long-form video understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6387–6397, 2023.

- [32] Xiaofei Yang, Weijia Cao, Yao Lu, and Yicong Zhou. Hyperspectral image transformer classification networks. IEEE Transactions on Geoscience and Remote Sensing, 60:1–15, 2022.

- [33] Shiqi Yu, Sen Jia, and Chunyan Xu. Convolutional neural networks for hyperspectral image classification. Neurocomputing, 219:88–98, 2017.

- [34] Peicheng Zhou, Junwei Han, Gong Cheng, and Baochang Zhang. Learning compact and discriminative stacked autoencoder for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 57(7):4823–4833, 2019.

- [35] Lianghui Zhu, Bencheng Liao, Qian Zhang, Xinlong Wang, Wenyu Liu, and Xinggang Wang. Vision mamba: Efficient visual representation learning with bidirectional state space model. arXiv preprint arXiv:2401.09417, 2024.

- [36] Lin Zhu, Yushi Chen, Pedram Ghamisi, and Jón Atli Benediktsson. Generative adversarial networks for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 56(9):5046–5063, 2018.