Human Impedance Modulation to Improve Visuo-Haptic Perception

Abstract

Humans activate muscles to shape the mechanical interaction with their environment, but can they harness this control mechanism to best sense the environment? We investigated how participants adapt their muscle activation to visual and haptic information when tracking a randomly moving target with a robotic interface. The results show a differentiated effect of these sensory modalities: participants' muscle cocontraction increases with haptic noise and decreases with visual noise, in apparent contradiction to previous results. These results can be explained, and reconciled with previous findings, by considering spring-like muscle mechanics, where stiffness increases with cocontraction to regulate motion guidance. Increasing cocontraction to follow the motion plan more closely favors accurate visual over haptic information, while decreasing it avoids injecting visual noise and relies on accurate haptic information. We formulated this active sensing mechanism as the optimization of visuo-haptic information and effort (OIE). This OIE model can explain the adaptation of muscle activity to unimodal and multimodal sensory information when interacting with fixed or dynamic environments, or with another human, and can be used to optimize human-robot interaction.

Author summary:

It is widely accepted that the human CNS stiffens the limbs in response to movement errors arising from interactions. However, by systematically investigating how muscle cocontraction varies with incoming visual and haptic information, we show that this principle does not always hold: limb stiffness is in fact regulated by the brain to optimise the sensory information obtained from the interaction. The manuscript makes the following contributions:

- Muscle cocontraction (on which limb stiffness depends) changes in accordance with the quality of visual and haptic cues: it relaxes with increasing visual noise and stiffens with haptic perturbation, contrary to suggestions from previous studies.

- We propose a mathematical model of this active sensing mechanism, according to which muscle cocontraction is adapted by the brain to minimise the prediction error about the environment based on visual and haptic information, while saving effort.

- This computational model: i) predicts the observed results both qualitatively and quantitatively; ii) explains results from the literature on cocontraction adaptation to force fields and to interaction with human partners; iii) extends existing computational models and can be used to improve human-robot interaction.

I Introduction

How do humans interact with their environment? It is known that the central nervous system (CNS) regulates limb stiffness by coordinating muscle activation to shape the energy exchange with the environment [1, 2], for instance in the unstable situations typical of tool use [3, 4]. The prevailing explanation for the observed adaptation of muscle cocontraction is that individuals adjust stiffness to minimize errors in the presence of disturbances [5, 6, 7]. However, how this affects visuo-haptic sensing has received little attention. For instance, when skiing down a bumpy slope, should one stiffen the legs to best sense the terrain, or relax them to filter out perturbations? This question may be particularly important when visual information is degraded, such as in fog or at dawn, when one must rely on one's feet to feel the terrain and avoid falling.

Few studies have examined how muscle stiffness regulation is influenced by visual disturbances, and their results show complex response patterns. For example, [8] found that in arm reaching tasks, endpoint stiffness decreased with larger target sizes, indicating that the CNS increases stiffness when higher precision is required. However, endpoint stiffness did not significantly increase in response to lateral visual noise during arm reaching, unlike the increase observed with mechanical vibrations [9]. This suggests that the CNS may respond differently to visual and haptic disturbances. Further research is needed to explore how visual disturbances affect motion control through muscle stiffness regulation.

Visual and haptic information are critical in physical human-robot collaboration, including applications such as physical rehabilitation [10], collaborative robotics for industrial manufacturing [11], and shared control of semi-autonomous vehicles [12]. However, how the CNS combines these sensory inputs in real time remains unclear. When integrating sensory signals over short intervals, the CNS accounts for both sensory discrepancies and temporal delays to achieve optimal multi-sensory integration and feedback control [13]. Interestingly, the presence of visual feedback during a mechanical disturbance does not increase the magnitude of the muscle response but does reduce its variance [14]. Additionally, we observed a modulation of coactivation when physically connected individuals track a common target [15]. The partner with superior visual acuity tends to stiffen their arm and lead the movement, while the other relaxes their arm. Notably, the partners adjust their cocontraction differently depending on the levels of visual and haptic noise.

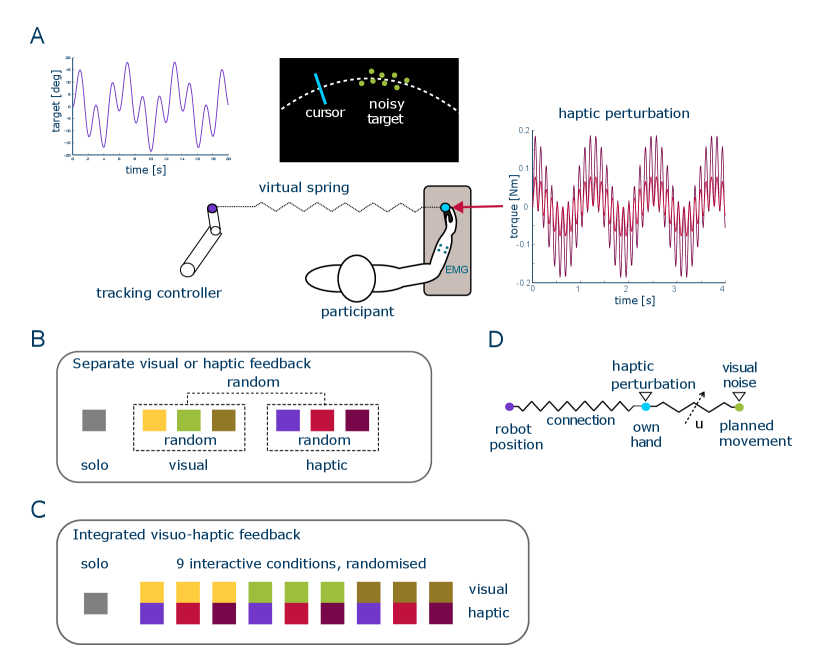

To systematically study how humans adapt their muscle activation to visual and haptic feedback, we conducted an experiment in which subjects tracked a randomly moving target using wrist flexion/extension while connected to the human-like tracking controller of [16] (Fig. 1A). We examined the influence of visual and haptic feedback with different levels of noise, first separately (Fig. 1B), then in combination (Fig. 1C). In the visual conditions, the target presented on the monitor was either a sharp disk or a dynamic cloud of normally distributed dots. We also introduced a haptic perturbation of varying amplitude to the interaction torque. Conditions with a specific noise level were presented pseudo-randomly. We observed that the visual and haptic noise levels have different effects on cocontraction adaptation, suggesting that the brain modulates body impedance based not only on movement error [17], but also on its influence on specific sensory interactions. Subsequently, we developed a computational model to examine the mechanism behind muscle cocontraction adaptation (Fig. 1D).

II Results

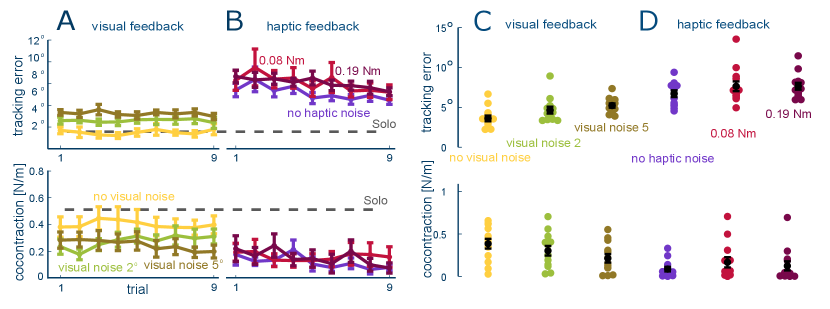

We first analyzed how visual feedback affects motion control (Fig. 2). After the initial solo trials with only visual feedback, the tracking error remains stable in each of the three visual feedback conditions. However, the tracking error increases with the magnitude of visual noise (larger error in each of the weak and strong conditions relative to sharp vision; pairwise Wilcoxon tests). In contrast, the cocontraction level decreases with larger visual noise (lower cocontraction in the strong and in the weak noisy conditions relative to sharp vision; paired t-tests).

These results seem to contradict previous observations that muscle cocontraction increases with the magnitude of movement error [18, 9, 19, 20]. However, they can be reconciled with these earlier findings by considering spring-like muscle mechanics [21], where muscle activation increases stiffness and viscosity while also shortening the muscle. By coordinating the activation of antagonist muscles, the CNS can thus control the force at the hand as well as the spring's stiffness and reference position. In particular, we can consider the reference position that emerges when the CNS controls muscle activity to move the limbs. As illustrated in Fig. 1D, with clear visual information, increasing muscle coactivation increases the limb's endpoint stiffness and guides it closer to this accurate motion plan. However, when the target information becomes noisy, stiffening the muscles would instead inject this noise into the limb's movement. This explains why cocontraction decreases with an increasing level of noise in the visual target.
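The stiffness trade-off described above can be illustrated with a deliberately simplified static model, which is our own illustrative assumption and not the model fitted in this paper. The limb, with stiffness `K`, pulls the hand toward the own motion plan (target plus visual noise), while a haptic connection of stiffness `kappa` pulls it toward a guidance signal (target plus haptic noise). At equilibrium the hand settles at the stiffness-weighted average of the two, so raising `K` follows the plan more closely but also passes more of the plan's noise to the hand. The function names and all numbers are hypothetical.

```python
import numpy as np


def hand_error_std(K, kappa, sigma_plan, sigma_haptic, n=200_000, seed=0):
    """Monte Carlo std of the hand position around the true target.

    Illustrative static model (an assumption): the limb spring K pulls the hand
    toward x_plan = target + visual noise, the haptic spring kappa pulls it toward
    x_guide = target + haptic noise. The equilibrium of
    K*(x_plan - x) + kappa*(x_guide - x) = 0 is the weighted average below.
    """
    rng = np.random.default_rng(seed)
    target = 0.0
    x_plan = target + rng.normal(0.0, sigma_plan, n)
    x_guide = target + rng.normal(0.0, sigma_haptic, n)
    x_hand = (K * x_plan + kappa * x_guide) / (K + kappa)
    return float(np.std(x_hand - target))


def best_stiffness(kappa, sigma_plan, sigma_haptic):
    """Error-minimising limb stiffness in this model: K / kappa = sigma_h^2 / sigma_plan^2."""
    return kappa * sigma_haptic**2 / sigma_plan**2


kappa, sigma_haptic = 1.0, 2.0
for label, sigma_plan in [("sharp vision", 1.0), ("noisy vision", 3.0)]:
    K_opt = best_stiffness(kappa, sigma_plan, sigma_haptic)
    err = hand_error_std(K_opt, kappa, sigma_plan, sigma_haptic)
    print(f"{label}: K* = {K_opt:.2f}, hand error std = {err:.3f}")
```

With these illustrative numbers the optimal limb stiffness drops from 4 to about 0.44 when the plan becomes noisier, mirroring the relaxation observed with visual noise, and the minimal error variance equals the maximum-likelihood combination $\sigma_{\mathrm{plan}}^2\sigma_h^2/(\sigma_{\mathrm{plan}}^2+\sigma_h^2)$.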

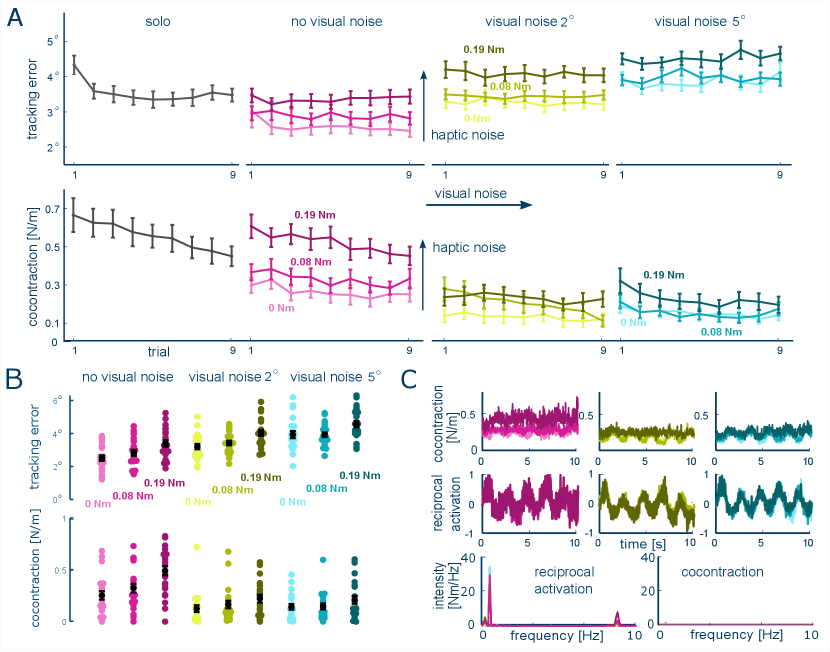

Next, we investigated how the CNS regulates muscle cocontraction when both visual and haptic feedback are provided. Fig. 3A shows that the error decreases rapidly in the solo trials and reaches a steady level over the last seven of them. The error then remains stable in all interactive conditions (non-significant slope). A two-way ART ANOVA shows that the tracking error depends on both visual and haptic noise, increasing with the amplitude of either (Wilcoxon tests for all pairwise comparisons between noise levels).

Muscle cocontraction tends to decrease across trials, indicating a learning effect in integrating the two sensory feedback modalities (especially at the largest level of haptic noise with sharp vision). Consistent with the visual-only condition of the previous experiment, cocontraction decreases with visual noise, as shown in Fig. 3C (significant interaction between the visual and haptic noise factors): for each level of haptic noise, the sharp vision condition results in a higher cocontraction level than both the weak and strong visual noise conditions (Wilcoxon tests). In contrast, muscle cocontraction increases with the level of haptic perturbation, especially between the weak and strong haptic perturbation conditions. The size of this increase depends on the visual noise level: it is largest in the sharp visual condition (Wilcoxon tests) and becomes less pronounced as visual noise increases (Wilcoxon tests).

To understand how the activity of the antagonistic muscles is modulated dynamically, we align the reciprocal activation and cocontraction profiles as shown in Fig. 3C. Muscle cocontraction remains relatively constant within each sensory noise condition. In contrast, reciprocal activation is modulated to produce the movement dynamics (the average Pearson correlation coefficient between reciprocal activation and target movement is 0.82 ± 0.09). The frequency spectrum of reciprocal activation has three peaks at the target movement frequencies (0.2, 0.5 and 8.5 Hz), in contrast to the essentially flat spectrum of muscle cocontraction. These results indicate that reciprocal activation generates the tracking movement and responds to the haptic perturbation, while the cocontraction level is regulated according to the specific noise condition.
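The correlation and spectral analysis described above can be sketched on synthetic signals. Everything in this example is an assumption for illustration: the target is a sum of three sinusoids at placeholder frequencies (chosen to fall on exact FFT bins of a 20 s trial, not the experiment's spectrum), the "reciprocal activation" is a scaled copy of the target plus noise, and the "cocontraction" is a constant plus noise. The helper names `dominant_frequencies` and `peak_to_median` are hypothetical.

```python
import numpy as np

FS = 100.0                 # sampling rate in Hz
N = int(20.0 * FS)         # samples in one 20 s trial
FREQS = (0.2, 0.5, 1.0)    # illustrative target frequencies (not the experiment's)
AMPS = (1.0, 0.6, 0.3)     # illustrative amplitudes


def dominant_frequencies(signal, fs, k=3):
    """Return the k frequencies (Hz) with the largest FFT magnitude, after mean removal."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()
    magnitude = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return np.sort(freqs[np.argsort(magnitude)[-k:]])


def peak_to_median(signal):
    """Largest over median FFT magnitude, DC bin excluded (large = peaked spectrum)."""
    x = np.asarray(signal, dtype=float)
    magnitude = np.abs(np.fft.rfft(x - x.mean()))[1:]
    return float(magnitude.max() / np.median(magnitude))


rng = np.random.default_rng(1)
t = np.arange(N) / FS
target = sum(a * np.sin(2 * np.pi * f * t) for a, f in zip(AMPS, FREQS))
# Synthetic stand-ins: reciprocal activation follows the target, cocontraction is flat.
reciprocal = 0.8 * target + rng.normal(0.0, 0.1, N)
cocontraction = 0.5 + rng.normal(0.0, 0.02, N)

print("Pearson r:", round(float(np.corrcoef(reciprocal, target)[0, 1]), 3))
print("reciprocal activation peaks (Hz):", dominant_frequencies(reciprocal, FS))
print("peak/median, reciprocal vs cocontraction:",
      round(peak_to_median(reciprocal), 1), round(peak_to_median(cocontraction), 1))
```

A peaked spectrum (large peak-to-median ratio) at the target frequencies indicates a signal that drives the movement, whereas a ratio close to that of white noise indicates a signal with no systematic modulation at those frequencies.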

III Modelling of visuo-haptic sensing

What are the principles of cocontraction adaptation? The above experiments show that cocontraction tends to decrease with practice and is modulated both by visual noise of the target and by external haptic noise. However, these two noise sources have opposite effects: cocontraction decreases with a larger level of visual noise, but increases with haptic noise. We posit that these apparently contradictory trends can be explained through the following sensorimotor interaction principles:

- The CNS generates muscle activation by using reciprocal activation to move the limbs and coactivation to guide them along a motion plan with suitable viscoelasticity [1].

- Cocontraction is adapted to maximise performance, considering both visual target noise and haptic noise at the limbs, while concurrently minimising effort.

The mechanics of these principles are illustrated in Fig. 1D, where muscle cocontraction tunes the viscoelasticity of the hand to follow the planned movement. When the target is visually sharp, the motion plan is accurate, so it is useful to stiffen in order to follow it closely. However, when the target is noisy, it is preferable to relax in order to avoid injecting one's own visual noise into the hand movement and to benefit from the external haptic guidance.

These principles can be formulated mathematically by considering the maximum-likelihood prediction error when integrating visual and haptic information:

$$ E = \frac{\sigma_v^2\,\sigma_h^2}{\sigma_v^2 + \sigma_h^2} \tag{1} $$

with $\sigma_h$ the standard deviation of the haptic noise exerted on the limb. Critically, the standard deviation $\sigma_v$ of hand movement relative to the motion plan following the target can be regulated through the muscle cocontraction $u$.
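The maximum-likelihood prediction error introduced above is the standard result of fusing two independent, unbiased Gaussian estimates of the same quantity with the variance-minimizing weight. As a short derivation, write $x_v$ and $x_h$ for visual and haptic estimates of the target position $x^*$ with standard deviations $\sigma_v$ and $\sigma_h$, and $w$ for the weight given to vision (these estimate symbols are introduced here for illustration only):

$$
\hat{x} = w\,x_v + (1-w)\,x_h, \qquad
\operatorname{Var}(\hat{x} - x^*) = w^2\sigma_v^2 + (1-w)^2\sigma_h^2,
$$

$$
\frac{\partial \operatorname{Var}}{\partial w} = 2w\sigma_v^2 - 2(1-w)\sigma_h^2 = 0
\;\Longrightarrow\;
w^\star = \frac{\sigma_h^2}{\sigma_v^2 + \sigma_h^2},
\qquad
\operatorname{Var}^\star = \frac{\sigma_v^2\,\sigma_h^2}{\sigma_v^2 + \sigma_h^2}.
$$

Since $\partial \operatorname{Var}^\star / \partial \sigma_v^2 = \sigma_h^4/(\sigma_v^2+\sigma_h^2)^2$, reducing $\sigma_v$ always lowers the fused error, but the benefit shrinks when vision is already noisy (large $\sigma_v$) and grows with the haptic noise $\sigma_h$, which is the qualitative pattern observed in the experiments.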

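The above sensorimotor interaction principles thus correspond to the concurrent minimization of the prediction error $E(u)$ and the effort $u^2$, i.e. of the cost function

$$ V(u) = E(u) + \lambda\,u^2 \tag{2} $$

with the effort ratio $\lambda$. Muscle cocontraction can then be adapted from one trial $k$ to the next using gradient descent with a learning rate $\alpha > 0$:

$$ u_{k+1} = u_k - \alpha\,\frac{\partial V}{\partial u}(u_k) \tag{3} $$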
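To show how a gradient-descent rule of this kind can produce the observed trends, the sketch below iterates it under explicitly hypothetical ingredients: (i) the hand-movement variance is $\sigma_v^2 = \sigma_{\mathrm{vis}}^2 + \sigma_c(u)^2$, with a compliance term $\sigma_c(u) = s_0 e^{-k u}$ that shrinks as cocontraction rises (an illustrative choice, not the identified model); (ii) the effort term is quadratic, $\lambda u^2$; (iii) the derivative is taken numerically; and (iv) cocontraction is clipped to a normalised range [0, 1]. All parameter values are made up for illustration and are not fitted to the data.

```python
import numpy as np


def prediction_error(sigma_v, sigma_h):
    """Maximum-likelihood variance when fusing visual and haptic estimates."""
    return sigma_v**2 * sigma_h**2 / (sigma_v**2 + sigma_h**2)


def cost(u, sigma_vis, sigma_h, lam=0.1, s0=1.0, k=2.0):
    """Hypothetical OIE-style cost: prediction error plus quadratic effort."""
    sigma_c = s0 * np.exp(-k * u)                 # compliance noise, falls with cocontraction
    sigma_v = np.sqrt(sigma_vis**2 + sigma_c**2)  # independent noises add in variance
    return prediction_error(sigma_v, sigma_h) + lam * u**2


def adapt_cocontraction(sigma_vis, sigma_h, u0=0.5, rate=0.2, trials=2000, h=1e-5):
    """Gradient descent u <- u - rate * dV/du (central difference), u kept in [0, 1]."""
    u = u0
    for _ in range(trials):
        grad = (cost(u + h, sigma_vis, sigma_h) - cost(u - h, sigma_vis, sigma_h)) / (2 * h)
        u = float(np.clip(u - rate * grad, 0.0, 1.0))
    return u


for sigma_vis in (0.5, 1.0):
    for sigma_h in (0.5, 1.0):
        u = adapt_cocontraction(sigma_vis, sigma_h)
        print(f"visual noise {sigma_vis}, haptic noise {sigma_h}: u* = {u:.3f}")
```

In this toy setting the converged cocontraction is lower for the noisier visual condition and higher for the noisier haptic condition, matching the direction of the experimental effects; the magnitudes depend entirely on the assumed parameters.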

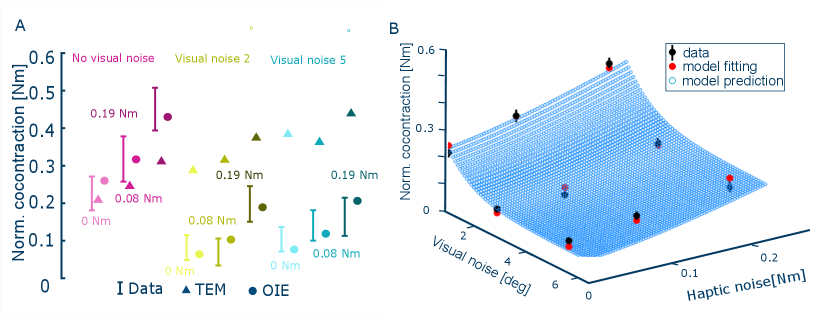

This optimization of information and effort (OIE) model was tested on the data of the tracking experiment with combined visual and haptic noise. The effective noise values that best fit the nine data points of Fig. 4 are given in Table I, yielding an effort ratio λ = 2.26. The cocontraction values predicted by the model are shown in Fig. 4. We also tested the tracking error minimisation (TEM) model of [17], which explains motor learning in novel force fields. In this model, the coactivation increases from trial to trial to minimize the tracking error $e_k$, and decreases to minimize effort, according to

$$ u_{k+1} = u_k + \beta\,e_k - \gamma \tag{4} $$

with positive gains $\beta$ and $\gamma$.
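For comparison, the sketch below iterates a trial-by-trial TEM-style update of the form $u_{k+1} = u_k + \beta e_k - \gamma$; this form and the gains are assumptions for illustration. The tracking-error model `tracking_error` is also hypothetical: the error grows with the combined visual and haptic noise and shrinks with cocontraction. Because any noise source raises the error, the fixed point of this rule rises with visual noise as well as with haptic noise, which is the trend the experiment contradicts for vision.

```python
import math


def tracking_error(u, sigma_vis, sigma_h, g=5.0):
    """Hypothetical error model: total noise attenuated by cocontraction u."""
    return math.hypot(sigma_vis, sigma_h) / (1.0 + g * u)


def tem_adapt(sigma_vis, sigma_h, beta=0.1, gamma=0.1, u0=0.5, trials=500):
    """TEM-style update: cocontraction rises with error and decays by a constant."""
    u = u0
    for _ in range(trials):
        e = tracking_error(u, sigma_vis, sigma_h)
        u = min(1.0, max(0.0, u + beta * e - gamma))
    return u


for sigma_vis in (1.0, 2.0):
    print(f"visual noise {sigma_vis}: u* = {tem_adapt(sigma_vis, 1.0):.3f}")
```

At the fixed point $\beta e = \gamma$, so with these illustrative values the cocontraction settles where the modelled error equals $\gamma/\beta = 1$, which requires more cocontraction whenever either noise source grows.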

The cocontraction values predicted by the OIE and TEM models are compared with the experimental data in Fig. 4A. While the TEM model correctly predicts the trend of increased cocontraction with haptic noise, it fails to capture the decrease in cocontraction with visual noise. In contrast, the OIE model closely matches the experimentally measured cocontraction levels across all combinations of visual and haptic noise. The superiority of the OIE model is further supported by the Akaike information criterion (AIC): the small-sample normalised AIC [22, 23] is -6.5 for the OIE model, lower than the value of -2.9 for the TEM model, showing that the OIE model provides a better trade-off between information loss and the number of independent parameters. Fig. 4B illustrates how the OIE model accurately predicts the specific modulation of cocontraction with varying levels of visual and haptic noise.
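For reference, a common small-sample correction of the AIC for a least-squares fit with $n$ data points, $k$ free parameters and residual sum of squares RSS is $\mathrm{AIC}_c = n\ln(\mathrm{RSS}/n) + 2k + 2k(k+1)/(n-k-1)$. The text does not spell out the exact normalisation used, so the function below is a sketch of this textbook form, not necessarily the computation behind the values reported above; the RSS values and parameter counts in the usage lines are hypothetical.

```python
import math


def aicc_least_squares(rss, n, k):
    """Small-sample corrected AIC for a least-squares fit (lower is better)."""
    if n - k - 1 <= 0:
        raise ValueError("AICc needs n > k + 1 data points")
    aic = n * math.log(rss / n) + 2 * k
    return aic + 2 * k * (k + 1) / (n - k - 1)


# Hypothetical comparison on nine data points (as in Fig. 4); the RSS values are made up.
n_points = 9
print("model A:", round(aicc_least_squares(rss=0.9, n=n_points, k=2), 2))
print("model B:", round(aicc_least_squares(rss=1.5, n=n_points, k=2), 2))
```

The correction term penalises extra parameters more heavily when $n$ is small, which matters with only nine condition means.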

Table I: Effective haptic and visual noise values identified for each noise level.

| Noise strength | sharp | weak | strong |
|---|---|---|---|
| Effective haptic perturbation | 5.06 | 5.86 | 7.85 |
| Effective visual noise | 30.64 | 63.66 | 65.30 |

Could the OIE model predict the effect of blind haptic interaction? In this case, the lack of visual feedback can be modelled through visual noise with infinite deviation $\sigma_v \to \infty$, so that the prediction error of eq. (1) tends to $\sigma_h^2$ and the cost to minimize becomes $\sigma_h^2 + \lambda u^2$. The OIE model then predicts that subjects haptically connected to the controller tracking the target (but without visual feedback) would minimize cocontraction independently of the haptic noise level. The results of the experiment testing this prediction are shown in Fig. 2B. In the haptic-only feedback conditions, both tracking error and muscle cocontraction decreased over the trials (two-way ART ANOVA comparing the mean of all subjects in the first and last three trials). Consistent with the model prediction, muscle cocontraction remained at a minimal level and did not depend on the noise level (paired Wilcoxon tests). The tracking error changed little with the haptic perturbation level (two-way ART ANOVA).
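The limit invoked above can be made explicit. Assuming the maximum-likelihood prediction error $\sigma_v^2\sigma_h^2/(\sigma_v^2+\sigma_h^2)$, a quadratic effort term $\lambda u^2$, and a haptic noise $\sigma_h$ that does not depend on $u$ (all assumptions of this sketch):

$$
\lim_{\sigma_v\to\infty}\frac{\sigma_v^2\,\sigma_h^2}{\sigma_v^2+\sigma_h^2}
= \lim_{\sigma_v\to\infty}\frac{\sigma_h^2}{1+\sigma_h^2/\sigma_v^2} = \sigma_h^2,
\qquad
\frac{\partial}{\partial u}\left(\sigma_h^2 + \lambda u^2\right) = 2\lambda u > 0 \ \text{ for } u>0 .
$$

The cost therefore decreases monotonically as $u$ is lowered, so its minimum lies at the lowest admissible cocontraction whatever the value of $\sigma_h$, which is the prediction tested in Fig. 2B.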

IV Discussion

In physical human-robot interaction, sensory signals crucial for motor control are derived from both visual input and haptic feedback, which provides substantial information about the movement intentions of the connected agents. However, the process by which the CNS adjusts muscle cocontraction to optimize the use of visual and haptic signals, thereby enhancing the effectiveness of human-robot collaboration, remains unclear. This paper systematically investigated how different levels of visual and haptic disturbances affect muscle activation during target-tracking tasks.

In a first experiment, we evaluated the impact of noise on muscle control within either the visual or the haptic channel. Contrary to previous observations [17], muscle coactivation decreased with increasing levels of visual noise, while it showed no substantial variation with the intensity of haptic disturbances. This suggests that the CNS regulates muscle coactivation by considering not just movement error but also sensing uncertainty. When there is no visual noise and the motion plan can be relied upon, muscle cocontraction increases to ensure that the planned trajectory is followed closely. Conversely, as visual noise and the associated uncertainty in motion planning increase, muscle coactivation decreases. In the absence of visual feedback, which can be interpreted as maximal visual uncertainty, muscle cocontraction remains low and becomes insensitive to the intensity of haptic disturbance.

The second experiment extended these findings by examining the influence of noise when both visual and haptic feedback were available. Consistent with the visual-only condition, muscle cocontraction decreased with increasing visual noise; it also increased with rising haptic disturbance, in line with previous studies [18, 6]. This indicates that the cocontraction adaptation mechanism is influenced by both visual and haptic feedback. When visual feedback is clear, muscle cocontraction increases significantly with rising haptic noise; however, this response diminishes markedly when visual feedback is blurred. This behaviour suggests that the CNS's reliance on a particular sensory modality correlates with the uncertainty associated with each feedback type, revealing a complex, nonlinear interplay between these modalities. Notably, muscle coactivation drops sharply at the onset of visual noise but decreases only slightly as visual noise intensifies further. Additionally, muscle coactivation shows a general downward trend with an increasing number of experimental trials, likely reflecting the CNS's strategy to minimize metabolic cost [24, 17].

By systematically studying how subjects interact with visual and haptic information, we were able to decipher the mechanism of body impedance adaptation during the interaction with the environment. Our experimental and simulation results demonstrated that subjects regulate co-activation to optimally integrate visual and haptic information while minimizing effort. This enables them to extract useful information about their environment and plan accurate movements. A computational model based on the optimization of information and effort (OIE) was used to predict muscle cocontraction levels under different visuo-haptic sensory conditions. This OIE model explains how the CNS integrates multi-modal sensory information, considering their respective noise level, to enhance perceptual acuity with minimal metabolic cost. Notably, the results obtained could not be predicted by previous models of muscle cocontraction adaptation [17, 25, 26], which only considered movement error and suggested that muscle activation would increase with either visual or haptic noise. In contrast, the OIE model accounts for sensorimotor interactions and the influence of each sensory modality’s noise on target perception, tracking performance, and effort, thereby successfully predicting the observed actions.

The OIE model can also explain the experimental results presented in [8], where participants marginally reduced stiffness in response to a larger target size, which corresponds to increased visual uncertainty about the goal. It is also compatible with existing models of motor adaptation to external haptic [2, 18, 27] or visual [9, 19, 20] perturbations. A destabilizing force field [2, 27] and perturbations of the hand position in the visual feedback [9, 19, 20] both correspond to haptic noise and result in increased stiffness, as predicted by the OIE model. The OIE model thus extends the computational models of [17, 25], according to which stiffness increases with hand movement error.

In conclusion, muscle cocontraction is not automatically tuned to minimize movement error as previously thought [17, 25, 26]. Instead, it is skilfully regulated by the CNS to extract maximal information from the environment. The OIE model presented in this paper explains the adaptation of muscle cocontraction during interactions with force fields, with dynamic environments involving visual noise at the target and haptic noise at the hand (as in the experiments of this paper), and during collaboration with other humans [15]. While active sensing has been identified previously in vision [28], this is, to our knowledge, the first evidence of body adaptation to improve visuo-haptic sensing. Furthermore, while sophisticated algorithms have been developed for such active inference (e.g. [29]), the experimental results were well predicted by the simple OIE model. This active sensing computational mechanism may be used to design robotic systems that adapt to their user as a human partner would, with various applications in physical human-robot collaboration.

V Materials and Methods

V-A Experiment setup

Each participant was seated on a height-adjustable chair next to the Hi5 robotic interface [30], with the dominant wrist attached to the handle of the interface to perform flexion/extension movements. Participants received visual feedback of the target angle and of their wrist flexion/extension angle on a monitor, and/or haptic feedback from the interaction with the tracking controller of [16] (Fig. 1A).

The Hi5 handle is connected to a current-controlled DC motor (MSS8, Mavilor) that can generate torques of up to 15 Nm, and is equipped with a differential encoder (RI 58-O, Hengstler) to measure the wrist angle and a torque sensor (TRT-100, Transducer Technologies) to measure the exerted torque in the range [0, 11.29] Nm. The handle is controlled at 1 kHz using LabVIEW Real-Time v14.0 (National Instruments) and a data acquisition board (DAQ-PCI-6221, National Instruments), while the data were recorded at 100 Hz.

The activation of two antagonist wrist muscles, the flexor carpi radialis (FCR) and the extensor carpi radialis longus (ECRL), was recorded from each participant during the movement. Muscle electromyographic (EMG) signals were measured with surface electrodes using a medically certified non-invasive 16-channel EMG system, and the EMG data were recorded at 100 Hz. The raw EMG signal was i) high-pass filtered at 20 Hz using a second-order Butterworth filter to remove drifts, and ii) rectified and passed through a second-order low-pass Butterworth filter with a 15 Hz cutoff frequency to obtain the envelope of the EMG activity.
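The two-stage EMG processing described above can be sketched with SciPy's Butterworth design. Two choices are assumptions not stated in the text: zero-phase filtering with `filtfilt` (it runs each filter forward and backward, which removes phase lag but effectively doubles the filter order; a causal `lfilter` would be the alternative), and the helper name `emg_envelope`. The usage lines apply it at the 100 Hz rate stated above to a synthetic signal with a DC offset.

```python
import numpy as np
from scipy.signal import butter, filtfilt


def emg_envelope(raw, fs):
    """High-pass 20 Hz (2nd order), full-wave rectify, low-pass 15 Hz (2nd order)."""
    b_hp, a_hp = butter(2, 20.0, btype="highpass", fs=fs)
    detrended = filtfilt(b_hp, a_hp, raw)       # remove drifts and offsets
    rectified = np.abs(detrended)               # full-wave rectification
    b_lp, a_lp = butter(2, 15.0, btype="lowpass", fs=fs)
    return filtfilt(b_lp, a_lp, rectified)      # smooth envelope


rng = np.random.default_rng(0)
fs = 100.0
raw = 5.0 + rng.normal(0.0, 1.0, 2000)         # synthetic: DC offset plus noise
envelope = emg_envelope(raw, fs)
print(envelope.shape, round(float(envelope.mean()), 3))
```

The high-pass stage removes the offset entirely, so the envelope reflects only the fluctuating activity.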

V-B Tracking task

The participants were instructed to “track the moving target as accurately as possible” using wrist flexion-extension movements. The target was moving according to

| (5) |

where the time started in each trial at a randomly selected offset of the multi-sine function, in order to minimize memorization of the target's motion.
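A multi-sine target with a random starting offset can be generated as sketched below. The amplitudes, frequencies and offset range are placeholders, not the values of eq. (5); only the structure (a sum of sinusoids evaluated from a random per-trial offset time) follows the description above, and `target_angle` is a hypothetical name.

```python
import numpy as np

# Placeholder multi-sine parameters (NOT the values used in the experiment).
AMPLITUDES_DEG = np.array([10.0, 6.0, 3.0])
FREQUENCIES_HZ = np.array([0.2, 0.5, 1.0])


def target_angle(t, t_offset):
    """Multi-sine target angle (deg) evaluated from a per-trial time offset."""
    phase = 2.0 * np.pi * np.outer(np.asarray(t) + t_offset, FREQUENCIES_HZ)
    return np.sin(phase) @ AMPLITUDES_DEG


rng = np.random.default_rng(42)
t = np.arange(2000) / 100.0            # one 20 s trial sampled at 100 Hz
t_offset = rng.uniform(0.0, 100.0)     # random start time, drawn anew for each trial
x_target = target_angle(t, t_offset)
print(x_target.shape, round(float(x_target.min()), 1), round(float(x_target.max()), 1))
```

Because each trial starts from a different point of the same deterministic waveform, the statistics of the motion stay constant while its exact course is hard to memorize.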

In solo trials, the participants tracked the target without active torque from the robot. Otherwise, the participant's wrist was connected by a compliant virtual spring to the tracking controller of [16], producing the torque (in Nm)

$$ \tau = \kappa\,(x_r - x) \tag{6} $$

where $x$ (in degrees) denotes the participant's wrist angle and $x_r$ the controller's target angle computed as in [16]. The connection stiffness $\kappa$ was selected to be stiff enough for subjects to clearly sense the robot's movement, yet compliant enough to let them actively pursue the tracking task [31]. This controller has been shown to induce behaviour similar to interaction with a human partner [31]. Using this human-like interaction (rather than direct human interaction) allows the haptic noise to be manipulated directly.

The experiment considered three haptic noise conditions: sharp haptic information without noise (H0), a torque perturbation of smaller amplitude in the weak haptic noise condition (H1), and one of larger amplitude in the strong haptic noise condition (H2).
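The coupling described above can be sketched as a virtual spring plus an additive torque perturbation. The stiffness value, the perturbation amplitudes and the choice of Gaussian white noise resampled at every sample are assumptions for illustration (the text does not specify the perturbation's waveform), and `interaction_torque` is a hypothetical helper.

```python
import numpy as np

KAPPA = 0.1                                            # hypothetical stiffness, Nm/deg
PERTURBATION_SD = {"H0": 0.0, "H1": 0.2, "H2": 0.5}   # hypothetical amplitudes, Nm


def interaction_torque(x_wrist, x_partner, condition, rng):
    """Spring torque pulling the wrist toward the partner's angle, plus a random perturbation (Nm)."""
    spring = KAPPA * (x_partner - x_wrist)
    noise = rng.normal(0.0, PERTURBATION_SD[condition], np.shape(x_wrist))
    return spring + noise


rng = np.random.default_rng(0)
x_wrist = np.zeros(1000)                 # wrist held at 0 deg
x_partner = np.full(1000, 10.0)          # partner 10 deg away
for cond in ("H0", "H1", "H2"):
    tau = interaction_torque(x_wrist, x_partner, cond, rng)
    print(cond, round(float(tau.mean()), 2), round(float(tau.std()), 2))
```

The mean torque carries the partner's guidance while its spread grows with the haptic noise condition, which is the separation the experiment relies on.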

For visual feedback, the target that participants tracked was displayed on the monitor in three conditions. In the sharp visual condition V0, the target was displayed as an 8 mm diameter disk, as in the solo trials. In the noisy visual conditions V1 and V2, the target was displayed as a “cloud” of eight randomly distributed dots around the nominal target position, where each of the eight dots was sequentially replaced every 100 ms. The dots' vertical position relative to the target was normally distributed as $\mathcal{N}(0, (15\,\text{mm})^2)$, their angular position relative to the target as $\mathcal{N}(0, \sigma_\theta^2)$, and their velocity as $\mathcal{N}(0, (101.6\,\text{mm/s})^2)$. The weak visual noise condition V1 was defined by $\sigma_\theta = 21.32$ mm and the strong visual noise condition V2 by $\sigma_\theta = 52.78$ mm.
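One way to generate such a cloud is sketched below. The description leaves several details open, so the following are assumptions: one dot is respawned every 100 ms in round-robin order (so each dot lives 800 ms), a respawned dot keeps a fixed Gaussian offset from the moving target until its next respawn, the velocity component is omitted because its role is not specified, and `cloud_offsets` is a hypothetical name.

```python
import numpy as np

N_DOTS = 8
REFRESH_S = 0.1            # assumption: one dot is respawned every 100 ms, in turn
SD_VERTICAL_MM = 15.0


def cloud_offsets(t, sigma_theta_mm, rng):
    """Offsets (along the tracking axis, vertical) in mm of each dot from the target at times t.

    Dot i is respawned at times i*REFRESH_S + m*N_DOTS*REFRESH_S with fresh Gaussian
    offsets; between respawns its offset from the (moving) target stays fixed.
    """
    lifetime = N_DOTS * REFRESH_S
    n_spawns = int(np.ceil(t[-1] / lifetime)) + 2
    offset_h = rng.normal(0.0, sigma_theta_mm, (N_DOTS, n_spawns))
    offset_v = rng.normal(0.0, SD_VERTICAL_MM, (N_DOTS, n_spawns))
    dots = np.arange(N_DOTS)
    # index of the latest respawn of every dot at every time step (shifted to be >= 0)
    spawn = np.floor((t[:, None] - dots * REFRESH_S) / lifetime).astype(int) + 1
    return offset_h[dots, spawn], offset_v[dots, spawn]


rng = np.random.default_rng(3)
t = np.arange(2000) / 100.0                                  # 20 s at 100 Hz
dx, dy = cloud_offsets(t, sigma_theta_mm=21.32, rng=rng)     # weak-noise condition V1
print(dx.shape, round(float(dx.std()), 1), round(float(dy.std()), 1))
```

The displayed dot positions are then the target position plus `dx` along the tracking axis and `dy` vertically.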

V-C Muscle activation calibration

The participants placed their wrist in the most comfortable middle posture, which defined 0°. Constrained at that posture, they were then instructed to sequentially flex or extend the wrist to exert torque. Each phase was 4 s long and was followed by a 5 s rest period to avoid fatigue; the rest periods were used as reference activity for the relaxed condition. This procedure was repeated four times, at flexion/extension torque levels of {1, 2, 3, 4} Nm and {-1, -2, -3, -4} Nm, respectively.

The recorded muscle activity of each participant was then linearly regressed against the torque values. Specifically, the torque of the flexor muscle was modelled from the envelope of the EMG activity as

| (7) |

and similarly for the torque of the extensor muscle.
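A minimal sketch of this per-muscle calibration, assuming a linear model torque ≈ gain × envelope + intercept fitted by ordinary least squares with `numpy.polyfit`. Eq. (7) may differ in form (for example by subtracting the rest-level activity), the envelope values below are made up, and `calibrate_muscle` is a hypothetical name.

```python
import numpy as np


def calibrate_muscle(envelope_levels, torques_nm):
    """Least-squares fit torque = gain * envelope + intercept; returns (gain, intercept)."""
    gain, intercept = np.polyfit(envelope_levels, torques_nm, deg=1)
    return gain, intercept


# Synthetic flexor calibration: rest (0 Nm) plus the four torque levels 1-4 Nm.
torques = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
envelopes = np.array([0.02, 0.10, 0.18, 0.26, 0.34])    # made-up mean EMG envelopes
gain, intercept = calibrate_muscle(envelopes, torques)
print(f"gain = {gain:.2f} Nm/unit, intercept = {intercept:.2f} Nm")
flexor_torque = gain * 0.22 + intercept                 # torque estimate for a new envelope sample
```

The extensor would be calibrated the same way on the extension phases, giving each muscle's activity in torque units so that the two can be combined.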

V-D Experimental protocol

The experiment was approved by the Imperial College Research Ethics Committee (No. 15IC2470). Before participating in the experiment, each subject was informed about the investigation, then signed a consent form and filled out a demographic questionnaire and an Edinburgh Handedness Inventory form [32].

After the EMG calibration, the participants carried out nine solo trials to become familiar with the tracking task and the dynamics of the wrist interface. This was followed by a series of 20 s trials as described in Fig. 1B. After each trial, the target disappeared and the participants had to place their cursor on the starting position at the center of the screen. The next trial then started after a 5 s rest period and a 3 s countdown. The participants could take an extra break at will between trials by keeping the cursor away from the screen center.

V-D1 Behaviour with only visual or only haptic feedback

Thirteen naive subjects (six females and seven males) aged 21-25 years (mean = 22.5, sd = 1.05) were recruited to study the influence of visual and haptic feedback separately. One participant was left-handed (Laterality Quotient LQ -43) and the others were right-handed (LQ 40).

Each participant carried out two blocks, one with visual feedback only and one with haptic feedback only, presented in random order. The noise conditions were also presented in random order within each block. Nine interaction trials of 20 s were carried out in each condition.

V-D2 Behaviour with visual and haptic feedback

For the coupled visuo-haptic feedback experiment, 22 naive subjects (twelve females and ten males) aged 22-35 years (mean = 24.1, sd = 3.06) were recruited to study the combined effect of visual and haptic feedback. One participant was ambidextrous (LQ = -29) and the others were right-handed (LQ 40). Due to incomplete EMG data for two participants, data analysis was conducted using the remaining 20 subjects.

There were nine blocks of nine interaction trials, each block with one of the nine noise conditions (resulting from the combination of three visual noise levels and three haptic perturbation levels), presented in random order.

V-E Analysis

The tracking error and muscle cocontraction were used as metrics to analyze the participants' tracking performance and impedance adaptation to the different visuo-haptic noise conditions. To represent the overall tracking accuracy within a trial, the tracking error is defined as the root-mean-squared error between the target position $x^*$ and the hand position $x$ over the $N$ samples of one trial:

$$ e = \sqrt{\frac{1}{N}\sum_{n=1}^{N}\left(x^*(t_n) - x(t_n)\right)^2} \tag{8} $$

Using the torque regression model of eq. (7), the joint reciprocal activation is defined as

| (9) |

and cocontraction as

| (10) |

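The average muscle cocontraction of each trial for a specific subject is computed from the cocontraction $c(t)$ of eq. (10) over the $N$ samples of the trial as

$$ \bar{c} = \frac{1}{N}\sum_{n=1}^{N} c(t_n) \tag{11} $$

The normalised cocontraction of each participant, used in the subsequent analysis, is calculated as

$$ \hat{c} = \frac{\bar{c} - \bar{c}_{\min}}{\bar{c}_{\max} - \bar{c}_{\min}} \tag{12} $$

where $\bar{c}_{\min}$ and $\bar{c}_{\max}$ are respectively the minimum and the maximum of the averaged muscle cocontraction over all interaction trials of a participant.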
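The trial metrics can be computed as sketched below. The tracking error, the per-trial averaging and the min-max normalisation follow the definitions given in the text; for reciprocal activation and cocontraction we assume the common convention of taking the difference and the sum of the flexor and extensor torque magnitudes, which may differ from eqs. (9) and (10). All function names are hypothetical.

```python
import numpy as np


def rms_tracking_error(target, hand):
    """Root-mean-squared error between target and hand angle over one trial."""
    diff = np.asarray(target, dtype=float) - np.asarray(hand, dtype=float)
    return float(np.sqrt(np.mean(diff**2)))


def activation_components(tau_flexor, tau_extensor):
    """Assumed convention: reciprocal = difference, cocontraction = sum of torque magnitudes."""
    tau_f = np.abs(np.asarray(tau_flexor, dtype=float))
    tau_e = np.abs(np.asarray(tau_extensor, dtype=float))
    return tau_f - tau_e, tau_f + tau_e


def normalised_trial_cocontraction(trials):
    """Mean cocontraction per trial, min-max normalised over one participant's trials."""
    means = np.array([np.mean(c) for c in trials])
    span = means.max() - means.min()
    if span == 0:
        raise ValueError("all trials have the same mean cocontraction")
    return (means - means.min()) / span


print(rms_tracking_error([0.0, 3.0], [0.0, -1.0]))
print(normalised_trial_cocontraction([[1.0, 1.0], [2.0, 4.0], [5.0, 5.0]]))
```

The per-participant normalisation maps each subject's lowest and highest trial averages to 0 and 1, removing differences in absolute EMG gain between subjects before pooling.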

Linear mixed-effects (LME) statistical analysis via restricted maximum likelihood (REML) was applied to every condition for both the tracking error and cocontraction, in order to assess performance stability and evaluate whether the participants had adapted to the noise. The model was fitted with the trial number as a fixed slope (s) and a random intercept for each grouping factor (subject ID). For the experiment with visual or haptic feedback only, the Shapiro-Wilk test showed that cocontraction was normally distributed while the tracking error was not. For the experiment with combined visual and haptic feedback, the Shapiro-Wilk test showed that neither the tracking error nor the cocontraction was normally distributed. For metrics with a non-normal distribution, repeated-measures aligned rank transform (ART) ANOVA was used to analyse the effect of visual and haptic noise, and the paired Wilcoxon signed-rank test was used for post-hoc non-parametric hypothesis testing. For metrics with a normal distribution, repeated-measures ANOVA was used to assess the impact of visual or haptic noise, and paired t-tests were conducted for post-hoc comparisons between groups. The p-values of all comparisons were adjusted using the Holm-Bonferroni method.
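The Holm-Bonferroni adjustment mentioned above is simple enough to sketch directly: sort the $m$ p-values, multiply the $i$-th smallest by $m - i + 1$, enforce monotonicity with a running maximum, and cap the result at 1. This is a generic illustration (a library routine such as `statsmodels.stats.multitest.multipletests` with `method='holm'` provides the same adjustment), and `holm_adjust` is a hypothetical name.

```python
def holm_adjust(pvalues):
    """Holm-Bonferroni adjusted p-values, returned in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        candidate = min(1.0, (m - rank) * pvalues[i])   # smallest p-value gets factor m
        running_max = max(running_max, candidate)       # keep adjusted values monotone
        adjusted[i] = running_max
    return adjusted


# Hypothetical raw p-values from three post-hoc comparisons.
raw = [0.01, 0.04, 0.03]
print(holm_adjust(raw))
```

Compared with a plain Bonferroni correction, only the smallest p-value is multiplied by the full number of comparisons, which makes the procedure uniformly more powerful while still controlling the family-wise error rate.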

V-F Visuo-haptic noise model

The CNS perceives the target movement through visual feedback and through the haptic connection to the target, which is known to degrade the signal quality [33]. Assuming that this effect results in independent noise, the standard deviation of internal sensory noise is

$$ \sigma_v = \sqrt{\sigma_{\mathrm{vis}}^2 + \sigma_c^2} \tag{13} $$

where $\sigma_{\mathrm{vis}}$ is the deviation of visual noise. The experimental data exhibit a saturation of tracking error with increasing visual fuzziness (the size of the cloud), so the visual noise is modelled as

| (14) |

where $\sigma_\theta$ is the angular deviation of the target cloud given in the experiment. The deviation $\sigma_c$ due to joint compliance decreases with muscle coactivity [33] and was identified in [34] as

| (15) |

Furthermore, the tracking error increases with the amplitude $A$ of the haptic perturbation, so a quadratic regression model is used for the haptic noise:

$$ \sigma_h = a_0 + a_1 A + a_2 A^2 \tag{16} $$

The parameters of the computational model are identified using the cocontraction data of the last trial in all noise conditions. The effective visual noise deviation $\sigma_{\mathrm{vis}}$ and the effective haptic noise $\sigma_h$ were identified by minimising the variation of the cost derivative so as to satisfy the first-order necessary optimality (Karush–Kuhn–Tucker) conditions [35]. Considering the relationship between the noise deviations and the wrist's viscoelasticity, $\sigma_{\mathrm{vis}}$ and $\sigma_h$ each take three values (for the sharp, weak and strong conditions), resulting in six parameters to identify.

Using the collected cocontraction data, a grid search is performed to determine the effects of visual and haptic noise under the {sharp, weak, strong} conditions. Particle swarm optimisation (PSO) [36] is employed within the bounds [0, 70], yielding the noise values shown in Table I. The optimal effort ratio $\lambda = 2.26$ is then computed as the solution of

| (17) |

A least-squares regression using the identified parameters -1.21 and 66.18 in eq. (14) was used to express the relationship between the angular deviation $\sigma_\theta$ of the visual cloud and the effective deviation of visual noise $\sigma_{\mathrm{vis}}$. A quadratic regression with $a_0 = 5.05$, $a_1 = 6.84$ and $a_2 = 41.68$ was identified to model the relation between the perturbation amplitude $A$ and the effective haptic noise $\sigma_h$.
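The two fitted ingredients that are fully specified in this section can be evaluated directly. This sketch assumes that the three reported quadratic coefficients are, in order, the constant, linear and squared terms (consistent with the constant 5.05 matching the noise-free haptic value 5.06 in Table I), and that visual and compliance noise combine as independent variances. The saturating visual mapping and the compliance term are not reproduced here, so the compliance deviation is passed in as a plain number; the perturbation amplitudes in the usage lines are hypothetical.

```python
import math

# Quadratic haptic-noise regression; coefficient order (constant, linear, squared) assumed.
HAPTIC_COEFFS = (5.05, 6.84, 41.68)


def haptic_noise(amplitude):
    """Effective haptic noise as a quadratic function of the perturbation amplitude."""
    c0, c1, c2 = HAPTIC_COEFFS
    return c0 + c1 * amplitude + c2 * amplitude**2


def internal_visual_sd(visual_sd, compliance_sd):
    """Independent visual and compliance noise: variances add."""
    return math.hypot(visual_sd, compliance_sd)


print(haptic_noise(0.0))                          # no perturbation
for amplitude in (0.05, 0.1, 0.2):                # hypothetical amplitudes
    print(amplitude, round(haptic_noise(amplitude), 2))
print(round(internal_visual_sd(30.64, 5.0), 2))   # 5.0 is a made-up compliance term
```

Because the visual deviations in Table I are several times larger than any plausible compliance term, the combined deviation is dominated by the visual noise in the noisy conditions.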

Acknowledgment

We thank Dagmar Sternad for discussions on the experimental protocol, and the participants for taking part in the experiment.

References

- [1] N. Hogan, “Impedance control: an approach to manipulation,” IEEE American Control Conference, pp. 304–313, 1984.

- [2] E. Burdet, R. Osu, D. W. Franklin, T. E. Milner, H. Gomi, and M. Kawato, “The central nervous system stabilizes unstable dynamics by learning optimal impedance,” Nature, vol. 414, pp. 446–449, 2001.

- [3] D. W. Franklin, G. Liaw, T. Milner, R. Osu, E. Burdet, and M. Kawato, “Endpoint stiffness of the arm is directionally tuned to instability in the environment,” Journal of Neuroscience, vol. 27, no. 29, pp. 7705–7716, 2007. [Online]. Available: http://www.jneurosci.org/cgi/doi/10.1523/JNEUROSCI.0968-07.2007

- [4] L. P. J. Selen, D. W. Franklin, and D. M. Wolpert, “Impedance control reduces instability that arises from motor noise,” Journal of Neuroscience, vol. 29, no. 40, pp. 12606–12616, 2009.

- [5] D. W. Franklin, R. Osu, E. Burdet, M. Kawato, and T. E. Milner, “Adaptation to stable and unstable dynamics achieved by combined impedance control and inverse dynamics model,” Journal of Neurophysiology, vol. 90, no. 5, pp. 3270–3282, 2003.

- [6] K. P. Tee, D. W. Franklin, M. Kawato, T. E. Milner, and E. Burdet, “Concurrent adaptation of force and impedance in the redundant muscle system,” Biological Cybernetics, vol. 102, pp. 31–44, 2010.

- [7] C. Yang, G. Ganesh, S. Haddadin, S. Parusel, A. Albu-Schaeffer, and E. Burdet, “Human-like adaptation of force and impedance in stable and unstable interactions,” IEEE Transactions on Robotics, vol. 27, no. 5, pp. 918–930, 2011.

- [8] R. Osu, N. Kamimura, H. Iwasaki, E. Nakano, C. M. Harris, Y. Wada, and M. Kawato, “Optimal impedance control for task achievement in the presence of signal-dependent noise,” Journal of Neurophysiology, vol. 92, no. 2, pp. 1199–1215, 2004.

- [9] J. Wong, E. T. Wilson, N. Malfait, and P. L. Gribble, “The influence of visual perturbations on the neural control of limb stiffness,” Journal of Neurophysiology, vol. 101, no. 1, pp. 246–257, 2009.

- [10] R. Colombo and V. Sanguineti, Rehabilitation robotics: Technology and application. Academic Press, 2018.

- [11] J. E. Colgate, M. Peshkin, and S. H. Klostermeyer, “Intelligent assist devices in industrial applications: a review,” in Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), vol. 3, 2003, pp. 2516–2521.

- [12] M. Mulder, D. A. Abbink, and E. R. Boer, “Sharing control with haptics: Seamless driver support from manual to automatic control,” Human Factors, vol. 54, no. 5, pp. 786–798, 2012.

- [13] F. Crevecoeur, D. P. Munoz, and S. H. Scott, “Dynamic multisensory integration: somatosensory speed trumps visual accuracy during feedback control,” Journal of Neuroscience, vol. 36, no. 33, pp. 8598–8611, 2016.

- [14] S. Kasuga, F. Crevecoeur, K. P. Cross, P. Balalaie, and S. H. Scott, “Integration of proprioceptive and visual feedback during online control of reaching,” Journal of Neurophysiology, vol. 127, no. 2, pp. 354–372, 2022.

- [15] H. Börner, G. Carboni, X. Cheng, A. Takagi, S. Hirche, S. Endo, and E. Burdet, “Physically interacting humans regulate muscle coactivation to improve visuo-haptic perception,” Journal of Neurophysiology, vol. 129, no. 2, pp. 494–499, 2023.

- [16] A. Takagi, G. Ganesh, T. Yoshioka, M. Kawato, and E. Burdet, “Physically interacting individuals estimate the partner’s goal to enhance their movements,” Nature Human Behaviour, vol. 1, no. 3, 2017.

- [17] D. W. Franklin, E. Burdet, K. P. Tee, R. Osu, C. Chew, T. E. Milner, and M. Kawato, “CNS learns stable, accurate, and efficient movements using a simple algorithm,” Journal of Neuroscience, vol. 28, no. 44, pp. 11165–11173, 2008.

- [18] D. W. Franklin, U. So, M. Kawato, and T. E. Milner, “Impedance control balances stability with metabolically costly muscle activation,” Journal of Neurophysiology, vol. 92, no. 5, pp. 3097–3105, 2004.

- [19] S. Franklin, D. M. Wolpert, and D. W. Franklin, “Visuomotor feedback gains upregulate during the learning of novel dynamics,” Journal of Neurophysiology, vol. 108, no. 2, pp. 467–478, 2012.

- [20] J. A. Calalo, A. M. Roth, R. Lokesh, S. R. Sullivan, J. D. Wong, J. A. Semrau, and J. G. Cashaback, “The sensorimotor system modulates muscular co-contraction relative to visuomotor feedback responses to regulate movement variability,” Journal of Neurophysiology, vol. 129, no. 4, pp. 751–766, 2023.

- [21] E. Burdet, D. W. Franklin, and T. E. Milner, Human robotics: neuromechanics and motor control. MIT Press, 2013.

- [22] S. Portet, “A primer on model selection using the Akaike information criterion,” Infectious Disease Modelling, vol. 5, pp. 111–128, 2020.

- [23] N. Cohen and Y. Berchenko, “Normalized information criteria and model selection in the presence of missing data,” Mathematics, vol. 9, no. 19, p. 2474, 2021.

- [24] E. Todorov and M. I. Jordan, “Optimal feedback control as a theory of motor coordination,” Nature Neuroscience, vol. 5, no. 11, pp. 1226–1235, 2002.

- [25] E. A. Theodorou, J. Buchli, and S. Schaal, “A generalized path integral control approach to reinforcement learning,” Journal of Machine Learning Research, vol. 11, pp. 3137–3181, 2010.

- [26] Y. Li, G. Ganesh, N. Jarrassé, S. Haddadin, A. Albu-Schaeffer, and E. Burdet, “Force, impedance, and trajectory learning for contact tooling and haptic identification,” IEEE Transactions on Robotics, vol. 34, no. 5, pp. 1170–1182, 2018.

- [27] D. W. Franklin, G. Liaw, T. E. Milner, R. Osu, E. Burdet, and M. Kawato, “Endpoint stiffness of the arm is directionally tuned to instability in the environment,” Journal of Neuroscience, vol. 27, no. 29, pp. 7705–7716, 2007.

- [28] R. P. N. Rao and D. H. Ballard, “Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects,” Nature Neuroscience, vol. 2, no. 1, pp. 79–87, 1999. [Online]. Available: https://doi.org/10.1038/4580

- [29] K. J. Friston, J. Daunizeau, J. Kilner, and S. J. Kiebel, “Action and behavior: a free-energy formulation,” Biological Cybernetics, vol. 102, no. 3, pp. 227–260, 2010. [Online]. Available: https://doi.org/10.1007/s00422-010-0364-z

- [30] A. Melendez-Calderon, L. Bagutti, B. Pedrono, and E. Burdet, “Hi5: A versatile dual-wrist device to study human-human interaction and bimanual control,” in Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2011, pp. 2578–2583.

- [31] E. Ivanova, G. Carboni, J. Eden, J. Krueger, and E. Burdet, “For motion assistance humans prefer to rely on a robot rather than on an unpredictable human,” IEEE Open Journal of Engineering in Medicine and Biology, vol. 1, pp. 133–139, 2020.

- [32] R. C. Oldfield, “The assessment and analysis of handedness: The Edinburgh inventory,” Neuropsychologia, vol. 9, no. 1, pp. 97–113, 1971.

- [33] A. Takagi, F. Usai, G. Ganesh, V. Sanguineti, and E. Burdet, “Haptic communication between humans is tuned by the hard or soft mechanics of interaction,” PLoS Computational Biology, vol. 14, no. 3, pp. 1–17, 2018.

- [34] G. Carboni, T. Nanayakkara, A. Takagi, and E. Burdet, “Adapting the visuo-haptic perception through muscle coactivation,” Scientific Reports, vol. 11, no. 1, pp. 1–7, 2021.

- [35] S. Boyd and L. Vandenberghe, Convex Optimization. Cambridge University Press, 2004.

- [36] J. Kennedy and R. Eberhart, “Particle swarm optimization,” in International Conference on Neural Networks, vol. 4. IEEE, 1995, pp. 1942–1948.