Human Instance Matting via Mutual Guidance and Multi-Instance Refinement

Abstract

This paper introduces a new matting task called human instance matting (HIM), which requires the pertinent model to automatically predict a precise alpha matte for each human instance. Straightforward combination of closely related techniques, namely, instance segmentation, soft segmentation and human/conventional matting, will easily fail in complex cases requiring disentangling mingled colors belonging to multiple instances along hairy and thin boundary structures. To tackle these technical challenges, we propose a human instance matting framework, called InstMatt, where a novel mutual guidance strategy working in tandem with a multi-instance refinement module is used, for delineating multi-instance relationship among humans with complex and overlapping boundaries if present. A new instance matting metric called instance matting quality (IMQ) is proposed, which addresses the absence of a unified and fair means of evaluation emphasizing both instance recognition and matting quality. Finally, we construct a HIM benchmark for evaluation, which comprises of both synthetic and natural benchmark images. In addition to thorough experimental results on complex cases with multiple and overlapping human instances each has intricate boundaries, preliminary results are presented on general instance matting. Code and benchmark are available in https://github.com/nowsyn/InstMatt. ††footnotetext: This work was done when Yanan Sun was a student intern at Kuaishou Technology, which was supported by Kuaishou Technology and the Research Grant Council of the Hong Kong SAR under grant no. 16201420.

1 Introduction

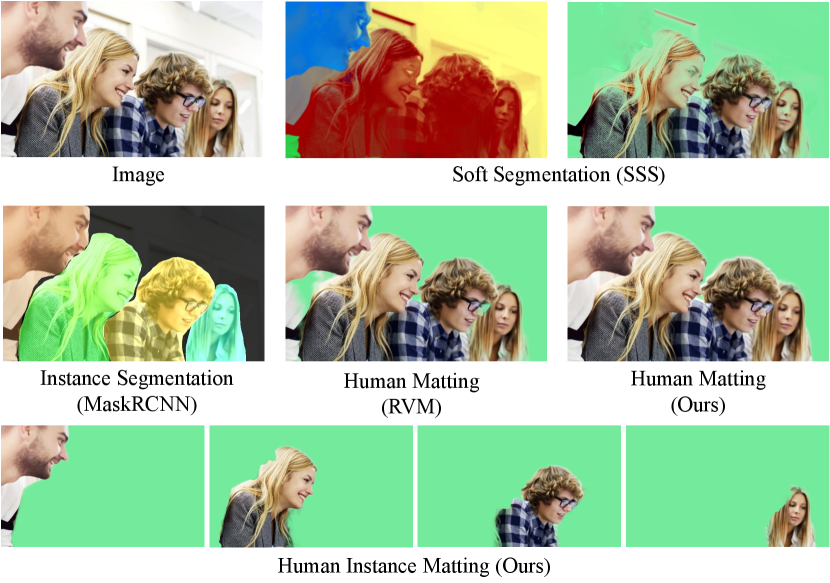

Fast development of mobile internet technology has triggered the rapid growth of multimedia industry especially we-media, where users are heavily engaged in editing tools to beautify or re-create their image and video contents. As one of the primary techniques for efficient image editing, image matting has achieved significant improvement with the wide adoption of deep neural networks in the task. However, existing matting methods still fail or else are not easy to use in many scenarios, such as extracting the foreground human while removing background humans, or instance-level editing as shown in Figure 1: what if we want to independently extract and edit each human instance?

Similar to semantic versus instance segmentation, existing matting methods, which focus on a region based on a given trimap or a known object class, are unable to differentiate instances. To address this issue, we propose a new task called human instance matting (HIM), which aims to automatically extract precise alpha matte for each human instance in a given image. HIM shares similarities to the following conventional tasks while embodying fundamental differences making it a problem on its own: 1) instance segmentation aims at distinguishing instances, but it can only produce sharp object boundary without semi-transparency consideration; 2) recent soft segmentation [3] is capable of generating soft segments for multiple instances of different classes with instance-aware features, but cannot deal with instances of the same class; 3) conventional matting aims at extracting precise alpha matte, but it lacks instance awareness. Overall, human instance matting is a unified task encompassing the characteristics of the aforementioned related tasks while introducing new technical challenges.

Conventional matting is based on the image compositing equation where an image is the combination of foreground layer, background layer modulated by alpha :

| (1) |

To adapt to multiple instance matting, we modify the 2-layer Equation 1 to one of multi-instance layered composition, where each instance layer is attenuated by its corresponding :

| (2) |

where and respectively denote the foreground and alpha matte for instance ; and respectively represent the background and its corresponding alpha matte; is the number of instances. This equation had also appeared in [34, 3], but all such relevant matting and segmentation tasks were not instance aware. The goal of instance matting is to solve for target mattes for all .

By exploring the complex relation among multiple instances, we propose a new instance matting framework, called InstMatt, where a novel mutual guidance strategy enables a deep model to decompose mingled compositing colors into their respective instances. Our mutual guidance strategy takes both the relation between instances and the background, and the relation among instances into consideration. Besides, a multi-instance refinement module is carefully designed and engineered for interchanging information among instances to synchronize predictions for further refinement. Equipped with the novel mutual guidance and multi-instance refinement, our InstMatt is able to not only produce high-quality human alpha matte but also distinguish multiple human instances shown in Figure 1.

With this new HIM task, existing evaluation metrics for instance segmentation or matting are insufficient, which were designed for either one of the tasks. We propose a new metric, called instance matting quality (IMQ), that simultaneously measures instance recognition quality and alpha matte quality. To provide a general and comprehensive validation on instance matting techniques, we construct an instance matting benchmark, HIM2K, which consists of a synthetic image benchmark and a natural image benchmark totaling 2,000 images with high-quality matte ground truths.

To demonstrate the promise of our technical contributions beyond human instance matting, we present preliminary results on matting multi-object instances not limited to humans, a fruitful future direction to explore.

2 Related Work

2.1 Matting

Natural Image Matting. Image matting is a pixel-level task, aiming to extract alpha matte for a foreground object. Traditional matting methods can be summarized into two approaches. Sampling-based methods [14, 24, 20, 24] collect a set of known foreground and background samples to estimate unknown alpha values. Propagation-based methods [34, 10, 33, 22, 5, 4] assume neighboring pixels are correlated, and use their affinities to propagate alpha from known regions to unknown regions. Traditional methods rely on low-level or statistical features, which can easily fail on complex cases due to their limited feature representation.

The wide application of deep convolutional neural network (CNN) addresses this feature representation issue to a great extent. DCNN [13] and DIM [60] are the first representative methods to apply CNN in matting, which are followed by a series of valuable works advancing the state-of-the-art matting performance. Deep learning-based methods can be further grouped into three approaches. Trimap (or mask) based methods [47, 46, 7, 26, 27, 36, 15, 52, 53, 61, 18, 56, 44] take an additional trimap to focus the model on the target foreground object. With careful network design, these methods have achieved excellent performance. User-supplied constraints are relaxed in [49, 38] by using an extra photo taken without the relevant foreground object for providing useful prior information. Trimap-free methods [63, 48] erase the dependence on additional input. These methods resort attention or salience to localize foreground object and extract the corresponding alpha matte.

Human Matting. Human matting is a class-specific image matting task, where the semantic information of the foreground object, namely, human is known. Known human semantics effectively guides relevant human matting methods and thus they usually do not require additional input. Deep learning-based human matting was first proposed in [50] and then improved in SHM [9]. A method was proposed in BSHM [41] which makes use of coarse annotated data for boosting performance. MODNet [30] addresses automatic and fast human matting using a light-weight network considering both low-resolution semantics and high-resolution details. In RVM [39] a video human matting framework was proposed using a recurrent decoder to improve robustness. Further, a cascade framework is proposed in [62] to extract alpha matte from low-to-high resolution.

2.2 Segmentation

Instance Segmentation. Instance segmentation simultaneously requires instance-level and pixel-level predictions. The existing methods can be classified into three categories. Top-down methods [23, 37, 43, 28, 11, 6, 8, 29] first detect instances and then segment the object within detected bounding boxes. On the contrary, bottom-up methods [42, 19] first learns the embeddings for each pixel and then group them into instances. Direct methods [57, 58] are box-free and grouping-free. They predict instance masks with classification in one shot without a detection or clustering step.

Soft Segmentation. Soft segmentation is a pixel-level task, decomposing an image into several segments where each pixel may belong partially to multiple segments. Different decomposition methods lead to different segments. For instance, soft color segmentation methods [51, 54, 55, 1, 2] decompose an image into soft layers of homogeneous colors; spectral matting [34] clusters an image into a set of spectral segments; SSS [3] decomposes an image into soft semantic segments via aggregating high-level embeddings with local-level textures.

2.3 Instance Matting

Instance matting maps each pixel into a set of soft or fractional alphas each tagged with an unique instance ID. Besides inheriting the difficulties from instance segmentation and soft segmentation, instance matting introduces new algorithmic challenges. Specifically, compared to instance segmentation, each pixel in instance matting can partially belong to more than one instance; compared to soft segmentation, each pixel can belong to multiple instances of the same class. To the best of our knowledge, there is no unified framework that can simultaneously address these technical challenges brought by the new instance matting problem. In this paper, we take human instance matting as an example, and propose a framework to address the aforementioned issues via our novel mutual guidance and multi-instance refinement.

3 Method

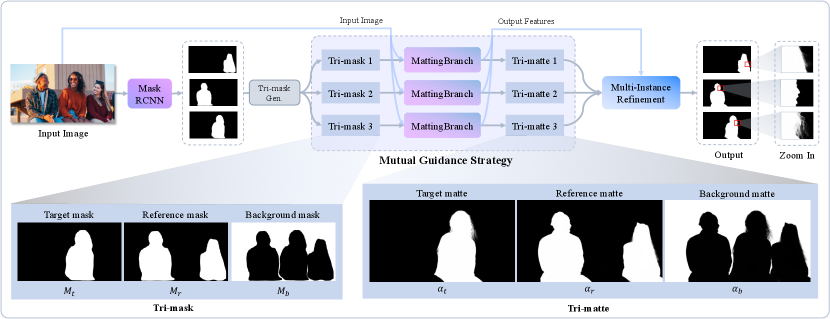

Our HIM framework, called InstMatt, consists of two steps, first recognizing instances and then extracting their respective alpha mattes. This allows the model to globally discover instances and then refine them according to local context. Figure 2 illustrates the whole framework.

3.1 Observations

Sparsity.

Equation 2 indicates that a given pixel can belong to multiple instances and hence . However, in real-life images even containing many instances, each pixel usually consists of no more than two non-zero s, belonging to an instance and the background, or two overlapping instances, thus satisfying the sparsity observation of multi-instance matting.

Mutual Information and Tri-mattes.

To estimate target instance alpha matte , the other instances can be regarded as reference information. Note that we do not regard the other instances as part of background since they have different semantic representation. Therefore, we can re-formulate Equation 2 into the following equation using three components, i.e., target instance , the other instances if any (also named reference instances), and the background :

| (3) |

If we treat the component as a new combined layer, Equation 3 is then simplified into a sparse representation as Equation 4, which considers the sparsity constraint:

| (4) |

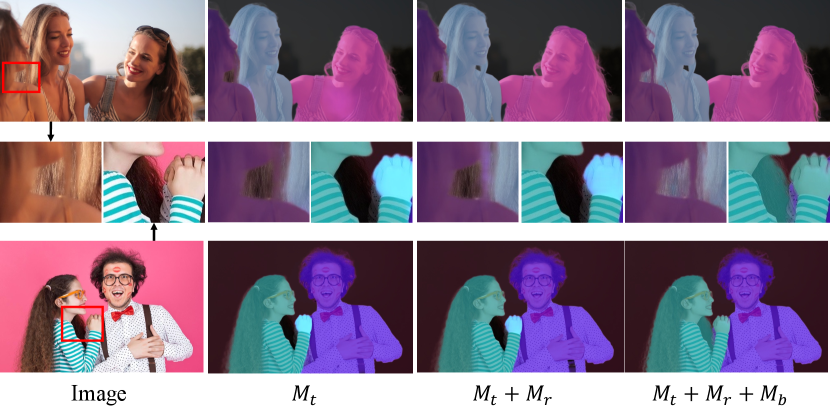

where subscripts ,, represent the three components , , respectively. For a target instance, Equation 4 implies that the alpha matte of each pixel can be correspondingly decomposed into three components, , and (where one or two of them can be zero). These three components provide mutual information for one another, and they are collectively termed tri-mattes.

3.2 Mutual Guidance Strategy

Given an image, we first apply MaskRCNN [23] to extract coarse masks for human instances. The challenge lies in turning the coarse mask into precise alpha matte for each instance. When only one instance exists, the task reduces into conventional human matting, which can be addressed by [61] or other matting techniques. To handle multiple instances, according to the above observations, we propose a novel mutual guidance strategy implemented using a tri-mask. Tri-mask is defined as the concatenation of , and , which respectively mask the region of , and . For instance , , and are computed using the following tri-mask generation formulas,

| (5) | ||||

| (6) |

Afterward, for each instance, we feed as input the concatenation of the image and its tri-mask into a matting branch for extracting its tri-matte , which is the concatenation of the alpha matte , and . The matting branch is an encoder-decoder matting network adopting the same structure with the network used in [61]. After the matting branch, we extract the tri-mattes for all instances. To supervise , multi-instance constraints are employed which will be introduced in Section 3.4.

Prior information in tri-mask provides comprehensive guidance for the model in pixel decomposition. On the one hand, the mutual exclusion among , and guides the model to distinguish human instances from the background. On the other hand, the separation between and guides the model to differentiate instances. Subject to the constraint , we force the model to learn a mutual exclusive decomposition in a contrastive manner.

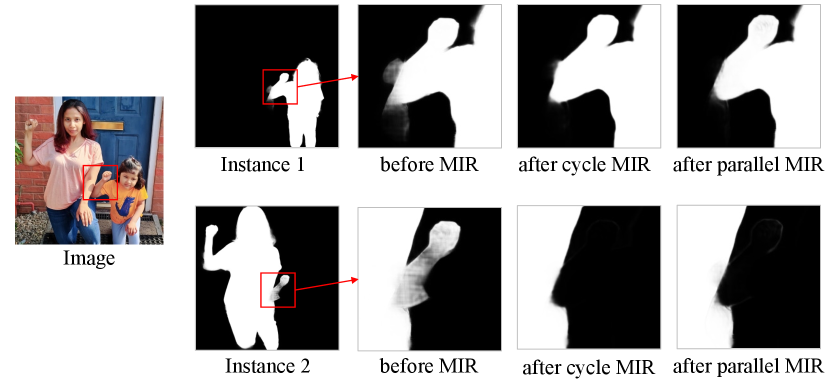

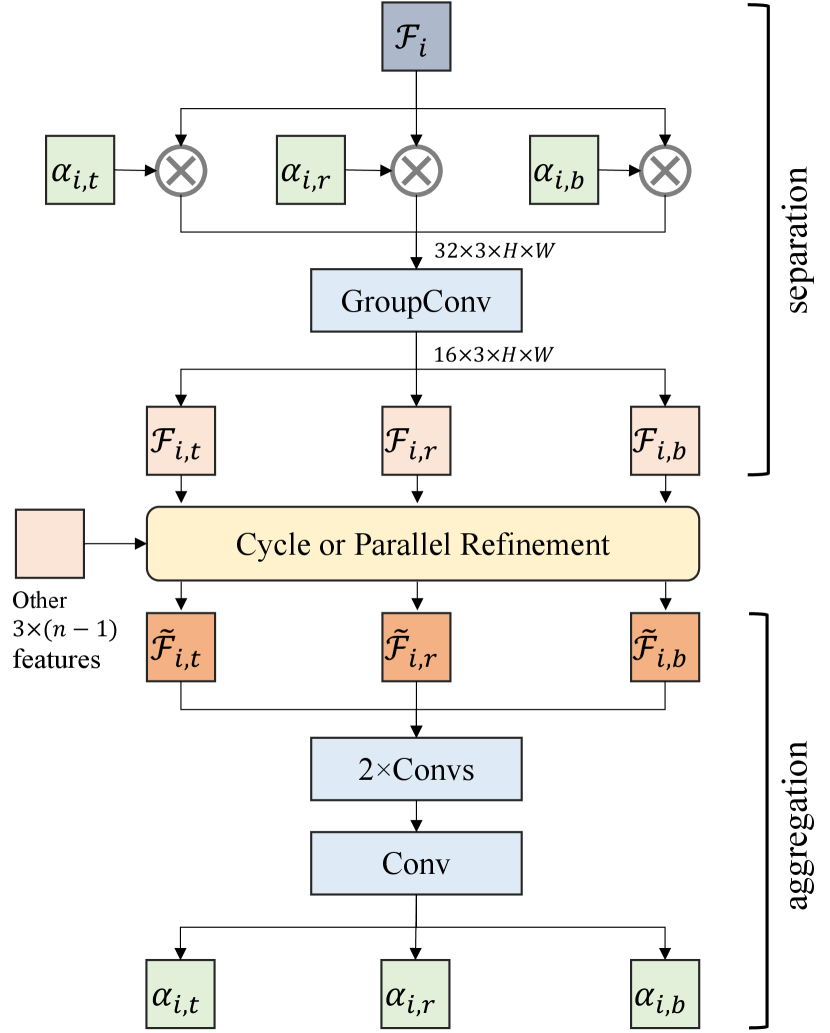

3.3 Multi-Instance Refinement

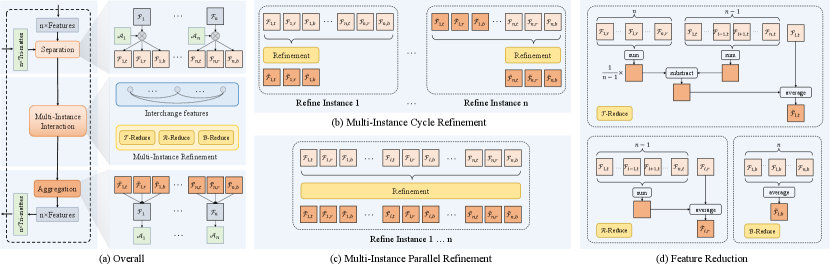

Given instances, tri-mattes, i.e., triplets of are derived via the aforementioned mutual guidance, which encourages intra-instance but not inter-intance consistencies, which may lead to misalignment among overlapping tri-mattes from different instances. We utilize such inter-instance inconsistencies to correct potential error of the estimated alpha mattes. Based on tri-mattes, we design a multi-instance refinement module (MIR), illustrated in Figure 3 to further promote the quality of alpha mattes for all target instances.

Overall Structure. Our multi-instance refinement module comprises of three steps: separation, interaction and aggregation as shown in Figure 3-(a). For each instance, we use to represent the feature from the final layer before the prediction head in the matting branch. Though embodies the information for , and , it is infeasible to perform individual operation on these three components. Thus, we use tri-matte to provide spatial attention so as to obtain the separate features for , and . Specifically, multiplied by , and , we obtain three features , and , .

Separate representations for , and enable free communications and interactions to a large extent among instances. In the second step, a novel multi-instance interaction layer is proposed, in which each instance sends its features to other instances and receives the features from other instances. As the number of features varies with the number of instances, feature reduction operation is required to integrate these received features for refinement. Specifically, the refinement consists of three reduction operations, i.e., -reduce, -reduce, and -reduce, which are defined in Equation 7–9 (Figure 3-(d)).

| (7) | ||||

| (8) | ||||

| (9) |

Equation (7)–(9) can be regarded as an averaging process. Such ‘averaging’ can provide communication among instances obtained from each individual branch to alleviate uncertainty and stabilize the convergence. After the multi-instance interaction layer, we reunify , and to produce an enhanced feature for tri-matte estimation.

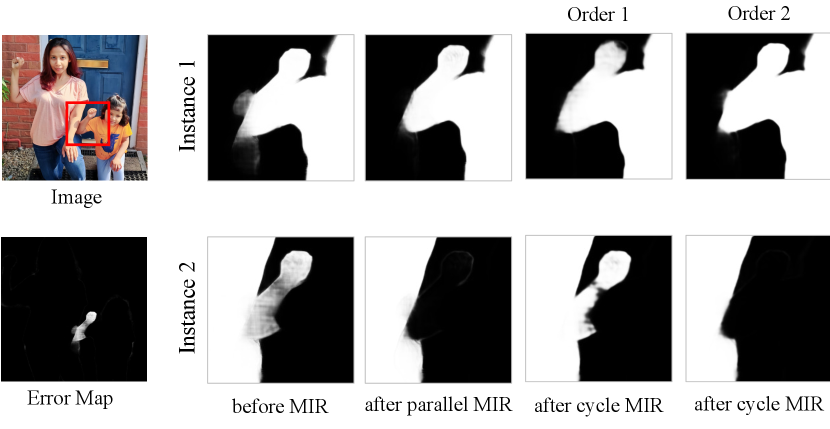

Cycle versus Parallel Refinement. In the multi-instance interaction layer, after instances interchanging features information, there are numerous refinement possibilities since instances can refine their features concurrently or successively. Here, we discuss two representative refinement strategies in the multi-instance interaction layer, i.e. cycle refinement and parallel refinement shown in Figure 3-(b) and (c) respectively:

-

•

Cycle refinement. Instances refine their features with the help of other features sequentially. For example, instance first refines its feature and then sends its refined feature to all other instances. Next, instance refines its features with the refined features from instance and the unrefined features from the rest instances, and so on. Finally, instance refines its features based on the refined features from all the other instances.

-

•

Parallel refinement. Instances refine their features with the help of other features simultaneously. All instances refine their features based on the unrefined features from the other instances.

Both refinement strategies are effective in utilizing multi-instance mutual information to alleviate the effect of outliers. Since cycle refinement is order-sensitive, parallel refinement is preferable in non-interactive applications. We adopt parallel refinement in this paper. More comparisons and implementation details can be found in the supplementary materials.

3.4 Multi-Instance Constraint

Conventional matting losses, i.e., alpha loss and pyramid Laplacian loss, are still applicable in instance matting. Specially, we apply alpha loss and pyramid Laplacian loss for , and separately. Their summations are denoted by and .

Alpha loss and pyramid Laplacian loss directly regularize the distance between the estimated alpha matte and the ground truth, not considering composition constraint and alpha constraint among multiple instances as well as the background. We adapt the composition loss to accommodate multi-instance composition constraint as Equation 10,

| (10) |

In addition, we employ multi-instance alpha constraint on tri-matte as Equation 11 to reduce the solution space:

| (11) |

Finally, the total loss is the summation of the aforementioned losses as Equation 12,

| (12) |

We apply the loss defined in Equation 12 for the tri-mattes from both the matting branch and the multi-instance refinement module.

4 Benchmark

Existing benchmarks are designed for instance segmentation such as COCO dataset [40], or matting such as Composition-1K [60], but not for instance matting. They cannot provide a comprehensive evaluation for instance matting. In this paper, we propose a human instance matting benchmark called HIM2K, which is composed of two subsets, synthetic image subset and natural image subset respectively containing 1,680 and 320 images.

Synthetic Subset. We collect a variety of human images and carefully extract the human foregrounds. Then, we randomly select 2–5 such foregrounds , and iteratively composite them onto a non-human background image sampled from BG20K [35] following Equation 13 below, where is the background image:

| (13) |

Expanding Equation 13 for each foreground object layer, a uniform formula can be derived as Equation 14:

| (14) |

If we use layer to represent a foreground image or background image , Equation 14 for the last iteration can be simplified as Equation 15 which is the same as Equation 2:

| (15) |

where denotes the alpha matte of -th layer , the target to be estimated for instance when .

Natural Subset. In light of the domain gap between synthetic and real images, we construct a natural subset for fair evaluation. The natural subset consists of 320 images containing multiple human instances of a variety of poses and scenarios, with ground truth alpha matte obtained by manual labeling using Photoshop. Despite the possibly imperfect (still reasonably accurate) annotation, we found that more than 98% of regions contain no more than 3 overlapping areas, which makes annotated ground truth trustworthy. Evaluation on the natural subset can validate the effectiveness and stability of different methods on real-world photos. Figure 4 shows examples from the two subsets.

5 IMQ Metric

In this section, we introduce a new metric for instance matting. Existing metrics are designed for either matting or instance segmentation including semantic segmentation. Instance segmentation metrics, such as mask average precision (mask AP), are used for measuring the binary instance mask quality, and thus unsuitable for evaluating alpha matte with fractional values in transitional region. On the other hand, the most widely used matting metrics, namely, the four errors MAD (or SAD), MSE, Gradient and Connectivity, measure alpha matte quality without instance awareness. The above limitations of existing metrics necessitate a new metric, which we call instance matting quality (IMQ).

Instance Matting Quality. IMQ measures instance matte quality giving attention to both instance recognition quality and matting quality. Inspired by the panoptic quality [31], IMQ is defined by Equation 16:

| (16) |

where is the similarity measurement function; , , and are respectively the true positive, false positive and false negative sets; and are the predicted and ground truth instance alpha matte. The computation of IMQ has two steps: instance matching and similarity measurement as revealed in Equation 16.

Instance Matching. To match the predicted instance mattes with ground-truth instance mattes, the matching criterion is intersection-over-union (IoU) between and . We first quantify each instance matte into a binary mask by applying before computing IoU matrix. Based on the IoU matrix, we apply Hungarian matching [32], a greedy assignment strategy to achieve one-to-one assignment. All assigned predicted instance mattes are treated as TP candidates, where a candidate is assigned to TP if its IoU is above a threshold (0.5 is adopted in this paper). After settling the TP set, the FP set and FN set can be derived easily.

Similarity Measurement. The similarity measurement criterion is defined as Equation 17 below, where is a balance factor, and is an error function, e.g., MSE,

| (17) |

We denote IMQ applying MSE error function to measure similarity as IMQ. If we replace the error function by MAD, Gradient and Connectivity, we respectively obtain IMQ, IMQ and IMQ.

Analysis. Similar to panoptic quality, IMQ can be decomposed into two components as Equation 18,

| (18) |

RQ has a similar expression to -score, a metric widely used in recognition tasks, while MQ measures the matting quality for TP set. Different from existing instance segmentation and matting metrics, collaboration of RQ and MQ provides a fair and comprehensive evaluation for instance matte quality.

6 Experiments

In this section, we introduce our synthetic training dataset, evaluation and ablation studies. More details about the implementation including the network structure, data augmentations and training schedule can be found in the supplementary materials.

6.1 Synthetic Training Dataset

Since there is no off-the-shelf human instance matting training dataset, we construct our synthetic training dataset following [60], by compositing human instances onto background images. Specifically, for the foreground, we collect 38,618 human instances with matting annotations from Adobe Image Matting dataset [60], Distinctions-646 [48] and self-collected dataset. For the background, we use non-human high-resolution images from [35, 53].

To produce a synthetic image, we randomly pick 2 to 5 instances from the foreground set, and composite them onto a background image. Random crop and zoom are applied on each foreground image. To avoid degenerate cases, such as a totally occluded instance, we composite instances with a random gap or overlap within some reasonable range. The composition is an iterative procedure following Equation 13. Finally, a total of 35,000 synthetic images with multiple instances are included in our training dataset.

| Method | HIM2K (Synthetic Subset) | HIM2K (Natural Subset) | RWP636 | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| IMQ | IMQ | IMQ | IMQ | IMQ | IMQ | IMQ | IMQ | IMQ | IMQ | |

| MaskRCNN [23] | 18.37 | 25.65 | 0.45 | 19.07 | 24.22 | 33.74 | 2.27 | 26.65 | 20.26 | 25.36 |

| MaskRCNN + CascadePSP [12] | 40.85 | 51.64 | 29.59 | 43.37 | 64.58 | 74.66 | 60.02 | 67.20 | 42.20 | 52.91 |

| MaskRCNN + GCA [36] | 37.76 | 51.56 | 38.33 | 39.90 | 45.72 | 61.40 | 44.77 | 48.81 | 33.87 | 46.47 |

| MaskRCNN + SIM [52] | 43.02 | 52.90 | 40.63 | 44.29 | 54.43 | 66.67 | 49.56 | 58.12 | 34.66 | 46.60 |

| MaskRCNN + FBA [18] | 36.01 | 51.44 | 37.86 | 38.81 | 34.81 | 48.32 | 36.29 | 37.23 | 35.00 | 47.54 |

| MaskRCNN + MaskGuided [61] | 51.67 | 67.08 | 53.03 | 55.38 | 57.98 | 71.12 | 66.53 | 60.86 | 30.64 | 53.16 |

| InstMatt (Ours) | 63.59 | 78.14 | 64.50 | 67.71 | 70.26 | 81.34 | 74.90 | 72.60 | 51.10 | 73.09 |

6.2 Evaluation

Human Instance Matting. We perform joint qualitative and quantitative evaluations on multiple datasets, including HIM2K, RWP636 [61], SPD [16], COCO [40] dataset as well as more complex real-world images.

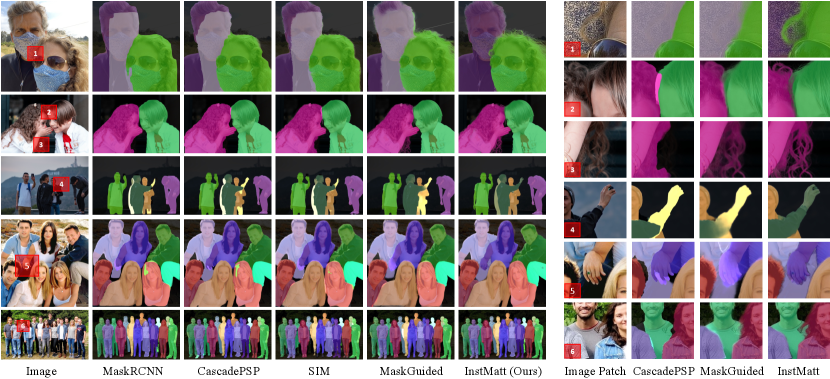

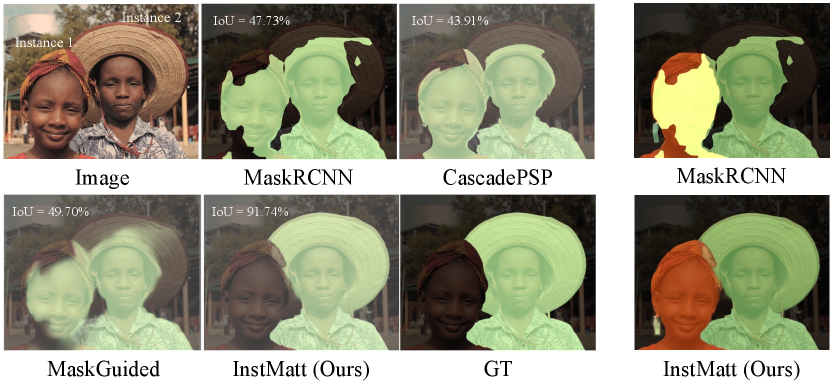

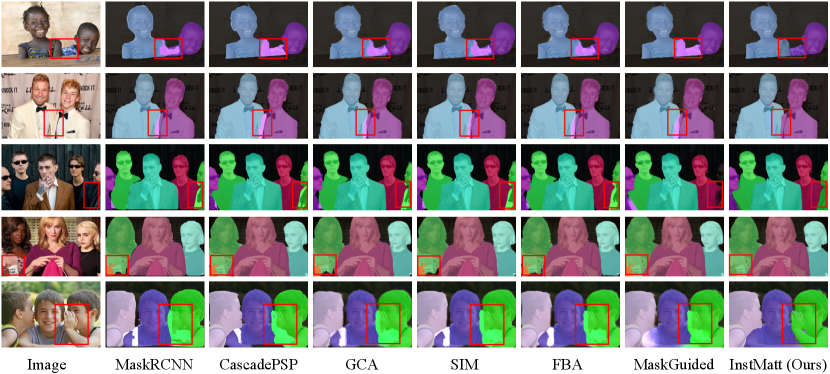

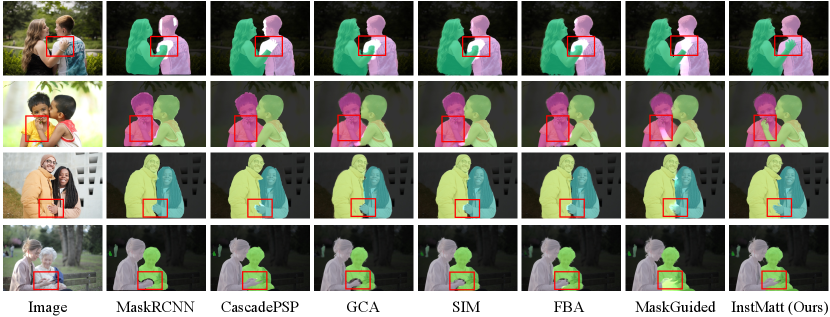

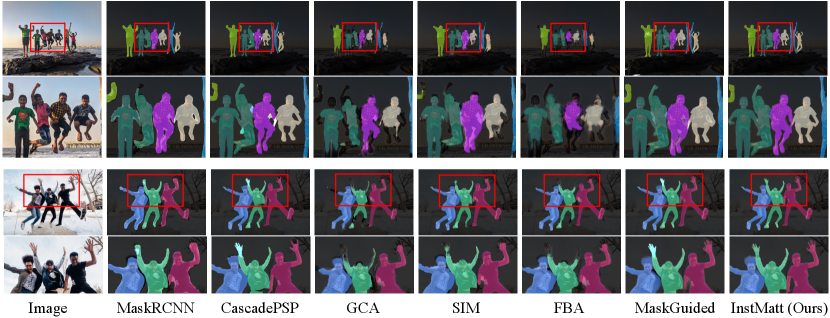

HIM2K is the proposed benchmark for human instance matting. Since our method is the first work to address instance matting, we compare our method with instance segmentation methods [23, 12] and a straightforward extension on existing state-of-the-art matting methods [36, 52, 18, 61] based on the masks from MaskRCNN [23]. To validate the effectiveness of our method, we also conduct comparisons on a human matting benchmark, Real World Portrait 636 (RWP636), and a human segmentation dataset, Supervisely Person dataset (SPD). SPD consists of 5418 images with fine mask annotations. We split a subset comprising of 500 images from SPD as the testing dataset. Table 1 and 2 tabulate the quantitative results on the three testing sets, showing our method achieves the state-of-the art performance.

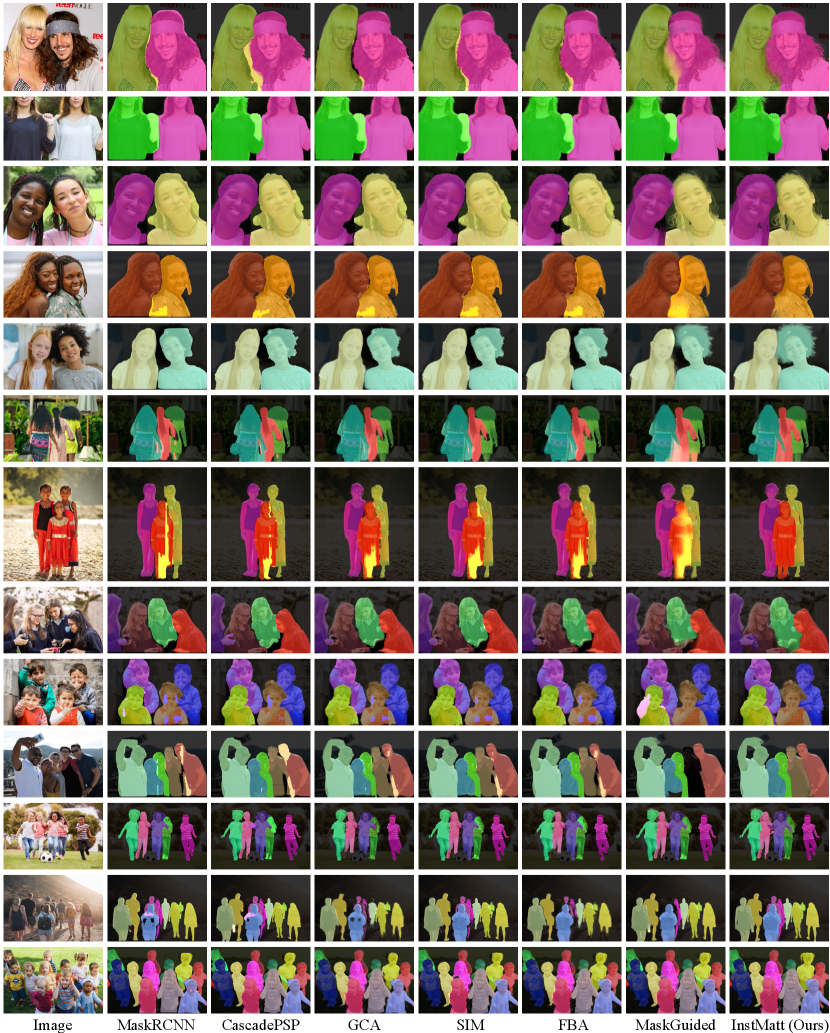

Figure 5 shows qualitative comparisons on complex images, demonstrating that instance matting is capable of solving challenging cases with multiple and overlapping instances, which cannot be addressed by other existing instance segmentation or matting techniques. Note on the other hand while COCO is a widely used testing dataset in detection and segmentation tasks, the mask annotations are labeled by rough polygons thus making COCO inappropriate in quantitative results comparison for the instance matting task. Thus, we instead conduct qualitative comparisons on COCO dataset in Figure 6. Compared with instance segmentation algorithms, our InstMatt framework is significantly better in handling complex matting scenarios at the instance level, such as defocus, motion, blurry or thin hairy structures.

Instance Matting Beyond Humans. This paper takes human instance matting as our focused contribution in instance matting. Notably, our method, including mutual guidance, multi-instance refinement, multi-instance constraints, and the proposed instance matting metric IMQ as well can be also applied to instance matting on other semantic classes. We adapt our method on another two popular classes, i.e., cat and dog. Preliminary results shown in Figure 7 indicate that our method may generalize well to other semantic classes in instance matting.

6.3 Ablation Study

Tri-mask. The tri-mask provides mutual guidance for both instances versus background as well as instances versus instances. Table 3 tabulates the results on models with different mask guidance settings. The tri-mask guides the model to assign each pixel partially to the target instance, other instances or the background. Notably, with tri-mask, some missing part due to occlusion are recovered as shown in the examples in Figure 8. The representation of the missing part is similar to that of the target instance, which cannot be ascribed to background or other instances due to the mutual exclusive supervision.

| Method | IMQ | IMQ |

|---|---|---|

| MaskRCNN [23] | 18.44 | 18.48 |

| MaskRCNN + CascadePSP [12] | 30.54 | 33.37 |

| InstMatt (Ours) | 30.67 | 39.56 |

| MIR | IMQ | IMQ | |||

|---|---|---|---|---|---|

| ✓ | ✗ | ✗ | ✗ | 57.98 | 71.12 |

| ✓ | ✓ | ✗ | ✗ | 62.25 | 74.35 |

| ✓ | ✓ | ✓ | ✗ | 69.40 | 79.74 |

| ✓ | ✓ | ✓ | ✓ | 70.26 | 81.34 |

Multi-Instance Refinement. Multi-instance refinement aligns alpha matte predictions among multiple tri-mattes. Table 3 shows that the IMQ of our model with and without multi-instance refinement module is 81.34 and 79.74, indicating an improvement from our multi-instance refinement. Figure 9 further shows that multi-instance refinement is helpful in erasing outliers due to the information synchronization among different instances.

7 Conclusion

In this paper, we propose a new task, instance matting with human instance matting as the first significant example by proposing a novel instance matting framework. Our InstMatt utilizes mutual exclusive guidance to guide the matting branch to extract alpha matte for each instance, which is followed by a multi-instance refinement module to synchronize information among co-occurring instances. InstMatt is capable of handling challenging cases with multiple and overlapping instances, which can be adapted to other semantic class instance matting beyond human instances. We hope the proposed method, alongside with the new instance matting metric and the human instance matting benchmark, will encourage more future works.

A. Network Structure

From the perspective of functionality, our InstMatt consists of two steps, that is, instance recognition and mask refinement. We adopt MaskRCNN [23] with the backbone ResNet50 [25] as our instance recognition model since MaskRCNN is a conventional and also competitive approach in instance segmentation. We take the publicly released MaskRCNN pre-trained weight from Detectron2 [59] without further finetuning because this model is well-trained on the large-scale COCO [40] dataset containing rich scenarios which can be well generalized to other datasets.

The mask refinement step in InstMatt can be further divided into two modules, i.e., tri-mask guided matting branch and multi-instance refinement. We adopt the network used in MG [61] as our matting branch. The network takes ResNet34 [25] as the backbone and applies three convolution blocks with a stride of , and in the decoder respectively to reconstruct the features for tri-matte prediction. During inference stage, after extracting the instance masks, we obtain a tri-matte for each instance. Next, all the tri-mattes are sent to the multi-instance refinement for information synchronization.

Multi-Instance Refinement. Considering the crowded cases with many instances, it is infeasible to perform multi-instance refinement on the whole image without out-of-memory problem when the memory storage is limited. Note that we only have to synchronize information on pixels which have information difference among all the instances and the background, that is, for a pixel ,

| (19) |

For other pixels already satisfying the multi-instance alpha constraint, we may not gain much promotion from the information synchronization. Thus, we adopt the patch inference guided by an error map , which is computed as follows,

| (20) |

We take the pixels with an error larger than 0.01 as the centers and correspondingly crop the patches of size to perform multi-instance refinement.

In implementation, the multi-instance refinement contains 4 learnable layers as illustrated in Figure 10. The four convolution layers all utilize kernel.

Cycle versus Parallel Refinement. Cycle refinement is order-sensitive, which is shown in the example in Figure 11. When adopting order 1 (instance 1, 2), the updated results get worse, while the outliers are perfectly removed when adopting order 2 (instance 2, 1). With user-supplied hints, cycle refinement is able to generate promising refined results. However, the instability makes the cycle refinement strategy inappropriate in non-interactive applications. On the contrary, the parallel refinement produces refined results not relevant to any order and thus shows stable performance.

B. Datasets

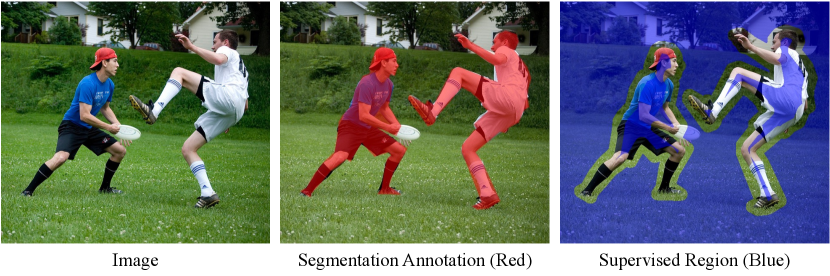

During training, we use two datasets, one of which is the synthetic training dataset mentioned in the main paper Section 6.1. Since the synthetic training samples have a domain gap with natural images, we also include a natural set containing 41330 images selected from the COCO training set. However, it is non-trivial to adapt the COCO dataset to the instance matting task due to the lack of instance matting annotations. To tackle this issue, we adopt a partial supervision strategy to make the samples with segmentation annotations applicable in our task.

Partial Supervision. See the example in Figure 12. The instance segmentation annotations are labeled by polygons, which introduces noise along the boundary region. Thus, we respectively dilate and erode pixels along the boundary to generate a region shown in blue in Figure 12, which are denoted as the supervised region while other pixels not masked are skipped. Such a partial supervision strategy allows us to make use of segmentation dataset without introducing noise. is set to 35 in training.

C. Augmentation

To enrich the training dataset and avoid overfitting, various augmentation operations are adopted on the training samples. Besides random flip, random zoom, random shearing, as well as random crop, we propose tri-mask augmentation to improve the fault tolerance of the model, in particular, robustness against missing instances and imperfect masks.

Missing Instance Tolerance. Let be the mask representing the union of all instances except for the target instance. Sometimes, the instance segmentation model is incapable of detecting all the instances. In this case, we only access a subset of the complete instance set to generate . Therefore, the alpha constraint is no longer applicable. To avoid such a dilemma, we relax in the training stage to a subset of reference instances instead of the complete set.

Mask Quality Tolerance. Equation 6 in the main paper mandates that is the complementary set of . To avoid overfitting caused by such a strong constraint, we conduct dilation or erosion on the tri-mask after computing , and . In this way, the tri-mask may exhibit various gaps or overlaps among each other, thus introducing some uncertainty in training to better accommodate possible uneven quality of segmentation tasks.

Tri-mask Augmentation. Due to the aforementioned two robustness considerations, we generate tri-masks in three steps, 1) instance mask generation, 2) instance separation, 3) mask perturbation.

In the first step, for an image with instances, we adopt two ways to generate masks for these instances. For a subset of the instances, we obtain their masks from the instance segmentation model; for the rest of the instances, we generate their masks from a random truncation on the ground truth alpha matte. A hybrid of the two ways in instance generation increases the diversity of masks.

In the second step, we first randomly pick an instance as the target instance, then randomly choose a subset from the rest of instances to produce . Finally we obtain by . Such relaxation operation on make the model ascribe the pixels of those undetected instances to , rather than or .

In the last step, we randomly dilate or erode or perform a hybrid of dilation and erosion on the tri-mask with kernel size in . Such perturbation on tri-mask further improves the fault tolerance of our model.

Note that the ground truth tri-matte for the tri-mask are generated without the relaxation or perturbation operation.

| Method | IMQmad | IMQmse |

|---|---|---|

| without tri-mask aug. | 67.51 | 76.54 |

| with tri-mask aug. | 69.40 | 79.74 |

Without and with the tri-mask augmentation, the IMQmad of our InstMatt is 67.51 and 69.40 as tabulated in Table 4, showing a promotion benefiting from the tri-mask augmentation.

D. Training Schedule

Our training schedule consists of two steps:

-

1.

Train the matting branch on the synthetic and natural training datasets. The branch is initialized with ImageNet [17] pre-trained weight. We use a batch size of 16 in total on 4 GPU cards. Adam optimizer with and is adopted. The initial learning rate is set to and decays at a cosine learning rate [45, 21]. The training lasts for 100,000 iterations with a warm-up of the first 5, 000 iterations.

-

2.

After the matting branch is well-trained, we freeze the matting branch and train the multi-instance refinement module. In the second step, we use a batch size of 4 in total on 4 GPU cards. The initial learning rate is set to . The training lasts for 25,000 iterations with a warm-up of the first 1, 000 iterations. We keep the other hyper parameters the same as those in the first step.

E. IMQ Metric

Computation. We propose an IMQ metric to provide a comprehensive and unified evaluation of instance matte quality. The computation of IMQ can be divided into two steps, i.e., instance matching and similarity measurement. During matching instances, we first quantify the predicted and ground truth alpha mattes by applying into binary mask to compute IoU matrix. Here, we use rather than other values as the threshold considering the semi-transparent/transparent objects usually composed of small alpha values. Other threshold will turn the alpha mattes of these objects into an incomplete binary mask which cannot cover the whole objects, thus leading to a wrong instance matching result. Quantification with 0 as threshold makes the IMQ metric applicable for not only human instance alpha mattes but also other semantic classes including transparent objects.

During similarity measurement, we adopt the widely used error functions in conventional matting task to evaluate the instance alpha matte from multiple dimensions. In implementation, we compute as follows,

| (24) | ||||

| (25) |

We take the average upon the union of quantified and instead of the whole image to avoid the overwhelming zero values from the large amount of background pixels especially for small instances.

MQ and RQ. As mentioned in Section 5 in the main paper, IMQ can be decomposed of two components, RQ and MQ, measuring the instance recognition quality and the alpha matte quality of TP set respectively. We provide the RQ and MQ in Table 5. Compared to MaskRCNN, CascadePSP significantly promotes the instance matte quality among TP set, however, does not improves the RQ at all, demonstrating that CascadePSP cannot upgrade a low-quality mask which has an IoU below 0.5 with any ground truth instance mask into a high-quality instance mask due to the lack of instance awareness.

On the contrary, besides refining the instance alpha matte along the boundary and the hairy regions among the TP set, our InstMatt is also capable of recognizing an instance and correspondingly extracting its alpha matte even though only a low-quality mask with misleading instance information is provided, such as the first example in Figure 5 in the main paper and the example in Figure 13.

F. Experiment and Comparison

Experiment Setting. We train our method InstMatt and MaskGuided [61] on both the synthetic and natural datasets. For other methods including CascadePSP [12], GCA [36], SIM [52] and FBA [18], we use the released model from their official project website. To generate trimap for the trimap-based matting methods, we respectively dilate and erode the mask predicted from MaskRCNN [23] with a kernel size of 5 and then repeat the dilation and erosion operations for 10 times.

Comparisons on HIM2K. Through our mutual guidance strategy in tandem with the multi-instance refinement module, our InstMatt shows superiority in various challenging cases. We provide more qualitative results for comparisons.

Figure 14 shows the cases containing multiple instances next to each other closely. MaskRCNN is able to distinguish instances but produces overlapping instance masks, which cannot be addressed either by CascadePSP [12] or a naive extension of existing matting models [36, 52, 18, 61]. Our InstMatt, however, can clearly separate the instances and generate non-overlapping instance alpha mattes.

Figure 15 shows the cases with occlusion. Under such cases, a part of one instance, usually a hand, or an arm, appears within the region of another instance and is far away from its own body. It is difficult to solve these cases for recognition tasks due to the limitation of receptive fields and the bottleneck of long-range feature propagation. As shown in Figure 15, both instance segmentation models and matting models fall short of producing satisfactory results in these cases, while our InstMatt still produces promising results. Inter-instance mutual exclusive information guides the model to retrieve the remote pixels sharing the similar appearance with the body region instead of ascribing them to the other instances.

Figure 16 compares the performance on a case with incomplete instance segmentation masks. Although CascadePSP or matting-based models are able to refine the mask along the boundary, they cannot recover a part of missing region due to the lack of instance awareness. Our InstMatt can find the lost region from the background giving the credit to the mutual exclusive guidance between the human instances and the background.

Figure 17 compares the performance on small instances. Compared to other methods, our InstMatt still shows stable performance on the instances of small or tiny scales, demonstrating the generalization ability and the fineness of our results.

G. Limitation

Under most cases, our method is capable of upgrading a low-quality instance mask into a high-quality alpha matte. However, sometimes the instance segmentation model cannot differentiate two largely overlapping instances as shown in Figure 18. Note that this example is different from the one in Figure 13. In Figure 13, MaskRCNN recognizes two instances although the mask of instance 2 covers a part of instance 1. Differently, in Figure 18, the instance segmentation model regards two left human instances as one and only predict a mask for the two left instances. In this case, our model can only refine the mask but cannot separate them due to the lack of sufficient guidance.

H. More Qualitative Results

Examples with hair details in alpha format are provided in Figure 19. More qualitative comparisons on images containing complex multiple overlapping or crowded cases are shown in Figure 20.

References

- [1] Yagiz Aksoy, Tunç Ozan Aydin, Marc Pollefeys, and Aljosa Smolic. Interactive high-quality green-screen keying via color unmixing. ACM Trans. Graph., 35(5):152:1–152:12, 2016.

- [2] Yagiz Aksoy, Tunç Ozan Aydin, Aljosa Smolic, and Marc Pollefeys. Unmixing-based soft color segmentation for image manipulation. ACM Trans. Graph., 36(2):19:1–19:19, 2017.

- [3] Yağiz Aksoy, Tae-Hyun Oh, Sylvain Paris, Marc Pollefeys, and Wojciech Matusik. Semantic soft segmentation. ACM Transactions on Graphics, 37(4):1–13, 2018.

- [4] Yagiz Aksoy, Tunc Ozan Aydin, and Marc Pollefeys. Designing effective inter-pixel information flow for natural image matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2017.

- [5] Xue Bai and Guillermo Sapiro. A geodesic framework for fast interactive image and video segmentation and matting. In International Conference on Computer Vision, 2007.

- [6] Daniel Bolya, Chong Zhou, Fanyi Xiao, and Yong Jae Lee. YOLACT: real-time instance segmentation. In IEEE/CVF International Conference on Computer Vision, 2019.

- [7] Shaofan Cai, Xiaoshuai Zhang, Haoqiang Fan, Haibin Huang, Jiangyu Liu, Jiaming Liu, Jiaying Liu, Jue Wang, and Jian Sun. Disentangled image matting. In International Conference on Computer Vision, 2019.

- [8] Hao Chen, Kunyang Sun, Zhi Tian, Chunhua Shen, Yongming Huang, and Youliang Yan. Blendmask: Top-down meets bottom-up for instance segmentation. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [9] Quan Chen, Tiezheng Ge, Yanyu Xu, Zhiqiang Zhang, Xinxin Yang, and Kun Gai. Semantic human matting. In ACM Multimedia Conference, 2018.

- [10] Qifeng Chen, Dingzeyu Li, and Chi-Keung Tang. Knn matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2012.

- [11] Xinlei Chen, Ross B. Girshick, Kaiming He, and Piotr Dollár. Tensormask: A foundation for dense object segmentation. In IEEE/CVF International Conference on Computer Vision, 2019.

- [12] Ho Kei Cheng, Jihoon Chung, Yu-Wing Tai, and Chi-Keung Tang. Cascadepsp: Toward class-agnostic and very high-resolution segmentation via global and local refinement. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [13] Donghyeon Cho, Yu-Wing Tai, and In-So Kweon. Natural image matting using deep convolutional neural networks. In European Conference on Computer Vision, 2016.

- [14] Yung-Yu Chuang, Brian Curless, David Salesin, and Richard Szeliski. A bayesian approach to digital matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2001.

- [15] Yutong Dai, Hao Lu, and Chunhua Shen. Learning affinity-aware upsampling for deep image matting. In IEEE Conference on Computer Vision and Pattern Recognition, 2021.

- [16] Supervisely Person Dataset. supervisely. https://github.com/supervisely-ecosystem/persons.

- [17] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Fei-Fei Li. Imagenet: A large-scale hierarchical image database. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2009.

- [18] Marco Forte and François Pitié. F, b, alpha matting. CoRR, abs/2003.07711, 2020.

- [19] Naiyu Gao, Yanhu Shan, Yupei Wang, Xin Zhao, Yinan Yu, Ming Yang, and Kaiqi Huang. SSAP: single-shot instance segmentation with affinity pyramid. In IEEE/CVF International Conference on Computer Vision, 2019.

- [20] Eduardo SL Gastal and Manuel M Oliveira. Shared sampling for real-time alpha matting. Computer Graphics Forum, 29(2):575–584, 2010.

- [21] Priya Goyal, Piotr Dollár, Ross B. Girshick, Pieter Noordhuis, Lukasz Wesolowski, Aapo Kyrola, Andrew Tulloch, Yangqing Jia, and Kaiming He. Accurate, large minibatch SGD: training imagenet in 1 hour. CoRR, abs/1706.02677, 2017.

- [22] Leo Grady, Thomas Schiwietz, Shmuel Aharon, and Rüdiger Westermann. Random walks for interactive alpha-matting. In Proceedings of VIIP, 2005.

- [23] Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross B. Girshick. Mask R-CNN. In IEEE International Conference on Computer Vision, 2017.

- [24] Kaiming He, Christoph Rhemann, Carsten Rother, Xiaoou Tang, and Jian Sun. A global sampling method for alpha matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2011.

- [25] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2016.

- [26] Qiqi Hou and Feng Liu. Context-aware image matting for simultaneous foreground and alpha estimation. In International Conference on Computer Vision, 2019.

- [27] Guanqing Hu and James J. Clark. Instance segmentation based semantic matting for compositing applications. In Conference on Computer and Robot Vision, 2019.

- [28] Zhaojin Huang, Lichao Huang, Yongchao Gong, Chang Huang, and Xinggang Wang. Mask scoring R-CNN. In IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [29] Lei Ke, Yu-Wing Tai, and Chi-Keung Tang. Deep occlusion-aware instance segmentation with overlapping bilayers. In IEEE Conference on Computer Vision and Pattern Recognition, 2021.

- [30] Zhanghan Ke, Kaican Li, Yurou Zhou, Qiuhua Wu, Xiangyu Mao, Qiong Yan, and Rynson W. H. Lau. Is a green screen really necessary for real-time portrait matting? CoRR, abs/2011.11961, 2020.

- [31] Alexander Kirillov, Kaiming He, Ross B. Girshick, Carsten Rother, and Piotr Dollár. Panoptic segmentation. In IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [32] Harold Kuhn. Hungarian algorithm. https://en.wikipedia.org/wiki/Hungarian_algorithm.

- [33] Anat Levin, Dani Lischinski, and Yair Weiss. A closed-form solution to natural image matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2006.

- [34] Anat Levin, Alex Rav-Acha, and Dani Lischinski. Spectral matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2007.

- [35] Jizhizi Li, Jing Zhang, Stephen J. Maybank, and Dacheng Tao. End-to-end animal image matting. CoRR, abs/2010.16188, 2020.

- [36] Yaoyi Li and Hongtao Lu. Natural image matting via guided contextual attention. In AAAI Conference on Artificial Intelligence, 2020.

- [37] Yi Li, Haozhi Qi, Jifeng Dai, Xiangyang Ji, and Yichen Wei. Fully convolutional instance-aware semantic segmentation. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

- [38] Shanchuan Lin, Andrey Ryabtsev, Soumyadip Sengupta, Brian Curless, Steven M. Seitz, and Ira Kemelmacher-Shlizerman. Real-time high-resolution background matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021.

- [39] Shanchuan Lin, Linjie Yang, Imran Saleemi, and Soumyadip Sengupta. Robust high-resolution video matting with temporal guidance. CoRR, abs/2108.11515, 2021.

- [40] Tsung-Yi Lin, Michael Maire, Serge J. Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C. Lawrence Zitnick. Microsoft COCO: common objects in context. In David J. Fleet, Tomás Pajdla, Bernt Schiele, and Tinne Tuytelaars, editors, European Conference on Computer Vision, 2014.

- [41] Jinlin Liu, Yuan Yao, Wendi Hou, Miaomiao Cui, Xuansong Xie, Changshui Zhang, and Xian-Sheng Hua. Boosting semantic human matting with coarse annotations. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [42] Shu Liu, Jiaya Jia, Sanja Fidler, and Raquel Urtasun. SGN: sequential grouping networks for instance segmentation. In IEEE International Conference on Computer Vision, 2017.

- [43] Shu Liu, Lu Qi, Haifang Qin, Jianping Shi, and Jiaya Jia. Path aggregation network for instance segmentation. In IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [44] Yuhao Liu, Jiake Xie, Xiao Shi, Yu Qiao, Yujie Huang, Yong Tang, and Xin Yang. Tripartite information mining and integration for image matting. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pages 7555–7564, October 2021.

- [45] Ilya Loshchilov and Frank Hutter. SGDR: stochastic gradient descent with warm restarts. In International Conference on Learning Representations, 2017.

- [46] Hao Lu, Yutong Dai, Chunhua Shen, and Songcen Xu. Indices matter: Learning to index for deep image matting. In International Conference on Computer Vision, 2019.

- [47] Sebastian Lutz, Konstantinos Amplianitis, and Aljosa Smolic. Alphagan: Generative adversarial networks for natural image matting. In British Machine Vision Conference, 2018.

- [48] Yu Qiao, Yuhao Liu, Xin Yang, Dongsheng Zhou, Mingliang Xu, Qiang Zhang, and Xiaopeng Wei. Attention-guided hierarchical structure aggregation for image matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [49] Soumyadip Sengupta, Vivek Jayaram, Brian Curless, Steven M. Seitz, and Ira Kemelmacher-Shlizerman. Background matting: The world is your green screen. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [50] Xiaoyong Shen, Xin Tao, Hongyun Gao, Chao Zhou, and Jiaya Jia. Deep automatic portrait matting. In Bastian Leibe, Jiri Matas, Nicu Sebe, and Max Welling, editors, European Conference on Computer Vision, 2016.

- [51] Dheeraj Singaraju and René Vidal. Estimation of alpha mattes for multiple image layers. IEEE Trans. Pattern Anal. Mach. Intell., 33(7):1295–1309, 2011.

- [52] Yanan Sun, Chi-Keung Tang, and Yu-Wing Tai. Semantic image matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021.

- [53] Yanan Sun, Guanzhi Wang, Qiao Gu, Chi-Keung Tang, and Yu-Wing Tai. Deep video matting via spatio-temporal alignment and aggregation. In IEEE Conference on Computer Vision and Pattern Recognition, 2021.

- [54] Yu-Wing Tai, Jiaya Jia, and Chi-Keung Tang. Soft color segmentation and its applications. IEEE Trans. Pattern Anal. Mach. Intell., 29(9):1520–1537, 2007.

- [55] Jianchao Tan, Jyh-Ming Lien, and Yotam I. Gingold. Decomposing images into layers via rgb-space geometry. ACM Trans. Graph., 36(1):7:1–7:14, 2017.

- [56] Tiantian Wang, Sifei Liu, Yapeng Tian, Kai Li, and Ming-Hsuan Yang. Video matting via consistency-regularized graph neural networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021.

- [57] Xinlong Wang, Tao Kong, Chunhua Shen, Yuning Jiang, and Lei Li. SOLO: segmenting objects by locations. In Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm, editors, European Conference on Computer Vision, 2020.

- [58] Xinlong Wang, Rufeng Zhang, Tao Kong, Lei Li, and Chunhua Shen. Solov2: Dynamic and fast instance segmentation. In Hugo Larochelle, Marc’Aurelio Ranzato, Raia Hadsell, Maria-Florina Balcan, and Hsuan-Tien Lin, editors, Advances in Neural Information Processing Systems, 2020.

- [59] Yuxin Wu, Alexander Kirillov, Francisco Massa, Wan-Yen Lo, and Ross Girshick. Detectron2. https://github.com/facebookresearch/detectron2, 2019.

- [60] Ning Xu, Brian L. Price, Scott Cohen, and Thomas S. Huang. Deep image matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2017.

- [61] Qihang Yu, Jianming Zhang, He Zhang, Yilin Wang, Zhe Lin, Ning Xu, Yutong Bai, and Alan L. Yuille. Mask guided matting via progressive refinement network. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021.

- [62] Zijian Yu, Xuhui Li, Huijuan Huang, Wen Zheng, and Li Chen. Cascade image matting with deformable graph refinement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021.

- [63] Yunke Zhang, Lixue Gong, Lubin Fan, Peiran Ren, Qixing Huang, Hujun Bao, and Weiwei Xu. A late fusion CNN for digital matting. In IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019.