Hyperplane Distance Depth

This work is funded in part by the Natural Sciences and Engineering Research Council of Canada (NSERC).

Abstract

Depth measures quantify central tendency in the analysis of statistical and geometric data. Selecting a depth measure that is simple and efficiently computable is often important, e.g., when calculating depth for multiple query points or when applied to large sets of data. In this work, we introduce Hyperplane Distance Depth (HDD), which measures the centrality of a query point $q$ relative to a given set $P$ of $n$ points in $\mathbb{R}^d$, defined as the sum of the distances from $q$ to all hyperplanes determined by points in $P$. We present algorithms for calculating the HDD of an arbitrary query point $q$ relative to $P$ in $O(d \log n)$ time after preprocessing $P$, and for finding a median point of $P$ in $O(d n^{d^2} \log n)$ time. We study various properties of hyperplane distance depth, and show that it is convex, symmetric, and vanishing at infinity.

1 Introduction

Depth measures describe central tendency in statistical and geometric data. A median of a set of univariate data is a point that partitions the set into two halves of equal cardinality, with smaller values in one part, and larger values in the other. Various definitions of medians exist in higher dimensions (multivariate data), seeking to generalize the one-dimensional notion of median (e.g., [6]). For geometric data and sets of geometric objects, applications of median-finding include calculating a centroid, determining a balance point in physical objects, and defining cluster centers in facility location problems [7]. A median is frequently used in statistics to describe the central tendency of a data set. It is particularly useful when dealing with skewed distributions or datasets that contain outliers. By using a median, analysts can obtain a representative value that is less affected by extreme values and outliers [10].

In 1975, Tukey introduced the concept of data depth for evaluating centrality in bivariate data sets [12]. The depth of a particular query point $q$ in relation to a given set $P$ gauges the extent to which $q$ is situated within the overall distribution of $P$; i.e., when $q$’s depth is large, $q$ tends to be near the center of $P$. Since the introduction of Tukey depth (also called half-space depth), many more depth functions have been proposed.

Data depth functions should ideally satisfy specific properties, such as convexity, stability (small perturbations in the data do not result in large changes in depth values), robustness (depth is not heavily influenced by outliers or extreme values in the data), affine invariance (the depth function remains consistent under affine transformations of the data, such as translation, scaling, and rotation), maximality at the center (points closer to the geometric center of the data set have higher depth values), and vanishing at infinity (depth values approach zero as a query point moves away from the data set) [14].

2 Related Work

Tukey [12] first introduced the concept of location depth. In $\mathbb{R}^2$, the Tukey depth of a point $q$ relative to a set $P$ of points in $\mathbb{R}^2$ is defined as the smallest number of points of $P$ on one side of a line passing through $q$. This concept can also be generalized to higher dimensions.

Definition 1 (Tukey Depth [12])

The Tukey depth of a point $q$ relative to a set $P$ of points in $\mathbb{R}^d$ is the minimum number of points of $P$ in any closed half-space that contains $q$.

In univariate space, i.e., in $\mathbb{R}$, the Tukey depth of $q$ is the minimum of the count of points $p \in P$ where $p \leq q$, and the count of points $p \in P$ where $p \geq q$.

A Tukey median of a set $P$ in $\mathbb{R}^d$ corresponds to a point (or points) with maximum Tukey depth among all points in $\mathbb{R}^d$.
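The univariate case described above can be sketched in a few lines. This is only an illustration: the function name `tukey_depth_1d` and the sample data are assumptions introduced here, not notation from the paper.

```python
def tukey_depth_1d(q, points):
    """Univariate Tukey depth: the smaller of the two closed half-line counts."""
    left = sum(1 for p in points if p <= q)   # points in (-inf, q]
    right = sum(1 for p in points if p >= q)  # points in [q, +inf)
    return min(left, right)


# Hypothetical sample; the deepest sample point is a Tukey median.
sample = [1, 2, 4, 7, 9]
deepest = max(sample, key=lambda q: tukey_depth_1d(q, sample))
```

On this sample the depth peaks at the middle value and drops to zero for any query outside the range of the data.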

Since Tukey’s introduction of Tukey depth, several other important depth functions have been defined to measure the centrality of $q$ relative to $P$.

Definition 2 (Mahalanobis Depth [9])

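The Mahalanobis depth of a point $q$ relative to a set $P$ in $\mathbb{R}^d$ is defined as $\left[1 + (q - \mu)^{T} \Sigma^{-1} (q - \mu)\right]^{-1}$, where $\mu$ and $\Sigma$ are the mean vector and dispersion matrix of $P$.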

This function lacks robustness, as it relies on non-robust measures like the mean and the dispersion matrix. Another possible disadvantage of Mahalanobis depth is its reliance on the existence of second moments [9].

Definition 3 (Convex Hull Peeling Depth [2])

The convex hull peeling depth of a point $q$ relative to a set $P$ in $\mathbb{R}^d$ is the level of the convex layer to which $q$ belongs.

A convex layer is established by recursively removing points on the convex hull boundary of $P$ until $q$ is outside the hull. Begin by constructing the convex hull of $P$. Points of $P$ on the boundary of the hull constitute the initial convex layer and are removed. Then, form the convex hull anew with the remaining points of $P$. The points along this new hull’s boundary constitute the second convex layer. This iterative process continues, generating a sequence of nested convex layers. The deeper a query point $q$ resides within $P$, the deeper the layer it belongs to. However, convex hull peeling depth has certain drawbacks. It is not robust in the presence of outliers or noise. Additionally, it is infeasible to associate this measure with a theoretical distribution.

Definition 4 (Oja Depth [11])

The Oja depth of a point $q$ relative to a set $P$ in $\mathbb{R}^d$ is defined as the sum of the volumes of every closed simplex having one vertex at $q$ and its remaining vertices at any $d$ points of $P$.

In $\mathbb{R}^2$, the Oja depth of a point $q$ is the sum of the areas of all triangles formed by the vertices $q$, $p_i$, and $p_j$, where $p_i, p_j \in P$.
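The planar case can be illustrated with a short sketch that sums triangle areas over all pairs of input points. The names `triangle_area` and `oja_depth_2d` and the example triangle are assumptions made for illustration only.

```python
from itertools import combinations


def triangle_area(a, b, c):
    """Area of triangle abc via the absolute value of the 2D cross product."""
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])
    return abs(cross) / 2.0


def oja_depth_2d(q, points):
    """Sum of the areas of all triangles with one vertex at q and two in points."""
    return sum(triangle_area(q, pi, pj) for pi, pj in combinations(points, 2))


# Hypothetical input: a right triangle with legs of length 2.
pts = [(0, 0), (2, 0), (0, 2)]
inside = oja_depth_2d((1, 1), pts)
outside = oja_depth_2d((2, 2), pts)
```

As with HDD, smaller sums correspond to more central points under this convention.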

Definition 5 (Simplicial Depth [8])

The simplicial depth of a point $q$ relative to a set $P$ in $\mathbb{R}^d$ is defined as the number of closed simplices containing $q$ and having vertices in $P$.

In the plane, the simplicial depth of a point $q$ is the number of triangles with vertices in $P$ and containing $q$. This is a common measure of data depth.
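A brute-force sketch of the planar case counts the closed triangles that contain the query point. It assumes the input points are in general position (no three collinear); the helper names `orient`, `in_closed_triangle`, and `simplicial_depth_2d` are hypothetical.

```python
from itertools import combinations


def orient(a, b, c):
    """Signed area test: positive if a, b, c make a left turn."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def in_closed_triangle(q, a, b, c):
    """True if q lies in the closed triangle abc (boundary included)."""
    d1, d2, d3 = orient(a, b, q), orient(b, c, q), orient(c, a, q)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)


def simplicial_depth_2d(q, points):
    """Number of triangles with vertices in points that contain q."""
    return sum(1 for a, b, c in combinations(points, 3)
               if in_closed_triangle(q, a, b, c))


# Hypothetical input: the corners of a 4 x 4 square.
corners = [(0, 0), (4, 0), (4, 4), (0, 4)]
```

This enumeration examines every triple of points, so it is meant for small inputs only.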

Definition 6 ($L_1$ Depth [13])

The $L_1$ depth of a point $q$ relative to a set $P$ in $\mathbb{R}^d$ is defined as $\sum_{p \in P} \|q - p\|_1$.

The $L_1$ median is the point that minimizes the sum of the absolute distances (also known as the $L_1$ norm or Manhattan distance) to all other points in $P$. The key advantage of the $L_1$ median is its robustness to outliers. It is less sensitive to extreme values in the dataset compared to the $L_2$ median, which minimizes the sum of squared distances. As a result, the $L_1$ median can provide a more accurate estimate of central tendency in datasets with outliers or heavy-tailed distributions. The $L_1$ median is used in various fields, including finance, image processing, and robust statistics, whenever there is a need for a robust estimate of the central location of a dataset that may contain atypical values.

Definition 7 ($L_2$ Depth [14])

The $L_2$ depth (mean) of a point $q$ relative to a set $P$ in $\mathbb{R}^d$ is defined as $\sum_{p \in P} \|q - p\|_2^2$.

The $L_2$ median is the point that minimizes the sum of the squared Euclidean distances; it coincides with the mean. The mean is a widely used measure of central tendency in statistics and data analysis. The mean is not robust to outliers; a single outlier can pull the mean arbitrarily far.

Definition 8 (Fermat-Weber Depth [4])

The Fermat-Weber depth (Geometric depth) of a point $q$ relative to a set $P$ in $\mathbb{R}^d$ is defined as $\sum_{p \in P} \|q - p\|_2$.

A deepest point (median) with respect to Fermat-Weber depth cannot be calculated exactly in general when $d \geq 2$ and $|P| \geq 5$ [1].

There is no single depth function that universally outperforms all others. The choice of a particular depth function often depends on its suitability for a specific dataset or its ease of computation. Nevertheless, there are several desirable properties that all data depth functions should ideally possess. In Section 3, we introduce a new depth measure, and we examine which of these properties it satisfies.

3 Results

In this section, we will introduce the Hyperplane Distance Depth (HDD) measure and study its properties.

3.1 Definition

Definition 9 (Hyperplane distance depth)

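The hyperplane distance depth (HDD) of a point $q$ relative to a set $P$ in $\mathbb{R}^d$ is defined as

$$D_P(q) = \sum_{h \in H} \operatorname{dist}(q, h), \tag{1}$$

where $H$ is the set of all $(d-1)$-dimensional hyperplanes determined by points in $P$, and $\operatorname{dist}(q, h)$ denotes the Euclidean ($\ell_2$) distance from the point $q$ to the hyperplane $h$.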
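To make the definition concrete, the following sketch evaluates the sum of point-to-hyperplane distances in the plane, where each hyperplane is the line through a pair of input points. It is a direct, unoptimized evaluation; the names `line_through` and `hdd_2d` are assumptions, and the input points are assumed to be distinct.

```python
from itertools import combinations
from math import hypot


def line_through(p, r):
    """Coefficients (a, b, c) of the line a*x + b*y + c = 0 through p and r."""
    a = r[1] - p[1]
    b = p[0] - r[0]
    c = -(a * p[0] + b * p[1])
    return a, b, c


def hdd_2d(q, points):
    """Sum of Euclidean distances from q to the lines through all point pairs."""
    total = 0.0
    for p, r in combinations(points, 2):
        a, b, c = line_through(p, r)
        total += abs(a * q[0] + b * q[1] + c) / hypot(a, b)
    return total


# Hypothetical input: the corners of a 2 x 2 square.
square = [(0, 0), (2, 0), (2, 2), (0, 2)]
center_depth = hdd_2d((1, 1), square)
corner_depth = hdd_2d((0, 0), square)
```

For the square, the center is at distance 1 from each side and lies on both diagonals, so its depth value is smaller than that of a corner.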

3.2 Properties

Theorem 3.1.

In $\mathbb{R}$, the HDD median relative to the set $P$ coincides with the usual univariate definition of median.

Proof 3.2.

When $d = 1$, each hyperplane is a single point of $P$. By Definition 9, the median is therefore a point that minimizes the sum of the distances to the points in $P$; that is, the HDD of $q$ is $\sum_{p \in P} |q - p|$. This sum is minimized exactly at the univariate medians of $P$. Consequently, the HDD median is equivalent to the usual definition of median in a one-dimensional space.
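The one-dimensional statement can be checked numerically with a small sketch. The function names `hdd_1d` and `median_interval` and the sample values are assumptions for illustration.

```python
def hdd_1d(q, points):
    """In one dimension each hyperplane is an input point, so HDD is a sum of |q - p|."""
    return sum(abs(q - p) for p in points)


def median_interval(points):
    """Lower and upper univariate medians (equal when the count is odd)."""
    s = sorted(points)
    n = len(s)
    return s[(n - 1) // 2], s[n // 2]


# Hypothetical samples: one with an outlier, one with an even count.
odd = [1, 2, 4, 7, 100]
even = [1, 3, 5, 9]
best_odd = min(odd, key=lambda q: hdd_1d(q, odd))
```

With an even number of points, every value between the two middle points attains the same minimum, which matches the usual convention that any such value is a median.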

Theorem 3.3.

The HDD function relative to the set $P$ is convex over $\mathbb{R}^d$.

Proof 3.4.

The distance function from a query point $q$ to the hyperplane $h$ is convex. Any non-negative linear combination of convex functions is convex. Therefore, the HDD function is convex over $\mathbb{R}^d$.

Theorem 3.5.

The HDD median point relative to the set $P$ of points in $\mathbb{R}^d$ is always on one of the intersection points between hyperplanes in $H$.

Proof 3.6.

The distance from the point to a hyperplane is equal to where and are the hyperplane’s normal vector and the offset respectively. Therefore, the HDD of the point is equal to

| (2) |

Depending on the position of with respect to , can be equal to (above the hyperplane) , (below the hyperplane), or (on the hyperplane). Therefore, for any point we have

| (3) |

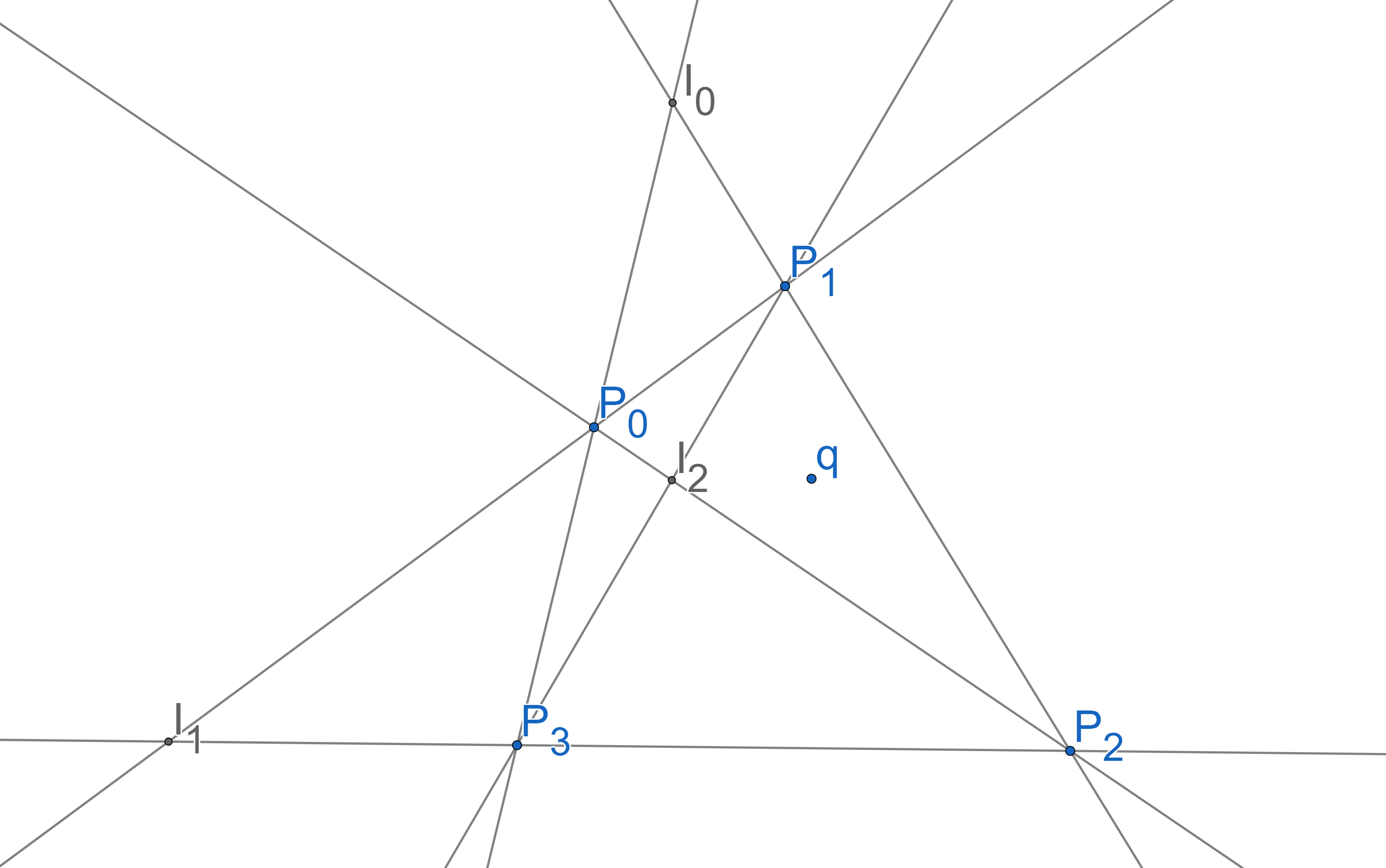

It is worth noting that the derivative of the equation (3) exists if is not on any of the hyperplanes in (). Now to find the HDD median with the minimum HDD measure, we should compute the derivative with respect to and see where it will be equal to . For any query point inside a region bounded by some hyperplanes and not on any hyperplanes (Figure 1) we have

| (4) |

Equation (4) above cannot be equal to in general since there are no variables (4). This means the assumption we made about the query point not being on the hyperplanes in was incorrect. Therefore, we can say the median is surely on one of the hyperplanes. If is on , will be equal to . Therefore, we can say

| (5) |

Using the same logic we can conclude that the median point should be on another hyperplane in addition to . We can repeat these steps times and after that, it will be proved that the median should be on the intersection point of hyperplane (that will be a single point), thus the median will be on one of the intersection points.

Theorem 3.7.

The HDD median point relative to the set $P$ in $\mathbb{R}^d$ is always in the convex hull of the input points $P$.

Proof 3.8.

Let $D_p(q)$ be the sum of the distances from $q$ to all the hyperplanes in $H$ passing through the point $p \in P$. The minimum of this convex function is always at the point $p$, where it is equal to $0$. On the other hand, since each hyperplane includes $d$ input points from $P$, we have $D_P(q) = \frac{1}{d} \sum_{p \in P} D_p(q)$, where $D_P(q)$ denotes the HDD of $q$.

Now consider a point $q$ outside of the convex hull. By computing the gradient of $D_P$, we will show that by moving $q$ closer to the convex hull, the HDD gets smaller. Using the equation above we have $\nabla D_P(q) = \frac{1}{d} \sum_{p \in P} \nabla D_p(q)$. Since the minimum of the function $D_p$ is at $p$, $-\nabla D_p(q)$ is a vector pointing toward $p$ for every $p \in P$. Therefore we can conclude that for every point $q$ outside of the convex hull, $-\nabla D_P(q)$ points toward the convex hull, which means that by moving in that direction, the HDD decreases. Therefore the HDD median is always in the convex hull of $P$.

Theorem 3.9.

The HDD median point relative to a centrally symmetric set $P$ in $\mathbb{R}^d$ is always at the center of symmetry.

Proof 3.10.

Let $m$ be the median of $P$, where $P$ is symmetric about a center $c$. If $m$ is not at the center of symmetry, consider $m'$, the reflection of $m$ across $c$. By symmetry, the HDD measure of both points is equal. Since the median point has the minimum depth measure among all points and the depth measure function is convex, all the points on the line segment $mm'$ have a depth measure equal to that of the median. In particular, the midpoint of $mm'$, which is $c$, attains the minimum. Therefore, the median is always at the center of symmetry.

Theorem 3.11.

The HDD measure relative to the set $P$ in $\mathbb{R}^d$ vanishes as we move the query point to infinity.

Proof 3.12.

As we move the query point $q$ to infinity, there exists a hyperplane $h \in H$ from which $q$ gets arbitrarily far. Since the distance from $q$ to $h$ grows without bound, and the distance from $q$ to the remaining hyperplanes in $H$ is non-negative, HDD vanishes at infinity.

Note that some measures of depth are defined such that deep points have high depth values and outliers have low depth values, whereas this property is reversed for other depth measures. HDD is of the latter type, with central points having a low sum of distances to hyperplanes in $H$, whereas this sum approaches infinity as $q$ moves away from $P$. Consequently, for HDD, “vanishing at infinity” means that depth approaches $\infty$ as opposed to 0.

Theorem 3.13.

The HDD measure relative to the set $P$ in $\mathbb{R}^d$ is not robust.

Proof 3.14.

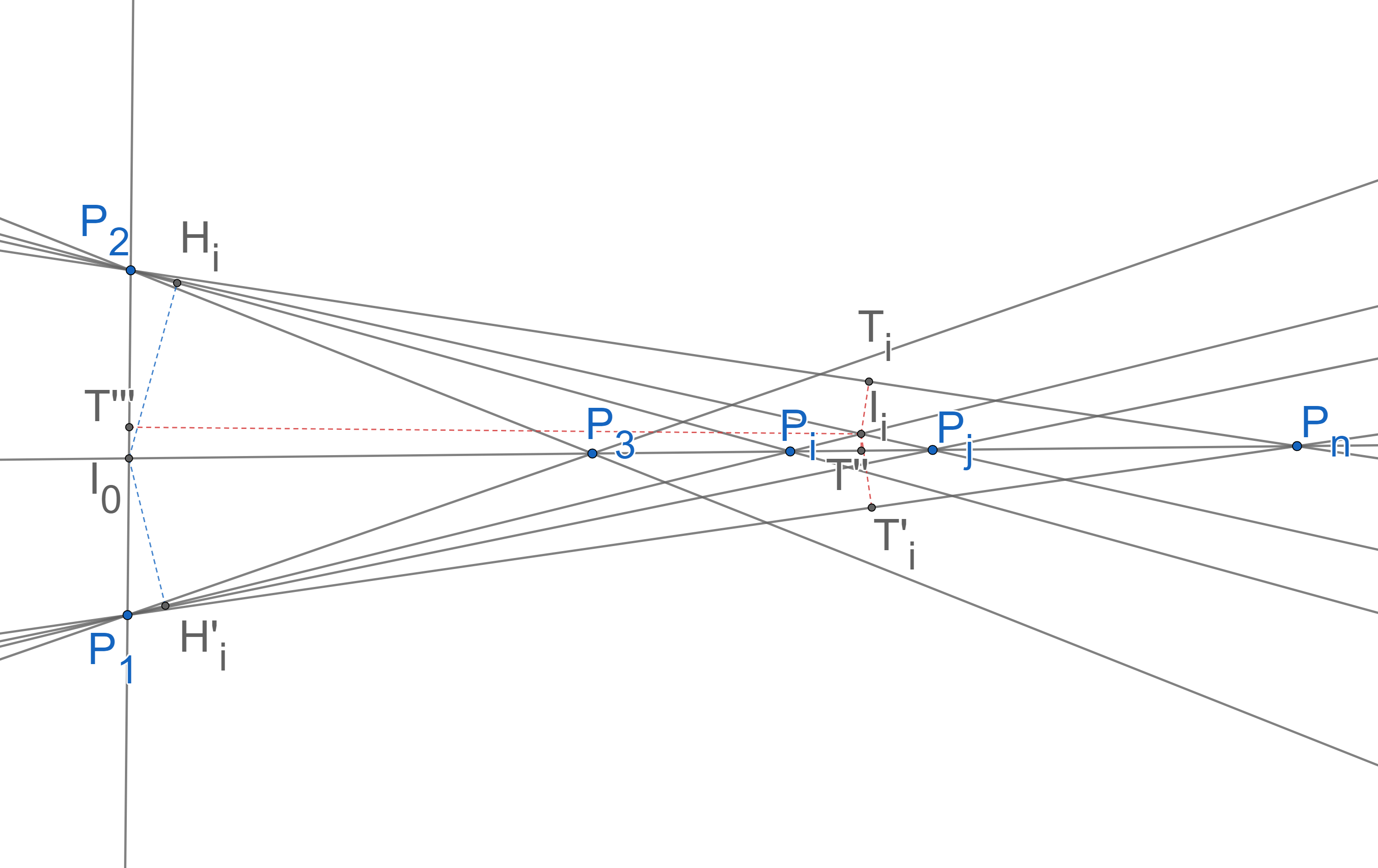

We will prove this fact using a counter-example in a 2-dimensional space (Figure 2). We can move the HDD median by changing the location of 2 points which means the HDD is not robust. The median is always on one of the intersection points and we can place the points in a way that is always the median (Figure 2). We will compute the depth measures for the points (5) and (7), where is an arbitrary intersection point except .

| (6) | ||||

| (7) |

Regardless of the position, we know that and . Therefore, we have (Equation (6)):

| (8) |

On the other hand, using Equation (7) we have:

| (9) |

Now by moving the points and far enough, let , where is a positive number. Therefore, we have (inequality 9):

| (10) |

Consequently, is the median. By increasing , the median gets as far as we want. This means by moving and , we can move the median point as much as we want.

Definition 3.15 (-stability [5]).

A depth measure is -stable if for all points in , all sets in , all , and all functions such that , ,

| (11) |

where .

That is, for any -perturbation of and , the depth of relative to changes by at most .

Theorem 3.16.

The HDD measure relative to the set in is not -stable for any constant .

Proof 3.17.

Choose any and let . Let be a set of points in and let lie to the left of . By moving all points of one unit to the right (), the hyperplane depth of relative to increases by a factor of , regardless of . Thus HDD is not -stable.

Theorem 3.18.

The HDD function relative to the set $P$ in $\mathbb{R}^d$ is not equivariant under affine transformations.

Proof 3.19.

We will prove this theorem using a counter-example. Consider the set of points . Using Theorem 3.5 we can show that the median is on point . Now consider the set that is under the non-uniform affine transformation matrix . Using Theorems 3.5 and 3.9, it can be shown that the median is on the line now. This means that the HDD median is not equivariant under affine transformations.

As we now show, HDD is equivariant under similarity transformations, including translation, rotation, reflection and uniform scaling, since these preserve the shape of $P$.

Theorem 3.20.

The HDD function relative to the set $P$ in $\mathbb{R}^d$ is equivariant under similarity transformations.

Proof 3.21.

For any rotation, reflection, or translation transformation $T$, the distance from the query point to any hyperplane remains unchanged. That is, for any point $q$ and any hyperplane $h$, $\operatorname{dist}(T(q), T(h)) = \operatorname{dist}(q, h)$.

For any uniform scaling transformation with a scaling factor of $k > 0$, distances between each pair of points are multiplied by $k$ after the transformation. Therefore, for any query point $q$, the HDD is multiplied by $k$ after uniform scaling, so a point that minimizes the HDD is mapped to a minimizer for the scaled set. Therefore, the median is equivariant under the uniform scaling transformation.
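The scaling behaviour in the proof can be checked numerically in the plane. The sketch below applies a similarity transformation (rotation, uniform scaling by `k`, then translation) to both the query point and the input set, and compares the resulting depth with `k` times the original depth. The names `hdd_2d` and `similarity` and all parameter values are assumptions chosen for illustration.

```python
from itertools import combinations
from math import cos, sin, hypot


def hdd_2d(q, points):
    """Sum of distances from q to the lines through all pairs of points."""
    total = 0.0
    for p, r in combinations(points, 2):
        cross = (r[0] - p[0]) * (q[1] - p[1]) - (r[1] - p[1]) * (q[0] - p[0])
        total += abs(cross) / hypot(r[0] - p[0], r[1] - p[1])
    return total


def similarity(pt, k, theta, shift):
    """Rotate pt by theta, scale uniformly by k, then translate by shift."""
    x, y = pt
    return (k * (cos(theta) * x - sin(theta) * y) + shift[0],
            k * (sin(theta) * x + cos(theta) * y) + shift[1])


# Hypothetical data and transformation parameters.
P = [(0, 0), (4, 0), (1, 3), (5, 4)]
q = (2, 1)
k, theta, shift = 2.5, 0.7, (3.0, -1.0)

before = hdd_2d(q, P)
after = hdd_2d(similarity(q, k, theta, shift),
               [similarity(p, k, theta, shift) for p in P])
```

Up to floating-point error, `after` equals `k * before`, which is the relationship the proof relies on.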

4 Algorithms

In this section, we provide three algorithms: a) to compute HDD queries in $O(d \log n)$ time after preprocessing, b) to find an HDD median point in $O(d n^{d^2} \log n)$ time, and c) to find an approximate HDD median. Let $P$ be a set of $n$ points in $\mathbb{R}^d$, and let $H$ be the set of hyperplanes determined by points in $P$.

4.1 HDD Query Algorithm

The hyperplane distance depth of a query point $q$ relative to $P$ can be computed by directly evaluating Equation (2) in $O(d n^d)$ time. We will present an algorithm that can calculate HDD in logarithmic time after preprocessing. First, to measure the HDD of $q$, we need to store some coefficients belonging to each polytope formed by hyperplanes in $H$.

Consider Equation (3). Let $\mathcal{C}$ be the set of all minimal polytopes (cells) determined by the arrangement of hyperplanes in $H$. For a query point $q$ in a polytope $C \in \mathcal{C}$, the sign coefficients in Equation (3) are the same for every $h \in H$. Therefore, for all points $q$ in $C$, we can simplify the summation in (3) in $O(d n^d)$ time and find the coefficients $\alpha_C \in \mathbb{R}^d$ and $\beta_C \in \mathbb{R}$ such that

$$D_P(q) = \alpha_C \cdot q + \beta_C, \tag{12}$$

where $D_P(q)$ denotes the HDD of $q$ relative to $P$.

Using Euler’s characteristic theorem we know that there are polytopes formed by the hyperplanes in e.g. in Figure 1 there are polytopes (faces) formed by the hyperplanes (lines). Therefore we will need space and time to preprocess.

Using the mentioned data structure we can calculate the HDD measure in $O(d)$ time if we know to which polytope the query point belongs.
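The per-polytope coefficients of Equation (12) can be illustrated in the plane. The sketch below collapses the signed sum for the cell containing a reference point into one vector and one scalar, then evaluates the depth of another point in the same cell with a single dot product. It does not implement point location; the names `cell_coefficients` and `hdd_in_cell`, and the choice of reference point, are assumptions.

```python
from itertools import combinations
from math import hypot


def lines_through_pairs(points):
    """Unit-normal form (a, b, c) of a*x + b*y + c = 0 for every pair of points."""
    lines = []
    for p, r in combinations(points, 2):
        a, b = r[1] - p[1], p[0] - r[0]
        norm = hypot(a, b)
        lines.append((a / norm, b / norm, -(a * p[0] + b * p[1]) / norm))
    return lines


def hdd_direct(q, lines):
    """Direct evaluation: one absolute value per line."""
    return sum(abs(a * q[0] + b * q[1] + c) for a, b, c in lines)


def cell_coefficients(ref, lines):
    """Collapse the signed sum into (alpha, beta) for the cell containing ref.

    ref must lie strictly inside a cell, i.e., on none of the lines.
    """
    ax = ay = beta = 0.0
    for a, b, c in lines:
        sign = 1.0 if a * ref[0] + b * ref[1] + c > 0 else -1.0
        ax += sign * a
        ay += sign * b
        beta += sign * c
    return (ax, ay), beta


def hdd_in_cell(q, alpha, beta):
    """Constant-work evaluation, valid for any q in the same cell as ref."""
    return alpha[0] * q[0] + alpha[1] * q[1] + beta


# Hypothetical input: square corners; (1.0, 0.5) lies strictly inside one cell.
square = [(0, 0), (2, 0), (2, 2), (0, 2)]
lines = lines_through_pairs(square)
alpha, beta = cell_coefficients((1.0, 0.5), lines)
```

Storing one `(alpha, beta)` pair per cell is what allows a query to avoid touching every hyperplane once the containing cell is known.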

Given hyperplanes in -dimensional space and a query point , it takes time to find the location with a data structure of size and a preprocessing time of [3]. In our problem, there are hyperplanes. Therefore, with a preprocessing time of and a space of , we can find the location of in time.

After locating $q$ in the arrangement of $H$, we can calculate the HDD measure in $O(d)$ time using Equation (12).

Therefore, after preprocessing time using space, we can find the HDD of an arbitrary query point in time. This proves the following theorem.

Theorem 4.1.

We can preprocess any given set of points in in time, such that given any point , we can compute in time.

4.2 Finding a HDD Median

By Theorem 3.5, a straightforward algorithm for finding an HDD median of $P$ is to check all points of intersection between hyperplanes in $H$ using an exhaustive search. There are $O(n^d)$ hyperplanes in $H$ and therefore $O(n^{d^2})$ intersection points between hyperplanes in $H$. Since it takes $O(d n^d)$ time to compute Equation (2) directly, an HDD median of $P$ can be found in $O(d n^{d^2 + d})$ time by this brute-force algorithm.

Next, we will introduce an algorithm that finds the HDD median in $O(d n^{d^2} \log n)$ time. When $d = 2$, this second algorithm runs in $O(n^4 \log n)$ time, compared to $O(n^6)$ time for the brute-force algorithm. First, we will show that we can find the point with the smallest HDD on a line in $O(d n^d \log n)$ time. Consider the intersection of $d - 1$ hyperplanes in $H$ that determine a line $\ell$. Since every hyperplane in $H$ that neither contains nor is parallel to $\ell$ has exactly one point of intersection with $\ell$, $H \cap \ell$ is a set of $O(n^d)$ points of intersection. By Theorem 3.3, we can conclude that the hyperplane depth of points on $\ell$ is a convex function. Since $H \cap \ell$ is discrete, using binary search and calculating HDD in $O(d n^d)$ time using Equation (2), we can find the intersection point with the minimum HDD in $O(d n^d \log n)$ time.

We can use the algorithm above to find the minimum point for each intersection line among hyperplanes in $H$ to find an HDD median. Since each set of $d - 1$ hyperplanes in $H$ forms a line, there are $O(n^{d(d-1)})$ lines and thus we can find the median in $O(d n^{d^2} \log n)$ time. This proves the following theorem.
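The exhaustive search can be sketched for the planar case, where the candidates are the pairwise intersections of the lines through pairs of input points. This is an illustration under the assumption that the input contains at least three non-collinear points; the function names are hypothetical.

```python
from itertools import combinations
from math import hypot


def lines_through_pairs(points):
    """Unit-normal form (a, b, c) of a*x + b*y + c = 0 for every pair of points."""
    lines = []
    for p, r in combinations(points, 2):
        a, b = r[1] - p[1], p[0] - r[0]
        norm = hypot(a, b)
        lines.append((a / norm, b / norm, -(a * p[0] + b * p[1]) / norm))
    return lines


def hdd(q, lines):
    """Sum of distances from q to all lines (normals have unit length)."""
    return sum(abs(a * q[0] + b * q[1] + c) for a, b, c in lines)


def hdd_median_bruteforce(points):
    """Evaluate HDD at every intersection point and keep the smallest."""
    lines = lines_through_pairs(points)
    candidates = []
    for (a1, b1, c1), (a2, b2, c2) in combinations(lines, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) > 1e-12:  # skip parallel or identical lines
            x = (b1 * c2 - b2 * c1) / det
            y = (a2 * c1 - a1 * c2) / det
            candidates.append((x, y))
    return min(candidates, key=lambda q: hdd(q, lines))


# Hypothetical inputs.
square = [(0, 0), (2, 0), (2, 2), (0, 2)]
triangle = [(0, 0), (4, 0), (0, 3)]
m_square = hdd_median_bruteforce(square)
m_triangle = hdd_median_bruteforce(triangle)
```

For the square the search returns the center, in line with the symmetry property; for the triangle the only candidates are the three vertices.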

Theorem 4.2.

Given a set $P$ of $n$ points in $\mathbb{R}^d$, we can find an HDD median of $P$ in $O(d n^{d^2} \log n)$ time.
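The line-by-line search behind this bound can be sketched in the plane, where each line through a pair of input points plays the role of the line formed by intersecting hyperplanes. For each line, the distinct intersection points are sorted along the line and a binary search over the convex sequence of HDD values locates the minimum. The sketch assumes at least three non-collinear input points and uses a tolerance `eps` to merge coincident intersection points; all names are hypothetical.

```python
from itertools import combinations
from math import hypot


def lines_through_pairs(points):
    """Unit-normal form (a, b, c) of a*x + b*y + c = 0 for every pair of points."""
    out = []
    for p, r in combinations(points, 2):
        a, b = r[1] - p[1], p[0] - r[0]
        n = hypot(a, b)
        out.append((a / n, b / n, -(a * p[0] + b * p[1]) / n))
    return out


def hdd(q, lines):
    return sum(abs(a * q[0] + b * q[1] + c) for a, b, c in lines)


def candidates_on(line, lines, eps=1e-9):
    """Distinct intersection points on `line`, sorted along its direction."""
    a1, b1, c1 = line
    pts = []
    for a2, b2, c2 in lines:
        det = a1 * b2 - a2 * b1
        if abs(det) > eps:  # skips the line itself and parallel lines
            x = (b1 * c2 - b2 * c1) / det
            y = (a2 * c1 - a1 * c2) / det
            pts.append((-b1 * x + a1 * y, (x, y)))  # position along the line
    pts.sort()
    distinct = []
    for t, pt in pts:
        if not distinct or t - distinct[-1][0] > eps:
            distinct.append((t, pt))
    return [pt for _, pt in distinct]


def min_on_line(line, lines):
    """Binary search for the minimum of the convex sequence of HDD values."""
    cand = candidates_on(line, lines)
    lo, hi = 0, len(cand) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if hdd(cand[mid], lines) <= hdd(cand[mid + 1], lines):
            hi = mid       # minimum is at mid or to its left
        else:
            lo = mid + 1   # values still decreasing; move right
    return cand[lo]


def hdd_median(points):
    lines = lines_through_pairs(points)
    return min((min_on_line(l, lines) for l in lines),
               key=lambda q: hdd(q, lines))


# Hypothetical input with no parallel lines and no three collinear points.
pts = [(0, 0), (4, 0), (1, 3), (5, 4), (2, 1)]
median = hdd_median(pts)
```

Merging coincident intersection points matters here: several lines meet at each input point, and repeated entries would create flat runs that break the comparison of neighbouring values.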

4.3 Finding an Approximate HDD Median in $\mathbb{R}^2$

In this section, we will present an approximation algorithm to find an HDD median of with an error of in time, for any fixed , where is the diameter of .

Theorem 4.3.

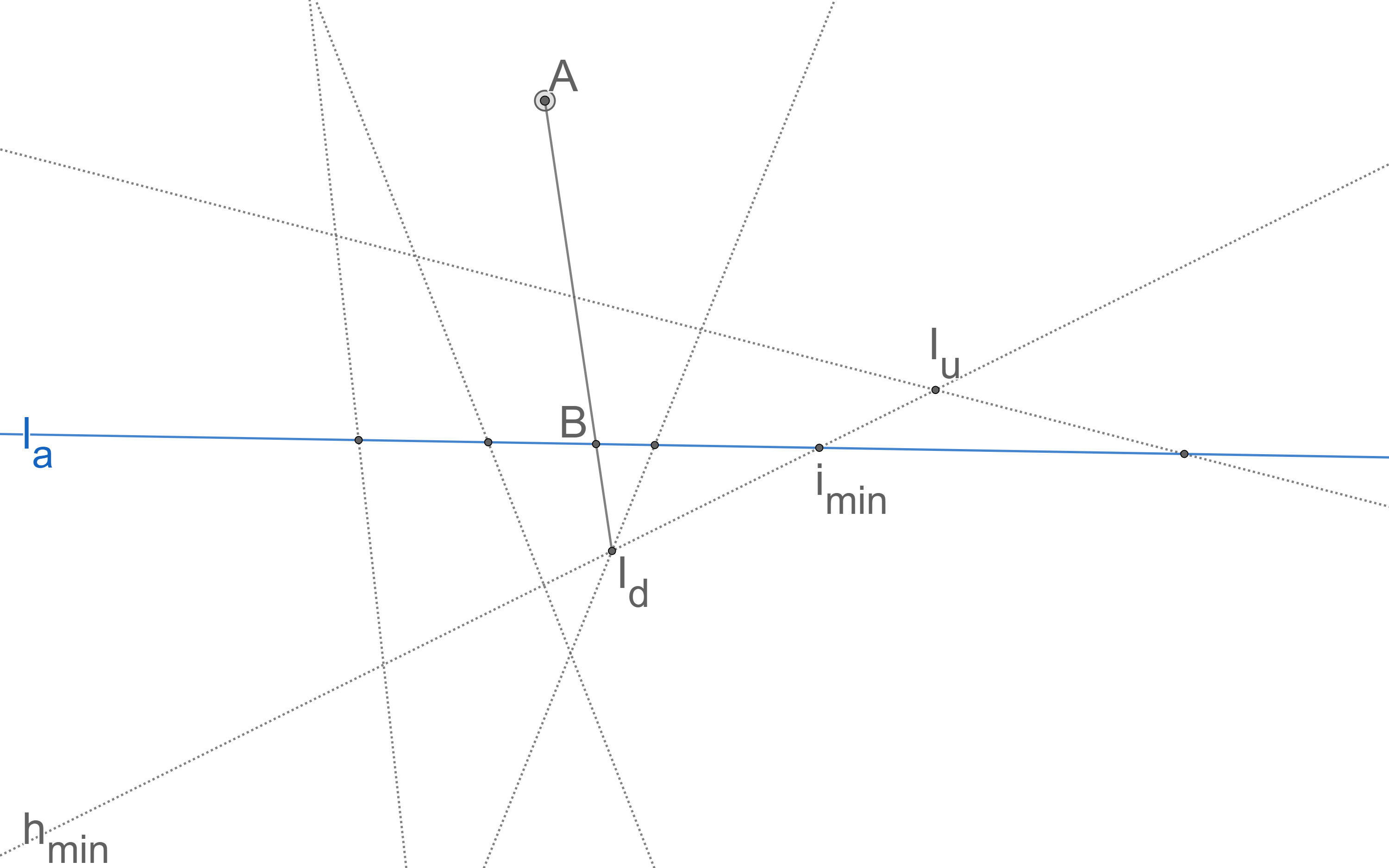

Given a set of points in , in time we can find a point in such that , for any fixed , where denotes an HDD median of and .

Proof 4.4.

Let be an arbitrary line among the lines in (see Figure 3). There are lines in and, consequently, points of intersection between and lines in . Using an analogous argument as in the proof of Theorem 3.5, the point with minimum HDD on lies at an intersection of and a line in . Therefore, using the same algorithm described in Section 4.2, we can find the point on with minimum HDD in time; let denote the line in such that . Next, we find the closest points of intersection in to on the line in each direction, say and . We compute the HDD for all the three points , , and . Since has minimum HDD on the line , if , then is the HDD median (by Theorem 3.3). By Theorem 3.3 again, or . Furthermore, or . Without loss of generality, suppose . We claim that all points in the half-plane bounded by that contains have HDD that exceeds ; we prove this by contradiction. Suppose there exists a point in this half-plane such that . Let be the intersection point of the line and the line segment . Since has minimum HDD on the line , therefore, . Furthermore, . On the other hand, we assumed and we know . Consequently, . Combining the two resulting inequalities above, we have and , which is impossible since the three points are on the same line and the HDD function is convex. Therefore, no such point can exist.

Therefore, no point of intersection in in this half-plane can be an HDD median of ; in time we can remove these points from consideration in our search for a median.

Now we will use this property to approximate the median point. Firstly, we will find the diameter of the input points in time and consider an square that contains (see Figure 4). By Theorem 3.7, we know that the median lies inside this square. At each step, we draw the two lines and that partition the square into four similar smaller squares, each with dimensions , and we apply the above algorithm to eliminate two half-planes in time. After steps we have a square of dimensions and we return its center as an approximation of the HDD median. Since the HDD median is a point inside this square, the error is at most . The total time complexity of the algorithm is .

This strategy can be generalized to higher dimensions by finding the minimum HDD on an arbitrary hyperplane (analogous to the line in the proof of Theorem 4.3) to eliminate a half-space, but the time complexity of finding the minimum HDD point on is high.

5 Discussion and Possible Directions for Future Research

Our algorithm for computing HDD queries presented in Section 4.1 requires space and preprocessing time. One natural possible direction for future research is to identify algorithms with improved preprocessing time or space.

Our algorithm for finding an HDD median presented in Section 4.2 requires $O(d n^{d^2} \log n)$ time. In addition to seeking to identify lower bounds on the worst-case running time required to find an HDD median, we could attempt to reduce the running time using techniques such as gradient descent or linear programming.

Our analysis of our algorithm for finding an approximate HDD median presented in Section 4.3 does not capitalize on the fact that the number of candidate points decreases on each step; we charge time per step. If it could be shown that a constant fraction of the remaining points are eliminated on each step, then the bound on the algorithm’s time complexity would be significantly improved.

Finally, we could consider alternative definitions for depth using similar notions to those in Definition 9. E.g., one can define a “line distance depth” that evaluates the distances to all possible lines passing through each pair of points in the set of input points. This definition coincides with Definition 9 when $d = 2$, but differs in higher dimensions, for $d \geq 3$.

References

- [1] C. Bajaj. The algebraic degree of geometric optimization problems. Discrete and Computational Geometry, 3:177–191, 1988.

- [2] V. Barnett. The ordering of multivariate data. Journal of the Royal Statistical Society: Series A (General), 139(3):318–344, 1976.

- [3] B. Chazelle and J. Friedman. Point location among hyperplanes and unidirectional ray-shooting. Computational Geometry, 4(2):53–62, 1994.

- [4] R. Durier and C. Michelot. Geometrical properties of the Fermat-Weber problem. European Journal of Operational Research, 20(3):332–343, 1985.

- [5] S. Durocher and D. Kirkpatrick. The projection median of a set of points. Computational Geometry: Theory and Applications, 42(5):364–375, 2009.

- [6] S. Durocher, A. Leblanc, and M. Skala. The projection median as a weighted average. Journal of Computational Geometry, 8(1):78–104, 2017.

- [7] R. Z. Farahani, M. SteadieSeifi, and N. Asgari. Multiple criteria facility location problems: A survey. Applied mathematical modelling, 34(7):1689–1709, 2010.

- [8] R. Y. Liu. On a notion of data depth based on random simplices. The Annals of Statistics, pages 405–414, 1990.

- [9] P. C. Mahalanobis. On the generalized distance in statistics. Sankhyā: The Indian Journal of Statistics, Series A (2008-), 80:S1–S7, 2018.

- [10] A. T. Murray and V. Estivill-Castro. Cluster discovery techniques for exploratory spatial data analysis. International journal of geographical information science, 12(5):431–443, 1998.

- [11] H. Oja. Descriptive statistics for multivariate distributions. Statistics & Probability Letters, 1(6):327–332, 1983.

- [12] J. Tukey. Mathematics and the picturing of data. In Proc. Int. Cong. Math., pages 523–531, 1975.

- [13] Y. Vardi and C.-H. Zhang. The multivariate $L_1$-median and associated data depth. Proceedings of the National Academy of Sciences, 97(4):1423–1426, 2000.

- [14] Y. Zuo and R. Serfling. General notions of statistical depth function. Annals of statistics, 28(2):461–482, 2000.