11email: {ravigupta131,21D070068,asethi}@iitb.ac.in

IDAL: Improved Domain Adaptive Learning for Natural Images Dataset

Abstract

We present a novel approach for unsupervised domain adaptation (UDA) for natural images. A commonly-used objective for UDA schemes is to enhance domain alignment in representation space even if there is a domain shift in the input space. Existing adversarial domain adaptation methods may not effectively align different domains of multi-modal distributions associated with classification problems. Our approach has two main features. Firstly, its neural architecture uses the deep structure of ResNet and the effective separation of scales of feature pyramidal network (FPN) to work with both content and style features. Secondly, it uses a combination of a novel loss function and judiciously selected existing loss functions to train the network architecture. This tailored combination is designed to address challenges inherent to natural images, such as scale, noise, and style shifts, that occur on top of a multi-modal (multi-class) distribution. The combined loss function not only enhances model accuracy and robustness on the target domain but also speeds up training convergence. Our proposed UDA scheme generalizes better than state-of-the-art for CNN-based methods on Office-Home, Office-31, and VisDA-2017 datasets and comaparable for DomainNet dataset.

Keywords:

Adversarial, Deep Learning, Domain Adaptation, Natural Images1 Introduction

Unsupervised Domain Adaptation (UDA) addresses the performance degradation caused by domain shift in supervised learning, where there’s a significant distribution difference between training (source) and testing (target) data domains. Adversarial-based UDA, utilizing frameworks like Generative Adversarial Networks (GANs) [25] and Domain Adversarial Neural Networks (DANN) [8], aims to mitigate this by learning domain-invariant features from unlabeled target data. By promoting feature harmonization while retaining class information, these models enhance target domain generalization. Despite promising results in image classification and object detection, adversarial UDA faces challenges such as hyper-parameter sensitivity, high-dimensional space navigation, and domain shift detection.

To address the aforementioned challenge, we developed an unsupervised domain adaptation approach that surpasses the state-of-the-art UDA performance of convolution neural networks (CNNs) for benchmark natural image datasets – Office-Home [36], Office-31 [29], VisDA-2017 [26], and shows comparable results for DomainNet [27].

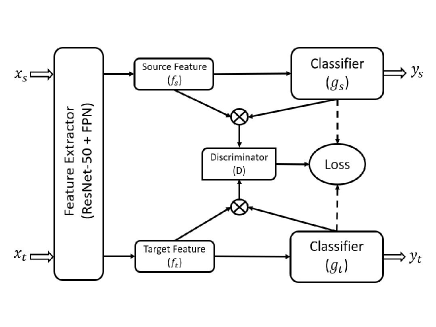

Inspired by the concept of a conditional domain adversarial network (CDAN) [22], our core approach – Improved Domain Adaptive Learning (IDAL) – involves concurrent training of a feature extractor (typically a deep neural network) and a domain classifier (discriminator) tasked with distinguishing between source and target domains. We have explored various CNN-based feature extractors such as ResNet-101, ResNet-50 [12], ViT [6], and ConvMixer [33] to extract meaningful features. The feature extractor’s aim is to learn representations that are invariant to domain shifts, and thus deceive the domain classifier that endeavors to correctly classify the domain of the extracted features. The integration of ResNet-50 and FPN combines [20] deep feature representation and multi-scale extraction, essential for tasks like object detection and segmentation. Given that object scale and style vary by domain, this synergy makes ResNet + FPN a strong candidate for Unsupervised Domain Adaptation (UDA), focusing on higher-level domain-specific feature suppression. This application to UDA is novel.

In the adversarial training process, the feature extractor (ResNet-50 + FPN) and domain classifier compete: the extractor aims to produce domain-agnostic features, while the classifier attempts to distinguish between domains. This method fosters the development of domain-invariant features, enhancing transferability across source and target domains.

To improve the training process, we propose a novel loss function called pseudo label maximum mean discrepancy (PLMMD). We use this loss in addition to certain existing losses – maximum information loss (entropy loss) [15], maximum mean discrepancy (MMD) loss [21], minimum class confusion (MCC) loss [13]. Our model integrates several loss functions to enhance domain adaptation and classification accuracy: Maximum information loss clusters target features by class, preserving key information. MMD loss bridges domain gaps by comparing mean embeddings. MCC loss boosts accuracy by minimizing class confusion, vital for uneven datasets. Our innovative PLMMD loss selectively extracts domain-invariant features, speeding up training. This tailored mix of loss functions enables our method to outperform existing CNN models and achieve quicker convergence on natural image datasets– Office-Home [36], Office-31 [29], and VisDA [26].

2 Background and Related Works

In unsupervised domain adaptation (UDA), we have data from a source domain as labeled examples and that from a target domain as unlabeled examples where ’s are unknown. The source domain and target domain are sampled from the distributions and respectively. Notably, the two distributions are initially not aligned; that is, .

Domain adversarial neural network (DANN) [8] is a framework of choice for UDA. This is a dual-player game involving two key components: the domain discriminator, denoted as , and the feature representation, denoted as . In this setup, is trained to differentiate between the source domain and the target domain, while is simultaneously trained to both confound the domain discriminator and accurately classify samples from the source domain. The discrepancy between the feature distributions and [7] has well corresponding with the error function of the domain discriminator. This is a key to bound the risk associated with the target in the domain adaptation theory [2].

An alternative approach in the field of Unsupervised Domain Adaptation (UDA) focuses on reducing the domain discrepancy as quantified by various metrics, e.g., maximum mean discrepancy(MMD). To establish class-level alignment across domains, the methodology outlined in the study conducted by Pei and colleagues [26] incorporates a multiplicative interaction between feature representations and class predictions. In their studies [3], efforts are made to ensure alignment between the centroids of labeled source data and the centroids derived from pseudo-labeled target data, particularly for shared classes within the feature space.

Another approach to UDA involves employing separate task classifiers for each of the two domains. These classifiers are used to identify non-discriminative features. In turn, they facilitate the learning of a feature extractor that focuses on generating discriminative features [17]. Several other studies emphasize the importance of directing attention towards transferable regions as a means to establish a domain-invariant classification model, as exemplified by [16]. In addition, for the purpose of extracting target-discriminative features,[14] employ techniques such as generating synthetic data from the raw input of the two domains, as described in[25].

Since our work modifies the network and losses of the CDAN framework [22], we explain it here for completeness. To reduce the shift in data distributions across the domains, CDAN trains a deep network , so that source risk = can bound the target risk = plus the distribution discrepancy quantified by a novel conditional domain discriminator. In the context of adversarial learning, Generative Adversarial Networks (GANs) [8] play a pivotal role in mitigating differences between domains. A deep network generates features represented by and classifier prediction denoted by .

We enhance existing methods for adversarial domain adaptation in two specific ways. Firstly, when dealing with non-identical joint distributions of features and classes across domains, as characterized by and , relying solely on the adaptation of the feature representation may prove insufficient, as highlighted in [22]. A quantitative analysis indicates that deep representations tend to transition from a more general to a domain-specific nature as they traverse deeper layers within neural networks. This transition leads to a notable decrease in transferability, particularly observed in the layers responsible for domain-specific feature extraction () and classification (), as detailed in [37]. Secondly, due to the nature of multi-class classification, the feature distribution is multi-modal, and hence adapting feature distribution may be challenging for adversarial networks.

Simultaneous modeling the domain variances in feature representation and classifier prediction facilitates effective domain gap reduction [22]. This joint conditioning helps capture and align data distributions between source and target domains. Thus, incorporating classifier prediction as a conditioning factor in domain adaptation shows promising potential for enhancing transferability and producing domain-invariant representations in challenging cross-domain scenarios. CDAN originally introduced a minimax optimization framework featuring two adversarial loss terms: (a) the source classifier loss, aimed at minimizing it to ensure a lower source risk, and (b) the discriminator loss applied to both the source classifier and the domain discriminator , spanning both the source and target domains. This loss is minimized with respect to while simultaneously maximized with respect to and :

| (1) |

| (2) | ||||

In this context, corresponds to the cross-entropy loss, is the classifier loss, is the discriminator loss and signifies the combined variable encompassing the feature representation and classifier prediction . The minimax game of CDAN is

| (3) |

Here, denotes a hyper-parameter that balances between the two objectives, allowing for a trade-off between source risk and domain adversary concerns.

As depicted in Figure 1, the domain discriminator is conditioned on the classifier prediction via the joint variable , aiming to address the two challenges inherent in adversarial domain adaptation, as discussed in [22]. To incorporate a basic form of conditioning for , we employ —where we concatenate the feature representation and classifier prediction into a vector , which serves as the input for the conditional domain discriminator . This conditioning approach aligns with the common practice observed in existing conditional GANs [8]. However, when utilizing the concatenation approach, and operate independently, missing the opportunity to fully capture the crucial multiplicative interactions between the feature representation and classifier prediction that play a pivotal role in domain adaptation. A multilinear map is formed by computing the outer product of multiple random vectors. This technique, which involves multilinear maps applied to infinite-dimensional nonlinear feature maps, has proven successful in embedding joint or conditional distributions into reproducing kernel Hilbert spaces [31]. In addition to the theoretical advantages offered by the multilinear map in comparison to concatenation , as discussed in [31]. In this research, we harness the capabilities of the multilinear map to condition on . In contrast to concatenation, the multilinear map, denoted as , excels in capturing the intricate multi-modal structures that are inherent in complex data distributions. However, it’s important to note that a drawback of the multilinear map is its potential for dimension explosion.

Our approach involves the joint minimization of the source classifier and feature extractor with respect to Equation (1). Additionally, we minimize Equation (2) to optimize the domain discriminator and simultaneously maximize Equation (2) to enhance the feature extractor and source classifier . This yields the mini-max problem of Domain Adversarial Networks:

| (4) |

In this context, serves as a hyper-parameter responsible for adjusting the weightage between the source classifier and the conditional domain discriminator and acts as the generator. Meanwhile, represents the composite variable encompassing both the domain-specific representation and the classifier prediction which play pivotal roles in adversarial adaptation. [10] shows the improvement in performance with use of tailored loss function for medical datasets.

3 Proposed Method

In our Unsupervised Domain Adaptation (UDA) strategy for cross-domain classification, we leverage insights from a labeled source domain to enhance target domain performance, despite unlabeled conditions. Our method utilizes a multi-scale optimized neural architecture, ensuring well-separated, multi-modal class distributions. Data augmentation techniques such as flipping, resizing, and normalization are applied for domain consistency. A novel aspect of our approach is a unique loss function that, combined with selected existing ones, minimizes domain discrepancies and aligns feature distributions across multi-class datasets with varying image sizes. For augmented image feature extraction, we employ ResNet-50 coupled with the Feature Pyramid Network (FPN), blending deep feature capture with multi-scale extraction to effectively represent detailed and broad image features, a pioneering application in UDA and image classification.

3.1 Proposed loss function

The proposed loss function to train the new architecture in the CDAN [22] framework for improving UDA for image classification can be formulated as follows:

| (5) | |||

where , , , and are hyper parameters, is the information maximization (entropy) loss, is minimum class confusion loss, is maximum mean discrepancy loss, and is a novel pseudo-label maximum mean discrepancy loss. It is worth noting that the original CDAN [22] trained a ResNet (and not ResNet FPN as proposed) using only , and . On the other hand, all other individual loss terms have their own specialty and this novel combination of loss significantly surpasses the performance of CNN-based as well as transformer-based models. A detailed description of all the losses, including the proposed are given below.

3.1.1 Information Maximization loss:

The Information Maximization loss is designed to encourage neural networks to learn more informative representations by maximizing the mutual information between the learned features and the input data [15]. By maximizing the mutual information between the empirical distribution of target inputs and the resulting distribution of target labels, which can be formally defined as:

| (6) |

where, , , and K is the number of classes. By taking into account , our model is incentivized to learn target features that exhibit tight clustering along with a uniform distribution. This approach is designed to retain discriminative information within the target domain.

3.1.2 Minimum Class Confusion:

The minimum class confusion loss, referenced as [13], aims to mitigate confusion between various classes represented by indices and , where these indices collectively encompass the entire set of classes. Notably, this loss term is focused on the target domain and is intended to minimize the confusion between pairs of classes, such as those denoted by and is given by:

After standardizing (normalising) the class confusion terms, the ultimate MCC Loss function is defined as:

| (7) |

This loss is computed as the summation of all non-diagonal elements within the class confusion matrix. The diagonal elements signify the classifier’s level of "certainty," whereas the non-diagonal elements signify the "uncertainty" associated with classification. The MCC loss can be incorporated alongside other domain adaptation techniques.

3.1.3 Maximum Mean Discrepancy:

Maximum Mean Discrepancy (MMD) is a kernel-based two-sample statistical test employed to assess the similarity between two distributions. The final loss for a given probability measure and takes the following form:

| (8) |

3.1.4 Pseudo-label MMD:

We propose a novel loss function called pseudo-label maximum mean discrepancy (PLMMD). This loss function takes into account pseudo-labels that can be generated on the target domain samples after the first few training iterations. Doing so strongly conditions the feature alignment on the classes. It is calculated using a procedure similar to that of calculating MMD. The difference is that we multiply each of the expectations in Equation 8 with weights that are calculated based on pseudo-labels:

| (9) |

where represents weight to get similarity within the source domain, are weights for similarity within the target domain, and are weights to get similarity within the source and target domain. For calculating the weights, firstly source and target label data are normalized to account for class imbalances. For each class common to both datasets, dot products of normalized vectors are computed to quantify instance relationships. Calculated dot products are normalized by the count of common classes, ensuring fairness. This returns three weight arrays, representing relationships between instances in the source dataset, target dataset, and source-to-target pairs.

| Model | AC | AP | AR | CA | CP | CR | PA | PC | PR | RA | RC | RP | Avg. |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ResNet-50 [12] | 34.9 | 50.0 | 58.0 | 37.4 | 41.9 | 46.2 | 38.5 | 31.2 | 60.4 | 53.9 | 41.2 | 59.9 | 46.1 |

| DANN [8] | 45.6 | 59.3 | 70.1 | 47.0 | 58.5 | 60.9 | 46.1 | 43.7 | 68.5 | 63.2 | 51.8 | 76.8 | 57.6 |

| CDAN [22] | 50.7 | 70.6 | 76.0 | 57.6 | 70.0 | 70.0 | 57.4 | 50.9 | 77.3 | 70.9 | 56.7 | 81.6 | 65.8 |

| MDD [38] | 54.9 | 73.7 | 77.8 | 60.0 | 71.4 | 71.8 | 61.2 | 53.6 | 78.1 | 72.5 | 60.2 | 82.3 | 68.1 |

| GVB-GD [5] | 57.0 | 74.7 | 79.8 | 64.6 | 74.1 | 74.6 | 65.2 | 55.1 | 81.0 | 74.6 | 59.7 | 84.3 | 70.4 |

| SRDC [32] | 52.3 | 76.3 | 81.0 | 69.5 | 76.2 | 78.0 | 68.7 | 53.8 | 81.7 | 76.3 | 57.1 | 85.0 | 71.3 |

| SHOT [19] | 56.9 | 78.1 | 81.0 | 67.9 | 78.4 | 78.1 | 67.0 | 54.6 | 81.8 | 73.4 | 58.1 | 84.5 | 71.6 |

| SDAT [28] | 58.2 | 77.1 | 82.2 | 66.3 | 77.6 | 76.8 | 63.3 | 57.0 | 82.2 | 74.9 | 64.7 | 86.0 | 72.2 |

| FixBi [24] | 58.1 | 77.3 | 80.4 | 67.7 | 79.5 | 78.1 | 65.8 | 57.9 | 81.7 | 76.4 | 62.9 | 86.7 | 72.7 |

| IDAL w/o FPN | 58.6 | 77.2 | 80.1 | 69.2 | 76.4 | 76.3 | 70.8 | 56.9 | 82.4 | 77.6 | 63.6 | 84.2 | 72.8 |

| IDAL | 59.8 | 77.8 | 80.8 | 69.8 | 76.9 | 77.0 | 71.6 | 57.4 | 82.9 | 78.5 | 64.1 | 85.6 | 73.5 |

| ADDA [34] | clp | inf | pnt | qdr | rel | skt | Avg. | DANN [8] | clp | inf | pnt | qdr | rel | skt | Avg. | MIMTFL [9] | clp | inf | pnt | qdr | rel | skt | Avg. |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| clp | - | 11.2 | 24.1 | 3.2 | 41.9 | 30.7 | 22.2 | clp | - | 15.5 | 34.8 | 9.5 | 50.8 | 41.4 | 30.4 | clp | - | 15.1 | 35.6 | 10.7 | 51.5 | 43.1 | 31.2 |

| inf | 19.1 | - | 16.4 | 3.2 | 26.9 | 14.6 | 16.0 | inf | 31.8 | - | 30.2 | 3.8 | 44.8 | 25.7 | 27.3 | inf | 32.1 | - | 31.0 | 2.9 | 48.5 | 31.0 | 29.1 |

| pnt | 31.2 | 9.5 | - | 8.4 | 39.1 | 25.4 | 22.7 | pnt | 39.6 | 15.1 | - | 5.5 | 54.6 | 35.1 | 30.0 | pnt | 40.1 | 14.7 | - | 4.2 | 55.4 | 36.8 | 30.2 |

| qdr | 15.7 | 2.6 | 5.4 | - | 9.9 | 11.9 | 9.1 | qdr | 11.8 | 2.0 | 4.4 | - | 9.8 | 8.4 | 7.3 | qdr | 18.8 | 3.1 | 5.0 | - | 16.0 | 13.8 | 11.3 |

| rel | 39.5 | 14.5 | 29.1 | 12.1 | - | 25.7 | 24.2 | rel | 47.5 | 17.9 | 47.0 | 6.3 | - | 37.3 | 31.2 | rel | 48.5 | 19.0 | 47.6 | 5.8 | - | 39.4 | 32.1 |

| skt | 35.3 | 8.9 | 25.2 | 14.9 | 37.6 | - | 25.4 | skt | 47.9 | 13.9 | 34.5 | 10.4 | 46.8 | - | 30.7 | skt | 51.7 | 16.5 | 40.3 | 12.3 | 53.5 | - | 34.9 |

| Avg. | 28.2 | 9.3 | 20.1 | 8.4 | 31.1 | 21.7 | 19.8 | Avg. | 35.7 | 12.9 | 30.2 | 7.1 | 41.4 | 29.6 | 26.1 | Avg. | 38.2 | 13.7 | 31.9 | 7.2 | 45.0 | 32.8 | 28.1 |

| ResNet-101 [12] | clp | inf | pnt | qdr | rel | skt | Avg. | CDAN† [22] | clp | inf | pnt | qdr | rel | skt | Avg. | MDD† [38] | clp | inf | pnt | qdr | rel | skt | Avg. |

| clp | - | 19.3 | 37.5 | 11.1 | 52.2 | 41.0 | 32.2 | clp | - | 20.4 | 36.6 | 9.0 | 50.7 | 42.3 | 31.8 | clp | - | 20.5 | 40.7 | 6.2 | 52.5 | 42.1 | 32.4 |

| inf | 30.2 | - | 31.2 | 3.6 | 44.0 | 27.9 | 27.4 | inf | 27.5 | - | 25.7 | 1.8 | 34.7 | 20.1 | 22.0 | inf | 33.0 | - | 33.8 | 2.6 | 46.2 | 24.5 | 28.0 |

| pnt | 39.6 | 18.7 | - | 4.9 | 54.5 | 36.3 | 30.8 | pnt | 42.6 | 20.0 | - | 2.5 | 55.6 | 38.5 | 31.8 | pnt | 43.7 | 20.4 | - | 2.8 | 51.2 | 41.7 | 32.0 |

| qdr | 7.0 | 0.9 | 1.4 | - | 4.1 | 8.3 | 4.3 | qdr | 21.0 | 4.5 | 8.1 | - | 14.3 | 15.7 | 12.7 | qdr | 18.4 | 3.0 | 8.1 | - | 12.9 | 11.8 | 10.8 |

| rel | 48.4 | 22.2 | 49.4 | 6.4 | - | 38.8 | 33.0 | rel | 51.9 | 23.3 | 50.4 | 5.4 | - | 41.4 | 34.5 | rel | 52.8 | 21.6 | 47.8 | 4.2 | - | 41.2 | 33.5 |

| skt | 46.9 | 15.4 | 37.0 | 10.9 | 47.0 | - | 31.4 | skt | 50.8 | 20.3 | 43.0 | 2.9 | 50.8 | - | 33.6 | skt | 54.3 | 17.5 | 43.1 | 5.7 | 54.2 | - | 35.0 |

| Avg. | 34.4 | 15.3 | 31.3 | 7.4 | 40.4 | 30.5 | 26.6 | Avg. | 38.8 | 17.7 | 32.8 | 4.3 | 41.2 | 31.6 | 27.7 | Avg. | 40.4 | 16.6 | 34.7 | 4.3 | 43.4 | 32.3 | 28.6 |

| SCDA [18] | clp | inf | pnt | qdr | rel | skt | Avg. | CDAN + SCDA [18] | clp | inf | pnt | qdr | rel | skt | Avg. | MDD + SCDA [18] | clp | inf | pnt | qdr | rel | skt | Avg. |

| clp | - | 18.6 | 39.3 | 5.1 | 55.0 | 44.1 | 32.4 | clp | - | 19.5 | 40.4 | 10.3 | 56.7 | 46.0 | 34.6 | clp | - | 20.4 | 43.3 | 15.2 | 59.3 | 46.5 | 36.9 |

| inf | 29.6 | - | 34.0 | 1.4 | 46.3 | 25.4 | 27.3 | inf | 35.6 | - | 36.7 | 4.5 | 50.3 | 29.9 | 31.4 | inf | 32.7 | - | 34.5 | 6.3 | 47.6 | 29.2 | 30.1 |

| pnt | 44.1 | 19.0 | - | 2.6 | 56.2 | 42.0 | 32.8 | pnt | 45.6 | 20.0 | - | 4.2 | 56.8 | 41.9 | 33.7 | pnt | 46.4 | 19.9 | - | 8.1 | 58.8 | 42.9 | 35.2 |

| qdr | 30.0 | 4.9 | 15.0 | - | 25.4 | 19.8 | 19.0 | qdr | 28.3 | 4.8 | 11.5 | - | 20.9 | 19.2 | 17.0 | qdr | 31.1 | 6.6 | 18.0 | - | 28.8 | 22.0 | 21.3 |

| rel | 54.0 | 22.5 | 51.9 | 2.3 | - | 42.5 | 34.6 | rel | 55.5 | 22.8 | 53.7 | 3.2 | - | 42.1 | 35.5 | rel | 55.5 | 23.7 | 52.9 | 9.5 | - | 45.2 | 37.4 |

| skt | 55.6 | 18.5 | 44.7 | 6.4 | 53.2 | - | 35.7 | skt | 58.4 | 21.1 | 47.8 | 10.6 | 56.5 | - | 38.9 | skt | 55.8 | 20.1 | 46.5 | 15.0 | 56.7 | - | 38.8 |

| Avg. | 42.6 | 16.7 | 37.0 | 3.6 | 47.2 | 34.8 | 30.3 | Avg. | 44.7 | 17.6 | 38.0 | 6.6 | 48.2 | 35.8 | 31.8 | Avg. | 44.3 | 18.1 | 39.0 | 10.8 | 50.2 | 37.2 | 33.3 |

| clp | inf | pnt | qdr | rel | skt | Avg. | |

| clp | - | 20.5 | 36.8 | 9.2 | 54.8 | 44.5 | 33.2 |

| inf | 32.4 | - | 25.8 | 2.5 | 31.2 | 41.2 | 26.6 |

| pnt | 72.5 | 25.0 | - | 4.5 | 52.7 | 37.6 | 38.5 |

| qdr | 47.4 | 5.3 | 8.4 | - | 11.8 | 12.5 | 17.1 |

| rel | 51.5 | 25.2 | 54.5 | 2.9 | - | 34.8 | 33.8 |

| skt | 49.8 | 24.8 | 47.4 | 12.8 | 51.6 | - | 37.3 |

| Avg. | 50.7 | 20.2 | 34.6 | 6.4 | 40.4 | 34.1 | 31.1 |

| Model | plane | bcycl | bus | car | horse | knife | mcycl | person | plant | sktbrd | train | truck | Avg. |

| ResNet-50 [12] | 55.1 | 53.3 | 61.9 | 59.1 | 80.6 | 17.9 | 79.7 | 31.2 | 81.0 | 26.5 | 73.5 | 8.5 | 52.4 |

| BNM [4] | 89.6 | 61.5 | 76.9 | 55.0 | 89.3 | 69.1 | 81.3 | 65.5 | 90.0 | 47.3 | 89.1 | 30.1 | 70.4 |

| MCD [30] | 87.0 | 60.9 | 83.7 | 64.0 | 88.9 | 79.6 | 84.7 | 76.9 | 88.6 | 40.3 | 83.0 | 25.8 | 71.9 |

| SWD [17] | 90.8 | 82.5 | 81.7 | 70.5 | 91.7 | 69.5 | 86.3 | 77.5 | 87.4 | 63.6 | 85.6 | 29.2 | 76.4 |

| FixBi [24] | 96.1 | 87.8 | 90.5 | 90.3 | 96.8 | 95.3 | 92.8 | 88.7 | 97.2 | 94.2 | 90.9 | 25.7 | 87.2 |

| IDAL w/o FPN | 94.1 | 88.6 | 89.2 | 78.7 | 94.9 | 98.2 | 88.5 | 84.6 | 94.7 | 90.3 | 88.4 | 51.3 | 86.8 |

| IDAL | 94.7 | 89.0 | 89.6 | 79.0 | 95.6 | 98.7 | 89.4 | 85.2 | 95.6 | 90.5 | 88.9 | 52.6 | 87.4 |

| Method | A D | A W | D W | W D | D A | W A | Avg |

| ResNet-50 [12] | 68.9 | 68.4 | 96.7 | 99.3 | 62.5 | 60.7 | 76.1 |

| DANN [8] | 79.7 | 82.0 | 96.9 | 99.1 | 68.2 | 67.4 | 82.2 |

| CDAN [22] | 92.9 | 94.1 | 98.6 | 100.0 | 71.0 | 69.3 | 87.7 |

| MDD [38] | 93.5 | 94.5 | 98.4 | 100.0 | 74.6 | 72.2 | 88.9 |

| GVB-GD [5] | 95.0 | 94.8 | 98.7 | 100.0 | 73.4 | 73.7 | 89.3 |

| SRDC [32] | 95.8 | 95.7 | 99.2 | 100.0 | 76.7 | 77.1 | 90.8 |

| SHOT [19] | 93.1 | 90.9 | 98.8 | 99.9 | 74.5 | 74.8 | 88.7 |

| f-DAL [1] | 94.8 | 93.4 | 99.0 | 100.0 | 73.6 | 74.6 | 89.2 |

| FixBi [24] | 95.0 | 96.1 | 99.3 | 100.0 | 78.7 | 79.4 | 91.4 |

| IDAL w/o FPN | 94.4 | 95.0 | 99.0 | 100.0 | 75.6 | 76.6 | 90.1 |

| IDAL | 95.6 | 95.7 | 99.1 | 100.0 | 77.3 | 77.1 | 90.8 |

4 Experiments and Results

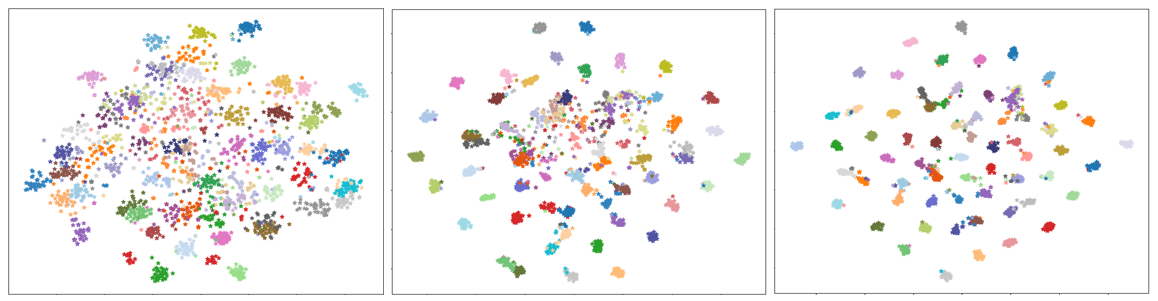

To validate the efficacy of our model, we undertake extensive investigations on well-established benchmarks and juxtapose our results with those achieved by state-of-the-art UDA methods. We also studied the impact of using a feature pyramid network (FPN) [20] for domain adaptation for classification. Additionally, we studied how the feature (representation) space of the target domain evolves during training. We also studied the contribution of various components of the loss function. We also studied the convergence speed of our method compared to FixBi [24].

4.1 Datasets

To evaluate the proposed method, we conducted experiments on benchmark UDA datasets – including Office-31 [29], Office-Home [36], VisDA-2017 [26], and DomainNet [27]. The details of the datasets and transfer tasks on these datasets are given below:

The Office-Home dataset is a key benchmark with 15,500 images across 65 classes and four domains: Artistic, Clip Art, Product, and Real-World, used to assess twelve transfer tasks. Office-31, another pivotal dataset, contains 4,110 images in 31 classes from Amazon, Webcam, and DSLR domains, evaluating six transfer tasks. VisDA-2017, aimed at cross-domain generalization, includes Synthetic and Real source domains with 12 categories, using the ImageNet validation set as the target. DomainNet, the largest dataset for domain adaptation, features about 0.6 million images across 345 categories from six domains (Clipart, Infograph, Painting, Quickdraw, Real, and Sketch), supporting 30 adaptation tasks, showcasing its scale and diversity in visual domain adaptation challenges.

4.2 Implementation details

All the experiments were conducted on an NVIDIA A100 in PyTorch, using the CNN-based neural network (ResNet-50) pre-trained on ImageNet [11] and feature pyramid network as the backbone for our proposed model. The base learning rate is 0.00001 with a batch size of 32, and we train models by 50 epochs. The hyper-parameters were =0.05 , =0.1 , =0.15 and =0.15 for the experiment of Office-31 dataset. Similarly for Office-Home, the hyper-parameters were =0.05 , =0.21 , =0.25 and =0.25 , for the VisDA-2017 dataset the hyper parameters were =0.05 , =0.3 , =0.25 and =0.25 ,and for the Domain-Net dataset the hyper parameters were =0.05 , =0.01 , =0.2 and =0.25.We have used AdamW [23] with a momentum of 0.9, and a weight decay of 0.001 as the optimizer. We adhere to the standard procedure for unsupervised domain adaptation (UDA), wherein we make use of both labeled source samples and unlabeled target samples during the training process. For a fair comparison with prior works, we also conduct experiments with the same backbones such as ResNet-50 [12], DANN [8], CDAN [22], MDD [38], GVB-GD [5], SRDC [32], FixBi [24], SHOT [19], SDAT [28], f-DAL [1], BNM [4], MCD [30] and SWD [17] for demonstration of results with different-different datasets.

4.3 UDA benchmarks

We assess the performance of our proposed model by conducting comparisons with state-of-the-art methods that rely on ResNet-based architectures. In these experiments, we employ ResNet-50 as the underlying architecture for our evaluations across the Office-Home, Office-31 and VisDA-2017 datasets and ResNet-101 for the DomainNet dataset. Importantly, each ResNet-50 and ResNet-101 backbone is trained exclusively on source data and subsequently subjected to testing using target data.

| M1 | MMD | MCC | PLMMD | AC | AP | AR | CA | CP | CR | PA | PC | PR | RA | RC | RP | Avg. |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 50.7 | 70.6 | 76.0 | 57.6 | 70.0 | 70.0 | 57.4 | 50.9 | 77.3 | 70.9 | 56.7 | 81.6 | 65.8 | ||||

| 59.4 | 76.8 | 80.3 | 69.1 | 75.7 | 76.2 | 69.7 | 56.8 | 82.3 | 78.4 | 63.4 | 84.6 | 72.7 | ||||

| 59.6 | 77.6 | 80.4 | 69.3 | 76.0 | 76.8 | 71.2 | 57.1 | 82.7 | 78.5 | 64.0 | 85.2 | 73.2 | ||||

| 59.8 | 77.8 | 80.8 | 69.8 | 76.9 | 77.0 | 71.6 | 57.4 | 82.9 | 78.5 | 64.1 | 85.6 | 73.5 |

Table 1 presents quantitative results with various backbones, demonstrating our proposed model’s consistent superiority over the state-of-the-art, specifically achieving an impressive average accuracy improvement of over 0.8% when compared to the FixBi model for the Office-Home dataset. Table 4 further showcases our model’s superiority, surpassing the current state-of-the-art and attaining a notable 0.2% average accuracy improvement over the FixBi model for the VisDA-2017 dataset. Table 5 illustrates results using diverse backbones, highlighting our model’s performance, which is comparable with the current state-of-the-art for the Office-31 dataset. Table 2 and 3 depict the performance of our model with ResNet-101 + FPN as the feature extractor for the most challenging dataset of domain adaptation with comparable performance to state-of-the-art.

ResNet-50 combined with Feature Pyramid Network (FPN) for feature extraction offers multi-scale feature capture, superior object detection, and effective feature fusion. This versatile pairing, proven in various computer vision tasks, balances depth and scale, enhancing overall performance while reducing computational costs. Tables 1, 4, and 5 show the impact of having FPN with ResNet for feature extraction. We conducted ablation studies to understand the impact of the different feature extractors such as ConvMixer [33] and ResNet-101 [12]. However, the performance in these cases was worse than our reported results. We also compare the ResNet-based backbone and transformer-based backbone and notice a huge gap in parameter requirements. ResNet-based backbone needs relatively very less parameters compared to transformer-based backbones.

4.4 Impact of loss components

To gauge the influence of individual loss functions and their collective impact, we conducted a thorough experimental analysis. Our findings revealed that Minimum Class Confusion (MCC) loss functions enhance classification models by reducing class confusion, especially in scenarios with imbalanced class distributions. Concurrently, we observed that information maximization losses assist the classifier in prioritizing the most confidently aligned samples for domain adaptation. Additionally, the Maximum Mean Discrepancy (MMD) loss effectively narrows the gap between the mean embeddings of the two distributions. Table 6 shows the effect of the individual loss function on the performance of our model IDAL for the Office-Home dataset and it indicates that our model IDAL performs best with a tailored combination of loss functions. By artfully combining these distinctive loss functions, we not only surpass the current state-of-the-art but also achieve a comprehensive solution that advances the field of classification models in diverse scenarios.

5 Conclusions and Future Directions

We proposed a novel method for unsupervised domain adaptation for image classification. We proposed a novel neural network architecture and a loss function. Architecturally, we have demonstrated that synergy between two deep learning architectures – ResNet [11] and feature pyramidal network (FPN) [20] – complement each other to extract multi-scale features and effectively separate style (domain) and content (class) information components. Our ablation studies confirm the importance of using FPN with ResNet. The proposed loss component PLMMD and judiciously chosen existing loss components leads to significant improvements in unsupervised domain adaptation (UDA) performance that can surpass the performance of CNNs using other UDA methods. Our ablation study confirmed the importance of each of the loss components. Additionally, using the proposed loss led to faster convergence and a rapid evolution of a class-wise multi-modal distribution of the target domain features.

In the future, computationally heavier architectures, such as, vision transformers [6] and its derivatives may be used for further improvements in domain adaptation. Additionally, the proposed loss function may be adapted for other tasks, such as semantic segmentation and object detection.

References

- [1] David Acuna, Guojun Zhang, Marc T Law, and Sanja Fidler. f-domain adversarial learning: Theory and algorithms. In International Conference on Machine Learning, pages 66–75. PMLR, 2021.

- [2] Shai Ben-David, John Blitzer, Koby Crammer, Alex Kulesza, Fernando Pereira, and Jennifer Wortman Vaughan. A theory of learning from different domains. Machine learning, 79:151–175, 2010.

- [3] Chaoqi Chen, Weiping Xie, Wenbing Huang, Yu Rong, Xinghao Ding, Yue Huang, Tingyang Xu, and Junzhou Huang. Progressive feature alignment for unsupervised domain adaptation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 627–636, 2019.

- [4] Shuhao Cui, Shuhui Wang, Junbao Zhuo, Liang Li, Qingming Huang, and Qi Tian. Towards discriminability and diversity: Batch nuclear-norm maximization under label insufficient situations. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 3941–3950, 2020.

- [5] Shuhao Cui, Shuhui Wang, Junbao Zhuo, Chi Su, Qingming Huang, and Qi Tian. Gradually vanishing bridge for adversarial domain adaptation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 12455–12464, 2020.

- [6] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- [7] Yaroslav Ganin and Victor Lempitsky. Unsupervised domain adaptation by backpropagation. In International conference on machine learning, pages 1180–1189. PMLR, 2015.

- [8] Yaroslav Ganin, Evgeniya Ustinova, Hana Ajakan, Pascal Germain, Hugo Larochelle, François Laviolette, Mario Marchand, and Victor Lempitsky. Domain-adversarial training of neural networks. The journal of machine learning research, 17(1):2096–2030, 2016.

- [9] Jian Gao, Yang Hua, Guosheng Hu, Chi Wang, and Neil M. Robertson. Reducing distributional uncertainty by mutual information maximisation and transferable feature learning. In Computer Vision – ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXIII, page 587–605, Berlin, Heidelberg, 2020. Springer-Verlag.

- [10] Ravi Kant Gupta, Shounak Das, and Amit Sethi. Domain-adaptive learning: Unsupervised adaptation for histology images with improved loss function combination. arXiv preprint arXiv:2309.17172, 2023.

- [11] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [12] S Jian, H Kaiming, R Shaoqing, and Z Xiangyu. Deep residual learning for image recognition. In IEEE Conference on Computer Vision & Pattern Recognition, pages 770–778, 2016.

- [13] Ying Jin, Ximei Wang, Mingsheng Long, and Jianmin Wang. Minimum class confusion for versatile domain adaptation. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXI 16, pages 464–480. Springer, 2020.

- [14] Guoliang Kang, Liang Zheng, Yan Yan, and Yi Yang. Deep adversarial attention alignment for unsupervised domain adaptation: the benefit of target expectation maximization. In Proceedings of the European conference on computer vision (ECCV), pages 401–416, 2018.

- [15] Andreas Krause, Pietro Perona, and Ryan Gomes. Discriminative clustering by regularized information maximization. Advances in neural information processing systems, 23, 2010.

- [16] Vinod Kumar Kurmi, Shanu Kumar, and Vinay P Namboodiri. Attending to discriminative certainty for domain adaptation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 491–500, 2019.

- [17] Chen-Yu Lee, Tanmay Batra, Mohammad Haris Baig, and Daniel Ulbricht. Sliced wasserstein discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 10285–10295, 2019.

- [18] Shuang Li, Mixue Xie, Fangrui Lv, Chi Harold Liu, Jian Liang, Chen Qin, and Wei Li. Semantic concentration for domain adaptation. CoRR, abs/2108.05720, 2021.

- [19] Jian Liang, Dapeng Hu, and Jiashi Feng. Do we really need to access the source data? source hypothesis transfer for unsupervised domain adaptation. In International conference on machine learning, pages 6028–6039. PMLR, 2020.

- [20] Tsung-Yi Lin, Piotr Dollár, Ross Girshick, Kaiming He, Bharath Hariharan, and Serge Belongie. Feature pyramid networks for object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2117–2125, 2017.

- [21] Mingsheng Long, Yue Cao, Jianmin Wang, and Michael Jordan. Learning transferable features with deep adaptation networks. In International conference on machine learning, pages 97–105. PMLR, 2015.

- [22] Mingsheng Long, Zhangjie Cao, Jianmin Wang, and Michael I Jordan. Conditional adversarial domain adaptation. Advances in neural information processing systems, 31, 2018.

- [23] Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. In International Conference on Learning Representations, 2019.

- [24] Jaemin Na, Heechul Jung, Hyung Jin Chang, and Wonjun Hwang. Fixbi: Bridging domain spaces for unsupervised domain adaptation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 1094–1103, 2021.

- [25] Silvio Pavanetto and Marco Brambilla. Generation of realistic navigation paths for web site testing using rnn and gan. Journal of Web Engineering, pages 2571–2604, 2021.

- [26] Zhongyi Pei, Zhangjie Cao, Mingsheng Long, and Jianmin Wang. Multi-adversarial domain adaptation. In Proceedings of the AAAI conference on artificial intelligence, volume 32, 2018.

- [27] Xingchao Peng, Qinxun Bai, Xide Xia, Zijun Huang, Kate Saenko, and Bo Wang. Moment matching for multi-source domain adaptation. In Proceedings of the IEEE/CVF international conference on computer vision, pages 1406–1415, 2019.

- [28] Harsh Rangwani, Sumukh K Aithal, Mayank Mishra, Arihant Jain, and Venkatesh Babu Radhakrishnan. A closer look at smoothness in domain adversarial training. In International Conference on Machine Learning, pages 18378–18399. PMLR, 2022.

- [29] Kate Saenko, Brian Kulis, Mario Fritz, and Trevor Darrell. Adapting visual category models to new domains. In Computer Vision–ECCV 2010: 11th European Conference on Computer Vision, Heraklion, Crete, Greece, September 5-11, 2010, Proceedings, Part IV 11, pages 213–226. Springer, 2010.

- [30] Kuniaki Saito, Kohei Watanabe, Yoshitaka Ushiku, and Tatsuya Harada. Maximum classifier discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3723–3732, 2018.

- [31] Le Song, Jonathan Huang, Alex Smola, and Kenji Fukumizu. Hilbert space embeddings of conditional distributions with applications to dynamical systems. In Proceedings of the 26th Annual International Conference on Machine Learning, pages 961–968, 2009.

- [32] Hui Tang, Ke Chen, and Kui Jia. Unsupervised domain adaptation via structurally regularized deep clustering. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8725–8735, 2020.

- [33] Asher Trockman and J Zico Kolter. Patches are all you need? arXiv preprint arXiv:2201.09792, 2022.

- [34] Eric Tzeng, Judy Hoffman, Kate Saenko, and Trevor Darrell. Adversarial discriminative domain adaptation. CoRR, abs/1702.05464, 2017.

- [35] Laurens Van der Maaten and Geoffrey Hinton. Visualizing data using t-sne. Journal of machine learning research, 9(11), 2008.

- [36] Hemanth Venkateswara, Jose Eusebio, Shayok Chakraborty, and Sethuraman Panchanathan. Deep hashing network for unsupervised domain adaptation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 5018–5027, 2017.

- [37] Jason Yosinski, Jeff Clune, Yoshua Bengio, and Hod Lipson. How transferable are features in deep neural networks? Advances in neural information processing systems, 27, 2014.

- [38] Yuchen Zhang, Tianle Liu, Mingsheng Long, and Michael Jordan. Bridging theory and algorithm for domain adaptation. In Kamalika Chaudhuri and Ruslan Salakhutdinov, editors, Proceedings of the 36th International Conference on Machine Learning, volume 97 of Proceedings of Machine Learning Research, pages 7404–7413. PMLR, 09–15 Jun 2019.