Identification of prognostic and predictive biomarkers in high-dimensional data with PPLasso

Abstract.

In clinical development, the identification of prognostic and predictive biomarkers is essential to precision medicine. Prognostic biomarkers can be useful for anticipating the prognosis of individual patients, and predictive biomarkers can be used to identify patients more likely to benefit from a given treatment. Previous research has mainly focused on clinical characteristics, and the use of genomic data in this area has hardly been studied. A new method is required to simultaneously select prognostic and predictive biomarkers in high-dimensional genomic data where biomarkers are highly correlated. We propose a novel approach called PPLasso (Prognostic Predictive Lasso) that integrates prognostic and predictive effects into one statistical model. PPLasso also takes into account the correlations between biomarkers, which can alter the accuracy of biomarker selection. Our method consists of transforming the design matrix to remove the correlations between the biomarkers before applying the generalized Lasso. In a comprehensive numerical evaluation, we show that PPLasso outperforms Lasso-type approaches in both prognostic and predictive biomarker identification in various scenarios. Finally, our method is applied to publicly available transcriptomic data from the RV144 clinical trial. Our method is implemented in the PPLasso R package, which is available from the Comprehensive R Archive Network (CRAN).

Key words and phrases:

variable selection; highly correlated predictors; genomic data

1. Introduction

With the advancement of precision medicine, there has been an increasing interest in identifying prognostic or predictive biomarkers in clinical development. A prognostic biomarker informs about a likely clinical outcome (e.g., disease recurrence, disease progression, death) in the absence of therapy or with a standard therapy that patients are likely to receive, while a predictive biomarker is associated with a response or a lack of response to a specific therapy. Ballman (2015) provides a comprehensive explanation of the distinction between prognostic and predictive biomarkers, and Clark (2008) gives concrete examples of it.

Concerning biomarker selection, the high dimensionality of genomic data is one of the main challenges, as explained in Fan and Li (2006). To identify effective biomarkers in high-dimensional settings, several approaches can be considered, including the hypothesis-based tests described in McDonald (2009), the wrapper approaches described in Saeys et al. (2007), and penalized approaches such as the Lasso designed by Tibshirani (1996), among others. Hypothesis-based tests consider each biomarker independently and thus ignore potential correlations between them. Wrapper approaches often carry a high risk of overfitting and are computationally expensive for high-dimensional data, as explained in Smith (2018). More effort has been devoted to penalized methods given their ability to perform variable selection and coefficient estimation simultaneously, as highlighted in Fan and Lv (2009). However, the Lasso has some potential drawbacks when biomarkers are highly correlated. In particular, when the Irrepresentable Condition (IC) proposed by Zhao and Yu (2006) is violated, the Lasso cannot guarantee the correct identification of the truly active biomarkers. In genomic data, biomarkers are usually so highly correlated that this condition can hardly be satisfied, see Wang et al. (2019). Several methods have been proposed to address this issue. Elastic Net (Zou and Hastie, 2005) combines the $\ell_1$ and $\ell_2$ penalties; it is particularly effective in tackling correlation issues and can generally outperform the Lasso. Adaptive Lasso (Zou, 2006) assigns adaptive weights to the different coefficients in the $\ell_1$ penalty, and its oracle property was demonstrated. Wang and Leng (2016) proposed the HOLP approach, which consists of removing the correlation between the columns of the design matrix; Wang et al. (2019) proposed to handle the correlation by assigning similar weights to correlated variables in their approach called Precision Lasso; Zhu et al. (2021) proposed to remove the correlations by applying a whitening transformation to the data before using the generalized Lasso criterion designed by Tibshirani and Taylor (2011).

The challenge of finding prognostic biomarkers has been extensively explored with the previously introduced methods; however, the discovery of predictive biomarkers has received much less attention. Restricted to binary endpoints, Foster et al. (2011) proposed to first predict response probabilities for treatment and to use these probabilities as the response in a classification problem to find effective biomarkers. Tian et al. (2012) proposed a new method to detect interactions between the treatment and the biomarkers by modifying the covariates. This method can be applied to continuous, binary or time-to-event endpoints. Lipkovich et al. (2011) proposed a method called SIDES, which adopts a recursive partitioning algorithm for screening treatment-by-biomarker interactions. This method was further improved in Lipkovich and Dmitrienko (2014) by adding a preselection step for predictive biomarkers based on variable importance. The method was demonstrated with a continuous endpoint. More recently, Sechidis et al. (2018) applied approaches from information theory to rank biomarkers according to their prognostic or predictive strength. Their method is applicable only to binary or time-to-event endpoints. Moreover, all of these methods were assessed in situations where the sample size is relatively large and the number of biomarkers is limited, which is rarely the case for genomic data.

In the literature mentioned above, the authors focused on identifying either prognostic or predictive biomarkers, but rarely both. Even though predictive biomarkers are of major importance for identifying patients more likely to benefit from a treatment, the identification of prognostic biomarkers is also key in this context. Indeed, the clinical impact of a treatment can be judged only with knowledge of the patient's prognosis. It is thus important to reliably predict the prognosis of patients to assist treatment counseling (Windeler, 2000). In this paper, we propose a novel approach called PPLasso (Prognostic Predictive Lasso) to simultaneously identify prognostic and predictive biomarkers in a high-dimensional setting with continuous endpoints, as presented in Section 2. Extensive numerical experiments are given in Section 3 to assess the performance of our approach and to compare it with other methods. PPLasso is also applied to the RV144 clinical trial in Section 4. Finally, we give concluding remarks in Section 5.

2. Methods

In this section, we propose a novel approach called PPLasso (Prognostic Predictive Lasso), which consists of writing the identification of prognostic and predictive biomarkers as a variable selection problem in an ANCOVA (Analysis of Covariance) type model, described for instance in Faraway (2002).

2.1. Statistical modeling

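Let $y$ be a continuous response or endpoint and $t_1$, $t_2$ two treatments. Let also $X_1$ (resp. $X_2$) denote the design matrix for the $n_1$ (resp. $n_2$) patients with treatment $t_1$ (resp. $t_2$), each containing measurements on $p$ candidate biomarkers:

$$X_1 = \begin{pmatrix} X_{11}^{1} & X_{11}^{2} & \cdots & X_{11}^{p} \\ \vdots & \vdots & & \vdots \\ X_{1n_1}^{1} & X_{1n_1}^{2} & \cdots & X_{1n_1}^{p} \end{pmatrix}, \qquad X_2 = \begin{pmatrix} X_{21}^{1} & X_{21}^{2} & \cdots & X_{21}^{p} \\ \vdots & \vdots & & \vdots \\ X_{2n_2}^{1} & X_{2n_2}^{2} & \cdots & X_{2n_2}^{p} \end{pmatrix}, \tag{1}$$

where $X_{ij}^{k}$ denotes the measurement of biomarker $k$ for patient $j$ with treatment $t_i$.

To take into account the potential correlation that may exist between the biomarkers in the different treatments, we shall assume that the rows of $X_1$ (resp. $X_2$) are independent centered Gaussian random vectors with a covariance matrix equal to $\Sigma_1$ (resp. $\Sigma_2$).

To model the link between $y$ and the different types of biomarkers, we propose the following model:

$$y_{ij} = \alpha_i + \beta_{i1} X_{ij}^{1} + \beta_{i2} X_{ij}^{2} + \cdots + \beta_{ip} X_{ij}^{p} + \epsilon_{ij}, \qquad 1 \le j \le n_i, \tag{2}$$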

where $y_{ij}$ corresponds to the response of patient $j$ with treatment $t_i$, $i$ being equal to 1 or 2, and $\alpha_1$ (resp. $\alpha_2$) corresponds to the effect of treatment $t_1$ (resp. $t_2$). Moreover, $\beta_1 = (\beta_{11}, \beta_{12}, \dots, \beta_{1p})'$ (resp. $\beta_2 = (\beta_{21}, \beta_{22}, \dots, \beta_{2p})'$) are the coefficients associated with each of the $p$ biomarkers in the treatment $t_1$ (resp. $t_2$) group, $'$ denoting matrix transposition, and the $\epsilon_{ij}$ are standard independent Gaussian random variables independent of $X_1$ and $X_2$.

When $t_1$ stands for the standard treatment or placebo, prognostic biomarkers are defined as those having non-zero coefficients in $\beta_1$. According to the definition of prognostic biomarkers, their effect should indeed be demonstrated in the absence of therapy or with a standard therapy that patients are likely to receive. On the other hand, predictive biomarkers are defined as those having non-zero coefficients in $\beta_2 - \beta_1$, because they aim to highlight different effects between the two treatments.
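As a concrete reading of these definitions, the following minimal Python/NumPy sketch draws data from Model (2) and reads off which biomarkers are prognostic (non-zero entries of $\beta_1$) and which are predictive (non-zero entries of $\beta_2 - \beta_1$). The function names, the identity covariance used by default and the coefficient values are illustrative assumptions, not part of PPLasso.

```python
import numpy as np

def simulate_two_arm(n1, n2, beta1, beta2, alpha=(0.0, 1.0), cov=None, seed=0):
    """Draw data from Model (2): y_ij = alpha_i + X_ij' beta_i + eps_ij, eps_ij ~ N(0, 1)."""
    rng = np.random.default_rng(seed)
    p = len(beta1)
    cov = np.eye(p) if cov is None else cov
    X1 = rng.multivariate_normal(np.zeros(p), cov, size=n1)  # arm t1 (standard/placebo)
    X2 = rng.multivariate_normal(np.zeros(p), cov, size=n2)  # arm t2 (experimental)
    y1 = alpha[0] + X1 @ beta1 + rng.standard_normal(n1)
    y2 = alpha[1] + X2 @ beta2 + rng.standard_normal(n2)
    return X1, y1, X2, y2

def classify_biomarkers(beta1, beta2, tol=0.0):
    """Prognostic: non-zero entries of beta1; predictive: non-zero entries of beta2 - beta1."""
    prognostic = np.flatnonzero(np.abs(beta1) > tol)
    predictive = np.flatnonzero(np.abs(beta2 - beta1) > tol)
    return prognostic, predictive

# Hypothetical example with p = 20 biomarkers.
beta1 = np.zeros(20)
beta1[:4] = 1.0            # biomarkers 0-3 are prognostic
beta2 = beta1.copy()
beta2[2:4] += 1.0          # biomarkers 2-3 are prognostic and predictive
beta2[5] = 1.0             # biomarker 5 is purely predictive
X1, y1, X2, y2 = simulate_two_arm(30, 25, beta1, beta2)
prog, pred = classify_biomarkers(beta1, beta2)
```

Note that a biomarker can be both prognostic and predictive (indices 2 and 3 here), which is why the two sets are read from $\beta_1$ and $\beta_2 - \beta_1$ separately.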

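Model (2) can be written as:

$$y = X\gamma + \epsilon, \tag{3}$$

with $y = (y_{11}, \dots, y_{1n_1}, y_{21}, \dots, y_{2n_2})'$, $\gamma = (\alpha_1, \alpha_2, \beta_1', \beta_2')'$, $\epsilon = (\epsilon_{11}, \dots, \epsilon_{1n_1}, \epsilon_{21}, \dots, \epsilon_{2n_2})'$ and

$$X = \begin{pmatrix} 1_{n_1,1} & 0_{n_1,1} & X_1 & 0_{n_1,p} \\ 0_{n_2,1} & 1_{n_2,1} & 0_{n_2,p} & X_2 \end{pmatrix},$$

where $0_{a,b}$ (resp. $1_{a,b}$) denotes a matrix having $a$ rows and $b$ columns and containing only zeros (resp. ones). The Lasso is a well-known approach for estimating coefficients under a sparsity-enforcing constraint: it performs variable selection by estimating some coefficients by exactly zero. It consists of minimizing the following penalized least-squares criterion (Tibshirani, 1996):

$$\frac{1}{2}\|y - X\gamma\|_2^2 + \lambda\|\gamma\|_1, \tag{4}$$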

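where $\lambda > 0$ is a tuning parameter, $\|u\|_2^2 = \sum_{k=1}^{K} u_k^2$ and $\|u\|_1 = \sum_{k=1}^{K} |u_k|$ for $u = (u_1, \dots, u_K)$. To allow different sparsity levels, different sparsity constraints are applied to $\beta_1$ and $\beta_2 - \beta_1$. Hence we propose to replace the penalty in (4) by

$$\lambda_1 \|\beta_1\|_1 + \lambda_2 \|\beta_2 - \beta_1\|_1. \tag{5}$$

Thus, a first estimator of $\gamma$ could be found by minimizing the following criterion with respect to $\gamma$:

$$\frac{1}{2}\|y - X\gamma\|_2^2 + \lambda_1 \|D_1\gamma\|_1 + \lambda_2 \|D_2\gamma\|_1, \tag{6}$$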

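where $D_1 = \begin{pmatrix} 0_{p,2} & I_p & 0_{p,p} \end{pmatrix}$ and $D_2 = \begin{pmatrix} 0_{p,2} & -I_p & I_p \end{pmatrix}$, with $I_p$ denoting the identity matrix of size $p$ and $0_{a,b}$ denoting a matrix having $a$ rows and $b$ columns and containing only zeros, so that $D_1\gamma = \beta_1$ and $D_2\gamma = \beta_2 - \beta_1$. However, since the inconsistency of Lasso biomarker selection originates from the correlations between the biomarkers, we propose to remove the correlation by "whitening" the matrix $X$. More precisely, we consider $\tilde{X} = X\Sigma^{-1/2}$, where

$$\Sigma = \begin{pmatrix} I_2 & 0_{2,p} & 0_{2,p} \\ 0_{p,2} & \Sigma_1 & 0_{p,p} \\ 0_{p,2} & 0_{p,p} & \Sigma_2 \end{pmatrix}, \tag{7}$$

and define $\Sigma^{-1/2}$ by replacing $\Sigma_i$ in (7) by $\Sigma_i^{-1/2} = U_i \Lambda_i^{-1/2} U_i'$, where $U_i$ and $\Lambda_i$ are the matrices involved in the spectral decomposition $\Sigma_i = U_i \Lambda_i U_i'$, for $i = 1$ or 2. With such a transformation, the columns of $\tilde{X}$ are decorrelated and Model (3) can be rewritten as follows:

$$y = \tilde{X}\tilde{\gamma} + \epsilon, \tag{8}$$

where $\tilde{\gamma} = \Sigma^{1/2}\gamma = (\alpha_1, \alpha_2, \tilde{\beta}_1', \tilde{\beta}_2')'$ with $\tilde{\beta}_i = \Sigma_i^{1/2}\beta_i$. The objective function (6) thus becomes:

$$\frac{1}{2}\|y - \tilde{X}\tilde{\gamma}\|_2^2 + \lambda_1 \|D_1\Sigma^{-1/2}\tilde{\gamma}\|_1 + \lambda_2 \|D_2\Sigma^{-1/2}\tilde{\gamma}\|_1. \tag{9}$$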
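To make the bookkeeping of Model (3), the penalty matrices $D_1$, $D_2$ and the whitening transformation concrete, here is a small NumPy sketch. The function names are hypothetical, $\Sigma_1 = \Sigma_2$ is assumed known, and the example only checks the algebraic identities $X\gamma = \tilde{X}\tilde{\gamma}$, $D_1\Sigma^{-1/2}\tilde{\gamma} = \beta_1$ and $D_2\gamma = \beta_2 - \beta_1$; it does not solve (9), which requires a generalized Lasso solver.

```python
import numpy as np

def stacked_design(X1, X2):
    """Design matrix X of Model (3); columns ordered as (alpha1, alpha2, beta1, beta2)."""
    n1, p = X1.shape
    n2 = X2.shape[0]
    top = np.hstack([np.ones((n1, 1)), np.zeros((n1, 1)), X1, np.zeros((n1, p))])
    bottom = np.hstack([np.zeros((n2, 1)), np.ones((n2, 1)), np.zeros((n2, p)), X2])
    return np.vstack([top, bottom])

def penalty_matrices(p):
    """D1 @ gamma = beta1 and D2 @ gamma = beta2 - beta1, as in criterion (6)."""
    I, Z = np.eye(p), np.zeros((p, p))
    D1 = np.hstack([np.zeros((p, 2)), I, Z])
    D2 = np.hstack([np.zeros((p, 2)), -I, I])
    return D1, D2

def inv_sqrt(S):
    """S^{-1/2} = U diag(lam^{-1/2}) U' from the spectral decomposition S = U diag(lam) U'."""
    lam, U = np.linalg.eigh(S)
    return (U / np.sqrt(lam)) @ U.T

def whitening_matrix(Sigma1, Sigma2):
    """Sigma^{-1/2} for the block-diagonal Sigma of (7); the intercept block stays I_2."""
    p = Sigma1.shape[0]
    W = np.zeros((2 + 2 * p, 2 + 2 * p))
    W[:2, :2] = np.eye(2)
    W[2:2 + p, 2:2 + p] = inv_sqrt(Sigma1)
    W[2 + p:, 2 + p:] = inv_sqrt(Sigma2)
    return W

# Small check with a compound-symmetry Sigma (illustrative values only).
rng = np.random.default_rng(1)
p = 5
Sigma = 0.5 * np.eye(p) + 0.5            # unit diagonal, off-diagonal entries 0.5
X1 = rng.multivariate_normal(np.zeros(p), Sigma, size=8)
X2 = rng.multivariate_normal(np.zeros(p), Sigma, size=7)
X = stacked_design(X1, X2)
W = whitening_matrix(Sigma, Sigma)       # Sigma^{-1/2}
X_tilde = X @ W                          # whitened design of Model (8)
gamma = np.concatenate([[0.0, 1.0], rng.standard_normal(2 * p)])
gamma_tilde = np.linalg.solve(W, gamma)  # gamma_tilde = Sigma^{1/2} gamma
D1, D2 = penalty_matrices(p)
```

The symmetric square root via `numpy.linalg.eigh` mirrors the spectral-decomposition definition of $\Sigma_i^{-1/2}$ given above; a Cholesky factor would also decorrelate the columns but would not be symmetric.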

2.2. Estimation of $\tilde{\gamma}$

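Let us define a first estimator of $\tilde{\gamma}$ as follows:

$$\hat{\tilde{\gamma}}(\lambda_1, \lambda_2) = (\hat{\alpha}_1, \hat{\alpha}_2, \hat{\tilde{\beta}}_1', \hat{\tilde{\beta}}_2')' = \operatorname*{argmin}_{\tilde{\gamma}} \left\{ \frac{1}{2}\|y - \tilde{X}\tilde{\gamma}\|_2^2 + \lambda_1 \|D_1\Sigma^{-1/2}\tilde{\gamma}\|_1 + \lambda_2 \|D_2\Sigma^{-1/2}\tilde{\gamma}\|_1 \right\}, \tag{10}$$

for each fixed $\lambda_1$ and $\lambda_2$. To better estimate $\tilde{\beta}_1$ and $\tilde{\beta}_2$, where $\tilde{\gamma} = (\alpha_1, \alpha_2, \tilde{\beta}_1', \tilde{\beta}_2')'$, a thresholding was applied to $\hat{\tilde{\gamma}}$. For $K_1$ (resp. $K_2$) in $\{1, \dots, p\}$, let $\mathcal{K}_1$ (resp. $\mathcal{K}_2$) be the set of indices corresponding to the $K_1$ (resp. $K_2$) largest values of the components of $|\hat{\tilde{\beta}}_1|$ (resp. $|\hat{\tilde{\beta}}_2|$). The estimator of $\tilde{\gamma}$ after the correction is then denoted by $\hat{\tilde{\gamma}}^{(K_1, K_2)} = (\hat{\alpha}_1, \hat{\alpha}_2, \hat{\tilde{\beta}}_1^{(K_1)\prime}, \hat{\tilde{\beta}}_2^{(K_2)\prime})'$, where the $j$th component of $\hat{\tilde{\beta}}_i^{(K_i)}$, for $i = 1$ or 2, is defined by: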

| (11) |

Note that the corrections are only performed on $\hat{\tilde{\beta}}_1$ and $\hat{\tilde{\beta}}_2$; the estimators $\hat{\alpha}_1$ and $\hat{\alpha}_2$ were not modified. The choice of $K_1$ and $K_2$ is explained in Section 2.4.

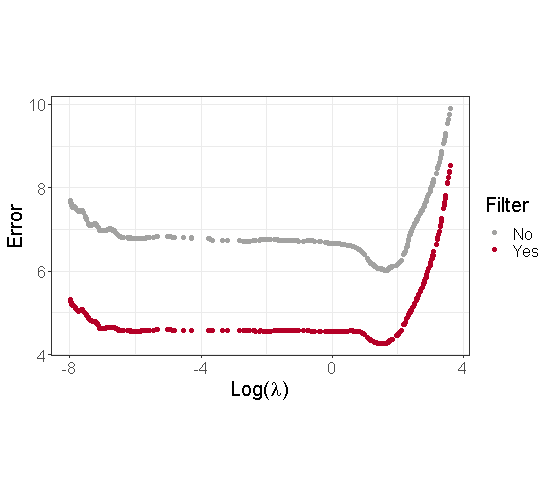

To illustrate the benefit of the thresholding step, we generated a dataset based on Model (3) with the parameters described in Section 3.1 and . Moreover, to simplify the graphical illustrations, we focus on the case where . Figure 1 displays the estimation error associated with the estimators of  before and after the thresholding. We can see from this figure that the estimation of  is less biased after the correction. Moreover, we observed that this thresholding strongly improves the final estimation of  and the variable selection performance of our method.

2.3. Estimation of $\gamma$

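Using $\hat{\tilde{\gamma}}^{(K_1, K_2)}$, the estimators of $\beta_1$ and $\beta_2$ can be obtained as $\hat{\beta}_1 = \Sigma_1^{-1/2}\hat{\tilde{\beta}}_1^{(K_1)}$ and $\hat{\beta}_2 = \Sigma_2^{-1/2}\hat{\tilde{\beta}}_2^{(K_2)}$, since $\gamma = \Sigma^{-1/2}\tilde{\gamma}$. As previously, another thresholding was applied to $\hat{\beta}_1$ and $\hat{\beta}_2 - \hat{\beta}_1$: for $i = 1$ or 2,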

| (12) |

for each fixed and . The biomarkers with non-zero coefficients in (resp. ) are considered as prognostic (resp. predictive) biomarkers, where the choice of and is explained in Section 2.4.

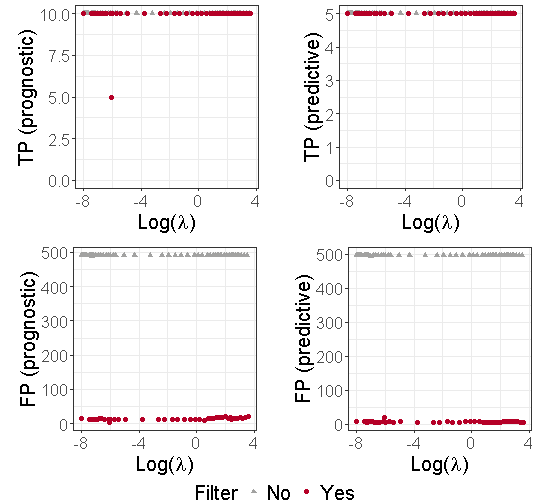

To illustrate the benefits of the additional thresholding step, we used the dataset described in Section 2.2. Moreover, to simplify the graphical illustrations, we also focus on the case where . Figure 8 in the Supplementary material displays the numbers of true positives (TP) and false positives (FP) in prognostic and predictive biomarker identification with and without the second thresholding. We can see from this figure that the thresholding stage limits the number of false positives. Note that and are estimated by and defined in (10).

2.4. Choice of the parameters , and

For each and each , we computed:

| (13) |

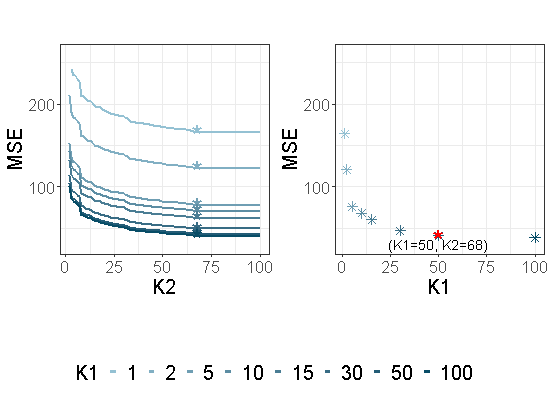

where defined in (10) and in (11). It is displayed in the left part of Figure 2.

For each , and a given , the parameter is then chosen as follows for each :

The associated to each are displayed with ’*’ in the left part of Figure 2. Then is chosen by using a similar criterion:

The values of are displayed in the right part of Figure 2 in the particular case where , and with the same dataset as the one used in Section 2.2. is displayed with a red star. This value of will be used in the following sections. However, choosing in the range (0.9,0.99) does not have a strong impact on the variable selection performance of our approach.

2.5. Estimation of and

As the empirical correlation matrix is known to be an inaccurate estimator of $\Sigma_i$ when $p$ is larger than $n$, another estimator has to be used. Thus, for estimating $\Sigma_1$ and $\Sigma_2$, we adopted a cross-validation based method designed by Boileau et al. (2021) and implemented in the cvCovEst R package (Boileau et al., 2021). This method chooses, among several candidate estimators (for example, the sample correlation matrix, POET (Fan et al., 2013) and tapering (Cai et al., 2010)), the one having the smallest estimation error. Since the samples in treatments $t_1$ and $t_2$ are assumed to be collected from the same population, $\Sigma_1$ and $\Sigma_2$ are assumed to be equal.
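The cross-validated selection idea can be illustrated with a simplified sketch. It assumes a Frobenius-type loss that compares the estimate computed on the training rows with the sample covariance of the held-out rows; the candidate set (the sample covariance and a few linear shrinkage estimators toward a scaled identity) and all function names are illustrative assumptions. This is not the cvCovEst implementation, whose candidate estimators and loss options are described in its own documentation.

```python
import numpy as np

def sample_cov(X):
    """Sample covariance of the rows of X."""
    return np.cov(X, rowvar=False)

def linear_shrink(X, rho):
    """(1 - rho) * S + rho * mu * I: shrink S toward a scaled identity target."""
    S = sample_cov(X)
    mu = np.trace(S) / S.shape[0]
    return (1.0 - rho) * S + rho * mu * np.eye(S.shape[0])

def cv_select(X, estimators, n_folds=5, seed=0):
    """Return the estimator with the smallest cross-validated Frobenius risk.

    For each fold, the estimate fitted on the training rows is compared with
    the sample covariance of the held-out rows."""
    rng = np.random.default_rng(seed)
    idx = np.arange(X.shape[0])
    folds = np.array_split(rng.permutation(idx), n_folds)
    risks = {}
    for name, est in estimators.items():
        loss = 0.0
        for test in folds:
            train = np.setdiff1d(idx, test)
            diff = est(X[train]) - sample_cov(X[test])
            loss += np.sum(diff ** 2)
        risks[name] = loss / n_folds
    return min(risks, key=risks.get), risks

# Illustrative p > n data with a compound-symmetry covariance.
rng = np.random.default_rng(2)
p, n = 60, 40
Sigma = 0.4 * np.eye(p) + 0.6
X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)
candidates = {"sample": sample_cov}
for r in (0.1, 0.5, 0.9):
    candidates[f"shrink_{r}"] = lambda Z, r=r: linear_shrink(Z, r)
best, risks = cv_select(X, candidates)
```

The default argument `r=r` binds each shrinkage intensity at definition time, so every candidate keeps its own value.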

2.6. Choice of the parameters $\lambda_1$ and $\lambda_2$

For the sake of simplicity, we limit ourselves to the case where $\lambda_1 = \lambda_2 = \lambda$. For choosing $\lambda$, we used the BIC (Bayesian Information Criterion), which is widely used in the variable selection field and consists of minimizing the following criterion with respect to $\lambda$:

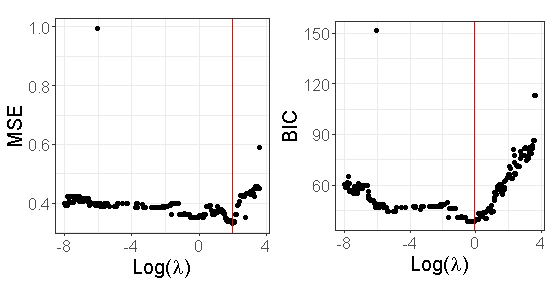

where $n = n_1 + n_2$ is the total number of samples and $k$ is the number of non-null coefficients in the OLS estimator obtained by re-estimating only the non-null components of the estimators of $\beta_1$ and $\beta_2 - \beta_1$. The values of the BIC criterion, as well as those of the MSE, obtained from the dataset described in Section 2.2 are displayed in Figure 3.
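Since the exact BIC expression is only given in the figure, the sketch below assumes the common Gaussian form $n\log(\mathrm{RSS}/n) + k\log n$, where RSS is the residual sum of squares of the OLS refit restricted to the selected columns and $k$ is its number of non-null coefficients. The helper names and the candidate supports (in practice, one per value of $\lambda$) are hypothetical.

```python
import numpy as np

def bic_refit(X, y, support):
    """Assumed BIC: n * log(RSS / n) + k * log(n) for an OLS refit on `support`."""
    n = X.shape[0]
    Xs = X[:, support]
    coef, *_ = np.linalg.lstsq(Xs, y, rcond=None)   # OLS on the selected columns only
    rss = np.sum((y - Xs @ coef) ** 2)
    k = np.count_nonzero(coef)
    return n * np.log(rss / n) + k * np.log(n)

def select_by_bic(X, y, supports):
    """Index of the candidate support (e.g. one per lambda) with the smallest BIC."""
    scores = [bic_refit(X, y, s) for s in supports]
    return int(np.argmin(scores)), scores

rng = np.random.default_rng(3)
X = rng.standard_normal((100, 10))
y = 2.0 * X[:, 0] - 1.5 * X[:, 1] + 0.5 * rng.standard_normal(100)
supports = [[0], [0, 1], list(range(10))]
best, scores = select_by_bic(X, y, supports)
```

Refitting by OLS removes the shrinkage bias of the penalized estimate before computing the RSS, so the $k\log n$ term is what penalizes spurious selections.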

Table 2 in the supplementary material provides the True Positive Rate (TPR) and False Positive Rate (FPR) when $\lambda$ is chosen either by minimizing the MSE or the BIC criterion for this dataset. We can see from this table that both choices yield TPR = 1 (all true positives are identified). However, the FPR based on the BIC criterion is smaller than the one obtained by using the MSE.

3. Numerical experiments

This section presents a comprehensive numerical study comparing the performance of our method with other regularized approaches in terms of prognostic and predictive biomarker selection. Besides the Lasso, we also compared our method with Elastic Net and Adaptive Lasso, since they also take the correlations into account. For Lasso, Elastic Net and Adaptive Lasso, in order to directly estimate prognostic and predictive effects, $X$ and $\gamma$ in Model (3) were replaced by

$$\begin{pmatrix} 1_{n_1,1} & 0_{n_1,1} & X_1 & 0_{n_1,p} \\ 0_{n_2,1} & 1_{n_2,1} & X_2 & X_2 \end{pmatrix} \quad \text{and} \quad (\alpha_1, \alpha_2, \beta_1', (\beta_2 - \beta_1)')',$$

respectively, where $X_1$ and $X_2$ are defined in (1), and $0_{a,b}$ (resp. $1_{a,b}$) denotes a matrix having $a$ rows and $b$ columns and containing only zeros (resp. ones). Note that this is the modeling proposed by Lipkovich et al. (2017). The sparsity enforcing constraint was put on the last $2p$ components of this parameter vector, which boils down to putting a sparsity enforcing constraint on $\beta_1$ and $\beta_2 - \beta_1$.
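The reparametrized criterion used for the compared methods can be sketched as a Lasso with coordinate-specific penalties. The code below is a minimal Python/NumPy sketch that uses proximal gradient descent (ISTA), an illustrative solver of our choosing rather than the coordinate descent of glmnet; the intercepts $\alpha_1$, $\alpha_2$ are left unpenalized, and the separate levels `lam1`, `lam2` on $\beta_1$ and $\beta_2 - \beta_1$ are assumptions mirroring (5). In glmnet, a comparable effect is obtained through per-variable penalty factors (the `penalty.factor` argument). The simulated values are hypothetical.

```python
import numpy as np

def reparam_design(X1, X2):
    """Columns (alpha1, alpha2, beta1, beta2 - beta1), as used for the compared methods."""
    n1, p = X1.shape
    n2 = X2.shape[0]
    top = np.hstack([np.ones((n1, 1)), np.zeros((n1, 1)), X1, np.zeros((n1, p))])
    bottom = np.hstack([np.zeros((n2, 1)), np.ones((n2, 1)), X2, X2])
    return np.vstack([top, bottom])

def weighted_lasso_ista(Z, y, penalties, n_iter=5000):
    """Minimize 0.5 * ||y - Z g||^2 + sum_k penalties[k] * |g_k| by proximal gradient."""
    step = 1.0 / np.linalg.norm(Z, 2) ** 2       # 1 / Lipschitz constant of the gradient
    g = np.zeros(Z.shape[1])
    for _ in range(n_iter):
        v = g - step * (Z.T @ (Z @ g - y))       # gradient step on the squared loss
        g = np.sign(v) * np.maximum(np.abs(v) - step * penalties, 0.0)  # soft-threshold
    return g

rng = np.random.default_rng(4)
n1 = n2 = 400
p = 10
X1 = rng.standard_normal((n1, p))
X2 = rng.standard_normal((n2, p))
beta1 = np.zeros(p)
beta1[:2] = 3.0                 # prognostic biomarkers: 0 and 1
delta = np.zeros(p)
delta[1] = 3.0                  # predictive biomarker: 1 (delta = beta2 - beta1)
y = np.concatenate([X1 @ beta1, 1.0 + X2 @ (beta1 + delta)])
y = y + rng.standard_normal(n1 + n2)
Z = reparam_design(X1, X2)
lam1, lam2 = 400.0, 400.0       # hypothetical penalty levels
penalties = np.concatenate([[0.0, 0.0], np.full(p, lam1), np.full(p, lam2)])
g = weighted_lasso_ista(Z, y, penalties)
prognostic = np.flatnonzero(np.abs(g[2:2 + p]) > 1e-8)
predictive = np.flatnonzero(np.abs(g[2 + p:]) > 1e-8)
```

Because the $\beta_2 - \beta_1$ block is penalized directly, a zero in that block means "no treatment-by-biomarker interaction", which is exactly the predictive-selection question.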

3.1. Simulation setting

All simulated datasets were generated from Model (3), where the $n_1$ ($n_2$) rows of $X_1$ ($X_2$) are assumed to be independent Gaussian random vectors with a covariance matrix $\Sigma$, and $\epsilon$ is a standard Gaussian random vector independent of $X_1$ and $X_2$. We defined $\Sigma$ as:

$$\Sigma = \begin{pmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{12}' & \Sigma_{22} \end{pmatrix}, \tag{14}$$

where $\Sigma_{11}$ (resp. $\Sigma_{22}$) is the correlation matrix of prognostic (resp. non-prognostic) biomarkers, with off-diagonal entries equal to $a_1$ (resp. $a_3$). Moreover, $\Sigma_{12}$ is the correlation matrix between prognostic and non-prognostic variables, with entries equal to $a_2$. In our simulations, the values of $(a_1, a_2, a_3)$ follow a framework proposed by Xue and Qu (2017). We checked that the Irrepresentable Condition (IC) of Zhao and Yu (2006) is violated, and thus the standard Lasso cannot consistently recover the positions of the null and non-null variables. For each dataset, we assumed randomized treatment allocation between the standard and experimental arms with a 1:1 ratio, i.e. $n_1 = n_2$. We further assume a relative treatment effect of 1 ($\alpha_2 - \alpha_1 = 1$). The number of biomarkers $p$ varies from 200 to 2000. The number of active biomarkers was set to 10, i.e. 5 purely prognostic biomarkers (non-zero coefficients in $\beta_1$ and zero coefficients in $\beta_2 - \beta_1$) and 5 biomarkers that are both prognostic and predictive (non-zero coefficients in both $\beta_1$ and $\beta_2 - \beta_1$).
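The block structure of (14) and the Irrepresentable Condition can be checked numerically. The sketch below builds such a matrix and evaluates the population version of the Zhao and Yu (2006) quantity $\max_{j \notin A} |\Sigma_{jA}\Sigma_{AA}^{-1}\operatorname{sign}(\beta_A)|$, which must be below 1 for the condition to hold. The values $(a_1, a_2, a_3) = (0.3, 0.5, 0.7)$, the compound-symmetry correlation 0.5, the all-positive signs and the function names are hypothetical choices for illustration, not necessarily the values used in our simulations. With these values the quantity is about 1.35 for the block structure (condition violated), about 0.91 for compound symmetry and 0 for the identity matrix, in line with the scenarios of Sections 3.1 and 3.3.1.

```python
import numpy as np

def block_correlation(p, q, a1, a2, a3):
    """Correlation matrix with the block structure of (14): the first q biomarkers are
    prognostic (within-block correlation a1), the other p - q are not (within-block
    correlation a3), and a2 is the correlation between the two groups."""
    S = np.full((p, p), float(a2))
    S[:q, :q] = a1
    S[q:, q:] = a3
    np.fill_diagonal(S, 1.0)
    return S

def irrepresentable_value(S, active, signs):
    """Population version of the Zhao-Yu quantity
    max_j |S[j, A] S[A, A]^{-1} sign(beta_A)| over inactive j; IC requires it to be < 1."""
    active = np.asarray(active)
    inactive = np.setdiff1d(np.arange(S.shape[0]), active)
    w = np.linalg.solve(S[np.ix_(active, active)], np.asarray(signs, dtype=float))
    return float(np.max(np.abs(S[np.ix_(inactive, active)] @ w)))

p, q = 200, 10
signs = np.ones(q)                                 # all active effects assumed positive
S_block = block_correlation(p, q, 0.3, 0.5, 0.7)   # hypothetical (a1, a2, a3)
S_cs = block_correlation(p, q, 0.5, 0.5, 0.5)      # compound symmetry, rho = 0.5
S_ind = np.eye(p)                                  # independent setting
ic_block = irrepresentable_value(S_block, range(q), signs)
ic_cs = irrepresentable_value(S_cs, range(q), signs)
ic_ind = irrepresentable_value(S_ind, range(q), signs)
```

For these structures the value has a closed form, $a_2 q / (1 + (q-1)a_1)$, because the vector of ones is an eigenvector of $\Sigma_{AA}$; the numerical computation is kept so that other sign patterns or structures can be tried.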

3.2. Evaluation criteria

We considered several evaluation criteria to assess the performance of the methods in selecting prognostic and predictive biomarkers: the true positive rate (TPR, i.e. the proportion of active biomarkers that are selected) and the false positive rate (FPR, i.e. the proportion of inactive biomarkers that are selected) for the selection of prognostic biomarkers, and similarly the TPR and FPR for predictive biomarkers. We further consider an overall TPR and FPR computed among all candidate biomarkers, regardless of their prognostic or predictive effect. The objective of the selection is to maximize the TPR and minimize the FPR. All metrics were calculated by averaging the results of 100 replications for each scenario.
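These rates can be computed from index sets, as in the following minimal sketch; the function names are hypothetical. The same function applies to prognostic, predictive and overall selection by passing the corresponding true and selected sets (for the overall criterion, the union of the prognostic and predictive index sets).

```python
def tpr_fpr(selected, truth, p):
    """True and false positive rates of a selected index set among p candidates.

    TPR = |selected & truth| / |truth|
    FPR = |selected - truth| / (p - |truth|)
    """
    selected, truth = set(selected), set(truth)
    tpr = len(selected & truth) / len(truth)
    fpr = len(selected - truth) / (p - len(truth))
    return tpr, fpr

def average_rates(runs):
    """Average (TPR, FPR) pairs over replications, e.g. the 100 runs per scenario."""
    tprs, fprs = zip(*runs)
    return sum(tprs) / len(tprs), sum(fprs) / len(fprs)

# Hypothetical example: 10 active prognostic biomarkers among p = 200.
truth = range(10)
selected = list(range(8)) + [50, 51]
tpr, fpr = tpr_fpr(selected, truth, 200)
```

Here 8 of the 10 active biomarkers are found (TPR = 0.8) and 2 of the 190 inactive ones are wrongly selected (FPR = 2/190).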

3.3. Biomarker selection results

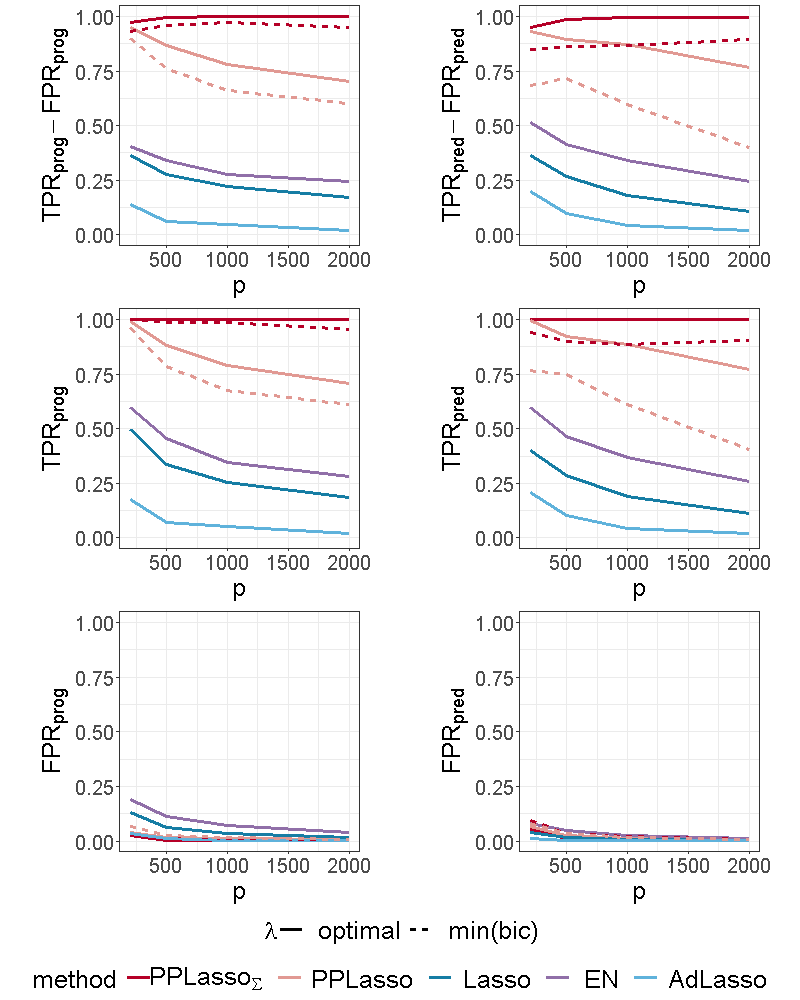

For the proposed method, different results are presented. (resp. PPLasso) corresponds to the results of the method by considering the true (resp. estimated) matrix . For estimating , we used the approach explained in Section 2.5. Two choices of parameters are also given: “optimal” and “min(bic)”. The former uses as a value for the one that maximizes and the latter uses the approach presented in Section 2.6. In order to compare the performance of our approach to the best performance that could be reached by Elastic Net, Lasso and Adaptive Lasso, we used for these methods the “optimal” parameters namely those maximizing . All these three methods were implemented with the glmnet R package, the best parameter involved in Elastic Net was chosen in the set . The choice of “min(bic)” is only applied to our method and corresponds to a choice of that could be used in practical situations. For ease of presentation, the abbreviation EN (resp. AdLasso) refers to Elastic Net (resp. Adaptive Lasso) in the following.

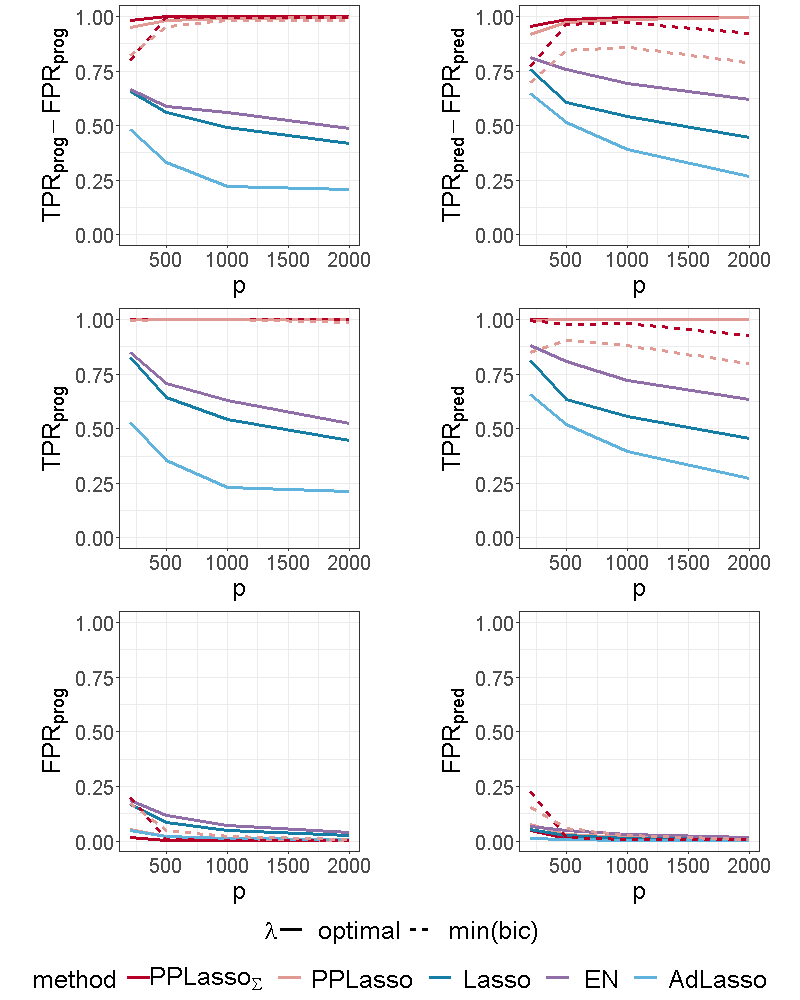

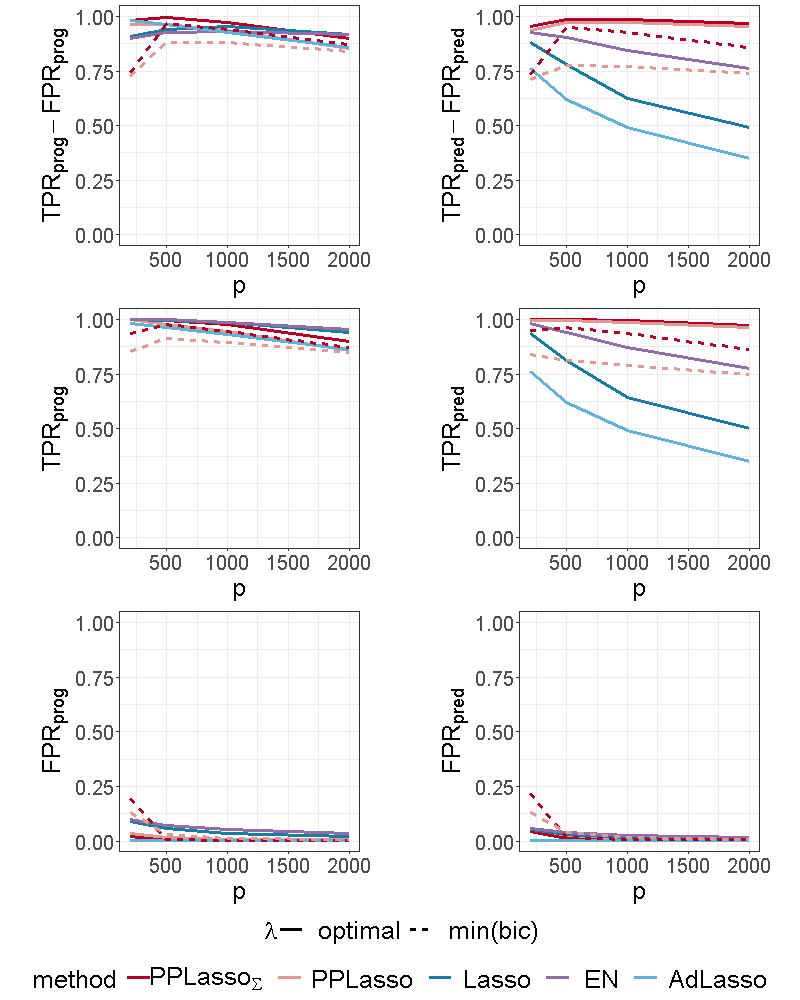

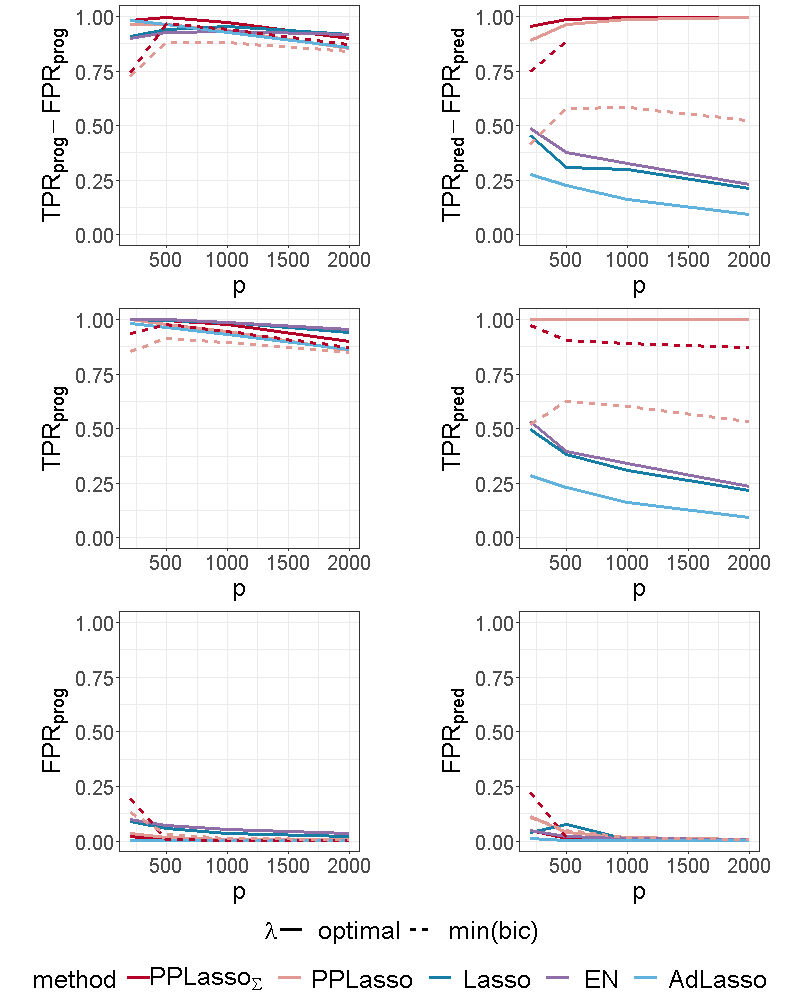

Figure 4 shows the selection performance of PPLasso and the other compared methods in the simulation scenario presented in Section 3.1. PPLasso selected all prognostic biomarkers ( almost 1) even for large $p$, with few false positive prognostic biomarkers. As compared to the optimal $\lambda$ maximizing , the one selected with the BIC tends to select some false positives (average: 33 () for and 10 () for ). The results obtained with the oracle and the estimated $\Sigma$ are comparable. The selection performance for predictive biomarkers is slightly lower than for prognostic biomarkers. Even if the false positive selection is quite similar between prognostic and predictive biomarkers, PPLasso missed some true predictive biomarkers when $\lambda$ is selected with the BIC criterion (average 0.98 and 0.80 for oracle and estimated $\Sigma$, respectively, with ). In this scenario where the IC is violated, PPLasso globally outperforms Lasso, Elastic Net and Adaptive Lasso. Although Elastic Net showed a higher TPR than Lasso and Adaptive Lasso, they all failed to select all truly prognostic and predictive biomarkers, and the number of missed active biomarkers increased with the dimension $p$. For example, for Elastic Net, = 0.85 and 0.53, = 0.81 and 0.61 for and , respectively.

3.3.1. Impact of the correlation matrix

To evaluate the impact of the correlation matrix on the selection performance of the methods, additional scenarios are presented where the IC is satisfied:

- (1) Compound symmetry structure, where all pairs of biomarkers share the same correlation;
- (2) Independent setting, where $\Sigma$ is the identity matrix.

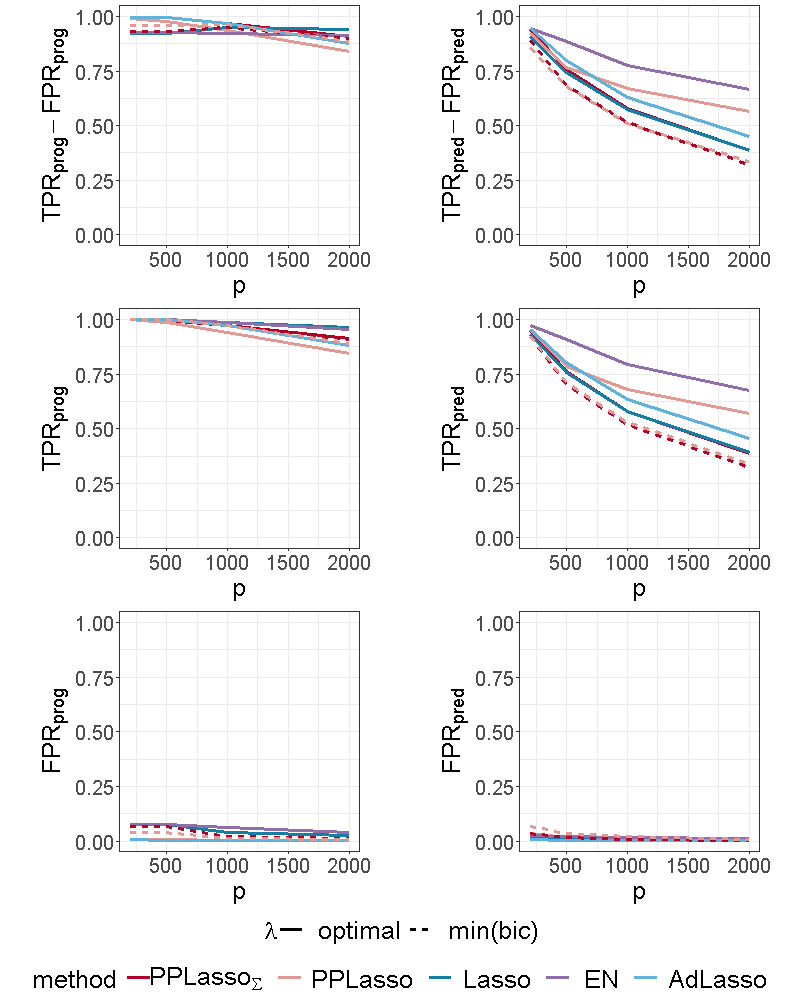

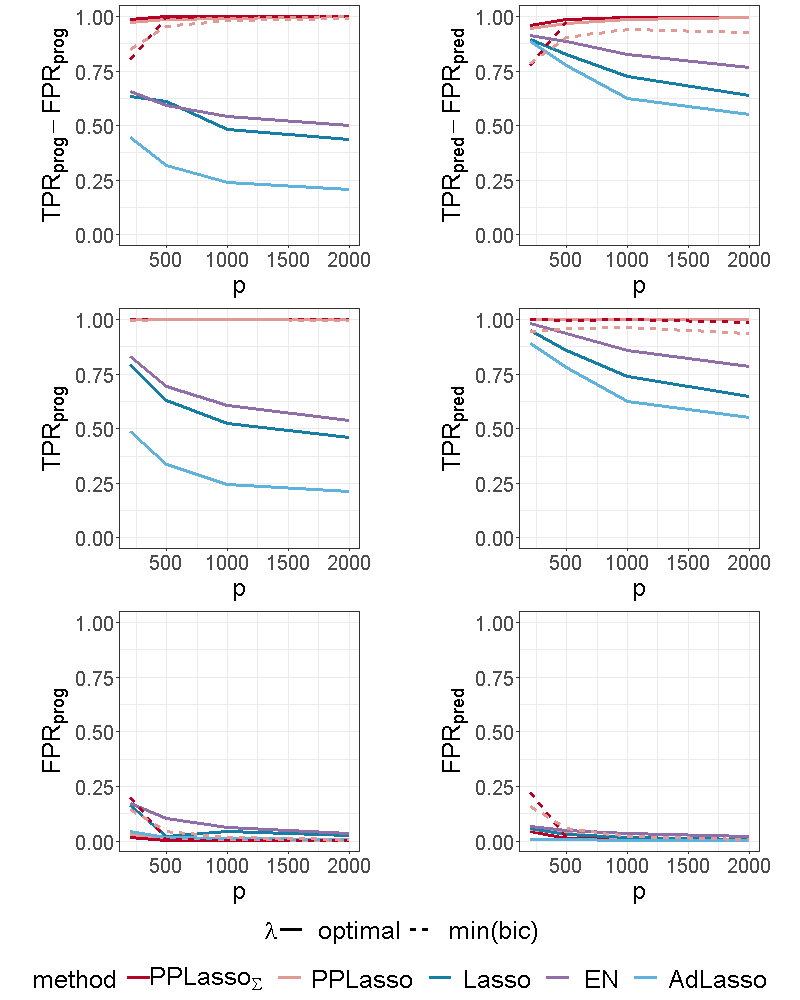

For the compound symmetry scenario displayed in Figure 5, all the methods successfully identified the true prognostic biomarkers (prognostic TPR close to 1 even for large $p$) with few false positives. However, the compared methods (Lasso, Elastic Net, Adaptive Lasso) missed some predictive biomarkers, especially as $p$ increases. In contrast, PPLasso identified almost all predictive biomarkers with the optimal choice of $\lambda$. Moreover, even when is selected by minimizing the BIC criterion (min(bic)), outperformed Lasso and Adaptive Lasso when with relatively stable and as increases.

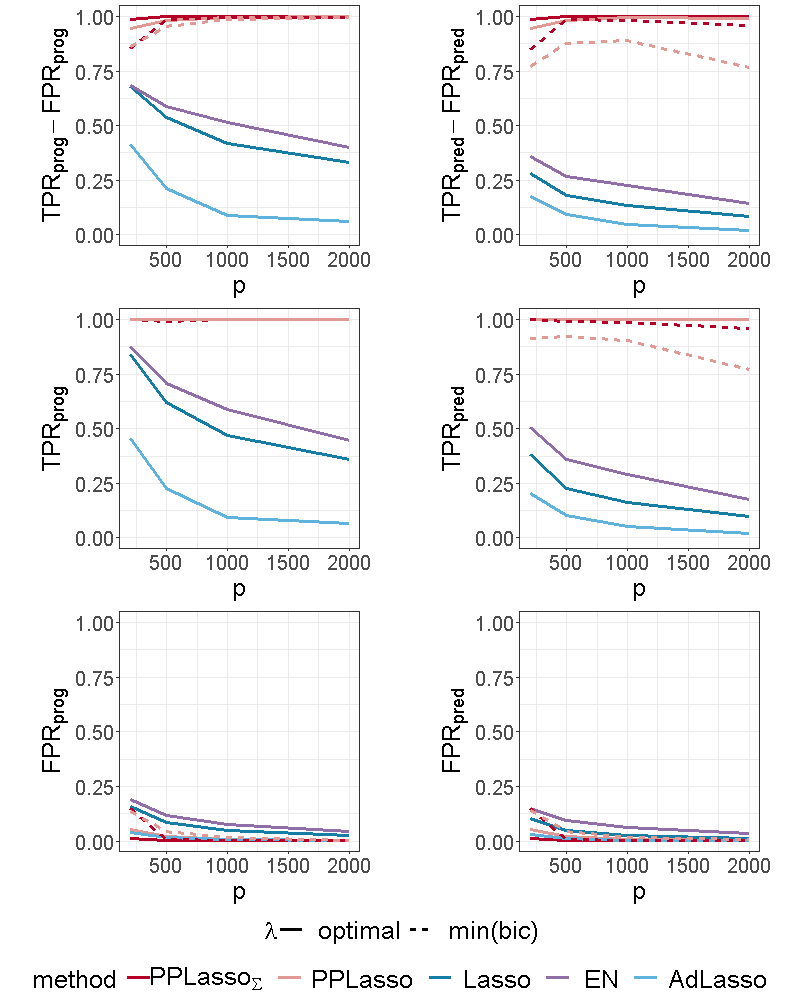

For the independent setting, as displayed in Figure 6, prognostic biomarkers were globally well identified by all the compared methods, with a slightly higher prognostic TPR for Lasso and Elastic Net as compared to PPLasso, but also a slightly higher prognostic FPR. With regard to predictive biomarkers, PPLasso using the true $\Sigma$ (oracle) performed similarly to the Lasso, which is expected since, with $\Sigma$ equal to the identity, the whitening step leaves the design matrix unchanged. On the other hand, even if PPLasso with $\lambda$ selected with "min(bic)" performed similarly to PPLasso with optimal $\lambda$ for relatively small $p$, its selection performance deteriorates for large $p$: although it remains higher than that of Lasso and Adaptive Lasso, it is lower than that of Elastic Net.

3.3.2. Impact of the effect size of active biomarkers

To evaluate the impact of the effect size on biomarker selection performance, the scenario presented in Section 3.1 was considered with different effect sizes for the predictive biomarkers: 1.5, 2 and 2.5.

Since the effect size of prognostic biomarkers did not change, the comparison focused on predictive biomarkers. As expected, reducing the effect size makes biomarker selection harder, especially for Lasso, Elastic Net and Adaptive Lasso, where the predictive biomarker selection is limited when : for Lasso when , = 0.45 (resp. 0.22) for (resp. 1.5), see Figure 4 and Figure 9 of the supplementary material. The selection performance of PPLasso when $\lambda$ is selected with min(bic) is also reduced by decreasing the effect size, especially when $\Sigma$ is also estimated. Nevertheless, the selection performance of PPLasso remains better than that of the other compared methods, for which the displayed performances are associated with the optimal value of $\lambda$. On the other hand, even with a limited effect size, PPLasso with optimal $\lambda$ identified all predictive biomarkers with very few false positives. When the effect size was increased to 2.5, the selection performance of all methods improved and the results for PPLasso with estimated were close to the ones with the optimal , as displayed in Figure 10 of the supplementary material. As compared with PPLasso, whose selection performance remained stable as $p$ increased, Lasso, Elastic Net and Adaptive Lasso were more impacted by the value of $p$, since their true positive selection decreased as $p$ increased. As an example, for the Lasso, =0.95 (resp. 0.65) for (resp. 2000).

3.3.3. Impact of the number of predictive biomarkers

The impact of the number of true predictive biomarkers was assessed by increasing the number of predictive biomarkers from 5 to 10 in the scenario presented in Section 3.1. When the number of predictive biomarkers increased, the impact on PPLasso was almost negligible, especially for prognostic biomarker identification. However, for the other methods, we can see from Figure 11 of the supplementary material that it became even harder to identify predictive biomarkers: the predictive TPR decreased compared to Figure 4, especially for large $p$ (e.g. 0.12, 0.18 and 0.02 for Lasso, Elastic Net and Adaptive Lasso, respectively, when ).

3.3.4. Impact of the dimension of the dataset

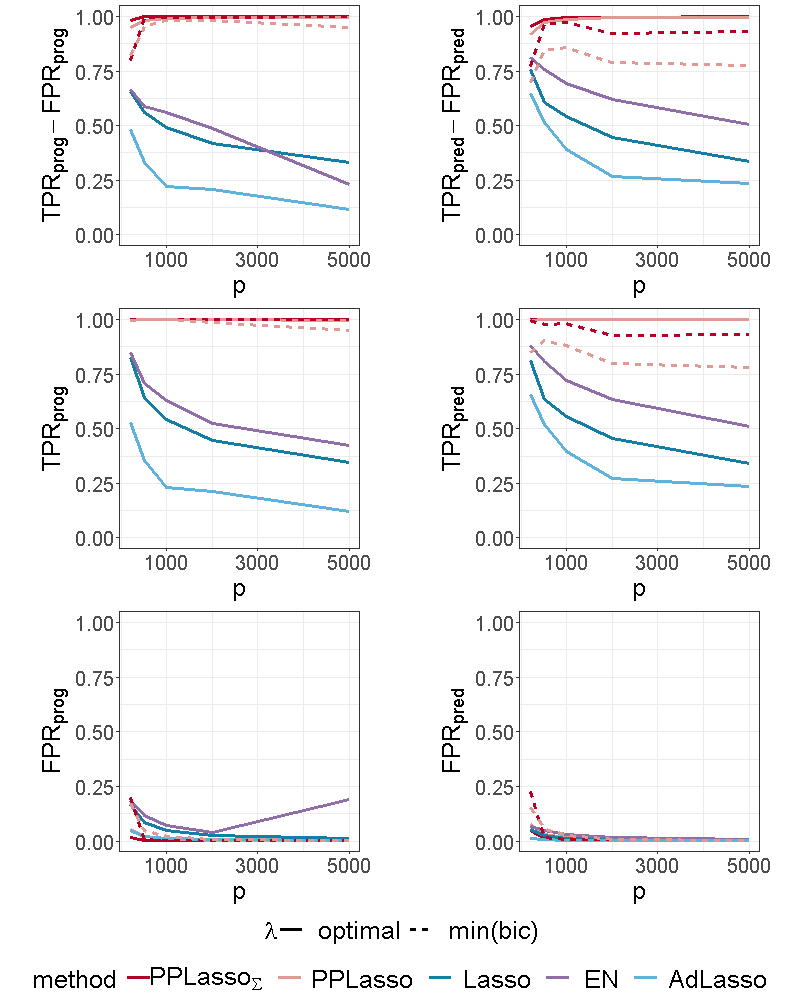

In this section, we studied a smaller sample size, $n = 50$ with $n_1 = n_2 = 25$, and a larger number of biomarkers, $p = 5000$.

We can see from Figure 12 of the supplementary material that for $p = 5000$, the selection performance of PPLasso is not altered as compared with , while the compared methods have more difficulty identifying both prognostic and predictive biomarkers.

When the sample size is smaller ($n = 50$), we can see from Figure 13 of the supplementary material that the ability to identify prognostic and predictive biomarkers decreased for all the methods. However, PPLasso still outperformed the others, with higher prognostic and predictive TPR and lower prognostic and predictive FPR.

4. Application to transcriptomic data in RV144 clinical trial

We applied the previously described methods to publicly available transcriptomic data from the RV144 vaccine trial (Rerks-Ngarm et al. (2009)). This trial showed that vaccination with ALVAC and AIDSVAX reduced the risk of HIV-1 acquisition by 31.2% as compared to placebo. Transcriptomic profiles of in vitro HIV-1 Env-stimulated peripheral blood mononuclear cells (PBMCs), obtained before immunization and 15 days after immunization (D15) from 40 vaccinees and 10 placebo recipients, were generated to better understand the underlying biological mechanisms (Fourati et al. (2019), Gene Expression Omnibus accession code: GSE103671).

For illustration purposes, the absolute change at D15 in the expression of the gene mTOR was considered as the continuous endpoint (response). mTOR plays a key role in the mTORC1 signaling pathway, which has been shown to be associated with the risk of HIV-1 acquisition (Fourati et al. (2019), Akbay et al. (2020)). The gene expression has been normalized as in the original publication of Fourati et al. (2019). After removing non-annotated genes (LOCxxxx and HS.xxxx), the 2000 genes with the highest empirical variances were included as candidate biomarkers for prognostic and predictive identification by PPLasso and the compared methods. The penalty parameter $\lambda$ for the Lasso and Adaptive Lasso, and the penalty and mixing parameters for Elastic Net, were selected through classical cross-validation. For PPLasso, $\lambda$ was selected based on the criterion described in Section 2.6.
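The gene pre-filtering step can be sketched as follows. The sketch assumes a samples-by-genes expression array and identifies non-annotated genes by the name prefixes `LOC` and `HS.` mentioned above; the function name, the use of the unbiased variance (`ddof=1`) and the toy data are assumptions.

```python
import numpy as np

def top_variance_genes(expr, gene_names, k=2000):
    """Drop non-annotated genes (names starting with 'LOC' or 'HS.') and keep the k
    genes with the largest empirical variance; expr has shape (n_samples, n_genes)."""
    names = np.asarray(gene_names, dtype=str)
    annotated = ~(np.char.startswith(names, "LOC") | np.char.startswith(names, "HS."))
    expr, names = expr[:, annotated], names[annotated]
    variances = expr.var(axis=0, ddof=1)
    order = np.argsort(variances)[::-1][:k]   # indices sorted by decreasing variance
    return expr[:, order], names[order]

# Toy example: column j equals scale_j * (-1, 1, -1, 1).
base = np.array([[-1.0], [1.0], [-1.0], [1.0]])
scales = np.array([10.0, 1.0, 20.0, 2.0, 3.0])
expr = base * scales
kept_expr, kept_names = top_variance_genes(expr, ["LOC1", "A", "HS.2", "B", "C"], k=2)
```

The non-annotated columns are removed before ranking, so the two highest-variance columns (`LOC1` and `HS.2`) do not make it into the candidate set.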

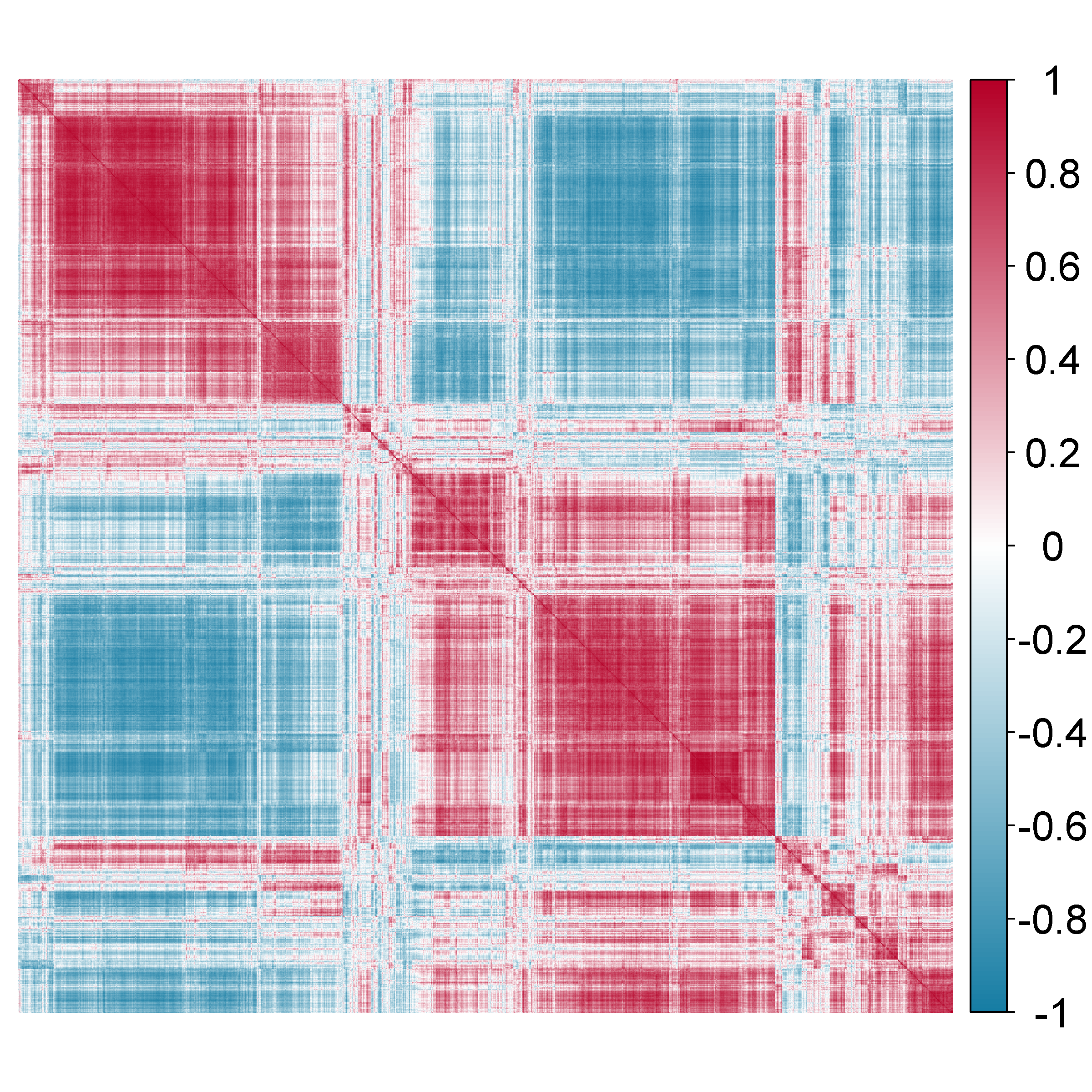

The estimate of $\Sigma$ was obtained by comparing several candidate estimators from the cvCovEst R package and selecting the estimator with the smallest estimation error. In this application, the combination of the sample covariance matrix and a dense target matrix (denseLinearShrinkEst) derived by Ledoit and Wolf (2020) provides the smallest estimation error. Figure 7 displays the estimated $\Sigma$ and highlights the strong correlation between the genes. Table 3 of the Supplementary material gives details on the compared estimators.

Prognostic and predictive genes selected by PPLasso, Lasso, Elastic Net and Adaptive Lasso are listed in Table 1. The numbers of selected genes are similar for all the compared methods, except for a slightly higher number of predictive genes selected by PPLasso. Lasso, Elastic Net and Adaptive Lasso selected very similar sets of prognostic and predictive genes. The overlap between PPLasso and the three other methods is moderate: 2 prognostic genes (SLAMF7 and TNFRSF6B) and 3 predictive genes (YTHDC1, MS4A7 and RPL21) are selected by all four methods. Interestingly, some genes selected by most methods, such as SLAMF7, TNFRSF6B, TNFRSF18 or NUCKS1, have already been discussed in the HIV-1 field. Moreover, among the predictive genes selected by PPLasso, some, such as BIRC3 and TLR8, are linked to pathways that have been highlighted as possible targets for HIV-1.

Table 1. Prognostic and predictive genes selected by PPLasso, Lasso, Elastic Net and Adaptive Lasso.

| Method | Prognostic genes | Predictive genes |
|---|---|---|
| PPLasso | HAPLN3, SLAMF7, GTF3C5, FAM46A, SH3PXD2B, TM4SF1, TNFRSF6B, TNFRSF18, TRPM2 | TLR8, YTHDC1, NUCKS1, BIRC3, SLAMF7, NFATC2IP, BOK, MGRN1, KIAA0492, SLC25A36, HMGN2, P2RY5, RPL21, MS4A7, RPL12P6 |
| Lasso | DKFZp434K191, NUCKS1, MAFF, SLAMF7, HIST2H2AC, HIST1H4C, IL8, TNFRSF6B, TNFRSF18, SCAND1 | DKFZp434K191, YTHDC1, VMO1, BOLA2, HIST1H4C, RPL21, MS4A7 |
| Elastic Net | DKFZp434K191, NUCKS1, SNURF, MAFF, SLAMF7, IL8, ZBP1, TNFRSF6B, ZAK, TNFRSF18, SCAND1, NME1-NME2, DNM1L, RNF146, NPEPL1 | DKFZp434K191, YTHDC1, PMP22, VMO1, BOLA2, HIST1H4C, RPL21, MS4A7, RAB11FIP1 |
| Adaptive Lasso | NUCKS1, SNURF, MAFF, SLAMF7, IL8, ZBP1, TNFRSF6B, NME1-NME2, DNM1L, RNF146 | YTHDC1, PMP22, VMO1, BOLA2, HIST1H4C, MS4A7, RPL21 |

5. Conclusion

We propose a new method named PPLasso to simultaneously identify prognostic and predictive biomarkers. PPLasso is particularly well suited to high-dimensional omics data in which the biomarkers are highly correlated, a framework that has not yet been thoroughly investigated. Through various numerical studies with or without strong correlation between biomarkers, we highlighted the ability of PPLasso to identify both prognostic and predictive biomarkers with few false positives. The current method is dedicated to the analysis of continuous responses through ANCOVA type models. Extending it to other challenging contexts, such as classification or survival analysis, will be the subject of future work.

Funding

This work was supported by the Association Nationale de la Recherche et de la Technologie (ANRT).

References

- Akbay et al. (2020) Akbay, B., Shmakova, A., Vassetzky, Y., and Dokudovskaya, S. (2020). Modulation of mTORC1 signaling pathway by HIV-1. Cells, 9, 1090.

- Ballman (2015) Ballman, K. V. (2015). Biomarker: Predictive or prognostic? Journal of Clinical Oncology, 33(33), 3968–3971.

- Boileau et al. (2021) Boileau, P., Hejazi, N. S., van der Laan, M. J., and Dudoit, S. (2021). Cross-validated loss-based covariance matrix estimator selection in high dimensions.

- Boileau et al. (2021) Boileau, P., Hejazi, N. S., van der Laan, M. J., and Dudoit, S. (2021). cvCovEst: Cross-validated covariance matrix estimator selection and evaluation in R. Journal of Open Source Software, 6 (63), 3273.

- Cai et al. (2010) Cai, T., Zhang, C.-H., and Zhou, H. (2010). Optimal rates of convergence for covariance matrix estimation. The Annals of Statistics, 38.

- Clark (2008) Clark, G. (2008). Prognostic factors versus predictive factors: Examples from a clinical trial of erlotinib. Molecular Oncology, 1, 406–12.

- Fan and Li (2006) Fan, J. and Li, R. (2006). Statistical challenges with high dimensionality: Feature selection in knowledge discovery. Proc. Madrid Int. Congress of Mathematicians, 3.

- Fan and Lv (2009) Fan, J. and Lv, J. (2009). A selective overview of variable selection in high dimensional feature space. Stat. Sinica, 20(1), 101–148.

- Fan et al. (2013) Fan, J., Liao, Y., and Mincheva, M. (2013). Large covariance estimation by thresholding principal orthogonal complements. Journal of the Royal Statistical Society. Series B, Statistical methodology, 75.

- Faraway (2002) Faraway, J. J. (2002). Practical regression and ANOVA using R. University of Bath.

- Foster et al. (2011) Foster, J., Taylor, J., and Ruberg, S. (2011). Subgroup identification from randomized clinical trial data. Statistics in Medicine, 30, 2867–80.

- Fourati et al. (2019) Fourati, S., Ribeiro, S., Blasco Lopes, F., Talla, A., Lefebvre, F., Cameron, M., Kaewkungwal, J., Pitisuttithum, P., Nitayaphan, S., Rerks-Ngarm, S., Kim, J., Thomas, R., Gilbert, P., Tomaras, G., Koup, R., Michael, N., McElrath, M., Gottardo, R., and Sékaly, R. (2019). Integrated systems approach defines the antiviral pathways conferring protection by the RV144 HIV vaccine. Nature Communications, 10.

- Ledoit and Wolf (2020) Ledoit, O. and Wolf, M. (2020). The Power of (Non-)Linear Shrinking: A Review and Guide to Covariance Matrix Estimation. Journal of Financial Econometrics.

- Lipkovich and Dmitrienko (2014) Lipkovich, I. and Dmitrienko, A. (2014). Strategies for identifying predictive biomarkers and subgroups with enhanced treatment effect in clinical trials using SIDES. Journal of Biopharmaceutical Statistics, 24, 130–53.

- Lipkovich et al. (2011) Lipkovich, I., Dmitrienko, A., Denne, J., and Enas, G. (2011). Subgroup identification based on differential effect search (SIDES): a recursive partitioning method for establishing response to treatment in patient subpopulations. Statistics in Medicine, 30, 2601–21.

- Lipkovich et al. (2017) Lipkovich, I., Dmitrienko, A., and D’Agostino Sr., R. B. (2017). Tutorial in biostatistics: data-driven subgroup identification and analysis in clinical trials. Statistics in Medicine, 36(1), 136–196.

- McDonald (2009) McDonald, J. (2009). Handbook of Biological Statistics, 2nd Edition. Sparky House Publishing, Baltimore.

- Rerks-Ngarm et al. (2009) Rerks-Ngarm, S., Pitisuttithum, P., Nitayaphan, S., Kaewkungwal, J., Chiu, J., Paris, R., Premsri, N., Namwat, C., De Souza, M., Benenson, M., Gurunathan, S., Tartaglia, J., McNeil, J., Francis, D., Stablein, D., Birx, D., Chunsuttiwat, S., Khamboonruang, C., and Kim, J. (2009). Vaccination with ALVAC and AIDSVAX to prevent HIV-1 infection in Thailand. The New England Journal of Medicine, 361, 2209–20.

- Saeys et al. (2007) Saeys, Y., Inza, I., and Larranaga, P. (2007). A review of feature selection techniques in bioinformatics. Bioinformatics, 23(19), 2507–2517.

- Sechidis et al. (2018) Sechidis, K., Papangelou, K., Metcalfe, P. D., Svensson, D., Weatherall, J., and Brown, G. (2018). Distinguishing prognostic and predictive biomarkers: an information theoretic approach. Bioinformatics, 34(19), 3365–3376.

- Smith (2018) Smith, G. (2018). Step away from stepwise. J. Big Data, 5(32), 1–12.

- Tian et al. (2012) Tian, L., Alizadeh, A., Gentles, A., and Tibshirani, R. (2012). A simple method for estimating interactions between a treatment and a large number of covariates. Journal of the American Statistical Association, 109.

- Tibshirani (1996) Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Stat. Methodol.), 58(1), 267–288.

- Tibshirani and Taylor (2011) Tibshirani, R. J. and Taylor, J. (2011). The solution path of the generalized lasso. Ann. Stat., 39(3), 1335–1371.

- Wang et al. (2019) Wang, H., Lengerich, B., Aragam, B., and Xing, E. (2019). Precision lasso: Accounting for correlations and linear dependencies in high-dimensional genomic data. Bioinformatics, 35(7), 1181–1187.

- Wang and Leng (2016) Wang, X. and Leng, C. (2016). High dimensional ordinary least squares projection for screening variables. J. R. Stat. Soc. Ser. B (Stat. Methodol.), 78(3), 589–611.

- Windeler (2000) Windeler, J. (2000). Prognosis: what does the clinician associate with this notion? Statistics in Medicine, 19, 425–30.

- Xue and Qu (2017) Xue, F. and Qu, A. (2017). Variable selection for highly correlated predictors.

- Zhao and Yu (2006) Zhao, P. and Yu, B. (2006). On model selection consistency of lasso. J. Machine Learn. Res., 7, 2541–2563.

- Zhu et al. (2021) Zhu, W., Lévy-Leduc, C., and Ternès, N. (2021). A variable selection approach for highly correlated predictors in high-dimensional genomic data. Bioinformatics, 37(16), 2238–2244.

- Zou and Hastie (2005) Zou, H. and Hastie, T. (2005). Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 67(2), 301–320.

- Zou (2006) Zou, H. (2006). The adaptive lasso and its oracle properties. Journal of the American Statistical Association, 101(476), 1418–1429.

Supplementary material

This supplementary material provides additional numerical experiments, figures and tables for the paper: “Identification of prognostic and predictive biomarkers in high-dimensional data with PPLasso”.

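Table 2. TPR and FPR when $\lambda$ is chosen by minimizing the MSE or the BIC criterion (dataset of Section 2.2).

| | MSE | BIC |
|---|---|---|
| TPR (prognostic) | 1.000 | 1.000 |
| FPR (prognostic) | 0.038 | 0.024 |
| TPR (predictive) | 1.000 | 1.000 |
| FPR (predictive) | 0.008 | 0.006 |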

Table 3. Candidate covariance matrix estimators compared with cvCovEst on the RV144 data.

| Estimator | Hyperparameters | Empirical risk |
|---|---|---|
| denseLinearShrinkEst | - | 102546 |
| sampleCovEst | - | 102547 |
| linearShrinkLWEst | - | 103496 |
| poetEst | lambda=0.1, k=2 | 104522 |
| poetEst | lambda=0.2, k=2 | 105358 |
| poetEst | lambda=0.1, k=1 | 105972 |
| poetEst | lambda=0.2, k=1 | 108222 |
| thresholdingEst | gamma=0.2 | 137798 |
| thresholdingEst | gamma=0.4 | 186844 |