Image augmentation with conformal mappings for a convolutional neural network

Abstract.

For augmentation of the square-shaped image data of a convolutional neural network (CNN), we introduce a new method in which the original images are mapped onto a disk with a conformal mapping, rotated around the center of this disk and mapped under a Möbius transformation that preserves the disk, and then mapped back onto their original square shape. Unlike the typical transformations used in data augmentation for a CNN, this process does not result in the loss of information caused by removing areas near the edges of the original images. We give the formulas of all the mappings needed, together with detailed instructions on how to write code for transforming the images. The new method is also tested with simulated data and, according to the results, using this method to augment a training set of 10 images into 40 images decreases the error of the predictions by a CNN for a test set of 160 images in a statistically significant way (p-value=0.0360).

Key words and phrases:

Conformal mapping, convolutional neural network, deep learning, image augmentation

2020 Mathematics Subject Classification:

Primary 30C30; Secondary 68U10

Author information.

Oona Rainio1, email: ormrai@utu.fi, ORCID: 0000-0002-7775-7656

Mohamed M.S. Nasser2, email: mms.nasser@wichita.edu, ORCID: 0000-0002-2561-0978

Matti Vuorinen3, email: vuorinen@utu.fi, ORCID: 0000-0002-1734-8228

Riku Klén1, email: riku.klen@utu.fi, ORCID: 0000-0002-0982-8360

1: Turku PET Centre, University of Turku and Turku University Hospital, Turku, Finland

2: Department of Mathematics, Statistics, and Physics, Wichita State University, Wichita, KS 67260-0033, USA

3: Department of Mathematics and Statistics, University of Turku, Turku, Finland

1. Introduction

A convolutional neural network (CNN) is a type of deep learning model that is well-suited for processing images. It receives image data in a matrix format, so that each element of the matrix corresponds to the value of one pixel in the image, and then transforms this input through several layers while taking into account the spatial relationships between the data points. CNNs have proven very useful in many areas of research, but training even a single CNN often requires a large number of labelled images, which can be difficult to obtain.

One possible solution to this problem is data augmentation, which means that the amount of existing data is multiplied by using simple transformations to create new, slightly different versions of the images. Typical transformations used for this purpose are rotations, reflections, and translations, but they are not suitable for all types of data. Namely, if the image is square-shaped, creating a new version of it with a translation always crops out some areas near its edges, and there are only seven new images that can be created with a rotation or a reflection that fully preserves the original square. Clearly, this issue would not arise if the images given to the CNN were disk-shaped, but this is rarely the case.
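The count of seven follows from the symmetry group of the square, which has eight elements (four rotations by multiples of 90 degrees, each with or without a reflection), one of them being the identity. The minimal pure-Python sketch below enumerates these symmetries for an image stored as a list of rows; the helper names are illustrative and not taken from the authors' code.

```python
def rot90(img):
    """Rotate a square image (list of rows) by 90 degrees counterclockwise."""
    # Transposing and then reversing the row order gives a quarter turn.
    return [list(row) for row in zip(*img)][::-1]


def flip_lr(img):
    """Reflect an image across its vertical centre line."""
    return [row[::-1] for row in img]


def square_symmetries(img):
    """All eight images produced by the symmetries of the square (dihedral group D4)."""
    variants = []
    current = img
    for _ in range(4):
        variants.append(current)
        variants.append(flip_lr(current))
        current = rot90(current)
    return variants


# A test image with distinct pixel values, so that no two symmetries coincide.
img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
distinct = {tuple(map(tuple, v)) for v in square_symmetries(img)}
print(len(distinct) - 1)  # new images besides the original: 7
```

Any other rotation angle, or any translation, moves part of the image outside the square, which is exactly the loss of information that the conformal approach is meant to avoid.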

However, according to the Riemann mapping theorem from classical function theory, any simply connected proper subdomain of the complex plane can be mapped onto the unit disk with a conformal mapping; in particular, a conformal mapping called a Schwarz-Christoffel mapping can be used to map a two-dimensional disk onto the interior of a simple polygon [8]. Conformal mappings are much studied in complex analysis because they have many desirable properties, such as preserving the magnitudes and directions of angles between curves, even though they can turn straight line segments into circular arcs and vice versa. Möbius transformations are another subclass of conformal mappings, and they can be chosen so that they map the interior of a disk onto itself while still significantly transforming its contents. While conformal mappings could potentially be utilized in image data augmentation, their formulas are generally quite complicated and require the integration of complex-valued functions that cannot be computed directly with the built-in functions of common programming languages.

In this article, we study whether image augmentation can be performed by first mapping each square-shaped image onto a disk with a conformal mapping, then applying rotations and Möbius transformations that preserve this disk, and finally mapping the resulting images back onto their original square shape. In Section 2, we present the usual formulas of the conformal mappings and the other mathematical theory needed. In Section 3, we show how these mappings can be written in Python or other programming languages and how images can be mapped with them. In Section 4, we test this method with a simple example of a CNN predicting a simulated data set. All the code, written in Python and MATLAB, is publicly available.

2. Preliminaries

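Let $S$ be the interior of the square with the vertices $1+i$, $-1+i$, $-1-i$, and $1-i$. In other words, define $S=\{z\in\mathbb{C} : |\mathrm{Re}(z)|<1,\ |\mathrm{Im}(z)|<1\}$, where $\mathbb{C}$ is the complex plane. Denote the unit disk by $\mathbb{B}^2=\{z\in\mathbb{C} : |z|<1\}$.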

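Consider the complete elliptic integrals of the first kind $K(r)$ and $K'(r)$ defined for $r\in(0,1)$ by [3, 16]

$$K(r)=\int_0^1\frac{dx}{\sqrt{(1-x^2)(1-r^2x^2)}},\qquad K'(r)=K(r'),\quad r'=\sqrt{1-r^2}. \tag{2.1}$$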

In many references, the complete elliptic integrals of the first kind are defined as in (2.1); see, e.g., [4, 7, 12]. In this paper, however, we use the notation of [1]. Thus, the complete elliptic integrals of the first kind are defined by [1, p. 590]

$$K(m)=\int_0^{\pi/2}\frac{d\theta}{\sqrt{1-m\sin^2\theta}},\qquad K'(m)=K(m_1), \tag{2.2}$$

where $0<m<1$ and $m_1=1-m$. The incomplete elliptic integral of the first kind is defined by [1]

$$F(\varphi\,|\,m)=\int_0^{\varphi}\frac{d\theta}{\sqrt{1-m\sin^2\theta}}. \tag{2.3}$$
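As a quick numerical illustration of these definitions, the sketch below evaluates (2.2) with the arithmetic-geometric mean (AGM), using the classical identity $K(m)=\pi/(2\,\mathrm{AGM}(1,\sqrt{1-m}))$, and (2.3) with composite Simpson quadrature. It is a pure-Python sketch for real $0\le m<1$, and the function names are our own; SciPy's `scipy.special.ellipk(m)` and `scipy.special.ellipkinc(phi, m)` use the same parameter $m$.

```python
import math


def ellipk(m):
    """Complete elliptic integral K(m) of (2.2) for 0 <= m < 1, via the AGM."""
    a, b = 1.0, math.sqrt(1.0 - m)
    for _ in range(50):
        a, b = (a + b) / 2.0, math.sqrt(a * b)
        if abs(a - b) <= 1e-15 * a:
            break
    return math.pi / (2.0 * a)


def ellipf(phi, m, n=2000):
    """Incomplete elliptic integral F(phi|m) of (2.3) by composite Simpson's rule.

    n is the (even) number of subintervals; the integrand is smooth for m < 1.
    """
    h = phi / n
    total = 0.0
    for j in range(n + 1):
        weight = 1 if j in (0, n) else (4 if j % 2 else 2)
        total += weight / math.sqrt(1.0 - m * math.sin(j * h) ** 2)
    return total * h / 3.0


print(ellipk(0.5))                              # about 1.8540746773
print(ellipf(math.pi / 2, 0.5) - ellipk(0.5))   # close to 0, since F(pi/2|m) = K(m)
```

The substitution $x=\sin\theta$ turns (2.1) into (2.2) with $m=r^2$, so the first printed value is also $K(1/\sqrt2)$ in the convention of (2.1).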

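The Jacobian elliptic functions can be defined with the help of the incomplete elliptic integral (2.3); see [1, Ch. 16]. If

$$u=F(\varphi\,|\,m),$$

then the angle $\varphi$ is called the Jacobi amplitude and we write

$$\varphi=\operatorname{am}(u\,|\,m).$$

The Jacobian elliptic functions are defined by

$$\operatorname{sn}(u\,|\,m)=\sin\varphi,\qquad \operatorname{cn}(u\,|\,m)=\cos\varphi,\qquad \operatorname{dn}(u\,|\,m)=\sqrt{1-m\sin^2\varphi}. \tag{2.4}$$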
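For real arguments, the amplitude in (2.4) does not have to be found by inverting (2.3) directly. A standard alternative, the descending AGM scheme of [1, §16.4], computes $\operatorname{am}(u|m)$, and hence sn, cn, and dn, in a few steps. The pure-Python sketch below assumes real $u$ and $0\le m<1$; it computes the same quantities that `scipy.special.ellipj(u, m)` returns as (sn, cn, dn, ph), but it is our own illustration rather than SciPy's implementation.

```python
import math


def jacobi_real(u, m):
    """Return (sn, cn, dn, am) of real u for 0 <= m < 1 by the AGM scale."""
    a, b, c = [1.0], math.sqrt(1.0 - m), [math.sqrt(m)]
    # Descending AGM: a_j = (a_{j-1} + b_{j-1}) / 2, c_j = (a_{j-1} - b_{j-1}) / 2.
    while abs(c[-1]) > 1e-15 and len(a) < 40:
        a_prev = a[-1]
        a.append((a_prev + b) / 2.0)
        c.append((a_prev - b) / 2.0)
        b = math.sqrt(a_prev * b)
    n = len(a) - 1
    phi = 2.0 ** n * a[n] * u
    # Backward recurrence: phi_{j-1} = (phi_j + asin((c_j / a_j) sin phi_j)) / 2.
    for j in range(n, 0, -1):
        phi = (phi + math.asin(c[j] / a[j] * math.sin(phi))) / 2.0
    sn, cn = math.sin(phi), math.cos(phi)
    # (2.4): dn = sqrt(1 - m sin^2 phi), with phi = am(u|m).
    return sn, cn, math.sqrt(1.0 - m * sn * sn), phi


K_HALF = 1.8540746773013719   # K(1/2)
sn, cn, dn, am = jacobi_real(K_HALF, 0.5)
print(sn, cn, am)             # approximately 1, 0 and pi/2
```

At $u=K(m)$ the amplitude is $\pi/2$, which is why sn and cn come out as 1 and 0 there.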

The exact conformal mapping from the square region $S$ onto the unit disk, and its inverse, can be written in terms of the Jacobian elliptic functions and the incomplete elliptic integral. It follows from [11, p. 182] and [12, p. 242] that the conformal mapping from the domain $S$ onto the unit disk $\mathbb{B}^2$, $w=f(z)$, is given by

| (2.5) |

where

| (2.6) |

Note that , see [3, (3.19), p. 51]. Then it follows from (2.4) and (2.5) that the inverse of this mapping, $z=f^{-1}(w)$, is given by

| (2.7) |

which is a conformal mapping from the unit disk $\mathbb{B}^2$ onto the square domain $S$.
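Because (2.7) is not legible in this copy, here is an independent way to evaluate a conformal map of the unit disk onto the square $(-1,1)\times(-1,1)$, which can serve as a cross-check. It is our own construction from the Schwarz-Christoffel formula, not a transcription of (2.7): the integral $G(\zeta)=\int_0^\zeta dt/\sqrt{1-t^4}$ maps the unit disk onto a square with vertices $\pm G(1)$ and $\pm iG(1)$, where $G(1)=K(1/2)/\sqrt2$, so rotating by $45^\circ$ and rescaling gives a map that sends $0$ to $0$, $1$ to $1$, and $e^{i\pi/4}$ to $1+i$. If (2.7) is normalized in the same way, the two maps agree, since a conformal map of the disk onto the square that fixes the origin is unique up to a rotation.

```python
import cmath
import math


def ellipk(m):
    """K(m) = pi / (2 AGM(1, sqrt(1 - m))) for 0 <= m < 1."""
    a, b = 1.0, math.sqrt(1.0 - m)
    for _ in range(50):
        a, b = (a + b) / 2.0, math.sqrt(a * b)
        if abs(a - b) <= 1e-15 * a:
            break
    return math.pi / (2.0 * a)


K_HALF = ellipk(0.5)   # K(1/2), about 1.8541


def sc_integral(zeta, n=2000):
    """G(zeta) = integral of dt / sqrt(1 - t^4) along the segment from 0 to zeta.

    Composite Simpson's rule with n (even) subintervals. For |zeta| <= 1 the
    principal square root is continuous along the segment; accuracy degrades
    only near the singular points zeta = +-1, +-i.
    """
    total = 0j
    for j in range(n + 1):
        weight = 1 if j in (0, n) else (4 if j % 2 else 2)
        total += weight / cmath.sqrt(1 - (zeta * j / n) ** 4)
    return zeta * total / (3 * n)


def disk_to_square(w):
    """Conformal map of the unit disk onto (-1, 1) x (-1, 1) with 0 -> 0 and 1 -> 1."""
    # Rotate by -45 degrees, apply G, then rotate back and scale so that the
    # vertex G(1) = K(1/2)/sqrt(2) lands on the corner 1 + i.
    return (1 + 1j) * math.sqrt(2) / K_HALF * sc_integral((1 - 1j) / math.sqrt(2) * w)


print(disk_to_square(1))     # close to 1 (midpoint of the right edge)
print(disk_to_square(1j))    # close to 1j
```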

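Denote the extended complex plane by $\overline{\mathbb{C}}=\mathbb{C}\cup\{\infty\}$ and choose some $a\in\mathbb{B}^2$. Define the Möbius transformation $T_a\colon\overline{\mathbb{C}}\to\overline{\mathbb{C}}$,

$$T_a(z)=\frac{z-a}{1-\bar{a}z}, \tag{2.8}$$

where $\bar{a}$ is the complex conjugate of $a$. This mapping fulfills $T_a(a)=0$, $T_a(\partial\mathbb{B}^2)=\partial\mathbb{B}^2$, and $T_a(\mathbb{B}^2)=\mathbb{B}^2$, which means that it preserves the unit disk but is not the identity mapping as long as $a\neq0$.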
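The disk-preserving properties of the Möbius transformation (2.8) are easy to check numerically. The sketch assumes the standard disk automorphism $T_a(z)=(z-a)/(1-\bar a z)$ with $|a|<1$, and the value of $a$ is an arbitrary choice; it also uses the fact that the inverse of $T_a$ is $T_{-a}$, which is what a backward image mapping needs.

```python
import cmath


def mobius(z, a):
    """T_a(z) = (z - a) / (1 - conj(a) z); maps the unit disk onto itself when |a| < 1."""
    return (z - a) / (1 - a.conjugate() * z)


a = 0.3 + 0.4j
circle = [cmath.exp(2j * cmath.pi * k / 64) for k in range(64)]

print(abs(mobius(a, a)))                                    # 0.0: a goes to the centre
print(max(abs(abs(mobius(z, a)) - 1) for z in circle))      # tiny: circle goes to circle
print(abs(mobius(mobius(0.5j, a), -a) - 0.5j))              # tiny: T_{-a} undoes T_a
```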

Finally, we formally define the rotation about the origin by an angle $\theta\in\mathbb{R}$ as $R_\theta\colon\mathbb{C}\to\mathbb{C}$,

$$R_\theta(z)=e^{i\theta}z. \tag{2.9}$$
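In the augmentation, the Möbius transformation (2.8) and the rotation (2.9) are applied between the two conformal maps. The sketch below composes them as $R_\theta\circ T_a$ (the order and the sample values of $a$ and $\theta$ are our assumptions, and $T_a$ is taken in the standard form $(z-a)/(1-\bar a z)$) and also gives the inverse $T_{-a}\circ R_{-\theta}$, since pulling each output pixel back to the input image requires the inverse map.

```python
import cmath


def mobius(z, a):
    """T_a(z) = (z - a) / (1 - conj(a) z), assumed form of (2.8)."""
    return (z - a) / (1 - a.conjugate() * z)


def rotate(z, theta):
    """R_theta(z) = exp(i theta) z, the rotation (2.9) about the origin."""
    return cmath.exp(1j * theta) * z


def automorphism(z, a, theta):
    """R_theta(T_a(z)): a Möbius transformation followed by a rotation."""
    return rotate(mobius(z, a), theta)


def automorphism_inverse(w, a, theta):
    """Inverse of R_theta o T_a, namely T_{-a} o R_{-theta}."""
    return mobius(rotate(w, -theta), -a)


a, theta = 0.2 - 0.1j, 0.6   # illustrative parameters only
z = 0.4 + 0.5j
w = automorphism(z, a, theta)
print(abs(w) < 1, abs(automorphism_inverse(w, a, theta) - z))  # True, tiny
```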

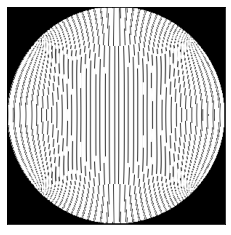

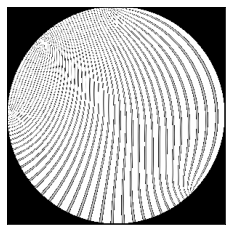

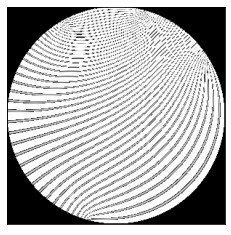

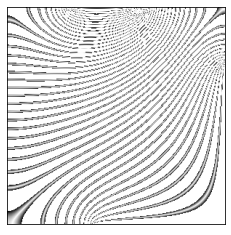

Figure 1 represents visually how the functions , , and and the inverse function affect the shape of an image, when and are defined with constants and , respectively.

3. Code

Computing the mapping function by (2.5) and its inverse function by (2.7) requires computing the Jacobian elliptic functions and for complex as well as computing the incomplete elliptic integral for complex . Thus, the function and its inverse function cannot usually be computed directly from their definitions (2.5) and (2.7) in the code, because in many programming languages the routines for elliptic integrals and elliptic functions are not defined for complex arguments. For instance, the complete elliptic integral , the incomplete elliptic integral , as well as the Jacobian elliptic functions , , and can be computed for real arguments, , with the functions ellipk, ellipkinc, and ellipj of the module scipy.special of SciPy [20] in Python. In this paper, we therefore use the properties of the Jacobian elliptic functions and the incomplete elliptic integral to compute their values without the need to evaluate elliptic integrals of complex variables. For example, the functions and , for a complex variable where and are real variables, can be computed using the following formulas [1, §16.21.1]

| (3.1) | |||||

| (3.2) |

where

and
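Formulas (3.1) and (3.2) are not legible in this copy. For reference, [1, §16.21] expresses the Jacobian functions of $u=x+iy$ through real-argument values: with $s,c,d$ the functions of $x$ at parameter $m$, $s_1,c_1,d_1$ those of $y$ at $m_1=1-m$, and $\delta=c_1^2+m s^2 s_1^2$,

$$\operatorname{sn}u=\frac{s d_1+i c d s_1 c_1}{\delta},\qquad \operatorname{cn}u=\frac{c c_1-i s d s_1 d_1}{\delta},\qquad \operatorname{dn}u=\frac{d c_1 d_1-i m s c s_1}{\delta}.$$

The sketch below implements these for $0<m<1$. Its `jacobi_real` helper is a pure-Python stand-in for `scipy.special.ellipj(u, m)` (AGM scale of [1, §16.4]), used only so that the example runs without SciPy.

```python
import math


def jacobi_real(u, m):
    """(sn, cn, dn) of real u, 0 <= m < 1, by the AGM scale of [1, §16.4]."""
    a, b, c = [1.0], math.sqrt(1.0 - m), [math.sqrt(m)]
    while abs(c[-1]) > 1e-15 and len(a) < 40:
        a_prev = a[-1]
        a.append((a_prev + b) / 2.0)
        c.append((a_prev - b) / 2.0)
        b = math.sqrt(a_prev * b)
    phi = 2.0 ** (len(a) - 1) * a[-1] * u
    for j in range(len(a) - 1, 0, -1):
        phi = (phi + math.asin(c[j] / a[j] * math.sin(phi))) / 2.0
    s = math.sin(phi)
    return s, math.cos(phi), math.sqrt(1.0 - m * s * s)


def jacobi_complex(u, m):
    """(sn, cn, dn) of complex u = x + iy for 0 < m < 1, via [1, §16.21]."""
    s, c, d = jacobi_real(u.real, m)            # argument x, parameter m
    s1, c1, d1 = jacobi_real(u.imag, 1.0 - m)   # argument y, parameter m1 = 1 - m
    delta = c1 * c1 + m * s * s * s1 * s1
    sn = complex(s * d1, c * d * s1 * c1) / delta
    cn = complex(c * c1, -s * d * s1 * d1) / delta
    dn = complex(d * c1 * d1, -m * s * c * s1) / delta
    return sn, cn, dn


sn, cn, dn = jacobi_complex(0.7 + 0.4j, 0.5)
print(abs(sn ** 2 + cn ** 2 - 1), abs(dn ** 2 + 0.5 * sn ** 2 - 1))  # both tiny
```

Besides the two identities printed above, a useful check is that a difference quotient of sn in either the real or the imaginary direction reproduces $\operatorname{cn}u\,\operatorname{dn}u$, which confirms that the result is analytic in $u$.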

Similarly, for a complex variable , the incomplete elliptic integral can be computed by [1, §17.4.11]

| (3.3) |

where and are real variables such that is the positive root of the equation

| (3.4) |

and is given by

| (3.5) |

Note that, if and , then and hence . Thus, we have

| (3.6) |

Below, denote the floor and ceiling functions by $\lfloor\cdot\rfloor$ and $\lceil\cdot\rceil$. Then the values of and can be computed from (3.6) and (3.5), respectively, through (see [14])

| (3.7) | |||||

| (3.8) |
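Equations (3.3)-(3.8) are likewise not legible here. For reference, [1, 17.4.11] states that $F(\varphi+i\psi\,|\,m)=F(\lambda\,|\,m)+iF(\mu\,|\,m_1)$, where $\cot^2\lambda$ is the positive root of $x^2-[\cot^2\varphi+m\sinh^2\psi\csc^2\varphi-m_1]x-m_1\cot^2\varphi=0$ and $m\tan^2\mu=\tan^2\varphi\cot^2\lambda-1$. The sketch below applies this only for $0<\varphi<\pi/2$, $\psi\ge0$, and $0<m<1$; the floor and ceiling reduction that extends the result to other arguments is not reproduced. A brute-force integral along the segment $[0,\varphi+i\psi]$ is included as a cross-check; all names are our own.

```python
import cmath
import math


def simpson_weight(j, n):
    """Composite Simpson weight of node j out of 0..n (n even)."""
    return 1 if j in (0, n) else (4 if j % 2 else 2)


def ellipf_real(phi, m, n=2000):
    """F(phi|m) for real phi, by composite Simpson's rule."""
    h = phi / n
    return h / 3.0 * sum(simpson_weight(j, n) / math.sqrt(1.0 - m * math.sin(j * h) ** 2)
                         for j in range(n + 1))


def ellipf_complex(phi, psi, m):
    """F(phi + i psi | m) for 0 < phi < pi/2, psi >= 0, 0 < m < 1, via [1, 17.4.11]."""
    m1 = 1.0 - m
    cot2 = 1.0 / math.tan(phi) ** 2
    csc2 = 1.0 / math.sin(phi) ** 2
    # x = cot^2(lambda) is the positive root of x^2 - B x - m1 cot^2(phi) = 0.
    B = cot2 + m * math.sinh(psi) ** 2 * csc2 - m1
    x = (B + math.sqrt(B * B + 4.0 * m1 * cot2)) / 2.0
    lam = math.atan(1.0 / math.sqrt(x))
    # m tan^2(mu) = tan^2(phi) cot^2(lambda) - 1; clip rounding below zero.
    tan2_mu = max((math.tan(phi) ** 2 * x - 1.0) / m, 0.0)
    mu = math.atan(math.sqrt(tan2_mu))
    return complex(ellipf_real(lam, m), ellipf_real(mu, m1))


def ellipf_path(z, m, n=2000):
    """Cross-check: integrate d theta / sqrt(1 - m sin^2 theta) along [0, z]."""
    return z / (3.0 * n) * sum(simpson_weight(j, n) / cmath.sqrt(1 - m * cmath.sin(z * j / n) ** 2)
                               for j in range(n + 1))


print(abs(ellipf_complex(0.5, 0.3, 0.5) - ellipf_path(complex(0.5, 0.3), 0.5)))  # tiny
```

The appeal of 17.4.11 is visible in the code: only real-argument integrals are needed, which is what SciPy's `ellipkinc` provides.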

At the beginning of the code, fix the constants , , and as in (2.6). The conformal mapping from the square onto the unit disk can now be written with the following pseudo-code.

| (3.9) | ||||

Finding the inverse mapping requires solving the quadratic equation (3.4). The less common trigonometric functions, such as cot and csc, and their inverses are available in Python in the package SymPy. Note also that the parameter can be chosen to be any small positive number.

| (3.10) | ||||

| #Find the roots of the equation (3.4) | ||||

| #Change of variables taking into account the periodicity ceil to the right | ||||

After writing the functions and with the pseudo-codes (3.9) and (3.10), they can be tested by choosing a random number from the square , computing and , and printing the difference , which should be very close to zero.
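A self-contained version of this round-trip test is sketched below. Because (3.9) and (3.10) are not legible in this copy, it uses our own formulas instead, and neither function is claimed to be the authors' code. The square-to-disk map is taken as $f(z)=\frac{1+i}{\sqrt2}\operatorname{cn}\big(K-\tfrac{(1-i)K}{2}z\,\big|\,\tfrac12\big)$ with $K=K(1/2)$, which we derived from the Schwarz-Christoffel integral $\int_0^\zeta dt/\sqrt{1-t^4}$ (it fixes $0$ and $1$ and sends $1+i$ to $e^{i\pi/4}$). The inverse is evaluated independently by quadrature of that integral, so agreement of the two tests both.

```python
import cmath
import math
import random

M = 0.5  # parameter m = k^2 for the modulus k = 1/sqrt(2)


def ellipk(m):
    """K(m) = pi / (2 AGM(1, sqrt(1 - m))) for 0 <= m < 1."""
    a, b = 1.0, math.sqrt(1.0 - m)
    for _ in range(50):
        a, b = (a + b) / 2.0, math.sqrt(a * b)
        if abs(a - b) <= 1e-15 * a:
            break
    return math.pi / (2.0 * a)


K = ellipk(M)


def jacobi_real(u, m):
    """(sn, cn, dn) of real u by the AGM scale [1, §16.4]."""
    a, b, c = [1.0], math.sqrt(1.0 - m), [math.sqrt(m)]
    while abs(c[-1]) > 1e-15 and len(a) < 40:
        a_prev = a[-1]
        a.append((a_prev + b) / 2.0)
        c.append((a_prev - b) / 2.0)
        b = math.sqrt(a_prev * b)
    phi = 2.0 ** (len(a) - 1) * a[-1] * u
    for j in range(len(a) - 1, 0, -1):
        phi = (phi + math.asin(c[j] / a[j] * math.sin(phi))) / 2.0
    s = math.sin(phi)
    return s, math.cos(phi), math.sqrt(1.0 - m * s * s)


def cn_complex(u, m):
    """cn(x + iy | m) from real-argument values [1, §16.21]."""
    s, c, d = jacobi_real(u.real, m)
    s1, c1, d1 = jacobi_real(u.imag, 1.0 - m)
    return complex(c * c1, -s * d * s1 * d1) / (c1 * c1 + m * s * s * s1 * s1)


def square_to_disk(z):
    """Conformal map of (-1, 1) x (-1, 1) onto the unit disk (our closed form)."""
    return (1 + 1j) / math.sqrt(2) * cn_complex(K - (1 - 1j) * K * z / 2, M)


def disk_to_square(w, n=2000):
    """Inverse map via Simpson quadrature of the Schwarz-Christoffel integral."""
    zeta = (1 - 1j) * w / math.sqrt(2)
    total = 0j
    for j in range(n + 1):
        weight = 1 if j in (0, n) else (4 if j % 2 else 2)
        total += weight / cmath.sqrt(1 - (zeta * j / n) ** 4)
    return (1 + 1j) * math.sqrt(2) / K * zeta * total / (3 * n)


# Round-trip test: pick random points of the square, map them to the disk and back.
rng = random.Random(1)
worst = 0.0
for _ in range(20):
    z = complex(rng.uniform(-0.8, 0.8), rng.uniform(-0.8, 0.8))
    w = square_to_disk(z)
    worst = max(worst, abs(disk_to_square(w) - z))
print(worst)  # should be very close to zero
```

Points very close to the corners are avoided here because the quadrature in `disk_to_square` loses accuracy near the corresponding singularities of the integrand.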

An image can be mapped conformally onto a disk with the function squareToDisk presented in (3.11). Suppose that there is a square matrix called img, which can be read as a square-shaped grayscale image by choosing the colour of each pixel according to the corresponding element of img. Let be the number of rows or columns in the matrix img, imgH a vector containing evenly spaced points in the interval , and the distance between two adjacent points in imgH. Then we initialize the new image img by creating a zero matrix of the same size as img. After that, we create a loop that goes through each element of img and expresses it as a point with the help of the vector imgH. If the point belongs to the unit disk, we use the inverse mapping to find the point in the square that becomes when the domain is mapped conformally onto the unit disk with the mapping . For this point , we find such points and that , which also gives us the four closest points to that correspond to pixel locations of the original image matrix img, and the distances between and these locations. Finally, we compute the value of the pixel at the point as a weighted mean of the four surrounding pixels and, since , we obtain the value of the pixel at the point in the unit disk. In the pseudo-code below, refers to the th element of the vector , as the indexing starts from 0.

| (3.11) | ||||

The function in (3.11) returns an image matrix in which the original image has been mapped onto the largest disk that fits inside the square-shaped image matrix, and all values outside this disk are zeros. Note that this function can also be extended to colour images in RGB or a similar format by computing the weighted means separately for each colour channel at the end of the loop. Similarly, by replacing the inverse function with either the Möbius transformation of (2.8) or the rotation of (2.9), we can map the input image so that only the part inside the disk is transformed. To create the function that conformally maps the interior of this disk in the square-shaped image matrix onto the whole matrix, we just need to remove the condition and replace by in the code (3.11). Alternatively, we can use, for instance, the composed mapping to obtain the image of Figure 1(E).
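The image-mapping loop described above can be sketched in pure Python as follows. The sketch assumes that the row index corresponds to $y$ and the column index to $x$, computes the weighted mean of the four surrounding pixels by bilinear interpolation (our choice of weights), and takes the pointwise map as a parameter `inverse_map`, so the same routine covers the inverse square-to-disk map, a Möbius transformation, or a rotation. The function and argument names are hypothetical.

```python
import math


def warp_disk(img, inverse_map):
    """Backward-map a square grayscale image given as n rows of n floats.

    Each output pixel at a point z of the grid on [-1, 1]^2 with |z| < 1 receives
    the bilinearly interpolated input value at inverse_map(z); pixels outside the
    unit disk stay zero.
    """
    n = len(img)
    h = 2.0 / (n - 1)                              # spacing of the grid imgH
    img_h = [-1.0 + j * h for j in range(n)]
    out = [[0.0] * n for _ in range(n)]
    for row in range(n):
        for col in range(n):
            z = complex(img_h[col], img_h[row])    # column -> x, row -> y
            if abs(z) >= 1.0:
                continue
            p = inverse_map(z)
            # Lower-left corner (j, k) of the grid cell containing p, kept on the grid.
            j = min(max(math.floor((p.real + 1.0) / h), 0), n - 2)
            k = min(max(math.floor((p.imag + 1.0) / h), 0), n - 2)
            tx = min(max((p.real - img_h[j]) / h, 0.0), 1.0)
            ty = min(max((p.imag - img_h[k]) / h, 0.0), 1.0)
            out[row][col] = ((1 - tx) * (1 - ty) * img[k][j] + tx * (1 - ty) * img[k][j + 1]
                             + (1 - tx) * ty * img[k + 1][j] + tx * ty * img[k + 1][j + 1])
    return out


# Example: a quarter turn of the disk, pulled back pixel by pixel (exact on this grid).
img = [[float(5 * r + c) for c in range(5)] for r in range(5)]
turned = warp_disk(img, lambda z: complex(z.imag, -z.real))
```

For colour images, the same interpolation is applied to each channel; dropping the `abs(z) >= 1.0` test and passing the square-to-disk map itself gives the disk-to-square direction.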

4. Experiment

Here, we build a CNN for predicting how many small black disks an otherwise white image contains. We use Python (version: 3.9.9) [19] with packages TensorFlow (version: 2.7.0) [2], Keras (version: 2.7.0) [6], and SciPy [20] (version: 1.7.3).


4.1. Data

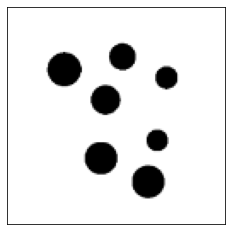

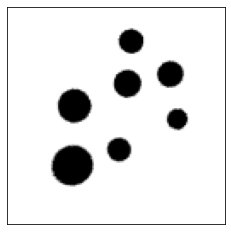

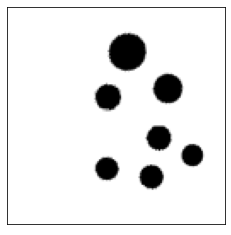

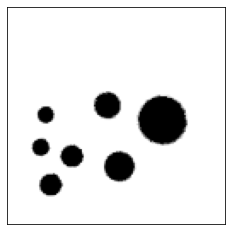

The data set consisted of images, each containing from one to ten black disks on a white background, together with a target variable giving the correct number of disks in each image. For a number , a single image was created by first initializing a null matrix corresponding to the domain , choosing centers such that and radii , and changing each element of the matrix from 0 to 1 if and only if the distance was less than for some . Of the 170 images, 10 were included in the training set and the remaining 160 in the test set. An augmented version of the training set with 40 images was then created by adding three versions of each image in the original training set, obtained by mapping them with the composed mapping , where are as in (2.5)-(2.9) for and , and , and and , as shown in Figure 2. We also created another augmented training set of 40 images by using rotations of degrees, . The final images were scaled to the size of pixels.
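The generation of such a data set can be sketched as follows. The grid size, the ranges of the centres and radii, the rule that keeps disks apart, and the way the numbers of disks are assigned to the 170 images are all hypothetical placeholders, since the exact values are not legible in this copy; with disks kept apart, the label equals the number of disks drawn.

```python
import random


def make_disk_image(n_disks, size=64, r_range=(0.06, 0.12), seed=None):
    """Hypothetical generator of one image with n_disks disks.

    Pixels of a size x size grid on [-1, 1]^2 are set from 0 to 1 inside the
    disks. Centres and radii are drawn at random (placeholder ranges) and a disk
    is rejected if it would overlap another one or come near the image border.
    """
    rng = random.Random(seed)
    disks = []
    while len(disks) < n_disks:
        r = rng.uniform(*r_range)
        cx, cy = rng.uniform(-0.9 + r, 0.9 - r), rng.uniform(-0.9 + r, 0.9 - r)
        if all((cx - x) ** 2 + (cy - y) ** 2 > (r + s + 0.02) ** 2 for x, y, s in disks):
            disks.append((cx, cy, r))
    h = 2.0 / (size - 1)
    grid = [-1.0 + j * h for j in range(size)]
    img = [[1.0 if any((x - cx) ** 2 + (y - cy) ** 2 < r * r for cx, cy, r in disks) else 0.0
            for x in grid] for y in grid]
    return img, disks


# 170 labelled images with one to ten disks each, then a 10 / 160 split.
data = [make_disk_image(1 + i % 10, size=32, seed=i) for i in range(170)]
labels = [len(disks) for _, disks in data]
train, test = data[:10], data[10:]
```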

4.2. Convolutional neural network

The CNN used here is the same as in [10]. It is based on the U-Net architecture introduced in [18], which is commonly used in the segmentation of medical images. While a typical U-Net contains first a contracting path and then an expanding path to perform the segmentation, our CNN only has the contracting path, after which it returns a single numeric value. The contracting path consists of four sequences, each of which contains two convolutions and one pooling operation, and these are followed by four dense layers. The activation function of the last layer is linear and, for all the other layers, the ReLU function is used. The CNN was trained for 130 epochs on the non-augmented data set and on both augmented data sets, using Adam as the optimizer with a learning rate of 0.001, the mean squared error (MSE) as the loss function, and a validation split of 30%.

4.3. Methods

The CNN is first initialized, trained with the non-augmented data set, and used to predict the values of the test set. Then we compute the squared error between the predicted number of disks and the real number of disks for each image of the test set. After that, the CNN is re-initialized to its initial state and the experiment is re-run by using the augmented training data sets instead. The three methods are compared by computing the MSE as the mean value of the squared errors and, to see if the differences in these means are statistically significant or not, the Student’s t-test is performed for the distributions of the squared errors.
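The comparison can be sketched with the Python standard library. Which variant of the t-test was used is not stated; the sketch assumes the two-sample Student's test with pooled variance, for which `scipy.stats.ttest_ind(x, y)` (default `equal_var=True`) returns the same statistic together with a p-value, while `scipy.stats.ttest_rel` would be the paired alternative, since both models are evaluated on the same test images. The numbers below are toy values, not the paper's results.

```python
import math
from statistics import mean, variance


def squared_errors(pred, true):
    """Per-image squared errors between predicted and real numbers of disks."""
    return [(p - t) ** 2 for p, t in zip(pred, true)]


def student_t(x, y):
    """Two-sample Student's t statistic with pooled (equal) variances."""
    nx, ny = len(x), len(y)
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (mean(x) - mean(y)) / math.sqrt(pooled * (1 / nx + 1 / ny))


# Toy predictions for four test images from two hypothetical models.
true = [3, 5, 7, 2]
err_a = squared_errors([3.5, 4.0, 8.0, 2.5], true)
err_b = squared_errors([2.0, 6.5, 5.5, 3.0], true)
print(mean(err_a), mean(err_b), student_t(err_a, err_b))  # MSE of each model, t statistic
```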

4.4. Results

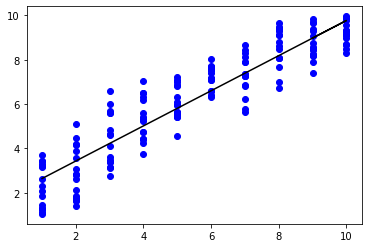

The MSE of the predictions of the test set was 1.742 when the CNN was trained on the data augmented with conformal mappings. The corresponding MSE was 2.381 for the non-augmented data and 2.095 for the data augmented with rotations only. According to the Student's t-test between the squared errors of the predictions of the non-augmented and the conformally augmented model, the difference between the MSEs was statistically significant (p-value=0.0360). However, the difference between the two augmentation types was not statistically significant (p-value=0.196). Pearson's correlation coefficient between the predictions on the test set and the real numbers of disks was 0.884 for the non-augmented CNN, 0.883 for the rotation augmentation, and 0.924 for the conformal mapping augmentation (Fig. 3).

5. Discussion

Firstly, it must be noted that the experiment above is a very simplified example and that better results could be obtained by building a suitable algorithm instead of using a CNN. However, there are numerous practical reasons why a CNN that recognizes certain unusual disk-shaped areas is useful. In fact, this experiment was inspired by the CNNs detecting tumors from tomography images of cancer patients in [10, 13, 17].

It should also be taken into account that this method is quite complicated and designed for situations where the typical transformations cannot be used. For instance, if some of the disks in our experiment were so close to the edges of the images that they would be cropped completely or partially when the square-shaped images are directly rotated, so that recounting them is not possible, using this new method rather than the usual rotation is justified. The rotation used as a comparison augmentation method in our experiment did not crop the images but instead added more background. Given how commonly CNNs are used nowadays, there are likely many practical cases where information could be lost if the typical rotation is used. Note that these mappings can also be extended to map any rectangle-shaped image onto a disk or vice versa, because the rectangle can easily be stretched into a square.

Another reason for using this method is to utilize the properties of the Möbius transformation in particular. Because of this mapping, the images change in more complicated ways under this augmentation method than under simple rotations. We can see that the right-most disk in Figure 2(D) is larger than any of the disks in the original image in Figure 2(A). By increasing the absolute value of the point used to define the Möbius transformation, the differences between the images before and after the composed mapping also increase. Still, the disks remain nearly circular: Möbius transformations map circles onto circles or lines, the conformal mappings between the square and the disk preserve angles so that small disks are only slightly distorted, and the disks stay inside the edges of the image. The use of Möbius transformations in image augmentation has also been studied by Zhou et al. [21], but they did not use other conformal mappings, and their augmented images therefore contain much empty background outside the original photographs.
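That a Möbius transformation keeps a disk circular while changing its size can be checked numerically. The sketch maps points of a circle with $T_a(z)=(z-a)/(1-\bar a z)$ (the standard disk automorphism, assumed here), fits the circle through three of the image points, and measures how far the other images deviate from it. The disk and the value of $a$ are arbitrary illustrative choices; with $a$ placed near the disk, the disk grows.

```python
import cmath


def mobius(z, a):
    """T_a(z) = (z - a) / (1 - conj(a) z)."""
    return (z - a) / (1 - a.conjugate() * z)


def circumcircle(p, q, r):
    """Centre and radius of the circle through three complex points."""
    ax, ay, bx, by, cx, cy = p.real, p.imag, q.real, q.imag, r.real, r.imag
    d = 2 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    sa, sb, sc = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    centre = complex((sa * (by - cy) + sb * (cy - ay) + sc * (ay - by)) / d,
                     (sa * (cx - bx) + sb * (ax - cx) + sc * (bx - ax)) / d)
    return centre, abs(p - centre)


a = 0.4 + 0.1j                        # illustrative Möbius parameter
centre, radius = 0.6 + 0.2j, 0.15     # a small disk inside the unit disk
images = [mobius(centre + radius * cmath.exp(2j * cmath.pi * k / 90), a) for k in range(90)]
c2, r2 = circumcircle(images[0], images[30], images[60])
deviation = max(abs(abs(w - c2) - r2) for w in images)
print(r2 / radius, deviation)   # size ratio of the image disk, and a tiny deviation
```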

One alternative method is generative adversarial network (GAN) augmentation, first introduced in 2014 by Goodfellow et al. [9]. GANs are a class of neural networks that generate synthetic samples resembling the real images of the original data set. However, it can be difficult to predict what sort of augmented data a GAN produces, whereas conformal mappings only distort the images. This means that augmentation based on conformal mappings preserves the number of disks in the images of the data set used here, while a GAN might change it. Furthermore, GANs require a certain amount of data before they can be trained for this task, whereas the amount of data does not affect how the images change under the conformal mappings. Our method of augmentation could also be compared with procedures that use prior information about the data distribution in augmentation [5, 15].

6. Conclusion

We used conformal mappings to create a new way to augment the image data of a CNN and, according to our results, this method works and, in our experiment, produced significantly better predictions than a CNN trained with the non-augmented data set.

Data and code availability statement. Available at https://github.com/rklen/Conf_map_augmentation

Conflict of interest statement. On behalf of all authors, the corresponding author states that there is no conflict of interest.

Funding. The first author was financially supported by the Finnish Culture Foundation and Magnus Ehrnrooth Foundation.

Acknowledgments. The authors are grateful to the referees for their valuable comments.

References

- [1] M. Abramowitz and I.A. Stegun, Handbook of mathematical functions, 10th ed., Dover, New York, 1972.

- [2] M. Abadi, A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G.S. Corrado, …, X. Zheng. (2015). TensorFlow: Large-scale machine learning on heterogeneous systems.

- [3] G. D. Anderson, M. K. Vamanamurthy, M. Vuorinen, Conformal invariants, inequalities and quasiconformal maps. Canadian Mathematical Society Series of Monographs and Advanced Texts. A Wiley-Interscience Publication. J. Wiley, 1997.

- [4] H. Bateman, A. Erdelyi, Higher transcendental functions. Vol. 1, 1953.

- [5] A. Botev, M. Bauer and S. De, Regularising for invariance to data augmentation improves supervised learning. arXiv: 2203.03304.

- [6] F. Chollet et al. (2015). Keras. GitHub.

- [7] H. Dalichau, Conformal Mapping and Elliptic Functions. München, http://dateiena.harald-dalichau.de/spcm/pref11.pdf (2006).

- [8] T.A. Driscoll, L.N. Trefethen, Schwarz-Christoffel Mapping. Cambridge Monographs on Applied and Computational Mathematics. Cambridge University Press, 2002.

- [9] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, Y. Bengio. (2014). Generative adversarial nets. In: Advances in neural information processing systems. pp. 2672–80

- [10] H. Hellström, J. Liedes, O. Rainio, S. Malaspina, J. Kemppainen, R. Klén. (2023). Classification of head and neck cancer from PET images using convolutional neural networks. Sci Rep 13, 10528.

- [11] H. Kober, Dictionary of conformal representations. Vol. 2. Dover, New York, (1957).

- [12] P.K. Kythe, Handbook of conformal mappings and applications, CRC Press, 2019.

- [13] J. Liedes, H. Hellström, O. Rainio, S. Murtojärvi, S. Malaspina, J. Hirvonen, R. Klén, J. Kemppainen. Automatic segmentation of head and neck cancer from PET-MRI data using deep learning. Journal of Medical and Biological Engineering (to appear).

- [14] I. Moiseev, Elliptic functions for Matlab and Octave, GitHub repository, doi: http://dx.doi.org/10.5281/zenodo.48264, 2008.

- [15] P. Niyogi, F. Girosi and T. Poggio, Incorporating prior information in machine learning by creating virtual examples. Proceedings of the IEEE, vol. 86, no. 11, 1998.

- [16] F.W.J. Olver, D.W. Lozier, R.F. Boisvert, and C.W. Clark, NIST Handbook of Mathematical Functions, Cambridge University Press, Cambridge, 2010.

- [17] O. Rainio, J. Lahti, M. Anttinen, O. Ettala, M. Seppänen, P. Boström, J. Kemppainen, R. Klén. New method of using a convolutional neural network for 2D intraprostatic tumor segmentation from PET images. Research on Biomedical Engineering (to appear).

- [18] O. Ronneberger, P. Fischer, T. Brox. (2015). U-Net: Convolutional Networks for Biomedical Image Segmentation (pp. 234–241). In: Navab N., Hornegger J., Wells W., Frangi A. (eds) Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Springer, Cham.

- [19] G. van Rossum, F.L. Drake (2009). Python 3 Reference Manual. CreateSpace.

- [20] P. Virtanen, R. Gommers, T.E. Oliphant, M. Haberland, T. Reddy, D. Cournapeau, E. Burovski, P. Peterson, W. Weckesser, J. Bright, S.J. van der Walt, M. Brett, J. Wilson, K. Jarrod Millman, N. Mayorov, A.R.J. Nelson, E. Jones, R. Kern, E. Larson, C.J. Carey, İ. Polat, Y. Feng, E.W. Moore, J. VanderPlas, D. Laxalde, J. Perktold, R. Cimrman, I. Henriksen, E.A. Quintero, C.R. Harris, A.M. Archibald, A.H. Ribeiro, F. Pedregosa, P. van Mulbregt, and SciPy 1.0 Contributors. (2020) SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nature Methods, 17(3), 261-272.

- [21] S. Zhou, J. Zhang, H. Jiang, T. Lundh and A.Y. Ng. (2021). Data augmentation with Mobius transformations. Mach. Learn.: Sci. Technol. 2, 2, 025016.