
Impact of signal-to-noise ratio and bandwidth on graph Laplacian spectrum from high-dimensional noisy point cloud

Abstract.

We systematically study the spectrum of the kernel-based graph Laplacian (GL) constructed from a high-dimensional and noisy random point cloud in the nonnull setup. The problem is motivated by the model in which the clean signal is sampled from a manifold that is embedded in a low-dimensional Euclidean subspace and corrupted by high-dimensional noise. We quantify how the signal and noise interact over different regions of signal-to-noise ratio (SNR), and report the resulting peculiar spectral behavior of the GL. In addition, we explore the impact of the chosen kernel bandwidth on the spectrum of the GL over different regions of SNR, which leads to an adaptive choice of kernel bandwidth that coincides with the common practice in real data. This result paves the way to a theoretical understanding of how practitioners apply the GL when the dataset is noisy.

1. Introduction

Spectral algorithms are popular and have been widely applied in unsupervised machine learning; examples include eigenmap [5], locally linear embedding (LLE) [54], and diffusion map (DM) [17], to name but a few. A common ground of these algorithms is the graph Laplacian (GL) and its spectral decomposition, which have been extensively discussed in spectral graph theory [16]. Owing to its wide practical applications, there are rich theoretical results on the spectral behavior of the GL under the manifold model when the point cloud is clean, for example, the pointwise convergence [5, 36, 35, 57], the convergence without rate [6, 63, 58], and the convergence with rate [34, 25]. We refer readers to the cited literature therein for more information. Also see, for example, [41, 12] for more relevant results. However, to our knowledge, limited results are known about the GL spectrum when the dataset is contaminated by noise [59, 26, 29, 55], particularly when the noise is high-dimensional [26, 29, 55]. Specifically, in the high-dimensional setup, [26, 29] report controls on the operator norm of the difference between the clean GL and the noisy GL in some specific signal-to-noise ratio (SNR) regions. However, a finer and more complete description of the spectrum is still lacking. The main focus of this work is extending existing results so that the spectral behavior of the GL is better depicted when the dataset is contaminated by high-dimensional noise.

GL is not uniquely defined, and there are several possible constructions from a given point cloud. For example, it can be constructed by the idea of local barycentric coordinates like that in LLE [64] or by taking landmarks like that in Roseland [55], the kernel can be asymmetric [17], the metric can be non-Euclidean [60], etc. In this paper, we focus ourselves on a specific setup; that is, GL is constructed from a random point cloud via a symmetric kernel with the usual Euclidean metric. To be more specific, from , construct the affinity matrix (or kernel matrix) by

| (1.1) |

where is the chosen parameter and is the chosen bandwidth. In other words, we focus on kernels of the exponential type, that is, , to simplify the discussion. Then, define the transition matrix

| (1.2) |

where is the degree matrix, which is a diagonal matrix with diagonal entries defined as

| (1.3) |

Note that is row-stochastic. The (normalized) GL is defined as

Since and are related by an isotropic shift and universal scaling, we focus on studying the spectral distributions of and in the rest of the paper. With the eigendecomposition of , we could proceed with several data analysis tasks. For example, spectral clustering is carried out by combining the top few non-trivial eigenvectors with k-means [62], and embedding a dataset into a low-dimensional Euclidean space by eigenvectors and/or eigenvalues reduces the dataset dimension [5, 17]. Thus, the spectral behavior of is the key to fully understanding these algorithms.
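The construction in (1.1)-(1.3) can be sketched numerically as follows. This is a minimal NumPy sketch rather than the authors' code. It assumes that the exponential-type affinity has the form exp(-υ‖x_i − x_j‖²/h), and the GL normalization `(I - A) / h` is likewise an assumption, chosen only so that the GL and the transition matrix differ by an isotropic shift and a scaling. All function names are illustrative.

```python
import numpy as np

def affinity_matrix(X, h, upsilon=1.0):
    """Exponential-type affinity W[i, j] = exp(-upsilon * ||x_i - x_j||^2 / h).

    X has shape (n, p), one point per row. The exact form of the kernel
    is an assumption made for this sketch.
    """
    sq_norms = np.sum(X * X, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (X @ X.T)
    np.maximum(sq_dists, 0.0, out=sq_dists)  # clip tiny negative round-off
    np.fill_diagonal(sq_dists, 0.0)          # a point is at distance 0 from itself
    return np.exp(-upsilon * sq_dists / h)

def transition_matrix(W):
    """Row-normalize W by the degrees (row sums), giving a row-stochastic matrix."""
    degrees = W.sum(axis=1)
    return W / degrees[:, None]

def graph_laplacian(A, h):
    """ASSUMED normalization: (I - A) / h, a shift and scaling of A."""
    return (np.eye(A.shape[0]) - A) / h
```

Because the transition matrix is row-stochastic, the constant vector is an eigenvector with eigenvalue 1, which is why only the non-trivial eigenvectors are used in clustering and embedding.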

1.1. Mathematical setup

We now specify the high-dimensional noisy model and the problem we are concerned with in this work. Suppose is independent and identically distributed (i.i.d.) following a sub-Gaussian random vector

| (1.4) |

where is a random vector with mean 0 and , , and is sub-Gaussian with independent entries,

| (1.5) |

We also assume that and are independent. As a result, is a sub-Gaussian random vector with mean 0 and covariance

| (1.6) |

where . In this model, and represent the signal and noise part of the point cloud respectively. To simplify the discussion, we also assume that are continuous random variables. Denote and .

We adopt the high-dimensional setting and assume that

| (1.7) |

for some constant ; in other words, we focus on the large and large setup. We also assume that is independent of . Note that (1.4) is closely related to the commonly applied spiked covariance model [39].

Next, we consider the following setup to control the signal strength:

| (1.8) |

Thus, represents the signal strength in the -th component of the signal. On the other hand, the total noise in the dataset is , and we call the signal to noise ratio (SNR) associated with the -th component of the signal.
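To make the model (1.4)-(1.8) concrete, the following sketch draws one instance of it. Several choices are assumptions made purely for illustration: the signal and noise are Gaussian (one admissible sub-Gaussian case), the noise has unit variance in every coordinate, the signal occupies the first d coordinates, and the signal strengths are set to n^α for user-chosen exponents. The names `sample_spiked_cloud` and `alphas` are hypothetical.

```python
import numpy as np

def sample_spiked_cloud(n, p, alphas, rng):
    """Draw n points in R^p as signal + noise.

    ASSUMPTIONS (for illustration only): Gaussian signal and noise,
    unit-variance noise, signal supported on the first d coordinates,
    and signal strengths lambda_i = n ** alpha_i.
    """
    alphas = np.asarray(alphas, dtype=float)
    d = alphas.size
    lambdas = float(n) ** alphas                # signal variances
    Z = np.zeros((n, p))                        # clean signal, one point per row
    Z[:, :d] = rng.standard_normal((n, d)) * np.sqrt(lambdas)
    Y = rng.standard_normal((n, p))             # noise, independent of the signal
    return Z + Y, Z, Y, lambdas

rng = np.random.default_rng(0)
X, Z, Y, lambdas = sample_spiked_cloud(n=200, p=100, alphas=[1.5], rng=rng)
snr = lambdas / X.shape[1]  # signal strength relative to the total noise level p
```

With unit-variance noise, the total noise level is p, so the ratio `lambdas / p` plays the role of the SNR per signal component in this sketch.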

We denote the matrix such that

| (1.9) |

Note that compared with defined in (1.1), is the affinity matrix constructed from the clean signal . Moreover, let be the affinity matrix associated with which represents the noise part. Their associated transition matrices and are defined similarly as in (1.2). We will show in part (1) of Theorem 2.7, part (2) of Theorem 3.1 and Corollary 3.2 that under (1.4), when the SNR is above some threshold and the bandwidth is properly chosen, we can separate the signal and noise parts in the sense that is close to

| (1.10) |

with high probability, where stands for the Hadamard product. The closeness is quantified using the normalized operator norm; that is, with high probability. In fact, using the definition of below in (2.15), we immediately see that in general we have When the SNR is relatively large and the bandwidth is chosen properly, we show that with high probability , and Lemma A.1 leads to the claim.
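The separation of signal and noise rests on an elementary identity. For a point that is the sum of a signal part and a noise part, expanding the squared distance gives a signal term, a noise term, and a cross term, so an exponential-type affinity factors exactly into a Hadamard product of three matrices. The sketch below checks this numerically. It assumes the affinity form exp(-υ‖·‖²/h), and the name `W_cross` for the cross-term factor is hypothetical and may not match the notation of (2.15).

```python
import numpy as np

def pairwise_diffs(X):
    """Return the (n, n, p) array of differences x_i - x_j."""
    return X[:, None, :] - X[None, :, :]

def hadamard_factors(Z, Y, h, upsilon=1.0):
    """Return the noisy affinity and its signal, noise, and cross factors."""
    dZ, dY, dX = pairwise_diffs(Z), pairwise_diffs(Y), pairwise_diffs(Z + Y)
    sq_signal = np.einsum("ijk,ijk->ij", dZ, dZ)
    sq_noise = np.einsum("ijk,ijk->ij", dY, dY)
    cross = np.einsum("ijk,ijk->ij", dZ, dY)
    sq_noisy = np.einsum("ijk,ijk->ij", dX, dX)
    W_noisy = np.exp(-upsilon * sq_noisy / h)
    W_signal = np.exp(-upsilon * sq_signal / h)
    W_noise = np.exp(-upsilon * sq_noise / h)
    W_cross = np.exp(-2.0 * upsilon * cross / h)  # hypothetical name
    return W_noisy, W_signal, W_noise, W_cross

rng = np.random.default_rng(0)
n, p = 30, 40
Z = np.zeros((n, p))
Z[:, 0] = 10.0 * rng.standard_normal(n)   # one-dimensional signal
Y = rng.standard_normal((n, p))           # noise
W_noisy, W_signal, W_noise, W_cross = hadamard_factors(Z, Y, h=float(p))
factorization_error = np.max(np.abs(W_noisy - W_signal * W_noise * W_cross))
```

Under this identity, the noisy affinity matrix is close to the Hadamard product of the signal and noise affinity matrices exactly when the entrywise product of that Hadamard product with the cross factor minus the all-ones matrix is small.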

The main problem we ask in this work is studying the relationship between the spectra of and under different SNR regions and bandwidths; that is, how do noise and chosen bandwidth impact the spectrum of the affinity matrix and how does the noisy spectrum deviate from that of the clean affinity matrix. By combining existing understandings of under suitable models, like manifolds [34, 25], we obtain a finer understanding of the commonly applied GL.

1.2. Relationship with the manifold model

We claim that this seemingly trivial spiked covariance model (1.4) overlaps with the commonly considered nonlinear manifold model. Consider the case that the manifold is embedded into a subspace of fixed dimension in . With some mild assumptions about the manifold, and the fact that the Euclidean distance of is invariant to rotation, the noisy manifold model can be studied by the spiked covariance model satisfying (1.4)-(1.8). We defer details to Section A.1 to avoid distraction. We emphasize, however, that this does not mean that we could understand the manifold structure by studying the spiked covariance model alone. The problem we study in this paper is the relationship between the noisy and clean affinity matrices; that is, how the noise impacts the GL. The problem of exploring the manifold structure from a clean dataset via the GL is a different one; see, for example, [35, 34, 25]. Therefore, by studying the relationship between the noisy GL and clean GL via studying the model where the data is sampled from a sum of two sub-Gaussian random variables, we could further explore the manifold structure from a noisy dataset.

1.3. Some related works

The focus of the current paper is to study the GL spectrum under the nonnull case for the point cloud described in (1.4), and connect it to the GL spectrum under the null case; that is, when the GL is constructed from the pure noise point cloud . The eigenvalues of , a high-dimensional Euclidean distance kernel random matrix, in the null case have been studied in several works. In the pioneering work [27], the author studied the spectrum of under the null case assuming the bandwidth , and showed that the complicated kernel matrix can be well approximated by a low rank perturbed Gram matrix; see [27, Theorem 2.2] or (A.19) below for more details. It was concluded that studying in the null case is closely related to studying the principal component analysis (PCA) of the dataset with a low rank perturbation. The results in [27] were extended to more general kernels beyond the exponential kernel function, and can be anisotropic with some moment assumptions; for example, more general kernels with Gaussian noise were reported in [15], the Gaussian assumption was removed in [22, 11], and the convergence rates of individual eigenvalues of were provided in [18].

However, much less is known for the nonnull case (1.4). To our knowledge, the most relevant works are [26, 29]. By assuming and , in [26] the author showed that could be well approximated by , which connected the noisy observation and the clean signal. In fact, the results of [26, Theorem 2.1] were established for a class of smooth kernels and the noise could be anisotropic. In a recent work [1], the authors established the concentration inequality of and used it to study spectral clustering.

We mention that other types of kernel random matrices related to (1.1) have also been studied in the literature. For example, the inner-product type kernel random matrices of the form where is some kernel function, has drawn attention among researchers. In [27], the author showed that under some regularity assumptions, the inner-product kernel matrices could also be approximated by a Gram matrix with low rank perturbations, which had been generalized in [15, 22, 11, 32, 43, 52]. The other example is the Euclidean random matrices of the form arising from physics, where is some measurable function. Especially, the empirical spectral distribution has been extensively studied in, for example, [47, 10, 38], among others.

Finally, our present work is also in the line of research regarding the robustness of GL. There have been efforts in different settings along this direction. For example, the perturbation of the eigenvectors of GL was studied in [46], the consistency and robustness of spectral clustering were analyzed in [1, 63, 9], and the robustness of DM was studied in [59, 55], among others.

1.4. An overview of our results

Our main contribution is a systematic treatment of the spectrum of the GL constructed from a high-dimensional random point cloud corrupted by high-dimensional noise; that is, we consider the nonnull setup (1.4). We establish a connection between the spectra of noisy and clean affinity matrices with different choices of bandwidth and different SNRs by extensively expanding the results reported in [26] with tools from random matrix theory. More specifically, we allow the signal strength to diverge with so that the relative strength of signal and noise is captured, and characterize the spectral distribution of the noisy affinity matrix by studying how the signal and noise interact and how different bandwidths impact the spectra of and . Motivated by our theoretical results, we propose an adaptive bandwidth selection algorithm with theoretical support. The proposed method utilizes a certain quantile of the pairwise distances as in (3.6), where the quantile can be selected using our proposed Algorithm 1. We provide detailed results when in (1.4), and discuss how to extend the results to and why some cases are challenging when . Our result, when combined with existing manifold learning results like [35, 34, 25] and others, paves the way to a better understanding of how GL-based algorithms behave in practice, and provides theoretical support for the commonly applied bandwidth scheme, when is distributed on a low-dimensional, compact and smooth manifold.

We now provide a heuristic explanation of our results assuming and when . In Section 2, when we show that the noisy kernel affinity matrix provides limited useful information for the clean affinity matrix in (1.9). Specifically, similar to the null setting, can be well approximated by a Gram matrix with a finite rank perturbation; see Section 2.2 for more details. When becomes closer to with some universal scaling and isotropic shift (c.f. (2.14)). Note that some related results have been established for in [26]. We show that the convergence rate is adaptive to , and provide a quantification of how eigenvalues of the noisy affinity matrix converge. We mention that when , the classic bandwidth choice needs to be modified to reflect the underlying signal structure. Specifically, if and , we have . These results are stated in Theorem 2.7. Similar results hold for the transition matrix , and they are reported in Section 2.4.

Motivated by the fact that becomes trivial when is large, in Section 3, we focus on the case When the results are analogous to the setting When the spectrum of will be dramatically different. Specifically, when , is close to with some universal scaling and isotropic shift (c.f. (3.1)), and when is close to without any scaling and shift. Moreover, besides the top eigenvalues of , the other eigenvalues are trivial; see Theorem 3.1 for more details.

In practice, is unknown and not easy to estimate, especially when are sampled from a nonlinear geometric object. For practical implementation, we propose a bandwidth selection algorithm in Section 3.2. It turns out that the proposed bandwidth selection algorithm can bypass the challenge of estimating , and the result coincides with that determined by the ad hoc bandwidth selection method commonly applied by practitioners; see, for example, [56, 44], among many others. Note that the bandwidth issue is also discussed in [1]. To our knowledge, our result is the first step toward a theoretical understanding of that common practice.
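The common practice referred to here, setting the bandwidth to a quantile of the pairwise distances, can be sketched as follows. This is only an illustration under stated assumptions: the quantile level `omega` is supplied by the user rather than selected by Algorithm 1, and the quantile is taken over squared distances so that the bandwidth lives on the same scale as the exponent of an exponential-type kernel. The function name is hypothetical.

```python
import numpy as np

def quantile_bandwidth(X, omega):
    """Return the omega-quantile of squared pairwise distances over pairs i < j.

    ASSUMPTIONS: omega in (0, 1) is user-chosen (not Algorithm 1), and
    squared distances are used so that h matches the scale of
    ||x_i - x_j||^2 in an exponential-type kernel.
    """
    sq_norms = np.sum(X * X, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (X @ X.T)
    upper = np.triu_indices(X.shape[0], k=1)     # each unordered pair once
    return float(np.quantile(np.maximum(sq_dists[upper], 0.0), omega))

rng = np.random.default_rng(0)
X = rng.standard_normal((100, 50))
h = quantile_bandwidth(X, omega=0.5)   # median squared distance
```

Since the quantile is computed from the observed noisy points only, this choice requires no estimate of the signal strength, which is the practical appeal noted in the text.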

Finally, we point out the technical ingredients of our proof. We focus on the discussion of the bandwidth and take as an example. First, when is bounded, i.e., the spectrum of has been studied in [27] using an entrywise expansion to the order of two (with a third order error). When the above strategy can be adapted straightforwardly with some modifications. When we need to expand the entries to a higher order depending on . With this expansion, we show that except for the Gram matrix, the other parts are either fixed rank or negligible; see Sections B.1 and B.2 for more details. Second, when the entrywise expansion strategy fails and we need to conduct a more careful analysis. Particularly, thanks to the Hadamard product representation of in (1.10), we need to analyze the spectral norm of carefully; see Lemma A.10, which is of independent interest. Third, to explore the number of informative eigenfunctions, we need to investigate the individual eigenvalues of using Mehler’s formula (c.f. (A.32)); see Section B.3 for more details.

The paper is organized in the following way. The main results are described in Section 2 for the bandwidth and in Section 3 for the bandwidth and an adaptive bandwidth selection algorithm. The numerical studies are reported in Section 4. The paper ends with the discussion and conclusion in Section 5. The background and necessary results for the proof are listed in Section A and the proofs of the main results are given in Sections B and C.

Conventions. We systematically use the following notations. For a smooth function , denote to be the -th derivative of For two sequences and depending on the notation means that for some constant and means that for some positive sequence as We also use the notation if and

2. Main results (I): classic bandwidth choice

In this section, we state the main results regarding the spectra of and when the bandwidth satisfies For definiteness, without loss of generality, we assume that Such a choice has appeared in many works in kernel methods and manifold learning, e.g., [27, 18, 22, 42, 28, 29]. Since our focus is the nonlinear interaction of the signal and noise in the kernel method, in what follows, we focus on reporting the results for and omit the subscripts in (1.8). We refer the readers to Remark 2.9 and Section A.7.2 below for a discussion on the setting when

2.1. Some definitions

We start with introducing the notion of stochastic domination [30]. It makes precise statements of the form “ is bounded with high probability by up to small powers of ”.

Definition 2.1 (Stochastic domination).

Let

be two families of nonnegative random variables, where is a possibly -dependent parameter set. We say that is stochastically dominated by , uniformly in the parameter , if for all small and large , there exists so that we have

for a sufficiently large . We interchangeably use the notation or if is stochastically dominated by , uniformly in , when there is no danger of confusion. In addition, we say that an -dependent event holds with high probability if for a , there exists so that

when

For any constant denote to be the shifting operator that shifts a probability measure defined on by ; that is

| (2.1) |

where is a measurable subset. Till the end of the paper, for any symmetric matrix the eigenvalues are ordered in the decreasing fashion so that

Definition 2.2.

For a given probability measure and , define the -th typical location of as ; that is,

| (2.2) |

where . is also called the -quantile of .

Let be the data matrix associated with the point cloud ; that is, the -th column is . Denote the empirical spectral distribution (ESD) of as

Here, stands for the standard deviation of the scaled noise . It is well-known that in the high-dimensional region (1.7), has the same asymptotic properties [40] as the so-called Marchenko-Pastur (MP) law [45], denoted as , satisfying

| (2.3) |

where is the indicator function and when and when ,

| (2.4) |

and .
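For readers who wish to visualize the bulk, the following sketch evaluates the MP law in its standard textbook form and compares its edges with the extreme eigenvalues of a simulated noise Gram matrix. It assumes the usual parametrization with aspect ratio c = n/p, edges σ²(1 ± √c)², and a point mass (1 − 1/c)₊ at zero; whether this matches (2.3)-(2.4) symbol for symbol is an assumption, and the function names are illustrative.

```python
import numpy as np

def mp_edges(c, sigma2=1.0):
    """Lower and upper edges of the MP bulk for aspect ratio c = n / p."""
    return sigma2 * (1.0 - np.sqrt(c)) ** 2, sigma2 * (1.0 + np.sqrt(c)) ** 2

def mp_density(x, c, sigma2=1.0):
    """Density of the continuous part of the MP law (standard form, assumed)."""
    lo, hi = mp_edges(c, sigma2)
    x = np.asarray(x, dtype=float)
    dens = np.zeros_like(x)
    inside = (x > lo) & (x < hi)
    xi = x[inside]
    dens[inside] = np.sqrt((hi - xi) * (xi - lo)) / (2.0 * np.pi * c * sigma2 * xi)
    return dens

def mp_atom_at_zero(c):
    """Mass of the point mass at zero, nonzero only when c > 1."""
    return max(0.0, 1.0 - 1.0 / c)

# Simulated check: Y is p x n with i.i.d. standard Gaussian entries (an assumption).
rng = np.random.default_rng(0)
p, n = 400, 200
Y = rng.standard_normal((p, n))
eigs = np.linalg.eigvalsh(Y.T @ Y / p)   # n x n Gram matrix of the noise
lo, hi = mp_edges(n / p)
```

A histogram of `eigs` plotted against `mp_density` on the interval between `lo` and `hi` gives a quick visual check of the bulk.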

Denote

| (2.5) |

and for any kernel function , define

| (2.6) |

Till the end of this paper, we will use and for simplicity, unless we need to specify the values of and at certain points of . As mentioned below (1.1), we focus on throughout the paper unless otherwise specified. Recall the shift operator defined in (2.1). Denote

| (2.7) |

where is the MP law defined in (2.3) by replacing with .

2.2. Spectrum of kernel affinity matrices: low signal-to-noise region

In this subsection, we present the results when the SNR is small in the sense that in Theorems 2.3 and 2.5. In this setting, there does not exist a natural connection between the spectrum of and the signal part in (1.9). More specifically, even though the spectrum of can be studied, is not close to zero.

In the first result, we consider the case when is bounded from above; that is, . In this bounded region, as in the null case studied in [27, 18] (see Lemma A.9 for more details), the spectrum is governed by the MP law, except for a few outlying eigenvalues. These eigenvalues either come from the kernel function expansion that will be detailed in the proof, or the Gram matrix where is the data matrix associated with the noisy observation defined in (1.4) when the signal is above some threshold. This result is not surprising, since the signal is asymptotically negligible compared with the noise.

Theorem 2.3 (Bounded region).

Remark 2.4.

In Theorem 2.3, we focus on reporting the bulk eigenvalues of In this case, the outlying eigenvalues are mainly from the kernel function expansion, which we call the “kernel effect” hereafter, and the resulting Gram matrix. For example, as we will see in the proofs in Section B, we have that and where and are defined in (A.20). Moreover, we mention that Theorem 2.3 holds for a more general kernel function as in [27, 18]; that is, (1.1) is replaced by

| (2.9) |

where is monotonically decreasing, bounded and . Moreover, we remark that in (2.8) we can relax to with an additional assumption that for some constant which is a standard assumption in the random matrix theory literature guaranteeing that the smallest eigenvalue of the Gram matrix is bounded from below; for instance, see the monograph [31]. Finally, we mention that in the pure noise setting, i.e., the asymptotics of the spectrum of (equivalently, in this setting) has been established in [18] using a different approach, where the results hold for regardless of the ratio of However, in [18], the bound is weaker than what we show here; that is, the rate is when is bounded, and the rate only appears when

In the second result, we study the case when the signal strength diverges with but slowly; that is, , where In this region, since , the signal is still weaker than the noise, and again it is asymptotically negligible. Thus, it is not surprising to see that the noise information dominates and the spectral distribution is almost like the MP law, except for the first few eigenvalues. Again, like in the bounded region, these finite number of outlying eigenvalues come from the interaction of the nonlinear kernel and the Gram matrix.

Theorem 2.5 (Slowly divergent region).

Suppose (1.1) and (1.4)-(1.8) hold true, and . For any given small when is sufficiently large, we have that the following holds with probability at least :

- (1) When for some constant we have that:

| (2.10) |

- (2) When denote

| (2.11) |

For some constant there exists some integer satisfying

so that with high probability, for all we have that

| (2.12) |

where is defined as

| (2.13) |

As in Theorem 2.3, since the outlying eigenvalues are impacted by both the kernel effect and signals, we focus on reporting the bulk eigenvalues. Similar to the discussion in Remark 2.4, the outlying eigenvalues can be identified from the proof in Section B. Moreover, the number of the outlying eigenvalues is adaptive to . As can be seen from Theorem 2.5, for any small , when the results are similar to (2) of Theorem 2.3 except for the convergence rates. When there will be more, but finitely many, outlying eigenvalues, which come from a high order expansion in the proof. Finally, we mention that Theorem 2.5 holds for a more general kernel function like that in (2.9).

We remark that when and the non-bulk eigenvalues (i.e., outlying eigenvalues) “seem” to be useful for understanding the signal. For example, we may potentially utilize the number of outliers to determine if the signal strength is stronger than . However, to the best of our knowledge, there exists limited literature on utilizing this information via GL since ( respectively) is not close to ( respectively) in this region, except some relevant work in [28] when . Recall that some of the non-bulk eigenvalues are generated by the kernel effect instead of the signals. As commented in Remark 2.4, while it is true that when is bounded the kernel effect can be quantified, when diverges, we lose the ability to distinguish these outliers. Especially, when although we show in (2) of Theorem 2.5 that the number of non-bulk eigenvalues is finite and depends on the signal strength, we do not know the exact number of outliers and the relation between the signals and outliers. Therefore, it is challenging to recover the signals using this information. For example, when the signal is supported on a linear subspace so that there are multiple spikes, to our knowledge it is challenging to estimate the dimension via GL.

We shall emphasize that the GL approach is very different from PCA. Note that for the -spiked model, only the largest eigenvalues could possibly detach from the bulk and can be located when PCA is applied. However, for the GL approach, even a single spike can lead to multiple outlying eigenvalues as in (2) of Theorem 2.5. This shows that even for a simple -dim linear manifold that is realized as a -dim linear subspace in , the nonlinear method via GL is very different from the linear method via PCA. More details on this aspect can be found in Section 5. The problem becomes more challenging if the subspace is nonlinear, even under the assumption that the manifold model can be reduced to this low rank spiked model in Section A.1 that we focus on in this paper.

Finally, we point out that when although it is challenging to obtain precise information for its clean signal counterpart from a direct application of the standard GL via (c.f. Theorem 2.5) or (c.f. Corollary 2.10), we could consider a variant of GL via the transition matrix by zeroing-out the diagonal elements of proposed in [29]. It has been shown in [29] that the zeroing-out strategy could help the analysis of noisy datasets. We refer the readers to Appendix A.7.1 for more details.
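The zeroing-out variant mentioned above can be sketched in a few lines. This is an illustration under an assumption, not the exact procedure of [29]: the diagonal of the affinity matrix is set to zero first, and the degrees are then computed from the modified matrix. The function name is hypothetical.

```python
import numpy as np

def zero_diagonal_transition(W):
    """Transition matrix built from W after zeroing out its diagonal.

    ASSUMPTION: degrees are the row sums of the zero-diagonal matrix;
    the precise normalization used in the cited work may differ.
    """
    W0 = np.array(W, dtype=float, copy=True)  # leave the input untouched
    np.fill_diagonal(W0, 0.0)
    degrees = W0.sum(axis=1)
    return W0 / degrees[:, None]
```

The resulting matrix is still row-stochastic, but each point no longer assigns any transition probability to itself.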

Remark 2.6.

When although it is still unclear how to use the bulk of eigenvalues from the noisy observation to extract information for the clean signal counterpart, it is a starting point for understanding the existence of a strong signal (i.e., ). For example, when Theorems 2.3 and 2.5 demonstrate that most of the eigenvalues follow the MP law so that except for a finite number of outliers, two consecutive eigenvalues should be close to each other by a distance of . Under the alternative (i.e., ), since the noisy GL is close to the clean GL as shown later in Section 2.3 below, two consecutive eigenvalues can be separated by a distance of constant order.

2.3. Spectrum of kernel affinity matrices: high signal-to-noise region

In this subsection, we present the results such that the spectra of and in (1.9) can be connected after properly scaling when the SNR is relatively large, i.e., . We first prepare some notations. Denote

| (2.14) |

Clearly, is closely related to via a scaling and an isotropic shift and contains only the signal information. On the other hand, note that , where the matrix comes from the first order Taylor expansion of . We introduce another affinity matrix that will be used when is too large so that the bandwidth is relatively small compared with the signal strength. Denote

| (2.15) |

and

| (2.16) |

Note that differs from by the matrix It will be used when

Our main result for this SNR region is Theorem 2.7 below. For some constant denote

| (2.17) |

Theorem 2.7.

Suppose (1.1) and (1.4)-(1.8) hold true, and .

- (1) When we have that

| (2.18) |

Moreover, for some universal large constant we have that for in (2.17)

| (2.19) |

- (2) When we have that

| (2.20) |

Furthermore, when is larger and satisfies

| (2.21) |

for a constant , we have that with probability for some constant ,

| (2.22) |

and consequently

| (2.23) |

The scaling in (2.18) is commonly used in much of the manifold learning and machine learning literature [1, 12, 26, 41, 53, 63]. On the one hand, (1) of Theorem 2.7 shows that once the SNR is “relatively large” (), we may access the spectrum of the clean affinity matrix via the noisy affinity matrix as is described in (2.18), and the clean affinity matrix may contain useful information of the signal. In this case, the signal is strong enough to compete with the noise so that we are able to recover the “top few” eigenvalues of the kernel matrix associated with the clean data via . Especially, (2.19) implies that we should focus on the top eigenvalues of , since the remaining eigenvalues are not informative. This coincides with what practitioners usually do in data analysis. Note that increases with , which fits our intuition, since the SNR becomes larger.

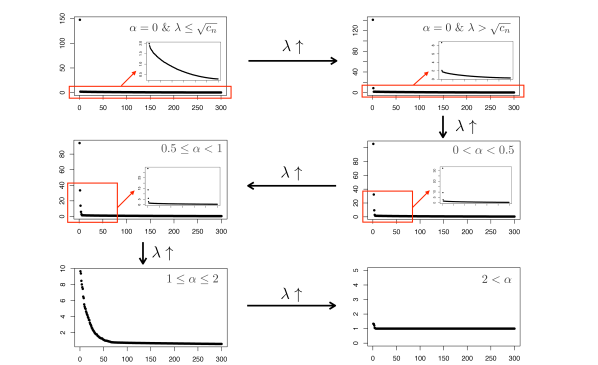

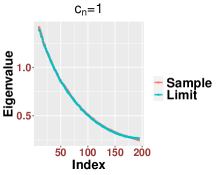

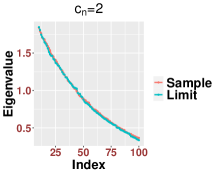

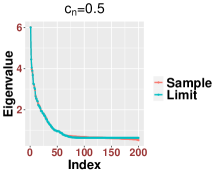

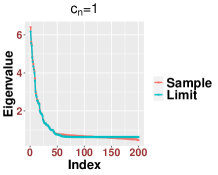

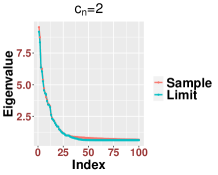

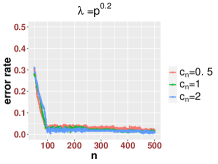

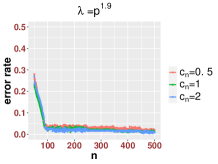

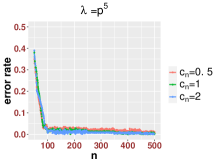

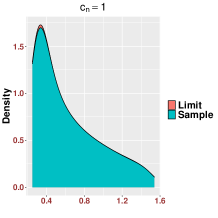

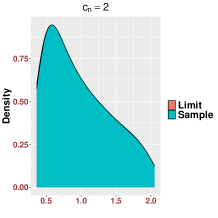

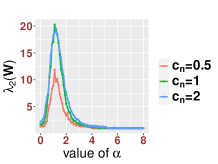

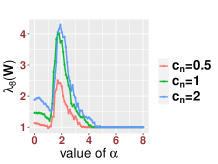

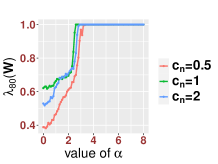

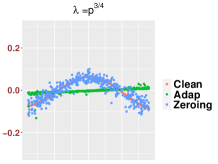

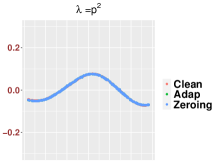

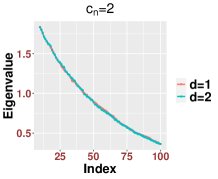

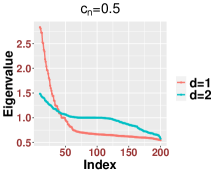

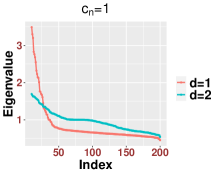

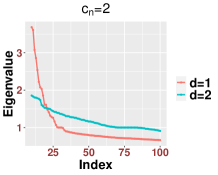

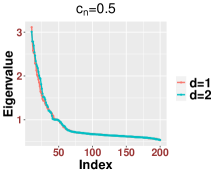

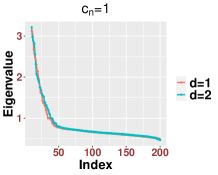

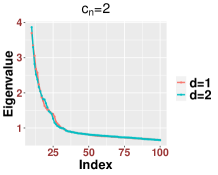

On the other hand, we find that the classic bandwidth choice is not a good choice when the SNR is “too large” (). First, (2) of Theorem 2.7 states that when since the bandwidth is too small compared with the signal strength, the noisy affinity matrix will be close to a mixture of signal and noise. Especially, when the signals are stronger in the sense of (2.21), we will not be able to obtain information from the noisy affinity matrix. This can be understood as follows. Since the signal is far stronger than the noise, equivalently we could say that the signal is contaminated by “small” noise. However, since the bandwidth is set to , which is “small” compared with the signal strength, the exponential decay of the kernel forces each point to be “blind” to see other points. In this case, is close to when , and the affinity matrix is close to an identity matrix, which leads to (2.23). As we will see in Theorem 3.1, all these issues will be addressed using a different bandwidth. For the readers’ convenience, in Figure 1, we use a simulation to summarize the phase transitions observed in Theorems 2.3, 2.5 and 2.7. For numerical accuracy of our established theorems, we refer the readers to Section 4.1 below for more details.
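The “blindness” effect can be seen in a deliberately simplified, noise-free toy example. The sketch below is an illustration only and does not reproduce the regime (2.21): it places points far apart along one coordinate, uses a bandwidth equal to the ambient dimension, and compares the result with a bandwidth on the scale of the signal variance. The affinity form exp(-υ‖·‖²/h) and all names are assumptions.

```python
import numpy as np

def affinity(X, h, upsilon=1.0):
    """Exponential-type affinity exp(-upsilon * ||x_i - x_j||^2 / h) (assumed form)."""
    diff = X[:, None, :] - X[None, :, :]
    sq_dists = np.einsum("ijk,ijk->ij", diff, diff)
    return np.exp(-upsilon * sq_dists / h)

n, p = 10, 4
X = np.zeros((n, p))
X[:, 0] = 100.0 * np.arange(n)            # strong one-dimensional signal, no noise

W_classic = affinity(X, h=float(p))       # bandwidth on the scale of p
h_signal = float(np.var(X[:, 0]))         # bandwidth on the scale of the signal
W_signal_scale = affinity(X, h=h_signal)

off_diag = ~np.eye(n, dtype=bool)
max_off_classic = W_classic[off_diag].max()
min_adjacent = min(W_signal_scale[i, i + 1] for i in range(n - 1))
```

With the small bandwidth every off-diagonal affinity is numerically zero, so the matrix is indistinguishable from the identity, while the signal-scale bandwidth keeps neighboring points strongly connected.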

Technically, in the previous results when , the kernel function only contributes to the measure via (2.7) and its decay rate does not play a role in the conclusions. However, once the signal becomes stronger, the kernel decay rate plays an essential role. We focus on to simplify the discussion in this paper, and postpone the discussion of general kernels to our future work.

Remark 2.8.

When in Theorem 2.7, we have shown that besides the top eigenvalues of , the remaining eigenvalues of are trivial. Since the error bound in (2.19) is much smaller than the one in (2.18), the smaller eigenvalues of may fluctuate more and have a non-trivial ESD. The ESD of is best formulated using the Stieltjes transform [2]. Here we provide a remark on the approximation of the Stieltjes transforms. Denote as

| (2.24) |

Note that compared with , the matrix in comes from a higher order Taylor expansion of . Let and be the Stieltjes transforms of and respectively. In Section D, we show that

| (2.25) |

where is the domain of spectral parameters defined as

| (2.26) |

and is some fixed (small) constant. This result helps us further peek into the intricate relationship between the clean and noisy affinity matrices. However, it does not provide information about each single eigenvalue.
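As a reminder of the object being compared, the Stieltjes transform of the ESD of a symmetric matrix is the normalized trace of its resolvent. The sketch below uses the standard definition m(z) = (1/n) Σ_i 1/(λ_i − z) for Im z > 0 and computes it in two equivalent ways; it does not reproduce the spectral domain in (2.26), and the function names are illustrative.

```python
import numpy as np

def stieltjes_from_eigs(eigs, z):
    """m(z) = mean of 1 / (lambda_i - z) over the eigenvalues."""
    eigs = np.asarray(eigs, dtype=float)
    return np.mean(1.0 / (eigs - z))

def stieltjes_from_resolvent(M, z):
    """m(z) = trace((M - z I)^{-1}) / n, the normalized trace of the resolvent."""
    n = M.shape[0]
    return np.trace(np.linalg.inv(M - z * np.eye(n))) / n

rng = np.random.default_rng(0)
B = rng.standard_normal((50, 50))
M = (B + B.T) / 2.0                        # a symmetric test matrix
z = 0.3 + 0.1j
m_eigs = stieltjes_from_eigs(np.linalg.eigvalsh(M), z)
m_res = stieltjes_from_resolvent(M, z)
```

Closeness of two Stieltjes transforms on a suitable domain controls closeness of the corresponding ESDs, but, as noted in the remark, it does not locate individual eigenvalues.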

Remark 2.9.

In the above theorems, we focus on reporting the results for the case in (1.4). We now discuss how to generalize our results to . There are two major cases. The first case is when all signal strengths are in the same SNR region, and the second case is when the signal strengths might be in different SNR regions. We start from the first case, and there are four regions we shall discuss.

- (1)

- (2) When , , satisfy condition (1) of Theorem 2.7, the results of (2.18) and (2.20) of Theorem 2.7 hold with some changes. Indeed, in (2.20) should be replaced by and in (2.17) should be replaced by . Moreover, in this setup, since the noisy affinity matrix will be close to a matrix depending on the clean affinity matrix , where , which in general does not follow the MP law, the spectrum of varies according to the specific values of , . More discussion with simulation of this setup with can be found in Section A.7.2.

- (3) When , , this is the region where our argument cannot be directly applied and we need a substantial generalization of the proof. Especially, our proof essentially relies on Mehler’s formula in Section A.6, which has only been proved for to our knowledge. Nevertheless, we believe it is possible to generalize this formula to following the arguments of [33].

- (4) When , we could directly generalize the result to with all the key ingredients, i.e., Lemmas A.4–A.9, in Section A.5. Specifically, to extend Theorem 2.3 concerning , , denote if for all , if for all , or if . The proof of Theorem 2.3 still holds by updating . In fact, according to (B.13), the matrix therein is of rank three and the Gram matrix can generate outliers according to Lemma A.6. Theorem 2.5 still holds when . Following its proof, when for all the first part of the theorem still holds when and the rate should be replaced by . In fact, in this setting, all and all will generate outliers. Moreover, when all by replacing (2.11) with , where we conclude that the second part of the theorem still holds true by replacing with and with . We emphasize that in the above settings, as defined in (2.5) satisfies that as , the bulk eigenvalues can be characterized by the same MP law as in (2.8) and (2.12). However, the number of the outliers may depend on the signal strength . See Section A.7.2 for more discussion and simulation with .

The second case includes various combinations, and some of them might be challenging. We discuss only one setup assuming the signal strengths fall in two different regions; that is, there exists such that

Theorem 2.7 still holds by replacing by and in (2.14) by , where and , i.e., the clean affinity matrix is defined by only using those components with large SNRs. That is to say, the spectrum of for the value of is close to the clean affinity matrix for the value of with the same signals The detailed statements and proofs are similar to the case except for extra notational complication. We defer more discussion of this setup with to Section A.7.2.

In short, there are still several open challenges when , and we will explore them in our future work.

2.4. Spectrum of transition matrices

In this subsection, we state the main results for the transition matrix defined in (1.2). Even though there is an extra normalization step by the degree matrix , we will see that most of the spectral studies of boil down to those of In what follows, we provide the counterparts of the results in Sections 2.2 and 2.3 for Similar discussions as those in Remark 2.8 and Remark 2.9 also hold.

For defined in (2.14), denote similar to the definition (1.2). Similarly, for defined in (2.16), denote
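For readers who wish to experiment numerically, the relation between an affinity matrix and its degree-normalized transition matrix can be sketched as follows. This is only an illustration under stated assumptions: we assume a Gaussian kernel of the form exp(-||x_i - x_j||^2 / h) and the row normalization D^{-1}W for (1.2); the function names, the toy pure-noise data, and the choice h = p are hypothetical and are not taken from the paper.

```python
import numpy as np

def affinity_matrix(X, h):
    """Gaussian affinity W[i, j] = exp(-||x_i - x_j||^2 / h) for the rows x_i of X.
    The kernel form is an assumption made for this sketch."""
    sq = np.sum(X**2, axis=1)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    np.fill_diagonal(D2, 0.0)
    return np.exp(-D2 / h)

def transition_matrix(W):
    """Row-normalize W by its degrees: A = D^{-1} W (assumed form of (1.2))."""
    return W / W.sum(axis=1, keepdims=True)

def transition_eigenvalues(W):
    """Eigenvalues of D^{-1} W, computed from the similar symmetric matrix
    D^{-1/2} W D^{-1/2}, returned in decreasing order."""
    d = W.sum(axis=1)
    S = W / np.sqrt(np.outer(d, d))
    return np.sort(np.linalg.eigvalsh(S))[::-1]

# Toy data: pure Gaussian noise, n points in dimension p (illustrative sizes).
rng = np.random.default_rng(0)
n, p = 200, 400
X = rng.standard_normal((n, p))
W = affinity_matrix(X, h=float(p))
A = transition_matrix(W)
eigs = transition_eigenvalues(W)
```

Because D^{-1}W is similar to a symmetric matrix, its eigenvalues are real, and the largest one equals one; this is why the normalization changes the spectrum in a controlled way.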

Corollary 2.10.

Suppose (1.1)-(1.8) hold true, , and . When the results of Theorems 2.3 and 2.5 hold for the eigenvalues of by replacing with and the measure with . When for (1) of Theorem 2.7, the counterpart of (2.18) reads as

and the counterpart of (2.19) is

Moreover, when for (2) of Theorem 2.7, the counterpart of (2.20) reads as

The remaining parts hold by replacing and with and respectively.

3. Main results (II): a different bandwidth choice

As discussed after Theorem 2.7, when the SNR is large, the classic bandwidth choice is too small compared with the signal. For example, according to (2) of Theorem 2.7, we cannot obtain any information about the clean signal when if To address this issue, we consider a different bandwidth , where is the signal strength. We show that this signal-dependent bandwidth will result in a meaningful spectral convergence result, which is stated in Theorem 3.1. As in Section 2, we focus on the setting , and set . The discussion for the setting is similar to that in Remark 2.9, so we only state the differences here. When , we have , so all the arguments in (3) and (4) of Remark 2.9 directly apply. When , , which corresponds to the strong signal cases (1) and (2) in Remark 2.9, following the proof of (3.5) below, an argument similar to that of case (2) of Remark 2.9 still applies. For definiteness, below we state our results for the spectra of and assuming in Section 3.1. Since is usually unknown in practice, in Section 3.2, we propose a bandwidth selection algorithm for practical implementation. With this algorithm, even without the knowledge of the signal strength, we can still get meaningful spectral results. See Corollary 3.2.

3.1. Spectra of affinity and transition matrices

In this subsection, we state the results for the spectra of and when . Denote

| (3.1) |

where is constructed using the bandwidth

Theorem 3.1.

Suppose (1.1)-(1.8) hold true, and . The following results hold.

- (1)

- (2) When we have that

  | (3.3) |

  and for some large constants and we have that when

  | (3.4) |

  Moreover, when we have that

  | (3.5) |

Finally, similar results hold for the transition matrix by replacing , and in (3.3)-(3.5) by , and respectively, where and are defined by plugging and into (1.2).

With this bandwidth, we have addressed the issue we encountered in (2) of Theorem 2.7. Specifically, according to Theorem 3.1, we find that when the noise dominates and does not lead to any essential difference in the spectrum compared with that from the fixed bandwidth On the other hand, when , such a bandwidth choice contributes significantly to the spectrum. Especially, compared to (2.17), only has nontrivial eigenvalues. Combined with (3.3), by properly choosing the bandwidth, once the SNR is relatively large, the noisy affinity matrix captures the spectrum of the clean affinity matrix via . In addition, when , we can replace with directly. Finally, we mention that in the small SNR region modifying the transition matrix by zeroing out the diagonal terms before normalization [29] could be useful. For more discussions, we refer the readers to Section A.7.1.

3.2. An adaptive choice of bandwidth

While the above result connects the spectra of noisy affinity matrices and those of clean affinity matrices, in general is unknown. In this subsection, we provide an adaptive choice of depending on the dataset without providing an estimator for Such a choice will enable us to recover the results of Theorem 3.1.

Given some constant we choose according to

| (3.6) |

where is the empirical distribution of the pairwise distances In this subsection, when there is no danger of confusion, we abuse the notation and denote the affinity and transition matrices constructed using from (3.6) as and respectively, and define in (1.9) and in (3.1) with in (3.6).

As we show in the proof, when , (see (C.1) in the proof), and when , (see (C.2) in the proof). In this sense, the following corollary recovers the results of Theorem 3.1, while the choice of bandwidth is practical.

Corollary 3.2.

Since Corollary 3.2 recovers the results of Theorem 3.1, the same comments after Theorem 3.1 and the discussions about manifolds in Subsection 3.3 hold for Corollary 3.2. We comment that in practice, researchers usually choose the 25th or 50th percentile of all pairwise distances, or of the distances between nearest-neighbor pairs, as the bandwidth; see, for example, [56, 44]. Corollary 3.2 provides a theoretical justification for this commonly applied ad hoc bandwidth selection method.
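The percentile rule mentioned above can be sketched in a few lines. We assume here that (3.6) amounts to taking a prescribed quantile of the empirical distribution of the pairwise squared distances; the function name `quantile_bandwidth` and the toy data are hypothetical.

```python
import numpy as np

def quantile_bandwidth(X, omega):
    """Return the omega-quantile of the pairwise squared distances between rows of X.
    Assumption: this is how the bandwidth in (3.6) is read for this sketch."""
    sq = np.sum(X**2, axis=1)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    iu = np.triu_indices(X.shape[0], k=1)   # count each unordered pair once
    return float(np.quantile(D2[iu], omega))

# Toy data: pure Gaussian noise (illustrative sizes).
rng = np.random.default_rng(1)
n, p = 300, 600
X = rng.standard_normal((n, p))
h_25 = quantile_bandwidth(X, 0.25)   # 25th percentile
h_50 = quantile_bandwidth(X, 0.50)   # median
```

For this pure-noise toy example the squared distances concentrate around 2p, so the two percentiles are close to each other; with a strong signal component they can differ substantially.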

Next, we discuss how to choose in practice. Based on the obtained theoretical results, when the outliers stand for the signal information (c.f. (3.1)), except those associated with the kernel effect. Thus, we propose Algorithm 1 to choose adaptively, which seeks a bandwidth so that the affinity matrix has the largest number of outliers.

- (1) Take fixed constants where e.g., and . For some large integer , we construct a partition of the interval denoted as , where .

- (2) For the sequence of quantiles calculate the associated bandwidths according to (3.6), denoted as

- (3) For each calculate the eigenvalues of the affinity matrix which is constructed using the bandwidth Denote the eigenvalues of in the decreasing order as

- (4) For a given threshold satisfying as denote

- (5) Choose the quantile such that

  | (3.7) |

Note that we need a threshold in step 4 of Algorithm 1. We suggest adopting the resampling method established in [50, Section 4] and [19, Section 4.1]. This method provides a choice of to distinguish the outlying eigenvalues and bulk eigenvalues given the ratio . The main rationale supporting this approach is that the bulk eigenvalues are close to each other (c.f. Remark 2.8) and hence the ratios of consecutive eigenvalues will be close to one. Moreover, in step 5 of the algorithm, when there are multiple that achieve the argmax, we choose the largest one for the purpose of robustness.
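The five steps above can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes a Gaussian kernel, reads (3.6) as a quantile of the pairwise squared distances, counts outliers as the largest index at which the ratio of consecutive eigenvalues exceeds one plus the threshold, and uses a fixed threshold `s` instead of the resampling method of [50, 19]. The default quantile range, the grid size `T`, and the cap `top` on the number of inspected eigenvalues are hypothetical choices.

```python
import numpy as np

def pairwise_sq_dists(X):
    """Pairwise squared Euclidean distances between the rows of X."""
    sq = np.sum(X**2, axis=1)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    np.fill_diagonal(D2, 0.0)
    return D2

def count_outliers(eigs_desc, s):
    """Largest k with eigs[k-1] / eigs[k] >= 1 + s, or 0 if there is none.
    Assumed reading of step 4; eigs_desc must be positive and decreasing."""
    ratios = eigs_desc[:-1] / eigs_desc[1:]
    hits = np.nonzero(ratios >= 1.0 + s)[0]
    return 0 if hits.size == 0 else int(hits[-1]) + 1

def select_quantile(X, s, omega_lo=0.05, omega_hi=0.95, T=10, top=20):
    """Sketch of Algorithm 1; all default values are hypothetical."""
    D2 = pairwise_sq_dists(X)
    iu = np.triu_indices(X.shape[0], k=1)
    omegas = np.linspace(omega_lo, omega_hi, T + 1)        # step 1: quantile grid
    counts = []
    for omega in omegas:
        h = np.quantile(D2[iu], omega)                     # step 2: bandwidth
        eigs = np.linalg.eigvalsh(np.exp(-D2 / h))[::-1]   # step 3: spectrum
        counts.append(count_outliers(eigs[:top + 1], s))   # step 4: outliers
    counts = np.array(counts)
    best = omegas[counts == counts.max()].max()            # step 5: ties -> largest
    return float(best), counts

# Toy example: a noisy circle in the first two coordinates (illustrative sizes).
rng = np.random.default_rng(2)
n, p, lam = 120, 60, 400.0
theta = rng.uniform(0.0, 2.0 * np.pi, n)
X = rng.standard_normal((n, p))
X[:, 0] += np.sqrt(lam) * np.cos(theta)
X[:, 1] += np.sqrt(lam) * np.sin(theta)
omega_hat, counts = select_quantile(X, s=0.3)
```

Restricting the count to the leading `top` eigenvalues is a practical safeguard added in this sketch so that ratios of very small eigenvalues do not dominate; it is not part of the algorithm as stated.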

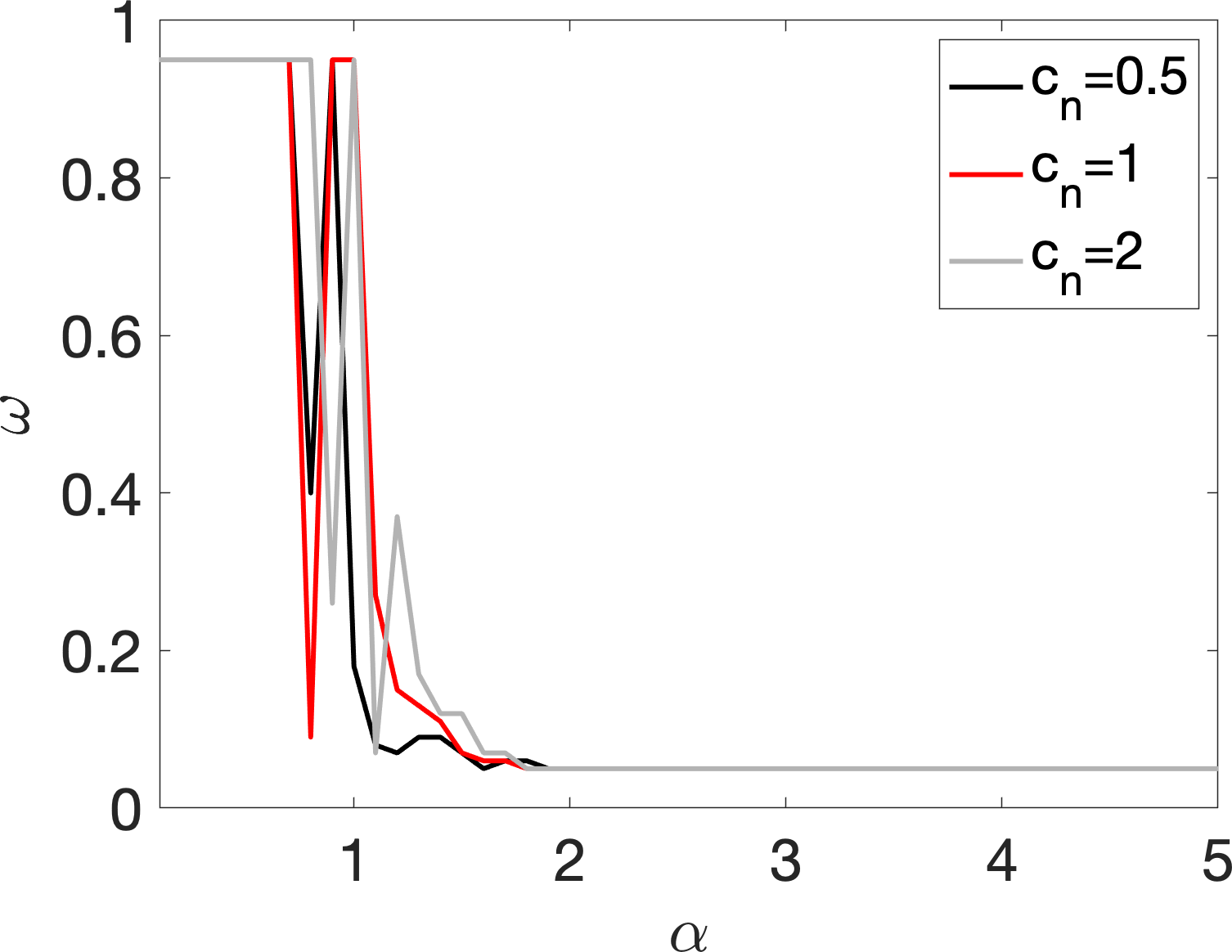

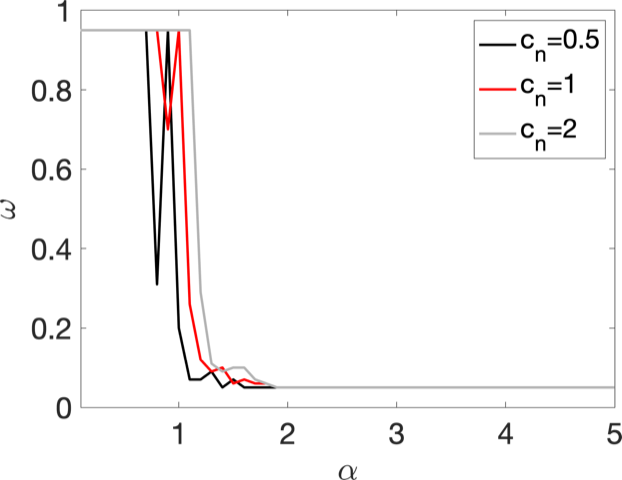

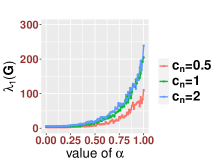

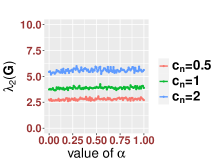

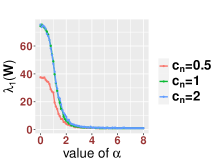

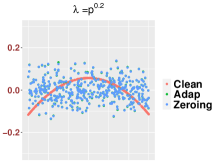

Next, we numerically illustrate how the chosen by Algorithm 1 depends on . Consider the nonlinear manifold , the canonical -dim sphere, isometrically embedded in the first two axes of and scaled by , where ; that is, , where is uniformly sampled from . Next, we add Gaussian white noise to via where , are noise independent of We consider this example since its topology is nontrivial and we know the ground truth. In Figure 2, we record the chosen for different from . When is small (i.e., ), since the bandwidth choice will not essentially influence the transition, the algorithm will offer a large quantile in light of (3.7). When is large (i.e., ), we get a small quantile. Intuitively, the larger selected bandwidth when gets small can be understood as the algorithm trying to combat the noise with a larger bandwidth. In Figure 2, we also record the chosen for different from , where we simply replace the role of by . We can see the same result as that from . Finally, note that the choices of do not depend on the aspect ratio in (1.7). This finding suggests that the bandwidth selection is not sensitive to the ambient space dimension.
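A data set of the kind described in this experiment can be generated as in the sketch below. We assume that the sphere is the unit circle placed in the first two coordinates, that the scaling is the square root of the signal strength, that the noise is standard Gaussian in every coordinate, and that the signal strength is parametrized as a power of the dimension; the function name and the sizes are hypothetical.

```python
import numpy as np

def noisy_circle(n, p, lam, rng):
    """Rows are x_i = z_i + y_i with z_i = sqrt(lam) * (cos t_i, sin t_i, 0, ..., 0).
    Assumptions: t_i uniform on [0, 2*pi) and y_i ~ N(0, I_p)."""
    theta = rng.uniform(0.0, 2.0 * np.pi, size=n)
    Z = np.zeros((n, p))
    Z[:, 0] = np.sqrt(lam) * np.cos(theta)
    Z[:, 1] = np.sqrt(lam) * np.sin(theta)
    Y = rng.standard_normal((n, p))
    return Z + Y, Z

rng = np.random.default_rng(3)
n, p, alpha = 200, 400, 1.0
lam = p ** alpha                      # assumed parametrization of the signal strength
X, Z = noisy_circle(n, p, lam, rng)
```

Varying `alpha` in such a generator is one way to move between the noise-dominated and signal-dominated regimes discussed in the text.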

3.3. Connection with the manifold learning

To discuss the connection with manifold learning, we focus on Theorem 3.1 and , and hence Corollary 3.2, which states that via replacing , and in (3.3)-(3.5) by , and , the relationship between the eigenvalues of and is established when . We know that all except the top eigenvalues of are trivial according to (3.4), and by Weyl’s inequality, the top eigenvalues of and differ by . On the other hand, the eigenvalues of have been extensively studied in the literature. Below, we take the result in [41] as an example. Suppose the clean data is sampled from a -dim closed (compact without boundary) and smooth manifold, which is embedded in a -dim subspace in , following a proper sampling condition on the sampling density function (see Section A.1 for details of this setup). To link our result to that shown in [41], note that is of order by assumption so that the selected bandwidth is of the same order as that of . Thus, since when , the eigenvalues of converge to the eigenvalues of the integral operator

where is a smooth function defined on and is the volume density. By combining the above facts, we conclude that under the high-dimensional noise setup, when the SNR is sufficiently large and the bandwidth is chosen properly, we could properly obtain at least the top few eigenvalues of the associated integral kernel from the noisy transition matrix . Since our focus in this paper is not manifold learning itself but how the high-dimensional noise impacts the spectrum of GL, for more discussions and details about manifold learning, we refer readers to [25] and the citations therein.

4. Numerical studies

In this section, we conduct Monte Carlo simulations to illustrate the accuracy and usefulness of our results and proposed algorithm. In Section 4.1, we conduct numerical simulations to illustrate the accuracy of our established theorems for various values of We also show the impact of In Section 4.2, we examine the usefulness of our proposed Algorithm 1 and compare it with some methods in the literature with a linear manifold and a nonlinear manifold.

4.1. Accuracy of our asymptotic results

In this subsection, we conduct numerical simulations to examine the accuracy of the established results. For simplicity, we focus on checking the results in Section 2 when which is the key part of the paper. Similar discussions can be applied to the results in Section 3 when

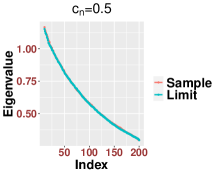

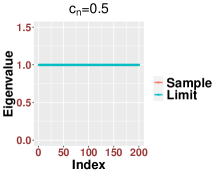

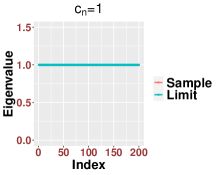

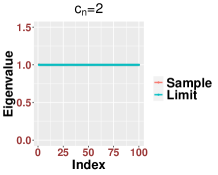

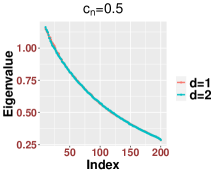

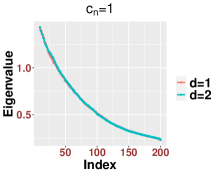

In Figure 3, we study the low SNR setting when as in Section 2.2, particularly the closeness of bulk eigenvalues of and the quantiles of the MP law shown in (2.8) and (2.12). We also show that even for a relatively small value of our results are reasonably accurate for various values of Then we study the region when as in Section 2.3. In Figure 4, we study the SNR region when and check (2.18). Moreover, in Figure 5, we examine the accuracy of (2.23) when is very large. Again, we find that our results are accurate even for a relatively small value of under different settings of

Since our results are stated in the asymptotic sense when is sufficiently large, in Figure 6, we examine how the value of impacts our results. For various values of and SNRs, we find that our results are reasonably accurate once . We see that when the SNR becomes large, our results for small , like , are more accurate.

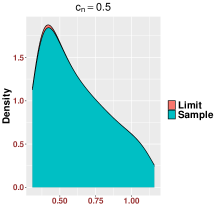

We point out that for better visualization, we report the results for the sorted eigenvalues instead of the histogram in the above plots regarding eigenvalues. The main reason is that we focus on each individual eigenvalue rather than the global empirical distribution, which has been known in the literature. The simulation results are based on only one trial, which emphasizes the concentration with high probability as established in our main results. To visualize the histogram, we have to run several simulations and look at the average. Since this is not the main focus of the paper, we only generate one such plot in Figure 7 based on 1,000 trials.
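The comparison between sorted eigenvalues and quantiles of the MP law can be illustrated on the simplest possible example, a pure-noise Gram matrix. The sketch below uses the classical Marchenko-Pastur law with unit variance and ratio c = n/p < 1, not the shifted and rescaled versions in (2.8) and (2.12), whose exact normalization is not reproduced here; the function name, the grid size, and the matrix sizes are hypothetical.

```python
import numpy as np

def mp_quantiles(c, probs, grid_size=20001):
    """Quantiles of the Marchenko-Pastur law with ratio 0 < c < 1 and unit variance,
    obtained by numerically integrating the density on its support."""
    a, b = (1.0 - np.sqrt(c)) ** 2, (1.0 + np.sqrt(c)) ** 2
    x = np.linspace(a, b, grid_size)
    dens = np.sqrt(np.maximum((b - x) * (x - a), 0.0)) / (2.0 * np.pi * c * x)
    # Trapezoidal rule for the cumulative distribution function.
    cdf = np.concatenate(([0.0], np.cumsum(0.5 * (dens[1:] + dens[:-1]) * np.diff(x))))
    cdf /= cdf[-1]
    return np.interp(probs, cdf, x)

# One trial: n points in dimension p, pure Gaussian noise (illustrative sizes).
rng = np.random.default_rng(4)
n, p = 300, 1200
Y = rng.standard_normal((p, n))
G = Y.T @ Y / p                              # n x n Gram matrix
eigs = np.sort(np.linalg.eigvalsh(G))        # sorted eigenvalues, increasing
probs = (np.arange(n) + 0.5) / n
q = mp_quantiles(n / p, probs)
max_dev = float(np.max(np.abs(eigs - q)))    # eigenvalue-by-eigenvalue deviation
```

Plotting `eigs` against `q` gives a sorted-eigenvalue plot of the type described above from a single trial, without averaging histograms over repetitions.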

4.2. Efficiency of our proposed bandwidth selection algorithm (Algorithm 1)

In this subsection, we show the usefulness of our proposed Algorithm 1 and compare it with some existing methods in the literature. We consider two manifolds of different dimensions, and compare the following four setups. (1) The clean affinity matrix with the bandwidth ; (2) constructed using our Algorithm 1; (3) constructed using the bandwidth via (3.6) with which has been used in [56, 44]; (4) using the bandwidth as in [27, 18, 22, 42, 28, 29]. Since the accuracy of the eigenvalue has been discussed in Section 4.1, in what follows, while we do not explore eigenvectors of GL in this paper, we demonstrate the eigenvectors of and compare them with those of constructed from the clean signal to further understand the impact of bandwidth selection.

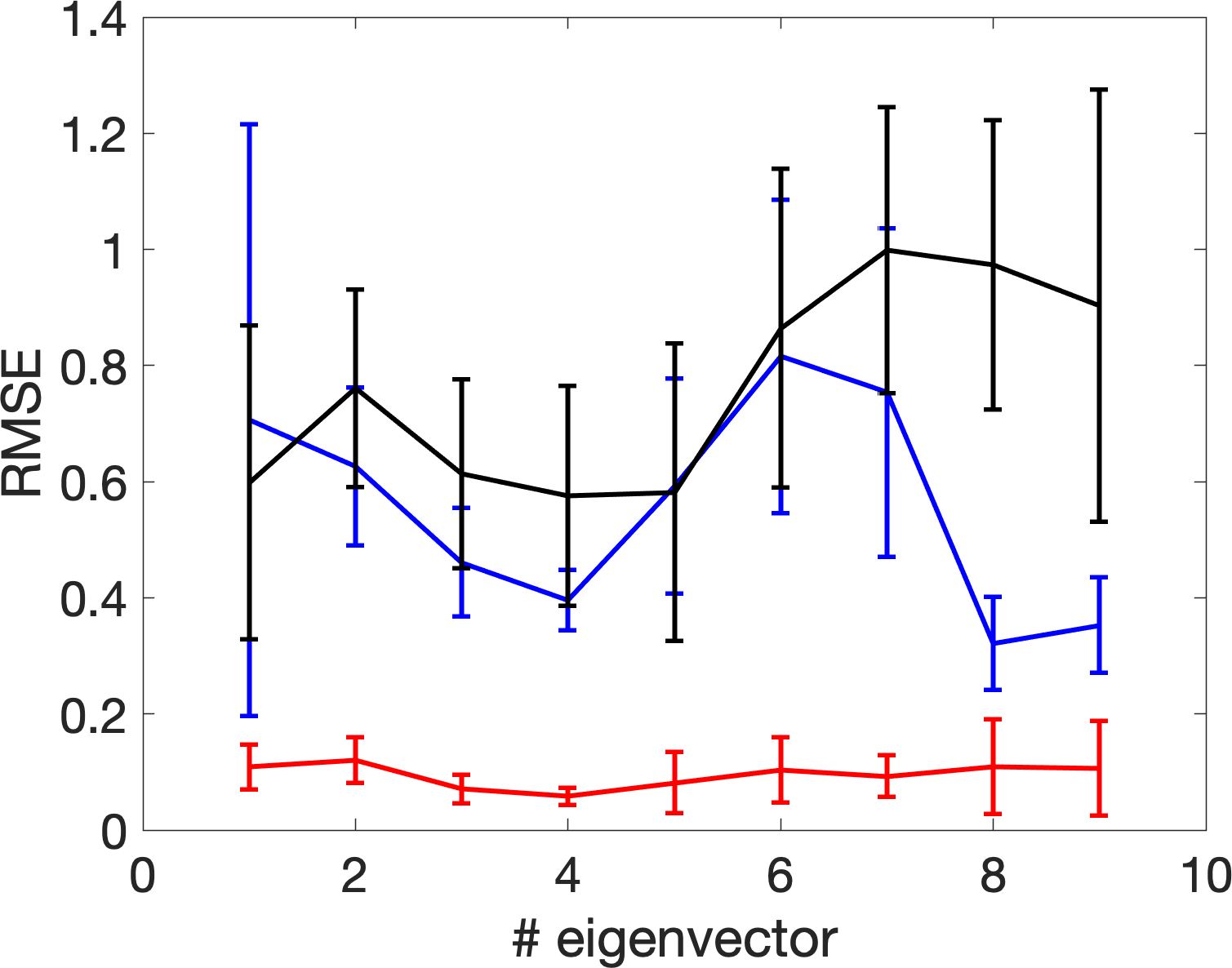

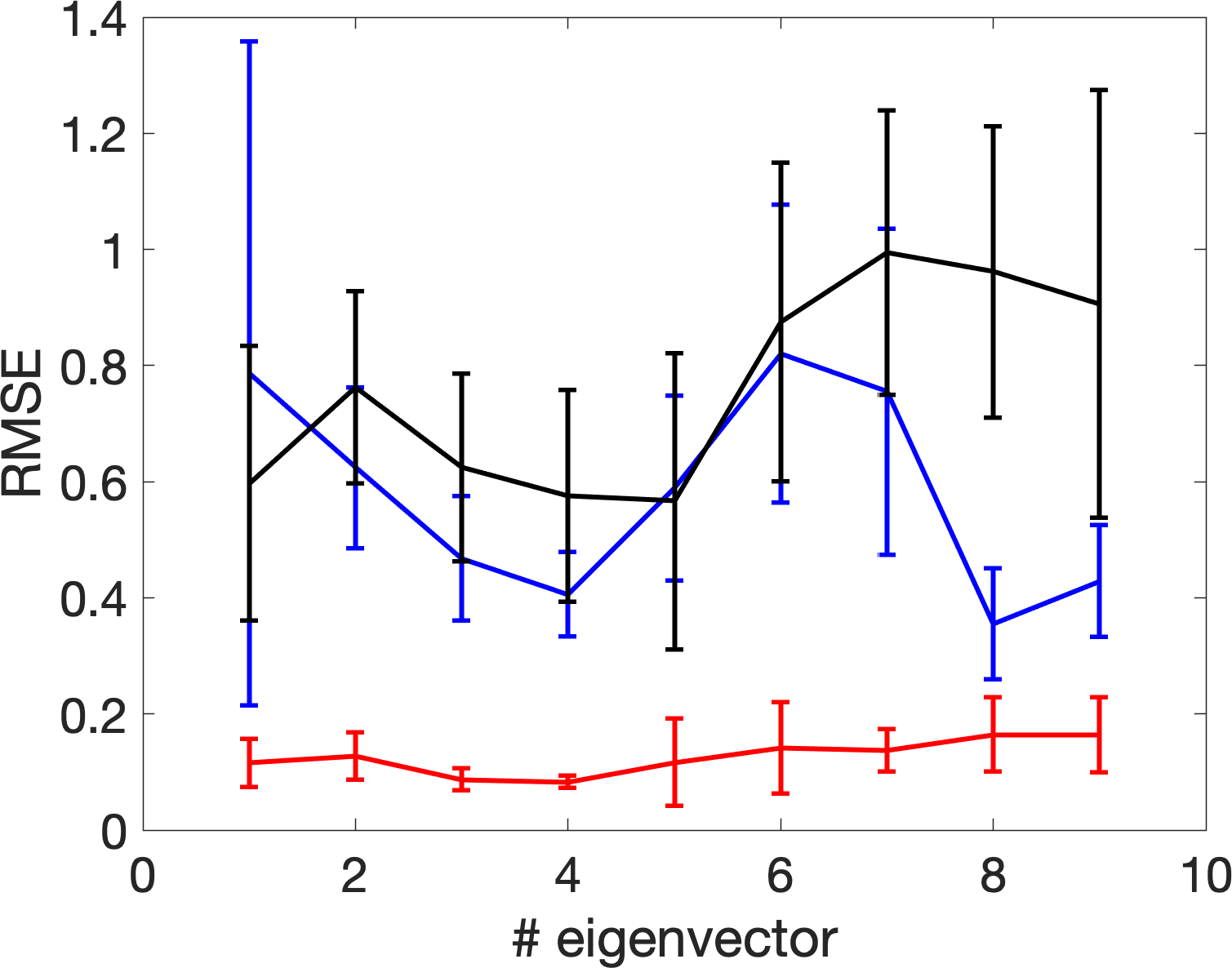

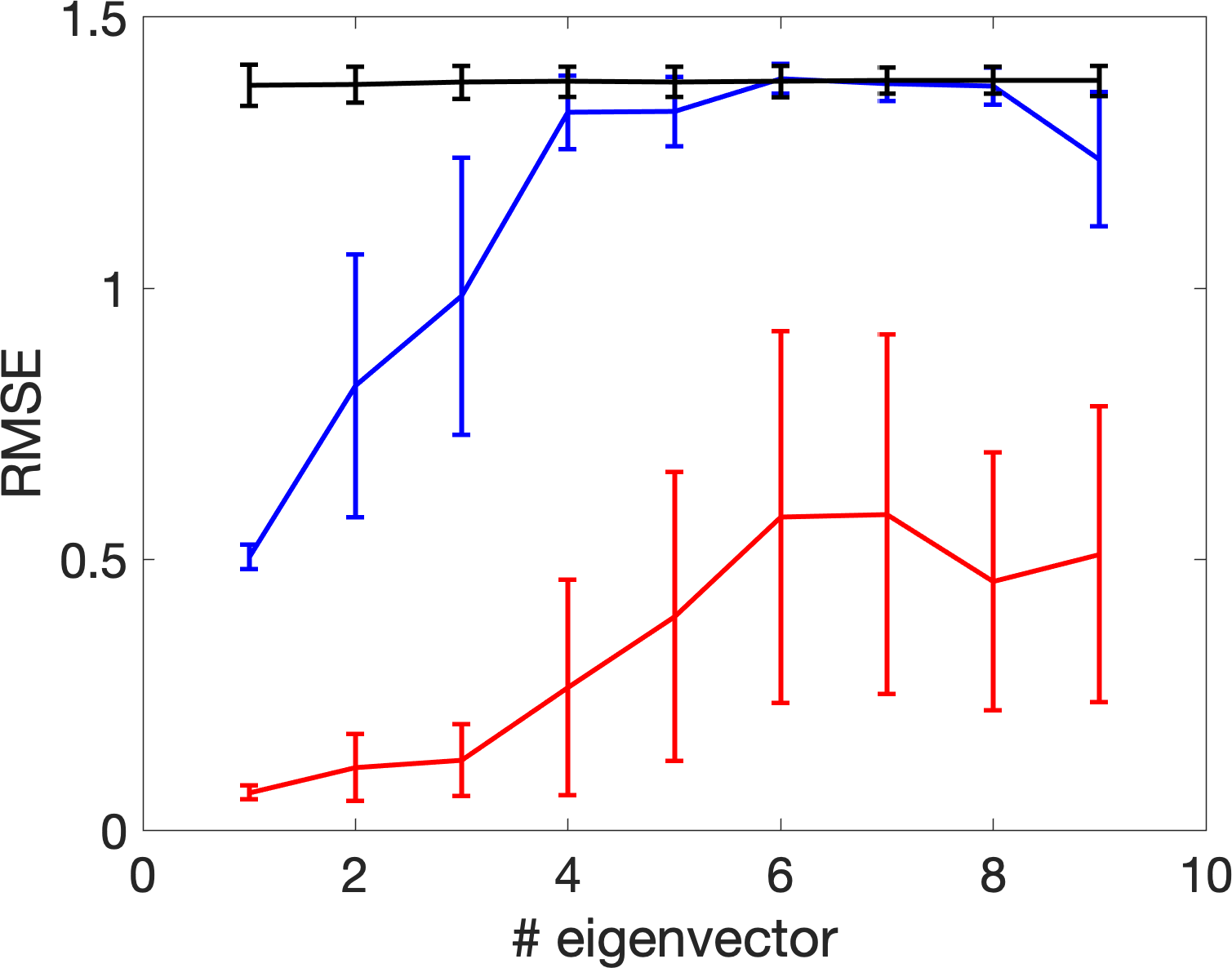

We start with a 1-dimensional smooth and closed manifold , which is parametrized by , where , and . In other words, the 1-dimensional manifold is embedded in a 3-dim Euclidean space in . Now, sample independently and uniformly points from , , and hence points . Next, we add Gaussian white noise to via where , are noise independent of The results of embedding this manifold by the top three nontrivial eigenvectors are shown in Figure 8. We can see that with the proposed bandwidth selection algorithm, the embedding of the noisy data is closer to that from the clean data. To further quantify this closeness, we view the eigenvectors from as the truth, and compare these with the eigenvectors of with different bandwidths by evaluating the root mean square error (RMSE). Note that the freedom of eigenvector sign is handled by taking the smaller value of and , where is the -th eigenvector of associated with the -th largest eigenvalue and is the -th eigenvector of associated with the -th largest eigenvalue. We repeat the random sampling 300 times, and plot the error bars with mean ± standard deviation in Figure 9. It is clear that with the adaptive bandwidth selection algorithm, the top several eigenvectors of are close to those of .
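The sign-handling step in this comparison can be sketched as follows. The exact normalization of the RMSE used in the experiments is not recoverable from the text, so dividing by the square root of the vector length is an assumption; the function name is hypothetical.

```python
import numpy as np

def sign_invariant_rmse(u, v):
    """RMSE between two eigenvectors after resolving the +/- sign ambiguity:
    the smaller of ||u - v|| and ||u + v||, divided by sqrt(length) (assumed scaling)."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    err_minus = np.linalg.norm(u - v)
    err_plus = np.linalg.norm(u + v)
    return min(err_minus, err_plus) / np.sqrt(u.size)

# A vector and its negative represent the same eigenvector.
u = np.array([0.6, 0.8, 0.0, 0.0])
same_up_to_sign = sign_invariant_rmse(u, -u)
shifted = sign_invariant_rmse([3.0, 4.0], [0.0, 0.0])
```

Taking the minimum over the two signs makes the error measure well defined even though an eigenvector is only determined up to sign.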

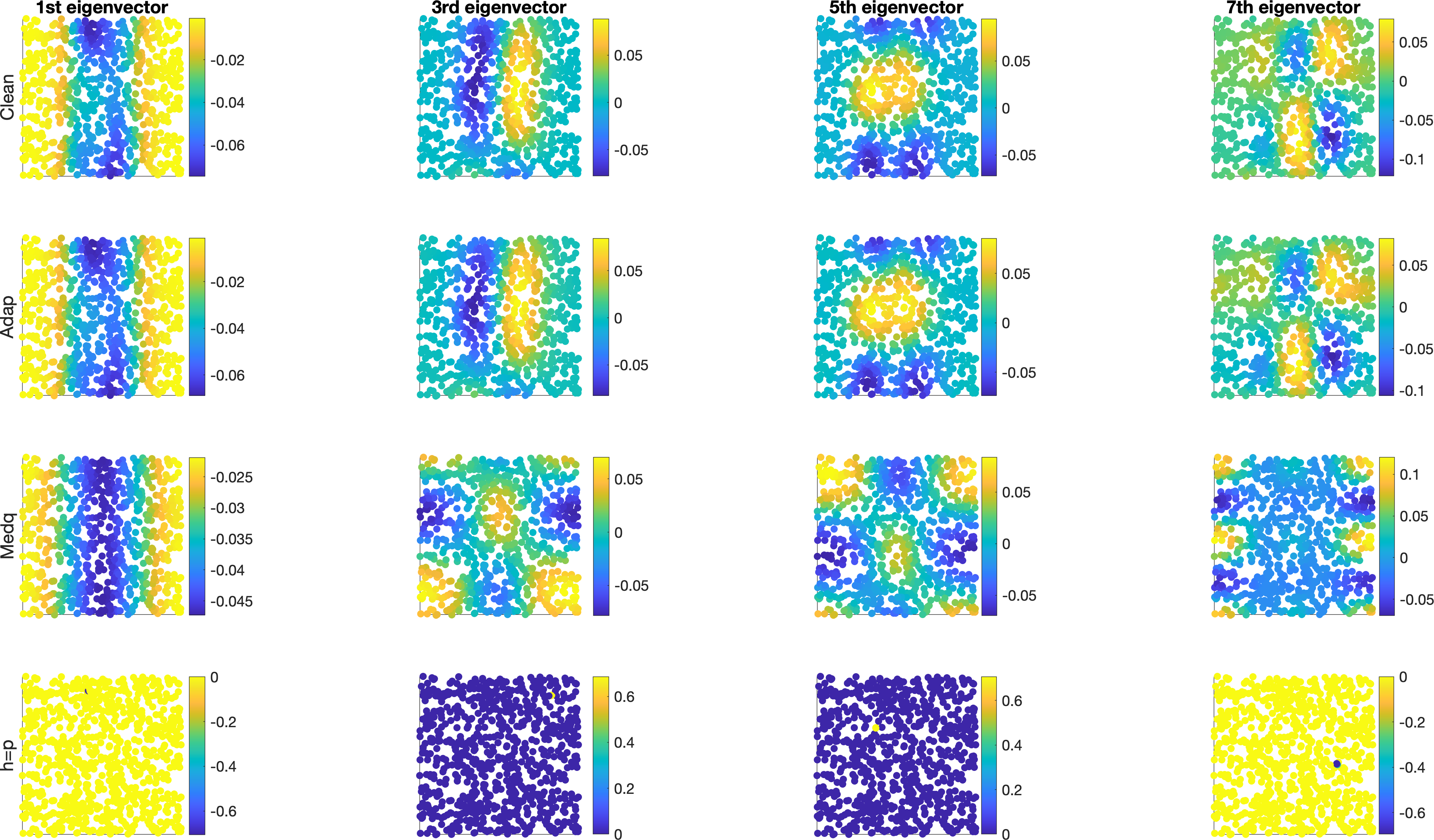

Next, we consider the Klein bottle, which is a -dim compact and smooth manifold that cannot be embedded into a three dimensional Euclidean space. First, set

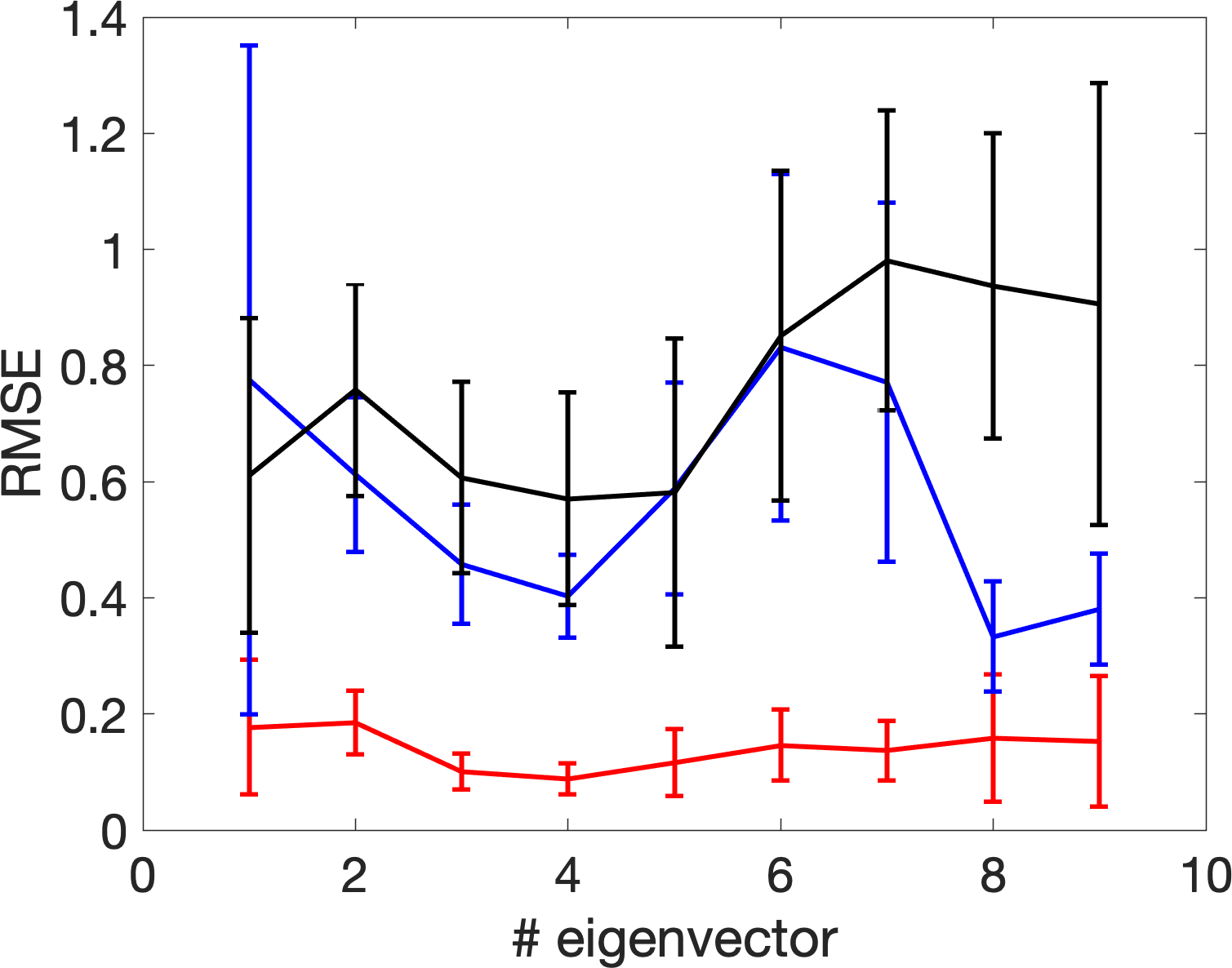

| (4.1) |

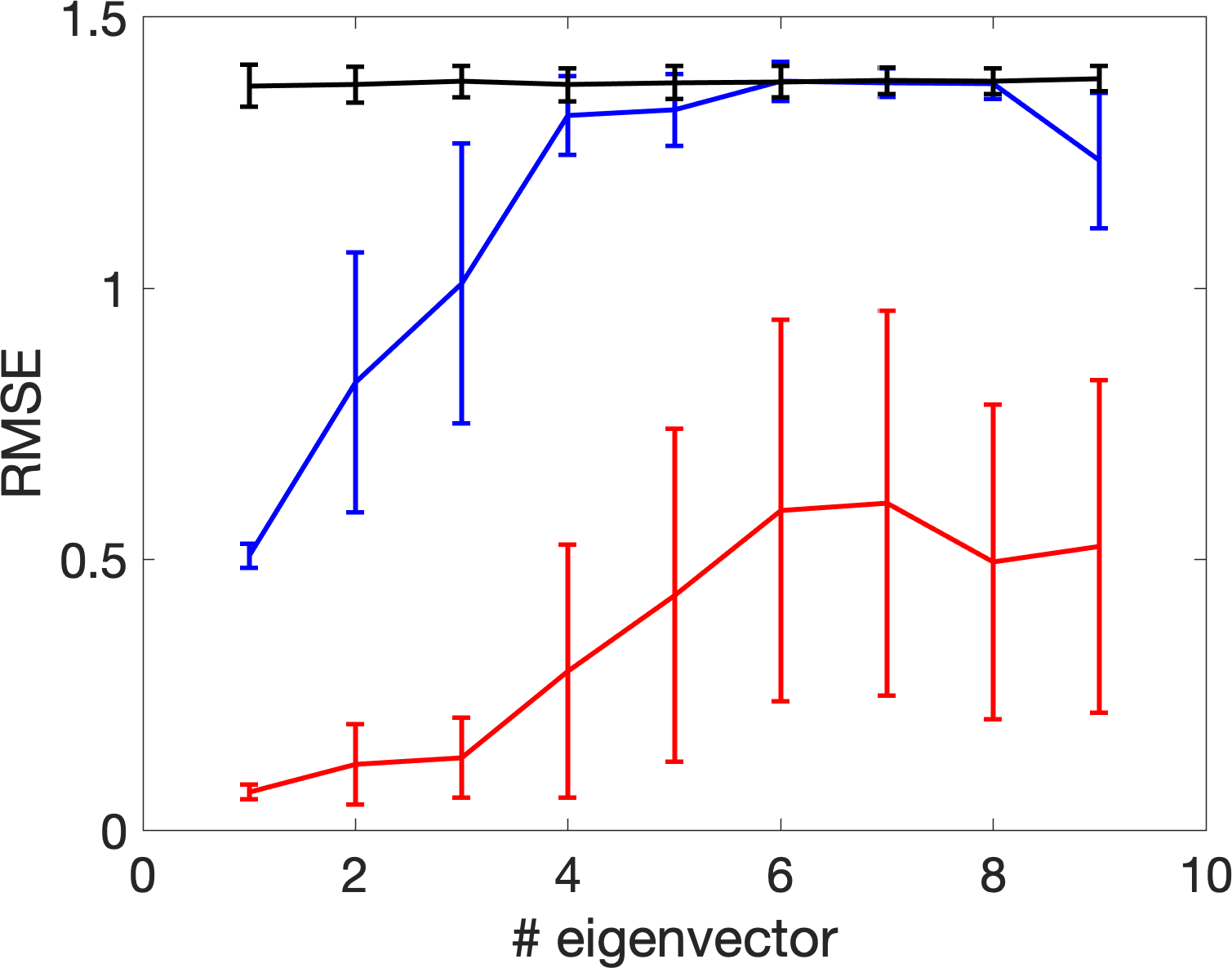

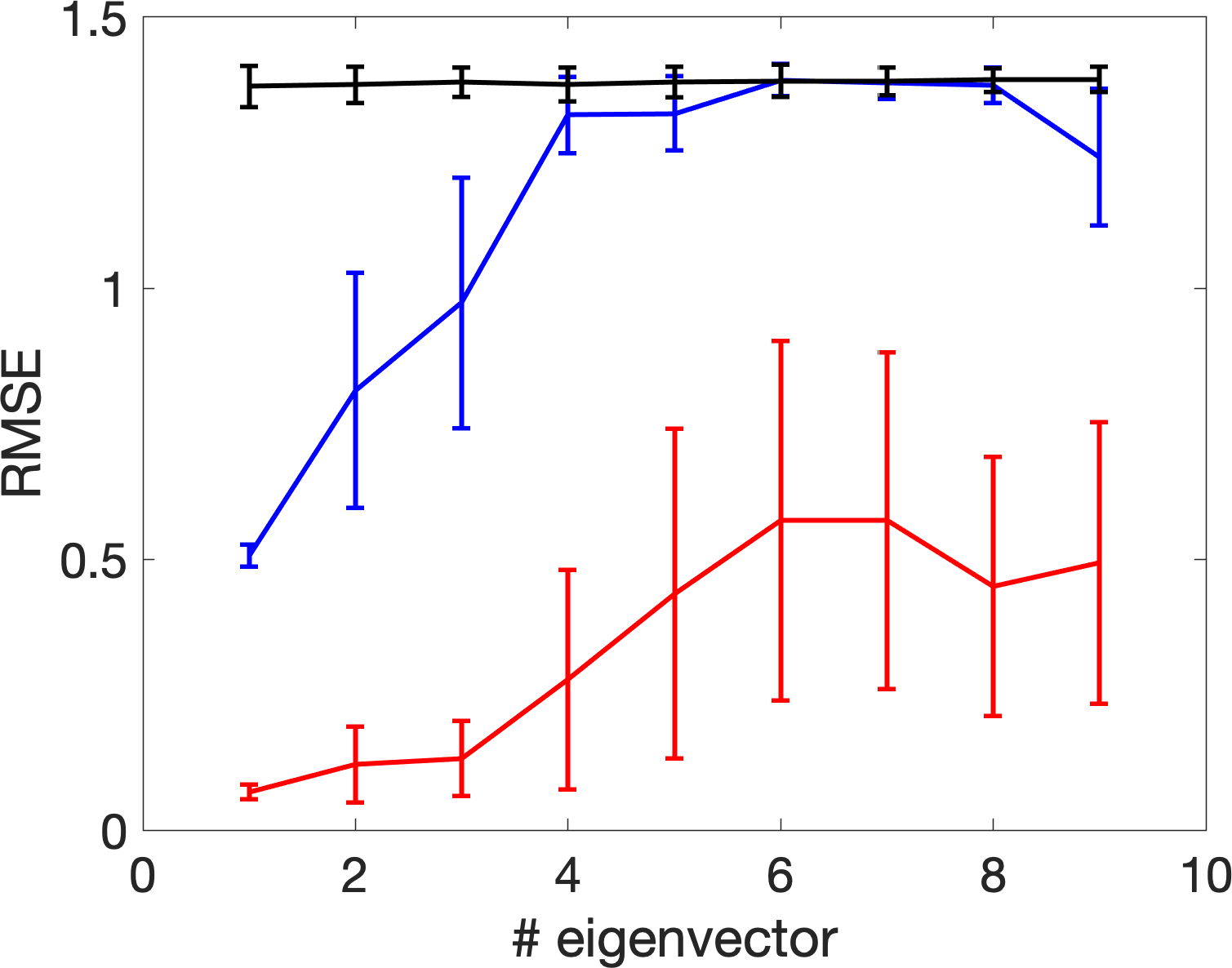

where , are randomly sampled uniformly from and and is the signal strength. In other words, we sample nonuniformly from the Klein bottle isometrically embedded in a -dim subspace of . Next, we add noise to by setting where , are noise independent of The eigenvectors under the mentioned four setups when the signal strength is equivalent to are shown in Figure 10, where and we plot the magnitude of each eigenvector over with colors; that is, the color at represents the -th entry of the associated eigenvector. We apply the same RMSE evaluation used in the example above; that is, we show the RMSE of the eigenvectors of different when compared with those from as the truth. We repeat the random sampling 300 times, and plot the error bars with mean ± standard deviation in Figure 11. It is clear that with the adaptive bandwidth selection algorithm, the top several eigenvectors of are close to those of .

The results of the above two examples support the potential of our proposed bandwidth selection algorithm, particularly when compared with two bandwidth selection methods commonly considered in the literature. Note that since only the eigenvalue information is used in Algorithm 1, only partial information in the kernel random matrix is utilized. We hypothesize that by taking the eigenvectors into account, we could achieve a better bandwidth selection algorithm. Since the study of eigenvectors is beyond the scope of this paper, we will explore this possibility in our future work.

5. Discussion and Conclusion

We provide a systematic study of the spectrum of GL under the nonnull setup with different SNR regions, particularly when , and explore the impact of chosen bandwidths. Specifically, we show that under a proper SNR and bandwidth, the spectrum of from the clean dataset can be extracted from that of from the noisy dataset. We also provide a new algorithm to select the suitable bandwidth so that the number of “outliers” of the spectral bulk associated with the noise is maximized.

Note that we need the assumption that the entries of are independent because our arguments depend on established results in the literature, for example, [8], which are proved using the assumption that the entries of are independent. Nevertheless, we believe that this assumption can be removed with extra technical efforts. A natural strategy is to utilize the Gaussian comparison method in the literature of random matrix theory as in [31]. This strategy contains two steps. In the first step, we establish the results for Gaussian random vectors where linear independence is equivalent to independence. In the second step, we prove the results hold for sub-Gaussian random vectors using the comparison arguments as in [31] under certain moment matching conditions. Since this is not the main focus of the current paper and the extension needs to generalize many existing results in the literature, for example, [8], we will pursue this direction in the future.

A comparison with principal component analysis (PCA) deserves a discussion. It is well known that when so that the signal is sampled from a linear subspace, we could easily recover this linear subspace using any linear method such as PCA. At first glance, it seems that the problem is resolved. However, if the purpose is studying the geometric and/or topological structure of the dataset, we may need further analysis. For example, if we want to answer a question like “is the -dim signal supported on two disjoint subsets (or the -dim linear manifold is disconnected)?”, then PCA cannot answer it and we need other tools (for example, GL via spectral clustering). The same fact holds when , where the nonlinear manifold is supported in a -dim subspace while its dimension is strictly smaller than . In this case, while we could possibly recover the -dim subspace by PCA, if we want to further study the nonlinear geometric and/or topological structure of the manifold, we need GL as the analysis tool. For readers who are interested in recovering the manifold structure via GL, see [35, 34, 25] and the literature therein. Based on our results, we know that even for the 1-dimensional linear manifold (for example, in (1.4)–(1.6) satisfies that is uniformly distributed on ), the linear and nonlinear methods are significantly different.

One significant difference that we shall emphasize is the phase transition phenomenon when a nonlinear method like GL is applied. While the discussion could be much more general, the essence is the same, so we continue the discussion with the 1-dimensional linear manifold below. For simplicity, we focus on the results in Section 2 for the kernel affinity matrix when the bandwidth is chosen so that From Theorem 2.3 to Theorem 2.7, we observe several phase transitions for eigenvalues depending on the signal strength . In the case when is bounded, we observe three or four outlying eigenvalues according to the magnitude of where three of these outlying eigenvalues are from the kernel effect; see Lemma A.9 for details. When transits from the subcritical region to the supercritical region , one extra outlier is generated due to the well-known BBP transition [3] phenomenon for the spiked covariance matrix model. Moreover, the rest of the non-outlying eigenvalues still obey a shifted MP law. As will be clear from the proof, in the bounded region, studying the affinity matrix is directly related to studying PCA of the dataset via the Gram matrix; see (A.22) for details. However, once the signal strength diverges, the spectrum of the affinity matrix behaves totally differently. In PCA, under the setting of (1.6), no matter how large is, we can only observe a single outlier and its strength increases as increases. Moreover, only the first eigenvalue is influenced by and the remaining eigenvalues satisfy the MP law, and this MP law is independent of Specifically, the second eigenvalue, i.e., the first non-outlying eigenvalue, will stick to the right-most edge of the MP law. We refer the readers to Lemma A.6 for more details and Figure 12 for an illustration. In contrast, regarding the nonlinear kernel method, the values and number of outlying eigenvalues vary according to the signal strength. That is, the magnitude of has a possible impact on all eigenvalues of through the kernel function. Heuristically, this is because PCA explores the point cloud in a linear fashion so that will only have an impact on the direction which explains the most variance, i.e., , where is the covariance matrix that is directly related to the Gram matrix . However, the kernel method deals with the data in a nonlinear way. As a consequence, all eigenvalues of contain the signal information. In other words, unlike the bulk (non-outlier) eigenvalues of , all eigenvalues of change when increases. Consider the following three cases. First, as we will see in the proof below, the eigenvalue corresponding to the BBP transition in the supercritical region will increase when increases, and this eigenvalue will eventually be close to when diverges following (2.21). Thus, we expect that the magnitude of this outlying eigenvalue follows an asymmetric bell curve as increases. Second, the eigenvalues corresponding to the kernel effect (see (A.20)) will decrease when increases since the kernel function is a decreasing function. Third, as we can see from Theorems 2.5 and 2.7, the non-outlying eigenvalues become outlying eigenvalues when increases. In Figure 13, we illustrate the above phenomena by investigating the behavior of some eigenvalues.
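The PCA side of this comparison, namely that a single spike produces at most one outlier while the second eigenvalue sticks to the right edge of the MP law, can be checked with a short simulation. The sketch below uses the standard textbook normalization of the spiked covariance model (population covariance equal to the identity plus a rank-one spike, sample covariance normalized by the number of samples, ratio gamma = p/n), which may differ from the normalization in (1.4)–(1.6); the function name, the sizes, and the two spike values are hypothetical.

```python
import numpy as np

def top_two_cov_eigs(n, p, lam, rng):
    """Top two eigenvalues of the sample covariance of n Gaussian samples in
    dimension p with population covariance I + lam * e1 e1^T (textbook setup)."""
    X = rng.standard_normal((n, p))
    X[:, 0] *= np.sqrt(1.0 + lam)
    S = X.T @ X / n
    eigs = np.linalg.eigvalsh(S)          # increasing order
    return float(eigs[-1]), float(eigs[-2])

rng = np.random.default_rng(5)
n, p = 2000, 500
gamma = p / n                             # ratio in this normalization
edge = (1.0 + np.sqrt(gamma)) ** 2        # right edge of the MP law
lam_weak, lam_strong = 0.2, 5.0           # below / above the threshold sqrt(gamma)
weak = top_two_cov_eigs(n, p, lam_weak, rng)
strong = top_two_cov_eigs(n, p, lam_strong, rng)
# Classical limit of the outlier above the BBP threshold.
predicted = (1.0 + lam_strong) * (1.0 + gamma / lam_strong)
```

In this linear setting only the top eigenvalue reacts to the spike; the second one stays near `edge` for both spike values, which is the behavior contrasted with the kernel method in the text.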

While our results pave the way towards a foundation for statistical inference of various kernel-based unsupervised learning algorithms for various data analysis purposes, like visualization, dimension reduction, spectral clustering, etc., there are various problems we need to further study. First, as has been mentioned in Remark 2.9, there are some open problems; particularly, when for all . Second, the behavior of eigenvectors of from noisy datasets and its relationship with the eigenfunctions of the Laplace-Beltrami operator under the manifold model need to be explored so that the above-mentioned data analysis purposes can be justified under the manifold setup. Third, in practice, noise may have a fat tail, the kernel may not be Gaussian [26, 29] or the kernel might not be isotropic [64], and the kernel function might be group-valued [58]. Fourth, the bandwidth selection algorithm is established under very nice assumptions, and its performance for real-world datasets needs further exploration. We will explore these problems in our future work.

Acknowledgment

The authors would like to thank the associate editor and two anonymous reviewers for many insightful comments and suggestions, which have resulted in a significant improvement of the paper.

Appendix A Preliminary results

In this section, we collect and prove some useful preliminary results, which will be used later in the technical proof.

A.1. From manifold to spiked model

In this subsection, we detail the claim in Section 1.2 and explain why the manifold model and (1.4) overlap. Suppose are i.i.d. sampled from a -dim random vector , and suppose the range of is supported on a -dimensional, connected, smooth and compact manifold , where , isometrically embedded in via . Assume there exists , where is independent of and , so that the embedded is supported in a -dim affine subspace of . Here we assume that is fixed. Since we consider the kernel distance matrix as in (1.1), without loss of generality, we can assume that ; that is, the embedded manifold is centered at . Also, assume the density function on the manifold associated with the sampling scheme is smooth with a positive lower bound. See [14] for a more detailed discussion of the manifold model and the relevant notion of density function. Suppose the noisy data is

| (A.1) |

where represents the signal strength, and is the independent noise that satisfies (1.5). Denote . Thus, there exists a orthogonal matrix such that

Since is compact, , , is bounded and hence sub-Gaussian with the variance controlled by , which is independent of since the manifold is assumed to be fixed. On the other hand, since is connected, , , is a continuous random variable. We can further choose another rotation so that the first coordinates of are whitened; that is, has the covariance structure

where by the assumption of the density function, we have for . Note that is controlled by , and by the smoothness of the manifold, we could assume without loss of generality that for for all . Then, multiply the noisy dataset in (A.1) by from the left and get

| (A.2) |

where , and . It is easy to see that since are isotropic (c.f. (1.5)), are sub-Gaussian random vectors satisfying (1.5). Thus, since and are still independent, the covariance of becomes

where for . Note that in this case, are of the same order. By the above definitions and the invariance of the norm, we have

which means that the affinity matrices (1.1) and transition matrices (1.9) remain unchanged after applying the orthogonal matrix. Thus, (A.2) is reduced to (1.4) and it suffices to focus on model (1.4).
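The invariance used in this reduction is easy to verify numerically: applying an orthogonal matrix to every data point leaves all pairwise distances, and hence any distance-based affinity matrix, unchanged. The sketch below assumes a Gaussian kernel with bandwidth equal to the dimension; these choices and all names are illustrative only.

```python
import numpy as np

def sq_dists(X):
    """Pairwise squared Euclidean distances between the rows of X."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sum(diff**2, axis=-1)

rng = np.random.default_rng(6)
n, p = 50, 20
X = rng.standard_normal((n, p))
Q, _ = np.linalg.qr(rng.standard_normal((p, p)))   # a random orthogonal matrix
X_rot = X @ Q.T                                     # rows are Q x_i
h = float(p)                                        # illustrative bandwidth
W = np.exp(-sq_dists(X) / h)
W_rot = np.exp(-sq_dists(X_rot) / h)
max_diff = float(np.max(np.abs(W - W_rot)))         # zero up to rounding error
```

Since the transition matrix is a function of the affinity matrix only, it is unchanged as well.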

Under the above setting that a low dimensional manifold is embedded into an affine subspace with a fixed dimension , the nonlinear manifold model is thus closely related to the spiked covariance matrix model. Recall that according to Nash’s isometric embedding theorem [48], there exists an embedding so that is smaller than , but there may exist embeddings so that the is higher than . More complicated models might need to even diverge as . In these settings, the nonlinear manifold model will be reduced to other random matrix models, i.e., the divergent spiked model [13] or divergent rank signal plus noise model [23, 24, 20, 21]. We believe that the spectrum of the GL under these settings can also be investigated once the spectrum of these random matrix models can be well studied. Since this is not the focus of the current paper, we will pursue this direction in the future.

A.2. Some linear algebra facts

We record some linear algebraic results. The first one is for the Hadamard product from [27], Lemma A.5.

Lemma A.1.

Suppose is a real symmetric matrix with nonnegative entries and is a real symmetric matrix. Then we have that

where stands for the largest singular value of

The following lemma is commonly referred to as the Gershgorin circle theorem, and its proof can be found in [37, Section 6.1].

Lemma A.2.

Let be a real matrix. For let be the sum of the absolute values of the non-diagonal entries in the -th row. Let be a closed disc with center and radius referred to as the Gershgorin disc. Every eigenvalue of lies within at least one of the Gershgorin discs , where .
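As a quick numerical illustration of Lemma A.2, the following sketch builds the Gershgorin discs of a small random matrix and checks that every eigenvalue lies in at least one of them. The function names and the test matrix are hypothetical.

```python
import numpy as np

def gershgorin_discs(A):
    """Centers (diagonal entries) and radii (off-diagonal absolute row sums)."""
    centers = np.diag(A).astype(float)
    radii = np.sum(np.abs(A), axis=1) - np.abs(np.diag(A))
    return centers, radii

def in_some_disc(z, centers, radii, tol=1e-10):
    """True if the (possibly complex) number z lies in at least one disc."""
    return bool(np.any(np.abs(z - centers) <= radii + tol))

rng = np.random.default_rng(7)
A = rng.standard_normal((6, 6))
centers, radii = gershgorin_discs(A)
eigs = np.linalg.eigvals(A)          # may be complex for a non-symmetric matrix
all_covered = all(in_some_disc(z, centers, radii) for z in eigs)
```

In the proofs, the theorem is applied to symmetric matrices with small off-diagonal row sums, in which case the discs give a direct bound on the operator norm.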

We also collect some important matrix inequalities. For details, we refer readers to [18, Lemma SI.1.9].

Lemma A.3.

For two symmetric matrices and we have that

Moreover, let and be the Stieltjes transforms of the ESDs of and respectively, then we have

A.3. Some concentration inequalities for sub-Gaussian random vectors

We record some auxiliary lemmas for our technical proof. We start with the concentration inequalities for the sub-Gaussian random vector that satisfies

The first lemma establishes the concentration inequalities when is bounded.

Lemma A.4.

Suppose (1.4)-(1.6) hold. Assume is fixed, for and write

| (A.3) |

for all , where for all . Then, for and we have

| (A.4) |

as well as

| (A.5) |

For the diagonal terms, for we have for some universal constants

| (A.6) |

as well as

| (A.7) |

Especially, the above results imply that

| (A.8) |

as well as

| (A.9) |

Note that since and are of the same order, the above results hold when is replaced by the vector , which is a sub-Gaussian random vector.

Proof.

Then we provide the concentration inequalities when is in the slowly divergent region. Indeed, in this region, the results of the diagonal parts of Lemma A.4 still apply.

Lemma A.5.

Proof.

We only discuss the second term and the first item can be dealt with in a similar way. We define as the floor of . We again adopt the notation (A.3) in the proof. Note that using (A.10) we have

| (A.12) |

We study the upper bound of the sandwich inequality, and the lower bound follows by the same argument. On one hand, according to (A.4), we have that

Moreover, since is a sub-Gaussian random variable, we can apply (A.4). This yields that

where we used the fact that for all Similarly, we have

Using (A.3), we readily obtain that

∎

A.4. Some results for Gram matrices

Denote the Gram matrix of the point clouds in the form of (1.4) as

| (A.13) |

The eigenvalues of have been thoroughly studied in the literature; see [8, 7, 4, 51], among others. We summarize those results relevant to this paper in the following lemma.

Lemma A.6.

Proof.

We mention that the results under our setup were originally proved in [8] (see Section 1.2 and Theorems 2.3 and 2.7 therein), and are stated here in the current form. ∎

The following lemma provides a control for the Hadamard product related to the Gram matrix. In our setup with the point cloud , as discussed around (1.6), itself is a sub-Gaussian random vector with a spiked as in (1.6). We thus can extract the probability and bounds by tracking the proof in [27, Step (iv) on Page 21 of the proof of Theorem 2.1].

Lemma A.7.

We also need the following lemma for the Gram matrix of noisy signals.

Lemma A.8.

Proof.

When ,

| (A.18) |

By the assumption that the standard deviations of the entries of and are of order and respectively, we have

and by (A.5), we have

when and

when . Therefore, using the Gershgorin circle theorem, we conclude the claimed bound. ∎

A.5. Some results for affinity matrices

Lemma A.9.

Suppose (1.1) and (1.4)-(1.8) hold true, , for in (1.8) (i.e., is bounded), and in (1.1). Let with , . Denote

| (A.19) |

where is a general kernel function satisfying the conditions in Remark 2.4, is defined in (2.6),

| (A.20) | |||

| (A.21) |

and is the Hadamard product. Then for some small constant , when is sufficiently large, we have that with probability at least

| (A.22) |

Proof.

We also need the following lemma, which is of independent interest.

Lemma A.10.

Proof.

Assume and denote . Throughout the proof, we adopt the notation (A.3). For an arbitrarily small constant and a given let be some constant depending on , and denote the event as

Note that is the signal part. Denote Due to the independence, we find that

| (A.24) |

Using Stirling’s formula, when is sufficiently large, we find that

| (A.25) | ||||

We next provide an estimate for Denote the probability density function (PDF) of as and the PDF of is Recall the assumption that are continuous random variables with . By using the fact , we find that

Consequently, we have that for any small

| (A.26) |

By (A.26), we immediately have that

| (A.27) |

Since as , we have

| (A.28) |

By plugging (A.25) and (A.28) into (A.24), we obtain

| (A.29) |

where the second asymptotic relation follows by plugging in (A.27). In light of (A.5), to make a high-probability event, we may take , which leads to

We can choose such that for any large constant when is large enough, we have that

| (A.30) |

Note that . On the event , by the Gershgorin circle theorem and the definition of , we find that

| (A.31) |

where in the inequality we consider the worst-case scenario in which all the elements in are on the same row or the same column. This proves the claim with high probability.

A.6. Orthogonal polynomials and kernel expansion

We first recall the celebrated Mehler’s formula (for instance, see equation (5) in [49] or [33])

| (A.32) |

where is the scaled Hermite polynomial defined as

and is the standard Hermite polynomial defined as

We mention that is referred to as the probabilists’ version of the Hermite polynomial. As we will see later in our proof, the above Mehler’s formula provides a convenient way to handle the interaction term when we write the affinity matrix as a sum of rank-one matrices.
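As a numerical sanity check of Mehler's formula, the following sketch compares a truncated series with the closed form. It assumes the standard probabilists' normalization, namely that the sum over n of He_n(x) He_n(y) rho^n / n! equals (1 - rho^2)^{-1/2} exp(-(rho^2 (x^2 + y^2) - 2 rho x y) / (2 (1 - rho^2))) for |rho| < 1. The scaled polynomials used in (A.32) may be normalized differently, and the function names below are hypothetical.

```python
import math


def hermite_prob(n_max, x):
    """Return [He_0(x), ..., He_{n_max}(x)] via He_{k+1}(x) = x He_k(x) - k He_{k-1}(x)."""
    vals = [1.0, x]
    for k in range(1, n_max):
        vals.append(x * vals[k] - k * vals[k - 1])
    return vals[: n_max + 1]


def mehler_series(x, y, rho, n_terms=60):
    """Truncated series: sum over n <= n_terms of He_n(x) He_n(y) rho^n / n!."""
    hx = hermite_prob(n_terms, x)
    hy = hermite_prob(n_terms, y)
    return sum(hx[n] * hy[n] * rho**n / math.factorial(n) for n in range(n_terms + 1))


def mehler_closed(x, y, rho):
    """Closed form of the series above, valid for |rho| < 1 (assumed normalization)."""
    q = (rho**2 * (x**2 + y**2) - 2 * rho * x * y) / (2 * (1 - rho**2))
    return math.exp(-q) / math.sqrt(1 - rho**2)


series_val = mehler_series(0.3, -0.7, 0.4)
closed_val = mehler_closed(0.3, -0.7, 0.4)
print(series_val, closed_val)
```

Each term of the series is a product of a function of x and a function of y, which is the mechanism behind the rank-one decomposition mentioned above.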

A.7. More remarks

A.7.1. Zeroing-out technique

Here we discuss the trick of zeroing out the diagonal terms proposed in [29]. First, we summarize the idea and restate the results in [29]. Then we adapt it to our setting (1.4)–(1.6). To simplify the discussion, we focus on the setting with the signal strength , where . The zeroed-out affinity matrix is defined as

where is the vector with in all entries. Denote the associated degree matrix as Consequently, the modified transition matrix is

| (A.33) |
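The construction above can be sketched in a few lines. This is only an illustration under stated assumptions: we use a Gaussian kernel of the form exp(-dist^2 / bandwidth), which may differ from the normalization in the main text, and the function names, sample sizes, and bandwidth value are hypothetical.

```python
import numpy as np


def gaussian_affinity(points, bandwidth):
    """Affinity matrix with entries exp(-||x_i - x_j||^2 / bandwidth) (assumed kernel)."""
    sq_norms = (points**2).sum(axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2 * points @ points.T
    np.maximum(sq_dists, 0.0, out=sq_dists)  # guard against tiny negative round-off
    return np.exp(-sq_dists / bandwidth)


def zeroed_out_transition(points, bandwidth):
    """Row-stochastic transition matrix built from the affinity with zero diagonal."""
    w = gaussian_affinity(points, bandwidth)
    np.fill_diagonal(w, 0.0)          # zeroing-out step
    degrees = w.sum(axis=1)           # diagonal of the associated degree matrix
    return w / degrees[:, None]       # D^{-1} W


rng = np.random.default_rng(0)
pts = rng.standard_normal((50, 20))   # hypothetical noisy point cloud
a_zero = zeroed_out_transition(pts, bandwidth=20.0)
print(a_zero.sum(axis=1)[:3])
```

Removing the diagonal before normalizing matters most when the off-diagonal affinities are small relative to the diagonal ones, which is the regime addressed by condition (A.35).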

Recall that the transition matrix for the signal part is defined as . It can be concluded from [29] that with high probability the spectrum of is close to that of in that

| (A.34) |

provided the following two conditions are satisfied:

(1). The signal strength satisfies

(2). The off-diagonal entries of the signal kernel affinity matrix should satisfy that

| (A.35) |

for some universal constant Even though [29] did not provide a detailed discussion on the bandwidth selection, the assumption (A.35) imposes a natural condition.

We now explain how the zeroing-out trick is related to our approach in the large signal-to-noise ratio region. Recall that the transition matrix for the observation is defined as . As shown in part 2) of Theorem 3.1, when the signal strength is and the bandwidth is either or selected adaptively according to the method proposed in Section 3.2, the spectrum of will be close to that of with high probability, i.e.,

We emphasize that when and or selected adaptively according to the method proposed in Section 3.2, it can be concluded from the proof of Corollary 3.2 that (A.35) holds with high probability. Consequently, together with (A.34), we can conclude that when the signal-to-noise ratio is large in the sense and the bandwidth is selected properly as in Section 3.2, the spectrum of is asymptotically the same as the zeroing-out matrix We mention that although in this setting our approach and results are asymptotically equivalent to the zeroing-out trick, our method provides an adaptive and theoretically justified method to select the bandwidth instead of choosing a fixed bandwidth according to (A.35). In fact, in the simulation of [29], the authors used a similar approach empirically.

When the signal-to-noise ratio is “smaller” so that the zeroing-out trick has a significant impact on the spectrum. In this region, the proposed bandwidth selection algorithm will choose a bandwidth satisfying , and the spectral behavior of noisy GL is recorded in Corollary 2.10, part 1) of Theorem 3.1 and Corollary 3.2. We see that is no longer close to the spectrum of under our setup. However, the zeroing-out trick works provided (A.35) holds. Therefore, we can see that when and the bandwidth is properly selected, the result can be improved using the zeroing-out trick in the sense of (A.34).

Finally, when the SNR is “very small” so that both and are dominated by the noise. In this case, we are not able to extract useful information about the signal, even with the zeroing-out trick. For an illustration, in Figure 14, we show the performance of and in different SNR regions by comparing some of their eigenfunctions (eigenvectors) with those of the clean signal matrix We can conclude that the zeroing-out trick can be beneficial when provided the bandwidth is carefully selected.

A.7.2. More remarks on

We continue the discussion in Remark 2.9 and provide some simulations with . We assume that , and discuss two cases with different and

First, we discuss the setting when the bulk eigenvalues are governed by the MP law, i.e., in the region Recall that the shifted MP law defined in (2.7) depends on the signal level via in (2.5), and when as which is independent of asymptotically. We can thus always set in (2.5) and use for the MP law as in (2.8) and (2.12). Therefore, when the bulk distribution is the same as that in (2.8) and (2.12), which is characterized by the shifted MP law,

where is the shift operator defined in (2.1), is the MP law defined in (2.3) with replaced by , and is defined by inserting (or equivalently ) in (2.6); that is,

In general, although the bulk distribution is the same for different finite the number of outliers can vary according to . On the technical level, the proofs in Appendices B.1 and B.2 go through after some minor modifications, especially in the Taylor expansion. For example, in (B.1), the key parameter should be defined as when . For an illustration, in Figure 15, we show how the bulk eigenvalues are asymptotically identical for the setting and when the exponents are less than one for various settings of .
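For readers who wish to experiment with the bulk behavior, the following sketch checks the support of the classical (unshifted) MP law for a pure-noise sample covariance matrix. It does not reproduce the shifted MP law of (2.7), whose shift and scaling depend on the kernel. The dimensions `n`, `p`, the aspect ratio `c = p / n`, and the standard Gaussian noise are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 400, 200                      # hypothetical sample size and dimension
c = p / n                            # aspect ratio entering the MP law

noise = rng.standard_normal((p, n))  # i.i.d. standard Gaussian noise
cov = noise @ noise.T / n            # p x p sample covariance matrix
eigs = np.linalg.eigvalsh(cov)

# Edges of the MP support for unit variance and aspect ratio c <= 1.
lower = (1 - np.sqrt(c)) ** 2
upper = (1 + np.sqrt(c)) ** 2
print(eigs.min(), eigs.max(), lower, upper)
```

Adding a low-rank signal to `noise` and rerunning the sketch is one way to observe outlying eigenvalues separating from the bulk when the signal is strong enough.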

Second, we discuss the region when . On the one hand, as in Theorem 2.7, we can show that the spectrum of is close to those of matrices defined in (2.14) and (2.16) that depend on the clean signal part . As in the case when , the spectrum of may not follow the MP law and depends on both and and the chosen bandwidth. This dependence suggests that the spectrum of might be different from that when . In Figure 16, we show numerically how the bulk under the setup and is different from that when and .

Third, when the spectrum of will be close to some matrices that depend only on In this case, the spectrum of is close to that of when and the signal strength is See Figure 17 for an illustration, where we see that the bulks are fairly close to each other, while they may not necessarily follow the MP law.

Appendix B Proof of main results in Section 2

In this section, we prove the main results in Section 2.

B.1. Proof of Theorem 2.3

Recall defined in (2.5). Since the proof holds for a general kernel function described in Remark 2.4, we will carry out our analysis with such a general kernel function .

Proof.

We start by simplifying . Let be the Kronecker delta. By the Taylor expansion, when , we have that

| (B.1) |

where is defined in (A.15), is defined as

| (B.2) |

and is some value between and is defined in (2.5). Consequently, we find that can be rewritten as

| (B.3) |

where we used the shorthand notations

With this expansion, we immediately obtain

| (B.4) |

where the error quantified by is in the operator norm, the term is controlled by Lemma A.5, and the term is controlled by the facts that , and the Gershgorin circle theorem.

Next, we control and . Since , we can approximate by via

| (B.5) |

where with , , is defined in (A.19), and the last bound comes from Lemma A.5.

For , we write and focus on the term Since , we have (also see the proof of [27, Theorem 2.2])

| (B.6) |

Moreover, construct from in the same way as (A.15) and write

| (B.7) |

where We find that

Note that by (A.4) and (A.5), and by the fact that and , so we have By Lemma A.6 and (A.4), we find that . On the other hand, by the fact that and (A.5), we have

| (B.8) |

By (B.5), we conclude that

With the above preparation, is reduced from (B.1) to

| (B.9) |

We further simplify . Let be constructed in the same way as in (B.2) using the point cloud By Lemma A.8, can be replaced by . Moreover, by a discussion similar to (B.5), we can control by

Combining all the above results, and applying Lemma A.7, we have simplified as

| (B.10) |

where

| (B.11) |

with probability at least for some small ,

With the above simplification, we discuss the outlying eigenvalues. Invoking (B.10), since is simply an isotropic shift, the outlying eigenvalues of can only come from . Notice that by the identity , we find that

| (B.12) |

which leads to a rearrangement of to

| (B.13) |

Note that is of rank at most three since the first two terms of (B.13) form a matrix of rank at most and is a rank-one matrix with the spectral norm of order . With (B.10), we can therefore conclude our proof using Lemma A.6.

∎

B.2. Proof of Theorem 2.5

Since the proof in this subsection holds for a general kernel function described in Remark 2.4, we will carry out our analysis with such a general kernel function . Note that in this case, defined in (2.5) is still bounded from above. So for a fixed , the first coefficients in the Taylor expansion can be well controlled under the smoothness assumption, i.e., , for for . However, Lemma A.9 is invalid since . On the other hand, in this region, although the concentration inequality (cf. Lemma A.5) still works, its rate becomes worse as becomes larger. In [26], the author only needs to carry out the Taylor expansion up to the third order since is fixed. In our setup, to handle the divergent , we need a high-order expansion that is adaptive to Thus, due to the nature of the convergence rate in Lemma A.5, we will employ different proof strategies for the cases and When satisfies , the proof of Theorem 2.3 still holds. When satisfies , we need a higher-order Taylor expansion to control the convergence. This is due to the second term of (A.5), where the concentration inequalities regarding , where , have different upper bounds with different .

Proof of Case (1), .

By (B.1) and Lemma A.5, we find that when ,

where we used the fact that is bounded. By a discussion similar to (B.1) and the Gershgorin circle theorem, we find that (B.1) also holds true. The rest of the proof follows the lines of the proof of Theorem 2.3, using Lemmas A.5 and A.6. We omit the details here.

∎

Proof of Case (2), .

For simplicity, we introduce

| (B.14) |

By Lemma A.5 and notations defined in (B.2), we have

| (B.15) |

By the Taylor expansion, when , we have

| (B.16) |

where is defined in (2.11) and is some value between and Consider defined as

so that . We start by claiming that

| (B.17) |

where is defined in (2.13). To see (B.17), we use (A.5) and the fact that is finite to get

Together with (B.16), by the Gershgorin circle theorem, we have

where we set

Similar definitions apply to and

Next, we study Recall the definition of in (A.20). To simplify the notation, we denote and , and obtain

| (B.18) |

Clearly, , and by Lemma A.5, we have

| (B.19) |

For any , in view of the expansion

below we examine term by term.

First, when , we only have the term . We focus on the discussion when , and the same argument holds when . We need the following identity. For any matrix and vectors ,

| (B.20) |
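Identities of this type turn a Hadamard product with a rank-one matrix into ordinary matrix products. As an illustration only, the sketch below checks one standard identity of this kind, (u v^T) ∘ A = diag(u) A diag(v); we assume, without being able to confirm it from the text here, that (B.20) has this or a closely related form. It also checks the well-known consequence that the Hadamard product of two matrices has rank at most the product of their ranks. All variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 6
a = rng.standard_normal((n, n))
u = rng.standard_normal(n)
v = rng.standard_normal(n)

# Hadamard product with a rank-one matrix versus diagonal scaling.
lhs = np.outer(u, v) * a
rhs = np.diag(u) @ a @ np.diag(v)
identity_holds = np.allclose(lhs, rhs)

# Rank of a Hadamard product is at most the product of the ranks.
low = np.outer(u, v) + np.outer(v, u)       # rank at most two
rank_low = np.linalg.matrix_rank(low)
rank_had = np.linalg.matrix_rank(low * low)
print(identity_holds, rank_low, rank_had)
```

The rank bound is what keeps the low-rank structure under control when Hadamard powers of low-rank matrices appear in the expansion.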

Note that the expansion in (B.7) still holds, and to further simplify the notation, we denote

| (B.21) |

where and . We thus have that

| (B.22) |

We control the first term, and the other terms can be controlled in the same way. Since and , we have that

Together with (B.21) and the fact that , we obtain

where and . Now we discuss the above three terms one by one. First, using (B.20), we have

where in the second equality we used the fact that Second, we have

On one hand, we can use (B.20) to write

The first term of the above equation is the leading order term which can be bounded as follows

where we used the fact that The other terms can be bounded similarly so that

Similarly, we can control This shows that

Analogously, we can analyze the other two terms in (B.2) and obtain that

| (B.23) |

Moreover, since , we have by Lemma A.1 that

On the other hand, since , we have . Consequently, we have that

We mention that is of rank at most two, so that is at most Similarly, using the above discussion for general we can show that

Second, when , we only have the term . When using (B.18) and the fact that is diagonal, we have that

By Lemma A.5 (see (B.19)), we have that

Similarly, for general we have that

Third, when and , we discuss a typical case when and , i.e., We prepare some bounds. Recall that

where we have

By the definition of , we immediately have

| (B.24) |

where the bound for holds by the tail bound of the maximum of a finite set of sub-Gaussian random variables. Similarly, we have the bound

| (B.25) |

by (A.5). By an expansion, we have

| (B.26) |

which leads to

| (B.27) |

by Lemma A.1 with the fact , by Lemma A.5, (B.24) and . So we have the bound

where the first bound comes from Lemma A.1 and the second bound comes from (B.25). Together with , by the same argument we have that

Using the simple estimate that , we find that

The other and can be handled in the same way. Precisely, when , can be approximated by with a norm difference of order , where . Therefore, up to an error of , all the terms in , except that will be absorbed into the first-order expansion, can be well approximated by a matrix of rank at most , where .

Consequently, we can find a matrix of rank at most , where , to approximate so that