Impact-resistant, autonomous robots inspired by tensegrity architecture

Future robots will navigate perilous, remote environments with resilience and autonomy. Researchers have proposed building robots with compliant bodies to enhance robustness, but this approach often sacrifices the autonomous capabilities expected of rigid robots. Inspired by tensegrity architecture, we introduce a tensegrity robot—a hybrid robot made from rigid struts and elastic tendons—that demonstrates the advantages of compliance and the autonomy necessary for task performance. This robot boasts impact resistance and autonomy in a field environment and additional advances in the state of the art, including surviving harsh impacts from drops (at least 5.7 m), accurately reconstructing its shape and orientation using on-board sensors, achieving high locomotion speeds (18 bar lengths per minute), and climbing the steepest incline of any tensegrity robot (28 degrees). We characterize the robot’s locomotion on unstructured terrain, showcase its autonomous capabilities in navigation tasks, and demonstrate its robustness by rolling it off a cliff.

Introduction

In the mid-20th century, art student Kenneth Snelson and his professor, architect Buckminster Fuller, began creating peculiar sculptures by suspending rigid elements in a tension network made from strings (?). These “discontinuous compression” sculptures had useful properties, including flexibility, light weight, minimal material usage, high strength-to-weight ratios, and the ability to absorb large external loads (?). Fuller would later name this concept tensegrity, a portmanteau of “tensile integrity” (?). Since its invention, the principle of tensegrity has been applied to numerous fields, including art (?), architecture (?), civil engineering (?), biomechanical modeling (?, ?), and robotics (?, ?).

Made from rigid struts and elastic tendons, tensegrity robots have the potential to navigate perilous and unpredictable environments. Their inherently compliant bodies make them adaptable, deployable, and resilient (?). These advantages, along with their light weight, make tensegrity robots a promising candidate for the next generation of planetary rovers (?) and for navigating other remote environments. In recent years, researchers have introduced locomotive tensegrity robots that are capable of crawling (?, ?, ?), rolling (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?), vibrating (?, ?, ?), jumping (?, ?), climbing (?, ?), swimming (?, ?), and flying (?, ?). Tensegrity robots achieve rolling, the most common locomotion mode, by deforming their bodies to shift their center of mass outside of the stable polygon formed by the nodes in contact with the ground, using either electric motors (?) or other actuators (?, ?) to change the lengths of their tendons or bars.

Despite their exciting advantages, tensegrity robots are not yet able to autonomously navigate unstructured environments due to unsolved problems in design, sensing, modeling, and control. To solve this grand challenge, tensegrity robots need reliable sensors for state estimation and control, increased levels of autonomy, versatility across different terrains, and demonstrated resilience to harsh impacts (?, ?). There has been some work on state estimation for tensegrity robots (?, ?), including shape reconstruction with on-board sensors (?, ?). To prove their adaptability and versatility, some researchers demonstrate tensegrity robots on unstructured terrain (?, ?, ?, ?). Tensegrity robots have been shown to climb inclines as steep as 24 ° (?). There are also a few examples of tensegrity robots exhibiting robustness to impacts by surviving short drops (?, ?, ?, ?). Despite these advancements, an autonomous tensegrity robot with impact resistance has yet to be demonstrated.

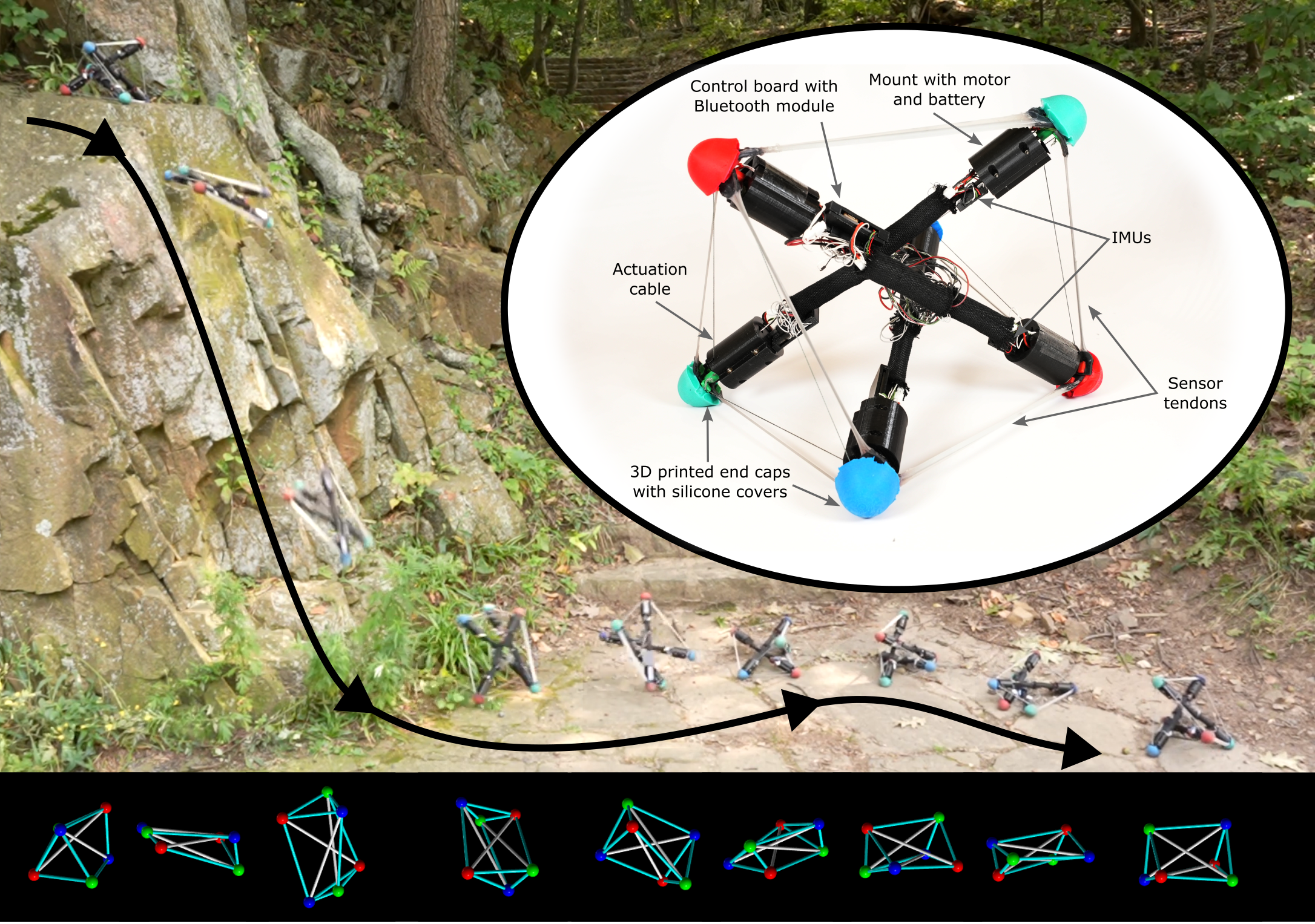

In this work, we introduce Tribar, a 3-bar tensegrity robot, and demonstrate its capabilities of state estimation, locomotion, autonomous control, shape morphing, and impact resistance. The robot is capable of extreme deformations, and therefore we use highly stretchable strain sensors (?) for state estimation and closed-loop control. Beyond what has been demonstrated previously, this tensegrity robot can estimate its shape and global orientation in real time with on-board sensors, enabling uninterrupted autonomous locomotion in a field environment after a 2 m fall (Fig. 1). We characterize the robot’s locomotion across different unstructured terrains, with different gaits, and up inclines as steep as 28°, which is the steepest climbed by a tensegrity robot in the reported literature. Autonomous control is further demonstrated in trajectory following experiments that make use of the tensegrity’s multiple gaits for forward locomotion and turning. In a game of limbo, the robot exhibits autonomy and shape morphing, which allow it to navigate under an obstacle with decreasing height. Finally, we show the robot rolling off a bridge and surviving a 5.7 m drop, the highest in the reported literature. This work represents an advance in the state-of-the-art across the quantitative metrics pertinent to tensegrity robots [supplementary materials (SM) section S1] and a step toward realizing tensegrity robots’ promise to autonomously explore unstructured and perilous remote environments.

Design of a 3-bar Tensegrity Robot

Tribar is made from three rigid struts connected with tensile elements in a stable network (Fig. 1). Each 360 mm strut contains two motors that can extend and contract cables, deforming the tensegrity structure. The six short tendons are actuated by the six motors, while the longer three tendons act as passive restoring springs. The robot is untethered and carries its own power in the form of six 1S LiPo batteries, two on each strut, wired 3S2P for a total of 11.1 V nominal. The electronics are distributed among the three struts, including a microcontroller with Bluetooth module, motor drivers, inertial measurement units (IMUs), and a capacitive sensing unit. Each strut contains custom 3D printed parts, including end caps (Fig. S1) and mounts for electronics. Full details are found in SM section M1, and a circuit schematic is shown in Fig. S2.

Each connection between the struts has one highly stretchable capacitive strain sensor that doubles as a tensegrity tendon (?). These sensor tendons (Fig. S3) are made from two thin layers of a room-temperature liquid metal paste (?, ?) encapsulated in a silicone elastomer. The capacitance of the sensors varies linearly as they are stretched, and the sensors can accommodate the high strains () that are necessary for tensegrity robot locomotion. Fabrication details and characterization are given in SM section M1.3 and in our previous work (?). In this work, the six sensor tendons that are parallel to the motor-driven cables are used for feedback control. The other three sensor tendons are stiffer, and they are used as passive restoring springs as well as for shape estimation.

With feedback from the sensor tendons, the robot can reliably achieve target shapes by extending and contracting the six actuated cables until all the sensor lengths match their target lengths within some small tolerance (SM section M3). A gait comprises a repeating sequence of target shapes that achieve a desired behavior like rolling or turning. A hand-tuned PID controller commands the motors to achieve target lengths, and once all six lengths have been reached, the robot proceeds to the next target shape in the gait. During rolling gaits, the tensegrity robot deforms its body to shift its center of mass outside of its polygon of stability and topple onto an adjacent face. Some of the robot’s gaits were designed by hand while others were discovered in simulation (?). The complete repertoire of gaits can be found in SM sections M3, S4, and S6.

The tensegrity robot was designed for impact resistance and autonomous control, as shown in Fig. 1. With closed-loop control, the robot executes a rolling gait and rolls off a cliff, falling 2 m. After absorbing the impact with its inherent body compliance, the robot senses its shape as well as its orientation and continues its locomotion in the same direction. While other autonomous mobile robots would have to plan a path around obstacles like cliffs, our tensegrity robot can just roll off the edge, demonstrating its potential for efficiently navigating unstructured environments.

State Estimation

Robots that navigate unknown environments must be able to estimate their own state for locomotion and control. Due to tensegrity robots’ many degrees of freedom and coupled dynamics, state estimation remains a grand challenge (?). Previous work on tensegrity state estimation has relied on off-board sensors that realistically will not be available in unknown environments (?, ?). Using only on-board sensors, researchers have been able to estimate the shape (?) or shape plus downward face (?) of a tensegrity robot using stretchable sensors that strain . Our 3-bar tensegrity robot uses highly stretchable sensor tendons and IMUs to reconstruct its shape and orientation in a global frame. While previous work has been limited to stationary experiments (SM section S1), our state estimation runs in real time during locomotion (Fig. 2 and Fig. S5).

In this work, the robot’s state is defined as its shape and orientation. In order to sense translation, the robot requires an external camera (SM section S4). The output of state estimation is the 3D position vector of each of the robot’s six nodes centered at the origin and oriented relative to Earth’s gravitational and magnetic fields. The inputs to the algorithm are the nine lengths measured by the sensor tendons and two bar orientations given by the digital motion processors on the 9-axis IMUs. The estimation algorithm is a two-stage constrained optimization process. First, the shape is estimated by minimizing the squared error from the measured tendon lengths constrained by the known bar lengths, as in previous work (?). Second, the orientation is estimated by minimizing the error between the estimated and measured bar orientations. Full details of the state estimation algorithm are given in SM section M2.

Fig. 2 shows the results of the robot’s real-time state estimation while executing its counterclockwise turning gait on a dirt path. Fig. 2A shows a side-by-side comparison of video frames and state estimation results for the 2-minute trial. The plots in Fig. 2B shows the tendon lengths, orientation, and error as a function of time. Dashed lines with corresponding colors indicate ground truth as measured by our vision-based tensegrity pose tracking algorithm (?). The orientation is plotted as the ZYX Euler angles that define the robot’s orientation relative to the global frame, and the error is the root mean square error (RMSE) normalized by the robot’s 360 mm bar length. The RMSE over the trial in Fig. 2 is 8.4% of the bar length. Another state reconstruction experiment with the quasistatic rolling gait is shown in Fig. S5, and the RMSE from that trial is 10%.

In practice, the state estimation algorithm is sufficiently fast and accurate for real-time autonomous control. Before each step in the rolling gait of Fig. 1, the robot inferred its downward face and heading from state estimation and adapted its gait via symmetry reduction (see SM section S6) to ensure it would continue rolling in the same direction it was facing at the start of the trial.

Locomotion

The robot’s locomotion was characterized across unstructured terrain, up inclines and down declines, with different gaits for rolling and turning, and with shape morphing for varying the height of the robot. The robot’s quasistatic rolling gate is shown across different types of unstructured terrain, including grass, ice, pebbles, and sand in Fig. 3A-D. The corresponding plots show the robot’s center of mass (CoM), which we define as the centroid of its six end caps, as a function of time over four trials. The robot’s speed across terrains is compared in Fig. 3I. Since this gait relies on gravity for rolling, the robot has comparable speeds across the different terrains, with grass being the most cumbersome.

The quasistatic rolling gait is a two-step, gravity-based rolling gait. In the first step, the robot contracts two cables to transition onto the base triangle closer to the direction of rolling. In the second step, the robot contracts three cables to deform its body, contracting its base triangle and moving its center of mass, in order to roll onto the next face. Repeating this process achieves punctuated rolling. For more details, see SM section M3.

The quasistatic rolling gait can also be used to climb inclines as steep as 28°(Fig. 3), the steepest climbed by a tensegrity robot. Feedback control enables the robot to morph to increase its height, allowing the robot to ascend inclines effectively by taking larger steps with each cycle of the rolling gait. The robot’s speed across various incline angles is compared in Fig. 3J, and more incline experiments are shown in Fig. S6. For controlled descent of declines (movie S5), the robot morphs to decrease its height and lower its CoM, preventing it from falling down the decline uncontrolled. We characterize the robot’s shape morphing ability in Fig. S8, which shows the robot executing its quasistatic rolling gait with different heights. This morphing ability enables the robot to navigate under obstacles (Fig. 4D) by decreasing its height.

By skipping the transition step in the quasistatic rolling gait, the tensegrity robot exhibits dynamic rolling (Fig. 3F) and achieves its top speed of 11 cm/s or 18 bar lengths per minute (BL/min). The dynamic rolling gait is compared to the quasistatic rolling gait in Fig. 3K. Additional gaits for turning counterclockwise (Fig. 3G) and clockwise (Fig. 3H) were discovered in simulation (?), and they are compared in Fig. 3L. The asymmetry in the turning gaits is due to the robot’s chiral topology. The robot can also achieve a gradual turning gait by modulating its height to be larger on one side than the other. More turning gaits are discussed in SM section S3 and shown in Fig. S9. Overall, this tensegrity robot has diverse locomotion capabilities; the gaits and their variations discussed in this section are the robot’s motion primitives, the building blocks for navigation and control tasks.

Autonomous Control

Tensegrity robots in the reported literature demonstrate open-loop trajectories. Here, we introduce a model-based, autonomous controller that enables closed-loop trajectory following (Fig. 4A-C). Tensegrity robots are difficult to model accurately due to their complex, coupled dynamics and many degrees of freedom (?). In previous work, we presented a Real2Sim2Real framework for modeling tensegrity robots based on a differentiable physics engine (?). In this work, this simulator was used to model each of the tensegrity robot’s motion primitives (the distinct gaits and their variations discussed above) to build a database of state-action transitions. The robot’s position and orientation are tracked in real time with an overhead RGB-D camera using a tensegrity pose-tracking algorithm adapted from our previous work (?). Given the robot’s state, the controller performs a two-ply exhaustive search to determine the sequence of actions that minimizes the cost function of the trajectory following task (?, ?). After executing the first action in the sequence and subsequently measuring the robot’s state, the controller re-plans a new sequence of two actions. More details are available in SM section M4.

The results of the trajectory following experiments are shown for a straight line, a circular arc, and a right triangle trajectory in Fig. 4A-C. The images show the robot with the pose tracking, the trajectory, and the planned action sequence superimposed, with successively lighter colors corresponding to predictions further in the future. The corresponding plots show the robot’s CoM over three trials for each trajectory. Larger markers represent planning steps. The triangular trajectory is segmented into three straight line trajectories, and the robot proceeds to the next trajectory segment in the sequence when its CoM falls within a given radius of the end of the current segment. At that moment, the controller determines if the robot will follow the next segment in the same direction or if it will reverse its direction and modify its gait via symmetry reduction (SM sections M4 and S6). The error (Fig. S7) is higher for the latter two segments of the triangular trajectory because the robot starts those segments where it ended the previous ones rather than with the perfect starting position and orientation.

Fig. 4D and movie S7 show another demonstration of autonomy: a game of limbo where the robot repeatedly navigates under an obstacle of decreasing height. The overhead camera tracks the robot’s pose and the height of the bar in real time. After the robot reaches the other side, the bar lowers, and the robot reverses its gait (SM section S6) and restricts its range to keep its body size below the bar height. The plot in Fig. 4D shows the robot height and bar height during the trial. The yellow shaded regions indicate when the robot is under the bar while the gray shaded regions show whether the robot is executing its rolling gait or waiting for the bar to lower.

The control tasks demonstrated in this work are the building blocks for future tensegrity robots that can navigate unstructured environments. Because human teleoperators may not always have open lines of communication, these robots must be capable of executing their most fundamental tasks autonomously, like following the last provided path and avoiding obstacles. Furthermore, the impact resistance of tensegrity robots can enable more efficient path planning (?). Instead of navigating around cliffs and drops, our robot can roll off and survive the fall (Figs. 1 and 5).

Impact Resistance

The ability to survive harsh impacts is one of the most noteworthy advantages of tensegrity robots, yet only a few researchers demonstrate their robots enduring drop tests (?, ?, ?, ?). In addition to the fall from the 2 m cliff shown in Fig. 1, movie S8 and Fig. 5 show our tensegrity robot rolling off a 5.7 m bridge, surviving the subsequent shear drop—the highest drop by a tensegrity robot in the reported literature—and then continuing its rolling locomotion (SM section M6). Movie S11 shows the robot surviving a fall from a larger cliff in an authentic field environment, though it is not a shear drop as the robot is buffeted by vegetation and the cliff face on the way down (SM section M6).

The bulk compliance afforded by the robot’s tensegrity structure allows it to absorb the energy of the impact through deformation. As the external load is applied, the struts reorient such that all are loaded in pure axial compression while the tendons are loaded in pure axial tension. The ability to absorb large impacts from falls could one day enable tensegrity robots to explore the bottoms of craters on planetary surfaces or to be quickly deployed to disaster zones by dropping them from airplanes.

We envision a future where robots are versatile, robust, and autonomous so that they may navigate and explore environments that are out of reach for today’s state-of-the-art robots. Part of the solution is introducing new hardware platforms with enhanced capabilities. Inspired by art and architecture, we have created a 3-bar tensegrity robot to push boundaries. This tensegrity robot has reliable, highly stretchable sensors and for state estimation and autonomous control. The robot is versatile, navigating various terrains and obstacles with several locomotion gaits, and its robustness is demonstrated by subjecting it to harsh impacts. We expect this tensegrity robot to serve as a platform and a benchmark for further studies as we work toward a future with capable, compliant robots.

References

- 1. K. Snelson, International Journal of Space Structures 11, 43 (1996).

- 2. K. D. Snelson, Continuous tension, discontinuous compression structures (1965). US Patent 3,169,611.

- 3. A. Micheletti, P. Podio-Guidugli, Archive of Applied Mechanics 92, 2525 (2022).

- 4. K. Snelson, International journal of space structures 27, 71 (2012).

- 5. R. B. Fuller, Tensile-integrity structures (1962). US Patent 3,063,521.

- 6. W. Gilewski, J. Kłosowska, P. Obara, Procedia Engineering 111, 242 (2015).

- 7. C. S. Chen, D. E. Ingber, Osteoarthritis and cartilage 7, 81 (1999).

- 8. N. Wang, et al., Proceedings of the National Academy of Sciences 98, 7765 (2001).

- 9. R. L. B. K. W. M. V. K. B. Dylan S. Shah, Joran W. Booth, R. Kramer-Bottiglio, Soft robotics (2021).

- 10. Y. Liu, et al., Mechanism and Machine Theory 168, 104571 (2022).

- 11. R. E. Skelton, R. Adhikari, J.-P. Pinaud, W. Chan, J. Helton, Proceedings of the 40th IEEE conference on decision and control (Cat. No. 01CH37228) (IEEE, 2001), vol. 5, pp. 4254–4259.

- 12. A. P. Sabelhaus, et al., 2015 IEEE international conference on robotics and automation (ICRA) (IEEE, 2015), pp. 2867–2873.

- 13. C. Paul, F. J. Valero-Cuevas, H. Lipson, IEEE Transactions on Robotics 22, 944 (2006).

- 14. D. Zappetti, S. Mintchev, J. Shintake, D. Floreano, Conference on Biomimetic and Biohybrid Systems (Springer, 2017), pp. 497–508.

- 15. R. Kobayashi, H. Nabae, G. Endo, K. Suzumori, IEEE Robotics and Automation Letters 7, 5349 (2022).

- 16. L.-H. Chen, et al., Journal of Mechanisms and Robotics 9 (2017).

- 17. M. Vespignani, J. M. Friesen, V. SunSpiral, J. Bruce, 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE, 2018), pp. 2865–2871.

- 18. Z. Wang, K. Li, Q. He, S. Cai, Advanced Materials 31, 1806849 (2019).

- 19. Z. Littlefield, et al., International Journal of Robotics Research (IJRR) 38, 1442 (2019).

- 20. L.-H. Chen, et al., 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE, 2017), pp. 4976–4981.

- 21. T. Kaufhold, F. Schale, V. Böhm, K. Zimmermann, 2017 IEEE International Conference on Advanced Intelligent Mechatronics (AIM) (IEEE, 2017), pp. 523–528.

- 22. T. Rhodes, V. Vikas, International Design Engineering Technical Conferences and Computers and Information in Engineering Conference (American Society of Mechanical Engineers, 2019), vol. 59247, p. V05BT07A021.

- 23. R. L. Baines, J. W. Booth, R. Kramer-Bottiglio, IEEE Robotics and Automation Letters 5, 6567 (2020).

- 24. K. Kim, A. K. Agogino, A. M. Agogino, Soft robotics 7, 346 (2020).

- 25. J. W. Booth, et al., Soft Robotics (2020).

- 26. D. Surovik, K. Wang, M. Vespignani, J. Bruce, K. E. Bekris, The International journal of robotics research 40, 375 (2021).

- 27. V. Böhm, K. Zimmermann, 2013 IEEE international conference on robotics and automation (IEEE, 2013), pp. 5475–5480.

- 28. J. Rieffel, J.-B. Mouret, Soft robotics 5, 318 (2018).

- 29. J. Kimber, et al., 2019 2nd IEEE International Conference on Soft Robotics (RoboSoft) (IEEE, 2019), pp. 88–93.

- 30. Y. S. Chung, J.-H. Lee, J. H. Jang, H. R. Choi, H. Rodrigue, ACS applied materials & interfaces 11, 40793 (2019).

- 31. K. Garanger, et al., Earth and Space 2021 (2020), pp. 841–854.

- 32. J. M. Friesen, et al., 2016 IEEE International Conference on Robotics and Automation (ICRA) (IEEE, 2016), pp. 2123–2128.

- 33. B. Chen, H. Jiang, Soft robotics 6, 520 (2019).

- 34. J. Shintake, D. Zappetti, T. Peter, Y. Ikemoto, D. Floreano, 2020 IEEE International Conference on Robotics and Automation (ICRA) (IEEE, 2020), pp. 2887–2892.

- 35. S. Mintchev, D. Zappetti, J. Willemin, D. Floreano, 2018 IEEE International Conference on Robotics and Automation (ICRA) (IEEE, 2018), pp. 7492–7497.

- 36. J. Zha, X. Wu, J. Kroeger, N. Perez, M. W. Mueller, 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE, 2020), pp. 1407–1412.

- 37. Y. Gao, S. Chien, Science Robotics 2, eaan5074 (2017).

- 38. K. Caluwaerts, J. Bruce, J. M. Friesen, V. SunSpiral, 2016 IEEE International Conference on Robotics and Automation (ICRA) (IEEE, 2016), pp. 1860–1865.

- 39. S. Lu, et al., The International Symposium of Robotics Research (Springer, 2022), pp. 136–152.

- 40. W.-Y. Li, A. Takata, H. Nabae, G. Endo, K. Suzumori, IEEE Robotics and Automation Letters 6, 6228 (2021).

- 41. D. Zappetti, Y. Sun, M. Gevers, S. Mintchev, D. Floreano, Advanced Intelligent Systems 4, 2200025 (2022).

- 42. S. Spiegel, J. Sun, J. Zhao, IEEE/ASME Transactions on Mechatronics 28, 2073 (2023).

- 43. W. R. Johnson, A. Agrawala, X. Huang, J. Booth, R. Kramer-Bottiglio, 2022 IEEE Sensors (IEEE, 2022), pp. 1–4.

- 44. X. Wang, et al., Advanced Functional Materials 29, 1907063 (2019).

- 45. W. Kong, et al., Soft Matter 16, 5801 (2020).

- 46. K. Wang, et al., IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (2023).

- 47. A. Nagabandi, et al., 2018 IEEE/RSJ international conference on intelligent robots and systems (IROS) (IEEE, 2018), pp. 4606–4613.

- 48. X. Huang, et al., Advanced Intelligent Systems 4, 2200163 (2022).

- 49. J. Shintake, Y. Piskarev, S. H. Jeong, D. Floreano, Advanced Materials Technologies 3, 1700284 (2018).

- 50. A. Richardson, J. Strom, E. Olson, 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems (IEEE, 2013), pp. 1814–1821.

Acknowledgments

W. R. J., X. H., J. W. B., and R. K. B. were supported by the National Science Foundation (NSF) under grant no. IIS-1955225. S. L., K. W., and K. B. were supported by the NSF under award IIS-1956027. The authors would like to thank Frank Butler and the staff at the Ingalls Rink for the generous use of their ice for the multi-terrain experiments. Additionally, we would like to thank John Campbell, Sean O’Grady, Stephanie Woodman, Luis Ramirez, Monica Li, and Caitlin Le for their assistance with the large cliff experiments in East Rock Park.

Supplementary materials

Materials and Methods

M1 Robot Design

M1.1 Mechanical Components

The robot comprises three 1/8” carbon fiber rods (2153T16; McMaster-Carr) with mounted electronics, 3D printed end caps, and cables and silicone tendons that connect the end caps to form the tensegrity structure (Fig. 1D). For the majority of the experiments, the end caps are 3D printed from polylactic acid (PLA), and their dimensions are shown in Fig. S1A. The rods are cut to length so the overall length of one bar is 360 mm from end cap to end cap. For the impact resistance experiments (section M6), the end caps are 3D printed from Tough 1500 resin (FormLabs) with the dimensions shown in Fig. S1B. Note that the rods in the impact resistance experiments are 12 mm shorter on each end due to the change in end cap dimensions. Each rod has two 3D printed clamping mounts, one on either end, that each houses a 12 V High-Power Carbon Brush Micro Metal Gearmotor with a 150:1 gear ratio (3042; Pololu) and a 220 mAh 1S LiPo battery. The 3D printed mounts have heat-set inserts (94459A250; McMaster-Carr) that add threads to the plastic parts such that they can be clamped onto the rod and held in place by tightening two 4-40 screws.

Each motor has on its shaft a 3D printed winch with a 7 mm diameter that extends and contracts fishing line (Power Pro) that connects the motor to an end cap on another bar. Each 3D printed end cap has four hooks: one where the fishing line from a motor attaches and three others where sensor tendons, described below, attach. Each end cap also has a silicone cover to enhance the friction and dampen the footfalls of the robot’s end caps. The covers are made by pouring a silicone elastomer (DragonSkin 20; Smooth-On) in a 3D printed mold (Fig. S1C. The end caps and their corresponding covers are colored red, green, and blue to enable pose tracking of the robot, described in our previous work (?) and summarized in Supplementary Materials (SM) section S4.

The six 1S batteries distributed across the rods are wired together 3S2P for a nominal 11.1 V to drive the 12 V motors. The electronics, described below, are housed in 3D printed cases with 1/8” holes that allow them to slide onto the rods. Wires are wrapped in protective sleeving. All 3D printed parts are made from polylactic acid (PLA), and the files for printing them are provided in the supplementary data.

M1.2 Electronics

The robot’s on-board electronics comprise a custom control board containing a microcontroller with a Bluetooth module (BMD-350), a voltage regulator (AP2112K), and three motor drivers (TB6612FNG); a capacitive sensing breakout board (MPR121; Adafruit); an I2C multiplexer breakout board (TCA9548A); and a breakout board for a 9-axis IMU with an on-board digital motion processor (ICM-20948; Pimoroni). The design for the control board is available for download in the supplementary data. A schematic is shown in Fig. S2. The electronics are distributed among the three rods and connected with wires. The red rod hosts the control board, the green rod the MPR121, and the blue rod the mutliplexer. Each rod contains one IMU and two batteries. All the peripherals are connected to the control board via I2C (the IMUs are connectiated via the multiplexer). The motors and batteries connect directly to the control board while the sensor tendons are connected to the MPR121.

M1.3 Capacitive Sensor Tendons

We have previously introduced capacitive sensor tendons (?), capacitive strain sensors that double as the elastic tendons of a tensegrity robot. Sensor tendons comprise two layers of a liquid metal paste (?, ?) encapsulated by a silicone elastomer (Smooth-On) to form a stretchable parallel-plate capacitor. As the tendon is stretched, the capacitance increases linearly (?), so we can know the length of the tendon by measuring its capacitance with the MPR121.

There are two types of sensor tendons: thinner tendons run parallel to the cables driven by the motors, and thicker tendons run parallel to the long axis of the robot. The thinner tendons need to accommodate high strains so that they do not limit the extreme deformations necessary for tensegrity locomotion while the thicker tendons need to exert high forces since they act like passive springs responsible for restoring the robot to its original shape after deformation. The dimensions of the two types of sensor tendons are shown in Fig. S3, and the files necessary for fabricating them are available in the supplementary data. Further characterization and manufacturing details are available in the original paper (?). After the sensors are manufactured, their wire leads are extended by 140 mm with ultra flexible 29 AWG wire (9564T2; McMaster-Carr) and crimped with Dupont connectors.

Each of the nine sensor tendons is calibrated by stretching it to five known lengths, measuring its corresponding capacitance at each length (with the MPR121), and fitting a linear mapping between capacitance and length for that sensor. An example calibration is shown in Fig. S3. During state reconstruction and locomotion, described below, the capacitance of each sensor is constantly measured, and the linear mapping obtained from the calibration is used to calculate each tendon’s length for shape estimation and feedback control. The sensors need to be recalibrated at least once per day because wires shifting positions during locomotion trials or repairs can change the baseline capacitance measured by the MPR121 for each sensor.

M2 State Estimation

Advancing beyond what was demonstrated in previous literature (?, ?, ?), Tribar is capable of reconstructing its state during dynamic locomotion using only on-board sensors. Here state is defined as the robot’s shape plus its orientation relative to a global frame. The robot’s on-board sensors include the nine strain sensors that measure the length of the nine tensegrity tendons and three 9-axis IMUs. Each IMU contains a 3-axis accelerometer, a 3-axis gyroscope, and a 3-axis magnetometer. These three sensors along with the IMU’s on-chip digital motion processor are used to measure the IMU’s orientation relative to Earth’s magnetic and gravitational fields. Two such measurements from IMUs on different bars, estimating each bar’s direction in a global frame, provide enough information for state estimation.

Our state estimation algorithm is a two-step optimization algorithm. The first step is shape estimation where we minimize the squared error from the sensor tendon measurements in a constrained optimization given the known bar lengths, as in (?). Precisely, shape estimation minimizes the objective

where is the 3D position of node , is the sensor measurement between nodes and , and is the constant bar length. The node labeling scheme is shown in Fig. S4. Nodes 0 and 1 are on the red bar, 2 and 3 on the green, and 4 and 5 on the blue. The odd numbered nodes are all on one side of the robot while the even numbered nodes are on the opposite side. The output of the shape estimation step is the 3D position vector of each node in an arbitrary frame whose origin, for convenience, is at the centroid of the six nodes.

The second step of the algorithm is orientation estimation where, given the shape of the nodes, we find the rotation that minimizes the squared error between the unit vectors of the estimated and measured directions of the two bars with IMUs relative to the global frame. Precisely, we find the rotation that minimizes the objective

where is again the 3D position of node and is the unit vector representing the orientation of bar as measured by the IMU. Once is found through the optimization routine, we apply the rotation to the nodes and return the rotated coordinates. These rotated coordinates represent the robot’s state, defined as its shape and orientation relative to a global frame. Note that our algorithm does not estimate the robot’s translation.

The robot’s state reconstruction performance during dynamic locomotion trials in a field environment is shown in Fig. 2 and Fig. S5. A field environment was chosen to avoid magnetic interference from nearby buildings. The magnetic interference disrupts the readings of the IMU’s magnetometer and therefore the output of the orientation estimation. The field experiments were conducted at dusk under artificial lighting from portable LED spotlights so that the sunlight did not interfere with the Intel RealSense L515 depth camera, which we used to track the robot’s position for ground truth (?).

M3 Locomotion

The tensegrity robot achieves locomotion through extreme deformations that shift its center of mass. Sensor tendons (section M1.3) provide real-time feedback for PID control (described below) so that each of the six actuators can achieve a target length within some small tolerance. A set of six target lengths is a target shape, and a repeating sequence of target shapes defines a gait. The robot has several different gaits (described below) for rolling and turning locomotion.

M3.1 PID Control

Each gait is parameterized by a minimum length and a range for each actuator. The position of each actuated tendon is the normalized length of the tendon where is the minimum length and is the minimum length plus the range.

For a given target shape, each of the six actuated tendons will have a target length between and . The error between the current position and the target is given by , and the command to each motor is calculated via PID control.

At each time step, the calculated command is then multiplied by another gait parameter, max speed, and the product is bounded by where a result of (or ) corresponds to the maximum duty cycle sent to the motor.

M3.2 Gaits

The PID controller described above ensures all six actuated tendons are set to their target length (a normalized position between and ), within some small tolerance, given as a percent of the range. Together, these six target lengths define a target shape, which is one step in a gait, a repeating sequence of target shapes that accomplishes a desired behavior.

At the start of each step in a gait, all six motors are sent commands given by the PID controller. The first time one tendon’s length falls within the tolerance of the target length, that motor is stopped until the next step in the gait begins, which occurs once all six motors have reached their target (within tolerance) and have been stopped.

The gait used for most of the experiments is the robot’s quasistatic (punctuated) rolling gait. This gait consists of two steps: (1) a transition step which tips the robot onto the correct base triangle and (2) a rolling step where the robot deforms its body to shift its center of mass outside of the triangle of stability and roll onto the next face. The transition step involves contracting two tendons to control the direction the robot tips, and the rolling step involves contracting the bottom two tendons to make the robot unstable and one other tendon to influence the direction of the roll. The quasistatic rolling gait is defined as

where each number is one actuator’s target length, and a set of six is a target shape. The target shape is specified in terms of the target lengths for each tendon as defined in Fig. S4 where the robot is in its rest state. The specific tendons that are contracted change based on symmetry each time the robot rolls to a new face. The full gait is given alongside the discussion of the robot’s symmetry in SM section S6. The robot’s symmetry is illustrated in Fig. S10.

The robot achieves its top locomotion speed of 18 body lengths per minute (BL/min) in its dynamic rolling given by

where it skips the transition step from the quasistatic gait and instead executes repeated rolling steps. Skipping the transition step makes the rolling gait much faster (Fig. 3K) at the expense of making it somewhat less reliable; on occasion after a step in the dynamic gait, the robot will fail to roll. The gait parameters for the dynamic rolling experiments in Fig. 3E are a minimum length of 100 mm, a range of 90 mm, a max speed of 99, a tolerance of 7%, , , and .

While these two gaits were first discovered by hand, our simulation based on a differentiable physics engine (?) generated a qualitatively similar rolling gait. Moreover, the simulation generated turning gaits that were not discovered by hand. The counterclockwise turning gait is

and the clockwise turning gait is

where the clockwise turning gait is extended via symmetry reduction as described in SM section S6. The experiments for characterizing the turning gaits are described in SM section S3, and their results are shown in Fig. S9. These two turning gaits, along with the quasistatic rolling gait, are used in the trajectory following experiments in Fig. 4, which are described in more detail below (section M4).

M3.3 Multi-terrain Experiments

The multi-terrain experiments were conducted on two 24” x 48” patches of real grass (Fresh Patch), ice at the David S. Ingalls ice rink, polished pebbles (GASPRO), and play sand (Quikrete) as well as a flat floor covered by a white fabric background. The experiments characterized the quasistatic rolling gait, and the gait parameters are given in Table S1.

| Terrain | Floor | Grass | Ice | Pebbles | Sand |

|---|---|---|---|---|---|

| Minimum Length (mm) | 100 | 100 | 100 | 100 | 100 |

| Range (mm) | 90 | 90 | 100 | 90 | 100 |

| Tolerance (%) | 12 | 10 | 10 | 10 | 15 |

| Max Speed | 99 | 99 | 99 | 99 | 99 |

| 8 | 6 | 6 | 6 | 6 | |

| 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | |

| 0.5 | 0.5 | 0.5 | 0.5 | 0.5 |

M3.4 Incline Experiments

The incline experiments were performed on two side-by-side 36” x 24” x 1/2” sheets of medium density fiberboard (2726N42; McMaster-Carr) coated with an adhesive-backed silicone sheet and held at various incline angles. The robot performed the quasistatic gait, and the gait parameters are given in Table S2. To climb the steepest inclines, the gait’s range needed to be high so that the robot could take bigger steps without slipping backward. This change meant that the gait parameters had to be adjusted to avoid a problem with triangle inequality: at certain steps in the gait, two of the three tendons that make a triangle on one side of the robot are commanded to be short, close to their minimum length, while the third side is commanded to be long, forming a theoretically impossible triangle. This problem is exacerbated by the increased shifting of the wires, which gradually changes the baseline capacitance of the sensor tendons, thereby adding noise, when the robot exhibits extreme deformations at this increased range. Therefore, the tolerance parameter of the gait was split into high tolerance, which is the tolerance for target lengths closer to 1, and low tolerance, which is the tolerance for target lengths closer to 0. The high tolerance was tuned to be higher than the low tolerance to accommodate the increased sensor noise and triangle inequality without compromising the critical mechanism of the gait: the small polygon of stability that allows the robot to roll over. The experiments from inclines at various angles are shown in Fig. S6 and movie S5.

| Incline Angle | 0° | 5° | 10° | 15° | 20° | 25° | 28° |

|---|---|---|---|---|---|---|---|

| Minimum Length (mm) | 100 | 100 | 100 | 100 | 100 | 100 | 100 |

| Range (mm) | 140 | 140 | 140 | 140 | 140 | 140 | 180 |

| High Tolerance (%) | 20 | 20 | 20 | 20 | 15 | 15 | 20 |

| Low Tolerance (%) | 10 | 10 | 10 | 10 | 10 | 10 | 10 |

| Max Speed | 99 | 99 | 99 | 99 | 99 | 99 | 99 |

| 10 | 10 | 10 | 10 | 10 | 10 | 10 | |

| 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | |

| 0.5 | 0.5 | 0.5 | 0.5 | 0.5 | 0.5 | 0.5 |

M4 Trajectory Following

Shown in Fig. 4, the tensegrity robot can follow prescribed trajectories by selecting actions that minimize a cost function. The actions include the quasistatic rolling gait with different values for the range (every 10 mm from 90 mm to 150 mm), the counterclockwise (ccw) turning gait with a range of 100 mm, the clockwise (cw) turning gait with a range of 100 mm, and modified versions of the quasistatic rolling gait where the range is different on either side of the robot. When one side of the robot has a larger range than the other in the rolling gait, it takes a larger step on one side than the other, and the robot’s behavior is forward motion with gradual turning (in contrast to the cw and ccw “turn in place” gaits) analogous to differential drive in wheeled robots when the wheels on one side spin faster than the other. The following gait parameters are the same for all the actions: a minimum length of 100 mm, a max speed of 99, a tolerance of 20%, , , and .

Each of these 51 actions was first modeled offline using our simulation based on a differentiable physics engine (?). The robot’s pose is the 2D position of the centroid of its six end caps and the 2D orientation of the robot given by the unit vector that points from the centroid of nodes 1, 3, and 5 to the centroid of nodes 0, 2, and 4 (see Fig. S4), which we call the robot’s principal axis. Before and after executing each action in simulation, the pose (position and orientation) is extracted from the simulation. The 2D rotation from the pose before to the pose after is calculated based on the angle by which the principal axis rotated about the z-axis. The 2D translation from the pose before to the pose after is calculated relative to the robot’s initial body frame via where is the rotation matrix that describes the robot’s initial body frame with respect to the world frame given the anlge between the robot’s principal axis and the world frame’s x-axis. The 2D rotation and translation relative to the robot’s body frame before the action are stored in a data table, which is searched in real time during trajectory following trials to determine the best sequence of actions to minimize the cost function.

Each trajectory is represented as a series of 2D waypoints in a global frame. An overhead RGB-D camera (Intel RealSense L515) was calibrated using April tags (?) to measure its extrinsic matrix relative to the global frame. Our previously developed tensegrity pose tracking algorithm (?) was modified to run in real time as a ROS service. During each planning step, the node that controls the tensegrity robot requests the robot’s current pose from the tracking service and plans a sequence of actions to follow the given trajectory.

The cost function is given by

where , , and are the hand-tuned weights for distance, angle, and progression, respectively, is the 2D position vector of waypoint (the closest waypoint on the trajectory to the robot’s current position), is the robot’s 2D position given by the tracking service, is the robot’s heading (the robot’s principal axis rotated by 90°counterclockwise), and is the number of waypoints in the trajectory. As in previous work (?, ?), the three terms in this cost function punish the robot for its 2D Euclidean distance from the trajectory, for the angle between the robot’s heading and the trajectory’s tangent at the robot’s location, and for being closer to the start of the trajectory. The weights used for the experiments are listed in Table S3.

The robot starts the trajectory following task by planning via an exhaustive tree search of all possible actions with a search depth of two. For each action, the rotation and translation tabulated from the simulation are applied to the robot’s current position and orientation to predict the next position and orientation after taking that action. Then, for each prediction, we search the action space again, applying the transformation to each predicted position and orientation. The resulting position and orientation with respect to the world frame after applying two actions are given by the equations below.

Having reached the prescribed search depth, the cost function is evaluated, and the sequence of two actions with the lowest cost is returned. The robot executes the first action and then again plans a sequence of two actions, repeating this process until it reaches the end of the trajectory.

Fig. 4 shows the robot’s ability to follow a straight line, a circular arc, and a right triangle. To follow the straight line, the controller usually commands rolling actions, course correcting for any deviations, and occasionally turn in place actions. To follow the circle, gradual turning actions are selected. The triangular trajectory’s sharp corners pose further challenges. To follow the triangle, the controller segments the triangle trajectory into three straight line trajectories. When the robot reaches the end of a segment—defined as being within 27 cm of the last waypoint or if the last waypoint is the nearest point to the robot—the robot moves on to following the next segment. At this juncture, to handle sharp corners, the controller decides if the robot should continue to roll in the same direction or instead, by virtue of its symmetry, roll backward by evaluating

where is the robot’s principal axis, defined above, and is the unit vector in the direction of the next trajectory segment. If this inequality is true, the robot rolls in its typical forward direction. Otherwise, if the cross product is negative, the robot rolls in the reverse direction, the gaits are modified via symmetry reduction, and the principal axis and heading are reversed in the robot body frame (SM section S6). When the robot begins subsequent trajectory segments, it doesn’t start with the perfect position and heading like it does for the first segment (and for the straight line and semicircle trajectories). These errors compound, which is why the deviation from the trajectory is greater for the triangle than the other two. However, the robot is still able to recover and reach the desired end point of the trajectory. Plots of the error as a function of distance along the trajectory are shown in Fig. S7.

| Trajectory | |||

|---|---|---|---|

| Straight Line | 500 | 200 | 300 |

| Circular Arc | 400 | 100 | 300 |

| Right Triangle | 600 | 100 | 300 |

M5 Limbo Demonstration

To enable Tribar to play limbo autonomously, the pose tracking service was further augmented to perceive the height of the yellow limbo bar (SM section S4). The robot first shrinks to the proper height, setting its range to the measured bar height minus 120 mm (100 mm accounting for the minimum length of the tendon and 20 mm for the diameter of the bar) with a maximum range of 140 mm and rounded down to the nearest 10 mm. Then, the robot executes its quasistatic rolling gait at that range with a max speed of 99, a tolerance of 20%, , , and . When the robot has reached (within 27 cm) the endpoint of its 70-cm path under the limbo bar, as measured by the tracking service (SM section S4), the robot returns to its maximum height (at a range of 140 mm) and waits for the limbo bar to lower. A stepper motor lowers the bar height by 40 mm; when the lowering of the bar is complete, the robot is manually commanded to resume the limbo demonstration with the newly lowered bar in the reverse direction, autonomously modifying its gait by symmetry reduction (SM section S6) and the gait’s range based on the perceived bar height (SM section S4).

M6 Impact Resistance

To survive the harsh impacts from rolling off the cliff (Fig. 1) and the bridge (Fig. 5), the design of the end caps had to be modified. As shown in Fig. S1, the depth of the central hole through the end cap was increased so that the carbon fiber rod reinforced the stress concentration where the hemisphere meets the cylinder. Also, the end caps were 3D printed from a stronger material (Tough 1500 Resin on a Form 3; Formlabs). The quasistatic rolling gait was modified to be

with the robot returning to its rest shape immediately after the rolling step because it is more capable of absorbing impact in that shape (i.e., more energy is absorbed through deformation before the rigid components contact each other). For this gait, the minimum length is 100 mm, the range is 100 mm, the tolerance is 12%, , , and .

The cliff dive demonstration, shown in Fig. 1 and movie S1, is autonomous. Sensor information is received over Bluetooth, and state estimation is calculated in real time. The robot’s initial heading is recorded based on the state estimation result at the beginning of the trial. After each cycle of the modified quasistatic rolling gait, when the robot returns to its rest shape, the most recent state estimation result is analyzed to determine the robot’s heading and which three nodes define the downward face. The quasistatic gait is then modified with symmetry reduction (SM section S6), including reversing the gait if necessary, to ensure the gait is correct for the next cycle.

The bridge dive demonstration, shown in Fig. 5 and movie S8, uses the same gait, but the demonstration is not fully autonomous. The state estimation algorithm relies on absolute orientations from the 9-axis IMUs, and the magnetic interference from the nearby buildings causes the magnetometer readings to drift. For that reason, the robot is programmed to briefly pause after one gait cycle, and then the correct heading and downward face are manually entered depending on how the robot lands. Afterward, the modified quasistatic rolling gait is executed continuously.

In an additional field test, shown in movie S11, we demonstrate the robot rolling off a large cliff, about 12 m high, and recovering its shape at the bottom. The height of the large cliff does not represent the greatest survivable drop height of the robot since it was not a shear drop: the robot was buffeted by the cliff side and vegetation on its way down. This experiment was conducted for the lack of controls in an unpredictable, authentic field environment. The drop was high enough that our Bluetooth communication was out of range, so the long delay in the video is due to the time it took to walk to the bottom of the cliff and reconnect to the robot.

Supplementary Text

S1 State of the Art of Tensegrity Robotics

Tensegrity robotics researchers have built many innovative robots and characterized their advantages of impact resistance, light weight, and versatility. As we work toward fully autonomous tensegrity robots that can navigate unstructured environments, it is important to measure the progress of our field with quantitative metrics (?). In this section, we report the critical performance metrics of Tribar that enable its key achievements of autonomy, versatility, and impact resistance and compare them to prominent examples of the state of the art. This list is not meant to be exhaustive, in part because there are not universally agreed upon metrics for tensegrity robots that all studies report (?). A more comprehensive discussion of the state of the art can be found in recent review articles (?, ?).

S1.1 State Estimation

State estimation from on-board sensors is a critical capability that enables Tribar’s autonomous control in a field environment (Fig. 1). Prior work on tensegrity state estimation has relied on off-board sensors (?) that will not realistically be available in the field environments that we envision future tensegrity robots will navigate. Two previous studies present tensegrity state estimation with only on-board sensors. Both use stretchable strain sensors: resistance-based conductive thread sensors (?) and capacitance-based silicone graphite sensors (?). In both cases, the state estimation experiments are conducted in a stationary setting since the robots are limited to low strains. In contrast, our highly stretchable sensor tendons accommodate the high strains necessary for Tribar’s locomotion, so we conduct the state estimation experiments during locomotion. Futhermore, the on-board IMUs enable Tribar to reconstruct not just its shape but also its orientation relative to Earth’s gravitational and magnetic fields, a step beyond detecting only the bottom face (?). The state of the art of tensegrity state estimation with on-board sensors is summarized in Table S4. The root mean square error (RMSE) of state estimation is reported in absolute units and relative to the robots’ respective bar lengths.

| Robot | Estimation Capabilities | Strain | RMSE |

|---|---|---|---|

| Conductive Thread Tensegrity (?) | Shape | 63% | 3.8 mm (1%) |

| Robotic Skins Tensegrity (?) | Shape and downward face | 12% | 45.8 mm (13.1%) |

| Tribar (ours) | Shape and global orientation | 180% | 36 mm (10%) |

S1.2 Locomotion and Unstructured Terrain

Tensegrity robots are motivated for their ability to adapt to unstructured terrain, yet most are only tested in controlled laboratory settings. Some notable examples of tensegrity robots demonstrated on unstructured terrain include SUPERball demonstrated on NASA’s roverscape (?), a light-powered tensegrity with liquid crystal elastomer tendons demonstrated on sand and pebbles (?), the TT-3 tensegrity demonstrated outdoors on dirt (?), and air-powered modular tensegrities that can crawl through a dirt tunnel (?). Aside from terrain, another challenge with tensegrity locomotion is that it is typically slow for mobile robots. Table S5 lists the characteristic velocity in bar lengths per minute (BL/min) for some of the fastest tensegrity robots in the literature as well as whether they have been demonstrated on unstructured terrain. The curved bar tensegrity is capable of pure rolling, not just the punctuated rolling that is typical for tensegrity robots. The pure rolling and vibrating robots exhibit faster speeds than Tribar’s punctuated rolling; however, Tribar is not limited to locomotion on flat planes in a laboratory setting.

| Robot | Characteristic Velocity (BL/min) | Unstructured Terrain |

| TT-4mini (?) | 11 | - |

| Curved Bar Tensegrity (?) | 24 | - |

| Vibrating Tensegrity (?) | 69 | - |

| SUPERball (?) | 3.5 | NASA Roverscape |

| Tribar (ours) | 18 | grass, ice, pebbles, sand, cliffs |

S1.3 Maximum Climbable Incline

Table S6 summarizes the inclined locomotion capabilities of tensegrity robots with gravity-based rolling gaits. Some studies also demonstrate stable tensegrity locomotion down declines. We exclude crawling-based gaits—like those of pipe-climbing tensegrity robots that are capable of vertical locomotion (?, ?)—as well as wheeled tensegrity robots (?) from this comparison. Tribar’s locomotion up a 28°incline is the steepest incline climbed by a tensegrity robot in the reported literature.

| Robot | Maximum Climbable Incline | Maximum Stable Decline |

|---|---|---|

| Robotic Skins Tensegrity (?) | - | 8.7° |

| Curved Bar Tensegrity (?) | 7° | 7° |

| SUPERball (?) | 15° | - |

| TT-4mini (?) | 24° | - |

| Tribar (ours) | 28° | 20° |

S1.4 Drop Height

Only a few studies on tensegrity robots experimentally demonstrate impact resistance by showing the robots surviving a drop, and these demonstrations are summarized in Table S7. NASA’s SUPERBall, a 6-bar tensegrity robot with a diameter of 2 m, was shown falling 3.4 m from the top of a roof (?). Rieffel dropped his vibrating tensegrity robots from his eye level (?). Another team of researchers built a drone with tensegrity landing gear and a rover with a tensegrity axle, which survived drops of 2 m and 5 m, respectively (?), and yet another group built a robot with tensegrity wheels (?). Even though these robots are not pure tensegrity structures like SUPERball, Tribar, and the vibrating tensegrities, we include them as examples in Table S7 because they still demonstrate how incorporating tensegrities into robots enables impact resistance. We have not included studies of the impact resistance of tensegrity structures that are not robots. Tribar’s 5.7-m fall from the bridge, described above, is the highest absolute drop survived by a tensgrity robot in the reported literature. Normalizing by bar length yields higher normalized drop heights for smaller, lighter robots.

| Robot | Bar Length (m) | Drop Height (m) | Drop Height (BL) |

|---|---|---|---|

| Tensegrity Wheels (?) | 0.13 | 1.5 | 11.5 |

| Vibrating Tensegrity (?) | 0.094 | 1̃.8 | 1̃9 |

| Tensegrity Drone (?) | 0.094 | 2 | 21 |

| SUPERball (?) | 2 | 3.4 | 1.7 |

| Tensegrity Rover (?) | 0.094 | 5 | 53 |

| Tribar (ours) | 0.36 | 5.7 | 15.8 |

S1.5 Standard Metrics

In a recent review paper (?), we suggested uniform metrics that all tensegrity robotics papers should report to track the progress of the field. Those metrics for our 3-bar tensegrity robot are listed in Table S8. The robot density is defined as the robot’s mass of 0.4 kg divided by its maximum volume in its most expanded state. The characteristic rod length is 360 mm for all of the experiments except the bridge and cliff dive experiments, as described above. The maximum bending, buckling, and crushing loads of the rods were measured in an Instron 3345 materials testing system using the nominal 360 mm rods (not the slightly shorter rods with the Tough 1500 end caps that were used in the impact tests). The maximum buckling and crushing loads were measured in an axial compression experiment while the maximum bending load was measured in a 3-point bending experiment. The rate for these experiments was 1 mm/s. The characteristic velocity is the robot’s top speed during its dynamic rolling gait, described above, divided by its characteristic rod length. The only standard metric we were unable to measure is the cost of transport because the robot does not have an on-board power sensor. We plan to measure the cost of transport of Tribar in future work.

| Metric | Value |

| Robot density () | 56 |

| Characteristic Rod Length () | 0.36 |

| Maximum Bending Load () | 1.3 |

| Maximum Buckling Load () | 1.2 |

| Maximum Crushing Load () | 1.4 |

| Characteristic velocity (BL/min) | 18 |

| Maximum climbable incline | 28° |

S2 Shape Morphing

To understand the effect of the robot’s shape morphing on its locomotion, we characterized the quasistatic rolling gait with a varying range (90 mm to 180 mm). The experiments are shown in Fig. S8, and the gait parameters are given in Table S9. The robot’s velocity decreases as the range increases. Although a larger range results in bigger steps each time the robot rolls, contracting a longer cable takes more time for the motors, resulting in a lower frequency of rolls and a slower overall speed.

| Range (mm) | 90 | 120 | 150 | 180 |

|---|---|---|---|---|

| Minimum Length (mm) | 100 | 100 | 100 | 100 |

| Tolerance (%) | 12 | 12 | 15 | 15 |

| Max Speed | 99 | 99 | 99 | 99 |

| 8 | 8 | 8 | 8 | |

| 0.01 | 0.01 | 0.01 | 0.01 | |

| 0.5 | 0.5 | 0.5 | 0.5 |

S3 Turning Experiments

In addition to rolling forward, we demonstrate different turning gaits for the tensegrity robot. The two most reliable gaits for turning in place were discovered by our simulator (SM section S5). The counterclockwise turning gait is , and the clockwise turning gait is . In the counterclockwise gait, the robot shifts its weight during the cycle to rotate its body, maintaining the same nodes on the bottom for the duration of the gait. In the clockwise gait, like the rolling gaits, the robot flips over onto a new face. Therefore, for the clockwise gait to be repeatedly executed, it is extended by symmetry reduction as described below. The results of the experiments with these “turn in place” gaits are shown in Fig. 3, and the gait parameters are listed in Table S10.

The robot is also capable of a crawling-based turning gait where it uses one side of its body as a pivot while the other side crawls along using friction. Experiments, shown in Fig. S9, characterize the robot turning clockwise and counterclockwise while using each side as the pivot. Here, “left” refers to using the left side of the robot (with nodes 1, 3, and 5) as the pivot while “right” refers to using the right side (with nodes 0, 2, and 4) as the pivot. “Left” and “right” are defined with respect to the robot’s locomotion direction during the quasistatic rolling gait from its rest state (Fig. S4). The gait for crawling-based turning with the left pivot is given by

for turning clockwise and

for turning counterclockwise. The gait can be adapted to use the right pivot by symmetry reduction as described below. The gait parameters for these experiments are listed in Table S10.

Finally, the robot can also turn using the quasistatic rolling gait by using a different value for the range on each side of its body, analogous to differential drive on wheeled vehicles. Just as spinning the left and right wheels at different speeds leads to a gradual turning motion on a wheeled robot, our robot can turn while rolling forward. Experiments (Fig. S9) show the robot gradually turning counterclockwise by employing the quasistaic rolling gait with a range of 80 mm on the left side and 160 mm on the right side. Then, it can turn clockwise using the same gait by switching the range values. Gait parameters for these gradual turning experiments are listed in Table S10. This gradual turning gait and its variations are used in the control strategy for the trajectory following task shown in Fig. 4.

| Direction | Turn in place | Crawling turn | Gradual turning |

| Minimum Length (mm) | 100 | 100 | 100 |

| Range (mm) | 100 | 90 | 80/160 |

| Tolerance (%) | 15 | 17 | 10 |

| Max Speed | 80 | 99 | 99 |

| 6 | 6 | 6 | |

| 0.01 | 0.01 | 0.01 | |

| 0.5 | 0.5 | 0.5 |

S4 Pose Tracking

All the plots in this paper that track the robot’s CoM are produced using a tensegrity pose tracking algorithm we previously published (?). This pose tracking algorithm also provides the ground truth for evaluating our state estimation experiments (Fig. 2 and Fig. S5). For the trajectory following experiments and the limbo demonstration, the algorithm was modified to run as a ROS service so it could be used online.

The pose tracking algorithm fuses sensor inputs from an RGB-D camera (Intel RealSense L515) and the on-board capacitive strain sensors described in section M1.3. The algorithm tracks the pose of the three tensegrity rods by observing the red, green, and blue colors of the end caps in the camera data. Information from the on-board sensors is weighted more by the algorithm when the end caps are heavily occluded, usually due to self-occlusions with the other rods. We manually give the algorithm the initial positions of the end caps by clicking on them in numerical order (0-5 in Fig. S4) on an image of the first frame of the RGB-D video. The pose tracking for all the locomotion trials was done offline so we could plot the CoM and calculate the speed.

For the trajectory following experiments and the limbo demonstration, the pose tracking service was run online in real time. The pose estimate was updated whenever the service received synchronized data from the ROS nodes publishing the RGB-D data and the robot’s on-board sensor data. After each gait cycle, the node controlling the robot requested the robot’s current pose from the tracking service. The robot’s pose was used to modify the next cycle of the gait appropriately based on the bottom face of the robot (SM section S6). The pose was also used for motion planning in the trajectory following experiments and to determine if the robot had gone past its endpoint on the other side of the bar in the limbo demonstration.

For the limbo demonstration, the pose tracking service is further augmented to perceive the height of the yellow bar. Each time the robot begins a segment of limbo, the robot control node sends a request to the tracking service for the bar height. The tracking service filters the latest RGB-D data for the yellow color of the bar and estimates the height with RANSAC. After the bar height is received from the tracking service, the robot shrinks to the appropriate size and adjusts the range of its gait (section M5) before beginning the limbo segment. All the pose tracking code can be found in the GitHub repository linked in our previous work (?).

S5 Simulation

The simulation used in this work is a differentiable physics engine that we previously published (?). The simulation was used to generate the clockwise and counterclockwise turning gaits discussed in section M3.2 and characterized in Fig. 3. It was also used to model all of the possible actions for the trajectory following experiments discussed in section M4 and shown in Fig. 4.

The clockwise and counterclockwise gaits were generated using a graph search with the principal axis rotation as the reward function (?). To simplify the search, the targets lengths were constrained to either fully extended or fully contracted (i.e., or ). The gaits generated in simulation transferred to the real robot with only one modification to the third step in the clockwise turning gait. The simulation output was , but for the gait to execute more reliably, it was changed to . To achieve , tendons A and B (see Fig. S4) would go to the minimum length (e.g., 100 mm) and tendon C would have to reach full extension (e.g., 200 mm). Depending on the range and tolerance used, these commands could produce a triangle inequality, which the robot could not possibly achieve. The modification, changing C’s target from to , is designed to mitigate this issue.

The Real2Sim2Real pipeline we use with our differentiable simulator involves recording a trajectory on the real robot in order to perform system identification (sysID). Therefore, prior to the trajectory following experiments, we recorded a simple, open-loop locomotion trajectory on the substrate where the experiments would be conducted (vinyl dance floor; VEVOR) to identify the friction parameters for the robot on this substrate. More details about the sysID process can be found in our previous work (?). After sysID, we modeled the robot’s full action space in the simulator and recorded the robot’s 2D transformations in a data table as described in section M4. The data table used for the trajectory following task is available in the supplementary data.

S6 Symmetry Reduction

Throughout this manuscript, the robot’s locomotion gaits have been given based on its initial position with nodes 0, 2, and 5 touching the ground. However, when another face is downward, the gaits can be adapted using the robot’s symmetry. For example, the quasistatic rolling gait is given as for when nodes 0, 2, and 5 are on the ground. In the second step—the rolling step—of the gait, the bottom two tendons (A and D) are fully contracted at 0, shrinking the polygon of stability to allow a roll. After one gait cycle where the robot rolls over, nodes 1, 2, and 5 will be touching the ground, and tendons C and F will be the bottom two. Therefore, the next two steps in the gait should be . The full quasistatic rolling gait is therefore

after which the robot has the same orientation since three rolls have been completed, and therefore this six-step gait can be repeated from the beginning.

In this way, any gait can be adapted to a different bottom face. This mapping is shown in Fig. S10A. As the robot rolls to the next face, the target lengths on each side (ABC vs. DEF) should be left-shifted by one. For example, if the gait is when the bottom nodes are 0, 2, 3, and 5, then that gait should be translated to when the robot is on the next face with nodes 1, 2, 4 and 5 closest to the ground. For the last face (nodes 0, 1, 3 and 4), the same gait would be .

Using the same strategy, we can extend the clockwise turning gait from above, adapting it to the new faces in sequence after the robot rolls over. Therefore, the full clockwise turn in place gait (starting with nodes 0, 2, and 5 on the ground) is

after which the robot has rolled back onto its original face. Note that the clockwise gait rolls onto new faces in the opposite sequence compared to the quasistatic rolling gait, which is why the target lengths are right-shifted instead of left-shifted. The counterclockwise turning gait does not cause the robot to roll onto a new face, but it can still be adapted to different bottom faces via the same strategy.

The quasistatic rolling gait can also be modified so that the robot rolls in the opposite direction (Fig. S10B). The full gait to roll backward is

when starting with nodes 0, 2, 3, and 5 closest to the ground. The general mapping to reverse the quasistatic rolling gait is a function of the bottom nodes; the mapping can be found in the supplementary code.

The crawling-based turning gaits can be adapted to use the other side of the robot as the pivot foot. Using the right pivot, the crawling-based turning gaits are

for turning clockwise and

for turning counterclockwise. This reverse mapping for the crawling-based turning gaits is the same mapping for reversing the quasistatic rolling gait. Notably, the clockwise and counterclockwise turn in place gaits can also be reversed, and they still yield clockwise and counterclockwise turning, respectively.