Implicit Regularization in Over-Parameterized Support Vector Machine

Abstract

In this paper, we design a regularization-free algorithm for high-dimensional support vector machines (SVMs) by integrating over-parameterization with Nesterov’s smoothing method, and provide theoretical guarantees for the induced implicit regularization phenomenon. In particular, we construct an over-parameterized hinge loss function and estimate the true parameters by leveraging regularization-free gradient descent on this loss function. The utilization of Nesterov’s method enhances the computational efficiency of our algorithm, especially in terms of determining the stopping criterion and reducing computational complexity. With appropriate choices of initialization, step size, and smoothness parameter, we demonstrate that unregularized gradient descent achieves a near-oracle statistical convergence rate. Additionally, we verify our theoretical findings through a variety of numerical experiments and compare the proposed method with explicit regularization. Our results illustrate the advantages of employing implicit regularization via gradient descent in conjunction with over-parameterization in sparse SVMs.

1 Introduction

In machine learning, over-parameterized models, such as deep learning models, are commonly used for regression and classification lecun2015deep ; voulodimos2018deep ; otter2020survey ; fan2021selective . The corresponding optimization tasks are primarily tackled using gradient-based methods. Despite challenges posed by the nonconvexity of the objective function and over-parameterization yunsmall , empirical observations show that even simple algorithms, such as gradient descent, tend to converge to a global minimum. This phenomenon is known as the implicit regularization of variants of gradient descent, which acts as a form of regularization without an explicit regularization term neyshabur2015search ; neyshabur2017geometry .

Implicit regularization has been extensively studied in many classical statistical problems, such as linear regression vaskevicius2019implicit ; li2021implicit ; zhao2022high and matrix factorization gunasekar2017implicit ; li2018algorithmic ; arora2019implicit . These studies have shown that unregularized gradient descent can yield optimal estimators under certain conditions. However, a deeper understanding of implicit regularization in classification problems, particularly in support vector machines (SVMs), remains limited. Existing studies have focused on specific cases and alternative regularization approaches soudry2018implicit ; molinari2021iterative ; apidopoulos2022iterative . A comprehensive analysis of implicit regularization via direct gradient descent in SVMs is still lacking. We need further investigation to explore the implications and performance of implicit regularization in SVMs.

The practical significance of such exploration becomes evident when considering today’s complex data landscapes and the challenges they present. In modern applications, we often face classification challenges due to redundant features. Data sparsity is notably evident in classification fields like finance, document classification, and gene expression analysis. For instance, in genomics, only a few genes out of thousands are used for disease diagnosis and drug discovery huang2012identification . Similarly, spam classifiers rely on a small selection of words from extensive dictionaries almeida2011contributions . These scenarios highlight limitations in standard SVMs and those with explicit regularization, like the norm. From an applied perspective, incorporating sparsity into SVMs is an intriguing research direction. While sparsity in regression has been deeply explored recently, sparse SVMs have received less attention. Discussions typically focus on generalization error and risk analysis, with limited mention of variable selection and error bounds peng2016error . Our study delves into the implicit regularization in classification, complementing the ongoing research in sparse SVMs.

In this paper, we focus on the implicit regularization of gradient descent applied to high-dimensional sparse SVMs. Contrary to existing studies on implicit regularization in first-order iterative methods for nonconvex optimization that primarily address regression, we investigate this intriguing phenomenon of gradient descent applied to SVMs with hinge loss. By re-parameterizing the parameters using the Hadamard product, we introduce a novel approach to nonconvex optimization problems. With proper initialization and parameter tuning, our proposed method achieves the desired statistical convergence rate. Extensive simulation results reveal that our method outperforms the estimator under explicit regularization in terms of estimation error, prediction accuracy, and variable selection. Moreover, it rivals the performance of the gold standard oracle solution, assuming the knowledge of true support.

1.1 Our Contributions

First, we reformulate the parameter as , where denotes the Hadamard product operator, resulting in a non-convex optimization problem. This re-parameterization technique has not been previously employed for classification problems. Although it introduces some theoretical complexities, it is not computationally demanding. Importantly, it allows for a detailed theoretical analysis of how signals change throughout iterations, offering a novel perspective on the dynamics of gradient descent not covered in prior works gunasekar2018implicit ; soudry2018implicit . With the help of re-parameterization, we provide a theoretical analysis showing that with appropriate choices of initialization size , step size , and smoothness parameter , our method achieves a near-oracle rate of , where is the number of the signals, is the dimension of , and is the sample size. Notice that the near-oracle rate is achievable via explicit regularization peng2016error ; zhou2022communication ; kharoubi2023high . To the best of our knowledge, this is the first study that investigates implicit regularization via gradient descent and establishes the near-oracle rate specifically in classification.

Second, we employ a simple yet effective smoothing technique Nesterov2005 ; zhou2010nesvm for the re-parameterized hinge loss, addressing the non-differentiability of the hinge loss. Additionally, our method introduces a convenient stopping criterion post-smoothing, which we discuss in detail in Section 2.2. Notably, the smoothed gradient descent algorithm is not computationally demanding, primarily involving vector multiplication. The variables introduced by the smoothing technique mostly take values of or as smoothness parameter decreases, further streamlining the computation. Incorporating Nesterov’s smoothing is instrumental in our theoretical derivations. Directly analyzing the gradient algorithm with re-parameterization for the non-smooth hinge loss, while computationally feasible, introduces complexities in theoretical deductions. In essence, Nesterov’s smoothing proves vital both computationally and theoretically.

Third, to support our theoretical results, we present finite sample performances of our method through extensive simulations, comparing it with both the -regularized estimator and the gold standard oracle solution. We demonstrate that the number of iterations in gradient descent parallels the role of the regularization parameter . When chosen appropriately, both can achieve the near-oracle statistical convergence rate. Further insights from our simulations illustrate that, firstly, in terms of estimation error, our method generalizes better than the -regularized estimator. Secondly, for variable selection, our method significantly reduces false positive rates. Lastly, due to the efficient transferability of gradient information among machines, our method is easier to be paralleled and generalized to large-scale applications. Notably, while our theory is primarily based on the Sub-Gaussian distribution assumption, our method is actually applicable to a much wider range. Additional simulations under heavy-tailed distributions still yield remarkably desired results. Extensive experimental results indicate that our method’s performance, employing implicitly regularized gradient descent in SVMs, rivals that of algorithms using explicit regularization. In certain simple scenarios, it even matches the performance of the oracle solution.

1.2 Related Work

Frequent empirical evidence shows that simple algorithms such as (stochastic) gradient descent tend to find the global minimum of the loss function despite nonconvexity. To understand this phenomenon, studies by neyshabur2015search ; neyshabur2017geometry ; keskarlarge ; wilson2017marginal ; zhang2022statistical proposed that generalization arises from the implicit regularization of the optimization algorithm. Specifically, these studies observe that in over-parameterized statistical models, although optimization problems may contain bad local errors, optimization algorithms, typically variants of gradient descent, exhibit a tendency to avoid these bad local minima and converge towards better solutions. Without adding any regularization term in the optimization objective, the implicit preference of the optimization algorithm itself plays the role of regularization.

Implicit regularization has attracted significant attention in well-established statistical problems, including linear regression gunasekar2018implicit ; vaskevicius2019implicit ; woodworth2020kernel ; li2021implicit ; zhao2022high and matrix factorization gunasekar2017implicit ; li2018algorithmic ; arora2019implicit ; ma2021sign ; ma2022global . In high-dimensional sparse linear regression problems, vaskevicius2019implicit ; zhao2022high introduced a re-parameterization technique and demonstrated that unregularized gradient descent yields an estimator with optimal statistical accuracy under the Restricted Isometric Property (RIP) assumption candes2005decoding . fan2022understanding obtained similar results for the single index model without the RIP assumption. In low-rank matrix sensing, gunasekar2017implicit ; li2018algorithmic revealed that gradient descent biases towards the minimum nuclear norm solution when initiated close to the origin. Additionally, arora2019implicit demonstrated the same implicit bias towards the nuclear norm using a depth- linear network. Nevertheless, research on the implicit regularization of gradient descent in classification problems remains limited. soudry2018implicit found that the gradient descent estimator converges to the direction of the max-margin solution on unregularized logistic regression problems. In terms of the hinge loss in SVMs, apidopoulos2022iterative provided a diagonal descent approach and established its regularization properties. However, these investigations rely on the diagonal regularization process bahraoui1994convergence , and their algorithms’ convergence rates depend on the number of iterations, and are not directly compared with those of explicitly regularized algorithms. Besides, Frank-Wolfe method and its variants have been used for classification lacoste2013block ; tajima2021frank . However, sub-linear convergence to the optimum requires the strict assumption that both the direction-finding step and line search step are performed exactly jaggi2013revisiting .

2 Model and Algorithms

2.1 Notations

Throughout this work, we denote vectors with boldface letters and real numbers with normal font. Thus, denotes a vector and denotes the -th coordinate of . We use to denote the set . For any subset in and a vector , we use to denote the vector whose -th entry is if and otherwise. For any given vector , let , and denote its , and norms. Moreover, for any two vectors , we define as the Hadamard product of vectors and , whose components are for . For any given matrix , we use and to represent the Frobenius norm and spectral norm of matrix , respectively. In addition, we let be any two positive sequences. We write if there exists a universal constant such that and we write if we have and . Moreover, shares the same meaning with .

2.2 Over-parameterization for -regularized SVM

Given a random sample with denoting the covariates and denoting the corresponding label, we consider the following -regularized SVM:

| (1) |

where denotes the hinge loss and denotes the regularization parameter. Let denote the first term of the right-hand side of (1). Previous works have shown that the -regularized estimator of the optimization problem (1) and its extensions achieve a near-oracle rate of convergence to the true parameter peng2016error ; wang2021improved ; zhang2022statistical . In contrast, rather than imposing the regularization term in (1), we minimize the hinge loss function directly to obtain a sparse estimator. Specifically, we re-parameterize as , using two vectors and in . Consequently, can be reformulated as :

| (2) |

Note that the dimensionality of in (1) is , but a -dimensional parameter is involved in (2). This indicates that we over-parameterize via in (2). We briefly describe our motivation on over-parameterizing this way. Following Hoff2017 , . Thus, the optimization problem (1) translates to . For a better understanding of implicit regularization by over-parameterization, we set and , leading to . This incorporation of new parameters and effectively over-parameterizes the problem. Finally, we drop the explicit regularization term and perform gradient descent to minimize the empirical loss in (2), in line with techniques seen in neural network training, high-dimensional regression vaskevicius2019implicit ; li2021implicit ; zhao2022high , and high-dimensional single index models fan2022understanding .

2.3 Nesterov’s smoothing

It is well-known that the hinge loss function is not differentiable. As a result, traditional first-order optimization methods, such as the sub-gradient and stochastic gradient methods, converge slowly and are not suitable for large-scale problems zhou2010nesvm . Second-order methods, like the Newton and Quasi-Newton methods, can address this by replacing the hinge loss with a differentiable approximation chapelle2007training . Although these second-order methods might achieve better convergence rates, the computational cost associated with computing the Hessian matrix in each iteration is prohibitively high. Clearly, optimizing (2) using gradient-based methods may not be the best choice.

To address the trade-off between convergence rate and computational cost, we incorporate Nesterov’s method Nesterov2005 to smooth the hinge loss and then update the parameters via gradient descent. By employing Nesterov’s method, (2) can be reformulated as the following saddle point function:

where . According to Nesterov2005 , the above saddle point function can be smoothed by subtracting a prox-function , where is a strongly convex function of with a smoothness parameter . Throughout this paper, we select the prox-function as . Consequently, can be approximated by

| (3) |

Since is strongly convex, can be obtained by setting the gradient of the objective function in (3) to zero and has the explicit form:

| (4) |

For each sample point , , we use and to denote its hinge loss and the corresponding smoothed approximation, respectively. With different choices of for any in (4), the explicit form of can be written as

| (5) |

Note that the explicit solution (5) indicates that a larger yields a smoother with larger approximation error, and can be considered as a parameter that controls the trade-off between smoothness and approximation accuracy. The following theorem provides the theoretical guarantee of the approximation error. The proof can be directly derived from (5), and thus is omitted here.

Theorem 1.

For any random sample , , the corresponding hinge loss is bounded by its smooth approximation , and the approximation error is completely controlled by the smooth parameter . For any , we have

2.4 Implicit regularization via gradient descent

In this section, we apply gradient descent algorithm to in (3) by updating and to obtain the estimator of . Specifically, the gradients of (3) with respect to and can be directly obtained, with the form of and , respectively. Thus, the updates for and are given as

| (6) |

where denotes the step size. Once is obtained, we can update as via the over-parameterization of . Note that we cannot initialize the values of and as zero vectors because these vectors are stationary points of the algorithm. Given the sparsity of the true parameter with the support , ideally, and should be initialized with the same sparsity pattern as , with and being non-zero and the values outside the support being zero. However, such initialization is infeasible as is unknown. As an alternative, we initialize and as , where is a small constant. This initialization approach strikes a balance: it aligns with the sparsity assumption by keeping the zero component close to zero, while ensuring that the non-zero component begins with a non-zero value fan2022understanding .

We summarize the details of the proposed gradient descent method for high-dimensional sparse SVM in the following Algorithm 2.4.

We highlight three key advantages of Algorithm 2.4. First, the stopping condition for Algorithm 2.4 can be determined based on the value of in addition to the preset maximum iteration number . Specifically, when the values of are across all samples, the algorithm naturally stops as no further updates are made. Thus, serves as an intrinsic indicator for convergence, providing a more efficient stopping condition. Second, Algorithm 2.4 avoids heavy computational cost like the computation of the Hessian matrix. The main computational load comes from the vector multiplication in (6). Since a considerable portion of the elements in are either or , and the proportion of these elements increases substantially as decreases, the computation in (6) can be further simplified. Lastly, the utilization of Nesterov’s smoothing not only optimizes our approach but also aids in our theoretical derivations, as detailed in Appendix E.

3 Theoretical Analysis

In this section, we analyze the theoretical properties of Algorithm 2.4. The main result is the error bound , where is the minimizer of the population hinge loss function for without the norm: . We start by defining the -incoherence, a key assumption for our analysis.

Definition 1.

Let be a matrix with -normalized columns , i.e., for all . The coherence of the matrix is defined as

where denotes the -dimensional vector whose -th entry is if and otherwise. Then, the matrix is said to be satisfying -incoherence.

Coherence measures the suitability of measurement matrices in compressed sensing foucart2013invitation . Several techniques exist for constructing matrices with low coherence. One such approach involves utilizing sub-Gaussian matrices that satisfy the low-incoherence property with high probability donoho2005stable ; carin2011coherence . The Restricted Isometry Property (RIP) is another key measure for ensuring reliable sparse recovery in various applications vaskevicius2019implicit ; zhao2022high , but verifying RIP for a designed matrix is NP-hard, making it computationally challenging bandeira2013certifying . In contrast, coherence offers a computationally feasible metric for sparse regression donoho2005stable ; li2021implicit . Hence, the assumptions required in our main theorems can be verified within polynomial time, distinguishing them from the RIP assumption.

Recall that is the -sparse signal to be recovered. Let denote the index set that corresponds to the nonzero components of , and the size of is given by . Among the nonzero signal components of , we define the index set of strong signals as and of weak signals as for some constants . We denote and as the cardinalities of and , respectively. Furthermore, we use to represent the minimal strength for strong signals and to represent the condition number-the ratio of the largest absolute value of strong signals to the smallest. In this paper, we focus on the case that each nonzero signal in is either strong or weak, which means that . Regarding the input data and parameters in Algorithm 2.4, we introduce the following two structural assumptions.

Assumption 1.

The design matrix satisfies -incoherence with . In addition, every entry of is zero-mean sub-Gaussian random variable with bounded sub-Gaussian norm .

Assumption 2.

The initialization for gradient descent are , where the initialization size satisfies , the parameter of prox-function satisfies , and the step size satisfies .

Assumption 1 characterizes the distribution of the input data, which can be easily satisfied across a wide range of distributions. Interestingly, although our proof relies on Assumption 1, numerical results provide compelling evidence that it isn’t essential for the success of our method. This indicates that the constraints set by Assumption 1 can be relaxed in practical applications, as discussed in Section 4. The assumptions about the initialization size , the smoothness parameter , and the step size primarily stem from the theoretical induction of Algorithm 2.4. For instance, controls the strength of the estimated weak signals and error components, manages the approximation error in smoothing, and affects the accuracy of the estimation of strong signals. Our numerical simulations indicate that extremely small initialization size , step size , and smoothness parameter are not required to achieve the desired convergence results, highlighting the low computational burden of our method, with details found in Section 4. The primary theoretical result is summarized in the subsequent theorem.

Theorem 2.

Theorem 2 demonstrates that if contains nonzero signals, then with high probability, for any , the convergence rate of in terms of the norm can be achieved. Such a convergence rate matches the near-oracle rate of sparse SVMs and can be attained through explicit regularization using the norm penalty peng2016error ; zhou2022communication , as well as through concave penalties kharoubi2023high . Therefore, Theorem 2 indicates that with over-parameterization, the implicit regularization of gradient descent achieves the same effect as imposing an explicit regularization into the objective function in (1).

Proof Sketch. The ideas behind the proof of Theorem 2 are as follows. First, we can control the estimated strengths associated with the non-signal and weak signal components, denoted as and , to the order of the square root of the initialization size for up to steps. This provides an upper boundary on the stopping time. Also, the magnitude of determines the size of coordinates outside the signal support at the stopping time. The importance of choosing small initialization sizes and their role in achieving the desired statistical performance are further discussed in Section 4. On the other hand, each entry of the strong signal part, denoted as , increases exponentially with an accuracy of around near the true parameter within roughly steps. This establishes the left boundary of the stopping time. The following two Propositions summarize these results.

Proposition 1.

Proposition 2.

Consequently, by appropriately selecting the stopping time within the interval specified in Theorem 2, we can ensure convergence of the signal components and effectively control the error components. The final convergence rate can be obtained by combining the results from Proposition 1 and Proposition 2.

4 Numerical Study

In our simulations, unless otherwise specified, we follow a default setup. We generate independent observations, divided equally for training, validation, and testing. The true parameters is set to with a constant . Each entry of is sampled as zero-mean Gaussian random variable, and the labels are determined by a binomial distribution with probability . Default parameters are: true signal strength , number of signals , sample size , dimension , step size , smoothness parameter , and initialization size . For evaluation, we measure the estimation error using (for comparison with oracle estimator) and the prediction accuracy on the testing set with . Additionally, "False positive" and "True negative" metrics represent variable selection errors. Specifically, "False positive" means the true value is zero but detected as a signal, while "True negative" signifies a non-zero true value that isn’t detected. Results are primarily visualized employing shaded plots and boxplots, where the solid line depicts the median of runs and the shaded area marks the -th and -th percentiles over these runs.

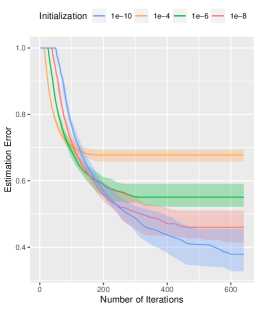

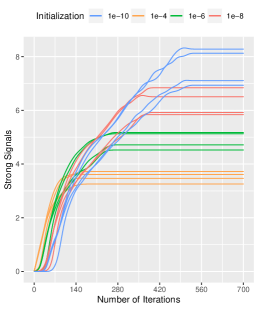

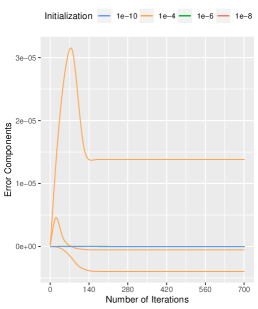

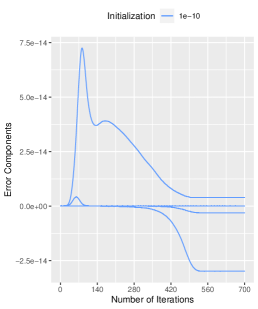

Effects of Small Initialization Size. We investigate the power of small initialization size on the performance of our algorithm. We set the initialization size , and other parameters are set by default. Figure 1 shows the importance of small initialization size in inducing exponential paths for the coordinates. Our simulations reveal that small initialization size leads to lower estimation errors and more precise signal recovery, while effectively constraining the error term to a negligible magnitude. Remarkably, although small initialization size might slow the convergence rate slightly, this trade-off is acceptable given the enhanced estimation accuracy.

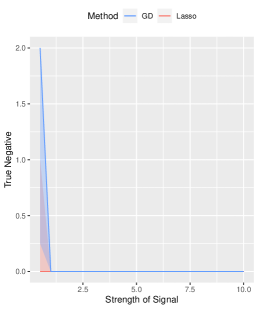

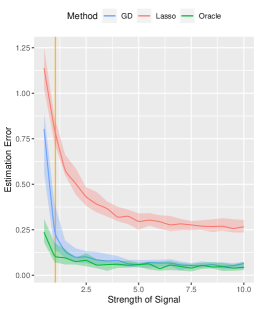

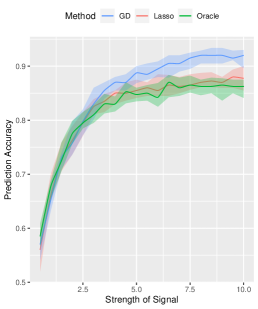

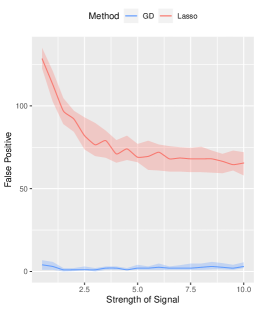

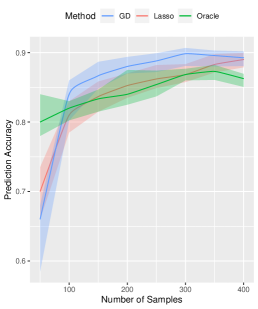

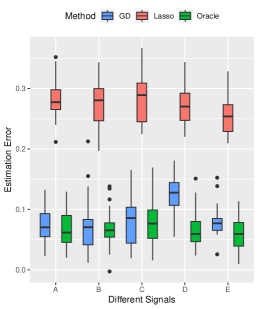

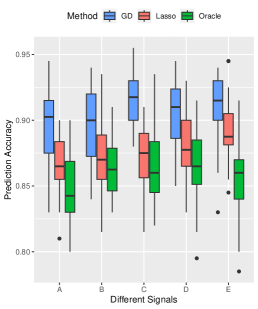

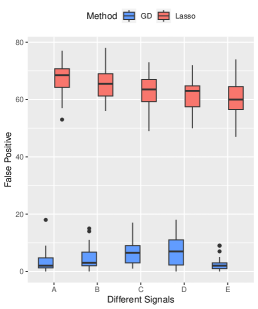

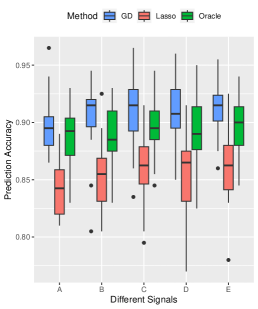

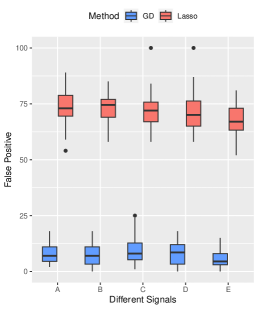

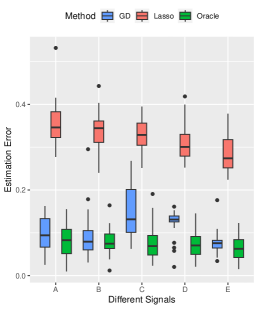

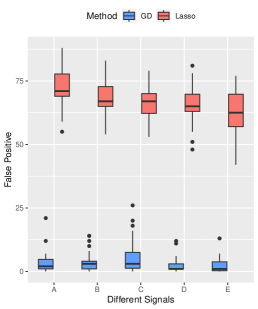

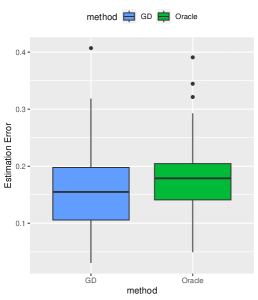

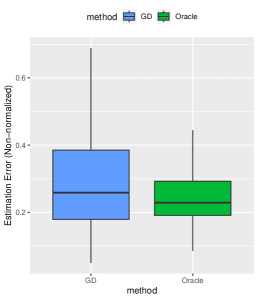

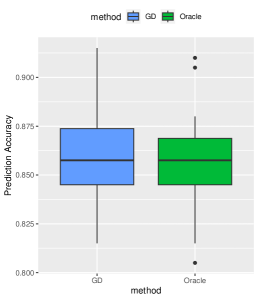

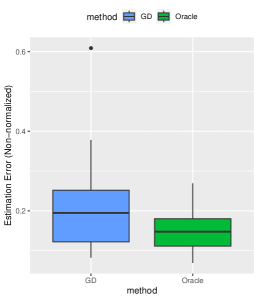

Effects of Signal Strength and Sample Size. We examine the influence of signal strength on the estimation accuracy of our algorithm. We set the true signal strength and keep other parameters at their default values. As depicted in Figure 2, we compare our method (denoted by GD) with -regularized SVM (denoted by Lasso method), and obtain the oracle solution (denoted by Oracle) using the true support information. We assess the advantages of our algorithm from three aspects. Firstly, in terms of estimation error, our method consistently outperforms the Lasso method across different signal strengths, approaching near-oracle performance. Secondly, all three methods achieve high prediction accuracy on the testing set. Lastly, when comparing variable selection performance, our method significantly surpasses the Lasso method in terms of false positive error. Since the true negative error of both methods is basically , we only present results for false positive error in Figure 2.

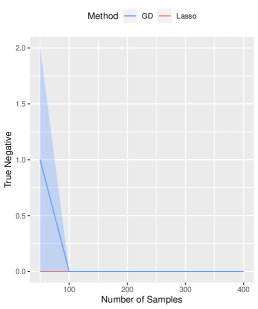

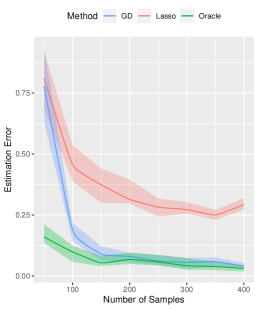

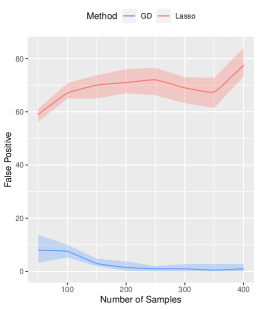

We further analyze the impact of sample size on our proposed algorithm. Keeping the true signal strength fixed at , we vary the sample size as for . Other parameters remain at their default values. Consistently, our method outperforms the Lasso method in estimation, prediction, and variable selection, see Figure 3 for a summary of the results.

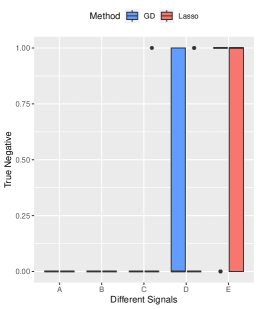

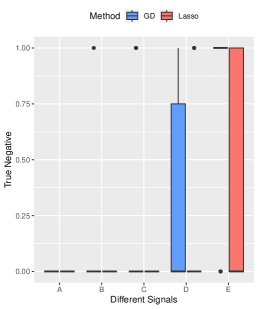

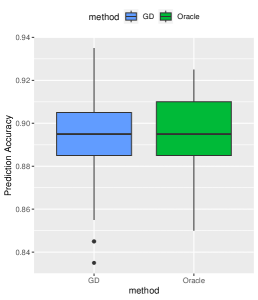

Performance on Complex Signal Structure. To examine the performance of our method under more complex signal structures, we select five signal structures: , , , , and . Other parameters are set by default. The results, summarized in Figure 4, highlight the consistent superiority of our method over the Lasso method in terms of prediction and variable selection performance, even approaching an oracle-like performance for complex signal structures. High prediction accuracy is achieved by the both methods.

Performance on Heavy-tailed Distribution. Although the sub-Gaussian distribution of input data is assumed in Assumption 1, we demonstrate that our method can be extended to a wider range of distributions. We conduct simulations under both uniform and heavy-tailed distributions. The simulation setup mirrors that of Figure 4, with the exception that we sample from a uniform distribution and a distribution, respectively. Results corresponding to the distribution are presented in Figure 5, and we can see that our method maintains strong performance, suggesting that the constraints of Assumption 1 can be substantially relaxed. Additional simulation results can be found in the Appendix A.

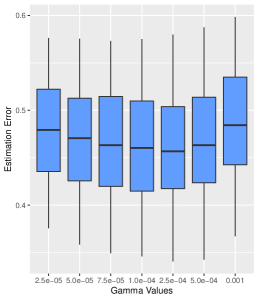

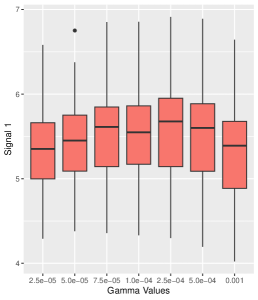

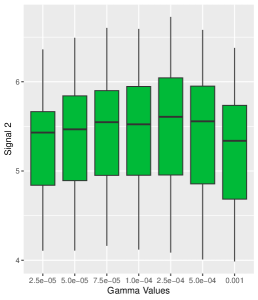

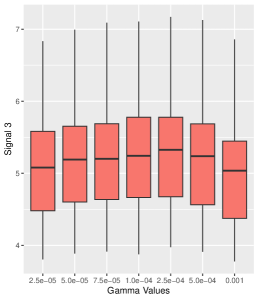

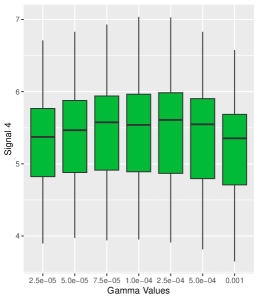

Sensitivity Analysis with respect to smoothness parameter . We analyze the impact of smoothness parameter on our proposed algorithm. Specifically, the detailed experimental setup follows the default configuration, and is set within the range . The simulations are replicated times, and the numerical results of estimation error and four estimated signal strengths are presented in Figure 6. From Figure 6, it’s evident that the choice of is relatively insensitive in the sense that the estimation errors and the estimated strengths of the signals under different s are very close. Furthermore, as increases, the estimation accuracy experiences a minor decline, but it remains within an acceptable range. See Appendix A for simulation results of Signal and Signal .

5 Conclusion

In this paper, we leverage over-parameterization to design an unregularized gradient-based algorithm for SVM and provide theoretical guarantees for implicit regularization. We employ Nesterov’s method to smooth the re-parameterized hinge loss function, which solves the difficulty of non-differentiability and improves computational efficiency. Note that our theory relies on the incoherence of the design matrix. It would be interesting to explore to what extent these assumptions can be relaxed, which is a topic of future work mentioned in other studies on implicit regularization. It is also promising to consider extending the current study to nonlinear SVMs, potentially incorporating the kernel technique to delve into the realm of implicit regularization in nonlinear classification. In summary, this paper not only provides novel theoretical results for over-parameterized SVMs but also enriches the literature on high-dimensional classification with implicit regularization.

6 Acknowledgements

The authors sincerely thank the anonymous reviewers, AC, and PCs for their valuable suggestions that have greatly improved the quality of our work. The authors also thank Professor Shaogao Lv for the fruitful and valuable discussions at the very beginning of this work. Dr. Xin He’s and Dr. Yang Bai’s work is supported by the Program for Innovative Research Team of Shanghai University of Finance and Economics and the Shanghai Research Center for Data Science and Decision Technology.

References

- [1] Tiago A Almeida, José María G Hidalgo, and Akebo Yamakami. Contributions to the study of sms spam filtering: new collection and results. In Proceedings of the 11th ACM symposium on Document engineering, pages 259–262, 2011.

- [2] Vassilis Apidopoulos, Tomaso Poggio, Lorenzo Rosasco, and Silvia Villa. Iterative regularization in classification via hinge loss diagonal descent. arXiv preprint arXiv:2212.12675, 2022.

- [3] Sanjeev Arora, Nadav Cohen, Wei Hu, and Yuping Luo. Implicit regularization in deep matrix factorization. Advances in Neural Information Processing Systems, 32, 2019.

- [4] MA Bahraoui and B Lemaire. Convergence of diagonally stationary sequences in convex optimization. Set-Valued Analysis, 2:49–61, 1994.

- [5] Afonso S Bandeira, Edgar Dobriban, Dustin G Mixon, and William F Sawin. Certifying the restricted isometry property is hard. IEEE transactions on information theory, 59(6):3448–3450, 2013.

- [6] Emmanuel J Candes and Terence Tao. Decoding by linear programming. IEEE transactions on information theory, 51(12):4203–4215, 2005.

- [7] Lawrence Carin, Dehong Liu, and Bin Guo. Coherence, compressive sensing, and random sensor arrays. IEEE Antennas and Propagation Magazine, 53(4):28–39, 2011.

- [8] Olivier Chapelle. Training a support vector machine in the primal. Neural computation, 19(5):1155–1178, 2007.

- [9] David L Donoho, Michael Elad, and Vladimir N Temlyakov. Stable recovery of sparse overcomplete representations in the presence of noise. IEEE Transactions on information theory, 52(1):6–18, 2005.

- [10] Jianqing Fan, Cong Ma, and Yiqiao Zhong. A selective overview of deep learning. Statistical science: a review journal of the Institute of Mathematical Statistics, 36(2):264, 2021.

- [11] Jianqing Fan, Zhuoran Yang, and Mengxin Yu. Understanding implicit regularization in over-parameterized single index model. Journal of the American Statistical Association, pages 1–14, 2022.

- [12] Simon Foucart, Holger Rauhut, Simon Foucart, and Holger Rauhut. An invitation to compressive sensing. Springer, 2013.

- [13] Suriya Gunasekar, Jason D Lee, Daniel Soudry, and Nati Srebro. Implicit bias of gradient descent on linear convolutional networks. Advances in neural information processing systems, 31, 2018.

- [14] Suriya Gunasekar, Blake E Woodworth, Srinadh Bhojanapalli, Behnam Neyshabur, and Nati Srebro. Implicit regularization in matrix factorization. Advances in Neural Information Processing Systems, 30, 2017.

- [15] Xin He, Shaogao Lv, and Junhui Wang. Variable selection for classification with derivative-induced regularization. Statistica Sinica, 30(4):2075–2103, 2020.

- [16] P. Hoff. Lasso, fractional norm and structured sparse estimation using a hadamard product parametrization. Computational Statistics Data Analysis, 115:186–198, 2017.

- [17] Yuan Huang, Jian Huang, Ben-Chang Shia, and Shuangge Ma. Identification of cancer genomic markers via integrative sparse boosting. Biostatistics, 13(3):509–522, 2012.

- [18] Martin Jaggi. Revisiting frank-wolfe: Projection-free sparse convex optimization. In International conference on machine learning, pages 427–435. PMLR, 2013.

- [19] Nitish Shirish Keskar, Dheevatsa Mudigere, Jorge Nocedal, Mikhail Smelyanskiy, and Ping Tak Peter Tang. On large-batch training for deep learning: Generalization gap and sharp minima. In International Conference on Learning Representations.

- [20] Rachid Kharoubi, Abdallah Mkhadri, and Karim Oualkacha. High-dimensional penalized bernstein support vector machines. arXiv preprint arXiv:2303.09066, 2023.

- [21] Simon Lacoste-Julien, Martin Jaggi, Mark Schmidt, and Patrick Pletscher. Block-coordinate frank-wolfe optimization for structural svms. In International Conference on Machine Learning, pages 53–61. PMLR, 2013.

- [22] Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. Deep learning. Nature, 521(7553):436–444, 2015.

- [23] Jiangyuan Li, Thanh Nguyen, Chinmay Hegde, and Ka Wai Wong. Implicit sparse regularization: The impact of depth and early stopping. Advances in Neural Information Processing Systems, 34:28298–28309, 2021.

- [24] Yuanzhi Li, Tengyu Ma, and Hongyang Zhang. Algorithmic regularization in over-parameterized matrix sensing and neural networks with quadratic activations. In Conference On Learning Theory, pages 2–47. PMLR, 2018.

- [25] Jianhao Ma and Salar Fattahi. Sign-rip: A robust restricted isometry property for low-rank matrix recovery. arXiv preprint arXiv:2102.02969, 2021.

- [26] Jianhao Ma and Salar Fattahi. Global convergence of sub-gradient method for robust matrix recovery: Small initialization, noisy measurements, and over-parameterization. arXiv preprint arXiv:2202.08788, 2022.

- [27] Cesare Molinari, Mathurin Massias, Lorenzo Rosasco, and Silvia Villa. Iterative regularization for convex regularizers. In International conference on artificial intelligence and statistics, pages 1684–1692. PMLR, 2021.

- [28] Y. Nesterov. Smooth minimization of non-smooth functions. Mathematical Programming, 103:127–152, 2005.

- [29] Behnam Neyshabur, Ryota Tomioka, Ruslan Salakhutdinov, and Nathan Srebro. Geometry of optimization and implicit regularization in deep learning. arXiv preprint arXiv:1705.03071, 2017.

- [30] Behnam Neyshabur, Ryota Tomioka, and Nathan Srebro. In search of the real inductive bias: On the role of implicit regularization in deep learning. International Conference on Learning Representations, 2015.

- [31] Daniel W Otter, Julian R Medina, and Jugal K Kalita. A survey of the usages of deep learning for natural language processing. IEEE transactions on neural networks and learning systems, 32(2):604–624, 2020.

- [32] Bo Peng, Lan Wang, and Yichao Wu. An error bound for l1-norm support vector machine coefficients in ultra-high dimension. The Journal of Machine Learning Research, 17(1):8279–8304, 2016.

- [33] Daniel Soudry, Elad Hoffer, Mor Shpigel Nacson, Suriya Gunasekar, and Nathan Srebro. The implicit bias of gradient descent on separable data. The Journal of Machine Learning Research, 19(1):2822–2878, 2018.

- [34] Kenya Tajima, Yoshihiro Hirohashi, Esmeraldo Ronnie Rey Zara, and Tsuyoshi Kato. Frank-wolfe algorithm for learning svm-type multi-category classifiers. In Proceedings of the 2021 SIAM International Conference on Data Mining (SDM), pages 432–440. SIAM, 2021.

- [35] Tomas Vaskevicius, Varun Kanade, and Patrick Rebeschini. Implicit regularization for optimal sparse recovery. Advances in Neural Information Processing Systems, 32, 2019.

- [36] Athanasios Voulodimos, Nikolaos Doulamis, Anastasios Doulamis, Eftychios Protopapadakis, et al. Deep learning for computer vision: A brief review. Computational intelligence and neuroscience, 2018, 2018.

- [37] Junhui Wang, Jiankun Liu, Yong Liu, et al. Improved learning rates of a functional lasso-type svm with sparse multi-kernel representation. Advances in Neural Information Processing Systems, 34:21467–21479, 2021.

- [38] Ashia C Wilson, Rebecca Roelofs, Mitchell Stern, Nati Srebro, and Benjamin Recht. The marginal value of adaptive gradient methods in machine learning. Advances in neural information processing systems, 30, 2017.

- [39] Blake Woodworth, Suriya Gunasekar, Jason D Lee, Edward Moroshko, Pedro Savarese, Itay Golan, Daniel Soudry, and Nathan Srebro. Kernel and rich regimes in overparametrized models. In Conference on Learning Theory, pages 3635–3673. PMLR, 2020.

- [40] Chulhee Yun, Suvrit Sra, and Ali Jadbabaie. Small nonlinearities in activation functions create bad local minima in neural networks. In International Conference on Learning Representations.

- [41] Hao Helen Zhang. Variable selection for support vector machines via smoothing spline anova. Statistica Sinica, pages 659–674, 2006.

- [42] Xiang Zhang, Yichao Wu, Lan Wang, and Runze Li. Variable selection for support vector machines in moderately high dimensions. Journal of the Royal Statistical Society Series B: Statistical Methodology, 78(1):53–76, 2016.

- [43] Yingying Zhang, Yan-Yong Zhao, and Heng Lian. Statistical rates of convergence for functional partially linear support vector machines for classification. Journal of Machine Learning Research, 23(156):1–24, 2022.

- [44] Peng Zhao, Yun Yang, and Qiao-Chu He. High-dimensional linear regression via implicit regularization. Biometrika, 109(4):1033–1046, 2022.

- [45] Tianyi Zhou, Dacheng Tao, and Xindong Wu. Nesvm: A fast gradient method for support vector machines. In 2010 IEEE International Conference on Data Mining, pages 679–688. IEEE, 2010.

- [46] Xingcai Zhou and Hao Shen. Communication-efficient distributed learning for high-dimensional support vector machines. Mathematics, 10(7):1029, 2022.

Appendix

The appendix is organized as follows.

In Appendix A, we provide supplementary explanations and additional experimental results for the numerical study.

In Appendix B, we discuss the performance of our method using other data generating schemes.

In Appendix C, we characterize the dynamics of the gradient descent algorithm for minimizing the Hadamard product re-parametrized smoothed hinge loss .

In Appendix D, we prove the main results stated in the paper.

Appendix A Additional Experimental Results

A.1 Effects of Small Initialization on Error Components

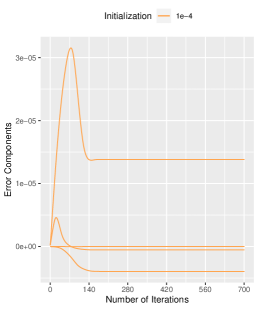

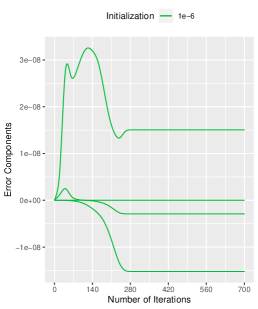

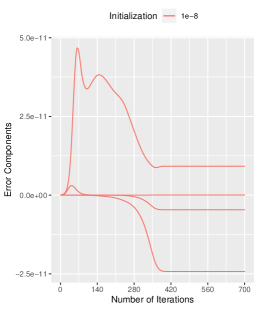

We present a comprehensive analysis of the impact of initialization on the error components, as depicted in Figure 7. Our results demonstrate that, while the initialization size does not alter the error trajectory, it significantly affects the error bounds. Numerically, when the initialization is , the maximum absolute value of the error components is around , which is bounded by the initialization size . Similarly, when the initialization is , the maximum absolute value of the error components is around , which is bounded by the initialization size . The same result is obtained for the other two initialization sizes. The specific numerical results we obtained validate the conclusions drawn in Proposition 1, as the error term satisfies

A.2 True Negative Results

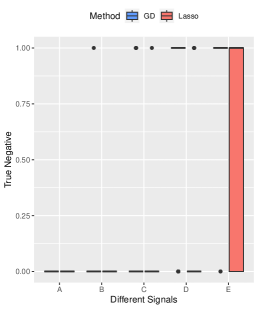

In the main text, we could not include the True Negative error figures in 2, 3, 4, and 5 due to space constraints. However, these figures are provided in Figure 8. We observe that both the gradient descent estimator and the Lasso estimator effectively detect the true signals across different settings.

A.3 Additional Results under Uniform Distribution

As previously described, we sample from a uniform distribution and set other parameters as follows: true signal structures are , , , , and . The number of signals is , the sample size is , dimension is , step size is , smoothness parameter is , and the initialization size is . The experimental results are summarized in Figure 9. As depicted in Figure 9, the gradient descent (GD) estimator consistently outperforms the Lasso estimator in terms of estimation accuracy and variable selection error. Both methods slightly surpass the oracle estimator in terms of prediction. However, this advantage mainly stems from the inevitable overestimation of the number of signals in the estimates by both the GD and Lasso methods.

A.4 Additional Results of Sensitivity Analysis

Within our sensitivity analysis, the simulation results for Signal and Signal are presented in Figure 10. We observe that the estimated strengths of the two signals exhibit similar distributions across different values of the smoothness parameter .

Appendix B Other Data Generating Schemes

In section 4, the scheme for generating in our original submission is based on a treatment similar to those in [41, 42], where the task of variable selection for the support vector machine is considered. In this section, we also introduce other generating schemes as adopted in [32, 15]. Specifically, we generate random data based on the following two models.

-

•

Model : , with nonzero elements for , , where is the cumulative density function of the standard normal distribution, and .

-

•

Model : , , , , , with diagonal entries equal to 1, nonzero entries for and other entries equal to . The bayes rule is with bayes error .

We follow the default parameter setup from Section 4, and the experiments are repeated times. The averaged estimation and prediction results are presented in Figure 11. From Figure 11, it’s evident that the GD estimator closely approximates the oracle estimator in terms of estimation error and prediction accuracy.

Appendix C Gradient Descent Dynamics

First, let’s recall the gradient descent updates. We over-parameterize by writing it as , where and are vectors. We then apply gradient descent to the following optimization problem:

where . The gradient descent updates with respect to and are given by

For the sake of convenience, let represent the gradients in the form of , where is a diagonal matrix composed of the elements of . Consequently, the -th element of can be expressed as , where . Subsequently, the updating rule can be rephrased as follows:

| (7) |

Furthermore, the error parts of and are denoted by and , which include both weak signal parts and pure error parts. In addition, strong signal parts of and are denoted by and , respectively.

We examine the dynamic changes of error components and strong signal components in two stages. Without loss of generality, we focus on analyzing entries where . The analysis for the case where and is similar and therefore not presented here. Specifically, within Stage One, we can ensure that with a high probability, for , the following results hold under Assumptions 1 and 2:

| strong signal dynamics: | (8) | |||

| error component dynamics: |

From (8), we can observe that for , the iterate reaches the desired performance level. Specifically, each component of the positive strong signal part increases exponentially in until it reaches . Meanwhile, the weak signal and error part remains bounded by . This observation highlights the significance of small initialization size for the gradient descent algorithm, as it restricts the error term. A smaller initialization leads to better estimation but with the trade-off of a slower convergence rate.

After each component of the strong signal reaches , Stage Two starts. In this stage, continues to grow at a slower rate and converges to the true parameter . After this time, after iterations, of the strong signal enters a desired interval, which is within a distance of from the true parameter . Simultaneously, the error term remains bounded by until iterations.

Appendix D Proof of Theorem 2

Proof.

We first utilize Proposition 1 to upper bound the error components . By Proposition 1, we are able to control the error parts of the same order as the initialization size within the time interval . Thus, by direct computation, we have

| (9) | ||||

where is based on the relationship between norm and norm and follows form the results in Proposition 1. As for the strong signal parts, by Proposition 2, that with probability at least , we obtain

| (10) |

Finally, combining (9) and (10), with probability at least , for any that belongs to the interval

it holds that

Since is much larger than , the last term, , is negligible. Considering the constants , and , we finally obtain the error bound of gradient descent estimator in terms of norm. Therefore, we conclude the proof of Theorem 2. ∎

Appendix E Proof of Propositions 1 and 2

In this section, we provide the proof for the two propositions mentioned in Section 3. First, we introduce some useful lemmas, which are about the coherence of the design matrix and the upper bound of sub-Gaussian random variables.

E.1 Technical Lemmas

Lemma 1.

(General Hoeffding’s inequality) Suppose that are independent mean- zero sub-Gaussian random variables and . Then, for every , we have

where and is an absolute constant.

Proof.

General Hoeffding’s inequality can be proofed directly by the independence of and the sub-Gaussian property. The detailed proof is omitted here. ∎

Lemma 2.

Suppose that is a -normalized matrix satisfying -incoherence; that is for any . Then, for -sparse vector , we have

where denotes the matrix whose -th column is if and otherwise.

E.2 Proof of Proposition 1

Proof.

Here we prove Proposition 1 by induction hypothesis. It holds that our initializations and satisfy our conclusion given in Proposition 1. As we initialize and for any fixed with

Proposition 1 holds when . In the following, we show that for any with , if the conclusion of Proposition 1 stands for all with , then it also stands at the -th step. From the gradient descent updating rule given in (7), the updates of the weak signals and errors , for any fixed are obtained as follows,

Recall that for , for any fixed , then we have

For ease of notation, we denote and . Then we can easily bound the weak signals and errors as follows,

| (11) |

By General Hoeffding’s inequality of sub-Gaussian random variables, with probability at least , we obtain , where is a constant. Since is much larger than , the inequality holds. Then, with probability at least , where is a constant, we have .

E.3 Proof of Proposition 2

Proof.

In order to analyze strong signals: for any fixed , we focus on the dynamics and . Following the updating rule (7), we have

| (12) | ||||

Without loss of generality, here we just analyze entries with . Analysis for the negative case with is almost the same and is thus omitted. We divide our analysis into two stages, as discussed in Appendix C.

Specifically, in Stage One, since we choose the initialization as , we obtain for any fixed , we show after iterations, the gradient descent coordinate will exceed . Therefore, all components of strong signal part will exceed half of the true parameters after iterations. Furthermore, we calculate the number of iterations required to achieve , where , in Stage Two. Thus, we conclude the proof of Proposition 2.

Stage One. First, we introduce some new notations. In -th iteration, according to the values of , we divide the set into three parts, , and , with cardinalities of , and , respectively. From [28, 45], most elements of are or and the proportion of these elements will rapidly increase with the decreasing of , which means that controlling in a small level.

According to the updating formula of in (12), we define the following form of element-wise bound for any fixed as

| (13) | ||||

Rewriting (13), we can easily get that

| (14) |

From (14), it is obvious that if the magnitude of is sufficiently small, we can easily conclude that . Therefore, our goal is to evaluate the magnitude of in (13) for any fixed . First, we focus on the term in (13). In particular, recall the definition of and we expand in the following form

For the term , by the condition and General Hoeffding’s inequality, we have

| (15) |

holds with probability at least , where is a constant.

For the term , let denote the matrix whose -th column is if . Then, we approximate based on the assumption on the design matrix via Lemma 2,

| (16) |

By (15), (16), condition of the minimal strength and condition , we have

| (17) |

Then, we can bound through

| (18) | ||||

Note that for any fixed , . By General Hoeffding’s inequality, we have with probability at least , and then based on the assumption of . Therefore, is always bounded by some constant greater than .

Recalling the definition of element-wise bound , and combining (17) and (18), we have that

| (19) |

where we use the bound of for any fixed in step and step is based on and . Thus, we conclude that is sufficiently small for any fixed .

Now, for any fixed , when , we can get the increment of for any fixed according to (19),

where follows from and holds since . Similarly, we can analyze to get that when ,

Therefore, increases at an exponential rate while decreases at an exponential rate. Stage One ends when exceeds , and our goal is to estimate that satisfies

Note that forms a decreasing sequence and thus is bounded by . Hence, it suffices to solve the following inequality for ,

which is equivalent to obtain satisfying

For , by direct calculation, we have

where follows from when and holds due to when as well as the assumption on and . Thus, we set such that for all , for all .

Stage Two. Define and . Next, we refine the element-wise bound according to (13). For any fixed , if there exists some such that and , then, based on the analytical thinking in Stage One, we can easily deduce that

| (20) |

Using this element-wise bound (20), we get the increment of and decrement of ,

We define as the smallest such that . Intuitively, suppose that the current estimate is between and , we aim to find the number of iterations required for the sequence to exceed . To obtain a sufficient condition for , we construct the following inequality,

Similar with Stage One, the sequence is bounded by . Then, it is sufficient to solve the following inequality for ,

To obtain a more precise upper bound of , we have

where is based on the condition , stands since when . By direct calculation, we obtain

| (21) | ||||

where we use when in step and in step , respectively. Recall the assumption with , then we get . Moreover, by the assumption on the initialization size , we have . Thus, we can bound in (21) as

| (22) |

where holds when . Combined (21) and (22), we calculate the final bound of as

for any . If there exists an such that , after iterations, we can guarantee that . Now recalling the definition of , we have . Therefore, with at most iterations, we have . By the assumption of , we obtain

Since the function is decreasing with respect to , we have after iterations that for any fixed . Thus, we complete the proof of Proposition 2. ∎