Importance Weighted Structure Learning for Scene Graph Generation

Abstract

Scene graph generation is a structured prediction task aiming to explicitly model objects and their relationships via constructing a visually-grounded scene graph for an input image. Currently, the message passing neural network based mean field variational Bayesian methodology is the ubiquitous solution for such a task, in which the variational inference objective is often assumed to be the classical evidence lower bound. However, the variational approximation inferred from such loose objective generally underestimates the underlying posterior, which often leads to inferior generation performance. In this paper, we propose a novel importance weighted structure learning method aiming to approximate the underlying log-partition function with a tighter importance weighted lower bound, which is computed from multiple samples drawn from a reparameterizable Gumbel-Softmax sampler. A generic entropic mirror descent algorithm is applied to solve the resulting constrained variational inference task. The proposed method achieves the state-of-the-art performance on various popular scene graph generation benchmarks.

Index Terms:

Scene Graph Generation, Structured Prediction, Message Passing Neural Network, Importance Weighted Variational Inference.1 Introduction

As a structured prediction task, scene graph generation (SGG) aims to construct a visually-grounded scene graph for an input image, in which its potential objects as well as their relevant relationships are explicitly modelled. Such fundamental scene understanding task could potentially facilitate the downstream computer vision tasks, such as image captioning [1], [2], [3] and visual question answering [4], [5], [6]. Given an input image , SGG aims to infer the optimum interpretations by a max aposteriori (MAP) estimation , where is applied to parameterize the underlying posterior . Due to the exponential dependencies among the output variables, it is often computationally intractable to directly compute .

To this end, current SGG models generally follow a variational Bayesian (VB) [7], [8] framework, in which the variational inference step aims to approximate with a computationally tractable variational distribution , while the variaitonal learning step tries to fit the underlying posterior for the ground-truth training samples via a cross entropy loss. To estimate the optimum and , one needs to alternate the above variational inference and learning steps. For tractability, in current SGG models [9], [10], [11], [12], [13], [14], [15], [16], the variational distribution is often assumed to be fully decomposed as , where is the interpretation of one of the potential object and relationship region proposals and represents the corresponding local variational approximation. The resulting VB framework is also known as the mean field variational Bayesian (MFVB) [7], [8], and the associated variational inference step is also called mean field variational inference (MFVI) [7], [8].

The above MFVI step in current SGG tasks is often formulated using message passing neural network (MPNN) models [15], [16], [17], [18], [19], which require two fundamental modules to be constructed: visual perception and visual context reasoning [20]. Such formulation combines the superior feature representation learning capability of the deep neural networks and the inference capability of the classical MFVI. As a result, the above MPNN-based MFVI methodology has became the ubiquitous solution for current SGG tasks [15], [16], [17], [18], [19]. In the above formulation, a classical evidence lower bound (ELBO) [21] is often implicitly (since the message passing optimization method do not need to explicitly maximize it) chosen as the variational inference objective.

However, the variational approximation inferred from such loose ELBO objective generally underestimates the underlying complex posterior [21], which often leads to inferior generation performance. In other words, the classical ELBO objective does not achieve a balanced bias-variance trade-off, since it generates overly simplified representations which fail to use the entire modeling capability of the network [22]. This perhaps partly explains the fact that the detection performance of the current SGG models fall short of our expectations.

To solve the above issue, in this paper, we propose a novel importance weighted structure learning (IWSL) method, which employs a tighter importance weighted lower bound [22] to replace the classical loose ELBO [21] as the variational inference objective. Such importance weighted approximation is essentially a lower bound of the underlying log-partition function [22], which is estimated from the multiple samples drawn from a reparameterizable Gumbel-Softmax sampler [23], [24]. Unlike the classical MPNN-based SGG models, the proposed IWSL method requires to solve a constrained variational inference step. Basically, it aims to explicitly maximize the importance weighted lower bound objective, subject to the constraint that the categorical probability approximated by a Gumbel-Softmax variational distribution resides in a probability simplex. To this end, a generic constrained optimization algorithm - entropic mirror descent [25] - is applied to infer the optimum interpretation from the input image. The proposed IWSL method achieves the state-of-the-art performance on two popular scene graph generation benchmarks: Visual Genome and Open Images V6.

This paper is organized as follows: Section 2 presents the related works while Section 3 demonstrates the proposed importance weighted structure learning methodology. The experimental results and the corresponding analysis are elaborated in Section 4. Finally, the conclusions are drawn in Section 5.

2 Related Works

Current SGG models follow two main research directions: pursuing a superior feature extracting structure or implementing an unbiased relationship prediction. The former aims to improve the feature representation learning capabilities of the neural network models, while the latter focuses on overcoming the problem of bias (which mainly detects the dominant relationship categories with abundant training samples and largely ignores the informative ones with fewer training samples) in the learnt relationship prediction, caused by a long-tail data distribution.

For the first direction, [3], [11], [17], [26], [27] devise novel MPNN models while [26], [13], [15], [12], [19] embed the relevant contextual structural information into the current MPNN models. For the second direction, various debiasing methodologies have been proposed to solve the biased relationship prediction problem. For instance, dataset resampling [28], [29], [30], instance-level resampling [31], [32], bi-level data resampling [16], knowledge transfer learning [33], [34], [35] and loss reweighting based on instance frequency [36], [37] are the various remedies suggested in the literature. Unlike the above traditional debiasing methods, [38] removes the harmful bias from the good context bias based on the counterfactual causality (via calculating the Total Direct Effect with the help of a causal graph).

Most of the above SGG models [39], [27], [13], [10], [19], [16] tend to rely on a unified MPNN-based MFVB methodology. Such formulation generally employs the ELBO as the variational inference objective, in which the resulting variational approximation derived from ELBO often underestimates the underlying complex posterior [22]. In contrary, we use a tighter importance weighted lower bound as the variational inference objective in the proposed IWSL method, and solve the resulting constrained variational inference task via a generic entropic mirror descent strategy rather than the traditional message passing technique. Specifically, various samples drawn from a reparameterizable Gumbel-Softmax sampler [23], [24] are applied to compute the above importance weighted lower bound.

The above strictly tighter importance weighted lower bound is firstly introduced in the importance weighted autoencoder (IWAE) [22], which is a generative model with the same architecture as the classical variational autoencoder (VAE). In particular, the recognition network in IWAE relies on multiple samples to approximate the posterior, which increases the flexibility to model complex posteriors which do not fit the VAE modeling assumptions. Moreover, due to the inability to backpropagate through samples, the output categorical latent variables in SGG tasks are rarely employed in the stochastic neural networks. To this end, in this paper, instead of producing non-differentiable samples from a categorical distribution, Gumbel-Softmax sampler is utilized to draw differentiable samples from a novel Gumbel-Softmax distribution [23], [24]. Due to the applied explicit reparameterization function, it is quite easy to construct an efficient gradient estimator.

3 Proposed Methodology

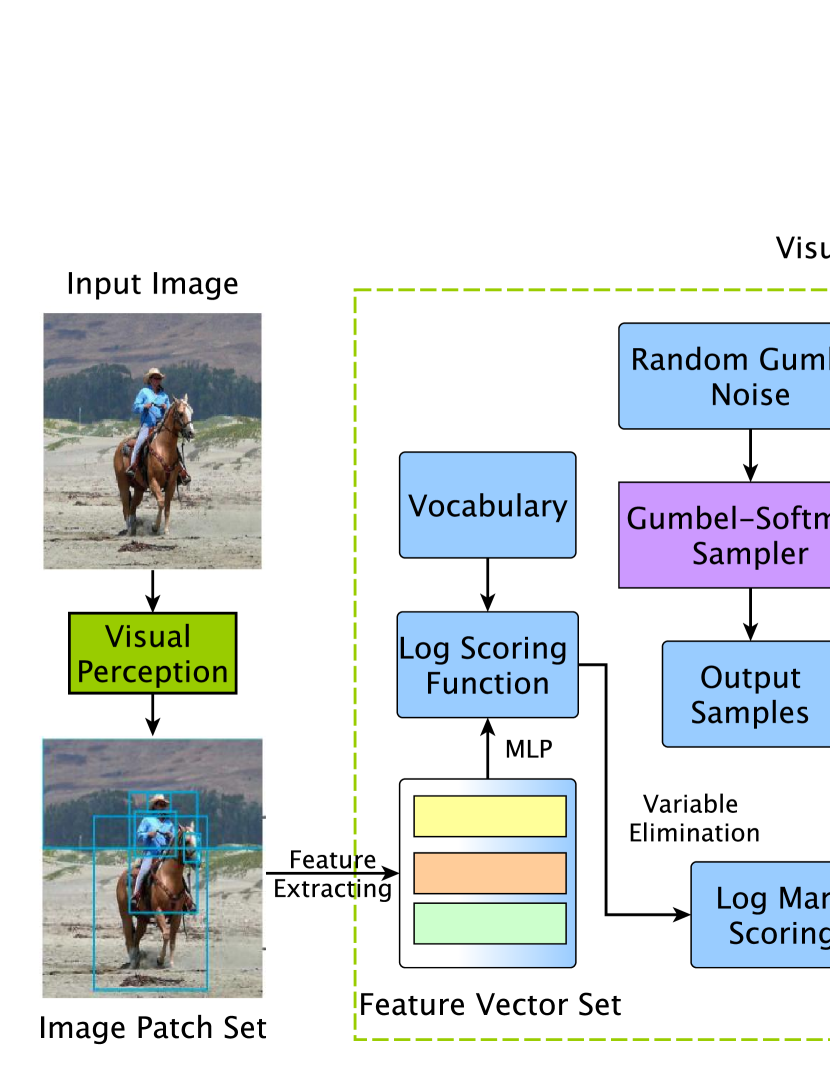

In this section, we first present the problem formulation, followed by the proposed scoring function and the relevant Gumbel-Softmax sampler. Finally, the importance weighted structure learning and the adopted entropic mirror descent inference strategy are also discussed in the last two subsections. Fig.1 demonstrates the overview of the proposed IWSL method.

3.1 Problem Formulation

As a structured prediction task, scene graph generation aims to build a visually-grounded scene graph for an input image by explicitly identifying the scene objects and their relevant relationships. In the current SGG settings, the scene graph includes a list of intertwined semantic triplet structures, in which each triplet consists of three components: a subject, a predicate and an object. Specifically, the current SGG tasks only focus on inferring the pairwise relationships, where the relationship between two interacting instances (subject and object) in an input image is termed as a predicate.

Generally, SGG task aims to infer the optimum interpretations from the input image via a MAP estimation , in which the underlying posterior can be computed as follows:

| (1) |

where is a scoring function of the probabilistic graphical model (e.g. conditional random field [41]) of the SGG task, which is used to measure the similarity/compatibility between the input variable and the output variable . is the relevant partition function or normalizing constant. Due to the exponential combinatorial dependencies existing in the SGG task, is generally computationally intractable, which implies that it can only be estimated by certain approximation strategies.

To this end, a classical variational inference (VI) [7], [8] technique is generally employed to estimate the computationally intractable log-partition function in current SGG models. For tractability, the computationally tractable variational distribution is often assumed to be fully decomposed and the resulting inference technique is known as mean field variational inference (MFVI) [7], [8]. Besides the above variational inference step, one still requires another variational learning step to fit the underlying posterior with the ground-truth training samples. Such formulation is also known as mean field variational Bayesian (MFVB) [7], [8] framework, in which the optimum and are obtained by alternating the above inference and learning steps. More importantly, for MFVI, the original MAP inference in SGG task can be transformed into a corresponding marginal inference task, which is not the case for other VI techniques [42].

In the current SGG modelling approaches, a classical cross-entropy loss is generally employed to solve the variaitional learning step, while the above MFVI step is commonly formulated using a message passing neural network (MPNN) [43], [44], [45], [46] model, in which the traditional ELBO is invariably implicitly chosen as the variational inference objective. As a result, the MPNN-based MFVB formulation has became the de facto solution for current SGG tasks. In this MPNN-based MFVB framework, two fundamental modules are required, namely, visual perception and visual context reasoning. The former aims to locate and instantiate the objects and predicates within the input image, while the latter tries to infer their consistent interpretation.

Specifically, given an input image , visual perception module aims to generate a set of object region proposals , as well as a set of predicate region proposals , where and represent the number of objects and predicates detected in the input image, respectively. Correspondingly, the input image can be divided into two sets of image patches and . One can extract the relevant fixed-sized latent feature representation sets and by applying a ROI pooling on the feature maps obtained from the visual perception module. Given a set of object classes and a set of relationship categories , a visual context reasoning module aims to infer the resulting object and predicate interpretation sets and based on the above latent feature representation sets.

However, the variational distribution derived from the above loose ELBO objective often underestimates the underlying posterior [21], which leads to inferior generation performance. To this end, in this paper, we propose a novel importance weighted structure learning (IWSL) method, which employs a tighter (which is explicitly explained in Section 3.4) importance weighted lower bound to replace the classical ELBO as the variational inference objective. Instead of relying on the classical message passing technique, the proposed IWSL method applies a generic entropic mirror descent algorithm to accomplish the resulting constrained variational inference task. The proposed generic IWSL methodology allows us to increase the model complexity so that one can find a balanced bias-variance trade-off. In other words, the resulting variational distribution derived from the tighter importance weighted lower bound objective facilitates a better exploration of the complex multiple-modal behaviour of the underlying posterior.

3.2 Scoring Function

As a structured prediction task, scene graph generation can be generally formulated using a probabilistic graphical model, e.g. a conditional random field (CRF) [41]. With such probabilistic graphical model, one can model the conditional dependencies among the relevant variables by devising a non-negative scoring function , where is used to parameterize the scoring function.

Basically, measures the similarity or compatibility between the input image and the output interpretations , which is often defined as follows:

| (2) |

where is a clique within a clique set (which is defined by the corresponding graph structure), is a non-negative factor function which models the dependencies between and . Generally, the underlying posterior related to is often assumed to be a Gibbs distribution in traditional VB framework [7]. Correspondingly, often takes the exponential form and the log scoring function is computed as follows:

| (3) |

where is also known as a potential function. In current SGG models, we have two types of potential functions: the unary potential function and the pairwise/binary potential function .

Currently, only local contextual information is considered in most previous SGG models, while the global contextual information is largely ignored. In this paper, we aim to compute a latent global feature representation from the global region proposal , where is obtained by the union of all the associated object/predicate region proposals in the input image. Correspondingly, is the relevant global image patch of , and is its interpretation.

With the above definitions, by adding two types of pairwise potential terms and , one could incorporate the global contextual information into the following applied log scoring function:

| (4) |

where the superscripts , , represent the object, the predicate and the global context, respectively. is the set of neighbouring nodes around the target . It is worth to note the latent feature representations are implicitly embedded in the above formulation.

3.3 Gumbel-Softmax Sampler

In SGG tasks, the output variables are generally assumed as categorical variables, which are rarely applied in stochastic neural networks due to the inability to compute and backpropagate the gradients of the associated discrete distributions [23], [24]. To this end, instead of generating non-differentiable samples from a categorical distribution, a Gumbel-Softamx sampler [23], [24] is employed to produce differentiable samples drawn from a novel Gumbel-Softmax distribution. Such sampler is generally reparameterizable, in which an efficient gradient estimator can be easily implemented.

Suppose be the interpretation of a potential region proposal, it can be modelled as a categorical variable with the class probabilities (where is the number of hypotheses for ), and the output categorical variables in SGG are encoded as -dimensional one-hot vectors locating on the corners of the -dimensional simplex, . The reparameterization function in Gumbel-Softmax sampler is defined as follows:

| (5) |

where represents a -dimensional Gumbel noise, is the output -dimensional sample vector and its -th element is computed as follows:

| (6) |

where is the softmax temperature. The samples drawn from the Gumbel-Softmax sampler become one-hot vectors when annealing the softmax temperature to zero. In reality, is often annealed to a relatively low temperature instead of zero.

3.4 Importance Weighted Structure Learning

In traditional VB framework, ELBO is generally employed as the variational inference objective and the resulting maximization of ELBO is used to approximate the computationally intractable log-partition function [21]. Given a scoring function and a computationally tractable variational distribution , one can easily derive the following:

| (7) |

where, on the right-hand side of the equation, the first term is the so-called ELBO and the second term is the Kullback–Leibler (KL) divergence between the variational distribution and the underlying posterior . Clearly, ELBO is lower bound of since the KL divergence term is non-negative. However, such loose ELBO objective often leads to overly simplified model, which may not capture the complicated multi-modal structure of the underlying posterior [22].

To this end, a tighter lower bound based on -sample importance weighting [22] is employed to replace the classical ELBO in this paper. Such lower bound is also known as importance weighted lower bound, which is defined as follows:

| (8) |

where represents the number of samples, in which each is an random sample drawn from the variational distribution . is also known as the importance weight, and is the importance weighted lower bound of when is a relatively small value. Essentially, becomes an unbiased estimator of when reaches infinity. In particular, the traditional ELBO is just a special case of the importance weighted lower bound (when setting ). Using more samples could only improve the tightness of the bound [22]. Therefore, compared with the traditional ELBO, is a much tighter lower bound of the log partition function .

For tractability, the above variational distribution is often assumed to be fully decomposed as:

| (9) |

where and are local variational approximations for the objects and predicates in the output scene graph, respectively. and are the sizes of vocabularies for the objects and predicates, respectively. Such inference procedure is also known as mean field variational inference (MFVI) [7], [8]. In MFVI, the MAP inference formulated in the first subsection can be transformed into a corresponding marginal inference task, which may not be the case in general [42].

Given a potential region proposal , the corresponding local log marginal posterior is computed as follows:

| (10) |

where is the computationally intractable log partition function, and (where represents marginalization over all nodes except the node ) is the local log marginal scoring function of , which is generally obtained by a variable elimination technique.

Specifically, for a potential object region proposal , it is computed as follows:

| (11) |

while for a potential predicate region proposal , it is obtained by:

| (12) |

In the above two equations, means an inner product, and are the output variables for an object and a predicate, which are generated by a Gumbel-Softmax sampler. is a relevant global region proposal interpretation set. The feature representation learning functions , , , , , , are constructed by combing visual perception modules and multi-layer perceptrons (MLPs), which are parameterized by . Each of these functions will first map the input image patches into the corresponding feature representations via the visual perception module, and then obtain the resulting dimensional feature vector by feeding relevant into the corresponding MLP. Most importantly, the MLPs implicitly perform the potential function marginalization prescribed in Equations (11) and (12). The resulting log score is essentially the inner product of the above dimensional feature vector and the corresponding -dimensional vector .

To approximate the computationally intractable , in this paper, we employ the following constrained variational inference objective :

| (13) |

where represents the -sample importance weighted lower bound, and the local variational approximation is set to a Gumbel-Softmax distribution with a categorical probability . represent the samples drawn from . Since the local Gumbel-Softmax variational distribution is reparameterizable, based on the Gumbel-Softmax sampler in the above subsection, can be formulated as follows:

| (14) |

where is -dimensional Gumbel noise drawn from a Gumbel distribution , which is fed into the Gumbel-Softmax reparameterization function to explicitly compute the corresponding output sample .

As a result, the above expectation can be approximated using a Monte Carlo estimator as follows:

| (15) |

where the efficient computation of the log importance weight is essential for computing the above . Specifically, can be easily computed based on Equation (11) and (12), while the above log probability is approximated as follows:

| (16) |

where represents the norm while is the maximum value of .

With the above , one can compute the target log marginal posterior via a corresponding surrogate logit :

| (17) |

where is a relevant constant w.r.t. and . According to the trick, one can compute by ignoring the above constant :

| (18) |

where the optimum interpretation of the input region proposal is computed as .

Moreover, a classical cross-entropy loss is employed in the relevant variational learning step to fit the above with the ground-truth training samples:

| (19) |

where represents the variational learning objective, is the number of training images in a mini-batch, is the ground-truth scene graph of the input image .

Input region proposal , categorical probability , number of samples , Gumbel noise distribution , Gumbel-Softmax reparameterization function , learning rate , softmax temperature , minimum temperature , temperature annealing rate , number of iterations

Output ,

Finally, to better illustrate the proposed IWSL method, we summarize it in Algorithm 1. As a MFVB framework, the proposed IWSL method consists of two procedures: variational inference and variational learning. In particular, the variational inference procedure includes steps 3-7, while steps 8-9 represent the variational learning procedure. The temperature annealing process is accomplished in step 10. For computational efficiency, the number of samples in variational inference is often smaller than the one applied in variational learning.

Specifically, in variational inference, one first randomly initialize a categorical probability for a potential region proposal , and then draw Gumbel noise samples from a Gumbel noise distribution . The output samples are explicitly computed by feeding the above Gumbel noises into a Gumbel-Softmax reparameterization function . With the Equations (11), (12) and (16), one can easily compute the relevant importance weight and approximate the corresponding importance weighted lower bound via an Monte Carlo estimation. Finally, an entropic mirror descent (EMD) (which is introduced in the following subsection) method is applied to solve the resulting constrained variational inference task and obtain the optimum categorical probability . In variational learning, according to Equation (17), one can first compute a surrogate logit based on the above updated optimum categorical probability , and then obtain the resulting using the trick. Finally, we compute the variational learning objective and then update the corresponding according to the stochastic gradient descent method.

With the above variational inference and learning procedures, in the training period, one can obtain the optimum and . In the test period, and are fixed to and , respectively. In particular, one discards the steps 9 and 10 in Algorithm 1, and generates the optimum interpretations .

3.5 Entropic Mirror Descent

As demonstrated in Equation (13), the variational inference procedure in the proposed method requires us to solve a constrained optimization problem. Specifically, to approximate the computationally intractable log partition function , one needs to maximize the -sample importance weighted lower bound , subject to the constraint that the categorical probability resides in -simplex.

Input variational distribution , importance weighted lower bound , number of iterations , an initial learning rate , a predefined objective , a small positive value

Output optimum

Among the existing constrained optimization algorithms, entropic mirror descent (EMD) [25] method is chosen as the applied variational inference methodology, as demonstrated in Algorithm 2. Since the above constraint is a probability simplex, the negative entropy can naturally be employed as a specific function to construct the required Bregman distance [47]. Due to the utilization of the geometry of the optimization problem [48], EMD generally converges faster than the classical projected gradient descent methods [49].

| Method | PredCls | SGCls | SGDet | SGDet(R@100) | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| mR@50 | mR@100 | mR@50 | mR@100 | mR@50 | mR@100 | Head | Body | Tail | Mean | |

| RelDN†[39] | ||||||||||

| Motifs[27] | ||||||||||

| Motifs*[27] | ||||||||||

| G-RCNN†[13] | ||||||||||

| MSDN†[10] | ||||||||||

| GPS-Net†[19] | ||||||||||

| GPS-Net†∗[19] | ||||||||||

| VCTree-TDE[38] | ||||||||||

| BGNN[16] | ||||||||||

| IWSL | ||||||||||

-

•

Note: All the above methods apply ResNeXt-101-FPN as the backbone. means the re-sampling strategy [31] is applied in this method, and depicts the reproduced results with the latest code from the authors. Using bold to represent the proposed method.

4 Experiments

In this section, we first validate the proposed IWSL method by comparing it with various state-of-the-art SGG models on two popular benchmarks: Visual Genome [50] and Open Images V6 [51], respectively. Finally, the ablation study and the visualization results are presented and discussed in the last two subsections.

4.1 Visual Genome

4.1.1 Experiment Configuration

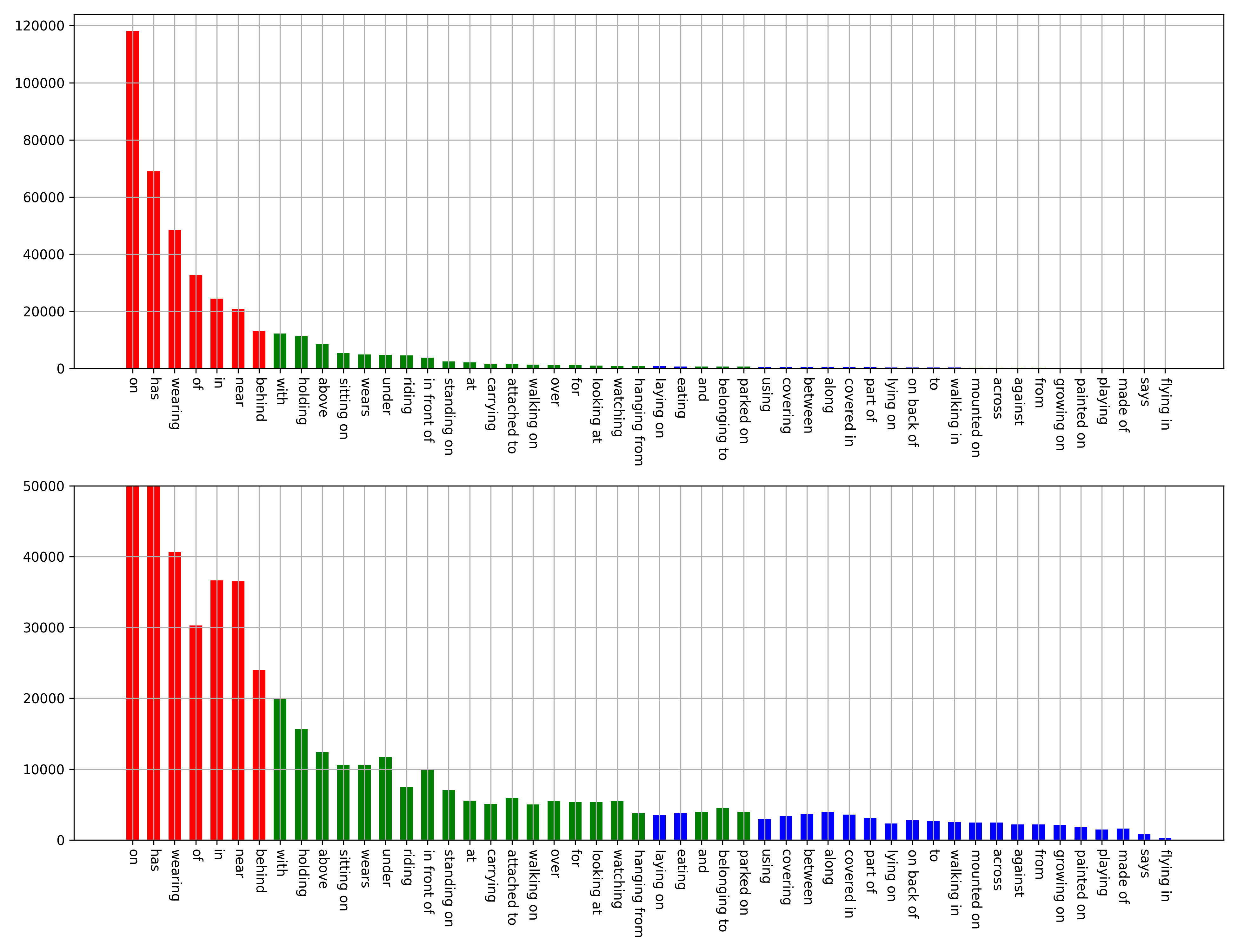

Benchmark: Visual Genome [50] is the most common scene graph generation benchmark, which consists of 108,077 images with an average of 38 objects and 22 relationships per image. In this experiment, we employ the data split protocol as in [9], in which the most frequent 150 object categories and 50 predicate classes are selected. Furthermore, we split the Visual Genome into a training set () and a test set (). For validation, an evaluation set () is randomly selected from the training set. Following [52], according to the number of objects in training split, the relevant categories are divided into three disjoint sets: (more than ), () and (less than ), as demonstrated in Fig.2.

Evaluation Metrics: Due to the reporting bias caused by the data imbalance, the mean Recall () rather than the common Recall () is chosen as the evaluation metric in this experiment. Compared with which only concentrates on common predicates (e.g. ) with abundant training samples, focuses on the informative predicate categories (e.g. ) with much less training samples. Three tasks are applied to validate the proposed method, namely, Predicate Classification (PredCls), Scene Graph Classification (SGCls) and Scene Graph Detection (SGDet). Specifically, PredCls aims to predict the predicate labels, given the input image, the ground-truth bounding boxes and object labels; SGCls tries to predict the labels for objects and predicates, given the input image and the ground-truth bounding boxes; SGDet constructs the output scene graph from the input image.

Implementation Details: Following [38], ResNeXt-101-FPN [53] and Faster-RCNN [40] are chosen as the backbone and the object detector, respectively. Like previous methods, we choose the step training strategy and freeze the above visual perception models during training. As in [16], a bi-level data resampling strategy is adopted to achieve an effective trade-off between the head and the tail categories, which consists of image-level over-sampling and instance-level under-sampling. The former creates a random permutation of images, repeating each image according to its repeat factor in each epoch, while the latter achieves under-sampling according to a drop-out probability for instances of different predicate classes in each image. Specifically, we set the repeat factor and the instance drop rate . The batch size is set to 12, and an SGD optimizer with a learning rate of is applied in this experiment. The number of samples is set to in the variational inference step. For the variational learning step, is set to in the SGDet task and in the PredCls and SGCls tasks.

| PredCls | SGCls | SGDet | ||||

|---|---|---|---|---|---|---|

| Method | mR@50 | mR@100 | mR@50 | mR@100 | mR@50 | mR@100 |

| Motifs+BA[35] | ||||||

| VCTree+BA[35] | ||||||

| Transformer+BA[35] | ||||||

| IWSL+BA | ||||||

-

•

Note: All the above methods apply the same balance adjustment strategy as in [35]. Using bold to represent the proposed method.

4.1.2 Comparisons with State-of-the-art Methods

As demonstrated in Table 1, besides achieving comparable performance with the latest BGNN algorithm in the PredCls task, the proposed IWSL method outperforms the previous state-of-the-art SGG models by a large margin in the remaining SGCls and SGDet tasks. For instance, for the most difficult yet representative SGDet task, compared with the latest BGNN model, the proposed IWSL method achieves and performance gain, respectively. Moreover, such performance is obtained with a quite small number of training iterations (which is usually set to ). This is mainly because the applied generic entropic mirror descent method converges faster than the classical message passing strategy.

Moreover, we also compare the SGDet performance () on the long-tail categorical groups in Table 1, in which the proposed IWSL method archives the best mean performance. For the informative and category groups, the proposed IWSL method outperforms the state-of-the-art SGG models by a large margin. Unlike the previous models, which focus on detecting the common predicate categories, the proposed IWSL method aims to improve the informative predicate detection capability. In other words, the proposed IWSL method could mitigate the intrinsic biased predicate prediction problem caused by the long-tail data distribution exhibited in Visual Genome.

Generally, two types of imbalance lead to the above biased predicate prediction problem, namely the semantic space imbalance and the training sample imbalance. By adopting a generic balance adjustment (BA) strategy [35] in the proposed IWSL method, the resulting IWSL+BA algorithm improves the informative predicate detection capability further. Specifically, to overcome the above two types of imbalance, two procedures are devised in the generic balance adjustment strategy: semantic adjustment and balanced predicate learning. The former aims to induce the predictions by the IWSL method to be more informative via constructing an appropriate transition matrix, while the latter tries to extend the sampling space for informative predicates.

As demonstrated in Table 2, for a fair comparison, the proposed IWSL+BA method is compared with three baseline models as presented in [35]. It can be seen that the proposed IWSL+BA method outperforms the previous state-of-the-art models by a large margin, especially for the PredCls task. This is mainly thanks to: 1) the transition matrix, introduced by the semantic adjustment procedure, which maps the predictions from the IWSL method to more informative ones; 2) more balanced training samples drawn by the balanced predicate learning procedure, which discards some redundant training samples of the common group, while keeping the training samples in the informative and groups.

4.2 Open Images V6

4.2.1 Experiment Configuration

Benchmark: With a superior annotation quality, Open Images V6 [51] is another popular scene graph generation benchmark, which is constructed by Google. It includes 126,368 training images, 5322 test images and 1813 validation images. In this experiment, we adopt the same data processing protocols as in [19], [39], [51].

Evaluation Metrics: According to the evaluation protocols in [19], [39], [51], in this experiment, we choose the following evaluation metrics: the mean Recall (), the regular Recall (), the weighted mean AP of relationships () and the weighted mean AP of phrase (). Like [19], [51], [39], the weight metric score is defined as: .

Implementation Details: Similar to the previous experiment in Visual Genome, we choose ResNeXt-101-FPN [53] and Faster-RCNN [40] as the backbone and the object detector, respectively. We employ the step training strategy and freeze the above visual perception models. The same bi-level data resampling strategy is adopted as in the previous experiment. We set the batch size and utilize an Adam optimizer with the learning rate of . For the variational inference step, the number of samples is set to . For the variational learning step, is set to .

4.2.2 Comparisons with State-of-the-art Methods

To verify the effectiveness of the proposed IWSL method further, in this experiment, we compare it with various state-of-the-art SGG models in Table 3. Some of the methods (with ) are reproduced with the latest code from the authors, while the other methods (with ) employ an additional re-sampling strategy [31]. As demonstrated in Table 3, the proposed IWSL method outperforms the state-of-the-art SGG models by a large margin on the most representative evaluation metric, and achieve a comparable performance with the latest BGNN algorithm on the remaining evaluation metrics.

| Method | mR@50 | R@50 | wmAP_rel | wmAP_phr | score_wtd |

|---|---|---|---|---|---|

| RelDN†[39] | |||||

| RelDN†∗[39] | |||||

| VCTree†[15] | |||||

| G-RCNN†[13] | |||||

| Motifs†[27] | |||||

| VCTree-TDE†[38] | |||||

| GPS-Net†[19] | |||||

| GPS-Net†∗[19] | |||||

| BGNN[16] | |||||

| IWSL |

-

•

Note: All the above methods apply ResNeXt-101-FPN as the backbone. means the re-sampling strategy [31] is applied in this method, and depicts the reproduced results with the latest code from the authors. Using bold to represent the proposed method.

4.3 Ablation Study

The importance weighted lower bound becomes an unbiased estimator when the number of samples reaches infinity. However, it is impossible to achieve the above learning scenario in reality, due to the high computational complexity and the huge computational resources. In practice, for computational efficiency, the number of samples is often set to a relatively small number. To investigate the detection performance dependency of the proposed IWSL method on the number of samples, in this section, we choose three settings and compare their impact on the SGDet performance as shown in Table 4. We observe that the SGDet performance gradually increases with the number of samples. The performance gain obtained from a larger number of samples () is not that obvious, while its corresponding computation time increases dramatically. To pursue a balanced trade-off between the detection performance and the computational complexity, in this paper, the number of samples is set to in variational inference step.

| Number of Samples | mR@20 | mR@50 | mR@100 |

|---|---|---|---|

-

•

Note: We compare the SGDet performance in this ablation study.

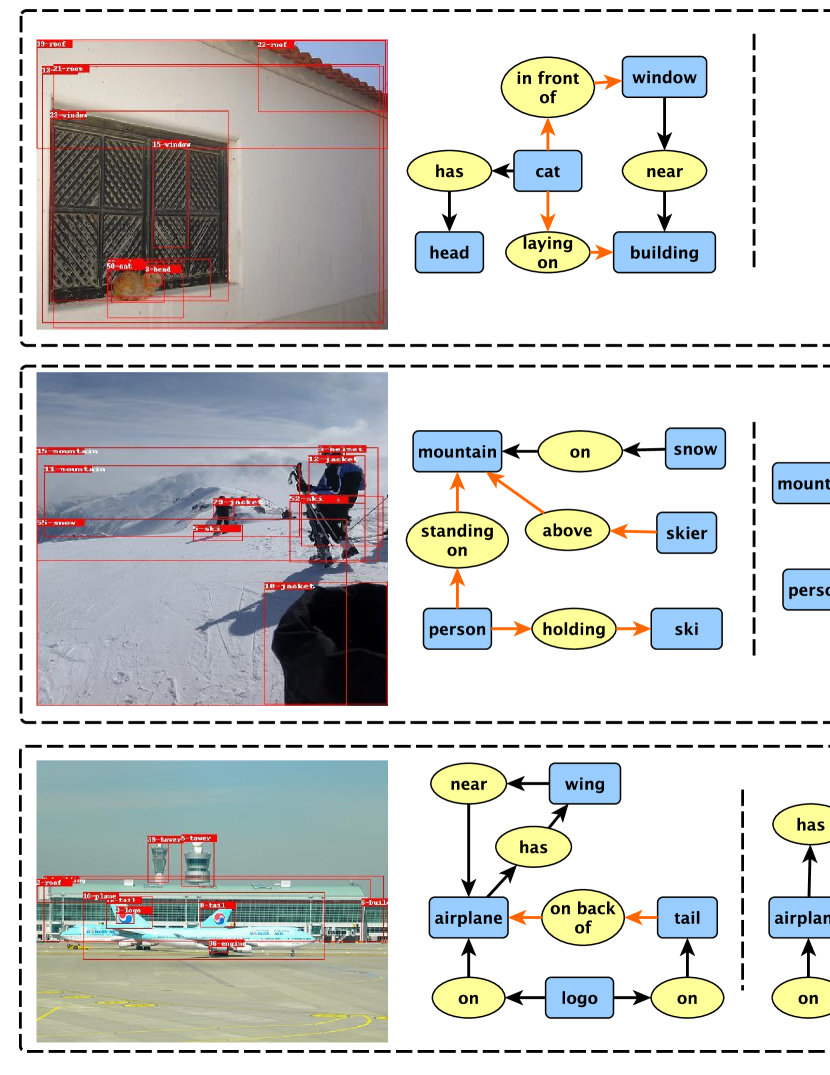

4.4 Visualization Results

To visually demonstrate the superiority of the proposed methods, in Fig. 3, we compare the visualization of the qualitative results of the ground-truth (GT), the baseline BGNN model, the proposed IWSL method and the derived IWSL+BA algorithm in the SGDet task. Compared with the baseline BGNN model, the proposed IWSL method is capable of detecting more informative and predicates. For example, the proposed IWSL could detect an additional predicate for the top image, or an additional predicate for the middle image. Besides, the spatial informative predicates such as can also be detected. Moreover, the derived IWSL+BA method further improves the above capability. For example, it could detect an additional triplet for the top image, or even a new reasonable triplet (which is not included in the ground-truth scene graph GT) for the bottom image. In a word, compared with the baseline BGNN model, the scene graphs generated by the proposed IWSL method and the derived IWSL+BA algorithm are much closer to the ground-truth scene graph GT.

5 Conclusion

To achieve a balanced bias-variance trade-off, in this paper, we propose a novel importance weighted structure learning (IWSL) method, which employs a tighter importance weighted lower bound to replace the classical ELBO as the variational inference objective. This is because the variational approximation derived from the ELBO often underestimates the underlying posterior. A generic entropic mirror descent algorithm, rather than the traditional message passing strategy, is employed to accomplish the resulting constrained variational inference task. We validate the proposed IWSL method on two popular scene graph generation benchmarks: Visual Genome and Open Images V6, showing it outperforms the state-of-the-art models by a large margin.

Acknowledgments

This work was supported in part by the U.K. Defence Science and Technology Laboratory, and in part by the Engineering and Physical Research Council (collaboration between U.S. DOD, U.K. MOD, and U.K. EPSRC through the Multidisciplinary University Research Initiative) under Grant EP/R018456/1.

References

- [1] Q. You, H. Jin, Z. Wang, C. Fang, and J. Luo, “Image captioning with semantic attention,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 4651–4659.

- [2] S. J. Rennie, E. Marcheret, Y. Mroueh, J. Ross, and V. Goel, “Self-critical sequence training for image captioning,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 7008–7024.

- [3] X. Yang, K. Tang, H. Zhang, and J. Cai, “Auto-encoding scene graphs for image captioning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 10 685–10 694.

- [4] D. Teney, L. Liu, and A. van Den Hengel, “Graph-structured representations for visual question answering,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 1–9.

- [5] P. Anderson, X. He, C. Buehler, D. Teney, M. Johnson, S. Gould, and L. Zhang, “Bottom-up and top-down attention for image captioning and visual question answering,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 6077–6086.

- [6] J. Shi, H. Zhang, and J. Li, “Explainable and explicit visual reasoning over scene graphs,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 8376–8384.

- [7] M. J. Wainwright, M. I. Jordan et al., “Graphical models, exponential families, and variational inference,” Foundations and Trends in Machine Learning, vol. 1, no. 1–2, pp. 1–305, 2008.

- [8] C. W. Fox and S. J. Roberts, “A tutorial on variational bayesian inference,” Artificial intelligence review, vol. 38, no. 2, pp. 85–95, 2012.

- [9] D. Xu, Y. Zhu, C. B. Choy, and L. Fei-Fei, “Scene graph generation by iterative message passing,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 5410–5419.

- [10] Y. Li, W. Ouyang, B. Zhou, K. Wang, and X. Wang, “Scene graph generation from objects, phrases and region captions,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 1261–1270.

- [11] B. Dai, Y. Zhang, and D. Lin, “Detecting visual relationships with deep relational networks,” in Proceedings of the IEEE conference on computer vision and Pattern recognition, 2017, pp. 3076–3086.

- [12] S. Woo, D. Kim, D. Cho, and I. S. Kweon, “Linknet: Relational embedding for scene graph,” Advances in Neural Information Processing Systems, vol. 31, pp. 560–570, 2018.

- [13] J. Yang, J. Lu, S. Lee, D. Batra, and D. Parikh, “Graph r-cnn for scene graph generation,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 670–685.

- [14] W. Wang, R. Wang, S. Shan, and X. Chen, “Exploring context and visual pattern of relationship for scene graph generation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 8188–8197.

- [15] K. Tang, H. Zhang, B. Wu, W. Luo, and W. Liu, “Learning to compose dynamic tree structures for visual contexts,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 6619–6628.

- [16] R. Li, S. Zhang, B. Wan, and X. He, “Bipartite graph network with adaptive message passing for unbiased scene graph generation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 11 109–11 119.

- [17] Y. Li, W. Ouyang, B. Zhou, J. Shi, C. Zhang, and X. Wang, “Factorizable net: an efficient subgraph-based framework for scene graph generation,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 335–351.

- [18] T. Chen, W. Yu, R. Chen, and L. Lin, “Knowledge-embedded routing network for scene graph generation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 6163–6171.

- [19] X. Lin, C. Ding, J. Zeng, and D. Tao, “Gps-net: Graph property sensing network for scene graph generation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 3746–3753.

- [20] D. Liu, M. Bober, and J. Kittler, “Visual semantic information pursuit: A survey,” IEEE transactions on pattern analysis and machine intelligence, vol. 43, no. 4, pp. 1404–1422, 2019.

- [21] C. Zhang, J. Bütepage, H. Kjellström, and S. Mandt, “Advances in variational inference,” IEEE transactions on pattern analysis and machine intelligence, vol. 41, no. 8, pp. 2008–2026, 2018.

- [22] R. B. G. Yuri Burda and R. Salakhutdinov, “Importance weighted autoencoders,” in 4th International Conference on Learning Representations (ICLR), 2016.

- [23] S. G. Eric Jang and B. Poole, “Categorical reparameterization with gumbel-softmax,” in 5th International Conference on Learning Representations (ICLR), 2017.

- [24] C. J. Maddison, A. Mnih, and Y. W. Teh, “The concrete distribution: A continuous relaxation of discrete random variables,” in 5th International Conference on Learning Representations (ICLR), 2017.

- [25] A. Beck and M. Teboulle, “Mirror descent and nonlinear projected subgradient methods for convex optimization,” Operations Research Letters, vol. 31, no. 3, pp. 167–175, 2003.

- [26] M. Qi, W. Li, Z. Yang, Y. Wang, and J. Luo, “Attentive relational networks for mapping images to scene graphs,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 3957–3966.

- [27] R. Zellers, M. Yatskar, S. Thomson, and Y. Choi, “Neural motifs: Scene graph parsing with global context,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 5831–5840.

- [28] N. V. Chawla, K. W. Bowyer, L. O. Hall, and W. P. Kegelmeyer, “Smote: synthetic minority over-sampling technique,” Journal of artificial intelligence research, vol. 16, pp. 321–357, 2002.

- [29] L. Shen, Z. Lin, and Q. Huang, “Relay backpropagation for effective learning of deep convolutional neural networks,” in European conference on computer vision. Springer, 2016, pp. 467–482.

- [30] D. Mahajan, R. Girshick, V. Ramanathan, K. He, M. Paluri, Y. Li, A. Bharambe, and L. Van Der Maaten, “Exploring the limits of weakly supervised pretraining,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 181–196.

- [31] A. Gupta, P. Dollar, and R. Girshick, “Lvis: A dataset for large vocabulary instance segmentation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 5356–5364.

- [32] X. Hu, Y. Jiang, K. Tang, J. Chen, C. Miao, and H. Zhang, “Learning to segment the tail,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 14 045–14 054.

- [33] S. Gidaris and N. Komodakis, “Dynamic few-shot visual learning without forgetting,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 4367–4375.

- [34] B. Zhou, Q. Cui, X.-S. Wei, and Z.-M. Chen, “Bbn: Bilateral-branch network with cumulative learning for long-tailed visual recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 9719–9728.

- [35] Y. Guo, L. Gao, X. Wang, Y. Hu, X. Xu, X. Lu, H. T. Shen, and J. Song, “From general to specific: Informative scene graph generation via balance adjustment,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 16 383–16 392.

- [36] K. Cao, C. Wei, A. Gaidon, N. Arechiga, and T. Ma, “Learning imbalanced datasets with label-distribution-aware margin loss,” in Proceedings of the 33rd International Conference on Neural Information Processing Systems, 2019, pp. 1567–1578.

- [37] Y. Cui, M. Jia, T.-Y. Lin, Y. Song, and S. Belongie, “Class-balanced loss based on effective number of samples,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 9268–9277.

- [38] K. Tang, Y. Niu, J. Huang, J. Shi, and H. Zhang, “Unbiased scene graph generation from biased training,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 3716–3725.

- [39] J. Zhang, K. J. Shih, A. Elgammal, A. Tao, and B. Catanzaro, “Graphical contrastive losses for scene graph parsing,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019.

- [40] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” Advances in neural information processing systems, vol. 28, pp. 91–99, 2015.

- [41] C. Sutton and A. McCallum, “An introduction to conditional random fields for relational learning,” Introduction to statistical relational learning, vol. 2, pp. 93–128, 2006.

- [42] A. Blake, P. Kohli, and C. Rother, Markov random fields for vision and image processing. MIT press, 2011.

- [43] F. Scarselli, M. Gori, A. C. Tsoi, M. Hagenbuchner, and G. Monfardini, “The graph neural network model,” IEEE transactions on neural networks, vol. 20, no. 1, pp. 61–80, 2008.

- [44] J. Gilmer, S. S. Schoenholz, P. F. Riley, O. Vinyals, and G. E. Dahl, “Neural message passing for quantum chemistry,” in International conference on machine learning. PMLR, 2017, pp. 1263–1272.

- [45] X. Wang, R. Girshick, A. Gupta, and K. He, “Non-local neural networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7794–7803.

- [46] J. Zhou, G. Cui, S. Hu, Z. Zhang, C. Yang, Z. Liu, L. Wang, C. Li, and M. Sun, “Graph neural networks: A review of methods and applications,” AI Open, vol. 1, pp. 57–81, 2020.

- [47] M. Teboulle, “Entropic proximal mappings with applications to nonlinear programming,” Mathematics of Operations Research, vol. 17, no. 3, pp. 670–690, 1992.

- [48] G. Raskutti and S. Mukherjee, “The information geometry of mirror descent,” IEEE Transactions on Information Theory, vol. 61, no. 3, pp. 1451–1457, 2015.

- [49] B. Eicke, “Iteration methods for convexly constrained ill-posed problems in hilbert space,” Numerical Functional Analysis and Optimization, vol. 13, no. 5-6, pp. 413–429, 1992.

- [50] R. Krishna, Y. Zhu, O. Groth, J. Johnson, K. Hata, J. Kravitz, S. Chen, Y. Kalantidis, L.-J. Li, D. A. Shamma et al., “Visual genome: Connecting language and vision using crowdsourced dense image annotations,” International journal of computer vision, vol. 123, no. 1, pp. 32–73, 2017.

- [51] A. Kuznetsova, H. Rom, N. Alldrin, J. Uijlings, I. Krasin, J. Pont-Tuset, S. Kamali, S. Popov, M. Malloci, T. Duerig, and V. Ferrari, “The open images dataset v4: Unified image classification, object detection, and visual relationship detection at scale,” International journal of computer vision, 2020.

- [52] Z. Liu, Z. Miao, X. Zhan, J. Wang, B. Gong, and S. X. Yu, “Large-scale long-tailed recognition in an open world,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 2537–2546.

- [53] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.