IMPROVING AUDIO CAPTIONING USING SEMANTIC SIMILARITY METRICS

Abstract

Audio captioning quality metrics, which are typically borrowed from machine translation and image captioning, measure the degree of overlap between predicted tokens and gold reference tokens. In this work, we consider a metric that measures the semantic similarity between predicted and reference captions instead of exact word overlap. We first evaluate its ability to capture similarities among captions corresponding to the same audio file and compare it to other established metrics. We then propose a fine-tuning method that directly optimizes the metric by backpropagating through a sentence embedding extractor and the audio captioning network. Such fine-tuning improves the predicted captions as measured by both traditional metrics and the proposed semantic similarity captioning metric.

Index Terms— audio captioning, semantic similarity, sentence embedding

1 Introduction

The ability to understand the world around us through sound can give edge AI devices a new level of context awareness. While a lot of information can be retrieved from images and videos, an auditory scene captured by a microphone includes complementary information that cannot be captured in a visual field. For example, a CCTV camera will only capture burglars breaking glass, picking the lock or breaking down the door if it is pointed at the door or window. A microphone, however, can simultaneously capture the sounds of glass breaking, pounding on the door, the squeak of lock picking, jingling keys, footsteps, the voices of intruders and much more, no matter where the camera is pointed. Hence, understanding audio is crucial to developing automated context awareness.

The first step towards audio understanding was audio tagging, which involves detecting occurrences of common sounds from a finite set of sound classes. Since audio tagging has been achieved with some success, more can be gained by describing sounds in language natural to humans. Such descriptions suit human-machine interface applications and can be readily analyzed with modern Natural Language Processing tools [1]. Generating audio captions in natural language also makes it possible to describe sounds that cannot be tagged with predefined labels because they do not fit into any of the common categories. For example, the sound of falling rocks is not commonly recorded and hence cannot be described well by audio tagging; the best a tagger can do is label it as a “thunk”. An audio captioning engine can do the sound more justice by describing it as “falling of hard objects” or “collision of solid objects”.

A number of audio datasets with human-generated captions are available in the literature [2, 3], and models have been developed to predict natural language audio captions [4, 5]. As in machine translation, one way to evaluate the quality of a generated caption is to calculate the overlap of n-grams between the predicted caption and the reference captions, using precision-, recall- and F-score-based measures, namely BLEU [6], ROUGE [7] and METEOR [8], respectively. Demanding exact overlap between n-grams is a harsh criterion that often overlooks synonyms and words or phrases with similar meanings. While METEOR tries to alleviate this problem by accounting for synonyms and words with common stems, such leniency may not be enough to account for words that convey similar meanings. Other audio captioning metrics are adapted from image captioning. Instead of counting n-grams of exact words or their stems and synonyms, the CIDER [9] metric weights n-grams by Term Frequency-Inverse Document Frequency (TF-IDF) [10]. Specifically, CIDER calculates the cosine similarity between the TF-IDF vectors of the candidate and reference n-grams and averages these similarities over n-gram lengths from 1 to 4. This is a significant step away from demanding exact matches. Another image captioning metric, SPICE [11], creates a scene graph from the set of reference captions and measures the overlap between the scene graph of the candidate caption and that of the reference captions. The limitation of this method is that it looks for exact matches between components of the scene graphs.

In this paper, we instead propose metrics that capture semantic similarities between natural language descriptions by leveraging word or sentence embeddings, and use them for audio captioning model training and evaluation. The paper is organized as follows. Section 2 covers related work, Section 3 describes the procedure, Section 4 presents results, and Section 5 concludes.
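To illustrate why exact n-gram overlap is a harsh criterion, the following minimal Python sketch computes the clipped (modified) n-gram precision on which BLEU [6] is built. It is not a full BLEU implementation (there is no brevity penalty and no geometric mean over n), and the captions are hypothetical examples chosen for illustration.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(candidate, references, n):
    """Modified n-gram precision of a candidate caption against reference captions.

    Each candidate n-gram is credited at most as many times as it appears in
    any single reference, so repeating a matching word cannot inflate the score.
    """
    cand_counts = Counter(ngrams(candidate.split(), n))
    if not cand_counts:
        return 0.0
    max_ref_counts = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref.split(), n)).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    matched = sum(min(count, max_ref_counts[gram]) for gram, count in cand_counts.items())
    return matched / sum(cand_counts.values())


references = ["a man talks while birds chirp"]
exact_copy = "a man talks while birds chirp"
paraphrase = "a person speaks as birds sing"  # same meaning, different words

for n in (1, 2):
    print(n, clipped_precision(exact_copy, references, n), clipped_precision(paraphrase, references, n))
```

The paraphrase, which a listener would judge equivalent, shares only “a” and “birds” with the reference, so its unigram precision falls to 1/3 and its bigram precision to 0. This is the gap that embedding-based metrics aim to close.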

2 Related Work

A typical audio captioning model [4, 5] consists of an encoder, which extracts audio features carrying discriminative information about the audio scene, followed by a decoder, which takes those features as input and generates text. The encoder is a network trained on an audio understanding task such as Sound Event Detection [12], Acoustic Scene Classification [13] or Audio Tagging [14], and may be an RNN, a CNN or a CRNN. The decoder is an autoregressive component, such as an RNN or a Transformer decoder [15], which generates token probabilities. It is generally trained with the cross-entropy loss between the predicted token probabilities and the one-hot encoded reference token IDs, as shown in Equation 1. Some work has also optimized the captioning metric directly with reinforcement learning, using the metric score as a reward [4].

Apart from the metrics described in Section 1, the FENSE metric [16] uses sentence embeddings to capture semantic similarities between captions. Additionally, it penalizes this score using the output of a neural network trained to detect errors that occur frequently in captioning systems. In our work, we leverage a sentence-embedding-based similarity metric to fine-tune an audio captioning model, which, to our knowledge, has not been reported before.

3 Procedure

3.1 Baseline system

The baseline system has an encoder-decoder structure. The encoder is the CNN10 PANN [17] pre-trained for audio tagging. The decoder is a stack of two Transformer decoder layers with four attention heads, trained to generate the token embeddings of the caption. These token embeddings are then projected into a space whose dimension equals the vocabulary size, so that each prediction can be compared with the one-hot encoded vector of a token ID. As shown in Equation 1, the training objective is to minimize the cross-entropy loss between the one-hot encoded reference caption $\mathbf{y}_a$ and the predicted token distributions $\hat{\mathbf{y}}_a$, where $a$ is one of the audio clips in the collection $\mathcal{A}$. The network has about 8M parameters when 128-dimensional word2vec [18] embeddings are used, and about 14M trainable parameters when 384-dimensional BERT [19] embeddings are used. To evaluate results, in addition to the metrics introduced in Sections 1 and 2, we also use the Word Mover’s Distance (WMD) [20], which matches similar words in two sentences and computes the minimum cumulative distance that the embedded words of one sentence must travel to reach those of the other.

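$$\mathcal{L}_{\mathrm{CE}} = -\sum_{a \in \mathcal{A}} \sum_{t=1}^{T_a} \mathbf{y}_{a,t}^{\top} \log \hat{\mathbf{y}}_{a,t} \qquad (1)$$

where $\mathbf{y}_{a,t}$ is the one-hot vector of the $t$-th reference token of clip $a$, $\hat{\mathbf{y}}_{a,t}$ is the predicted probability vector at position $t$, and $T_a$ is the caption length.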
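For a one-hot target, the inner product in Equation 1 keeps only the log-probability assigned to the reference token, so the loss reduces to the negative log-likelihood of the reference caption. A minimal pure-Python sketch follows; it assumes the decoder emits one logit vector per position, and the function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax of one logit vector."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def caption_cross_entropy(logits_per_step, reference_ids):
    """Cross-entropy of one caption: the inner sum of Equation 1.

    logits_per_step: T logit vectors over the vocabulary (decoder outputs).
    reference_ids:   T reference token IDs (position of the 1 in each one-hot vector).
    """
    loss = 0.0
    for logits, ref_id in zip(logits_per_step, reference_ids):
        probs = softmax(logits)
        # y^T log(y_hat) with a one-hot y selects a single log-probability.
        loss -= math.log(probs[ref_id])
    return loss


def batch_cross_entropy(batch):
    """Outer sum of Equation 1 over clips; batch holds (logits_per_step, reference_ids)."""
    return sum(caption_cross_entropy(logits, ids) for logits, ids in batch)


# Uniform logits over a 3-token vocabulary cost ln 3 per position.
uniform = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
print(caption_cross_entropy(uniform, [0, 2]))  # 2 * ln 3
```

In practice a framework's fused cross-entropy on logits would be used; spelling it out shows that the one-hot vectors never need to be materialized.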

3.2 Fine-tuning to optimize metric

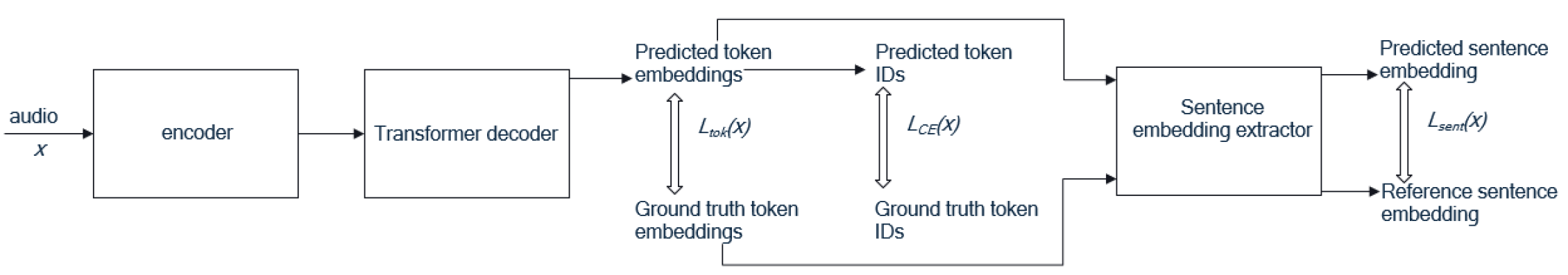

The use of sentence embeddings to capture the meaning of an entire caption in one vector has been proposed in [16]. The cosine similarity between the sentence embeddings of the candidate and reference captions is thus a meaningful measure of candidate caption quality. To optimize this metric directly with backpropagation, we append the sentence embedding network to the audio caption generation network, as shown in Figure 1. Specifically, the token embeddings produced by the decoder, just before they are projected to token IDs, are fed directly into the sentence embedding extractor. To fine-tune the trained network, we add to the cross-entropy loss the cosine distance between the sentence embedding $\hat{\mathbf{s}}_a$ of the generated caption and the sentence embedding $\mathbf{s}_a$ of the reference caption. This loss term is shown in Equation 2. Hence, the weights of the audio captioning network are optimized directly to minimize the distance between sentence embeddings, and consequently to make the generated caption closer in meaning to the reference caption. The weights of the sentence embedding network are frozen during fine-tuning. This fine-tuning does not affect the size of the model used during inference, since inference stops once the predicted token IDs are obtained, as shown in Figure 1.

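$$\mathcal{L}_{\cos} = \sum_{a \in \mathcal{A}} \left( 1 - \frac{\hat{\mathbf{s}}_a^{\top} \mathbf{s}_a}{\lVert \hat{\mathbf{s}}_a \rVert \, \lVert \mathbf{s}_a \rVert} \right) \qquad (2)$$

where $\hat{\mathbf{s}}_a$ and $\mathbf{s}_a$ are the sentence embeddings of the generated and reference captions of clip $a$. Fine-tuning minimizes the sum of this term and the cross-entropy loss of Equation 1.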
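The following pure-Python sketch shows why the cosine-distance term of Equation 2 can train the decoder: its gradient flows from the sentence embedding back to every token embedding produced by the decoder. To keep it self-contained, the frozen Sentence-BERT encoder is replaced by plain mean pooling (an assumption; the real encoder runs transformer layers before pooling), and the gradient is derived by hand rather than with an autodiff framework.

```python
import math


def mean_pool(token_embeddings):
    """Average T token embeddings (each a list of D floats) into one vector."""
    T = len(token_embeddings)
    return [sum(column) / T for column in zip(*token_embeddings)]


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def cosine_distance(u, v):
    """1 - cos(u, v): the per-clip term summed in Equation 2."""
    return 1.0 - sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))


def cosine_distance_grad(u, v):
    """Gradient of 1 - cos(u, v) with respect to u.

    d cos / du = v / (|u| |v|) - cos(u, v) * u / |u|^2, negated for 1 - cos.
    """
    nu, nv = norm(u), norm(v)
    cos = sum(a * b for a, b in zip(u, v)) / (nu * nv)
    return [-(b / (nu * nv) - cos * a / nu ** 2) for a, b in zip(u, v)]


def loss_and_token_grads(generated_tokens, reference_embedding):
    """Cosine-distance loss and its gradient w.r.t. each generated token embedding.

    ASSUMPTION: the frozen sentence encoder is reduced to mean pooling, so the
    sentence-level gradient is shared equally (scaled by 1/T) by all T tokens.
    """
    sentence = mean_pool(generated_tokens)
    loss = cosine_distance(sentence, reference_embedding)
    grad = cosine_distance_grad(sentence, reference_embedding)
    T = len(generated_tokens)
    return loss, [[g / T for g in grad] for _ in generated_tokens]


# Toy decoder token embeddings (T=2, D=3) and a toy reference sentence embedding.
generated = [[1.0, 0.0, 0.5], [0.2, 1.0, -0.3]]
reference = [0.5, 0.5, 0.1]
loss, token_grads = loss_and_token_grads(generated, reference)
print(loss, token_grads)
```

In an actual training loop, an automatic differentiation framework would compute the same kind of gradient through the full frozen encoder and add it to the gradient of the cross-entropy loss.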

We chose the Sentence-BERT [21] sentence embedding network, which was trained to classify whether one sentence entails, contradicts, or is neutral with respect to another. It accepts the token-level BERT [19] embeddings of a sentence, passes them through a version of BERT fine-tuned for sentence embedding generation, and then performs mean pooling to produce a 384-dimensional sentence representation. This version was obtained by reducing a twelve-layer BERT model to six layers, keeping only every second layer. Along these lines, we define the audio captioning metric SBERT_sc as the cosine similarity between the Sentence-BERT embeddings of the candidate and reference captions. For the audio captioning model, the token-level embeddings must be BERT embeddings, so that the embeddings generated before the one-hot encoded token IDs can be fed directly into Sentence-BERT, enabling end-to-end backpropagation.

3.2.1 Evaluating metrics

To evaluate the effectiveness of audio captioning metrics, we devised a simple method. Given a database of sounds with human-generated reference captions, we compute the score of a metric for every pair of captions. A good metric yields a high score for captions describing the same audio file and a low score for captions describing audio files with different content. For each candidate metric, we average its score over all pairs of captions that describe the same audio file, henceforth referred to as the average-over-similar, and over all pairs of captions that describe distinct audio files, henceforth referred to as the average-over-distinct. However, captions of distinct audio files with similar content may themselves be similar. Hence, from the average-over-distinct we exclude the scores of all caption pairs that correspond to files with overlapping audio tags, and term the resulting score the average-over-different.
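The evaluation procedure can be sketched as below. The caption records, the tag sets and the word-overlap stand-in metric are hypothetical; any pairwise metric such as SBERT_sc could be passed as `score`. SPICE, which scores a caption against a group of captions, does not fit this pairwise interface.

```python
from itertools import combinations
from statistics import mean


def metric_averages(captions, score):
    """Average a pairwise caption metric over similar, distinct and different pairs.

    captions: list of (file_id, tags, text), where tags is a set of audio tags.
    score:    function (text_a, text_b) -> similarity in [0, 1].
    Returns (average-over-similar, average-over-distinct, average-over-different).
    statistics.mean raises an error if any of the three groups is empty.
    """
    similar, distinct, different = [], [], []
    for (file_a, tags_a, text_a), (file_b, tags_b, text_b) in combinations(captions, 2):
        s = score(text_a, text_b)
        if file_a == file_b:
            similar.append(s)        # two captions of the same audio file
        else:
            distinct.append(s)       # captions of two distinct audio files
            if not tags_a & tags_b:
                different.append(s)  # distinct files with no audio tag in common
    return mean(similar), mean(distinct), mean(different)


def word_jaccard(a, b):
    """Hypothetical stand-in metric: Jaccard similarity of word sets, in [0, 1]."""
    words_a, words_b = set(a.split()), set(b.split())
    return len(words_a & words_b) / len(words_a | words_b)


# Hypothetical (file_id, audio tags, caption) records.
captions = [
    ("f1", {"dog"}, "a dog barks"),
    ("f1", {"dog"}, "a dog barks loudly"),
    ("f2", {"dog", "speech"}, "a man speaks and a dog barks"),
    ("f3", {"rain"}, "rain falls on a roof"),
]
avg_similar, avg_distinct, avg_different = metric_averages(captions, word_jaccard)
print(avg_similar, avg_distinct, avg_different)
```

With the stand-in metric the averages come out in the expected order (similar above distinct above different), because captions of files that share the “dog” tag still overlap and are removed from the average-over-different.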

For each metric, we normalized the scores to lie between 0 and 1. For SPICE, pairwise scores are not meaningful, since all reference captions are needed to build the scene graph. Hence, the SPICE score of each candidate caption was computed with respect to groups of captions, where each group corresponds to one audio file.

3.3 Implementation Details

3.3.1 Datasets

For evaluating metrics, we used the validation set of the AudioCaps [2] dataset. AudioCaps consists of 49,838 training audio files with one human-generated caption per file, plus 495 validation and 975 test audio files, each annotated with five human-generated captions. The audio files are 10 s long and are sourced from the AudioSet [14] database.

For training the audio captioning models, we used the AudioCaps dataset for initial training, followed by further training with the Clotho [3] dataset. The Clotho dataset contains audio files from the Freesound database with durations ranging from 15 s to 30 s, where each audio file is annotated with five different human-generated captions. The train, validation and test splits have 3839, 1045 and 1045 audio files respectively.

3.3.2 Training Details

For both the baseline and the fine-tuning approach, the networks were trained for 30 epochs with a batch size of 32, and the best epoch was selected based on the validation loss. The learning rate started at 0.001 and was decayed by a factor of 0.1 every 10 epochs. Only greedy decoding was used.
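The learning-rate schedule and greedy decoding described above can be sketched as follows. The 0-based epoch index, the maximum caption length of 30 tokens and the `next_token_logits` callable standing in for the decoder are assumptions for illustration.

```python
def learning_rate(epoch, base_lr=0.001, decay=0.1, step=10):
    """Step schedule: start at 0.001 and multiply by 0.1 every 10 epochs (0-based epochs)."""
    return base_lr * decay ** (epoch // step)


def greedy_decode(next_token_logits, bos_id, eos_id, max_len=30):
    """Greedy decoding: at each step keep only the single most probable token.

    next_token_logits: hypothetical callable mapping the current prefix (list of
    token IDs) to a list of logits over the vocabulary, standing in for the decoder.
    """
    prefix = [bos_id]
    for _ in range(max_len):
        logits = next_token_logits(prefix)
        token = max(range(len(logits)), key=logits.__getitem__)  # argmax
        if token == eos_id:
            break
        prefix.append(token)
    return prefix[1:]  # drop the start token


# Hypothetical IDs: 0=<bos>, 1=<eos>, 2="a", 3="dog", 4="barks".
script = [2, 3, 4, 1]


def scripted_logits(prefix):
    """Toy decoder that always prefers the next token of `script`."""
    logits = [0.0] * 5
    logits[script[len(prefix) - 1]] = 1.0
    return logits


print([learning_rate(e) for e in (0, 9, 10, 20)])
print(greedy_decode(scripted_logits, bos_id=0, eos_id=1))  # [2, 3, 4]
```

Greedy decoding commits to the locally best token at every step; beam search would keep several candidate prefixes, at a higher inference cost.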

To train the model on the Clotho dataset, we started from the best AudioCaps model obtained after fine-tuning with Sentence-BERT.

4 Results

4.1 Metric Evaluation

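Table 1: Normalized pairwise scores of each metric on the AudioCaps validation set.

| Metric | Average-over-similar | Average-over-distinct | Average-over-different | Similar minus different |
|---|---|---|---|---|
| CIDER | 0.0788 | 0.0014 | 0.0006 | 0.0783 |
| SPICE | 0.4553 | 0.0295 | 0.0275 | 0.4278 |
| SBERT_sc | 0.6410 | 0.2172 | 0.1918 | 0.4492 |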

From Table 1, we observe that the difference between the average-over-similar and the average-over-different is highest for SBERT_sc, indicating that it is the metric best able to separate captions of unrelated audio while recognizing captions that describe the same audio. Hence, we decided to optimize this metric via backpropagation. The CIDER scores appear low because we normalized them to lie between 0 and 1 for a fair comparison with the other metrics, which are scaled to the same range.
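As a quick check of this comparison, the gap between the average-over-similar and the average-over-different can be recomputed from the values reported in Table 1; this small Python snippet ranks the metrics by that gap.

```python
# Average-over-similar and average-over-different, copied from Table 1.
table1 = {
    "CIDER": (0.0788, 0.0006),
    "SPICE": (0.4553, 0.0275),
    "SBERT_sc": (0.6410, 0.1918),
}

# A larger gap means the metric separates same-audio pairs from unrelated pairs better.
gaps = {metric: similar - different for metric, (similar, different) in table1.items()}
ranking = sorted(gaps, key=gaps.get, reverse=True)

for metric in ranking:
    print(f"{metric}: {gaps[metric]:.4f}")
```

It prints gaps of 0.4492 for SBERT_sc, 0.4278 for SPICE and 0.0782 for CIDER, matching the last column of Table 1 up to rounding (the reported CIDER value of 0.0783 was presumably computed from unrounded scores).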

4.2 Results of Fine-tuning

Table 2: Example captions predicted without (w/o FT) and with (w/ FT) fine-tuning, and the SBERT_sc of each prediction against each reference caption.

| Captions | | Caption text | SBERT_sc before FT | SBERT_sc after FT |
|---|---|---|---|---|
| Predicted caption | w/o FT | a woman speaks and a baby cries | | |
| | w/ FT | a woman speaks and laughs | | |
| Reference captions | 1 | a female speech and laughing with running water | 0.360 | 0.661 |
| | 2 | ocean waves crashing as a woman laughs and yells as a group of boys and girls scream and laugh in the distance | 0.353 | 0.462 |
| | 3 | a woman yelling and laughing as a group of people shout while ocean waves crash and wind blows into a microphone | 0.417 | 0.637 |
| | 4 | woman screaming and yelling to another person with loud wind and waves in background | 0.472 | 0.505 |
| | 5 | a woman yelling and laughing as ocean waves crash and a group of people shout while wind blows into a microphone | 0.420 | 0.631 |
| Predicted caption | w/o FT | wind blows strongly and a man speaks | | |
| | w/ FT | wind blows strongly and a gun fires | | |
| Reference captions | 1 | several very loud explosions occur | 0.283 | 0.445 |
| | 2 | loud bursts of explosions with high wind | 0.450 | 0.590 |
| | 3 | a loud explosion as gusts of wind blow | 0.538 | 0.629 |
| | 4 | loud banging followed by one louder bang with some static | 0.273 | 0.353 |
| | 5 | very loud explosions with pops and bursts of more explosions | 0.248 | 0.413 |

Table 3: Captioning metrics on AudioCaps and Clotho without (w/o FT) and with (w/ FT) fine-tuning. Lower is better for WMD and FENSE-error; higher is better for all other metrics.

| Dataset | Model | BLEU_1 | BLEU_2 | BLEU_3 | BLEU_4 | ROUGE | METEOR | CIDER | SPICE | SBERT_sc | WMD | FENSE-error |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AudioCaps | w/o FT | 0.6612 | 0.4761 | 0.3207 | 0.2020 | 0.4558 | 0.2159 | 0.5730 | 0.1575 | 0.5447 | 6.5078 | 0.4901 |
| AudioCaps | w/ FT | 0.6486 | 0.4692 | 0.3204 | 0.2080 | 0.4554 | 0.2178 | 0.5735 | 0.1664 | 0.5552 | 6.4503 | 0.4815 |
| Clotho | w/o FT | 0.5253 | 0.3196 | 0.1974 | 0.1200 | 0.3573 | 0.1569 | 0.3061 | 0.1049 | 0.4189 | 6.9616 | 0.6328 |
| Clotho | w/ FT | 0.5228 | 0.3198 | 0.1952 | 0.1183 | 0.3559 | 0.1582 | 0.3097 | 0.1062 | 0.4262 | 6.8194 | 0.6182 |

The baseline before fine-tuning was trained with the cross-entropy loss of Equation 1, and fine-tuning was performed with the sum of that loss and the cosine-distance term of Equation 2. From Table 3, we observe that fine-tuning with Sentence-BERT indeed improves both the image captioning metrics (CIDER and SPICE) and the sentence-embedding-based metric SBERT_sc on both datasets. As illustrated in Table 2, after fine-tuning the model generates captions with higher SBERT_sc against the reference captions, since the generated captions are closer in meaning to the references. However, the translation metrics BLEU and ROUGE show no consistent improvement, because the gain is in semantic similarity rather than in exact word overlap. The error rate reported by the error detector of the FENSE metric also decreases. Its large absolute value is caused by incorrect detections in the missing-verb category, for captions such as “a spray is released”, “a man is speaking”, “a dog is growling”, “water is rushing by” and “a door is closed”, all of which do contain a verb. The Word Mover’s Distance (WMD) also decreases after fine-tuning, indicating that the word embeddings of the generated captions have less distance to travel to reach those of the reference captions.
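To make the WMD interpretation concrete, here is a brute-force sketch under a restrictive assumption: both sentences contain the same number n of distinct words, each weighted 1/n. In that case the optimal transport plan is a permutation (by the Birkhoff-von Neumann theorem), so trying all n! one-to-one matchings gives the exact WMD. The 2-D word embeddings are made up for illustration; practical implementations solve the general transport problem with an optimal-transport solver over real word embeddings.

```python
import math
from itertools import permutations


def euclidean(u, v):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def wmd_equal_length(words_a, words_b, embed):
    """Exact WMD between two sentences of n distinct words each, weighted 1/n.

    Under these weights the optimal transport plan is a permutation matrix
    (Birkhoff-von Neumann), so the cheapest one-to-one matching is optimal.
    Brute force over n! matchings is only feasible for very short sentences.
    """
    n = len(words_a)
    if len(words_b) != n or len(set(words_a)) != n or len(set(words_b)) != n:
        raise ValueError("expects two sentences with the same number of distinct words")
    best_cost = min(
        sum(euclidean(embed[a], embed[b]) for a, b in zip(words_a, matching))
        for matching in permutations(words_b)
    )
    return best_cost / n  # each matched word carries mass 1/n


# Hypothetical 2-D word embeddings chosen only for illustration.
embed = {
    "dog": (1.0, 0.0), "hound": (0.9, 0.1),
    "barks": (0.0, 1.0), "yelps": (0.1, 0.9),
    "rain": (-1.0, 0.0), "falls": (0.0, -1.0),
}
print(wmd_equal_length(["dog", "barks"], ["hound", "yelps"], embed))  # about 0.141
print(wmd_equal_length(["dog", "barks"], ["rain", "falls"], embed))   # about 1.414
```

A near-synonymous caption travels a short distance, while an unrelated one travels far, which is why a lower WMD after fine-tuning is read as an improvement.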

5 Conclusion

We demonstrate that fine-tuning a vanilla audio captioning model with the objective of minimizing the cosine distance between sentence embeddings of the candidate and the reference captions results in higher caption quality. Future work will focus on exploring metrics that capture semantic similarities at a lower computational cost.

References

- [1] Ravi Choudhary, Arvind Krishna Sridhar, and Erik Visser, “Activity report analysis with automatic single or multispan answer extraction,” 2022.

- [2] Chris Dongjoo Kim, Byeongchang Kim, Hyunmin Lee, and Gunhee Kim, “AudioCaps: Generating Captions for Audios in The Wild,” in NAACL-HLT, 2019.

- [3] Konstantinos Drossos, Samuel Lipping, and Tuomas Virtanen, “Clotho: an audio captioning dataset,” in ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020, pp. 736–740.

- [4] Xuenan Xu, Heinrich Dinkel, Mengyue Wu, and Kai Yu, “A CRNN-GRU based reinforcement learning approach to audio captioning,” in DCASE, 2020, pp. 225–229.

- [5] Xinhao Mei, Xubo Liu, Haohe Liu, Jianyuan Sun, Mark D. Plumbley, and Wenwu Wang, “Automated audio captioning with keywords guidance,” Tech. Rep., DCASE2022 Challenge, July 2022.

- [6] Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu, “Bleu: a method for automatic evaluation of machine translation,” in Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, Pennsylvania, USA, July 2002, pp. 311–318, Association for Computational Linguistics.

- [7] Chin-Yew Lin, “ROUGE: A package for automatic evaluation of summaries,” in Text Summarization Branches Out, Barcelona, Spain, July 2004, pp. 74–81, Association for Computational Linguistics.

- [8] Satanjeev Banerjee and Alon Lavie, “METEOR: An automatic metric for MT evaluation with improved correlation with human judgments,” in Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, Michigan, June 2005, pp. 65–72, Association for Computational Linguistics.

- [9] Ramakrishna Vedantam, C. Lawrence Zitnick, and Devi Parikh, “Cider: Consensus-based image description evaluation,” in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015, pp. 4566–4575.

- [10] Karen Sparck Jones, “A statistical interpretation of term specificity and its application in retrieval,” Journal of documentation, 1972.

- [11] Peter Anderson, Basura Fernando, Mark Johnson, and Stephen Gould, “Spice: Semantic propositional image caption evaluation,” in European conference on computer vision. Springer, 2016, pp. 382–398.

- [12] Annamaria Mesaros, Toni Heittola, Tuomas Virtanen, and Mark D Plumbley, “Sound event detection: A tutorial,” IEEE Signal Processing Magazine, vol. 38, no. 5, pp. 67–83, 2021.

- [13] Daniele Barchiesi, Dimitrios Giannoulis, Dan Stowell, and Mark D Plumbley, “Acoustic scene classification: Classifying environments from the sounds they produce,” IEEE Signal Processing Magazine, vol. 32, no. 3, pp. 16–34, 2015.

- [14] Jort F Gemmeke, Daniel PW Ellis, Dylan Freedman, Aren Jansen, Wade Lawrence, R Channing Moore, Manoj Plakal, and Marvin Ritter, “Audio set: An ontology and human-labeled dataset for audio events,” in 2017 IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, 2017, pp. 776–780.

- [15] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Lukasz Kaiser, and Illia Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- [16] Zelin Zhou, Zhiling Zhang, Xuenan Xu, Zeyu Xie, Mengyue Wu, and Kenny Q Zhu, “Can audio captions be evaluated with image caption metrics?,” in ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2022, pp. 981–985.

- [17] Qiuqiang Kong, Yin Cao, Turab Iqbal, Yuxuan Wang, Wenwu Wang, and Mark D Plumbley, “Panns: Large-scale pretrained audio neural networks for audio pattern recognition,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 2880–2894, 2020.

- [18] Tomas Mikolov, G. S. Corrado, Kai Chen, and Jeffrey Dean, “Efficient estimation of word representations in vector space,” Jan. 2013, pp. 1–12.

- [19] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova, “BERT: Pre-training of deep bidirectional transformers for language understanding,” in Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, Minnesota, June 2019, pp. 4171–4186, Association for Computational Linguistics.

- [20] Matt Kusner, Yu Sun, Nicholas Kolkin, and Kilian Weinberger, “From word embeddings to document distances,” in International conference on machine learning. PMLR, 2015, pp. 957–966.

- [21] Nils Reimers and Iryna Gurevych, “Sentence-BERT: Sentence embeddings using Siamese BERT-networks,” in Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, Nov. 2019, pp. 3982–3992, Association for Computational Linguistics.