Improving Variational Autoencoder based Out-of-Distribution Detection for Embedded Real-time Applications

Abstract.

Uncertainties in machine learning are a significant roadblock for its application in safety-critical cyber-physical systems (CPS). One source of uncertainty arises from distribution shifts in the input data between training and test scenarios. Detecting such distribution shifts in real-time is an emerging approach to address the challenge. The high dimensional input space in CPS applications involving imaging adds extra difficulty to the task. Generative learning models are widely adopted for the task, namely out-of-distribution (OoD) detection. To improve the state-of-the-art, we studied existing proposals from both machine learning and CPS fields. In the latter, safety monitoring in real-time for autonomous driving agents has been a focus. Exploiting the spatiotemporal correlation of motion in videos, we can robustly detect hazardous motion around autonomous driving agents. Inspired by the latest advances in the Variational Autoencoder (VAE) theory and practice, we tapped into the prior knowledge in data to further boost OoD detection’s robustness. Comparison studies over nuScenes and Synthia data sets show our methods significantly improve detection capabilities of OoD factors unique to driving scenarios, 42% better than state-of-the-art approaches. Our model also generalized near-perfectly, 97% better than the state-of-the-art across the real-world and simulation driving data sets experimented. Finally, we customized one proposed method into a twin-encoder model that can be deployed to resource limited embedded devices for real-time OoD detection. Its execution time was reduced over four times in low-precision 8-bit integer inference, while detection capability is comparable to its corresponding floating-point model.

This research was funded in part by MoE, Singapore, Tier-2 grant number MOE2019-T2-2-040.

1. INTRODUCTION

Machine learning (ML) is rapidly finding its way into Cyber-Physical Systems (CPS). ML is highly data-driven. The reliability of its outputs decreases when test data deviates away from the distribution of training data (Out-of-Distribution problem - OoD). While ML adds new and attractive features to modern CPS, it also creates a new problem. Safety is critical for CPS because physical processes are involved, such as in autonomous vehicles, robotics, and many more. However, standard verification and validation methodologies in CPS design fail to handle the uncertainties in ML especially in applications where natural images create high input space dimensionality. (nguyen2015deep, 1, 2).

In recent years, autonomous vehicles have caused several fatalities under rare or unbeknownst vision circumstances to ML, such as unexpected maneuvers from surrounding cars and pedestrians pushing bikes across a road in darkness. Hence, developing methods that can analyze hazards in real-time for ML-enabled CPS is very important.

So far, many OoD detection methodologies have been proposed and tested over benchmark data sets that are much simpler than road scenes in autonomous driving. Broadly, these methods can be grouped into two categories. One group works through a supervised learning framework. By tapping into the statistics of neural weights in well-trained deep neural classifiers, novel metric scores that estimate the trustworthiness of a classifiers’ outputs were proposed by (lakshminarayanan2016simple, 3, 4, 5). OoD and adversarial samples would receive trust scores lower than in-distribution (ID) samples.

More recently, an unsupervised generative model approach has been explored by utilizing the information bottleneck property of Variational Autoencoder (VAE) to learn a posterior distribution approximation to infer the unknown true latent distribution of a training data set. Equation 1 is the current widely adopted evidence lower bound (ELBO) objective formulated by (kingma2013auto, 6), where . The first term on the right hand side, likelihood, measures the reconstruction accuracy of input. The second term measures the distribution’s discrepancy in latent space. The learned latent space is better disentangled when adding an adjustable hyperparameter to balance the two terms (higgins2016beta, 7). The value has to be carefully chosen per training data.

| (1) |

Typically, the posterior approximation resides in a low dimensional latent space , hundreds to thousands of times lower than the corresponding input space . Given test samples , the VAE decoder reconstructs inputs with high likelihoods if inputs are ID, and otherwise with low likelihoods. The encoder of the VAE produces data-driven latent variables. The discrepancy of these latent variables’ distribution to the latent prior is commonly measured by Kullback–Leibler (KL) divergence. Detection methods (daxberger2019bayesian, 8, 9) utilized either the distribution discrepancy in latent space or the likelihood in input space as an OoD measure. Along this line, generative adversarial networks have also been explored by (liu2019generative, 10, 11) to detect adversarial samples, a particular type of OoD data. Meanwhile, using likelihood alone failed to detect OoD in a specific situation has been revealed by (nalisnick2018deep, 12).

As of now, existing VAE-based OoD detection methods have reported good performance but only when training and test data are obtained from the same data set, and when detection were performed on standalone images instead of image sequences in video. Model generalization capability and robustness to noisy inputs have not been investigated in these works. Researchers have yet to discover effective methods that will perform robustly across multiple video data sets for real-time applications. Also, the latest advances in VAE formulation theory (tolstikhin2017wasserstein, 13) and its application for OoD detection (nalisnick2018deep, 12) have not been explored. With a focus on safety monitoring for ML-enabled autonomous driving, we probed into the existing likelihood-based detection methods, proposed a robust OoD detection architecture, and made significant enhancements. Contributions of our work are summarised as follows:

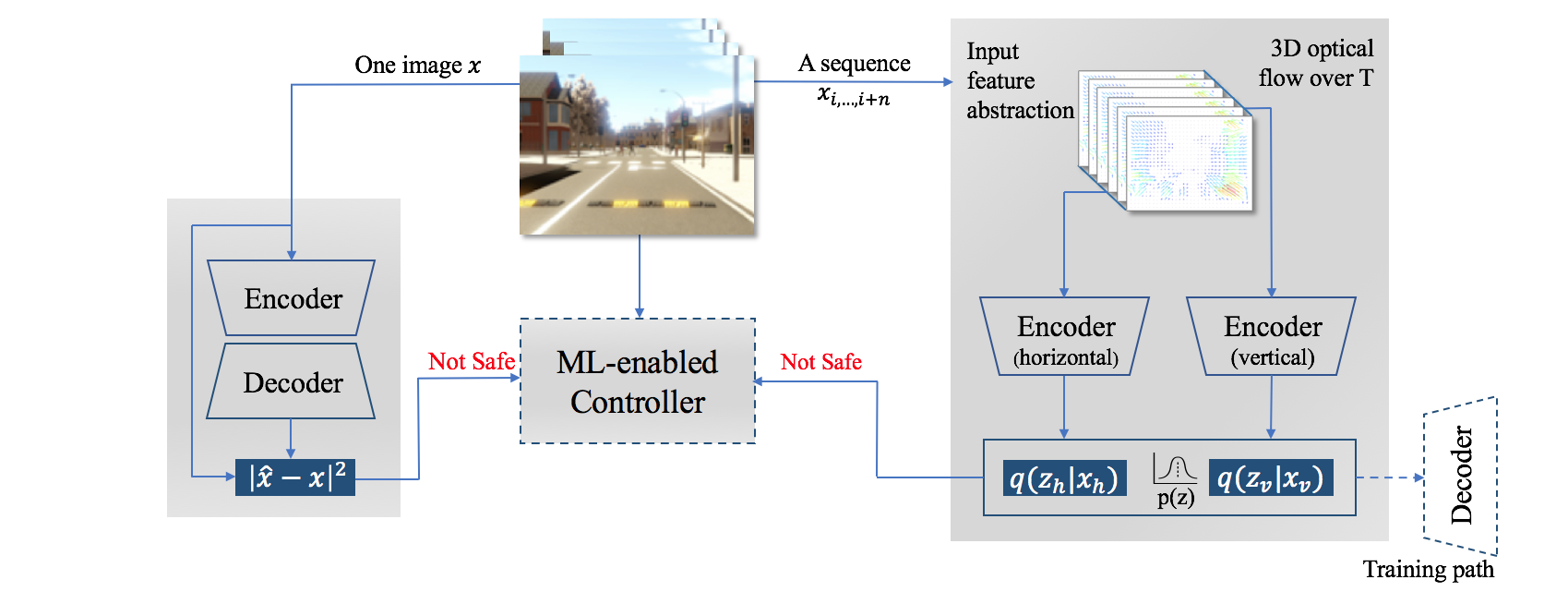

Light-weighted spatiotemporal detection: Hazardous motion around the ego vehicle is a unique and critical OoD factor in autonomous driving. We design a dual-input VAE to detect OoD motions in videos from the VAE latent space. There are two novelties in this design. First, it reduces the VAE input space complexity through feature abstraction by the means of 3D optical flow (barron2005tutorial, 14). This makes it feasible to train a VAE with a Convolutional Neural Network (CNN) on high-dimensional time series data without a computationally costly Recurrent Neural Network. The other is the disentanglement of horizontal and vertical motions through the network architecture, shown in the right-side grey box in Figure 1. Experiments in Section 4.2.1 indicated that our unique design leads to robust performance, 42% better than state-of-art approaches in F1 score.

Optimal latent prior: The standard isotropic Gaussian is a common choice of the latent prior for OoD detection methods in the latent space . Based on a novel autoencoder formulation proposed by (tolstikhin2017wasserstein, 13), we devised an enhanced objective that trains a VAE model with the aggregated prior approximated from its training data set. This further improves performance of our light-weighted spatiotemporal detection method on Synthia (bengar2019temporal, 15), a simulation driving data set, averagely by 8% in F1 score. See evaluation in Section 4.2.2.

Prior compensated OoD measure: Likelihood distributions of ID and OoD samples’ can overlap when a VAE model is trained on a certain data set (nalisnick2018deep, 12). Therefore, using likelihood alone as an OoD detection score is problematic under such conditions. Furthermore, this problem concerning training data is not symmetric. For example, a model trained with data set A can hardly detect data from an unrelated set B as OoD. Whereas training a model of the same network architecture but with data set B can detect set A data as OoD with high accuracy. See detailed illustration in Appendix A. To exploit this asymmetric behavior that was not captured by VAE, we leveraged the image complexity statistics in a model’s training data set to formulate a complexity compensated likelihood score to improve OoD detection of visibility factors critical to driving safety. Over our implementation of related work (cai:iccps2020, 16), the new score formula increases F1 score up to 8% on fog and darkness OoD factors, as shown in Section 4.5.

Robust model generalization capability: Existing OoD detection methods in the CPS literature only reported performance on a hold-out partition from the same source of the training set. A robust OoD detector trained on data collected by driving through city A should not lose its prediction capability significantly when testing in city B. Performance evaluations across real-world and simulation driving data sets showed that our detection method generalized near-perfectly while related works failed badly. Average detection performance of our method drops trivially from 0.986 to 0.980 in AUROC111AUROC stands for Area Under the Receiver Operating Characteristic curve., 97% better than the state-of-the-art approaches. See comparison study in Section 4.3.

Designed for embedded real-time applications: There is a lack of studies to evaluate whether the existing OoD detection methods are efficient for deployment to embedded devices for real-time applications. After compressed into an 8-bit integer version, the VAE model inference time was reduced over four times on Google Edge TPU. One OoD detection took 58.3 milliseconds on average on a Rasberry Pi mini-computer, while detection performance remains comparable to its corresponding floating-point model. See Section 5.2-5.3 for evaluation and comparison with related works.

In the rest of the paper, we first discuss related works in Section 2 and subsequently describe our proposals in Section 3. Six groups of evaluation and comparison studies are presented in Section 4. Section 5 is dedicated to deploying the proposed OoD detector and shows the related works to embedded devices and the experimental results.

Our light-weighted spatiotemporal detection method conducts detection in the VAE latent space. It is depicted in the right-side grey box, where represents latent variables, is unknown true prior distribution, and is the data-driven approximation of posterior distribution. The left-side grey box shows how OoD is detected in the input space. To tackle the likelihood overlap concern, we propose an enhancement to the commonly used reconstruction error measure .

2. RELATED WORK

OoD detection for safety-critical ML-enabled autonomous CPS is an emerging field. There is a lack of closely related work in the literature. The mean absolute error between an image and its corresponding reconstruction from a VAE decoder was used as an OoD measure (cai:iccps2020, 16). The KL-divergence values were utilized in -VAE latent space to detect OoD samples (sundar2020out, 9, 17). In these works, VAE learns from standalone images. For real-time detection from video sequences, the inductive conformal prediction was leveraged by (cai:iccps2020, 16, 17) to construct a detector that takes a sequence of OoD scores as input. These methods reported impressive performance in detecting OoD factors important to driving safety, such as rain intensities and illumination levels. However, whether these methods generalize well to a different driving data set have not been investigated in these works.

VAE-based OoD detection methods in input space have been studied extensively. Many works have focused on a particular type of domain shift, i.e., how well a model trained on image set A can detect images from set B as OoD. This type of OoD factor may not be desirable for driving safety. In a driving setting, it could be a requirement that a background shift from town to countryside shall not be taken as OoD. Nevertheless, the likelihood overlap problem pointed out by (nalisnick2018deep, 12), and subsequent solutions in the literature inspire our work. Specifically, a VAE model trained on CIFAR10 222CIFAR10, SVHN, and MNIST series are widely used image data sets for machine learning and computer vision research. An explanation of likelihood overlap is provided in Appendix A images including common objects fails to detect most digit images from SVHN set as OoD, because the distribution of likelihood overlaps with .

The typicality set notion in (choi2018waic, 18) argued that likelihoods do not reveal where its probability mass is concentrated. By subtracting the likelihood’s variance across independent samples from the posterior , the authors showed the problem can be solved well, with 16 samplings or 5 model ensembles to compute the likelihood variance.

Based on a finding that the likelihood can be confounded by a general population level background statistics in genomics sequences, a likelihood ratio measure was proposed by (ren2019likelihood, 19), where model encodes genome information along with background information while model only encodes background statistics. Their method also solves the likelihood overlap problem between the MNIST series image sets.

Extensive experiments with VAE and other popular generative models in (serra2019input, 20) suggest that this problem is due to the excessive influence of input complexity on likelihoods. The authors also proposed a likelihood ratio solution , where is a complexity estimation instead of a second VAE model as in (ren2019likelihood, 19). In practice, the authors had chosen image compression ratios to estimate .

For real-time CPS, the extra computing cost incurred by model ensembles or over a dozen independent samplings may render (choi2018waic, 18, 19) inefficient, especially on resource limited embedded CPS. Also, none of these likelihood correction proposals directly leverage known knowledge, e.g., ID statistics, in a training data set.

OoD detection in latent space has only been recently explored. The encoder of a VAE discovers distributions over a set of latent properties so that the decoder can reconstruct the input with a high likelihood. This approach requires the VAE latent space dimension to be much higher than the number of OoD factors. The dimensions and type of distribution of the unknown latent space are system parameters. So far, there is no mechanism to control which latent variable or variables respond to which OoD factor. To overcome this, a training set’s KL-divergence scores were computed for each latent variable in (daxberger2019bayesian, 8) and simply half of the latent space with the highest scores were taken as OoD. Through testing, a subset of latent variables was identified in (shreyas:emsoft2020wip, 17). This subset encodes the most information about the training set. A specific latent variable that responds the most to an OoD factor of interest was subsequently sought from this subset. When training an identical VAE architecture over a different data set, we observed that a particular latent variable that was in the aforementioned most informative subset could fall out of the set. It casts a concern about the model’s generalization ability across data sets.

In the existing OoD detection literature, there is a lack of works utilizing the temporal dimension available in video, investigating model generalization capability, and exploiting the prior knowledge in the underlying data but were absent in the VAE learned latent space. This paper aims to address these missing points.

3. PROPOSALS

In existing methods (sundar2020out, 9, 16, 17), VAE learns image sequences in videos as time-independent data points to reconstruct semantic details in underlying images or encode semantic properties in the latent space. If we consider that the most critical factor to road safety is always maintaining a safe margin of space around an autonomous vehicle, the robustness of these OoD detection methods are questionable. Firstly, semantic novelties do not principally explain for unseen motion. For example, instead of learning the spatial change of raindrops in an image sequence, existing methods rely on detecting unseen texture patterns from a single image. Secondly, novel background semantics could trigger many OoD signals, mostly considered as false alarms which pose no risk to driving safety. This background semantics play a similar role to the genomics background in (ren2019likelihood, 19), where it was compensated by a second model trained only on the background information. The inductive conformal prediction was applied by (cai:iccps2020, 16, 17) to sequential OoD scores produced by VAE. This post-processing approach increased detection reliability but does not address the motion factor principally.

3.1. Motion detection in spatiotemporal domain

Recurrent neural networks (RNN) are a common choice of architecture for learning time series data. In (li2018anomaly, 21), a GAN-based anomaly detector was designed to learn inputs from sensors and actuators during the normal working conditions of a CPS. The sensors’ and actuators’ inputs are low dimensional. For high dimensional image video, an RNN needs to be huge based on its neuron count, rendering it less computationally feasible for real-time CPS on embedded systems.

Optical flow is a classical computer vision technique widely used to estimate objects’ actual movement in the physical world by computing changes in the neighboring pixels’ intensity over time. If we want robust OoD detection for unusual motion, optical flow is an ideal feature extraction mechanism due to its deterministic computational outcome. Let denote pixel intensity in image frames and be the unknown flow velocity in 2D space. The optical flow constraint equation assumes that the pixel intensity in a local region remains constant over a very short time frame. Solving the partial differential Equation 2 gives us the flow velocity. is the order of the Taylor series.

| (2) |

is a 2D vector field that represents surrounding object motions between consecutive image frames. A type of flow field example of driving on an open road is shown in Figure 1, where far-away background semantics at the front view are filtered out, relative motions of the closer front road surface and lamp pole are transformed into motion vectors. We found that anomaly event detection in video surveillance (hu2004survey, 22) to be broadly relevant to our work. However, unlike in the surveillance setting, where camera locations are fixed, motion in video feeds from a driving scene moves faster. The unique challenge here is to discriminate optical flow vectors caused by genuine OoD motions from those caused by continually changing backgrounds and other ID motion patterns in the environment. Today, the use of a deep neural network probably is the most effective technique to tackle this challenge . (yu2021hmflow, 23, 24)

Our motion OoD detection problem is different from anomaly detection for autonomous vehicles. Our problem deals with monitoring the potentially unreliable ML-enabled components in autonomous vehicles, specifically, the machine perception system. Anomaly detection in autonomous vehicles is not limited to the vision system (i.e., a extremely high data dimension problem). For example, non-vision low-dimension data from vehicle sensors are utilized in (guo2019detecting, 25) to to detect anomalies from pair-wise data correlation, such as between the acceleration and wheel torque. The approach proposed by (ryan2020end, 26) is framed in the end-to-end autonomous driving context. However, this approach detects for anomalies in vehicle control (steering, braking, and accelerating) through training a CNN model to learn the correlation between input driving video and human driving behavior. Although both approaches tackle the safety challenge in autonomous driving, our OoD detection problem focuses on the cause of uncertainty arising from machine vision. In contrast, anomaly detection focuses on the unexpected outputs from the vehicle controller.

To this end, we propose to extract ID motion series from image video into 3D optical flow tensors. While the spatial dimension of 3D optical flow remains the same as input images, the signal complexity is dramatically reduced. Therefore, it is feasible to train the time series with significantly fewer 3D convolutional network blocks. Let and be the 3D optical flow tensors for the horizontal and vertical directions respectively, the two 3D convolutional VAE encoders individually learn the input manifold, forming two separate latent sub-spaces. The latent sub-spaces are then concatenated as one input and then fed to the VAE decoder. The objective of our VAE network is to minimize the loss as given in Equation 3a, where and are model parameters of the encoder and the decoder respectively. The second and third terms represent two encoders measuring the KL-divergence in the horizontal and vertical latent sub-spaces. The first term refers to the decoder measuring the likelihood between encoders’ input and the decoder’s reconstruction given input from the latent sub-spaces. A high level diagram of the network architecture is depicted in Figure 1.

| (3a) |

| (3b) |

Bernoulli distribution, as proposed in (kingma2013auto, 6), is optimal for modeling black and white image data like MNIST because pixels value are either 0 or 1. Optical flow values and normalized color image pixel values are real numbers in a continuous domain. In practice, we adopt a common approach that modeling the training data with a Gaussian distribution with identity covariance. Hence a Gaussian decoder, where the negative log-likelihood term in Equation 3a is transformed into mean square error (MSE) in implementation, as shown in Equation 3b. See Appendix B for the derivation steps. M is the input space dimension, a multiplication of length, width, and depth of an input. OoD score is a summation of in horizontal and vertical latent sub-spaces. is the number of dimensions in the latent sub-space. Experiments with depth values of 6 and 1 showed that the proposed feature abstraction in spatiotemporal space leads to the robustness of OoD detection.

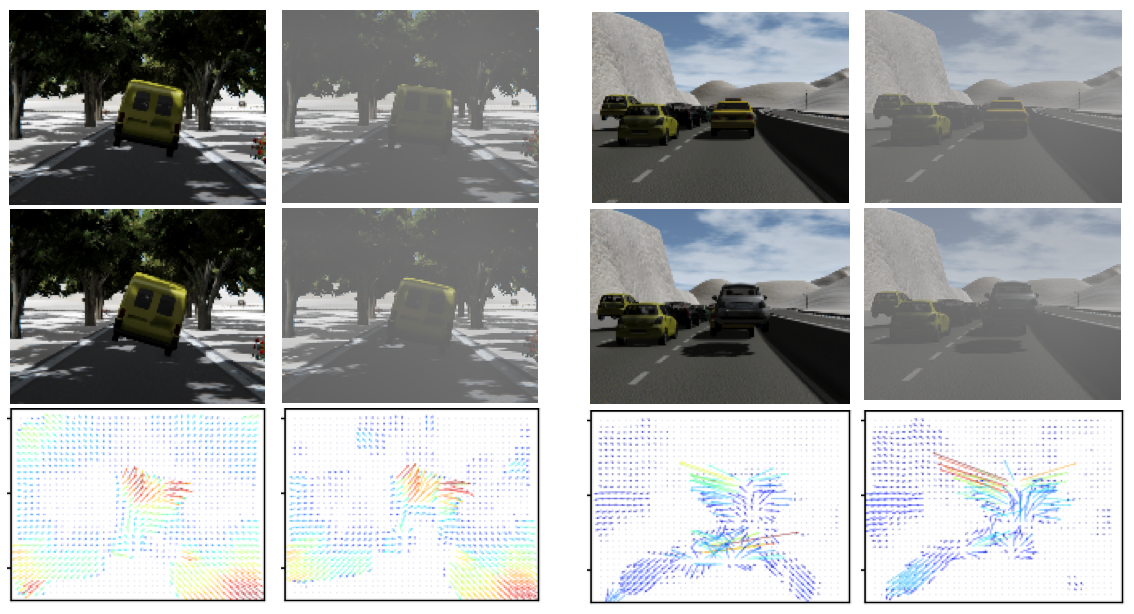

On the left example, a car at the front flips over. On the right, a vehicle is being spawned onto the driving lane.

Can hazardous motion features still be robustly extracted when OoD factors that affect visibility, such as fog, co-occur? In Figure 2, we visualized the flow vectors of two OoD motion examples. Flow vectors shown in the bottom row are computed from the pair of image frames from the row above. Clean image sequences were obtained from (bengar2019temporal, 15). The fog was rendered over the clean sequences with dynamic effects through Autodesk Maya software. Both cases simulate that a car is driving ahead. Optical flow vectors in the front view are visualized as colored vectors. A cluster of red represents the prominent motion between two video frames. We can see that the fog almost has no impact in both cases. Qualitatively, we showed that a robust representation of motion around an autonomous vehicle can be extracted through optical flow under diverse visibility conditions. Since this representation is abstract, environmental background semantics will not be encoded into the latent space, which encourages model generalization and this better suits to driving environments that are different from the training data set. In related works (shreyas:emsoft2020wip, 17, 8), an informative subset of the latent space has to be experimentally selected for good OoD detection performance. A consequential benefit of this proposed method is that the entire latent space or a particular sub-space can be used for OoD detection.

3.2. Optimal prior distribution in latent space

Commonly, a simple prior is used to represent the true but unknown latent prior , and the KL-divergence is used to compute the distribution discrepancy between the encoder output and the prior, as given in Equation 4.

| (4) |

For robust OoD detection in the latent space, it is critical that the posterior accurately approximates underlying ID data properties. Intuitively, assuming that any underlying data follows a simple prior is not optimal. One way for improvement is to optimise the prior and not just the encoder and decoder (hoffman2016elbo, 27).

Substituting the simple prior with an approximation of aggregated posterior was proposed by (tomczak2018vae, 28). A Student’s t-distribution prior allowed a more robust approximation of the underlying data in the presence of outliers (abiri2020variational, 29). A Dirichlet prior was chosen by (xiao2018dirichlet, 30) to model a latent representation of categorical distribution, specifically the class labels distribution, for a downstream object classification task. Since the input and latent spaces in our VAE proposal encode the same physical meaning but because the latent dimension is dramatically reduced, we decided to identify an optimal prior from model’s training data.

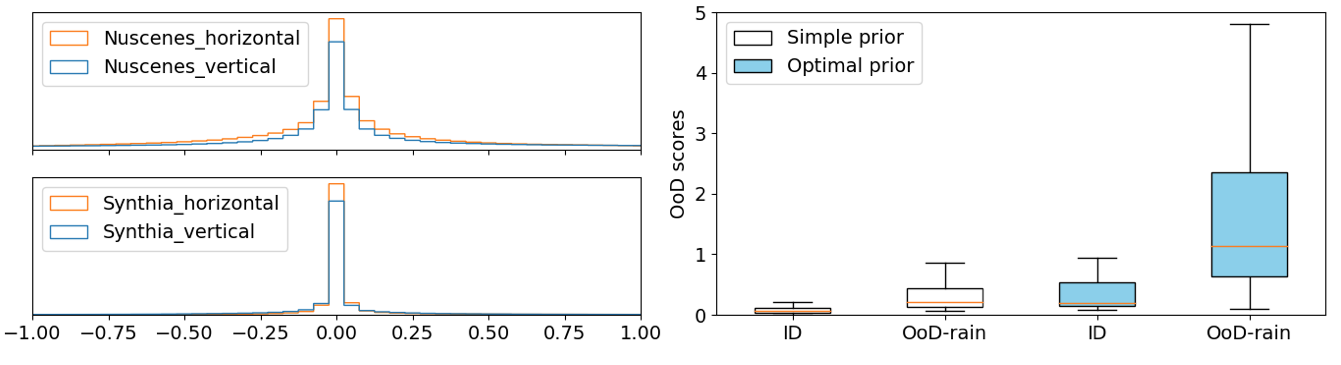

We select a random subset from training data and plot a histogram of optical flow values , as shown in the left column of Figure 3. Distributions of flow values in both data sets approximate to Gaussian in horizontal and vertical directions but with very small deviations. In practice, we replace the variance of the simple prior with approximated from a random partition of the training set .

The left column shows histograms of optical flow from the underlying ID data. The right column shows box plots of OoD scores, and , from the corresponding test set.

Since the value is very small here, training with Equation 4 as the objective will be unstable. To overcome this, we adopt a novel autoencoder formulation from (tolstikhin2017wasserstein, 13), the Wasserstein Auto-Encoder (WAE). Motivated by the transportation theory in mathematics, the objective of WAE is to minimize the transportation cost between true data distribution and a generative model, i.e., the decoder modeled after latent distribution , denoted as in Equation 5. Notation in the second term of the objective is a distance metric that encourages the encoded training distribution to match its prior . Both the cost and metric functions can be arbitrary, so WAE is a non-parametric probabilistic model. is a hyperparameter. With WAE, we can use a metric other than KL-divergence as an OoD measure and any reconstruction error function to regularize the decoder.

| (5) |

With this new objective, we revise the objective in Equation 3 by substituting the penalization with a 2-order Wasserstein metric , as given in Equation 6. The decoder is still regularized by MSE loss and . For isotropic Gaussian, covariance matrix . A penalization ensures the training is stable even when is close to zero.

| (6) |

How will the optimal prior impact the OoD scores ? Will the optimal prior lead to model overfitting? The box plots of and in Figure 3’s right column show that the difference between mean OoD scores of ID and OoD rain test samples increases in the model trained with an optimal prior. In experiments, we observed a consistent detection performance enhancement over test samples from the same or from different data sets, which indicates that the proposed optimal prior does not cause overfitting. Refer to Section 4.3 for a quantitative evaluation.

3.3. Compensated OoD measure in input space

Section 3.1 explained that the motion abstraction with optical low is robust under diverse visibility conditions. However, we can’t rely to this abstraction when visibility is close to zero. To address such extreme visibility conditions, we propose an improvement to OoD detection in the input space.

In Section 2, we introduced the likelihood overlap problem and commented that most existing likelihood compensation approaches are not suitable for real-time CPS application because of the extra computing cost incurred. There is an exception where authors of (serra2019input, 20) proposed to compensate with an image complexity ratio estimated by compression algorithms such as JPEG2000.

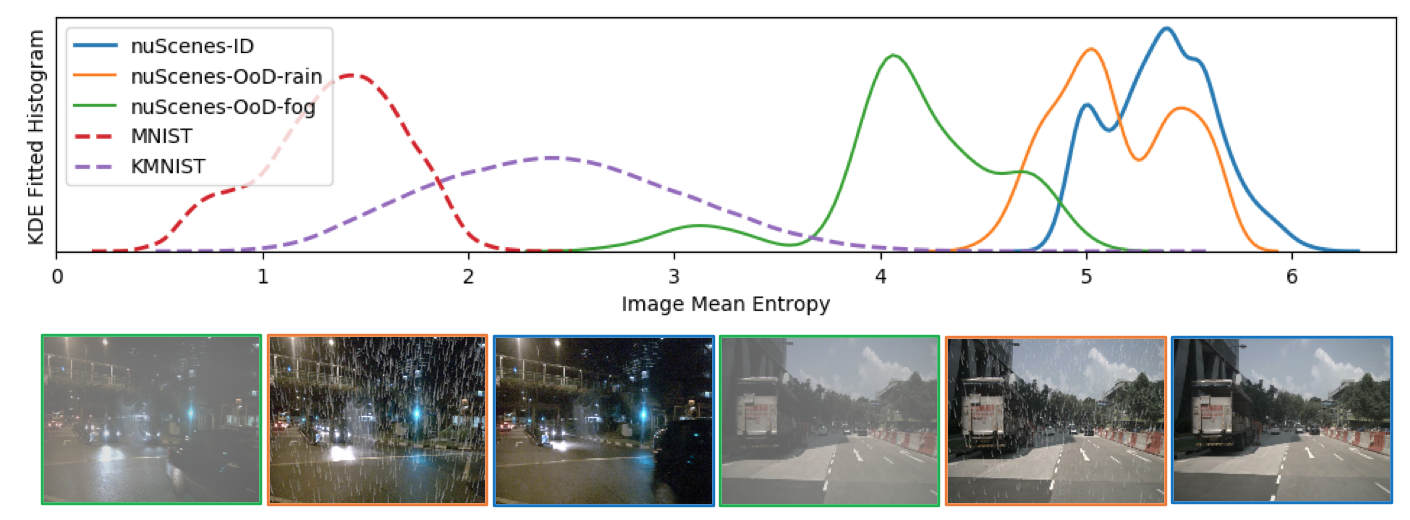

Instead of compression ratio, we use image entropy as a complexity measure to study the likelihood overlap problem. Information entropy is a widely used complexity measure not limited to just images. 2D image entropy measures the complexity in local neighborhoods of an image. From the histograms of image entropy in Figure 4, we can see that the fog factor does cause a considerable reduction of entropy while the rain factor almost has no impact. Also, images in the nuScenes data set are more complex than the benchmark data sets such as MNIST and KMNIST.

The top row shows kernel density estimation (KDE) fitted histograms of the image mean entropy. The fog and rain OoD factors are rendered over ID images from the nuScenes data set with Autodesk Maya software’s dynamic effects. Examples of OoD fog, OoD rain, and ID images are shown at the bottom.

Inspired by related works, we propose to improve the detection of low visibility conditions by calibrating the likelihood with a data-driven baseline. The baseline is the mean entropy of model’s training data, denoted as in Equation 7. is the mean entropy of a test image. There does not exist ubiquitous background statistics in our problem, as in the genomics sequence study (ren2019likelihood, 19). So the likelihood is compensated only when a test image’s mean entropy is lower than the baseline. In experiments, we use instead of likelihood .

| (7) |

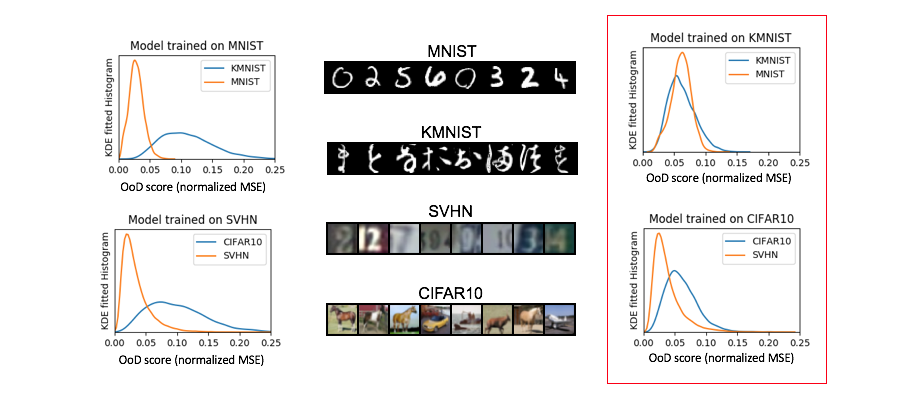

How effective is this metric compensation proposal? In Appendix A, we demonstrated that a model trained with KMNIST cannot differentiate MNIST images from KMNIST. Their likelihoods histogram almost overlap. Since most MNIST images can easily be separated from KMNIST images by entropy (see in Figure 4), calibrating likelihoods improves cross data-domain OoD detection performance from 0.504 to 0.939 in AUROC.

We shall note that this likelihood calibration is explicitly designed for robust detection of low visibility factors in an autonomous driving setting. Its effectiveness is evaluated in Section 4.2 using visibility factors such as fog and low light.

4. EVALUATION

The evaluation part has seven sections. Section 4.1 describes the data sets used and hte experiment setup. Sections 4.2-4.6 evaluate the OoD detection performance of proposed methods and compare them with related works. Section 4.7 covers the computational cost. The feasibility and customization required for real-time OoD detection on off-the-shelf embedded devices is studied in Section 5.

4.1. Experiment setup

We implemented two of the most closely related OoD detection methods, (cai:iccps2020, 16) and (shreyas:emsoft2020wip, 17) from the autonomous CPS domain, for performance comparison. The performance advantages of our light-weighted spatiotemporal detection method were demonstrated with input sequences of different lengths in time. Our improvement to the likelihood overlap issue was validated. Experiment details are summarized in the below.

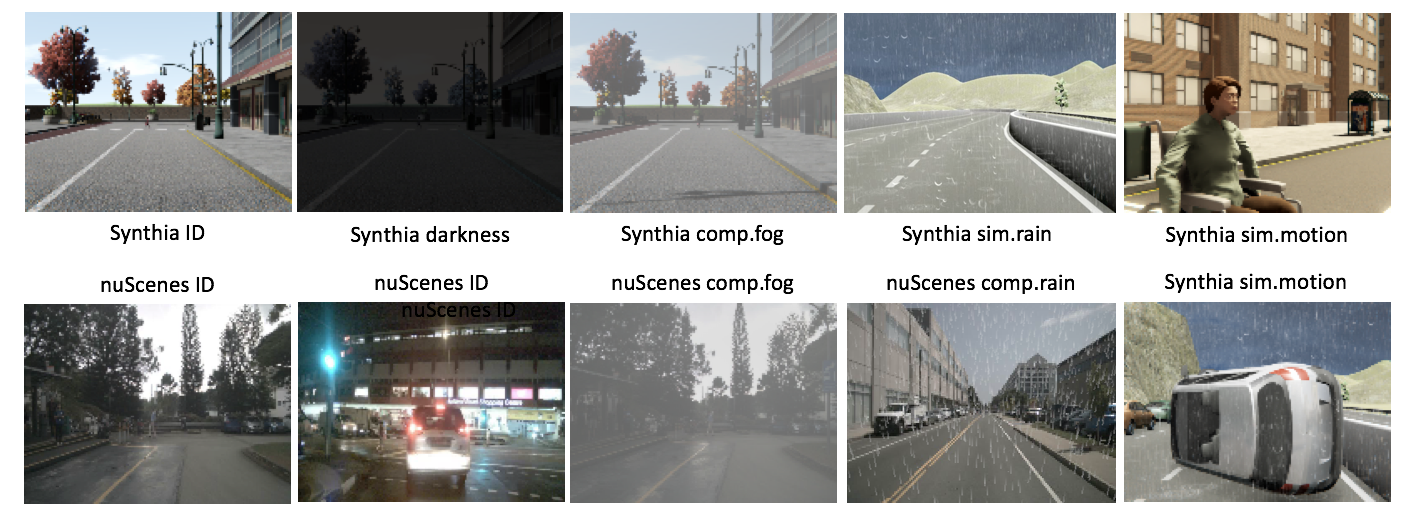

OoD factors and Experiment Data Sets We are interested in detecting factors that are potentially dangerous to safe driving. OoD factors evaluated were close hazardous motion, rain, fog, and low light. We selected a driving simulation data set Synthia (bengar2019temporal, 15) and a real-world driving data set nuScenes mini (caesar2020nuscenes, 31) to partition into training and test sets.

Synthia has 288 video scenes captured from a driving car in a virtual world at 25fps with a scene length between 10 to 30 seconds and a rich mixture of weather, landscape (i.e., city, town, and highway), and dynamic and static objects (car, pedestrian, bicycle, wheelchair, veneration, traffic light, etc.). We manually curated 94 video scenes from Synthia to form a training set of 27519 images. The nuScenes mini has 10 driving video scenes recorded in city environment. The first 48 frames of each nuScenes mini video were kept for testing, and the remainder of 1870 images were used for model training. Both training sets only include ID data.

Details of test sets are summarized in Table 1, where the source and sample numbers of images or video episodes for each class are listed in the third column. OoD classes starting with \saycomp were generated via rendering dynamic effects rain and fog over ID image sequences using Autodesk Maya software. Images in the darkness OoD class were generated by reducing ID images’ brightness in the HSV color space. OoD classes starting with \saysim are original images from the Synthia data set. In the sim.motion OoD class, each episode is 60 frames long with at least one motion OoD occurrence. Some examples of ID and OoD images are shown in Figure 5.

| Class | Group | Images | Description |

| ID | Synthia (6660) nuScenes (480) | Synthia (day time, dry weather, on straight street or highway, stopping at cross junctions.) nuScenes (day or night, city street, driving straight or fast turns.) | |

| OoD sim.motion | motion | Synthia (60 episodes) | One of the five situations occurred without or with rain: abrupt cut of the driving lane by spawned car or pedestrian; car crash in front of the driving car; inter-vehicle distance drops drastically (i.e., emergency braking); the driving car vibrates vertically; the driving car turns at a road intersection. |

| OoD sim.rain | motion visibility | Synthia (7740) | Rain generated by Synthia 3D simulation |

| OoD comp.rain | motion visibility | Synthia (480) nuScenes (480) | 2D composition rain rendered over ID test set |

| OoD comp.fog | visibility | Synthia (480) nuScenes (480) | 2D composition fog rendered over ID test set |

| OoD darkness | visibility | Synthia (6660) | Reduce brightness of ID test set images |

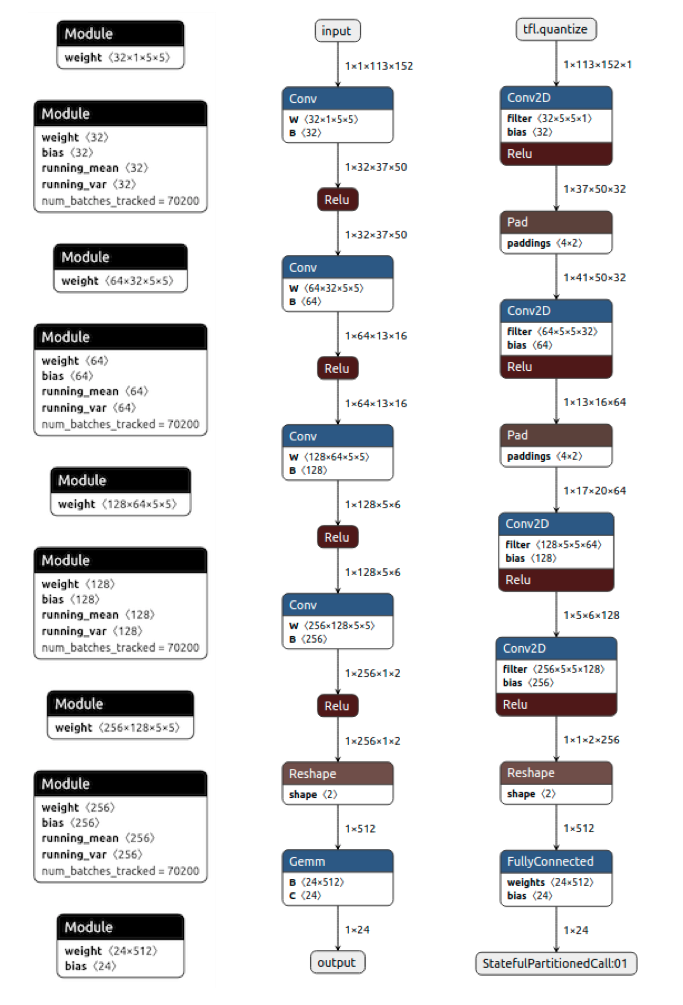

VAE networks To fairly compare the three detection methods, we used an identical CNN architecture for the encoders and decoders, because this network architecture was used by (cai:iccps2020, 16, 17). Their corresponding comparison studies will be carried out in Sections 4.2, 4.3, 4.5 and 4.7. All input images were resized to 120x160 in pixels. The encoder has four CNN blocks of 32/64/128/256 x (5x5) filters with exponential linear unit (ELU) activation and BatchNorm. The decoder is architecturally symmetric. The extra layers in (cai:iccps2020, 16, 17) networks are two MaxPool in the encoder and two MaxUnPool in the decoder, after the CNN blocks. Whereas our network does not have these pool layers as we use larger strides in the CNN blocks.

The dimension of latent space varies. Following the original proposals, the latent dimension is 1024 and 30 for (cai:iccps2020, 16) and (shreyas:emsoft2020wip, 17), respectively. The latent dimension in our method is 12 for each sub-space. The depth of 3D optical flow is 6, computed from 7 consecutive image frames. In sections 4.4 and 4.6, we show how different numbers of CNN layers, dimensions of latent space, and depth of input size influence the overall performance of the proposed OoD detection methods.

Hyperparameters All VAE models were trained with the Adam optimiser using PyTorch. For the Synthia training set, model (cai:iccps2020, 16) was trained with constant learning rates and for 200 and 150 epochs, respectively. Model (shreyas:emsoft2020wip, 17) was trained with a constant learning rate for 100 epochs. These learning rates and training epochs were adopted from (cai:iccps2020, 16, 17). We tested a faster learning rate , however it degraded the model performance in these methods. Our VAE model was trained with a constant learning rate for 100 epochs.

Increasing total training epochs did not enhance performance with the Synthia training set but was the opposite with the nuScenes-mini training set. After a quick search, all corresponding nuScenes models were trained with 600 epochs.

In (shreyas:emsoft2020wip, 17) method, we set the value to 1.4 and selected the nine latent variables that encode the most information, as proposed in the original paper. See Appendix D for the selection algorithm.

Evaluation metrics Equation 8 defines the OoD calculation formula used to analyze experiments in this paper. It is a summation of distribution discrepancies between a test sample and the latent priors in horizontal and vertical sub-spaces.

| (8) |

We used AUROC to compare the performances of OoD detection methods across different score thresholds. In true positive rate (TPR) 95%, i.e., tolerating 5% miss-out, we compared point performances of OoD detectors in precision and F1 score metrics. When TPR is fixed, precision, , reveals the false positive rate, while the F1 score conveys the balance between precision and sensitivity .

4.2. Robust Motion OoD Detection

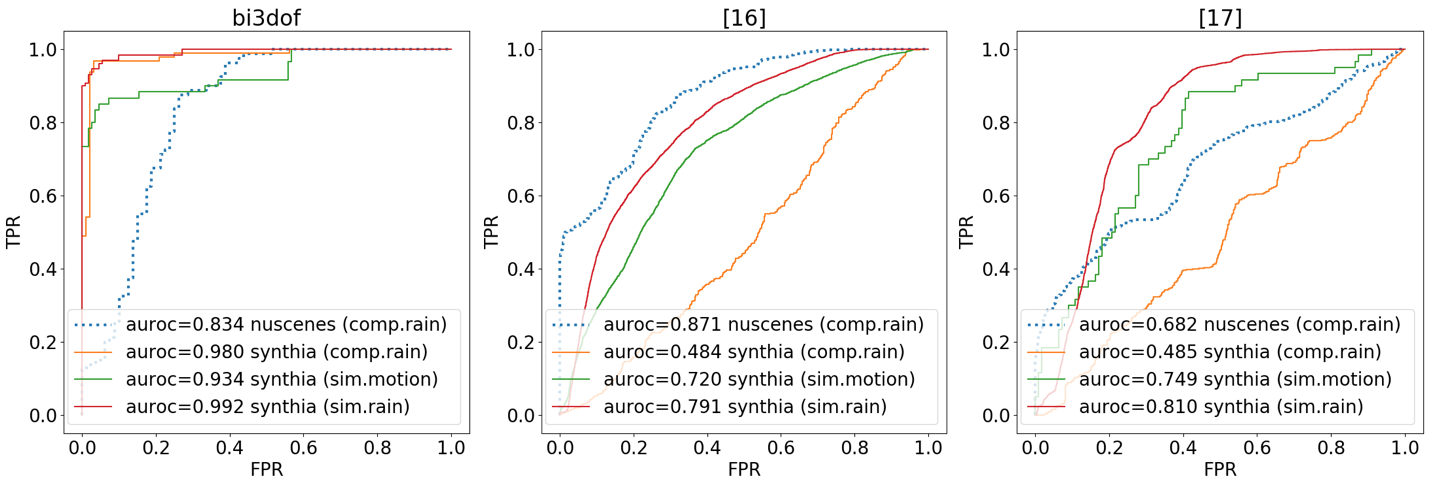

In this section, we first compared the performances of three VAE-based detection methods over the motion OoD group. Next, the benefit of optimizing prior distribution was analysed over the motion OoD group. Finally, the extra performance benefit from detecting OoD in a latent sub-space was shown. For simplicity, our detection method proposed in Section 3.1 is termed as bi3dof and its enhanced version proposed in Section 3.2 as bi3dof-optprior.

4.2.1. Comparison Study

Figure 6 summarizes each method’s detection capacity broken down by OoD classes. We can observe the bi3dof trained on the Synthia data set is very robust. Its overall detection performance varies between 0.980 to 0.992 across all motion OoD classes. Whereas, the best results from (cai:iccps2020, 16) and (shreyas:emsoft2020wip, 17) are 0.791 and 0.810 respectively on the Synthia data set.

Methods (cai:iccps2020, 16, 17) failed to detect the comp.rain class on the Synthia data set, both AUROC values are bellow 0.5. But on the nuScenes test set, their detection performance over the comp.rain class increases, with (cai:iccps2020, 16) scores 0.871, higher than bi3dof which is 0.834. The nuScenes training set size is 6.7% of the Synthia training set and includes fast turning and night scenarios. It was expected that bi3dof’s performance on the nuScenes test sets would drop. What was unexpected is that an opposite outcome was observed in methods (cai:iccps2020, 16, 17). To this end, we suspect that this could be a benefit induced by color contrast, i.e., white rain is more salient over darker images because methods (cai:iccps2020, 16, 17) learn from color images directly. The average intensity of ID images in the nuScenes test set is 94.8 out of 256, while it is 116.9 in the Synthia test set.

For applications which require detection, a decision threshold is required. Having a 95% TPR for an OoD detector is acceptable, i.e., tolerating a 5% miss rate; the performances of each detector are shown in Table 2. We can see that on nuScenes test sets, bi3dof outperforms (cai:iccps2020, 16) in both F1 score and precision, and (cai:iccps2020, 16) outperforms (shreyas:emsoft2020wip, 17). On Synthia test sets, bi3dof scores the best in each of the OoD classes, in both F1 score and precision. Performance of (cai:iccps2020, 16) is slightly better than (shreyas:emsoft2020wip, 17) except for the sim.motion OoD class.

The comparison study shows our spatiotemporal-based feature abstraction approach through 3D optical flow provides superior robustness to motion OoD detection. It also reveals that the sim.motion class is the most difficult OoD class to detect in our experiments. Although bi3dof performs the best among the three for this OoD class at 95% TPR, the precision of bi3dof is still below 0.5 over Synthia test sets. This indicates that a high percentage of ID samples were falsely classified as OoD.

| Synthia | nuScenes | |||

| Detector | sim.motion | sim.rain | comp.rain | comp.rain |

| F1 Score | ||||

| (cai:iccps2020, 16) | 0.560 | 0.760 | 0.666 | 0.775 |

| (shreyas:emsoft2020wip, 17) | 0.576 | 0.735 | 0.647 | 0.659 |

| bi3dof | 0.644 | 0.961 | 0.969 | 0.819 |

| Precision | ||||

| (cai:iccps2020, 16) | 0.397 | 0.633 | 0.512 | 0.653 |

| (shreyas:emsoft2020wip, 17) | 0.413 | 0.600 | 0.504 | 0.504 |

| bi3dof | 0.483 | 0.961 | 0.969 | 0.713 |

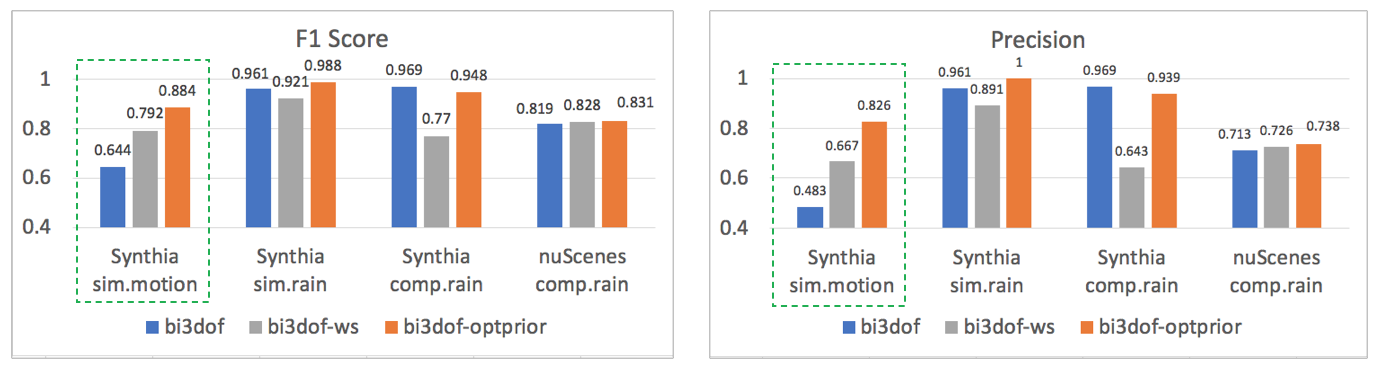

4.2.2. Improving bi3dof with optimal latent prior

The bi3dof models in the comparison study were trained with objective given in Equations 3 and 4, i.e., the simple latent prior was used for model’s training and inference. Using the exact same training sets and hyperparameters, we trained bi3dof-optprior models with objective given in Equations 5 and 6. As proposed in Section 3.2, we obtained the optimal prior from a random partition of model’s training set. The optimal priors used for training bi3dof-optprior models are and for the Synthia set, in the horizontal and vertical sub-spaces respectively. For the nuScenes set, the horizontal is and the vertical is .

In bi3dof-optprior, the latent space training is regularized by the 2-order Wasserstein distance instead of KL-divergence. To discern the source of impact to detection performance, we trained a third model regularized with the 2-order Wasserstein but the simple prior. This third model was named bi3dof-ws, represented by grey bars in Figure 7.

At a 95% TPR, the F1 score and precision of bi3dof-ws does not improve as shown in Figure 7, except for the sim.motion class in the Synthia test set. With the optimal prior, the F1 score and precision further improved from 0.792 to 0.884 and from 0.667 to 0.826, respectively. In the same figure, we can see a very big drop of F1 score over the comp.rain class for Synthia, from 0.969 to 0.770, in the bi3dof-ws detector. However, with the optimal prior, its F1 score is restored to 0.948.

This group of experiments shows that our optimal prior proposal addresses bi3dof’s high false alarm rate over the sim.motion class pointed out in the comparison study, with negligible impact to the detection capacity for other motion OoD classes.

4.2.3. Detection from latent sub-space

In our VAE network, horizontal and vertical motion patterns are encoded into two separate latent sub-spaces. When representative OoD samples in both motion directions are available, detection thresholds in each motion direction can be sought separately. We used the rain OoD class as an example to show its benefit. As rain motions largely are in the vertical direction, We took in Equation 8 to detect rain OoD from ID samples. As shown in Table 3, we can see an overall detection performance improvement when AUROC values from a combined latent space was not close to 100% correct, while the improvement on those that were already very high is minimal. Unless otherwise specified, we report all experiment results outside this sub-section using OoD scores defined in Equation 8.

| Synthia | nuScenes | ||

| bi3dof- optprior | sim. rain | comp. rain | comp. rain |

| 0.998 | 0.978 | 0.901 | |

| 1.000 | 0.977 | 0.877 | |

| Synthia | nuScenes | ||

| bi3dof | sim. rain | comp. rain | comp. rain |

| 0.991 | 0.983 | 0.875 | |

| 0.992 | 0.980 | 0.839 | |

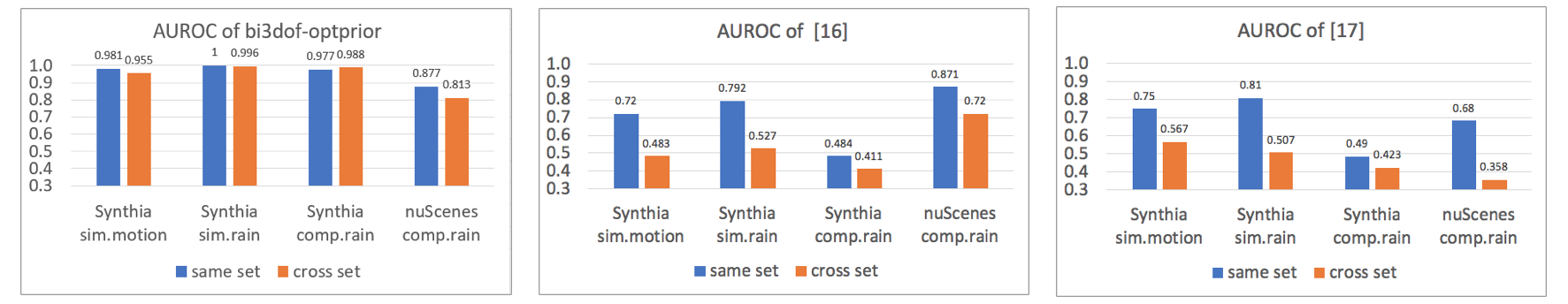

4.3. Model generalization

We believe that without an input space abstraction, the semantic meanings of environmental background will be encoded into the latent space, which discourages a model from generalizing to driving environments that differ from training. However, as pointed out in previous sections, related works only reported their detection performance on test sets partitioned from the same data set that was used for training.

In this section, we examined how the models in Section 4.2.1 behave if test sets are from a source different from a model’s training set. We denote such tests as cross-sets. An example of a cross-set test is to have the nuScenes test set evaluated against a model trained on Synthia data.

From Figure 8, we can see bi3dof models trained on the nuScenes set generalize perfectly on Synthia test sets across all OoD classes. As the motion ID patterns in the nuScenes training set are more complex than those in the Synthia set, we even observed a trivial performance increase for the Synthia comp.rain class. The maximum performance drop is 0.026 over the sim.motion class. Models trained on the Synthia set generalize slightly less perfectly, having a drop between 0.053 and 0.064. This observation confirms that for a robust ML model, it is vital that an application’s population data is well sampled into the model’s training set.

While models trained with bi3dof methods generalize very robustly, we can see models from both the related works failing badly. Six out of eight cross-set tests produced AUROC values around or below 0.5. An AUROC value less than 0.5 indicates a classifier’s performance is worse than a random guess, suggesting that the score over ID samples may be statistically higher than OoD samples in most of the cross-set tests here. A relatively good outcome was observed in (cai:iccps2020, 16) on the nuScenes cross-set test, where the AUROC value decreases by 0.151.

4.4. Impact of Input Temporal Window Length

Besides the latent sub-spaces, another novelty in our detection method is enhancing OoD detection’s robustness through directly learning 3D optical flow with a sufficiently long temporal window. If a vehicle is moving at 50 km per hour and the video is captured at 25 frames per second, a depth value of 6 encompass motions spanning over 3.3 meters in 240 milliseconds. If the depth value is 1, each optical flow input data point would covers only motions over half a meter. Intuitively, it is less effective in exploiting the motions’ correlation over time. Using the nuScenes training set and , we trained a model with optimal prior (i.e., bi2dof-optprior) to validate the effectiveness of learning from spatiotemporal data points. The result in Table 4 shows, in both same and cross-set tests, 3D optical flow at depth 6 clearly improve detection performance by a big margin. Specifically, precision values of sim.rain and sim.motion OoD classes in cross-set tests almost double, which indicates false alarm rate dropped dramatically. The 2D optical flow requires less computing capacity, which is an advantage in resource-limited situations.

| nuScenes | Synthia (cross-set) | |||

| Detector | comp.rain | comp.rain | sim.rain | sim.motion |

| F1 Score | ||||

| bi2dof-optprior | 0.698 | 0.706 | 0.574 | 0.537 |

| bi3dof-optprior | 0.831 | 0.959 | 0.976 | 0.864 |

| Precision | ||||

| bi2dof-optprior | 0.554 | 0.561 | 0.411 | 0.372 |

| bi3dof-optprior | 0.738 | 0.949 | 1.000 | 0.792 |

| Synthia | nuScenes | ||||||

| Detector | comp. rain | comp. fog | darkness | micro- average | micro- average | comp. fog | comp. rain |

| F1 Score | |||||||

| (cai:iccps2020, 16) | 0.666 | 0.689 | 0.690 | 0.684 | 0.764 | 0.761 | 0.775 |

| our | 0.656 | 0.682 | 0.810 | 0.742 | 0.803 | 0.861 | 0.771 |

| Precision | |||||||

| (cai:iccps2020, 16) | 0.512 | 0.540 | 0.542 | 0.535 | 0.640 | 0.634 | 0.653 |

| our | 0.500 | 0.531 | 0.706 | 0.608 | 0.695 | 0.788 | 0.649 |

4.5. Entropy compensated OoD metric

So far, our analysis focused on motion OoD detection in the latent space of VAE. This section discusses experimental outcome of enhanced input-space-based detection proposed in Section 3.3. We used (cai:iccps2020, 16)’s method as a baseline to compare OoD classes’ performances in the visibility group as given in Table 1.

Following the definition in Equation 7, each OoD score is compensated by mean image entropy ratio between the model’s training set and the individual test sample. We used VAE models from Section 4.2.1 to compute a raw OoD score of a test sample. The baseline entropy, , for Synthia and nuScenes models are 4.657 and 5.364, respectively. The difference in values indicates images in the nuScenes data set are averagely more complex than images in the Synthia data set.

Since there are night scenes in the nuScenes training set, darkness detection cannot be tested on nuScenes as dark scenes are considered ID. From Table 5, we can see the entropy compensated OoD metric improves average detection capacity in both Synthia and nuScenes data sets, with negligible impact on the motion OoD classes. Higher precision values over the comp.fog class in nuScenes and darkness class in Synthia indicate that the false alarm rate was reduced. However, the new metric does not improve the F1 score and precision over the Synthia comp-fog class. A pair of comp.fog images in Figure 5. We think this could be a simple mean entropy (in Equation 7) is not effective for very bright images. A statistically more sophisticated entropy score might worth looking into in future work.

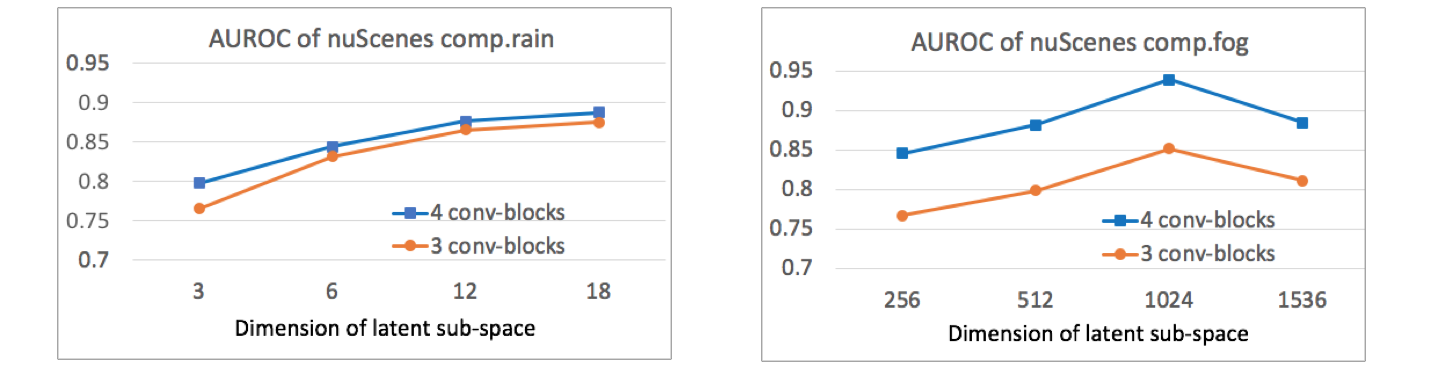

4.6. Aspects of Network Architecture

In our comparison studies, all encoders and decoders have four conv-blocks, where each conv-block comprises of one convolutional neural layer, one ELU activation layer, and one BatchNorm layer. In this section, we investigate whether this network choice is optimal for our light-weighted spatiotemporal and entropy-compensated OoD detectors. We experimented with narrower or wider latent dimensions and reduced conv-blocks. The results is summarized in Figure 9. For the bi3dof-optprior detection method, we can see increasing the dimension of latent space leads to higher performance. When the size of latent space is the same, a network of four conv-blocks consistently outperforms the network with three conv-blocks. Based on the performance curve, four conv-blocks with 12 dimensions for latent sub-space is a good design choice that balances between performance and computational cost. A choice of three conv-blocks with 18 dimensions for latent sub-space is reasonable if one trades performance for less computational cost. For the entropy-compensated OoD detection method in VAE input space, one more conv-block contributes significantly to detection performance. However, when the latent space dimension is too high, detection performance drops. This can be understood as the information bottleneck required to distill proper abstract representation from underlying data was not formed. A network of four conv-blocks with 1024 dimensions is the optimal choice among all the network parameter space searched.

Left is bi3dof-optprior detector. Right is our entropy compensated method from Section 4.5.

4.7. Computational cost

The execution time of an detector matters for OoD detection in real-time, as well as its memory footprint. The average execution times of each detector on a workstation with an Intel Xeon 3.50GHz is shown in Table 6. The pre-process computation includes image resizing and optical flow operations in the case of bi3dof. The VAE model inference in (cai:iccps2020, 16) takes the longest execution time because the decoder computation is required. For the same reason, (cai:iccps2020, 16)’s memory footprint is the largest. Our method has the lowest number of dimensions in the latent space, hence the smallest memory footprint. Its execution time is equivalent to (shreyas:emsoft2020wip, 17). With one execution below 10 milliseconds, all three detection methods can efficiently do real-time OoD detection on general purpose desktop computers. If the neighboring depth-6 3D optical flow results cannot be reused, for example, a frame drop happened while streaming the input video, the total execution time will take 26.3 milliseconds.

5. OoD Detector for Real-Time Applications on Embedded Devices

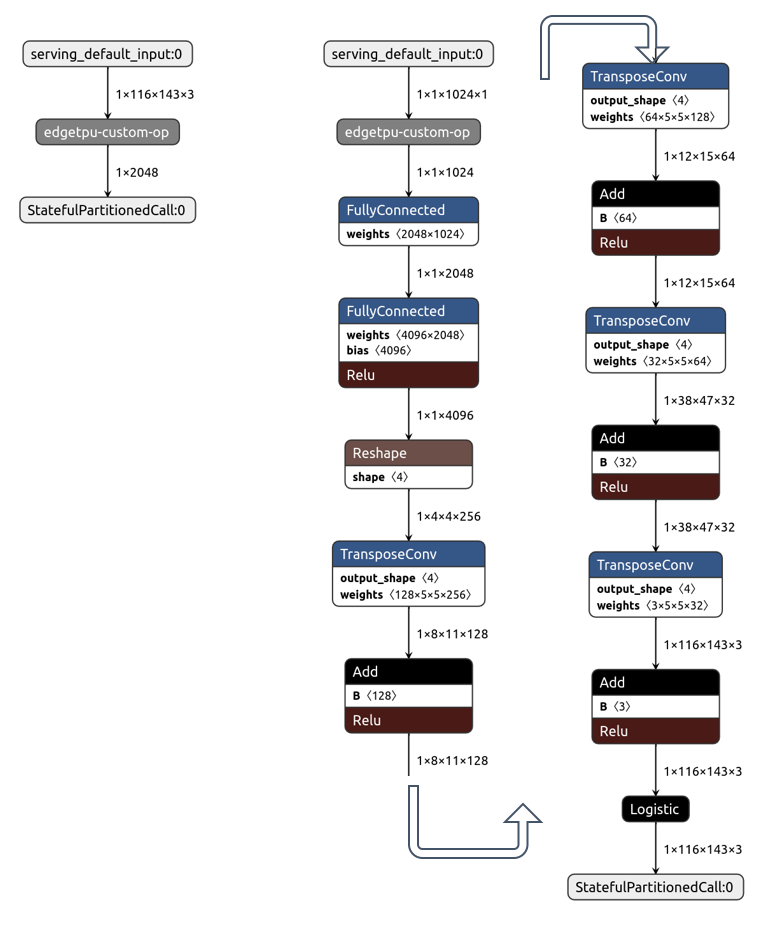

Many ML-enabled CPS research (almasi2020robust, 32) has been using the Duckietown platform since its launch. The platform runs on the Robot Operating System (ROS) software system and off-the-shelf mini-computers such as Raspberry Pi. It is also widely used in Internet of Things (IoT) applications. The relatively weak computing power of the Raspberry Pi creates a resource constraint to a direct deployment DNN based OoD detector onto such hardware platform for real-time OoD detection. For example, the execution time of our bi3dof detector is about half a second on a Rasberry Pi 3B+ model (RPI-3B+), which renders directly deploying the bi3dof detector on a Duckiebot for real-time operation infeasible. We introduce a design that customizes our bi3dof method to be deployable onto Google’s Coral Edge TPU (cass2019taking, 33). The Edge TPU is optimized for deep neural networks to run real-time inferences on embedded devices.

With the recent introduction of the new generation Jetson Nano-powered Duckiebot, we have more deployment options. The Jetson Nano is a system-on-chip (SoC) platform. Its CPU clock speed is similar to Raspberry Pi, and it supports model inference in floating-point at 472 GFLOPs. In contrast, the Coral Edge TPU supports integer inference at 4 TOPs but uses USB as a data communication interface. Each embedded platform has its own advantages and disadvantages. For example, we can run model inference directly in floating-point on the Jetson Nano but at approximately one-eighth speed of the Edge TPU. On the other hand, the SoC architecture gives Jetson Nano an advantage in data transmission between the CPU and the model inference unit. A high-end SoC platform, such as Jetson Xavier NX supports model inference in integer number at 21 TOPS. Comparatively, its model inference speed is five times of the Edge TPU. Deploying an integer version of our full-model bi3dof on a high-end embedded platform is thus feasible.

In the rest of this section, we analyse the computational costs of our model and implementation of (cai:iccps2020, 16, 17) on the Raspberry Pi plus Edge TPU embedded platform, introduce a model compression workflow. Finally, we discuss the impact of the model reduction and compression on the OoD detection power.

5.1. Computational Constraints of Embedded Devices for Real-time Applications

The RPI-3B+ has a ARMv8 CPU at 1.4Hz. Averagely, one optical flow operation takes 31.7 milliseconds, and one model inference takes 117.7 milliseconds. If all RPI-3B+ computing resource is dedicated to the OoD detector, to achieve zero frame drop in real-time means the video streaming has to be set at 6 frames per second (FPS). In the meantime, the bi3dof model used in Section 4 experiments requires 7 consecutive frames from the input video stream to do one OoD detection process. This will incur a long time delay between the image frames captured and when an OoD result is produced, which does not make it suitable for real-time applications on common off-the-shelf embedded devices.

To adapt to the RPI-3B+ CPU, we used the bi2dof-optprior model trained on the nuScenes training set. After reducing the input space dimension, the model takes about 200 millisecond to produce an OoD result on a RPI-3B+ mini-computer. However, the model inference time of bi2dof is still very long, compared to 7.6-26.3 milliseconds of the bi3dof model running on a workstation. After pushing the model onto the Edge TPU, the VAE model inference time can be reduced on average over four times. In the rest of this section, we discuss the pros and cons of applying model compression, including its impact to the model’s detection capability and compare with related works in Section 4.

| Execution Times (milliseconds) | Memory size | |||

| Detector | Pre-process | Model inference | Total | (MB) |

| Floating-point model | ||||

| bi2dof-optprior | 31.7 | 117.7 | 149.4 | 8.7 |

| (cai:iccps2020, 16)-variant | 9.9 | 336.6 | 346.5 | 101.0 |

| (shreyas:emsoft2020wip, 17) | 9.9 | 220.6 | 230.5 | 14.3 |

| 8-bit-integer model | ||||

| bi2dof-optprior | 31.7 | 26.6 | 58.3 | 2.4 |

| (cai:iccps2020, 16)-variant | 9.9 | 482.1 | 492.0 | 25.5 |

| (shreyas:emsoft2020wip, 17) | 9.9 | 10.7 | 20.6 | 3.7 |

5.2. Model Compression

DNN models rely on millions or even billions of parameters to achieve superb prediction abilities. For example, the famous AlexNet has over 60 million parameters that require floating point operations. Our floating-point CPU model has 2.2 million parameters. Graphics processing units (GPUs) have been the horsepower for training and inference of DNN models. In recent years, Tensor Processing Units (TPUs), together with various DNN model compression and acceleration techniques, are overcoming challenges in deploying DNN models to embedded devices with limited resources. Parameter quantization is a model compression technique that reduces the number of bits required to store each parameter. With a minimal loss in classification accuracy, train and infer in 8-bit quantized integers, a running time reduction of 50% was reported on the open-source TensorFlow (jacob2018quantization, 34).

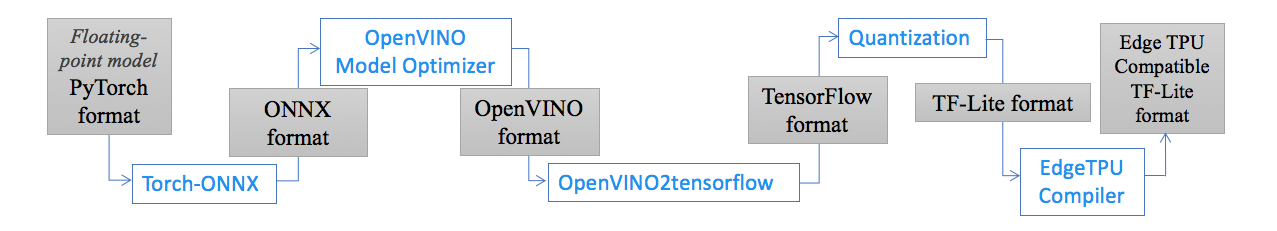

ONNX provides a toolkit for converting machine learning models built with various frameworks into an open standard format. OpenVINO is a machine learning toolkit from Intel. It was used here to optimize the ONNX format and then convert tensors from channel left (NCHW) to channel right (NHWC) format that TensorFlow supports. Quantization was done with the TFLite-Convertor.

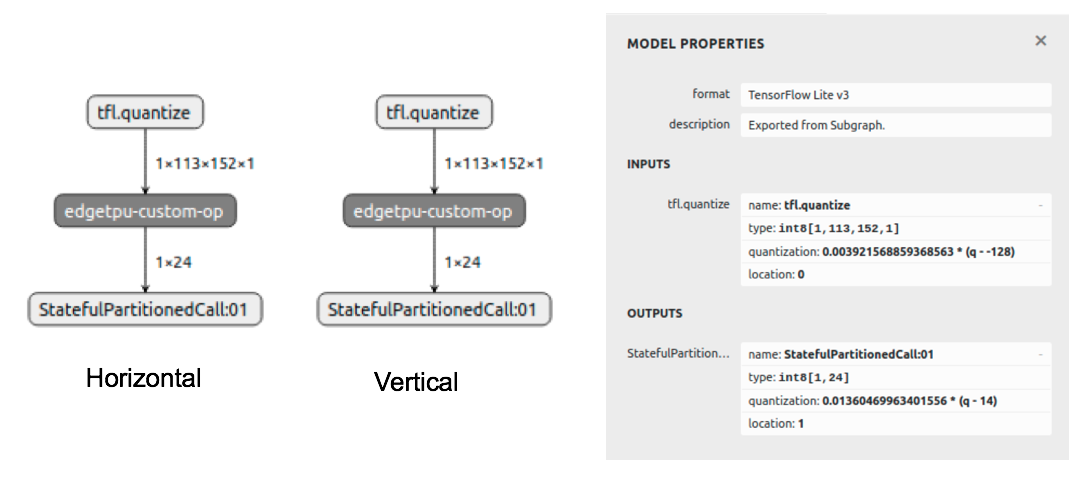

Instead of training a new model in 8-bit directly, we took the 32-bit floating point PyTorch model (bi2dof-optprior) from Section 4.5 and applied a full integer post-training quantization. The quantized model is then compiled into the Google Edge TPU’s supported format. The conversion workflow is outlined in Figure 10. Applying the same procedure, we compressed our implementation of (shreyas:emsoft2020wip, 17) in Section 4 and a variant implementation of (cai:iccps2020, 16). Model graphs of all final outputs and intermediate output of our model are shown in Appendix C.

A variant implementation is needed because as (cai:iccps2020, 16) does OoD detection in the input space, its decoder is required and needs to be compressed. However, the OpenVINO model optimizer doesn’t support the MaxPool layers in the encoder to pass on pooling indices to the MaxUnPool layers in the decoder. As such, we removed all MaxPool and MaxUnPool layers in the variant implementation. This ended up increasing a significant number of neurons to the linear layer after pooling in the variant model.

The results of computational cost are summarised in Table 7. The compressed 8-bit-integer bi2dof-optprior, is 3.6 times smaller and 2.6 times faster than its floating-point model. The compressed 8-bit-integer version of (shreyas:emsoft2020wip, 17) is 3.8 times smaller and 11 times faster than its floating-point model. A lower end-to-end reduction ratio of execution time in bi2dof-optprior is because two model inferences are invoked in the integer version, one for the horizontal and one for the vertical branch, including an extra pre-process computing (optical flow). The 8-bit-integer version of (cai:iccps2020, 16)-variant is 3.9 times smaller than its floating-point model because most of the decoder’s layer computation could not to be pushed onto the Edge TPU, as shown in Appendix C, Figure 15. The execution time however, increased by 42% instead, which implies that the time savings from the computation of the encoder branch on Edge TPU was wiped out by the time spent transmitting the decoder’s intermediate result back to the host via USB 2.0.

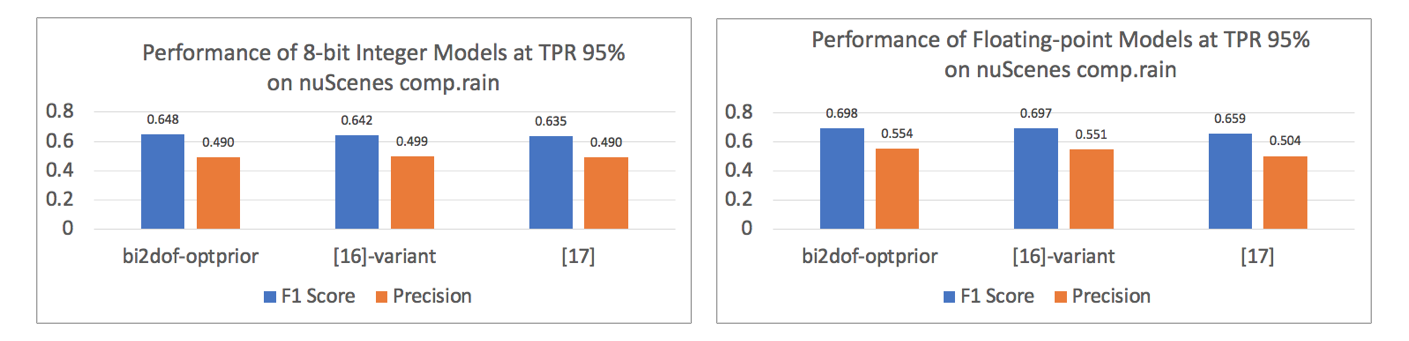

5.3. Impacts of model compression and input-space reduction to performance

Quantization approximates floating-point values into integers with . It has been reported that its impact on image classification models’ performance is minimal. For example, the ImageNet trained Inception_V4 top-1 accuracy is 80.1%, as reported in the TensorFlow guideline. Its corresponding quantized model top-1 accuracy is 79.5%. In classification tasks, the integer vector output can be used directly for class prediction.

In our case, integer-8 bi2dof-optprior outputs are mean and variance values to be plugged in to Equation 8 to derive an OoD score. We used the scale and zero_point values shown in Appendix C, Figure 13 for converting integer values to floating values and vice versa. The process requires a typical data set for calibrating the range of floating-point input tensors. We used bi2dof’s training set as the typical data set. a same compression workflow was applied to (shreyas:emsoft2020wip, 17) and (cai:iccps2020, 16)-variant.

Comparing detection performance of 8-integer models in Figure 11 with corresponding floating-point versions, we can see that the quantized model’s performance dropped a little across all three. While precision is tired up, the F1 score of integer-8 bi2dof-optprior remains higher than the rest. Performance-wise the 8-integer (cai:iccps2020, 16)-variant is closer to our method, but it is not deployable on the RPI + Edge TPU platform. Because its end-to-end execution time is 492 milliseconds.

The result from integer-8 bi2dof-optprior is comparable with its corresponding 32-bit floating-point model, although its performance loss is not minimal as compared to well-known classification tasks. The conversion of integer outputs to float values for OoD score calculation could be one reason for losing more precision in our case.

5.4. Section Summary

We explored the resource challenges of deploying a DNN-based OoD detector onto off-the-shelf embedded hardware platforms for real-time detection. It showed a compressed version of our detector completed one detection at 58.3 milliseconds at an accuracy comparable with its corresponding floating-point model. That is, given an embedded hardware platform with RPI-3B+ and Google Edge TPU dedicated for OoD detection, our compressed integer-8 bi2dof-optprior, can do one execution without any frame-drop when streaming real-time video at 17 FPS. On the other hand, we showed that reducing input data point in the temporal dimension caused the OoD detection to be less robust. One optical flow operation on the aforementioned CPU takes 31.7 milliseconds (see in Table 7). Handling input data points with a larger temporal dimension on off-the-shelf embedded hardware platforms such as Rasberry Pi is challenging. One option for future implementation and deployment of our OoD detector onto a similar embedded platform is to design a FIFO buffer to cache the previous optical flow computation results for a bi3dof OoD detector to reuse. In this feasibility study, we established a bottom line on performance and computational cost.

6. CONCLUSION and FUTURE WORK

This paper proposed a robust OoD detection method through input space feature abstraction and exploiting the spatiotemporal correlation of motion in videos. Tapping into the prior knowledge in data, we also enhanced the existing VAE-based OoD detection method. Through a series of experiments, we validated the novelties of our new detection methods and enhancement proposals. We showed that our methods’ detection performance is significantly better than the state-of-the-art, especially on model generalization capabilities. We plan to deploy our algorithm onto off-the-shelf embedded devices for OoD detection in real-time in future work. In this paper, we compressed our detection model to suit a mini-computer’s computing capacity and a recently available Edge TPU. Finally, we provided a feasibility analysis on deployment to an embedded platform, impacts of model compression on detection performance, and how its execution latency affects real-time applications.

Acknowledgements.

This research was funded in part by MoE, Singapore, Tier-2 grant number MOE2019-T2-2-040.References

- (1) Anh Nguyen, Jason Yosinski and Jeff Clune “Deep neural networks are easily fooled: High confidence predictions for unrecognizable images” In Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 427–436

- (2) Kevin Eykholt et al. “Robust physical-world attacks on deep learning visual classification” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 1625–1634

- (3) Balaji Lakshminarayanan, Alexander Pritzel and Charles Blundell “Simple and scalable predictive uncertainty estimation using deep ensembles” In arXiv preprint arXiv:1612.01474, 2016

- (4) Kimin Lee, Kibok Lee, Honglak Lee and Jinwoo Shin “A simple unified framework for detecting out-of-distribution samples and adversarial attacks” In Advances in Neural Information Processing Systems, 2018, pp. 7167–7177

- (5) Philipp Oberdiek, Matthias Rottmann and Hanno Gottschalk “Classification uncertainty of deep neural networks based on gradient information” In IAPR Workshop on Artificial Neural Networks in Pattern Recognition, 2018, pp. 113–125 Springer

- (6) Diederik P Kingma and Max Welling “Auto-encoding variational bayes” In arXiv preprint arXiv:1312.6114, 2013

- (7) Irina Higgins et al. “beta-vae: Learning basic visual concepts with a constrained variational framework”, 2016

- (8) Erik Daxberger and José Miguel Hernández-Lobato “Bayesian variational autoencoders for unsupervised out-of-distribution detection” In arXiv preprint arXiv:1912.05651, 2019

- (9) Vijaya Kumar Sundar et al. “Out-of-Distribution Detection in Multi-Label Datasets using Latent Space of beta-VAE” In 2020 IEEE Security and Privacy Workshops (SPW), 2020, pp. 250–255 IEEE

- (10) Yezheng Liu et al. “Generative adversarial active learning for unsupervised outlier detection” In IEEE Transactions on Knowledge and Data Engineering 32.8 IEEE, 2019, pp. 1517–1528

- (11) Julia Nitsch et al. “Out-of-distribution detection for automotive perception” In arXiv preprint arXiv:2011.01413, 2020

- (12) Eric Nalisnick et al. “Do deep generative models know what they don’t know?” In arXiv preprint arXiv:1810.09136, 2018

- (13) Ilya Tolstikhin, Olivier Bousquet, Sylvain Gelly and Bernhard Schoelkopf “Wasserstein auto-encoders” In arXiv preprint arXiv:1711.01558, 2017

- (14) John L Barron and Neil A Thacker “Tutorial: Computing 2D and 3D optical flow” In Imaging science and biomedical engineering division, medical school, university of manchester 1, 2005

- (15) Javad Zolfaghari Bengar et al. “Temporal coherence for active learning in videos” In 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), 2019, pp. 914–923 IEEE

- (16) F. Cai and X. Koutsoukos “Real-time Out-of-distribution Detection in Learning-Enabled Cyber-Physical Systems” In 2020 ACM/IEEE 11th International Conference on Cyber-Physical Systems (ICCPS), 2020, pp. 174–183

- (17) Arvind Easwaran Shreyas Ramakrishna and Abhishek Dubey “WIP: Resource Efficient Multi-class Out-of-distribution reasoning for Perception Based Networks” In Work-in-Progress Session of the IEEE/ACM International Conference on Embedded Software (EMSOFT), 2020

- (18) Hyunsun Choi, Eric Jang and Alexander A Alemi “Waic, but why? generative ensembles for robust anomaly detection” In arXiv preprint arXiv:1810.01392, 2018

- (19) Jie Ren et al. “Likelihood ratios for out-of-distribution detection” In arXiv preprint arXiv:1906.02845, 2019

- (20) Joan Serrà et al. “Input complexity and out-of-distribution detection with likelihood-based generative models” In arXiv preprint arXiv:1909.11480, 2019

- (21) Dan Li, Dacheng Chen, Jonathan Goh and See-kiong Ng “Anomaly detection with generative adversarial networks for multivariate time series” In arXiv preprint arXiv:1809.04758, 2018

- (22) Weiming Hu, Tieniu Tan, Liang Wang and Steve Maybank “A survey on visual surveillance of object motion and behaviors” In IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews) 34.3 IEEE, 2004, pp. 334–352

- (23) Suihanjin Yu et al. “HMFlow: Hybrid Matching Optical Flow Network for Small and Fast-Moving Objects” In 2020 25th International Conference on Pattern Recognition (ICPR), 2021, pp. 1197–1204 IEEE

- (24) Gabriele Costante and Thomas Alessandro Ciarfuglia “LS-VO: Learning dense optical subspace for robust visual odometry estimation” In IEEE Robotics and Automation Letters 3.3 IEEE, 2018, pp. 1735–1742

- (25) Fei Guo et al. “Detecting vehicle anomaly in the edge via sensor consistency and frequency characteristic” In IEEE Transactions on Vehicular Technology 68.6 IEEE, 2019, pp. 5618–5628

- (26) Cian Ryan, Finbarr Murphy and Martin Mullins “End-to-End Autonomous Driving Risk Analysis: A Behavioural Anomaly Detection Approach” In IEEE Transactions on Intelligent Transportation Systems IEEE, 2020

- (27) Matthew D Hoffman and Matthew J Johnson “Elbo surgery: yet another way to carve up the variational evidence lower bound” In Workshop in Advances in Approximate Bayesian Inference, NIPS 1, 2016, pp. 2

- (28) Jakub Tomczak and Max Welling “VAE with a VampPrior” In International Conference on Artificial Intelligence and Statistics, 2018, pp. 1214–1223 PMLR

- (29) Najmeh Abiri and Mattias Ohlsson “Variational auto-encoders with Student’s t-prior” In arXiv preprint arXiv:2004.02581, 2020

- (30) Yijun Xiao, Tiancheng Zhao and William Yang Wang “Dirichlet variational autoencoder for text modeling” In arXiv preprint arXiv:1811.00135, 2018

- (31) Holger Caesar et al. “nuscenes: A multimodal dataset for autonomous driving” In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 11621–11631

- (32) Péter Almási, Róbert Moni and Bálint Gyires-Tóth “Robust reinforcement learning-based autonomous driving agent for simulation and real world” In arXiv preprint arXiv:2009.11212, 2020

- (33) Stephen Cass “Taking AI to the edge: Google’s TPU now comes in a maker-friendly package” In IEEE Spectrum 56.5 IEEE, 2019, pp. 16–17

- (34) Benoit Jacob et al. “Quantization and training of neural networks for efficient integer-arithmetic-only inference” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 2704–2713

Appendix A Likelihood Overlap Explained

We trained two VAE models to reproduce the likelihood overlap problem. The mean square errors between the input and reconstructed images were used as OoD scores. The model encoder includes three CNN blocks of 32/64/128 x (3/4/5) filters with ReLU activation. The decoder is symmetric. And the latent space dimension is 32.

As shown in Figure 12, distributions of OoD scores of KMNIST and MNIST test sets (right column top row) overlap when the detection model is trained with the KMNIST training set. In contrast, when the detection model is trained using the same network but MNIST training set, the OoD scores distribution of the KMNIST test set (left column top row) clearly diverges from OoD scores of the MNIST test set. The phenomenon is not symmetric with regard to the model training set. This pattern can be observed in CIFAR10 versus SVHN sets as well, in a weaker form.

Sample images from each data set is shown in the middle column. Distributions of OoD scores from test sets are shown in the left and right columns (likelihood overlap cases) .

Appendix B Derivation of Equation 3b

For abbreviation, A shorter version of notation is used here, i.e. denotes in Equation 3b, for and for .

Let be the value of a Gaussian decoder’s reconstruction of in dimension and parameters and to model. The decoder probability density function and the likelihood is . So the negative log-likelihood

| (9) |

Appendix C Model Graphs from the Compression Workflow

Scale and zero_point values used for converting input data from floating points to 8-bit integers and output data in the horizontal branch back to floating points are shown in the model properties panel. Scale and zero_point values of the vertical branch are 0.01613845 and 13, respectively.

Only the horizontal encoder is shown. The vertical encoder network structure is identical. From left to right are the encoder network structure in PyTorch format, ONNX format, and TFLite format after quantization. Activation function ReLU was used here instead of ELU because the Google Edge TPU doesn’t support ELU. The OpenVINO tool shifts channel right (NCHW) tensors into channel left (NHWC) format. Its output is in XML format, not shown here.

The graph in the left-most column is the encoder. The middle and right columns are graphs of the decoder. Computation of grey-colored layers will be executed on Edge TPU. Computation of none-grey-colored layers will be performed on the CPU.

Appendix D Latent Variables Selection Algorithm in (shreyas:emsoft2020wip, 17)

| Pseudo code | |

| Input: | calibration set , number of latent variables to select |

| Output: | index of selected latent variables |

| 1: | |

| 2: | For each scene in do |

| 3: | ordered frames belongs to the scene |

| 4: | For do |

| 5: | |

| 6: | |

| 7: | |

| 8: | end for |

| 9: | end for |

| 10: | |

| 11: | return index of first latent variables in |