Incorporating Prior Knowledge of Latent Group Structure in Panel Data Models111Please check here for the latest version.

First Version: June 14, 2022

This Version: August 1, 2025

)

Abstract

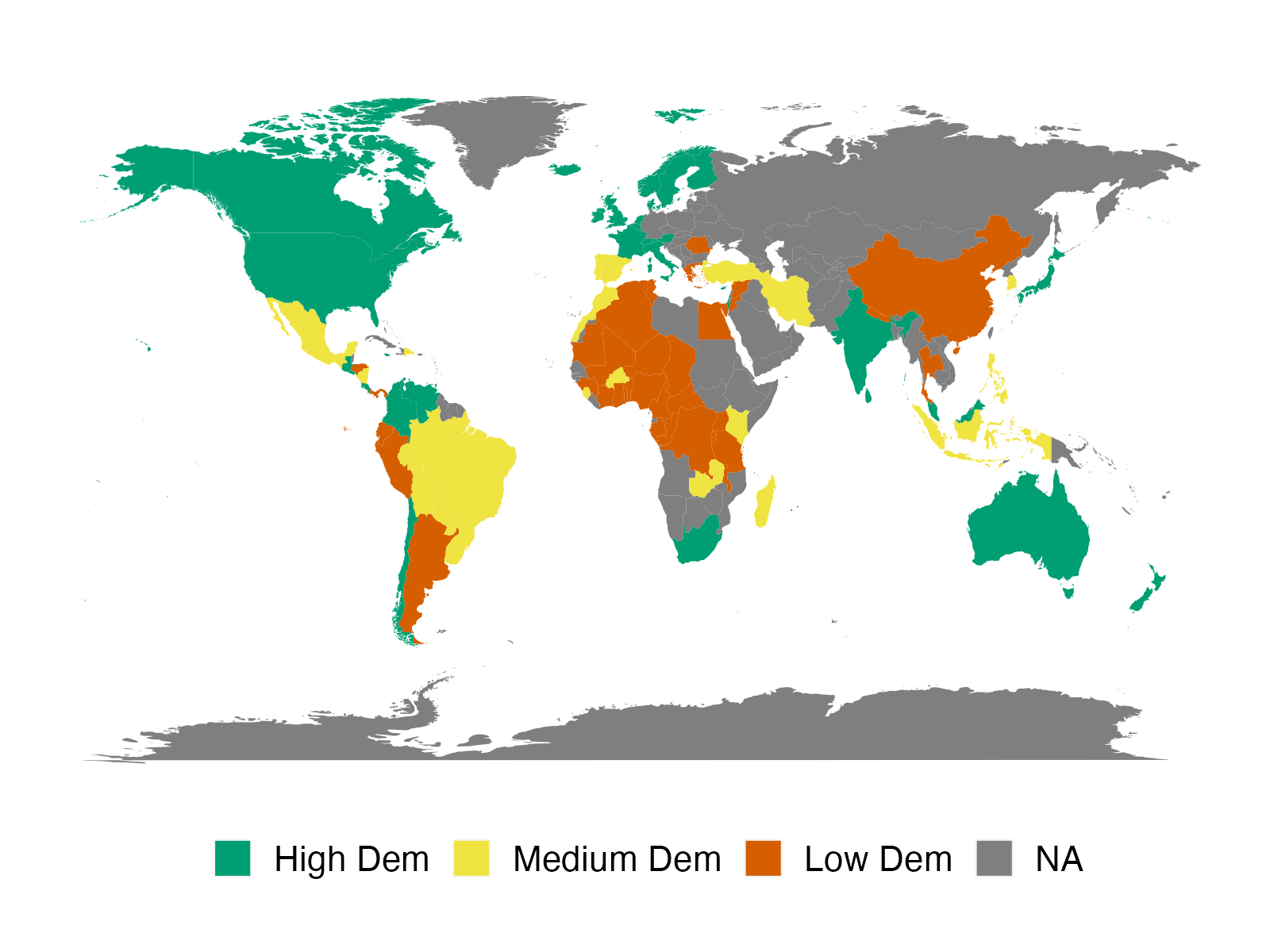

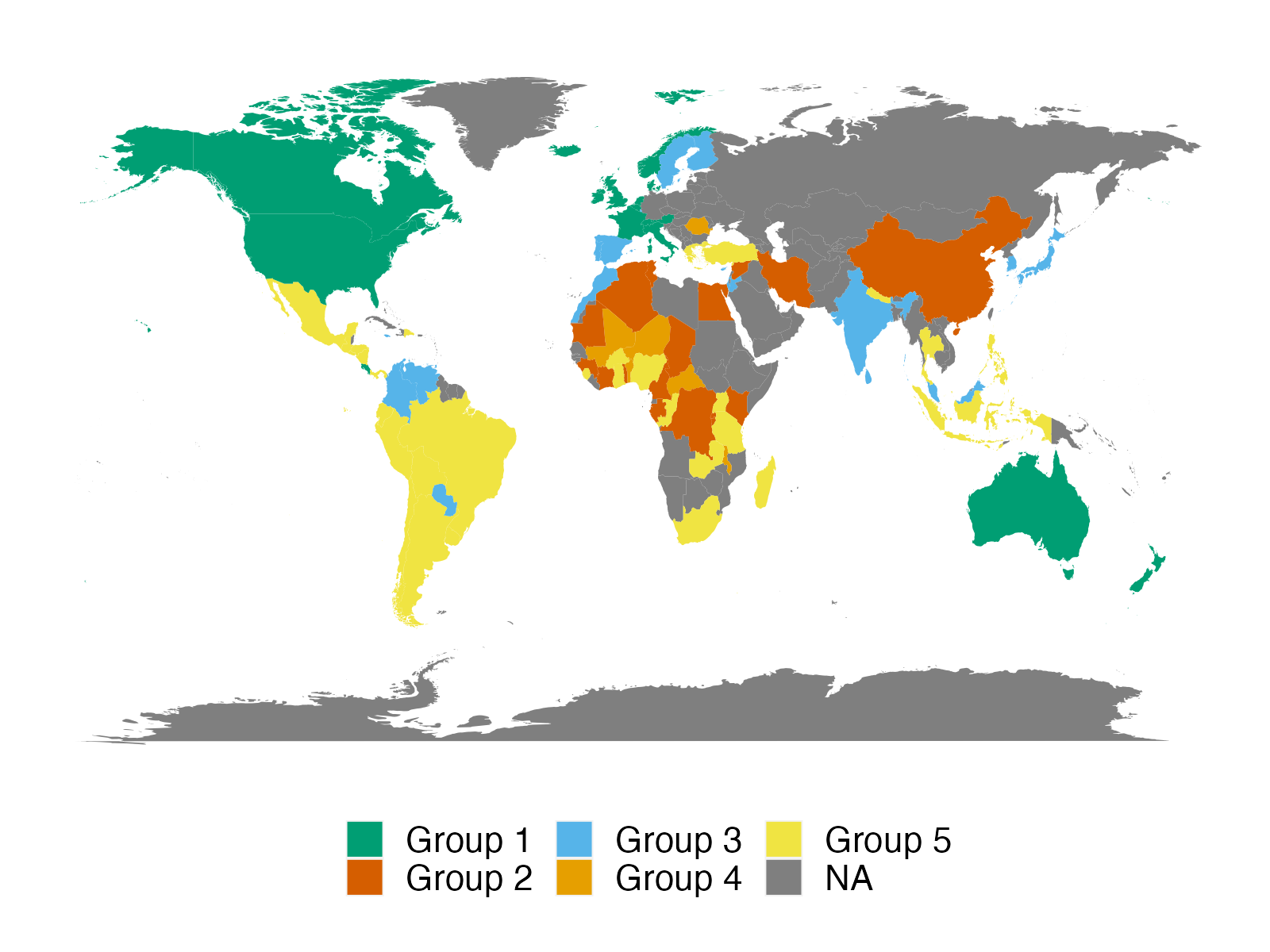

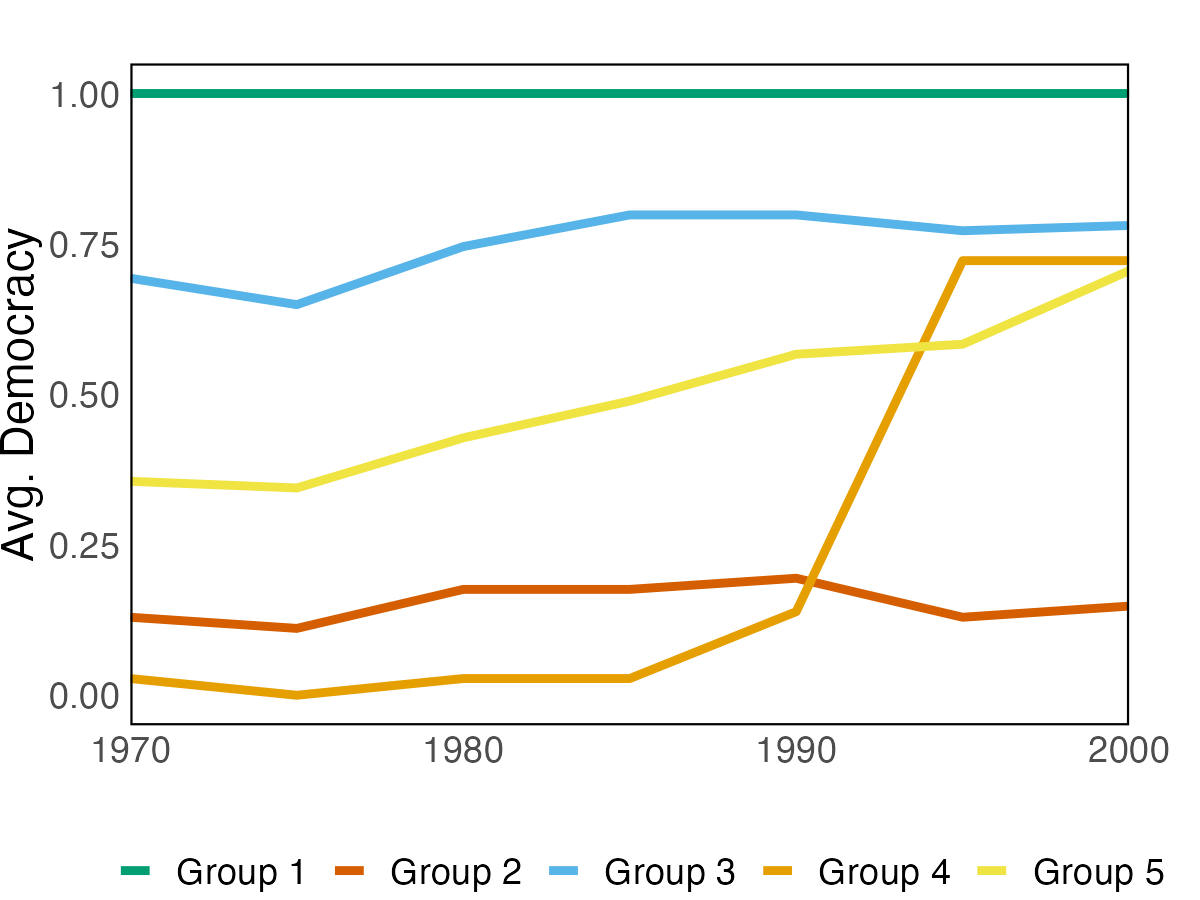

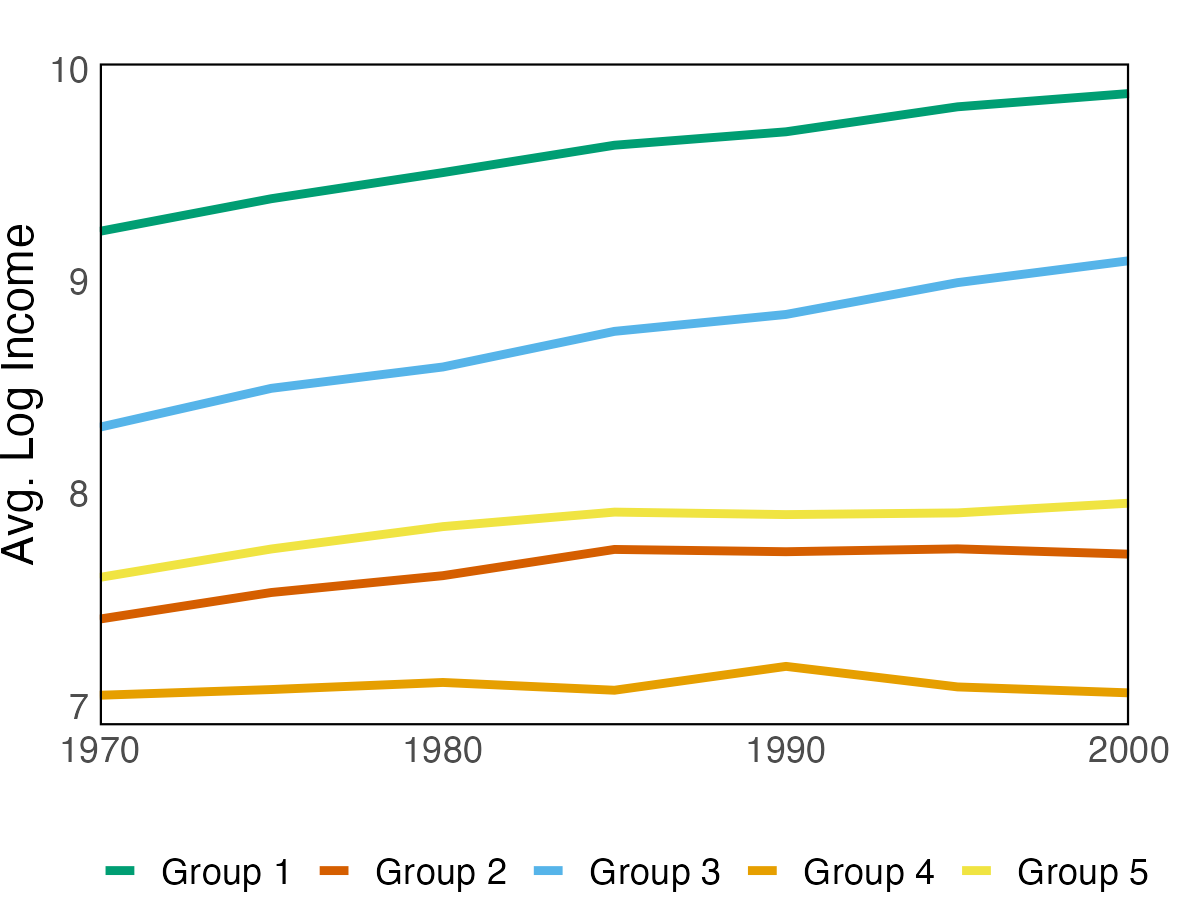

The assumption of group heterogeneity has become popular in panel data models. We develop a constrained Bayesian grouped estimator that exploits researchers’ prior beliefs on groups in a form of pairwise constraints, indicating whether a pair of units is likely to belong to a same group or different groups. We propose a prior to incorporate the pairwise constraints with varying degrees of confidence. The whole framework is built on the nonparametric Bayesian method, which implicitly specifies a distribution over the group partitions, and so the posterior analysis takes the uncertainty of the latent group structure into account. Monte Carlo experiments reveal that adding prior knowledge yields more accurate estimates of coefficient and scores predictive gains over alternative estimators. We apply our method to two empirical applications. In a first application to forecasting U.S. CPI inflation, we illustrate that prior knowledge of groups improves density forecasts when the data is not entirely informative. A second application revisits the relationship between a country’s income and its democratic transition; we identify heterogeneous income effects on democracy with five distinct groups over ninety countries.

JEL CLASSIFICATION: C11, C14, C23, E31

KEY WORDS: Grouped Heterogeneity; Bayesian Nonparametrics; Dirichlet Process Prior; Density Forecast; Inflation Rate Forecasting; Democracy and Development.

1 Introduction

Numerous studies have examined and demonstrated the important role of panel data models in empirical research throughout the social and business sciences, as the availability of panel data has increased. Using fixed-effects, panel data permits researchers to model unobserved heterogeneity across individuals, firms, regions, and countries as well as possible structural changes over time. As individual heterogeneity is often empirically relevant, fixed-effects are an objective of interest in numerous empirical studies. For example, teachers’ fixed-effects are viewed as a measure of teacher quality in the literature on teacher valued-added (Rockoff, 2004; Chetty et al., 2014); the heterogeneous coefficients are crucial for panel data forecasting (Liu et al., 2020; Pesaran et al., 2022). In practice, however, researchers may face a short panel where is large and is short and fixed. When applying the least squares estimator for the fixed-effects, a significant number of noisy estimates are produced. To alleviate this issue, a popular and parsimonious assumption that has recently been used is to introduce a group pattern into the individual coefficients, so that units within each group have identical coefficients (Bonhomme and Manresa (2015, BM hereafter), Su et al. (2016), Bonhomme et al. (2022)).

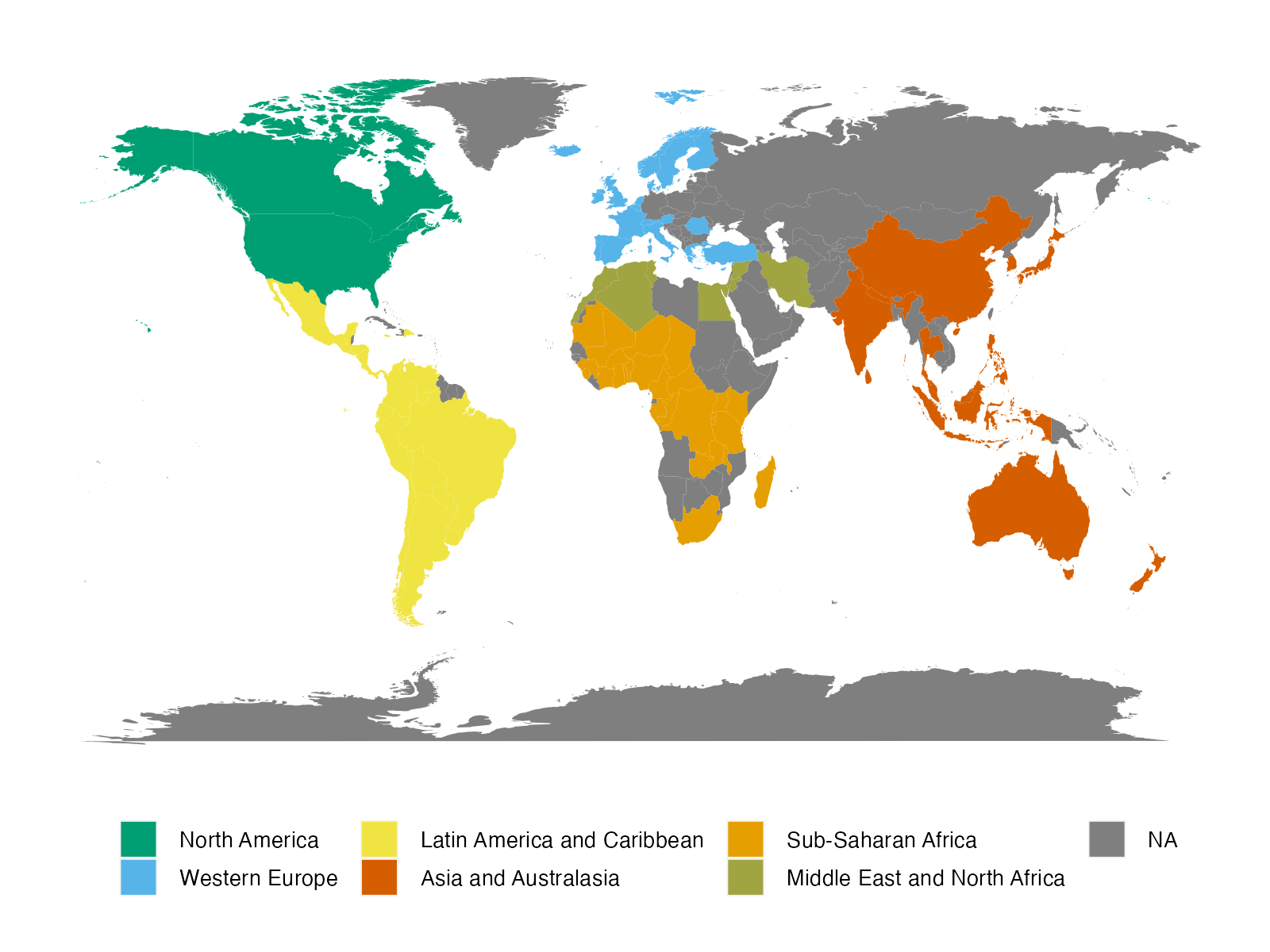

To recover the group pattern, we essentially face a clustering problem, e.g., dividing units into several unknown groups. All existing methods for estimating group heterogeneity solve a clustering problem by assuming that units are exchangeable and treating all units equally a priori. In a cross-country application of evaluating the impact of climate change on economic growth (Hsiang, 2016; Henseler and Schumacher, 2019; Kahn et al., 2021), countries in different climatic zones are assumed to have equal probabilities of being grouped together. The assumption of exchangeability might not be reasonable since correlations are common between observations at proximal locations and researchers could have knowledge of the underlying group structure based on theories or empirical findings. For instance, Sweden and Finland, which share a border, an economic structure, and weather conditions, may have a higher chance of being in the same group than African countries. In such a scenario, it is preferable to use additional information to break the exchangeability between countries to facilitate grouping as opposed to clustering based solely on observations in the sample. The availability of this information drives us to formalize such prior knowledge, which we wish to leverage to improve model performance.

In this paper, we focus on the group heterogeneity in the linear panel data model and develop a nonparametric Bayesian framework to incorporate prior knowledge of groups, which is considered additional information that does not enter the likelihood function. The prior knowledge aids in clustering units into groups and sharpens the inference of group-specific parameters, particularly when units are not well-separated.

The whole framework is built on the nonparametric Bayesian method, where we do not impose a restriction on the number of groups, and model selection is not required. The baseline model is a linear panel data model with an unknown group pattern in fixed-effects, slope coefficients, and cross-sectional error variances. We estimate the model using the stick-breaking representation Sethuraman (1994) of the Dirichlet process (DP) Ferguson (1973, 1974) prior, a standard prior in nonparametric Bayesian inference. In this framework, the number of groups is considered a random variable and is subject to posterior inference. The number of groups and group membership are estimated together with the heterogeneous coefficients. Moreover, since the DP prior implicitly defines a prior distribution on the group partitionings, the posterior analysis takes the uncertainty of the latent group structure into account.

The derivation of the proposed prior starts with summarizing prior knowledge in the form of pairwise constraints, which describe a bilateral relationship between any two units. Inspired by the work of Wagstaff and Cardie (2000), we consider two types of constraints: positive-link and negative-link constraints, representing the preference of assigning two units to the same group or distinct groups. Instead of imposing these constraints dogmatically, each constraint is given a level of accuracy that shows how confident the researchers are in their choice. There is a hyperparameter that controls the overall strength of the prior knowledge: a small value partially recovers the exchangeability assumption on units, whereas a large value confines the prior distribution of group partitioning around group structure based on prior knowledge. We choose the optimal value for the hyperparameter by maximizing the marginal data density. Summarizing prior knowledge in the form of pairwise constraints is practical and flexible since it eliminates the need to predetermine the number of groups and focuses on the bilateral relationships within any subset of units.

The aforementioned pairwise constraints are used to modify the standard DP prior. In particular, the pairwise constraints are combined with the prior distribution of the group partitioning, shrinking the distribution toward my prior knowledge. We refer to the estimator using the proposed prior as the Bayesian group fixed-effects (BGFE) estimator.

We derive a posterior sampling algorithm for the framework with the modified DP prior. Adopting conjugate priors on group-specific coefficients allows for drawing directly from posteriors using a computationally efficient Gibbs sampler. With the newly proposed prior, it can be shown that, compared to the framework that uses a standard DP prior, all that is needed to implement pairwise constraints is a simple modification to the posterior of the group indices.

The pairwise constraint-based framework is closely related and applicable to other models where group structure plays a role. Although we concentrate primarily on the panel data model, the DP prior with pairwise constraints applies to models without the time dimension, such as the standard clustering problem and the estimation of heterogeneous treatment effects. The framework is also applicable to estimating panel VARs (Holland et al., 1983), which involves multiple dependent variables. The group structure is used to overcome overparameterization and overfitting issues by clustering the VAR coefficients into groups, and pairwise constraints add additional information to the highly parameterized model. Moreover, the proposed Gibbs sampler with pairwise constraints is connected to the KMeans-type algorithm, motivating a frequentist’s counterpart of our estimator with a fixed . Essentially, the assignment step in the Pairwise Constrained-KMeans algorithm Basu et al. (2004a), a constrained version of the KMeans algorithm MacQueen et al. (1967), is remarkably similar to the step of drawing a group membership indicator from its posterior. The same exact equivalence can be achieved by applying small-variance asymptotics to the posterior densities under certain conditions. To obtain the frequentist’s analog of our pairwise constrained Bayesian estimators, one can utilize the same approach in BM with the Pairwise Constrained-KMeans algorithm.

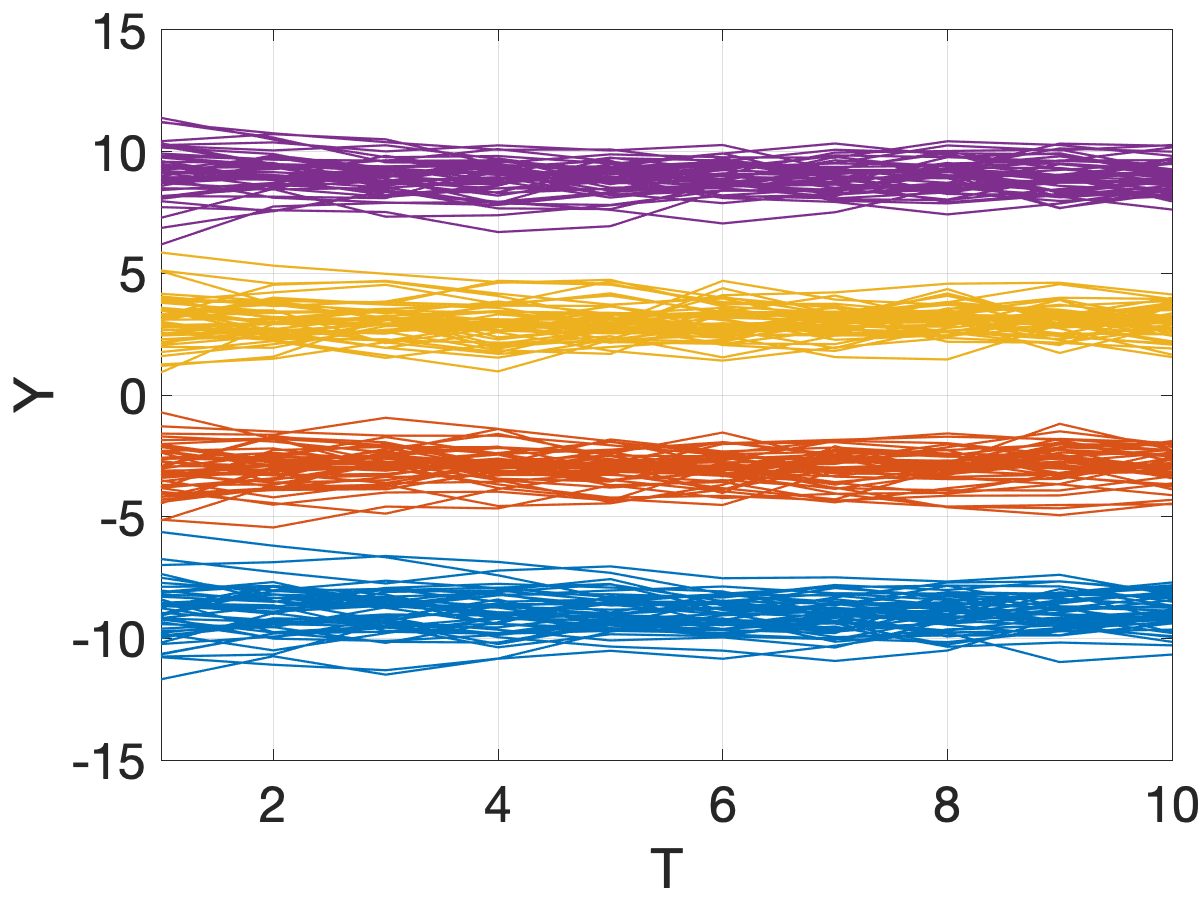

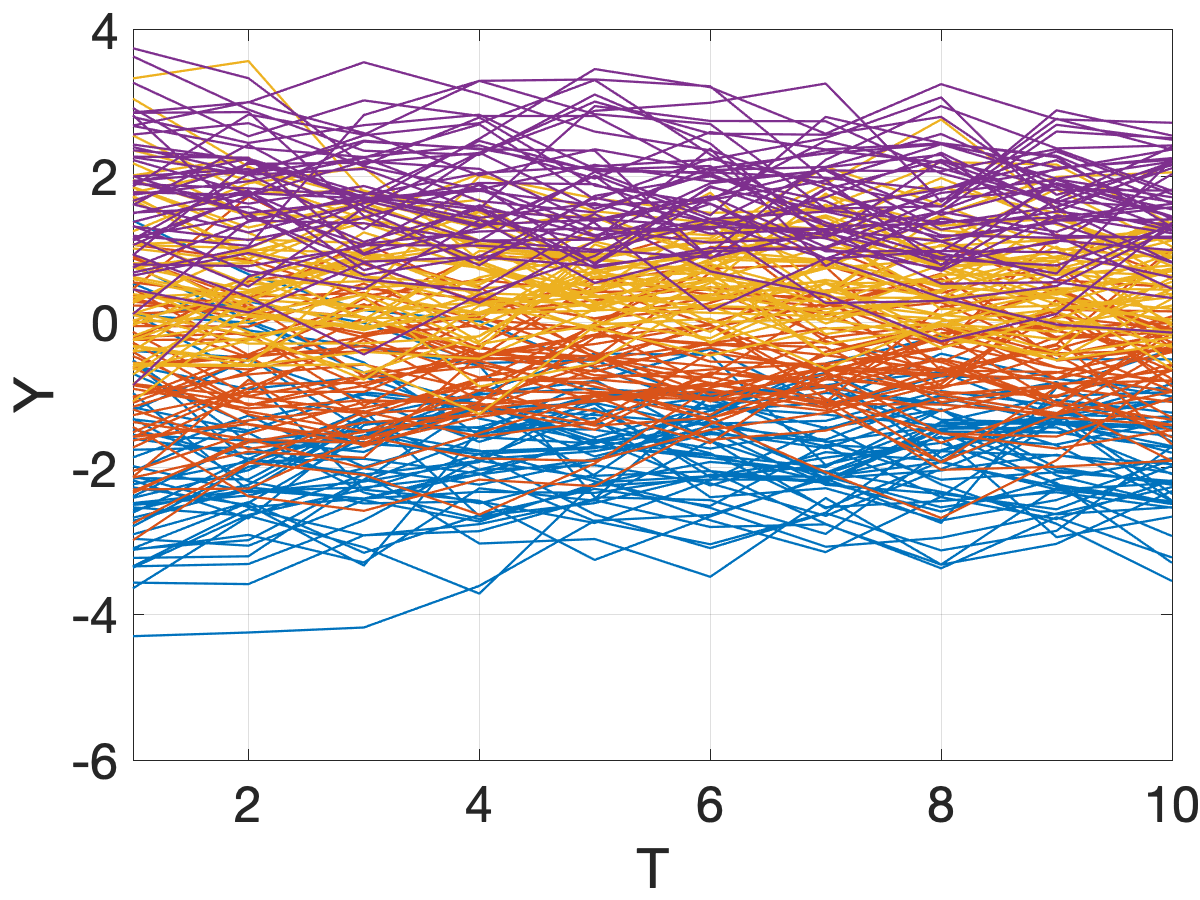

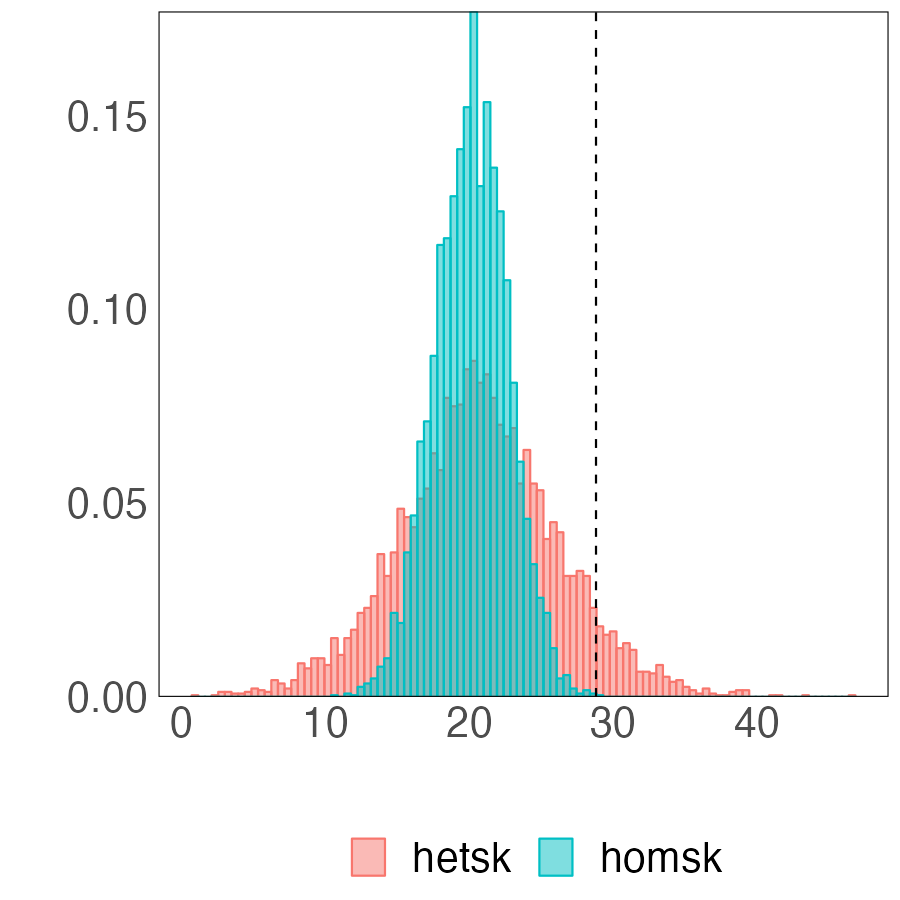

We compare the performance of the BGFE estimator to alternative estimators using simulated data. The Monte Carlo simulation demonstrates that the BGFE estimator generates more accurate estimates of the group-specific parameters and the number of groups than the BGFE estimator without including any constraints. The improved performance is mostly attributable to the precise group structure estimation. The BGFE estimator clearly dominates the estimators that omit the group structure by assuming homogeneity or full heterogeneity. We also evaluate the performance of one-step ahead point, set, and density forecasts. Unsurprisingly, the accurate estimates translate into the predictive power of the underlying model; the BGFE estimator outperforms the rest of the estimators.

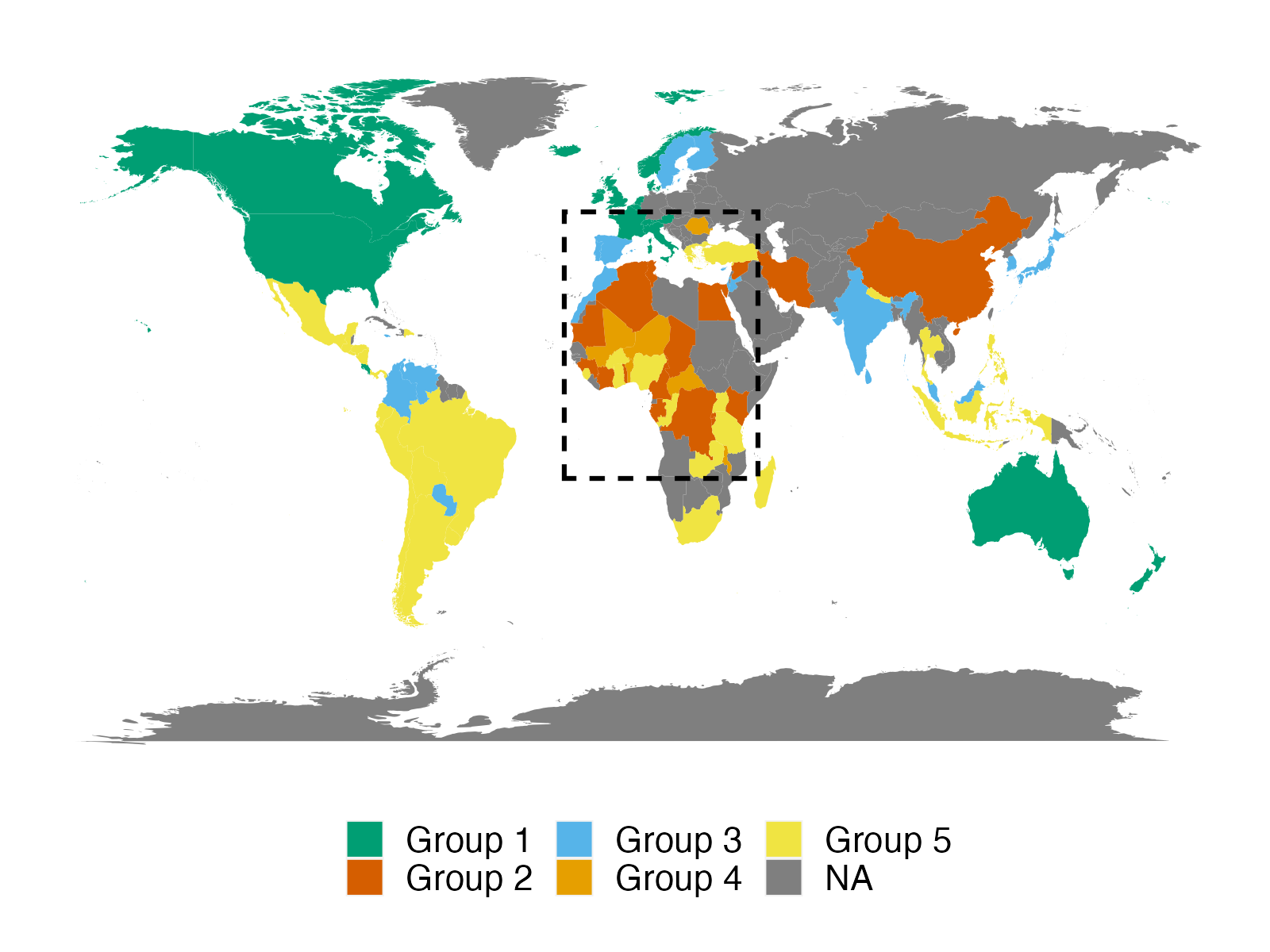

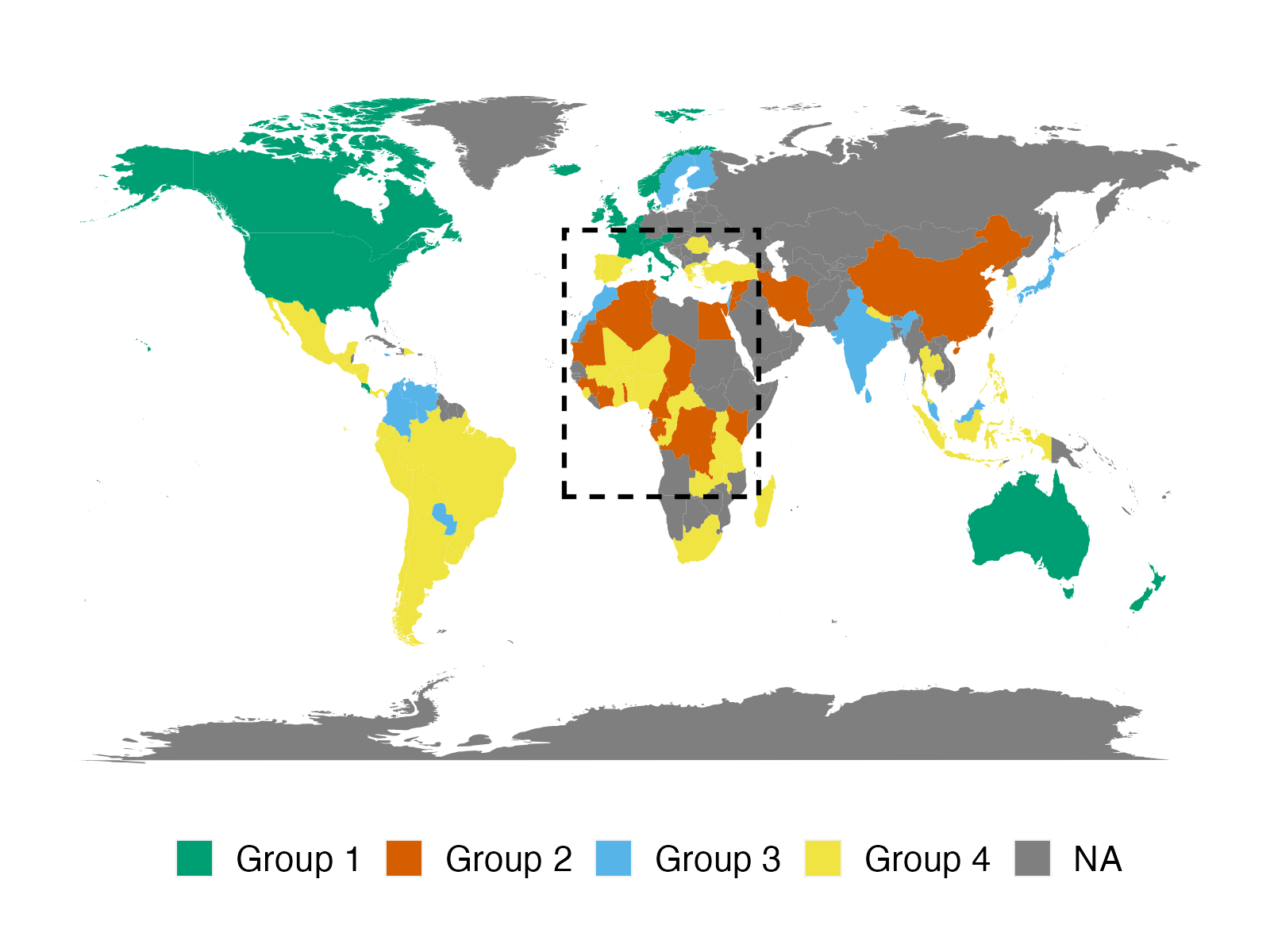

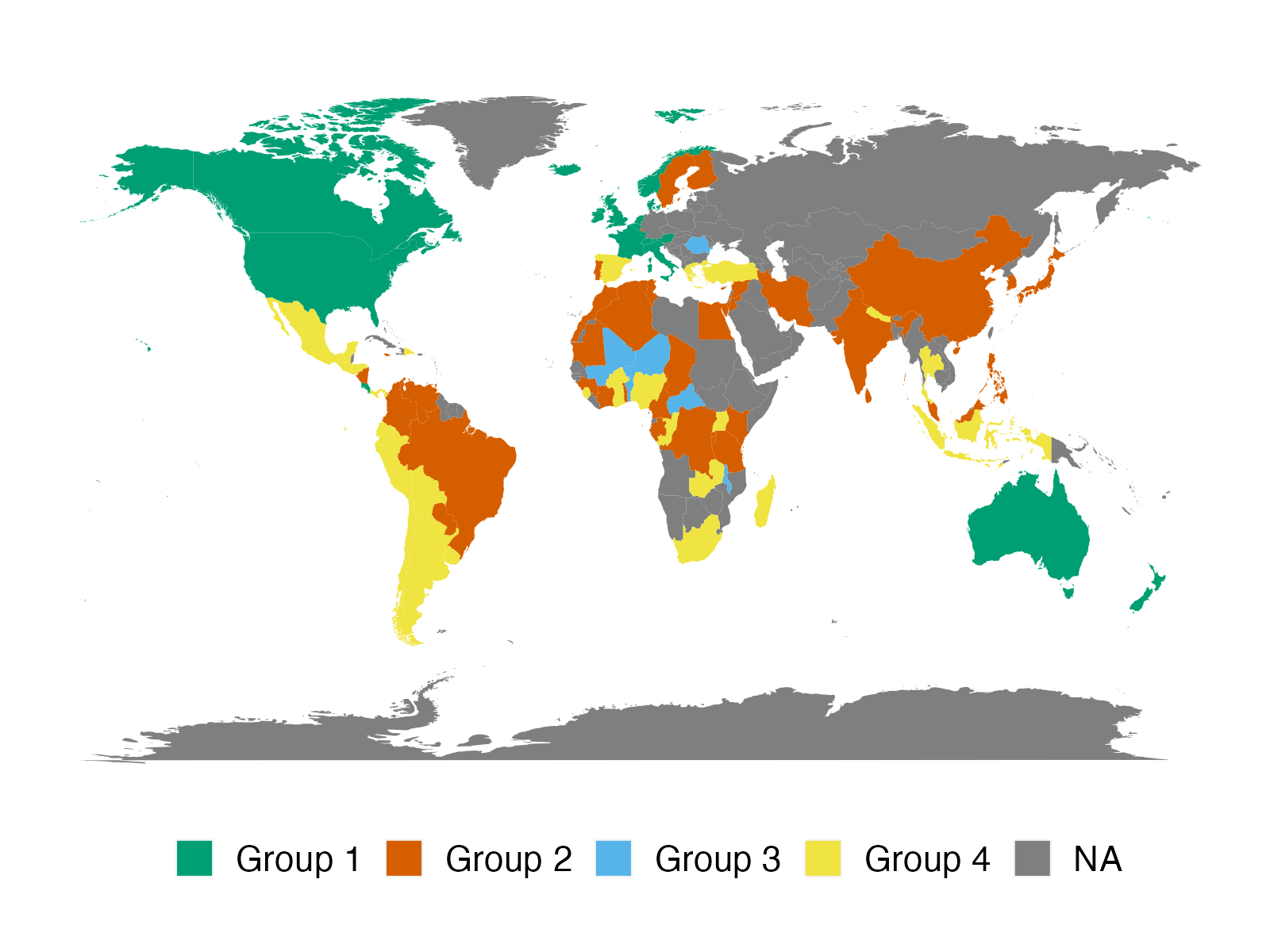

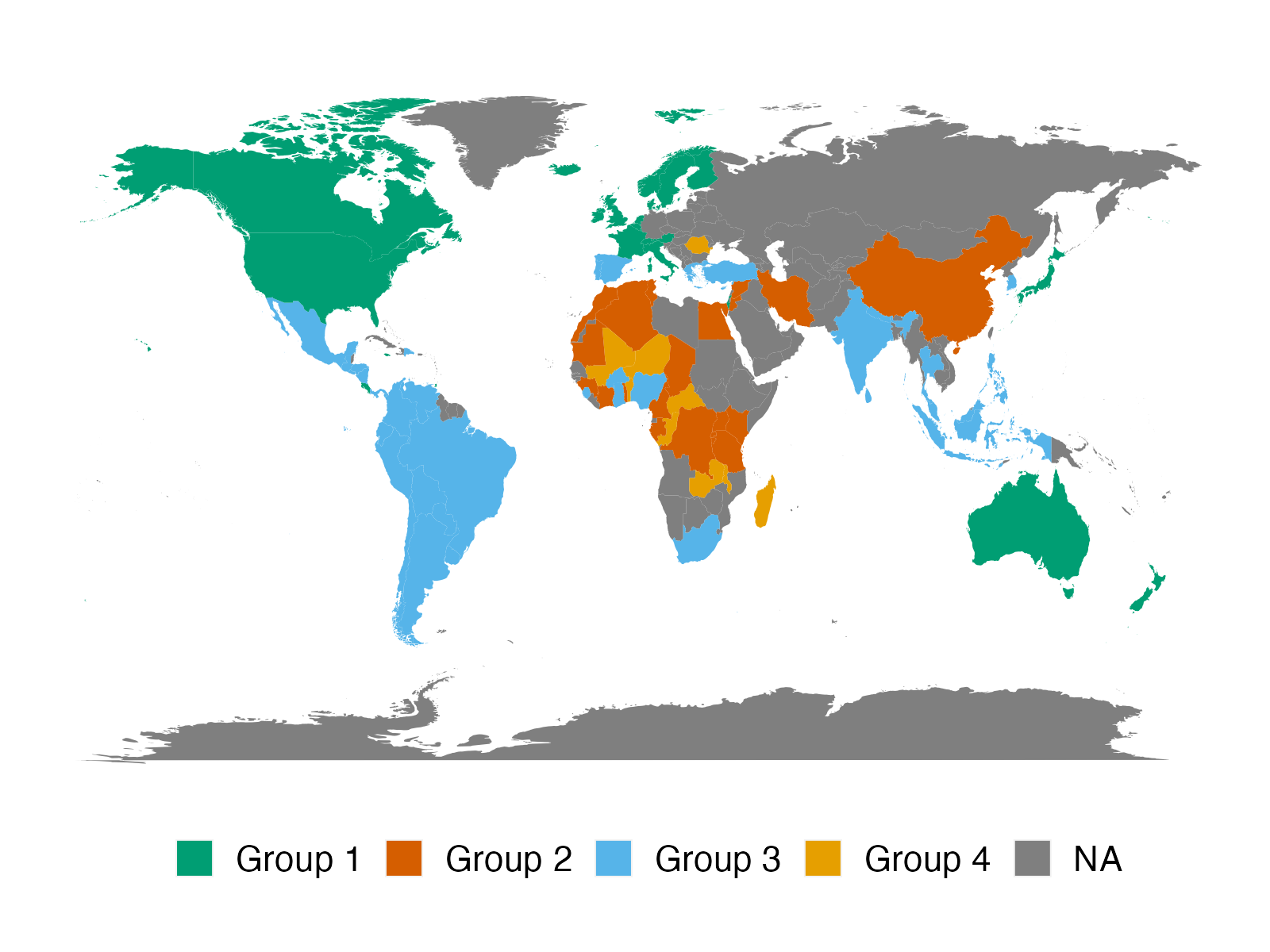

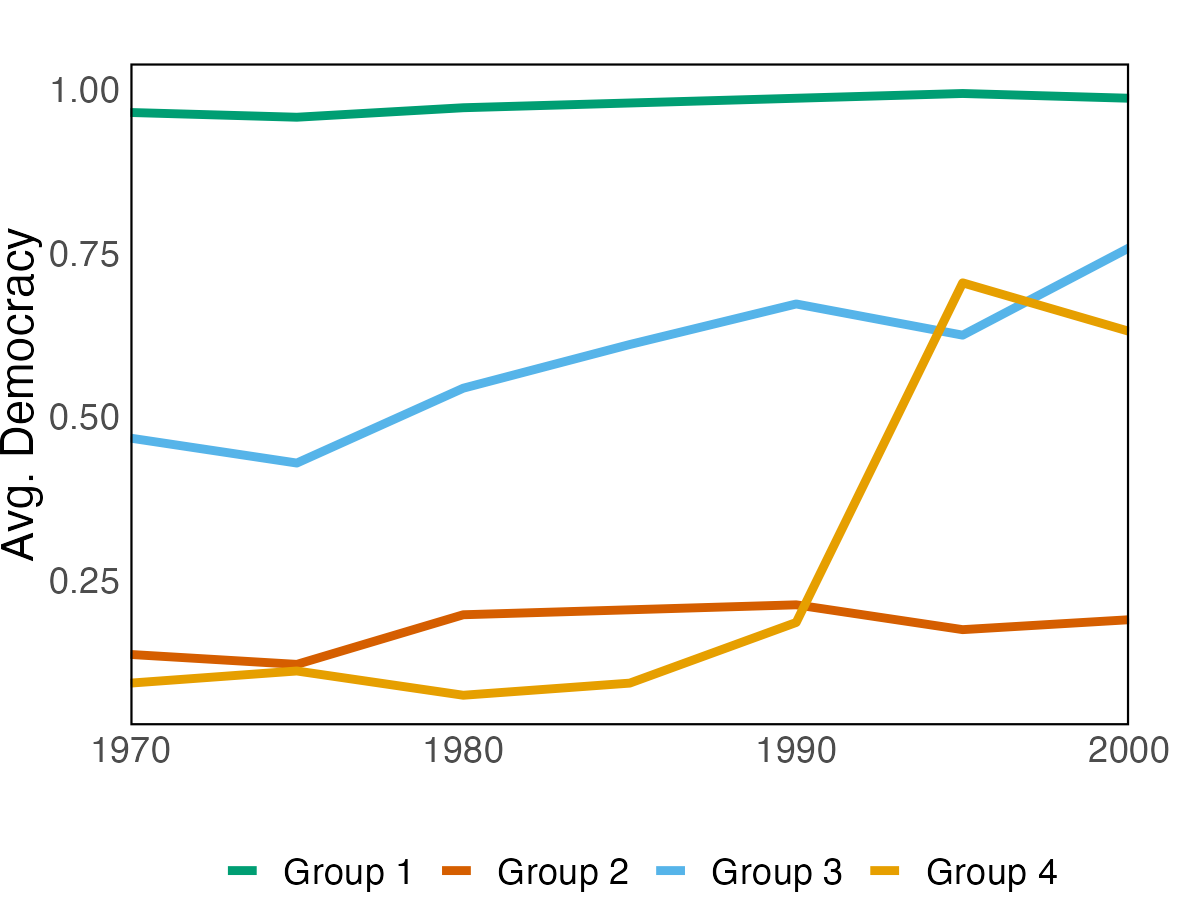

We apply the proposed method to two empirical applications. An application to forecasting the inflation of the U.S. CPI sub-indices demonstrates that the suggested predictor yields more accurate density predictions. The better forecasting performance is mostly attributable to three key characteristics: the nonparametric Bayesian prior, prior belief on group structure, and grouped cross-sectional heteroskedasticity. In a second application, we revisit the relationship between a country’s income and its democratic transition. This question was originally studied by Acemoglu et al. (2008), who demonstrate that the positive income effect on democracy disappears if country fixed effects are introduced into the model. The proposed framework recovers a group structure with a moderate number of groups. Each group has a clear and distinct path to democracy. In addition, we identify heterogeneous income effects on democracy and, contrary to the initial findings, show that a positive income effect persists in some groups of countries, though quantitatively small.

Literature. This paper relates to the econometric literature on clustering in panel data models. Early contributions include Sun (2005) and Buchinsky et al. (2005). Hahn and Moon (2010) provide economic and theoretical foundations for fixed effects with a finite support. Most recent works focus on linear222See Wang and Su (2021); Bonhomme et al. (2022), among others, for procedures to identify latent group structures in nonlinear panel data models. panel data models with discrete unobserved group heterogeneity. Lin and Ng (2012) and Sarafidis and Weber (2015) apply the KMeans algorithm to identify the unobserved group structure of slope coefficients. Bonhomme and Manresa (2015) also use the KMeans algorithm to recover the group pattern, but they assume group structure in the additive fixed effects. Bonhomme et al. (2022) modify this method and split the procedure into two steps. They first classify individuals into groups using KMeans algorithm and then estimate the coefficients. Ando and Bai (2016) improved on BM’s approach by allowing for group structure among the interactive fixed effects. The underlying factor structure in the interactive fixed effects is the key to forming groups. Su et al. (2016) develop a new variant of Lasso to shrink individual slope coefficients to unknown group-specific coefficients. This method is then extended by Su and Ju (2018) and Su et al. (2019). Freeman and Weidner (2022) consider two-way grouped fixed effects that allow for different group patterns in time and cross-sectional dimensions. Okui and Wang (2021) and Lumsdaine et al. (2022) identify structure breaks in parameters along with grouped patterns. From the Bayesian perspective, Kim and Wang (2019), Zhang (2020), and Liu (2022) adopt the Dirichlet process prior to estimate grouped heterogeneous intercepts in linear panel data models in the semiparametric Bayesian framework. moon2023 incorporate a version of a spike and slab prior to recover one core group of units. Alternative methods, such as binary segmentation (Wang et al., 2018) and assumptions, such as multiple latent groups structure (Cheng et al., 2019; Cytrynbaum, 2021) have also been explored to flourish group heterogeneity literature.

Our work concerns prior knowledge. Bonhomme and Manresa (2015)’s grouped fixed-effects (GFE) estimator is able to include prior knowledge, but it is plagued by practical issues to some extent. They add a collection of individual group probabilities as a penalty term in the objective function, which is a by matrix describing the probability of assigning each unit to all potential groups. This additional penalty term balances the respective weights attached to prior and data information in estimation. The main challenge is providing the set of individual group probabilities for each potential value of as the underlying KMeans algorithm requires model selection. It is rather cumbersome to assess these probabilities for each possible and to adjust for changes in reallocating probabilities across .

None but Aguilar and Boot (2022) explore heterogeneous error variance, and they extend BM’s GFE estimator to allow for group-specific error variances. They modify the objective function to avoid the singularity issue in pseudo-likelihood. Despite the fact that their work paves the way for identifying groups in the error variance, their framework is not yet ready to satisfactorily incorporate prior knowledge because they face the same issue as BM. Building on these works, we investigate the value of prior knowledge of group structure.

This paper also relates to the literature of constraint-based semi-supervised clustering in statistics and computer science. Pairwise constraints have been widely implemented in numerous models and have been shown to improve clustering performance. In the past two decades, various pairwise constrained KMeans algorithms using prior information have been suggested Wagstaff et al. (2001); Basu et al. (2002, 2004a); Bilenko et al. (2004); Davidson and Ravi (2005); Pelleg and Baras (2007); Yoder and Priebe (2017). Prior information is also introduced in the model-based method. Shental et al. (2003) develop a framework to incorporate prior information for the density estimation with Gaussian mixture models. The Dirichlet process mixture model with pairwise constraints has been discussed in Vlachos et al. (2008), Vlachos et al. (2009), Orbanz and Buhmann (2008), Vlachos et al. (2010), Ross and Dy (2013). Lu and Leen (2004), Lu (2007) and Lu and Leen (2007) assume the knowledge on constraints is incomplete and penalize the constraints in accordance with their weights. Law et al. (2004) extents Shental et al. (2003) to allow for soft constraints in the mixture model by adding another layer of latent variables for the group label. Nelson and Cohen (2007) propose a new framework that samples pairwise constraints given a set of probabilities related to the weights of constraints.

Our paper is closely related to Paganin et al. (2021), who address a similar problem using a novel Bayesian framework. Their proposed method shrinks the prior distribution of group partitioning toward a full target group structure, which is an initial clustering of all units provided by experts. This is demanding since not every application can have a full target group structure, as their birth defect epidemiology study did. Our framework circumvents this problem by using pairwise constraints, which are flexibly assigned to any two units. In addition, the induced shrinkage of their framework is produced by the distance function defined by Variation of Information (Meilă, 2007). It can be demonstrated that a partition can readily become caught in local modes, preventing it from ever shrinking toward the prior partition. The use of pairwise relationships in this paper circumvents this issue as well. By fixing the group indices of other pairs, our framework makes sure that the partition with a specific pair that fits our prior belief has a higher prior probability than the partition with a pair that goes against our prior belief.

Outline. In section 2, we present the specification of the dynamic panel data model with group pattern in slope coefficients and error variances and provide details on nonparametric Bayesian priors without prior knowledge, which are then extended to accommodate soft pairwise constraints. Section 3 focuses on the posterior analysis, where the posterior sampling algorithm is provided. We also highlight the posterior estimate of group structure and discuss the connection to constrained KMeans models. We briefly discuss the extensions of the baseline model in section 5. In section 4, we present empirical analysis in which we forecast the inflation rate of the U.S. CPI sub-indices and estimate the country’s income effect on its democracy. Finally, we conclude in section 6. Monte Carlo simulations, additional empirical results, and proofs are relegated to the appendix.

2 Model and Prior Specification

We begin our analysis by setting up a linear panel data model with group heterogeneity in intercepts, slope coefficients, and cross-sectional innovation variance. We then elaborate a nonparametric Bayesian prior for the unknown parameters that takes prior beliefs in the group pattern into account. We briefly highlight several key concepts of a standard nonparametric Bayesian prior as our proposed prior inherits some of its properties.

2.1 A Basic Linear Panel Data Model

We consider a panel with observations for cross-sectional units in periods . Given the panel data set , a basic linear panel data model with grouped heterogeneous slope coefficients and grouped heteroskedasticity takes the following form:

| (2.1) |

where are a vector of covariates, which may contain intercept, lagged , other informative covariates. denote the group-specific slope coefficients (including intercepts). are the group-specific variance. is the latent group index with an unknown number of groups . are the idiosyncratic errors that are independent across and conditional on . They feature zero mean and grouped heteroskedasticity , with cross-sectional homoskedasticity being a special case where . This setting leads to a heterogeneous panel with group pattern modeled through both and .

It is convenient to reformulate the model in (2.1) in matrix form by stacking all observations for unit :

| (2.2) |

where , , , and is a vector of group indices.

Group structure is the key element in our approach. It can be either represented as a vector of group indices describing to which group each unit belongs or as a collection of disjoint blocks induced by , where contains all the units in the -th group and is the number of groups in the sample of size . denotes the cardinality of the set with .

Remark 2.1.

Identification issues may arise with certain specifications. If the grouped fixed-effects in are allowed to vary over time, for example, cannot be identified when the group contains only one unit. Aguilar and Boot (2022) propose a solution, but this problem is beyond the scope of this work. They suggest using the square-root objective function rather than the pseudo-log-likelihood function as the objective function, which replaces the logarithm of with the square root of , to avoid the singularity problem.

Following Sun (2005), Lin and Ng (2012) and BM, we assume that the composition of groups does not change over time. In addition, for any group , we assume that they have different slope coefficients, e.g., , and no single unit can simultaneously belong to these two groups: . Note that these assumptions are used to simplified the prior construction and are not necessary to incorporate prior knowledge. As we show in Section 5, both assumptions can be relaxed by using slightly different priors.

The primary objective of this paper is to estimate the group-specific slope coefficients , group-specific variance , group membership as well as the unknown number of groups using full sample and prior knowledge of the group structure. Given estimates of group-specific coefficients, we are able to offer the point, set, and density forecasts of for each unit . Throughout this paper, we will concentrate on the one-step ahead forecast where . For multiple-step forecasting, the procedure can be extended by iterating in accordance with (2.1) given the estimates of parameters or estimating the model in the style of direct forecasting. The method proposed in this paper is applicable beyond forecasting. In certain applications, the heterogeneous parameters themselves are the objects of interest. For example, the technique developed here can be adapted to infer group-specific heterogeneous treatment effects.

2.2 Nonparametric Bayesian Prior with Knowledge on

We propose a nonparametric Bayesian prior for the unknown parameters with prior beliefs on the group pattern. Figure 1 provides a preview of the procedure for introducing prior knowledge into the model. We propose to use pairwise constraints to summarize researchers’ prior knowledge, with each constraint accompanied by a hyperparameter indicating the researchers’ levels of confidence in their choice. The is then incorporated directly in the prior distribution of the group partition , which is induced from a standard nonparametric Bayesian prior, yielding a new prior. We will elaborate the details throughout this subsection and highlight the clustering properties of the underlying nonparametric Bayesian priors in Section 2.3.

2.2.1 A New Prior with Soft Pairwise Constraints

The derivation of the proposed prior starts from summarizing prior knowledge in the form of pairwise constraints, which describe a bilateral relationship between any two units. Inspired by the literature on semi-supervised learning Wagstaff and Cardie (2000),333We essentially follow the same idea of the pairwise constraints in Wagstaff and Cardie (2000). To better demonstrate the beliefs on constraints, we use different names: positive-link and negative-link, rather than must-link and cannot-link. we consider two types of pairwise constraints: (1) positive-link (PL) constraints, , and (2) negative-link (NL) constraints, . A positive-link constraint specifies that two units are more likely to be assigned to the same group, whereas a negative-link constraint indicates that the units are prone to be assigned to different groups.

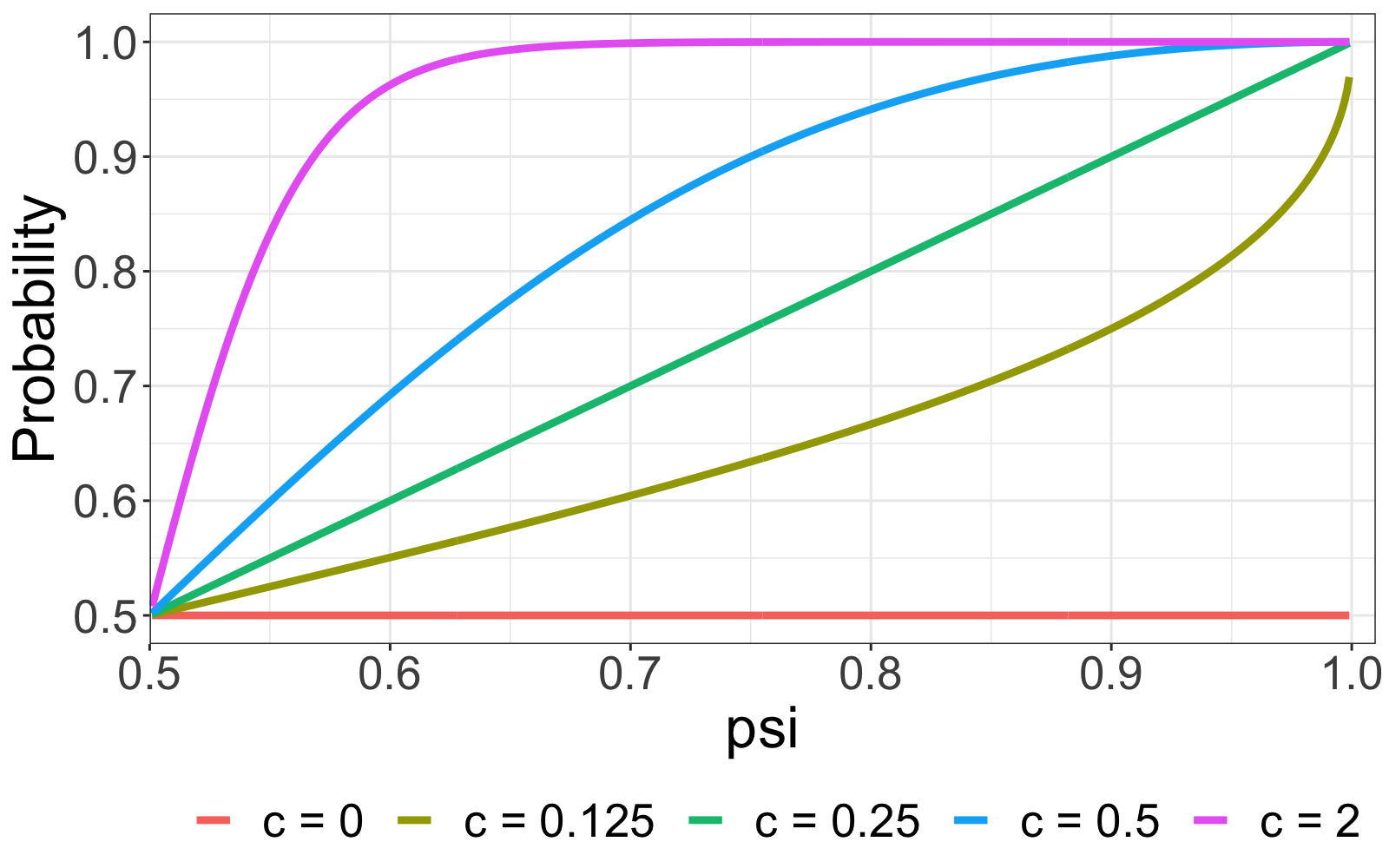

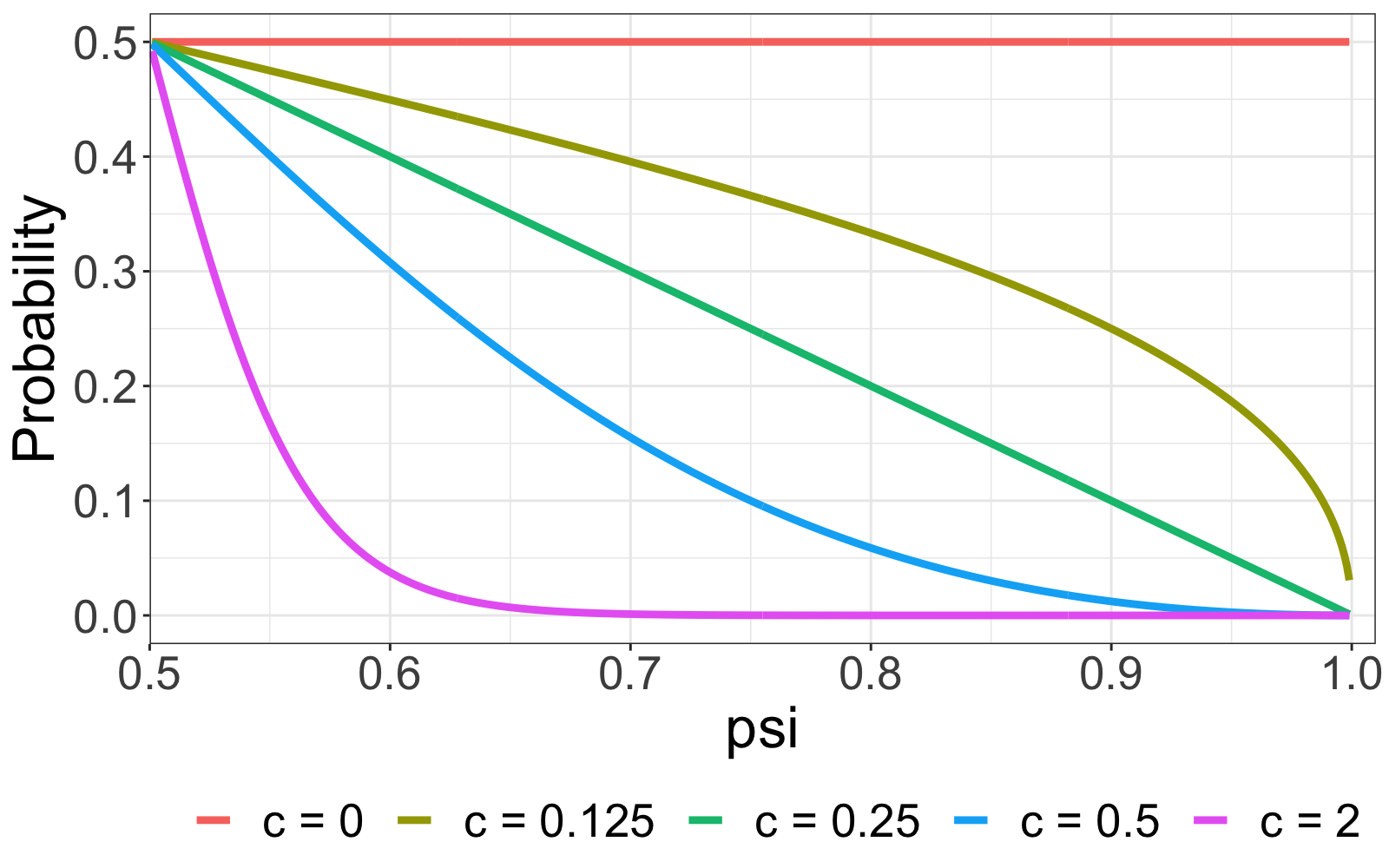

Instead of imposing these constraints dogmatically, the constraint between units and is given a hyperparameter which describes how confident the researchers are in their choice for different types of constraints. is continuously valued on the real line, as depicted in Figure 2. On the one hand, the sign of specifies the constraint type, with a positive (negative) value indicating a PL (NL) constraint between and . On the other hand, the absolute value of reflects the strength of the prior belief. We become increasingly confident in our prior belief on units and as . If , we essentially impose the constraint, which is known as a hard PL/NL constraint. Otherwise, it’s a soft PL/NL constraint with a nonzero and finite . if there is no prior belief in units and .

We assume the weight is a logit function of two user-defined hyperparameters444This parametric form is related to the penalized probabilistic clustering proposed by Lu and Leen (2004, 2007). See detailed discussion in Appendix D.2., accuracy and type :

| (2.3) |

Accuracy, , describes the user-specified probability of assigning a constraint for unit and being correct given our prior preference. Specifically, implies the constraint between and must be imposed since we confident that it is accurate, while specifying is equivalent to a random guess or no information is provided. is bounded below by 0.5, following the assumption that leaving the pair unrestricted is more rational than setting a less likely constraint. The type of constraints is denoted by . if unit and are specified to be positive-linked, and for a NL constraints. If the pair doesn’t involve any constraint, we assume .

To incorporate these constraints into the prior, we propose modifying the exchangeable partition probability function (EPPF) or the prior distribution of group indices, , of the baseline Dirichlet process, which we will highlight in the Section 2.3. The resulting group partition will receive a strictly higher (lower) probability if it is (in)consistent with pairwise constraints. As a result, the induced prior on the group indices directly depend on the characteristics of user-specific pairwise constraints and is able to increase or decrease the likelihood of a certain .

In the presence of soft constraints, we modify the EPPF by multiplying a function of characteristics of constraints,

| (2.4) |

where is the prior odds for the constraint between unit and , is a transformed Kronecker delta function such that

| (2.5) |

and is a positive number that controls the overall strength of prior belief. For , corresponds to the baseline EPPF , while for , , where satisfies all pairwise constraints.

Remark 2.2.

Due to the presence of pairwise constraints, the partition probability function presented in (2.4) no longer satisfies the exchangeable assumption as we now distinguish units within each group.

Remark 2.3.

The current framework enables us to impose some constraints. It is an extreme case of soft constraint and thus handy to implement, requiring only setting for the pair . Intuitively, any group partition violating the pairwise constraint between and (i.e., ) will have zero probability, since for such a partition,

and hence the constraint on is imposed and referred to as a hard constraint as opposed to soft constraint. By assigning proper for the pairs , we can flexibly combine soft and hard constraints inside a single specification.

Remark 2.4.

Soft pairwise constraints solve the transitivity issue that might be a problem for hard pairwise constraints. For instance, if we have and , we can still have in the framework of soft pairwise constraints since it preserves the possibility of violating any of these constraints. This is not the case in hard pairwise constraints, as and implies by transitivity.

With the definition of , we rewrite the partition probability function defined in (2.4) in terms of to ease notation,

| (2.6) |

where

| (2.7) |

is a normalization constant and we will use the prior hereinafter. In practice, we will first specify for the constraint between unit and and then construct the corresponding weight via the equation (2.3).

Remark 2.5.

In the particular case where we don’t have any constraint information, reduces to 1 as for all and , and recovers the original DP prior. Hence, our method can cater to all levels of supervision, ranging from hard constraints to a complete lack of constraints.

2.2.2 The Effect of Constraints and Scaling Constant on Group Partitioning

The function in Equation (2.4) is crucial in shifting the prior probability of . By design, when the constraint between and is met in a group partitioning defined by . The prior probability for is therefore increased since . Similarly, if a group partitioning violates the constraint between and , then and the prior probability for drops due to . Therefore, with , the resulting group partition is shrunk toward our prior knowledge without imposing any constraint.

To fix ideas, consider a simplified scenario with units where there are at most two groups. For illustrative purposes, we set the concentration parameter to so that 555Antoniak (1974) provides analytical formulas for probabilities of more general events with larger . In this example, = . if no constraint exists. When , listing all partitions is possible, , and we can calculate the probabilities for each using (2.6). As a result, we are able to derive the probability of units 1 and 2 belonging to the same or different groups, i.e., analytical formulae for and , which neatly demonstrate the effect of and on group partitioning.

It is straightforward to show as a function of , and :

| (2.8) |

Figure 3 traces out the equation (2.8) for a range of values. The left panel (a) displays the curve for a PL constraint. Firstly, observe that when , remains unchanged at regardless of the value of . This is the situation in which eliminates the constraint’s effect on the prior. Next, given a particular , increases in , which means that a stronger soft PL constraint between units 1 and 2 leads to higher chance of assigning both units to the same group. When is fixed, increasing easily results in a higher , indicating that a larger value magnifies the effect of the PL constraint. In contrast, panel (b) depicts the curve with a NL constraint. and clearly have the opposite effect on : drops significantly as or increases. Notably, even with a large , the soft constraint framework maintains the possibility of breaching the constraint, which is another important feature that preserves the chance of correctly assigning group indices even if the constraint is erroneous.

In the general case where numerous PL and NL constraints are enforced, concurrently affects all constraints. In other words, the value of determines the overall “strength” of the prior belief of . If the prior belief is coherent with the real group partition, it would be preferable to have a large to intensify the effect on constraints, allowing prior information to take precedence over data information, and vice versa.

Remark 2.6.

We propose to find the optimal that maximizes marginal data density using grid search, see details in Appendix 4.1.2. Alternatively, the scaling constant can be pair-specific and data-driven. Basu et al. (2004b), for instance, assume that is a function of observables, i.e., . is monotonically increasing (decreasing) in the distance between units and if they are involved in positive-link (negative-link) constraints. This reflects the belief that if two more distant units are assumed to be positive-linked (negative-linked), their constraint should be given more (less) weight.

2.2.3 Specification of Soft Pairwise Constraints

In reality, it is practical to establish soft pairwise constraints based on existing information on group, even if it is not the genuine group partitioning. In the empirical analysis, for instance, we use the official expenditure categories of CPI sub-indices to construct soft pairwise constraints. When information on group partitioning is insufficient, especially when the number of units is large, these official expenditure categories may serve as a trustworthy starting point. Before formalizing the idea, we first introduce the prior similarity matrix which is a symmetric matrix describing the prior probability of any two units belonging to the same group, i.e., conditional on all hyperparameters in the prior.

The general idea is to derive soft pairwise constraints using the existing information on a preliminary group partitioning , which is allowed to involve only a subset of units. We start with the type of constraints between any two units. Given the preliminary group structure, such as expenditure categories, we specify PL constraints for all pairs of units within the same group and NL constraints for all pairs of units from different groups. This means that we believe the preliminary group structure is correct a priori. Despite the fact that more elaborate and subtle constraints might be implemented, this rough specification is usually a great starting point.

The accuracy for constraints is then specified. When our prior knowledge is limited or the number of units is large, we cannot specify for all pairs with solid knowledge of them. Instead, one desirable yet simple choice is to assume again based on preliminary group partitioning . More specifically, all units in the same group are positive-linked with identical , i.e., for units and from the group , we have . Units from different groups are assumed to be negative-linked with identical , i.e., for units and from distinct groups, we assume and . Following this strategy, depends solely on and hence two units from the same group would have identical soft pairwise constraints with other units. Notice that the number of possible distinct reduces from to , where is the number of groups in and .

This framework permits no prior belief in certain units. If at least one unit in a pair is not included in , we assume that this pair of units is free of constraints and we set to 0 or to 0.5 in the prior. Note that the absence of a constraint does not ensure that the units and are completely unrelated. Instead, if both and are involved in constraints with a third unit , or are connected through a series of constraints, , then the prior probability of and belonging to the same group differs from the prior probability without any constraints. If we wish to prevent two units from linking a priori, they must not be subject to any constraints with the remaining units.

The aforementioned specification strategy induces a block prior similarity matrix, i.e., for an unit , if . Intuitively, if two units have identical soft pairwise constraints and hence posit an identical relationship with all other units, they are equivalent and exchangeable. As a result, these units should have an equal prior probability of sharing the same group index with any other units. More formally,

Theorem 1 (Stochastic Equivalence).

Given two units from the same prior group, if for all , then for all unit in the prior, given weights .

Theorem 1 echos the concept of stochastic equivalence (Nowicki and Snijders, 2001) in stochastic block model666For a more comprehensive review of the stochastic block model, see Lee and Wilkinson (2019). (SBM) (Holland et al., 1983). In less technical terms, for nodes and in the same group, has the same (and independent) probability of connecting with node , as does. Interestingly, this relationship is not coincidental. The prior draw of group membership with the aforementioned specification of and can be viewed as a simulation of a simple SBM. In a simple SBM, there are two essential components: a vector of group memberships and a block matrix, each element of which represents the edge probability of two nodes, given their group memberships. In our case, the preliminary group structure serves as the group membership in SBM. The DP prior and the weight (or and ) of each constraint induce a prior similarity probability comparable to the block matrix.

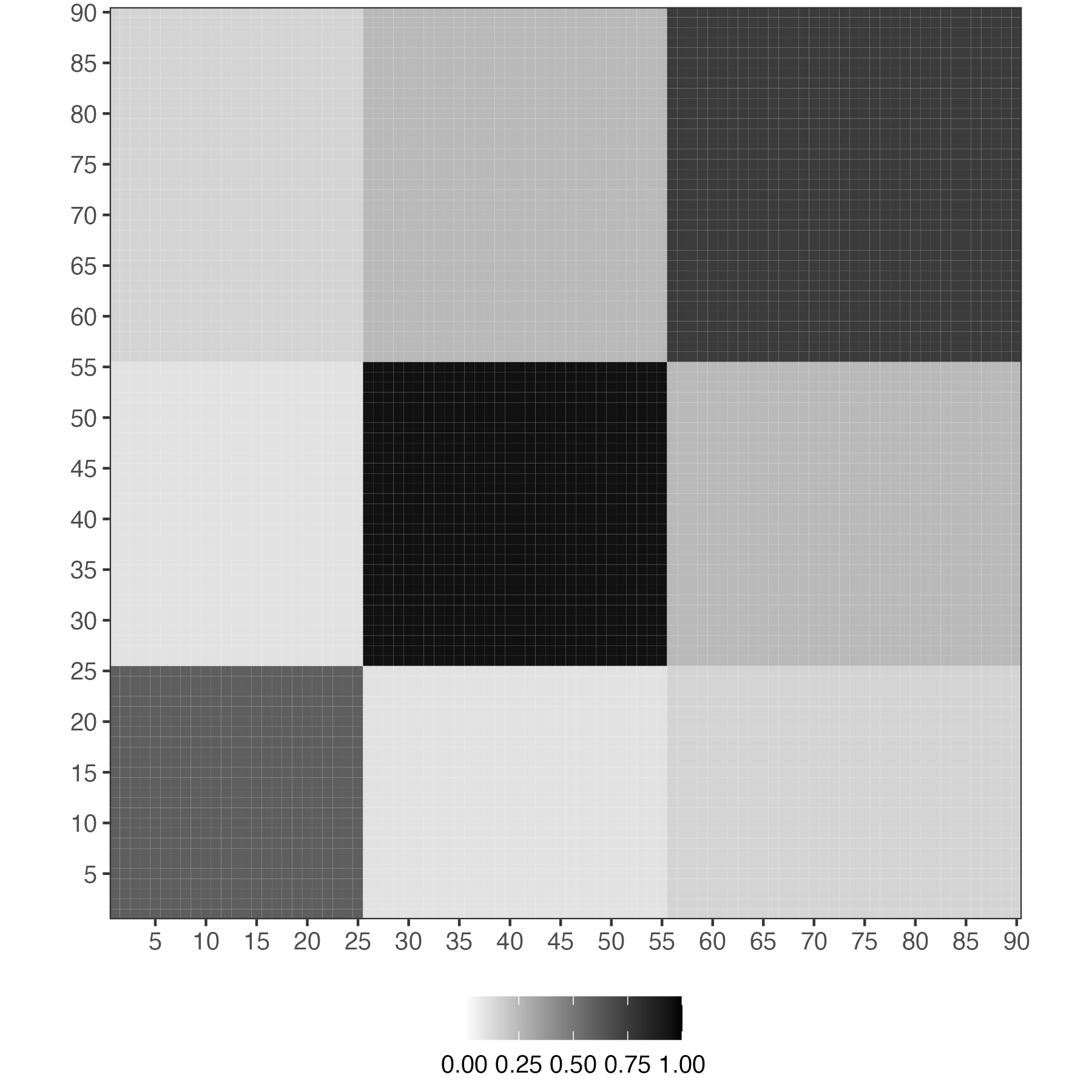

Let’s consider an example of 90 units. The preliminary group structure divides units into 3 groups, with groups 1, 2 and 3 containing 25, 30 and 35 units, respectively. Figure 4 shows the prior similarity matrix, which is based on the aforementioned specification strategy, so it becomes a block matrix with equal entries in each of nine blocks. Units within the same group are stochastically equivalent, as their prior probabilities of being grouped not only with each other but also with units from other groups are the same. As a result, the similarity probability of each pair depends solely on their preliminary membership (and ).

2.2.4 Comparison to Existing Methods

Bonhomme and Manresa (2015) incorporates prior knowledge of group membership by adding a penalty term to the objective function. They assume that prior information is in the form of probabilities which describe the prior probability of unit belonging to group with at most groups as . Consequently, the estimated group index is given by:

| (2.9) |

where is a hyperparameter need to be tuned further and is the predetermined number of groups.

The penalty determines the weights assigned to prior and data information in estimation. Due to the fact that is frequently unknown in advance, this method requires model selection to determine the ideal number of groups. Assume we have alternative options for . We have a matrix for prior information for a given . As a result, in order to pick a model, we must therefore provide sets of prior probability matrix , which is cumbersome and inconvenient. For instance, if has values ranging from and , there are 3,600 entries for , none of which can be missing or undefined. In addition, the information criteria may be unreliable in the finite-sample results, necessitating further care when selecting an appropriate variant for empirical application.

Summarizing prior knowledge through pairwise constraints is often more practical than the penalty function approach in BM and solves the aforementioned practical issues. Pairwise relationships can be derived intuitively from researchers’ input without requiring in-depth knowledge of the underlying groups; researchers do not need to fix the number of groups or group membership a priori. One only needs to focus on a pair of units each time and specify the preference of assigning them to the same or different groups. Moreover, since the pairwise constraints are incorporated into the DP prior, which implicitly defines a prior distribution on the group partitions, model selection is not required and the posterior analysis also takes the uncertainty of the latent group structure into account.

Paganin et al. (2021) offer a statistical framework for including concrete prior knowledge on the partition. Their proposed method aims to shrink the prior distribution towards a complete prior clustering structure, which is an initial clustering involving all units provided by experts. Specifically, they suggest a prior on group partition that is proportional to a baseline EPPF of the DP prior multiplied by a penalization term,

| (2.10) |

with is a penalization parameter, is a suitable distance measuring how far is from , and indicates the same EPPF as in (2.4). Because of the penalization term, the resulting group indices shrink toward the initial target .

This framework is parsimonious and easy to implement, but it comes with a cost. The method is incapable of coping with an initial clustering in a subset of the units under study or multiple plausible prior partitions; otherwise, the distance measure is not well-defined. In addition, the authors suggest utilizing Variation of Information (Meilă, 2007) as the distance measure. It can be shown that the resulting partition can easily become trapped in local modes, leading the partition to never shrink toward . They also argue that other available distance methods have flaws. As a result, the penalty term does not function as anticipated.

Our proposed framework with pairwise constraints is more flexible than adopting a target partition. Actually, the target partition in Paganin et al. (2021) can be viewed as a special case of the pairwise constants, in which every unit must be involved in at least one PL constraint. Our framework could manage partitions involving arbitrary subsets of the units by tactically specifying the bilateral relationships. Most importantly, when the group indices of other pairs are fixed, our framework ensures that the partition containing a specific pair that is consistent with our prior belief receives a strictly greater prior probability than the partition that is inconsistent with our prior belief. This guarantees that the generated shrinks in the direction of our prior belief.

2.3 Nonparametric Bayesian Prior

The baseline model contains the parameters listed below: . We rely mostly on nonparametric Bayesian models.777For a more comprehensive review of the nonparametric Bayesian literature, see Ghosal and Van der Vaart (2017) and Müeller et al. (2018). Bayesian nonparametric models have emerged as rigorous and principled paradigms to bypass the model selection problem in parametric models by introducing a nonparametric prior distribution on the unknown parameters. The prior assumes that a collection of and is drawn from the Dirichlet process prior.888There have been some empirical works that use Dirichlet process model with panel data. Dirichlet process mixture prior is specified for either the distribution of the innovations (Hirano, 2002) or intercepts (Fisher and Jensen, 2022). is a vector of mixture probabilities in Dirichlet process that is produced by the stick-breaking approach with stick length . is the concentration parameter in the Dirichlet process, whereas is a collection of hyperparameters in the base measure . We consider prior distributions in the partially separable form,999The joint prior includes but not . Because the stick-breaking formulation of is a deterministic transformation of , knowing is identical to knowing .

We tentatively focus on the random coefficients model where, conditional on , and are independent to the conditional set that includes initial value of each unit , the initial values of predetermined variables, and the whole history of exogenous variables. The assumption guarantees that and can be sampled separately and simplifies the inference of the underlying distribution of and to an unconditional density estimation problem, therefore lowering computational complexity. The joint distribution of heterogeneous parameters as a function of the conditioning variables can then be modeled to extend the model to the correlated random coefficient model, which is briefed in Section 2.3.3. A full explanation and derivation for the correlated random coefficient model are provided in the online appendix.

2.3.1 Prior on Group-Specific Parameters

In the nonparametric Bayesian literature, the Dirichlet Process (DP) prior Ferguson (1973, 1974); Sethuraman (1994) is a canonical choice, notable for its capacity to construct group structure and accommodate an infinite number of possible group components.101010See Appendix A.1 for a brief overview of the DP and Appendix A.1.3 for its clustering properties. The DP mixture is also known as a “infinite” mixture model due to the fact that the data indicate a finite number of components, but fresh data can uncover previously undetected components Neal (2000). When the model is estimated, it chooses automatically an appropriate subset of groups to characterize any finite data set. Therefore, there is no need to determine the “proper” number of groups.

The DP prior can be written as an infinite mixture of point mass with the probability mass function:

| (2.11) |

where denotes the Dirac-delta function concentrated at and is the base distribution. We adopt an Independent Normal Inverse-Gamma (INIG) distribution for the base distribution :

| (2.12) |

with a set of hyperparameters .

The group probabilities are constructed by an infinite-dimensional stick-breaking process Sethuraman (1994) governed by the concentration parameter ,

| (2.13) |

where stick lengths are independent random variables drawn from the beta distribution,111111Recall that a distribution is supported on the unit interval and has mean . . The group probability will be random but still satisfy almost surely.

Equation (2.13) is essential to understanding how the DP prior controls the number of groups. The building of group probabilities is compared to the breaking of a stick of unit length sequentially, in which the length of each break is assigned to the current value of . As the number of groups increases, the probability created by the stochastic process decreases because the remaining stick becomes shorter with each break. In practice, the number of groups does not increase as fast as due to the characteristic of the stick-breaking process that leads the group probability to soon approach zero.

Although in principle we do not restrict the maximum number of groups and allow the number to rise as increases, a finite number of instances will only occupy a finite number of components. The concentration parameter in the prior of determines the degree of discretization – the complexity of the mixture and, consequently, , as also revealed in (A.3). As , the realizations are all concentrated at a single value, however as , the realizations become continuous-valued as its based distribution. Specifically, Antoniak (1974) derives the relationship between and the number of unique groups,

that is, the expected number of unique groups is increasing in both and the number of units .

Escobar and West (1995) highlights the importance of specifying when imposing prior smoothness on an unknown density and demonstrates that the number of estimated groups under a DP prior is sensitive to . This suggests that a data-driven estimate of is more reasonable. Moreover, Ascolani et al. (2022) emphasizes the importance of introducing a prior for as it is crucial for learning the true number of groups as increases and hence establishing the posterior consistency. We define a gamma hyperprior for and update it based on the observed data in order to alter the smoothness level. This step generates a posterior estimate of , which indirectly determines the number of groups without reestimating the models with different group sizes. Essentially, this represents “automated” model selection.

Collectively, we specify a DP prior for . The DP prior is a mixture of an infinite number of possible point masses, which can be constructed through the stick-breaking process. The discretization of the underlying distribution is governed by the concentration parameter . With a hyerprior on , we permit the data to determine the number of groups present in the data, which can expand unboundedly along with the data.

2.3.2 Prior on Group Partitions

In a formal Bayesian formulation, a prior distribution is specified to partition with associated indices . Despite the fact that DP prior does not specify this prior distribution explicitly, we can characterize it using the exchangeable partition probability function (EPPF) (Pitman, 1995). As we briefly mentioned in the last subsection, the EPPF plays a significant role in connecting the prior belief on group structure to the DP prior, which is included as part of our proposed prior distribution in Equation (2.4).

The EPPF characterizes the distribution of a partition induced by . As the generic Dirichlet process assumes units are exchangeable, any permutation has no effect on the joint probability distribution of ; hence, the EPPF is determined entirely by the number of groups and the size of each group. Pitman (1995) demonstrates that the EPPF of the Dirichlet process has the closed form,

| (2.14) |

where is the concentration parameter and denotes the Gamma function. Note that the partition is conceived as a random object and hence the group number is not predetermined, but rather is a function of , .

Sethuraman (1994) and Pitman (1996) constructively show that group indices/partitions can be drawn from the EPPF for DP using the stick-breaking process defined in (2.13). As a result, the EPPF does not explicitly appear in the posterior analysis in the current setting so long as the priors for the stick lengths are included.

2.3.3 Correlated Random Coefficients Model

As suggested by Chamberlain (1980), allowing the individual effects to be correlated with the initial condition can eliminate the omitted variable bias. This subsection presents the first attempt to introduce dependence between grouped effects and covariates, under the presence of group structure in both heterogeneous slope coefficients and cross-sectional variance. The underlying theorems, such as posterior consistency, and the performance of the framework are left for future study.

We primarily follow the proposed framework in Liu (2022) and utilize Mixtures of Gaussian Linear Regressions (MGLRx) for the group-specific parameters. MGLRx prior is discussed in Pati et al. (2013) and can be viewed as a Dirichlet Process Mixture (DPM) prior that takes the dependence of covariates into account. Notice that the correlated random coefficients model requires a DPM-type prior for and . This is because and are assumed to be correlated with covariates of each individual, and as such, they are not identical within a group.

Following Liu (2022), we first transform and define , where is some small (large) positive number. This transformation simplifies the prior for , which is now dependent on covariates, and ensures that a similar prior structures can be applied to both and .

In the correlated random coefficients model, the DPM prior for or is an infinite mixture of Normal densities with the probability density function:

| (2.15) | |||

| (2.16) |

where is the conditioning set at period 0, which includes initial value of each unit , the initial values of predetermined variables, and the whole history of exogenous variables. Notice that and share the same set of group probabilities .

Similar but not identical to the DP prior, it is the component parameters or that are directly drawn from the base distribution . is assumed to be a conjugate Matricvariate-Normal-Inverse-Wishart distribution.

The group probabilities are now characterized by a probit stick-breaking process (Rodriguez and Dunson, 2011),

| (2.17) |

where the stochastic function is drawn from a Gaussian process, for . The Gaussian process is assumed to have zero mean and the covariance function . defined as follows,

| (2.18) |

where and has its own hyperprior, see details in Pati et al. (2013).

3 Posterior Analysis

This section describes the procedure for analyzing posterior distributions for the baseline model described in (2.1) with the priors specified in Section 2.2.1. The joint posterior distribution of model parameters is

| (3.1) |

where is the likelihood function given by equation (2.1) for an i.i.d. model conditional on group indices , and is the additional term of pairwise constraints with the form .

3.1 Posterior Sampling

Draws from the joint posterior distribution can be obtained by using blocked Gibbs sampling. The algorithm is derived from Ishwaran and James (2001) and Walker (2007). Due to the use of a finite-dimensional prior and truncation, the method described in Ishwaran and James (2001) cannot truly address our demand for estimating the number of groups without a predetermined value or upper bound. We employ the slice sampler (Walker, 2007), which is the exact block Gibbs sampler for the posterior computation in infinite-dimensional Dirichlet process models, modifying the block Gibbs sampler of Ishwaran and James (2001) to avoid truncation approximations. Walker (2007) augments the posterior distribution with a set of auxiliary variables consisting of i.i.d. standard uniform random variables, i.e., for . The augmented posterior is then represented as

| (3.2) |

where is substituted for in the equation (3).

To roughly see how slice sampling works, recall that the group probabilities are constructed in a sequential manner, following a stick-breaking procedure. The leftover of the stick after each break gets smaller and smaller. Given the finite number of units, we can always find the smallest such that for all groups , the minimum of among all units is larger than , which is bounded above by the length of the leftover after breaks, . More formally,

| (3.3) |

As a result, all units receive strictly zero probability of being assigned to any group since the indicator function is zero.

There are two advantages to incorporating the auxiliary variable into the model. First and foremost, directly determines the largest possible number of groups in each sampling iteration. This reduces the support of and to a finite space, enabling us to solve a problem of finite dimensions without truncation. Furthermore, have no effect on the joint posterior of other parameters because the original posterior can be restored by integrating out for .

The Gibbs sampler are used to simulate the joint posterior distribution of . We break this vector into blocks and sequentially sampling for each block conditional on the current draws for the other parameters and the data. The full conditional distributions for each block are easily derived using the conjugate priors specified in Section 2.

For the group-specific parameters, we directly draw samples from their posterior densities as we adapt conjugate priors. The posterior inference with respect to becomes standard once we condition on the latent group indices . It is essentially a Bayesian panel data regression for each group. The conditional posterior for the stick length is a beta distribution given , and hence direct sampling is possible.

We follow Walker (2007) to derive the posterior of auxiliary variable . As are standard uniformly distributed, the posterior is a uniform distribution defined on , conditional on the group probabilities and group indices. In terms of the concentration parameter , we use a 2-step procedure proposed by Escobar and West (1995). Following their approach, we first draw a latent variable from . Then, given and number of groups in the current iteration, we directly draw from a mixture of two Gamma distribution.

It is worth noting that the steps for implementing the DP prior with or without soft pairwise constraints are the same for all parameter besides the group indices . This is due to the fact that soft pairwise constraints only affect other parameters through the group indices. It is handy to sample group indices with soft pairwise constraints conditional on other parameters. The posterior probability of assigning unit to group includes additional term to rewards (penalizes) the abidance (violation) of constraints,

| (3.4) |

where is the maximal number of groups after generating potential new group-specific slope coefficients and variance. We then draw the group index for unit from a multinomial distribution:

| (3.5) |

Algorithm 1 below summarizes the algorithm for the proposed Gibbs sampling. For illustrative purposes, we focus primarily on the posterior densities of major parameters and omit details on step (vii). In short, step (vii) creates potential groups by sampling new from the prior if the latest based on newly drawn and is larger than previous , which indicate the current iteration permits more groups. This Detailed derivations and explanation of each step are provided in Appendix C.2.

Algorithm 1.

(Gibbs Sampler for Random Coefficients Model with Soft Pairwise Constraints)

For each iteration ,

-

(i)

Calculate number of active groups:

-

(ii)

Group-specific slope coefficients: draw from for .

-

(iii)

Group-specific variance: draw from for .

-

(iv)

Group “stick length”: draw from for and update group probability in accordance to the stick-breaking procedure.

-

(v)

Auxiliary variable: draw from for and calculate .

-

(vi)

DP concentration parameter: draw a latent variable from and draw from .

-

(vii)

Generate potential groups based on and find the maximal number of groups .

-

(xi)

Group indices: draw from for and .

3.2 Determining Partition

In contrast to popular algorithms such as agglomerative hierarchical clustering or the KMeans algorithm, which return a single clustering solution, Bayesian nonparametric models provide a posterior over the entire space of partitions, enabling the assessment of statistical properties, such as the uncertainty on the number of groups.

However, when the group structure is part of the major conclusion of an empirical analysis, the point estimate of group structure becomes crucial. Wade and Ghahramani (2018) discuss in detail an appropriate point estimate of the group partitioning based on the posterior draws. From the decision theory, the point estimate minimizes the posterior expected loss,

where the loss function is the variation of information by Meilă (2007), which measures the amount of information lost and gained in changing from partition to .121212Another possible loss function is the 0-1 loss function , which leads to being the posterior mode. This loss function is undesirable since it ignores similarity between two partitions. For instance, a partition that deviates from the truth in the allocation of only one unit is penalized the same as a partition that deviates from the truth in the allocation of numerous units. Furthermore, it is generally recognized that the mode may not accurately reflect the distribution’s center. The Variation of Information is based on the Shannon entropy , and can be computed as

where denotes base 2, is the cardinality of the group , and the size of blocks of the intersection and hence the number of indices in block under partition and block under .

3.3 Connection to Constrained KMeans Algorithm

The procedure of Gibbs sampling with soft constraints in Algorithm 1 is closely related to constrained clustering in the computer science literature. In this parallel literature, constrained clustering refers to the process of introducing prior knowledge to guide a clustering algorithm. For a subset of the data, the prior knowledge takes the form of constraints that supplement the information derived from the data via a distance metric. As we shall see below, under several simplifying assumptions, our framework could be reduced to a deterministic method for estimating group heterogeneity using a constrained KMeans algorithm. Though this deterministic method may address the practical issues in BM, it only works for certain restricted models and hence is not as general as our proposed framework.

We start with a brief review of the Pairwise Constrained KMeans (PC-KMeans) clustering algorithm by Basu et al. (2004a), which is a well-known clustering algorithm in the field of semi-supervised machine learning. It’s a pairwise constrained variant of the standard KMeans algorithm in which an augmented objective function is used in the assignment step. Given a collection of observations , a set of positive-link constraints , a set of negative-link constraints , the cost of violating constraints and the number of groups , the PC-KMeans algorithm divides observations into groups (the assignment step) so as to minimize the following objective function,

| (3.7) |

where is the centroid of group , i.e., , is the set of units assigned to group , and is the size of group . The first part is the objective function for the conventional KMeans algorithm, while the second part accounts for the incurred cost of violating either PL constraints () or NL constraints ().

Similar to KMeans, PC-KMeans alternates between reassigning units to groups and recomputing the means. In the assignment step, it determines a disjoint partitioning that minimizes (3.7). Then the update step of the algorithm recalculates centroids of observations assigned to each cluster and updates for all .

By applying asymptotics to the variance of distributions within the model, we demonstrate linkages between the posterior sampler of our constrained BGFE estimator and KMeans-type algorithms in Theorem 2. We investigate small-variance asymptotics for posterior densities, motivated by the asymptotic connection between the Gibbs sampling algorithm for the Dirichlet process mixture model and KMeans Kulis and Jordan (2011), and demonstrate that the Gibbs sampling algorithm for the CBG estimator with soft constraints encompasses the constrained clustering algorithm PC-KMean in the limit.

Theorem 2.

(Equivalency between BGFE with Soft Constraints and PC-KMeans)

If the following conditions hold,

-

(i)

group pattern is in fixed-effects but not in slope coefficients, i.e., . Other covariates might be introduced, but they cannot have grouped effects on ;

-

(ii)

The number of group is fixed at ;

-

(iii)

Homoscedasticity: for all ;

-

(iv)

Constraint weights is scaled by the variance of errors: ;

then the proposed Gibbs sampling algorithm for the BGFE estimator with soft constraint embodies the PC-KMeans clustering algorithm in the limit as . In particular, the posterior draw of group indices is the solution to the PC-KMeans algorithm.

We return to the world of grouped fixed-effects models. In fact, the clustering algorithm is essential for BM and Bonhomme et al. (2022), who use the KMeans algorithm to reveal the group pattern in the fixed-effects. With the theorem described above, it motivates a constrained version of BM’s GFE estimator. We show that it is straightforward to incorporate prior knowledge in the form of soft paired restrictions into the GFE estimator. The soft pairwise constrained grouped fixed-effects (SPC-GFE) estimator is defined as the solution to the following minimization problem given the number of groups :

| (3.8) |

where the minimum is taken over all possible partitions of the units into groups, common parameters , and group-specific time effects . and are the user-specified costs on PL and NL constraints.

For given values of and , the optimal group assignment for each individual unit is

| (3.9) |

where we essentially apply the PC-KMeans algorithm to get the group partition. The SPC-GFE estimator of in (3.8) can then be written as

| (3.10) |

where is given by (3.9). and are computed using an OLS regression that controls for interactions of group indices and time dummies. The SPC-GFE estimate of is then simply .

Remark 3.1.

While the SPC-GFE estimator implements soft constraints, it still requires a predetermined number of group and model selection.

4 Empirical Applications

We apply our panel forecasting methods to the following two empirical applications: inflation of the U.S. CPI sub-indices and the income effect on democracy. The first application focuses mostly on predictive performance, whereas the second application focuses primarily on parameter estimation and group structure.

4.1 Model Estimation and Measures of Forecasting Performance

To accommodate richer assumptions on model, we use variants of the baseline model in Equation (2.1) in this section, either by adding common regressors or allowing for time-variation in the intercept. We use the conjugate prior for all parameters, see details in Appendix B.

4.1.1 Specification of Constraints

In both applications, the prior knowledge of the latent group structure or the pre-grouping structure covers all units. In the first application, CPI sub-indices can be clustered by expenditure category, whereas countries in the second application may be grouped according to their geographic regions. We build positive-link and negative-link constraints given the prior knowledge: all units within the same group are presumed to be positive-linked, while units from different categories are believed to be negative-linked. In terms of the accuracy of constraints, and are equal for all constraints with the same type, following the strategy described in Section 2.2.3. In the applications below, we fix and to reflect the belief that PL constraints (attracting forces) play slightly more important role than the NL constraints (repelling forces) and NL constraints cannot be ignored. Finally, we construct weights using (2.3). Notice that these assumptions on prior and hyperparameters are an example to showcase how the proposed framework works with real data. In practice, we may specify constraints for a subset of units with different levels of weights, either in a data-driven manner (for instance, highly correlated units may fall into the same group with a high level of confidence) or in a model-based manner (i.e., is a function of covariates).

4.1.2 Determining the Scaling Constant

Given that the dimension of the space of group partitions increases exponentially with the number of units , attention must be given while selecting across analyses with different . As suggested by Paganin et al. (2021), calibrating the modified prior is computationally intensive. We are facing a trade-off between investing time to get the prior “exactly right” and letting the constant be an estimated model parameter. As such, we propose to find the optimal that maximizes marginal data density using grid search.

In the Monte Carlo simulation, the value of is fixed for simplicity, but in the empirical applications, is determined by marginal data density (MDD). We calculate MDD using the harmonic mean estimator (Newton and Raftery, 1994), which defined as

| (4.1) |

given a sample from the posterior . The simplicity of the harmonic mean estimator is its main advantage over other more specialized techniques. It uses only within-model posterior samples and likelihood evaluations, which are often available anyway as part of posterior sampling. We finally choose the optimal value for that maximizes MDD.

4.1.3 Estimators

We consider six estimators in the section. The first three estimators are our proposed Bayesian grouped fixed-effects (BGFE) estimator with different assumptions on cross-sectional variance and pairwise constraints. The last three estimator ignore the group structure.

-

(i)

BGFE-he-cstr: group-specific slope coefficients and heteroskedasticity with constraints.

-

(ii)

BGFE-he: group-specific slope coefficients and heteroskedasticity without constraints.

-

(iii)

BGFE-ho: homoskedastic version of BGFE-he.

-

(iv)

Pooled OLS: fully homogeneous estimator

-

(v)

AR-he: flat-prior estimator that assumes corresponds to standard AR model with additional regressor in this environment.

-

(vi)

AR-he-PC: AR-he with the lagged value of the first principal component as additional regressor.

In the first application, we focus on inflation forecasting. For the most recent advances in this topic, Faust and Wright (2013) provide a comprehensive overview of a large set of traditional and recently developed forecasting methods. Among many candidate methods, we choose the AR model as the benchmark and exclusively include it as an alternative estimator in this exercise. This is because the AR is relatively hard to beat and, notably, other popular methods, such as the Atkeson–Ohanian version random walk model (Atkeson et al., 2001), UCSV (Stock and Watson, 2007), and TVP-VAR (Primiceri, 2005), generally do as reasonably well as the AR model, according to Faust and Wright (2013).

4.1.4 Posterior Predictive Densities

We generate one-step ahead forecasts of for conditional on the history of observations

and newly available variables at .

The posterior predictive distribution for unit is given by

| (4.2) |

where is a vector of parameters . This density is the posterior expectation of the following function:

| (4.3) |

which is invariant to relabeling the components of the mixture and is the number of groups in . Given posterior draws, the posterior predictive distribution estimated from the MCMC draws is

| (4.4) |

where

| (4.5) |

We can therefore draw samples from by simulating (2.1) forward conditional on the posterior draws of and observations. Note that MCMC exhibits the true Bayesian predictive distribution, implicitly integrating over the entire underlying parameter space.

4.1.5 Point Forecasts

We evaluate the point forecasts via the real-time recursive out-of-sample Root Mean Squared Forecast Error (RMSFE) under the quadratic compound loss function averaged across units. Let represent the predicted value conditional on the observed data up to period , the loss function is written as

| (4.6) |

where is the realization at and denote the forecast error.

The optimal posterior forecast under quadratic loss function is obtain by minimizing the posterior risk,

| (4.7) |

This implies optimal posterior forecast is the posterior mean,

| (4.8) |

Conditional on posterior draws of parameters, the mean forecast can be approximated by the Monte Carlo averaging,

| (4.9) |

Finally, the RMSFE across units is given by

| (4.10) |

4.1.6 Density Forecasts

To compare the performance of density forecasts for various estimators, we report the average log predictive scores (LPS) to assess the performance of the density forecast from the view of the probability distribution function. As suggested in Geweke and Amisano (2010), the LPS for a panel reads as,

| (4.11) |

where the expectation can be approximated using posterior draws:

| (4.12) |

4.2 Inflation of the U.S. CPI Sub-Indices

Policymakers and market participants are very interested in the abilities to reliably predict the future disaggregated inflation rate. Central banks predict future inflation trends to justify interest rate decisions, control and maintain inflation around their targets. The Federal Reserve Board forecasts disaggregated price categories for short-term inflation forecasting (Bernanke, 2007). They rely primarily on the bottom-up approach that focuses on estimating and forecasting price behavior for the various categories of goods and services that make up the aggregate price index. Moreover, investors in fixed-income markets in the private sector wish to forecast future sectoral inflation in order to anticipate future trends in discounted real returns. Some private firms also need to predict specific inflation components in order to forecast price dynamics and reduce risks accordingly.

In this section, we demonstrate the use of constrained BGFE estimators with prior knowledge on the group pattern to forecast inflation rates for the sub-indices of U.S. Consumer Expenditure Index (CPI). We focus primarily on the one-step ahead point and density forecast. Due to space constraints, we only report the group partitioning for the most recent month in the main text.

4.2.1 Model Specification and Data

Model: We start by exploring the out-of-sample forecast performance of a simple, generic Phillips curve model. It is a panel autoregressive distributed lag (ADL) model with a group pattern in the intercept, coefficients, as well as error variance. The model is given by

| (4.13) |

where is year-over-year inflation rate, i.e., , and is the slack measure for the labor market, the unemployment gap. We fix at 3 because the benchmark AR model would have the best predictive performance.

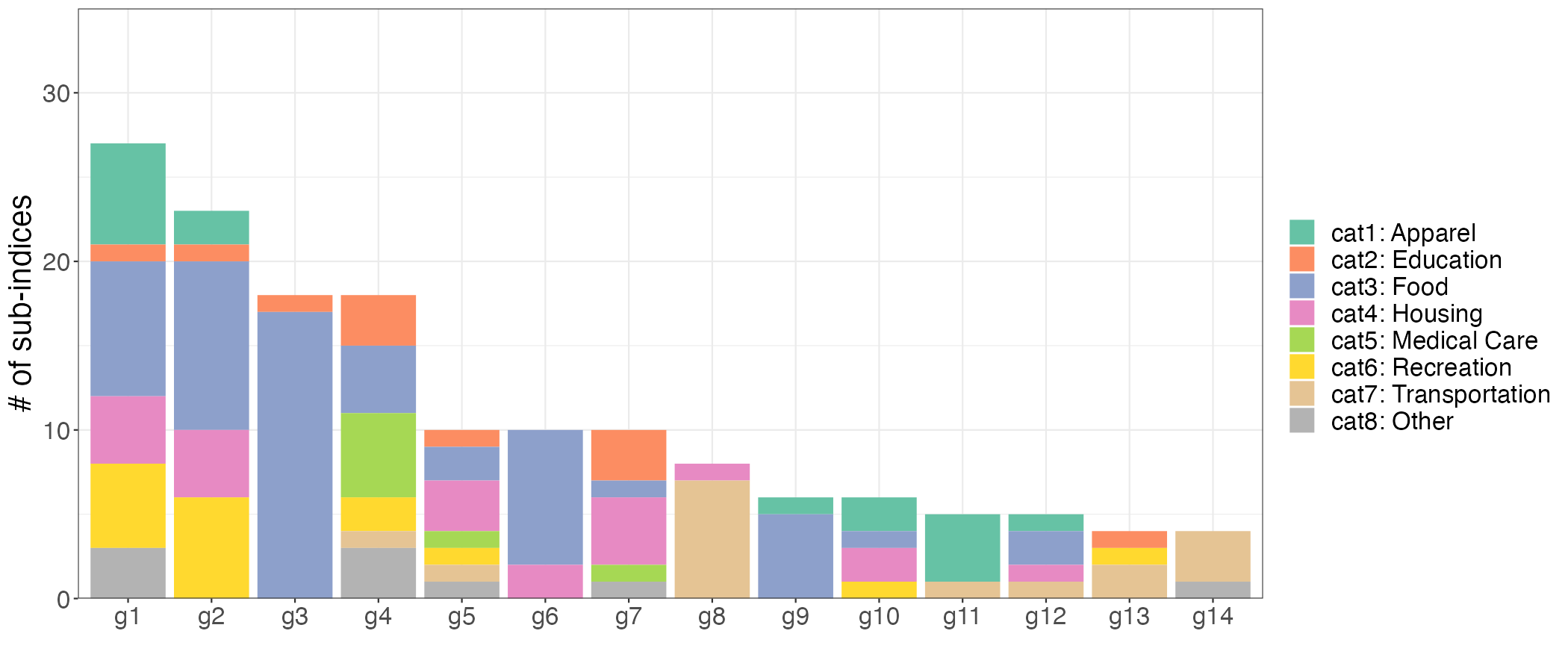

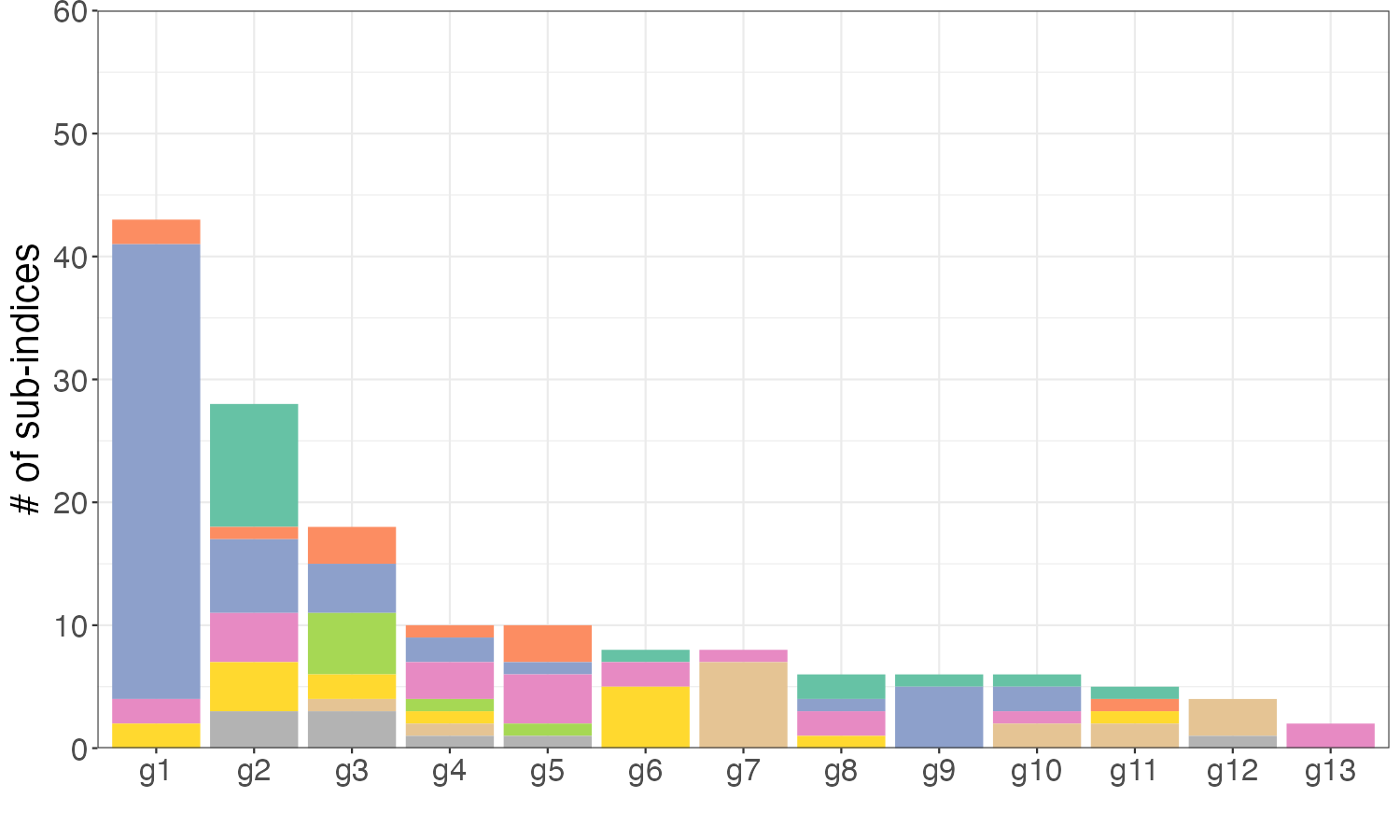

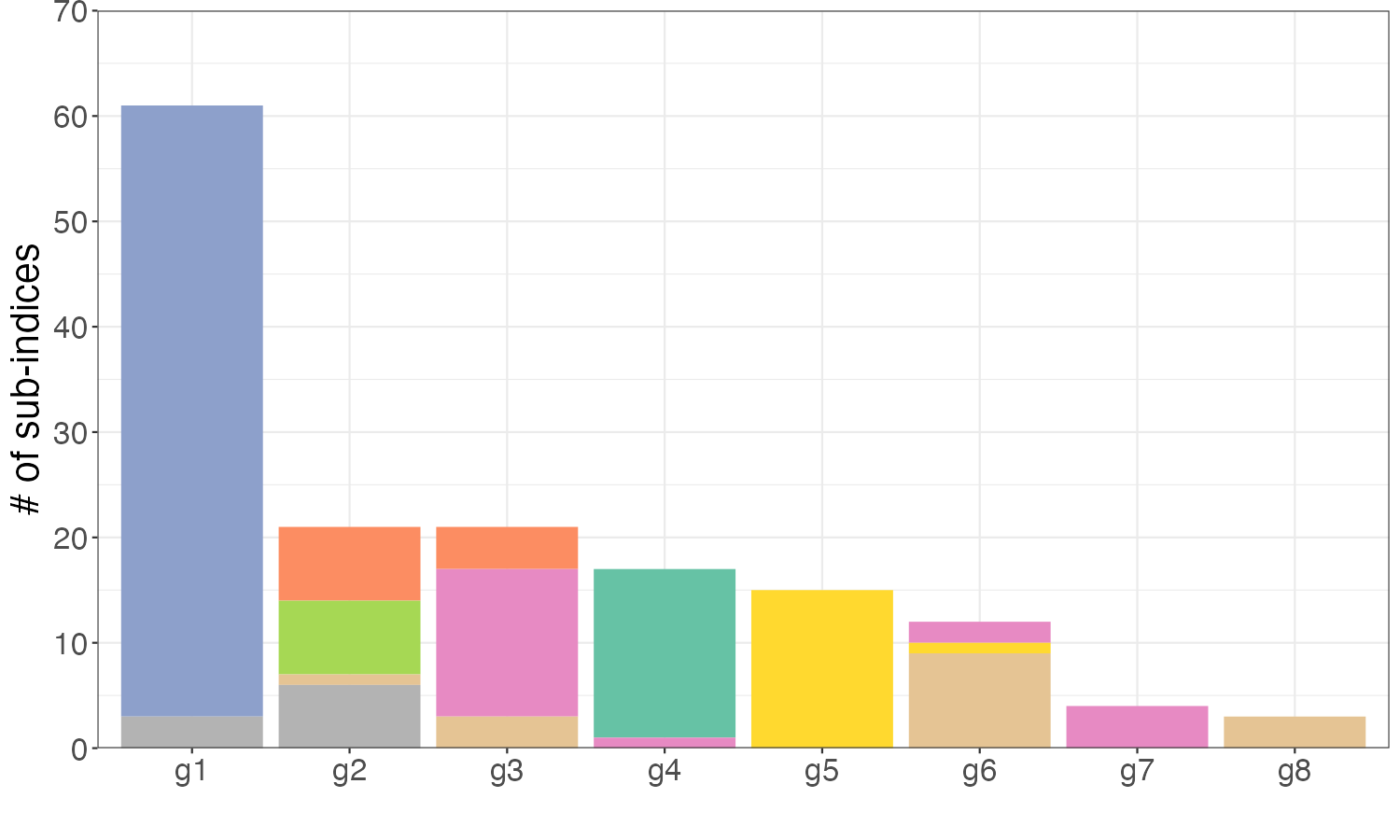

Data: We collect the sub-indices of CPI for all urban consumer (CPI-U) that include food and energy. The raw data is obtained from the U.S. Bureau of Labor Statistics (BLS), which is recorded on a monthly basis from January 1947 to August 2022. The CPI-U is a hierarchical composite index system that partitions all consumer goods and services into a hierarchy of increasingly detailed categories. It consists of eight major expenditure categories (1) Apparel; (2) Education and Communication; (3) Food and Beverages; (4) Housing; (5) Medical Care; (6) Recreation; (7) Transportation; (8) Other Goods and Services. Each category is composed of finer and finer sub-indexes until the most detailed levels or “leaves” are reached. This hierarchical structure can be represented as a tree structure, as shown in Figure 5. It is important to note that the parent series and its child series may be highly correlated and readily form a group due to the fact that parent series are generated from child series. For instance, the Energy Services is expected to correlated with its child series Utility gas service and Electricity. Due to our focus on group structure, it is vital to eliminate all parent series in order to prevent not just double-counting but also dubious grouping results. More details regarding the data are provided in Appendix F.1.

forked edges, for tree= grow = east, align = left, font=, [All Items [Housing [Shelter [Rent of primary residence] [Owners’ equivalent rent of residences] ] [Fuels and utilities [Water sewer and trash] [Household energy [Fuel oil and other fuels [Fuel oil] [Propane kerosene and firewood]] [Energy services [Electricity] [Utility gas service]]] ] ] [Transportation […] ] [Food and beverages […] ] ]

Pre-grouping: The official expenditure categories are used to build PL and NL constraints: all units within the same categories are presumed to be positive-linked, while units from different categories are believed to be negative-linked.

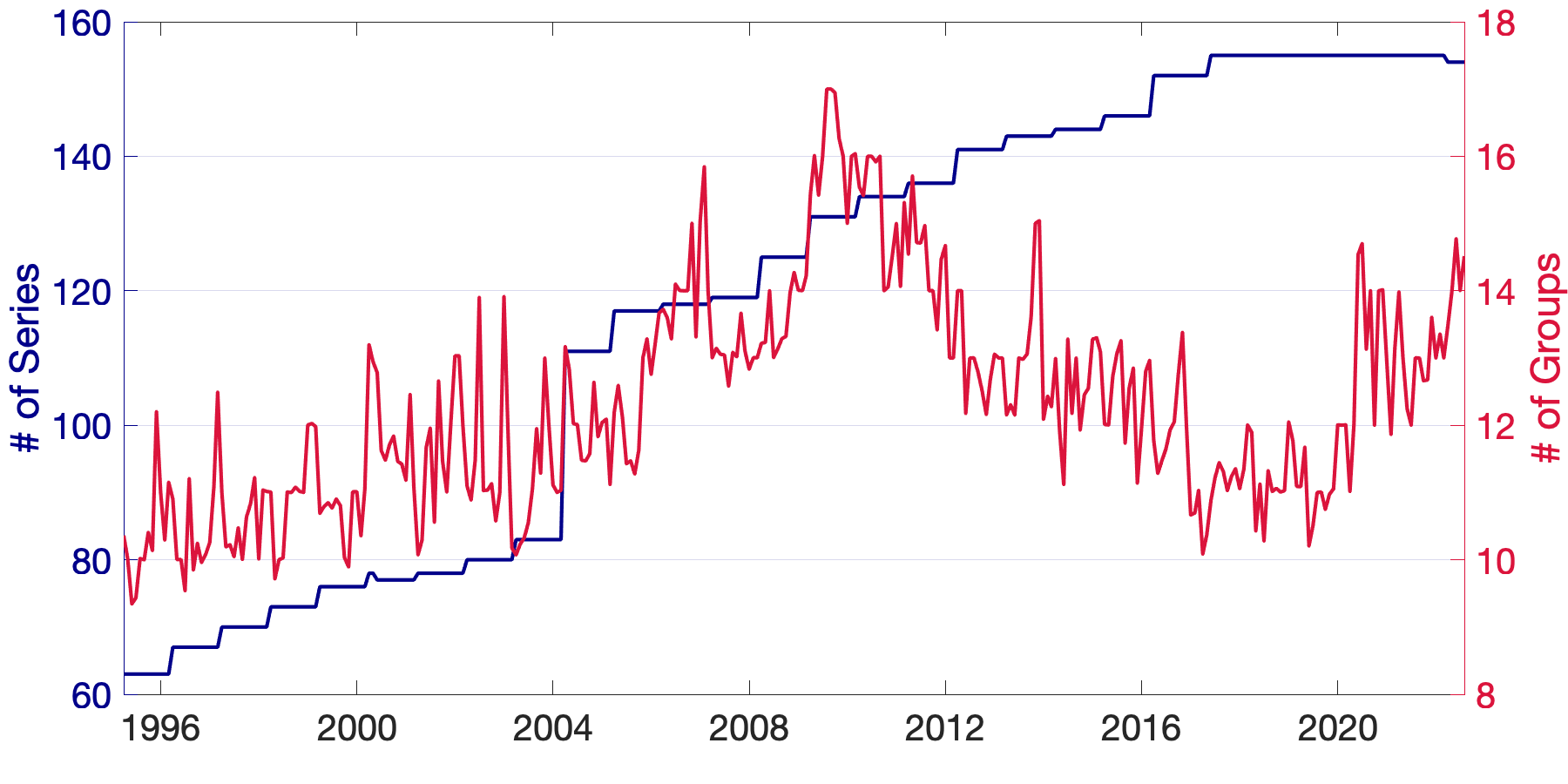

We focus on the CPI sub-indices after January 1990 for two reasons: (1) the number of sub-indices before 1990 was relatively small, diminishing the importance of the group structure; and (2) the consumption has been changed and more expenditure series were introduced in the 1990s as a result of the popularity of electronic products, food care, etc. After the elimination of all parent nodes, the unbalanced panel consists of 156 sub-indices in eight major expenditure categories. We employ rolling estimation windows of 48 months131313The benchmark AR-he model scores the best overall performance with a window size of 48. and require each estimation sample to be balanced, removing individual series lacking a complete set of observations in a given window. Finally, we generate 329 samples with the first forecast produced for April 1995.

4.2.2 Results

We begin the empirical analysis by comparing the performance of point and density forecasts across 329 samples. Throughout the analysis, the AR-he estimator serves as the benchmark as it essentially assumes individual effects.

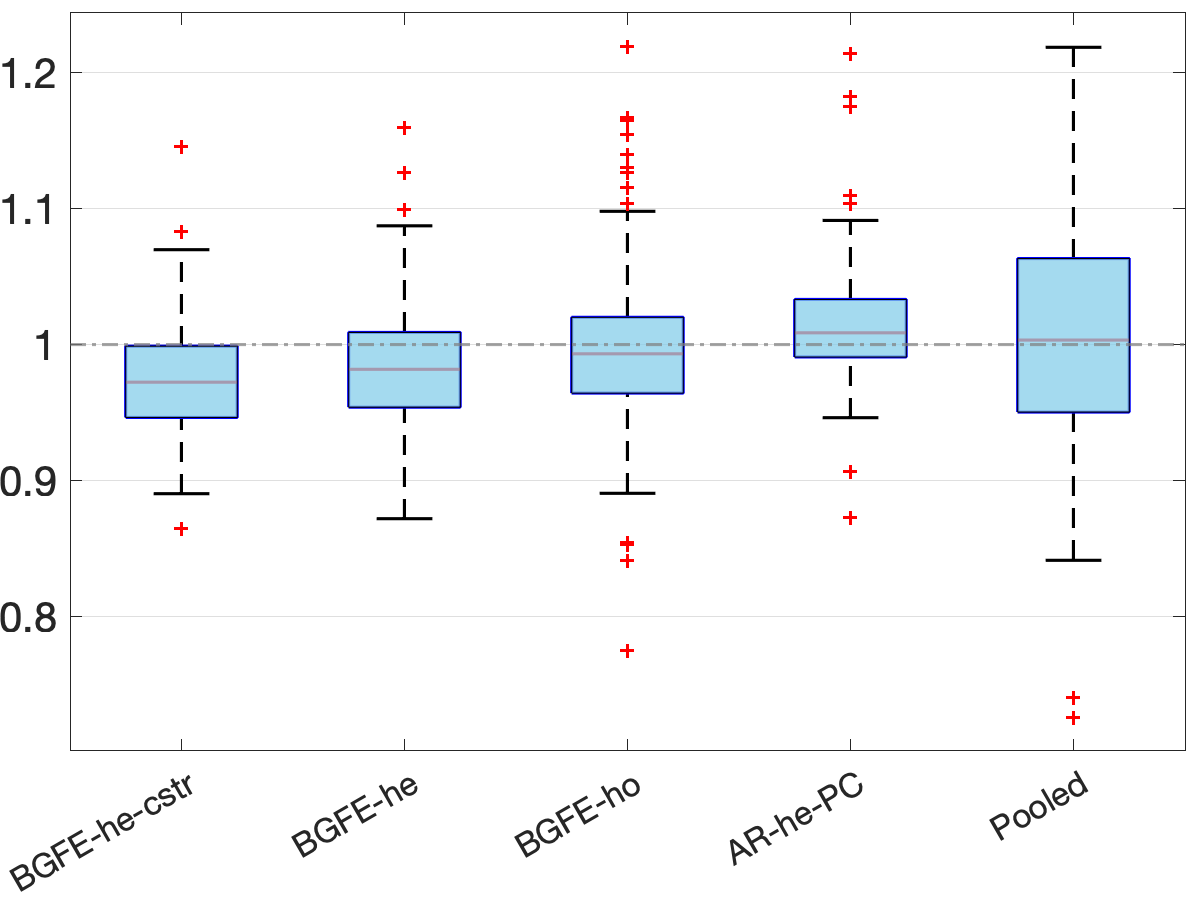

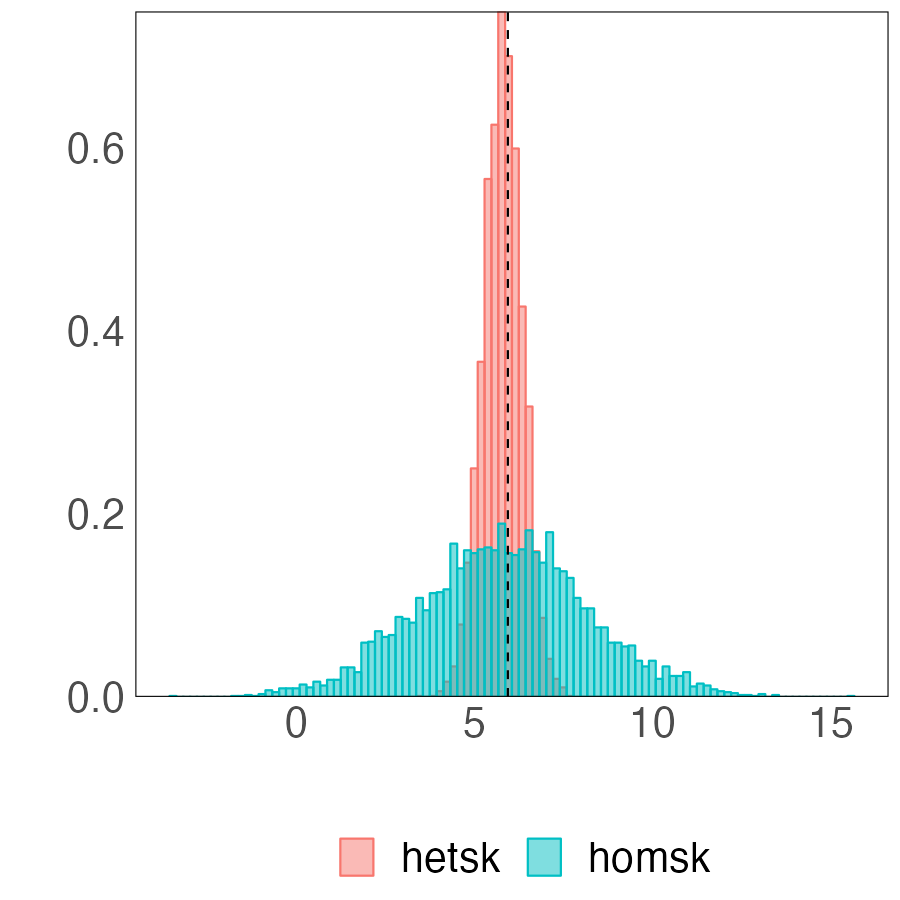

In Figure 6, we present the frequency of each estimator with the lowest RMSFE in the panel (a) and the boxplot141414The boundaries of the whiskers is based on the 1.5 IQR value. All other points outside the boundary of the whiskers are plotted as outliers in red crosses. of the ratio of RMSFE relative to the AR-he estimator in the panel (b). First, the AR-he and AR-he-PC estimators, which rely only on an individual’s own past data, are not competitive in point forecasts and perform considerably worse than the others. This implies that it is highly advantageous to explore cross-sectional information to improve point forecasts. Moreover, the BGRE-he-cstr estimator scores the highest frequency of being the best estimator despite the fact that BGFE-he-cstr, BGFE-he, BGFE-ho, and pooled OLS estimators all utilize cross-sectional information. Examining the box plot, we find that the BGFE-ho and pooled OLS estimators, which overlook heteroskedasticity, can achieve greater performance in some samples, but make poorer forecasts more often than the other estimators. BGFE-he-cstr and BGFE-he estimator, on the other hand, typically outperform the others and the benchmark in terms of median RMSFE and the ability to produce forecasts with the lowest RMSFE without also increasing the risk of generating the least accurate forecasts.

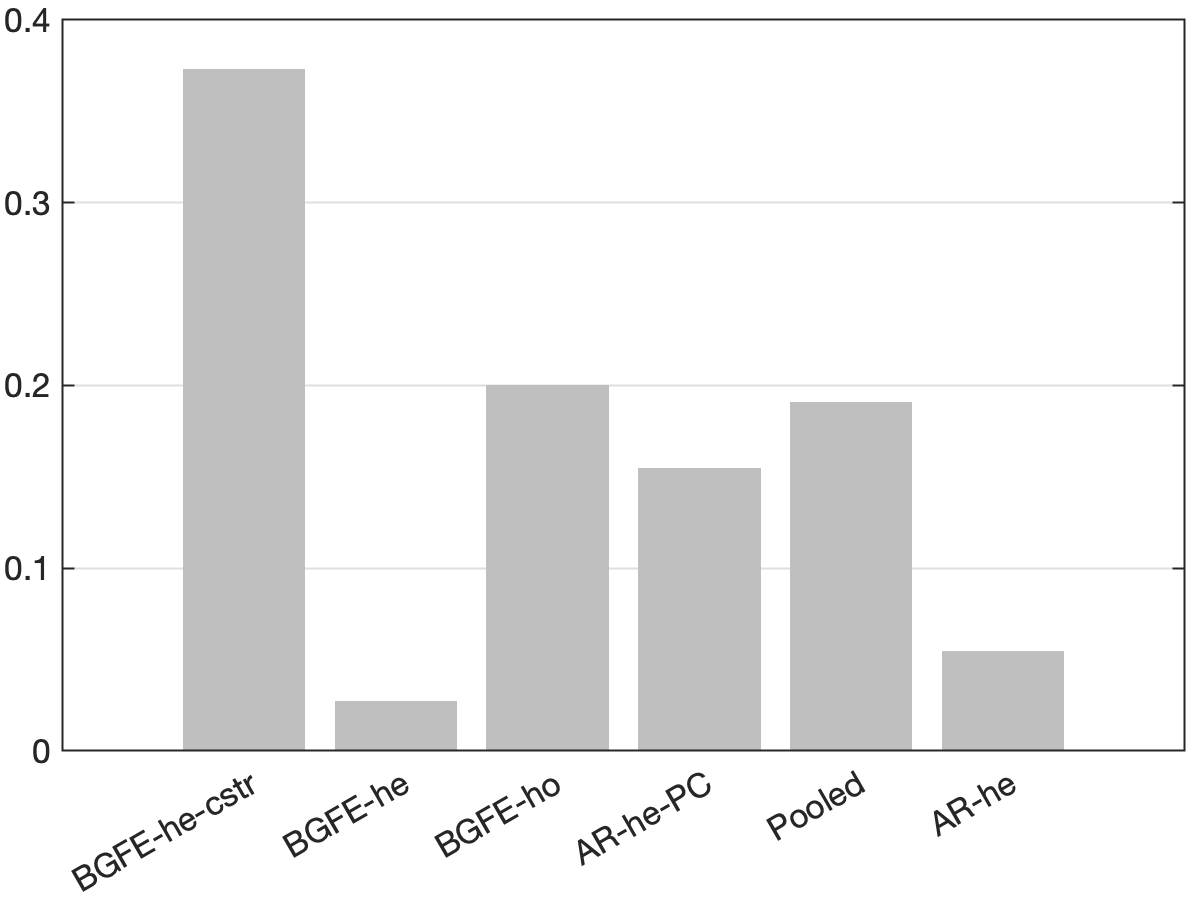

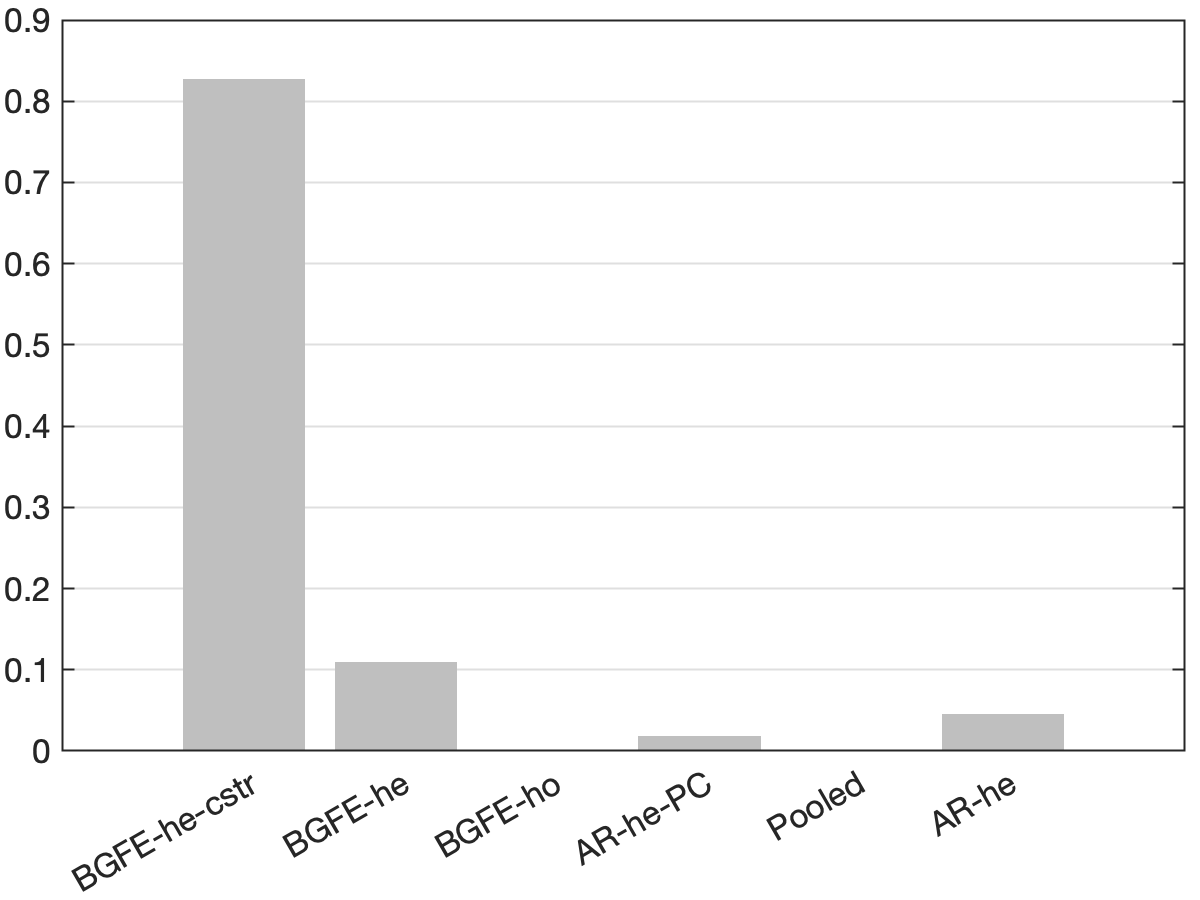

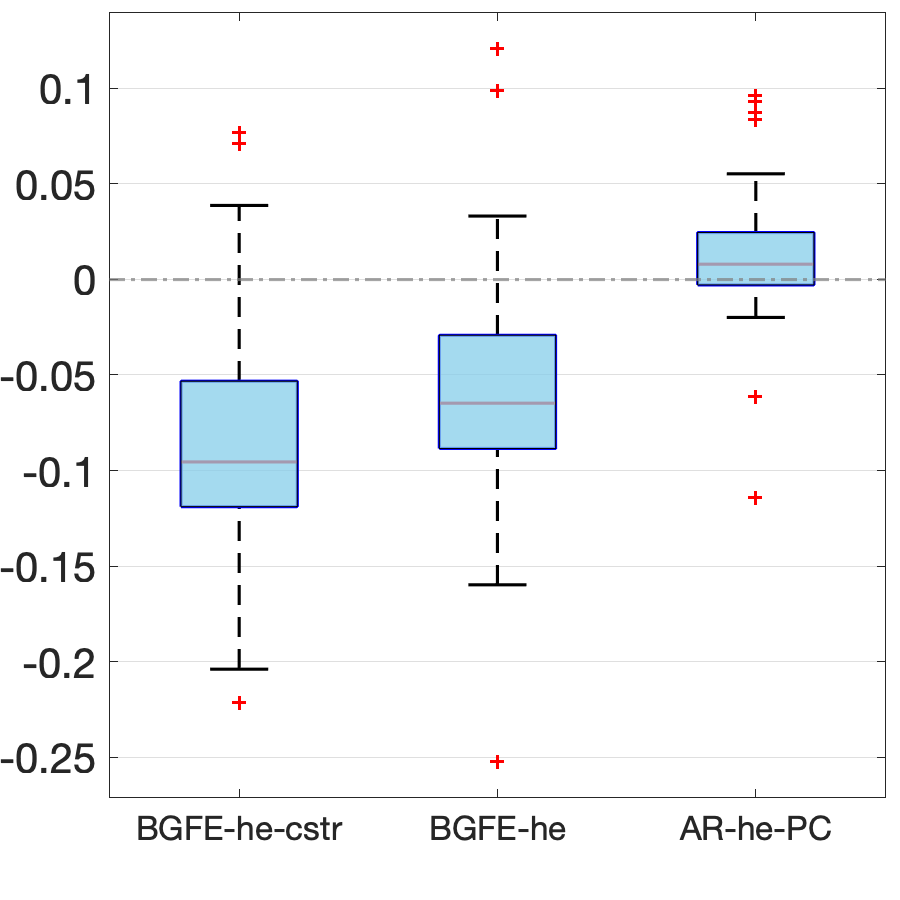

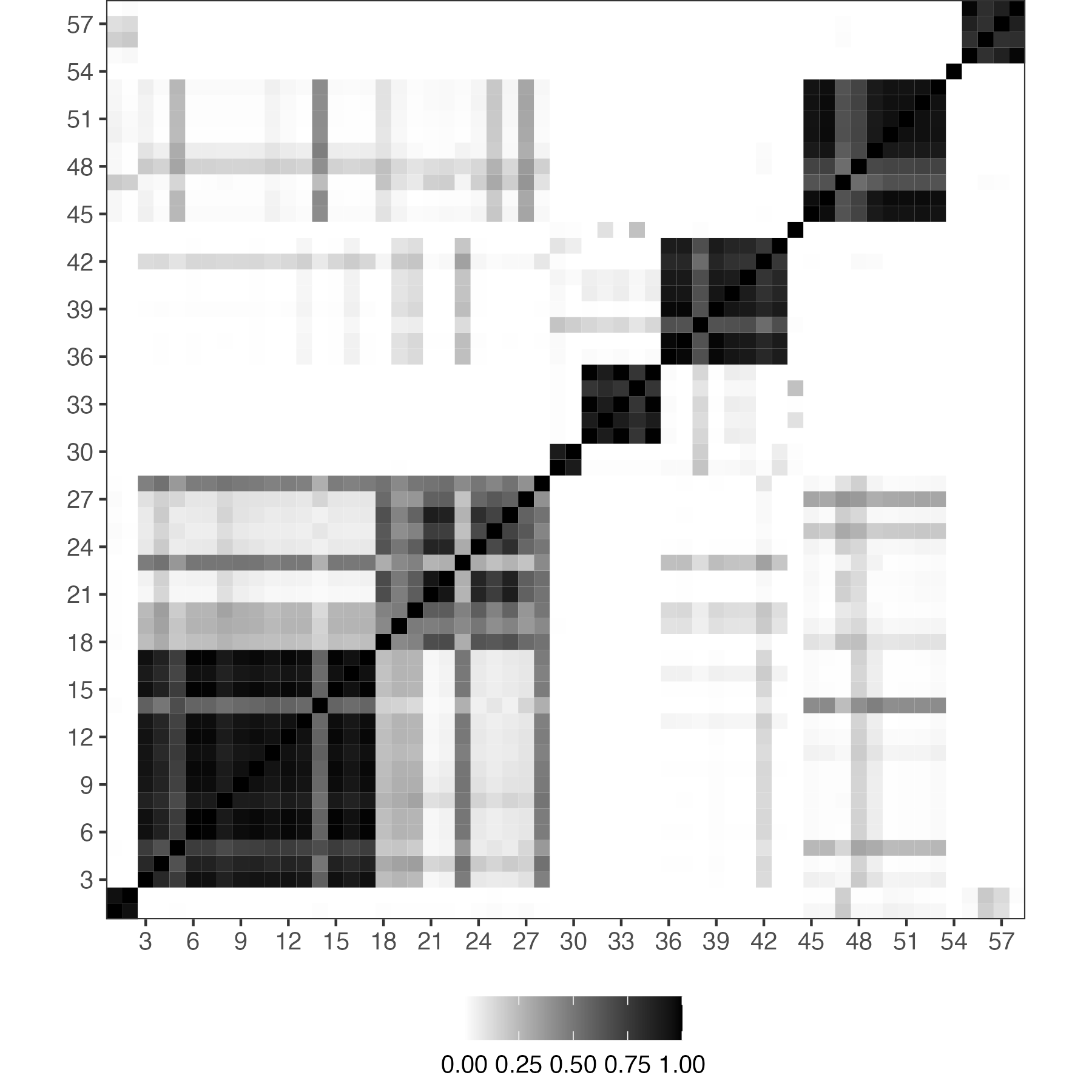

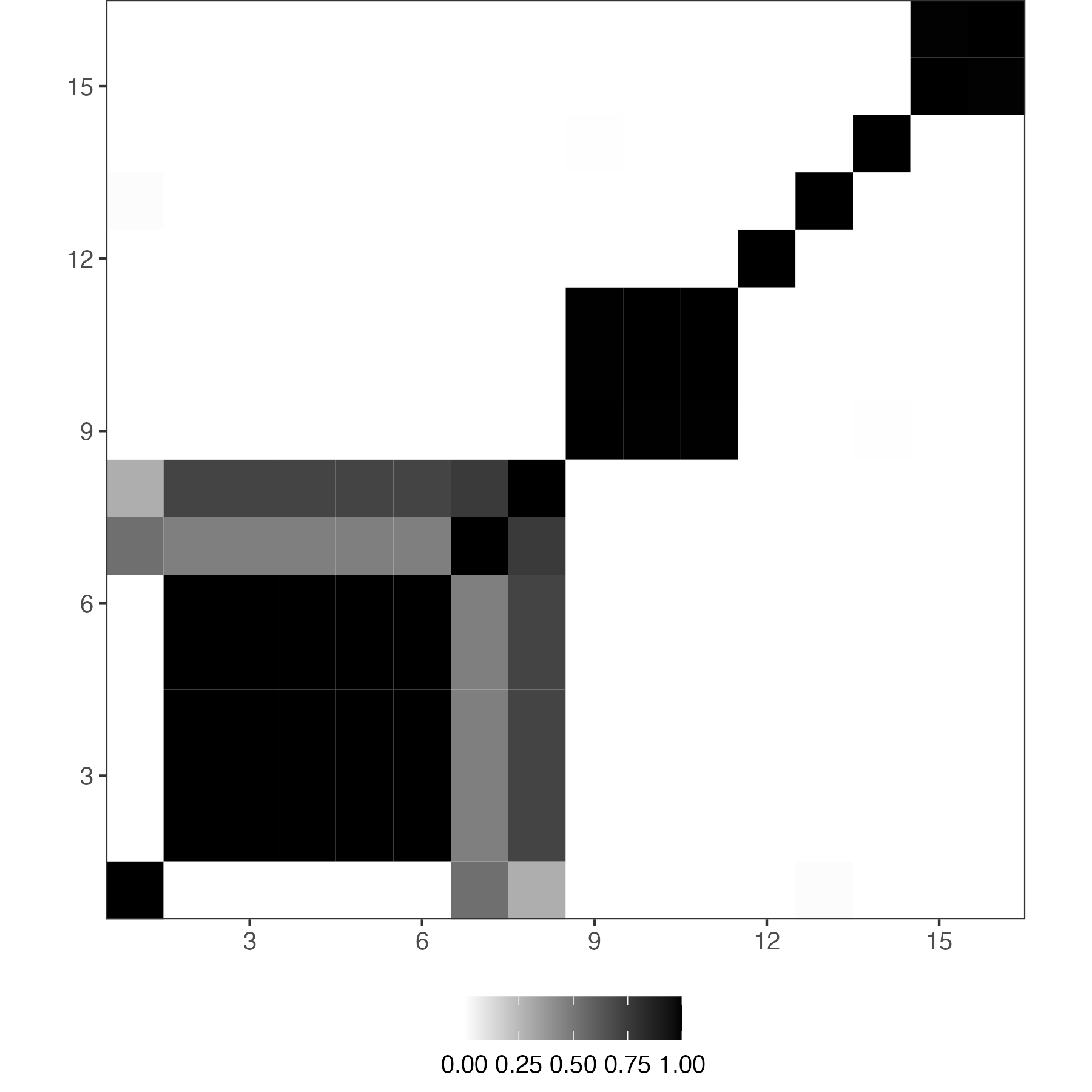

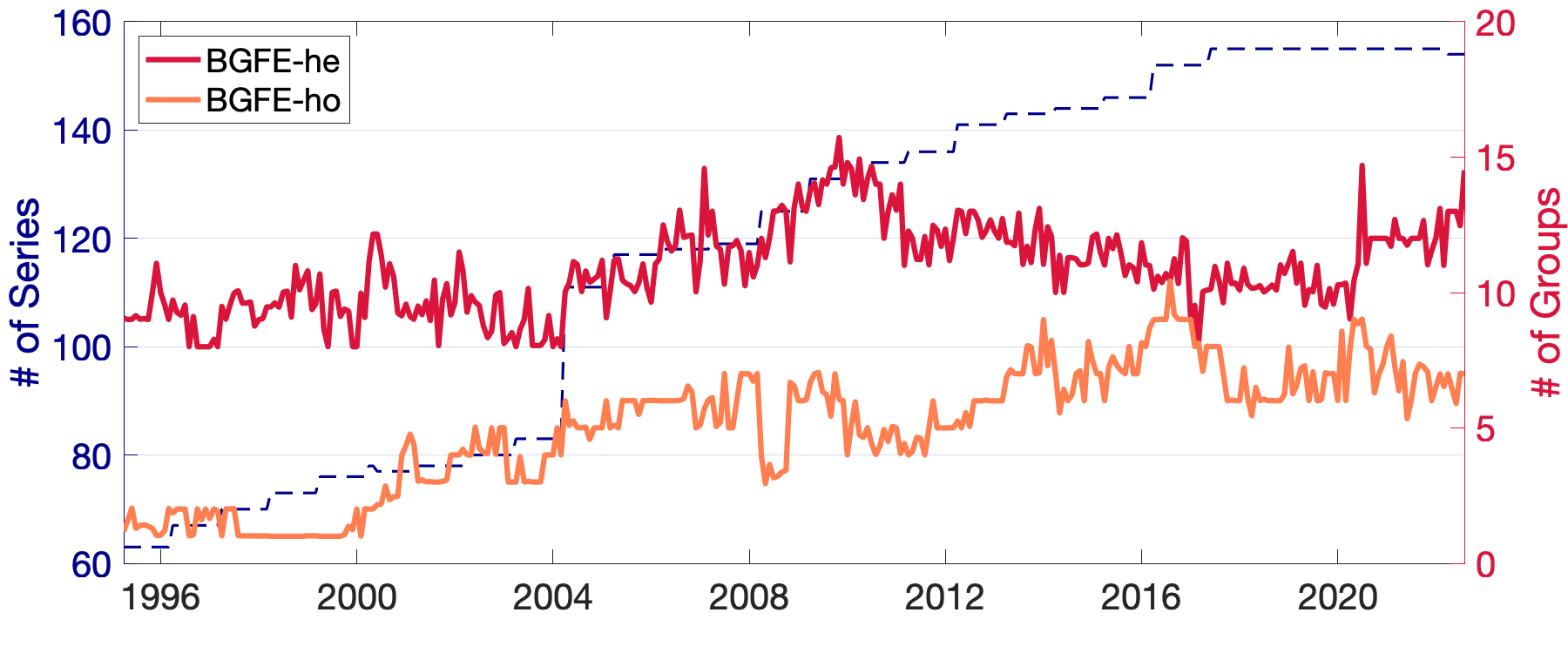

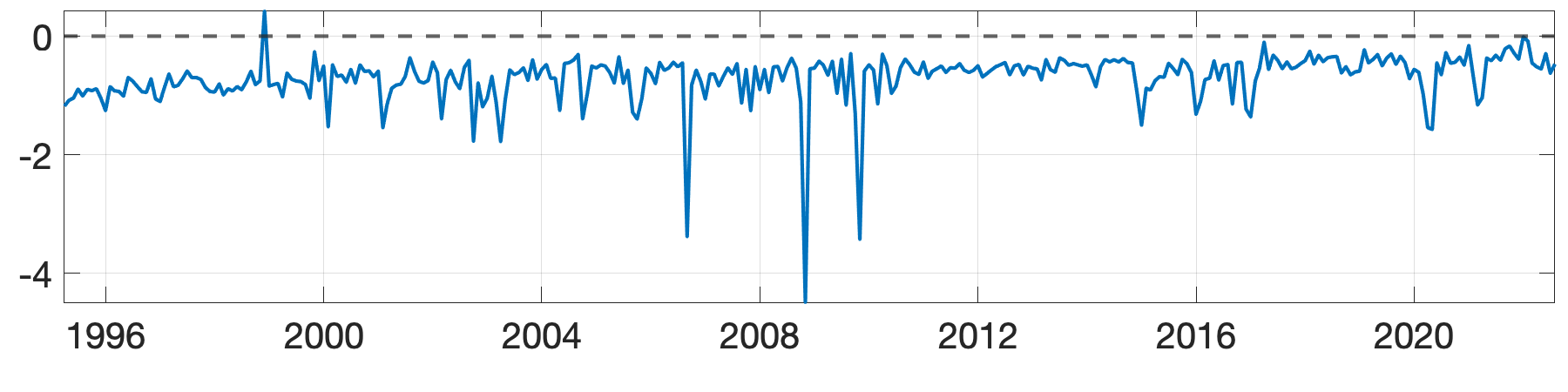

The revealing patterns of the density forecast are significantly distinct from those of the point forecast. Figure 7 depicts the log predictive score (LPS) for density forecast. The most notable pattern from the panel (a) is that the BGFE-he-cstr estimator, which incorporates prior knowledge, is dominating and outperforms the rest in over 80% of the samples. It emerges as the apparent winner in this case. Furthermore, when generating density forecast, the BGFE-ho and pooled OLS are not as accurate as they are in point forecast: they never have the lowest LPS across samples. This also confirms that the heteroscedasticity151515We provide more results in Section G.1.2 to explore the importance of heteroskedasticity in density forecast for the inflation. is a well-known feature of the inflation time series (Clark and Ravazzolo, 2015). In the boxplot, we ignore BGFE-ho and pooled OLS and show the differences in LPS between the respective estimators and the AR-he estimator. As LPS differences represent percentage point differences, BGFE-he-cstr can provide density forecasts that are up to 22% more accurate compared to the benchmark model. Finally, despite the fact that the BGFE-he-cstr and BGFE-he estimators are mainly based on the same algorithm, the use of prior knowledge on group pattern further enhances the performance, resulting in the BGFE-he-cstr estimator having a lower LPS and scoring the best model with the highest frequency.

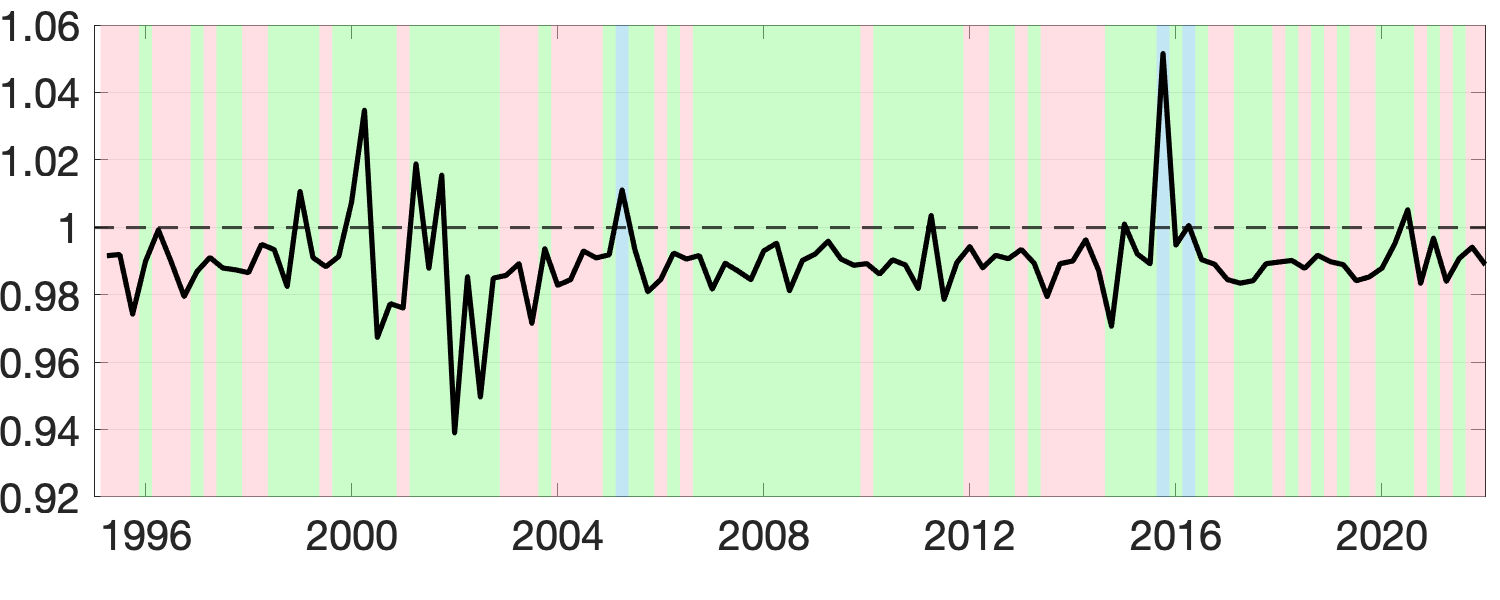

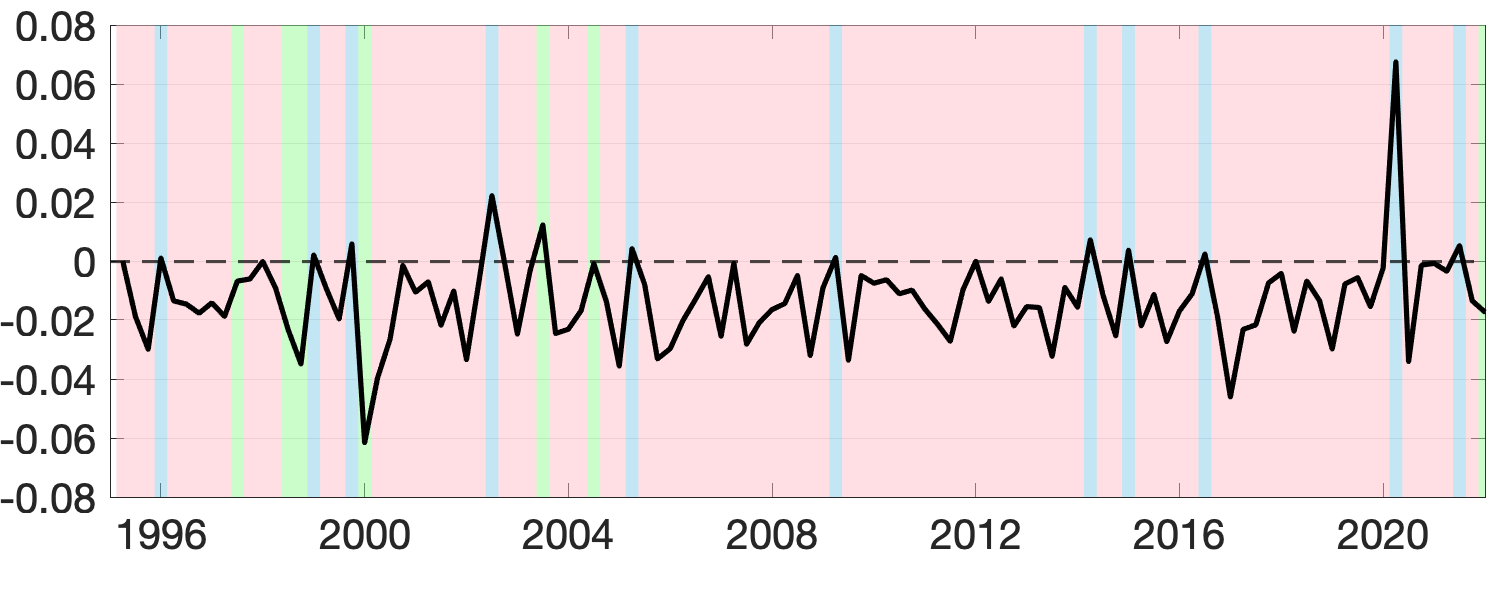

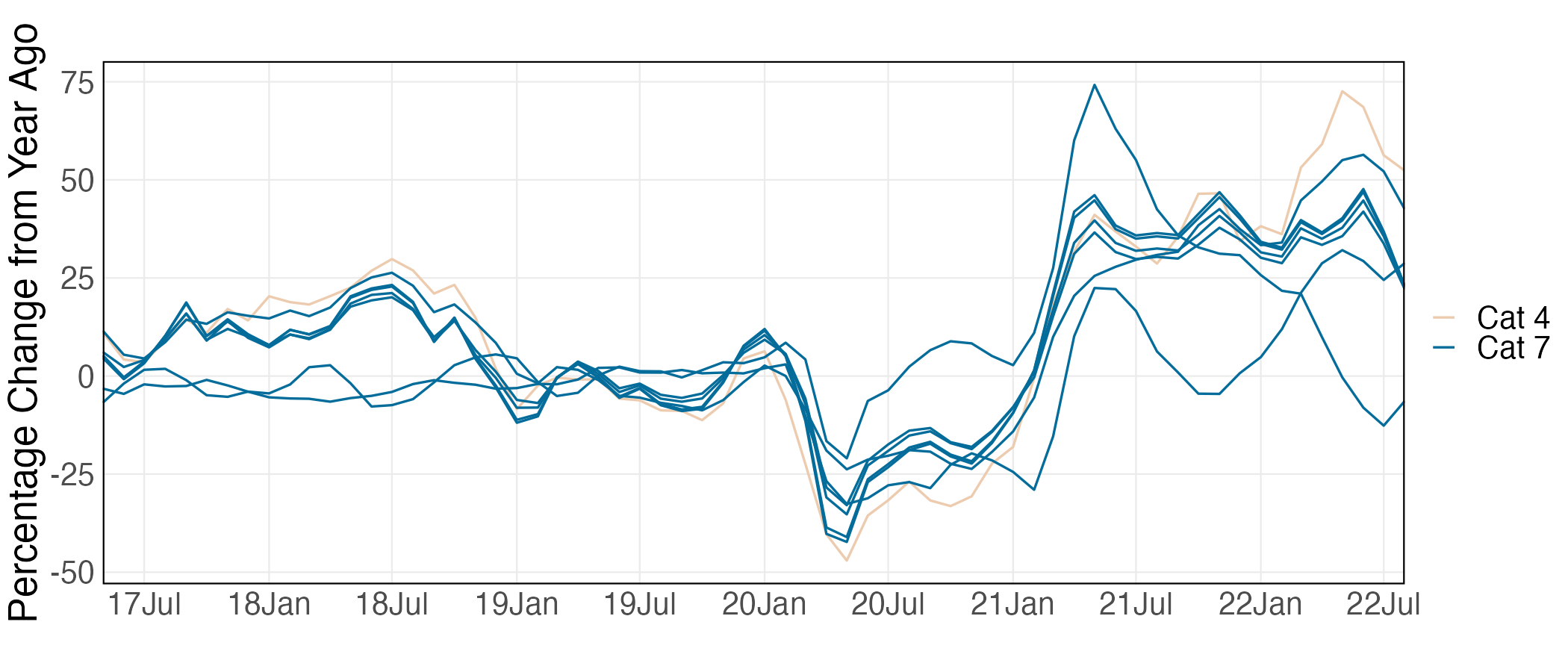

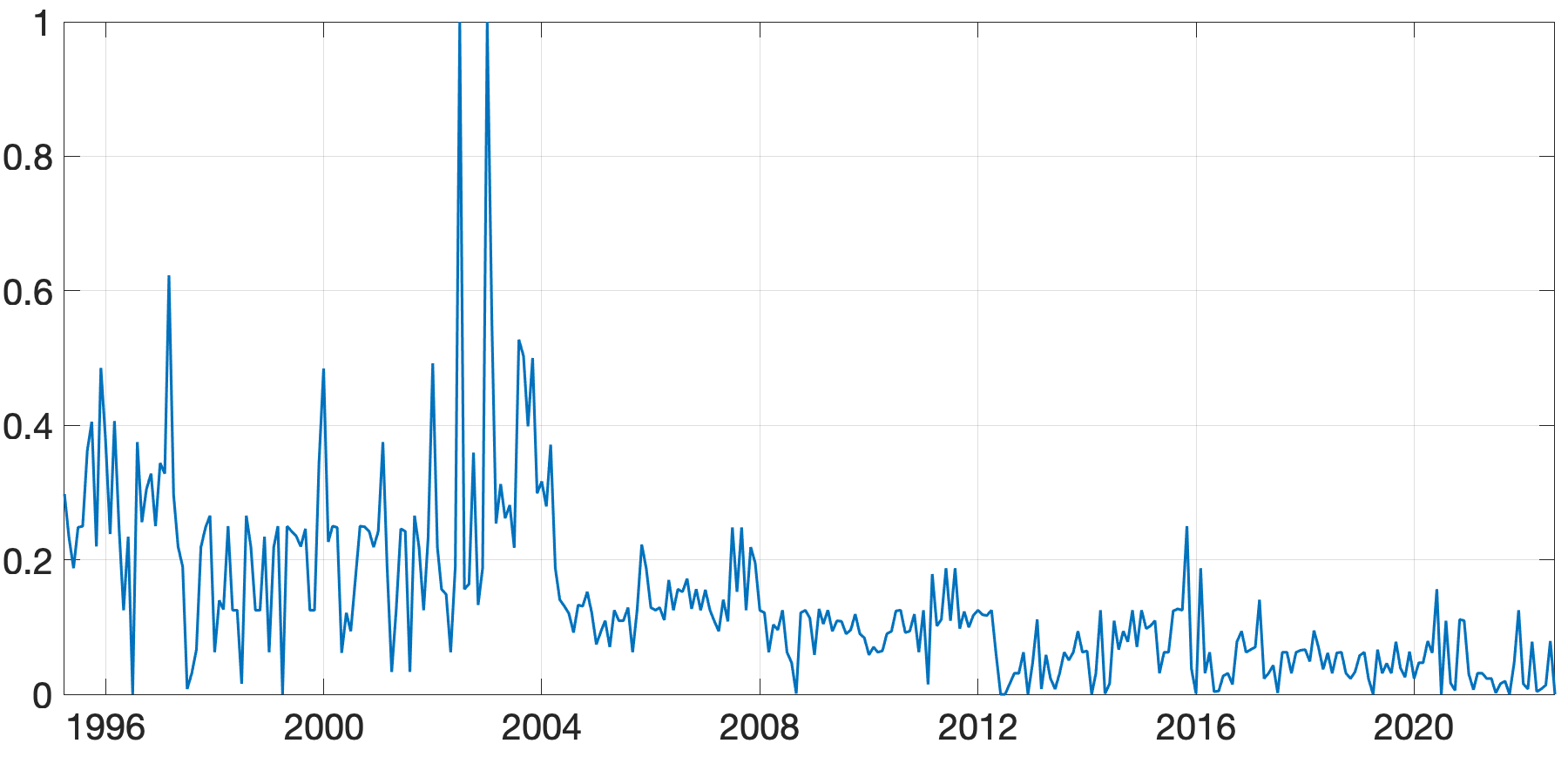

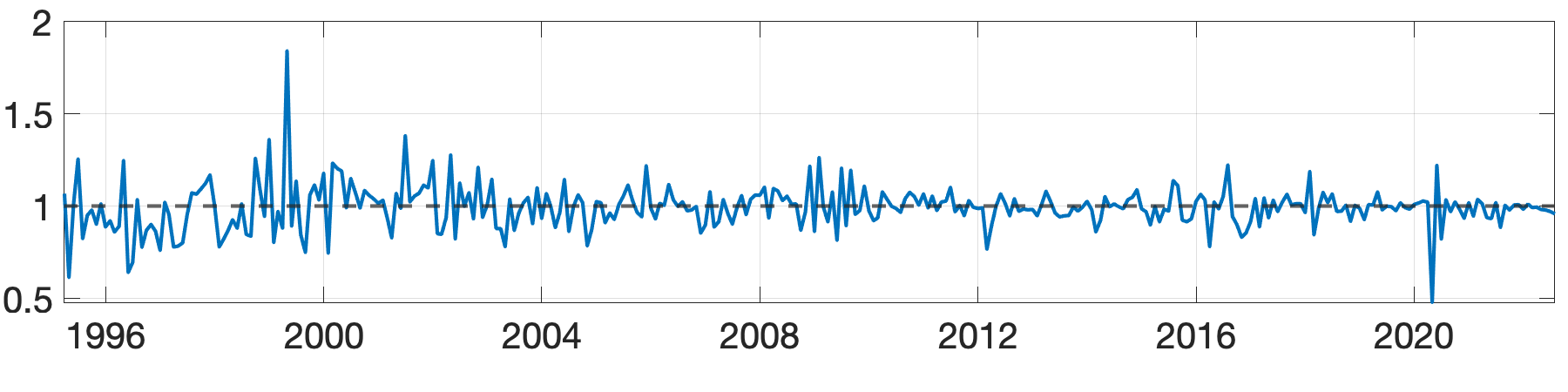

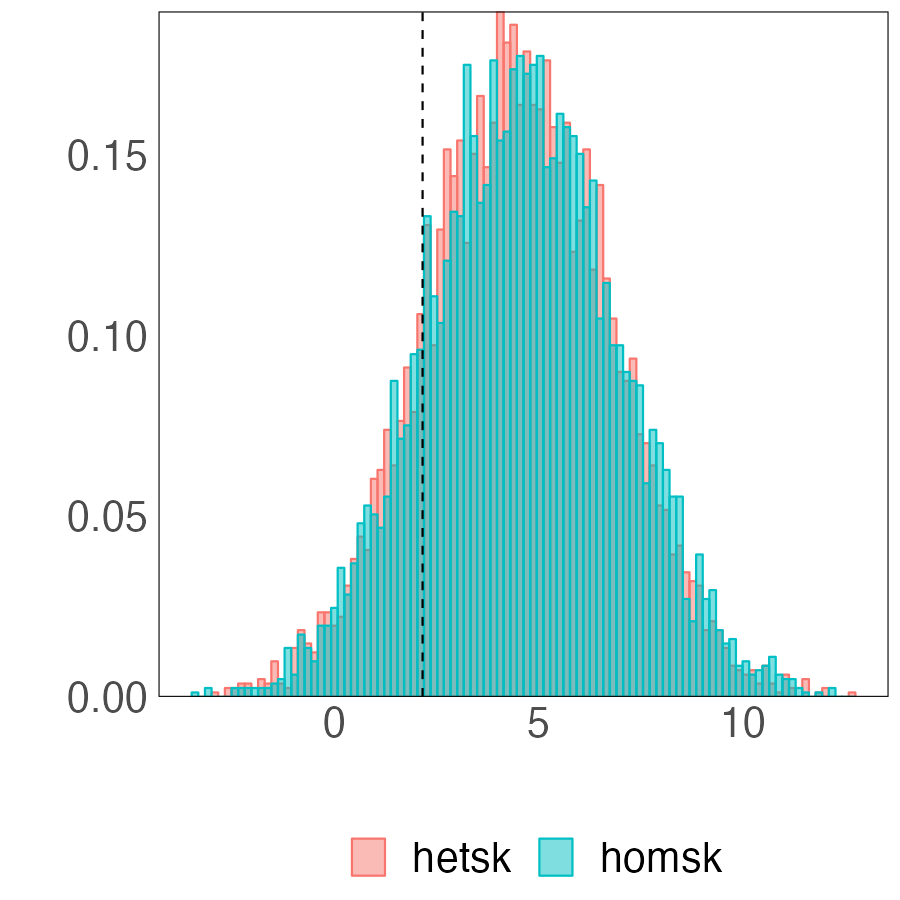

Next, we assess the value of adding prior information about groups by comparing the performance of the BGFE-he-cstr and BGFE-he estimators exclusively. The solid black line in Figure 8 represents the ratio of RMSE between BGFE-he-cstr and BGFE-he. The periods during which BGFE-he-cstr, BGFE-he, and all other estimators achieve the lowest RMSE are indicated by pink, blue, and green shaded areas, respectively. Though the BGFE-he-cstr estimator is not always the best across samples, the prior information improves the performance of the Bayesian grouped estimator. The BGFE-he-cstr estimator performs better than the BGFE-he estimator in most samples, with an average improvement of 2%.

Adding prior information on groups substantially improves the accuracy of density forecasts. Figure 9 shows the comparison between BGFE-he-cstr and BGFE-he in terms of the difference in LPS. We find the prior information valuable as BGFE-he-cstr outperforms BGFE-he in more than 98% of the samples. Clearly, the majority of the figure is covered by a pink background, showing that BGFE-he-cstr is typically the best choice. All of these facts demonstrate that adding prior informatio is favorable and essential, especially for density forecasting.