Inference in Nonparametric Series Estimation with Specification Searches for the Number of Series Terms

Abstract

Nonparametric series regression often involves specification search over the tuning parameter, i.e., evaluating estimates and confidence intervals with a different number of series terms. This paper develops pointwise and uniform inferences for conditional mean functions in nonparametric series estimations that are uniform in the number of series terms. As a result, this paper constructs confidence intervals and confidence bands with possibly data-dependent series terms that have valid asymptotic coverage probabilities. This paper also considers a partially linear model setup and develops inference methods for the parametric part uniform in the number of series terms. The finite sample performance of the proposed methods is investigated in various simulation setups as well as in an illustrative example, i.e., the nonparametric estimation of the wage elasticity of the expected labor supply from Blomquist and Newey (2002).

Keywords: Nonparametric series regression, Pointwise confidence interval, Smoothing parameter choice, Specification search, Undersmoothing, Uniform confidence bands.

JEL classification: C12, C14.

1 Introduction

We consider the following nonparametric regression model

| (1.1) |

where is i.i.d., is a scalar response variable, is a vector of covariates, and is the conditional mean function. The theory of estimation and inference is well developed for nonparametric series (sieve) methods in a large body of econometrics and statistics literature. Series estimators have also received attention in applied economics because they have many appealing features, e.g., they can easily impose shape restrictions such as additive separability and monotonicity. Once the basis function is chosen (e.g., polynomial or regression spline series of fixed order), implementation requires a choice of the number of series terms where denotes the order of the polynomials or the number of knots in the splines. However, this often involves some ad-hoc specification searches over . For example, when is vector valued, researchers often evaluate the different numbers of terms in each dimension separately and construct a set of bases with different powers and cross-products of covariates. Although specification search seems necessary in some cases, it may lead to misleading inference without considering the first-step specification search or series term selection.111As a referee noted, the bias and MSE of the series estimator depend on not only but also the specific bases or sieve spaces, e.g., the order of the splines. In this paper, we fix the basis function, and we do not allow searching over the specific bases or sieve spaces.

Existing theory for the asymptotic normality of t-statistics and valid inference imposes a so-called undersmoothing (i.e., overfitting) condition that is a faster rate of than the mean-squared error (MSE) optimal convergence rates, and many papers in the literature typically suggest rule-of-thumb rules that give the desired level of undersmoothing. Among many others, Newey (2013) suggested increasing until the standard errors are large relative to small changes in objects of interest. Newey, Powell, and Vella (1999) suggested using more terms than that chosen by cross-validation. Horowitz and Lee (2012) suggested increasing until the integrated variance suddenly increases and then adding additional terms.

In this paper, we formally justify these rule-of-thumb methods or “plug-in” methods with undersmoothed for valid inference in nonparametric series regression. Specifically, we provide pointwise inference for with possibly data-dependent (undersmoothed) , i.e., constructing confidence interval (CI),

| (1.2) |

with an estimator , variance using series terms, and critical values from the supremum of the t-statistics. For this result, we first develop a uniform distributional approximation theory of the absolute value of the supremum of the t-statistics over different series terms to construct asymptotically valid confidence intervals, which are uniform in ,

| (1.3) |

The critical values can be easily implemented using simple simulation or weighted bootstrap methods.

Furthermore, this paper develops the construction of confidence bands for with asymptotically uniform (in ) coverage with critical values chosen to satisfy

| (1.4) |

Analogous to the pointwise inference in (1.2), we can show the validity of confidence bands with the data-dependent . Even in pointwise inference, deriving a uniform asymptotic distribution theory for all sequences of t-statistics over may not be possible unless is finite. Allowing as , results in this paper build on coupling inequalities for the supremum of the empirical process developed by Chernozhukov, Chetverikov, and Kato (2014a, 2016) combined with anti-concentration inequality in Chernozhukov, Chetverikov, and Kato (2014b).

We also provide inference methods in a partially linear model setup focusing on the common parametric part. Unlike the nonparametric object of interest that has a slower convergence rate than (e.g., regression function or regression derivative), the t-statistics for the parametric object of interest are asymptotically equivalent for all sequences of under standard rate conditions as . To account for the dependency of the t-statistics with the different sequences of in this setup, we consider a faster rate of that grows as fast as the sample size , as in Cattaneo, Jansson, and Newey (2018a, 2018b), and develop an asymptotic distribution of the t-statistics over . Then, we discuss methods to construct confidence intervals that are similar to the nonparametric regression setup and provide uniform (in ) coverage properties.

We investigate finite sample coverage and length properties of the proposed CIs and uniform confidence bands in various simulation setups. As an illustrative example, we revisit nonparametric estimation of labor supply function using the entire individual piecewise-linear budget set as in Blomquist and Newey (2002). Imposing additive separability, which is derived by economic theory, Blomquist and Newey (2002) estimate the conditional mean of labor supply function using series estimation and report wage elasticity of the expected labor supply as well as other welfare measures with various specifications of the different number of series terms.

Several important papers have investigated the asymptotic properties of series (and sieve) estimators, including papers by Andrews (1991a); Eastwood and Gallant (1991); Newey (1997); Chen and Shen (1998); Huang (2003); Chen (2007); Chen and Liao (2014); Chen, Liao, and Sun (2014); Belloni, Chernozhukov, Chetverikov, and Kato (2015); and Chen and Christensen (2015), among many others. This paper extends inference based on the t-statistic under a single sequence of to the sequences of over a set and focuses both on the pointwise and uniform inferences on , which is irregular (i.e., slower than a rate of ) and a linear functional, under an i.i.d. setup.

The supremum t-statistics have been used as a correction for multiple-testing problems and to construct simultaneous confidence bands, and the importance of multiple-testing problems (data mining or data snooping) has long been noted in various other contexts (see Leamer (1983), White (2000), Romano and Wolf (2005), Hansen (2005)).

There is also a growing literature on data-dependent series term selection and its impact on estimation and inference in econometrics and statistics. Asymptotic optimality results of cross-validation have been developed, e.g., in papers by Li (1987), Andrews (1991b), and Hansen (2015). Horowitz (2014) develops data-driven methods for choosing the sieve dimension in the nonparametric instrumental variables (NPIV) estimation such that resulting NPIV estimators attain the optimal sup-norm or norm rates adaptive to the unknown smoothness of . Although we do not pursue adaptive inference in this paper, there is also a large statistical literature on adaptive inference. For example, Giné and Nickl (2010), Chernozhukov, Chetverikov, and Kato (2014b) construct adaptive confidence bands in the density estimation problem (see Giné and Nickl (2015, Section 8) for comprehensive lists of references). However, once data-driven choice is obtained for adaptive estimation (e.g., Lepski (1990)-type procedures), one still requires an undersmoothing condition for inference to eliminate asymptotic bias terms (see Theorem 1 of Giné and Nickl (2010)), and this may result in similar specification search issues when choosing sufficiently “large” in practice.

We can, in principle, consider kernel-based estimation where several data-dependent bandwidth selections or explicit bias corrections have been proposed.222See Härdle and Linton (1994), Li and Racine (2007) for references. See also Hall and Horowitz (2013), Calonico, Cattaneo, and Farrell (2018), Schennach (2015) and references therein for various recent works on related bias issues and inference for kernel estimators. However, there exist many examples estimating using (global) series estimation and imposing shape constraints easily (such as additive separability to reduce dimensionality) that are also interested in both pointwise and uniform inference. Given the issues of specification search, our paper is closely related to a recent paper by Armstrong and Kolesár (2018) which considers a bandwidth snooping adjustment for kernel-based inference.

Unlike kernel-based methods, little is known about the statistical properties of data-dependent selection rules and explicit bias formulas for general series estimation. Zhou, Shen, and Wolfe (1998) and Huang (2003) are two of the few exceptions. A recent paper, Cattaneo, Farrell, and Feng (2019), develops novel explicit asymptotic bias/integrated mean squared error (IMSE) formulas and asymptotic theory of the bias-correction methods for general partitioning-based series estimators. The results in Cattaneo, Farrel, and Feng (2019) can be used as an alternative to the undersmoothing approach to avoid specification search issues.

The remainder of the paper is organized as follows. Section 2 introduces the basic nonparametric series regression setup and the candidate set . Section 3 provides the pointwise inference, and Section 4 provides uniform inference in . Section 5 extends our inference methods to the partially linear model setup. Section 6 summarizes Monte Carlo experiments in various setups, and Section 7 illustrates an empirical example as in Blomquist and Newey (2002). Then, Section 8 concludes the paper. Appendix A includes the main proofs, and Appendix B includes figures and tables. Additional supporting lemmas and simulation results are provided in the Online Supplementary Material available at Cambridge Journals Online (journals.cambridge.org/ect).

1.1 Notation

denotes the spectral norm, which equals the largest singular value of a matrix , and denote the minimum and maximum eigenvalues of a symmetric matrix , respectively. and denote the usual stochastic order symbols, denotes convergence in distribution, and denotes weak convergence. Let and denote as the largest integer less than the real number . For two sequences of positive real numbers and , denotes for all sufficiently large with some constant that is independent of . denotes and . Furthermore, denotes . For a given random variable and , is the space of all -norm bounded functions with , denotes the space of all bounded functions under the sup-norm, and for the bounded real-valued functions on the support .

2 Setup

We introduce the nonparametric series regression setup in the model (1.1). Given a random sample , we are interested in inference on the conditional mean at a particular point or uniform in .

Let be an estimator of using series terms , which is a vector of basis functions that can change with . Standard examples for the basis functions are power series, Fourier series, orthogonal polynomials, splines and wavelets. The series estimator is then obtained by the least square (LS) estimation of on regressors

| (2.1) |

where . Define the least square residuals as ,

| (2.2) | ||||

and consider the t-statistic

| (2.3) |

Under standard regularity conditions (discussed in the next section), the t-statistic can be decomposed as follows:

| (2.4) |

where , , and is the best linear projection coefficient. The first term in the decomposition (2.4) converges to a standard normal distribution for the deterministic sequence as , and the second term does not necessarily converge to 0 due to approximation errors . The second term can be ignored with an undersmoothing assumption, and the asymptotic distribution of the t-statistic, , is well known in the literature (see, for examples, Andrews (1991a), Newey (1997), Belloni et al. (2015), and Chen and Christensen (2015), among many others). Then, the confidence interval for can be easily constructed using the normal critical value

| (2.5) |

However, it is not clear whether the conventional CI using normal critical values (2.5) has a correct coverage probability with a possibly data-dependent such as cross-validation or IMSE-optimal selection. First, may not hold with a random sequence of , even if we assume the asymptotic bias is negligible. Second, it is well known that some data-dependent rules do not satisfy the undersmoothing rate conditions, which can lead to a large asymptotic bias and coverage distortion of the standard CI. For example, suppose that the researcher uses selected by cross-validation; then, is typically too “small” and violates the undersmoothing assumption needed to ensure the asymptotic normality without bias terms and the valid inference.

As discussed in the introduction, the undersmoothing assumption involves possibly ad-hoc methods to choose series terms over a candidate set for a valid inference, and cross-validation methods naturally involve specification search over a set of the different number of series terms.

The following set assumption on is constructed to allow a broad range of such that can allow (unknown) an optimal MSE rate of as well as an undersmoothing rate that increases faster than the optimal MSE rate.

Assumption 2.1.

(Set of number of series terms) Assume the candidate set as , where and as .

Here, we consider a possibly growing set of the number of series terms, and a similar assumption is used in the literature, for example, in Newey (1994a, 1994b). Suppose belongs to the Hölder space of smoothness , ; then, we obtain optimal convergence rates with . Assumption 2.1 allows having optimal rates of in a large set of classes of functions. By setting , and with , Assumption 2.1 contains the number of series terms that obtain an optimal rate of convergence for , . A similar assumption is used in the literature on adaptive inference, although we do not pursue this direction in the current paper.

Assumption 2.1 gives flexible choices of , as we only assume the rates of , for example, , where and can be set arbitrarily small or large. We only require rate restrictions uniformly over to guarantee the linearization of the t-statistic in (2.4) and the rates of the cardinality . Since is a positive integer and , is growing at a rate much slower than under the rate restrictions in Section 3.

Remark 2.1 ( and the largest ).

As a referee noted, specification search is often performed over a simple pre-defined set in practice. For example, a researcher may only use quadratic, cubic, or quartic terms in polynomial regression or try only a few different numbers of knots in regression splines to observe how the estimate and standard error change. In the nonparametric estimation of the Mincer equation (Heckman, Lochner, and Todd (2006)), researchers may consider a regression of log wages on experience with polynomials of order (linear) to (quartic).333All of our results continue to hold with fixed ; however, it may be preferred to use larger sets with to give greater flexibility to the candidate models as the sample size increases.

However, it may not be clear how to define a priori in practice. One must first consider a set of pre-selected models over which to search. As discussed earlier and suggested by many papers in the literature, some formal data-dependent methods to obtain optimal norm or sup-norm rates, such as cross-validation, can be a useful guideline for . For example, one can consider a reasonable set first, choose by cross-validation, and then consider or for some constants . One can also search and sequentially by calculating changes in cross-validation or standard errors from the initial candidate set. Extending results developed in this paper with data-dependent are beyond the scope of the paper.

3 Pointwise Inference

In this section, we focus on pointwise inference for . The goal of this section is to provide a uniform distributional approximation theory of over a set and provide uniform (in ) coverage properties of confidence intervals for in (1.2), (1.3) with the construction of critical values.

From the decomposition of the t-statistic in (2.4), we first consider the (infeasible) test statistic

| (3.1) |

where with the series variance , . In general, does not have a limiting distribution because it is not asymptotically tight under Assumption 2.1 unless is finite or under the restrictive assumption on .444In an earlier version of the paper, we provide the weak convergence of a series process under the same rates of and high-level assumptions. This can be viewed as an analogous result in the kernel estimation literature (see Section 2 of Armstrong and Kolesár (2018) and other references therein). However, we show below that there exists a sequence of random variables such that for a sequence of constants , where is a Gaussian random vector in such that with elements of the variance-covariance matrix

| (3.2) |

.

By replacing unknown with consistent estimators , we show below that we can approximate by and then obtain critical values by using a simulation-based method to provide valid coverage properties in (1.2) and (1.3). We define as follows:

| (3.3) | ||||

where is a consistent estimator of the variance-covariance matrix defined in (3.2), is the simple plug-in estimator for as in (2.2), and . One can compute by simulating (typically or ) i.i.d. random vectors and by taking a sample quantile of . Alternatively, we can use weighted bootstrap methods. See Section 4 for the implementation and the validity of bootstrap procedures in the construction of confidence bands.

To establish our main results, we impose mild regularity conditions uniform in . For each , define as the largest normalized length of the regressor vector and for design matrix .

Assumption 3.1.

(Regularity conditions - model)

-

(i)

are random variables satisfying the model (1.1).

-

(ii)

, and for each , as , there exists such that

where .

Assumption 3.2.

(Regularity conditions - pointwise inference)

-

(i)

as .

-

(ii)

, , and either of the following conditions hold: (a) for or (b) there exists a constant such that .

-

(iii)

, .

Assumptions 3.1(ii) and 3.2(i) are similar to those imposed in Belloni et al. (2015) and Chen and Christensen (2015), and all the discussions made there also apply here except that we impose rate conditions of uniformly over . The rate conditions can be replaced by the specific bounds of with various sieve bases. For example, when , the probability density of is uniformly bounded above and bounded away from zero, and , i.e., the Hölder space of smoothness , then , , for regression spline series of order , and Assumption 3.2(i) is satisfied when . Other standard regularity conditions in the literature (e.g., Newey (1997) and Chen (2007)) can also be used here, and the rate condition can be improved with different pointwise linearization and approximation bounds in Huang (2003) for splines and Cattaneo et al. (2019) for partitioning-based estimators.

Assumption 3.2(ii) imposes either the bounded polynomial moment conditions or sub-exponential moments of the regression errors. Assumption 3.2(iii) imposes the consistency of variance estimator uniformly in , and this holds under mild regularity conditions (see Lemma 5.1 of Belloni et al. (2015) and Lemma 3.1-3.2 of Chen and Christensen (2015)).

Theorem 3.1.

Suppose that Assumptions 2.1, 3.1, and 3.2 hold and that either of the following rate conditions hold depending on the case (a) or (b) in Assumption 3.2(ii): (a) or (b) . If, in addition, we assume that , then

| (3.4) |

and the following coverage property holds

| (3.5) |

with a critical value defined in (3.3). Alternatively, if we assume with , then the following holds:

| (3.6) |

Theorem 3.1 provides a uniform coverage property of the confidence interval over for the regression function . Equation (3.6) guarantees the asymptotic coverage of CI for data-dependent with undersmoothing. Note that standard inference methods in the nonparametric regression setup typically consider a singleton set with as . The rate restriction is mild because it only requires , up to terms, in case and , up to terms, in case when for splines and wavelet series. Theorem 3.1 builds upon a coupling inequality for maxima of sums of random vectors in Chernozhukov, Chetverikov, and Kato (2014a) combined with the anti-concentration inequality in Chernozukhov, Chetverikov, and Kato (2014b).

Remark 3.1 (Undersmoothing assumption).

Note that (3.5) requires an undersmoothing assumption uniformly over . Without , coverage in (3.5) can be understood as the uniform confidence intervals for the pseudo-true value , i.e.,

| (3.7) |

However, a uniform undersmoothing condition is not assumed in (3.6), and it only requires that the chosen satisfies the undersmoothing condition such that the asymptotic bias is negligible. This allows broader ranges of in including an unknown optimal MSE rate. We formally justify rule-of-thumb methods for valid inference suggested in the literature that include an additional number of series terms, a blow up of the numbers after using cross-validation, or some “plug-in” methods for choosing such as those in Newey, Powell, and Vella (1999), Newey (2013). Here, uniform (in ) inference considers uncertainty from specification search and using larger critical values than the normal critical value .

Remark 3.2 (Other functionals).

Here, we focus on the leading example with for some fixed point ; however, we can consider other linear functionals such as the regression derivatives . All the results in this paper can be applied to irregular (slower than rate) linear functionals using estimators and an appropriate transformation of basis with proper smoothness condition on the functional and continuity conditions on the derivative as in Newey (1997). Although the verification of previous results for regular ( rate) functionals, such as integrals and weighted average derivatives, is beyond the scope of this paper, we examine similar results for the partially linear model setup in Section 5.

4 Uniform Inference

This section provides construction of uniform confidence bands for (uniform in ) given in (1.4). We define the following empirical process

| (4.1) |

over , and we show below that the supremum of the empirical process can be approximated by a sequence of random variables , where is a tight Gaussian random process in with zero mean and covariance function

| (4.2) |

Although the Gaussian approximation is an important first step, the covariance function (4.2) is generally difficult to construct for the purpose of uniform inference. Thus, we employ weighted bootstrap methods similar to Belloni et al. (2015) and show the validity of the bootstrap procedure for uniform confidence bands.

Let be a sequence of i.i.d. standard exponential random variables that are independent of . For , we define a (centered) weighted bootstrap process

| (4.3) |

where , and is obtained by the following weighted least squares regression

| (4.4) |

Define the critical value

| (4.5) |

and we consider confidence bands of the form

| (4.6) |

To provide the validity of the bootstrap critical values and confidence bands in (4.6), we show below that the conditional distribution of is “close” to the distribution of and that of using coupling inequalities for the supremum of the empirical process and the bootstrap process as in Chernozhukov et al. (2016). Then, similar to Theorem 3.1, this gives bounds on the Kolmogorov distance for the distribution functions of and .

The following assumptions are used to establish the coverage probability of confidence bands uniformly over . Define , and

Assumption 4.1.

(Regularity conditions - uniform inference)

-

(i)

for and .

-

(ii)

as .

-

(iii)

, , and .

-

(iv)

.

For uniform inference, we require similar but slightly stronger conditions compared to Assumption 3.2. We also impose mild rate restrictions on and similar to Chernozhukov et al. (2014a) and Belloni et al. (2015).

Theorem 4.1.

Suppose that Assumptions 2.1, 3.1, and 4.1 hold, and , as . If, in addition, we assume that , then

| (4.7) |

with a critical value in (4.5).

Alternatively, if we assume with , then the following coverage property holds:

| (4.8) |

Theorem 4.1 shows the uniform asymptotic coverage property of the confidence bands defined in (4.6) uniformly over . Furthermore, it shows a confidence band with possibly data-dependent having an asymptotic coverage of at least . The confidence band constructed in (4.8) requires a substantially weaker assumption on the undersmoothing similar to Theorem 3.1.

5 Extension: Partially Linear Model

In this section we provide inference methods for the partially linear model (PLM) setup. For notational simplicity, we use similar notation as defined in the nonparametric regression setup. Suppose we observe random samples , where is the scalar response variable, is the treatment/policy variable of interest, and is a set of explanatory variables. For simplicity, we shall assume that is a scalar. We consider the model

| (5.1) |

We are interested in inference on after approximating an unknown function by series terms/regressors among a set of potential control variables. Specification searches can be performed for the number of different approximating terms or for the number of covariates in estimating the nonparametric part.

The series estimator for using the first terms is obtained by standard LS estimation of on and and has the usual “partialling out” formula

| (5.2) |

where . The asymptotic normality and valid inference for have been developed in the literature.555See also Robinson (1988), Linton (1995) and references therein for the results of the kernel estimators. Donald and Newey (1994) derived the asymptotic normality of under standard rate conditions . Belloni, Chernozukhov, and Hansen (2014) analyzed asymptotic normality and uniformly valid inference for the post-double-selection estimator even when is much larger than (see also Kozbur (2018)). Recent papers by Cattaneo, Jansson, and Newey (2018a, 2018b) provided a valid approximation theory for when grows at the same rate of .

A different approximation theory using a faster rate of () than the standard rate conditions () is particularly useful for our purpose to establish the asymptotic distribution of t-statistics over . From the results in Cattaneo, Jansson, and Newey (2018a), we have the following decomposition:

| (5.3) |

where , and . For any deterministic sequence satisfying standard rate conditions , is asymptotically normal with variance . Unlike the nonparametric object of interest in the fully nonparametric model, where the variance term increases with , has a parametric () convergence rate, and with all different sequences of are asymptotically equivalent under .666This is also related to the well-known results of the two-step semiparametric estimation; the asymptotic variance of two-step semiparametric estimators does not depend on the type of the first-step estimator or smoothing parameter sequences under certain conditions (see Newey (1994b)). However, under faster rate conditions, for , the second term in (5.3) is not negligible and converges to bounded random variables. Cattaneo, Jansson, and Newey (2018a) apply the central limit theorem of degenerate U-statistics for the second term, similar to the many instrument asymptotics analyzed in Chao, Swanson, Hausman, Newey, and Woutersen (2012). Then, the limiting normal distribution has a larger variance than the standard first-order asymptotic variance, and the adjusted variances generally depend on the number of terms such that we can provide an asymptotic distribution of the t-statistics with the different sequence of over .

The following assumption on is considered, and we impose the regularity conditions that are used in Cattaneo, Jansson, and Newey (2018a, Assumption PLM) uniformly over .

Assumption 5.1.

(Set of finite number of series terms)

-

Assume , where as for all , constant such that , and fixed .

Assumption 5.2.

(Regularity conditions - partially linear model)

-

(i)

are random variables satisfying the model (5.1).

-

(ii)

There exists constants such that and , and .

-

(iii)

(a.s.) and for for all .

-

(iv)

For each , there exists some ,

Assumption 5.2 does not require which is required to obtain asymptotic normality in the literature (e.g., Donald and Newey (1994)). Similar to Assumption 3.2(iii) in the nonparametric setup, Assumption 5.2(iv) holds for the polynomials and spline basis. For example, 5.2(iv) holds with when is compact and when the unknown functions and have and continuous derivates, respectively.

Under Assumptions 5.1, 5.2 and undersmoothing condition (), we have a joint asymptotic distribution of the t-statistics over :

where

and the variance-covariance matrix with element

| (5.4) | ||||

for Then, we can similarly define critical values as in (3.3) to construct confidence intervals for uniform in analogous to the nonparametric setup. Let

| (5.5) |

where is a consistent estimator for unknown defined in (5.4).

Theorem 5.1 is the main result for the partially linear model setup and provides the asymptotic coverage results of the CIs uniform in analogous to the nonparametric setup in Section 3.

Theorem 5.1.

Remark 5.1.

Note that the construction of CIs requires consistent variance estimation of . As discussed in Cattaneo, Jansson, and Newey (2018a, 2018b), the construction of the heteroskedasticity-robust estimator for under is challenging, and the Eicker-Huber-White-type variance estimator generally requires for consistency. Cattaneo, Jansson, and Newey (2018b) considers the following standard error formula:

| (5.8) |

where and symmetric matrix with element . Cattaneo, Jansson, and Newey (2018b) show that is consistent even under heteroskedasticity and with a certain choice of and provide a sufficient condition for consistency. See Theorems 3 and 4 of Cattaneo, Jansson, and Newey (2018b) for further discussion.

6 Simulations

This section investigates the small sample performance of the proposed inference methods. We report the empirical coverage and the average length of the confidence intervals/confidence bands considered in Sections 3 and 4 with various simulation setups.

We consider the following data generating process:

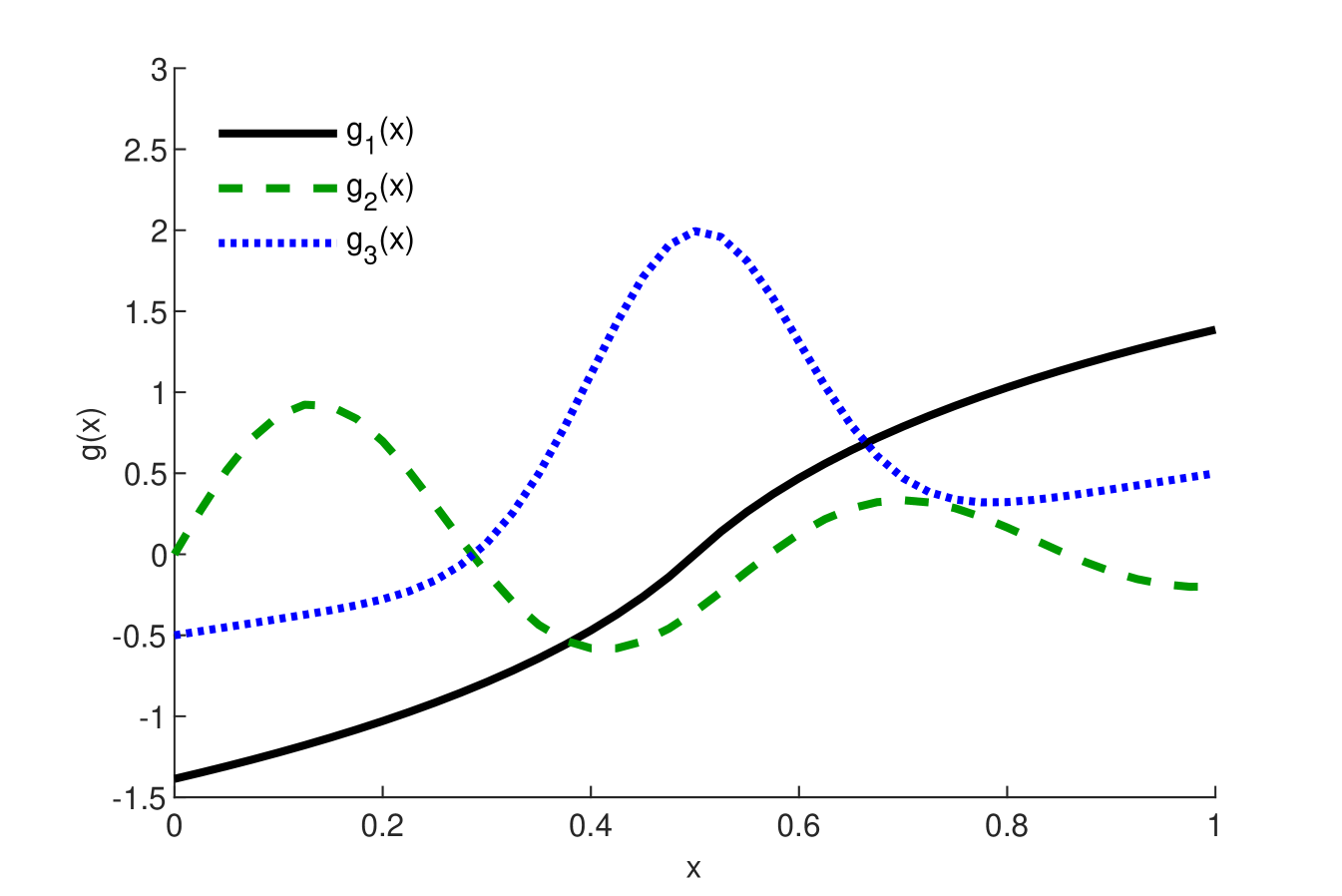

where is the standard normal cumulative distribution function needed to ensure compact support, and (heteroskedastic). We investigate the following three functions for : , , and , where is the standard normal probability density function, and is the sign function. is used in Newey and Powell (2003), as well as Chen and Christensen (2018). and are rescaled versions used in Hall and Horowitz (2013). See Figure 1 for the shapes of all three functions on . For all simulation results below, we generate 2000 simulation replications for each design with a sample size .

Results for quadratic splines with evenly placed knots are reported where the number of knots are selected among by setting and rounded up to the nearest integer. Then, we calculate a pointwise coverage rate (COV) and the average length (AL) of various 95% nominal CIs, as well as analogous uniform CBs for the grid points of on the support . To calculate critical values, 1000 additional Monte Carlo or bootstrap replications are performed on each simulation iteration. In addition, we investigate results for homoskedastic errors (), different sample sizes , polynomial regressions, and different specifications as in Cattaneo and Farrell (2013) with multivariate and non-normal regressors; however, the results show qualitatively similar patterns and hence are not reported here for brevity. Additional simulation results are reported in the Online Supplementary Material.

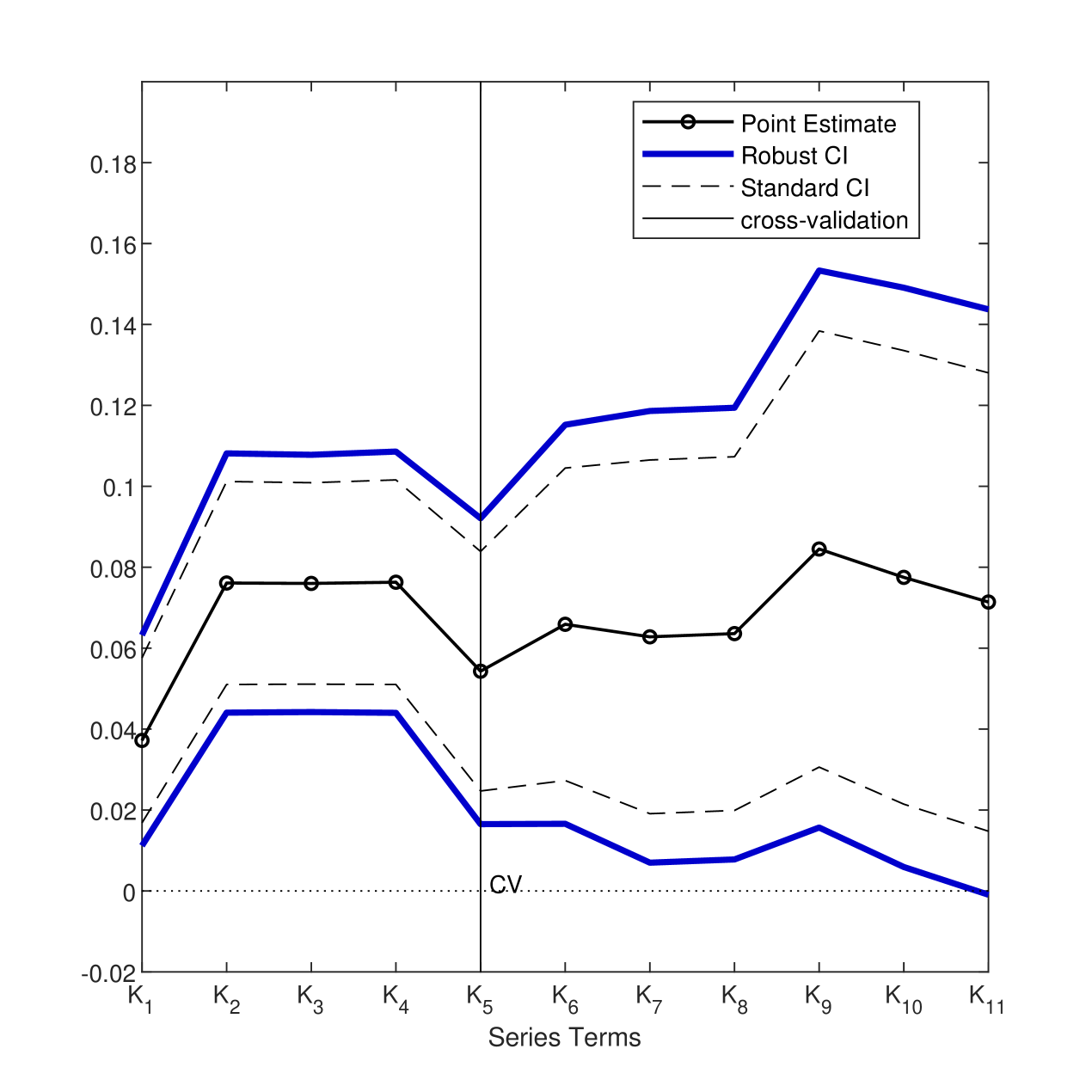

Table 1 reports the nominal 95% coverage of the following pointwise CIs at : (1) the standard CI in (2.5) with selected to minimize the leave-one-out cross-validation; (2) robust CI in (3.6) with using the critical value ; (3) robust CI using . Analogous uniform inference results for CBs are also reported. The critical values, and are constructed using the Monte Carlo methods and weighted bootstrap method, respectively.

Overall, we find that the coverage of the standard CI with is far less than 95% over the support although it has the shortest length. However, the coverage of robust CIs based on or with is close to or above 95% and performs well across the different simulation designs, and this is consistent with theoretical results in Theorem 3.1. Using the undersmoothed (using more terms than the cross-validation) seems to work quite well at most points and for highly nonlinear designs where there exists relatively large bias, e.g., Model 3 at .777The possibly poor coverage property of the standard kernel-based CIs for at the single peak () was also described in Hall and Horowitz (2013, Figure 3). Uniform coverage rates of confidence bands with selected seem conservative, and this is due to the large critical values based on weighted bootstrap methods to be uniform in both and , including boundary points.

7 Empirical application

In this section, we illustrate inference procedures by revisiting Blomquist and Newey (2002). Understanding how tax policy affects individual labor supply has been a central issue in labor economics (see Hausman (1985) and Blundell and MaCurdy (1999), among many others). Blomquist and Newey (2002) estimate the conditional mean of hours of work given the individual nonlinear budget sets using nonparametric series estimation. They also estimate the wage elasticity of the expected labor supply and find evidence of possible misspecification of the usual parametric model such as maximum likelihood estimation (MLE).

Specifically, Blomquist and Newey (2002) consider the following model by exploiting an additive structure from the utility maximization with piecewise linear budget sets:

| (7.1) | ||||

| (7.2) |

where is the hours worked of the th individual and is the budget set, which can be represented by the intercept (non-labor income), slope (marginal wage rates) and the end point of the th segment in a piecewise linear budget with segments. Equation (7.2) for the conditional mean function follows from Theorem 2.1 of Blomquist and Newey (2002), and this additive structure substantially reduces the dimensionality issues. To approximate , they consider the power series, .

From the Swedish “Level of Living” survey in 1973, 1980 and 1990, they pool the data from three waves and use the data for married or cohabiting men of ages 20-60. Changes in the tax system over three different time periods give a large variation in the budget sets. The sample size is . See Section 5 of Blomquist and Newey (2002) for more detailed descriptions. They estimate the wage elasticity of the expected labor supply

| (7.3) |

which is the regression derivative of evaluated at the mean of the net wage rates , virtual income and level of hours .

Table 2 is the same table as in Blomquist and Newey (2002, Table 1). They report estimates and standard errors with a different number of series terms by adding additional series terms. For example, the estimates in the second row use the term in the first row with the additional terms . Here, denotes approximating the term . Blomquist and Newey (2002) also report cross-validation criteria, , for each specification. In their formula, series terms are chosen to maximize , which minimizes the asymptotic MSE. In addition to their original table, we add the standard 95% CI for each specification, i.e., . In Table 2, it is ambiguous as to which large model () can be used for the inference, and we do not have compelling data-dependent methods for selecting one of the large for the confidence interval to be reported. Here we want to construct CIs that are robust to specification searches.

Figure 2 displays pointwise 95% uniform CIs for , where corresponds to each specification in Table 2 with increasing order of series terms, along with the point estimates and standard 95% confidence interval.888It is straightforward to construct using the covariance structure under the homoskedastic error and it only requires estimated variances for different that are already reported in the table of Blomquist and Newey (2002). Based on 100,000 simulation repetitions, we have . From Figure 2, we reject a zero wage elasticity of the labor supply for almost all models except . Table 2 also reports robust confidence intervals with possibly data-dependent justified by Theorem 3.1 (eq (3.6)). Note that cross-validation chooses , and the standard CI with is and the robust CI is . Using or widens the standard CI, and the robust CIs are .

8 Conclusion

This paper considers nonparametric inference methods given specification searches over different numbers of series terms in the nonparametric series regression model. We provide methods of constructing uniform CIs and confidence bands by adjusting the conventional normal critical value to the critical value based on the supremum of the t-statistics. The critical values can be constructed using simple Monte Carlo simulation or weighted bootstrap methods. Then, we provide an extension of the proposed CIs in the partially linear model setup. Finally, we investigate the finite sample properties of the proposed methods and illustrate uniform CIs in an empirical example of Blomquist and Newey (2002).

While beyond the scope of this paper, there are some potential directions to extend the results established here. First, investigating the coverage property of CIs with data-dependent using bias-corrected methods is of interest. In particular, it would be of interest to analyze the bias-corrected CI and confidence bands using cross-validation methods combined with the recent results established in Cattaneo, Farrell, and Feng (2019). Second, an extension of the current theory for quantile regression (e.g., Belloni, Chernozhukov, Chetverikov, and Fernández-Val (2019)) or the nonparametric IV setup would be desirable. In the NPIV setup, for example, one can consider pointwise CIs (or uniform confidence bands) that are uniform in pairs of with an additional dimension of the instrument sieve and the number of instruments . This is a difficult problem, and it would require a distinct theory to address the ill-posed inverse problem as well as two-dimensional choices. We leave these topics for future research.

References

-

Andrews, D. W. K. (1991a): “Asymptotic Normality of Series Estimators for Nonparametric and Semiparametric Regression Models,”Econometrica, 59, 307-345.

-

Andrews, D. W. K. (1991b): “Asymptotic Optimality of Generalized , Cross-Validation, and Generalized Cross-Validation in Regression with Heteroskedastic Errors,”Journal of Econometrics, 47, 359-377.

-

Armstrong, T. B. and M. Kolesár (2018): “A Simple Adjustment for Bandwidth Snooping,”Review of Economic Studies, 85, 732-765.

-

Belloni, A., V. Chernozhukov, D. Chetverikov, and I. Fernández-Val (2019): “Conditional quantile processes based on series or many regressors,” Journal of Econometrics, 213, 4-29.

-

Belloni, A., V. Chernozhukov, D. Chetverikov, and K. Kato (2015): “Some New Asymptotic Theory for Least Squares Series: Pointwise and Uniform Results,” Journal of Econometrics, 186, 345-366.

-

Belloni, A., V. Chernozhukov, and C. Hansen (2014): “Inference on Treatment Effects after Selection among High-Dimensional Controls,”Review of Economic Studies, 81, 608-650.

-

Blomquist, S. and W. K. Newey (2002): “Nonparametric Estimation with Nonlinear Budget Sets,” Econometrica, 70, 2455-2480.

-

Blundell, R. and T. E. MaCurdy (1999): “Labor Supply: A Review of Alternative Approaches,” Handbook of Labor Economics, In: O. Ashenfelter, D. Card (Eds.), vol. 3., Elsevier, Chapter 27.

-

Calonico, S., M. D. Cattaneo, and M. H. Farrell (2018): “On the Effect of Bias Estimation on Coverage Accuracy in Nonparametric Inference,”Journal of the American Statistical Association, 113, 767-779.

-

Cattaneo, M. D. and M. H. Farrell (2013): “Optimal Convergence Rates, Bahadur Representation, and Asymptotic Normality of Partitioning Estimators,” Journal of Econometrics, 174, 127-143.

-

Cattaneo, M. D., M. H. Farrell, and Y. Feng (2019): “Large Sample Properties of Partitioning-Based Series Estimators,” Annals of Statistics, forthcoming.

-

Cattaneo, M. D., M. Jansson, and W. K. Newey (2018a): “Alternative Asymptotics and the Partially Linear Model with Many Regressors,” Econometric Theory, 34, 277-301.

-

Cattaneo, M. D., M. Jansson, and W. K. Newey (2018b): “Inference in Linear Regression Models with Many Covariates and Heteroscedasticity,” Journal of the American Statistical Association, 113, 1350-1361.

-

Chao, J. C., N. R. Swanson, J. A. Hausman, W. K. Newey, and T. Woutersen (2012): “Asymptotic Distribution of JIVE in a Heteroskedastic IV Regression with Many Instruments,”Econometric Theory, 28, 42-86.

-

Chatterjee, S. (2005): “An error bound in the Sudakov-Fernique inequality,”arXiv:math/0510424

-

Chen, X. (2007): “Large Sample Sieve Estimation of Semi-nonparametric Models,” Handbook of Econometrics, In: J.J. Heckman, E. Leamer (Eds.), vol. 6B., Elsevier, Chapter 76.

-

Chen, X. and T. Christensen (2015): “Optimal Uniform Convergence Rates and Asymptotic Normality for Series Estimators Under Weak Dependence and Weak Conditions,” Journal of Econometrics, 188, 447-465.

-

Chen, X. and T. Christensen (2018): “Optimal Sup-norm Rates and Uniform Inference on Nonlinear Functionals of Nonparametric IV Regression”, Quantitative Economics, 9(1), 39-85.

-

Chen, X. and Z. Liao (2014): “Sieve M inference on irregular parameters,” Journal of Econometrics,182, 70-86.

-

Chen, X., Z. Liao, and Y. Sun (2014): “Sieve inference on possibly misspecified semi-nonparametric time series models,” Journal of Econometrics,178, 639-658.

-

Chen, X. and X. Shen (1998): “Sieve extremum estimates for weakly dependent data,” Econometrica, 66 (2), 289-314.

-

Chernozhukov V, D. Chetverikov, and K. Kato (2014a): “Gaussian approximation of suprema of empirical processes,” The Annals of Statistics, 42(4), 1564-1597.

-

Chernozhukov V, D. Chetverikov, and K. Kato (2014b): “Anti-Concentration and Honest, Adaptive Confidence Bands,”The Annals of Statistics, 42(5), 1787-1818.

-

Chernozhukov V, D. Chetverikov, and K. Kato (2016): “Empirical and multiplier bootstraps for suprema of empirical processes of increasing complexity, and related Gaussian couplings,”Stochastic Processes and their Applications, 126(12), 3632-3651.

-

Donald, S. G. and W. K. Newey (1994): “Series Estimation of Semilinear Models,” Journal of Multivariate Analysis, 50, 30-40.

-

Eastwood, B. J. and A.R. Gallant, (1991): “Adaptive Rules for Seminonparametric Estimators That Achieve Asymptotic Normality,”Econometric Theory, 7, 307-340.

-

Giné, E. and R. Nickl (2010): “Confidence bands in density estimation,” The Annals of Statistics, 38, 1122-1170.

-

Giné, E. and R. Nickl (2015): Mathematical Foundations of Infinite-Dimensional Statistical Models, Cambridge University Press.

-

Hall, P. and J. Horowitz (2013): “A Simple Bootstrap Method for Constructing Nonparametric Confidence Bands for Functions,” The Annals of Statistics, 41, 1892-1921.

-

Hansen B. E. (2015): “The Integrated Mean Squared Error of Series Regression and a Rosenthal Hilbert-Space Inequality,” Econometric Theory, 31, 337-361.

-

Hansen, P.R. (2005): “A Test for Superior Predictive Ability,”Journal of Business and Economic Statistics, 23, 365-380.

-

Härdle, W. and O. Linton (1994): “Applied Nonparametric Methods,” Handbook of Econometrics, In: R. F. Engle, D. F. McFadden (Eds.), vol. 4., Elsevier, Chapter 38.

-

Hausman, J. A. (1985): “The Econometrics of Nonlinear Budget Sets”, Econometrica, 53, 1255-1282.

-

Heckman, J. J., L. J. Lochner, and P. E. Todd (2006): “Earnings Functions, Rates of Return and Treatment Effects: The Mincer Equation and Beyond,” Handbook of the Economics of Education, In: E. A. Hanushek, and F. Welch (Eds.), Vol. 1, Elsevier, Chapter 7.

-

Horowitz, J. L. (2014): “Adaptive Nonparametric Instrumental Variables Estimation: Empirical Choice of the Regularization Parameter,” Journal of Econometrics, 180, 158-173.

-

Horowitz, J. L. and S. Lee (2012): “Uniform Confidence Bands for Functions Estimated Nonparametrically with Instrumental Variables,” Journal of Econometrics, 168, 175-188.

-

Huang, J. Z. (2003): “Local Asymptotics for Polynomial Spline Regression,” The Annals of Statistics, 31, 1600-1635.

-

Kozbur, D. (2018): “Inference in Additively Separable Models With a High-Dimensional Set of Conditioning Variables,” Working Paper, arXiv:1503.05436.

-

Leamer, E. E. (1983): “Let’s Take the Con Out of Econometrics,”The American Economic Review, 73, 31-43.

-

Lepski, O. V. (1990): “On a problem of adaptive estimation in Gaussian white noise,”Theory of Probability and its Applications, 35, 454-466.

-

Li, K. C. (1987): “Asymptotic Optimality for , , Cross-Validation and Generalized Cross-Validation: Discrete Index Set,” The Annals of Statistics, 15, 958-975.

-

Li, Qi, and J. S. Racine (2007): Nonparametric Econometrics: Theory and Practice, Princeton University Press.

-

Linton, O. (1995): “Second order approximation in the partialy linear regression model,” Econometrica, 63(5), 1079-1112.

-

Newey, W. K. (1994a): “Series Estimation of Regression Functionals,” Econometric Theory, 10, 1-28.

-

Newey, W. K. (1994b): “The Asymptotic Variance of Semiparametric Estimators,” Econometrica, 62, 1349-1382.

-

Newey, W. K. (1997): “Convergence Rates and Asymptotic Normality for Series Estimators,”Journal of Econometrics, 79, 147-168.

-

Newey, W. K. (2013): “Nonparametric Instrumental Variables Estimation,”American Economic Review: Papers & Proceedings, 103, 550-556.

-

Newey, W. K. and J. L. Powell (2003): “Instrumental Variable Estimation of Nonparametric Models,”Econometrica, 71, 1565-1578.

-

Newey, W. K. and J. L. Powell, F. Vella (1999): “Nonparametric Estimation of Triangular Simultaneous Equations Models,”Econometrica, 67, 565-603.

-

Robinson, P. M. (1988): “Root-N-Consistent Semiparametric Regression,”Econometrica, 56(4), 931-954.

-

Romano, J. P. and M. Wolf (2005): “Stepwise Multiple Testing as Formalized Data Snooping,”Econometrica, 73, 1237-1282.

-

Schennach, S. M. (2015): “A bias bound approach to nonparametric inference,” CEMMAP working paper CWP71/15.

-

Van Der Vaart, A. W. and J. A. Wellner (1996): Weak Convergence and Empirical Processes, Springer.

-

White, H. (2000): “A Reality Check for Data Snooping,”Econometrica, 68, 1097-1126.

-

Zhou, S., X. Shen, and D.A. Wolfe (1998): “Local Asymptotics for Regression Splines and Confidence Regions,” The Annals of Statistics, 26, 1760-1782.

Appendix A Proofs

A.1 Preliminaries and Useful Lemmas

We define additional notations for the empirical process theory used in the proof of Theorem 4.1. Given measurable space , let as a class of measurable functions . For any probability measure on , we define as covering numbers, which is the minimal number of the balls of radius to cover with norms . The uniform entropy numbers relative to the norms are defined as where the supremum is over all discrete probability measures with an envelope function . We define as a VC type with envelope if there are constants such that for all .

Let the data be i.i.d. random vectors defined on the probability space with common probability distribution . We think of as the coordinates of the infinite product probability space. We avoid discussing nonmeasurability issues and outer expectations (for the related issues, see van der Vaart and Wellner (1996)). Throughout the proofs, we denote as universal constants that do not depend on .

For any sequence under Assumption 2.1, we first define the orthonormalized vector of basis functions

We observe that

Without loss of generality, we may impose normalizations of or uniformly over , since is invariant to nonsingular linear transformations of . However, we shall treat as unknown and deal with the non-orthonormalized series terms. Next, we re-define pseudo true value , with an abuse of notation, using orthonormalized series terms . That is, where , , and . We also define , .

We first provide useful lemmas which will be used in the proof of Theorem 3.1 and 4.1. The versions of proof of Lemmas 1 and 2 with are available in the literature, such as Belloni et al. (2015) and Chen and Christensen (2015), among many others. The maximal inequalities are used in the proof of Lemmas 1 and 2 to bound the remainder terms in the linearization of the t-statistics. Also note that different rate conditions of such as those in Newey (1997) can be used here but lead to different bounds. We provide the proofs of Lemma 1 and 2 in the Online Supplementary Material (Section B).

Lemma 1.

A.2 Proofs of the Main Results

A.2.1 Proof of Theorem 3.1

Proof.

For any , we first consider the decomposition of the t-statistic in (2.4) with the known variance ,

where , are defined in Lemma 1, and . Define

where with and . Note that and for all . By Lemma A.2 in the Online Supplementary Material, for any , there exists a random variable with independent random vectors , , such that

where , and

First consider the case (a) in Assumption 3.2(ii). Combining bounds for in Lemma B.1 in the Online Supplementary Material gives, for any ,

For , by setting

we have

where are positive constants that depend only on . If we take sufficiently slowly, e.g., , then the above implies there exists such that

Next, consider the case (b) in Assumption 3.2(ii). For any ,

by Lemma B.1 in the Online Supplementary Material. Similarly, by setting

we have, for ,

where are universal constants which do not depend on . Here we use . By taking , there exists such that

In either case (a) or (b), the above coupling inequality shows that there exists a sequence of random variables such that , under the rate conditions imposed in Theorem 3.1. Furthermore,

| (A.5) |

with by Lemma 1 and the assumption imposed in Theorem 3.1. We also have

| (A.6) |

where we use Lemma 1 and by the maximal inequality (e.g., Lemma A.4 in the Online Supplementary Material) and Assumption 3.2(iii) with . Combining (A.5) and (A.6) gives with . Then, there exists some sequence of positive constant such that and .

For any , we have

where the last inequality uses anti-concentration inequality (Lemma A.8 in the Online Supplementary Material). The reverse inequality holds with a similar argument above, and thus

where we use by Gaussian maximal inequality and . Using the same arguments above, by Sudakov-Fernique type bound (e.g., Chatterjee (2005)) and Assumption 3.2(iii), we have . Therefore, the following holds by the triangle inequality,

and then we conclude

with a critical value given in (3.3), and the coverage result (3.5) follows.

Finally, we will show (3.6). For , observe that

| (A.7) |

by the triangle inequality. Then,

| (A.8) | ||||

| (A.9) | ||||

| (A.10) | ||||

| (A.11) | ||||

| (A.12) |

The first inequality follows by (A.7), and (A.8) holds by Assumption 3.2(iii) with some sequence of positive constant and (A.9) follows by from Lemma 1 and the assumption with and some sequences of constants . (A.10) follows by , and (A.11) holds by with some sequences and defining . Finally, (A.12) holds by Lemma A.8, and since by Lemma A.15. This completes the proof. ∎

A.2.2 Proof of Theorem 4.1

Proof.

Similar to the proof of Theorem 3.1, we have the following linearization of the t-statistics uniformly in ,

where and . Define for given , ,

| (A.13) |

and consider the class of measurable functions . Then, we consider the following empirical process:

which is indexed by classes of functions . Define . Note that for any . We define the envelope function . By Assumption 4.1, we have

for all where . Therefore, the class of functions is a VC type and there are constants such that

for each . Then, using Theorem 2.1 (Lemma A.9 in the Online Supplementary Material) in Chernozhukov et al. (2016) with , there exists a tight Gaussian process in and in with zero mean and covariance function (4.2), and a sequence of random variables such that, for every ,

| (A.14) |

where are positive constants that depend only on , and

by Assumption 4.1(iii) and assuming . By taking , we have

Furthermore, uniformly in with by Lemma 2 and the rate conditions. Again, consider the class of functions and then

by Lemma A.13 and Assumption 4.1(iii), and we have . Further, using Dudley’s inequality (Corollary 2.2.8 in van der Vaart and Wellner (1996)) and using the same arguments given in Theorem 3.1, we have with and

| (A.15) |

Next we consider following (infeasible) bootstrap process

where , is defined in (4.4) with , and is i.i.d. standard exponential random variables independent of . Then, we have

where , , , and are defined the same as in Lemma 1 with the rescaled data . Note that is the weighted least square estimator for the original data, and we can extend the uniform linearization results in Lemma 2 by replacing with and noting that .

By applying Theorem 2.1 in Chernozhukov et al. (2016) to the weighted bootstrap process , there exists a random variable such that, for every ,

| (A.16) |

where are positive constants that depend only on ,

and denotes that the two random variables have the same conditional distribution given .

Further,

by using , Assumption 4.1(iv), and uniformly in under the rate conditions in Assumption 4.1(ii) with . Then, there exists some sequence of positive constant such that ,

| (A.17) |

Combining (A.16) and (A.17) gives

| (A.18) |

By the Markov’s inequality, the following is deduced from (A.18), for every ,

| (A.19) |

with probability at least . Similar derivation as in Theorem 3.1 using Lemma A.14 gives

| (A.20) |

with probability at least where we use and . By taking and sufficiently slower than , and using , the rate conditions imposed in the theorem, (A.20) is . Combining this with (A.15),

| (A.21) |

Then, the coverage result (4.7) follows. The second part of the theorem, (4.8), can be similarly derived as in the proof of Theorem 3.1 and this completes the proof. ∎

A.2.3 Proof of Theorem 5.1

Proof.

Conditional on , the following decomposition holds for any sequence :

where , and . All remaining proofs contain conditional expectations (conditioning on ) and hold almost surely (a.s.). Under Assumption 5.2,

by Lemma 1 of Cattaneo, Jansson, and Newey (2018a). Moreover,

since for , , by Lemma 2 of Cattaneo, Jansson and Newey (2018a) under Assumption 5.2. Then, the following holds:

by Theorem 1 of Cattaneo, Jansson and Newey (2018a).

For simplicity, here we only show the joint convergence of bivariate t-statistics, but the proof can be easily extended to the multivariate case. For any in , we show

| (A.22) |

where .

Define and as follows

where , , , and are similarly defined with and . Note that and a.s. for large enough by Assumption 5.2, and it follows that . Also, a.s. by Assumption 5.2(ii). Using the same arguments in the proof of Lemma A2 in Chao et al. (2012), we have and unconditionally, thus .

Let and define the -fields for Then, conditional on , is a martingale difference array with . We apply the martingale central limit theorem to show, conditional on , a.s. Note that for all . Then similar to the proof of Lemma A2 in Chao et al. (2012),

Moreover, we have as in the proof of Lemma A2 of Chao et al. (2012).

It remains to prove that for any , . Note that

| (A.23) | |||

| (A.24) | |||

| (A.25) | |||

| (A.26) |

(A.23) and (A.24) converge to 0 a.s. by the proof of Lemma A2 in Chao et al. (2012). Moreover, it is straightforward to verify that (A.25) and (A.26) converge to 0 a.s. since , and by closely following the proof of Lemma A2 in Chao et al. (2012). Then we can apply the martingale central limit theorem and deduce using similar arguments to the proof of Lemma A2 in Chao et al. (2012). Coverage results (5.6) and (5.7) follow by the joint convergence of with as under the assumption imposed in Theorem 5.1 and the Slutzky theorem. This completes the proof. ∎

Appendix B Figures and Tables

Solid lines (Black) are ; Dashed lines (Green) are ; Dotted lines (Blue) are , where is the standard normal pdf.

| Pointwise | Uniform | |||||||||

| COV | AL | COV | AL | COV | AL | COV | AL | COV | AL | |

| Model 1: | ||||||||||

| Standard | 0.93 | 0.27 | 0.93 | 0.36 | 0.91 | 0.92 | 0.92 | 1.49 | 0.42 | 0.69 |

| Robust () | 0.98 | 0.37 | 0.98 | 0.46 | 0.96 | 1.14 | 0.95 | 1.76 | 0.97 | 1.33 |

| Robust () | 0.98 | 0.51 | 0.98 | 0.49 | 0.98 | 1.51 | 0.97 | 2.08 | 0.98 | 1.42 |

| Model 2: | ||||||||||

| Standard | 0.80 | 0.28 | 0.93 | 0.36 | 0.91 | 0.92 | 0.92 | 1.49 | 0.27 | 0.69 |

| Robust () | 0.93 | 0.37 | 0.97 | 0.46 | 0.96 | 1.14 | 0.95 | 1.76 | 0.96 | 1.33 |

| Robust () | 0.98 | 0.51 | 0.98 | 0.49 | 0.98 | 1.51 | 0.97 | 2.08 | 0.98 | 1.42 |

| Model 3: | ||||||||||

| Standard | 0.77 | 0.29 | 0.65 | 0.40 | 0.89 | 1.00 | 0.91 | 1.57 | 0.16 | 0.70 |

| Robust () | 0.88 | 0.39 | 0.74 | 0.50 | 0.96 | 1.23 | 0.95 | 1.85 | 0.75 | 1.35 |

| Robust () | 0.98 | 0.52 | 0.92 | 0.53 | 0.98 | 1.52 | 0.97 | 2.06 | 0.97 | 1.44 |

-

Notes: “Pointwise” reports coverage (COV) and average length (AL) of (1) the standard 95% CI with ; (2) robust CI with ; (3) robust CI with . “Uniform” reports analogous uniform inference results for confidence bands. is selected to minimize leave-one-out cross-validation and . Using quadratic spline regressions with evenly placed knots.

| Additional Terms1 | |||||

|---|---|---|---|---|---|

| 0.00472 | 0.0372 | 0.0104 | [0.0168, 0.0576] | ||

| 0.0313 | 0.0761 | 0.0128 | [0.0510, 0.1012] | ||

| 0.0305 | 0.0760 | 0.0127 | [0.0511, 0.1009] | ||

| 0.0323 | 0.0763 | 0.0129 | [0.0510, 0.1016] | ||

| 0.0369 | 0.0543 | 0.0151 | [0.0247, 0.0839] | ||

| 0.0364 | 0.0659 | 0.0197 | [0.0273, 0.1045] | ||

| 0.0350 | 0.0628 | 0.0223 | [0.0191, 0.1065] | ||

| 0.0364 | 0.0636 | 0.0223 | [0.0199, 0.1073] | ||

| 0.0331 | 0.0845 | 0.0275 | [0.0306, 0.1384] | ||

| 0.0263 | 0.0775 | 0.0286 | [0.0214, 0.1336] | ||

| 0.0252 | 0.0714 | 0.0289 | [0.0148, 0.1280] | ||

| MLE estimates | 0.123 | 0.0137 | |||

| critical values: , 3 | |||||

-

1

: non-labor income, : marginal wage rates, : the end point of the segment in a piecewise linear budget set. denotes .

-

2

denotes the cross-validation criteria defined in Blomquist and Newey (2002, p.2464). , the 5th smallest model, is chosen by the cross-validation, and let , .

-

3

, .