Inference Networks for Sequential Monte Carlo in Graphical Models

Inference Networks for Sequential Monte Carlo in Graphical Models

Abstract

We introduce a new approach for amortizing inference in directed graphical models by learning heuristic approximations to stochastic inverses, designed specifically for use as proposal distributions in sequential Monte Carlo methods. We describe a procedure for constructing and learning a structured neural network which represents an inverse factorization of the graphical model, resulting in a conditional density estimator that takes as input particular values of the observed random variables, and returns an approximation to the distribution of the latent variables. This recognition model can be learned offline, independent from any particular dataset, prior to performing inference. The output of these networks can be used as automatically-learned high-quality proposal distributions to accelerate sequential Monte Carlo across a diverse range of problem settings.

1 Introduction

Recently proposed methods for Bayesian inference based on sequential Monte Carlo (Doucet et al.,, 2001) have shown themselves to provide state-of-the art results in applications far broader than the traditional use of sequential Monte Carlo (SMC) for filtering in state space models (Gordon et al.,, 1993; Pitt and Shephard,, 1999), with diverse application to factor graphs (Naesseth et al.,, 2014), hierarchical Bayesian models (Lindsten et al.,, 2014), procedural generative graphics (Ritchie et al.,, 2015), and general probabilistic programs (Wood et al.,, 2014; Todeschini et al.,, 2014). These are accompanied by complementary computational advances, including memory-efficient implementations (Jun and Bouchard-Côté,, 2014), and highly-parallel variants (Murray et al.,, 2014; Paige et al.,, 2014).

All these algorithms, however, share the need for specifying a series of proposal distributions, used to sample candidate values at each stage of the algorithm. Sequential Monte Carlo methods perform inference progressively, iteratively targeting a sequence of intermediate distributions which culminates in a final target distribution. Well-chosen proposal distributions for transitioning from one intermediate target distribution to the next can lead to sample-efficient inference, and are necessary for practical application of these methods to difficult inference problems. Theoretically optimal proposal distributions (Doucet et al.,, 2000; Cornebise et al.,, 2008) are in general intractable, thus in practice implementing these algorithms requires either active (human) work to design an appropriate proposal distribution prior to sampling, or using an online estimation procedure to approximate the optimal proposal during inference (as in e.g. Van Der Merwe et al., (2000) or Cornebise et al., (2014) for state-space models). In many cases, a baseline proposal distribution which simulates from a prior distribution can be used, analogous to the so-called bootstrap particle filter for inference in state-space models; however, when confronted with tightly peaked likelihoods (i.e. highly informative observations), proposing from the prior distribution may be arbitrarily statistically inefficient (Del Moral and Murray,, 2015). Furthermore, for some choices of sequences of densities there is no natural prior distribution, or even it may not be available in closed form. All in all, the need to design appropriate proposal distributions is a real impediment to the automatic application of these SMC methods to new models and problems.

This paper investigates how autoregressive neural network models for modeling probability distributions (Bengio and Bengio,, 1999; Uria et al.,, 2013; Germain et al.,, 2015) can be leveraged to automate the design of model-specific proposal distributions for sequential Monte Carlo. We propose a method for learning proposal distributions for a given probabilistic generative model offline, prior to performing inference on any particular dataset. The learned proposals can then be reused as desired, allowing SMC inference to be performed quickly and efficiently for the same probabilistic model, but for new data — that is, for new settings of the observed random variables — once we have incurred the up-front cost of learning the proposals.

We thus present this work as an amortized inference procedure in the sense of Gershman and Goodman, (2014), in that it takes a model as its input and generates an artifact which then can be leveraged for accelerating future inference tasks. Such procedures have been considered for other inference methods: learning idealized Gibbs samplers offline for models in which closed-form full conditionals are not available (Stuhlmüller et al.,, 2013), using pre-trained neural networks to inform local MCMC proposal kernels (Jampani et al.,, 2015; Kulkarni et al.,, 2015), and learning messages for new factors for expectation-propagation (Heess et al.,, 2013). In the context of SMC, offline learning of high-quality proposal distributions provides a similar opportunity for amortizing runtime costs of inference, while simultaneously automating a currently-manual process.

Source code for all experiments (in PyTorch) is available at

https://github.com/tbrx/compiled-inference.

2 Preliminaries

A directed graphical model, or Bayesian network (Pearl and Russell,, 1998), defines a joint probability distribution and conditional independence structure via a directed acyclic graph. For each in a set of random variables , the network structure specifies a conditional density , where denotes the parent nodes of . Inference tasks in Bayesian networks involve marking certain nodes as observed random variables, and characterizing the posterior distribution of the remaining latent nodes. The joint distribution over latent random variables and observed random variables is defined as

| (1) |

where and refer to the probability density or mass functions associated with the respective latent and observed random variables . Posterior inference in directed graphical models entails using Bayes’ rule to estimate the posterior distribution of the latent variables given particular observed values ; that is, to characterize the target density . In most models, exact posterior inference is intractable, and one must resort to either variational or finite-sample approximations.

2.1 Sequential Monte Carlo

Importance sampling methods approximate expectations with respect to a (presumably intractable) distribution by weighting samples drawn from a (presumably simpler) proposal distribution . In graphical models, with , we define an unnormalized target density such that , where the normalizing constant is unknown.

The sequential Monte Carlo algorithms we consider (Doucet et al.,, 2001) for inference on an dimensional latent space proceed by incrementally importance sampling a weighted set of particles, with interspersed resampling steps to direct computation towards more promising regions of the high-dimensional space. We break the problem of estimating the posterior distribution of into a series of simpler lower-dimensional problems by constructing an artificial sequence of target densities (and corresponding unnormalized densities ) defined on increasing subsets , , where the final is the full target posterior of interest. At each intermediate density, the importance sampling density only needs to adequately approximate a low-dimensional step from to .

Procedurally, we initialize at by sampling values of from a proposal density , and assigning each of these particles an associated importance weight

| (2) |

For each subsequent , we first resample the particles according to the normalized weights at , preferentially duplicating high-weight particles and discarding those with low weight. To do this we draw particle ancestor indices from a resampling distribution corresponding to any standard resampling scheme (Douc et al.,, 2005). We then extend each particle by sampling a value for from the proposal kernel , and update the importance weights

| (3) | ||||

| (4) |

We can approximate expectations with respect to the target density using the SMC estimator

| (5) |

where is a Dirac point mass.

2.2 Target densities and proposal kernels

The choice of incremental target densities is application-specific; innovation in SMC algorithms often involves proposing novel manners for constructing sequences of intermediate distributions. These incremental densities do not necessarily need to correspond to marginal distributions of full target. Particularly relevant recent work directed towards improving SMC inference in the same class of models we address includes the Biips ordering and arrangement algorithm (Todeschini et al.,, 2014), the divide and conquer approach (Lindsten et al.,, 2014), and heuristics for scoring orderings in general factor graphs (Naesseth et al.,, 2014, 2015). All these methods provide a means for selecting a sequence of intermediate target densities — however, given a sequence of targets, one still must supply an appropriate proposal density.

The ideal choice for this proposal in general is found by proposing directly from the incremental change in densities (Doucet et al.,, 2000), with

| (6) |

Using this proposal, each of the unnormalized weights in Equation (3) are independent of the sampled values of . In practice this conditional density is nearly always intractable, and one must resort to approximation.

Adaptive importance sampling methods aim to learn the optimal proposal online during the course of inference, immediately prior to proposing values for the next target density. In both in the context of population Monte Carlo (PMC) (Cappé et al.,, 2008) and sequential Monte Carlo (Cornebise et al.,, 2008, 2014; Gu et al.,, 2015), a parametric family is proposed, with is a free parameter, and the adaptive algorithms aim to minimize either the reverse Kullback-Leibler (KL) divergence or Chi-squared distance between the approximating family and the optimal proposal density. This can be optimized via stochastic gradient descent (Gu et al.,, 2015), or for specific forms of by online Monte Carlo expectation maximization, both for population Monte Carlo (Cappé et al.,, 2008) and in state-space models (Cornebise et al.,, 2014). Note that this is the reverse of the KL divergence traditionally used in variational inference (Jordan et al.,, 1999), and takes the form of an expectation with respect to the intractable target distribution.

2.3 Neural autoregressive distribution estimation

As a general model class for , we adapt recent advances in flexible neural network density estimators, appropriate for both discrete and continuous high-dimensional data. We focus particularly on the use of autoregressive neural network density estimation models (Bengio and Bengio,, 1999; Larochelle and Murray,, 2011; Uria et al.,, 2013; Germain et al.,, 2015) which model high-dimensional distribution by learning a sequence of one-dimensional conditional distributions; that is, learning each product term in

| (7) |

typically with weight parameter sharing across densities.

We choose to adapt the masked autoencoder for distribution estimation (MADE) model (Germain et al.,, 2015), which fits an autoregressive model to binary data, with structure inspired by autoencoders. In its simplest form, a single-layer MADE model described on dimensional binary data has a hidden layer and output with

| (8) | ||||

| (9) |

where are real-valued parameters to be learned, denotes elementwise multiplication, are nonlinear functions, and are fixed binary masks. Critically, the construction of the masks is such that computing the network output for each requires only the inputs , with the zeros in the masks dropping the connections. The masks are generated by assigning each unit in each hidden layer a number from , describing which of the dimensions it is permitted to take as input; output units then are only permitted to take as input hidden nodes numbered lower than their output.

With a logistic function sigmoid as , then can be interpreted as a probability , and to compute one does not need supply any value as input to for the dimensions . That is, if one follows all connections “back” through the network from to the input , one would find only themselves at .

3 Approach

Our approach is two-fold. First, given a Bayesian network that acts as a generative model for our observed data given latent variables , we construct a new Bayesian network which acts as a generative model for our latent , given observed data . This network is constructed such that the joint distribution of the new “inverse model”, which we will refer to as , preserves the conditional dependence structure in the original model , but has a different factorization (Stuhlmüller et al.,, 2013).

Unfortunately, unlike the original forward model, the inverse model has conditional densities which we do not in general know how to normalize or sample from. However, were we to know the full conditional density of the inverse model , then we could directly draw samples of given a particular dataset .

Thus our second task is to learn approximations for the conditionals , where are parents of in the inverse model. To do so we employ neural density estimators and design a procedure to train these “offline”, in the sense that no real data is required.

3.1 Defining the inverse model

We begin by constructing an inverse model which admits the same distribution over all random variables as , but with a different factorization. We first note that the directed acyclic graph structure of imposes a partial ordering on all random variables and ; we choose any single valid ordering arbitrarily, and define the sequences and such that for any , , and for any , .

Our goal here is to construct as simple as possible a distribution whose factorization does not introduce any new conditional independencies not also present in the original generative model. Consider two extremes: a fully factorized which assumes all are conditionally independent given may be attractive for computational reasons, but fails to capture all the structure of the posterior; whereas a fully connected is guaranteed to capture all dependencies, but may be unnecessarily complex.

To define the approximating distribution at each , we invert the dependencies on , effectively running the generative model backwards. Following the heuristic algorithm of Stuhlmüller et al., (2013), we do this by literally constructing the dependency graph in reverse. Ordering the random variables , we define a new parent set for each in the transformed model, with . Define the Markov blanket to be the set of all random variables which share a factor with ; that is, the union of the parents of , the children of , and the parents of the children of . Then defining the parent sets in the transformed model as

yields a model with the same local dependency structure as the original model ; however, now the sequence is reversed such that the observed values are inputs (i.e., ). The sequence under the new model, which we will refer to as , factorizes naturally as ; particularly important to us is the factorization of the conditional density .

This algorithm produces inverse graph structures which despite not being fully connected, preserve local conditional dependencies in the original graph:

Proposition 1. Preservation of local conditional dependence. Let be latent or observed random variables in with graph structure , and with each of adjacent to at least one of the others under . Then let denote the corresponding random variables in the inverse model with graph structure , constructed via the algorithm above. If and are conditionally independent given in the inverse model , they were also conditionally independent in the original model ; that is,

Proof. Suppose we had a conditional dependence in which was not preserved in , i.e. with but . Without loss of generality assume was added to the inverse graph prior to , i.e. in . Note that can occur either due to a direct dependence between and , or, due to both ; in either case, . Then when adding to the inverse graph we are guaranteed to have , in which case .

3.2 Learning a family of approximating densities

Following Cappé et al., (2008), learning proposals for importance sampling on in a single-dataset setting (i.e., with fixed ) entails proposing a parametric family , where is a free parameter, and then choosing to minimize

| (10) |

This KL divergence between the true posterior distribution and proposal distribution is also known as the relative entropy criterion, and is a preferred objective function in situations in which the estimation goal construct a high-quality weighted sample representation, rather than to minimize the variance of a particular expectation (Cornebise et al.,, 2008).

In an amortized inference setting, instead of learning explicitly for a fixed value of , we learn a mapping from to . More explicitly, if and , then learning a deterministic mapping allows performing approximate inference for with only the computational complexity of evaluating the function . The tradeoff is that the training of itself may be quite involved.

We thus generalize the adaptive importance sampling algorithms by learning a family of distributions , parameterized by the observed data . Suppose that , where the function is parameterized by a set of upper-level parameters . We would like a choice of which performs well across all datasets . We can frame this as minimizing the expected value of Eq. (10) under , suggesting an objective function defined as

| (11) |

which has a gradient

| (12) |

Notice that these expectations in Equations (11) and (12) are with respect to the tractable joint distribution . We can thus fit by stochastic gradient descent, estimating the expectation of the gradient by sampling synthetic full-data training examples from the original model. This procedure can be performed entirely offline — we require only to be able to sample from the joint distribution to generate candidate data points (effectively providing infinite training data). In any directed graphical model this can be achieved by ancestral sampling, where in addition to sampling we sample values of the as-yet unobserved variables . Furthermore, we do not need need to be able to compute gradients of our model itself — we only need the gradients of our recognition model , allowing use of any differentiable representation for . We choose the parametric family and the transformation such that this inner gradient in Eq. (12) can be computed easily.

We can now use the conditional independence structure in our inverse model to break down , an approximation of , into a product of smaller conditional densities each approximating . The full proposal density can be decomposed as

| (13) |

with the gradient similarly decomposing as

Each of these expectations requires only samples of the random variables in , reducing the dimensionality of the joint optimization problem.

3.3 Joint conditional neural density estimation

We particularly wish to construct the inverse factorization (and our proposal model ) in such a way that we deal naturally with the presence of head-to-head nodes, in which one random variable may have a very large parent set. This situation is common in machine learning models: it is quite common to have generative models which factorize in the joint distribution, but have complex dependencies in the posterior; see for example the model in Figure 1.

We thus choose to treat all such situations in our inverse factorization — where a sequence of variables are fully dependent on one another after conditioning on a shared set of parent nodes — as a single joint conditional density which we will approximate with an autoregressive density model. We extend MADE (Germain et al.,, 2015) to function as a conditional density estimator by allowing it to take as additional inputs, and constructing the masks such that these additional inputs are propagated through all hidden layers to all outputs, even for the very first dimension. As in MADE this can be achieved by labeling the hidden units with integers denoting which input dimensions they are allowed to accept. In contrast to the original MADE, we label hidden units with numbers from , where hidden units labeled 0 to take as input only the dimensions in . For single-dimensional data, where , all hidden units are labeled 0 and all feed forward into the single output , recovering a standard mixture density network (Bishop,, 1994).

To model non-binary data, MADE can be extended by altering the output layer network to emit parameters of any univariate probability density function. We take the same approach by which RNADE (Uria et al.,, 2013) modifies the binary autoregressive distribution estimator NADE (Larochelle and Murray,, 2011) to handle real-valued data, with an output layer that parameterizes a univariate mixture of Gaussians for each dimension conditioned on its parents. The probability of any particular is given by

where is the Gaussian probability density. This requires an output layer with dimensions, to predict each of means , standard deviations , weights ; to enforce positivity of standard deviations we apply a softplus function to the raw network outputs, and a softmax function to ensure is a probability vector.

3.4 Training the neural network

Contrary to many standard settings in which one is limited by the amount of data present, we are armed with a sampler which allows us to generate effectively infinite training data. This could be used to sample a “giant” synthetic dataset, which we then use for mini-batch training via gradient descent; however, then we must decide how large a dataset is required. Alternatively, we could sample a brand new set of training examples for every mini-batch, never re-using previous samples.

In testing we found that a hybrid training procedure, which samples new synthetic datasets based on performance on a held-out set of synthetic validation data, appeared more efficient than resampling a new synthetic dataset for each new gradient update. We perform mini-batch gradient updates on using synthetic training data, while evaluating on the validation set. If the validation error increases, or after a set maximum number of steps, we draw new sets of both synthetic training and validation data from .

In all experiments we use Adam (Kingma and Ba,, 2015) with the suggested default parameters to update learning rates online, and use rectified linear activation functions.

4 Examples

4.1 Inverting a single factor

To illustrate the basic method for inverting factors, we consider a non-conjugate polynomial regression model, with global-only latent variables. The graphical model, its inversion, and the neural network structure are shown in Figure 1. Here we place a Laplace prior on the regression weights, and have Student-t likelihoods, giving us

for fixed , and uniformly. The goal is to estimate the posterior distribution of weights for the constant, linear, and quadratic terms, given any possible collected dataset . In the notation of the preceding sections, we have latent variables and observed variables .

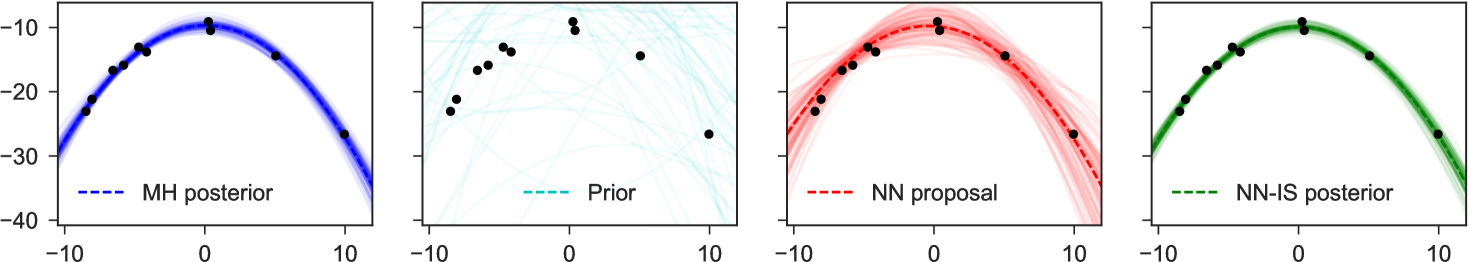

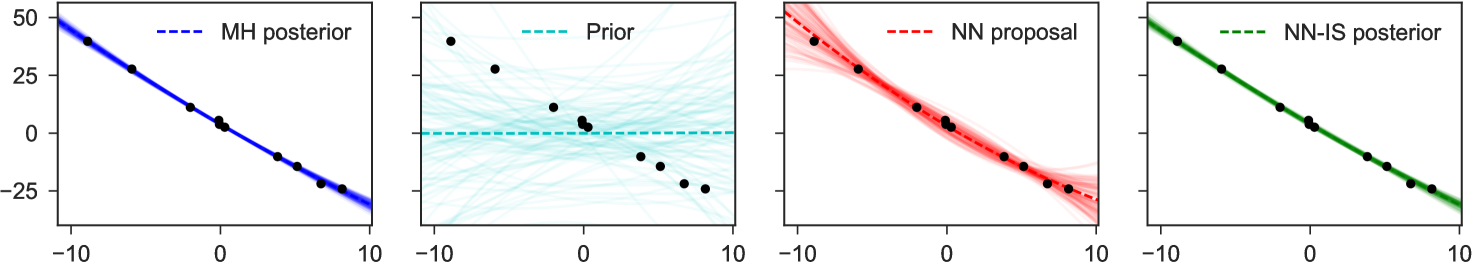

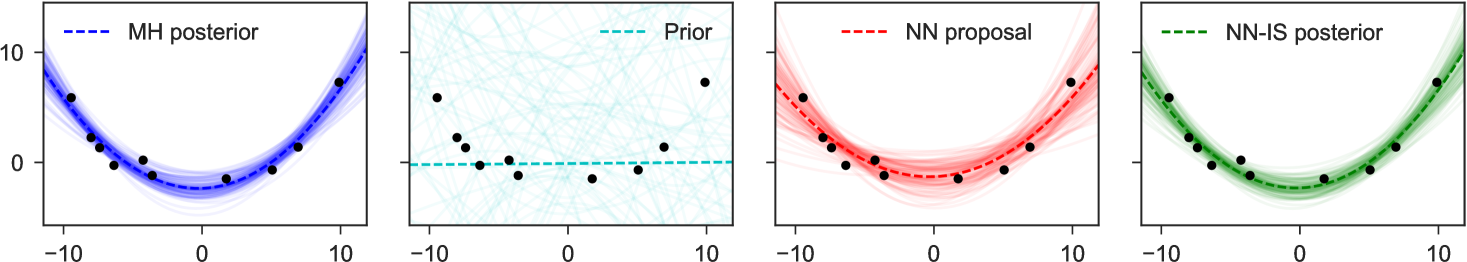

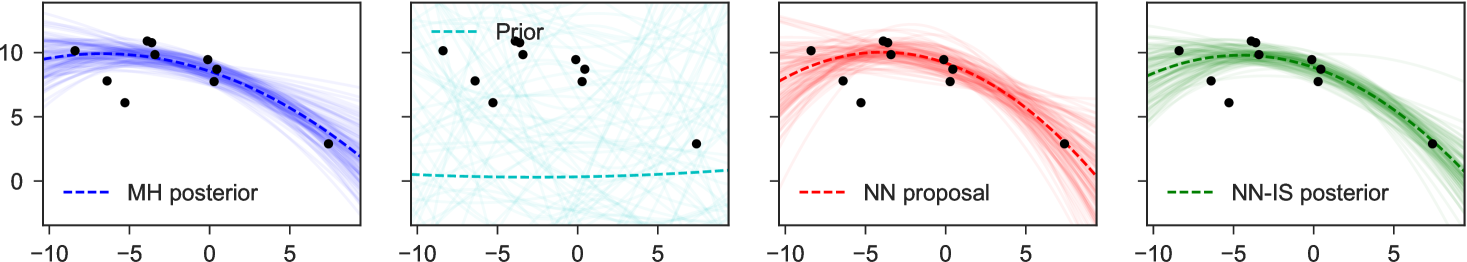

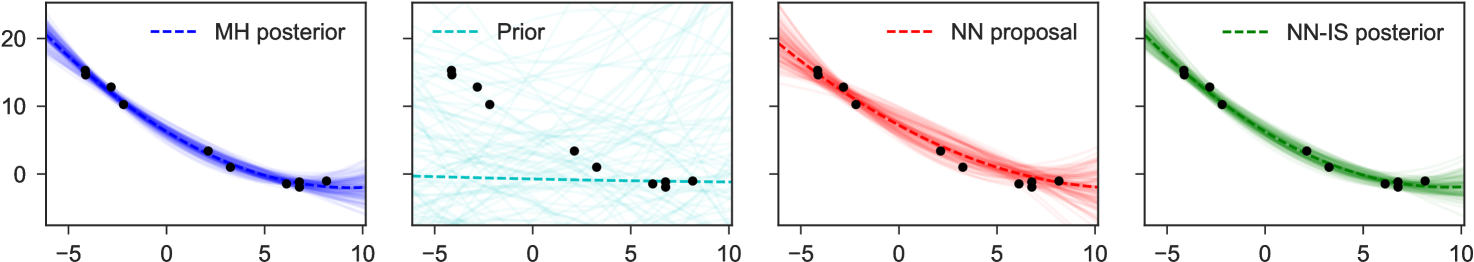

Note particularly that although the original graphical model which expressed factorizes into products over which are conditionally independent given , in the inverse model due to the explaining-away phenomenon all latent variables depend on all others: there are no latent variables which can be -separated from the observed , and all latent variables share as parents. This means we fit as proposal only a single joint density . Examples of representative output from this network are shown in Figure 4. The trained network used here 300 hidden units in each of two hidden layers, and a mixture of 3 Gaussians as each output.

4.2 A hierarchical Bayesian model

Consider as a new example a representative multilevel model where exact inference is intractable, a Poisson model for estimating failure rates of power plant pumps (George et al.,, 1993). Given power plant pumps, each having operated for thousands of hours, we see failures, following

The graphical model, an inverse factorization, and the neural network structure are shown in Figure 2. To generating synthetic training data, are sampled iid from an exponential distribution with mean 50.

The repeated structure in the inverse factorization of this model allows us to learn a single inverse factor to represent the distribution across all . This yields a far simpler learning problem than were we forced to fit all of jointly. Further, the repeated structure allows us to use a divide-and-conquer SMC algorithm (Lindsten et al.,, 2014) which works particularly efficiently on this model. Each of the replicated structures are sampled in parallel with independent particle sets, weighted locally, and resampled; once all are sampled, we end by sampling and jointly, which need both be included in order to evaluate the final terms in the joint target density. We stress that there is no obvious baseline proposal density to use for a divide-and-conquer SMC algorithm, as neither the marginal prior nor posterior distributions over are available in closed form. Any usage of this algorithm requires manual specification of some proposal .

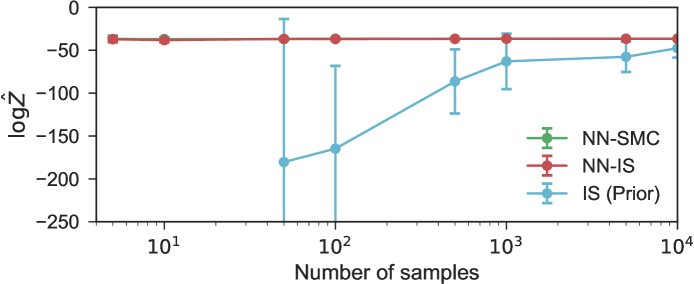

We test our proposals on the actual power pump failure data analyzed in George et al., (1993). The relative convergence speeds of marginal likelihood estimators from importance sampling from prior and neural network proposals, and SMC with neural network proposals, are shown in Figure 5. To capture the wide tails of the broad gamma distributions, we use a mixture of 10 Gaussians here at each output node, and 500 hidden units in each of two hidden layers.

4.3 Factorial hidden Markov model

Proposals can also be learned to approximate the optimal filtering distribution in models for sequential data; we demonstrate here on a factorial hidden Markov model (Ghahramani and Jordan,, 1997), where each time step has a combinatorial latent space. The additive model we consider is inspired by the model studied in Kolter and Jaakkola, (2012) for disaggregation of household energy usage; effective inference in this model is a subject of continued research. Some number of devices are either in an active state, in which case each device consumes units of energy, or it is off, in which case it consumes no energy. At each time step we receive a noisy observation of the total amount of energy consumed, summed across all devices. This model, whose graphical model structure is shown in Figure 3, can be represented as

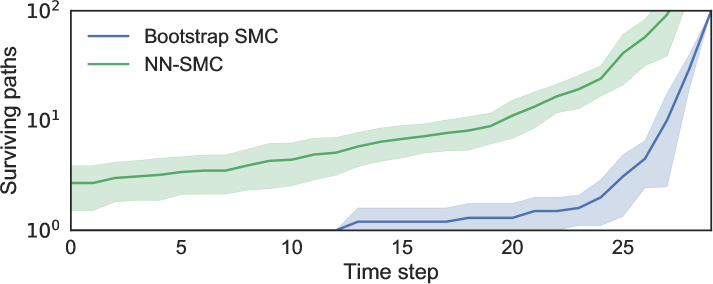

where represents the prior probability of devices switching on or off at each time increment. We design a synthetic example with , meaning each time step has possible discrete states; the parameters are spread out from 30 to 500, with . Each individual device has an initial probability of being activated at , switching state at subsequent with probability .

As different combinations of devices can yield identical total energy usage it is impossible to disambiguate between different combinations of active devices from a single observation, meaning any successful inference algorithm must attempt to mix across many disconnected modes over time to preserve the multiple possible explanations. The effect of the learned proposals on the overall number of surviving particles is shown in Figure 6. Our proposal model uses Bernoulli outputs in a 4-layer network, with 300 units per hidden layer; it takes as input the latent states at the previous time , as well as the current observation .

5 Discussion

We present this work primarily as a manner by which we compile away application-time inference costs when performing SMC, and automating the manual task of designing proposal densities. However, in some situations direct sampling from the model may provide a satisfactory approximation even eschewing importance weighting steps; in such cases our approach can be viewed as a graphical-model-regularized algorithm for designing and training neural networks with interpretable structural representations. Rather than learning from data, the emulator model is chosen to approximate the specified generative model, akin to the “sleep” cycle of the wake-sleep algorithm (Hinton et al.,, 1995).

In contrast to variational autoencoders (Kingma and Welling,, 2014), where one simultaneously learns parameters for both the inference network and generative model from data, we assume a known generative model with fixed parameters and structured, interpretable latent variables. This provides robustness to bias arising from training data which comes from an unrepresentative sample, and also allows us to apply our method in situations where a sufficiently large supply of exemplar data is unavailable. However, it does require placing trust in the generative model: in particular, it requires a generative model which could plausibly create the data we will later collect and condition on.

Beyond these differences, our choice of , the same minimized by EP, leads to approximations more appropriate for SMC refinement than a variational Bayes objective function; see e.g. Minka, (2005) for a discussion of “zero-forcing” behavior, and e.g. Cappé et al., (2008) for a discussion of pathological cases in learned importance sampling distributions.

Acknowledgements

BP would like to thank both Tom Jin and Jan-Willem van de Meent for their helpful discussions, feedback, and ongoing commiseration. FW is supported under DARPA PPAML through the U.S. AFRL under Cooperative Agreement number FA8750-14-2-0006, Sub Award number 61160290-111668.

References

- Bengio and Bengio, (1999) Bengio, Y. and Bengio, S. (1999). Modeling high-dimensional discrete data with multi-layer neural networks. In Advances in Neural Information Processing Systems, volume 99, pages 400–406.

- Bishop, (1994) Bishop, C. M. (1994). Mixture density networks. Technical report.

- Cappé et al., (2008) Cappé, O., Douc, R., Guillin, A., Marin, J.-M., and Robert, C. P. (2008). Adaptive importance sampling in general mixture classes. Statistics and Computing, 18(4):447–459.

- Cornebise et al., (2008) Cornebise, J., Moulines, É., and Olsson, J. (2008). Adaptive methods for sequential importance sampling with application to state space models. Statistics and Computing, 18:461–480.

- Cornebise et al., (2014) Cornebise, J., Moulines, É., and Olsson, J. (2014). Adaptive sequential Monte Carlo by means of mixture of experts. Statistics and Computing, 24:317–337.

- Del Moral and Murray, (2015) Del Moral, P. and Murray, L. M. (2015). Sequential Monte Carlo with highly informative observations. SIAM/ASA Journal on Uncertainty Quantification, 3(1):969–997.

- Douc et al., (2005) Douc, R., Cappé, O., and Moulines, E. (2005). Comparison of resampling schemes for particle filtering. In In 4th International Symposium on Image and Signal Processing and Analysis (ISPA), pages 64–69.

- Doucet et al., (2001) Doucet, A., De Freitas, N., Gordon, N., et al. (2001). Sequential Monte Carlo methods in practice. Springer New York.

- Doucet et al., (2000) Doucet, A., Godsill, S., and Andrieu, C. (2000). On sequential Monte Carlo sampling methods for Bayesian filtering. Statistics and computing, 10(3):197–208.

- George et al., (1993) George, E. I., Makov, U., and Smith, A. (1993). Conjugate likelihood distributions. Scandinavian Journal of Statistics, pages 147–156.

- Germain et al., (2015) Germain, M., Gregor, K., Murray, I., and Larochelle, H. (2015). MADE: masked autoencoder for distribution estimation. In Proceedings of the 32nd International Conference on Machine Learning, ICML 2015, pages 881–889.

- Gershman and Goodman, (2014) Gershman, S. J. and Goodman, N. D. (2014). Amortized inference in probabilistic reasoning. In Proceedings of the Thirty-Sixth Annual Conference of the Cognitive Science Society.

- Ghahramani and Jordan, (1997) Ghahramani, Z. and Jordan, M. I. (1997). Factorial hidden Markov models. Machine learning, 29(2-3):245–273.

- Gordon et al., (1993) Gordon, N. J., Salmond, D. J., and Smith, A. F. (1993). Novel approach to nonlinear/non-Gaussian Bayesian state estimation. IEE Proceedings F (Radar and Signal Processing), 140(2):107–113.

- Gu et al., (2015) Gu, S., Ghahramani, Z., and Turner, R. E. (2015). Neural adaptive sequential Monte Carlo. In Advances in Neural Information Processing Systems 28.

- Heess et al., (2013) Heess, N., Tarlow, D., and Winn, J. (2013). Learning to pass expectation propagation messages. In Advances in Neural Information Processing Systems 26, pages 3219–3227.

- Hinton et al., (1995) Hinton, G. E., Dayan, P., Frey, B. J., and Neal, R. M. (1995). The “wake-sleep” algorithm for unsupervised neural networks. Science, 268(5214):1158–1161.

- Jampani et al., (2015) Jampani, V., Nowozin, S., Loper, M., and Gehler, P. V. (2015). The informed sampler: A discriminative approach to Bayesian inference in generative computer vision models. Computer Vision and Image Understanding, 136:32–44.

- Jordan et al., (1999) Jordan, M. I., Ghahramani, Z., Jaakkola, T. S., and Saul, L. K. (1999). An introduction to variational methods for graphical models. Machine learning, 37(2):183–233.

- Jun and Bouchard-Côté, (2014) Jun, S.-H. and Bouchard-Côté, A. (2014). Memory (and time) efficient sequential Monte Carlo. In Proceedings of the 31st international conference on Machine learning, pages 514–522.

- Kingma and Ba, (2015) Kingma, D. and Ba, J. (2015). Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (ICLR).

- Kingma and Welling, (2014) Kingma, D. P. and Welling, M. (2014). Auto-encoding variational Bayes. In Proceedings of the International Conference on Learning Representations (ICLR).

- Kolter and Jaakkola, (2012) Kolter, J. Z. and Jaakkola, T. (2012). Approximate inference in additive factorial HMMs with application to energy disaggregation. In International conference on artificial intelligence and statistics, pages 1472–1482.

- Kulkarni et al., (2015) Kulkarni, T. D., Kohli, P., Tenenbaum, J. B., and Mansinghka, V. K. (2015). Picture: a probabilistic programming language for scene perception. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition.

- Larochelle and Murray, (2011) Larochelle, H. and Murray, I. (2011). The neural autoregressive distribution estimator. In International Conference on Artificial Intelligence and Statistics, pages 29–37.

- Lindsten et al., (2014) Lindsten, F., Johansen, A. M., Naesseth, C. A., Kirkpatrick, B., Schön, T. B., Aston, J., and Bouchard-Côté, A. (2014). Divide-and-conquer with sequential Monte Carlo. arXiv preprint arXiv:1406.4993.

- Minka, (2005) Minka, T. (2005). Divergence measures and message passing. Technical report, Microsoft Research.

- Murray et al., (2014) Murray, L. M., Lee, A., and Jacob, P. E. (2014). Parallel resampling in the particle filter. arXiv preprint arXiv:1301.4019.

- Naesseth et al., (2014) Naesseth, C. A., Lindsten, F., and Schön, T. B. (2014). Sequential Monte Carlo for graphical models. In Advances in Neural Information Processing Systems 27.

- Naesseth et al., (2015) Naesseth, C. A., Lindsten, F., and Schön, T. B. (2015). Towards automated sequential Monte Carlo for probabilistic Graphical Models. In NIPS Workshop on Black Box Learning and Inference.

- Paige et al., (2014) Paige, B., Wood, F., Doucet, A., and Teh, Y. W. (2014). Asynchronous anytime sequential Monte Carlo. In Advances in Neural Information Processing Systems 27, pages 3410–3418.

- Pearl and Russell, (1998) Pearl, J. and Russell, S. (1998). Bayesian networks. Computer Science Department, University of California.

- Pitt and Shephard, (1999) Pitt, M. K. and Shephard, N. (1999). Filtering via simulation: auxiliary particle filter. Journal of the American Statistical Association, 94:590–599.

- Ritchie et al., (2015) Ritchie, D., Mildenhall, B., Goodman, N. D., and Hanrahan, P. (2015). Controlling procedural modeling programs with stochastically-ordered sequential Monte Carlo. ACM Transactions on Graphics (TOG), 34(4):105.

- Stuhlmüller et al., (2013) Stuhlmüller, A., Taylor, J., and Goodman, N. (2013). Learning stochastic inverses. In Advances in Neural Information Processing Systems 26, pages 3048–3056.

- Todeschini et al., (2014) Todeschini, A., Caron, F., Fuentes, M., Legrand, P., and Del Moral, P. (2014). Biips: software for Bayesian inference with interacting particle systems. arXiv preprint arXiv:1412.3779.

- Uria et al., (2013) Uria, B., Murray, I., and Larochelle, H. (2013). RNADE: The real-valued neural autoregressive density-estimator. In Advances in Neural Information Processing Systems, pages 2175–2183.

- Van Der Merwe et al., (2000) Van Der Merwe, R., Doucet, A., De Freitas, N., and Wan, E. (2000). The unscented particle filter. In Advances in Neural Information Processing Systems, pages 584–590.

- Wood et al., (2014) Wood, F., van de Meent, J. W., and Mansinghka, V. (2014). A new approach to probabilistic programming inference. In Proceedings of the 17th International conference on Artificial Intelligence and Statistics.